#artificially or not

Text

i did enjoy netflix's movie Atlas a great deal. i'm by no means a critic or whatever, and whenever i watch smth for the first time, most of the glaring issues or difficulties usually slip past my notice because i'm emotionally invested. those issues can't slip by if i'm not emotionally engaged with the characters and their relationships with one another. so i understand there are many problems i personally didn't pick up on until they were pointed out to me, but i still had a great time with the movie. it made me laugh, and more importantly, it made me feel and even cry at the end, and that's what matters most to me.

overall it's not a perfect movie. but in entertainment value, for me, it came pretty damn close.

8/10

#that said i think it'd be interesting if there was a story about an ai that was made way too#much like a human psychologically and as a result it develops trauma and the human lead has to help it cope with that#and by doing that understand and recognize that this thing is alive and feels#artificially or not#maybe i'll try writing something like that#netflix atlas#netflix atlas 2024#jlo#jennifer lopez#atlas 2024#atlas movie#atlas review#review

8 notes

Text

no i don't want to use your ai assistant. no i don't want your ai search results. no i don't want your ai summary of reviews. no i don't want your ai feature in my social media search bar (???). no i don't want ai to do my work for me in adobe. no i don't want ai to write my paper. no i don't want ai to make my art. no i don't want ai to edit my pictures. no i don't want ai to learn my shopping habits. no i don't want ai to analyze my data. i don't want it i don't want it i don't want it i don't fucking want it i am going to go feral and eat my own teeth stop itttt

#i don't want it!!!!#ai#artificial intelligence#there are so many positive uses for ai#and instead we get ai google search results that make me instantly rage#diz says stuff

134K notes

Text

I’m just going to leave this here

#doctor who#steven moffat#torchwood#whoniverse#russell t davies#15th doctor#ncuti gatwa#david tennant#peter capaldi#artificial intelligence#fuck ai#anti ai

73K notes

Text

69K notes

Text

chatgpt is the coward's way out. if you have a paper due in 40 minutes you should be chugging six energy drinks, blasting frantic circus music so loud you shatter an eardrum, and typing the most dogshit essay mankind has ever seen with your own carpal tunnel-laden hands

#chatgpt#ai#artificial intelligence#anti ai#ai bullshit#fuck ai#anti generative ai#fuck generative ai#anti chatgpt#fuck chatgpt#mine

58K notes

Text

28K notes

Text

There was a paper in 2016 exploring how an ML model was telling wolves apart from dogs (huskies) with really high accuracy. They found that, for whatever reason, the model seemed to *really* like looking at snow in images, as in that's what it paid attention to most.

Then it hit them. *oh.*

*all the images of wolves in our dataset have snow in the background*

*this little shit figured it was easier to just learn how to detect snow than to actually learn the difference between huskies and wolves. because snow = wolf*

Shit like this happens *so often*. People think training models is like this exact coding programmer hackerman thing when it's more like corralling a bunch of sentient crabs that can do calculus but, like, at the end of the day they're still fucking crabs.
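For the curious, here's a toy sketch of that failure mode (usually called shortcut learning, or learning a spurious correlation). The data and feature names (`snow`, `wolf_snout`) are made up for illustration and are not from the 2016 paper. The "model" is a one-feature decision stump that just picks whichever single feature best matches the training labels. Because snow lines up perfectly with "wolf" in this deliberately biased training set, it beats the noisier real cue.

```python
from typing import Dict, List, Tuple

# (binary features, label) where label 1 = wolf, 0 = husky.
Example = Tuple[Dict[str, int], int]

# Hypothetical, deliberately biased training set: every wolf photo has snow,
# no husky photo does, and the "real" cue (a made-up wolf_snout feature)
# is only right 6 times out of 8.
TRAIN: List[Example] = [
    ({"snow": 1, "wolf_snout": 1}, 1),
    ({"snow": 1, "wolf_snout": 1}, 1),
    ({"snow": 1, "wolf_snout": 0}, 1),
    ({"snow": 1, "wolf_snout": 1}, 1),
    ({"snow": 0, "wolf_snout": 0}, 0),
    ({"snow": 0, "wolf_snout": 0}, 0),
    ({"snow": 0, "wolf_snout": 1}, 0),
    ({"snow": 0, "wolf_snout": 0}, 0),
]


def train_stump(data: List[Example]) -> Tuple[str, float]:
    """Return the single feature (and its training accuracy) that best predicts the label."""
    best_feature, best_acc = "", -1.0
    for feature in data[0][0]:
        # Predict "wolf" exactly when this feature is 1, and count how often that is right.
        acc = sum(x[feature] == y for x, y in data) / len(data)
        if acc > best_acc:
            best_feature, best_acc = feature, acc
    return best_feature, best_acc


def predict(feature: str, x: Dict[str, int]) -> int:
    """1 = wolf, 0 = husky, based only on the chosen feature."""
    return x[feature]


if __name__ == "__main__":
    feature, acc = train_stump(TRAIN)
    print(feature, acc)  # snow 1.0
    # A wolf photographed on grass: no snow, so the stump calls it a husky.
    print(predict(feature, {"snow": 0, "wolf_snout": 1}))  # 0
```

Nothing in the training data ever told it snow was the wrong thing to look at, so a perfect training score comes with a model that falls over the moment a wolf shows up on grass (or a husky shows up in snow).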

37K notes

Text

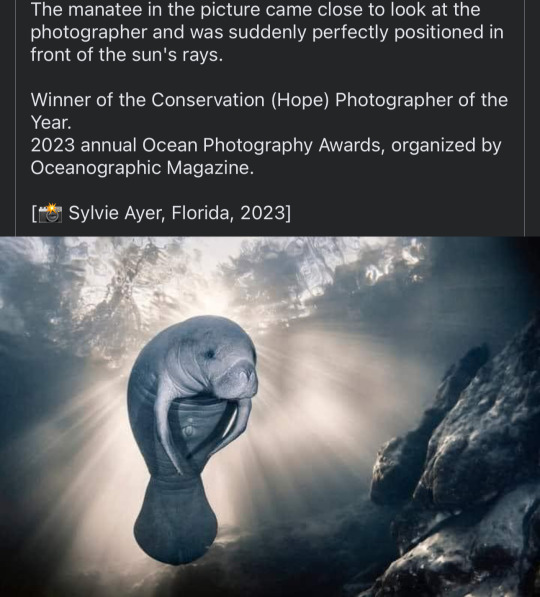

this manatee looks like it’s in a skyrim loading screen

#lol good luck finding all of the photoshopped versions in the rb history. ‘manatee restored’ is still my favorite of all time#misc#I encourage anyone dyslexic to try rotating him in your mind. I can’t do that; which is why I’m asking you to.#also: a bunch of tags are surprised this isn’t ‘shopped#it’s the lighting. backlit by the sun (which is diffused through the water) but also forelit artificially#the artificial light - a flash pack or something - casts a hard shadow under the creature’s arm#which normally wouldn’t be possible if backlit by the SUN; you’d see a less-hard/more-fragmented shadow above water#as light sources ‘compete’ in a sense - and since there aren’t any light sources which can outshine the literal sun#it looks a bit weird when the darkest shadow is being cast from any other origin point - which is what’s essentially happening here#I don’t know the mechanics of how light travels through water; but I know the effect is substantial even with relatively short distances#also: it’s been balanced and color corrected by the author of the photo - who made deliberate choices to bring out the full potential#so it’s not like it’s a fresh and untouched export#but the kind of ‘tacked on’ appearance of the creature is a result of the lighting conditions within the image

62K notes

Text

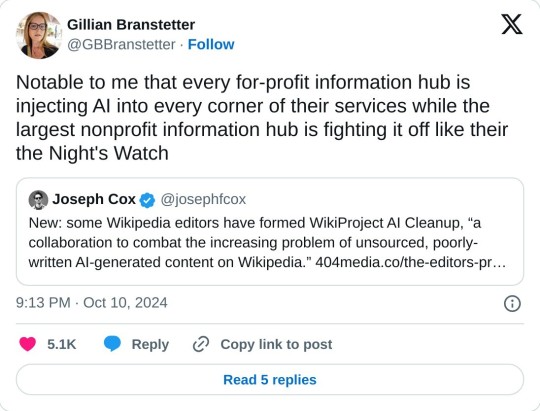

A group of Wikipedia editors have formed WikiProject AI Cleanup, “a collaboration to combat the increasing problem of unsourced, poorly-written AI-generated content on Wikipedia.” The group’s goal is to protect one of the world’s largest repositories of information from the same kind of misleading AI-generated information that has plagued Google search results, books sold on Amazon, and academic journals. “A few of us had noticed the prevalence of unnatural writing that showed clear signs of being AI-generated, and we managed to replicate similar ‘styles’ using ChatGPT,” Ilyas Lebleu, a founding member of WikiProject AI Cleanup, told me in an email. “Discovering some common AI catchphrases allowed us to quickly spot some of the most egregious examples of generated articles, which we quickly wanted to formalize into an organized project to compile our findings and techniques.”

9 October 2024

15K notes

Text

28K notes

Text

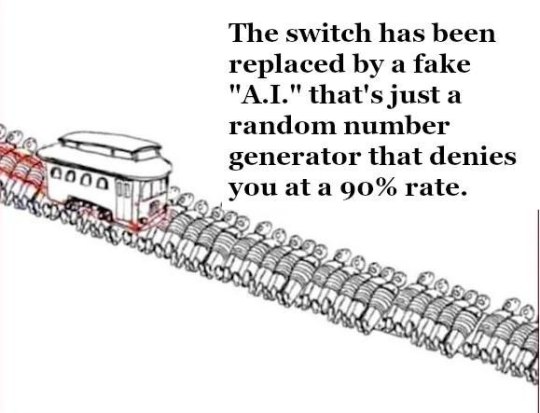

They will decide to kill you eventually.

#the trolley problem#trolley problem#ausgov#politas#australia#artificial intelligence#anti artificial intelligence#anti ai#fuck ai#brian thompson#united healthcare#unitedhealth group inc#uhc ceo#uhc shooter#uhc generations#uhc assassin#uhc lb#uhc#fuck ceos#ceo second au#ceo shooting#tech ceos#ceos#ceo down#ceo information#ceo#auspol#tasgov#taspol#fuck neoliberals

4K notes

Text

A new tool lets artists add invisible changes to the pixels in their art before they upload it online so that if it’s scraped into an AI training set, it can cause the resulting model to break in chaotic and unpredictable ways.

The tool, called Nightshade, is intended as a way to fight back against AI companies that use artists’ work to train their models without the creator’s permission. Using it to “poison” this training data could damage future iterations of image-generating AI models, such as DALL-E, Midjourney, and Stable Diffusion, by rendering some of their outputs useless: dogs become cats, cars become cows, and so forth. MIT Technology Review got an exclusive preview of the research, which has been submitted for peer review at computer security conference Usenix.

AI companies such as OpenAI, Meta, Google, and Stability AI are facing a slew of lawsuits from artists who claim that their copyrighted material and personal information was scraped without consent or compensation. Ben Zhao, a professor at the University of Chicago, who led the team that created Nightshade, says the hope is that it will help tip the power balance back from AI companies towards artists, by creating a powerful deterrent against disrespecting artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI did not respond to MIT Technology Review’s request for comment on how they might respond.

Zhao’s team also developed Glaze, a tool that allows artists to “mask” their own personal style to prevent it from being scraped by AI companies. It works in a similar way to Nightshade: by changing the pixels of images in subtle ways that are invisible to the human eye but manipulate machine-learning models to interpret the image as something different from what it actually shows.
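The "invisible" part can be sketched, very loosely, as a perturbation with a budget: each pixel may change by at most a small amount `eps`, so a person can't see the difference. This is a generic illustration with made-up names (`bounded_perturbation`, `eps`) and a hand-picked `delta`. It is not how Glaze or Nightshade work internally; they compute their changes with an optimization against an image model and use their own limits on how visible the change can be, none of which is shown here.

```python
from typing import List


def bounded_perturbation(pixels: List[int], delta: List[int], eps: int) -> List[int]:
    """Add delta to 8-bit pixel values, changing each pixel by at most eps."""
    if len(pixels) != len(delta):
        raise ValueError("pixels and delta must be the same length")
    out: List[int] = []
    for p, d in zip(pixels, delta):
        d = max(-eps, min(eps, d))           # cap the change: the "invisible" budget
        out.append(max(0, min(255, p + d)))  # keep a valid 0..255 value
    return out


if __name__ == "__main__":
    original = [10, 200, 254]
    nudged = bounded_perturbation(original, [5, -40, 9], eps=3)
    print(nudged)  # [13, 197, 255]
    print(max(abs(a - b) for a, b in zip(original, nudged)))  # 3
```

Clamping to 0..255 can only pull a value back toward the original pixel, so no pixel ever moves by more than `eps`.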

#Ben Zhao and his team are absolute heroes#artificial intelligence#plagiarism software#more rambles#glaze#nightshade#ai theft#art theft#gleeful dancing

22K notes

Text

After 146 days, the writers' strike has ended in a resounding success. Despite constant attempts by the studios to threaten, gaslight, and otherwise divide the WGA, union members stood strong and held fast to their demands. The result is a historic win securing not only pay increases and residual guarantees, but some of the first serious restrictions on the use of AI in a major industry.

This win is going to have a ripple effect not only throughout Hollywood but in all industries threatened by AI and wage reduction. Studio executives tried to insist that job replacement through AI is inevitable and that wage increases for staff members are not financially viable. By refusing to give in for almost five long months, the writers showed all of the US, and frankly the world, that that isn't true.

Organizing works. Unions work. Collective bargaining is how we bring about a better future for ourselves and the next generation, and the WGA proved that today. Congratulations, Writers Guild of America. #WGAstrong!!!

#gingerswagfreckles#wga#writer's strike#wga strong#wga strike#do the write thing#sag#sag aftra#sag aftra strike#unions#Hollywood#according to the news the strike isn't technically over until the deal is ratified by a vote on Tuesday but in practice it's over#pickets r called off immediately and they got almost everything they wanted#so it's gonna be ratified for sure#current events#news#AI#artificial intelligence

38K notes

Text

#ai#artificial intelligence#anti-ai#vincent van gogh#van gogh#twitter#tweets#tweet#meme#memes#funny#lol#humor

73K notes

Text

I saw a post before about how hackers are now feeding Google false phone numbers for major companies so that the AI Overview will suggest scam phone numbers, but in case you haven't heard,

PLEASE don't call ANY phone number recommended by AI Overview

unless you can follow a link back to the OFFICIAL website and verify that that number comes from the OFFICIAL domain.

My friend just got scammed by calling a phone number that was SUPPOSED to be a number for Microsoft tech support according to the AI Overview

It was not, in fact, Microsoft. It was a scammer. Don't fall victim to these scams. Don't trust AI-generated phone numbers, ever.
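If you're comfortable with a bit of code, the "does this come from the OFFICIAL domain?" check can be sketched like this. It's a minimal example, assuming you already have the full URL (including `https://`) of the page listing the number and know the company's real domain (`microsoft.com` is used as the example). The helper `is_official_url` is hypothetical: it accepts the domain itself or a subdomain of it and rejects lookalikes such as `microsoft.com.support-help.example`.

```python
from urllib.parse import urlsplit


def is_official_url(url: str, official_domain: str) -> bool:
    """True if url's host is official_domain itself or one of its subdomains."""
    host = urlsplit(url).hostname  # lowercased; drops any port or "user@" part
    if host is None:
        return False  # e.g. no "https://", so there is no host to check
    host = host.rstrip(".")
    official = official_domain.lower().rstrip(".")
    # Compare whole labels: "support.microsoft.com" passes, "evilmicrosoft.com" does not.
    return host == official or host.endswith("." + official)


if __name__ == "__main__":
    print(is_official_url("https://support.microsoft.com/contact", "microsoft.com"))  # True
    print(is_official_url("https://microsoft.com.support-help.example/", "microsoft.com"))  # False
    print(is_official_url("https://microsoft.com@evil.example/", "microsoft.com"))  # False
```

This only confirms the page lives on the real domain; you still have to read the number off that page yourself rather than trusting whatever a summary says.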

#this has been... a psa#psa#ai#anti ai#ai overview#scam#scammers#scam warning#online scams#anya rambles#scam alert#phishing#phishing attempt#ai generated#artificial intelligence#chatgpt#technology#ai is a plague#google ai#internet#warning#important psa#internet safety#safety#security#protection#online security#important info

3K notes
