#there are so many positive uses for ai


Text

no i don't want to use your ai assistant. no i don't want your ai search results. no i don't want your ai summary of reviews. no i don't want your ai feature in my social media search bar (???). no i don't want ai to do my work for me in adobe. no i don't want ai to write my paper. no i don't want ai to make my art. no i don't want ai to edit my pictures. no i don't want ai to learn my shopping habits. no i don't want ai to analyze my data. i don't want it i don't want it i don't want it i don't fucking want it i am going to go feral and eat my own teeth stop itttt

#i don't want it!!!!#ai#artificial intelligence#there are so many positive uses for ai#and instead we get ai google search results that make me instantly rage#diz says stuff

135K notes


Note

As cameras become more normalized (Sarah Bernhardt encouraging it, grifters on the rise, young artists using it), I wanna express how I will never turn to it because it fundamentally bores me to my core. There is no reason for me to want to use cameras because I will never want to give up my autonomy in creating art. I never want to become reliant on an inhuman object for expression, least of all if that object is created and controlled by manufacturing companies. I paint not because I want a painting but because I love the process of painting. So even in a future where everyone’s accepted it, I’m never gonna sway on this.

if i have to explain to you that using a camera to take a picture is not the same as using generative ai to generate an image then you are a fucking moron.

#ask me#anon#no more patience for this#i've heard this for the past 2 years#“an object created and controlled by companies” anon the company cannot barge into your home and take your camera away#or randomly change how it works on a whim. you OWN the camera that's the whole POINT#the entire point of a camera is that i can control it and my body to produce art. photography is one of the most PHYSICAL forms of artmakin#you have to communicate with your space and subjects and be conscious of your position in a physical world.#that's what makes a camera a tool. generative ai (if used wholesale) is not a tool because it's not an implement that helps you#do a task. it just does the task for you. you wouldn't call a microwave a “tool”#but most importantly a camera captures a REPRESENTATION of reality. it captures a specific irreproducible moment and all its data#read Roland Barthes: Studium & Punctum#generative ai creates an algorithmic IMITATION of reality. it isn't truth. it's the average of truths.#while conceptually that's interesting (if we wanna get into media theory) but that alone should tell you why a camera and ai aren't the sam#ai is incomparable to all previous mediums of art because no medium has ever solely relied on generative automation for its creation#no medium of art has also been so thoroughly constructed to be merged into online digital surveillance capitalism#so reliant on the collection and commodification of personal information for production#if you think using a camera is “automation” you have worms in your brain and you need to see a doctor#if you continue to deny that ai is an apparatus of tech capitalism and is being weaponized against you the consumer you're delusional#the fact that SO many tumblr leftists are ready to defend ai while talking about smashing the surveillance state is baffling to me#and their defense is always “well i don't engage in systems that would make me vulnerable to ai so if you own an apple phone that's on you”#you aren't a communist you're just self-centered

615 notes


Text

man i hate the current state of ai so much bc it's totally poisoned the well for when actual good and valuable uses of ai are developed ethically and sustainably....

like ai sucks so bad bc of capitalism. they want to sell you on a product that does EVERYTHING. this requires huge amounts of data, so they just scrape the internet and feed everything in without checking if it's accurate or biased or anything. bc it's cheaper and easier.

ai COULD be created with more limited data sets to solve smaller, more specific problems. it would be more useful than trying to shove the entire internet into an LLM and then trying to sell it as a multi tool that can do anything you want kinda poorly.

even in a post-capitalist world there are applications for ai. for example: resource management. data about how many resources specific areas typically use could be collected and fed into a model to predict how many resources should be allocated to a particular area.

this is something that humans would need to be doing and something that we already do, but creating a model based on the data would reduce the amount of time humans need to spend on this important task and reduce the amount of human error.

but bc ai is so shitty now anyone who just announces "hey we created an ai to do this!" will be immediately met with distrust and anger, so any ai model that could potentially be helpful will have an uphill battle bc the ecosystem has just been ruined by all the bullshit chatgpt is selling

#i'm not blaming people for being distrustful btw#they're right to be#they've been shown no evidence that ai can have positive impacts#but it just makes me mad bc ai isn't inherently evil#you can collect data ethically and accurately!#you can use ai to solve complicated problems that would allow humans to spend less time on them!#there are so many possible uses for ai that aren't just plagiarism machine#so don't write off all ai is my point#ai itself isn't the problem

7 notes


Text

i have a job interview in like three hours chat . literally shaking

#i only had one other interview before and i failed so so so miserably#i think i scripted my answers to this one pretty well but it was scheduled by their ai so i'm worried i'll go in and they won't actually--#-- want to talk to me. because the ai will just schedule shit without caring about human input#which. tbf is on them for using an ai to do their screenings but pop off i guess idk#yeah though i've been fine since yesterday when i found out but now that it's so close i'm genuinely shaking i'm so scared#i am thinking so many positive thoughts i am manifesting so hard my therapist would be really proud of me#woof.txt

2 notes


Text

The research librarians are giving a presentation on how we might use generative AI at work and one thing they suggested was “grant writing” and honestly, if you use ChatGPT to write a grant application I don’t think you deserve that money tbh

#sincerely if you cannot communicate effectively and chatGPT can do so better than you#then that says worlds about your ability to communicate rather than the AI#this lends credence more and more to my position that a lot of people in email jobs are bad at them and should be digging ditches#similarly many many people who dig ditches should be doing the email jobs#but the meritocracy is fake so you get this topsy turvy world instead#I literally do not care what potential uses cases exist for genAI I’ve become so negatively polarized by the credulity surrounding it#that I would push the AI extinction button in a heartbeat

5 notes


Text

it's easier to apply for jobs than ever! so what if you lost your insurance, anyone can get a job these days, even without meds. everyone is hiring! there's a "good employee" shortage!

well you just need to revamp your resume, here's a paid app subscription that can read it for you. rewrite the cover letter they won't read. google jobs in my area and then scrawl through Monster/Indeed/worbly. did you want to save the search? this was posted 98 days ago. over 1 billion applicants! this position is trending.

jobs i actively like doing and get paid for. your search returned no results. easy-apply with HireSpin! easy apply with SparkFire! easy apply with PenisFlash! with a few short clicks, get your information stolen.

watch out! the first 98 links on google are actually scams! they're false postings. oopsie. that business isn't even hiring. that other one is closed permanently. find one that looks halfway legit, google the company and the word "careers". go to their page. scroll past brightly-lit diversity stock photo JOIN US white sans serif. we are a unique, fresh, client-focused stock value capitalism. we are committed to excellence and selling your soul on ebay. we are DRIVEN with POWER to INNOVATE our greed. yippee! our company has big values of divisive decision making, sucking our dicks, and hating work-life balances. our values are to piss in your mouth. sign here and tell us if you have gender issues so we can get ahead of the sexual harassment claim. are you hispanic although let's be real we threw out the resume when we saw your last name.

sign up to LinkHub to access updates from this company. make a HirePlus account to apply. download the PoundLink app. your account has been created, click the link we sent you in 15 minutes. upload that resume. we didn't read the resume, manually fill in the lines now. what is your expected pay grade. oh actually we want hungry people, not people driven by a salary. cut a zero off that number, buddy, this is about opportunity, and we need to be thrifty. highest level of education. autofill is glitching. here is an AI generated set of questions. what is your favorite part of our sexy, sexy company. how do you resolve conflict. will you get our company logo tattooed on your person. warning: while our CEO is guilty of wage theft, we will absolutely refuse to hire a nonviolent felon.

thank you for your interest at WEEBLIX. we actually already filled this position internally. we actually never had that posting. we actually needed you to have 9 years of experience and since you have 10 years we think it might be too many? we'll be texting you. we'll email you. we'll keep your resume. definitely absolutely we won't just completely ignore you. look at your phone, there's already a spam text from Bethany@stealyouridentity. they're hiring!

wait, did you get an interview? well that's special, aren't you lucky. out of 910 jobs you applied to, one answered, finally. and funny story! actually the position isn't exactly as advertised, we are looking for someone curious and dedicated. it's sort of more managerial. no, the pay doesn't change - you won't have any leadership title. now take this 90 minute assessment. in order to be a dog groomer, we need you to explain cell biology. in order to be a copyeditor, write a tiny dissertation about the dwindling supply of helium on the planet. answer our riddles three. great job! we just need to push this up to Tracy in HR who will send it to Rodney who is actually in charge. and then of course it's jay's decision and then greg will need to see you naked and if you survive you'll be given a drug test and a full anal examination.

and of course you'll be hungry this whole time, aren't you, months and months of the same shit. months of no insurance, no meds, no funding, barely able to afford the internet and the phone and the rent - all things you need in order to even apply for our thing. but do it again! do it again and again and again, until you flip inside out and turn into a being of pure dread!

you're not hired yet because you're lazy. there's over one million AI-generated hallucinated jobs in your area. don't worry. with zipruiter, hiring and firing is easier than ever. sign up. stay on-call.

in the meantime, little peon - why don't you just fucking suffer.

#spilled ink#well you'll never guess how i feel about this#ps im hispanic. nonbinary. disabled. girl i cannot pick a fucking struggle.

15K notes


Text

I am very wary of people going "China does it better than America" because most of it is just reactionary rejection of your overlord in favor of his rival, but this story is 1. absolutely legit and 2. way too funny.

US wants to build an AI advantage over China, uses their part in the chip supply chain to cut off China from the high-end chip market.

China's chip manufacturing is famously a decade behind, so they can't advance, right?

They did see it as a problem, but what they then did is get a bunch of Computer Scientists and Junior Programmers fresh out of college and funded their research in DeepSeek. Instead of trying to improve output by buying thousands of Nvidia graphics cards, they tried to build a different kind of model, that allowed them to do what OpenAI does at a tenth of the cost.

Them being young and at a hedge fund's AI research branch, and not at established Chinese tech giants, seems to be important because Chinese corporate culture is apparently full of internal sabotage, so newbies fresh from college being told they have to solve the hardest problems in computing was way more efficient than what usually is done. The result:

American AIs are shook. Nvidia, the only company that is actually making a profit because they are supplying hardware, took a hit. This is just the market being stupid, Nvidia also sells to China. And the worst part for OpenAI: DeepSeek is open source.

Anybody can implement deepseek's model, provided they have the hardware. They are totally independent from DeepSeek, as you can run it from your own network. I think you will soon have many more AI companies sprouting out of the ground using this as its base.

What does this mean? AI still costs too much energy to be worth using. The head of the project says as much himself: "there is no commercial use, this is research."

What this does mean is that OpenAI's position is severely challenged: there will soon be a lot more competitors using the DeepSeek model, more people can improve the code, OpenAI will have to ask for much lower prices if it eventually does want to make a profit because a 10 times more efficient opensource rival of equal capability is there.

And with OpenAI or anybody else having lost the ability to get the monopoly on the "market" (if you didn't know, no AI company has ever made a single cent in profit, they all are begging for investment), they probably won't be so attractive for investors anymore. There is a cheaper and equally good alternative now.

AI is still bad for the environment. Dumb companies will still want to push AI on everything. Lazy hacks trying to push AI art and writing to replace real artists will still be around and AI slop will not go away. But one of the main drivers of the AI boom is going to be severely compromised because there is a competitor who isn't in it for immediate commercialization. Instead you will have a more decentralized open source AI field.

Or in short:

3K notes


Text

one of those things you really gotta learn is that it's insanely easy to get people to get mad at anything if you just phrase it the right way. slap the word "woke" on anything you want conservatives to hate. call something "extremism" or "radical" to get a centrist to fear it. say that a particular take "comes from a position of privilege" to get leftists to denounce it (that's right, even us leftists are susceptible to propaganda that uses leftist language). all these are simplistic examples of course, but it's all to say that certain terms, slogans, and phrases just kind of turn off people's critical thinking, especially ones with negative connotation. there are so many words that are just shorthand for "bad." once a term reaches buzzword status, it becomes practically useless.

it goes for general attitudes too. "this piece of news is a sign that the world is getting worse" is a shockingly easy idea to sell, even when the "piece of news" in question is completely fabricated. I'll often see leftists uncritically sharing right wing propaganda that rides on the back of the "humans bad, nature good" cliche, or the "US education system bad" cliche, or even the current "AI bad" cliche. most of the details of a given post will go entirely unquestioned as long as they support whatever attitude is most popular right now. and none of us is immune to this.

(the funny thing is that I'm kind of playing with fire here even making this post. folks are so used to just reacting to shit that I have no way of predicting which buzzword I included here will trigger a negative association in someone's mind and convince them I'm taking some random antagonistic stance on a topic that they've been really fired up about lately.)

6K notes


Text

another undergrad TA to double down on #4 and the tradition of the rushed final paper, especially in lower division courses where literally 30/60 of the submissions in any given section are clearly redbull fueled, and all of my cohort still remembers that shitty paper we all trauma bonded over—it is genuinely relatable and endearing to watch freshies go through the rite of passage and I will always be as generous as I can bc I have that in mind

also I'm literally never bothered by "poor" (or unclear/grammatically incorrect) writing that people send over for help, bc at least you're asking for help, and you're giving it an effort. and that will always stick in my mind when I'm grading -- conversely, I will also always remember the students who didn't give enough shits to turn in their own work. and they're not the ones I send recommendations for or share connections with, lol. that's a separate point, though

chatgpt is the coward's way out. if you have a paper due in 40 minutes you should be chugging six energy drinks, blasting frantic circus music so loud you shatter an eardrum, and typing the most dogshit essay mankind has ever seen with your own carpal tunnel laden hands

#side tangent on my last point though: being kind and honest will actually get you so far in uni and the opposite is also true#I've held back sharing opportunities with students who I know have been rude to other students before#but I've also dropped little name drops and made strategic shoulder taps and gotten ppl whole jobs/workshop positions#with my buddies at the department almost exclusively bc I know ppl who are genuine and kind and honest#and ask for very little in return. and I always want to reward that when I can#anyway this isnt a soapbox for me to be all “be nice to your TAs bc it can get you places”#it IS a little soapbox for me to go “stop fucking using AI and also just don't generally be an ass”#word rlly goes around in uni departments and so many ppl don't know what they've lost bc of reputation

60K notes


Note

What I don't get is that other than your support of AI image generation, you're SO smart and well read and concerned with ethics. I genuinely looked up to you! So, what, ethics for everyone except for artists, or what? Is animation (my industry, so maybe I care more than the average person) too juvenile and simplistic a medium for you to care about its extinction at the hands of CEOs endorsing AI? This might sound juvenile too, but I'm kinda devastated, because I genuinely thought you were cool. You're either with artists or against us imho, on an issue as large as this, when already the layoffs in the industry are insurmountable for many, despite ongoing attempts to unionize. That user called someone a fascist for pointing this out, too. I guess both of you feel that way about those of us involved in class action lawsuits against AI image generation software.

i can't speak for anyone else or the things they've said or think of anyone. that said:

1. you should not look up to people on the computer. i'm just a girl running a silly little blog.

2. i am an artist across multiple mediums. the 'no true scotsman' bit where 'artists' are people who agree with you and you can discount anyone who disagrees with you as 'not an artist' and therefore fundamentally unsympathetic to artists will make it very difficult to actually engage in substantive discussion.

3. i've stated my positions on this many times but i'll do it one more: i support unionization and industrial action. i support working class artists extracting safeguards from their employers against their immiseration by the introduction of AI technology into the work flow (i just made a post about this funnily enough). i think it is Bad for studio execs or publishers or whoever to replace artists with LLMs. However,

4. this is not a unique feature of AI or a unique evil built into the technology. this is just the nature of any technological advance under capitalism, that it will be used to increase productivity, which will push people out of work and use the increased competition for jobs to leverage that precarity into lower wages and worse conditions. the solution to this is not to oppose all advances in technology forever--the solution is to change the economic system under which technologies are leveraged for profit instead of general wellbeing.

5. this all said anyone involved in a class action lawsuit over AI is an enemy of art and everything i value in the world, because these lawsuits are all founded in ridiculous copyright claims that, if legitimated in court, would be cataclysmic for all transformative art--a victory for any of these spurious boondoggles would set a precedent that the bar for '''infringement''' is met by a process that is orders of magnitude less derivative than collage, sampling, found art, cut-ups, and even simple homage and reference. whatever windmills they think they are going to defeat, these people are crusading for the biggest expansion of copyright regime since mickey mouse and anyone who cares at all about art and creativity flourishing should hope they fail.

2K notes


Text

Some positivity in these turbulent AI times

*This does not minimize the crisis at hand, but is aimed at easing any anxieties.

With every social media platform selling our data to AI companies now, there is very little way to avoid being scraped. The sad thing is many of us still NEED social media to advertise ourselves and get seen by clients. I can't help but feel that we as artists are not at risk of losing our livelihoods, and here is why:

Just because your data is available does not mean that AI companies will/want to use it. Your work may never end up being scraped at all.

It is very unlikely that someone who uses AI art prompts can replace you (if your work is scraped). Art Directors and clients HAVE to work with people, and the person using AI art cannot back up what a machine made. Their final product for a client will never be substantial since AI prompts cannot give consistent results and requested edits will be impossible.

AI creators will NEVER be able to make a move unless us artists make a move first. They will always be behind in the industry.

AI creators lack the fundamental skills of art and therefore cannot detect when something looks off in a composition. Many professional artists like me get hired repeatedly for a reason! WE as artists know what we're doing.

The art community is close-knit and can fund itself. Look at furry commissions, Patreon, art conventions, Hollywood. Real art will always be able to make money and find an audience because it's how we communicate as a species.

AI creators lack the passion and ambition to make a career out of AI prompts. Not that they couldn't start drawing at any time, but these tend to be the people who don't enjoy creating art to begin with.

There is no story or personal experience that can be shared about AI prompts so paying customers will lose interest quickly.

Art is needed to help advance society along, history says so. To do that, companies will need to hire artists (music, architecture, photography, design, etc). The best way for us artists to keep fighting for our voice to be heard right now is staying visible. Do not hide or give in! That is what they want. Continue posting online and/or in person and sharing your art with the world. It takes a community and we need you!

#text#ai#artists on tumblr#art#im usually right#whenever I feel mostly calm in a crisis it's a good sign

5K notes


Text

It feels kinda wild I've seen no one mention the huge controversy NaNoWriMo was in about 7 months ago (Link to a reddit write up, there's also this google doc on it) in this whole recent AI discourse. The main concerns people had were related to the 'young writers' forum, a moderator being an alleged predator, and general moderation practices being horrible and not taking things like potential grooming seriously.

About 5 months ago, after all of that went down, MLs or 'Municipal Liaisons', their local volunteer organisers for different regions of the world, were offered a horrible new agreement that basically tried to shut them up about the issues they'd been speaking up about. Some of these issues included racism and ableism that the organisation offered zero support with.

When there was pushback and MLs kept sharing what was going on, NaNoWriMo removed ALL OF THEM as MLs and sent in a new, even more strict agreement that they would have to sign to be allowed back in their volunteer position.

This agreement included ways of trying to restrict their speech even further, from not being able to share 'official communications' to basically not being allowed to be in discord servers to talk to other MLs in places not controlled by NaNoWriMo. You also had to give lots of personal information and submit to a criminal background check, despite still explicitly leaving their local regions without support and making it very clear everyone was attending the OFFICIAL in person events 'at their own risk'.

Many MLs refused to sign and return. Many others didn't even know this was happening, because they did not get any of the emails sent for some reason. NaNoWriMo basically ignored all their concerns and pushed forward with this.

Many local regions don't exist anymore. I don't know who they have organising the rest of them, but it's likely spineless people that just fell in line, people who just care about the power, or new people who don't understand what's going on with this organisation yet. Either way, this year is absolutely going to be a mess.

Many of the great former MLs just went on to organise their writing communities outside of the official organisation. NaNoWriMo does not own the concept of writing a novel in a month.

R/nanowrimo is an independent subreddit that has been very critical of the organisation since this all happened, and people openly recommend alternatives for word tracking, community, etc there, so I highly recommend checking it out.

I've seen Trackbear recommended a lot for an alternative to the word tracking / challenge, and will probably be using it myself this November.

Anyway, just wanted to share because a lot of people haven't heard about this, and I think it makes it extremely clear that the arguments about "classism and ableism" @nanowrimo is using right now in defense of AI are not vaguely misguided, but just clear bullshit. They've never given a single shit about any of that stuff.

1K notes


Text

Virgin! Simon "Ghost" Riley

Warnings: 18+, Smut, Inexperienced! Simon, Virgin! Simon, Riding, Unprotected Sex, The Mask Stays On, No Pronouns Used For Reader Except 'You'.

Virgin! Simon who can hardly believe his luck as he watches and feels you ride him, your walls tight as you bounce on his cock, calling him your 'big guy'. His hands are on your hips, his own slamming up into yours in a rhythm you'd set for him.

He's sloppy. Unaccustomed to the euphoric stiffening of the knot in his stomach, pulling ever tighter with every slap of your ass against his thighs. Sure, he's had many an orgasm before, but never at the hands of another. Never so strong; a force of nature in its own right. He's breathing heavily - panting; you swear you can see him drooling from the corner of his mouth. Something viscous is filling you now. Not the full force of his seed, but a precursor to it. A warning.

The mask stays on (of course) during this exchange, but you can see the way he fights to keep his eyes open, to keep himself from betraying every sensibility and throwing his head back, screwing his eyes shut as his length is nestled inside you, a thick bump forming in your stomach with every thrust. Your hand slips down your front and you press it. Simon jolts, moaning between gritted teeth as you press, hard, harder still, forcing his cock into an even tighter position.

He's arching into you, the sensation of his veins and his bulbous tip throbbing against your insides enough to let you know that he's close.

You coax him. Goad him. "Y'gonna cum just for me, big boy? Gonna fuck me 'til I can't walk straight?"

He can't talk. Can't even think. For the first time in his life, he's fucked dumb. You can see it in the way his eyes roll back into his skull when you clench around him. Suffocate him. His hips stutter. His cock nudges something deep within you. You gasp.

It only took your calling him your "Good boy," to have him unravel before your eyes. He can't contain the strangled growl that is exorcised from him as he cums, deep and hard, thick, hot ropes of semen filling you. You can feel it, as if painting your insides white, bathing you in an unfettered warmth. His hands are cast-iron on your hips, pulling you down onto him as if to stop you from pulling away, to prevent even a drop of his seed from escaping you. He digs his heels into the bench beneath you, grounding himself.

And, as your orgasm sparks and ripples through you, you hunch over Simon, hands gripping his shoulders, squeezing him. You moan, long and loud, milking Simon for all he's worth. And now, between the sheets of his post-orgasm haze, he watches you, the ring of light above your head from the luminescent bulb of the changing room painting you as a saint in his eyes.

He's never going to let what you have - what you've shown him - go. No matter the cost. Not when this feeling of completion is steadfast within him, electrifying every fibre in his body, all the way down to his bones.

Reblog for more content like this! It helps creators like myself tremendously and it is greatly appreciated :-)


#simon ghost riley#simon riley#ghost#ghost cod#cod ghost#ghost mw2#simon ghost riley x reader#simon ghost riley x you#simon ghost riley headcanons#simon ghost riley smut#simon riley headcanons#simon riley x reader#simon riley x you#simon riley smut#ghost smut#cod#cod x reader#cod smut#cod headcanons#mw2 smut

6K notes


Text

Penguin Random House, AI, and writers’ rights

NEXT WEDNESDAY (October 23) at 7PM, I'll be in DECATUR, GEORGIA, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

My friend Teresa Nielsen Hayden is a wellspring of wise sayings, like "you're not responsible for what you do in other people's dreams," and my all time favorite, from the Napster era: "Just because you're on their side, it doesn't mean they're on your side."

The record labels hated Napster, and so did many musicians, and when those musicians sided with their labels in the legal and public relations campaigns against file-sharing, they lent both legal and public legitimacy to the labels' cause, which ultimately prevailed.

But the labels weren't on musicians' side. The demise of Napster and with it, the idea of a blanket-license system for internet music distribution (similar to the systems for radio, live performance, and canned music at venues and shops) firmly established that new services must obtain permission from the labels in order to operate.

That era was very good for the labels. The three-label cartel – Universal, Warner and Sony – was in a position to dictate terms to services like Spotify, which handed over billions of dollars' worth of stock, and let the Big Three co-design the royalty scheme that Spotify would operate under.

If you know anything about Spotify payments, it's probably this: they are extremely unfavorable to artists. This is true – but that doesn't mean they're unfavorable to the Big Three labels. The Big Three get guaranteed monthly payments (much of which is booked as "unattributable royalties" that the labels can disburse or keep as they see fit), along with free inclusion on key playlists and other valuable services. What's more, the ultra-low payouts to artists increase the value of the labels' stock in Spotify, since the less Spotify has to pay for music, the better it looks to investors.

The Big Three – who own 70% of all music ever recorded, thanks to an orgy of mergers – make up the shortfall from these low per-stream rates with guaranteed payments and promo.

But the indy labels and musicians that account for the remaining 30% are out in the cold. They are locked into the same fractional-penny-per-stream royalty scheme as the Big Three, but they don't get gigantic monthly cash guarantees, and they have to pay for the playlist placement the Big Three get for free.

Just because you're on their side, it doesn't mean they're on your side:

https://pluralistic.net/2022/09/12/streaming-doesnt-pay/#stunt-publishing

In a very important, material sense, creative workers – writers, filmmakers, photographers, illustrators, painters and musicians – are not on the same side as the labels, agencies, studios and publishers that bring our work to market. Those companies are not charities; they are driven to maximize profits and an important way to do that is to reduce costs, including and especially the cost of paying us for our work.

It's easy to miss this fact because the workers at these giant entertainment companies are our class allies. The same impulse to constrain payments to writers is in play when entertainment companies think about how much they pay editors, assistants, publicists, and the mail-room staff. These are the people that creative workers deal with on a day to day basis, and they are on our side, by and large, and it's easy to conflate these people with their employers.

This class war need not be the central fact of creative workers' relationship with our publishers, labels, studios, etc. When there are lots of these entertainment companies, they compete with one another for our work (and for the labor of the workers who bring that work to market), which increases our share of the profit our work produces.

But we live in an era of extreme market concentration in every sector, including entertainment, where we deal with five publishers, four studios, three labels, two ad-tech companies and a single company that controls all the ebooks and audiobooks. That concentration makes it much harder for artists to bargain effectively with entertainment companies, and that means that it's possible – likely, even – for entertainment companies to gain market advantages that aren't shared with creative workers. In other words, when your field is dominated by a cartel, you may be on their side, but they're almost certainly not on your side.

This week, Penguin Random House, the largest publisher in the history of the human race, made headlines when it changed the copyright notice in its books to ban AI training:

https://www.thebookseller.com/news/penguin-random-house-underscores-copyright-protection-in-ai-rebuff

The copyright page now includes this phrase:

No part of this book may be used or reproduced in any manner for the purpose of training artificial intelligence technologies or systems.

Many writers are celebrating this move as a victory for creative workers' rights over AI companies, who have raised hundreds of billions of dollars in part by promising our bosses that they can fire us and replace us with algorithms.

But these writers are assuming that just because they're on Penguin Random House's side, PRH is on their side. They're assuming that if PRH fights against AI companies training bots on their work for free, this means PRH won't allow bots to be trained on their work at all.

This is a pretty naive take. What's far more likely is that PRH will use whatever legal rights it has to insist that AI companies pay it for the right to train chatbots on the books we write. It is vanishingly unlikely that PRH will share that license money with the writers whose books are then shoveled into the bot's training-hopper. It's also extremely likely that PRH will try to use the output of chatbots to erode our wages, or fire us altogether and replace our work with AI slop.

This is speculation on my part, but it's informed speculation. Note that PRH did not announce that it would allow authors to assert the contractual right to block their work from being used to train a chatbot, or that it was offering authors a share of any training license fees, or a share of the income from anything produced by bots that are trained on our work.

Indeed, as publishing boiled itself down from the thirty-some mid-sized publishers that flourished when I was a baby writer into the Big Five that dominate the field today, their contracts have gotten notably, materially worse for writers:

https://pluralistic.net/2022/06/19/reasonable-agreement/

This is completely unsurprising. In any auction, the more serious bidders there are, the higher the final price will be. When there were thirty potential bidders for our work, we got a better deal on average than we do now, when there are at most five bidders.

Though this is self-evident, Penguin Random House insists that it's not true. Back when PRH was trying to buy Simon & Schuster (thereby reducing the Big Five publishers to the Big Four), they insisted that they would continue to bid against themselves, with editors at Simon & Schuster (a division of PRH) bidding against editors at Penguin (a division of PRH) and Random House (a division of PRH).

This is obvious nonsense, as Stephen King said when he testified against the merger (which was subsequently blocked by the court): "You might as well say you’re going to have a husband and wife bidding against each other for the same house. It would be sort of very gentlemanly and sort of, 'After you' and 'After you'":

https://apnews.com/article/stephen-king-government-and-politics-b3ab31d8d8369e7feed7ce454153a03c

Penguin Random House didn't become the largest publisher in history by publishing better books or doing better marketing. They attained their scale by buying out their rivals. The company is actually a kind of colony organism made up of dozens of once-independent publishers. Every one of those acquisitions reduced the bargaining power of writers, even writers who don't write for PRH, because the disappearance of a credible bidder for our work into the PRH corporate portfolio reduces the potential bidders for our work no matter who we're selling it to.

I predict that PRH will not allow its writers to add a clause to their contracts forbidding PRH from using their work to train an AI. That prediction is based on my direct experience with two of the other Big Five publishers, where I know for a fact that they point-blank refused to do this, and told the writer that any insistence on including this clause would lead to the offer being rescinded.

The Big Five have remarkably similar contracting terms. Or rather, unremarkably similar contracts, since concentrated industries tend to converge in their operational behavior. The Big Five are similar enough that it's generally understood that a writer who sues one of the Big Five publishers will likely find themselves blackballed at the rest.

My own agent gave me this advice when one of the Big Five stole more than $10,000 from me – canceled a project that I was part of because another person involved with it pulled out, and then took five figures out of the kill fee specified in my contract, just because they could. My agent told me that even though I would certainly win that lawsuit, it would come at the cost of my career, since it would put me in bad odor with all of the Big Five.

The writers who are cheering on Penguin Random House's new copyright notice are operating under the mistaken belief that this will make it less likely that our bosses will buy an AI in hopes of replacing us with it:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

That's not true. Giving Penguin Random House the right to demand license fees for AI training will do nothing to reduce the likelihood that Penguin Random House will choose to buy an AI in hopes of eroding our wages or firing us.

But something else will! The US Copyright Office has issued a series of rulings, upheld by the courts, asserting that nothing made by an AI can be copyrighted. By statute and international treaty, copyright is a right reserved for works of human creativity (that's why the "monkey selfie" can't be copyrighted):

https://pluralistic.net/2023/08/20/everything-made-by-an-ai-is-in-the-public-domain/

All other things being equal, entertainment companies would prefer to pay creative workers as little as possible (or nothing at all) for our work. But as strong as their preference for reducing payments to artists is, they are far more committed to being able to control who can copy, sell and distribute the works they release.

In other words, when confronted with a choice of "We don't have to pay artists anymore" and "Anyone can sell or give away our products and we won't get a dime from it," entertainment companies will pay artists all day long.

Remember that dope everyone laughed at because he scammed his way into winning an art contest with some AI slop then got angry because people were copying "his" picture? That guy's insistence that his slop should be entitled to copyright is far more dangerous than the original scam of pretending that he painted the slop in the first place:

https://arstechnica.com/tech-policy/2024/10/artist-appeals-copyright-denial-for-prize-winning-ai-generated-work/

If PRH was intervening in these Copyright Office AI copyrightability cases to say AI works can't be copyrighted, that would be an instance where we were on their side and they were on our side. The day they submit an amicus brief or rulemaking comment supporting no-copyright-for-AI, I'll sing their praises to the heavens.

But this change to PRH's copyright notice won't improve writers' bank-balances. Giving writers the ability to control AI training isn't going to stop PRH and other giant entertainment companies from training AIs with our work. They'll just say, "If you don't sign away the right to train an AI with your work, we won't publish you."

The biggest predictor of how much money an artist sees from the exploitation of their work isn't how many exclusive rights we have, it's how much bargaining power we have. When you bargain against five publishers, four studios or three labels, any new rights you get from Congress or the courts are simply transferred to them the next time you negotiate a contract.

As Rebecca Giblin and I write in our 2022 book Chokepoint Capitalism:

Giving a creative worker more copyright is like giving your bullied schoolkid more lunch money. No matter how much you give them, the bullies will take it all. Give your kid enough lunch money and the bullies will be able to bribe the principal to look the other way. Keep giving that kid lunch money and the bullies will be able to launch a global appeal demanding more lunch money for hungry kids!

https://chokepointcapitalism.com/

As creative workers' fortunes have declined through the neoliberal era of mergers and consolidation, we've allowed ourselves to be distracted with campaigns to get us more copyright, rather than more bargaining power.

There are copyright policies that get us more bargaining power. Banning AI works from getting copyright gives us more bargaining power. After all, just because AI can't do our job, it doesn't follow that AI salesmen can't convince our bosses to fire us and replace us with incompetent AI:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

Then there's "copyright termination." Under the 1976 Copyright Act, creative workers can take back the copyright to their works after 35 years, even if they sign a contract giving up the copyright for its full term:

https://pluralistic.net/2021/09/26/take-it-back/

Creative workers from George Clinton to Stephen King to Stan Lee have converted this right to money – unlike, say, longer terms of copyright, which are simply transferred to entertainment companies through non-negotiable contractual clauses. Rather than joining our publishers in fighting for longer terms of copyright, we could be demanding shorter terms for copyright termination, say, the right to take back a popular book or song or movie or illustration after 14 years (as was the case in the original US copyright system), and resell it for more money as a risk-free, proven success.

Until then, remember, just because you're on their side, it doesn't mean they're on your side. They don't want to prevent AI slop from reducing your wages, they just want to make sure it's their AI slop that puts you on the breadline.

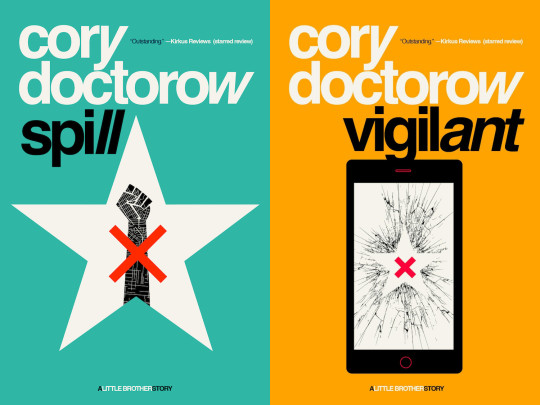

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/19/gander-sauce/#just-because-youre-on-their-side-it-doesnt-mean-theyre-on-your-side

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#publishing#penguin random house#prh#monopolies#chokepoint capitalism#fair use#AI#training#labor#artificial intelligence#scraping#book scanning#internet archive#reasonable agreements

728 notes


Text

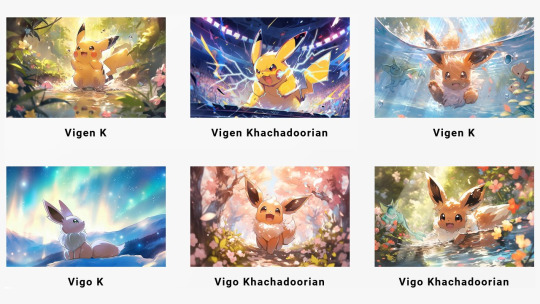

So... Pokémon has officially revealed the Top 300 quarter-finalists for the TCG illustration contest. I myself have participated, but unfortunately, did not make it. There were many awesome artworks!!

However... there is a suspected case of an individual who entered under multiple identities (with similar initials) using AI-generated entries: V.K shows up in 6 entries. The weird, unnatural proportions, positions, and angles (look at Vaporeon, for instance) are all indications of AI too.

The rules clearly state that using multiple identities leads to disqualification, yet the judges failed to take into account the suspicious number of entries in a similar style, appearing more than three times (surpassing the maximum of 3 entries) under the same initials.

"The rules in the contest affirmed that: submissions must be submitted by the Entrant. Any attempt, successful or otherwise, by any Entrant to obtain more than the permitted number of Submissions by using multiple and/or different identities, forms, registrations, addresses, or any other method will void all of that Entrant's Submissions and that Entrant may be disqualified at Sponsors' reasonable discretion."

This is very disappointing, Pokemon TCG. Not even just disappointing, this is very shameful and distasteful to artists who did not make it.

Edit: There also seem to be 2 more entries that are AI-generated but under a different name (Pikachu sleeping with a night landscape in the background, and another one sleeping on a tree root)

Here is a really good educational thread that explains the errors in the ai work (on Twitter)

UPDATE: Pokémon TCG has actually addressed the issue on Twitter, has disqualified the person with multiple identities, and will be selecting more entries from other artists!!

#pokemon tcg#pokemon illustration contest#pokemon tcg contest#pokemon illustration contest 2024#pokemon#ptcgic 2024#honestly...i am glad i did not make it in. Imagine getting in but all is talked about is the genAI “work”#Anyhow#congrats to all artists who have made it!!! (except for the folks who made these prompts)

1K notes


Text

🎀 NEW IDEA: ASK CHAT GPT 🎀

ꨄ Use AI to your advantage when it comes to manifesting and shifting…

Although AI can get a little weird and scary sometimes, it’s actually such a great way to get information, motivation and inspiration all in one

Instead of hounding bloggers for the same thing all the time, go to Chat GPT.

Now, how about i give you a few examples, let’s imagine someone called maya 𖨆

maya is having trouble because she feels like when she tries to induce the void, she always falls asleep and that’s taken away so many years from her dream life

she asks Chat GPT: “I keep falling asleep every time I try to induce the void, I feel so frustrated all the time and I need some help”

Chat GPT answers: “Hi Maya, falling asleep during void practice is common, so don’t be too hard on yourself. One way to combat this is by practicing at a time when you feel awake and alert, like in the morning or after a light exercise session. Another tip is to sit up in a comfortable position rather than lying down, as it can help you stay focused and avoid dozing off. Keep practicing with patience—it’s all part of the process!”

now let’s imagine joey 𖨆

joey is feeling frustrated because he feels like he hasn’t progressed, he’s been in the community for 2+ years and really wants his dream life

he asks: “I’m feeling so frustrated and upset, like I want to give up, I’ve been trying to induce the void and get my dream life for over 2 years, I need motivation”

Chat GPT responds; “Hi Joey, I know it’s tough, but remember that persistence is key—sometimes the journey teaches you just as much as the destination. Instead of focusing on the time passed, celebrate the fact that you’re still trying, which shows incredible resilience. Take a short break if you need to, and reconnect with why you started. Visualize your dream life vividly, and remind yourself it’s worth the effort. Progress might be closer than you think!”

now chloe 𖨆 is feeling really powerless lately, circumstances hit her hard on some days and she feels stuck, she wants to be reminded how the void is so possible and so easy and she’s so capable of it all

she asks: “Can you remind me how powerful I am? Some days circumstances hit so hard and it gets tough!! Can you explain manifesting from a logical point of view to remind me how easy it all is?”

Chat GPT responds: “Hi Chloe, let me remind you of this simple truth: you are the creator of your reality, the God of your world. From a logical point of view, everything you experience stems from your thoughts, beliefs, and focus. Circumstances are just reflections—they only hold power because you give it to them. Shift your focus, and the reflection changes effortlessly. When you truly accept that you’re the one in control, nothing can stand in your way. You’ve always had the power!”

Now you can see how well it works!! You can even use it to give you affirmations or help you with vaunts. You can be as specific as you want, trauma dump as much as you want and say whatever you want without crossing boundaries or being judged. You can use Chat GPT to script, i’ve used it so many times and it has given me so many ideas. And your script will still feel like your own, just see it as you collaborating with Chat GPT, and it helping you put your thoughts into words.

You can even use it when you’re having one of those days and just need to be reminded of the fact that you’re in charge. You can even use it for some tough love or to comfort you as you vent.

Like guys you can do so many things with this, I love Chat GPT so much we’re locked in like this:

LMFAOOO IM JOKING (or am i? 😏)

But in all seriousness, some of you need this. Flooding bloggers’ asks and DMs with questions that they have repeated the answers to multiple times, asking things you damn well know the answer to or just asking for motivation repeatedly can take away from people who actually have a good question to ask and actually need help. Like, clogging up a blogger’s inbox by repeatedly venting about the 3d, or asking “hOw Do I gEt iNtO tHe VoId?” knowing damn well you know the answer to that, will prevent a blogger from noticing someone who actually has a good question that many people might also have and that would actually spark an interesting discussion.

So just ask Chat GPT, it’s okay to have doubts creep in from time to time, it’s okay to need a reminder of basic things, it’s okay to forget how powerful you are sometimes, but you can literally ask Chat GPT to help you with that and it will. Instead of waiting around for bloggers to answer your dm or ask just to say the same thing, you can get actual help, and get it when you want it and how you want it.

Why don’t you ask “explain the law of assumption to me like i’m 5”

“explain the void state to me from a scientific view to help me believe”

“give me some dream body affirmations”

“i’ve been so demotivated, remind me who tf i am again”

“I’m going to come from a filthy rich family when I permashift, can you give me some £10Million+ per year jobs that my desired parents can do”

“I feel so lazy today, bully me into inducing the pure consciousness instead of procrastinating”

you don’t need to harass bloggers for these things when you can have immediate access to the answers you seek.

ꨄ 🧸🎀 Go download Chat GPT and knock yourself out!! 💋

#salemlunaa#shiftblr#reality shifting#shifting#loa#permashifting#respawning#law of assumption#i am state#void state#success story#the void state#void#void state tips#the void#voidstate#pure consciousness#shifting consciousness#void concept#manifesting#master manifestor#manifestation#shifting blog#shifting community

827 notes
