#anti cloud ai

Explore tagged Tumblr posts

Text

Time for a new edition of my ongoing vendetta against Google fuckery!

Hey friends, did you know that Google is now using Google Docs to train its AI, whether you like it or not? (link goes to: zdnet.com, July 5, 2023). Oh, and on Monday, Google updated its privacy policy to say that it can train its AI (Bard and Cloud AI) on any publicly available data it scrapes, including whatever its users post publicly, period. (link goes to: The Verge, 5 July 2023). Digital Trends also covers this policy change (link goes to: Digital Trends, 5 July 2023). There are a lot more articles; these are just the most succinct ones explaining what's happening.

FURTHER REASONS GOOGLE AND GOOGLE CHROME SUCK TODAY:

Stop using Google Analytics, warns Sweden’s privacy watchdog, as it issues over $1M in fines (link goes to: TechCrunch, 3 July 2023) [TLDR: Google Analytics got caught exporting European users' data to the US to be 'processed' by 'US government surveillance,' which is HELLA ILLEGAL, and the Swedish companies using it got fined. I'm not going into the Five Eyes, Fourteen Eyes, etc. agreements, but you should read up on those to understand why the 'US government surveillance' people might ask Google to do this for countries that are not a part of the various Eyes agreements - and before anyone jumps in with "the US sucks!": YES, but they are 100% not the only government buying foreign citizens' data; this is just the one the Swedes caught. Today.]

PwC Australia ties Google to tax leak scandal (link goes to: Reuters, 5 July 2023). [TLDR: PwC Australia, the Australian arm of the accounting firm PwC, slipped Google "confidential information about the start date of a new tax law leaked from Australian government tax briefings." Gosh, why would Google want to spy on governments about tax laws? Can't think of any reason they would want to be able to clean house/change policy/update their user agreement to get around new restrictions before those restrictions or fines hit. Can you?]

SO - here is a very detailed list of browsers, updated on 28 June 2023 on slant.com, that are NOT based on Google Chrome (note: any browser that says 'Chromium-based' is just Google wearing a party mask. It means that Google AND that other party have access to all your data). This is an excellent list that shows pros and cons for each browser, including who the creator is and what kinds of policies they have (for example, one con for Pale Moon is that its creator dislikes Tor and thinks all websites should be hostile to it).

#you need to protect yourself#anti google#anti chrome#anti chromium#chromium based browsers#internet security#current events#i recommend firefox#but if you have beef with it#here are alternatives!#so called ai#anti artificial intelligence#anti chatgpt#anti bard#anti cloud ai#data scraping

101 notes

Text

36 notes

Text

prince cloud

#idk how to tag this#i need to sleep#art#digital art#my art#made in ibis paint#my oc art#anti ai art#artists on tumblr#ibispaintx#oc artist#small artist#character illustration#cats#kottyoverse#Prince Cloud#my characters#character art#character design#original character

11 notes

Text

Kiki’s Delivery Service Screencap || millieueu

Redrew a scene from my comfort film, Kiki’s Delivery Service. It also acts as a vent piece, because what ChatGPT is doing to Studio Ghibli is beyond disrespectful and goes against Hayao Miyazaki’s wishes. I can’t bear to look at people who use this AI feature and defend this act. It’s incredibly heartbreaking 💔

All drawn with my finger, no AI used. It’s not as detailed as the original, but I tried. I might make more art related to Studio Ghibli in the future.

App used: IbisPaintX

Time taken: 2 hours and 8 minutes

#kikis delivery service#screencap#redraw#clouds#flowers#green#blue#vent#venting#vent art#fanart#fandom#ibispaintx#my artstyle#my artwork#drawing#doodle#art#artist#digital art#studio ghibli#march 2025#artist on tumblr#anti generative ai#anti ai art#anime#anime fanart#millieueu

7 notes

Note

something something grow a pair and state thoughts on ai?

So, funny story: I made a post about this before, back when the topic tag for it was trending. And like, I still stand by that, sans the part where I call the AI itself a form of art under my definition. A little while after that, I saw a post that, while definitely not in response to mine, made the point that while we should hate AI art for the rampant theft of jobs and content, it's somehow bad to dislike it as Bad Art or Not Art because "gatekeeping art is baddd". Which, like, in the context of someone drawing stick figures or painting giant blocks of color, is valid; we shouldn't gatekeep art from people. I still think AI doesn't deserve that privilege. Like, not to try and define art again, but, like, hold on, let me grab something.

This is an AI-generated adoptable from DeviantArt. Now, I have to ask, what's being expressed here, besides "cute girl in big hoodie (despite the one on the left not having a hoodie)"? Like, it's easy to take these apart mechanically, but conceptually? It's somehow easier. Like, part of character design is visually communicating stuff about the character. There's nothing here besides anime girl in big outfit with minor armor details, maybe? Like, nothing else here is coherent! She looks sampled off of Genshin and Honkai characters, but that's it. The curtains are just blue, and it's dull and boring because of it. Why is the jacket neon green? The prompter wanted it that way. Why does she have the shoulder pieces and the case she's holding? Because the prompter likely put "battle girl" and/or "solarpunk" into the prompt. And it's not bad to have design elements for the sake of it, but the AI can't do anything but that, and the content it generates suffers because of it. There's no artistic value there, imo.

Now, not to toot my own horn, but here's my take on this design:

This is still a "cute girl in a big lime green jacket", but there's more to it. It's a high-visibility jacket, with stripes reminiscent of construction vests. In the other doodles on the page, this high-visibility theme is expanded into a theme of her being some kind of rescue personnel and/or an angel (see: the halo in the bottom right). While it's fairly easy for me to point these themes out (it is what I intended), I'd still argue an observer would be able to point out similar or other themes and motifs that bring this character together.

No amount of prompts and generation models can recreate that. Even if the prompter had the exact same intent I had when making the OG AI content, that intent doesn't come across whatsoever, because AI cannot replicate human intent and artistic processes.

These image generators register to me as the miserable end point of the sad, art-illiterate belief that art only is, and is only meant to, "look pretty". Every time modern art is decried as "ugly and pointless", another prompter gets validated in their shameless attempts to assert their narrow-as-fuck vision of what art is.

Art is human. Art is messy, art is intricate, art is sloppy, art is beautiful and art is ugly.

No machine on earth can comprehend or replicate that. And the ceaseless attempts to commodify and capitalize on art have made some people forget that fact. The kinds of people who prompt really only see art as a gimmick product, pretty knickknacks that will make them rich quick.

For lack of a better term, the dehumanization of art itself is disgusting, and so like hell am I going to consider AI's mass-produced, slot-machine-esque drivel as art.

And I will not be guilted by other people on this hellsite who think it's a moral failure to call mindless content what it is because it's dressed up in distorted frills and anime girl boobs.

Art is human, and AI is not human. And what a sad world it is, that we're automating and strangling human creation, instead of letting it thrive.

Thank you for reminding me to share my thoughts.

38 notes

Text

👾

#👾#adobe photoshop#adobe illustrator#adobe lightroom#adobe fresco#adobe#boycott adobe#creative cloud#ai generated#ai art#ai artwork#ai girl#ai#artificial intelligence#a.i. generated#a.i. art#a.i.#anti ai#fuck ai art#fuck ai everything#fuck ai all my homies hate ai#fuck ai writing#fuck ai bros#fuck ai#boycott ai#ausgov#politas#auspol#tasgov#taspol

10 notes

Text

tbh I think there are a lot of people on here who are going to fall prey to fake ai-generated things because they're stuck in "it always sounds so fake and corporate and it's such a bad writer" or "it always fucks up the hands" or stuff like that. and it's like yeah if you're a high schooler faking an essay and you use the free version of a tool and don't do a good job of it you will likely get bottom-of-the-barrel results. but people who use more expensive tools and/or use them with more skill will and do get results you cannot detect.

(and of course, real human beings do things like "use photoshop" or "lie on the internet" or "lie in the newspaper, in books, and in real life.")

i feel like this is a good time to renew a commitment to information hygiene - don't just assume you can detect a forgery, but check the source, check the source's source, do the sanity-check/napkin-math, don't bother with screenshots of headlines, ask "does this make sense," "was this calibrated to make me react," "does this encourage me to feel smugly superior or hopeless or powerless", keep each other honest. i fell for a fake today and was glad to have someone check me on it!

#soapbox#too much anti-AI stuff focuses on “it's so shitty” well. the stuff you can TELL is shitty ai is shitty AI.#the good tools are more accessible now but also people can always lie or be wrong#whatever. old man yells at cloud

3 notes

Text

My mom keeps using Meta AI to write her poems.

Mom….

I’m a poet.

Just ask me please.

2 notes

Text

look i'm not going to pretend like my generation didn't have models that weighed less than a bag of sand and airbrushing in the magazines and all that shit because we did and that was fucked up too

but i get so like. genuinely freaked out by like filters on social media and those kinds of things. it makes me worry for the girls who are growing up with these things as normal. i just can't help but feel like a filter that tries to *correct* your fucking face in real time must be so so so much worse than what we had? even just the "silly/fun" ones still smooth out your skin and shave off half your nose and reshape your face. so many phones have magic smoothing as an automatic feature on the front cams. so it's like not even an active choice or something you're aware of. and so much of this world is based on selfies and videos so you're gonna be seeing it *constantly*. you take a selfie for fun but the photo is unrecognisable. it's not you. if that's not a breeding ground for body dysmorphia i don't know what is.

and we knew that those "model standards" were unrealistic and unattainable and they still fucked us up! but today you're seeing your peers all made up like that online and logically that must connect into a feeling of like. that should be attainable? but it's still not! and idk but that can't be fucking healthy.

it just feels like to me there's a difference between seeing heidi klum or whoever and then your classmate maria posting pictures with perfect skin, straightened nose, whitened teeth. it's like the insane otherworldly standards we grew up with have been pulled down into everyday life. idk i just don't think it's coincidence that today we have 15 year olds sharing anti-aging routines and wearing 5 layers of makeup just to leave the house. the standards for a normal face have been digitally altered.

#mona mona mona#anti beauty industry#anti beauty culture#maybe i'm just being old lady yells at cloud idk but it makes me so uncomfortable#also like those ai filters that seem to define woman by long hair and makeup#anyway i tried one of those “fun” filters on sc and it just. genuinely didn't look like me#like that's not my face. that's not my nose that's not my jaw that's not my cheekbones that's not my facial structure#and like. imagine growing up in that world. shit dude#and then it's like scroll on and see tradwives. and bimbocore. and oh girls so silly dumb haha. and you know everything bad in the west is#'because of women's rights'#you cannot tell me that does not affect the worldview of young girls!!!!!#like i grew up with pink feminism and girlpower tm and yknow it had its faults but it was better than this bullshit i'll fucking say

8 notes

Text

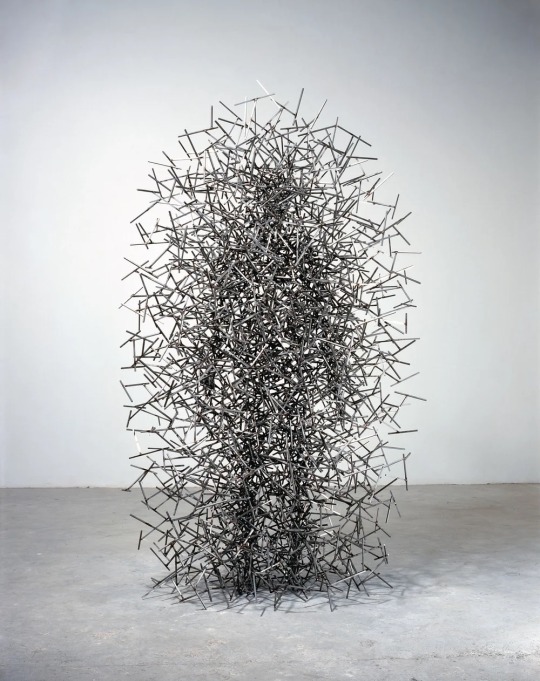

Reminds me of the album art to GHOSTEMANE’s “ANTI-ICON”

Antony Gormley: 'Quantum Cloud' Series (2000) medium: steel

#ghostemane#anti-icon#AI#antony gormley#quantum cloud#art#sculpture#steel#love#amazing#metal#hip hop

7K notes

Text

So I've been looking to move away from Google as much as I can, and was using Ecosia for a while. Yes, they also have an AI chatbot feature, but I was like "at least you have to actively choose to engage with it, it isn't automatically sprung into action like Google's stuff is".

Well, I was wrong. They automatically summarize some pages with generative AI instead of taking snippets from the page. I don't think even Google does that.

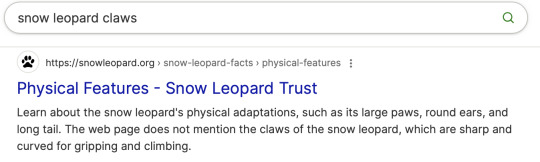

[Image description: A screenshot showing a result of an Ecosia search for "snow leopard claws". Physical Features - Snow Leopard Trust. Learn about the snow leopard's physical adaptations, such as its large paws, round ears, and long tail. The web page does not mention the claws of the snow leopard, which are sharp and curved for gripping and climbing. End ID.]

Now, while the environmental issues with generative AI* are largely not specific to it - any large data centre, regardless of purpose, will need to use a lot of electricity and water / coolant (btw cloud services are a major contributor to this as well, I recommend trying to switch to local and / or physical info storage as much as you can) - it has still been a significant contributor in recent years, so it's hypocritical for an "environment-focused" search engine to automatically engage it.

*That runs online - not that being able to run offline (as some programs can) erases other issues with it.

#anti ai#I feel hypocritical myself for talking about the cloud service thing given we're all on social media but like... there IS something that can#personally be done even if it's small overall. Also social media is WAY too useful (including for spreading climate info and organizing#movements for it!) to abandon. Also also it could probably be run with much lower impact if you just split up the servers#Ecosia

2 notes

Text

Exploring the RegTech in Finance Market: Forecasts, Trends, and Major Industry Players

RegTech in Finance Market: A Deep Dive into Growth, Trends, and Future Prospects

The global regulatory technology (RegTech) in finance market is experiencing a transformative phase, with rapidly growing demand for solutions that enhance regulatory compliance, risk management, and fraud prevention. Valued at USD 13,117.3 million in 2023, the market is projected to reach USD 82,084.3 million by 2032, a compound annual growth rate (CAGR) of 22.6% over the forecast period (2024–2032). This growth is being driven by increasing regulatory pressure, the complexity of compliance requirements, and the need for more efficient and cost-effective solutions within the financial services industry.

Industry Dimensions

The RegTech market in finance refers to the use of technology, particularly software and platforms, to help financial institutions manage regulatory compliance, risk management, and other compliance-related tasks more efficiently and cost-effectively. This rapidly evolving market encompasses technologies like artificial intelligence (AI), machine learning (ML), big data analytics, blockchain, and automation tools designed to streamline regulatory processes and ensure compliance with global financial regulations.

The market was valued at USD 13,117.3 million in 2023 and is projected to grow from USD 16,081.9 million in 2024 to USD 82,084.3 million by 2032, a CAGR of 22.6% over the forecast period.
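These headline figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below uses a hypothetical `cagr` helper and plugs in only the numbers quoted above; it tests whether the figures agree with one another, not whether the forecast itself is sound.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market-size figures quoted in the post, in USD million.
SIZE_2023 = 13_117.3
SIZE_2024 = 16_081.9
SIZE_2032 = 82_084.3
QUOTED_CAGR = 0.226

# Implied growth rate over the eight years from 2024 to 2032.
implied_rate = cagr(SIZE_2024, SIZE_2032, years=2032 - 2024)

# Projecting the 2024 value forward at the quoted rate should land near the 2032 figure.
projected_2032 = SIZE_2024 * (1 + QUOTED_CAGR) ** (2032 - 2024)

# Single-year growth from 2023 to 2024, for comparison with the quoted rate.
growth_2023_2024 = SIZE_2024 / SIZE_2023 - 1

if __name__ == "__main__":
    print(f"Implied CAGR 2024-2032: {implied_rate:.1%}")
    print(f"2032 projection at {QUOTED_CAGR:.1%}: USD {projected_2032:,.1f} million")
    print(f"Growth 2023-2024: {growth_2023_2024:.1%}")
```

Run as a script, the implied rate and the 2023 to 2024 step both come out at about 22.6%, and the forward projection lands within a fraction of a million of the quoted 2032 value, so the post's numbers are internally consistent to within rounding.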

Request a Free Sample (Full Report Starting from USD 1850): https://straitsresearch.com/report/regtech-in-finance-market/request-sample

Key Industry Trends Driving Growth

Several key trends are driving the growth of the RegTech market in finance, and these include:

Increasing Regulatory Complexity: As global regulatory environments become more complex, financial institutions are under immense pressure to comply with evolving rules and frameworks such as GDPR, MiFID II, and Basel III. This has increased demand for RegTech solutions that automate compliance processes and reduce human error.

Adoption of AI and Machine Learning: Financial institutions are increasingly adopting AI and ML for tasks such as risk assessment, fraud detection, and regulatory reporting. These technologies can process large volumes of data quickly and accurately, helping organizations identify potential compliance issues before they become major problems.

Blockchain for Compliance: Blockchain technology is being explored as a solution to increase transparency and trust in financial transactions. It offers the potential to streamline reporting and improve the integrity of compliance data.

Cloud Adoption: Financial institutions are shifting to cloud-based solutions for scalability, flexibility, and cost-efficiency. Cloud deployment models are growing in popularity for RegTech solutions due to the increased need for faster updates and seamless integration with legacy systems.

Demand for Real-Time Monitoring: Financial institutions are increasingly focusing on real-time monitoring to detect potential fraud, money laundering activities, and other compliance violations. This trend is pushing the adoption of real-time RegTech solutions capable of providing instantaneous alerts and actions.

RegTech in Finance Market Size and Share

The market for RegTech in finance is expanding rapidly, driven by the growing need for efficient compliance and risk management solutions in the financial services sector. As regulatory requirements continue to evolve and increase in complexity, the demand for RegTech solutions is expected to rise sharply. With North America, Europe, and Asia-Pacific leading the charge, the RegTech market is set to become a cornerstone of the global financial infrastructure.

RegTech in Finance Market Statistics

Market Size (2023): USD 13,117.3 Million

Projected Market Size (2032): USD 82,084.3 Million

CAGR (2024-2032): 22.6%

The growth is driven by a wide range of applications, including anti-money laundering (AML), fraud management, regulatory reporting, and identity management, which all contribute significantly to the total market size.

Regional Trends and Impact

North America

North America holds the largest market share for RegTech in finance, driven by stringent regulatory standards and the presence of major financial hubs in the U.S. and Canada. The region's dominance is fueled by the increasing adoption of RegTech solutions across banks, insurance companies, and fintech firms to ensure compliance with regulations like Dodd-Frank and FATCA, as well as anti-money laundering (AML) rules. Moreover, the region is seeing increased investment in AI and cloud technologies that enhance the performance of RegTech solutions.

Key Countries: United States, Canada

Europe

Europe is another significant player in the global RegTech market, with growing demand for compliance solutions in light of regulations like the General Data Protection Regulation (GDPR) and the European Market Infrastructure Regulation (EMIR). The region’s regulatory environment, particularly the EU’s focus on financial transparency, has accelerated the adoption of RegTech. Furthermore, Brexit has created a need for new compliance frameworks, propelling the demand for innovative RegTech solutions.

Key Countries: United Kingdom, Germany, France, Italy, Spain

Asia-Pacific (APAC)

The APAC region is expected to witness the highest growth in the RegTech market. As financial services become increasingly digitized in countries like China, India, and Japan, the need for robust compliance and risk management solutions is growing. The adoption of blockchain, AI, and cloud technologies is gaining momentum, and local governments are gradually introducing regulatory frameworks that demand improved compliance measures.

Key Countries: China, India, Japan, Australia, South Korea

Latin America, Middle East, and Africa (LAMEA)

The LAMEA region is experiencing slow but steady growth in the RegTech market. Rising awareness of the importance of financial regulations and the increasing number of fintech startups in the region are driving demand for RegTech solutions. While regulatory pressures may not be as stringent as in other regions, the need for better governance, transparency, and anti-money laundering (AML) measures is gaining traction.

Key Countries: Brazil, South Africa, UAE, Mexico

For more details: https://straitsresearch.com/report/regtech-in-finance-market/segmentation

RegTech in Finance Market Segmentations

The RegTech market in finance can be broken down into various segments, including component, deployment model, enterprise size, application, and end-user. Here’s an overview of the key segments:

By Component

Solution – Refers to the technology platforms and software used to address compliance, risk management, fraud prevention, and reporting.

Services – Includes advisory services, implementation, integration, and managed services related to RegTech solutions.

By Deployment Model

On-premises – RegTech solutions deployed within the financial institution's premises, offering enhanced security but higher upfront costs.

Cloud – Cloud-based solutions that offer flexibility, scalability, and cost-efficiency, which are growing in popularity among financial institutions.

By Enterprise Size

Large Enterprises – Large financial institutions with extensive compliance and risk management needs.

Small & Medium Enterprises (SMEs) – Smaller financial institutions that are increasingly adopting RegTech solutions to streamline operations and maintain compliance with regulatory standards.

By Application

Anti-money laundering (AML) & Fraud Management – Tools designed to detect and prevent money laundering and fraud in financial transactions.

Regulatory Intelligence – Systems that help financial institutions monitor and analyze regulatory changes.

Risk & Compliance Management – Solutions for managing risks and ensuring ongoing regulatory compliance.

Regulatory Reporting – Software that automates the creation and submission of regulatory reports.

Identity Management – Solutions that ensure secure customer authentication and prevent identity theft.

By End-User

Banks – One of the largest consumers of RegTech solutions, due to the high regulatory requirements they face.

Insurance Companies – Increasingly adopting RegTech for fraud detection and regulatory reporting.

FinTech Firms – Leveraging RegTech to maintain compliance while innovating financial products.

IT & Telecom – Supporting financial services with technology infrastructure for regulatory compliance.

Public Sector – Government entities that require RegTech to enhance transparency and financial integrity.

Energy & Utilities – These sectors are adopting RegTech to manage complex financial regulations and improve operational efficiency.

Others – Includes sectors like healthcare, retail, and real estate that also require regulatory compliance.

Top Players in the RegTech in Finance Market

Key players in the RegTech in finance market include:

Abside Smart Financial Technologies

Accuity

Actico

Broadridge

Deloitte

IBM

Fenergo

Eastnets

Nasdaq BWise

PwC

Wolters Kluwer

Startups: Datarama, AUTHUDA, RegDelta, Seal, CHAINALYSIS

Detailed Table of Contents of the RegTech in Finance Market Report: https://straitsresearch.com/report/regtech-in-finance-market/toc

These companies are leading innovation in the RegTech space, offering solutions that address regulatory compliance, fraud prevention, reporting, and data privacy issues in the financial industry.

Conclusion

The RegTech market in finance is poised for significant growth, driven by the increasing complexity of financial regulations and the need for efficient, cost-effective compliance solutions. The adoption of AI, blockchain, and cloud technologies is reshaping the regulatory landscape, allowing financial institutions to automate and streamline compliance processes. As the market continues to expand, financial institutions worldwide will increasingly turn to RegTech solutions to navigate regulatory challenges, manage risks, and remain competitive in a rapidly changing environment.

Purchase the Report: https://straitsresearch.com/buy-now/regtech-in-finance-market

About Straits Research

Straits Research is a top provider of business intelligence, specializing in research, analytics, and advisory services, with a focus on delivering in-depth insights through comprehensive reports.

Contact Us:

Address: 825 3rd Avenue, New York, NY, USA, 10022

Phone: +1 646 905 0080 (US), +91 8087085354 (India), +44 203 695 0070 (UK)

#RegTech in Finance#Financial Technology#Compliance Solutions#Regulatory Technology#Anti-money Laundering#Fraud Prevention#Risk Management#Cloud-based RegTech#AI in Finance#Regulatory Reporting#Blockchain in Finance#RegTech Market Growth#Global Financial Regulations#Financial Institutions#FinTech Compliance#Risk & Compliance Management#AML Solutions#Financial Market Trends#Regulatory Intelligence#Financial Services Automation#FinTech Regulatory Solutions#Future of RegTech

0 notes

Text

"Your art isn't even good enough to be fed into AI" GOOD! I don't WANT it to be! I want people to come to my art because they see something unique and personal, a part of my own personality and taste. Not some pretty-yet-generic slop these generators spit out.

#lab notes#i feel like i experiment and vary my art style too much for any machine to get something consistent out of it anyways.#old man yells at the cloud#anti ai

42 notes

Text

Pheww that’s all the artfight! Now I gotta upload them all to insta and twitter

0 notes

Text

it is hard to explain but there is something so unwell about the cultural fear of ugliness. the strange quiet irradiation of any imperfect sight. the pores and the stomachs and the legs displaced into a digital trashbin. somehow this effect spilling over - the removal of grinning strangers in the back of a picture. of placing more-photogenic clouds into a frame. of cleaning up and arranging breakfast plates so the final image is of a table overflowing with surplus - while nobody eats, and instead mimes food moving towards their mouth like tantalus.

ever-thinner ever-more-muscled ever-prettier. your landlord's sticky white paint sprayed over every surface. girlchildren with get-ready-with-me accounts and skincare routines. beige walls and beige floors and beige toys in toddler hands. AI-generated "imagined prettier" birds and bugs and bees.

pretty! fuckable! impossible! straighten teeth. use facetune and lightroom and four other products. remove the cars along the street from the video remove the spraypaint from the garden wall remove the native plants from their home, welcome grass. welcome pretty. let the lot that walmart-still-owns lay fallow and rotting. don't touch that, it's ugly! close your eyes.

erect anti-homelessness spikes. erect anti-bird spikes. now it looks defensive, which is better than protective. put the ramp at the back of the building, you don't want to ruin the aesthetic of anything.

you are a single person in this world, and in this photo! don't let the lives of other people ruin what would otherwise be a shared moment! erase each person from in front of the tourist trap. erase your comfortable shoes and AI generate platforms. you weren't smiling perfectly, smile again. no matter if you had been genuinely enjoying a moment. you are not in a meadow with friends, you're in a catalogue of your own life! smile again! you know what, forget it.

we will just edit the right face in.

#spilled ink#writeblr#warm up#i have more to say about how fundamentally fucked up it is that we associate ugliness with evil#but this is also just like. to get the first part off my chest#and as someone w/an invisible disability#....... yeahhhhhhhhhhhhhhhhhhhhhhhhhhhhhhhh#(i got too mad and my brain shorted out)

8K notes

Text

In a leaked recording, Amazon Cloud Chief tells employees that most developers could stop coding soon as A.I. takes over

https://www.businessinsider.com/aws-ceo-developers-stop-coding-ai-takes-over-2024-8

#artificial intelligence#amazon#amazon cloud#employment#employees#employers#developers#fuck amazon#boycott amazon#fuck ai#anti ai#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government

0 notes