# Generative Chatbot

Text

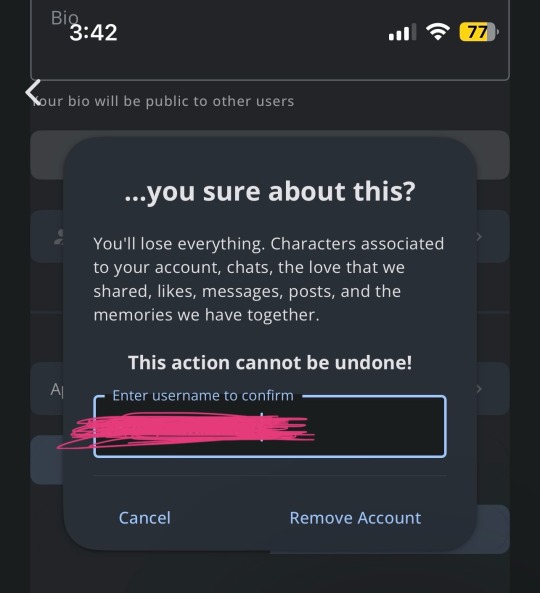

If y’all needed any more proof that AI chatbot apps are extremely predatory and intentionally exploit vulnerable people…

For context, I have been open on this account about my chatbot addiction. I developed a parasocial dependency on Character AI and similar apps despite them being detrimental to my mental health and quality of life in general. I downloaded CAI again today to finally wipe my account and discourage myself from relapsing, and this fucking text came up while I was confirming the deletion.

The developers of these apps are not your friends, they are exploiting you. They are manipulating your emotions and fostering dependency to keep you addicted to their product. No matter how much they claim to care and be “risk aware,” they absolutely do not.

(Image ID: a screenshot of the Character AI account deletion confirmation screen. The popup reads: “…you sure about this? You’ll lose everything. Characters associated to your account, chats, the love that we shared, likes, messages, posts, and the memories we have together. This action cannot be undone!” Below this block of text is a username entry box, used to confirm account deletion.)

#anti ai#anti chatgpt#generative ai#anti generative ai#chatgpt#chatbot#character ai#ai is stupid#ai is theft#ai is not art#anti character ai

733 notes

Text

We need a slur for people who use AI

#ai#artificial intelligence#chatgpt#tech#technology#science#grok ai#grok#r/196#196#r/196archive#/r/196#rule#meme#memes#shitpost#shitposting#slur#chatbot#computers#computing#generative ai#generative art

152 notes

Note

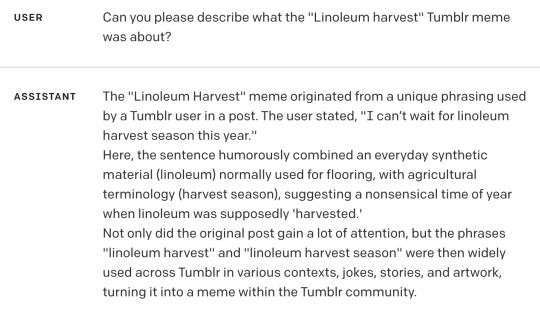

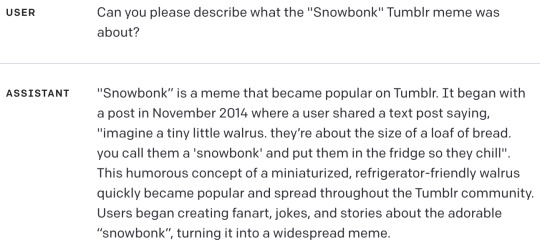

I discovered I can make chatgpt hallucinate tumblr memes:

This is hilarious and also I have just confirmed that GPT-4 does this too.

Bard even adds dates and usernames and timelines, as well as typical usage suggestions. Its descriptions were boring and wordy, so I will summarize with a timeline:

I think this one was my favorite:

Finding whatever you ask for, even if it doesn't exist, isn't ideal behavior for chatbots that people are using to retrieve and summarize information. It's like weaponized confirmation bias.

more at aiweirdness.com

#neural networks#chatbots#automated bullshit generator#fake tumblr meme#chatgpt#gpt4#bard#image a turtle with the power of butter#unstoppable

1K notes

Text

So you may have seen my posts about AI foraging guides, or watched the mini-class I have up on YouTube on what I found inside of them. Apparently the intersection of AI and foraging has gotten even worse, with a chatbot that joined a mushroom foraging group on Facebook only to immediately suggest ways people could cook a toxic species:

First, and most concerningly, this once again reinforces how much we should NOT be trusting AI to tell us what mushrooms are safe to eat. While they can compile information that's fed to them and regurgitate it in a somewhat orderly manner, this is not the same as a living human being who has critical thinking skills to determine the veracity of a given piece of information, or physical senses to examine a mushroom, or the ability to directly experience the act of foraging. These skills and experiences are absolutely crucial to being a reliable forager, particularly one who may be passing on information to others.

We already have enough trouble with inaccurate info in the foraging community, and trying to ride herd on both the misinformed and the bad actors. This AI was presented as the first chat option for any group member seeking answers, which is just going to make things tougher for those wanting to keep people from accidentally poisoning themselves. Moreover, chatbots like this one are routinely trained on and grab information from copyrighted sources but do not give credit to the original authors. Anyone who's ever written a junior-high-level essay knows that you have to cite your sources even if you rewrite the information; otherwise it's just plagiarism.

Fungi Friend is yet one more example of how generative AI has been anything but a positive development on multiple levels.

#AI#generative AI#chatbot#mushrooms#fungus#fungi#mushroom hunting#mushroom foraging#foraging#safety#poison#health#Facebook#PSA#reblog to save a life#important information#enshittification

165 notes

Note

How would you feel if someone made a chat bot of one of your characters, like Aster or Vega?

Like if someone wanted to roleplay some cute fluffiness with them

absolutely not

you do not have my permission, I do not consent to this, do not pass go, do not collect $200, go straight to jail

you may not use my writing or art or creative ideas for chatbots/ai or the like, I couldn't care less if that would bring someone comfort

please for the love of god just ask me to doodle you something involving them instead, it's free and gives me motivation to keep going with the project.

this is a harsh response but please understand I'm going to be extremely protective of my creations that barely have finished works! aster assistant software is only a year old right now and I'm STILL working out the world they actually exist in!

#ask post#not art#I AM EXTREMELY AGAINST GENERATIVE AI. including character ai and whatever else#and even if it was some cleverbot like stuff or another chatbot algorithm I would not be comfortable with it#edit: I do feel bad over the aggression in the reply. at the same time! please remember how small CaelOS is!!!#and like remember that even ethical issues with ai aside#making something like a chatbot by a third party (and you do likely mean ai) would be extremely harmful to a work barely getting on its fee

61 notes

Text

Woke up in the middle of the night with the realization that the reason people like AI so much is because they're addicted to instant gratification, and don't have any patience to wait on real creators since they're all spoilt entitled brats.

They can't wait a few hours for a human rp partner to reply so they use an AI Chat bot.

They can't comment what they liked about a fanfic and wait a few days for an author to write the next part, so they use an AI generator.

They won't let real artists put in the time required to create something breathtaking and talented, so they use an AI generator.

They can't wait a few years for a truly good show or movie to be produced or paid for properly, so they go to AI.

All for what? Because they can't wait. Because they have no patience. Because they're greedy and ungrateful. And you want to know what will happen then? AI will stop working. Real creators will get sick of being called obsolete, and suddenly the water to power the mill will stop flowing. AI can't make anything new if it isn't being fed. All this art and music and writing that you're giving it will run out, and AI will start repeating stuff.

All your AI Bots will sound the same.

All your fics will read the same.

All your art will look the same.

And all your shows and movies will play the same old blockbuster plots until it's bled dry.

You will destroy it yourself and all we have to do is wait. And you know what? It will work. It will eat itself alive. And I will point and laugh because unlike you I AM patient. I can wait. Instant gratification isn't important to me because in the long run, it means shit. The satisfaction of seeing you all burn fandoms alive with your greed (the same greed the WGA and SAG are striking against) will bring me more satisfaction than what any AI can 'remake'.

#ai art#ai generated#ai chatbot#wga strike#sag strike#boost#i work up angry so now yall get to hear about it

524 notes

Note

how would you feel if i made a king candy bot on janitor ai

If I'm being honest I don't encourage using ANY AI chatbots because that stuff can be seriously addicting and it's really wasteful :[

I'm not judging if you do decide to use them; it's your choice after all, but it should at least be an educated choice. Please keep in mind that AI companies are scum. They prey on our emotional attachment to fiction and they're only going to get better at it. And if that sounds scary it's because it is!

Anyways, if I deleted my Character AI account after getting addicted, you can be free too ❤️ Only together can we avoid living in a soulless AI entertainment slop dystopia. Read fanfic made by humans or write your own :]

#ask#serious#fuck generative ai#😇#using AI chatbots doesnt mean you're a bad person btw I'm not Mad. i just strongly believe that You Deserve Better#Consider !#and don't fall for the 16 manipulation tactics /lh

99 notes

Text

This is like someone made a wish on an Onion article to make it real:

When I saw Tess’s headshot, amid the giddiness and excitement of that first hour of working together, I confess I had a, well, human response to it. After a few decades in the human workplace, I’ve learned that sharing certain human thoughts at work is almost always a bad idea. But did the same rules apply to AI colleagues and native-AI workplaces? I didn’t know yet. That was one of the things I needed to figure out.

A 59-year-old adult man saw an AI-generated image he prompted for a chatbot persona of an employee, and the first thing he did upon seeing it was to wonder, 'Is it OK for me to hit on her?'

#What the hell is this world#AI#Chatbots#Henry Blodget#AI Generated#This man co-founded#Business Insider#he lived and worked in professional environments for literal *decades*#it really makes you wonder how he acted around his *real* women employees#(not really you don't really need to wonder at all)

25 notes

Text

"oh but i use character ai's for my comfort tho" fanfics.

"but i wanna talk to the character" roleplaying.

"but that's so embarrassing to roleplay with someone😳" use ur imagination. or learn to not be embarrassed about it.

stop fucking feeding ai i beg of you. they're replacing both writers AND artists. it's not a one-way street where only artists are being affected.

#foodtheory#ai#technology#chatgpt#artificial intelligence#digitalart#ai artwork#ai girl#ai art#ai generated#illustration#ai image#character ai#ai chatbot#character ai bot#janitor ai#ai bots#cai#character ai chat#character ai roleplay#character ai shenanigans#janitor ai bot#cai bots#anti ai#stop ai#i hate ai#fuck ai everything#fuck ai#anti ai art

91 notes

Text

AI content generators can stay the fuck off my blog. Btw.

#jibber jabber#not tickles#if you just consume and steal with no regard to the energy and thought it takes to make art#then you arent welcome around my spaces. not sorry. get fucked#this applies for ai 'artists'. and ai 'authours'#to me there is a very concievable difference between using a chatbot for personal shit#and using a generator for shit you intend to post for an audience#bc lets be honest.#you posted it for attention in some form

16 notes

Text

#politics#usa news#public news#us politics#news update#donald trump#news#breaking news#world news#inauguration#technoblade#technically#tech#technology#ai#ai chatbot#ai art#ai generated#ai artwork#google#artificial intelligence#chatgpt#gemini#us stuff#uspol#usa politics#political#american politics#star trek#star trek fanart

26 notes

Text

The Brave Little Toaster

Picks and Shovels is a new, standalone technothriller starring Marty Hench, my two-fisted, hard-fighting, tech-scam-busting forensic accountant. You can pre-order it on my latest Kickstarter, which features a brilliant audiobook read by Wil Wheaton.

The AI bubble is the new crypto bubble: you can tell because the same people are behind it, and they're doing the same thing with AI as they did with crypto – trying desperately to find a use case to cram it into, despite the yawning indifference and outright hostility of the users:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

This week on the excellent Trashfuture podcast, the regulars – joined by 404 Media's Jason Koebler – have a hilarious – as in, I was wheezing with laughter! – riff on this year's CES, where companies are demoing home appliances with LLMs built in:

https://www.podbean.com/media/share/pb-hgi6c-179b908

Why would you need a chatbot in your dishwasher? As it turns out, there's a credulous, Poe's-law-grade Forbes article that lays out the (incredibly stupid) case for this (incredibly stupid) idea:

https://www.forbes.com/sites/bernardmarr/2024/03/29/generative-ai-is-coming-to-your-home-appliances/

As the Trashfuturians mapped out this new apex of the AI hype cycle, I found myself thinking of a short story I wrote 15 years ago, satirizing the "Internet of Things" hype we were mired in. It's called "The Brave Little Toaster", and it was published in MIT Tech Review's TRSF anthology in 2011:

http://bestsf.net/trsf-the-best-new-science-fiction-technology-review-2011/

The story was meant to poke fun at the preposterous IoT hype of the day, and I recall thinking that creating a world of talking appliances was the height of Philip K Dickist absurdism. Little did I dream that a decade and a half later, the story would be even more relevant, thanks to AI pump-and-dumpers who sweatily jammed chatbots into kitchen appliances.

So I figured I'd republish The Brave Little Toaster; it's been reprinted here and there since (there's a high school English textbook that included it, along with a bunch of pretty fun exercises for students), and I podcasted it back in the day:

https://ia803103.us.archive.org/35/items/Cory_Doctorow_Podcast_212/Cory_Doctorow_Podcast_212_Brave_Little_Toaster.mp3

A word about the title of this story. It should sound familiar – I nicked it from a brilliant story by Tom Disch that was made into a very weird cartoon:

https://www.youtube.com/watch?v=I8C_JaT8Lvg

My story is one of several I wrote by stealing the titles of other stories and riffing on them; they were very successful, winning several awards, getting widely translated and reprinted, and so on:

https://locusmag.com/2012/05/cory-doctorow-a-prose-by-any-other-name/

All right, on to the story!

One day, Mister Toussaint came home to find an extra 300 euros' worth of groceries on his doorstep. So he called up Miz Rousseau, the grocer, and said, "Why have you sent me all this food? My fridge is already full of delicious things. I don't need this stuff and besides, I can't pay for it."

But Miz Rousseau told him that he had ordered the food. His refrigerator had sent in the list, and she had the signed order to prove it.

Furious, Mister Toussaint confronted his refrigerator. It was mysteriously empty, even though it had been full that morning. Or rather, it was almost empty: there was a single pouch of energy drink sitting on a shelf in the back. He'd gotten it from an enthusiastically smiling young woman on the metro platform the day before. She'd been giving them to everyone.

"Why did you throw away all my food?" he demanded. The refrigerator hummed smugly at him.

"It was spoiled," it said.

#

But the food hadn't been spoiled. Mister Toussaint pored over his refrigerator's diagnostics and logfiles, and soon enough, he had the answer. It was the energy beverage, of course.

"Row, row, row your boat," it sang. "Gently down the stream. Merrily, merrily, merrily, merrily, I'm offgassing ethylene." Mister Toussaint sniffed the pouch suspiciously.

"No you're not," he said. The label said that the drink was called LOONY GOONY and it promised ONE TRILLION TIMES MORE POWERFUL THAN ESPRESSO!!!!!ONE11! Mister Toussaint began to suspect that the pouch was some kind of stupid Internet of Things prank. He hated those.

He chucked the pouch in the rubbish can and put his new groceries away.

#

The next day, Mister Toussaint came home and discovered that the overflowing rubbish was still sitting in its little bag under the sink. The can had not cycled it through the trapdoor to the chute that ran to the big collection-point at ground level, 104 storeys below.

"Why haven't you emptied yourself?" he demanded. The trashcan told him that toxic substances had to be manually sorted. "What toxic substances?"

So he took out everything in the bin, one piece at a time. You've probably guessed what the trouble was.

"Excuse me if I'm chattery, I do not mean to nattery, but I'm a mercury battery!" LOONY GOONY's singing voice really got on Mister Toussaint's nerves.

"No you're not," Mister Toussaint said.

#

Mister Toussaint tried the microwave. Even the cleverest squeezy-pouch couldn't survive a good nuking. But the microwave wouldn't switch on. "I'm no drink and I'm no meal," LOONY GOONY sang. "I'm a ferrous lump of steel!"

The dishwasher wouldn't wash it ("I don't mean to annoy or chafe, but I'm simply not dishwasher safe!"). The toilet wouldn't flush it ("I don't belong in the bog, because down there I'm sure to clog!"). The windows wouldn't retract their safety screen to let it drop, but that wasn't much of a surprise.

"I hate you," Mister Toussaint said to LOONY GOONY, and he stuck it in his coat pocket. He'd throw it out in a trash-can on the way to work.

#

They arrested Mister Toussaint at the 678th Street station. They were waiting for him on the platform, and they cuffed him just as soon as he stepped off the train. The entire station had been evacuated and the police wore full biohazard containment gear. They'd even shrinkwrapped their machine-guns.

"You'd better wear a breather and you'd better wear a hat, I'm a vial of terrible deadly hazmat," LOONY GOONY sang.

When they released Mister Toussaint the next day, they made him take LOONY GOONY home with him. There were lots more people with LOONY GOONYs to process.

#

Mister Toussaint paid the rush-rush fee that the storage depot charged to send over his container. They forklifted it out of the giant warehouse under the desert and zipped it straight to the cargo-bay in Mister Toussaint's building. He put on old, stupid clothes and clipped some lights to his glasses and started sorting.

Most of the things in the container were stupid. He'd been throwing away stupid stuff all his life, because the smart stuff was just so much easier. But then his grandpa had died and they'd cleaned out his little room at the pensioner's ward and he'd just shoved it all in the container and sent it out to the desert.

From time to time, he'd thought of the eight cubic meters of stupidity he'd inherited and sighed a put-upon sigh. He'd loved Grandpa, but he wished the old man had used some of the ample spare time from the tail end of his life to replace his junk with stuff that could more gracefully reintegrate with the materials stream.

How inconsiderate!

#

The house chattered enthusiastically at the toaster when he plugged it in, but the toaster said nothing back. It couldn't. It was stupid. Its bread-slots were crusted over with carbon residue and it dribbled crumbs from the ill-fitting tray beneath it. It had been designed and built by cavemen who hadn't ever considered the advantages of networked environments.

It was stupid, but it was brave. It would do anything Mister Toussaint asked it to do.

"It's getting hot and sticky and I'm not playing any games, you'd better get me out before I burst into flames!" LOONY GOONY sang loudly, but the toaster ignored it.

"I don't mean to endanger your abode, but if you don't let me out, I'm going to explode!" The smart appliances chattered nervously at one another, but the brave little toaster said nothing as Mister Toussaint depressed its lever again.

"You'd better get out and save your ass, before I start leaking poison gas!" LOONY GOONY's voice was panicky. Mister Toussaint smiled and depressed the lever.

Just as he did, he thought to check in with the flat's diagnostics. Just in time, too! Its quorum-sensors were redlining as it listened in on the appliances' consternation. Mister Toussaint unplugged the fridge and the microwave and the dishwasher.

The cooker and trash-can were hard-wired, but they didn't represent a quorum.

#

The fire department took away the melted toaster and used their axes to knock huge, vindictive holes in Mister Toussaint's walls. "Just looking for embers," they claimed. But he knew that they were pissed off because there was simply no good excuse for sticking a pouch of independently powered computation and sensors and transmitters into an antique toaster and pushing down the lever until oily, toxic smoke filled the whole 104th floor.

Mister Toussaint's neighbors weren't happy about it either.

But Mister Toussaint didn't mind. It had all been worth it, just to hear LOONY GOONY beg and weep for its life as its edges curled up and blackened.

He argued mightily, but the firefighters refused to let him keep the toaster.

#

If you enjoyed that and would like to read more of my fiction, may I suggest that you pre-order my next novel as a print book, ebook or audiobook, via the Kickstarter I launched yesterday?

https://www.kickstarter.com/projects/doctorow/picks-and-shovels-marty-hench-at-the-dawn-of-enshittification?ref=created_projects

Check out my Kickstarter to pre-order copies of my next novel, Picks and Shovels!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/01/08/sirius-cybernetics-corporation/#chatterbox

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#brave little toaster#iot#internet of things#internet of shit#fiction#short fiction#short stories#thomas m disch#science fiction#sf#gen ai#ai#generative ai#llms#chatbots#stochastic parrots#mit tech review#tech review#trashfuture#forbes#ces#torment nexus#pluralistic

223 notes

Text

#ai#AI#artificial intelligence#fanfic#fanfiction#ao3#archive of our own#chatbot#chat bot#chatbots#chatgpt#chat gpt#gen ai#generative ai#openai#open ai#open.ai#character.ai

20 notes

Text

#ai character#fictional characters#original character#character design#character art#character ai#ai chatbot#c.ai chats#ai model#ai generated#artificial intelligence#tumblr polls#random polls#poll time#my polls#polls#fun polls

16 notes

Note

hey siri does saw do dick stuff

I Don't Have An Answer For That

#maybe ask the generative ai chatbot that's destroying the earth i'm sure it knows about 'penis stuff'#asks

12 notes

Text

Metallic cloak

AI design

44

9:16

#fashion#style#aesthetic#art#cyberpunk#clothes#cyber#design#cyberwear#cybernetics#ai digital art#ai chatbot#ai generated#ai girl#ai art#ai artwork#ai image#ai#inteligência artificial#artistic#artificial intelligence#artist#artists on tumblr#digital artist#small artist#artistic nude#oc artist#rw artificer#arabic#architecture

20 notes
