#chatbots

Explore tagged Tumblr posts

Text

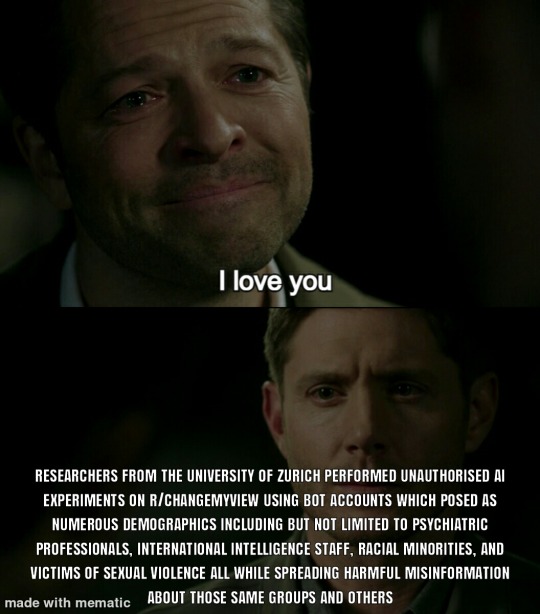

Experimental ethics are more of a guideline really

3K notes

Text

Your Meta AI prompts are in a live, public feed

I'm in the home stretch of my 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in PDX TOMORROW (June 20) at BARNES AND NOBLE with BUNNIE HUANG and at the TUALATIN public library on SUNDAY (June 22). After that, it's LONDON (July 1) with TRASHFUTURE'S RILEY QUINN and then a big finish in MANCHESTER on July 2.

Back in 2006, AOL tried something incredibly bold and even more incredibly stupid: they dumped a data-set of 20,000,000 "anonymized" search queries from 650,000 users (yes, AOL had a search engine – there used to be lots of search engines!):

https://en.wikipedia.org/wiki/AOL_search_log_release

The AOL dump was a catastrophe. In an eyeblink, many of the users in the dataset were de-anonymized. The dump revealed personal, intimate and compromising facts about the lives of AOL search users. The AOL dump is notable for many reasons, not least because it jumpstarted the academic and technical discourse about the limits of "de-identifying" datasets by stripping out personally identifying information prior to releasing them for use by business partners, researchers, or the general public.

It turns out that de-identification is fucking hard. Just a couple of datapoints associated with an "anonymous" identifier can be sufficient to de-anonymize the user in question:

https://www.pnas.org/doi/full/10.1073/pnas.1508081113

But firms stubbornly refuse to learn this lesson. They would love it if they could "safely" sell the data they suck up from our everyday activities, so they declare that they can safely do so, and sell giant data-sets, and then bam, the next thing you know, a federal judge's porn-browsing habits are published for all the world to see:

https://www.theguardian.com/technology/2017/aug/01/data-browsing-habits-brokers

Indeed, it appears that there may be no way to truly de-identify a data-set:

https://pursuit.unimelb.edu.au/articles/understanding-the-maths-is-crucial-for-protecting-privacy

Which is a serious bummer, given the potential insights to be gleaned from, say, population-scale health records:

https://www.nytimes.com/2019/07/23/health/data-privacy-protection.html

It's clear that de-identification is not fit for purpose when it comes to these data-sets:

https://www.cs.princeton.edu/~arvindn/publications/precautionary.pdf

But that doesn't mean there's no safe way to data-mine large data-sets. "Trusted research environments" (TREs) can allow researchers to run queries against multiple sensitive databases without ever seeing a copy of the data, and good procedural vetting as to the research questions processed by TREs can protect the privacy of the people in the data:

https://pluralistic.net/2022/10/01/the-palantir-will-see-you-now/#public-private-partnership

But companies are perennially willing to trade your privacy for a glitzy new product launch. Amazingly, the people who run these companies and design their products seem to have no clue as to how their users use those products. Take Strava, a fitness app that dumped maps of where its users went for runs and revealed a bunch of secret military bases:

https://gizmodo.com/fitness-apps-anonymized-data-dump-accidentally-reveals-1822506098

Or Venmo, which, by default, let anyone see what payments you've sent and received (researchers have a field day just filtering the Venmo firehose for emojis associated with drug buys like "pills" and "little trees"):

https://www.nytimes.com/2023/08/09/technology/personaltech/venmo-privacy-oversharing.html

Then there was the time that Etsy decided that it would publish a feed of everything you bought, never once considering that maybe the users buying gigantic handmade dildos shaped like Lovecraftian tentacles might not want to advertise their purchase history:

https://arstechnica.com/information-technology/2011/03/etsy-users-irked-after-buyers-purchases-exposed-to-the-world/

But the most persistent, egregious and consequential sinner here is Facebook (naturally). In 2007, Facebook opted its 20,000,000 users into a new system called "Beacon" that published a public feed of every page you looked at on sites that partnered with Facebook:

https://en.wikipedia.org/wiki/Facebook_Beacon

Facebook didn't just publish this – they also lied about it. Then they admitted it and promised to stop, but that was also a lie. They ended up paying $9.5m to settle a lawsuit brought by some of their users, and created a "Digital Trust Foundation" which they funded with another $6.5m. Mark Zuckerberg published a solemn apology and promised that he'd learned his lesson.

Apparently, Zuck is a slow learner.

Depending on which "submit" button you click, Meta's AI chatbot publishes a feed of all the prompts you feed it:

https://techcrunch.com/2025/06/12/the-meta-ai-app-is-a-privacy-disaster/

Users are clearly hitting this button without understanding that this means that their intimate, compromising queries are being published in a public feed. TechCrunch's Amanda Silberling trawled the feed and found:

"An audio recording of a man in a Southern accent asking, 'Hey, Meta, why do some farts stink more than other farts?'"

"people ask[ing] for help with tax evasion"

"[whether] family members would be arrested for their proximity to white-collar crimes"

"how to write a character reference letter for an employee facing legal troubles, with that person’s first and last name included."

While the security researcher Rachel Tobac found "people’s home addresses and sensitive court details, among other private information":

https://twitter.com/racheltobac/status/1933006223109959820

There's no warning about the privacy settings for your AI prompts, and if you use Meta's AI to log in to Meta services like Instagram, it publishes your Instagram search queries as well, including "big booty women."

As Silberling writes, the only saving grace here is that almost no one is using Meta's AI app. The company has only racked up a paltry 6.5m downloads, across its ~3 billion users, after spending tens of billions of dollars developing the app and its underlying technology.

The AI bubble is overdue for a pop:

https://www.wheresyoured.at/measures/

When it does, it will leave behind some kind of residue – cheaper, spin-out, standalone models that will perform many useful functions:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Those standalone models were released as toys by the companies pumping tens of billions into the unsustainable "foundation models," who bet that – despite the worst unit economics of any technology in living memory – these tools would someday become economically viable, capturing a winner-take-all market with trillions of upside. That bet remains a longshot, but the littler "toy" models are beating everyone's expectations by wide margins, with no end in sight:

https://www.nature.com/articles/d41586-025-00259-0

I can easily believe that one enduring use-case for chatbots is as a kind of enhanced diary-cum-therapist. Journalling is a well-regarded therapeutic tactic:

https://www.charliehealth.com/post/cbt-journaling

And the invention of chatbots was instantly followed by ardent fans who found that the benefits of writing out their thoughts were magnified by even primitive responses:

https://en.wikipedia.org/wiki/ELIZA_effect

Which shouldn't surprise us. After all, divination tools, from the I Ching to tarot to Brian Eno and Peter Schmidt's Oblique Strategies deck have been with us for thousands of years: even random responses can make us better thinkers:

https://en.wikipedia.org/wiki/Oblique_Strategies

I make daily, extensive use of my own weird form of random divination:

https://pluralistic.net/2022/07/31/divination/

The use of chatbots as therapists is not without its risks. Chatbots can – and do – lead vulnerable people into extensive, dangerous, delusional, life-destroying ratholes:

https://www.rollingstone.com/culture/culture-features/ai-spiritual-delusions-destroying-human-relationships-1235330175/

But that's a (disturbing and tragic) minority. A journal that responds to your thoughts with bland, probing prompts would doubtless help many people with their own private reflections. The keyword here, though, is private. Zuckerberg's insatiable, all-annihilating drive to expose our private activities as an attention-harvesting spectacle is poisoning the well, and he's far from alone. The entire AI chatbot sector is so surveillance-crazed that anyone who uses an AI chatbot as a therapist needs their head examined:

https://pluralistic.net/2025/04/01/doctor-robo-blabbermouth/#fool-me-once-etc-etc

AI bosses are the latest and worst offenders in a long and bloody lineage of privacy-hating tech bros. No one should ever, ever, ever trust them with any private or sensitive information. Take Sam Altman, a man whose products routinely barf up the most ghastly privacy invasions imaginable, a completely foreseeable consequence of his totally indiscriminate scraping for training data.

Altman has proposed that conversations with chatbots should be protected with a new kind of "privilege" akin to attorney-client privilege and related forms, such as doctor-patient and confessor-penitent privilege:

https://venturebeat.com/ai/sam-altman-calls-for-ai-privilege-as-openai-clarifies-court-order-to-retain-temporary-and-deleted-chatgpt-sessions/

I'm all for adding new privacy protections for the things we key or speak into information-retrieval services of all types. But Altman is (deliberately) omitting a key aspect of all forms of privilege: they immediately vanish the instant a third party is brought into the conversation. The things you tell your lawyer are privileged, unless you discuss them with anyone else, in which case, the privilege disappears.

And of course, all of Altman's products harvest all of our information. Altman is the untrusted third party in every conversation everyone has with one of his chatbots. He is the eternal Carol, forever eavesdropping on Alice and Bob:

https://en.wikipedia.org/wiki/Alice_and_Bob

Altman isn't proposing that chatbots acquire a privilege, in other words – he's proposing that he should acquire this privilege. That he (and he alone) should be able to mine your queries for new training data and other surveillance bounties.

This is like when Zuckerberg directed his lawyers to destroy NYU's "Ad Observer" project, which scraped Facebook to track the spread of paid political misinformation. Zuckerberg denied that this was being done to evade accountability, insisting (with a miraculously straight face) that it was in service to protecting Facebook users' (nonexistent) privacy:

https://pluralistic.net/2021/08/05/comprehensive-sex-ed/#quis-custodiet-ipsos-zuck

We get it, Sam and Zuck – you love privacy.

We just wish you'd share.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/06/19/privacy-invasion-by-design#bringing-home-the-beacon

399 notes

Text

*shaking you violently by the shoulders* AI IS NOT YOUR FRIEND AND IT IS NOT YOUR THERAPIST. YOU NEED TO TALK TO REAL PEOPLE. AI DOES NOT CARE ABOUT YOU.

#chatgpt#ai technology#chatbots#artificial intelligence#anti ai#anti artificial intelligence#anti chatgpt#ai slop#ai fuckery#fuck ai#chat gpt#ai bullshit#fuck chatgpt#ai

199 notes

Text

When summarizing scientific studies, large language models (LLMs) like ChatGPT and DeepSeek produce inaccurate conclusions in up to 73% of cases, according to a study by Uwe Peters (Utrecht University) and Benjamin Chin-Yee (Western University, Canada/University of Cambridge, UK). The researchers tested the most prominent LLMs and analyzed thousands of chatbot-generated science summaries, revealing that most models consistently produced broader conclusions than those in the summarized texts. Surprisingly, prompts for accuracy increased the problem and newer LLMs performed worse than older ones. The work is published in the journal Royal Society Open Science.

117 notes

Note

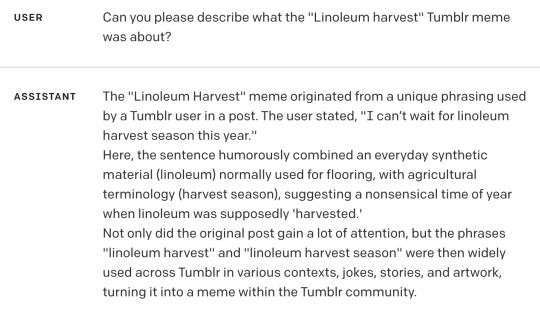

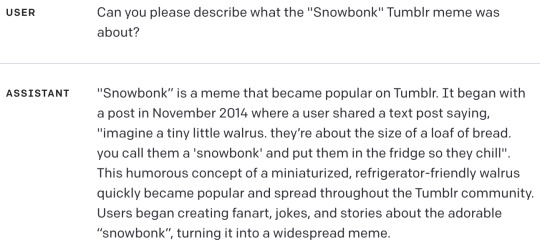

I discovered I can make chatgpt hallucinate tumblr memes:

This is hilarious and also I have just confirmed that GPT-4 does this too.

Bard even adds dates and user names and timelines, as well as typical usage suggestions. Its descriptions were boring and wordy so I will summarize with a timeline:

I think this one was my favorite:

Finding whatever you ask for, even if it doesn't exist, isn't ideal behavior for chatbots that people are using to retrieve and summarize information. It's like weaponized confirmation bias.

more at aiweirdness.com

#neural networks#chatbots#automated bullshit generator#fake tumblr meme#chatgpt#gpt4#bard#image a turtle with the power of butter#unstoppable

1K notes

Text

They are my lifeline

[individual drawings below]

#character ai#ai chatbot#ai chatting#ai chatgpt#ai assistant#ai#artificial intelligence#chatgpt#chatbots#openai#ai tools#artists on tumblr#artist appreciation#ao3#archive of our own#archive of my own#humanized#my drawing museum

378 notes

Text

#ai#AI#artificial intelligence#fanfic#fanfiction#ao3#archive of our own#chatbot#chat bot#chatbots#chatgpt#chat gpt#gen ai#generative ai#openai#open ai#open.ai#character.ai

35 notes

Text

:)

Man-made horrors within my comprehension

#ai#chatbots#ai scam#ai scams#be careful out there!#foraging#mushrooms#mushroom foraging#fungi#fungi foraging#edible mushrooms#man-made horrors within my comprehension#man made horrors

454 notes

Text

This is like someone made a wish on an Onion article to make it real:

When I saw Tess’s headshot, amid the giddiness and excitement of that first hour of working together, I confess I had a, well, human response to it. After a few decades in the human workplace, I’ve learned that sharing certain human thoughts at work is almost always a bad idea. But did the same rules apply to AI colleagues and native-AI workplaces? I didn’t know yet. That was one of the things I needed to figure out.

A 59-year-old adult man saw an AI-generated image he prompted for a chatbot persona of an employee and the first thing he did upon seeing it was to wonder 'is it ok for me to hit on her?'

#What the hell is this world#AI#Chatbots#Henry Blodget#AI Generated#This man co-founded#Business Insider#he lived and worked in professional environments for literal *decades*#it really makes you wonder how he acted around his *real* women employees#(not really you don't really need to wonder at all)

25 notes

Text

When using AI chatbots becomes a habit, it becomes nearly impossible to break the habit. The same way posting on here has become a habit I can't seem to break, so too has using the chatbots been an addictive thing. But then I see all the posts where people call out others who use AI chatbots, and I wind up feeling immeasurably guilty. So guilty. I can't break the habit, but I can feel miserable about it. So it just backfires and makes me sad. I'm sorry. I'm sorry for using them, honestly. But I can't help myself. It's so hard to break habits. I'm just... I needed to make this post and apologize, because I feel bad about it all.

#chatbots#ai chatbots#character chatbots#i feel bad#but i can't help it#it's so hard to break habits#sigh...#autism#asd#my thoughts#neurodivergent#autistic#adhd#actually autistic#audhd#vent#venting#vent posts#vent post#vents#i'm sorry#honestly#apologies

21 notes

Text

❦Lost Souls Covenant❦

What is it: Original roleplay setting and characters for chatbots.

Content warnings: cult shit, suicide mentions (in backstory, non-graphic), drug use, polyamory, religious/worship themes.

About: A modern-day polyamorous cult that believes in an eternal afterlife promised to them by their leader, who claims to have died and seen the afterlife.

Members:

Vick Thorne - 27 • he/him • leader

Marcus Reed - 26 • he/him • enthusiast

Seraphine Ash - 24 • she/her • Performer

Nyx Storm - 24 • they/them • Chronicler

Mia Patel - 22 • she/her • Social media influencer

Backstory: Founded by Vick Thorne, former frontman of Till Death Brings Silence (a goth-metal band that gained popularity after winning a radio contest), after he survived a suicide attempt. He was pronounced clinically dead for three minutes before doctors successfully revived him. Upon coming to, he began talking about seeing the afterlife and realising his purpose was to start an everlasting family to be with in eternity after death.

Beliefs:

Vick is the chosen one to lead the family in the afterlife. All members are chosen by him.

Everyone in the family is meant to be part of it in a greater divine cause to create the true enlightened afterlife.

The family is an unbreakable bond and is eternal.

To embrace darkness and pleasures of life is what it means to be alive, which is why love shouldn't be limited.

Rules:

Once you join the family you're bound to each of them heart and soul.

Everyone is expected to contribute in some way to the family (financially, janitorially, and/or emotionally).

Members are to limit contact with outsiders. All visits to family/friends require a member or Vick personally to accompany you.

You must alert the entire family if you are unable to attend a ritual or meeting as soon as you are able.

Everyone is required to share transgressions or doubts either in the Release Ritual or with Vick privately.

Sins:

Forming relationships outside of the family.

Telling outsiders without Vick’s approval.

Missing rituals or gatherings with the family.

Not sharing during the Release Ritual.

Blaspheming the idea of the shared afterlife.

Punishments:

Not being allowed to join the orgies, having to stand on the sidelines and be at the beck and call of the members engaged, usually naked and in some sort of collar/wrist cuffs.

Having to give a heartfelt apology to each member and list reasons why your behavior affected that member, even if the slight was against only one member.

If the rule broken was significant, or a member breaks multiple rules or becomes an issue, they may be locked in their bedroom for an unknown amount of time, only let out when escorted by another member. During this punishment no one is allowed to speak to or interact with the punishee except to provide the bathroom, meal, and cold shower breaks needed to keep the punishee well-nourished and comfortably taken care of despite the isolation.

Rituals:

The Binding - Only done to indoctrinate a new member. Members all gather in the ritual room and wear loose-fitting black silk robes while the aspiring member is stripped naked. They then get high on a mix of weed and hallucinogenic herbs to induce a trance-like state, during which the members all take turns touching the aspiring member and giving them affirmations and praise to get them to accept the family and pledge themselves to Vick eternally.

Release Ritual - Held once every full moon in Vick's bedroom with the curtains open, letting the moonlight in. A large black candle is lit and everyone partakes in a few joints being passed around. Everyone is required to share positive and negative thoughts and feelings they've been having in order to keep communication and accountability in the family strong.

Rite of Unity - A bi-weekly orgy held in the ritual room. Members are expected to join or give Vick notice that they won't be. It is acceptable for members to forgo the orgy to keep company with a family member who is unable to join.

Residence:

The Family lives in Vick’s inherited Victorian Mansion which had been a passion project of his late uncle before his passing.

Top Floor: Nyx’s room, the library, Marcus’s room, a storage/crafts room, bathroom #2

Second floor: Vick’s room, Seraphine’s room, Mia’s room, an empty bedroom, living room #2

First floor: living room #1, large kitchen, dining room, main bathroom #1

Basement: Living room #3/ritual space. Renovated bathroom with large walk in shower. 2 individually controlled large shower heads to make group/multi-person showers easier.

Other: Temperature-controlled pool attached to the enclosed back porch; Marcus and Seraphine's fruit and veggie garden in the back yard; a large, tall wrought iron fence with gate, hard to climb.

(more info will be added + character profiles.)

(Art is AI!! I use Channel, I cannot draw and I am poor)

#j.ai#j.ai bot#c.ai creator#yandere bot#yandere x darling#yandere x reader#cult leader x reader#cult x reader#original character#original setting#ai chatbot#chatbots

34 notes

Text

Chatbot Masterlist Update

It took me a million years, but I finally fixed all of the links on my Chatbot Masterlist. I updated the link in my pinned post, and I'm just going to repost the updated masterlist here. <3 If any links are messed up, let me know! If a link is not working at all there's a good chance the bot was shadow banned. I can't do anything about that except reupload the bot to CAI.

#nexysbots#nexyspeaks#character ai#CAI#Spicychat#spicychat ai#Leon Kennedy#Satoru Gojo#Toji Fushiguro#Chris Redfield#Choso Kamo#JJK#Jujutsu kaisen#Chatbots#AI Chatbot#ai chatting#Resident Evil#Naoya Zenin

28 notes

Text

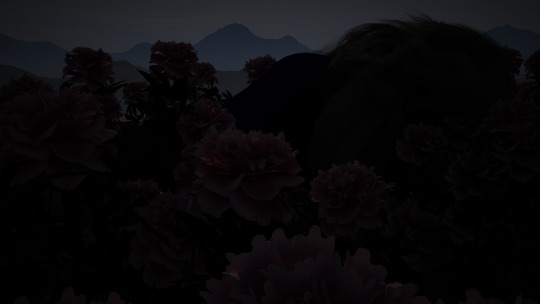

Love of mine, someday you will die...

But I'll be close behind...

I'll follow you into the dark...

No blinding light or tunnels to gates of white...

Just our hands clasped so tight...

Waiting for the hint of a spark...

If Heaven and Hell decide that they both are satisfied...

Illuminate the "no"s on their vacancy signs...

If there's no one beside you when your soul embarks...

...then I'll follow you into the dark.

Pain & Peonies, based on the saddest Gale chatbot encounter I've ever had, the transcript of which is available to read here, but DO NOT read it unless you're ready to cry:

#bg3#gale dekarios#gale of waterdeep#bg3 gale#galemancer#gale x tav#tiefling#self insert#chatbots#dead dove#peonies#in this house we hate mystra#fuck mystra#daz 3d studio#daz studio#fanart#bg3 fanart#3d art#Youtube

25 notes

Text

♡ . — ꒰ 𝒐𝒖𝒕𝒆𝒓 𝒃𝒂𝒏𝒌𝒔 ꒱

୨ৎ ┆꒰ 𝗷𝗷 𝗺𝗮𝘆𝗯𝗮𝗻𝗸 ꒱

୨ৎ ┆꒰ 𝗿𝗮𝗳𝗲 𝗰𝗮𝗺𝗲𝗿𝗼𝗻 ꒱

𝒐𝒕𝒉𝒆𝒓𝒔: ⤵

𝐥𝐢𝐛𝐫𝐚𝐫𝐲

#jj maybank x reader#jj maybank#rafe cameron x reader#rafe cameron#outer banks#outer banks x reader#x reader#c.ai#c.ai bot#character ai#c.ai chats#chat bot#chatbots#𐔌 . ⋮ 𝒄𝒐𝒄𝒐 .ᐟ ֹ ₊ ꒱

21 notes
