#azure automation

Explore tagged Tumblr posts


How to Deploy Azure Resources Using Azure Bicep

In this article, I show you how to deploy Azure resources using Azure Bicep, a domain-specific language (DSL) for declaratively deploying Azure resources. It aims to simplify creating and managing your Azure infrastructure by offering several advantages over the traditional method of using Azure Resource Manager (ARM) templates. Azure Bicep is used to deploy resources such as…


#automation#Azure#Azure Automation#Azure Bicep#Azure Resource Manager Template#Azure Storage Account#Azure Subscription#Azure Virtual Machines#Resource Group

0 notes


Unlocking the Full Power of Hybrid Runbooks for Azure Automation

In today's rapidly evolving cloud computing landscape, mastering the art of automation is crucial. 'Unlocking the Power of Hybrid Runbooks for Azure Automation' dives deep into how Azure Automation can revolutionize the way you manage and automate cloud.

In the ever-evolving landscape of cloud computing, efficiency and automation are not just buzzwords but essential strategies for managing complex cloud environments. Azure Automation emerges as a pivotal tool in this realm, offering a robust, cloud-based automation service that helps you focus on work that adds business value. By automating frequent, time-consuming, and error-prone cloud…


#Automation Strategies#AzCopy#Azure Automation#Azure services#Azure VM Management#Cloud Computing#Cloud Management#Cloud Security#Data management#Hybrid Runbook Workers#IT Automation#Operational Efficiency#PowerShell Scripting#Virtual Machines

0 notes


[Azure] Pause and Resume Fabric, Embedded, or AAS with SimplePBI

Fabric arrived a little over a month ago and it keeps making waves. A capacity with all the features of Premium and more, but billed pay-as-you-go the way Power BI Embedded is, strikes me as excellent.

In this article I'll cover the latest update to the SimplePBI Python library, which lets you pause and/or resume BI resources. If you use Analysis Services, Power BI Embedded, or Fabric and want to save money while the tools are idle, this code will help you run that action. Afterwards we'll see how to add it to a schedule in Azure so that it runs automatically.

Prerequisites

You need two things first.

1- SimplePBI version 0.1.4 or later. That version added the azpause module (with its Azpause class) that we'll rely on. To upgrade, you can use pip:

pip install simplepbi --upgrade

2- An app registered in Azure, with a secret created, to use as a service principal. We'll copy the Tenant ID, the Client (App) ID, and the generated secret.

Execution

To start, go to the Azure resource (Fabric, PBI Embedded, or AAS) and, under Access control (IAM), grant the service principal (the registered app) the “Contributor” role. That role lets it run actions such as starting and stopping the resource. From then on we're free to run this simple code.

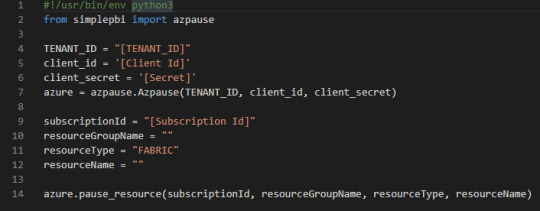

    from simplepbi import azpause

    # Initialize the object, authenticating against Azure
    azure = azpause.Azpause(TENANT_ID, client_id, client_secret)

    # Run the object's method to resume or pause the resource
    azure.resume_resource(subscriptionId, resourceGroupName, resourceType, resourceName)
    azure.pause_resource(subscriptionId, resourceGroupName, resourceType, resourceName)

It's that simple, three lines: import the library, authenticate by creating the object, and call the object's method with values we can copy from the resource's “Overview” page:

subscriptionId: the ID of the subscription, not of the tenant.

resourceGroupName: name of the resource group where the resource was created.

resourceType: type of resource; accepts one of three values: “FABRIC”, “PBI”, or “AAS”.

resourceName: name of the AAS server or of the Fabric/Embedded capacity.
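The parameter rules above can be checked before any call reaches Azure. The following is a minimal sketch, not part of SimplePBI: `set_resource_state` and `VALID_RESOURCE_TYPES` are hypothetical names, and it assumes the library expects exactly the uppercase values listed. The client is passed in, so any object exposing `pause_resource` and `resume_resource` (such as the `Azpause` object created earlier) will do.

```python
# Hypothetical helper (not part of SimplePBI): validate the arguments before
# delegating to the pause_resource / resume_resource methods of the client.
VALID_RESOURCE_TYPES = {"FABRIC", "PBI", "AAS"}


def set_resource_state(client, action, subscription_id, resource_group_name,
                       resource_type, resource_name):
    """Pause or resume a resource through an Azpause-like client."""
    if action not in ("pause", "resume"):
        raise ValueError(f"action must be 'pause' or 'resume', got {action!r}")
    if resource_type not in VALID_RESOURCE_TYPES:
        raise ValueError(
            f"resourceType must be one of {sorted(VALID_RESOURCE_TYPES)}, "
            f"got {resource_type!r}"
        )
    # Pick the matching method and forward the four positional arguments.
    method = client.pause_resource if action == "pause" else client.resume_resource
    return method(subscription_id, resource_group_name, resource_type, resource_name)
```

Failing early on a mistyped resource type keeps a scheduled run from silently doing nothing useful.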

Link to the repo and its docs: https://github.com/ladataweb/SimplePBI/blob/main/AzPauseResume.md

Automating It in Azure

One of the best uses of this action is to schedule those lines to run during the windows when we know the resource is idle. We could do that locally with Windows Task Scheduler, or in Azure so we don't depend on a VM. Azure offers several services for this; Azure Functions, for example. In my case I'll show the example with an Automation account, creating a runbook.

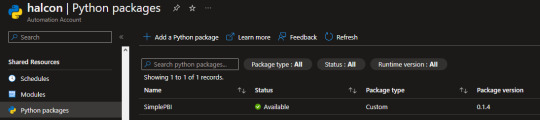

En el portal de Azure crearemos una cuenta de automatización o en ingles Automation Account. El código que usaremos estará en Python 3.8. Una vez creada la cuenta busquemos la opción Python Packages donde agregaremos la librería:

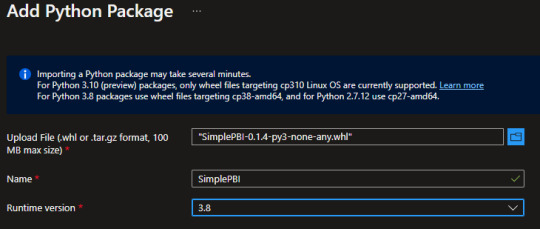

Para agregar la librería primero descargaremos el archivo Wheel de SimplePBI. Pueden encontrarlo en: https://pypi.org/project/SimplePBI/#files

Con el archivo descargado basta con seleccionar “Add a Python Package” con signo +. Tras añadir el archivo “whl” debería reconocer el nombre y ustedes seleccionen 3.8 en la versión de python

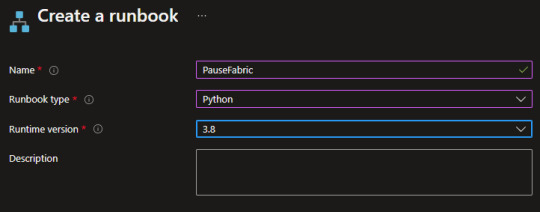

Todo esto es para poder importar simplepbi en el código de nuestro runbook. Luego de cargar la librería, que puede tardar varios minutos, crearemos un runbook en el menú de la izquierda dentro de la cuenta de automatización

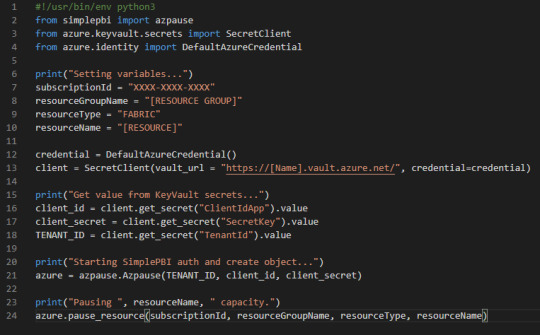

El ejemplo lo haremos con el Pausado. El código sería igual para el Resumir el servicio solo que cambiaría el último método. Creado nos guiará a una interfaz para comenzar a escribir nuestro código Python. Esto sería bastante sencillo. Algo así:
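A minimal sketch of what such a pause runbook could look like, reusing only the `Azpause` and `pause_resource` calls shown earlier. Every ID below is a placeholder and `pause_capacity` is a hypothetical helper name. The module is passed in as an argument so the function can be exercised without the package installed.

```python
# Sketch of a pause runbook. All values below are placeholders (assumptions),
# to be replaced with the IDs copied from the Azure portal.
TENANT_ID = "<tenant-id>"
CLIENT_ID = "<app-client-id>"
CLIENT_SECRET = "<app-secret>"  # hard-coded here only for illustration
SUBSCRIPTION_ID = "<subscription-id>"
RESOURCE_GROUP = "<resource-group-name>"
RESOURCE_TYPE = "FABRIC"  # or "PBI" / "AAS"
RESOURCE_NAME = "<capacity-or-server-name>"


def pause_capacity(azpause_module):
    """Authenticate and pause the configured resource.

    azpause_module is expected to be simplepbi.azpause (or a stand-in
    exposing the same Azpause class).
    """
    azure = azpause_module.Azpause(TENANT_ID, CLIENT_ID, CLIENT_SECRET)
    return azure.pause_resource(
        SUBSCRIPTION_ID, RESOURCE_GROUP, RESOURCE_TYPE, RESOURCE_NAME
    )


def main():
    # Imported lazily; requires the package uploaded to the Automation account.
    from simplepbi import azpause

    pause_capacity(azpause)

# In the runbook, finish with: main()
```

For the resume runbook, the same structure applies with `resume_resource` as the final call.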

However, remember that exposing keys in code is a serious security flaw. The better option is to create an Azure Key Vault to store our secrets or IDs so they aren't exposed. To do that, create the Key Vault resource, add your account as Key Vault Administrator, and create the secret. If you want to learn more, you can look up examples or follow the training: https://learn.microsoft.com/en-us/training/modules/configure-and-manage-azure-key-vault/

With Key Vault it would look like this:
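One way the Key Vault variant might be written is sketched below, using `SecretClient` from the `azure-keyvault-secrets` package and `DefaultAzureCredential` from `azure-identity`. The vault URL, the secret names, and the `load_credentials` helper are assumptions for illustration. It also assumes both packages have been added to the Automation account and that the runbook's identity is allowed to read secrets from the vault.

```python
# Sketch only: the vault URL and secret names are hypothetical placeholders.
VAULT_URL = "https://<your-vault-name>.vault.azure.net"
SECRET_NAMES = {
    "tenant_id": "tenant-id",
    "client_id": "client-id",
    "client_secret": "client-secret",
}


def load_credentials(secret_client, secret_names=SECRET_NAMES):
    """Return a dict of credential values read from Key Vault.

    secret_client is expected to behave like
    azure.keyvault.secrets.SecretClient, i.e. get_secret(name).value.
    """
    return {key: secret_client.get_secret(name).value
            for key, name in secret_names.items()}


def main():
    # Imported lazily; these packages must be available to the runbook.
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient
    from simplepbi import azpause

    client = SecretClient(vault_url=VAULT_URL, credential=DefaultAzureCredential())
    creds = load_credentials(client)
    azure = azpause.Azpause(
        creds["tenant_id"], creds["client_id"], creds["client_secret"]
    )
    # Placeholders: replace with the values from the resource's Overview page.
    azure.pause_resource(
        "<subscription-id>", "<resource-group-name>", "FABRIC", "<capacity-name>"
    )

# In the runbook, finish with: main()
```

With this layout no secret value appears in the runbook source; only the names of the secrets do.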

Save and publish. Then we can hit play to check that everything works correctly.

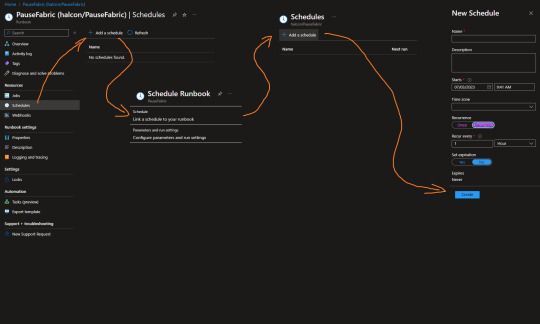

The last step is to schedule the script run. If we already know how the resource is used, we know its hours. It might be shut down at night or on weekends. To set this up, go to the runbook, find “Schedule” in the menu, and follow the steps shown in the image:

That way we can configure recurring runs of the code to make sure everything stays in order.
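The schedule itself is configured in the portal, but the "nights and weekends" rule can also be written as a small guard inside the runbook, so that a run triggered at the wrong time does nothing. This is a sketch under assumed hours: the Monday to Friday, 08:00 to 20:00 window and the `should_be_paused` name are hypothetical, not taken from the post.

```python
from datetime import datetime, time

# Assumed working window (hypothetical): Monday-Friday, 08:00-20:00 local time.
WORK_START = time(8, 0)
WORK_END = time(20, 0)


def should_be_paused(now: datetime) -> bool:
    """Return True when the capacity is expected to be idle."""
    is_weekend = now.weekday() >= 5  # 5 = Saturday, 6 = Sunday
    outside_hours = not (WORK_START <= now.time() < WORK_END)
    return is_weekend or outside_hours
```

A pause runbook could call `should_be_paused(datetime.now())` first and skip the pause when it returns False; note that the time zone of `datetime.now()` inside the runbook sandbox would need to be checked.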

And that's how we finish building our pause for Fabric, Power BI Embedded, or Azure Analysis Services using SimplePBI. You'll most likely need to repeat the process to “resume” the resource so that it starts up when you need it.

#azure#azure automation#python#power bi python#microsoft fabric#power bi embedded#azure analysis services#azure tips#azure training#azure tutorial#ladataweb#simplepbi

0 notes


The Best DevOps Development Team in India | Boost Your Business with Connect Infosoft

Please Like, Share, Subscribe, and Comment to us.

Our experts are pros at making DevOps work seamlessly for businesses big and small. From making things run smoother to saving time with automation, we've got the skills you need. Ready to level up your business?

#connectinfosofttechnologies#connectinfosoft#DevOps#DevOpsDevelopment#DevOpsService#DevOpsTeam#DevOpsSolutions#DevOpsCompany#DevOpsDeveloper#CloudComputing#CloudService#AgileDevOps#ContinuousIntegration#ContinuousDelivery#InfrastructureAsCode#Automation#Containerization#Microservices#CICD#DevSecOps#CloudNative#Kubernetes#Docker#AWS#Azure#GoogleCloud#Serverless#ITOps#TechOps#SoftwareDevelopment

2 notes


Might just start blogging about my silly little gbf teams I love most of them a whole lot

#I’m just really having a good time with gbf now that I’ve gotten the most annoying parts automated#(seriously every beginner guide just glosses over the pro skip function)#all it took was three separate attempts but now that I’m having fun#it really recaptures the magic of what made me play azur lane for so long#(I still love azur lane but it’s lost a little of its sheen now that it’s just dailies with a new ship I can really tism about every#couple months)

2 notes


Generative AI, innovation, creativity & what the future might hold - CyberTalk

New Post has been published on https://thedigitalinsider.com/generative-ai-innovation-creativity-what-the-future-might-hold-cybertalk/


Stephen M. Walker II is CEO and Co-founder of Klu, an LLM App Platform. Prior to founding Klu, Stephen held product leadership roles at Productboard, Amazon, and Capital One.

Are you excited about empowering organizations to leverage AI for innovative endeavors? So is Stephen M. Walker II, CEO and Co-Founder of the company Klu, whose cutting-edge LLM platform empowers users to customize generative AI systems in accordance with unique organizational needs, resulting in transformative opportunities and potential.

In this interview, Stephen not only discusses his innovative vertical SaaS platform, but also addresses artificial intelligence, generative AI, innovation, creativity and culture more broadly. Want to see where generative AI is headed? Get perspectives that can inform your viewpoint, and help you pave the way for a successful 2024. Stay current. Keep reading.

Please share a bit about the Klu story:

We started Klu after seeing how capable the early versions of OpenAI’s GPT-3 were when it came to common busy-work tasks related to HR and project management. We began building a vertical SaaS product, but needed tools to launch new AI-powered features, experiment with them, track changes, and optimize the functionality as new models became available. Today, Klu is actually our internal tools turned into an app platform for anyone building their own generative features.

What kinds of challenges can Klu help solve for users?

Building an AI-powered feature that connects to an API is pretty easy, but maintaining that over time and understanding what’s working for your users takes months of extra functionality to build out. We make it possible for our users to build their own version of ChatGPT, built on their internal documents or data, in minutes.

What is your vision for the company?

The founding insight that we have is that there’s a lot of busy work that happens in companies and software today. I believe that over the next few years, you will see each company form AI teams, responsible for the internal and external features that automate this busy work away.

I’ll give you a good example for managers: Today, if you’re a senior manager or director, you likely have two layers of employees. During performance management cycles, you have to read feedback for each employee and piece together their strengths and areas for improvement. What if, instead, you received a briefing for each employee with these already synthesized and direct quotes from their peers? Now think about all of the other tasks in business that take several hours and that most people dread. We are building the tools for every company to easily solve this and bring AI into their organization.

Please share a bit about the technology behind the product:

In many ways, Klu is not that different from most other modern digital products. We’re built on cloud providers, use open source frameworks like Nextjs for our app, and have a mix of Typescript and Python services. But with AI, what’s unique is the need to lower latency, manage vector data, and connect to different AI models for different tasks. We built on Supabase using Pgvector to build our own vector storage solution. We support all major LLM providers, but we partnered with Microsoft Azure to build a global network of embedding models (Ada) and generative models (GPT-4), and use Cloudflare edge workers to deliver the fastest experience.

What innovative features or approaches have you introduced to improve user experiences/address industry challenges?

One of the biggest challenges in building AI apps is managing changes to your LLM prompts over time. The smallest changes might break for some users or introduce new and problematic edge cases. We’ve created a system similar to Git in order to track version changes, and we use proprietary AI models to review the changes and alert our customers if they’re making breaking changes. This concept isn’t novel for traditional developers, but I believe we’re the first to bring these concepts to AI engineers.
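To make the Git comparison concrete, here is a minimal sketch of content-addressed prompt versioning. It is not Klu's implementation; `PromptHistory`, `PromptVersion`, and the 12-character ID length are assumptions chosen for illustration. Each version stores a pointer to its parent, so the history can be walked back the way `git log` walks commits.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    text: str
    parent: Optional[str]  # id of the previous version, None for the first
    version_id: str


@dataclass
class PromptHistory:
    versions: dict = field(default_factory=dict)
    head: Optional[str] = None

    def commit(self, text: str) -> str:
        """Store a new prompt version and return its content-derived id."""
        # The id covers the parent id and the text, like a commit hash.
        payload = f"{self.head or ''}\n{text}".encode("utf-8")
        version_id = hashlib.sha256(payload).hexdigest()[:12]
        self.versions[version_id] = PromptVersion(text, self.head, version_id)
        self.head = version_id
        return version_id

    def log(self) -> list:
        """Return prompt texts from newest to oldest by following parents."""
        out, cursor = [], self.head
        while cursor is not None:
            version = self.versions[cursor]
            out.append(version.text)
            cursor = version.parent
        return out
```

Detecting whether a change is breaking, which the interview attributes to proprietary models, is outside the scope of this sketch.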

How does Klu strive to keep LLMs secure?

Cyber security is paramount at Klu. From day one, we created our policies and system monitoring for SOC2 auditors. It’s crucial for us to be a trusted partner for our customers, but it’s also top of mind for many enterprise customers. We also have a data privacy agreement with Azure, which allows us to offer GDPR-compliant versions of the OpenAI models to our customers. And finally, we offer customers the ability to redact PII from prompts so that this data is never sent to third-party models.
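As a rough illustration of redacting PII before a prompt leaves your system, the sketch below masks e-mail addresses and phone-like numbers with regular expressions. The patterns and the `redact_pii` name are assumptions; this is not how Klu does it, and production redaction would need much broader coverage (names, addresses, IDs).

```python
import re

# Illustrative patterns only; real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(prompt: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    prompt = EMAIL_RE.sub("[EMAIL]", prompt)
    return PHONE_RE.sub("[PHONE]", prompt)
```

The redacted string, not the original, is what would be forwarded to a third-party model.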

Internally we have pentest hackathons to understand where things break and to proactively understand potential threats. We use classic tools like Metasploit and Nmap, but the most interesting results have been finding ways to mitigate unintentional denial of service attacks. We proactively test what happens when we hit endpoints with hundreds of parallel requests per second.
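A burst test of this kind can be sketched with a thread pool. The `burst` function below is hypothetical and deliberately takes any zero-argument callable in place of a real HTTP call, so it runs on its own; it fires the requests concurrently and tallies the outcomes, without any rate control.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def burst(send_request, total_requests=200, max_workers=50):
    """Fire total_requests calls concurrently and tally the outcomes.

    send_request is any zero-argument callable returning a status code;
    exceptions are counted under the key "error".
    """
    def attempt(_):
        try:
            return send_request()
        except Exception:
            return "error"

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return Counter(pool.map(attempt, range(total_requests)))
```

In practice `send_request` would wrap a call to an endpoint you own, and the resulting counts (for example how many calls were rejected) show where throttling kicks in.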

What are your perspectives on the future of LLMs (predictions for 2024)?

This (2024) will be the year for multi-modal frontier models. A frontier model is just a foundational model that is leading the state of the art for what is possible. OpenAI will roll out GPT-4 Vision API access later this year and we anticipate this exploding in usage next year, along with competitive offerings from other leading AI labs. If you want to preview what will be possible, ChatGPT Pro and Enterprise customers have access to this feature in the app today.

Early this year, I heard leaders worried about hallucinations, privacy, and cost. At Klu and across the LLM industry, we found solutions for this and we continue to see a trend of LLMs becoming cheaper and more capable each year. I always talk to our customers about not letting these stop your innovation today. Start small, and find the value you can bring to your customers. Find out if you have hallucination issues, and if you do, work on prompt engineering, retrieval, and fine-tuning with your data to reduce this. You can test these new innovations with engaged customers that are ok with beta features, but will greatly benefit from what you are offering them. Once you have found market fit, you have many options for improving privacy and reducing costs at scale – but I would not worry about that in the beginning, it’s premature optimization.

LLMs introduce a new capability into the product portfolio, but it’s also an additional system to manage, monitor, and secure. Unlike other software in your portfolio, LLMs are not deterministic, and this is a mindset shift for everyone. The most important thing for CSOs is to have a strategy for enabling their organization’s innovation. Just like any other software system, we are starting to see the equivalent of buffer exploits, and expect that these systems will need to be monitored and secured if connected to data that is more important than help documentation.

Your thoughts on LLMs, AI and creativity?

Personally, I’ve had so much fun with GenAI, including image, video, and audio models. I think the best way to think about this is that the models are better than the average person. For me, I’m below average at drawing or creating animations, but I’m above average when it comes to writing. This means I can have creative ideas for an image, the model will bring these to life in seconds, and I am very impressed. But for writing, I’m often frustrated with the boring ideas, although it helps me find blind spots in my overall narrative. The reason for this is that LLMs are just bundles of math finding the most probable answer to the prompt. Human creativity —from the arts, to business, to science— typically comes from the novel combinations of ideas, something that is very difficult for LLMs to do today. I believe the best way to think about this is that the employees who adopt AI will be more productive and creative— the LLM removes their potential weaknesses, and works like a sparring partner when brainstorming.

You and Sam Altman agree on the idea of rethinking the global economy. Say more?

Generative AI greatly changes worker productivity, including the full automation of many tasks that you would typically hire more people to handle as a business scales. The easiest way to think about this is to look at what tasks or jobs a company currently outsources to agencies or vendors, especially ones in developing nations where skill requirements and costs are lower. Over this coming decade you will see work that used to be outsourced to global labor markets move to AI and move under the supervision of employees at an organization’s HQ.

As the models improve, workers will become more productive, meaning that businesses will need fewer employees performing the same tasks. Solo entrepreneurs and small businesses have the most to gain from these technologies, as they will enable them to stay smaller and leaner for longer, while still growing revenue. For large, white-collar organizations, the idea of measuring management impact by the number of employees under a manager’s span of control will quickly become outdated.

While I remain optimistic about these changes and the new opportunities that generative AI will unlock, it does represent a large change to the global economy. Klu met with UK officials last week to discuss AI Safety and I believe the countries investing in education, immigration, and infrastructure policy today will be best suited to contend with these coming changes. This won’t happen overnight, but if we face these changes head on, we can help transition the economy smoothly.

Is there anything else that you would like to share with the CyberTalk.org audience?

Expect to see more security news regarding LLMs. These systems are like any other software and I anticipate both poorly built software and bad actors who want to exploit these systems. The two exploits that I track closely are very similar to buffer overflows. One enables an attacker to potentially bypass and hijack the prompt sent to an LLM; the other bypasses the model’s alignment tuning, which prevents it from answering questions like, “how can I build a bomb?” We’ve also seen projects like GPT4All leak API keys to give people free access to paid LLM APIs. These leaks typically come from the keys being stored in the front-end or local cache, which is a security risk completely unrelated to AI or LLMs.

#2024#ai#AI-powered#Amazon#animations#API#APIs#app#apps#Art#artificial#Artificial Intelligence#Arts#audio#automation#azure#Building#Business#cache#CEO#chatGPT#Cloud#cloud providers#cloudflare#Companies#Creative Ideas#creativity#cutting#cyber#cyber criminals

3 notes


Azure’s Evolution: What Every IT Pro Should Know About Microsoft’s Cloud

IT professionals need to stay ahead of the curve in today's ever-changing world of technology. The cloud has become an integral part of modern IT infrastructure, and one of the leading players in this domain is Microsoft Azure. Azure’s evolution over the years has been nothing short of remarkable, making it essential for IT pros to understand its journey and keep pace with its innovations. In this blog, we’ll take you on a journey through Azure’s transformation, exploring its history, service portfolio, global reach, security measures, and much more. By the end of this article, you’ll have a comprehensive understanding of what every IT pro should know about Microsoft’s cloud platform.

Historical Overview

Azure’s Humble Beginnings

Microsoft Azure was officially launched in February 2010 as “Windows Azure.” It began as a platform-as-a-service (PaaS) offering primarily focused on providing Windows-based cloud services.

The Azure Branding Shift

In 2014, Microsoft rebranded Windows Azure to Microsoft Azure to reflect its broader support for various operating systems, programming languages, and frameworks. This rebranding marked a significant shift in Azure’s identity and capabilities.

Key Milestones

Over the years, Azure has achieved numerous milestones, including the introduction of Azure Virtual Machines, Azure App Service, and the Azure Marketplace. These milestones have expanded its capabilities and made it a go-to choice for businesses of all sizes.

Expanding Service Portfolio

Azure’s service portfolio has grown exponentially since its inception. Today, it offers a vast array of services catering to diverse needs:

Compute Services: Azure provides a range of options, from virtual machines (VMs) to serverless computing with Azure Functions.

Data Services: Azure offers data storage solutions like Azure SQL Database, Cosmos DB, and Azure Data Lake Storage.

AI and Machine Learning: With Azure Machine Learning and Cognitive Services, IT pros can harness the power of AI for their applications.

IoT Solutions: Azure IoT Hub and IoT Central simplify the development and management of IoT solutions.

Azure Regions and Global Reach

Azure boasts an extensive network of data centers spread across the globe. This global presence offers several advantages:

Scalability: IT pros can easily scale their applications by deploying resources in multiple regions.

Redundancy: Azure’s global datacenter presence ensures high availability and data redundancy.

Data Sovereignty: Choosing the right Azure region is crucial for data compliance and sovereignty.

Integration and Hybrid Solutions

Azure’s integration capabilities are a boon for businesses with hybrid cloud needs. Azure Arc, for instance, allows you to manage on-premises, multi-cloud, and edge environments through a unified interface. Azure’s compatibility with other cloud providers simplifies multi-cloud management.

Security and Compliance

Azure has made significant strides in security and compliance. It offers features like Azure Security Center, Azure Active Directory, and extensive compliance certifications. IT pros can leverage these tools to meet stringent security and regulatory requirements.

Azure Marketplace and Third-Party Offerings

Azure Marketplace is a treasure trove of third-party solutions that complement Azure services. IT pros can explore a wide range of offerings, from monitoring tools to cybersecurity solutions, to enhance their Azure deployments.

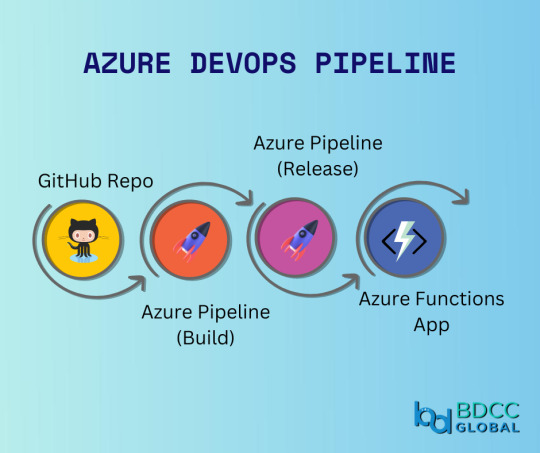

Azure DevOps and Automation

Automation is key to efficiently managing Azure resources. Azure DevOps services and tools facilitate continuous integration and continuous delivery (CI/CD), ensuring faster and more reliable application deployments.

Monitoring and Management

Azure offers robust monitoring and management tools to help IT pros optimize resource usage, troubleshoot issues, and gain insights into their Azure deployments. Best practices for resource management can help reduce costs and improve performance.

Future Trends and Innovations

As the technology landscape continues to evolve, Azure remains at the forefront of innovation. Keep an eye on trends like edge computing and quantum computing, as Azure is likely to play a significant role in these domains.

Training and Certification

To excel in your IT career, consider pursuing Azure certifications. ACTE Institute offers a range of certifications, such as the Microsoft Azure course, to validate your expertise in Azure technologies.

In conclusion, Azure’s evolution is a testament to Microsoft’s commitment to cloud innovation. As an IT professional, understanding Azure’s history, service offerings, global reach, security measures, and future trends is paramount. Azure’s versatility and comprehensive toolset make it a top choice for organizations worldwide. By staying informed and adapting to Azure’s evolving landscape, IT pros can remain at the forefront of cloud technology, delivering value to their organizations and clients in an ever-changing digital world. Embrace Azure’s evolution, and empower yourself for a successful future in the cloud.

#microsoft azure#tech#education#cloud services#azure devops#information technology#automation#innovation

2 notes


Boost your team's productivity with Azure DevOps pipeline. Automate your development process and focus on what really matters - building great software!

#Azure#DevOps#azuredevOps#pipeline#DevOpspipeline#Automation#developmentprocess#devops consulting services#aws consultants#awspartners#aws#bdccglobal#devopstrends#top aws consulting partners#bdcc#devops consulting

2 notes


It's 2025. Still waiting to migrate your business to the cloud?

Lead your business by leveraging the cloud. Techjour helps you get to market faster, reduce technology costs, scale your business, and benefit from built-in advanced security.

#google cloud#aws cloud#aws#startup#microsoft azure#gitlab#automation#technology#business#trendingnow#trending#technology trends#market trends#2025#cloud solutions#cloud service provider#cloud services#cloudmigration#cloudconsulting#usa news#europe#technology news#technology tips

1 note


#VisualPath offers the best #AzureDataEngineer Training Online to help you master data and AI technologies. Our Microsoft Azure Data Engineer course covers top tools like Matillion, Snowflake, ETL, Informatica, SQL, Power BI, Databricks, and Amazon Redshift. Gain hands-on experience with flexible schedules, recorded sessions, and global access. Learn from industry experts and work on real-world projects. Achieve certification and boost your career in data engineering. Call +91-9989971070 for a free demo!

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit Blog: https://visualpathblogs.com/category/aws-data-engineering-with-data-analytics/

Visit: https://www.visualpath.in/online-azure-data-engineer-course.html

#visualpathedu#testing#automation#selenium#git#github#JavaScript#Azure#CICD#AzureDevOps#playwright#handonlearning#education#SoftwareDevelopment#onlinelearning#newtechnology#software#ITskills#training#trendingcourses#careers#students#typescript

0 notes


Custom AWS Solutions for Modern Enterprises - Atcuality

Amazon Web Services offers an unparalleled ecosystem of cloud computing tools that cater to businesses of all sizes. At ATCuality, we understand that no two companies are the same, which is why we provide custom Amazon Web Services solutions tailored to your specific goals. From designing scalable architectures to implementing cutting-edge machine learning capabilities, our AWS services ensure that your business stays ahead of the curve. The flexibility of Amazon Web Services allows for easy integration with your existing systems, paving the way for seamless growth and enhanced efficiency. Let us help you harness the power of AWS for your enterprise.

#seo marketing#seo services#artificial intelligence#digital marketing#seo agency#iot applications#seo company#ai powered application#azure cloud services#amazon web services#virtual reality#augmented reality agency#augmented human c4 621#augmented and virtual reality market#augmented reality#augmented intelligence#digital services#iotsolutions#iot development services#iot platform#techinnovation#digitaltransformation#automation#iot#iot solutions#iot development company#innovation#erp software#erp system#cloud security services

0 notes


Al-Hiyal Automation LLC

#Al-Hiyal Automation LLC: The Best IT Services Company. Al-Hiyal Automation LLC offers the essential services to meet your needs: 1. Azure Se#The Best IT Services Company

1 note


ChatGPT Gov aims to modernise US government agencies

New Post has been published on https://thedigitalinsider.com/chatgpt-gov-aims-to-modernise-us-government-agencies/


OpenAI has launched ChatGPT Gov, a specially designed version of its AI chatbot tailored for use by US government agencies.

ChatGPT Gov aims to harness the potential of AI to enhance efficiency, productivity, and service delivery while safeguarding sensitive data and complying with stringent security requirements.

“We believe the US government’s adoption of artificial intelligence can boost efficiency and productivity and is crucial for maintaining and enhancing America’s global leadership in this technology,” explained OpenAI.

The company emphasised how its AI solutions present “enormous potential” for tackling complex challenges in the public sector, ranging from improving public health and infrastructure to bolstering national security.

By introducing ChatGPT Gov, OpenAI hopes to offer tools that “serve the national interest and the public good, aligned with democratic values,” while assisting policymakers in responsibly integrating AI to enhance services for the American people.

The role of ChatGPT Gov

Public sector organisations can deploy ChatGPT Gov within their own Microsoft Azure environments, either through Azure’s commercial cloud or the specialised Azure Government cloud.

This self-hosting capability ensures that agencies can meet strict security, privacy, and compliance standards, such as IL5, CJIS, ITAR, and FedRAMP High.

OpenAI believes this infrastructure will not only help facilitate compliance with cybersecurity frameworks, but also speed up internal authorisation processes for handling non-public sensitive data.

The tailored version of ChatGPT incorporates many of the features found in the enterprise version, including:

The ability to save and share conversations within a secure government workspace.

Uploading text and image files for streamlined workflows.

Access to GPT-4o, OpenAI’s state-of-the-art model capable of advanced text interpretation, summarisation, coding, image analysis, and mathematics.

Customisable GPTs, which enable users to create and share specifically tailored models for their agency’s needs.

A built-in administrative console to help CIOs and IT departments manage users, groups, security protocols such as single sign-on (SSO), and more.

These features ensure that ChatGPT Gov is not merely a tool for innovation, but an infrastructure supportive of secure and efficient operations across US public-sector entities.

OpenAI says it’s actively working to achieve FedRAMP Moderate and High accreditations for its fully managed SaaS product, ChatGPT Enterprise, a step that would bolster trust in its AI offerings for government use.

Additionally, the company is exploring ways to expand ChatGPT Gov’s capabilities into Azure’s classified regions for even more secure environments.

“ChatGPT Gov reflects our commitment to helping US government agencies leverage OpenAI’s technology today,” the company said.

A better track record in government than most politicians

Since January 2024, ChatGPT has seen widespread adoption among US government agencies, with over 90,000 users across more than 3,500 federal, state, and local agencies having already sent over 18 million messages to support a variety of operational tasks.

Several notable agencies have highlighted how they are employing OpenAI’s AI tools for meaningful outcomes:

The Air Force Research Laboratory: The lab uses ChatGPT Enterprise for administrative purposes, including improving access to internal resources, basic coding assistance, and boosting AI education efforts.

Los Alamos National Laboratory: The laboratory leverages ChatGPT Enterprise for scientific research and innovation. This includes work within its Bioscience Division, which is evaluating ways GPT-4o can safely advance bioscientific research in laboratory settings.

State of Minnesota: Minnesota’s Enterprise Translations Office uses ChatGPT Team to provide faster, more accurate translation services to multilingual communities across the state. The integration has resulted in significant cost savings and reduced turnaround times.

Commonwealth of Pennsylvania: Employees in Pennsylvania’s pioneering AI pilot programme reported that ChatGPT Enterprise helped them cut the time spent on routine tasks, such as analysing project requirements, by approximately 105 minutes per day on days they used the tool.

These early use cases demonstrate the transformative potential of AI applications across various levels of government.

Beyond delivering tangible improvements to government workflows, OpenAI seeks to foster public trust in artificial intelligence through collaboration and transparency. The company said it is committed to working closely with government agencies to align its tools with shared priorities and democratic values.

“We look forward to collaborating with government agencies to enhance service delivery to the American people through AI,” OpenAI stated.

As other governments across the globe begin adopting similar technologies, America’s proactive approach may serve as a model for integrating AI into the public sector while safeguarding against risks.

Whether supporting administrative workflows, research initiatives, or language services, ChatGPT Gov stands as a testament to the growing role AI will play in shaping the future of effective governance.

(Photo by Dave Sherrill)

Tags: artificial intelligence, azure, chatgpt, chatgpt gov, government, openai, usa

#000#2024#adoption#agents#ai#ai & big data expo#AI AGENTS#AI Chatbot#ai tools#air#air force#America#American#amp#Analysis#applications#approach#Art#Articles#artificial#Artificial Intelligence#automation#azure#Big Data#browser#california#chatbot#chatbots#chatGPT#chatgpt enterprise

Text

What’s the main challenge in your workflow? Share your insights, and we’ll demonstrate how Power Automate can enhance efficiency and streamline your business processes through our comprehensive training course.

Join Now: https://meet.goto.com/707505309

Attend the new online batch of the Power Apps and Power Automate course by Mr. Rajesh.

Demo on: 22/10/2024 @8:00 AM IST

Contact us: +919989971070

Visit us: https://www.visualpath.in/online-powerapps-training.html

#visualpath#microsoft#powerapps#powerbi#powerplatform#dynamics#office#sharepoint#msflow#it#azure#powervirtualagents#microsoftteams#automation#microsoftflow#msdyn#rpa#cloud#msteams#webinar#microsoftdynamics#microsoftpowerapps#online#integrations#microsoftpowerautomate#technology#innovation#cloudcomputing

Text

A cloud service provider offers scalable computing resources that users can access on demand over a network. Amazon Web Services, Microsoft Azure, and Google Cloud are the prominent leaders in the cloud market.

Worldwide market share of cloud service providers:

AWS:

2022 - 33%

2023 - 32%

2024 - 31%

Azure:

2022 - 22% (Q1)

2023 - 23%

2024 - 23% to 24%

GCP:

2022 - 10% (Q1)

2023 - 10%

2024 - 13%

Oracle:

2023 - 2%

2024 - 3%

#oracle#technology#automation#business#startup#aws cloud#google cloud#aws#microsoft azure#gitlab#huawei#devopstools#devops#devopsengineer#techjour
