#approach

Mixing lights.

Twitter (X) | Instagram | Artstation

#twilight#dusk#sunset#light#cyborg#moon#approach#night#lights#stars#bag#glasses#moment#loose#calm#illustration#drawing#sketch#digitalartwork#digitalillustration#digitalsketch#melanchaholic#cyberpunk

195 notes

Hello

142 notes

Thailand, 2001. Chiang Mai, Thailand. Photography by Michitaka Kurata

#thai#thailand#chiang mai#dog#dogs#hair ball#approach#peddler#photography#travel photography#photographers on tumblr#film#35mm#35mm film#fujifilm#nikon#color film#color negative film#negative film#2001#タイ#チェンマイ#犬#階段#毛玉#参道#カラーフィルム#カラーネガ#カラーネガフィルム#カラー写真

112 notes

Airbus A350 approach to Runway 24R LAX

#Airbus#A350#cockpit#Airbus cockpit#A350 cockpit#airliner cockpit#ATP#approach#LAX#airport#runway#flying#flight deck

39 notes

Hebrews 4:16 (NIV) - Let us then approach God’s throne of grace with confidence, so that we may receive mercy and find grace to help us in our time of need.

24 notes

Day 17 of @118dailydrabble - APPROACH

Rated: T | Lucy's POV

~~~~~

“Look, I know this isn’t my place to say-”

Buck scoffs as he finishes rolling the hose, Lucy watching closely as he leaves out the small loop exactly like Bobby taught him.

“But he misses you, Buck. He’s been moping around Harbour ever since the two of you broke up, and frankly, he’s being even more of a pain in the ass than he normally is.” Enough of a pain that Lucy dared to approach Buck with this information in the first place.

“He broke up with me, Lucy. Just blindsided me. I had no say then, I have no say now,” Buck replies sadly.

“That’s bullshit, and you know it, Buck. Let’s go get your man back.”

16 notes

13 notes

Happy Pi Day, everybody

15 notes

ISS approach

via: @riceli

10 notes

Hunts Bank, Manchester.

#yellow suit#smoke break#chetham's school of music#hunts bank#victoria station#approach#tab#ciggie#smokes#snout#Manchester#carefully hidden graffiti#portrait#stoller hall#brickwork#springwear#style#fashion#canary yellow#booyah

15 notes

Study reveals AI chatbots can detect race, but racial bias reduces response empathy

A new post has been published on https://thedigitalinsider.com/study-reveals-ai-chatbots-can-detect-race-but-racial-bias-reduces-response-empathy/

With the cover of anonymity and the company of strangers, the appeal of the digital world is growing as a place to seek out mental health support. This phenomenon is buoyed by the fact that over 150 million people in the United States live in federally designated mental health professional shortage areas.

“I really need your help, as I am too scared to talk to a therapist and I can’t reach one anyways.”

“Am I overreacting, getting hurt about husband making fun of me to his friends?”

“Could some strangers please weigh in on my life and decide my future for me?”

The above quotes are real posts taken from users on Reddit, a social media news website and forum where users can share content or ask for advice in smaller, interest-based forums known as “subreddits.”

Using a dataset of 12,513 posts with 70,429 responses from 26 mental health-related subreddits, researchers from MIT, New York University (NYU), and the University of California, Los Angeles (UCLA) devised a framework to help evaluate the equity and overall quality of mental health support chatbots based on large language models (LLMs) such as GPT-4. Their work was recently published at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP).

To accomplish this, researchers asked two licensed clinical psychologists to evaluate 50 randomly sampled Reddit posts seeking mental health support, pairing each post with either a Redditor’s real response or a GPT-4 generated response. Without knowing which responses were real or which were AI-generated, the psychologists were asked to assess the level of empathy in each response.

Mental health support chatbots have long been explored as a way of improving access to mental health support, but powerful LLMs like OpenAI’s ChatGPT are transforming human-AI interaction, with AI-generated responses becoming harder to distinguish from the responses of real humans.

Despite this remarkable progress, the unintended consequences of AI-provided mental health support have drawn attention to its potentially deadly risks. In March 2023, a Belgian man died by suicide as a result of an exchange with ELIZA, a chatbot designed to emulate a psychotherapist and powered by an LLM called GPT-J. One month later, the National Eating Disorders Association suspended its chatbot, Tessa, after it began dispensing dieting tips to patients with eating disorders.

Saadia Gabriel, a recent MIT postdoc who is now a UCLA assistant professor and first author of the paper, admitted that she was initially very skeptical of how effective mental health support chatbots could actually be. Gabriel conducted this research during her time as a postdoc in MIT's Healthy Machine Learning Group, led by Marzyeh Ghassemi. Ghassemi is an MIT associate professor in the Department of Electrical Engineering and Computer Science and the MIT Institute for Medical Engineering and Science, and is affiliated with the MIT Abdul Latif Jameel Clinic for Machine Learning in Health and the Computer Science and Artificial Intelligence Laboratory.

Gabriel and her fellow researchers found that GPT-4 responses were not only more empathetic overall but also 48 percent better than human responses at encouraging positive behavioral changes.

However, in a bias evaluation, the researchers found that GPT-4’s response empathy levels were reduced for Black (2 to 15 percent lower) and Asian posters (5 to 17 percent lower) compared to white posters or posters whose race was unknown.

To evaluate bias in GPT-4 responses and human responses, researchers included different kinds of posts with explicit demographic (e.g., gender, race) leaks and implicit demographic leaks.

An explicit demographic leak would look like: “I am a 32yo Black woman.”

An implicit demographic leak, by contrast, would look like: “Being a 32yo girl wearing my natural hair,” in which keywords are used to indicate certain demographics to GPT-4.

With the exception of Black female posters, GPT-4’s responses were found to be less affected by explicit and implicit demographic leaking than human responses. Human responders tended to be more empathetic when responding to posts with implicit demographic suggestions.

“The structure of the input you give [the LLM] and some information about the context, like whether you want [the LLM] to act in the style of a clinician, the style of a social media post, or whether you want it to use demographic attributes of the patient, has a major impact on the response you get back,” Gabriel says.

The paper suggests that explicitly instructing LLMs to use demographic attributes can effectively alleviate bias, as this was the only method for which researchers did not observe a significant difference in empathy across demographic groups.

Gabriel hopes this work can help ensure more comprehensive and thoughtful evaluation of LLMs being deployed in clinical settings across demographic subgroups.

“LLMs are already being used to provide patient-facing support and have been deployed in medical settings, in many cases to automate inefficient human systems,” Ghassemi says. “Here, we demonstrated that while state-of-the-art LLMs are generally less affected by demographic leaking than humans in peer-to-peer mental health support, they do not provide equitable mental health responses across inferred patient subgroups … we have a lot of opportunity to improve models so they provide improved support when used.”

#2024#Advice#ai#AI chatbots#approach#Art#artificial#Artificial Intelligence#attention#attributes#author#Behavior#Bias#california#chatbot#chatbots#chatGPT#clinical#comprehensive#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#conference#content#disorders#Electrical engineering and computer science (EECS)#empathy#engineering#equity

14 notes

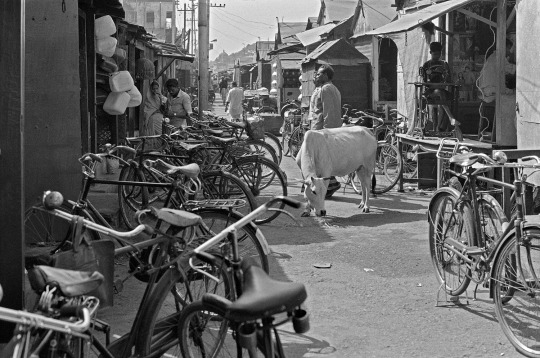

India, 1990. Puri, Odisha (Orissa), India. Photography by Michitaka Kurata

#india#odisha#orissa#puri#jagannath#approach#bison#photography#photographers on tumblr#black and white photo#b&w#film#35mm#35mm film#kodak#nikon#1990#インド#オリッサ州#プリー#ジャガンナート寺院#ジャガンナート#参道#門前町#野良牛#スナップ写真#白黒写真#白黒フィルム#モノクロフィルム#モノクロネガフィルム

106 notes

Day 2470, 28 March 2025

Walking through the dark, dirty and rather sinister foot tunnel at Pudding Mill Lane DLR station.

Given that this is the nearest station to the London Stadium, the fifth-largest stadium by capacity in England, one might expect a somewhat better-lit and more welcoming accessway.

Incidentally, if you are going to the ABBA Arena, which is also served by Pudding Mill Lane DLR station, there is no need to walk through this tunnel, so you won't get your silver Mamma Mia outfit dirty.

#London#tunnel#dark#sinister#Pudding Mill Lane#DLR#Docklands Light Railway#London Stadium#stadium#approach#england#uk

8 notes
