# Map data structure

Text

i love how bones always animates Kunikida with pointy lil teeths- that's so cool and sexy of them - so have this,,, crooked pointy teeth Kunikida,,,, ough yea :sadthumbsup:

(not sure if i actually like this or am just Used To It after staring at it for hours until 5 am - the sketch did not look like him SOLELY bc I couldn't draw his hair right and the smile threw me off LOL - also also,, my requests,,, are open as always- even if u sent before and haven't gotten to it yet I prommy I read and appreciate and will get to them when I have more time )

#she can smile as a treat#idc if it looks ooc LET KUNIKIDA EXPERIENCE JOY LMAO#kite draws#kite watches bsd#bungou stray dogs#bsd#kunikida#kunikida doppo#:dances:#not sure if it looks actually good or I've just Gotten Used to it -#everything looks good if u throw a gradient map on it tho LMAO#inspired by ME and my fucked up lil chompers- I had braces on and off for like a decade and they're still fucky wucky#but they're cool and pointy so who's the real winner?#also this picture might have cost me my cs data structures grade bc I decided to draw it instead of finishing my already late assignment#and studying for my final but low-key who cares#IF ANYONE knows how to code a hashmap from a dynamically allocated array lemme know and I will draw u endless kunikidas for life -#GPA is temporary- Kunikida is forever or whatever KJDHKDJH#curious who actually read tags bc I be writing my life story here#sorry for oversharing guys will happen again </3#but if u are reading this u are cool and hot and im kissing u on the forehead (platonically) mwah -#thank u#this is so cringe fail of me ill shut up now
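For the hashmap question in the tags, one common approach is separate chaining over a dynamically allocated array of buckets. The array doubles and rehashes once it gets too full. Below is a minimal sketch in Java, which is used for every example on this page because a Java collections post follows. The class name `SimpleHashMap`, the starting capacity of 8, and the 0.75 load-factor cutoff are illustrative choices of mine and do not come from any particular assignment spec. In C you would `calloc` the bucket array and `free` the old one during a resize.

```java
import java.util.Objects;

/** A minimal separate-chaining hash map backed by a resizable array of buckets. */
final class SimpleHashMap<K, V> {
    // One node in a bucket's singly linked chain.
    private static final class Node<K, V> {
        final K key;
        V value;
        Node<K, V> next;

        Node(K key, V value, Node<K, V> next) {
            this.key = key;
            this.value = value;
            this.next = next;
        }
    }

    private static final double MAX_LOAD = 0.75; // resize once size exceeds 75% of capacity
    private Node<K, V>[] buckets = newTable(8);
    private int size = 0;

    @SuppressWarnings("unchecked")
    private static <K, V> Node<K, V>[] newTable(int capacity) {
        return (Node<K, V>[]) new Node[capacity];
    }

    // Map a key's hash onto a bucket index; floorMod keeps it non-negative.
    private static int indexFor(Object key, int capacity) {
        return Math.floorMod(Objects.hashCode(key), capacity);
    }

    public V get(K key) {
        for (Node<K, V> n = buckets[indexFor(key, buckets.length)]; n != null; n = n.next) {
            if (Objects.equals(n.key, key)) return n.value;
        }
        return null;
    }

    public void put(K key, V value) {
        int i = indexFor(key, buckets.length);
        for (Node<K, V> n = buckets[i]; n != null; n = n.next) {
            if (Objects.equals(n.key, key)) { n.value = value; return; } // overwrite existing key
        }
        buckets[i] = new Node<>(key, value, buckets[i]); // prepend to the chain
        size++;
        if (size > MAX_LOAD * buckets.length) resize();
    }

    public int size() { return size; }

    // Allocate an array twice as large and relink every node at its new index.
    private void resize() {
        Node<K, V>[] old = buckets;
        buckets = newTable(old.length * 2);
        for (Node<K, V> head : old) {
            for (Node<K, V> n = head; n != null; ) {
                Node<K, V> next = n.next;
                int i = indexFor(n.key, buckets.length);
                n.next = buckets[i];
                buckets[i] = n;
                n = next;
            }
        }
    }
}
```

Java's own `java.util.HashMap` builds on the same chaining-plus-doubling idea, with a default load factor of 0.75 and extra optimizations. That makes it a handy reference to test your version against.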

59 notes


Text

JavaCollections: Your Data, Your Way

Master the art of data structures:

List: ArrayList, LinkedList

Set: HashSet, TreeSet

Queue: PriorityQueue, ArrayDeque (a Deque)

Map: HashMap, TreeMap

Pro tips:

Use generics for type safety

Choose the right collection for your needs

Leverage the Stream API for elegant data processing

Collections: Because arrays are so last century.
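Here is a short, illustrative tour of the collections listed above. It uses generics throughout and ends with one Stream API pipeline. The word list and the `wordCounts` helper are made up for the example. The collection classes and methods (`Map.merge`, `ArrayDeque`, `PriorityQueue`, `Collectors.toList`) are the standard `java.util` ones.

```java
import java.util.*;
import java.util.stream.Collectors;

final class CollectionsTour {
    /** Counts word frequencies; TreeMap keeps the keys sorted alphabetically. */
    static Map<String, Integer> wordCounts(List<String> words) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String w : words) {
            counts.merge(w, 1, Integer::sum); // insert 1, or add 1 to the existing count
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> words = new ArrayList<>(List.of("map", "set", "map", "list", "deque"));
        Set<String> unique = new HashSet<>(words);             // duplicates dropped, no order guarantee
        Deque<String> deque = new ArrayDeque<>(words);         // usable as a stack or a FIFO queue
        PriorityQueue<String> pq = new PriorityQueue<>(words); // polls in natural (alphabetical) order

        System.out.println(unique.size());     // 4
        System.out.println(deque.peekLast());  // deque
        System.out.println(pq.poll());         // deque
        System.out.println(wordCounts(words)); // {deque=1, list=1, map=2, set=1}

        // Stream API: keep words longer than 3 letters, upper-case them, dedupe in encounter order.
        List<String> longWords = words.stream()
                .filter(w -> w.length() > 3)
                .map(String::toUpperCase)
                .distinct()
                .collect(Collectors.toList());
        System.out.println(longWords);         // [LIST, DEQUE]
    }
}
```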

#JavaProgramming#DataStructures#CodingEfficiency

2 notes


Text

Exploring the Diverse Landscape of Surveys: Unveiling Different Types

Introduction

Civil engineering, as a discipline, relies heavily on accurate and comprehensive data to design, plan, and construct various infrastructure projects. Surveys play a crucial role in gathering this essential information, providing engineers with the data needed to make informed decisions. There are several types of surveys in civil engineering, each serving a unique purpose. In this…


#accurate measurements#as-built survey#boundary survey#civil engineering data#Civil engineering surveys#construction progress monitoring#construction survey#design accuracy#environmental monitoring#geodetic survey#global mapping#hydrographic survey#infrastructure development#infrastructure projects#land surveyor#legal boundaries#monitoring survey#project planning#property lines#structural integrity assessment#surveying in civil engineering#surveying innovations#surveying technology#topographic surveying#water body survey

2 notes


Text

i know this means absolutely nothing to most people but basically all of the little web game things I've made recently (angels in automata, hex plant growing game, d.a.n.m.a.k.u., life music, sudoku land, the metroidvania style map editor, etc etc etc) are all entirely self-contained individual client-side html files that can be downloaded and run offline and have literally no libraries or frameworks or dependencies, because i'm an insane woman who enjoys hand coding my input handling and display code from scratch in vanilla js and having it all live in one single html file with the game logic and the page structure and the page style all just living and loving together side by side in a universal format that can be run by any web browser on any device. i'll even include image files as base64 data-uri strings just to keep every single asset inside the one file.
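If you want to try the base64 data-URI trick yourself, the encoding step is tiny. The games in this post are written in vanilla JS. This sketch uses Java only to keep one language across this page, and `DataUri` and `toDataUri` are hypothetical names. It assumes a PNG input, so swap the MIME type for other formats.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Base64;

final class DataUri {
    /** Encodes raw bytes as a data URI suitable for an img src attribute or a CSS url(). */
    static String toDataUri(byte[] bytes, String mimeType) {
        return "data:" + mimeType + ";base64," + Base64.getEncoder().encodeToString(bytes);
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical usage: java DataUri sprite.png, then paste the printed tag into the HTML file.
        byte[] png = Files.readAllBytes(Path.of(args[0]));
        System.out.println("<img src=\"" + toDataUri(png, "image/png") + "\">");
    }
}
```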

19K notes


Text

What We Learned from Flying a Helicopter on Mars

The Ingenuity Mars Helicopter made history – not only as the first aircraft to perform powered, controlled flight on another world – but also for exceeding expectations, pushing the limits, and setting the stage for future NASA aerial exploration of other worlds.

Built as a technology demonstration designed to perform up to five experimental test flights over 30 days, Ingenuity performed flight operations from the Martian surface for almost three years. The helicopter ended its mission on Jan. 25, 2024, after sustaining damage to its rotor blades during its 72nd flight.

So, what did we learn from this small but mighty helicopter?

We can fly rotorcraft in the thin atmosphere of other planets.

Ingenuity proved that powered, controlled flight is possible on other worlds when it took to the Martian skies for the first time on April 19, 2021.

Flying on planets like Mars is no easy feat: The Red Planet has a significantly lower gravity – one-third that of Earth’s – and an extremely thin atmosphere, with only 1% the pressure at the surface compared to our planet. This means there are relatively few air molecules with which Ingenuity’s two 4-foot-wide (1.2-meter-wide) rotor blades can interact to achieve flight.

Ingenuity performed several flights dedicated to understanding key aerodynamic effects and how they interact with the structure and control system of the helicopter, providing us with a treasure-trove of data on how aircraft fly in the Martian atmosphere.

Now, we can use this knowledge to directly improve performance and reduce risk on future planetary aerial vehicles.

Creative solutions and “ingenuity” kept the helicopter flying longer than expected.

Over an extended mission that lasted for almost 1,000 Martian days (more than 33 times longer than originally planned), Ingenuity was upgraded with the ability to autonomously choose landing sites in treacherous terrain, dealt with a dead sensor, dusted itself off after dust storms, operated from 48 different airfields, performed three emergency landings, and survived a frigid Martian winter.

Fun fact: To keep costs low, the helicopter contained many off-the-shelf commercial parts from the smartphone industry - parts that had never been tested in deep space. Those parts also surpassed expectations, proving durable throughout Ingenuity’s extended mission, and can inform future budget-conscious hardware solutions.

There is value in adding an aerial dimension to interplanetary surface missions.

Ingenuity traveled to Mars on the belly of the Perseverance rover, which served as the communications relay for Ingenuity and, therefore, was its constant companion. The helicopter also proved itself a helpful scout to the rover.

After its initial five flights in 2021, Ingenuity transitioned to an “operations demonstration,” serving as Perseverance’s eyes in the sky as it scouted science targets, potential rover routes, and inaccessible features, while also capturing stereo images for digital elevation maps.

Airborne assets like Ingenuity unlock a new dimension of exploration on Mars that we did not yet have – providing more pixels per meter of resolution for imaging than an orbiter and exploring locations a rover cannot reach.

Tech demos can pay off big time.

Ingenuity was flown as a technology demonstration payload on the Mars 2020 mission, and was a high-risk, high-reward, low-cost endeavor that paid off big. The data collected by the helicopter will be analyzed for years to come and will benefit future Mars and other planetary missions.

Just as the Sojourner rover led to the MER-class (Spirit and Opportunity) rovers, and the MSL-class (Curiosity and Perseverance) rovers, the team believes Ingenuity’s success will lead to future fleets of aircraft at Mars.

In general, NASA’s Technology Demonstration Missions test and advance new technologies, and then transition those capabilities to NASA missions, industry, and other government agencies. Chosen technologies are thoroughly ground- and flight-tested in relevant operating environments — reducing risks to future flight missions, gaining operational heritage and continuing NASA’s long history as a technological leader.


You can fall in love with robots on another planet.

Following in the tracks of beloved Martian rovers, the Ingenuity Mars Helicopter built up a worldwide fanbase. The Ingenuity team and public awaited every single flight with anticipation, awe, humor, and hope.

Check out #ThanksIngenuity on social media to see what’s been said about the helicopter’s accomplishments.


Learn more about Ingenuity’s accomplishments here. And make sure to follow us on Tumblr for your regular dose of space!

5K notes


Text

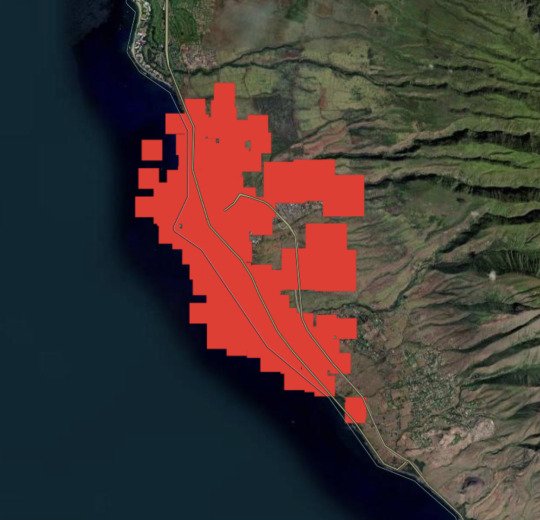

Maui Fires & How to Support Relief Efforts

(Posted on 8/10/23)

Hi, I'm Jae and my family is from Lāhainā. I watched my hometown burn down this week.

The fires caused immeasurable loss in my community so I'd like to spread awareness of the situation as well as provide links to support local organizations directly assisting survivors. I'm pretty sure most of my following is Not local so I'm writing with intent to inform people outside the situation, but if you're reading this and happen to have family in the affected area that isn't accounted for, message me and I can send you the links to the missing persons tracking docs + more localized info!! If you'd like to skip down to how to help and follow community organizations, scroll to the bottom of the post after the image.

Earlier this week, Hurricane Dora passed south of the Hawaiian Islands, bringing strong wind gusts that caused property damage across the islands. On Tuesday, August 8, high winds knocked out power lines in the middle of Lāhainā town, and the sparks immediately ignited drought-ridden grasses. The fire spread quickly and destroyed the entire center of town, the harbor, and multiple neighborhoods including Hawaiian Homes (housing specifically for Native Hawaiians), parts of Lahainaluna, basically all of Front Street, and low-income housing units. There is only one public road in and out of town, and after a very hectic evacuation period that road has been mostly closed off except to emergency responders, so it is extremely difficult for anyone to leave town to get help. The nearest hospital is 20 miles away in Wailuku, and most grocery stores in town have burnt down.

As of Thursday, August 10, over 1,000 acres have been burned and 271 structures (including homes, schools, and other community gathering places) have been destroyed. Cell service is still extremely spotty, and many of the surrounding neighborhoods deemed safe for evacuees are still without utilities. There are currently 53 confirmed deaths, but that number is expected to increase as search-and-rescue efforts continue. Countless families have been displaced and many have lost the homes they lived in for generations. Places of deep historical significance have been reduced to ash, including the gravesites of Hawaiian royalty, the old Lāhainā courthouse where items of cultural significance were stored, and Na ‘Aikane o Maui Cultural Center.

To add further context: Lāhainā has a population of about 13,000 residents. EVERYONE I know has been impacted in some way--at best forced to evacuate, at worst their house was burnt to the foundation, they cannot find a loved one, etc. I'm still trying to track down family members and it's been over two days. My neighbors down the street had homes last week and now many don't have ANYTHING. The hotels are taking in residents (tourists are also being STRONGLY urged to leave so that locals can recover). Without open access to the rest of the island, Lāhainā residents are now dependent on whatever people had in their homes already as well as disaster relief efforts coming in, but it's been difficult to organize and mobilize due to the location + conditions. People who have made it out are in shelters where no blankets or medicine were provided. Friends and acquaintances from neighbor islands are preparing aid to send over. Community response has been incredible, but the toll on the town has been immeasurable. My parents were desperately walking through town yesterday, my mom sounded absolutely hollow talking about it on the phone with me. It's horrifying. Below is a satellite map with data from the NASA Fire Information for Resource Management System showing the impacted areas from the past week; all of the red blotches were on fire at some point in the last three days.

Here are ways you can help:

If you have the means to donate:

Here are three donation sites verified by Maui Rapid Response, which also lists FAQs for people who are wondering about next steps.

Hawaiʻi Community Foundation - Maui Strong Fund accepts international credit cards.

Maui United Way

Maui Mutual Aid

Non-monetary ways to support:

If you know anyone who is planning to travel to ANY Hawaiian island, not just Maui, tell them to cancel their trip. Resources are extremely limited as is.

Advocate for climate change mitigation efforts locally, wherever that is for you. The fire was exacerbated by drought conditions that have worsened due to climate change.

Lastly, remember that these are people's HOMES that burned, and Native Hawaiian cultural artifacts that have been lost. Stop thinking of Hawaiʻi (or any "tourist destination" location, really) as an "escape" or a "paradise." If that's the only way you recognized my home... I'm glad I got your attention somehow, but I would ask that you challenge that perspective and prioritize local and native voices.

For transparency, I don't currently live in Lāhainā, I've been following efforts from Honolulu. My parents and brother have been updating me and I've been following friends and family who are doing immediate response work. I'm doing my best to find reliable and current sources, but if I need to update something, please let me know. If you're going to try to convince me that tourism is necessary for our recovery, news flash ***IT'S NOT***!

Thanks for reading.

#please feel free to reblog! i don't know how tagging works here anymore!#maui fires#officially reported death count increased WHILE I WAS WRITING THE POST btw. this is bonkers.#if you want to start tourism discourse in my inbox you have to donate the cost of a plane ticket and send a screenshot to me#i have sources at the ready btw. i'd say don't try me but i mean my hometown could use a couple hundred bucks!!

982 notes


Text

Hey chat! I decided that I don't care if you care or not, I'll post it anyway. Because I'm a scientist nerd, and a TF2 fan.

So here you go, my theory on how the respawn machine actually works.

⚠️It'll be a lot of reading and you need half of a braincell to understand it.

The Respawn Machine can recreate a body within minutes, complete with all previous memories and personality, as if the person never died. We all know this, but I doubt many have thought about how it actually works.

Of course, such a thing is impossible in real life (at least for now), but we’re talking about a game where there’s magic and mutant bread, so it’s all good.

But being an autistic dork, I couldn’t help but start searching for logical and scientific explanations for how this machine might work. How the hell does it actually function? So, I spent hours of my life on yet another round of useless big-brain time.

In the context of the Respawn Machine, the idea is that the technology can instantly create a new mercenary body, identical to the original. This body must be ready for use immediately after the previous one’s death. To achieve this, the cloning process, which in real life takes months or even years, would need to be significantly accelerated. This means the machine is probably powered by a freaking nuclear reactor, or maybe even Australium.

My theory is that this machine is essentially a massive 3D printer capable of printing biological tissues. But how? You see, even today, people can (or are trying to) recreate creatures that lived thousands of years ago, like the woolly mammoth, using DNA. By using the mercenary’s DNA, which was previously loaded into the system, the machine could recreate a perfect copy.

However, this method likely wouldn’t be able to perfectly recreate the exact personality and all the memories from the previous body. I believe the answer lies in neuroscience.

For the Respawn Machine to restore the mercenary’s consciousness and memories, it would need to be capable of recording and preserving the complete structure of the brain, including all neural connections, synapses, and activity that encode personality and memory. This process is known as brain mapping. After creating a brain map, this data could be stored digitally and then transferred to the new body.

“Okay, but how would you transfer memories that are dated right up until the moment of death? The mercenaries clearly remember everything about their previous death.”

Well, I have a theory about that too!

Neural interfaces! Inside each mercenary’s head could be an implant (a nanodevice) that reads brain activity before death and updates a digital copy of the memories. This system operates at the synaptic level, recording changes in the structure of neurons that occur as memories are formed. After death, this data could be instantly transferred to the new body via a quantum network.

Once the data is uploaded and the brain is synchronized with the new body, the mercenary’s consciousness "awakens." Ideally, the mercenary wouldn’t notice any break in consciousness and would remember everything that happened right up to the moment of death.

However… there are also questions regarding potential negative consequences.

Can the transfer of consciousness really preserve all aspects of personality, or is something inevitably lost in the process?

Unfortunately, nothing is perfect, and there’s a chance that some small memories might be lost—like those buried in the subconscious. Or the person’s personality might become distorted. Maybe that’s why they’re all crazy?

How far does the implant’s range extend? Does the distance between the mercenary and the machine affect the accuracy of data transfer?

My theory is that yes, it does. The greater the distance, the fewer memories are retained.

Could there be deviations in the creation of the body itself?

Yes, there could be. We saw this in "Emesis Blue," which led to a complete disaster. But let’s assume everything is fine, and the only deviations are at most an extra finger (or organ—not critical, Medic would only be happy about that).

Well, these are just my theories and nothing more. I’m not a scientist; I’m an amateur enthusiast with a lot of time on my hands. My theories have many holes that I can’t yet fill due to a lack of information.

#tf2#team fortress 2#canis says#respawn machine#i got nothing better to do sorry#i like brainstorming

79 notes


Text

How To Play The Revolution

So: I do not like the idea of TTRPGs making formal mechanics designed to incentivise ethical play.

But, to be honest, I do not like the idea of any single game pushing any particular formal mechanics about ethical play at all.

So here I am, trying to think through the reasons why, and proposing a solution. (Sort of. A procedure, really.)

+

Assumptions:

1.

Some genres of game resist ethical play. A grand strategy game dehumanises people into census data. The fun of a shooter is violence. This is truest in videogames, but applies to tabletop games also.

Games can question their own ethics, to an extent. Terra Nil is an anti-city-builder. But it is a management game at heart, so may elide critiques of "efficiency = virtue".

Not all games should try to design for ethical play. I believe games that incentivise "bad" behaviour have a lot to teach us about those behaviours, if you approach them with eyes open.

2.

The systems that currently govern our real lives are terrible: oligarchy, profit motive; patriarchy, nation-states, ethno-centrisms. They fuel our problems: class and sectarian strife, destruction of climate and people, spiritual desertification.

They are so total that the aspiration to ethical behaviour is subsumed by their logics. See: social enterprise; corpos and occupying forces flying rainbow flags; etc.

Nowadays, when I hear "ethical", I don't hear "we remember to be decent". I hear "we must work to be better". Good ethics is radical transformation.

3.

If a videogame shooter crosses a line for you, your only real response is to stop playing. This is true for other mechanically-bounded games, like CCGs or boardgames.

In TTRPGs, players have the innate capability to act as their own referees. (even in GM-ed games adjudications are / should be by consensus.) If you don't like certain aspects of a game, you could avoid it---but also you could change it.

Only in TTRPGs can you ditch basic rules of the game and keep playing.

+

So:

D&D's rules are an engine for accumulation: more levels, more power, more stuff, more numbers going up.

If you build a subsystem in D&D for egalitarian action, but have to quantify it in ways legible to the game's other mechanical parts---what does that mean? Is your radical aspiration feeding into / providing cover for the game's underlying logics of accumulation?

At the very least it feels unsatisfactory---"non-representative of what critique / revolution entails as a rupture," to quote Marcia, in conversations we've been having around this subject, over on Discord.

How do we imagine and represent rupture, to the extent that the word "revolution" evokes?

My proposal: we rupture the game.

+++

How To Play The Revolution

Over the course of play, your player-characters have decided to begin a revolution:

An armed struggle against an invader; overturning a feudal hierarchy; a community-wide decision to abandon the silver standard.

So:

Toss out your rule book and sheets.

And then:

Keep playing.

You already know who your characters are: how they prefer to act; what they are capable of; how well they might do at certain tasks; what their context is. You and your group are quite capable of improv-ing what happens next.

Of course, this might be unsatisfactory; you are here to play a TTRPG, after all. Structures are fun. Therefore:

Decide what the rules of your game will be, going forward.

Which rules you want to keep. Which you want to discard. Jury-rig different bits from different games. Shoe-horn a tarot deck into a map-making game---play that. Be as comprehensive or as freeform as you like. Patchwork and house-rule the mechanics of your new reality.

The god designer will not lead you to the revolution. You broke the tyranny of their design. You will lead yourself. You, as a group, together. The revolution is DIY.

+++

Notes:

This is mostly a thought experiment into a personal obsession. I am genuinely tempted to write a ruleset just so I can stick the above bit into it as a codified procedure.

I am tickled to imagine how the way this works may mirror the ways revolutions have played out in history.

A group might already have an alternative ruleset in mind that they want to swap in for the old one wholesale. A vanguard for their preferred system.

Things could happen piecemeal, progressively. Abandon fiat currency and a game's equipment price list. Adopt pacifism and replace the combat system with an alternative resolution mechanic. As contradictions pile up, do you continue, or revert?

Discover that the shift is too uncomfortable, too unpredictable, and default back to more familiar rules. The old order reacting, reasserting itself.

+

I keep returning to this damn idea, of players crossing thresholds between rulesets through the course of play. The Revolution is a rupture of ethical reality like Faerie or the Zone is a rupture in geography.

But writing all this down is primarily spurred by this post from Sofinho talking about his game PARIAH and the idea that "switching games/systems mid-session" is an opportunity to explore different lives and ethics:

Granted this is not an original conceit (I'm not claiming to have done anything not already explored by Plato or Zhuangzi) but I think it's a fun possibility to present to your players: dropping into a parallel nightmare realm where their characters can lead different lives and chase different goals.

+

Jay Dragon tells me she is already exploring this idea in a new game, Seven Part Pact:

"the game mechanics are downright oppressive but also present the capacity to sunder them utterly, so the only way to behave ethically is to reject the rules of the game and build something new."

VINDICATION! If other designers are also thinking along these lines this means the idea isn't dumb and I'm not alone!

+++

( Images:

https://forum.paradoxplaza.com/forum/developer-diary/victoria-3-dev-diary-23-fronts-and-generals.1497106/

https://www.thestranger.com/race/2017/04/05/25059127/if-you-give-a-cop-a-pepsi

https://en.wikipedia.org/wiki/WarGames

https://nobonzo.com/

https://pangroksulap.com/about/ )

222 notes


Text

this also happened a lot in mando s3 but the acolyte has this problem where it portrays bureaucracy as this thing that is obstinate and arbitrary for no reason. like the arbitrariness doesn’t take on an institutional form so you just get random characters being stubborn for no reason because they’re managers/leaders/etc., because that’s how bureaucrats behave, they just want you to sign forms and get official approvals and wait for new orders for no reason, it’s not really tied to any larger operation.

and like in contrast, in Andor there was a plot point where Dedra figured out that the Empire’s decentralised data structure was making it extremely difficult to track Cassian because they’d subdivided regions of Imperial territory on their maps and separately monitored each region, so if he stole things in two separate administrative regions of space it didn’t register as suspicious to them because none of those regions were cross-communicating with one another. like the particular form that the imperial bureaucracy took was producing a specific security problem that required centralising their data structure and looking at their own territory in a new way, because their data was producing a specific type of gaze and way of relating to information collected by the state. like normally I wouldn’t be so frustrated but this type of conflict has already been demonstrated to be completely possible in a Star Wars setting
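Here is a toy model of the blind spot described above. It is purely hypothetical and does not depict anything specific about how the show's Imperial systems work. If each region keeps its own incident counts and never shares them, a suspect who hits two regions once each never crosses a flagging threshold. A single map keyed by suspect catches them. The threshold of 2 and all names are made up for illustration.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical toy model of partitioned vs. centralized incident tracking. */
final class RegionWatch {
    record Incident(String region, String suspect) {}

    static final int THRESHOLD = 2; // incidents needed before a suspect is flagged (arbitrary)

    /** Each region counts only its own incidents and never cross-communicates. */
    static Set<String> flaggedByRegions(List<Incident> incidents) {
        Map<String, Map<String, Integer>> perRegion = new HashMap<>();
        for (Incident inc : incidents) {
            perRegion.computeIfAbsent(inc.region(), r -> new HashMap<>())
                     .merge(inc.suspect(), 1, Integer::sum);
        }
        Set<String> flagged = new HashSet<>();
        for (Map<String, Integer> counts : perRegion.values()) {
            counts.forEach((suspect, n) -> { if (n >= THRESHOLD) flagged.add(suspect); });
        }
        return flagged;
    }

    /** One central map keyed by suspect, so incidents in different regions add up. */
    static Set<String> flaggedCentrally(List<Incident> incidents) {
        Map<String, Integer> counts = new HashMap<>();
        for (Incident inc : incidents) counts.merge(inc.suspect(), 1, Integer::sum);
        Set<String> flagged = new HashSet<>();
        counts.forEach((suspect, n) -> { if (n >= THRESHOLD) flagged.add(suspect); });
        return flagged;
    }
}
```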

#sw.txt#like vernestra in ep4 being like oh just capture mae she won’t respond violently … literally why do you think that what gave you#that impression 🤨#vernestra sticks out as particularly egregious like I’m mostly talking about her. wagh

82 notes


Text

Pre-alpha Lancer Tactics changelog

(cross-posting the full gif changelog here because folks seemed to like it last time I did)

We're aiming to get the first public alpha out to backers by the end of this month! Carpenter and I scoped out mechanics that can wait until after the alpha (e.g. grappling, hiding) in favor of tying up the hundred loose threads that are needed for something that approaches a playable game. So this is mostly a big ol changelog of an update from doing that.

But I also gave a talent talk at a local Portland Indie Game Squad event about engine architecture! It'll sound familiar if you've been reading these updates; I laid out the basic idea for this talk almost a year ago, back in the June 2023 update.


We've also signed contracts & had a kickoff meeting with our writers to start on the campaigns. While I've enjoyed like a year of engine-work, it'll be so so nice to start getting to tell stories. Data structures don't mean anything beyond how they affect humans & other life.

New Content

Implemented flying as a status; unit counts as +3 spaces above the current ground level and ignores terrain and elevation extra movement costs. Added hover + takeoff/land animations.
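Here is a hypothetical sketch of the flying rule as described. The unit counts as 3 spaces above the ground, and the usual terrain and elevation surcharges are skipped. The surcharge values (+1 for difficult terrain, +1 per level climbed) are placeholders I picked. They are not Lancer's actual rules or the game's code.

```java
/** Hypothetical sketch of how a "flying" status could change height and movement cost. */
final class FlyingCost {
    static final int FLIGHT_HEIGHT = 3; // flying units count as 3 spaces above the ground

    /** Height the unit counts as occupying (e.g. for line-of-sight or threat checks). */
    static int effectiveHeight(int groundHeight, boolean flying) {
        return flying ? groundHeight + FLIGHT_HEIGHT : groundHeight;
    }

    /**
     * Cost to step one tile. Placeholder rules for illustration: 1 base point,
     * +1 for difficult terrain, +1 per level climbed. Flying skips both surcharges.
     */
    static int stepCost(int fromHeight, int toHeight, boolean difficultTerrain, boolean flying) {
        int cost = 1;
        if (flying) return cost;
        if (difficultTerrain) cost += 1;
        cost += Math.max(0, toHeight - fromHeight); // only climbing costs extra
        return cost;
    }
}
```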

Gave deployables the ability to have 3D meshes instead of 2D sprites; we'll probably use this mostly when the deployable in question is climbable.

Related, I fixed a bug where after terrain destruction, all units recheck the ground height under them so they'll move down if the ground is shot out from under them. When the Jerichos do that, they say "oh heck, the ground is taller! I better move up to stand on it!" — not realizing that the taller ground they're seeing came from themselves.

Fixed by locking some units' rendering to the ground level; this means no stacking climbable things, which is a call I'm comfortable making. We ain't making minecraft here (I whisper to myself, gazing at the bottom of my tea mug).

Block sizes are currently 1x1x0.5 — half as tall as they are wide. Since that was a size I pulled out of nowhere for convenience, we did some art tests for different block heights and camera angles. TLDR that size works great and we're leaving it.

Added Cone AOE pattern, courtesy of an algorithm NMcCoy sent me that guarantees the correct number of tiles are picked at the correct distance from the origin.

pick your aim angle

for each distance step N of your cone, make a list ("ring") of all the cells at that distance from your origin

sort those cells by angular distance from your aim angle, and include the N closest cells in that ring in the cone's area
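The three steps above can be sketched in Java as follows. This sketch assumes a square grid measured with Chebyshev distance, where diagonal steps count as 1, so the ring at distance N has 8N cells. A hex grid would need a different `ring` function and hex-center angles. The class and method names are hypothetical, and this is not the game's actual implementation.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Cone area-of-effect selection following the ring-and-sort steps (square grid assumed). */
final class ConeAoe {
    record Cell(int x, int y) {}

    /** Absolute difference between two angles in radians, wrapped into [0, PI]. */
    static double angularDistance(double a, double b) {
        double d = Math.abs(a - b) % (2 * Math.PI);
        return d > Math.PI ? 2 * Math.PI - d : d;
    }

    /** All cells at Chebyshev distance exactly n from (ox, oy): a square ring of 8n cells. */
    static List<Cell> ring(int ox, int oy, int n) {
        List<Cell> cells = new ArrayList<>();
        for (int dx = -n; dx <= n; dx++) {
            for (int dy = -n; dy <= n; dy++) {
                if (Math.max(Math.abs(dx), Math.abs(dy)) == n) cells.add(new Cell(ox + dx, oy + dy));
            }
        }
        return cells;
    }

    /** For each distance n = 1..length, keeps the n ring cells closest to the aim angle. */
    static List<Cell> cone(int ox, int oy, double aimAngle, int length) {
        List<Cell> area = new ArrayList<>();
        for (int n = 1; n <= length; n++) {
            List<Cell> candidates = ring(ox, oy, n);
            candidates.sort(Comparator.comparingDouble(
                    c -> angularDistance(Math.atan2(c.y() - oy, c.x() - ox), aimAngle)));
            area.addAll(candidates.subList(0, n));
        }
        return area;
    }
}
```

Ties in angle, such as two cells mirrored across the aim line, fall back to ring enumeration order because `List.sort` is stable. A real implementation might want an explicit, symmetric tie-break instead.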

Here's a gif they made of it in Bitsy:

Units face where you're planning on moving/targeting them.

Got Walking Armory's Shock option working. Added subtle (too subtle, now that I look at it) electricity effect.

Other things we've added but I don't have gifs for or failed to upload. You'll have to trust me. :)

disengage action

overcharge action

Improved Armament core bonus

basic mine explosion fx

explosion fx on character dying

Increased map elevation cap to 10. It's nice, but it's definitely risky since it increases the voxel space; gonna have to keep an eye on performance.

Added Structured + Stress event and the associated popups. Also added meltdown status (and hidden countdown), but there's no animation for this yet, so your guy just abruptly disappears and leaves a huge crater.

UI Improvements

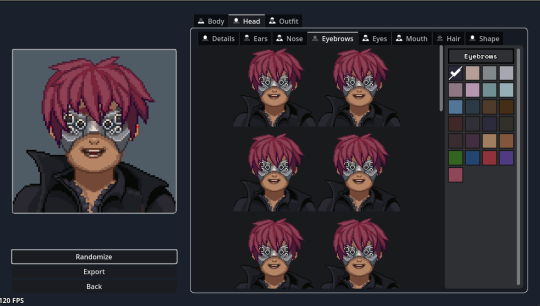

Rearranged the portrait maker. Auto-expand the color picker so you don't have to keep clicking into a submenu.

Added a top-down camera mode (press R) for getting mechs out of tight spaces.

The action tooltips have been bothering me for a while; they extend up and cover prime play-area real estate in the center of the screen. So I redesigned them to be shorter and have a max height by putting long descriptions in a scrollable box. This sounds simple, but the redesign, pulling in all the correct data for the tags, and wiring up the tooltips took like seven hours. Game dev is hard, yo.

Put the unit inspect popups in lockable tooltips + added a bunch of tooltips to them.

Implemented the rest of Carpenter's cool hex-y action and end turn readout. I'm a big fan of whenever we can make the game look more like a game and less like a website (though he balances out my impulse for that for the sake of legibility).

Added a JANKY talent/frame picker. I swear we have designs for a better one, but sometimes you gotta just get it working. Also seen briefly here are basic level up/down and HASE buttons.

Other no-picture things:

Negated the map-scaling effect that happens when the window resizes to prevent bad pixel scaling of mechs at different resolutions; making the window bigger now just lets you see more play area instead of making things bigger.

WIP Objectives Bullets panel to give the current sitrep info

Wired up a buncha tooltips throughout the character sheet.

Under the Hood

Serialization: can save/load games! This is the payoff for sticking with that engine architecture I've been going on about. I had to add a serialization function to everything in the center layer which took a while, but it was fairly straightforward work with few curveballs.
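Here is a minimal sketch of the per-object pattern described above. It is written in Python rather than the game's GDScript. Each object exposes a hypothetical `serialize()`/`deserialize()` pair and delegates to its children, so saving the whole game reduces to serializing the root object. The `Unit` and `GameState` classes and their fields are illustrative assumptions, not the game's real center-layer types.

```python
import json
from dataclasses import dataclass, field


@dataclass
class Unit:
    # Hypothetical center-layer object; the fields are illustrative only.
    name: str
    hp: int
    position: tuple

    def serialize(self) -> dict:
        return {"name": self.name, "hp": self.hp, "position": list(self.position)}

    @classmethod
    def deserialize(cls, data: dict) -> "Unit":
        return cls(data["name"], data["hp"], tuple(data["position"]))


@dataclass
class GameState:
    turn: int
    units: list = field(default_factory=list)

    def serialize(self) -> dict:
        # Each object delegates to its children's serialize() methods.
        return {"turn": self.turn, "units": [u.serialize() for u in self.units]}

    @classmethod
    def deserialize(cls, data: dict) -> "GameState":
        return cls(data["turn"], [Unit.deserialize(u) for u in data["units"]])


def save_game(state: GameState) -> str:
    """Serialize the whole game to a JSON string."""
    return json.dumps(state.serialize())


def load_game(text: str) -> GameState:
    """Rebuild the game from a JSON string produced by save_game."""
    return GameState.deserialize(json.loads(text))
```

Most of the work in this pattern is the tedious but straightforward part: every class needs its own pair of functions. In return, a save/load round trip reproduces an equal game state.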

Finished replacement of the kit/unit/reinforcement group/sitrep pickers with a new standardized system that can pull from stock data and user-saved data.

Updated to Godot 4.2.2; the game (and editor) has been crashing on exit for a LONG time and for the life of me I couldn't track down why, but this minor update in Godot completely fixed the bug. I still have no idea what was happening, but it's so cool to be working in an engine that's this active bugfixing-wise!

Other Bugfixes

Pulled straight from the internal changelog, no edits for public parseability:

calculate cover for fliers correctly

no overwatch when outside of vertical threat

fixed skirmisher triggering for each attack in an AOE

fixed jumpjets boost-available detection

fixed mines not triggering when you step right on top of them // at a different elevation but still adjacent

weapon mods not a valid target for destruction

made camera pan less jumpy and adjust to the terrain height

better Buff name/desc localization

Fixed compcon planner letting you both boost and attack with one quick action.

Fix displayed movement points not updating

Prevent wrecks from going prone

fix berserkers not moving if they were exactly one tile away

hex mine uses deployer's save target instead of 0

restrict weapon mod selection if you don't have the SP to pay

fix deployable previews not going away

fix impaired not showing up in the unit inspector (its status code is 0 so there was a check that was like "looks like there's no status here")

fix skirmisher letting you move to a tile that should cost two movement if it's only one space away

fix hit percent calculation

fix rangefinder grid shader corner issues (this was like a full day to rewrite the shader to be better)

Teleporting costs the max(spaces traveled, elevation change) instead of always 1
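Two of the fixes above are easy to illustrate. The sketch below is in Python, not the game's GDScript, and the function names are hypothetical:

* `teleport_cost` applies the max(spaces traveled, elevation change) rule. Taking the absolute value of the elevation change is an assumption.
* The status check shows why a status code of 0 gets mistaken for "no status" when code tests truthiness instead of comparing against an explicit empty value.

```python
from typing import Optional

# Hypothetical status code: the changelog only says impaired's code is 0.
IMPAIRED = 0


def teleport_cost(spaces_traveled: int, elevation_change: int) -> int:
    """Teleport cost per the changelog: max(spaces traveled, elevation change).

    Using abs() so that teleporting downward also counts is an assumption.
    """
    return max(spaces_traveled, abs(elevation_change))


def has_status_buggy(status_code: Optional[int]) -> bool:
    # Bug: 0 is falsy, so "impaired" (code 0) looks like "no status here".
    return bool(status_code)


def has_status_fixed(status_code: Optional[int]) -> bool:
    # Fix: test for an explicit "no status" value (None) instead of truthiness.
    return status_code is not None
```

GDScript likewise treats 0 as false in a condition, so the same trap applies there.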

So um, yeah, that's my talk, any questions? (I had a professor once tell us to never end a talk like this, so now of course it's the phrase that first comes to mind whenever I end a talk)

116 notes


Text

HOW DO WE KNOW WHAT THE MILKY WAY LOOKS LIKE??

Blog #362

Saturday, December 30th, 2023

Welcome back,

This is the spiral galaxy NGC 2835, imaged by the impeccable, seemingly timeless Hubble Space Telescope.

And this is NGC 1132, an elliptical galaxy captured through the tandem efforts of Hubble and NASA's Chandra X-ray Observatory.

We know what both of these breathtakingly beautiful galaxies look like because we can see them from afar. How then do we know what our own Milky Way galaxy looks like, seeing as how we are inside it?

While we've never been able to zoom out and take a true galactic selfie, there are numerous observations that clue us in to the structure of our home galaxy. The greatest hint comes from looking at other galaxies. While there are perhaps two trillion in our observable universe, surprisingly, they only seem to come in three discernible varieties: spiral, elliptical, and irregular.

Spiral galaxies have a mostly flat disk with a bright central bulge and arms that swirl out from the middle. Elliptical galaxies tend to be round or oval with a uniform distribution of stars. Irregular galaxies are like stellar splotches in space, with little structure at all.

Gazing skyward from our vantage point on and around Earth, we can see clear signs that the Milky Way is a spiral galaxy. You can see one sign with the naked eye! The Milky Way appears in our sky as a relatively flat band of light, which is what a disk looks like when viewed edge-on from inside it.

Using more sophisticated methods, astrophysicists and astronomers have provided two more clues to the structure of the Milky Way.

"When we measure velocities of stars and gas in our galaxy, we see an overall rotational motion that differs from random motions," Sarah Slater, a graduate student in cosmology at Harvard University, wrote. "This is another characteristic of a spiral galaxy."

Moreover, the gas proportions, colors, and dust content are similar to those of other spiral galaxies, she added.

Aside from these lines of evidence, astronomers are also using their tools in ingenious ways to map the structure of the Milky Way. Just this year, scientists used two radio astronomy projects from different parts of the globe to measure the parallaxes – differences in the apparent positions of objects viewed along two different lines of sight – of masers shooting off electromagnetic radiation in numerous massive star-forming regions in our galaxy.

"These parallaxes allow us to directly measure the forms of spiral arms across roughly one-third of the Milky Way, and we have extended the spiral arm traces into the portion of the Milky Way seen from the Southern Hemisphere using tangencies along some arms based on carbon monoxide emission," the researchers explained. They coupled these observations with other gathered data points to construct a new image of the Milky Way. This is our home galaxy, in all its resplendent glory.

Originally published www-realclearscience-com
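The parallax measurements described in the article are what make a map possible, because a parallax angle converts directly into a distance. In the standard convention, distance in parsecs is the reciprocal of parallax in arcseconds. The 0.2-milliarcsecond value below is a hypothetical example chosen for round numbers, not a figure from the study.

```latex
d\,[\mathrm{pc}] = \frac{1}{p\,[\mathrm{arcsec}]}
\qquad
p = 0.2\ \mathrm{mas} = 2 \times 10^{-4}\ \mathrm{arcsec}
\;\Rightarrow\;
d = \frac{1}{2 \times 10^{-4}} = 5000\ \mathrm{pc} \approx 16{,}300\ \text{light-years}
```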

COMING UP!!

(Wednesday, January 3rd, 2024)

"WHAT IS QUARK MATTER??"

#astronomy#outer space#alternate universe#astrophysics#universe#spacecraft#white universe#space#parallel universe#astrophotography#galaxies#galaxy

160 notes


Text

Putting on their Dancing Shoes

Metahuman! Reader who's hiding out in Gotham despite Batman's 'no metahumans' rule. Because when they originally put down roots in Gotham, they weren't a meta, nor did that rule exist yet. That, and with all the villains in Gotham, rent had become rather cheap. Not the cheapest by any means, but compared to Metropolis or Bludhaven it was affordable, and they didn't exactly have the financial means to move.

So they stayed put, learning how to deal with their new metahuman abilities in secret. Thinking they had been good at keeping it on the down low until they received a knock on the door. Reader, looking through the peephole of their apartment door, hissing under their breath. 'Fuck me.' The man's attire immediately screamed detective, if not cop.

Taking a deep breath, they opened the door. Meeting the man's intimidating gaze. 'Can I help you?' Keeping their tone neutral, not wanting to come across as defensive. 'I'm Detective Dick Grayson, with the GCPD. Mind if I ask you some questions?' Dick, while conducting research for a Nightwing sting, had found pockets of metahuman activity. Tracking said activity to be near places Reader frequented. All within a 50-mile radius, with their house at the epicenter. But unknown to Reader, Dick was recruiting for his team, the Teen Titans.

'Uh, sure. Come on in.' It'd all go well if they just kept their emotions in check. Otherwise, their physical features would shift. More accurately, they'd drip like candle wax that would solidify into different shapes, colors, structures even. It would then be sucked back into the skin. 'I promise to make this quick,' Dick said, pulling a folder rife with papers out of his bag. 'These are pictures taken from the CCTV cameras down on 4th and Main on June 11th.' Putting down 2-3 photos from different angles, showing them in a hoodie.

'Not to assume anything but it seems like you were running away from someone or something. Which is concerning when you factor in how you look to have burn scars on your face and body.' Pointing at the areas of skin that their clothes didn't conceal. 'This was also the same night that the first power outage of the summer happened. But you never called anything in, why?'

'Not reporting something isn't a crime,' Reader responded curtly. 'Am I being charged with something?' Subtly asking if they were being arrested, or if they'd end up in the back of his cop car on the way down to the station, without using the word 'arrested.' Taking a deep breath to calm their anxieties. Looking down to see if their arm was still solid. Dick shifted to a softer approach when he noticed this, sitting across from them at the small coffee table. 'Have you ever heard of the hidden figure of crime?'

'The what?'

'The hidden figure of crime or the dark figure. It's the term for the amount of crimes that go unreported. Kinda makes our job difficult. Sometimes it's because the victim experiences intimidation tactics by the perpetrator. Other times, it's because the victim doesn't recognize they've been victimized. Does that sound familiar?' Dick coaxed, using it as a leading question. Even though he technically wasn't supposed to do that. 'No, I don't think that's the case. Me not realizing I'm a victim.'

Pulling out a map with locations marked in orange Sharpie. 'Okay. Well, this here is a map showing all known metahuman activity. Does anything about this data look familiar to you?' Recognizing that they weren't responding to his questions the way he wanted, Dick turned up the heat. It was at this point that Reader froze, internally screaming that he knew. He must know. If he didn't, it definitely became apparent as their emotions triggered their abilities. Out of control, they now looked more akin to a Picasso.

'That's uncanny.' Dick's pristinely crafted worksona broke, unable to continue with the script he was following. Now assessing if Reader's ability could be of use to the team he was still building. He had to find some way to help. He could just act as a benefactor and get them to Central City, but that would amount to abandonment, which Dick refused to do. Even if they didn't end up as a member of the Teen Titans, he could at least help them figure out how to stabilize their ability, especially with it being tied to their emotions. 'Come with me.'

Noting their confused look (the dude had literally just spent half an hour making them think he was going to arrest them), Dick clarified. 'I'm not arresting you, this is just my day job. Pack a bag, I'll explain everything in the car.' Pulling open the blinds to show that he hadn't even driven to their apartment in a cop car to begin with. Their face having slowly shifted back to normal.

#dcu#dc universe#batman series#metahuman#metahuman! reader#dick grayson x reader#nightwing x reader#x gn reader#x gender neutral reader#dick grayson#nightwing

42 notes


Text

Derivative Works

This is a five-part piece of conceptual art titled Derivative Works. Each image here is generated algorithmically using one (or more) pieces of other art as input, but bears no resemblance to its 'progenitors'. The intent is to question what it means for an image to be a 'derivative' of another, both in the legal sense of copyright law and in the moral sense.

For Perceptual, Sorted, Transpose, and Sample, the input image is Wikipedia's high-resolution image of the Mona Lisa. The inputs for Composite are the first 10,000 images in this subset of the Stable Diffusion image set.

All images in Derivative Works are public domain (specifically, CC0). I do request that you give credit if you make use of them. The source code and original-resolution PNGs are available on Codeberg.

Perceptual (Derivative Works 0x0)

Perceptual uses a perceptual hash of its input image and then applies a fairly simple generative algorithm to render the output (which is intended to vaguely recall a night ocean, but that's not the point). The use of a perceptual hash means that the output depends on what the image 'looks like'; changing a pixel here or there will still result in the same output. At the same time, a substantially different input would provide a noticeably different output.
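To see why changing a pixel here or there leaves the output unchanged, here is a minimal sketch of one of the simplest perceptual hashes, an average hash, in Python. This technique is swapped in for illustration. The hash Perceptual actually uses is in the Codeberg source and may differ. The `average_hash` function and its list-of-rows grayscale input are assumptions.

```python
def average_hash(gray, size=8):
    """Average hash (aHash) of a grayscale image.

    `gray` is a list of rows of 0-255 ints, at least `size` x `size` pixels.
    The image is shrunk to a size x size grid by block-averaging; each grid cell
    then contributes one bit: 1 if brighter than the grid's mean, else 0.
    """
    height, width = len(gray), len(gray[0])
    cells = []
    for by in range(size):
        y0, y1 = by * height // size, (by + 1) * height // size
        for bx in range(size):
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [gray[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits  # a size*size-bit integer (64 bits for the default size)
```

Small edits to a few pixels barely move the block averages, so the bits (and any output rendered from them) usually stay the same. A substantially different image flips many bits.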

Sorted (Derivative Works 0x1)

Sorted simply sorts the pixels. The output image has all the same pixels as the original, but going back to the input would be impossible since you have no way of knowing which pixels go where. The color scheme is faintly reminiscent of the input, but all detail and structure is destroyed.
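Here is a sketch of the Sorted idea. It assumes pixels arrive as a flat list of `(r, g, b)` tuples and are ordered by approximate luminance. The actual sort key isn't stated, so the luminance weights here are an assumption.

```python
def sort_pixels(pixels, width):
    """Sort a flat list of (r, g, b) pixels by approximate luminance, then reshape into rows."""
    def luminance(p):
        # Rec. 601 luma weights (assumption: any fixed key would make the same point).
        return 0.299 * p[0] + 0.587 * p[1] + 0.114 * p[2]

    # Tie-break on the tuple itself so the order depends only on the pixel values.
    ordered = sorted(pixels, key=lambda p: (luminance(p), p))
    return [ordered[i:i + width] for i in range(0, len(ordered), width)]
```

Any two arrangements of the same pixels produce identical output. That is why the original layout can't be recovered from the sorted image.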

Transpose (Derivative Works 0x2)

Transpose interprets the image as a series of RGB values, then repeatedly applies a variation of the horseshoe map to them: take the first byte, then the last byte, then the second, then the second-to-last, and so on. Repeating this process four times produces something that is absolutely unrecognizable, yet still contains the same information as the input image. Furthermore, unlike Sorted, the transformation is reversible; it's not hard to write a corresponding "unfold" transform that, when applied four times, would give the input image. So is this a copy (because it can be transformed into the original) or a new work entirely (because it bears no visual resemblance)?
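The fold step and its inverse are short enough to sketch. This Python version is an illustration under stated assumptions, not the code from Codeberg:

* it treats the image as a flat `bytes` object;
* `fold` takes the first, last, second, and second-to-last bytes, and so on;
* the hypothetical `unfold` undoes one fold by sending even output positions back to the front and odd ones to the back.

```python
def fold(data: bytes) -> bytes:
    """One fold step: first, last, second, second-to-last, ..."""
    out = bytearray()
    lo, hi = 0, len(data) - 1
    while lo <= hi:
        out.append(data[lo])
        if lo != hi:  # avoid duplicating the middle byte of odd-length input
            out.append(data[hi])
        lo += 1
        hi -= 1
    return bytes(out)


def unfold(data: bytes) -> bytes:
    """Inverse of fold: even positions refill the front, odd positions the back."""
    out = bytearray(len(data))
    lo, hi = 0, len(data) - 1
    for i, b in enumerate(data):
        if i % 2 == 0:
            out[lo] = b
            lo += 1
        else:
            out[hi] = b
            hi -= 1
    return bytes(out)


def apply_n(fn, data: bytes, n: int = 4) -> bytes:
    """Apply a transform n times (four, as described above)."""
    for _ in range(n):
        data = fn(data)
    return data
```

Because each `unfold` exactly inverts one `fold`, four unfolds undo four folds. A sort has no such inverse, since it discards the original order.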

Sample (Derivative Works 0x3)

Sample is a single reddish pixel; it may be difficult to see. The Stable Diffusion model was trained on roughly 2.3 billion images. The model itself is about 5 gigabytes of storage. This implies that, from an information-theory point of view, the model contains very little information about any particular image. A single 8-bit RGB pixel contains three bytes of information: one each for red, green, and blue. Sample, which was generated by computing a 3-byte perceptual hash of its input image, is therefore a visual representation of roughly how much information Stable Diffusion has about any individual work in its input set.
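Here is the arithmetic behind that comparison, using the round figures given above (and assuming decimal gigabytes):

```latex
\frac{5 \times 10^{9}\ \text{bytes}}{2.3 \times 10^{9}\ \text{images}} \approx 2.2\ \text{bytes per image} < 3\ \text{bytes (one 8-bit RGB pixel)}
```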

Composite (Derivative Works 0x4)

Finally, Composite takes the principle of Sample to its extreme. I downloaded roughly 10,000 images from the Stable Diffusion data set, computed a 4-byte hash of each, then concatenated the hashes together to produce the output. In the interest of saving time and effort, I interpret each bit as a pixel, either on or off. With a 512 × 512 output and 4 bytes (32 bits) per hash, there are therefore 512 * 512 / 8 / 4 = 8192 images represented. (I downloaded more than 8192 to account for images that would fail to download or that no longer pointed to a valid image.)
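Here is a sketch of the bit-packing step in Python, under these assumptions:

* the 4-byte hashes are already computed (which hash Composite uses isn't specified here);
* bits are laid out most-significant-bit first, row by row;
* the function name `composite` is hypothetical.

The capacity line reproduces the 512 * 512 / 8 / 4 = 8192 arithmetic.

```python
WIDTH = HEIGHT = 512  # output size implied by the 512 * 512 arithmetic
HASH_BYTES = 4        # "a 4-byte hash" per input image


def composite(hashes, width=WIDTH, height=HEIGHT):
    """Concatenate 4-byte hashes and render each bit as an on (1) / off (0) pixel."""
    # 512 * 512 pixels / 8 bits per byte / 4 bytes per hash = 8192 hashes.
    capacity = width * height // 8 // HASH_BYTES
    data = b"".join(hashes[:capacity])  # extra hashes beyond capacity are ignored
    bits = []
    for byte in data:
        for shift in range(7, -1, -1):  # most significant bit first
            bits.append((byte >> shift) & 1)
    bits += [0] * (width * height - len(bits))  # pad if fewer hashes were supplied
    return [bits[row * width:(row + 1) * width] for row in range(height)]
```

Truncating to `capacity` mirrors downloading more than 8192 images: any extras simply don't fit.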

1K notes


Text

Hello Grambank! A new typological database of 2,467 language varieties

Grambank has been many years in the making, and is now publicly available!

The team coded 195 features for 2,467 language varieties, and made this data publicly available as part of the Cross-Linguistic Linked Data project (CLLD). They plan to continue to release new versions with new languages and features in the future.

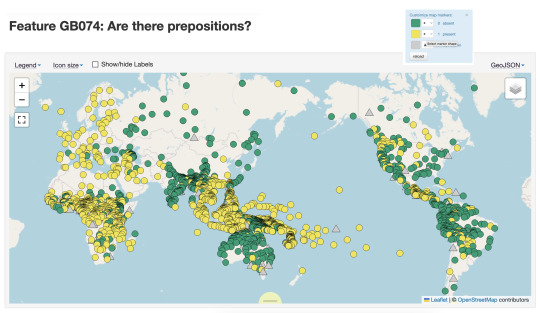

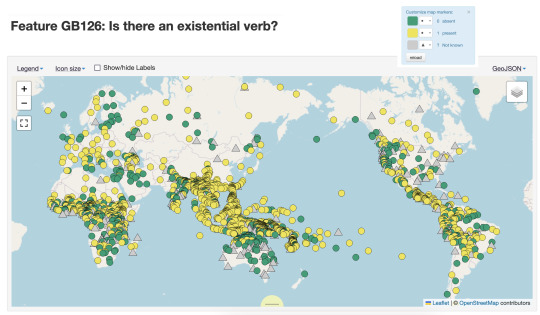

These maps show two features I’ve selected that have very different distributions across the world’s languages. The first map codes for whether there are prepositions (in yellow), and we can see really clear clustering of them in Europe, South East Asia and Africa. Languages without prepositions might have postpositions or use some other strategy. The second map shows languages with an existential verb (i.e. there *is* an existential verb, in yellow), and we see a different distribution.

What makes Grambank particularly interesting for me as a user is that there is extensive public documentation of processes and terminology on a companion GitHub site. They have also been very systematic in selecting values and coding them for all the sources that they have. This is a different approach from the one taken for the World Atlas of Language Structures (WALS), which has been the go-to resource for the last two decades. In WALS, a single author would collate information on a sample of languages for a feature they were interested in, while in Grambank a single coder would add information on all 195 features for a single grammar they were entering data for.

I’m very happy that Lamjung Yolmo is included in the set of languages in Grambank, with coding values taken from my 2016 grammar of the language. Thanks to the transparent approach to coding in this project, you can not only see the values that the coding team assigned, but the pages of the reference work that the information was sourced from.

433 notes


Text

Okay, I have my own opinions about AI, especially AI art, but this is actually a very cool application!

So when you think about it, we can quantify vision/sight using the actual wavelengths of light, and we can quantify hearing using frequency, but there really isn't a way to quantify smell. So scientists at the University of Reading set out to create an AI to do just that.

The AI was trained on a dataset of 5000 known odor-causing molecules. It was given their structures, along with a list of scent descriptors (such as "floral sweet" or "musty" or "buttery") and how well each descriptor fit on a scale of 1-5. After being trained on this data, the AI could be shown a new molecule and predict its scent using those descriptors.

The AI's prediction abilities were compared against a panel of humans, who would smell the compound of interest and assign the descriptors. The AI's predictions were actually just as good as the human descriptions. Professor Jane Parker, who worked on the project, explained the following.

"We don't currently have a way to measure or accurately predict the odor of a molecule, based on its molecular structure. You can get so far with current knowledge of the molecular structure, but eventually you are faced with numerous exceptions where the odor and structure don't match. This is what has stumped previous models of olfaction. The fantastic thing about this new ML generated model is that it correctly predicts the odor of those exceptions."

Now what can we do with this "AI Nose", you might ask? Well, it may have benefits in the food and fragrance industries, for one. A machine that is able to quickly filter through compounds to find one with specific odor qualities could be a good way to find new, sustainable sources of fragrance in foods or perfumes. The team also believes that this "scent map" that the AI model builds could be linked to metabolism. In other words, odors that are close to each other on the map, or smell similar, are also more likely to be metabolically related.

#science#stem#science side of tumblr#stemblr#biology#biochemistry#chemistry#machine learning#technology#innovation

335 notes


Text

Caution: Universe Work Ahead 🚧

We only have one universe. That’s usually plenty – it’s pretty big after all! But there are some things scientists can’t do with our real universe that they can do if they build new ones using computers.

The universes they create aren’t real, but they’re important tools to help us understand the cosmos. Two teams of scientists recently created a couple of these simulations to help us learn how our Nancy Grace Roman Space Telescope can unveil the universe’s distant past and give us a glimpse of possible futures.

Caution: you are now entering a cosmic construction zone (no hard hat required)!

This simulated Roman deep field image, containing hundreds of thousands of galaxies, represents just 1.3 percent of the synthetic survey, which is itself just one percent of Roman's planned survey. The full simulation is available here. The galaxies are color coded – redder ones are farther away, and whiter ones are nearer. The simulation showcases Roman’s power to conduct large, deep surveys and study the universe statistically in ways that aren’t possible with current telescopes.

One Roman simulation is helping scientists plan how to study cosmic evolution by teaming up with other telescopes, like the Vera C. Rubin Observatory. It’s based on galaxy and dark matter models combined with real data from other telescopes. It envisions a big patch of the sky Roman will survey when it launches by 2027. Scientists are exploring the simulation to make observation plans so Roman will help us learn as much as possible. It’s a sneak peek at what we could figure out about how and why our universe has changed dramatically across cosmic epochs.

youtube

This video begins by showing the most distant galaxies in the simulated deep field image in red. As it zooms out, layers of nearer (yellow and white) galaxies are added to the frame. By studying different cosmic epochs, Roman will be able to trace the universe's expansion history, study how galaxies developed over time, and much more.

As part of the real future survey, Roman will study the structure and evolution of the universe, map dark matter – an invisible substance detectable only by seeing its gravitational effects on visible matter – and discern between the leading theories that attempt to explain why the expansion of the universe is speeding up. It will do it by traveling back in time…well, sort of.

Seeing into the past

Looking way out into space is kind of like using a time machine. That’s because the light emitted by distant galaxies takes longer to reach us than light from ones that are nearby. When we look at farther galaxies, we see the universe as it was when their light was emitted. That can help us see billions of years into the past. Comparing what the universe was like at different ages will help astronomers piece together the way it has transformed over time.

This animation shows the type of science that astronomers will be able to do with future Roman deep field observations. The gravity of intervening galaxy clusters and dark matter can lens the light from farther objects, warping their appearance as shown in the animation. By studying the distorted light, astronomers can study elusive dark matter, which can only be measured indirectly through its gravitational effects on visible matter. As a bonus, this lensing also makes it easier to see the most distant galaxies whose light they magnify.

The simulation demonstrates how Roman will see even farther back in time thanks to natural magnifying glasses in space. Huge clusters of galaxies are so massive that they warp the fabric of space-time, kind of like how a bowling ball creates a well when placed on a trampoline. When light from more distant galaxies passes close to a galaxy cluster, it follows the curved space-time and bends around the cluster. That lenses the light, producing brighter, distorted images of the farther galaxies.

Roman will be sensitive enough to use this phenomenon to see how even small masses, like clumps of dark matter, warp the appearance of distant galaxies. That will help narrow down the candidates for what dark matter could be made of.

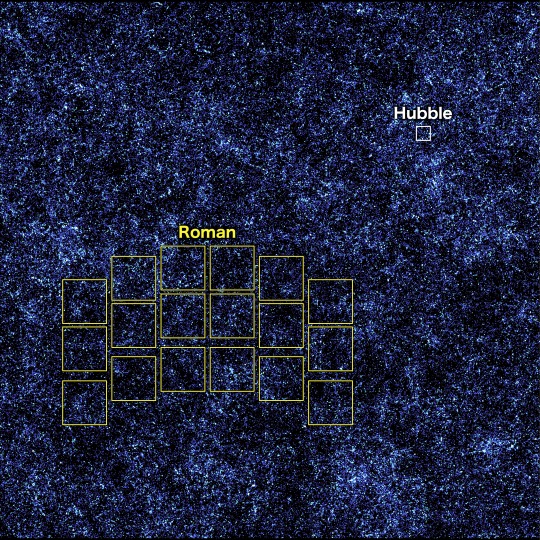

In this simulated view of the deep cosmos, each dot represents a galaxy. The three small squares show Hubble's field of view, and each reveals a different region of the synthetic universe. Roman will be able to quickly survey an area as large as the whole zoomed-out image, which will give us a glimpse of the universe’s largest structures.

Constructing the cosmos over billions of years

A separate simulation shows what Roman might expect to see across more than 10 billion years of cosmic history. It’s based on a galaxy formation model that represents our current understanding of how the universe works. That means that Roman can put that model to the test when it delivers real observations, since astronomers can compare what they expected to see with what’s really out there.

In this side view of the simulated universe, each dot represents a galaxy whose size and brightness correspond to its mass. Slices from different epochs illustrate how Roman will be able to view the universe across cosmic history. Astronomers will use such observations to piece together how cosmic evolution led to the web-like structure we see today.

This simulation also shows how Roman will help us learn how extremely large structures in the cosmos were constructed over time. For hundreds of millions of years after the universe was born, it was filled with a sea of charged particles that was almost completely uniform. Today, billions of years later, there are galaxies and galaxy clusters glowing in clumps along invisible threads of dark matter that extend hundreds of millions of light-years. Vast “cosmic voids” are found in between all the shining strands.

Astronomers have connected some of the dots between the universe’s early days and today, but it’s been difficult to see the big picture. Roman’s broad view of space will help us quickly see the universe’s web-like structure for the first time. That’s something that would take Hubble or Webb decades to do! Scientists will also use Roman to view different slices of the universe and piece together all the snapshots in time. We’re looking forward to learning how the cosmos grew and developed to its present state and finding clues about its ultimate fate.

This image, containing millions of simulated galaxies strewn across space and time, shows the areas Hubble (white) and Roman (yellow) can capture in a single snapshot. It would take Hubble about 85 years to map the entire region shown in the image at the same depth, but Roman could do it in just 63 days. Roman’s larger view and fast survey speeds will unveil the evolving universe in ways that have never been possible before.
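Expressed as a ratio, the figures above work out as follows (assuming 365.25 days per year):

```latex
\frac{85\ \text{yr} \times 365.25\ \text{d/yr}}{63\ \text{d}} = \frac{31{,}046.25\ \text{d}}{63\ \text{d}} \approx 493
```

In other words, for this region Roman's survey speed is roughly 490 times Hubble's.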

Roman will explore the cosmos as no telescope ever has before, combining a panoramic view of the universe with a vantage point in space. Each picture it sends back will let us see areas that are at least a hundred times larger than our Hubble or James Webb space telescopes can see at one time. Astronomers will study them to learn more about how galaxies were constructed, dark matter, and much more.

The simulations are much more than just pretty pictures – they’re important stepping stones that forecast what we can expect to see with Roman. We’ve never had a view like Roman’s before, so having a preview helps make sure we can make the most of this incredible mission when it launches.

Learn more about the exciting science this mission will investigate on Twitter and Facebook.

Make sure to follow us on Tumblr for your regular dose of space!

#NASA#astronomy#telescope#Roman Space Telescope#dark matter#galaxies#cosmology#astrophysics#stars#galaxy#Hubble#Webb#spaceblr

2K notes
