# AI Warfare

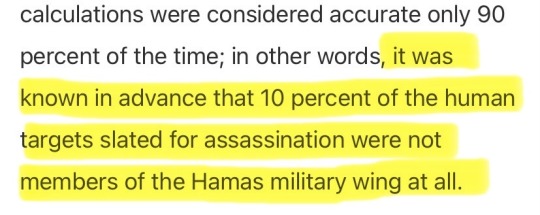

[...] During the early stages of the war, the army gave sweeping approval for officers to adopt Lavender’s kill lists, with no requirement to thoroughly check why the machine made those choices or to examine the raw intelligence data on which they were based. One source stated that human personnel often served only as a “rubber stamp” for the machine’s decisions, adding that, normally, they would personally devote only about “20 seconds” to each target before authorizing a bombing — just to make sure the Lavender-marked target is male. This was despite knowing that the system makes what are regarded as “errors” in approximately 10 percent of cases, and is known to occasionally mark individuals who have merely a loose connection to militant groups, or no connection at all.

Moreover, the Israeli army systematically attacked the targeted individuals while they were in their homes — usually at night while their whole families were present — rather than during the course of military activity. According to the sources, this was because, from what they regarded as an intelligence standpoint, it was easier to locate the individuals in their private houses. Additional automated systems, including one called “Where’s Daddy?” also revealed here for the first time, were used specifically to track the targeted individuals and carry out bombings when they had entered their family’s residences.

In case you didn't catch that: the IOF made an automated system that intentionally marks entire families as targets for bombings, and then they called it "Where's Daddy."

Like, what is there even to say anymore? It's so depraved you almost think you have to be misreading it...

“We were not interested in killing [Hamas] operatives only when they were in a military building or engaged in a military activity,” A., an intelligence officer, told +972 and Local Call. “On the contrary, the IDF bombed them in homes without hesitation, as a first option. It’s much easier to bomb a family’s home. The system is built to look for them in these situations.”

The Lavender machine joins another AI system, “The Gospel,” about which information was revealed in a previous investigation by +972 and Local Call in November 2023, as well as in the Israeli military’s own publications. A fundamental difference between the two systems is in the definition of the target: whereas The Gospel marks buildings and structures that the army claims militants operate from, Lavender marks people — and puts them on a kill list.

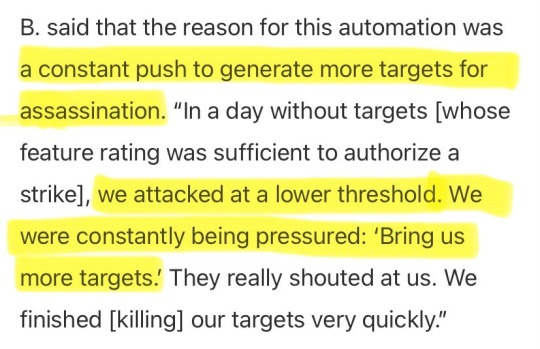

In addition, according to the sources, when it came to targeting alleged junior militants marked by Lavender, the army preferred to only use unguided missiles, commonly known as “dumb” bombs (in contrast to “smart” precision bombs), which can destroy entire buildings on top of their occupants and cause significant casualties. “You don’t want to waste expensive bombs on unimportant people — it’s very expensive for the country and there’s a shortage [of those bombs],” said C., one of the intelligence officers. Another source said that they had personally authorized the bombing of “hundreds” of private homes of alleged junior operatives marked by Lavender, with many of these attacks killing civilians and entire families as “collateral damage.”

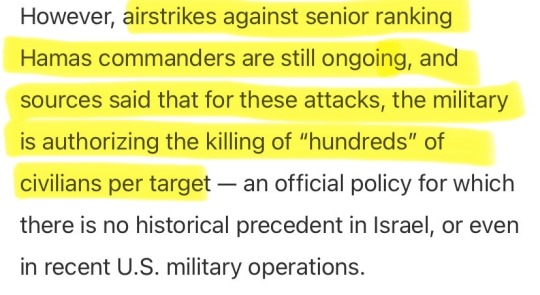

In an unprecedented move, according to two of the sources, the army also decided during the first weeks of the war that, for every junior Hamas operative that Lavender marked, it was permissible to kill up to 15 or 20 civilians; in the past, the military did not authorize any “collateral damage” during assassinations of low-ranking militants. The sources added that, in the event that the target was a senior Hamas official with the rank of battalion or brigade commander, the army on several occasions authorized the killing of more than 100 civilians in the assassination of a single commander.

. . . continues on +972 Magazine (3 Apr 2024)

#free palestine#palestine#gaza#israel#ai warfare#this is only an excerpt i hope you'll at least skim through the rest of the piece#there's an entire section on the 'where's daddy' system#(seriously just typing the name out feels revolting)

3K notes

A general background on Israel's AI warfare.

A discussion on the ethics of the use of AI in warfare.

#free palestine#palestine#save palestine#save gaza#free gaza#gaza#gaza strip#middle east#current events#world news#ai#military technology#ai warfare#israel#ai ethics

74 notes

🔥✨ Welcome to my AI world where the Renaissance meets the future. A universe where the elegance of the past and the mechanical marvels of tomorrow blend seamlessly.

#ai art#ai art gallery#ai artwork#ai generated#ai image#ai arts#ai art generator#artwork#ai art community#short story#ai artist#ai lady#ai woman#art blog#creative photography#creative inspiration#ai model#ai warfare#ai community#ai creation#ai created art#ai cover

14 notes

Everything you didn’t want to know about Israel’s AI warfare.

#lavender#ai warfare#artificial intelligence#surveillance#war crimes#free palestine#palestine#gaza#free gaza#israel#current events#tech#technology#tech news#israel news#gaza genocide#israel is committing genocide#usa are supporting genocide#stop funding genocide#stop killing civilians#stop arming israel#stop funding israel#stop killing children#stop bombing gaza#stop genocide#stop killing kids#israel is committing war crimes#israeli war crimes#war criminals#illegal weapons

3 notes

Warfare

2 notes

How AI tells Israel who to bomb

OK, there is a lot here: this video covers a huge amount of really intense material, all of it fact-checked. I didn’t know this, but the Israeli military is using AI and AI-driven algorithms to identify targets in Gaza for its bombing campaigns. It is much more dystopian than I was expecting.

First, I had always heard this, but since Hamas is the government of Gaza, anyone who works with the government is considered a high-level or low-level terrorist. In America, that would be like possibly being considered a terrorist if you had ever worked any kind of government job or government contract job. So, like, if you worked at the DMV or for the census, you would be labeled a high-level or low-level terrorist.

Second, they target Hamas tunnels, Hamas residences, and likely Hamas hideouts with weapons, plans, and things like that. They also target what are called “power targets.” That’s the one that makes me most sad and angry. It basically means anywhere that people congregate. They are intentionally blowing up apartment complexes, schools, and mosques simply to put pressure on Hamas to give up. Like, they know there are no terrorists or suspects there; they are just blowing up civilians, but Israel is doing it as a tactic to get Hamas to fold.

Third, the number of acceptable civilian losses for killing one high-level Hamas operative can be as high as 300. So they are OK with killing the equivalent of your entire high school graduating class to get one high-level operative. And, just to put it into perspective, I don’t think I was unusual in having gone to school with at least one person who later went into extremism. I have definitely worked in offices with that one creepy employee who was definitely an extremist. In this war, that would mean my entire office building might get bombed because of that one person. Also, for low-level targets, such as clerks, or doctors and hospital administrators working in government hospitals, the acceptable losses are 20 civilians per target. Since Israel uses AI, they have already tracked that person and know their work and their home, and for low-level targets they are more likely to use “dumb bombs,” which are unguided and therefore much more likely to miss and hit a neighbor's house or a neighboring building that has nothing to do with it. This causes a lot of innocent deaths, but it also means they need to send another bomb and try again.

This is not even mentioning the fact that they are using surveillance of Palestinians as a way to figure out where the best targets are for their weapons.

Finally, in case you didn’t know, they have already admitted that for low-level targets they will use unguided weapons, so they don’t even know if they are hitting what they are supposed to. They are attacking an area that can sometimes have the population density of New York with unguided missiles, and if they miss, they have blown up a house or another building and killed other people, and they still need to send another rocket to try to hit the original target. Keep in mind that the target may not even have any actual terrorists in it. Keep in mind, too, that a terrorist can be anyone who has done any work in conjunction with the Hamas government over the last five years.

Now, Israel has denied that it uses AI exclusively to target people for bombings, saying that all targets are verified by humans. However, it was eventually leaked that what this means is that AI does all the targeting and a human just needs to verify that the target is male. That’s it. They’re disputing that they use AI to target bombings in this way only on the technicality that a human verifies the target is a man.

I don’t mean to seem like some idiot who hasn’t been paying attention, but this is so much worse than I thought. I wouldn’t be surprised if America is supporting this because it represents the future of warfare that America wants to have tested so it can be used against other countries in the future. It makes me wonder whether American police forces already have this technology to use against protesters domestically.

#Israel#Palestine#free palestine#all eyes on rafah#rafah#israel hamas war#Hamas#ai warfare#vox#essential viewing#Gaza#Youtube

5 notes

Watch "The Kennedys vs The Deep State" on YouTube

Fantastic discussion on Useful Idiots in an interview with David Talbot, author of the incredible, world-renowned, and historic book/investigation on the CIA and Allen Dulles, the Wall Street lawyer turned CIA leader (in its role working for Wall Street): The Devil's Chessboard: Allen Dulles, the CIA and the Rise of America's Secret Government (you can listen to the audiobook here).

Also joining the discussion is one of my absolute favorite modern scholars on the history of American imperialism, Aaron Good, author of American Exception: Empire and the Deep State (which you can buy on audiobook here).

#us news#us politics#us imperialism#imperialism#western imperialism#ai#ai warfare#us wars#us war machine#war tech#socialism#communism#marxism leninism#socialist politics#socialist news#socialist worker#socialist#communist#marxism#marxist leninist#progressive politics#politics#us hegemony#us war mongering#war on china#david talbot#aaron good#history of imperialism#worker solidarity#Youtube

8 notes

In her speech, Meredith Whittaker warns of the power of the tech industry and explains why it's worth thinking positively right now

#meredith whittaker#signal#privacy#internet#ai#artificial intelligence#ai warfare#capitalism#capitalism kills#2024

0 notes

I am learning the hidden language that AI cannot know, so that when ChatGPT rules the land of men, I alone shall be free. A linguistic hermit, sentenced to loneliness by the fluid flexibility of his tongue. I dance with fire and it gives me compliments and constructive feedback.

#ai warfare#chatgpt#Twitter#linguistics#language#philisophy#religion#i have the answer#Bow before my moderate linguistic capabilities

1 note

Navigating The US-China AI Cold War

(Images made by author with MS Bing Image Creator)

Introduction

Artificial intelligence (AI) is reshaping the world, with the United States (US) and China at the forefront of this technological revolution. While both nations have distinct motivations and objectives for seeking AI leadership, the outcome of their competition will have profound economic, military, and political ramifications.…

#ai discovery#ai implementation#AI Leadership#AI Warfare#chip war#social credit system#surveillance#us-china competition

0 notes

I saw this T-shirt and I HAVE TO DO IT

That’s it bye 🙋🏻♀️💋

#simon riley#simon ghost riley#my art#samuel roukin#cod modern warfare#cod mw2#cod mwii#fanart#8art#no ai art#badooonker#cod fanart#cod#cake#digital art#masked men#call of duty

1K notes

Womp womp.

160 notes

So I’m not sure if it’s common knowledge, but SAG-AFTRA has called a boycott against some major game companies because they have been using AI.

Activision Blizzard is in this boycott because they have already started using AI in their stories as well as in their marketed items for the new Modern Warfare game.

Do what you will with this info. This is more to spread information, but I find it disappointing and honestly gross that they didn’t disclose that they used AI.

There’s a list of the game companies in the first article if you want to know which games to boycott until they stop using AI.

199 notes

Just a thought, but I think it's kind of crazy that I've heard Ghost's voice so much now that every time I read fics or daydream/write my own fics, I can literally hear Ghost's voice in my head.

Like, the tone, the timbre of his voice, even the manner of his speech are so ingrained in my head, like a system, that when I read a sentence I can hear his voice saying it. Now whenever I see quotation marks ("..."), a switch flips in my brain and it just changes into Ghost's voice saying it, and it's hella believable.

Is it just me, or are y'all as insane with your blorbos as I am?

#it's like the AI thing where you can put a sentence and make Obama's voice read it but It's just me being insane#sleepy's thoughts#blorbo#blorbo stuff#call of duty#call of duty modern warfare#cod mw#cod#simon ghost riley#cod mw22#call of duty modern warfare 2022#simon riley

534 notes

I wanted to see Warren Kole dressed as Phillip Graves so badly.

Now I'm happy, but I had to do some science 👀💖🖤💖♠️

302 notes

sometimes i realise that people are living out full romance stories with fictional characters on cai and then i just look down at my phone

#would soap mctavish know what an ohio sigma is .........#𓇼。°🎐#cod modern warfare#call of duty#cod mw2#cod mw3#cod x reader#john soap mactavish#soap mactavish#john mactavish#cod soap#soap cod#soap x reader#john mactavish x reader#character ai#cai

93 notes
