#deep data analysis

Explore tagged Tumblr posts

Text

Hyperparameter tuning in machine learning

In the dynamic world of artificial intelligence, the performance of a machine learning model is crucial, and we have various algorithms for solving a business problem. Some algorithms, such as ordinary least-squares linear regression, have no hyperparameters to set, so we can train them without any modification. Many other algorithms, however, have hyperparameters: settings whose values are not fixed or learned from the data, and which must be chosen before training.

Here's a complete guide to Hyperparameter tuning in machine learning in Python!
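The linked guide isn't reproduced here, but the core loop of hyperparameter tuning fits in a few lines. Below is a minimal, library-free sketch, assuming a k-nearest-neighbours classifier whose hyperparameter is k and a plain grid search scored on a held-out validation set. The toy data and function names are illustrative, not taken from the guide.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Example = Tuple[Point, int]  # (features, 0/1 label)


def knn_predict(train: Sequence[Example], x: Point, k: int) -> int:
    """Predict a 0/1 label by majority vote among the k nearest training points."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    votes_for_1 = sum(label for _, label in nearest)
    return 1 if 2 * votes_for_1 > k else 0  # use odd k to avoid ties


def accuracy(train: Sequence[Example], val: Sequence[Example], k: int) -> float:
    """Fraction of validation examples classified correctly with a given k."""
    correct = sum(knn_predict(train, x, k) == y for x, y in val)
    return correct / len(val)


def grid_search(train: Sequence[Example], val: Sequence[Example],
                candidate_ks: List[int]) -> Tuple[int, float]:
    """Score every candidate k on the validation set; return the best (k, accuracy)."""
    best_k, best_acc = candidate_ks[0], -1.0
    for k in candidate_ks:
        acc = accuracy(train, val, k)
        if acc > best_acc:  # strict '>' keeps the smaller k on ties
            best_k, best_acc = k, acc
    return best_k, best_acc


# Toy data: class 0 near the origin, class 1 near (5, 5), plus one
# mislabeled class-1 point at (0.5, 0.5) that misleads k = 1.
TRAIN: List[Example] = [
    ((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((0.5, 0.5), 1),
    ((5.0, 5.0), 1), ((5.0, 6.0), 1), ((6.0, 5.0), 1),
]
VAL: List[Example] = [((0.4, 0.4), 0), ((5.5, 5.5), 1)]

best_k, best_acc = grid_search(TRAIN, VAL, [1, 3, 5])
print(f"best k = {best_k}, validation accuracy = {best_acc:.2f}")  # best k = 3, validation accuracy = 1.00
```

On this toy data, k = 1 is fooled by the single mislabeled point near the origin, while k = 3 outvotes it. In practice, scikit-learn's `GridSearchCV` automates the same loop, usually with cross-validation instead of a single validation split.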

#datascience #dataanalytics #dataanalysis #statistics #machinelearning #python #deeplearning #supervisedlearning #unsupervisedlearning

#machine learning#data analysis#data science#artificial intelligence#data analytics#deep learning#python#statistics#unsupervised learning#feature selection

Text

Amy Brown, Chief Executive Officer, Authenticx – Interview Series

New Post has been published on https://thedigitalinsider.com/amy-brown-chief-executive-officer-authenticx-interview-series/

Amy Brown, a former healthcare executive, founded Authenticx in 2018 to help healthcare organizations unlock the potential of customer interaction data. With two decades of experience in the healthcare and insurance industries, she saw the missed opportunities in using customer conversations to drive business growth and improve profitability.

Authenticx addresses this gap by utilizing AI and natural language processing to analyze recorded interactions—such as calls, emails, and chats—providing healthcare organizations with actionable insights to make better business decisions.

What inspired you to transition from a career in healthcare operations and social work to founding Authenticx, a tech-driven AI company?

With my educational background in social work and my 20-year work experience in contact center operations, my desire to advocate for individuals in healthcare became both my passion and mission.

During my time working in insurance and healthcare sectors, I noticed organizations struggling to truly understand their customers’ needs through repetitive surveys and robocalls, which often led to low response rates and metrics that were not reliable.

And that’s where Authenticx came in. I realized that by leveraging AI to analyze recorded customer conversations, healthcare organizations could extract valuable insights directly from the voice of the customer, empowering the industry to truly connect with its customers to strategize, invest, and take action.

How did your personal experiences, especially observing the healthcare system firsthand through your father’s practice and your own work, shape your vision for Authenticx?

My experience started with understanding how my father served in healthcare through his role as a physician. He has always practiced listening and family-centered care, so, to me, he was the exception to the typical friction you often hear about. I remember him telling me that it is the patients’ words that can help lead you to the answers. That stuck with me, and it led me to focus my career and aspirations on improving that system by listening, which is key.

When I entered healthcare firsthand through government work and contact center operations, I saw all the different entities that are entangled in the healthcare system trying to make it work. And while this developed my macro view of effecting change, those entities still weren’t diving into all the data available from the countless interactions shared with customers. I wanted to help shed light on that ignored conversation data and help organizations improve their customer experience while meeting their outcomes more efficiently and effectively.

As someone without a traditional tech background, what challenges did you face while founding an AI company, and how did you overcome them?

I have faced plenty of friction along the journey of founding an AI company. Navigating critics was fatiguing, both as a founder and as someone without technical expertise in creating SaaS technology. However, I’ve learned that surrounding myself with individuals who complement my knowledge gaps is a powerful way to work together to build a company.

After leaving the corporate world and starting Authenticx, my focus was on how best to approach data aggregation and analysis. So, I found a partner, Michael Armstrong, who had an impressive background in tech (and who is now our Chief Technology Officer), and we began to build out what Authenticx is today.

Authenticx uses AI to analyze healthcare conversations. Could you walk us through how your AI models are specifically tailored for healthcare and what makes them unique?

Authenticx’s models are built by and for healthcare. We approach our models with experts from healthcare, social work, and tech involved every step of the way; it is human-in-the-loop AI. And we train our models using healthcare-specific data with outputs and insights reviewed by the people who understand bias risk, gaps in context, and miscommunication that can create friction from the market and the customer.

We label the data for sentiment, friction, compliance, adverse events, topics, and other metrics and pain points. These labels become the foundation of our AI machine learning and deep learning models. We continuously evaluate, test, and retrain the models for an iterative process to build reliable AI that meets security and governance guidelines.

What is the ‘Eddy Effect,’ and how does your AI platform help healthcare organizations address this issue?

The Eddy Effect™ is our proprietary machine learning model and metric, which identifies and measures friction in the customer experience journey. Just as a large rock in a river causes water to swirl into an eddy, the Eddy Effect™ gives insights into what causes that frustrating loop for customers. It helps identify the disruptions and obstacles (the large rock) that are barriers to creating a positive experience.

The results of the Eddy Effect™ AI model are illuminated within dashboards spotlighting various signals of friction found in conversation data. And it is on these dashboards that common metrics, such as call length, sentiment, accuracy, and estimated waste costs are monitored for customer friction. For instance, we had a client that lacked insight into quality and pain points from their third-party contact center. With Authenticx, they targeted friction points, themes and topics, and quality to reduce the presence of identified friction by 10%.

How does Authenticx ensure that its AI models provide insights that genuinely improve patient care and reduce friction in the healthcare system?

We prioritize conversational data analysis, which provides valuable insights into customer interactions and uncovers important issues and opportunities that may be overlooked by other data sources. Authenticx employs GenAI models to simplify complex and nuanced data and provide actionable recommendations specifically for healthcare. Our reporting tools offer a consumable view of performance metrics and trends. Our built-in workflows allow users to respond in a timely manner.

Most of all, we consistently review our models and their outputs. From our Customer Advisory Board, which provides feedback on our product, to our in-house team of data scientists and analysts, who ensure the reliability of the insights organizations receive, our human-in-the-loop approach helps mitigate risks, biases, and incorrect information to improve AI accuracy.

What role does AI play in addressing operational inefficiencies, and how does it help healthcare organizations identify and resolve broken processes?

The better you can listen to a call and cut through the noise to understand the context of a pain point, the more likely you are to identify the significant issues at the root of a broken process. When the root cause is found, organizations can strategize with a data-driven approach to invest in resources and effectively erase the guesswork for an efficient resolution.

AI is a tool that can be used to synthesize large amounts of data to identify, quantify and trend operational inefficiencies and broken processes at scale.

We had a customer leverage Authenticx to identify what was causing patient confusion in their prescription inquiry process, which accounted for 20% of their calls. With insights into the root causes of refill friction, they restructured their phone tree and revised agent prompts, reducing their call intake by about 550 calls over two months and saving time and resources.

Can you share an example of how Authenticx’s AI has transformed a healthcare provider’s operations or patient outcomes?

Authenticx helped a regional hospital system identify the leading drivers of friction within its central scheduling process. Callers were getting stuck while seeking medical advice, there was a lack of visibility into specialty processes once the agent transferred the call, and callers were repeatedly frustrated by their inability to schedule an appointment quickly.

Authenticx AI activated a full-volume analysis of calls to identify the specific barriers and provide insights to coach agents, highlighting ways to improve their quality initiatives. Within two months, their team increased agent quality skills by 12%, used Authenticx insights to predict future friction points, and proactively addressed them.

How does Authenticx’s AI augment human decision-making, and what role do healthcare professionals play in refining the AI models?

We practice a human-in-the-loop approach to ensure ethical and reliable deployment and implementation of AI. Our platform mirrors that approach: An AI and human analysis that provides feedback about customer experience, operational performance, compliance, and more.

While our in-house team works at all levels of the platform, our GenAI models are trained with healthcare-specific data to produce insights such as context-rich summaries, topic aggregation, and automated coaching notes, and we’ve announced an integrated AI assistant that provides meaningful insights instantly.

How do you see AI transforming healthcare in the next five years, and what role will Authenticx play in that transformation?

The next five years of healthcare AI will be revolutionary. The impact that AI is having in the world is already significant, so having more data, insights, security, and governance established will lead to more precision and efficiency for predictive technologies, the employee and customer experience, and advanced ways to monitor care that ultimately improve the entire healthcare system.

Continuing to listen to improve, revise, and create models will help healthcare and patient care progress positively. This impact will come from industry-specificity in developing new AI tools and models – and we’re excited about it.

Thank you for the great interview; readers who wish to learn more should visit Authenticx.

#Advice#agent#agents#ai#ai assistant#ai model#AI models#ai platform#ai tools#Analysis#approach#Authenticx#background#barrier#Bias#board#Business#career#change#compliance#customer experience#data#data analysis#data-driven#Deep Learning#deployment#diving#efficiency#emails#Events

Text

Explore These Exciting DSU Micro Project Ideas

Explore These Exciting DSU Micro Project Ideas Are you a student looking for an interesting micro project to work on? Developing small, self-contained projects is a great way to build your skills and showcase your abilities. At the Distributed Systems University (DSU), we offer a wide range of micro project topics that cover a variety of domains. In this blog post, we’ll explore some exciting DSU…

#3D modeling#agricultural domain knowledge#Android#API design#AR frameworks (ARKit#ARCore)#backend development#best micro project topics#BLOCKCHAIN#Blockchain architecture#Blockchain development#cloud functions#cloud integration#Computer vision#Cryptocurrency protocols#CRYPTOGRAPHY#CSS#data analysis#Data Mining#Data preprocessing#data structure micro project topics#Data Visualization#database integration#decentralized applications (dApps)#decentralized identity protocols#DEEP LEARNING#dialogue management#Distributed systems architecture#distributed systems design#dsu in project management

Text

How to Choose the Right Machine Learning Course for Your Career

As the demand for machine learning professionals continues to surge, choosing the right machine learning course has become crucial for anyone looking to build a successful career in this field. With countless options available, from free online courses to intensive boot camps and advanced degrees, making the right choice can be overwhelming.

#machine learning course#data scientist#AI engineer#machine learning researcher#beginner machine learning course#advanced machine learning course#Python programming#data analysis#machine learning curriculum#supervised learning#unsupervised learning#deep learning#natural language processing#reinforcement learning#online machine learning course#in-person machine learning course#flexible learning#machine learning certification#Coursera machine learning#edX machine learning#Udacity machine learning#machine learning instructor#course reviews#student testimonials#career support#job placement#networking opportunities#alumni network#machine learning bootcamp#degree program

Text

Autopsy of an Artificial Intelligence (Autopsie d'une intelligence artificielle) | ARTE

youtube

#ai#deep learning#documentary#arte tv#europe#france#germany#sécurité des données#world politics#documentaire#français#arte#artificial intelligence#intelligence artificielle#autopsy#autopsie#politique#sociologie#technology#technologie#data privacy#sécurité Internet#internet security#Irl#world is a fuck#world is so beautiful#data analysis#social media#social justice

Text

AI Trading

What is AI and Its Relevance in Modern Trading?

1. Definition of AI

Artificial Intelligence (AI): A branch of computer science focused on creating systems capable of performing tasks that typically require human intelligence. These tasks include learning, reasoning, problem-solving, understanding natural language, and perception.

Machine Learning (ML): A subset of AI that involves the…

#AI and Market Sentiment#AI and Market Trends#AI in Cryptocurrency Markets#AI in Equity Trading#AI in Finance#AI in Forex Markets#AI Trading Strategies#AI-Driven Investment Strategies#AI-Powered Trading Tools#Artificial Intelligence (AI)#Automated Trading Systems#Backtesting Trading Models#Blockchain Technology#Crypto Market Analysis#cryptocurrency trading#Data Quality in Trading#Deep Learning (DL)#equity markets#Event-Driven Trading#Explainable AI (XAI)#Financial Markets#forex trading#Human-AI Collaboration#learn technical analysis#Machine Learning (ML)#Market Volatility#Natural Language Processing (NLP)#Portfolio Optimization#Predictive Analytics in Trading#Predictive Modeling

Text

"average United States contains 1000s of pet tigers in backyards" factoid actualy [sic] just statistical error. average person has 0 tigers on property. Activist Georg, who lives in the U.S. Capitol & makes up over 10,000 each day, has purposefully been spreading disinformation adn [sic] should not have been counted

I have a big mad today, folks. It's a really frustrating one, because years' worth of work has been validated... but the reason for that fucking sucks.

For almost a decade, I've been trying to fact-check the claim that there "are 10,000 to 20,000 pet tigers/big cats in backyards in the United States." I talked to zoo, sanctuary, and private cat people; I looked at legislation, regulation, attack/death/escape incident rates; I read everything I could get my hands on. None of it made sense. None of it lined up. I couldn't find data supporting anything like the population of pet cats being alleged to exist. Some of you might remember the series I published on those findings from 2018 or so under the hashtag #CrouchingTigerHiddenData. I've continued to work on it in the six years since, including publishing a peer-reviewed study that counted all the non-pet big cats in the US (because even though they're regulated, apparently nobody bothered to keep track of those either).

I spent years of my life obsessing over that statistic because it was being used to push for new federal legislation that, while well intentioned, contained language that would create, and has created, real problems for ethical facilities that have big cats. I wrote a comprehensive - 35-page! - analysis of the issues with the then-current version of the Big Cat Public Safety Act in 2020. When the bill was first introduced to Congress in 2013, a lot of groups promoted it by fear mongering: there's so many pet tigers! they could be hidden around every corner! they could escape and attack you! they could come out of nowhere and eat your children!! Tiger King exposed the masses to the idea of "thousands of abused backyard big cats": as a result the messaging around the bill shifted to being welfare-focused, and the law passed in 2022.

The Big Cat Public Safety Act created a registry, and anyone who owned a private cat and wanted to keep it had to join. If they did, they could keep the animal until it passed, as long as they followed certain strictures (no getting more, no public contact, etc). Don’t register and get caught? Cat is seized and major punishment for you. Registering is therefore highly incentivized. That registry closed in June of 2023, and you can now get that registration data via a Freedom of Information Act request.

Guess how many pet big cats were registered in the whole country?

97.

Not tens of thousands. Not thousands. Not even triple digits. 97.

And that isn't even the right number! Ten USDA-licensed facilities registered erroneously. That accounts for 55 of the 97 animals, which leaves us with 42 pet big cats, of all species, in the entire country.

Now, I know that not everyone may have registered. There's probably someone living deep in the woods somewhere with their illegal pet cougar, and at least one random person in Texas has been arrested for trying to sell a cub since the law passed. But - and here's the big thing - even if there are ten times as many hidden cats as registered ones, that's nowhere near ten thousand animals. Obviously, I had some questions.
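For anyone who wants to check the math, here it is as a few lines of Python (matching the other Python posts on this tag). The tenfold multiplier is the hypothetical from the paragraph above; applying it to the 42 private cats rather than all 97 registrations is an assumption. Even applied to all 97 (97 × 11 = 1,067), the total stays far below 10,000.

```python
registered = 97        # big cats in the federal registry when it closed (June 2023)
facility_animals = 55  # registered in error by ten USDA-licensed facilities
private_pets = registered - facility_animals  # cats actually kept as pets

# Hypothetical: assume ten unregistered cats for every registered pet.
hidden_per_registered = 10
upper_estimate = private_pets * (1 + hidden_per_registered)

claimed_low = 10_000  # low end of the "10,000 to 20,000" claim
print(private_pets, upper_estimate, f"{upper_estimate / claimed_low:.1%}")  # 42 462 4.6%
```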

Guess what? Turns out, this is because it was never real. That huge number never had data behind it, wasn't likely to be accurate, and the advocacy groups using that statistic to fearmonger and drive their agenda knew it... and didn't see a problem with that.

Allow me to introduce you to an article published last week.

This article is good. (Full disclosure: I'm quoted in it.) It's comprehensive and fairly written, and they did their due diligence reporting and fact-checking the piece. They talked to a lot of people on all sides of the story.

But the thing that really gets me?

Multiple representatives from major advocacy organizations who worked on the Big Cat Public Safety Act told the reporter that they knew the statistics they were quoting weren't real. And that they don't care. The end justifies the means, the good guys won over the bad guys, that's just how lobbying works after all. They're so blasé about it, it makes my stomach hurt. Let me pull some excerpts from the quotes.

"Whatever the true number, nearly everyone in the debate acknowledges a disparity between the actual census and the figures cited by lawmakers. “The 20,000 number is not real,” said Bill Nimmo, founder of Tigers in America. (...) For his part, Nimmo at Tigers in America sees the exaggerated figure as part of the political process. Prior to the passage of the bill, he said, businesses that exhibited and bred big cats juiced the numbers, too. (...) “I’m not justifying the hyperbolic 20,000,” Nimmo said. “In the world of comparing hyperbole, the good guys won this one.”

"Michelle Sinnott, director and counsel for captive animal law enforcement at the PETA Foundation, emphasized that the law accomplished what it was set out to do. (...) Specific numbers are not what really matter, she said: “Whether there’s one big cat in a private home or whether there’s 10,000 big cats in a private home, the underlying problem of industry is still there.”"

I have no problem with a law ending the private ownership of big cats, and with ending cub petting practices. What I do have a problem with is that these organizations purposefully spread disinformation for years in order to push for it. By their own admission, they repeatedly and intentionally promoted false statistics within Congress. For a decade.

No wonder it never made sense. No wonder no matter where I looked, I couldn't figure out how any of these groups got those numbers, why there was never any data to back any of the claims up, why everything I learned seemed to actively contradict it. It was never real. These people decided the truth didn't matter. They knew they had no proof, couldn't verify their shocking numbers... and they decided that was fine, if it achieved the end they wanted.

So members of the public - probably like you, reading this - and legislators who care about big cats and want to see legislation exist to protect them? They got played, got fed false information through a TV show designed to tug at heartstrings, and it got a law through Congress that's causing real problems for ethical captive big cat management. The 20,000 pet cat number was too sexy - too much of a crisis - for anyone to want to look past it and check that the language of the law wouldn't mess things up for good zoos and sanctuaries. Whoops! At least the "bad guys" lost, right? (The problems are covered somewhat in the article linked, and I'll go into more details in a future post. You can also read my analysis from 2020, linked up top.)

Now, I know. Something something something facts don't matter this much in our post-truth era, stop caring so much, that's just how politics work, etc. I’m sorry, but no. Absolutely not.

Laws that will impact the welfare of living animals must be crafted carefully, thoughtfully, and precisely in order to ensure they achieve their goals without accidental negative impacts. We have a duty of care to ensure that. And in this case, the law also impacts reservoir populations for critically endangered species! We can't get those back if we mess them up. So maybe, just maybe, if legislators hadn't been so focused on all those alleged pet cats, the bill could have been written narrowly and precisely.

But the minutiae of regulatory impacts aren't sexy, and tiger abuse and TV shows about terrible people are. We all got misled, and now we're here, and the animals in good facilities are already paying for it.

I don't have a conclusion. I'm just mad. The public deserves to know the truth about animal legislation they're voting for, and I hope we all call on our legislators in the future to be far more critical of the data they get fed.

#big cats#tiger king#my research#news#big cat public safety act#animal welfare#big cat welfare#legislation and regulation#vent post#long post#crouchingtigerhiddendata#more on the problems with the bill in the future

Text

#AI chatbot#AI ethics specialist#AI jobs#Ai Jobsbuster#AI landscape 2024#AI product manager#AI research scientist#AI software products#AI tools#Artificial Intelligence (AI)#BERT#Clarifai#computational graph#Computer Vision API#Content creation#Cortana#creativity#CRM platform#cybersecurity analyst#data scientist#deep learning#deep-learning framework#DeepMind#designing#distributed computing#efficiency#emotional analysis#Facebook AI research lab#game-playing#Google Duplex

Text

Unlock the power of machine learning for your next survey. XpBrand.AI leverages cutting-edge AI to analyze responses in real-time, identifying trends and patterns as they emerge. Gain deeper understanding, eliminate biases, and receive actionable insights that empower you to make smarter business decisions. Experience the future of surveys with XpBrand.AI's machine learning engine!

#marketresearch#market research#online survey#ai survey#market analysis#market research surveys#immersive survey#product surveys#consumerbehavior#marketing#business#survey#machinelearning#innovation#technology#deep learning#data analytics#businessintelligence#data scientist#javascript#marketing analytics#codinglife#data visualization#information technology#programmers#machine learning#Machine Learning Survey#automation#developerlife#san jose california

Text

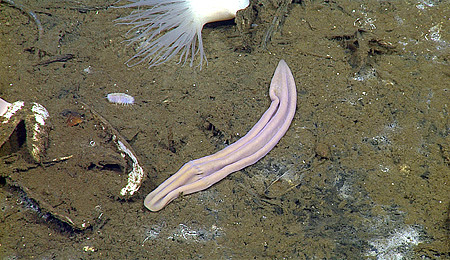

Ok y’all brace yourselves cuz I just learned about a new animal

Yes, that is an animal. Yes, scientists refer to it as the purple sock worm. No, that’s not its real name, silly, its real name is Xenoturbella!

When these deep-sea socks were first discovered, no one knew what the fuck they were looking at (and, really, can you blame them?). They have no eyes, brains, or digestive tracts. They are literally just a bag of wet slop. DNA analysis initially seemed to indicate that they were related to mollusks, until the scientists realized that the DNA sample came from the clams the worms had recently eaten (yes, they can eat with no organs. We don’t know how.)

Scientists then analyzed the data again and tentatively placed them in the group that includes acorn worms, saying that their ancestors probably had eyes, brains, and organs, but simplified as a response to their deep sea ecosystems.

Later DNA testing showed that they are their own thing! Xenoturbella, along with another group of simple, hard-to-place creatures called acoelomorphs, belong to their own phylum called Xenacoelomorpha! This places them as the sister group to all other bilateral animals. So, they just never evolved brains, eyes, or organs. They are a glimpse at a very primitive form of animal that never bothered to change, because apparently what they do works. Rock on, purple sock worm.

Text

How IBM and NASA Are Redefining Geospatial AI to Tackle Climate Challenges

New Post has been published on https://thedigitalinsider.com/how-ibm-and-nasa-are-redefining-geospatial-ai-to-tackle-climate-challenges/

As climate change fuels increasingly severe weather events like floods, hurricanes, droughts, and wildfires, traditional disaster response methods are struggling to keep up. While advances in satellite technology, drones, and remote sensors allow for better monitoring, access to this vital data remains limited to a few organizations, leaving many researchers and innovators without the tools they need. The flood of geospatial data being generated daily has also become a challenge—overwhelming organizations and making it harder to extract meaningful insights. To address these issues, scalable, accessible, and intelligent tools are needed to turn vast datasets into actionable climate insights. This is where geospatial AI becomes vital—an emerging technology that has the potential to analyze large volumes of data, providing more accurate, proactive, and timely predictions. This article explores the groundbreaking collaboration between IBM and NASA to develop advanced, more accessible geospatial AI, empowering a wider audience with the tools necessary to drive innovative environmental and climate solutions.

Why IBM and NASA Are Pioneering Foundation Geospatial AI

Foundation models (FMs) represent a new frontier in AI, designed to learn from vast amounts of unlabeled data and apply their insights across multiple domains. This approach offers several key advantages. Unlike traditional AI models, FMs don’t rely on massive, painstakingly labeled datasets. Instead, they can be fine-tuned on smaller labeled samples, saving both time and resources. This makes them a powerful tool for accelerating climate research, where gathering large datasets can be costly and time-consuming.
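The article doesn't spell out how FMs learn from unlabeled data. One common self-supervised recipe, and the idea behind the masked-autoencoder design the article later describes for Prithvi WxC, is to hide part of each input and train the model to reconstruct it, so the data supplies its own targets. Here is a minimal sketch of just the masking and loss steps (no model), assuming inputs are already split into flat patches; `mask_patches`, `reconstruction_loss`, and the 75% mask ratio are illustrative choices, not IBM/NASA settings.

```python
import random
from typing import List, Optional, Tuple

Patch = List[float]


def mask_patches(patches: List[Patch], mask_ratio: float = 0.75,
                 seed: Optional[int] = None) -> Tuple[List[Optional[Patch]], List[int]]:
    """Hide a random subset of patches; return the masked input and the hidden indices."""
    rng = random.Random(seed)
    n_hidden = int(len(patches) * mask_ratio)
    hidden = sorted(rng.sample(range(len(patches)), n_hidden))
    hidden_set = set(hidden)
    # None marks a hidden patch; the encoder would only see the visible ones.
    masked = [None if i in hidden_set else p for i, p in enumerate(patches)]
    return masked, hidden


def reconstruction_loss(predicted: List[Patch], target: List[Patch],
                        hidden: List[int]) -> float:
    """Mean squared error over the hidden patches only (the self-supervised target)."""
    errors = [(p - t) ** 2
              for i in hidden
              for p, t in zip(predicted[i], target[i])]
    return sum(errors) / len(errors) if errors else 0.0


# Eight toy "patches" of four values each (e.g. pixels from a small image tile).
patches = [[float(i)] * 4 for i in range(8)]
masked, hidden = mask_patches(patches, mask_ratio=0.75, seed=0)
print(len(hidden), "of", len(patches), "patches hidden:", hidden)
# A perfect reconstruction scores zero on the hidden patches.
print(reconstruction_loss(patches, patches, hidden))  # 0.0
```

Fine-tuning then reuses the pre-trained encoder with a small task-specific head, which is why far fewer labeled examples are needed.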

Moreover, FMs streamline the development of specialized applications, reducing redundant effort. For example, once an FM is trained, it can be adapted to several downstream applications, such as monitoring natural disasters or tracking land use, without extensive retraining. Although the initial training process can demand significant computational power, requiring tens of thousands of GPU hours, a trained model can run inference in minutes or even seconds.

Additionally, FMs could make advanced weather models accessible to a wider audience. Previously, only well-funded institutions with the resources to support complex infrastructure could run these models. However, with the rise of pre-trained FMs, climate modeling is now within reach for a broader group of researchers and innovators, opening up new avenues for faster discoveries and innovative environmental solutions.

The Genesis of Foundation Geospatial AI

The vast potential of FMs has led IBM and NASA to collaborate on building a comprehensive FM of the Earth’s environment. The key objective of this partnership is to empower researchers to extract insights from NASA’s extensive Earth datasets in a manner that is both effective and accessible.

In this pursuit, they achieved a significant breakthrough in August 2023 with the unveiling of a pioneering FM for geospatial data. This model was trained on NASA’s vast satellite dataset, comprising a 40-year archive of images from the Harmonized Landsat Sentinel-2 (HLS) program. It uses advanced AI techniques, including transformer architectures, to efficiently process substantial volumes of geospatial data. Developed using IBM’s Cloud Vela supercomputer and the watsonx FM stack, the HLS model can analyze data up to four times faster than traditional deep learning models while requiring significantly less labeled data for training.

The potential applications of this model are extensive, ranging from monitoring land use changes and natural disasters to predicting crop yields. Importantly, this powerful tool is freely available on Hugging Face, allowing researchers and innovators worldwide to utilize its capabilities and contribute to the advancement of climate and environmental science.

Advances in Foundation Geospatial AI

Building on this momentum, IBM and NASA have recently introduced another groundbreaking open-source FM: Prithvi WxC. This model is designed to address both short-term weather challenges and long-term climate predictions. Pre-trained on 40 years of NASA’s Earth observation data from the Modern-Era Retrospective analysis for Research and Applications, Version 2 (MERRA-2), the FM offers significant advancements over traditional forecasting models.

The model is built using a vision transformer and a masked autoencoder, enabling it to encode spatial data over time. By incorporating a temporal attention mechanism, the FM can analyze MERRA-2 reanalysis data, which integrates various observational streams. The model can operate on both a spherical surface, like traditional climate models, and a flat, rectangular grid, allowing it to change between global and regional views without losing resolution.
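To make "temporal attention" concrete, here is a generic scaled dot-product attention over time steps: each past step's value is weighted by softmax(q · kᵢ / √d), where q is the current query, kᵢ the key for step i, and d the vector length. This is a simplified sketch, not Prithvi WxC's implementation. Learned query/key/value projections, multiple heads, and batching are omitted, and the function names and toy vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(query: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention of one query over a sequence of time steps.

    keys[i] and values[i] describe the same location at time step i; the result
    is a weighted mix of the values, weighted by query-key similarity.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))]


# Hypothetical 2-D features for one grid cell at three past time steps.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]  # features of the current state
print(temporal_attention(query, keys, values))
```

Time steps whose keys resemble the query get larger weights, so the output leans toward the most relevant past states rather than a plain average.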

This architecture enables Prithvi WxC to be fine-tuned across global, regional, and local scales while running on a standard desktop computer in seconds. The model can be employed for a range of applications, from forecasting local weather and predicting extreme weather events to enhancing the spatial resolution of global climate simulations and refining the representation of physical processes in conventional models. Additionally, Prithvi WxC comes with two fine-tuned versions designed for specific scientific and industrial uses, providing even greater precision for environmental analysis. The model is freely available on Hugging Face.

The Bottom Line

IBM and NASA’s partnership is redefining geospatial AI, making it easier for researchers and innovators to address pressing climate challenges. By developing foundation models that can effectively analyze large datasets, this collaboration enhances our ability to predict and manage severe weather events. More importantly, it opens the door for a wider audience to access these powerful tools, previously limited to well-resourced institutions. As these advanced AI models become accessible to more people, they pave the way for innovative solutions that can help us respond to climate change more effectively and responsibly.

#2023#ai#AI models#Analysis#applications#approach#architecture#Article#Artificial Intelligence#attention#attention mechanism#Building#challenge#change#climate#Climate Challenges#climate change#climate models#climate research#Cloud#collaborate#Collaboration#comprehensive#computer#Crop Yields#data#datasets#Deep Learning#desktop#development

Tic Tac Toe Game In Python

This is my first small Python project: a tic-tac-toe game. Most of us have played it countless times in class while sitting on the last bench, and some of us on the first bench too. It is a very famous game, and once this project is complete, we can play it with our friends using the program we have built.

Here's a complete guide to the Tic-tac-toe game in Python!
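The guide's own code is not reproduced here, so the following is only a minimal sketch of the core game logic, assuming a 3x3 board stored as a flat list of nine cells (indices 0 to 8). The function names and the scripted `play` helper are illustrative choices, not taken from the linked guide.

```python
# All eight ways to get three in a row on a 3x3 board, as flat indices 0..8.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def new_board():
    """An empty board: nine blank cells."""
    return [" "] * 9


def render(board):
    """Return the board as text, one row per line."""
    rows = [" | ".join(board[i:i + 3]) for i in (0, 3, 6)]
    return "\n---------\n".join(rows)


def make_move(board, cell, player):
    """Place the player's mark, rejecting out-of-range or occupied cells."""
    if not 0 <= cell < 9:
        raise ValueError("cell must be between 0 and 8")
    if board[cell] != " ":
        raise ValueError("cell already taken")
    board[cell] = player


def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in WIN_LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def is_draw(board):
    """A draw means the board is full and nobody has won."""
    return winner(board) is None and " " not in board


def play(moves):
    """Play a scripted list of cells, alternating X and O; return (board, winner or None)."""
    board = new_board()
    player = "X"
    for cell in moves:
        make_move(board, cell, player)
        if winner(board):
            return board, player
        player = "O" if player == "X" else "X"
    return board, None


if __name__ == "__main__":
    final_board, result = play([0, 3, 1, 4, 2])
    print(render(final_board))
    print("Winner:", result)
```

To play with a friend, the scripted `moves` list can be replaced by a loop that reads each cell number with `input()` and calls `make_move` until `winner` or `is_draw` reports the game is over.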

#datascience #dataanalytics #dataanalysis #statistics #machinelearning #python #deeplearning #supervisedlearning #unsupervisedlearning

#machine learning#data analysis#data science#artificial intelligence#data analytics#deep learning#python#statistics#unsupervised learning#feature selection

Best Data Analytics Course in Delhi NCR

Best Data Analytics Institute in Delhi NCR

In the era of digital transformation, the demand for skilled data analysts has skyrocketed, opening doors to exciting career prospects. At School of Core AI (SCAI), we recognize the power of data analytics and have tailored a cutting-edge program to equip individuals with the skills needed to thrive in this dynamic field.

The specific content and structure might vary, and it's advisable to check the official course descriptions provided by the School of Core AI (SCAI) for the most accurate information.

Data Analytics Career Program

Course Modules:

1. Basic Python:

Introduction

Data Manipulation and Cleaning with Pandas

Exploratory Data Analysis (EDA)

2. Advanced Python:

Advanced Data Manipulation and Transformation.

Data visualization and Interactive Dashboards

Advanced Machine Learning Algorithms and Techniques

3. Database:

Introduction to Database for Data Science

Data Retrieval and Manipulation with SQL

Database Design and Data Modelling

4. Neural Network Basics:

Linear Algebra

Calculus

Probability and Statistics

5. Exploratory Data Analysis (EDA):

Data Collection

Data Cleaning and Preprocessing

EDA

6. Basic Machine Learning:

Data Preparation

Feature Selection

Model Selection and Training

7. Power BI:

Data Connection and Transformation

Data Visualization

8. Microsoft Excel and Google Sheets:

Data Management

Charts, Graphics, and Pivot Tables

Multiple Techniques

9. Industry Applications and Case Studies:

Analyzing complex industry-specific datasets.

Case studies from various domains like finance, healthcare, and marketing.

10. Capstone Project:

Comprehensive project integrating advanced analytics skills.

Presenting findings and insights in a professional manner.

Short-term program: 2 months, Rs. 3,500/-

Advanced option: Mastering in Advanced Data Analytics course, 6 months, Rs. 30,000/-

Institute Facilities:

Classrooms and Labs:

Equipped with necessary technology for lectures, presentations, and hands-on exercises.

Learning Materials:

Textbooks, online resources, and other learning materials that support the course curriculum.

Software and Tools:

Access to relevant software and tools used in data analytics, such as statistical analysis software, programming environments (like Python or R), and data visualization tools.

Library Access:

A well-stocked library with books, journals, and online databases related to data analytics and related fields.

Online Learning Platforms:

Access to online platforms for course materials, assignments, and communication with instructors and fellow students.

Tutoring and Support Services:

Availability of tutors or teaching assistants for additional help and support.

Networking Opportunities:

Events, workshops, or seminars where students can network with professionals and experts in the field.

Career Services:

100% guaranteed placement, internships, career guidance related to data analytics, and job recommendations at top AI companies for our students.

Infrastructure for Virtual Learning:

Online meeting platforms, discussion forums, and other tools to facilitate virtual learning and collaboration.

Our best trainers offer FREE DEMO CLASSES for all students and working professionals.

#python#datascience#dataanalytics#course#institute#machine learning#deep learning#market analysis#data#software#career

Greetings, aspiring data scientists!

In 2024, start your journey as a fresher by becoming proficient in the fundamentals. Take introductory classes in statistics, mathematics, and programming languages such as Python. Explore data visualization platforms and tools to improve your analytical abilities. Work on practical projects and connect with data science communities to stay current with industry developments. Develop an inquisitive mindset: in this ever-changing field, learning never stops. Build a compelling portfolio that highlights your work, and remember the importance of good communication, since the ability to explain complicated results in an understandable way is a valuable skill. Lastly, look for internships or part-time jobs to gain real-world experience and expand your professional network. I wish you well on your fascinating journey into data science!

Let's Connect on LinkedIn

#data science#python#data analytics#data scientist#data analysis#ai#deep learning#machine learning#education

Key Industries & Applications in the Global Deep Learning Market

Over the years, deep learning has emerged as a transformative technology, revolutionizing the way various industries operate. Accordingly, the domain has become instrumental in the field of data analysis, predictive modeling, and process optimization. As per Inkwood Research, the global deep learning market is anticipated to grow with a CAGR of 39.67% during the forecast period 2023-2032.
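A compound annual growth rate (CAGR) compounds year over year, so the end value is start × (1 + CAGR)^years. The short sketch below shows what a 39.67% CAGR implies as a total growth multiple, and how to recover a CAGR from start and end values. It assumes the 2023–2032 forecast period means nine annual compounding steps from a 2023 base; reports sometimes count periods differently, and no market-size figures are assumed.

```python
def growth_multiple(cagr, years):
    """Total growth factor after compounding `cagr` (e.g. 0.3967 for 39.67%) for `years` periods."""
    return (1 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Invert the compounding formula: CAGR = (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Assumption: 2023 base year, 9 compounding steps to 2032.
    multiple = growth_multiple(0.3967, 9)
    print(f"{multiple:.1f}x")  # prints "20.2x"
```

Under that reading, the forecast implies the market growing to roughly twenty times its base-year size, which shows how quickly a high CAGR compounds.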

In this blog, we will explore how major industries are leveraging the potential of deep learning in order to drive innovation and efficiency in their operations. We will also highlight real-world examples of successful deep learning applications in each of these industries.

1. Healthcare

Healthcare organizations of all specialties and types are increasingly interested in how artificial intelligence (AI) can facilitate better patient care while improving efficiency and reducing costs. In this regard, the healthcare industry has been at the forefront of adopting deep learning for a wide range of applications, such as –

Medical Imaging: Deep learning algorithms have significantly improved the accuracy of medical image analysis. For instance, Aidoc (Israel), a leading provider of AI-powered radiology solutions, aims to improve the efficacy as well as accuracy of radiology diagnoses. The company’s platform utilizes deep learning algorithms in order to analyze medical images and aid radiologists in prioritizing and detecting critical findings.

Drug Discovery: Pharmaceutical companies are using deep learning to expedite drug discovery processes. Insilico Medicine (United States), for example, employs deep learning models to predict potential drug candidates, reducing the time and cost associated with developing new medications. The company’s early bet on deep learning is yielding significant results – a drug candidate discovered via its AI platform is now entering Phase II clinical trials for the treatment of idiopathic pulmonary fibrosis.

Disease Diagnosis: Deep learning is aiding in disease diagnosis through predictive modeling. PathAI (United States), for instance, utilizes deep learning-based AI solutions to assist pathologists in the detection, diagnosis, and prognosis of several cancer subtypes, thus improving the accuracy of diagnoses and patient outcomes.

2. Finance & Banking Services

The success of deep learning as a data processing technique has piqued the interest of the financial research community. Moreover, with the proliferation of Fintech over recent years, the use of deep learning in the finance & banking services industry has become highly prevalent across the following applications –

Fraud Detection: Banks and financial institutions deploy deep learning models to detect fraudulent activities in real time. Companies like Feedzai (Portugal) use deep learning algorithms to analyze transaction data and identify unusual patterns indicative of fraud. In July 2023, the company announced the launch of Railgun, a next-generation fraud detection engine, featuring advanced AI to secure millions from the surge in financial crime.

Algorithmic Trading: Hedge funds and trading firms leverage deep learning for algorithmic trading, using deep learning models to analyze and forecast stock and foreign exchange markets.

Credit Scoring: Deep learning is transforming the credit scoring landscape. LenddoEFL (Singapore), for instance, creates, collects, and analyzes information from consent-based alternative data sources for an accurate understanding of creditworthiness. The company’s unique credit decisioning tools draw from large, diverse, and unstructured data sources through deep learning, artificial intelligence, and advanced modeling techniques.

3. Manufacturing

Here’s how the manufacturing industry benefits from deep learning in optimizing production processes and quality control –

Predictive Maintenance: Manufacturers use deep learning to predict equipment failures and schedule maintenance proactively. General Electric’s (United States) Predix platform employs deep learning to support innovative IoT solutions to help reduce downtime and maintenance costs.

Quality Control: Deep learning-based image recognition systems inspect products for defects on production lines. Real-time deep learning approaches are essential for automated manufacturing processes, where vision-based systems check fabrication quality against specific structural designs.

Supply Chain Optimization: According to industry sources, deep learning models have the potential to generate between $1-2 trillion annually in supply chain management. In this regard, deep learning is used in the manufacturing sector to optimize supply chain operations by predicting demand patterns, enhancing inventory levels, and improving logistics planning.

Deep learning’s ability to process vast amounts of data and recognize complex patterns is transforming the way industries operate, making processes more efficient and enhancing customer experiences. As businesses strive to remain competitive in an increasingly data-driven world, leveraging deep learning and staying updated on its latest developments will be crucial for the overall growth of the global deep learning market.

FAQs:

What are the key components of a deep learning system?

A deep learning system typically comprises input data, a deep neural network architecture, a loss function, an optimization algorithm, and, for supervised learning, labeled training data.
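To make those components concrete, the pure-Python sketch below wires them together on a toy dataset: labeled training data (logical AND), a model, a loss function (binary cross-entropy), and an optimizer (batch gradient descent). To keep it short, a single sigmoid unit stands in for a deep neural network, so this is really logistic regression rather than deep learning proper. The dataset, learning rate, and epoch count are illustrative assumptions.

```python
import math
import random

# Labeled training data: (inputs, target) pairs for logical AND.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(params, x):
    """Model: one sigmoid unit, standing in for a deep network."""
    w1, w2, b = params
    return sigmoid(w1 * x[0] + w2 * x[1] + b)


def bce_loss(params, data):
    """Loss function: mean binary cross-entropy over the labeled examples."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for x, y in data:
        p = predict(params, x)
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)


def train(data, lr=1.0, epochs=2000, seed=0):
    """Optimization algorithm: plain batch gradient descent on the BCE loss."""
    rng = random.Random(seed)
    params = [rng.uniform(-0.5, 0.5) for _ in range(3)]
    for _ in range(epochs):
        grads = [0.0, 0.0, 0.0]
        for x, y in data:
            err = predict(params, x) - y  # dLoss/dz for a sigmoid output with BCE
            grads[0] += err * x[0]
            grads[1] += err * x[1]
            grads[2] += err
        params = [p - lr * g / len(data) for p, g in zip(params, grads)]
    return params


if __name__ == "__main__":
    trained = train(DATA)
    for x, y in DATA:
        print(x, "target", y, "prediction", round(predict(trained, x), 3))
    print("final loss", round(bce_loss(trained, DATA), 4))
```

In a real deep learning system, the model would stack many layers, and the gradients would come from automatic differentiation in a framework rather than being written out by hand.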

How does deep learning differ from traditional machine learning?

Deep learning, unlike traditional machine learning, eliminates the need for manual feature engineering by allowing models to learn and extract features automatically from data.
