#Machine Learning (ML)


Oh my lord. Minecraft Story Mode really predicts Apple Intelligence. We're going to be utilized in the singularity.

(I'm a DS student btw -mystmin)

#mcsm#minecraft story mode#minecraft freaky mode#mcsm pama#machine learning#ml#apple#apple intelligence

24 notes


Uuh… so I heard tumblr doesn’t like AI generated art so I made my own, enjoy!

(this is in fact a judgement free zone btw)

37 notes


Quantum Computing 101: What are Qubits?

Curious about quantum computing? Let's break it down!

🔍 What’s a Qubit? A qubit is the basic unit of quantum information. Unlike a classical bit, which is always 0 or 1, a qubit can be in a superposition: a weighted combination of 0 and 1. Measuring it still gives just 0 or 1, with probabilities set by those weights.

✨ Why Is This Cool?

Superposition: Lets a quantum computer work with a combination of many possible states at once.

Entanglement: Qubits can be linked so that their measurement results are correlated, no matter the distance (though this can't be used to send messages).

⚙️ In Action: Quantum algorithms combine superposition, entanglement, and interference to solve certain problems, like factoring large numbers, much faster than the best known classical methods. They don't simply try every answer at once!
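💻 Try It: Here's a tiny sketch of what measuring a qubit looks like, simulated in plain Python. The `measure` helper and the equal-weight amplitudes are assumptions picked for illustration; real quantum hardware isn't programmed this way.

```python
import math
import random


def measure(alpha, beta, rng):
    """Simulate measuring the qubit alpha|0> + beta|1>; returns 0 or 1."""
    probability_of_zero = abs(alpha) ** 2
    return 0 if rng.random() < probability_of_zero else 1


# Equal superposition: both outcomes have probability 1/2.
alpha = beta = 1 / math.sqrt(2)
assert math.isclose(abs(alpha) ** 2 + abs(beta) ** 2, 1.0)

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1

print(counts)  # roughly half zeros and half ones
```

Each single measurement gives a plain 0 or 1; the superposition only shows up in the statistics over many runs.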

Follow for more insights on the future of tech! 🚀✨

Instagram: cs_learninghub YT: CS Learning Hub

#quantum computing#quantum#science#physics#computer science#bits#tumblr#aesthetic#studyblr#study#study motivation#qubits#machine learning#artificial intelligence#ai#ml#cs#learn#study blog

21 notes


they're shaking hands honest

(ai/machine learning generated animations)

14 notes


the good thing is that I think I finally found the final focus for my thesis. the bad thing is that it’s 2AM and I got so much adrenaline from that that I will never sleep

#also I still. somehow. after fighting it at every turn! managed to make it be partly about machine learning hOw#there’s just no escape from AI/ML/DL is there (I tried to stay away from that bc ohmg it’s everywhere and I’ve been getting increasingly#more annoyed every time I see the words AI somewhere (also often used so wildly incorrectly bc of it’s a trend rn))#but yeah it’s alright at least that’s not the sole focus#ba thesis struggle diary#2024#march 2024

26 notes


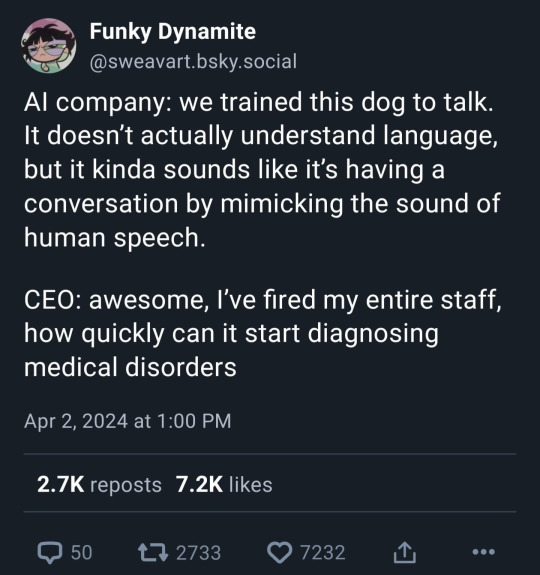

Truth speaking on the corporate obsession with AI

Hilarious. Something tells me this person's on the hellsite(affectionate)

#ai#corporate bs#late stage capitalism#funny#truth#artificial intelligence#ml#machine learning#data science#data scientist#programming#scientific programming

8 notes


PGDM Specialization in AI & ML: Preparing for the Future of Business and Technology

#PG Diploma in AI and ML#Artificial Intelligence#Machine Learning#AI and ML in Business#AI and ML Career Opportunities#Career in Artificial Intelligence#Business and Technology#PGDM in AI and ML

2 notes


The AI hype bubble is the new crypto hype bubble

Back in 2017, Long Island Iced Tea, known for its undistinguished, barely drinkable sugar-water, changed its name to “Long Blockchain Corp.” Its shares surged to a peak of 400% over their pre-announcement price. The company announced no specific integrations with any kind of blockchain, nor has it made any such integrations since.

If you’d like an essay-formatted version of this post to read or share, here’s a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/03/09/autocomplete-worshippers/#the-real-ai-was-the-corporations-that-we-fought-along-the-way

LBCC was subsequently delisted from NASDAQ after settling with the SEC over fraudulent investor statements. Today, the company trades over the counter and its market cap is $36m, down from $138m.

https://cointelegraph.com/news/textbook-case-of-crypto-hype-how-iced-tea-company-went-blockchain-and-failed-despite-a-289-percent-stock-rise

The most remarkable thing about this incredibly stupid story is that LBCC wasn’t the peak of the blockchain bubble — rather, it was the start of blockchain’s final pump-and-dump. By the standards of 2022’s blockchain grifters, LBCC was small potatoes, a mere $138m sugar-water grift.

They didn’t have any NFTs, no wash trades, no ICO. They didn’t have a Superbowl ad. They didn’t steal billions from mom-and-pop investors while proclaiming themselves to be “Effective Altruists.” They didn’t channel hundreds of millions to election campaigns through straw donations and other forms of campaign finance fraud. They didn’t even open a crypto-themed hamburger restaurant where you couldn’t buy hamburgers with crypto:

https://robbreport.com/food-drink/dining/bored-hungry-restaurant-no-cryptocurrency-1234694556/

They were amateurs. Their attempt to “make fetch happen” only succeeded for a brief instant. By contrast, the superpredators of the crypto bubble were able to make fetch happen over an improbably long timescale, deploying the most powerful reality distortion fields since Pets.com.

Anything that can’t go on forever will eventually stop. We’re told that trillions of dollars’ worth of crypto has been wiped out over the past year, but these losses are nowhere to be seen in the real economy — because the “wealth” that was wiped out by the crypto bubble’s bursting never existed in the first place.

Like any Ponzi scheme, crypto was a way to separate normies from their savings through the pretense that they were “investing” in a vast enterprise — but the only real money (“fiat” in cryptospeak) in the system was the hardscrabble retirement savings of working people, which the bubble’s energetic inflaters swapped for illiquid, worthless shitcoins.

We’ve stopped believing in the illusory billions. Sam Bankman-Fried is under house arrest. But the people who gave him money — and the nimbler Ponzi artists who evaded arrest — are looking for new scams to separate the marks from their money.

Take Morgan Stanley, who spent 2021 and 2022 hyping cryptocurrency as a massive growth opportunity:

https://cointelegraph.com/news/morgan-stanley-launches-cryptocurrency-research-team

Today, Morgan Stanley wants you to know that AI is a $6 trillion opportunity.

They’re not alone. The CEOs of Endeavor, Buzzfeed, Microsoft, Spotify, Youtube, Snap, Sports Illustrated, and CAA are all out there, pumping up the AI bubble with every hour that god sends, declaring that the future is AI.

https://www.hollywoodreporter.com/business/business-news/wall-street-ai-stock-price-1235343279/

Google and Bing are locked in an arms-race to see whose search engine can attain the speediest, most profound enshittification via chatbot, replacing links to web-pages with florid paragraphs composed by fully automated, supremely confident liars:

https://pluralistic.net/2023/02/16/tweedledumber/#easily-spooked

Blockchain was a solution in search of a problem. So is AI. Yes, Buzzfeed will be able to reduce its wage-bill by automating its personality quiz vertical, and Spotify’s “AI DJ” will produce slightly less terrible playlists (at least, to the extent that Spotify doesn’t put its thumb on the scales by inserting tracks into the playlists whose only fitness factor is that someone paid to boost them).

But even if you add all of this up, double it, square it, and add a billion dollar confidence interval, it still doesn’t add up to what Bank Of America analysts called “a defining moment — like the internet in the ’90s.” For one thing, the most exciting part of the “internet in the ‘90s” was that it had incredibly low barriers to entry and wasn’t dominated by large companies — indeed, it had them running scared.

The AI bubble, by contrast, is being inflated by massive incumbents, whose excitement boils down to “This will let the biggest companies get much, much bigger and the rest of you can go fuck yourselves.” Some revolution.

AI has all the hallmarks of a classic pump-and-dump, starting with terminology. AI isn’t “artificial” and it’s not “intelligent.” “Machine learning” doesn’t learn. On this week’s Trashfuture podcast, they made an excellent (and profane and hilarious) case that ChatGPT is best understood as a sophisticated form of autocomplete — not our new robot overlord.

https://open.spotify.com/episode/4NHKMZZNKi0w9mOhPYIL4T

We all know that autocomplete is a decidedly mixed blessing. Like all statistical inference tools, autocomplete is profoundly conservative: it wants you to do the same thing tomorrow as you did yesterday (that’s why “sophisticated” ad retargeting shows you ads for shoes in response to your search for shoes). If the word you type after “hey” is usually “hon” then the next time you type “hey,” autocomplete will be ready to fill in your typical following word, even if this time you want to type “hey stop texting me you freak”:

https://blog.lareviewofbooks.org/provocations/neophobic-conservative-ai-overlords-want-everything-stay/
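To make the “hey hon” mechanism concrete, here is a toy next-word predictor. It is a sketch under loud assumptions: the `train` and `suggest` helpers and the three-message history are invented for illustration, and real keyboards and chatbots use far larger models. The underlying move, counting what usually came next and offering it again, is the same.

```python
from collections import Counter, defaultdict


def train(messages):
    """Count which word follows each word across past messages."""
    following = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical message history, invented for this example.
history = ["hey hon", "hey hon how are you", "hey stop texting me you freak"]
model = train(history)
print(suggest(model, "hey"))  # prints "hon": yesterday's habit wins
```

The one message that breaks the pattern is outvoted by the two that follow it, which is the conservatism in miniature.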

And when autocomplete encounters a new input — when you try to type something you’ve never typed before — it tries to get you to finish your sentence with the statistically median thing that everyone would type next, on average. Usually that produces something utterly bland, but sometimes the results can be hilarious. Back in 2018, I started to text our babysitter with “hey are you free to sit” only to have Android finish the sentence with “on my face” (not something I’d ever typed!):

https://mashable.com/article/android-predictive-text-sit-on-my-face

Modern autocomplete can produce long passages of text in response to prompts, but it is every bit as unreliable as 2018 Android SMS autocomplete, as Alexander Hanff discovered when ChatGPT informed him that he was dead, even generating a plausible URL for a link to a nonexistent obit in The Guardian:

https://www.theregister.com/2023/03/02/chatgpt_considered_harmful/

Of course, the carnival barkers of the AI pump-and-dump insist that this is all a feature, not a bug. If autocomplete says stupid, wrong things with total confidence, that’s because “AI” is becoming more human, because humans also say stupid, wrong things with total confidence.

Exhibit A is the billionaire AI grifter Sam Altman, CEO of OpenAI, a company whose products are not open, nor are they artificial, nor are they intelligent. Altman celebrated the release of ChatGPT by tweeting “i am a stochastic parrot, and so r u.”

https://twitter.com/sama/status/1599471830255177728

This was a dig at the “stochastic parrots” paper, a comprehensive, measured roundup of criticisms of AI that led Google to fire Timnit Gebru, a respected AI researcher, for having the audacity to point out the Emperor’s New Clothes:

https://www.technologyreview.com/2020/12/04/1013294/google-ai-ethics-research-paper-forced-out-timnit-gebru/

Gebru’s co-author on the Parrots paper was Emily M Bender, a computational linguistics specialist at UW, who is one of the best-informed and most damning critics of AI hype. You can get a good sense of her position from Elizabeth Weil’s New York Magazine profile:

https://nymag.com/intelligencer/article/ai-artificial-intelligence-chatbots-emily-m-bender.html

Bender has made many important scholarly contributions to her field, but she is also famous for her rules of thumb, which caution her fellow scientists not to get high on their own supply:

Please do not conflate word form and meaning

Mind your own credulity

As Bender says, we’ve made “machines that can mindlessly generate text, but we haven’t learned how to stop imagining the mind behind it.” One potential tonic against this fallacy is to follow an Italian MP’s suggestion and replace “AI” with “SALAMI” (“Systematic Approaches to Learning Algorithms and Machine Inferences”). It’s a lot easier to keep a clear head when someone asks you, “Is this SALAMI intelligent? Can this SALAMI write a novel? Does this SALAMI deserve human rights?”

Bender’s most famous contribution is the “stochastic parrot,” a construct that “just probabilistically spits out words.” AI bros like Altman love the stochastic parrot, and are hellbent on reducing human beings to stochastic parrots, which will allow them to declare that their chatbots have feature-parity with human beings.

At the same time, Altman and Co are strangely afraid of their creations. It’s possible that this is just a shuck: “I have made something so powerful that it could destroy humanity! Luckily, I am a wise steward of this thing, so it’s fine. But boy, it sure is powerful!”

They’ve been playing this game for a long time. People like Elon Musk (an investor in OpenAI, who is hoping to convince the EU Commission and FTC that he can fire all of Twitter’s human moderators and replace them with chatbots without violating EU law or the FTC’s consent decree) keep warning us that AI will destroy us unless we tame it.

There’s a lot of credulous repetition of these claims, and not just by AI’s boosters. AI critics are also prone to engaging in what Lee Vinsel calls criti-hype: criticizing something by repeating its boosters’ claims without interrogating them to see if they’re true:

https://sts-news.medium.com/youre-doing-it-wrong-notes-on-criticism-and-technology-hype-18b08b4307e5

There are better ways to respond to Elon Musk warning us that AIs will emulsify the planet and use human beings for food than to shout, “Look at how irresponsible this wizard is being! He made a Frankenstein’s Monster that will kill us all!” Like, we could point out that of all the things Elon Musk is profoundly wrong about, he is most wrong about the philosophical meaning of Wachowski movies:

https://www.theguardian.com/film/2020/may/18/lilly-wachowski-ivana-trump-elon-musk-twitter-red-pill-the-matrix-tweets

But even if we take the bros at their word when they proclaim themselves to be terrified of “existential risk” from AI, we can find better explanations by seeking out other phenomena that might be triggering their dread. As Charlie Stross points out, corporations are Slow AIs, autonomous artificial lifeforms that consistently do the wrong thing even when the people who nominally run them try to steer them in better directions:

https://media.ccc.de/v/34c3-9270-dude_you_broke_the_future

Imagine the existential horror of an ultra-rich manbaby who nominally leads a company, but can’t get it to follow: “everyone thinks I’m in charge, but I’m actually being driven by the Slow AI, serving as its sock puppet on some days, its golem on others.”

Ted Chiang nailed this back in 2017 (the same year of the Long Island Blockchain Company):

There’s a saying, popularized by Fredric Jameson, that it’s easier to imagine the end of the world than to imagine the end of capitalism. It’s no surprise that Silicon Valley capitalists don’t want to think about capitalism ending. What’s unexpected is that the way they envision the world ending is through a form of unchecked capitalism, disguised as a superintelligent AI. They have unconsciously created a devil in their own image, a boogeyman whose excesses are precisely their own.

https://www.buzzfeednews.com/article/tedchiang/the-real-danger-to-civilization-isnt-ai-its-runaway

Chiang is still writing some of the best critical work on “AI.” His February article in the New Yorker, “ChatGPT Is a Blurry JPEG of the Web,” was an instant classic:

[AI] hallucinations are compression artifacts, but — like the incorrect labels generated by the Xerox photocopier — they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our own knowledge of the world.

https://www.newyorker.com/tech/annals-of-technology/chatgpt-is-a-blurry-jpeg-of-the-web

“AI” is practically purpose-built for inflating another hype-bubble, excelling as it does at producing party-tricks — plausible essays, weird images, voice impersonations. But as Princeton’s Matthew Salganik writes, there’s a world of difference between “cool” and “tool”:

https://freedom-to-tinker.com/2023/03/08/can-chatgpt-and-its-successors-go-from-cool-to-tool/

Nature can claim “conversational AI is a game-changer for science” but “there is a huge gap between writing funny instructions for removing food from home electronics and doing scientific research.” Salganik tried to get ChatGPT to help him with the most banal of scholarly tasks — aiding him in peer reviewing a colleague’s paper. The result? “ChatGPT didn’t help me do peer review at all; not one little bit.”

The criti-hype isn’t limited to ChatGPT, of course — there’s plenty of (justifiable) concern about image and voice generators and their impact on creative labor markets, but that concern is often expressed in ways that amplify the self-serving claims of the companies hoping to inflate the hype machine.

One of the best critical responses to the question of image- and voice-generators comes from Kirby Ferguson, whose final Everything Is a Remix video is a superb, visually stunning, brilliantly argued critique of these systems:

https://www.youtube.com/watch?v=rswxcDyotXA

One area where Ferguson shines is in thinking through the copyright question — is there any right to decide who can study the art you make? Except in some edge cases, these systems don’t store copies of the images they analyze, nor do they reproduce them:

https://pluralistic.net/2023/02/09/ai-monkeys-paw/#bullied-schoolkids

For creators, the important material question raised by these systems is economic, not creative: will our bosses use them to erode our wages? That is a very important question, and as far as our bosses are concerned, the answer is a resounding yes.

Markets value automation primarily because automation allows capitalists to pay workers less. The textile factory owners who purchased automatic looms weren’t interested in giving their workers raises and shortening their working days. They wanted to fire their skilled workers and replace them with small children kidnapped out of orphanages and indentured for a decade, starved and beaten and forced to work, even after they were mangled by the machines. Fun fact: Oliver Twist was based on the bestselling memoir of Robert Blincoe, a child who survived his decade of forced labor:

https://www.gutenberg.org/files/59127/59127-h/59127-h.htm

Today, voice actors sitting down to record for games companies are forced to begin each session with “My name is ______ and I hereby grant irrevocable permission to train an AI with my voice and use it any way you see fit.”

https://www.vice.com/en/article/5d37za/voice-actors-sign-away-rights-to-artificial-intelligence

Let’s be clear here: there is — at present — no firmly established copyright over voiceprints. The “right” that voice actors are signing away as a non-negotiable condition of doing their jobs for giant, powerful monopolists doesn’t even exist. When a corporation makes a worker surrender this right, they are betting that this right will be created later in the name of “artists’ rights” — and that they will then be able to harvest this right and use it to fire the artists who fought so hard for it.

There are other approaches to this. We could support the US Copyright Office’s position that machine-generated works are not works of human creative authorship and are thus not eligible for copyright — so if corporations wanted to control their products, they’d have to hire humans to make them:

https://www.theverge.com/2022/2/21/22944335/us-copyright-office-reject-ai-generated-art-recent-entrance-to-paradise

Or we could create collective rights that belong to all artists and can’t be signed away to a corporation. That’s how the right to record other musicians’ songs works, and it’s why Taylor Swift was able to re-record the masters that were sold out from under her by evil private-equity bros:

https://doctorow.medium.com/united-we-stand-61e16ec707e2

Whatever we do as creative workers and as humans entitled to a decent life, we can’t afford to drink the Blockchain Iced Tea. That means that we have to be technically competent, to understand how the stochastic parrot works, and to make sure our criticism doesn’t just repeat the marketing copy of the latest pump-and-dump.

Today (Mar 9), you can catch me in person in Austin at the UT School of Design and Creative Technologies, and remotely at U Manitoba’s Ethics of Emerging Tech Lecture.

Tomorrow (Mar 10), Rebecca Giblin and I kick off the SXSW reading series.

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

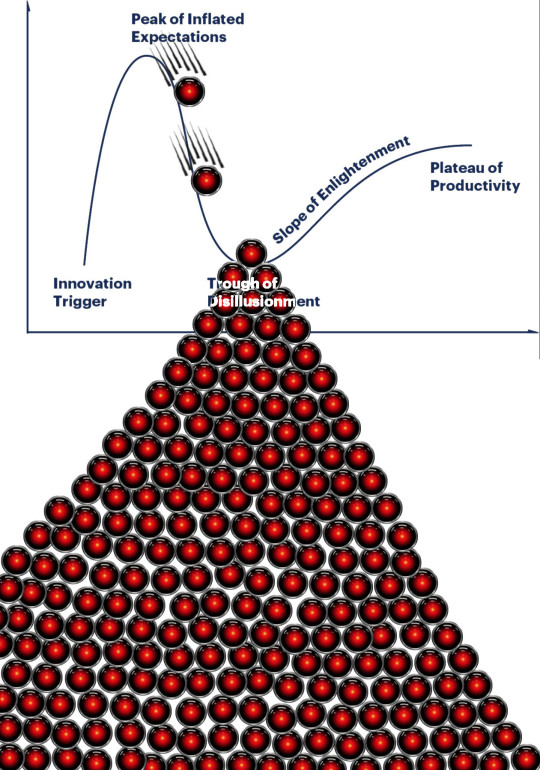

[Image ID: A graph depicting the Gartner hype cycle. A pair of HAL 9000's glowing red eyes are chasing each other down the slope from the Peak of Inflated Expectations to join another one that is at rest in the Trough of Disillusionment. It, in turn, sits atop a vast cairn of HAL 9000 eyes that are piled in a rough pyramid that extends below the graph to a distance of several times its height.]

#pluralistic#ai#ml#machine learning#artificial intelligence#chatbot#chatgpt#cryptocurrency#gartner hype cycle#hype cycle#trough of disillusionment#crypto#bubbles#bubblenomics#criti-hype#lee vinsel#slow ai#timnit gebru#emily bender#paperclip maximizers#enshittification#immortal colony organisms#blurry jpegs#charlie stross#ted chiang

2K notes


The Role of a Machine Learning Engineer: Combining Technology and Artificial Intelligence

Artificial intelligence has transformed our daily lives over the past year more than we could have imagined, changing how we work, communicate, and solve problems. Today, AI drives change in every sector, from everyday life to healthcare. In this blog, we will learn how machine learning engineers build systems that learn from data and get better over time, playing a huge part in the development of artificial intelligence (AI). We will also look at machine learning engineering as a career.

What is Machine Learning Engineering?

A machine learning engineer is a specialist who designs and builds AI models to make complex challenges easier to solve. The role merges data science and software engineering, so skills from both fields matter. The main job of a machine learning engineer is to design and build software that trains, runs, and automates AI models. Demand for this role has grown in recent years: as artificial intelligence becomes a driving force in everyday products, it has become important to run AI systems in a reliable, automated way.

A machine learning engineer creates systems that help computers learn and make decisions on tasks that people usually handle, like recognizing voices, identifying images, or predicting results. Unlike regular programming, which follows hand-written rules, machine learning focuses on teaching computers to find patterns in data and improve their predictions over time.

Responsibilities of a Machine Learning Engineer:
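The difference between a hand-written rule and a learned one can be sketched in a few lines of Python. This is only an illustration: the spam scenario, the `learn_threshold` helper, and the six labeled examples are all made up for the purpose.

```python
def rule_based_is_spam(num_links):
    # Regular programming: a person picks the cutoff by hand.
    return num_links > 5


def learn_threshold(examples):
    """Pick the cutoff that classifies the most labeled examples correctly."""
    candidates = sorted({links for links, _ in examples})
    best_cutoff, best_correct = None, -1
    for cutoff in candidates:
        correct = sum((links > cutoff) == label for links, label in examples)
        if correct > best_correct:
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


# Hypothetical labeled data: (links in a message, is it spam?)
examples = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]
cutoff = learn_threshold(examples)
print(cutoff)  # prints 2: messages with more than 2 links are treated as spam
```

The hand-written rule would miss the spam messages with 3 and 4 links, while the learned cutoff follows whatever the data shows.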

Collecting and Preparing Data

Machine learning needs a lot of data to work well. These engineers spend a lot of time finding and organizing data. That means looking for useful data sources and fixing any missing information. Good data preparation is essential because it sets the foundation for building successful models.
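As a small illustration of fixing missing information, the sketch below fills gaps with the average of the known values. The `fill_missing` helper and the sample ages are assumptions for this example, and mean filling is just one simple strategy among many.

```python
from statistics import mean


def fill_missing(values):
    """Replace None entries with the mean of the known values."""
    known = [value for value in values if value is not None]
    if not known:
        raise ValueError("no known values to compute a mean from")
    average = mean(known)
    return [average if value is None else value for value in values]


ages = [34, None, 29, 41, None]  # hypothetical column with gaps
cleaned = fill_missing(ages)
print(cleaned)
```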

Building and Training Models

The main task of a machine learning engineer is creating models that learn from data. Using tools like TensorFlow, PyTorch, and many more, they build algorithms suited to specific tasks. Training a model is challenging and requires careful adjustments and monitoring to ensure it’s accurate and useful.
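To show what training means without any framework, here is a minimal sketch in plain Python rather than TensorFlow or PyTorch. It fits a straight line by gradient descent. The `train_linear_model` function, the learning rate, and the toy data are assumptions chosen for illustration.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient; the learning rate sets the step size.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

The learning rate is one of the careful adjustments mentioned above: on this data, a value that is too large makes the updates overshoot and diverge, while a very small one needs many more passes.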

Checking Model Performance

Once a model is trained, it is important to check how well it works. Machine learning engineers use metrics like accuracy to measure model performance. They usually test the model on separate data it has not seen during training to estimate how it will perform in real-world situations, and make improvements as needed.

Deploying and Maintaining the Model
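A rough sketch of this check is below. The `train_test_split` and `accuracy` helpers, the study-hours data, and the stand-in `model` function are all hypothetical; libraries such as scikit-learn ship their own versions of these utilities.

```python
import random


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == t for p, t in zip(predictions, labels))
    return correct / len(labels)


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle the rows and hold out a fraction of them for testing."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical data: (hours studied, passed the exam?)
rows = [(hours, hours >= 5) for hours in range(12)]
train_rows, test_rows = train_test_split(rows)


def model(hours):
    # Stand-in for a trained model; deliberately a little off.
    return hours >= 6


predictions = [model(hours) for hours, _ in test_rows]
score = accuracy(predictions, [label for _, label in test_rows])
print(f"accuracy on held-out data: {score:.2f}")
```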

After testing, ML engineers put the model into action so it can work with real-time data. They monitor the model to make sure it stays accurate over time, as data can change and affect results. Regular updates help keep the model effective.
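One simple way to notice that data has changed is to compare live inputs with the training data. The sketch below is an assumption-heavy illustration: the `has_drifted` helper, the threshold of two standard deviations, and the sample numbers are invented here, and production systems typically use richer statistical tests.

```python
from statistics import mean, stdev


def has_drifted(training_values, live_values, max_shift=2.0):
    """Flag drift when the live mean moves more than `max_shift`
    training standard deviations away from the training mean."""
    baseline = mean(training_values)
    spread = stdev(training_values)
    shift = abs(mean(live_values) - baseline) / spread
    return shift > max_shift


training = [10, 12, 11, 13, 9, 10, 12]
print(has_drifted(training, [11, 10, 12]))  # False: live data looks familiar
print(has_drifted(training, [25, 27, 24]))  # True: inputs have shifted
```

A drift flag like this is a cue to retrain or update the model, not a verdict on its own.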

Working with Other Teams

ML engineers often work closely with data scientists, software engineers, and experts in the field. This teamwork ensures that the machine learning solution fits the business goals and integrates smoothly with other systems.

Important skills needed to become a Machine Learning Engineer:

Programming Languages

Python and R are popular options in machine learning. Other languages like Java or C++ can also help, especially for projects needing high performance.

Data Handling and Processing

Working with large datasets is necessary in Machine Learning. ML engineers should know how to use SQL and other database tools and be skilled in preparing and cleaning data before using it in models.

Machine Learning Frameworks

ML engineers need to know frameworks like TensorFlow, Keras, PyTorch, and scikit-learn. Each of these tools has unique strengths for building and training models, so choosing the right one depends on the project.

Mathematics and Statistics

A strong background in math, including calculus, linear algebra, probability, and statistics, helps ML engineers understand how algorithms work and make accurate predictions.

Why become a Machine Learning Engineer?

A career as a machine learning engineer is both challenging and creative, allowing you to work with the latest technology. This field is always changing, with new tools and ideas coming up every year. If you enjoy solving complex problems and want to make a real impact, ML engineering offers an exciting path.

Conclusion

A machine learning engineer plays an important role in AI and data science, turning data into useful insights and creating systems that learn on their own. This career is great for people who love technology, enjoy learning, and want to make a difference. With many opportunities and uses, artificial intelligence is a growing field that promises exciting innovations that will shape our future. Artificial intelligence is changing the world, and we should keep our knowledge of this field up to date.

2 notes


The Comprehensive Guide to Web Development, Data Management, and More

Introduction

Everything today is technology-driven in this digital world. There's a lot happening behind the scenes when you use your favorite apps, go to websites, and do other things with all of those zeroes and ones, or binary data. In this blog, I will explain what these terms really mean and cover the basics of web development, data management, and more. We will discuss them in the simplest way so that they are easy to understand for beginners or anyone even remotely interested in technology.

What is Web Development?

Web development refers to the work and process of developing a website or web application that can run in a web browser. It covers everything from laying out individual page designs before any coding starts to implementing that layout in HTML and CSS. There are two major fields of web development: front-end and back-end.

Front-End Development

Front-end development, also known as client-side development, is the part of web development that deals with what users see and interact with on their screens. It involves using languages like HTML, CSS, and JavaScript to create the visual elements of a website, such as buttons, forms, and images.

HTML (HyperText Markup Language):

HTML is the foundation of every website; it organizes content on a web page. It provides the structure for basic elements such as headings, paragraphs, and links.

CSS (Cascading Style Sheets):

CSS styles and formats HTML elements. It gives a webpage an attractive, user-friendly look by controlling colors, fonts, and layout.

JavaScript:

A language for adding interactivity to a website. It lets users interact with items, like clicking a button to submit a form or viewing images in a slideshow.

Back-End Development

While front-end development is all about what the user sees, back-end development involves everything that happens behind the scenes. The back end consists of a server, a database, and the application logic that runs the site.

Server:

A server is a computer that holds website files and provides them to the user's browser on request. Server-side code is written by back-end developers, who build and maintain servers using languages like Python, PHP, or Ruby.
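To see the request-and-response idea in action, here is a minimal sketch using only Python's standard library. The `PageHandler` class and the one-line `PAGE` are made up for this example, and a real site would normally run behind a production web server. The script starts a tiny server on a free local port, plays the role of the browser by asking for the page once, and then shuts down.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

PAGE = b"<!DOCTYPE html><html><body><h1>Hello from the server</h1></body></html>"


class PageHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Send the stored page back to whoever requested it.
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, format, *args):
        pass  # keep the example quiet


# Port 0 asks the operating system for any free port.
server = HTTPServer(("127.0.0.1", 0), PageHandler)
port = server.server_address[1]
worker = threading.Thread(target=server.handle_request, daemon=True)
worker.start()

# Play the role of the browser: request the page once.
connection = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
connection.request("GET", "/")
response = connection.getresponse()
status, body = response.status, response.read()
connection.close()

worker.join(timeout=5)
server.server_close()
print(status, body.decode("utf-8"))
```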

Database:

The place where a website keeps its data, from user details to content and settings. The database is managed with systems like MySQL, PostgreSQL, or MongoDB.
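Here is a small sketch of storing and reading back a user record. It uses SQLite as a stand-in because it ships with Python; MySQL and PostgreSQL work along similar lines through their own drivers. The `users` table and the sample row are assumptions for illustration.

```python
import sqlite3

# An in-memory database that disappears when the script ends.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")

# The ? placeholders keep user-supplied values separate from the SQL text.
conn.execute(
    "INSERT INTO users (name, email) VALUES (?, ?)",
    ("Ada", "ada@example.com"),
)
conn.commit()

row = conn.execute(
    "SELECT name, email FROM users WHERE name = ?", ("Ada",)
).fetchone()
print(row)
conn.close()
```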

Application Logic:

The code that links the front end and the back end. It takes user input, gets data from the database, and returns the right information to the front end.
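These three steps can be sketched as one small function. Everything named here is hypothetical: `PRODUCTS` is a plain dictionary standing in for a real database, and `get_product` is an invented helper, not part of any framework.

```python
# A dictionary standing in for a database table (an assumption for brevity).
PRODUCTS = {
    1: {"name": "Notebook", "price_cents": 450},
    2: {"name": "Pen", "price_cents": 120},
}


def get_product(raw_id):
    """Validate user input, look up the data, and build a reply for the front end."""
    try:
        product_id = int(raw_id)
    except (TypeError, ValueError):
        return {"status": 400, "error": "product id must be a number"}
    product = PRODUCTS.get(product_id)
    if product is None:
        return {"status": 404, "error": "product not found"}
    return {"status": 200, "product": product}


print(get_product("2"))
print(get_product("abc"))
print(get_product("99"))
```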

Why Proper Data Management is Absolutely Critical

Data management: besides web development, this is one of the most important parts of our digital world. What is data management? It includes the practices, policies, and procedures used to collect, store, and secure data in a controlled way.

Data Storage:

After being collected, data needs to be stored securely, for example in relational databases or cloud storage solutions. The most important point here is that the data should never be accessed by an unauthorized party or breached.

Data Processing:

After storing the data, the next step, especially with Big Data, is to process it in order to make sense of hordes of raw information. This includes cleansing the data (removing errors or redundancies), finding patterns in it, and producing insights that could be useful for decision-making.
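As a rough illustration of cleansing followed by a simple pattern, the sketch below removes bad and duplicate rows and then totals sales per city. The `clean` helper and the sample records are invented for this example, and treating identical rows as duplicates is a simplification that would not suit every dataset.

```python
from collections import Counter


def clean(records):
    """Drop rows with missing or impossible amounts, then drop exact repeats."""
    seen = set()
    cleaned = []
    for city, amount in records:
        city = city.strip().title()  # normalize spelling like "paris " to "Paris"
        if amount is None or amount < 0:
            continue  # error: missing or impossible value
        key = (city, amount)
        if key in seen:
            continue  # redundancy: same row seen before
        seen.add(key)
        cleaned.append(key)
    return cleaned


# Hypothetical raw sales records: (city, amount)
raw = [("paris", 20), ("Paris ", 20), ("Rome", -5),
       ("rome", 15), ("Paris", 30), ("Oslo", None)]
rows = clean(raw)

# A simple pattern: total sales per city.
totals = Counter()
for city, amount in rows:
    totals[city] += amount
print(dict(totals))
```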

Data Security:

Another important part of data management is security. It refers to defending data against unauthorized access, breaches, and other potential vulnerabilities. You can do this with some basic security methods, mainly encryption and access controls, as well as regular auditing of your systems.
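Two of these ideas can be sketched with Python's standard library. Note the swap: the standard library has no general-purpose encryption, so this example shows salted password hashing (a related protection for stored credentials) together with a simple role-based access check. The helper names, roles, and iteration count are assumptions for illustration.

```python
import hashlib
import hmac
import os


def hash_password(password, salt=None):
    """Derive a salted hash so the plain password is never stored."""
    if salt is None:
        salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)
    return salt, digest


def verify_password(password, salt, expected):
    _, digest = hash_password(password, salt)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(digest, expected)


# Hypothetical roles and the actions each one may perform.
PERMISSIONS = {"admin": {"read", "write"}, "viewer": {"read"}}


def can(role, action):
    return action in PERMISSIONS.get(role, set())


salt, stored = hash_password("correct horse")
print(verify_password("correct horse", salt, stored))
print(verify_password("wrong guess", salt, stored))
print(can("viewer", "write"))
```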

Other Critical Tech Landmarks

There are a lot of disciplines in the tech world that go beyond web development and data management. Here are a few of them:

Cloud Computing

Cloud computing is the on-demand delivery of IT resources and applications over the Internet, a model that providers such as AWS have offered for well over a decade, covering every layer from servers up to finished applications. It lets organizations consume technology resources on a pay-as-you-go basis without having to purchase, own, and maintain the infrastructure.

Cloud Computing Advantages:

The main advantages are cost savings, scalability, flexibility, and disaster recovery. Resources can be scaled based on usage, which means companies pay only for what they use and have their data backed up in case of an emergency.

Examples of Cloud Services:

A few popular cloud services are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud. These provide a plethora of services that help you develop and manage apps, store data, and more.
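A bit of arithmetic shows why scaling with usage saves money. The numbers below (requests each instance can handle, price per instance-hour) are invented for illustration and do not reflect any provider's real pricing.

```python
import math


def instances_needed(requests_per_second, capacity_per_instance):
    """Smallest number of instances that can carry the load (at least one)."""
    return max(1, math.ceil(requests_per_second / capacity_per_instance))


def hourly_cost_cents(requests_per_second, capacity_per_instance, price_cents):
    return instances_needed(requests_per_second, capacity_per_instance) * price_cents


CAPACITY = 100    # hypothetical: requests per second one instance can serve
PRICE_CENTS = 10  # hypothetical: cost of one instance for one hour

quiet = hourly_cost_cents(120, CAPACITY, PRICE_CENTS)  # 2 instances
peak = hourly_cost_cents(950, CAPACITY, PRICE_CENTS)   # 10 instances
print(quiet, peak)  # 20 100
```

With fixed hardware sized for the peak, the quiet hour would cost the same 100 cents; paying per use brings it down to 20.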

Cybersecurity

As the world continues to rely more heavily on digital technologies, cybersecurity has never been a bigger issue. Cybersecurity is the practice of protecting computer systems, networks, and data from cyber attacks.

Common Cybersecurity Threats:

Phishing attacks, malware, ransomware, and data breaches are common cybersecurity threats. These threats can have substantial consequences, from financial losses to reputational harm, for any organization.

Cybersecurity Best Practices:

To safeguard against cybersecurity threats, it is necessary to follow best practices, including using strong passwords and two-factor authentication, updating software regularly, and training employees on security risks.
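Many authenticator apps implement the second factor as a time-based one-time password (TOTP, defined in RFC 6238). The sketch below follows that standard with HMAC-SHA1. The `totp` function name is an assumption, and the secret is the published RFC test value, never something to use in practice.

```python
import hashlib
import hmac
import struct


def totp(secret, unix_time, step=30, digits=6):
    """Time-based one-time password following RFC 6238 (HMAC-SHA1)."""
    counter = int(unix_time) // step  # number of 30-second windows so far
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation, as in RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


secret = b"12345678901234567890"  # test secret from RFC 6238, not for real use
print(totp(secret, 59, digits=8))  # 94287082, matching the RFC test vector
print(totp(secret, 59))            # 287082
```

Because the server and the phone share the secret and the clock, both can compute the same short-lived code without sending the secret over the network.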

Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are fast-growing fields concerned with building systems that learn from data and identify patterns in it. They power use cases such as self-driving cars and personalized recommendations on Netflix.

AI vs ML —

AI is the broader concept of machines carrying out tasks in a way we would consider "smart". Machine learning is a subset of AI that gives computers the ability to learn without being explicitly programmed.

Applications of Artificial Intelligence and Machine Learning:

Common applications include image recognition, speech-to-text, natural language processing, predictive analytics, and robotics.

Where Web Development, Data Management, and Other Fields Meet

Web development, data management, cloud computing, cybersecurity, and AI/ML each matter on their own, but what is more fascinating is where these fields converge and play off each other.

Web Development and Data Management

Web development and data management go hand in hand. Websites and web-based applications generate enormous amounts of data, from user interactions to transaction records. Managing this data well is key to providing a good user experience and to making decisions based on the right information.

For example, an e-commerce website stores product data on its server and keeps customer data in a database, linked to order and payment records. This data is needed to personalize the shopping experience, manage inventory, and prevent fraud.
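A minimal sketch of how such data might be laid out, using Python's built-in sqlite3 module. The table and column names are assumptions made up for illustration. A real store would have a richer schema.

```python
import sqlite3

# In-memory database so the example leaves nothing behind on disk.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")

# Hypothetical schema: customers and products are linked through orders,
# and each payment points back to the order it settles.
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
CREATE TABLE products  (id INTEGER PRIMARY KEY, name TEXT NOT NULL,
                        price_cents INTEGER NOT NULL, stock INTEGER NOT NULL);
CREATE TABLE orders    (id INTEGER PRIMARY KEY,
                        customer_id INTEGER NOT NULL REFERENCES customers(id),
                        product_id  INTEGER NOT NULL REFERENCES products(id),
                        quantity    INTEGER NOT NULL);
CREATE TABLE payments  (id INTEGER PRIMARY KEY,
                        order_id INTEGER NOT NULL REFERENCES orders(id),
                        amount_cents INTEGER NOT NULL);
""")

conn.execute("INSERT INTO customers (id, email) VALUES (1, 'a@example.com')")
conn.execute("INSERT INTO products (id, name, price_cents, stock) VALUES (1, 'Mug', 1200, 10)")
conn.execute("INSERT INTO orders (id, customer_id, product_id, quantity) VALUES (1, 1, 1, 2)")
conn.execute("INSERT INTO payments (order_id, amount_cents) VALUES (1, 2400)")

# Join the tables to see who bought what and how much was paid.
row = conn.execute("""
    SELECT c.email, p.name, o.quantity, pay.amount_cents
    FROM orders o
    JOIN customers c  ON c.id = o.customer_id
    JOIN products p   ON p.id = o.product_id
    JOIN payments pay ON pay.order_id = o.id
""").fetchone()
print(row)
```

Keeping customers, orders, and payments in separate linked tables is what lets the same data serve personalization, inventory, and fraud checks without duplication.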

Cloud Computing and Web Development

Cloud computing has revolutionized web development by giving developers a way to provision, deploy, and scale applications with very little friction. Developers can now host applications and data in cloud services instead of investing in physical servers.

For example, a start-up can use cloud services to roll out its web application globally, so that users around the world can reach it without long waits or region-related availability problems.

The Future of Cybersecurity and Data Management

Cybersecurity is a very important part of data management. The more data an organization collects and stores, the bigger a target it becomes for cyber threats. Securing this data with robust cybersecurity measures keeps sensitive information intact and preserves customer trust.

For example, a healthcare provider must protect patient data to comply with regulations such as HIPAA (Health Insurance Portability and Accountability Act), which sets confidentiality requirements for patient information.

Conclusion

In a nutshell, web development, data management, and the other fields covered here are integral parts of the digital world.

Whether you are a business owner, a tech enthusiast, or just planning a career in tech, it is important to understand them. Technology shows no sign of slowing down, so these intersections will likely grow stronger and become cornerstones of how we live in tomorrow's digital world.

With a fundamental knowledge of web development, data management, automation, and ML, you will be able to keep up with digital trends. Whether you have a site to build, data to manage, or are simply interested in what is hot these days, these skills and this knowledge will serve you well in a changing tech world.

#Technology#Web Development#Front-End Development#Back-End Development#HTML#CSS#JavaScript#Data Management#Data Security#Cloud Computing#AWS (Amazon Web Services)#Cybersecurity#Artificial Intelligence (AI)#Machine Learning (ML)#Digital World#Tech Trends#IT Basics#Beginners Guide#Web Development Basics#Tech Enthusiast#Tech Career#america

7 Computer Vision Projects for All Levels - KDnuggets

AKA: How to cut through the AI news reporting PR BS

Link: https://www.cjr.org/analysis/how-to-report-better-on-artificial-intelligence.php

This is an excellent article for journalists, and the public alike, to better scrutinise the claims of large AI companies and their models. There’s an awful lot of fawning, non-critical reporting on this topic at the moment and it’s really pissing me off. We need to hold companies accountable so that we aren’t suckered into making bad decisions based on their public relations department’s claims. 100% a lot of AI progress is highly impressive, and deserves a lot of attention and praise, but we need journalists to cut through to the cold, hard facts. Hopefully this will help you personally when reading articles and determining whether they’re fan-wank or critical reporting.

sorry for being incredibly ia I have had so much homework this past week junior year end of quarter is no fucking joke holy. That being said I hope dream gets the chance to maim whomever he likes 👍

#I have been so busy I have barely had time to pay attention to anything that isn’t my friends or what I pick up from my priv#so I’m only tangentially aware of what’s going on and I’d like to keep it that way#10 page paper machine learning project (I’m not enrolled in an ml class and yet.) a different ml pset and a data story#I admire those of u who are students and active bc my brain just cannot handle them at the same time

Boost E-commerce in Saudi Arabia with ML-Powered Apps

In today's digital era, the e-commerce industry in Saudi Arabia is rapidly expanding, fueled by increasing internet penetration and a tech-savvy population. To stay competitive, businesses are turning to advanced technologies, particularly Machine Learning (ML), to enhance user experiences, optimize operations, and drive growth. This article explores how ML is transforming the e-commerce landscape in Saudi Arabia and how businesses can leverage this technology to boost their success.

The Current E-commerce Landscape in Saudi Arabia

The e-commerce market in Saudi Arabia has seen exponential growth over the past few years. With a young population, widespread smartphone usage, and supportive government policies, the Kingdom is poised to become a leading e-commerce hub in the Middle East. Key players like Noon, Souq, and Jarir have set the stage, but the market is ripe for innovation, especially with the integration of Machine Learning.

The Role of Machine Learning in E-commerce

Machine Learning, a subset of Artificial Intelligence (AI), involves the use of algorithms to analyze data, learn from it, and make informed decisions. In e-commerce, ML enhances various aspects, from personalization to fraud detection. Machine Learning’s ability to analyze large datasets and identify trends is crucial for businesses aiming to stay ahead in a competitive market.

Personalized Shopping Experiences

Personalization is crucial in today’s e-commerce environment. ML algorithms analyze user data, such as browsing history and purchase behavior, to recommend products that align with individual preferences. This not only elevates the customer experience but also drives higher conversion rates. For example, platforms that leverage ML for personalization have seen significant boosts in sales, as users are more likely to purchase items that resonate with their interests.
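To give a feel for how purchase behavior can drive recommendations, here is a deliberately simple sketch based on co-purchase counts ("customers who bought X also bought Y"). It is not a trained ML model and not any platform's actual method. The basket data and function names are invented for illustration.

```python
from collections import Counter
from itertools import combinations


def build_co_purchase_counts(baskets):
    """Count how often each pair of items appears in the same basket."""
    counts = {}
    for basket in baskets:
        for a, b in combinations(sorted(set(basket)), 2):
            counts.setdefault(a, Counter())[b] += 1
            counts.setdefault(b, Counter())[a] += 1
    return counts


def recommend(counts, purchased, top_n=3):
    """Suggest the items most often bought together with what the user already has."""
    scores = Counter()
    for item in purchased:
        for other, n in counts.get(item, {}).items():
            if other not in purchased:
                scores[other] += n
    return [item for item, _ in scores.most_common(top_n)]


# Hypothetical order history.
baskets = [
    ["phone", "case", "charger"],
    ["phone", "case"],
    ["laptop", "mouse"],
    ["phone", "charger", "earbuds"],
]
counts = build_co_purchase_counts(baskets)
suggestions = recommend(counts, {"phone"})
print(suggestions)
```

Production recommenders typically learn from far more signals, such as browsing history and ratings, but the core idea of scoring items by observed behavior is the same.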

Optimizing Inventory Management

Effective inventory management is critical for e-commerce success. ML-driven predictive analytics can forecast demand with high accuracy, helping businesses maintain optimal inventory levels. This minimizes the chances of overstocking or running out of products, ensuring timely availability for customers. E-commerce giants like Amazon have successfully implemented ML to streamline their inventory management processes, setting a benchmark for others to follow.
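As a rough sketch of the idea, the snippet below forecasts demand with a plain moving average and turns it into a reorder quantity. A moving average stands in here for a real ML forecasting model, and all names and numbers are assumptions for illustration.

```python
import math
from statistics import mean


def moving_average_forecast(daily_sales, window=7):
    """Forecast tomorrow's demand as the average of the last `window` days."""
    if len(daily_sales) < window:
        raise ValueError("not enough sales history for the chosen window")
    return mean(daily_sales[-window:])


def units_to_reorder(daily_sales, stock_on_hand, lead_time_days, safety_stock=0, window=7):
    """Units to order so stock covers expected demand during the supplier lead time."""
    expected_demand = moving_average_forecast(daily_sales, window) * lead_time_days
    shortfall = expected_demand + safety_stock - stock_on_hand
    return max(0, math.ceil(shortfall))


# Hypothetical week of sales for one product.
sales = [10, 12, 11, 13, 12, 14, 12]
order = units_to_reorder(sales, stock_on_hand=40, lead_time_days=5, safety_stock=10)
print(order)
```

An ML model would replace the moving average with a forecast that accounts for seasonality, promotions, and trends, but the reorder logic around it stays much the same.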

Dynamic Pricing Strategies

Price is a major factor influencing consumer decisions. Machine Learning enables real-time dynamic pricing by assessing market trends, competitor rates, and customer demand. This allows businesses to adjust their prices to maximize revenue while remaining competitive. Dynamic pricing, powered by ML, has proven effective in attracting price-sensitive customers and increasing overall profitability.
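The sketch below shows one very simple way demand and competitor prices could feed into a price. It is rule-based, not learned, and the sensitivity, the 5% competitor margin, and all names are assumptions picked for illustration.

```python
def dynamic_price(base_price, demand_ratio, competitor_price, floor, ceiling, sensitivity=0.2):
    """Adjust a base price using current demand and a competitor's price.

    demand_ratio is current demand divided by typical demand (1.0 means normal).
    """
    # Raise the price when demand is above normal, lower it when below.
    adjusted = base_price * (1 + sensitivity * (demand_ratio - 1))
    # Assumed rule: never go more than 5% above the competitor.
    adjusted = min(adjusted, competitor_price * 1.05)
    # Keep the result inside the business's own allowed range.
    return round(min(max(adjusted, floor), ceiling), 2)


# Hypothetical product: demand is 50% above normal.
busy_price = dynamic_price(100.0, demand_ratio=1.5, competitor_price=105.0, floor=80.0, ceiling=130.0)
print(busy_price)
```

An ML-driven system would learn how sensitive each product's sales are to price instead of using a fixed value, but floors and ceilings like these are still a common safeguard.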

Enhanced Customer Support

Customer support is another area where ML shines. AI-powered chatbots and virtual assistants can handle a large volume of customer inquiries, providing instant responses and resolving issues efficiently. This not only improves customer satisfaction but also reduces the operational costs associated with maintaining a large support team. E-commerce businesses in Saudi Arabia can greatly benefit from incorporating ML into their customer service strategies.

Fraud Detection and Security

With the rise of online transactions, ensuring the security of customer data and payments is paramount. ML algorithms can detect fraudulent activities by analyzing transaction patterns and identifying anomalies. By implementing ML-driven security measures, e-commerce businesses can protect their customers and build trust, which is essential for long-term success.
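One of the simplest forms of anomaly detection is to compare a new transaction with the customer's own history. The sketch below uses a z-score for that. The threshold, the sample amounts, and the function name are assumptions, and real fraud systems combine many more signals.

```python
from statistics import mean, stdev


def is_suspicious(history, new_amount, threshold=3.0):
    """Flag a transaction that lies far outside the customer's usual spending.

    The z-score measures how many standard deviations the new amount
    is from the historical average.
    """
    if len(history) < 2:
        return False  # not enough history to judge
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return new_amount != mu
    return abs(new_amount - mu) / sigma > threshold


# Hypothetical past purchase amounts for one customer.
history = [20, 25, 22, 30, 27, 24, 26, 23]
print(is_suspicious(history, 28))   # an ordinary purchase
print(is_suspicious(history, 500))  # far outside the usual range
```

Flagged transactions would usually go to a further check, such as a confirmation step, and not be blocked outright, since unusual purchases are often legitimate.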

Improving Marketing Campaigns

Effective marketing is key to driving e-commerce success. ML can analyze customer data to create targeted marketing campaigns that resonate with specific audiences. It enhances the impact of marketing efforts, leading to improved customer engagement and higher conversion rates. Successful e-commerce platforms use ML to fine-tune their marketing strategies, ensuring that their messages reach the right people at the right time.

Case Study: Successful E-commerce Companies in Saudi Arabia Using ML

Several e-commerce companies in Saudi Arabia have already begun leveraging ML to drive growth. For example, Noon uses ML to personalize the shopping experience and optimize its supply chain, leading to increased customer satisfaction and operational efficiency. These companies serve as examples of how ML can be a game-changer in the competitive e-commerce market.

Challenges of Implementing Machine Learning in E-commerce

While the benefits of ML are clear, implementing this technology in e-commerce is not without challenges. Technical hurdles, such as integrating ML with existing systems, can be daunting. Additionally, there are concerns about data privacy, particularly in handling sensitive customer information. Businesses must address these challenges to fully harness the power of ML.

Future Trends in Machine Learning and E-commerce

As ML continues to evolve, new trends are emerging that will shape the future of e-commerce. For instance, the integration of ML with augmented reality (AR) offers exciting possibilities, such as virtual try-ons for products. Businesses that stay ahead of these trends will be well-positioned to lead the market in the coming years.

Influence of Machine Learning on Consumer Behavior in Saudi Arabia

ML is already influencing consumer behavior in Saudi Arabia, with personalized experiences leading to increased customer loyalty. As more businesses adopt ML, consumers can expect even more tailored shopping experiences, further enhancing their satisfaction and engagement.

Government Support and Regulations

The Saudi government is proactively encouraging the integration of cutting-edge technologies, including ML, within the e-commerce industry. Through initiatives like Vision 2030, the government aims to transform the Kingdom into a global tech hub. However, businesses must also navigate regulations related to data privacy and AI to ensure compliance.

Conclusion

Machine Learning is revolutionizing e-commerce in Saudi Arabia, offering businesses new ways to enhance user experiences, optimize operations, and drive growth. By embracing ML, e-commerce companies can not only stay competitive but also set new standards in the industry. The future of e-commerce in Saudi Arabia is bright, and Machine Learning will undoubtedly play a pivotal role in shaping its success.

FAQs

How does Machine Learning contribute to the e-commerce sector? Machine Learning enhances e-commerce by improving personalization, optimizing inventory, enabling dynamic pricing, and enhancing security.

How can Machine Learning improve customer experiences in e-commerce? ML analyzes user data to provide personalized recommendations, faster customer support, and tailored marketing campaigns, improving overall satisfaction.

What are the challenges of integrating ML in e-commerce? Challenges include technical integration, data privacy concerns, and the need for skilled professionals to manage ML systems effectively.

Which Saudi e-commerce companies are successfully using ML? Companies like Noon and Souq are leveraging ML for personalized shopping experiences, inventory management, and customer support.

What is the future of e-commerce with ML in Saudi Arabia? The future looks promising with trends like ML-driven AR experiences and more personalized

#machine learning e-commerce#Saudi Arabia tech#ML-powered apps#e-commerce growth#AI in retail#customer experience Saudi Arabia#digital transformation Saudi#ML app benefits#AI-driven marketing#predictive analytics retail#Saudi digital economy#e-commerce innovation#smart retail solutions#AI tech adoption#machine learning in business
