#azure power platform

Haley A.I. emerges as a versatile intelligent assistant platform poised to revolutionize how we interact with technology. Unlike single-purpose assistants, Haley A.I. offers a broad range of features, making it a valuable tool for individuals and businesses alike. This post explores the potential applications, functionality, and future directions of this AI solution.

Please try this product Haley A.I.

Unveiling the Capabilities of Haley A.I.

Haley A.I. leverages the power of machine learning, natural language processing (NLP), and potentially large language models (LLMs) to deliver a multifaceted experience. Here's a closer look at some of its core functionalities:

Conversational Interface: Haley A.I. facilitates natural language interaction, allowing users to communicate through text or voice commands. This intuitive interface simplifies interactions and eliminates the need for complex navigation or code.

Task Automation: Streamline repetitive tasks by delegating them to Haley A.I. It can schedule meetings, set reminders, manage calendars, and handle basic data entry, freeing up valuable time for users to focus on more strategic endeavors.

Information Retrieval: Harness the power of Haley A.I. to access and process information. Users can ask questions on various topics, and Haley A.I. will utilize its internal knowledge base or external sources to provide relevant and accurate answers.

Decision Support: Haley A.I. can analyze data and generate insights to assist users in making informed decisions. This can involve summarizing complex reports, presenting data visualizations, or identifying potential trends.

Personalized Assistant: Haley A.I. can be customized to cater to individual needs and preferences. By learning user behavior and collecting data, it can offer personalized recommendations, automate frequently performed tasks, and tailor its responses for a better experience.

Integrations: Extend Haley A.I.'s capabilities by integrating it with existing tools and platforms. Users can connect Haley A.I. to their calendars, email clients, CRM systems, or productivity tools, creating a unified workflow hub.

Harnessing the Power of Haley A.I. in Different Domains

The versatility of Haley A.I. makes it applicable across various domains. Let's explore some potential use cases:

Personal Assistant: Stay organized and manage your daily life with Haley A.I. Utilize it for scheduling appointments, setting reminders, managing grocery lists, or controlling smart home devices.

Customer Service: Businesses can leverage Haley A.I. to provide 24/7 customer support. It can answer frequently asked questions, troubleshoot basic issues, and even direct users to relevant resources.

Employee Productivity: Enhance employee productivity by automating routine tasks and providing real-time information retrieval. Imagine a sales representative being able to access customer data and product information seamlessly through Haley A.I.

Education and Learning: Haley A.I. can become a personalized learning assistant, providing students with explanations, summarizing complex topics, and even offering practice exercises tailored to their needs.

Data Analysis and Decision Making: Businesses can utilize Haley A.I. to analyze large datasets, generate reports, and identify trends. This valuable information can be used to make data-driven decisions and optimize strategies.

These examples showcase the diverse applications of Haley A.I. As the technology evolves and integrates with more platforms, the possibilities will continue to expand.

The Underlying Technology: A Peek Inside the Engine

While the specific details of Haley A.I.'s technology remain undisclosed, we can make some educated guesses based on its functionalities. Here are some potential components:

Machine Learning: Machine learning algorithms likely power Haley A.I.'s ability to learn and adapt to user behavior. This allows it to personalize responses, offer better recommendations, and improve its performance over time.

Natural Language Processing (NLP): The ability to understand and respond to natural language is crucial for a conversational interface. NLP techniques enable Haley A.I. to interpret user queries, map them to structured intents and parameters that software can act on, and generate human-like responses.
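To make the "interpret user queries" step concrete, here is a minimal sketch of mapping an utterance to a structured intent. Haley A.I.'s actual implementation is not public: the intent names (`schedule_meeting`, `set_reminder`, `ask_question`), the `Intent` type, and the regex patterns below are all hypothetical, and a keyword/regex table stands in for the trained classifiers or LLMs a real assistant would more likely use.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Intent:
    """A structured, machine-usable interpretation of a user utterance (hypothetical type)."""
    name: str
    slots: dict = field(default_factory=dict)


# Hypothetical intent table: each entry maps a regex to an intent name.
# Named groups in a regex become "slots" (parameters for the downstream action).
INTENT_PATTERNS = [
    ("schedule_meeting",
     re.compile(r"\b(?:schedule|book)\b.*\bmeeting\b(?:.*\bwith (?P<person>\w+))?", re.I)),
    ("set_reminder",
     re.compile(r"\bremind me to (?P<task>.+?)(?: at (?P<time>\d{1,2}(?::\d{2})?\s?(?:am|pm)?))?$", re.I)),
]


def parse_intent(utterance: str) -> Intent:
    """Map free text to an Intent; anything unrecognized is treated as a general question."""
    text = utterance.strip()
    for name, pattern in INTENT_PATTERNS:
        match = pattern.search(text)
        if match:
            # Keep only the slots that were actually captured.
            slots = {key: value for key, value in match.groupdict().items() if value}
            return Intent(name, slots)
    return Intent("ask_question", {"query": text})
```

For example, `parse_intent("Remind me to call the bank at 5pm")` yields a `set_reminder` intent with `task` and `time` slots. Whatever technique produces it, this output shape (an intent name plus parameters) is what lets downstream code call a calendar or reminder service.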

Large Language Models (LLMs): These powerful AI models could play a role in Haley A.I.'s information retrieval and processing capabilities. LLMs are trained on vast amounts of text, which lets them interpret and summarize information; paired with access to external data sources, they could allow Haley A.I. to provide comprehensive answers to user inquiries.

The actual implementation of these technologies likely depends on Haley A.I.'s architecture and intended functionality. Still, understanding these underlying principles sheds light on how Haley A.I. may deliver its intelligent assistant experience.

Conclusion

Haley A.I. emerges as a versatile and promising intelligent assistant platform. Its ability to automate tasks, access information, and personalize its responses positions it to revolutionize how we interact with technology. By harnessing the power of AI responsibly and ethically, Haley A.I. has the potential to transform the way we work, learn, and live.

#machine learning#machine learning summit#machine learning finance#machine learning bootcamp#cambridge machine learning summit#deep learning#paper machine#foreigner in the philippines#microsoft power apps#university of washington#microsoft power apps platform#power apps#azure power platform#power platform#beyond the screen#top 10 beyond the screen#haley joel osment#ask hailey ai#salesforce sales cloud#sales#sales force#paper industry

Simplify Transactions and Boost Efficiency with Our Cash Collection Application

Manual cash collection can lead to inefficiencies and increased risks for businesses. Our cash collection application provides a streamlined solution, tailored to support all business sizes in managing cash effortlessly. Key features include automated invoicing, multi-channel payment options, and comprehensive analytics, all of which simplify the payment process and enhance transparency. The application is designed with a focus on usability and security, ensuring that every transaction is traceable and error-free. With real-time insights and customizable settings, you can adapt the application to align with your business needs. Its robust reporting functions give you a bird’s eye view of financial performance, helping you make data-driven decisions. Move beyond traditional, error-prone cash handling methods and step into the future with a digital approach. With our cash collection application, optimize cash flow and enjoy better financial control at every level of your organization.

#seo agency#seo company#seo marketing#digital marketing#seo services#azure cloud services#amazon web services#ai powered application#android app development#augmented reality solutions#augmented reality in education#augmented reality (ar)#augmented reality agency#augmented reality development services#cash collection application#cloud security services#iot applications#iot#iotsolutions#iot development services#iot platform#digitaltransformation#innovation#techinnovation#iot app development services#large language model services#artificial intelligence#llm#generative ai#ai

Clinical Nurse Specialist (RMN) - Lancashire

Job title: Clinical Nurse Specialist (RMN) – Lancashire
Company: NHS
Job description: within a secure setting About us Care in Mind are a CQC registered specialist service providing NHS funded intensive mental health… is made up of skilled nurses, psychologists, psychiatrists and specialist residential staff. Company Benefits Enhanced…
Expected salary: £47307 – 52065 per year
Location: Stockport,…

#agritech#Android#artificial intelligence#Automotive#Azure#Bioinformatics#Broadcast#cloud-native#CRM#Crypto#deep-learning#dotnet#erp#ethical AI#fintech#Frontend#GIS#govtech#insurtech#IT Support Specialist#metaverse#no-code#power-platform#product-management#Salesforce#sharepoint#SoC#visa-sponsorship#vr-ar

🤖 Discover how Xrm.Copilot is revolutionizing Dynamics 365 CE with AI! Learn about its key capabilities, integration with the Xrm API, and how developers can build smarter model-driven apps. Boost CRM productivity with conversational AI. #Dynamics365 #Copilot #PowerPlatform #XrmAPI #CRM #AI

#AI in Dynamics#Azure OpenAI.#CRM AI#CRM Automation#dataverse#Dynamics 365 CE#Low-Code AI#Microsoft Copilot#Model-Driven Apps#Power Platform#Xrm API#Xrm.Copilot

Powering Digital Transformation with Azure-Fueled Data Platform Modernization

As the world becomes increasingly digital, companies need to transform to remain competitive. Modernizing data platforms is one of the most effective ways to do so. Digital transformation through Azure helps companies reach new levels of efficiency, scalability, and security using Microsoft's cloud capabilities.

At Clarion Technologies, we help businesses convert their legacy systems into modern, agile, data-driven environments. Azure's cutting-edge analytics, AI features, and cloud architecture support smooth integration, faster processing, and real-time insights for better decision-making.

By embracing digital transformation with Azure, businesses can:

✅ Enhance data accessibility as well as security
✅ Boost operational effectiveness with automation
✅ Scale smoothly with cloud-native solutions
✅ Get real-time insights for data-driven decisions

As a cloud and data modernization expert, Clarion Technologies makes the transition seamless, with minimal risks and maximum value. Whether migrating your databases, streamlining cloud storage, or leveraging AI-based analytics, we assist you in tapping Azure's full potential for sustainable business growth.

Join the future of data-driven innovation with digital transformation on Azure. Start now with Clarion Technologies!

#Azure data platform modernization#Digital transformation with Azure#Azure-powered data solutions#Cloud data modernization#Enterprise data transformation

Microsoft Dynamics 365 API Access token in Postman

Introduction

Dynamics 365 Online exposes Web API endpoints, making integration simple. The most difficult part, though, is authentication, since Dynamics 365 Online uses OAuth 2.0. Every HTTP request to the Web API requires a valid bearer access token issued by Microsoft Azure Active Directory. In this blog, I will talk about how to use Dynamics 365 Application User (Client ID and Secret…
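As a minimal sketch of the OAuth 2.0 client credentials flow that an application user (client ID and secret) relies on, the Python below builds, but does not send, the token request Postman would send to the Microsoft identity platform v2.0 token endpoint, and then the Web API request that carries the resulting bearer token. The tenant ID, client ID, secret, and organization URL are placeholders; the `/.default` scope, the `api/data/v9.2` path, and the `accounts` entity set reflect common Dataverse usage and should be checked against your own environment.

```python
from urllib.parse import urlencode


def build_token_request(tenant_id: str, client_id: str, client_secret: str, org_url: str) -> dict:
    """Describe the POST that exchanges app credentials for an access token."""
    return {
        "method": "POST",
        "url": f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token",
        "headers": {"Content-Type": "application/x-www-form-urlencoded"},
        # Client credentials grant: no signed-in user; the application user
        # registered in Dynamics 365 determines what the token may access.
        "body": urlencode({
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            "scope": f"{org_url.rstrip('/')}/.default",
        }),
    }


def build_api_request(org_url: str, access_token: str, entity_set: str = "accounts") -> dict:
    """Describe a Web API GET that presents the token as a bearer credential."""
    return {
        "method": "GET",
        "url": f"{org_url.rstrip('/')}/api/data/v9.2/{entity_set}?$top=5",
        "headers": {
            "Authorization": f"Bearer {access_token}",
            "Accept": "application/json",
            "OData-MaxVersion": "4.0",
            "OData-Version": "4.0",
        },
    }
```

In Postman, the same four form fields go into the body of the token request, and the `access_token` value from the JSON response becomes the `Bearer` token on each Web API call.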

Discover how our team's deep expertise in Microsoft Azure can help you build, deploy, and manage modern web apps, AI solutions, data services, and more.

(AI) with Azure AI. Microsoft Azure’s comprehensive suite of AI services is paving the way for businesses to compete and thrive. Unlock the potential of AI with Azure AI’s diverse range of tools and services. Enhance decision-making, streamline operations, and discover new opportunities. Let’s not forget about Azure OpenAI, a cutting-edge collaboration between Microsoft and OpenAI, a renowned AI research lab.

Start here to explore the potential of Azure OpenAI, which offers the best of both worlds: OpenAI's powerful language models paired with the scalability, security, and ease of use of the Azure platform. This combination opens up new business opportunities to modernize applications, enhance customer experiences, and drive innovation. Keep reading to learn the fundamentals of Azure AI.

Table of Contents

1 What is Azure AI? What Services come under this?

1.1 What Is the Difference Between Azure and OpenAI?

1.2 What are the latest Azure AI features launched?

1.3 Addressing Challenges in Azure AI

1.3.1 What is there for IT Leaders?

1.4 How Can Azure AI Help Protect and Build Data Insights for Your Business?

1.5 How can Industries Benefit from Azure AI?

1.5.1 Ready to Maximize Microsoft Azure?

1.5.2 How can you begin your journey with Azure AI?

1.5.3 Why choose ECF Data for the next-generation AI project?

Custom AWS Solutions for Modern Enterprises - Atcuality

Amazon Web Services offer an unparalleled ecosystem of cloud computing tools that cater to businesses of all sizes. At ATCuality, we understand that no two companies are the same, which is why we provide custom Amazon Web Services solutions tailored to your specific goals. From designing scalable architectures to implementing cutting-edge machine learning capabilities, our AWS services ensure that your business stays ahead of the curve. The flexibility of Amazon Web Services allows for easy integration with your existing systems, paving the way for seamless growth and enhanced efficiency. Let us help you harness the power of AWS for your enterprise.

#seo marketing#seo services#artificial intelligence#digital marketing#seo agency#iot applications#seo company#ai powered application#azure cloud services#amazon web services#virtual reality#augmented reality agency#augmented human c4 621#augmented and virtual reality market#augmented reality#augmented intelligence#digital services#iotsolutions#iot development services#iot platform#techinnovation#digitaltransformation#automation#iot#iot solutions#iot development company#innovation#erp software#erp system#cloud security services

Lead Ruby on Rails Software Developer

Job title: Lead Ruby on Rails Software Developer
Company: OCU Group
Job description: OCU Group are looking for an experienced Ruby on the rails Lead Software Developer to join our growing digital team… & Experience Experience of professional software development experience, currently operating at Lead Developer or equivalent…
Expected salary:
Location: Preston
Job date: Tue, 20 May 2025 23:50:49…

#5G#Android#Azure#Bioinformatics#Cybersecurity#data-privacy#data-science#deep-learning#dotnet#Ecommerce#erp#Flutter Developer#GIS#govtech#healthtech#insurtech#Java#legaltech#mlops#mobile-development#no-code#power-platform#prompt-engineering#regtech#remote-jobs#sharepoint#telecoms#ux-design#vr-ar

Day 2: Microsoft Fabric Architecture & Core Concepts – The Backbone of a Unified Data Platform

Microsoft Fabric Architecture Explained | Core Concepts for Beginners (2025)
Published: July 3, 2025
🚀 Introduction
After understanding what Microsoft Fabric is in Day 1, let’s now open the hood and look at its powerful architecture. Microsoft Fabric isn’t just a collection of tools: it’s a cohesive, integrated platform built with modern data challenges in mind. In this article, we’ll break down…

#ai#azure#Data engineering with Fabric#Data platform 2025#Fabric#Fabric architecture explained#Fabric KQL#Fabric Lakehouse#Fabric SQL Engine#Fabric vs Synapse#Microsoft Fabric#Microsoft Fabric architecture#Microsoft Fabric compute engines#Microsoft Fabric for beginners#Microsoft Fabric governance#Microsoft Fabric Lakehouse#Microsoft Fabric tutorial#Microsoft Purview#microsoft-fabric#OneLake#Power BI Fabric#Spark engine in Microsoft Fabric#technology

Lingping Gao, Founder, CEO and Chairman of NetBrain Technologies – Interview Series

New Post has been published on https://thedigitalinsider.com/lingping-gao-founder-ceo-and-chairman-of-netbrain-technologies-interview-series/

Lingping Gao, Founder, Chief Executive Officer, and Chairman of NetBrain Technologies, established the company in 2004 with a mission to simplify network management. Prior to founding NetBrain, Mr. Gao was the Chief Network Architect at Thomson Financial, where he managed the complexities of large enterprise networks and experienced the challenges of maintaining network performance.

Mr. Gao has experience within multiple areas of business, including management, engineering, and international business within the networking, software, and automotive industries. He holds a BS and a BA in Automotive Engineering from Tsinghua University and an MS in Engineering from Yale University.

Founded in 2004, NetBrain is the market leader for network automation. Its technology platform provides network engineers with end-to-end visibility across their hybrid environments while automating their tasks across IT workflows. Today, more than 2,400 of the world’s largest enterprises and managed services providers use NetBrain to automate network documentation, accelerate troubleshooting, and strengthen network security—while integrating with a rich ecosystem of partners. NetBrain is headquartered in Burlington, Massachusetts, with employees located across the United States and Canada, Germany, the United Kingdom, India, and China.

What inspired you to start NetBrain in 2004? Were there any specific challenges you faced at Thomson Financial that led you to see a gap in network management?

Early in my career, I spent five years as a network engineer at Thomson Financial. I remember getting pulled into the NOC on my way out of the building one day and spending all night helping troubleshoot a problem. It turned out that a Cisco switch had been upgraded, and the upgrade had changed an important configuration. I remember wondering why it took so long, even though we had a whole team of smart engineers working on it. Surely, there must be a better way.

I realized that the reason troubleshooting was so difficult was a lack of data. During those long nights, engineers always ask the same few questions. What devices is my network made of? What does the baseline look like? Who made this, and why is it configured this way? I started NetBrain to make it easier to answer those questions.

I knew that if network data was more easily accessible, problems could be solved much more quickly. At that first job, you’d have to take a pager and a stack of network diagrams with you whenever you went on vacation! My vision for NetBrain was to give engineers fast and easy access to the network data they need to solve problems, and a way to easily automate their tasks so they can be scaled up and done proactively instead of reactively. If we can catch and fix an issue before it affects an end user, then no one has to spend all night troubleshooting! Now, 20 years later, my vision is coming to fruition with NetBrain.

NetBrain pioneered no-code automation for network management. What was the thought process behind developing a no-code solution instead of traditional scripting or programming-based automation?

We wanted to solve the critical challenges facing network operations teams by lowering the barrier to adopting and using network automation and making it accessible to all IT skill levels. We see no-code automation as a way to harness network engineers' own expertise to create automations, which makes the platform more useful and more ingrained in the culture of network operations.

Script-based DIY network automation requires an engineer who knows coding such as Python and has a high level of networking and CLI knowledge. There are just not enough people with that particular skill set (and they’re expensive!). Projects that pair coders with network engineers end up producing relatively few automations that can only address a limited set of problems instead of stopping recurrences.

No-code automation makes it easy enough to deploy and scale automation across hybrid networks that it can be used for many problems – really any repetitive task. This leads to a change in mindset where NetOps and other IT teams will look to automation as their first solution for most problems, rather than a “last resort” reserved for only a few high-priority issues.

AI is increasingly shaping enterprise IT operations. How does AI enhance NetBrain’s network automation capabilities, particularly in troubleshooting and security enforcement?

AI-powered features were a major update in NetBrain’s most recent version, Next-Gen Release 12 (R12). One of these capabilities includes a GenAI LLM Co-Pilot, which can assess, orchestrate, and summarize network automation results using natural language. This AI Co-Pilot serves as a technology translator, enabling users to engage with no-code automation without the need for extensive training. We plan to continue expanding our AI capabilities in upcoming releases.

Our chatbot also functions as a virtual self-service tool, allowing operations and security teams to gather essential network information, thereby conserving valuable NetOps resources for more strategic activities. Users can pose questions in natural language, facilitating intuitive problem resolution and automating troubleshooting, change management, and assessment workflows.

Broadly, we see automation as the way to scale NetOps processes up to machine scale and AI as the way people can interact with those automations and the network overall. Together, they help bridge the knowledge gap within IT teams by capturing years of expert experience and making it available to engineers of all levels. Nearly every enterprise has an engineer who knows how to solve every networking issue. But what do you do when that person is on vacation, in a different country, or unavailable? Automation and AI help share that person’s knowledge with the rest of the IT team without requiring deep engineering and coding skills.

Can you walk us through how NetBrain’s Digital Twin technology works and how it benefits organizations managing hybrid and multi-cloud networks?

NetBrain’s Digital Twin is a live model of a client’s multi-vendor networks that incorporates Intent, traffic forwarding, topology, and device data and supports no-code automation and dynamic maps. Unlike other digital twins, our intent layer houses a large collection of network configurations and service-level designs essential for effectively delivering any and all application requirements.

Another unique feature of our digital twin is that it provides real-time data across all layers, creating a more seamless, integrated system. Our customers are guaranteed live calculations of baseline and historical forwarding paths across multi-cloud and hybrid environments, as well as real-time topology and configurations of traditional, virtual, and cloud-based components with our hybrid network. This, combined with Network Auto-Discovery, removes the necessity of manually creating static network maps and continuously updates every component of the connected multi-vendor network. The benefit of real-time data is the ability to work more efficiently internally without the worry of human error while working in a single device that supports the discovery of traditional, virtual, and cloud-based devices.
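NetBrain's path calculation is proprietary and, per the description above, draws on intent, traffic forwarding, and device data. As a rough illustration of only the simplest ingredient, computing a candidate path across an auto-discovered topology, here is a breadth-first search over a hypothetical hybrid topology (all device names are invented). Real forwarding analysis follows each device's routing and policy decisions rather than counting hops.

```python
from collections import deque


def shortest_path(topology: dict, src: str, dst: str):
    """Return the fewest-hop path from src to dst, or None if dst is unreachable.

    topology maps each device to the devices it links to (an adjacency list),
    the kind of structure a discovery process might produce.
    """
    previous = {src: None}  # doubles as the visited set and the back-pointer map
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the back-pointers from dst to src, then reverse.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in topology.get(node, []):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


# Hypothetical hybrid topology: on-prem switching, a WAN edge, and a cloud gateway.
TOPOLOGY = {
    "access-sw1": ["core-rtr1"],
    "core-rtr1": ["access-sw1", "wan-edge", "fw1"],
    "fw1": ["core-rtr1", "wan-edge"],
    "wan-edge": ["core-rtr1", "fw1", "cloud-vgw"],
    "cloud-vgw": ["wan-edge", "app-subnet"],
    "app-subnet": ["cloud-vgw"],
}
```

Because discovery keeps the adjacency data current, rerunning the same search after a topology change gives an up-to-date path without anyone redrawing a static map.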

Many companies struggle with network downtime and troubleshooting. How does NetBrain’s AI-driven automation help reduce Mean Time to Repair (MTTR)?

NetBrain reduces MTTR by making troubleshooting more efficient and streamlined. Our AI-powered automation does this in several ways:

Automatically create shareable incident summary dashboards

Conduct automated monitoring to detect troubleshooting issues before they affect a user

Automatically conduct basic diagnostic tests whenever a ticket is opened

Automatically close tickets

Suggest remediations or possible causes for issues

Give other IT teams easier access to network data

Even small time savings compound quickly at scale – one of our customers estimated that NetBrain saved them 16,000 troubleshooting hours in 2022 on about 63,000 tickets by automating a series of routine diagnostic tests. All in all, these capabilities make troubleshooting more efficient and reduce MTTR directly. They also enable level 1 engineers to solve more problems on their own and reduce escalations. This is often called “shifting left.” It frees up more time for senior engineers to spend on more difficult troubleshooting.
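The customer's figures imply an average saving per ticket. A quick check, treating the quoted "about 63,000 tickets" as exact:

```latex
\frac{16{,}000\ \text{h}}{63{,}000\ \text{tickets}} \approx 0.254\ \text{h/ticket} = 0.254 \times 60\ \text{min/ticket} \approx 15\ \text{min/ticket}
```

Roughly a quarter of an hour per ticket is a small saving in isolation, which is exactly why the total only becomes large at this ticket volume.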

With the rise of hybrid cloud and SDN environments, how does NetBrain ensure end-to-end network observability and compliance across diverse infrastructures?

NetBrain ensures comprehensive network observability and compliance across hybrid cloud and SDN environments. We seamlessly support multi-cloud infrastructures like AWS, Microsoft Azure, and Google Cloud Platform, as well as traditional networks, SD-WAN, and SDN deployments.

Our platform enables clients to monitor cloud configuration changes in real time, automate continuous compliance assessments, and track evolving network configurations through an intuitive dashboard. Additionally, NetBrain provides multi-layered security observability, continuously evaluating cloud security across network, server, data, and application layers.

For SDN fabrics, NetBrain enhances visibility and makes SDN knowledge easily shareable across teams. By leveraging automation, organizations can scale SDN expertise while accelerating incident response. Our “Shift Left” approach proactively identifies root causes and resolves data center issues earlier in the network support lifecycle, significantly reducing MTTR.

How has NetBrain adapted to new cybersecurity challenges, especially with growing concerns about network security vulnerabilities?

Cyber threats are evolving rapidly, and traditional, reactive security approaches can no longer keep up. NetBrain has adapted by making network security proactive and automated, helping IT find misconfigurations and vulnerabilities before they can be exploited by attackers.

We offer Triple Defense Change Management, which validates every network change against security policies before, during, and after implementation. This ensures compliance and prevents unintended exposure. Our automation also continuously audits configurations, detects drift, and integrates with ITSM and security platforms to enforce best practices in real time.
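At its core, configuration drift detection compares a device's running configuration against an approved baseline (a "golden" config). NetBrain's implementation is not described in the interview; the sketch below uses Python's standard `difflib.ndiff` on two hypothetical Cisco-style snippets to show the idea. A production tool would also normalize line ordering, ignore volatile lines such as timestamps, and evaluate semantic policy rules rather than raw text.

```python
import difflib


def detect_drift(golden: str, running: str) -> list:
    """Return lines removed from ('- ') or added to ('+ ') the golden baseline."""
    diff = difflib.ndiff(golden.splitlines(), running.splitlines())
    # ndiff prefixes unchanged lines with '  ' and intraline hints with '? ';
    # keep only the real additions and removals.
    return [line for line in diff if line.startswith(("- ", "+ "))]


# Hypothetical golden baseline and running config for one router.
GOLDEN = """hostname edge-rtr1
no ip http server
snmp-server community R3adOnly RO
ntp server 10.0.0.1"""

RUNNING = """hostname edge-rtr1
ip http server
snmp-server community R3adOnly RO
ntp server 10.0.0.1"""
```

Here `detect_drift(GOLDEN, RUNNING)` flags that the HTTP server was re-enabled; the same comparison run before, during, and after a change window is one simple way to picture the "validate at every stage" idea.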

By leveraging AI and automation, NetBrain helps enterprises reduce human error, improve response times, and prevent security gaps, ensuring networks remain secure without adding operational overhead.

Given NetBrain’s ability to eliminate outages and improve security enforcement, do you see a future where AI-driven networks become fully autonomous?

As AI-driven networks continue to advance, they are gradually replacing traditional networking methods. However, full autonomy remains a future possibility rather than an immediate reality.

AI plays a crucial role in streamlining NetOps by automating labor-intensive tasks. For example, identifying and cataloging IT infrastructure components—traditionally a time-consuming process—can now be significantly accelerated. With AI-powered Digital Twin technology, tasks like diagnosing a BGP tunnel issue can be reduced from two hours to just ten minutes. AI also helps bridge the knowledge gap within IT teams by capturing and distributing years of expert experience to engineers of all levels. When an issue arises, AI can not only assist with diagnosis but also recommend corrective actions, next steps, and follow-up procedures—dramatically reducing response times and enabling teams to resolve problems faster.

That said, AI still has limitations. While it can analyze data, suggest optimizations, and automate certain processes, it cannot make decisions, take accountability, or approve network changes without human oversight. Given the complexity and high stakes of enterprise networking, AI’s recommendations must be validated by engineers to prevent costly errors and downtime. Until AI can demonstrate greater reliability and contextual decision-making, fully autonomous networks will remain an aspiration rather than a reality.

NetBrain now serves over 2,500 enterprise customers, including one-third of Fortune 500 companies. What do you think has been the key to your success in scaling and gaining enterprise adoption?

Our success comes from fundamentally transforming how enterprises manage their networks. Traditional, reactive troubleshooting no longer scales, so we pioneered no-code network automation to make network operations proactive, not just reactive.

A key differentiator is our Digital Twin, which provides real-time visibility into the entire hybrid network, allowing teams to automate diagnostics, enforce golden engineering standards, and prevent outages before they happen. Combined with our ITSM-integrated troubleshooting and Triple Defense Change Management, enterprises can scale automation across even the most complex environments—without requiring an army of developers.

Ultimately, NetBrain makes automation accessible, enabling teams to resolve issues faster, enforce design intent, and keep business-critical applications running smoothly. Automation combined with accurate network mapping and deeper network insight lets us solve many NetOps challenges without additional overhead.

Looking ahead five years, how do you see the landscape of network automation evolving, and what role do you envision NetBrain playing in shaping the future of AI-driven network operations?

Over the next five years, network automation will move beyond scripted tasks and reactive troubleshooting to AI-driven, intent-based automation that dynamically adapts to changing network conditions. The days of manual diagnostics and fragmented tools are numbered — automation will be the backbone of network operations, ensuring resilience, security, and agility at scale.

AI will make that automation accessible and lower the barrier to usability at all levels in operations. It will make it easier to obtain and tailor network data into digestible and meaningful information so teams can reduce risk and gain efficiencies faster.

NetBrain is at the forefront of this evolution. Our Digital Twin provides a live model of the network, allowing AI to understand its design intent and enforce it proactively. We are pioneering GenAI-driven troubleshooting, self-healing networks, and deeper ITSM integrations so enterprises can shift from manual intervention to fully autonomous operations. Our vision is simple: make network automation intuitive, scalable, and indispensable — turning every engineer into an automation expert without requiring them to code.

In the next few years, AI-driven network operations won’t be a luxury; they will be a necessity. NetBrain is leading that charge, ensuring enterprises stay ahead of complexity while keeping networks secure, compliant, and always available.

Thank you for the great interview; readers who wish to learn more should visit NetBrain.

#000#2022#adoption#ai#AI-powered#applications#approach#assessment#attackers#automation#automotive#autonomous#AWS#azure#barrier#bridge#Building#Business#Canada#career#CEO#change#change management#chatbot#China#Cisco#Cloud#cloud platform#Cloud Security#code

[Fabric] ¿Por donde comienzo? OneLake intro

Microsoft viene causando gran revuelo desde sus lanzamientos en el evento MSBuild 2023. Las demos, videos, artículos y pruebas de concepto estan volando para conocer más y más en profundidad la plataforma.

Cada contenido que vamos encontrando nos cuenta sobre algun servicio o alguna feature, pero muchos me preguntaron "¿Por donde empiezo?" hay tantos nombres de servicios y tecnologías grandiosas que aturden un poco.

En este artículo vamos a introducirnos en el primer concepto para poder iniciar el camino para comprender a Fabric. Nos vamos a introducir en OneLake.

Si aún no conoces nada de Fabric te invito a pasar por mi post introductorio así te empapas un poco antes de comenzar.

Introducción

Para introducirnos en este nuevo mundo me gustaría comenzar aclarando que es necesaria una capacidad dedicada para usar Fabric. Hoy esto no es un problema para pruebas puesto que Microsoft liberó Fabric Trials que podemos activar en la configuración de inquilinos (tenant settings) de nuestro portal de administración.

Fabric groups the content we can create into experiences named after disciplines or tools, such as Power BI, Data Factory, Data Science and Data Engineering. These are simply ways of organizing content so we see what matters for our day-to-day work. At the end of the day, however, the project we work on lives in a workspace that holds a mix of items: reports, datasets, lakehouses, SQL endpoints, notebooks, pipelines, and so on.

To get started, we need to understand the Lakehouse and OneLake.

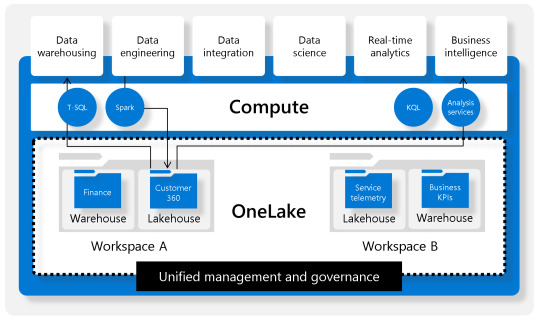

We can think of OneLake as a single storage account per organization. This single data source can hold projects organized into workspaces. Inside those projects we can create sub-lakes of the one lake, called Lakehouses. A Lakehouse item is nothing more than a portion of the larger OneLake. Lakehouses combine the SQL-based analytical capabilities of a relational data warehouse with the flexibility and scalability of a data lake: they can store data files in any format and provide analytical tools to read them. The image above serves as a structural reference.

Benefits

They use Spark and SQL engines to process data at scale and to support machine learning and predictive modeling workloads.

Data is stored in a schema-on-read format, which means the schema is defined when the data is read, as needed, rather than being fixed in advance.

They support ACID (Atomicity, Consistency, Isolation, Durability) transactions through tables in Delta Lake format, which keeps the data consistent and intact.
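To make schema-on-read concrete, here is a minimal Python sketch that does not touch Fabric at all: the raw CSV text is stored untouched, and each reader applies its own schema only when parsing it. The column names, both schemas and the `read_with_schema` helper are hypothetical illustrations; in a Lakehouse this interpretation step would be done by Spark or SQL, not by code like this.

```python
import csv
import io
from datetime import date

# Raw file as it might land in the lake: stored as-is, no schema enforced on write.
RAW_CSV = """order_id,order_date,amount
1,2023-05-23,19.99
2,2023-05-24,5.00
"""

# Hypothetical schemas: each consumer decides how to interpret the same columns.
TYPED_SCHEMA = {"order_id": int, "order_date": date.fromisoformat, "amount": float}
TEXT_SCHEMA = {"order_id": str, "amount": str}


def read_with_schema(raw: str, schema: dict) -> list[dict]:
    """Parse raw CSV text, applying the given schema only at read time."""
    reader = csv.DictReader(io.StringIO(raw))
    return [{col: cast(row[col]) for col, cast in schema.items()} for row in reader]


typed_rows = read_with_schema(RAW_CSV, TYPED_SCHEMA)
text_rows = read_with_schema(RAW_CSV, TEXT_SCHEMA)
print(typed_rows[0])
print(text_rows[0])
```

The file itself never changes; only its interpretation does. That is what lets a lake accept data before anyone has agreed on a schema for it.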

Creating a Lakehouse

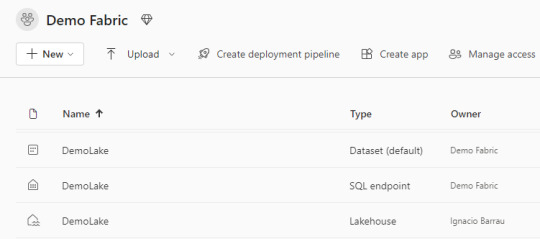

The first thing to use if we want to get the most out of Fabric is OneLake, and we take advantage of its capabilities by storing our data in Lakehouses. When we create a Lakehouse, we find that three items were created instead of one:

Lakehouse: holds the metadata and the Lakehouse's portion of OneLake storage. Here we find the structure of files and folders, plus table data we can preview.

Dataset (default): a data model that is created automatically and points to all the tables in the Lakehouse. Power BI reports can be built on top of this dataset, and its connection mode is Direct Lake.

SQL Endpoint: as the name suggests, an endpoint for connecting through SQL. We can use it from the web portal or copy its connection details to connect from an external tool. It runs Transact-SQL, and queries are read-only.

Lakehouse

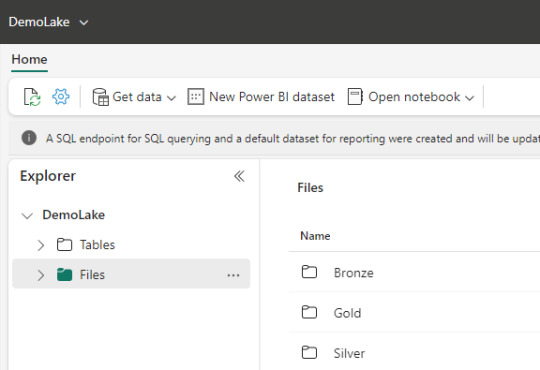

Inside this item we find two main sections.

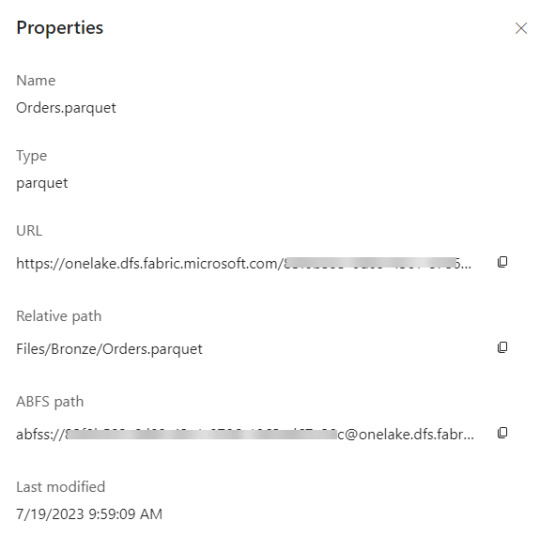

Files: this folder is the closest thing to a traditional data lake. We can create subfolders and store any type of file; think of it as a file system for organizing whatever files we want to analyze. Files in data formats such as Parquet or CSV can be previewed with a single click. As the image shows, we can implement a traditional medallion architecture (Bronze, Silver, Gold) right here. We can also confirm that everything lives in a single lake by opening a file's properties: we find an ABFS path, just as in other data lake technologies.
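The ABFS path that appears in a file's properties can also be built or taken apart in code. This sketch assumes OneLake paths follow the pattern `abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<item>.Lakehouse/Files/<path>`; confirm it against the path shown in your own file's properties. The workspace and lakehouse names (`SalesWorkspace`, `SalesLakehouse`) and both helper functions are hypothetical.

```python
from urllib.parse import urlparse

# Assumed OneLake DFS host name; verify against the ABFS path in your file properties.
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"


def onelake_files_path(workspace: str, lakehouse: str, relative_path: str) -> str:
    """Build an ABFS path to a file in a Lakehouse's Files area (hypothetical helper)."""
    return (
        f"abfss://{workspace}@{ONELAKE_HOST}/"
        f"{lakehouse}.Lakehouse/Files/{relative_path.lstrip('/')}"
    )


def split_onelake_path(path: str) -> dict:
    """Split an abfss:// path into workspace, host, item and path inside the item."""
    parsed = urlparse(path)
    workspace, _, host = parsed.netloc.partition("@")
    item, _, inner = parsed.path.lstrip("/").partition("/")
    return {"workspace": workspace, "host": host, "item": item, "path": inner}


# One folder per medallion layer inside Files (hypothetical layout).
for layer in ("Bronze", "Silver", "Gold"):
    print(onelake_files_path("SalesWorkspace", "SalesLakehouse", f"{layer}/orders.parquet"))

print(split_onelake_path(onelake_files_path("SalesWorkspace", "SalesLakehouse", "Bronze/orders.parquet")))
```

Because every Lakehouse in the organization resolves to the same host, only the workspace and item segments change from one project to another.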

Tables: this area plays the role of a Spark catalog, that is, a metastore of relational data objects such as the tables or views of a database engine. It is based on the open-source Delta Lake table format. Delta lets us define a table schema in our Lakehouse that can then be queried with SQL. There are no subfolders here, only a database-style metastore, and for now there is exactly one per Lakehouse.
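The ACID guarantees of Delta tables come from a transaction log: each table keeps a `_delta_log` folder of numbered JSON commit files recording which data files were added or removed. The Python sketch below is a deliberately simplified toy of that idea, not the Delta Lake protocol (no checkpoints, schema metadata or real Parquet files); the helpers `commit` and `current_files` and the `part-*.parquet` names are hypothetical.

```python
import json
import os
import tempfile


def commit(log_dir: str, version: int, actions: list[dict]) -> bool:
    """Try to record commit `version`; return False if another writer already claimed it."""
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        # O_CREAT | O_EXCL: creation fails if the file exists, so only one writer wins a version.
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False
    # Simplification: a real log must also make the file contents visible atomically.
    with os.fdopen(fd, "w") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")
    return True


def current_files(log_dir: str) -> set[str]:
    """Replay commits in version order to find the data files in the latest table version."""
    files: set[str] = set()
    for name in sorted(n for n in os.listdir(log_dir) if n.endswith(".json")):
        with open(os.path.join(log_dir, name)) as f:
            for line in f:
                action = json.loads(line)
                if "add" in action:
                    files.add(action["add"]["path"])
                elif "remove" in action:
                    files.discard(action["remove"]["path"])
    return files


log_dir = tempfile.mkdtemp()
commit(log_dir, 0, [{"add": {"path": "part-000.parquet"}}])
# Version 1 rewrites the table: the remove and the add land together in one commit file.
commit(log_dir, 1, [{"remove": {"path": "part-000.parquet"}}, {"add": {"path": "part-001.parquet"}}])
# A concurrent writer that also tries version 1 is rejected instead of overwriting it.
conflict_ok = commit(log_dir, 1, [{"add": {"path": "part-002.parquet"}}])
print(current_files(log_dir), conflict_ok)
```

In the real protocol, readers see either a whole commit or none of it, and two writers can never claim the same version; this toy only demonstrates the second guarantee.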

Now that we know more about OneLake, we can begin our expedition through Fabric. The next step is data ingestion: you can keep reading about it in many places, or wait for our next post on the topic :)
