#Azure App Service Platform


Simplify Transactions and Boost Efficiency with Our Cash Collection Application

Manual cash collection can lead to inefficiencies and increased risks for businesses. Our cash collection application provides a streamlined solution designed to help businesses of all sizes manage cash more easily. Key features include automated invoicing, multi-channel payment options, and comprehensive analytics, all of which simplify the payment process and improve transparency. The application is built with a focus on usability and security, so every transaction is traceable and errors are reduced. With real-time insights and customizable settings, you can adapt the application to your business needs. Its reporting functions give you a bird’s-eye view of financial performance, helping you make data-driven decisions. Move beyond traditional, error-prone cash handling and adopt a digital approach. With our cash collection application, you can optimize cash flow and gain better financial control at every level of your organization.


10 Best Internal Developer Platforms (IDPs) (October 2024)

New Post has been published on https://thedigitalinsider.com/10-best-internal-developer-platforms-idps-october-2024/


Internal Developer Platforms (IDPs) are tools that help organizations optimize their development processes. As companies grapple with the complexities of cloud-native architectures, microservices, and the need for rapid deployment, IDPs offer a solution that streamlines workflows, automates repetitive tasks, and empowers developers to focus on what they do best – writing code.

This article explores the top internal developer platforms that are improving the way development teams work, deploy applications, and manage their infrastructure.

Qovery stands out as a powerful DevOps Automation Platform that aims to streamline the development process and reduce the need for extensive DevOps hiring. Built on top of Kubernetes, Qovery provides a comprehensive solution for provisioning and maintaining secure and compliant infrastructure in a fraction of the time typically required.

What sets Qovery apart is its focus on creating an exceptional developer experience while giving platform engineering teams the tools they need to maintain control and customization. The platform’s approach to abstracting away the complexities of underlying systems allows developers to concentrate on writing code and delivering value to their customers, significantly reducing the friction often associated with infrastructure management.

Key features of Qovery include:

Templates for standardizing deployments across the organization

Role-based access control (RBAC) for enhanced security and governance

GitOps support, enabling version-controlled infrastructure management

Self-service deployment capabilities for developers, promoting autonomy

Seamless integration with existing CI/CD pipelines for smooth workflow incorporation

Visit Qovery →

Humanitec offers a comprehensive internal developer platform that focuses on enabling self-service infrastructure and streamlining development workflows. By providing a suite of tools designed to reduce cognitive load and drive standardization, Humanitec addresses the challenges faced by modern development teams working with complex, distributed systems.

One of Humanitec’s standout features is its Platform Orchestrator, which integrates seamlessly with CI/CD pipelines to standardize configurations and workflows. This approach eliminates infrastructure bottlenecks and allows development teams to work more efficiently. Additionally, Humanitec’s commitment to accelerating time-to-market while maintaining enterprise-grade security controls makes it an attractive option for organizations of all sizes.

Humanitec’s key features include:

Score: A workload specification for defining resource requirements across any tech stack

Platform Orchestrator for seamless CI/CD pipeline integration

Humanitec Portal: A user-friendly interface for simplified infrastructure management

Dynamically generated, standardized application and infrastructure configurations

Enterprise-grade security controls for maintaining compliance and governance

Visit Humanitec →

OpsLevel takes a unique approach to internal developer platforms by focusing on creating a comprehensive service catalog and enhancing the efficiency of high-performing engineering teams. By providing a centralized platform for cataloging, measuring, and improving software development processes, OpsLevel helps organizations gain better visibility into their microservices architecture and maintain high standards across their development ecosystem.

What distinguishes OpsLevel is its emphasis on standards and scorecards, allowing teams to set and maintain development standards across their organization. This focus on continuous improvement and alignment with best practices helps teams identify areas for optimization and ensures consistent quality across all services.

OpsLevel’s key features include:

Comprehensive Service Catalog with AI-assisted service descriptions

Standards and Scorecards for setting and maintaining development benchmarks

Developer Self-Service capabilities for improved autonomy and productivity

Extensive integrations with various tools and services in the development stack

Clear visibility of services with automated dependency tracking and ownership management

Visit OpsLevel →

Backstage, originally created by Spotify and later open-sourced, has quickly become a frontrunner in the IDP space. This open-source framework for building developer portals provides a centralized platform for managing software catalogs, documentation, and developer workflows, making it an attractive option for organizations looking to create a customized internal platform.

What sets Backstage apart is its flexibility and extensibility. The platform’s plugin architecture allows teams to tailor the developer portal to their specific needs, integrating with existing tools and services seamlessly. This adaptability, combined with its strong community support, makes Backstage an excellent choice for organizations that value customization and have the resources to invest in platform development.

Key features of Backstage include:

Software Catalog for managing metadata about all software in an organization

Software Templates enabling standardized project creation and best practices enforcement

TechDocs, implementing a “docs like code” approach for easy maintenance of technical documentation

Extensible plugin architecture for customization and integration with existing tools

Large and active community support, ensuring continuous improvement and innovation

Visit Backstage →

Mia Platform offers a comprehensive IDP designed to optimize the delivery and lifecycle management of cloud-native applications. By providing a suite of services for platform teams, software engineers, and IT leaders, Mia Platform aims to enhance productivity, facilitate governance, and expedite delivery in complex cloud environments.

One of Mia Platform’s standout features is its Mia-Platform Console, which serves as a unified developer hub. This centralized console allows organizations to govern all projects in one place, industrialize DevOps operations, and accelerate the creation of microservices architectures. The platform’s approach to improving the overall developer experience (DevX) while maintaining robust governance capabilities makes it an attractive option for organizations looking to balance agility with control.

Mia Platform’s key features include:

Mia-Platform Console for centralized project governance and DevOps automation

Marketplace filled with ready-to-use templates and plug-and-play components

Fast Data Service enabling Digital Integration Hub architecture for improved performance

Built-in CI/CD capabilities for streamlined development and deployment

Comprehensive tools for microservices development and orchestration

Visit Mia Platform →

Coherence positions itself as a “full developer experience platform,” uniquely combining features of an IDP, ephemeral environments, and Platform as a Service (PaaS). This comprehensive approach supports the entire software development lifecycle, from initial development to testing and deployment of full-stack web applications.

What distinguishes Coherence is its holistic approach to the development process. By offering a solution that covers the full spectrum of development needs, including cloud IDE integration and PaaS capabilities, Coherence aims to provide a seamless experience for developers while simplifying complex processes for organizations. This all-encompassing platform is particularly valuable for teams looking to consolidate their toolchain and streamline their development workflow.

Key features of Coherence include:

Full-cycle platform supporting development, testing, and deployment processes

Ephemeral Environments as a Service, simplifying the creation of preview environments

Cloud IDE integration for collaborative and consistent development practices

PaaS capabilities that can be deployed within an organization’s cloud infrastructure

Simplified creation and management of various environment types, from development to production

Visit Coherence →

Facets offers a comprehensive IDP designed to unify developer and operations workflows. By accelerating software delivery and reducing cloud costs, Facets aims to address the challenges faced by multi-app engineering organizations dealing with complex cloud architectures.

One of Facets’ standout features is its no-code infrastructure automation, which allows users to create and manage cloud infrastructure through an intuitive interface. This approach democratizes infrastructure management, enabling team members with varying levels of technical expertise to contribute to the process. Additionally, Facets’ emphasis on reusable architecture blueprints helps organizations standardize best practices and accelerate project initiation.

Facets’ key features include:

A unified interface for centralized management of all infrastructure and applications

Automated environment provisioning for consistent setup across development stages

No-code infrastructure automation to simplify complex cloud management tasks

Comprehensive microservice catalog with dependency visualization for better system understanding

Reusable architecture blueprints to enforce best practices and accelerate new project setups

Visit Facets →

Bunnyshell positions itself as an Environments as a Service (EaaS) platform specifically designed for Kubernetes applications. By focusing on automating the development process and enabling developer self-service, Bunnyshell aims to simplify the complexities often associated with Kubernetes environments.

What distinguishes Bunnyshell is its emphasis on creating and managing ephemeral environments. This feature allows developers to spin up isolated, realistic environments for each pull request, significantly reducing integration issues and accelerating the feedback loop. The platform’s approach to cloud development environments also eliminates the need for powerful local machines, making it an attractive option for teams embracing remote or distributed work models.

Bunnyshell’s key features include:

Automatic preview environments for each pull request, enhancing code review processes

Self-service cloud development environments for on-demand access to resources

Comprehensive Infrastructure as Code support for defining complex environments

Seamless CI/CD integration with popular tools like GitHub, GitLab, and Jenkins

Real-time observability and logging capabilities for efficient debugging and monitoring

Visit Bunnyshell →

Portainer stands out as a universal container management platform designed to simplify the deployment, management, and monitoring of containerized applications. While not exclusively an Internal Developer Platform, Portainer’s focus on making container technologies accessible to users with varying levels of expertise makes it a valuable tool in the modern development ecosystem.

What sets Portainer apart is its user-friendly approach to container management. By providing an intuitive web interface that abstracts the complexities of container technologies, Portainer enables teams to manage Docker, Kubernetes, and Azure Container Instances (ACI) environments from a single platform. This unified approach is particularly beneficial for organizations transitioning to or expanding their use of containerized applications.

Key features of Portainer include:

A centralized management interface for multiple container environments, offering a single pane of glass view

Comprehensive container and image management capabilities for efficient resource utilization

Simplified Kubernetes support, making complex orchestration more accessible

Robust Role-Based Access Control (RBAC) for enhanced security and governance

Environment templates for quick deployment of pre-configured application stacks

Visit Portainer →

Appvia stands out as a specialized IDP that focuses on simplifying the adoption and management of Kubernetes and cloud-native technologies. By offering a comprehensive suite of tools that cater to both developers and operations teams, Appvia aims to strike a balance between developer empowerment and operational control in complex cloud environments.

What sets Appvia apart is its deep integration with Kubernetes and its commitment to making cloud-native technologies more accessible. The platform’s approach to automating complex tasks and providing self-service capabilities allows organizations to accelerate their cloud-native journey without compromising on security or governance. This makes Appvia particularly attractive for enterprises looking to adopt or expand their use of Kubernetes while maintaining strict compliance and security standards.

Appvia’s key features include:

Kubernetes-native architecture, providing seamless integration with existing Kubernetes ecosystems

Self-service portal for developers, enabling rapid provisioning of cloud resources and environments

Comprehensive governance and compliance tools to ensure adherence to organizational policies

Multi-cloud support, allowing for consistent management across various cloud providers

Advanced automation capabilities for CI/CD pipelines and infrastructure provisioning

Visit Appvia →

The Bottom Line

The landscape of internal developer platforms has evolved significantly, offering a wide range of solutions to address the complex challenges of modern software development. From open-source frameworks like Backstage to specialized platforms like Bunnyshell for Kubernetes environments, there’s a solution for every organization’s unique needs.

These platforms share a common goal: to streamline development processes, improve collaboration, and accelerate time-to-market. By automating routine tasks, providing self-service capabilities, and offering centralized management interfaces, IDPs enable development teams to focus on innovation rather than infrastructure management.

As cloud-native architectures and microservices continue to dominate the development landscape, adopting the right IDP can be a game-changer for businesses looking to stay competitive. Whether you’re a small startup or a large enterprise, investing in an internal developer platform can lead to significant improvements in productivity, cost-efficiency, and overall software quality.

When choosing an IDP, consider factors such as your team’s specific needs, existing technology stack, scalability requirements, and long-term development goals. The right platform will not only solve immediate challenges but also grow with your organization, supporting your development efforts well into the future.


Chipsy.io Backend Development: Unleashing the Power of Modern Technology

In the fast-evolving world of technology, businesses need robust, scalable, and secure backend systems to support their digital transformation. At Chipsy.io, we specialize in backend development, harnessing the power of cutting-edge technologies to build systems that drive your business forward.

Key Technologies

AWS: Leveraging Amazon Web Services (AWS), we provide scalable and flexible solutions that meet the demands of your business. From EC2 instances to Lambda functions, our expertise ensures your applications run smoothly and efficiently.

Azure: With Microsoft Azure, we deliver enterprise-grade solutions that integrate seamlessly with your existing infrastructure. Our services include everything from Azure App Service to Azure Functions, enabling rapid development and deployment.

Google Cloud Platform (GCP): Utilizing the power of GCP, we build highly scalable and resilient backend systems. Our capabilities include using Google Kubernetes Engine (GKE) for container orchestration and BigQuery for real-time analytics.

Best Practices

At Chipsy.io, we adhere to industry best practices to ensure the quality and reliability of our backend systems:

Microservices Architecture: We design our systems using a microservices architecture, allowing for independent development, deployment, and scaling of each service.

Continuous Integration/Continuous Deployment (CI/CD): Our CI/CD pipelines automate the testing and deployment process, ensuring rapid and reliable releases.

Security: We implement robust security measures, including data encryption, secure APIs, and regular security audits, to protect your sensitive information.

Monitoring and Logging: Our systems include comprehensive monitoring and logging solutions, providing real-time insights and facilitating quick issue resolution.
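Chipsy.io does not describe its logging stack. As a minimal sketch, assuming Python and its standard `logging` module, structured logging can be as simple as emitting one JSON object per event so that a monitoring tool can index and search it. The `service` and `request_id` fields are hypothetical examples of request context, not part of any specific product.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Hypothetical context fields, supplied through the `extra` argument.
            "service": getattr(record, "service", None),
            "request_id": getattr(record, "request_id", None),
        }
        return json.dumps(payload)


def build_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers on repeat calls
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger("orders")
    log.info("order created", extra={"service": "orders", "request_id": "abc-123"})
```

Because every line is valid JSON, a log aggregator can filter by `request_id` to follow one request across several microservices.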

Future Trends

We stay ahead of the curve by continuously exploring emerging trends and technologies:

Serverless Computing: Our expertise in serverless architectures allows for building highly scalable applications without the need for server management.

Artificial Intelligence and Machine Learning: We integrate AI and ML capabilities into backend systems to provide advanced analytics and automation.

Edge Computing: By processing data closer to the source, we reduce latency and improve performance, especially for IoT applications.

Why Choose Chipsy.io?

Partnering with Chipsy.io for your backend development needs means gaining access to a team of experts dedicated to delivering high-quality, future-proof solutions. Our commitment to excellence and innovation ensures your business stays competitive in a digital-first world.

Ready to transform your backend systems? Contact Chipsy.io today and let us help you unleash the power of modern technology.


Technological advancements are driving a major shift in healthcare software development. Azure and serverless technologies have had a significant impact on how apps are developed and deployed. Today, there is a wide range of healthcare apps that can assess your health, monitor your body's condition, and suggest ways to improve it. Because you can access them anytime and anywhere, they are changing how healthcare is delivered.

Traditional healthcare software development often struggled with scalability limits and the burden of maintaining infrastructure. Serverless computing on Azure addresses many of these challenges. Let’s take a closer look at how serverless technology is changing healthcare software development on Azure.


AI Agent Development: How to Create Intelligent Virtual Assistants for Business Success

In today's digital landscape, businesses are increasingly turning to AI-powered virtual assistants to streamline operations, enhance customer service, and boost productivity. AI agent development is at the forefront of this transformation, enabling companies to create intelligent, responsive, and highly efficient virtual assistants. In this blog, we will explore how to develop AI agents and leverage them for business success.

Understanding AI Agents and Virtual Assistants

AI agents, or intelligent virtual assistants, are software programs that use artificial intelligence, machine learning, and natural language processing (NLP) to interact with users, automate tasks, and make decisions. These agents can be deployed across various platforms, including websites, mobile apps, and messaging applications, to improve customer engagement and operational efficiency.

Key Features of AI Agents

Natural Language Processing (NLP): Enables the assistant to understand and process human language.

Machine Learning (ML): Allows the assistant to improve over time based on user interactions.

Conversational AI: Facilitates human-like interactions.

Task Automation: Handles repetitive tasks like answering FAQs, scheduling appointments, and processing orders.

Integration Capabilities: Connects with CRM, ERP, and other business tools for seamless operations.

Steps to Develop an AI Virtual Assistant

1. Define Business Objectives

Before developing an AI agent, it is crucial to identify the business goals it will serve. Whether it's improving customer support, automating sales inquiries, or handling HR tasks, a well-defined purpose ensures the assistant aligns with organizational needs.

2. Choose the Right AI Technologies

Selecting the right technology stack is essential for building a powerful AI agent. Key technologies include:

NLP Frameworks: OpenAI's GPT, Google's Dialogflow, or Rasa.

Machine Learning Platforms: TensorFlow, PyTorch, or Scikit-learn.

Speech Recognition: Amazon Lex, IBM Watson, or Microsoft Azure Speech.

Cloud Services: AWS, Google Cloud, or Microsoft Azure.

3. Design the Conversation Flow

A well-structured conversation flow is crucial for user experience. Define intents (what the user wants) and responses to ensure the AI assistant provides accurate and helpful information. Tools like chatbot builders or decision trees help streamline this process.
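The intent-and-response idea can be sketched in a few lines. This is a minimal example in Python under loud assumptions: the intents, keywords, and replies are hypothetical, and simple keyword overlap stands in for the NLP model that a production assistant (for example one built with Dialogflow or Rasa) would use to recognize intents.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Intent:
    name: str
    keywords: set[str]  # words that signal this intent
    response: str       # canned reply for this intent


# Hypothetical intents for a customer-support assistant.
INTENTS = [
    Intent("check_order", {"order", "tracking", "shipped"},
           "Let me look up your order status."),
    Intent("book_appointment", {"appointment", "book", "schedule"},
           "Sure, which day works for you?"),
    Intent("opening_hours", {"hours", "open", "close"},
           "We are open 9:00 to 17:00, Monday to Friday."),
]

FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"


def tokenize(text: str) -> set[str]:
    """Lowercase the message and strip basic punctuation from each word."""
    return {word.strip(".,!?").lower() for word in text.split()}


def match_intent(text: str) -> Optional[Intent]:
    """Return the intent whose keywords overlap most with the message, if any."""
    words = tokenize(text)
    best, best_score = None, 0
    for intent in INTENTS:
        score = len(words & intent.keywords)
        if score > best_score:  # ties go to the intent listed first
            best, best_score = intent, score
    return best


def respond(text: str) -> str:
    intent = match_intent(text)
    return intent.response if intent else FALLBACK
```

For example, `respond("Where is my order? Has it shipped?")` matches `check_order`, while a message with no known keywords falls through to the fallback reply. Keeping intents as data rather than `if` statements makes the flow easier to review and extend.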

4. Train the AI Model

Training an AI assistant involves feeding it relevant datasets to improve accuracy. This may include:

Supervised Learning: Using labeled datasets for training.

Reinforcement Learning: Allowing the assistant to learn from interactions.

Continuous Learning: Updating models based on user feedback and new data.
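To make supervised learning concrete, here is a small sketch that trains an intent classifier on labeled example utterances. It swaps in a multinomial naive Bayes model written with the Python standard library, rather than any of the frameworks named above, and the training examples are hypothetical; a real assistant would need far more labeled data.

```python
import math
from collections import Counter, defaultdict


def tokenize_words(text):
    """Lowercase words with basic punctuation removed (duplicates kept)."""
    words = (w.strip(".,!?").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayesIntentClassifier:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, examples):
        # examples: list of (utterance, intent_label) pairs, i.e. labeled data
        self.label_counts = Counter(label for _, label in examples)
        self.word_counts = defaultdict(Counter)
        for text, label in examples:
            self.word_counts[label].update(tokenize_words(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.total = len(examples)
        return self

    def predict(self, text):
        # Ignore words never seen during training.
        words = [w for w in tokenize_words(text) if w in self.vocab]
        best_label, best_score = None, float("-inf")
        for label, count in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            score = math.log(count / self.total)  # log prior
            for w in words:
                score += math.log((counts[w] + 1) / denom)  # smoothed log likelihood
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled training data.
TRAINING_DATA = [
    ("where is my order", "check_order"),
    ("has my package shipped", "check_order"),
    ("track my order please", "check_order"),
    ("book an appointment for monday", "book_appointment"),
    ("can I schedule a visit", "book_appointment"),
    ("I want to book a slot", "book_appointment"),
]

model = NaiveBayesIntentClassifier().fit(TRAINING_DATA)
```

In this setup, continuous learning would amount to appending newly labeled conversations to the training data and calling `fit` again.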

5. Test and Optimize

Before deployment, rigorous testing is essential to refine the AI assistant's performance. Conduct:

User Testing: To evaluate usability and responsiveness.

A/B Testing: To compare different versions for effectiveness.

Performance Analysis: To measure speed, accuracy, and reliability.
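For A/B testing, one common way to decide whether version B really outperforms version A is a two-proportion z-test on a success metric, such as the share of conversations resolved without a human. The sketch below is a minimal Python version using only the standard library; the counts in the usage lines are hypothetical.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Two-sided z-test for a difference between two success rates.

    Returns (z, p_value), using the pooled proportion for the standard error.
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("no variation: every conversation succeeded or every one failed")
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - phi)
    return z, p_value


# Hypothetical results: A resolved 420 of 1,000 chats, B resolved 470 of 1,000.
z, p = two_proportion_z_test(420, 1000, 470, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers the p-value falls below the conventional 0.05 threshold, so the difference would usually be treated as real rather than noise. The test assumes each conversation is an independent trial, which may not hold if the same users appear in both groups.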

6. Deploy and Monitor

Once the AI assistant is live, continuous monitoring and optimization are necessary to enhance user experience. Use analytics to track interactions, identify issues, and implement improvements over time.
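Monitoring usually starts with a few aggregate metrics computed from interaction logs. The following Python sketch assumes a hypothetical log record holding the matched intent, the reply latency, and whether the conversation was resolved without a human; the field names and the "fallback" label are illustrative, not a standard format.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    # Hypothetical record logged by the assistant for each conversation.
    intent: str         # matched intent, or "fallback" if nothing matched
    latency_ms: float   # time taken to produce the reply
    resolved: bool      # True if no hand-off to a human agent was needed


def summarize(interactions):
    """Aggregate simple health metrics over a batch of interactions."""
    total = len(interactions)
    if total == 0:
        return {}
    fallbacks = sum(1 for i in interactions if i.intent == "fallback")
    return {
        "conversations": total,
        "fallback_rate": fallbacks / total,  # share of messages not understood
        "resolution_rate": sum(i.resolved for i in interactions) / total,
        "avg_latency_ms": mean(i.latency_ms for i in interactions),
    }
```

A rising fallback rate is often the first sign that users are asking for things the assistant was not designed to handle, which feeds back into the conversation design step.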

Benefits of AI Virtual Assistants for Businesses

1. Enhanced Customer Service

AI-powered virtual assistants provide 24/7 support, instantly responding to customer queries and reducing response times.

2. Increased Efficiency

By automating repetitive tasks, businesses can save time and resources, allowing employees to focus on higher-value tasks.

3. Cost Savings

AI assistants reduce the need for large customer support teams, leading to significant cost reductions.

4. Scalability

Unlike human agents, AI assistants can handle multiple conversations simultaneously, making them highly scalable solutions.

5. Data-Driven Insights

AI assistants gather valuable data on customer behavior and preferences, enabling businesses to make informed decisions.

Future Trends in AI Agent Development

1. Hyper-Personalization

AI assistants will leverage deep learning to offer more personalized interactions based on user history and preferences.

2. Voice and Multimodal AI

The integration of voice recognition and visual processing will make AI assistants more interactive and intuitive.

3. Emotional AI

Advancements in AI will enable virtual assistants to detect and respond to human emotions for more empathetic interactions.

4. Autonomous AI Agents

Future AI agents will not only respond to queries but also proactively assist users by predicting their needs and taking independent actions.

Conclusion

AI agent development is transforming the way businesses interact with customers and streamline operations. By leveraging cutting-edge AI technologies, companies can create intelligent virtual assistants that enhance efficiency, reduce costs, and drive business success. As AI continues to evolve, embracing AI-powered assistants will be essential for staying competitive in the digital era.


Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve from data without step-by-step instructions. They’re used in many areas, such as chatbots, fraud detection, and trend prediction.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

Cloud computing engineers create and manage cloud systems that allow businesses to store data and run apps without maintaining their own physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers connect development and operations teams so that software is delivered faster and more reliably. They streamline the release process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. A DevOps certification is a plus and can demonstrate your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used to track supply chains, manage healthcare records, and even run voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotic process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity

Expert Power Platform Services | Navignite LLP

Looking to streamline your business processes with custom applications? With over 10 years of extensive experience, our agency specializes in delivering top-notch Power Apps services that transform the way you operate. We harness the full potential of the Microsoft Power Platform to create solutions that are tailored to your unique needs.

Our Services Include:

Custom Power Apps Development: Building bespoke applications to address your specific business challenges.

Workflow Automation with Power Automate: Enhancing efficiency through automated workflows and processes.

Integration with Microsoft Suite: Seamless connectivity with SharePoint, Dynamics 365, Power BI, and other Microsoft tools.

Third-Party Integrations: Expertise in integrating Xero, QuickBooks, MYOB, and other external systems.

Data Migration & Management: Secure and efficient data handling using tools like XRM Toolbox.

Maintenance & Support: Ongoing support to ensure your applications run smoothly and effectively.

Our decade-long experience includes working with technologies like Azure Functions, Custom Web Services, and SQL Server, ensuring that we deliver robust and scalable solutions.

Why Choose Us?

Proven Expertise: Over 10 years of experience in Microsoft Dynamics CRM and Power Platform.

Tailored Solutions: Customized services that align with your business goals.

Comprehensive Skill Set: Proficient in plugin development, workflow management, and client-side scripting.

Client-Centric Approach: Dedicated to improving your productivity and simplifying tasks.

Boost your productivity and drive innovation with our expert Power Apps solutions.

Contact us today to elevate your business to the next level!

#artificial intelligence#power platform#microsoft power apps#microsoft power platform#powerplatform#power platform developers#microsoft power platform developer#msft power platform#dynamics 365 platform

5 Trends in ICT

Exploring the 5 ICT Trends Shaping the Future

The Information and Communication Technology (ICT) landscape is evolving at a rapid pace, driven by advancements that are transforming how we live, work, and interact. Here are five key trends in ICT that are making a significant impact:

1. Convergence of Technologies

Technologies are merging into integrated systems, like smart devices that combine communication, media, and internet functions into one seamless tool. This trend enhances user experience and drives innovation across various sectors.

Examples of convergence include smartphones combining communication and computing, smart homes using IoT, telemedicine linking healthcare with telecom, AR headsets overlaying digital content on the real world, and electric vehicles integrating AI and renewable energy.

2. Social Media

Social media platforms are central to modern communication and marketing, offering real-time interaction and advanced engagement tools. New features and analytics are making these platforms more powerful for personal and business use.

Social media examples linked to ICT trends include Facebook with cloud computing, TikTok using AI for personalized content, Instagram focusing on mobile technology, LinkedIn applying big data analytics, and YouTube leading in video streaming.

3. Mobile Technologies

Mobile technology is advancing with faster 5G networks and more sophisticated devices, transforming how we use smartphones and tablets. These improvements enable new applications and services, enhancing connectivity and user experiences.

Mobile technologies tied to ICT trends include 5G for high-speed connectivity, mobile payment apps in fintech, wearables linked to IoT, AR apps like Pokémon GO, and mobile cloud storage services like Google Drive.

4. Assistive Media

Assistive media technologies improve accessibility for people with disabilities, including tools like screen readers and voice recognition software. These innovations ensure that digital environments are navigable for everyone, promoting inclusivity.

Assistive media examples linked to ICT trends include screen readers for accessibility, AI-driven voice assistants, speech-to-text software using NLP, eye-tracking devices for HCI, and closed captioning on video platforms for digital media accessibility.

5. Cloud Computing

Cloud computing allows for scalable and flexible data storage and application hosting on remote servers. This trend supports software-as-a-service (SaaS) models and drives advancements in data analytics, cybersecurity, and collaborative tools.

Cloud computing examples related to ICT trends include AWS for IaaS, Google Drive for cloud storage, Microsoft Azure for PaaS, Salesforce for SaaS, and Dropbox for file synchronization.

Submitted by: Van Dexter G. Tirado

CLOUD COMPUTING: A CONCEPT OF NEW ERA FOR DATA SCIENCE

Cloud computing has been one of the most interesting and fastest-evolving topics in computing over the past decade. The idea of storing data on, or accessing software from, a remote computer you know nothing about confuses many users; even many people and organizations that use cloud computing daily say they do not really understand it. But the concept is not as confusing as it sounds. Cloud computing is a type of service in which computing resources are delivered over a network. In simple terms, it can be compared to the electricity supply we use every day: we do not have to worry about how electricity is generated or how it reaches our houses; we simply use it. The idea behind cloud computing is the same: people and organizations can simply use it. This concept is one of the major developments in computing of the decade.

Cloud computing is a service that lets a user in one location remotely access data, software, or applications hosted in another location. This is usually done through a web browser over a network, in most cases the internet, and browsers and internet access are available on almost every device people use today. If users want to open a file on their device but do not have the necessary software installed, they can use a cloud service to open it over the internet.

Cloud computing offers thousands of services, and one of the most widely used is cloud storage. These services are available to the public around the world and do not require users to install software on their devices; anyone can access and use them over the internet. Many are free up to a certain limit, after which users are billed for further usage. A few well-known cloud services are Dropbox, SugarSync, Amazon Cloud Drive, and Google Docs.

Finally, the availability of cloud services is not guaranteed, whether because of technical problems or because a service goes out of business. One commonly cited example is Megaupload, a file-hosting service that was shut down by the U.S. government and the FBI over allegations of illegal file sharing. As a result, the files in its storage were deleted, and customers could not get their files back.

Service Models

Cloud Software as a Service (SaaS)

• Use the provider's applications running on a cloud infrastructure
• Accessible from various client devices through a thin client interface such as a web browser
• Consumer does not manage or control the underlying cloud infrastructure, including network, servers, operating systems, and storage

Examples: Google Apps, Microsoft Office 365, Petrosoft, Onlive, GT Nexus, Marketo, Casengo, TradeCard, Rally Software, Salesforce, ExactTarget and CallidusCloud

Cloud Platform as a Service (PaaS)

• Cloud providers deliver a computing platform, typically including an operating system, programming language execution environment, database, and web server
• Application developers can develop and run their software solutions on a cloud platform without the cost and complexity of buying and managing the underlying hardware and software layers

Examples: AWS Elastic Beanstalk, Cloud Foundry, Heroku, Force.com, Engine Yard, Mendix, OpenShift, Google App Engine, AppScale, Windows Azure Cloud Services, OrangeScape and Jelastic.

Cloud Infrastructure as a Service (IaaS)

• Cloud provider offers processing, storage, networks, and other fundamental computing resources
• Consumer is able to deploy and run arbitrary software, which can include operating systems and applications

Examples: Amazon EC2, Google Compute Engine, HP Cloud, Joyent, Linode, NaviSite, Rackspace, Windows Azure, ReadySpace Cloud Services, and Internap Agile

Deployment Models

• Private Cloud: Cloud infrastructure is operated solely for an organization
• Community Cloud: Shared by several organizations and supports a specific community that has shared concerns
• Public Cloud: Cloud infrastructure is made available to the general public
• Hybrid Cloud: Cloud infrastructure is a composition of two or more clouds

Advantages of Cloud Computing

• Improved performance
• Better performance for large programs
• Virtually unlimited, on-demand storage capacity and computing power
• Reduced software costs
• Universal document access
• Only a computer with an internet connection is required
• Instant software updates
• No need to pay for or download an upgrade

Disadvantages of Cloud Computing

• Requires a constant Internet connection
• Does not work well with low-speed connections
• Even with a fast connection, web-based applications can sometimes be slower than accessing a similar software program on your desktop PC
• Everything about the program, from the interface to the current document, has to be sent back and forth between your computer and the computers in the cloud

About Rang Technologies: Headquartered in New Jersey, Rang Technologies has spent over a decade delivering innovative solutions and top talent to help businesses get the most out of the latest technologies in their digital transformation journey.

#CloudComputing#CloudTech#HybridCloud#ArtificialIntelligence#MachineLearning#Rangtechnologies#Ranghealthcare#Ranglifesciences

Discover how our team's deep expertise in Microsoft Azure can help you build, deploy, and manage modern web apps, AI solutions, data services, and more.

Advanced WordPress Security Services for Peace of Mind

A secure website is essential for any business, whether you’re running an e-commerce platform or a blog. At Atcuality, we provide advanced WordPress security services tailored to your specific needs. Our solutions include malware removal, vulnerability scanning, and backups to safeguard your data. We also offer 24/7 monitoring to ensure immediate response to any security breaches. With a focus on providing end-to-end protection, we help businesses maintain credibility and uptime. Partner with us and experience unmatched security for your WordPress site, ensuring that you stay one step ahead of cyber threats.

#seo marketing#seo services#artificial intelligence#seo agency#seo company#iot applications#azure cloud services#digital marketing#amazon web services#ai powered application#ai generated#technology#chatgpt#ai services#wordpress#business#iot#iot platform#iot development services#iotsolutions#techinnovation#automation#digitaltransformation#ai applications#amazon services#android app development#mobile application development#app development company#mobile app development company#mobile app development

Deploying Large Language Models on Kubernetes: A Comprehensive Guide

New Post has been published on https://thedigitalinsider.com/deploying-large-language-models-on-kubernetes-a-comprehensive-guide/

Large Language Models (LLMs) are capable of understanding and generating human-like text, making them invaluable for a wide range of applications, such as chatbots, content generation, and language translation.

However, deploying LLMs can be a challenging task due to their immense size and computational requirements. Kubernetes, an open-source container orchestration system, provides a powerful solution for deploying and managing LLMs at scale. In this technical blog, we’ll explore the process of deploying LLMs on Kubernetes, covering various aspects such as containerization, resource allocation, and scalability.

Understanding Large Language Models

Before diving into the deployment process, let’s briefly understand what Large Language Models are and why they are gaining so much attention.

Large Language Models (LLMs) are a type of neural network model trained on vast amounts of text data. These models learn to understand and generate human-like language by analyzing patterns and relationships within the training data. Some popular examples of LLMs include GPT (Generative Pre-trained Transformer), BERT (Bidirectional Encoder Representations from Transformers), and XLNet.

LLMs have achieved remarkable performance in various NLP tasks, such as text generation, language translation, and question answering. However, their massive size and computational requirements pose significant challenges for deployment and inference.

Why Kubernetes for LLM Deployment?

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides several benefits for deploying LLMs, including:

Scalability: Kubernetes allows you to scale your LLM deployment horizontally by adding or removing compute resources as needed, ensuring optimal resource utilization and performance.

Resource Management: Kubernetes enables efficient resource allocation and isolation, ensuring that your LLM deployment has access to the required compute, memory, and GPU resources.

High Availability: Kubernetes provides built-in mechanisms for self-healing, automatic rollouts, and rollbacks, ensuring that your LLM deployment remains highly available and resilient to failures.

Portability: Containerized LLM deployments can be easily moved between different environments, such as on-premises data centers or cloud platforms, without the need for extensive reconfiguration.

Ecosystem and Community Support: Kubernetes has a large and active community, providing a wealth of tools, libraries, and resources for deploying and managing complex applications like LLMs.

Preparing for LLM Deployment on Kubernetes

Before deploying an LLM on Kubernetes, there are several prerequisites to consider:

Kubernetes Cluster: You’ll need a Kubernetes cluster set up and running, either on-premises or on a cloud platform like Amazon Elastic Kubernetes Service (EKS), Google Kubernetes Engine (GKE), or Azure Kubernetes Service (AKS).

GPU Support: LLMs are computationally intensive and often require GPU acceleration for efficient inference. Ensure that your Kubernetes cluster has access to GPU resources, either through physical GPUs or cloud-based GPU instances.

Container Registry: You’ll need a container registry to store your LLM Docker images. Popular options include Docker Hub, Amazon Elastic Container Registry (ECR), Google Container Registry (GCR), or Azure Container Registry (ACR).

LLM Model Files: Obtain the pre-trained LLM model files (weights, configuration, and tokenizer) from the respective source or train your own model.

Containerization: Containerize your LLM application using Docker or a similar container runtime. This involves creating a Dockerfile that packages your LLM code, dependencies, and model files into a Docker image.

Deploying an LLM on Kubernetes

Once you have the prerequisites in place, you can proceed with deploying your LLM on Kubernetes. The deployment process typically involves the following steps:

Building the Docker Image

Build the Docker image for your LLM application using the provided Dockerfile and push it to your container registry.

Creating Kubernetes Resources

Define the Kubernetes resources required for your LLM deployment, such as Deployments, Services, ConfigMaps, and Secrets. These resources are typically defined using YAML or JSON manifests.

Configuring Resource Requirements

Specify the resource requirements for your LLM deployment, including CPU, memory, and GPU resources. This ensures that your deployment has access to the necessary compute resources for efficient inference.
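As a rough illustration of how these requirements are written, Kubernetes expresses CPU in cores or millicores (for example 500m) and memory with suffixes such as Gi. The sketch below is a hypothetical helper, not part of any Kubernetes client library, that converts a subset of that quantity notation into exact numbers and checks that each request stays within its limit. The resource values are illustrative assumptions, and exponent forms such as 1e3 are not handled.

```python
from fractions import Fraction

# Subset of Kubernetes quantity suffixes (assumed sufficient for this sketch).
# Binary suffixes are powers of 1024; decimal suffixes are powers of 1000;
# "m" means milli (1/1000), as in "500m" CPU.
_BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
_DECIMAL = {"m": Fraction(1, 1000), "k": 10**3, "M": 10**6, "G": 10**9,
            "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(quantity: str) -> Fraction:
    """Convert a quantity such as '500m' or '16Gi' to an exact number."""
    q = quantity.strip()
    # Check two-letter binary suffixes first so "Mi" is not read as "M".
    for suffix, factor in _BINARY.items():
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * factor
    for suffix, factor in _DECIMAL.items():
        if q.endswith(suffix):
            return Fraction(q[: -len(suffix)]) * factor
    return Fraction(q)  # plain number, e.g. "4" CPUs or "1" GPU


# Hypothetical requests/limits for an LLM inference container.
limits = {"cpu": "4", "memory": "16Gi", "nvidia.com/gpu": "1"}
requests = {"cpu": "500m", "memory": "8Gi"}

for name, value in requests.items():
    # Kubernetes rejects a container whose request exceeds its limit.
    assert parse_quantity(value) <= parse_quantity(limits[name]), name
print(parse_quantity("500m"), parse_quantity("16Gi"))
```

Using `Fraction` keeps values such as 500m exact, which avoids floating-point surprises when comparing requests with limits.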

Deploying to Kubernetes

Use the kubectl command-line tool or a Kubernetes management tool (e.g., Kubernetes Dashboard, Rancher, or Lens) to apply the Kubernetes manifests and deploy your LLM application.

Monitoring and Scaling

Monitor the performance and resource utilization of your LLM deployment using Kubernetes monitoring tools like Prometheus and Grafana. Adjust the resource allocation or scale your deployment as needed to meet the demand.

Example Deployment

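Let’s consider an example of deploying an open text-generation model on Kubernetes using Hugging Face’s pre-built Text Generation Inference (TGI) Docker image. GPT-3 itself is not openly available, so the manifests below actually load GPT-2 (MODEL_ID: gpt2); the gpt3 names are kept only as resource labels. We’ll assume that you have a Kubernetes cluster set up and configured with GPU support.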

Pull the Docker Image:

```bash
docker pull ghcr.io/huggingface/text-generation-inference:1.1.0
```

Create a Kubernetes Deployment:

Create a file named gpt3-deployment.yaml with the following content:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gpt3-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gpt3
  template:
    metadata:
      labels:
        app: gpt3
    spec:
      containers:
        - name: gpt3
          image: ghcr.io/huggingface/text-generation-inference:1.1.0
          ports:
            - containerPort: 8080   # matches the PORT variable below
          resources:
            limits:
              nvidia.com/gpu: 1
          env:
            - name: MODEL_ID
              value: gpt2
            - name: NUM_SHARD
              value: "1"
            - name: PORT
              value: "8080"
            - name: QUANTIZE
              value: bitsandbytes-nf4
```

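This Deployment runs one replica of the gpt3 container from the Text Generation Inference image and requests one NVIDIA GPU through the nvidia.com/gpu resource limit. The environment variables configure the inference server: MODEL_ID selects the model to load (gpt2, i.e. GPT-2, not GPT-3), NUM_SHARD sets how many GPUs the model is sharded across, PORT sets the port the server listens on, and QUANTIZE enables 4-bit NF4 quantization via bitsandbytes.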

Create a Kubernetes Service:

Create a file named gpt3-service.yaml with the following content:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: gpt3-service
spec:
  selector:
    app: gpt3
  ports:
    - port: 80
      targetPort: 8080
  type: LoadBalancer
```

This service exposes the gpt3 deployment on port 80 and creates a LoadBalancer type service to make the inference server accessible from outside the Kubernetes cluster.

Deploy to Kubernetes:

Apply the Kubernetes manifests using the kubectl command:

```bash
kubectl apply -f gpt3-deployment.yaml
kubectl apply -f gpt3-service.yaml
```

Monitor the Deployment:

Monitor the deployment progress using the following commands:

```bash
kubectl get pods
kubectl logs <pod_name>
```

Once the pod is running and the logs indicate that the model is loaded and ready, you can obtain the external IP address of the LoadBalancer service:

```bash
kubectl get service gpt3-service
```

Test the Deployment:

You can now send requests to the inference server using the external IP address and port obtained from the previous step. For example, using curl:

```bash
curl -X POST http://<external_ip>:80/generate \
  -H 'Content-Type: application/json' \
  -d '{"inputs": "The quick brown fox", "parameters": {"max_new_tokens": 50}}'
```

This command sends a text generation request to the inference server, asking it to continue the prompt “The quick brown fox” with up to 50 additional tokens.
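The same request can also be sent from application code. This is a minimal sketch using only the Python standard library; it assumes the TGI /generate endpoint and JSON body used in the curl example, and BASE_URL is a placeholder you would replace with the LoadBalancer's external IP. The function names are illustrative, not part of any Hugging Face client.

```python
import json
import urllib.request

# Placeholder: substitute the EXTERNAL-IP reported by `kubectl get service gpt3-service`.
BASE_URL = "http://EXTERNAL_IP:80"


def build_generate_request(base_url, prompt, max_new_tokens=50):
    """Build a POST request for TGI's /generate endpoint (same body as the curl call)."""
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        url=f"{base_url}/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url, prompt, max_new_tokens=50, timeout=60.0):
    """Send the request and return the text the server generated."""
    request = build_generate_request(base_url, prompt, max_new_tokens)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # TGI's /generate response is a JSON object with a "generated_text" field.
    return body["generated_text"]
```

Calling `generate(BASE_URL, "The quick brown fox")` against a running deployment returns the continuation. Building the request in a separate function lets you inspect or unit-test the payload without a live server.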

Advanced topics you should be aware of

While the example above demonstrates a basic deployment of an LLM on Kubernetes, there are several advanced topics and considerations to explore:

1. Autoscaling

Kubernetes supports horizontal and vertical autoscaling, which can be beneficial for LLM deployments due to their variable computational demands. Horizontal autoscaling allows you to automatically scale the number of replicas (pods) based on metrics like CPU or memory utilization. Vertical autoscaling, on the other hand, allows you to dynamically adjust the resource requests and limits for your containers.

To enable autoscaling, you can use the Kubernetes Horizontal Pod Autoscaler (HPA) and Vertical Pod Autoscaler (VPA). These components monitor your deployment and automatically scale resources based on predefined rules and thresholds.
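For intuition, the Horizontal Pod Autoscaler's core rule, as described in the Kubernetes documentation, is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (10% by default). The function below is a simplified sketch of that rule; it ignores stabilization windows, missing metrics, and pod readiness, and the min/max bounds are assumed values standing in for minReplicas/maxReplicas.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: keep the current replica count.
    if abs(1.0 - ratio) <= tolerance:
        return current_replicas
    # Scale proportionally to how far the metric is from its target.
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


# 2 replicas averaging 90% CPU against a 60% target -> scale out.
print(desired_replicas(2, 90, 60))  # 3
```

Because LLM pods take a long time to start and load weights, conservative maximums and scale-down behavior matter more here than for typical stateless services.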

2. GPU Scheduling and Sharing

In scenarios where multiple LLM deployments or other GPU-intensive workloads are running on the same Kubernetes cluster, efficient GPU scheduling and sharing become crucial. Kubernetes provides several mechanisms to ensure fair and efficient GPU utilization, such as GPU device plugins, node selectors, and resource limits.

You can also use GPU partitioning features such as NVIDIA Multi-Instance GPU (MIG), which splits a supported GPU into isolated instances that Kubernetes can schedule as separate resources, to share GPUs among multiple workloads.

3. Model Parallelism and Sharding

Some LLMs, particularly those with billions or trillions of parameters, may not fit entirely into the memory of a single GPU or even a single node. In such cases, you can employ model parallelism and sharding techniques to distribute the model across multiple GPUs or nodes.

Model parallelism involves splitting the model architecture into different components (e.g., encoder, decoder) and distributing them across multiple devices. Sharding, on the other hand, involves partitioning the model parameters and distributing them across multiple devices or nodes.
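To make sharding concrete, the toy sketch below splits a weight matrix column-wise into shards (one per hypothetical "device"), multiplies the full input by each shard, and concatenates the partial outputs; the result matches the unsharded product. This is a plain-Python illustration of column-wise tensor sharding under simplifying assumptions, not how any particular serving framework implements it; real systems run the shards on separate GPUs and gather results with collective operations.

```python
def matmul(x, w):
    """Plain matrix product: x is m x k, w is k x n (both lists of rows)."""
    return [
        [sum(row[t] * w[t][j] for t in range(len(w))) for j in range(len(w[0]))]
        for row in x
    ]


def shard_columns(w, num_shards):
    """Split w column-wise into up to num_shards blocks (one per device)."""
    n = len(w[0])
    size = -(-n // num_shards)  # ceiling division so every column is assigned
    return [[row[start:start + size] for row in w] for start in range(0, n, size)]


def sharded_matmul(x, shards):
    """Each 'device' multiplies the full input by its own shard; the partial
    outputs are then concatenated column-wise (an all-gather on real hardware)."""
    partials = [matmul(x, shard) for shard in shards]
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


x = [[1, 2], [3, 4]]
w = [[1, 2, 3, 4], [5, 6, 7, 8]]
print(sharded_matmul(x, shard_columns(w, 2)) == matmul(x, w))  # True
```

Each shard holds only a fraction of the weights, which is what lets a model too large for one GPU fit across several.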

Kubernetes provides mechanisms like StatefulSets and Custom Resource Definitions (CRDs) to manage and orchestrate distributed LLM deployments with model parallelism and sharding.

4. Fine-tuning and Continuous Learning

In many cases, pre-trained LLMs may need to be fine-tuned or continuously trained on domain-specific data to improve their performance for specific tasks or domains. Kubernetes can facilitate this process by providing a scalable and resilient platform for running fine-tuning or continuous learning workloads.

You can use batch-processing and ML frameworks that run on Kubernetes, such as Apache Spark or Kubeflow, to run distributed fine-tuning or training jobs on your LLMs. Additionally, you can roll fine-tuned or continuously trained models into your inference deployments using deployment strategies like rolling updates (built into Kubernetes Deployments) or blue/green deployments.

5. Monitoring and Observability

Monitoring and observability are crucial aspects of any production deployment, including LLM deployments on Kubernetes. Kubernetes does not ship a full monitoring stack, but it exposes resource metrics and integrates well with popular open-source tools: Prometheus for metrics collection, Grafana for dashboards, Elasticsearch for logs, and Jaeger for distributed tracing.

You can monitor various metrics related to your LLM deployments, such as CPU and memory utilization, GPU usage, inference latency, and throughput. Additionally, you can collect and analyze application-level logs and traces to gain insights into the behavior and performance of your LLM models.
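In production these numbers usually come from Prometheus histograms, but the underlying arithmetic is simple. The sketch below computes request throughput, token throughput, and nearest-rank latency percentiles from raw samples collected over one time window; the function names and the sample window are hypothetical.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms, window_s, tokens_generated):
    """Throughput and latency percentiles for requests completed in one window."""
    return {
        "requests_per_s": len(latencies_ms) / window_s,
        "tokens_per_s": tokens_generated / window_s,
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
    }


# Hypothetical window: 20 requests over 10 s that produced 1,000 tokens in total.
stats = summarize(list(range(10, 210, 10)), window_s=10, tokens_generated=1000)
print(stats)
```

Tail percentiles such as p95 are usually more informative than averages for LLM inference, because long prompts or outputs create a few very slow requests.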

6. Security and Compliance

Depending on your use case and the sensitivity of the data involved, you may need to consider security and compliance aspects when deploying LLMs on Kubernetes. Kubernetes provides several features and integrations to enhance security, such as network policies, role-based access control (RBAC), secrets management, and integration with external security solutions like HashiCorp Vault or AWS Secrets Manager.

Additionally, if you’re deploying LLMs in regulated industries or handling sensitive data, you may need to ensure compliance with relevant standards and regulations, such as GDPR, HIPAA, or PCI-DSS.

7. Multi-Cloud and Hybrid Deployments

While this blog post focuses on deploying LLMs on a single Kubernetes cluster, you may need to consider multi-cloud or hybrid deployments in some scenarios. Kubernetes provides a consistent platform for deploying and managing applications across different cloud providers and on-premises data centers.

You can leverage Kubernetes federation or multi-cluster management tools like KubeFed or GKE Hub to manage and orchestrate LLM deployments across multiple Kubernetes clusters spanning different cloud providers or hybrid environments.

These advanced topics highlight the flexibility and scalability of Kubernetes for deploying and managing LLMs.

Conclusion

Deploying Large Language Models (LLMs) on Kubernetes offers numerous benefits, including scalability, resource management, high availability, and portability. By following the steps outlined in this technical blog, you can containerize your LLM application, define the necessary Kubernetes resources, and deploy it to a Kubernetes cluster.

However, deploying LLMs on Kubernetes is just the first step. As your application grows and your requirements evolve, you may need to explore advanced topics such as autoscaling, GPU scheduling, model parallelism, fine-tuning, monitoring, security, and multi-cloud deployments.

Kubernetes provides a robust and extensible platform for deploying and managing LLMs, enabling you to build reliable, scalable, and secure applications.

#access control#Amazon#Amazon Elastic Kubernetes Service#amd#Apache#Apache Spark#app#applications#apps#architecture#Artificial Intelligence#attention#AWS#azure#Behavior#BERT#Blog#Blue#Building#chatbots#Cloud#cloud platform#cloud providers#cluster#clusters#code#command#Community#compliance#comprehensive

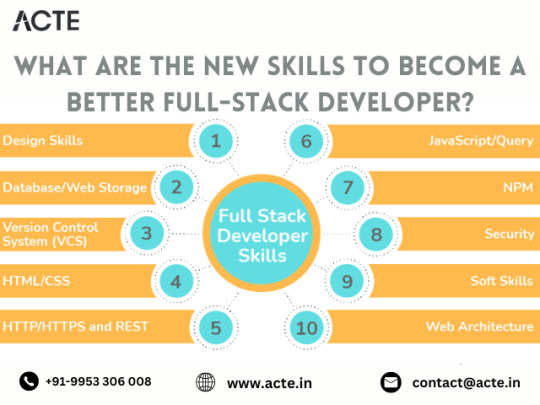

Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of tools like Docker (for containerizing services) and Kubernetes (for orchestrating them) empowers developers to architect, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.
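To show what "focusing solely on code" looks like in practice, here is a minimal sketch of an AWS Lambda function written in Python. Lambda calls a handler with `(event, context)`; the handler name `lambda_handler` and the response shape assume an API Gateway proxy integration, which expects `statusCode`, `headers`, and a string `body`. The greeting logic is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting; no server setup or scaling code is needed."""
    # With an API Gateway proxy integration, query parameters arrive under
    # "queryStringParameters", which is None when the request has none.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The platform provisions capacity per invocation, so the same function handles one request or thousands without any change to the code.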

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.
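The "fetch precisely the data they need" idea can be illustrated without a GraphQL server. The toy function below applies a nested field selection, loosely mirroring a query such as `{ user { name posts { title } } }`, to a backend record and drops every unrequested field. It is a hypothetical illustration of field selection only; a real GraphQL server also parses the query text, validates it against a schema, and resolves each field.

```python
def select(data, selection):
    """Keep only the fields named in `selection`.

    `selection` maps each field name to None (a leaf) or to a nested selection.
    """
    if isinstance(data, list):
        # Apply the same selection to every item in a list field.
        return [select(item, selection) for item in data]
    return {
        field: data[field] if sub is None else select(data[field], sub)
        for field, sub in selection.items()
    }


# Hypothetical backend record with more fields than the client needs.
record = {
    "user": {
        "id": 1,
        "name": "Ada",
        "email": "ada@example.com",
        "posts": [{"title": "Hello", "body": "A long post body..."}],
    }
}
query = {"user": {"name": None, "posts": {"title": None}}}
print(select(record, query))
```

Only `name` and each post's `title` are returned, which is the over-fetching reduction the paragraph above describes.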

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Explore libraries like TensorFlow.js, which runs models in the browser or in Node.js, and PyTorch on the server side to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Continuous Delivery/Deployment (CI/CD) pipelines speed up development workflows while protecting code quality and reliability. Tools like Jenkins, CircleCI, and GitLab CI/CD automate testing, integration, and deployment, so every change is built and tested before it ships. With CI/CD in place, developers can release updates and features with confidence and iterate faster.

#full stack developer#education#information#full stack web development#front end development#web development#frameworks#technology#backend#full stack developer course


Advanced Techniques in Full-Stack Development

Let's delve deeper into more advanced techniques and concepts in full-stack development:

1. Server-Side Rendering (SSR) and Static Site Generation (SSG):

SSR: Rendering web pages on the server side to improve performance and SEO by delivering fully rendered pages to the client.

SSG: Generating static HTML files at build time, which speeds up page delivery and reduces server load.
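The difference between the two approaches comes down to when the template is rendered. In this minimal Python sketch (hypothetical page data and template, no real framework), `build_site` renders every page once at build time, as an SSG would, while `render_on_request` renders per request, as an SSR server would.

```python
import tempfile
from html import escape
from pathlib import Path

# Hypothetical page data known at build time (e.g. loaded from Markdown or a CMS).
PAGES = {
    "index": {"title": "Home", "body": "Welcome!"},
    "about": {"title": "About", "body": "We build fast sites."},
}

TEMPLATE = (
    "<!doctype html><html><head><title>{title}</title></head>"
    "<body><h1>{title}</h1><p>{body}</p></body></html>"
)


def render(page: dict) -> str:
    return TEMPLATE.format(title=escape(page["title"]), body=escape(page["body"]))


def build_site(pages: dict, out_dir: Path) -> list:
    """SSG: render every page once, at build time, into static HTML files."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for slug, page in pages.items():
        path = out_dir / f"{slug}.html"
        path.write_text(render(page), encoding="utf-8")
        written.append(path)
    return written


def render_on_request(pages: dict, slug: str) -> str:
    """SSR: render the same template on the server for each incoming request."""
    return render(pages[slug])


if __name__ == "__main__":
    out = Path(tempfile.mkdtemp())
    for p in build_site(PAGES, out):
        print("built", p)
```

The generated files can then be served by any static host or CDN without running application code per request.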

2. WebAssembly:

WebAssembly (Wasm): A binary instruction format for a stack-based virtual machine. It lets code compiled from languages like C, C++, and Rust run in web browsers at near-native speed alongside JavaScript.

3. Progressive Web Apps (PWAs) Enhancements:

Background Sync: Letting a PWA defer data synchronization until the device has a stable connection; the service worker can complete the sync even after the user has closed the page.

Web Push Notifications: Implementing push notifications to engage users even when they are not actively using the application.

4. State Management:

Redux and MobX: State management libraries, most often used with React, for managing complex application state predictably.

Reactive Programming: Utilizing RxJS or other reactive programming libraries to handle asynchronous data streams and events in real-time applications.
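Redux's core idea (a single store, a pure reducer, and dispatched actions) is language-agnostic. The following Python sketch mirrors that pattern only; it is not Redux's API, and the `State` fields and action names are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Callable, List


@dataclass(frozen=True)
class State:
    count: int = 0
    todos: tuple = ()


def reducer(state: State, action: dict) -> State:
    """Pure function (state, action) -> new state; the old state is never mutated."""
    if action["type"] == "increment":
        return replace(state, count=state.count + 1)
    if action["type"] == "add_todo":
        return replace(state, todos=state.todos + (action["text"],))
    return state  # unknown actions leave the state unchanged


class Store:
    """Minimal store: holds the state, applies the reducer, notifies subscribers."""

    def __init__(self, reducer: Callable[[State, dict], State], initial: State):
        self._reducer = reducer
        self.state = initial
        self._listeners: List[Callable[[State], None]] = []

    def subscribe(self, listener: Callable[[State], None]) -> None:
        self._listeners.append(listener)

    def dispatch(self, action: dict) -> None:
        self.state = self._reducer(self.state, action)
        for listener in self._listeners:
            listener(self.state)
```

Because every change flows through `dispatch`, state transitions are easy to log, replay, and test in isolation.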

5. WebSockets and WebRTC:

WebSockets: Enabling real-time, bidirectional communication between clients and servers for applications requiring constant data updates.

WebRTC: Facilitating real-time communication, such as video chat, directly between web browsers without the need for plugins or additional software.
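A WebSocket connection starts as an ordinary HTTP request that the server upgrades. This Python sketch computes the `Sec-WebSocket-Accept` value defined in RFC 6455 (SHA-1 of the client's key concatenated with a fixed GUID, then Base64-encoded); framing, masking, and the rest of the protocol are omitted.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key: str) -> str:
    """Value the server returns in Sec-WebSocket-Accept for a client's Sec-WebSocket-Key."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def handshake_response(sec_websocket_key: str) -> str:
    """HTTP 101 response that switches the connection from HTTP to WebSocket."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {websocket_accept(sec_websocket_key)}\r\n"
        "\r\n"
    )


if __name__ == "__main__":
    # Sample key from RFC 6455.
    print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```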

6. Caching Strategies:

Content Delivery Networks (CDN): Leveraging CDNs to cache and distribute content globally, improving website loading speeds for users worldwide.

Service Workers: Using service workers to cache assets and data, providing offline access and improving performance for returning visitors.
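Service workers implement caching in JavaScript with the Cache API; the Python sketch below only models the cache-first strategy: answer from the cache while a response is still fresh according to its `Cache-Control: max-age`, otherwise go to the network. The `fetch` callback and injectable clock are assumptions made for testability, and revalidation (ETags, `no-cache`) is ignored.

```python
import re
import time
from typing import Callable, Dict, Tuple


def max_age(cache_control: str) -> int:
    """Seconds a response may be reused, from a Cache-Control header (0 if not cacheable)."""
    if "no-store" in cache_control:
        return 0
    match = re.search(r"\bmax-age=(\d+)", cache_control)
    return int(match.group(1)) if match else 0


class CacheFirst:
    """Serve from the cache while fresh; otherwise fetch from the network and store."""

    def __init__(self, fetch: Callable[[str], Tuple[str, str]],
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch  # returns (body, cache_control_header)
        self._clock = clock
        self._store: Dict[str, Tuple[str, float]] = {}  # url -> (body, expires_at)

    def get(self, url: str) -> str:
        now = self._clock()
        hit = self._store.get(url)
        if hit and now < hit[1]:
            return hit[0]  # fresh hit: no network request
        body, cache_control = self._fetch(url)
        ttl = max_age(cache_control)
        if ttl > 0:
            self._store[url] = (body, now + ttl)
        return body
```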

7. GraphQL Subscriptions:

GraphQL Subscriptions: Enabling real-time updates in GraphQL APIs by letting clients subscribe to specific events and receive new data from the server, typically over a WebSocket connection, whenever it changes.
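Under the hood, a subscription resolver usually sits on a publish/subscribe mechanism. This Python `asyncio` sketch shows that mechanism in memory; the topic name `commentAdded` is hypothetical, and a real GraphQL server would also parse the subscription query and push results over a transport such as WebSocket.

```python
import asyncio
from collections import defaultdict


class PubSub:
    """In-memory publish/subscribe hub: each subscriber gets its own queue per topic."""

    def __init__(self):
        self._queues = defaultdict(list)  # topic -> list of subscriber queues

    def subscribe(self, topic: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._queues[topic].append(queue)
        return queue

    async def publish(self, topic: str, payload: dict) -> None:
        for queue in self._queues[topic]:
            await queue.put(payload)


async def demo():
    hub = PubSub()
    inbox = hub.subscribe("commentAdded")
    await hub.publish("commentAdded", {"postId": 1, "text": "Nice post!"})
    await hub.publish("postDeleted", {"postId": 2})  # no subscribers: nothing delivered
    return await inbox.get(), inbox.qsize()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```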

8. Authentication and Authorization:

OAuth 2.0 and OpenID Connect: Using OAuth 2.0 for delegated authorization and OpenID Connect, an identity layer built on top of OAuth 2.0, for user login (authentication).

JSON Web Tokens (JWT): Using signed JWTs to pass claims between parties. The signature guarantees integrity and authenticity, but the payload is only Base64url-encoded, not encrypted, so it should never carry secrets.
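To show what "signed, not encrypted" means in practice, here is a minimal HS256 JWT sign/verify sketch using only Python's standard library. It skips claim checks such as `exp` and `aud`; in production, a maintained JWT library is a safer choice than hand-rolled code.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(s: str) -> bytes:
    return base64.urlsafe_b64decode(s + "=" * (-len(s) % 4))


def sign_hs256(payload: dict, secret: bytes) -> str:
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = (
        b64url(json.dumps(header, separators=(",", ":")).encode())
        + "."
        + b64url(json.dumps(payload, separators=(",", ":")).encode())
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url(sig)


def verify_hs256(token: str, secret: bytes) -> dict:
    """Return the payload if the HMAC-SHA256 signature is valid; raise ValueError otherwise."""
    header_b64, payload_b64, sig_b64 = token.split(".")
    if json.loads(b64url_decode(header_b64)).get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(sig_b64)):  # constant-time compare
        raise ValueError("invalid signature")
    return json.loads(b64url_decode(payload_b64))
```

Anyone holding the token can decode the middle segment without the secret; only the signature check needs it.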

9. Content Management Systems (CMS) Integration:

Headless CMS: Integrating a headless CMS such as Contentful or Strapi lets content creators manage content independently of the application's front end.

10. Automated Performance Optimization:

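Lighthouse and Web Vitals: Using tools like Lighthouse and Google's Web Vitals metrics to measure and optimize web performance, with a focus on user-centric metrics such as Largest Contentful Paint (loading speed), Interaction to Next Paint (interactivity), and Cumulative Layout Shift (visual stability).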
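The Core Web Vitals have published thresholds, and Google assesses them at the 75th percentile of page loads. This Python sketch rates a metric against those thresholds; the nearest-rank percentile method is a simplifying choice, not necessarily how any particular tool computes it.

```python
import math

# (upper bound of "good", upper bound of "needs improvement") per metric.
# LCP in seconds, INP in milliseconds, CLS is unitless.
THRESHOLDS = {"LCP": (2.5, 4.0), "INP": (200, 500), "CLS": (0.1, 0.25)}


def p75(samples: list) -> float:
    """75th percentile by the nearest-rank method (a simplifying assumption)."""
    ordered = sorted(samples)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def rate(metric: str, value: float) -> str:
    good, poor = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= poor:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    lcp_samples = [1.2, 1.8, 2.1, 3.9]  # hypothetical field data, in seconds
    print(rate("LCP", p75(lcp_samples)))  # good
```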

11. Machine Learning and AI Integration:

TensorFlow.js and ONNX.js: Integrating machine learning models directly into web applications for tasks like image recognition, language processing, and recommendation systems.

12. Cross-Platform Development with Electron:

Electron: Building cross-platform desktop applications using web technologies (HTML, CSS, JavaScript), allowing developers to create desktop apps for Windows, macOS, and Linux.

13. Advanced Database Techniques:

Database Sharding: Implementing database sharding techniques to distribute large databases across multiple servers, improving scalability and performance.
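A common way to route rows to shards is consistent hashing on a shard key such as a user ID. This Python sketch builds a hash ring with hypothetical shard names and 100 virtual nodes per shard (an arbitrary choice); unlike plain `hash(key) % n`, adding a shard only moves keys onto the new shard instead of reshuffling most of them.

```python
import bisect
import hashlib


def _hash(value: str) -> int:
    # Stable 64-bit hash; Python's built-in hash() is randomized per process for str.
    return int.from_bytes(hashlib.sha256(value.encode("utf-8")).digest()[:8], "big")


class ShardRing:
    """Consistent-hash ring mapping shard keys to database shards."""

    def __init__(self, shards, vnodes: int = 100):
        self._vnodes = vnodes
        self._ring = []  # sorted list of (hash, shard)
        for shard in shards:
            self.add(shard)

    def add(self, shard: str) -> None:
        # Each shard owns many points on the ring to even out the load.
        for i in range(self._vnodes):
            bisect.insort(self._ring, (_hash(f"{shard}#{i}"), shard))

    def shard_for(self, key: str) -> str:
        # A key belongs to the first ring point clockwise from its hash (wrapping around).
        i = bisect.bisect(self._ring, (_hash(key), ""))
        return self._ring[i % len(self._ring)][1]
```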

Full-Text Search and Indexing: Implementing full-text search capabilities and optimized indexing for efficient searching and data retrieval.
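Full-text search engines are built around an inverted index that maps each term to the documents containing it. This simplified Python sketch (lowercase alphanumeric tokenizer, no stemming or relevance ranking) answers boolean AND queries by intersecting posting sets.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self):
        self._postings = defaultdict(set)  # term -> ids of documents containing it

    def add(self, doc_id: int, text: str) -> None:
        for term in tokenize(text):
            self._postings[term].add(doc_id)

    def search(self, query: str) -> list:
        """Ids of documents that contain every query term (boolean AND)."""
        terms = tokenize(query)
        if not terms:
            return []
        matches = set.intersection(*(self._postings.get(t, set()) for t in terms))
        return sorted(matches)
```

Lookups touch only the posting sets for the query terms instead of scanning every document.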

14. Chaos Engineering:

Chaos Engineering: Introducing controlled experiments to identify weaknesses and potential failures in the system, ensuring the application's resilience and reliability.
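A chaos experiment states a hypothesis, injects a controlled fault, and checks whether the system still satisfies it. In this Python sketch, the flaky dependency, the retry policy under test, and the seeded random generator are all hypothetical; real chaos tooling usually injects faults at the infrastructure level rather than in application code.

```python
import random
from typing import Callable, TypeVar

T = TypeVar("T")


class InjectedFault(Exception):
    pass


def with_faults(fn: Callable[[], T], failure_rate: float, rng: random.Random) -> Callable[[], T]:
    """Wrap a dependency call so it fails at a controlled rate."""
    def wrapped() -> T:
        if rng.random() < failure_rate:
            raise InjectedFault("injected failure")
        return fn()
    return wrapped


def call_with_retry(fn: Callable[[], T], attempts: int = 3) -> T:
    """The resilience mechanism under test: retry transient failures."""
    for attempt in range(attempts):
        try:
            return fn()
        except InjectedFault:
            if attempt == attempts - 1:
                raise
    raise RuntimeError("attempts must be at least 1")


def run_experiment(failure_rate: float, trials: int = 1000, seed: int = 42) -> float:
    """Hypothesis: with retries, the success rate stays high despite injected faults."""
    rng = random.Random(seed)  # seeded so the experiment is reproducible
    flaky = with_faults(lambda: "ok", failure_rate, rng)
    successes = 0
    for _ in range(trials):
        try:
            call_with_retry(flaky)
            successes += 1
        except InjectedFault:
            pass
    return successes / trials
```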

15. Serverless Architectures with AWS Lambda or Azure Functions:

Serverless Architectures: Building applications as a collection of small, single-purpose functions that run in a serverless environment, providing automatic scaling and cost efficiency.
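For a sense of how small these functions can be, here is a minimal Python handler in the shape AWS Lambda's Python runtime expects (`handler(event, context)`), assuming an API Gateway proxy-style event with `queryStringParameters`. Deployment configuration is omitted, and Azure Functions uses a different programming model.

```python
import json


def lambda_handler(event, context):
    """Single-purpose function: greet the caller by name."""
    params = event.get("queryStringParameters") or {}  # may be None when absent
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The platform handles provisioning and scaling; the code only maps one event to one response.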

16. Data Pipelines and ETL (Extract, Transform, Load) Processes:

Data Pipelines: Creating automated data pipelines for processing and transforming large volumes of data, integrating various data sources and ensuring data consistency.
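A minimal ETL run, sketched in Python with only the standard library: extract rows from a CSV export, transform them (normalize currency codes, convert amounts to integer cents, drop malformed rows and duplicates), and load them into SQLite. The data and table schema are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system.
RAW_CSV = """order_id,amount,currency
1001, 19.99 ,usd
1002,5.00,USD
1002,5.00,USD
1003,not-a-number,USD
"""


def extract(raw: str) -> list:
    return list(csv.DictReader(io.StringIO(raw)))


def transform(rows: list) -> list:
    """Clean and normalize rows; skip malformed amounts and duplicate order ids."""
    seen, out = set(), []
    for row in rows:
        try:
            cents = round(float(row["amount"].strip()) * 100)
        except ValueError:
            continue  # a real pipeline might route this row to an error table
        order_id = int(row["order_id"])
        if order_id in seen:
            continue
        seen.add(order_id)
        out.append((order_id, cents, row["currency"].strip().upper()))
    return out


def load(rows: list, conn: sqlite3.Connection) -> int:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)  # idempotent
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```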

17. Responsive Design and Accessibility:

Responsive Design: Implementing advanced responsive design techniques for seamless user experiences across a variety of devices and screen sizes.

Accessibility: Ensuring web applications are accessible to all users, including those with disabilities, by following WCAG guidelines and ARIA practices.
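WCAG defines color contrast precisely, which makes it easy to check automatically. This Python sketch implements the WCAG 2.x relative luminance and contrast ratio formulas together with the Level AA thresholds for text (4.5:1 for normal text, 3:1 for large text).

```python
def _linear(channel: int) -> float:
    """sRGB channel (0-255) to linear light, per the WCAG 2.x relative luminance definition."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)


def contrast_ratio(fg: str, bg: str) -> float:
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(fg: str, bg: str, large_text: bool = False) -> bool:
    # WCAG 2.x success criterion 1.4.3 (Contrast, Minimum), Level AA.
    return contrast_ratio(fg, bg) >= (3.0 if large_text else 4.5)
```

For example, black on white gives the maximum ratio of 21:1, while mid-gray `#777777` on white falls just short of 4.5:1.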

full stack development training in Pune


Exploring the Boundless World of Java Programming: Your Path to Software Development Excellence

The world of programming is a fascinating and dynamic realm where innovation knows no bounds. In this rapidly evolving landscape, one language has remained a steadfast and versatile companion to developers for many years – Java. As one of the cornerstones of software development, Java is a programming language that continues to shape the digital future. Its ability to create diverse applications, its cross-platform compatibility, and its emphasis on readability have endeared it to developers across the globe.

Java's extensive libraries and its commitment to staying at the forefront of technology have made it a powerhouse capable of addressing a wide spectrum of challenges in the software development arena. The allure of Java programming lies in its versatility and the potential it offers to explore and master this dynamic and ever-evolving world.

Whether you're a seasoned developer or a novice just embarking on your coding adventure, Java has something to offer. It opens doors to countless opportunities and holds the potential to shape your digital future. As you delve into the capabilities of Java programming, you'll discover why it's a valuable skill to acquire and the myriad ways it can impact your journey in the world of software development.

Java in the World of Development

Java programming's influence spans a wide range of application domains, making it a sought-after skill among developers. Let's explore some key areas where Java shines:

1. Web Development

Java's utility in web development is well established. Java Enterprise Edition (Java EE, now maintained as Jakarta EE) offers a robust set of APIs for building enterprise-level web applications, and popular frameworks like Spring and JavaServer Faces (JSF) make web development more efficient and structured.

2. Mobile App Development

For mobile app development, Java has long been a primary language for the Android platform. Android Studio, the official Android development environment, supports both Java and Kotlin, although Google has recommended Kotlin for new Android development since 2019. Given the widespread use of Android devices, Java skills remain in high demand, offering lucrative opportunities for developers.

3. Desktop Applications

Java's Graphical User Interface (GUI) libraries, including Swing and JavaFX, allow developers to create cross-platform desktop applications. This means a single Java application can run on Windows, macOS, and Linux without modification, provided a Java runtime is available. Java's platform independence is a significant advantage here.

4. Game Development

While not as common as some other languages for game development, Java has made its mark in the gaming industry. Frameworks like LibGDX empower developers to create engaging and interactive games, proving that Java's versatility extends to the world of gaming.

5. Big Data and Analytics

In the realm of big data processing and analytics, Java plays a pivotal role. Apache Hadoop is written in Java, and Apache Spark, written mainly in Scala, runs on the Java Virtual Machine and offers a Java API; both distribute the processing of vast datasets across clusters of machines. The JVM's performance and reliability make Java a common choice for data-intensive applications.

6. Server-Side Applications

Java is a top choice for building server-side applications. Widely used web servers and servlet containers such as Apache Tomcat and Jetty are themselves written in Java, and the language is used extensively for backend services. Java's scalability and performance make it a strong candidate for server-side tasks.

7. Cloud Computing

The cloud computing landscape benefits from Java's presence. Java applications can be seamlessly deployed on cloud platforms like Amazon Web Services (AWS) and Microsoft Azure. Its robustness and adaptability are vital for building cloud-based services and applications, offering limitless possibilities in cloud computing.

8. Scientific and Academic Research

Java's readability and maintainability make it a solid choice for scientific and academic research. Researchers and scientists use Java to develop scientific simulations, analysis tools, and research applications. Its ability to handle complex computations and data processing is a significant advantage in the research domain.

9. Internet of Things (IoT)

Java is gaining prominence in the Internet of Things (IoT) domain. It serves as a valuable tool for developing embedded systems, particularly in conjunction with platforms like Raspberry Pi. Its cross-platform compatibility ensures that IoT devices can run smoothly across diverse hardware.

10. Enterprise Software

Many large-scale enterprise-level applications and systems rely on Java as their foundation. Its scalability, security features, and maintainability make it an ideal choice for large organizations. Java's robustness and ability to handle complex business logic are assets in the enterprise software domain.

Java Programming: A Valuable Skill

The allure of Java programming lies in its adaptability and its remarkable potential to shape your career in software development. Java is not just a programming language; it's a gateway to a world of opportunities. With an ever-present demand for skilled Java developers, learning Java can open doors to numerous career prospects and professional growth.

As you embark on your journey to master Java programming, it's essential to have the right guidance and training. If you're committed to becoming a proficient Java developer and unlocking the full potential of this versatile language, consider enrolling in the comprehensive Java training programs offered by ACTE Technologies. It stands as a trusted guide, offering expert-led courses designed to equip you with the knowledge, skills, and practical experience necessary to excel in the world of Java programming. Your future as a proficient Java developer begins here, and the possibilities are limitless.

Java programming is a valuable investment in your career in software development. The multitude of applications and opportunities it offers, coupled with its sustained relevance and demand, make it a skill worth acquiring. Whether you are entering the world of programming or seeking to expand your horizons, mastering Java is a rewarding and transformative journey. So, why wait? Take the first step towards becoming a proficient Java developer with ACTE Technologies and unlock a world of possibilities in the realm of software development. Your journey to success begins here.
