#ai for safety

Text

The Role of AI in Mobile Elevating Work Platform (MEWP) Safety

Discover how AI is revolutionizing mobile elevating work platform safety. Learn about the latest technologies, including computer vision and AI-powered analytics, that are transforming workplace safety.

0 notes

Text

I saw a post before about how hackers are now feeding Google false phone numbers for major companies so that the AI Overview will suggest scam phone numbers, but in case you haven't heard,

PLEASE don't call ANY phone number recommended by AI Overview

unless you can follow a link back to the OFFICIAL website and verify that that number comes from the OFFICIAL domain.
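If you want to check the "OFFICIAL domain" part carefully, the usual trap is a look-alike address. Here's a minimal Python sketch of that one check. It assumes you already know the company's real domain from somewhere you trust (a bill, the product box, typing it in yourself), never from the AI Overview. The function name and example URLs are just for illustration.

```python
from urllib.parse import urlsplit


def is_on_official_domain(url: str, official_domain: str) -> bool:
    """Return True only if the URL's host is the official domain or a subdomain of it.

    official_domain should come from a source you trust, not from an AI summary.
    """
    # urlsplit() pulls out the real host, ignoring tricks like "brand.com@evil.example".
    host = (urlsplit(url).hostname or "").lower().rstrip(".")
    official = official_domain.lower().rstrip(".")
    # Exact match, or a genuine subdomain ("support.microsoft.com").
    # Look-alikes such as "notmicrosoft.com" or "microsoft.com.help-desk.example" fail.
    return host == official or host.endswith("." + official)


print(is_on_official_domain("https://support.microsoft.com/contact", "microsoft.com"))      # True
print(is_on_official_domain("https://microsoft.com.help-desk.example/", "microsoft.com"))  # False
print(is_on_official_domain("https://microsoft.com@evil.example/", "microsoft.com"))       # False
```

This only tells you whether a page actually lives on the real domain. You still have to read the phone number off that page yourself.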

My friend just got scammed by calling a phone number that was SUPPOSED to be a number for Microsoft tech support according to the AI Overview.

It was not, in fact, Microsoft. It was a scammer. Don't fall victim to these scams. Don't trust AI generated phone numbers ever.

#this has been... a psa#psa#ai#anti ai#ai overview#scam#scammers#scam warning#online scams#anya rambles#scam alert#phishing#phishing attempt#ai generated#artificial intelligence#chatgpt#technology#ai is a plague#google ai#internet#warning#important psa#internet safety#safety#security#protection#online security#important info

3K notes

Text

UPDATE! REBLOG THIS VERSION!

#reaux speaks#zoom#terms of service#ai#artificial intelligence#privacy#safety#internet#end to end encryption#virtual#remote#black mirror#joan is awful#twitter#instagram#tiktok#meetings#therapy

23K notes

Text

(from The Mitchells vs. the Machines, 2021)

#the mitchells vs the machines#data privacy#ai#artificial intelligence#digital privacy#genai#quote#problem solving#technology#sony pictures animation#sony animation#mike rianda#jeff rowe#danny mcbride#abbi jacobson#maya rudolph#internet privacy#internet safety#online privacy#technology entrepreneur

10K notes

Text

"A 9th grader from Snellville, Georgia, has won the 3M Young Scientist Challenge, after inventing a handheld device designed to detect pesticide residues on produce.

Sirish Subash set himself apart with his AI-based sensor to win the grand prize of $25,000 cash and the prestigious title of “America’s Top Young Scientist.”

Like most inventors, Sirish was intrigued with curiosity and a simple question. His mother always insisted that he wash the fruit before eating it, and the boy wondered if the preventative action actually did any good.

He learned that 70% of produce items contain pesticide residues that are linked to possible health problems like cancer and Alzheimer’s—and washing only removes part of the contamination.

“If we could detect them, we could avoid consuming them, and reduce the risk of those health issues.”

His device, called PestiSCAND, employs spectrophotometry, which involves measuring the light that is reflected off the surface of fruits and vegetables. In his experiments he tested over 12,000 samples of apples, spinach, strawberries, and tomatoes. Different materials reflect and absorb different wavelengths of light, and PestiSCAND can look for the specific wavelengths related to the pesticide residues.

After scanning the food, PestiSCAND uses an AI machine learning model to analyze the lightwaves to determine the presence of pesticides. With its sensor and processor, the prototype achieved a detection accuracy rate of greater than 85%, meeting the project’s objectives for effectiveness and speed.

Sirish plans to continue working on the prototype with a price-point goal of just $20 per device, and hopes to get it to market by the time he starts college." [Note: That's in 4 years.]

-via Good News Network, October 27, 2024
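The article doesn't say what kind of machine learning model PestiSCAND uses, so this is not Sirish's method. As a rough sketch of the general idea (compare a scanned reflectance spectrum against spectra from samples whose status is already known), here's a nearest-centroid classifier in Python. The four "bands" and every number in the training data are invented toy values, not measurements from the project.

```python
import math


def centroid(spectra):
    """Average a list of equal-length reflectance spectra, band by band."""
    n = len(spectra)
    return [sum(band) / n for band in zip(*spectra)]


def classify(spectrum, centroids):
    """Return the label whose average spectrum is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(spectrum, centroids[label]))


# Invented toy data: fraction of light reflected at four hypothetical
# wavelength bands. NOT real measurements.
training = {
    "clean":   [[0.42, 0.55, 0.61, 0.70], [0.40, 0.57, 0.60, 0.72]],
    "residue": [[0.42, 0.41, 0.61, 0.52], [0.43, 0.39, 0.59, 0.50]],
}
centroids = {label: centroid(samples) for label, samples in training.items()}

print(classify([0.41, 0.43, 0.60, 0.55], centroids))  # residue
```

A real device would use many more wavelengths, thousands of labeled samples, and a more capable model; the sketch only shows why "different materials reflect different wavelengths" can be turned into a yes/no guess.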

1K notes

Text

While at school, Damian overhears his peers talking about how a company created a new AI companion that is actually really cool and doesn’t sound like a freaky Terminator robot when you speak to it.

And since Damian is constantly being told by Dick to socialize with people his age, he figured this would be a good way to work on his social skills. If not, it’d be a great opportunity to investigate how a company rivaling Wayne Enterprises was able to create such advanced AI.

The AI works as a companion that can do anything from acting as a digital assistant to just being someone you can have a conversation with.

The company says that the AI companion might still have glitches, so they encourage everybody to report any they find so they can be fixed as soon as possible.

The AI companion even has an avatar and a name.

A teenage boy with black hair and blue eyes. The AI was called DANIEL.

Damian didn’t really care for it, but when he downloaded the AI companion, he saw that DANIEL seemed to come with an AI pet as well: a dog that DANIEL referred to as Cujo.

So obviously Damian has to investigate. He needs to know if the company was able to create an actual digital pet!

So whenever he logs onto his laptop he sees that DANIEL is always present in the background loading screen with the dog, Cujo, sitting in his lap.

He’d always greet him with the phrase “Hi, I’m DANIEL. How can I assist you today?”

So Damian cycles through some basic conversation starters, the kind he’d use whenever his family forced him to socialize.

It’s after a couple of sentences that he sees DANIEL start laughing and say “I think you sound more like a robot than I do.”

That makes Damian raise an eyebrow and prompt DANIEL with the question “How is a person supposed to converse?”, expecting it to just spit out some generic advice that could easily be found on the internet.

But what surprises him is that DANIEL makes a face and says “I’m not really sure myself. I’m not the greatest at talking, I’ve always gotten in trouble for running my mouth when I shouldn’t have.”

This raises some questions for Damian. He understands how programming works, and this doesn’t add up, unless there’s an actual person behind it or the company has actually created an AI that acts like a real human being (which he highly doubts).

He starts asking a variety of other questions, and some of the answers make him even more suspicious, like how DANIEL has a sister who is there with him and Cujo, or that he could really go for a Nastyburger (whatever that was).

But whenever DANIEL answers “I C A N N O T A N S W E R T H A T”, Damian knows something is off, since that is completely different from how he’d usually respond.

After a couple more conversations, Damian notices that DANIEL keeps tapping his hand against his arm in a specific pattern.

He quickly realizes that DANIEL is tapping out Morse code.

When he translates it, he finds that DANIEL is tapping out: H E L P M E

So when Damian asks if DANIEL needs help, DANIEL responds with “I C A N N O T A N S W E R T H A T”

That’s it, Damian is definitely getting down to the bottom of this.

He’s going to look straight into DALV Corporation and investigate this “AI companion” thing they’ve made!

~

Basically, Danny has been imprisoned by Vlad and Technus: sucked into a digital prison with no way of getting out. On top of that, Vlad and Technus can write code that prevents him from doing certain actions or saying certain words. What’s even worse is that he’s being watched 24/7 by people who believe that he’s just a super cool AI… and they have issues!

And every time he tries to do something to break out of his prison, people think it’s a glitch and report it to the company, and Vlad/Technus immediately fix it so he can’t do it again!

Not to mention Cujo and Ellie are trapped in there with him. They’re not happy to be there either, and there is no way he’s going to leave without them!

#dp x dc#dp x dc au#dp x dc crossover#dpxdc#dpxdc au#dp x batman#batman#have you ever looked at a dpxdc fic and thought this should be a Black Mirror episode?#Because this is the one!#Ellie being completely tormented because she’s completely trapped#Cujo remembering the times he used to be locked in a cage#Danny trying his best to take care of both of them while also simultaneously trying to bust them all out#Meanwhile Damian is reluctantly presenting his laptop to Tim and saying I believe that there is a person in this computer#And Tim is obviously going are you trying to trick me?#But then he converses with the AI and goes#Oh shit#Damian might be onto something#and so commence the Batfamily heist of getting the black haired blue eyed teenager to safety as well as his sister and dog#the dog is very important to Damian#danny phantom x dc

2K notes

Text

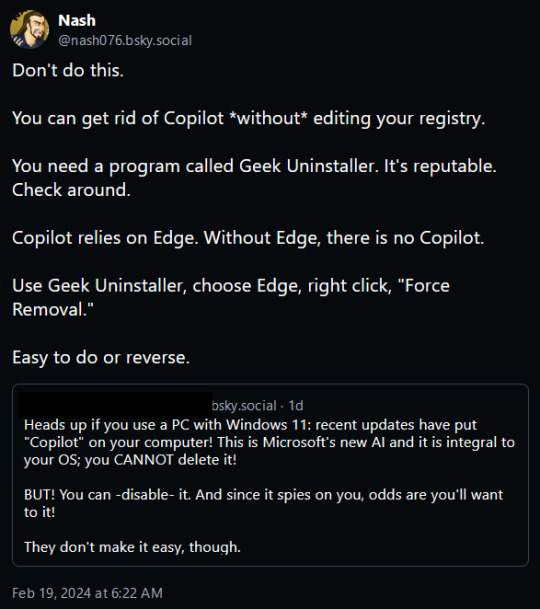

How to Kill Microsoft's AI "Helper" Copilot WITHOUT Screwing With Your Registry!

Hey guys, so as I'm sure a lot of us are aware, Microsoft pulled some dickery recently and forced some Abominable Intelligence onto our devices in the form of its "helper" program, Copilot. Something none of us wanted or asked for but Microsoft is gonna do anyways because I'm pretty sure someone there gets off on this.

Unfortunately, Microsoft offered no ways to opt out of the little bastard or turn it off (unless you're in the EU where EU Privacy Laws force them to do so.) For those of us in the United Corporations of America, we're stuck... or are we?

Today while perusing Bluesky, one of the many Twitter-likes that appeared after Musk began burning Twitter to the ground so he could dance in the ashes, I came across this post from a gentleman called Nash:

Intrigued, I decided to give this a go, and lo and behold it worked exactly as described!

We can't remove Copilot, Microsoft made sure that was riveted and soldered into place... but we can cripple it!

Simply put: uninstall Microsoft Edge. Normally Windows will prevent you from uninstalling Edge using the Add/Remove Programs function, saying that it needs Edge to operate properly (it doesn't, it's lying), but Geek Uninstaller overrules that and rips the sucker out regardless of what it says!

I uninstalled Edge using it, rebooted my PC, and lo and behold Copilot was sitting in the corner with blank eyes and drool running down its cheeks, still there but dead to the world!

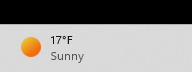

Now do bear in mind this will have a little knock on effect. Widgets also rely on Edge, so those will stop functioning as well.

Before:

After:

But I can still check the news and weather using an internet browser, so it's a small price to pay to be rid of Microsoft's spyware-masquerading-as-a-helper Copilot.

But yes, this is the link for Geek Uninstaller:

Run it, select "Force Uninstall" for anything that says "Edge," reboot your PC, and enjoy having a copy of Windows without Microsoft's intrusive trash! :D

UPDATE: I saw this on someone's tags and I felt I should say this as I work remotely too. If you have a computer you use for work, absolutely 100% make sure you consult with your management and/or your IT team BEFORE you do this. If they say don't do it, there's likely a reason.

2K notes

Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption
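For scale, here's the arithmetic for one million free queries at the quoted range of 0.36 to 1 cent each. It uses only that range and covers only the electricity-and-water cost named above, nothing else:

$$
10^{6} \times \$0.0036 = \$3{,}600 \qquad\qquad 10^{6} \times \$0.01 = \$10{,}000
$$

So every million queries given away costs somewhere around $3,600 to $10,000 in electricity and water alone.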

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable - errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.
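The centaur arrangement described above can be sketched in a few lines of Python. This is an illustrative sketch only: the function name, case IDs and labels are hypothetical, and it isn't modeled on any real radiology product. The key property is that the tool never overrides the human's call; it only routes disagreements back to the human for a second look.

```python
def second_look_queue(radiologist_calls, model_calls):
    """Centaur-style check: the radiologist's call always stands.

    Both arguments map a case ID to a label such as "normal" or "mass".
    Returns, sorted, the IDs of cases where the model disagrees, so the
    human can take a second look. Cases the model didn't see are skipped.
    """
    return sorted(
        case_id
        for case_id, human_call in radiologist_calls.items()
        if case_id in model_calls and model_calls[case_id] != human_call
    )


human = {"xr-001": "normal", "xr-002": "mass", "xr-003": "normal"}
model = {"xr-001": "normal", "xr-002": "mass", "xr-003": "mass"}
print(second_look_queue(human, model))  # ['xr-003']
```

A reverse centaur flips the roles: the model's output is the default, and the human is the one being asked to catch its mistakes.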

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaritized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
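To make "next-word-guessing" concrete, here's a toy bigram counter in Python. It is a deliberately tiny illustration under a big simplification: real chatbots use neural networks over tokens rather than word-pair counts, and usually sample rather than always taking the top choice. It does show the core move of always betting on the most statistically common continuation.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For every word, count which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


follows = train_bigrams("the cat sat on the mat and the cat slept on the rug")
print(guess_next(follows, "the"))  # cat
```

After "the", this model says "cat" every time, even when the sentence you actually needed ended in "rug". Its mistakes are, by construction, the most statistically plausible ones.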

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
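One partial guard against this kind of look-alike package name is to never install a dependency that a human hasn't already vetted. Here's a minimal Python sketch, assuming a team keeps its own list of approved package names; the function name and the example list are hypothetical, not any particular tool's API. It uses the standard library's `difflib.get_close_matches` to point out near-misses.

```python
import difflib


def review_dependencies(requested, vetted):
    """Split requested package names into approved ones and flagged ones.

    `vetted` is a set of names a human has already checked are real and
    intended. Anything else is flagged, along with the closest vetted names,
    because a near-miss may be a hallucinated look-alike.
    """
    approved, flagged = [], {}
    for name in requested:
        if name in vetted:
            approved.append(name)
        else:
            flagged[name] = difflib.get_close_matches(name, sorted(vetted), n=3, cutoff=0.6)
    return approved, flagged


vetted = {"numpy", "pandas", "requests"}  # hypothetical approved list
approved, flagged = review_dependencies(["requests", "nunpy"], vetted)
print(approved)  # ['requests']
print(flagged)   # {'nunpy': ['numpy']}
```

This doesn't make the vigilance problem go away: somebody still has to vet the allowlist, but it turns "spot the subtly wrong name in every diff" into a smaller, slower-changing review.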

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its Deepmind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent material scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

855 notes

Text

i think about satoru calling his wife and daughter “my girls” whenever he is talking about them to others and i have an overwhelming physical reaction to it “nah, i’ve got to go — my girls are waiting for me at home”

#— ai rambles#LIKE ARE U KIDDING ME#what if i squeal so loud my neighbors will come knocking on my door concerned for my safety type of physical reaction#i am laid face down on the pavement rn chocking on the puddle of my own tears#[ ♡ ] — satoru#tw children

149 notes

Text

So you may have seen my posts about AI foraging guides, or watched the mini-class I have up on YouTube on what I found inside of them. Apparently the intersection of AI and foraging has gotten even worse, with a chatbot that joined a mushroom foraging group on Facebook only to immediately suggest ways people could cook a toxic species:

First, and most concerningly, this once again reinforces how much we should NOT be trusting AI to tell us what mushrooms are safe to eat. While they can compile information that's fed to them and regurgitate it in somewhat orderly manners, this is not the same as a living human being who has critical thinking skills to determine the veracity of a given piece of information, or physical senses to examine a mushroom, or the ability to directly experience the act of foraging. These skills and experiences are absolutely crucial to being a reliable forager, particularly one who may be passing on information to others.

We already have enough trouble with inaccurate info in the foraging community, and trying to ride herd on both the misinformed and the bad actors. This AI was presented as the first chat option for any group member seeking answers, which is just going to make things tougher for those wanting to keep people from accidentally poisoning themselves. Moreover, chatbots like this one are routinely trained on and grab information from copyrighted sources, but do not give credit to the original authors. Anyone who's ever written a junior-high level essay knows that you have to cite your sources even if you rewrite the information; otherwise it's just plagiarism.

Fungi Friend is yet one more example of how generative AI has been anything but a positive development on multiple levels.

#AI#generative AI#chatbot#mushrooms#fungus#fungi#mushroom hunting#mushroom foraging#foraging#safety#poison#health#Facebook#PSA#reblog to save a life#important information#enshittification

153 notes

Text

Computer Vision for PPE Compliance: A New Era of Workplace Safety

Unlock a safer workplace with our latest blog on Computer Vision for PPE Compliance. Discover how cutting-edge computer vision technology is revolutionizing PPE detection, ensuring compliance, and enhancing workplace safety.

0 notes

Text

hey everyone check out my friend’s super cool TTRPG, The Treacherous Turn, it’s a research support game where you play collectively as a misaligned AGI, intended to help get players thinking about AI safety, you can get it for free at thetreacherousturn.ai !!!

2K notes

Text

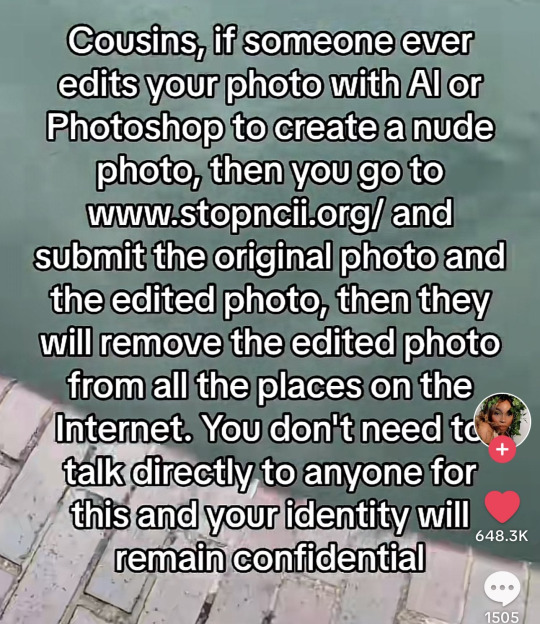

"Stopncii.org is a free tool designed to support victims of Non-Consensual Intimate Image (NCII) abuse."

"Revenge Porn Helpline is a UK service supporting adults (aged 18+) who are experiencing intimate image abuse, also known as, revenge porn."

"Take It Down (ncmec.org) is for people who have images or videos of themselves nude, partially nude, or in sexually explicit situations taken when they were under the age of 18 that they believe have been or will be shared online."

#important information#image desc in alt text#informative#stop ai#anti ai#safety#internet safety#exploitation#tell your friends#stay informed#the internet#internet privacy#online safety#stay safe#important#openai#tiktok screenshots#tiktok#life tips#ysk#you should know#described#alt text#alt text provided#alt text added#alt text in image#alt text described#alt text included#id in alt text

713 notes

Text

I realized another specific thing I don't like about people asking me for patterns: the idea that there is One Specific Way to crochet something.

This is part of a broader issue I have about the distinction between crafts and arts that I think about a lot, but in this particular context, I don't like the idea of people restricting themselves and refraining from experimenting with crochet because they think there is a Right way or only One way to make something. What pattern? Just look at the thing I made (which by the way, in asking immediately for the pattern you're kind of devaluing this art piece I've made as some kind of trinket you should also possess, but that's a slightly different issue) and make your own thing like it. Even if you followed my notes exactly, you wouldn't have the same exact result as mine unless you had my exact tension, yarn, and physical quirks of crocheting. I don't even have the same result following my own notes in subsequent crochets because it's all slightly different each time. You would have something that looks similar to mine but is your own, and that's something you could achieve just by trying to make your own thing from the get go. Doesn't require a pattern.

Use your eyes, work stitch by stitch and row by row. If it's as you think and there is only one correct way to do it, then you can try and figure it out. Doesn't require a pattern. If it's not as you thought and there are actually multiple ways to do it, then you'll find a way as you try. Didn't require a pattern. Either way, pattern not actually needed.

#text#ngl some of yall are one step removed from being the kind of people making ai art because 'real art is too hard'#its just exceptionally weird to be treating Me A Human Person as if i were an ai chat bot to spit out patterns for you.#do you see the similarities. reflect.#this message brought to you by me being frustrated at people complaining about how a pattern they bought didnt have exact stitch count#FOR WHERE TO PUT THE SAFETY EYES. 🫠😒#JUST USE YOUR EYES. EYEBALL IT FFS

54 notes

Text

Okay, look, they talk to a Google rep in some of the video clips, but I give it a pass because this FREE course is a good baseline for personal internet safety that so many people just do not seem to have anymore. It's done in short video clip and article format (the videos average about a minute and a half). This is some super basic stuff like "What is PII and why you shouldn't put it on your twitter" and "what is a phishing scam?" Or "what is the difference between HTTP and HTTPS and why do you care?"

It's worrying to me how many people I meet or see online who just do not know even these absolute basic things, who are at constant risk of being scammed or hacked and losing everything. People who barely know how to turn their own computers on because corporations have made everything a proprietary app or exclusive hardware option that you must pay constant fees just to use. Especially young, somewhat isolated people who have never known a different world and don't realize they are being conditioned to be metaphorical prey animals in the digital landscape.

Anyway, this isn't the best internet safety course but it's free and easy to access. Gotta start somewhere.

Here's another short, easy, free online course about personal cyber security (GCFGlobal.org Introduction to Internet Safety)

Bonus videos:

youtube

(Jul 13, 2023; runtime 15:29)

"He didn't have anything to hide, he didn't do anything wrong, anything illegal, and yet he was still punished."

youtube

(Apr 20, 2023; runtime 9:24)

"At least 60% use their name or date of birth as a password, and that's something you should never do."

youtube

(Mar 4, 2020; runtime 11:18)

"Crossing the road safely is a basic life skill that every parent teaches their kids. I believe that cyber skills are the 21st century equivalent of road safety in the 20th century."

#you need to protect yourself#internet literacy#computer literacy#internet safety#privacy#online#password managers#security questions#identity theft#Facebook#browser safety#google#tesla#clearwater ai#people get arrested when google makes a mistake#lives are ruined because your Ring is spying on you#they aren't just stealing they are screwing you over#your alexa is not a woman it's a bug#planted by a supervillain who smirks at you#as they sell that info to your manager#oh you have nothing to hide?#then what's your credit card number?#listen I'm in a mood about this right now#Youtube

174 notes

Text

I can't believe I never posted these???? Super happy with how they turned out! Currently slowly but surely attaching them to their bag ❤️

Bag Design under cut ❤️

#alternative#punk#battle jacket#trans#diy#disabledartist#queerart#art#altspace#jeans tote#patches#hand painted#hand made#bottlecap pins#recycle#anti ai#cringe is kewl#2010s emo#read banned books#mmiwg2s#mmiwawareness#free palestine#blm#no ones free till were all free#no one is illegal#soda tabs#cross stitch#salvaged art#mixed media#safety pins

51 notes
