#NLTK


Text

python iterative monte carlo search for text generation using nltk

Imagine you are playing a game and want to win, but you don't know which move to make next because you can't predict what the other player will do. So you try different moves at random and see what happens. You repeat this again and again, learning from the result of each move, until one move stands out as the best. That is the idea behind iterative Monte Carlo search: make random moves, learn from the outcomes, and keep refining until you find the move most likely to win.

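Iterative Monte Carlo search is a technique used in AI to explore a large space of possible solutions by random sampling, keeping the best ones it finds. It can be applied to synonym substitution: randomly pick a synonym for each word, build candidate sentences, score them, and feed the best candidate into the next round. In the example below, the score is simply the sentence length in characters, a placeholder for a real measure of how well a candidate fits its context.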

```python
# an iterative monte carlo search example using nltk
# https://pythonprogrammingsnippets.tumblr.com
import random

import nltk
from nltk.corpus import wordnet

nltk.download("wordnet", quiet=True)  # WordNet data is needed once


def get_synonyms(word):
    """Return the unique single-word WordNet synonyms of a word."""
    synonyms = []
    for syn in wordnet.synsets(word):
        for lemma in syn.lemmas():
            # skip multi-word lemmas such as "theoretical_account"
            if "_" not in lemma.name():
                synonyms.append(lemma.name())
    return list(set(synonyms))


def get_random_variant(word):
    """Return a random synonym of a word, or the word itself if it has none."""
    synonyms = get_synonyms(word)
    if len(synonyms) == 0:
        return word
    return random.choice(synonyms)


def get_score(candidate):
    """Score a candidate sentence (here simply its length in characters)."""
    return len(candidate)


def monte_carlo_search(candidate, samples=100):
    """One iteration: sample random variants of `candidate`, return the best-scoring one."""
    words = candidate.split()
    max_candidate = " ".join(get_random_variant(word) for word in words)
    max_score = get_score(max_candidate)
    for _ in range(samples):
        # draw every sample from the input sentence, not from the previous sample
        variant = " ".join(get_random_variant(word) for word in words)
        score = get_score(variant)
        if score > max_score:
            max_score = score
            max_candidate = variant
    return max_candidate


initial_candidate = "This is an example sentence."
# Perform 10 iterations of the monte carlo search, feeding each result back in
for i in range(10):
    initial_candidate = monte_carlo_search(initial_candidate)
    print(initial_candidate)
```

output:

```text
This manufacture Associate_in_Nursing theoretical_account sentence.
This fabricate Associate_in_Nursing theoretical_account sentence.
This construct Associate_in_Nursing theoretical_account sentence.
This cathode-ray_oscilloscope Associate_in_Nursing counteract sentence.
This collapse Associate_in_Nursing computed_axial_tomography sentence.
This waste_one's_time Associate_in_Nursing gossip sentence.
This magnetic_inclination Associate_in_Nursing temptingness sentence.
This magnetic_inclination Associate_in_Nursing conjure sentence.
This magnetic_inclination Associate_in_Nursing controversy sentence.
This inclination Associate_in_Nursing magnetic_inclination sentence.
```
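Because WordNet lookups and a length-based score make the output hard to reproduce, here is a dependency-free sketch of the same sample, score, keep loop. It swaps WordNet for a small hypothetical `SYNONYMS` table, fixes the random seed so runs repeat, and uses a hypothetical score that rewards words from a `PREFERRED` vocabulary instead of raw length. It illustrates the structure of the loop only; it is not a replacement for the NLTK version.

```python
import random

# Hypothetical synonym table standing in for WordNet lookups
SYNONYMS = {
    "is": ["is", "remains", "stands"],
    "an": ["an"],
    "example": ["example", "instance", "illustration", "sample"],
    "sentence": ["sentence", "statement", "phrase"],
}

# Hypothetical scoring vocabulary: candidates using these words score higher
PREFERRED = {"remains", "illustration", "statement"}


def random_variant(word, rng):
    """Pick a random synonym for `word`, or keep it if the table has none."""
    return rng.choice(SYNONYMS.get(word.lower(), [word]))


def score(candidate):
    """Count how many words in the candidate come from the preferred vocabulary."""
    return sum(word in PREFERRED for word in candidate.split())


def monte_carlo_step(candidate, rng, samples=100):
    """Sample random variants of `candidate`; keep the best (ties keep the incumbent)."""
    words = candidate.split()
    best, best_score = candidate, score(candidate)
    for _ in range(samples):
        variant = " ".join(random_variant(w, rng) for w in words)
        variant_score = score(variant)
        if variant_score > best_score:
            best, best_score = variant, variant_score
    return best


rng = random.Random(0)  # fixed seed so the run is reproducible
sentence = "This is an example sentence"
for _ in range(3):
    sentence = monte_carlo_step(sentence, rng)
    print(sentence)
```

Starting each step from the current best means the score can never drop between iterations, which is what makes the search "iterative" rather than a single batch of random guesses.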

#python#nltk#iterative monte carlo search#monte carlo search#monte carlo#search#text generation#generative text#text#generation#text prediction#synonyms#synonym#semantics#semantic#language#language model#ai#iterative#iteration#artificial intelligence#sentence rewriting#sentence rewrite#story generation#deep learning#learning#educational#snippet#code#source code

2 notes


Text

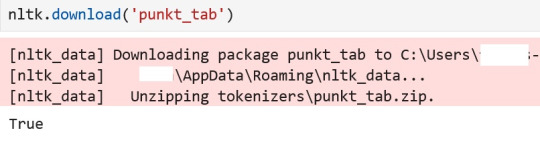

Resource punkt_tab not found - for NLTK (NLP)

Over the past few years, I have seen quite a few folks STILL getting this error in Jupyter Notebook, which means, again, a lot of troubleshooting on my part. The instructor for the course I am taking (one of several Gen AI courses) either did not get the error or had an environment already set up for that specific .ipynb file, so they did not comment on it. After I did that last…
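For reference, the usual fix is to download the package the error names: recent NLTK releases load the Punkt sentence-tokenizer data from a package called `punkt_tab` instead of the older `punkt`, so `nltk.download('punkt')` alone may not clear the error. A minimal sketch that downloads it only when `nltk.data.find` cannot locate it (`ensure_nltk_resource` is a hypothetical helper name, not part of NLTK):

```python
import nltk


def ensure_nltk_resource(resource_path, package):
    """Return True if an NLTK data package is available, downloading it if needed."""
    try:
        nltk.data.find(resource_path)
        return True
    except LookupError:
        # nltk.download returns False (rather than raising) when the download fails
        return bool(nltk.download(package, quiet=True))


punkt_ready = ensure_nltk_resource("tokenizers/punkt_tab", "punkt_tab")
if punkt_ready:
    from nltk.tokenize import word_tokenize

    print(word_tokenize("Jupyter can finally tokenize this sentence."))
```

Running the download cell once per environment (or per kernel image) is enough; the data lands in one of the directories listed in `nltk.data.path`.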


0 notes

Text

The concept of sentiment dictionaries and why they are fundamental to sentiment analysis.

What are sentiment dictionaries and how do they work? Imagine a dictionary that, instead of defining words, classifies them according to the emotion they express. That is a sentiment dictionary. It is a kind of “emotional thesaurus” that assigns each word a score indicating whether it is positive, negative, or neutral. How do they work? Lexicon: They contain an extensive…
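To make the idea concrete, here is a minimal sketch of how a lexicon-based scorer works: look up each word's score in the dictionary, add the scores up, and map the total to a polarity label. The tiny `LEXICON` is hypothetical; real resources such as the VADER lexicon shipped with NLTK contain thousands of rated entries and also handle negation and intensifiers, which this sketch ignores.

```python
import re

# Hypothetical toy lexicon: word -> sentiment score (positive > 0, negative < 0)
LEXICON = {
    "good": 1.0,
    "great": 2.0,
    "love": 2.0,
    "bad": -1.0,
    "terrible": -2.0,
    "hate": -2.0,
}


def lexicon_score(text, lexicon=LEXICON):
    """Sum the lexicon scores of the words in `text`; unknown words count as 0."""
    words = re.findall(r"\w+", text.lower())
    return sum(lexicon.get(word, 0.0) for word in words)


def polarity(text, lexicon=LEXICON):
    """Map the summed score to 'positive', 'negative', or 'neutral'."""
    score = lexicon_score(text, lexicon)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("I love this great beach"))    # positive
print(polarity("The service was terrible"))   # negative
print(polarity("The hotel is in Alicante"))   # neutral
```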

#alicante#sentiment analysis#manual annotation#machine learning#comunidad valenciana#context#corpus#sentiment dictionaries#local businesses#F1-score#government#Google Cloud Natural Language API#sentiment analysis tools#IBM Watson#artificial intelligence#intensity#MonkeyLearn#NLTK#polarity#precision#natural language processing#RapidMiner#recall#neural networks#spaCy#tourism

1 note


Text

In this tutorial, we will explore how to perform sentiment analysis using Python with three popular libraries — NLTK, TextBlob, and VADER.

#machine learning#data science#python#sentiment analysis#natural language processing#NLTK#TextBlob#VADER#tutorial#medium#medium writers#artificial intelligence#ai#data analysis#data scientist#data analytics#computer science

1 note


Text

Not me out here writing a program to log into my AO3 account and perform a natural language sentiment analysis of the comments in my inbox to identify trolls without having to read their garbage...

#trolls begone#that's what i get for daring to write fic with darker themes...#this was a fun lil project tho#here's the stack i used:#python3#nltk + vader sentiment analyzer#beautifulsoup4 to grok html#python-requests to wrangle cookies
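A sketch of just the filtering step, under some assumptions: `flag_trolls` accepts any scoring function, so it can be tried with the stand-in `toy_score` below (a hypothetical hostile-word counter, not VADER) and later pointed at VADER's compound score, e.g. `lambda text: analyzer.polarity_scores(text)["compound"]`. The -0.5 cutoff is an arbitrary illustrative choice, and logging in and scraping with requests and BeautifulSoup are left out.

```python
def flag_trolls(comments, score_fn, threshold=-0.5):
    """Return the comments whose sentiment score falls below `threshold`."""
    return [comment for comment in comments if score_fn(comment) < threshold]


# Hypothetical stand-in scorer so the sketch runs without NLTK data:
# each hostile word found subtracts 0.4 from the score.
HOSTILE = {"garbage", "awful", "hate"}


def toy_score(text):
    words = text.lower().split()
    hits = sum(word.strip(".,!?") in HOSTILE for word in words)
    return -0.4 * hits


inbox = [
    "Loved this chapter, the ending hit hard!",
    "This is garbage and I hate it.",
    "Can't wait for the next update.",
]
for comment in flag_trolls(inbox, toy_score):
    print("flagged:", comment)
```

Keeping the scorer as a parameter makes it easy to compare thresholds or analyzers on the same inbox without touching the scraping code.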

3 notes


Text

#nlp libraries#natural language processing libraries#python libraries#nodejs nlp libraries#python and libraries#javascript nlp libraries#best nlp libraries for nodejs#nlp libraries for java script#best nlp libraries for javascript#nlp libraries for nodejs and javascript#nltk library#python library#pattern library#python best gui library#python library re#python library requests#python library list#python library pandas#python best plotting library

0 notes

Text

in what situation is the word "oh" a proper noun

#🍯 talks#using nltk for my project and skimming through to fix some mistakes#and it keeps tagging oh as nnp
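"Oh" is a genuine proper noun as a surname (the actor Sandra Oh, for example), but in most text it is an interjection. A likely reason for the NNP tag is capitalization: at the start of a sentence "Oh" is capitalized, and statistical taggers treat capitalization as a strong cue for proper nouns. One workaround is a small post-processing pass over `nltk.pos_tag` output that retags known interjections as `UH`, the Penn Treebank tag for interjections. The `INTERJECTIONS` set is a hypothetical starting list, not something NLTK provides.

```python
# Hypothetical list of words to force to the Penn Treebank interjection tag "UH"
INTERJECTIONS = {"oh", "ah", "wow", "hey", "uh", "um"}


def retag_interjections(tagged, interjections=INTERJECTIONS):
    """Replace NNP/NN tags on known interjections with UH.

    `tagged` is a list of (word, tag) pairs, e.g. the output of nltk.pos_tag.
    """
    fixed = []
    for word, tag in tagged:
        if word.lower() in interjections and tag in {"NNP", "NN"}:
            tag = "UH"
        fixed.append((word, tag))
    return fixed


# Example input shaped like nltk.pos_tag output (tags written by hand here)
tagged = [("Oh", "NNP"), (",", ","), ("that", "DT"), ("is", "VBZ"), ("great", "JJ")]
print(retag_interjections(tagged))
```

This blanket rule would also retag the surname in "Sandra Oh", so for text with names it may be safer to apply it only when the word is sentence-initial or followed by a comma.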

1 note


Text

Can't believe I had to miss my morphology lecture because comp sci has no concept of timetables

#it's so sad#i literally only took it as a minor to get a leg up on python for nltk#and i'm doing intensive french with it as well so i'm swamped and i'm only two weeks in#bro i just wanna do linguistics :(

0 notes

Text

Want to make NLP tasks a breeze? Explore how NLTK streamlines text analysis in Python, making it easier to extract valuable insights from your data. Discover more https://bit.ly/487hj9L

0 notes

Text

part of speech tagging? oh man sorry i thought u meant piece of shit tagging

0 notes

Text

Real-World Application of Part-of-Speech Tagging with NLTK and NLTK2

Introduction

Part-of-speech (POS) tagging is a fundamental task in Natural Language Processing (NLP) that involves identifying the grammatical category of each word in a sentence, such as noun, verb, adjective, etc. In this tutorial, we will explore the real-world application of POS tagging with the popular NLP libraries NLTK…

0 notes

Text

python keyword extraction using nltk wordnet

```python
import re

# WordNet's morphy() does the de-pluralization / base-form lookup
# (requires the data once: nltk.download("wordnet"))
from nltk.corpus import wordnet

# https://pythonprogrammingsnippets.tumblr.com/

# words like 'and', 'or', 'the', etc. that are dropped from the final list
STOPWORDS = {
    "and", "or", "the", "a", "an", "of", "to", "in", "on", "at", "for", "with",
    "from", "by", "as", "into", "like", "through", "after", "over", "between",
    "out", "against", "during", "without", "before", "under", "around", "among",
    "throughout", "despite", "towards", "upon", "concerning", "this", "that",
    "these", "those", "is", "are", "was", "were", "be", "been", "being", "have",
    "has", "had", "having", "do", "does", "did", "doing", "will", "would",
    "shall", "should", "can", "could", "may", "might", "must", "ought", "i",
    "me", "my", "mine", "we", "us", "our", "ours", "you", "your", "yours", "he",
    "him", "his", "she", "her", "hers", "it", "its", "they", "them", "their",
    "theirs", "what", "which", "who", "whom", "whose", "myself", "yourself",
    "himself", "herself", "itself", "ourselves", "yourselves", "themselves",
    "whoever", "whatever", "whomever", "whichever",
}


def get_non_plural(word):
    """Return the non-plural (noun base) form of a word, or the word unchanged."""
    if word != "":
        non_plural = wordnet.morphy(word, wordnet.NOUN)
        if non_plural is not None:
            return non_plural
    return word


def get_root_word(word):
    """Return the WordNet base form of a word (any part of speech), or the word unchanged."""
    if word != "":
        word = get_non_plural(word)
        root_word = wordnet.morphy(word)
        if root_word is not None:
            word = root_word
    return word


def process_keywords(keywords):
    ret_k = []
    for k in keywords:
        # replace all characters that are not letters, spaces, or apostrophes with a space
        k = re.sub(r"[^a-zA-Z' ]", " ", k)
        # collapse runs of whitespace into a single space
        k = re.sub(r"\s+", " ", k)
        # remove leading and trailing whitespace, then lowercase
        k = k.strip().lower()
        if " " in k:
            # keep the original multi-word keyword, then add each word and its root
            ret_k.append(k)
            for k2 in k.split(" "):
                ret_k.append(get_root_word(k2))
                ret_k.append(k2.strip())
        else:
            ret_k.append(get_root_word(k))
            ret_k.append(k.strip())
    # unique, non-empty, at least 3 characters, and not a stopword
    ret_k = list(set(ret_k))
    return [k for k in ret_k if k != "" and len(k) >= 3 and k not in STOPWORDS]


def extract_keywords(paragraph):
    # split a paragraph into space-separated candidate keywords
    if " " in paragraph:
        return paragraph.split(" ")
    return [paragraph]
```

example usage:

```python
the_string = (
    "Jims House of Judo and Karate is a martial arts school in the heart of "
    "downtown San Francisco. We offer classes in Judo, Karate, and Jiu Jitsu. "
    "We also offer private lessons and group classes. We have a great staff of "
    "instructors who are all black belts. We have been in business for over "
    "20 years. We are located at 123 Main Street."
)
keywords = process_keywords(extract_keywords(the_string))
print(keywords)
```

output:

```text
['jims', 'instructors', 'class', 'lesson', 'all', 'school', 'san', 'martial', 'classes', 'karate', 'great', 'lessons', 'downtown', 'private', 'arts', 'also', 'locate', 'belts', 'business', 'judo', 'years', 'located', 'main', 'street', 'jitsu', 'house', 'offer', 'staff', 'group', 'heart', 'instructor', 'belt', 'black', 'francisco', 'jiu']
```
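`process_keywords` de-duplicates through a `set`, so its output is unordered (the order can even change between runs, since Python randomizes string hashing) and it cannot say which keywords matter most. If a ranking is useful, a minimal plain-Python sketch counts how often each remaining word appears; the small `STOPWORDS` set here is a hypothetical abbreviation of the full list above.

```python
import re
from collections import Counter

# Hypothetical, abbreviated stopword set for the sketch
STOPWORDS = {"and", "or", "the", "a", "an", "of", "in", "is", "we", "are",
             "at", "for", "who", "all"}


def ranked_keywords(text, top_n=5, min_len=3):
    """Return the `top_n` most frequent non-stopword words of at least `min_len` letters."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(w for w in words if len(w) >= min_len and w not in STOPWORDS)
    # ties keep first-appearance order
    return counts.most_common(top_n)


text = "We offer classes in Judo and Karate. We also offer private lessons and group classes."
print(ranked_keywords(text, top_n=3))
```

Frequency works as a rough signal on short texts; for longer documents, weighting by how rare a word is across a collection (TF-IDF) usually ranks better.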

#python#keyword extraction#keywords#keyword#extraction#natural language processing#natural language#nltk#natural language toolkit#wordnet#morphy#keyword creation#seo#keyword maker#keywording#depluralization#plurals#pluralize#filtering#language processing#text processing#data processing#data#text#paragraph#regex#geek#nerd#nerdy#geeky

1 note


Text

Building a chatbot in Python typically involves a library such as NLTK or ChatterBot, or a hosted model such as OpenAI's GPT. Steps include data preprocessing, training the model, implementing the conversation logic, and integrating with a user interface via Flask or Django for real-time interactions.
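Of the options listed, NLTK's route is the simplest: `nltk.chat.util.Chat` matches each message against a list of regular-expression patterns and returns one of the paired responses, with no model training involved. A minimal sketch; the patterns and replies are made up for illustration.

```python
from nltk.chat.util import Chat, reflections

# Each pair is (regex, list of possible responses); patterns are tried in order
# and matched case-insensitively against the start of the message.
pairs = [
    (r"hi|hello|hey", ["Hello! Ask me about NLTK."]),
    (r"what is nltk\??", ["NLTK is a Python toolkit for natural language processing."]),
    (r"(.*)", ["Sorry, I don't understand that yet."]),  # catch-all goes last
]

bot = Chat(pairs, reflections)

for message in ["Hello there", "What is NLTK?", "Tell me a joke"]:
    print(">", message)
    print(bot.respond(message))
```

`Chat` also provides `converse()` for an interactive console loop; a Flask or Django view would call `respond()` with each incoming message instead.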

0 notes

Text

Online Artificial Intelligence Course in Rajkot, India | Pro Academys

Artificial Intelligence (AI) is no longer a distant future concept; it is the present, reshaping industries and driving innovation worldwide. With AI applications ranging from healthcare to finance, marketing to manufacturing, there has never been a better time to dive into the fascinating world of AI. At Pro Academy, we provide a comprehensive Online Artificial Intelligence Course in Rajkot, India, designed to equip learners with the knowledge and skills to excel in this rapidly evolving field.

Why Choose Artificial Intelligence?

AI is the driving force behind many cutting-edge technologies. It powers search engines, recommendation algorithms, autonomous vehicles, virtual assistants, and much more. As industries increasingly adopt AI to optimize operations and improve decision-making, the demand for skilled AI professionals continues to rise. Whether you are a student looking to enter the AI field or a professional seeking to upskill, this course offers everything you need to stay ahead in today’s job market.

Course Overview

Our Online Artificial Intelligence Course in Rajkot, India, is designed to provide a deep understanding of AI concepts, techniques, and applications. The course covers a broad range of topics, from foundational AI principles to advanced machine learning and neural network techniques. You will explore the theoretical concepts of AI while gaining hands-on experience with tools and technologies that are essential for implementing AI solutions in real-world scenarios.

This course is structured to be accessible to beginners while also challenging enough for intermediate learners looking to enhance their skills. By the end of the course, you will have developed a strong understanding of AI and its practical applications, giving you the tools to build intelligent systems and solve complex problems.

What Will You Learn?

The Artificial Intelligence Course covers the following key areas:

Introduction to AI:

Understand the history, evolution, and impact of AI on society and industries.

Learn about AI's subfields, including machine learning, deep learning, natural language processing (NLP), and robotics.

Explore AI ethics and responsible AI development.

Machine Learning Fundamentals:

Master the basics of supervised and unsupervised learning.

Dive into key algorithms such as linear regression, decision trees, support vector machines (SVM), and clustering techniques.

Understand the importance of data preprocessing and feature selection for model accuracy.

Deep Learning and Neural Networks:

Gain insights into neural networks, backpropagation, and activation functions.

Explore popular architectures such as Convolutional Neural Networks (CNN) for image processing and Recurrent Neural Networks (RNN) for sequential data.

Learn to build and train deep learning models using TensorFlow and PyTorch.

Natural Language Processing (NLP):

Study the techniques used to process and analyze human language.

Work on projects involving text classification, sentiment analysis, and language translation.

Implement NLP models using libraries like NLTK and spaCy.

AI in Robotics:

Discover how AI is transforming the field of robotics.

Learn about autonomous systems, reinforcement learning, and control systems.

Explore real-world applications such as robotic process automation (RPA) and AI-powered drones.

AI Project Implementation:

Work on capstone projects that involve solving real-world problems using AI techniques.

Learn how to deploy AI models into production environments.

Gain experience in handling AI project life cycles from data collection to model deployment.

Tools and Technologies Covered

Our course gives you hands-on experience with some of the most widely used AI tools and frameworks, including:

Python: The go-to programming language for AI development, used for scripting AI models and algorithms.

TensorFlow & PyTorch: Leading open-source frameworks for building and training machine learning models.

Keras: A powerful deep learning API that allows you to build neural networks with ease.

Scikit-learn: A versatile machine learning library for implementing regression, classification, and clustering algorithms.

NLTK & spaCy: Libraries that facilitate natural language processing tasks such as tokenization, parsing, and sentiment analysis.

Who Should Enroll?

Our Online Artificial Intelligence Course in Rajkot, India, is perfect for:

Students and Graduates: Those looking to build a career in AI or machine learning can start here. The course provides a solid foundation and offers plenty of opportunities for practical learning.

IT Professionals: If you are already working in the tech industry and want to expand your skill set, this course can help you transition into AI roles.

Entrepreneurs and Innovators: Those interested in using AI to innovate and solve complex business problems can gain the knowledge and skills needed to develop AI-driven solutions.

Anyone Interested in AI: Whether you're an AI enthusiast or someone curious about how AI works, this course is designed to be accessible and engaging for all learners.

Why Choose Our Online Course?

At Pro Academy, we are committed to delivering high-quality education tailored to the needs of modern learners. Our Online Artificial Intelligence Course in Rajkot, India, offers the following advantages:

Flexible Learning: Learn at your own pace from anywhere in the world with our online platform. Our course is designed to fit into your schedule, whether you're a working professional or a full-time student.

Expert Instructors: Our instructors are AI professionals with industry experience. They are passionate about teaching and providing personalized support to help you succeed.

Hands-On Projects: Theory is important, but we believe that the best way to learn AI is by doing. You will work on real-world projects that will challenge you to apply the concepts you've learned and develop practical skills.

Certification: Upon completing the course, you will receive a certification that showcases your AI expertise, giving you a competitive edge in the job market.

Career Opportunities in AI

The career opportunities in the field of AI are vast and growing rapidly. Some of the roles you can pursue after completing this course include:

AI Engineer

Machine Learning Engineer

Data Scientist

AI Research Scientist

NLP Engineer

Robotics Engineer

AI professionals are in high demand across various industries, including finance, healthcare, retail, automotive, and tech startups. By enrolling in our Online Artificial Intelligence Course in Rajkot, India, you are taking the first step toward a rewarding career in one of the most sought-after fields.

Enroll Today

Unlock your potential and become part of the AI revolution with our Online Artificial Intelligence Course in Rajkot, India. Whether you are looking to advance your career, start a new venture, or simply explore the fascinating world of AI, this course provides everything you need to succeed. Enroll today and start building the future with AI.

website: https://www.proacademys.com/

Email Id: [email protected]

Contact No.: +91 6355016394

#ProAcademyAI#AIForAll#LearnAIOnline#AIRevolution#AITraining#FutureOfAI#ArtificialIntelligence#AIInEducation#AICourse#MasterAI#AICertification#AIInnovation#AIOnlineLearning#AIBeginners

0 notes

Text

```python
import nltk
from nltk.corpus import words

nltk.download('words')


def get_permutations(string):
    """Recursively build every ordering of the characters in `string`."""
    if len(string) == 0:
        return ['']
    permutations = []
    for i in range(len(string)):
        first_char = string[i]
        remaining_chars = string[:i] + string[i+1:]
        sub_permutations = get_permutations(remaining_chars)
        for sub_permutation in sub_permutations:
            permutations.append(first_char + sub_permutation)
    return permutations


string = "LPEHEATN"
english_words = set(words.words())
result = get_permutations(string)

# repeated letters (two E's here) produce duplicate permutations, so dedupe first
for x in sorted(set(result)):
    if x.lower() in english_words:
        print(x)
```
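Generating every permutation gets expensive fast: 8 letters already give 8! = 40,320 orderings, and each extra letter multiplies that. A cheaper approach, sketched here with a small hypothetical word list in place of `words.words()`, compares sorted-letter "signatures", which takes one pass over the dictionary instead of one lookup per permutation.

```python
def signature(word):
    """Sorted lowercase letters: two words are anagrams iff their signatures match."""
    return "".join(sorted(word.lower()))


def find_anagrams(letters, dictionary):
    """Return the dictionary words that use exactly the given letters."""
    target = signature(letters)
    return sorted({word.lower() for word in dictionary if signature(word) == target})


# Hypothetical stand-in for nltk.corpus.words.words()
word_list = ["elephant", "Elephant", "pleasant", "planet", "heat"]
print(find_anagrams("LPEHEATN", word_list))  # ['elephant']
```

With NLTK loaded, the same call works as `find_anagrams(string, words.words())`.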

#it was so hard to keep the giggles in#like first of all. the puzzle book is meant for kindergarteners#and also. WE CANT SOLVE IT#its ok its not our fault. its meant for 5 year olds and we're not 5 thats why we failed#youre welcome btw. im stupid so you can be smart and get entertainment. its a community service

1 note


Text

5 Artificial Intelligence Project Ideas for Beginners [2025] - Arya College

Arya College of Engineering & I.T., the best college in Jaipur, suggests five top AI projects for beginners that will not only help you learn essential concepts but also let you create something tangible:

1. AI-Powered Chatbot

Creating a chatbot is one of the most popular beginner projects in AI. This project involves building a conversational agent that can understand user queries and respond appropriately.

Duration: Approximately 10 hours

Complexity: Easy

Learning Outcomes: Gain insights into natural language processing (NLP) and chatbot frameworks like Rasa or Dialogflow.

Real-world applications: Customer service automation, personal assistants, and FAQ systems.

2. Handwritten Digit Recognition

This project utilizes the MNIST dataset to build a model that recognizes handwritten digits. It serves as an excellent introduction to machine learning and image classification.

Tools/Libraries: TensorFlow, Keras, or PyTorch

Learning Outcomes: Understand convolutional neural networks (CNNs) and image processing techniques.

Real-world applications: Optical character recognition (OCR) systems and automated data entry.

3. Spam Detection System

Developing a spam detection system involves classifying emails as spam or not spam based on their content. This project is a practical application of supervised learning algorithms.

Tools/Libraries: Scikit-learn, Pandas

Learning Outcomes: Learn about text classification, feature extraction, and model evaluation techniques.

Real-world applications: Email filtering systems and content moderation.

4. Music Genre Classification

In this project, you will classify music tracks into different genres using audio features. This project introduces you to audio processing and machine learning algorithms.

Tools/Libraries: Librosa for audio analysis, TensorFlow or Keras for model training

Learning Outcomes: Understand feature extraction from audio signals and classification techniques.

Real-world applications: Music recommendation systems and automated playlist generation.

5. Sentiment Analysis Tool

Building a sentiment analysis tool allows you to analyze customer reviews or social media posts to determine the overall sentiment (positive, negative, neutral). This project is highly relevant for businesses looking to gauge customer feedback.

Tools/Libraries: NLTK, TextBlob, or VADER

Learning Outcomes: Learn about text preprocessing, sentiment classification algorithms, and evaluation metrics.

Real-world applications: Market research, brand monitoring, and customer feedback analysis.

These projects provide an excellent foundation for understanding AI concepts while allowing you to apply your knowledge practically. Engaging in these hands-on experiences will enhance your skills and prepare you for more advanced AI challenges in the future.
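Project 3 above lists Scikit-learn; to show what such a spam classifier does under the hood, here is a dependency-free sketch of multinomial naive Bayes with add-one (Laplace) smoothing. The four training messages are hypothetical, and a real filter would need thousands of labeled emails plus a held-out test set for evaluation.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train_naive_bayes(messages):
    """messages: list of (text, label). Returns (word counts per label, label counts, vocabulary)."""
    word_counts = {}
    label_counts = Counter()
    vocab = set()
    for text, label in messages:
        label_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in tokenize(text):
            counts[word] += 1
            vocab.add(word)
    return word_counts, label_counts, vocab


def classify(text, model):
    """Return the label with the highest log-posterior."""
    word_counts, label_counts, vocab = model
    total_messages = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, count in label_counts.items():
        score = math.log(count / total_messages)  # log prior
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # add-one smoothing so an unseen word does not zero out the probability
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy training data
training = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("can we review the report", "ham"),
]
model = train_naive_bayes(training)
print(classify("free prize money", model))          # spam
print(classify("review the meeting notes", model))  # ham
```

Summing log-probabilities instead of multiplying raw probabilities avoids numeric underflow on long messages.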

What are some advanced NLP projects for professionals?

1. Language Recognition System

Develop a system capable of accurately identifying and distinguishing between multiple languages from text input. This project requires a deep understanding of linguistic features and can be implemented using character n-gram models or deep learning architectures like recurrent neural networks (RNNs) and Transformers.

2. Image-Caption Generator

Create a model that generates descriptive captions for images by combining computer vision with NLP. This project involves analyzing visual content and producing coherent textual descriptions, which requires knowledge of both image processing and language models.

3. Homework Helper

Build an intelligent system that can assist students by answering questions related to their homework. This project can involve implementing a question-answering model that retrieves relevant information from educational resources.

4. Text Summarization Tool

Develop an advanced text summarization tool that can condense large documents into concise summaries. You can implement both extractive and abstractive summarization techniques using transformer-based models like BERT or GPT.

5. Recommendation System Using NLP

Create a recommendation system that utilizes user reviews and preferences to suggest products or services. This project can involve sentiment analysis to gauge user opinions and collaborative filtering techniques for personalized recommendations.

6. Generating Research Paper Titles

Train a model to generate titles for scientific papers based on their content. This innovative project can involve using GPT-2 or similar models trained on datasets of existing research titles.

7. Translate and Summarize News Articles

Build a web application that translates news articles from one language to another while also summarizing them. This project can utilize libraries such as Hugging Face Transformers for translation tasks combined with summarization techniques.
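For item 1, a minimal sketch of the character n-gram approach: build a trigram frequency profile per language, then pick the language whose profile has the highest cosine similarity to the input. The two training sentences are hypothetical and far too small for real use; the sketch only shows the mechanics.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Count overlapping character n-grams, padding the text with spaces."""
    padded = " " + " ".join(text.lower().split()) + " "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a, b):
    """Cosine similarity between two n-gram count vectors."""
    dot = sum(a[g] * b[g] for g in a if g in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def identify_language(text, profiles):
    """Return the language whose n-gram profile is most similar to `text`."""
    query = char_ngrams(text)
    return max(profiles, key=lambda lang: cosine(query, profiles[lang]))


# Hypothetical, tiny training samples; real profiles need far more text per language
samples = {
    "english": "the quick brown fox jumps over the lazy dog and the cat",
    "spanish": "el rápido zorro marrón salta sobre el perro perezoso y el gato",
}
profiles = {lang: char_ngrams(text) for lang, text in samples.items()}
print(identify_language("the dog and the cat", profiles))  # english
print(identify_language("el perro y el gato", profiles))   # spanish
```

Character n-grams work even on short inputs and unseen words, which is why they remain a strong baseline before reaching for RNNs or Transformers.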

0 notes