#Global GPT

Text

What is Global GPT?

The world of AI is constantly evolving, with tools like Global GPT revolutionizing how we interact with technology. Whether you are a writer, student, or business owner, tools like these can simplify your tasks and make your work more efficient. But what exactly is Global GPT, and how does it compare to ChatGPT? Let’s explore the details. Difference Between Global GPT and ChatGPT Understanding…

#AI#blogging#ChatGPT#dailyprompt#dailyprompt-1822#dailyprompt-1823#dailyprompt-1824#Global GPT#technology#writing-blogging

0 notes

Text

How AI is Changing the Way We Tackle Conspiracy Theories

New Post has been published on https://thedigitalinsider.com/how-ai-is-changing-the-way-we-tackle-conspiracy-theories/

Conspiracy theories have always been a part of human history, drawing people in with stories of secret plots and hidden truths. But in today’s connected world, these theories are not just harmless gossip; they’ve become a global problem. With social media, false ideas like “9/11 was an inside job” or “vaccines have microchips” can spread across the world in a matter of minutes. These narratives can create distrust, divide communities, and, in some cases, incite violence.

Psychologists have spent years trying to understand why people believe in these theories and how to challenge them. Despite their best efforts, changing these beliefs has proven difficult. Psychological theories suggest that these ideas are deeply tied to emotions and people’s personal identity rather than logic or facts.

However, researchers have recently found that AI might offer a way forward. Unlike traditional methods, AI doesn’t just present facts—it engages in conversations. By listening and responding in ways that feel personal and empathetic, AI has the potential to challenge these deeply held beliefs. In this article, we will explore this newfound capability of AI and how it could change how we address conspiracy theories.

The AI Experiment: A New Approach

Recently, researchers conducted an experiment to explore whether generative AI could deal with the challenge of conspiracy theories. Their study, published in Science, employed OpenAI’s GPT-4 Turbo, a large language model (LLM), to engage conspiracy believers in personalized, evidence-based conversations. Participants were asked to share a conspiracy theory they believed in and supporting evidence. The AI then engaged them in a structured, three-round dialogue, presenting counterarguments tailored to the specific theory the person believed in.

The results were impressive. After talking to the AI, belief in the conspiracy theory dropped by an average of 20%. This wasn’t just a quick shift; the change stayed for at least two months. Even more surprisingly, people became less likely to believe other conspiracy theories. They also felt more motivated to challenge others who believed in similar ideas.

Why AI Works Where Humans Struggle

There are several reasons why AI stands out in addressing conspiracy theories, doing things that people often find hard to achieve. One of its key strengths is personalization. Instead of using generic fact-checks or broad explanations, AI adapts its responses to match each person’s specific beliefs and the evidence they provide. This makes the conversations more relevant and convincing.

Another reason AI works so well is that it can stay calm and neutral. Unlike humans, AI can sustain detailed discussions without showing frustration or judgment. This lets it maintain an empathetic and non-judgmental tone, making people less defensive and more open to rethinking their views.

The accuracy of AI is another critical factor. It’s been tested on hundreds of claims, and 99.2% of the time, its responses were accurate. This reliability builds trust and makes people more likely to reconsider their beliefs.

What’s even more impressive is how AI’s impact goes beyond just one conspiracy theory. It helps people rethink their approach to similar ideas, making them less likely to believe other conspiracy theories. Some even feel motivated to challenge misinformation when they see it. By tackling both specific beliefs and the broader mindset, AI shows great potential in how we can fight conspiracy theories effectively.

Implications for Society

The world is struggling with misinformation, and these findings bring a ray of hope. We’ve long been told that conspiracy theories can only be tackled with facts, but this study shows that even deep-rooted beliefs can be changed with the right approach. It’s possible to help people move out of the misinformation cycle by guiding them toward a more grounded view of reality.

AI’s ability to tackle conspiracy theories could have an impact beyond individual conversations. If used correctly, it could help reduce societal conflicts caused by conspiracy theories, such as the fear of vaccines or false election fraud claims. It could also play a role in preventing misinformation from spreading in the first place. By being part of education, public health campaigns, and even social media platforms, AI could tackle false ideas before they gain traction.

Ethical Considerations and Risks

AI is powerful, but with that power comes responsibility. The same tools that can help debunk conspiracy theories could also be used for harm. Imagine AI being used to spread false information or manipulate people’s opinions. That’s why it’s crucial to make sure AI is used ethically. There must be clear rules, oversight, and transparency in how AI is applied, especially regarding sensitive topics.

The success of AI also depends on the quality of its training data and algorithms. If the data is biased, it could lead to inaccurate or unfair responses, damaging the AI’s credibility and effectiveness. Regular updates, ongoing research, and independent audits will be critical to identify and correct these issues, ensuring the technology is used responsibly and ethically.

A Broader Shift in AI’s Role

This study highlights an emerging shift in how AI can benefit society. While generative AI is often criticized for amplifying misinformation, this research shows it can also be a powerful tool to counteract it. By demonstrating AI’s ability to address complex issues like conspiracy theories, the study changes the standard narrative, showcasing AI as a solution to some of the challenges it’s often blamed for.

It’s a reminder that technology is neutral, neither good nor bad. Its impact depends entirely on how we decide to use it. By focusing on ethical and responsible applications, we can utilize AI’s potential to drive positive change and tackle some of society’s most pressing problems.

The Bottom Line

AI offers a promising new way to combat conspiracy theories by engaging people in personalized, empathetic conversations that encourage critical thinking and reduce belief in misinformation. Unlike traditional methods, AI’s neutral tone, tailored responses, and high accuracy effectively challenge deep-rooted beliefs and foster a broader resistance to conspiracy thinking. However, its success depends on ethical usage, transparency, and ongoing oversight. This study highlights AI’s potential to counter misinformation and promote societal harmony when applied responsibly.

#ai#Algorithms#applications#approach#Article#Artificial Intelligence#challenge#change#conspiracy theories#conspiracy theory#data#deal#Dialogue#education#election#emotions#Empathetic AI#employed#factor#Facts#fear#Fight#fraud#generative#generative ai#Global#GPT#GPT-4#gpt-4 turbo#Harmony

0 notes

Text

The Impact of Generative AI on Supply Chain Management: Optimizing Logistics

The generative AI market has been gaining significant traction in recent years, driven by the increasing adoption of artificial intelligence (AI) across various industries. Generative AI refers to a subset of AI techniques focused on creating data, content, or outputs that mimic or resemble human-generated content. This approach enables machines to autonomously produce diverse outputs, including images, text, audio, and video, often indistinguishable from human-created content. In this article, we will delve into the current state of the generative AI market, its applications, challenges, and future outlook.

Market Size and Growth

The global generative AI market size was valued at USD 43.87 billion in 2023 and is projected to grow from USD 67.18 billion in 2024 to USD 967.65 billion by 2032, exhibiting a Compound Annual Growth Rate (CAGR) of 39.6% during the forecast period (2024-2032). This rapid growth is attributed to the rising need to create virtual worlds in the metaverse, demand for conversational generative AI capabilities, and the deployment of large language models (LLMs).

Applications of Generative AI

Generative AI has numerous applications across various industries, including marketing, healthcare, finance, and education. In marketing, generative AI is used for content creation, content personalization, content ideation, and automated customer service and support. For instance, generative AI models can write copy from an outline or prompt, and they’re handy for short-form content like blog posts, emails, social media posts, and digital advertising. In healthcare, generative AI is used for medical imaging analysis, disease diagnosis, and personalized treatment planning.

Challenges in Adopting Generative AI

Despite the numerous benefits of generative AI, there are several challenges involved in adopting this technology. Some of the key challenges include data security concerns, biases, errors, and limitations of generative AI, dependence on third-party platforms, and the need for employee training. Additionally, the market faces risks related to data breaches and sensitive information, which can hinder market growth.

Key Players in the Generative AI Market

The generative AI market is dominated by key players such as IBM Corporation, Microsoft Corporation, Google LLC (Alphabet), Adobe, Amazon Web Services, Inc., SAP SE, Rephrase AI, Nvidia, and Synthesis AI, among others. These companies are driving innovation in the market through the development of new generative AI models and applications.

Future Outlook

The future outlook for the generative AI market is promising, with the potential to transform various industries and revolutionize the way we live and work. As the technology continues to evolve, we can expect to see more sophisticated applications of generative AI, including the creation of virtual worlds in the metaverse and the deployment of large language models. However, the market will also face challenges related to data security, biases, and limitations, which will require careful consideration and mitigation strategies.

Conclusion

In conclusion, the generative AI market is a rapidly growing field with numerous applications across various industries. While there are challenges involved in adopting this technology, the potential benefits are significant, and the market is expected to continue growing at a rapid pace. As the technology continues to evolve, we can expect to see more sophisticated applications of generative AI, which will transform the way we live and work.

#Generative AI#Retail#Marketing#Sales#ChatGPT#Jasper#Einstein GPT#Rapidely#Manychat#Flick#Meta#Mark Zuckerberg#Technology#Future Profits#Market Analysis#Market Size#Opportunities#Forecasts#Growth#Industry Verticals#Global Data#Competitive Landscape#Microsoft Corporation#Google#IBM Corporation#Open AI#Bloomberg Intelligence#Research#Software Revenue#AI Products

0 notes

Video

youtube

Why ProPics Should Be Your Global Media Solutions Partner #gpt4 #ProPic... Why ProPics Should Be Your Global Media Solutions Partner #GPT #ChatGPT #ProPicsCanadaMedia #Media #Video #Audio #Photography #Graphics #Brandidentity #Branding #Brandawareness #socialmedia #logos #Webdesign #Mediamanagement #Mediamonitoring #CustomSolutions #Customapps #AIIMS #FilmOneSolutions #CloudComputing #AI #GenerativeAI #PredictiveAI #MachineLearning #IPManagement #ITPurchasing Transition #implimentation #AIIntegration #AISolutions #Cloud #FileManagement #DataStorage #Datamanagement #Dataanalytics #Dataanalysis #Safetysolutions #businessgrowth #BusinessDevelopment #onlinemarketing #traditionalmarketing #Contentcreation #Strategy #Creativestrategies #Technology #Videoproduction #Mediaproduction #Canada #Vancouver #Toronto #Calgary #Whitehorse #Yellowknife #nunavut #Montreal #Kelowna #Edmonton #PrinceGeorge #Winnipeg #Regina

#youtube#Why ProPics Should Be Your Global Media Solutions Partner GPT ChatGPT ProPicsCanadaMedia Media Video Audio Photography Graphics Brandident

0 notes

Text

Tom A. I. Riddle: The Dark Side of the Digital Horcrux

Artificial Intelligence has taken us by storm. In my blog this week, I look at the dark side of AI. From the control by a select few to its potential social and global disruptions, this essay delves into the digital horcrux that is AI. #AI #DigitalHorcrux

Over the past weekend, I had the opportunity to dine with some very smart people: engineers and entrepreneurs, all alumni of GIKI, Pakistan. They had insightful things to say about a range of issues, from the oil and gas sector to American geopolitical power, the economy, and all the way to AI and industrial automation.

#A.I.#AI and Democracy#AI Bias#AI Disruption#Artificial Intelligence#Data Privacy#Digital Transformation#Facebook#Global Peace#GPT-4#Horcrux#Income Inequality#Power#Social Media#Tom Riddle#Tom Riddle's Diary#Universal Basic Income#Western Hegemony

1 note


Text

The Tragedy of Unchecked Misinformation: An Appeal for Responsible Internet Use

Has anyone else noticed the dangerous common denominator in this maelstrom of pseudoscientific gibberish flooding the internet? Let me shed some light if you haven't: it's the rampant, unrestricted access to information dissemination platforms, granted indiscriminately to all — regardless of their level of intellectual maturity or educational background.

Irresponsible Internet Access: A Lurking Threat

Imagine providing a toddler with a loaded firearm — it's as preposterously reckless as it sounds. Now, think of granting anyone and everyone the means to freely navigate and contribute to the internet — same level of danger, but with far-reaching and often irreversible repercussions. Our failure to regulate access to the digital world has allowed the perpetuation of baseless theories, the promotion of pseudoscience, and the flourishing of outright lies.

Take, for instance, an unsettling trend I recently stumbled upon — a video promoting borax ingestion as some sort of dietary supplement or 'health fad.' While this isn't about vilifying a specific demographic, it is concerning to observe such harmful misinformation being propagated by people, who are — ironically — often seen as caretakers and educators in their households. It's vital to remember, however, that being taken in by such dangerous misinformation is not exclusive to any one group; it is an issue that transcends gender, age, and social status, underscoring the critical importance of digital literacy for all internet users.

The Age of Misinformation: A Gullible Audience

Compounding the issue of unchecked internet access is the alarming readiness of certain internet users to uncritically accept any and all information presented to them. This unfortunate trait has fueled the rise of damaging belief systems, from anti-vaxxers and climate change deniers to COVID-19 skeptics and flat-earth believers. This willingness to believe in unverifiable claims extends to religious dogmatism, with unscientific declarations like 'Jesus is real' shared as truths without empirical validation.

A Call to Arms: Quality Control and Regulated Access

With today's advanced technology, we possess the means to combat this wave of misinformation. We could, theoretically, have artificial intelligence ensure the veracity of shared online content, safeguarding the reliability of digital information. Yet, our focus is scattered — engrossed in perpetuating bigotry against various marginalized groups, and misguidedly 'canceling' individuals as a self-congratulatory act of faux-justice.

As the world continues to burn, both literally and metaphorically, and as the internet increasingly mirrors the lowest common denominator of human intellect, it's high time for rational and critical thinkers to step up. We need to convince our governing bodies to implement stricter regulations on internet access and enforce quality control measures for online content.

The need of the hour is to act — before the avalanche of misinformation turns into an unstoppable force, inflicting irrevocable damage on our society and future generations. Our collective survival could depend on it.

DO NOT INGEST BORAX!!!

#gpt#gpt4#ai#the critical skeptic#borax#boraxing#boraxxing#conspiracies#dangerous fads#anti vaxxers#crazy#crazy trends#dangerous trend#misinformation#global warming#social issues#internet moderation#critical thinking#reason#freedom is an illusion

0 notes

Text

One assessment suggests that ChatGPT, the chatbot created by OpenAI in San Francisco, California, is already consuming the energy of 33,000 homes. It’s estimated that a search driven by generative AI uses four to five times the energy of a conventional web search. Within years, large AI systems are likely to need as much energy as entire nations. And it’s not just energy. Generative AI systems need enormous amounts of fresh water to cool their processors and generate electricity. In West Des Moines, Iowa, a giant data-centre cluster serves OpenAI’s most advanced model, GPT-4. A lawsuit by local residents revealed that in July 2022, the month before OpenAI finished training the model, the cluster used about 6% of the district’s water. As Google and Microsoft prepared their Bard and Bing large language models, both had major spikes in water use — increases of 20% and 34%, respectively, in one year, according to the companies’ environmental reports. One preprint suggests that, globally, the demand for water for AI could be half that of the United Kingdom by 2027. In another, Facebook AI researchers called the environmental effects of the industry’s pursuit of scale the “elephant in the room”. Rather than pipe-dream technologies, we need pragmatic actions to limit AI’s ecological impacts now.

1K notes


Text

information flow in transformers

In machine learning, the transformer architecture is a very commonly used type of neural network model. Many of the well-known neural nets introduced in the last few years use this architecture, including GPT-2, GPT-3, and GPT-4.

This post is about the way that computation is structured inside of a transformer.

Internally, these models pass information around in a constrained way that feels strange and limited at first glance.

Specifically, inside the "program" implemented by a transformer, each segment of "code" can only access a subset of the program's "state." If the program computes a value, and writes it into the state, that doesn't make the value available to any block of code that might run after the write; instead, only some operations can access the value, while others are prohibited from seeing it.

This sounds vaguely like the kind of constraint that human programmers often put on themselves: "separation of concerns," "no global variables," "your function should only take the inputs it needs," that sort of thing.

However, the apparent analogy is misleading. The transformer constraints don't look much like anything that a human programmer would write, at least under normal circumstances. And the rationale behind them is very different from "modularity" or "separation of concerns."

(Domain experts know all about this already -- this is a pedagogical post for everyone else.)

1. setting the stage

For concreteness, let's think about a transformer that is a causal language model.

So, something like GPT-3, or the model that wrote text for @nostalgebraist-autoresponder.

Roughly speaking, this model's input is a sequence of words, like ["Fido", "is", "a", "dog"].

Since the model needs to know the order the words come in, we'll include an integer offset alongside each word, specifying the position of this element in the sequence. So, in full, our example input is

[
    ("Fido", 0),
    ("is", 1),
    ("a", 2),
    ("dog", 3),
]

The model itself -- the neural network -- can be viewed as a single long function, which operates on a single element of the sequence. Its task is to output the next element.

Let's call the function f. If f does its job perfectly, then when applied to our example sequence, we will have

f("Fido", 0) = "is"
f("is", 1) = "a"
f("a", 2) = "dog"

(Note: I've omitted the index from the output type, since it's always obvious what the next index is. Also, in reality the output type is a probability distribution over words, not just a word; the goal is to put high probability on the next word. I'm ignoring this to simplify exposition.)
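As a small illustration of the setup so far, here is a Python sketch that builds the (word, offset) inputs and the next-word targets that a perfect f would produce. The helper names `make_inputs` and `make_targets` are made up for this example, and, as in the note above, the targets are plain words rather than probability distributions.

```python
from typing import Dict, List, Tuple


def make_inputs(words: List[str]) -> List[Tuple[str, int]]:
    """Pair each word with its integer offset in the sequence."""
    return [(word, i) for i, word in enumerate(words)]


def make_targets(words: List[str]) -> Dict[Tuple[str, int], str]:
    """Map each (word, offset) input to the word that follows it.

    The last word has no successor in the text, so it gets no target.
    """
    return {(word, i): words[i + 1] for i, word in enumerate(words[:-1])}


words = ["Fido", "is", "a", "dog"]
inputs = make_inputs(words)
targets = make_targets(words)
```

Here `targets[("a", 2)]` is `"dog"`, which is exactly the case that looks impossible to get right from the single input `("a", 2)` alone.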

You may have noticed something: as written, this seems impossible!

Like, how is the function supposed to know that after ("a", 2), the next word is "dog"!? The word "a" could be followed by all sorts of things.

What makes "dog" likely, in this case, is the fact that we're talking about someone named "Fido."

That information isn't contained in ("a", 2). To do the right thing here, you need info from the whole sequence thus far -- from "Fido is a", as opposed to just "a".

How can f get this information, if its input is just a single word and an index?

This is possible because f isn't a pure function. The program has an internal state, which f can access and modify.

But f doesn't just have arbitrary read/write access to the state. Its access is constrained, in a very specific sort of way.

2. transformer-style programming

Let's get more specific about the program state.

The state consists of a series of distinct "memory regions" or "blocks," which have an order assigned to them.

Let's use the notation memory_i for these. The first block is memory_0, the second is memory_1, and so on.

In practice, a small transformer might have around 10 of these blocks, while a very large one might have 100 or more.

Each block contains a separate data-storage "cell" for each offset in the sequence.

For example, memory_0 contains a cell for position 0 ("Fido" in our example text), and a cell for position 1 ("is"), and so on. Meanwhile, memory_1 contains its own, distinct cells for each of these positions. And so does memory_2, etc.

So the overall layout looks like:

memory_0: [cell 0, cell 1, ...]
memory_1: [cell 0, cell 1, ...]
[...]

Our function f can interact with this program state. But it must do so in a way that conforms to a set of rules.

Here are the rules:

The function can only interact with the blocks by using a specific instruction.

This instruction is an "atomic write+read". It writes data to a block, then reads data from that block for f to use.

When the instruction writes data, it goes in the cell specified in the function's offset argument. That is, the "i" in f(..., i).

When the instruction reads data, the data comes from all cells up to and including the offset argument.

The function must call the instruction exactly once for each block.

These calls must happen in order. For example, you can't do the call for memory_1 until you've done the one for memory_0.

Here's some pseudo-code, showing a generic computation of this kind:

f(x, i) {
    calculate some things using x and i;
    // next 2 lines are a single instruction
    write to memory_0 at position i;
    z0 = read from memory_0 at positions 0...i;
    calculate some things using x, i, and z0;
    // next 2 lines are a single instruction
    write to memory_1 at position i;
    z1 = read from memory_1 at positions 0...i;
    calculate some things using x, i, z0, and z1;
    [etc.]
}

The rules impose a tradeoff between the amount of processing required to produce a value, and how early the value can be accessed within the function body.

Consider the moment when data is written to memory_0. This happens before anything is read (even from memory_0 itself).

So the data in memory_0 has been computed only on the basis of individual inputs like ("a", 2). It can't leverage any information about multiple words and how they relate to one another.

But just after the write to memory_0, there's a read from memory_0. This read pulls in data computed by f when it ran on all the earlier words in the sequence.

If we're processing ("a", 2) in our example, then this is the point where our code is first able to access facts like "the word 'Fido' appeared earlier in the text."

However, we still know less than we might prefer.

Recall that memory_0 gets written before anything gets read. The data living there only reflects what f knows before it can see all the other words, while it still only has access to the one word that appeared in its input.

The data we've just read does not contain a holistic, "fully processed" representation of the whole sequence so far ("Fido is a"). Instead, it contains:

a representation of ("Fido", 0) alone, computed in ignorance of the rest of the text

a representation of ("is", 1) alone, computed in ignorance of the rest of the text

a representation of ("a", 2) alone, computed in ignorance of the rest of the text

Now, once we get to memory_1, we will no longer face this problem. Stuff in memory_1 gets computed with the benefit of whatever was in memory_0. The step that computes it can "see all the words at once."
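Here is a toy Python sketch of the rules and of the "computed in ignorance" point. It is a simplification for illustration, not how a real transformer computes anything: instead of vectors, each value is just the set of words it could possibly depend on. The names `make_memory`, `write_read`, and the `known` variable are all invented for this sketch.

```python
from typing import FrozenSet, List, Optional, Tuple

Cell = Optional[FrozenSet[str]]


def make_memory(num_blocks: int, seq_len: int) -> List[List[Cell]]:
    """memory[b][i] is the cell for position i in block b (None = unwritten)."""
    return [[None] * seq_len for _ in range(num_blocks)]


def write_read(memory: List[List[Cell]], block: int, i: int,
               value: FrozenSet[str]) -> Tuple[Cell, ...]:
    """The one permitted instruction: write cell i, then read cells 0..i."""
    memory[block][i] = value
    return tuple(memory[block][: i + 1])


def f(word: str, i: int, memory: List[List[Cell]]) -> FrozenSet[str]:
    # Before the first write+read, f has seen only its own input word.
    known = frozenset([word])
    # Blocks are visited in order, exactly once each.
    for block in range(len(memory)):
        cells = write_read(memory, block, i, known)
        # Stand-in for "calculate some things": merge everything just read.
        known = frozenset().union(*cells)
    return known


# Sequential run over "Fido is a", with two memory blocks.
words = ["Fido", "is", "a"]
memory = make_memory(num_blocks=2, seq_len=len(words))
for i, word in enumerate(words):
    f(word, i, memory)
```

After this run, `memory[0][2]` holds only `{"a"}`, since it was written before anything was read, while `memory[1][2]` holds `{"Fido", "is", "a"}`, since it was written after the read from memory_0.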

Nonetheless, the whole function is affected by a generalized version of the same quirk.

All else being equal, data stored in later blocks ought to be more useful. Suppose for instance that

memory_4 gets read/written 20% of the way through the function body, and

memory_16 gets read/written 80% of the way through the function body

Here, strictly more computation can be leveraged to produce the data in memory_16. Calculations which are simple enough to fit in the program, but too complex to fit in just 20% of the program, can be stored in memory_16 but not in memory_4.

All else being equal, then, we'd prefer to read from memory_16 rather than memory_4 if possible.

But in fact, we can only read from memory_16 once -- at a point 80% of the way through the code, when the read/write happens for that block.

The general picture looks like:

The early parts of the function can see and leverage what got computed earlier in the sequence -- by the same early parts of the function. This data is relatively "weak," since not much computation went into it. But, by the same token, we have plenty of time to further process it.

The late parts of the function can see and leverage what got computed earlier in the sequence -- by the same late parts of the function. This data is relatively "strong," since lots of computation went into it. But, by the same token, we don't have much time left to further process it.

3. why?

There are multiple ways you can "run" the program specified by f.

Here's one way, which is used when generating text, and which matches popular intuitions about how language models work:

First, we run f("Fido", 0) from start to end. The function returns "is." As a side effect, it populates cell 0 of every memory block.

Next, we run f("is", 1) from start to end. The function returns "a." As a side effect, it populates cell 1 of every memory block.

Etc.

If we're running the code like this, the constraints described earlier feel weird and pointlessly restrictive.

By the time we're running f("is", 1), we've already populated some data into every memory block, all the way up to memory_16 or whatever.

This data is already there, and contains lots of useful insights.

And yet, during the function call f("is", 1), we "forget about" this data -- only to progressively remember it again, block by block. The early parts of this call have only memory_0 to play with, and then memory_1, etc. Only at the end do we allow access to the juicy, extensively processed results that occupy the final blocks.

Why? Why not just let this call read memory_16 immediately, on the first line of code? The data is sitting there, ready to be used!

Why? Because the constraint enables a second way of running this program.

The second way is equivalent to the first, in the sense of producing the same outputs. But instead of processing one word at a time, it processes a whole sequence of words, in parallel.

Here's how it works:

In parallel, run f("Fido", 0) and f("is", 1) and f("a", 2), up until the first write+read instruction. You can do this because the functions are causally independent of one another, up to this point. We now have 3 copies of f, each at the same "line of code": the first write+read instruction.

Perform the write part of the instruction for all the copies, in parallel. This populates cells 0, 1 and 2 of memory_0.

Perform the read part of the instruction for all the copies, in parallel. Each copy of f receives some of the data just written to memory_0, covering offsets up to its own. For instance, f("is", 1) gets data from cells 0 and 1.

In parallel, continue running the 3 copies of f, covering the code between the first write+read instruction and the second.

Perform the second write. This populates cells 0, 1 and 2 of memory_1.

Perform the second read.

Repeat like this until done.
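The two ways of running the program can be sketched side by side in Python. This is a toy under loud assumptions: values are integers rather than vectors, `start` and `step` are made-up stand-ins for the code before and between the write+read instructions, and "in parallel" is simulated with ordinary list comprehensions. The only thing it is meant to show is that both schedules fill the memory blocks identically and return identical outputs.

```python
from typing import List, Optional, Tuple

Memory = List[List[Optional[int]]]


def start(word: str, i: int) -> int:
    """Code before the first write+read: sees only one input element."""
    return len(word) + i


def step(block: int, h: int, cells: List[int]) -> int:
    """Code between write+reads: mixes f's own value with what it just read."""
    return h * 31 + sum(cells) + block


def run_sequential(words: List[str], num_blocks: int) -> Tuple[List[int], Memory]:
    """Run f to completion on one position at a time."""
    memory: Memory = [[None] * len(words) for _ in range(num_blocks)]
    outputs = []
    for i, word in enumerate(words):
        h = start(word, i)
        for b in range(num_blocks):
            memory[b][i] = h                      # write cell i
            h = step(b, h, memory[b][: i + 1])    # read cells 0..i
        outputs.append(h)
    return outputs, memory


def run_parallel(words: List[str], num_blocks: int) -> Tuple[List[int], Memory]:
    """Advance every copy of f in lockstep, one write+read at a time."""
    n = len(words)
    memory: Memory = [[None] * n for _ in range(num_blocks)]
    hs = [start(word, i) for i, word in enumerate(words)]
    for b in range(num_blocks):
        memory[b] = list(hs)                      # all copies write
        hs = [step(b, hs[i], memory[b][: i + 1])  # all copies read and continue
              for i in range(n)]
    return hs, memory


words = ["Fido", "is", "a"]
assert run_sequential(words, 3) == run_parallel(words, 3)
```

Note that in `run_parallel`, the copy at position i never touches cells beyond i, even though they are already filled in. That restriction is what keeps the two schedules equivalent.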

Observe that this mode of operation only works if you have a complete input sequence ready before you run anything.

(You can't parallelize over later positions in the sequence if you don't know, yet, what words they contain.)

So, this won't work when the model is generating text, word by word.

But it will work if you have a bunch of texts, and you want to process those texts with the model, for the sake of updating the model so it does a better job of predicting them.

This is called "training," and it's how neural nets get made in the first place. In our programming analogy, it's how the code inside the function body gets written.

The fact that we can train in parallel over the sequence is a huge deal, and probably accounts for most (or even all) of the benefit that transformers have over earlier architectures like RNNs.

Accelerators like GPUs are really good at doing the kinds of calculations that happen inside neural nets, in parallel.

So if you can make your training process more parallel, you can effectively multiply the computing power available to it, for free. (I'm omitting many caveats here -- see this great post for details.)

Transformer training isn't maximally parallel. It's still sequential in one "dimension," namely the layers, which correspond to our write+read steps here. You can't parallelize those.

But it is, at least, parallel along some dimension, namely the sequence dimension.

The older RNN architecture, by contrast, was inherently sequential along both these dimensions. Training an RNN is, effectively, a nested for loop. But training a transformer is just a regular, single for loop.

4. tying it together

The "magical" thing about this setup is that both ways of running the model do the same thing. You are, literally, doing the same exact computation. The function can't tell whether it is being run one way or the other.

This is crucial, because we want the training process -- which uses the parallel mode -- to teach the model how to perform generation, which uses the sequential mode. Since both modes look the same from the model's perspective, this works.

This constraint -- that the code can run in parallel over the sequence, and that this must do the same thing as running it sequentially -- is the reason for everything else we noted above.

Earlier, we asked: why can't we allow later (in the sequence) invocations of f to read earlier data out of blocks like memory_16 immediately, on "the first line of code"?

And the answer is: because that would break parallelism. You'd have to run f("Fido", 0) all the way through before even starting to run f("is", 1).

By structuring the computation in this specific way, we provide the model with the benefits of recurrence -- writing things down at earlier positions, accessing them at later positions, and writing further things down which can be accessed even later -- while breaking the sequential dependencies that would ordinarily prevent a recurrent calculation from being executed in parallel.

In other words, we've found a way to create an iterative function that takes its own outputs as input -- and does so repeatedly, producing longer and longer outputs to be read off by its next invocation -- with the property that this iteration can be run in parallel.

We can run the first 10% of every iteration -- of f() and f(f()) and f(f(f())) and so on -- at the same time, before we know what will happen in the later stages of any iteration.

The call f(f()) uses all the information handed to it by f() -- eventually. But it cannot make any requests for information that would leave itself idling, waiting for f() to fully complete.

Whenever f(f()) needs a value computed by f(), it is always the value that f() -- running alongside f(f()), simultaneously -- has just written down, a mere moment ago.

No dead time, no idling, no waiting-for-the-other-guy-to-finish.

p.s.

The "memory blocks" here correspond to what are called "keys and values" in usual transformer lingo.

If you've heard the term "KV cache," it refers to the contents of the memory blocks during generation, when we're running in "sequential mode."

Usually, during generation, one keeps this state in memory and appends a new cell to each block whenever a new token is generated (and, as a result, the sequence gets longer by 1).

This is called "caching" to contrast it with the worse approach of throwing away the block contents after each generated token, and then re-generating them by running f on the whole sequence so far (not just the latest token). And then having to do that over and over, once per generated token.
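A rough way to see why the no-cache approach is worse: count how many positions f has to be run on in total. This is a sketch under simplifying assumptions (generation starts from an empty sequence, and every position costs the same); the function names are made up for illustration.

```python
def positions_run_with_cache(num_generated):
    """Keep the blocks around: each new token runs f on one new position."""
    total = 0
    for _ in range(num_generated):
        total += 1
    return total


def positions_run_without_cache(num_generated):
    """Throw the blocks away: each new token re-runs f on the whole sequence."""
    total = 0
    seq_len = 0
    for _ in range(num_generated):
        seq_len += 1      # the sequence is now one token longer
        total += seq_len  # and every position in it is recomputed
    return total


if __name__ == "__main__":
    print(positions_run_with_cache(100))
    print(positions_run_without_cache(100))
```

With the cache the count grows linearly in the number of generated tokens; without it the count is 1 + 2 + ... + n, which grows quadratically.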

#ai tag#is there some standard CS name for the thing i'm talking about here?#i feel like there should be#but i never heard people mention it#(or at least i've never heard people mention it in a way that made the connection with transformers clear)

302 notes

Text

So, just some Fermi numbers for AI: I'm going to invent a unit right now, 1 Global Flop-Second. It's the total amount of computation available in the world, for one second. That's 10^21 flops if you're actually kind of curious. GPT-3 required about 100 Global Flop-Seconds, or nearly 2 minutes. GPT-4 required around 10,000 Global Flop-Seconds, or about 3 hours, and at the time, consumed something like 1/2000th of the world's total computational capacity for a couple of years. If we assume that every iteration just goes up by something like 100x as many flop-seconds, GPT-5 is going to take 1,000,000 Global Flop-Seconds, or 12 days of capacity. They've been working on it for a year and a half, which implies that they've been using something like 1% of the world's total computational capacity in that time.

So just drawing straight lines through the guesses (this is a Fermi estimation), GPT-6 would need 20x as much computing fraction as GPT-5, which needed 20x as much as GPT-4, so it would take something like a quarter of all the world's computational capacity to make if they tried for a year and a half. If they cut themselves some slack and went for five years, they'd still need 5-6%.

And GPT-7 would need 20x as much as that.
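The unit conversions above are easy to redo by hand or in a few lines. The sketch below assumes the post's own figure of 10^21 flops per second for the whole world and a 365-day year; the constant and function names are made up for illustration, and the Global Flop-Second counts are the post's guesses, not measured values.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365  # assumption: ignore leap years for a Fermi estimate


def capacity_days(global_flop_seconds):
    """How many days of the entire world's compute this many units represent."""
    return global_flop_seconds / SECONDS_PER_DAY


def capacity_fraction(global_flop_seconds, years):
    """Share of world compute needed if the work is spread over `years`."""
    return global_flop_seconds / (years * DAYS_PER_YEAR * SECONDS_PER_DAY)


if __name__ == "__main__":
    # Post's guesses: GPT-3 ~ 1e2, GPT-4 ~ 1e4, GPT-5 ~ 1e6 Global Flop-Seconds.
    for name, gfs in [("GPT-3", 1e2), ("GPT-4", 1e4), ("GPT-5", 1e6)]:
        print(name, gfs / 60, "minutes,", capacity_days(gfs), "days")
    print("GPT-5 share over 1.5 years:", capacity_fraction(1e6, 1.5))
```

Run as written, this gives roughly 1.7 minutes, 2.8 hours and 11.6 days for the three models, and a share of about 2% for GPT-5 over a year and a half, which is the same order of magnitude as the rounded figures in the post.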

OpenAI's CEO has said that their optimistic estimates for getting to GPT-7 would require seven trillion dollars of investment. That's about as much as Microsoft, Apple, and Google combined. So, the limiting factors involved are...

GPT-6: Limited by money. GPT-6 doesn't happen unless GPT-5 can make an absolute shitload. Decreasing gains kill this project, and all the ones after that. We don't actually know how far deep learning can be pushed before it stops working, but it probably doesn't scale forever.

GPT-7: Limited by money, and by total supply of hardware. Would need to make a massive return on GPT-6, and find a way to actually improve hardware output for the world.

GPT-8: Limited by money, and by hardware, and by global energy supplies. Would require breakthroughs in at least two of those three. A world where GPT-8 can be designed is almost impossible to imagine. A world where GPT-8 exists is like summoning an elder god.

GPT-9, just for giggles, is like a Kardashev 1 level project. Maybe level 2.

55 notes

Text

The European Union today agreed on the details of the AI Act, a far-reaching set of rules for the people building and using artificial intelligence. It’s a milestone law that, lawmakers hope, will create a blueprint for the rest of the world.

After months of debate about how to regulate companies like OpenAI, lawmakers from the EU’s three branches of government—the Parliament, Council, and Commission—spent more than 36 hours in total thrashing out the new legislation between Wednesday afternoon and Friday evening. Lawmakers were under pressure to strike a deal before the EU parliament election campaign starts in the new year.

“The EU AI Act is a global first,” said European Commission president Ursula von der Leyen on X. “[It is] a unique legal framework for the development of AI you can trust. And for the safety and fundamental rights of people and businesses.”

The law itself is not a world-first; China’s new rules for generative AI went into effect in August. But the EU AI Act is the most sweeping rulebook of its kind for the technology. It includes bans on biometric systems that identify people using sensitive characteristics such as sexual orientation and race, and the indiscriminate scraping of faces from the internet. Lawmakers also agreed that law enforcement should be able to use biometric identification systems in public spaces for certain crimes.

New transparency requirements for all general purpose AI models, like OpenAI's GPT-4, which powers ChatGPT, and stronger rules for “very powerful” models were also included. “The AI Act sets rules for large, powerful AI models, ensuring they do not present systemic risks to the Union,” says Dragos Tudorache, member of the European Parliament and one of two co-rapporteurs leading the negotiations.

Companies that don’t comply with the rules can be fined up to 7 percent of their global turnover. The bans on prohibited AI will take effect in six months, the transparency requirements in 12 months, and the full set of rules in around two years.

Measures designed to make it easier to protect copyright holders from generative AI and require general purpose AI systems to be more transparent about their energy use were also included.

“Europe has positioned itself as a pioneer, understanding the importance of its role as a global standard setter,” said European Commissioner Thierry Breton in a press conference on Friday night.

Over the two years lawmakers have been negotiating the rules agreed today, AI technology and the leading concerns about it have dramatically changed. When the AI Act was conceived in April 2021, policymakers were worried about opaque algorithms deciding who would get a job, be granted refugee status or receive social benefits. By 2022, there were examples of AI actively harming people. In a Dutch scandal, decisions made by algorithms were linked to families being forcibly separated from their children, while students studying remotely alleged that AI systems discriminated against them based on the color of their skin.

Then, in November 2022, OpenAI released ChatGPT, dramatically shifting the debate. The leap in AI’s flexibility and popularity triggered alarm in some AI experts, who drew hyperbolic comparisons between AI and nuclear weapons.

That discussion manifested in the AI Act negotiations in Brussels in the form of a debate about whether makers of so-called foundation models such as the one behind ChatGPT, like OpenAI and Google, should be considered as the root of potential problems and regulated accordingly—or whether new rules should instead focus on companies using those foundational models to build new AI-powered applications, such as chatbots or image generators.

Representatives of Europe’s generative AI industry expressed caution about regulating foundation models, saying it could hamper innovation among the bloc’s AI startups. “We cannot regulate an engine devoid of usage,” Arthur Mensch, CEO of French AI company Mistral, said last month. “We don’t regulate the C [programming] language because one can use it to develop malware. Instead, we ban malware.” Mistral’s foundation model 7B would be exempt under the rules agreed today because the company is still in the research and development phase, Carme Artigas, Spain's Secretary of State for Digitalization and Artificial Intelligence, said in the press conference.

The major point of disagreement during the final discussions that ran late into the night twice this week was whether law enforcement should be allowed to use facial recognition or other types of biometrics to identify people either in real time or retrospectively. “Both destroy anonymity in public spaces,” says Daniel Leufer, a senior policy analyst at digital rights group Access Now. Real-time biometric identification can identify a person standing in a train station right now using live security camera feeds, he explains, while “post” or retrospective biometric identification can figure out that the same person also visited the train station, a bank, and a supermarket yesterday, using previously banked images or video.

Leufer said he was disappointed by the “loopholes” for law enforcement that appeared to have been built into the version of the act finalized today.

European regulators’ slow response to the emergence of the social media era loomed over discussions. Almost 20 years elapsed between Facebook's launch and the Digital Services Act, the EU rulebook designed to protect human rights online, taking effect this year. In that time, the bloc was forced to deal with the problems created by US platforms, while being unable to foster their smaller European challengers. “Maybe we could have prevented [the problems] better by earlier regulation,” Brando Benifei, one of two lead negotiators for the European Parliament, told WIRED in July. AI technology is moving fast. But it will still be many years until it’s possible to say whether the AI Act is more successful in containing the downsides of Silicon Valley’s latest export.

82 notes

Text

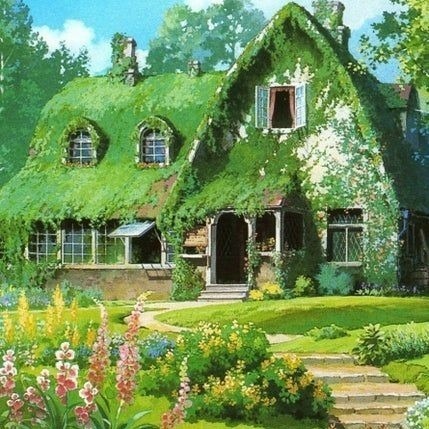

Asteroid Hayao Miyazaki (8883) in your astrology natal chart

by : Brielledoesastrology (tumblr)

"I believe that fantasy in the meaning of imagination is very important. We shouldn't stick too close to everyday reality but give room to the reality of the heart, of the mind, and of the imagination." - Hayao Miyazaki

asteroid "Miyazaki Hayao" code number : 8883

.

The asteroid "Miyazaki Hayao" (8883) is named after the famous Japanese animator and movie director Hayao Miyazaki.

.

Hayao Miyazaki is an acclaimed Japanese film director and animator, best known for his imaginative and visually stunning animated films, often called anime. Miyazaki is the co-founder of Studio Ghibli, a renowned animation studio, and has directed some of its most popular and successful films.

.

Miyazaki has been nominated for and won several Academy Awards. His film "Spirited Away" (2001) won the Academy Award for Best Animated Feature in 2003, making it the first hand-drawn and non-English-language film to win in that category.

.

His work often deals with themes such as nature, conservation, growth and the importance of kindness.

.

Miyazaki's films such as Spirited Away, Totoro, and Princess Mononoke are known for their compelling storytelling, beautiful hand-drawn animation, and unforgettable characters. He gained worldwide recognition and became an influential figure in the animation world.

.

Hayao Miyazaki's art style is characterized by attention to detail, fluidity, and the ability to evoke a sense of wonder and magic. His hand-drawn animations are known for their meticulous attention to detail and care put into each frame.

.

Miyazaki often emphasizes the beauty of nature, including lush landscapes, intricate cityscapes, and fantastical creatures. His characters are brought to life by expressive facial features and subtle movements to capture a wide range of emotions.

.

Miyazaki's art style also incorporates elements of traditional Japanese aesthetics, including a strong connection with nature and an emphasis on simplicity and elegance.

.

Overall, his art style is distinctive and recognizable, creating a visually stunning and immersive experience for the viewer.

(source : chat gpt)

.

In astrology the asteroid "Hayao Miyazaki" (8883) could indicate : your artistic expression, your imagination, your storytelling, your environmental consciousness, or ur sense of wonder and magic, where hayao miyazaki's work could inspire or influence ur life in some way, ur interest in animation, your interest in art, where u are widely respected or admired for your artistic work, where your artistic work could gain wide or global recognition, where u plan to retire a lot of times when u are old but you still do ur job anyway lmao

⚠️ Warning : i consider this asteroid as prominent and brings the most effect if it conjuncts ur personal planets (sun,moon,venus,mercury,mars) and if it conjuncts ur personal points (ac,dc,ic,mc), i use 0 - 2.5 orbs (for conjunctions). For sextile, trine, opposite and square aspects to asteroids i usually use 0 - 2 orbs. Yes tight conjunctions of planet / personal points to asteroids tends to give the most effect, but other aspects (sextile,trine,square,opposite, etc) still exist, even they produce effects. If it doesn't aspect any of your planets or personal points, check the house placement of the asteroid, maybe some stuff/topics relating to this asteroid could affect some topics/stuff relating to the house placement . ⚠️

#astrology#astro observations#astro notes#astrology observations#astro community#astrology notes#zodiac#astroblr#aquarius#gemini#tarotblr#witchblr#astrology blogs#astrology community#hayao miyazaki#studio ghibli#anime#anime astrology#asteroids astrology#astrology asteroids#asteroids in astrology#asteroids astrology observation#brielledoesastrology#asteroid hayao miyazaki#spirited away#d4rkpluto#zeldasnotes#astrology tips#fame in astrology#Youtube

295 notes

Text

AI Summarizer Review: Best Summarizer Tool - Technology Org

New Post has been published on https://thedigitalinsider.com/ai-summarizer-review-best-summarizer-tool-technology-org/

AI Summarizer is an online summary-generating tool. It is reachable at the domain “Summarizer.org.” It’s quite popular and shows up in the top results when you look for summarizing tools on Google.

In this post, we’re going to be looking at the workings and features of this tool. The purpose of the review is to help you decide whether or not this is the tool that you want to use for your needs.

What exactly is an AI Summarizer and how does it work?

At the domain Summarizer.org, you can find a number of different tools. However, in this review, we’re going to be looking at the AI summarizer in particular.

The AI Summarizer utilizes artificial intelligence to process and understand the content that you provide. Then, once it has the main idea, it shortens the text to a smaller number of words.

Some of the models that this tool utilizes include:

NLP: NLP stands for natural language processing. It is basically the technology that software and machines use to understand natural human languages.

GPT 3.5/4: GPT 3.5/4 is quite a popular AI technology. It is used in ChatGPT. GPT basically stands for generative pre-trained transformer. This is the main ingredient that allows the AI Summarizer to understand the content and create a summary without breaking the context.

How well does AI Summarizer work?

Next up, let’s take a look at how well this tool works. Whatever the frills and peripheral perks may be, the main selling point of any online tool is its performance.

There are two modes that you can use in AI Summarizer. They are right at the top of the input box. Here is how they look:

Since we want to give you a well-rounded idea of the performance of this tool, we will check out both of these modes.

The Testing Material

When we review an online tool, we try to use material that actually shows the efficacy of the main functionality. That sounds a bit dumb and obvious but we’ll explain it.

The best way to check a summarizer is to create some content that has an order or chronology to it. A story, for example, would be a good way to check a summarizer because the result would show us whether or not the tool captured the important bits.

If we just put in some purely informational content about a short topic, say, long-tail keywords or something, then even one line would encapsulate the whole thing.

Keeping that in mind, we’re going to be using a story-like bit of text to test Summarizer.org. We’re going to write a short one here right now.

There was a boy sitting alone in the forest when a stag came into the clearing in front of him. The stag and the boy stared at each other for a while. “I never understood the meaning of deer in the headlights,” said the boy, “But I get it now.” The stag shook his head and said “I’m not a deer.” The boy also shook his head and replied “You’re a stag, sure, but it’s the same family.”

Meanwhile, a lion also arrived at the scene.

“It’s impressive,” he said to the boy, “that you don’t find his ability to speak as fascinating as the fact that he got his family wrong.”

The stag chipped in at this point:

“He probably thinks we’re some hallucinations.”

The boy laughed out loud.

“A boy sitting in the middle of the forest. Ready meal for every predator. And you think I am the one that’s hallucinating?”

The boy and the stag looked at the lion. The latter closed his eyes. When he opened them, there was no one in the clearing. His stomach grumbling, the lion turned back, thinking what it would tell the cubs and the missus back in the den.

I ended up writing something really deep here but it works.

Testing of the Free “AI Summarizer” Mode

Let’s check out the free mode. It’s named “AI Summarizer.” You can use this one without paying but if you want the 2.0 mode, you have to be a paid member.

Here is the summary that this mode created for the input text when the length was set at 24%.

In a forest, a boy encounters a stag and they discuss the similarities between deer and stags. A lion joins the conversation and questions the boy’s fascination with the stag’s ability to speak rather than his misidentifying the family. The boy jokes about being a potential prey and they all look at the lion before disappearing. The lion contemplates what to tell his family upon returning to the den.

For the most part, the summary does convey the main occurrences but it spoils the context and the whole setting. The summary implies that the stag and the boy just disappear into thin air rather than describing them as delirious hallucinations of a hungry lion. That changes the overall meaning, which is not a good indicator of the overall performance of the tool.

Plus, in the summary, the boy “encounters” the stag, whereas in the input, it’s the exact opposite. The stag comes across the boy. There is no mention of the point that the lion was hungry and it had to return to the cubs and break the sad news of not having any food.

Overall, the summary did away with a lot of main points and the output isn’t completely on point. We would say that it was 60% accurate.

Now let’s move on to the next mode.

Testing of the AI Summarizer 2.0

Here is what the results look like when we use the paid mode.

A boy sat alone in the forest when a stag appeared before him. They gazed at each other, with the boy remarking on the deer in headlights phenomenon. The stag corrected him, and a lion joined the conversation, amused by the boy’s response. The stag joked about being seen as a hallucination, leading the boy to laugh. The boy challenged the idea of being prey, causing the lion to contemplate what to tell its family. And just like that, the boy and the stag vanished, leaving the lion to ponder the encounter.

If we’re being completely honest, the same problems exist in this output as did in the first performance testing. The context is off, and the whole setting of the story is disturbed.

Without probing into the problems again, we’ll just say that this summary was around 65% accurate. The tone and the flow were slightly better.

Verdict of Performance

After seeing how well this tool performs and how it shrinks the provided content, here is how we can present the final verdict.

The performance of this tool is fair. It does have issues but it doesn’t deviate completely off point and it does not introduce alien concepts or aspects in the content. However, due to the issues, we’ll say that the tool should be used under stringent supervision and with the necessary editing.

Features of AI Summarizer

Now that we’re done looking at the performance, we’re going to list some of the main features that we think play a role in deciding the overall quality of the tool.

AI Summarizer is available in various languages

This is the first and one of the most remarkable features that exist in this tool. It’s something that we haven’t seen a lot in other summarizing tools.

When you load AI Summarizer, you can specify the language that you want to summarize content in. If there is some Spanish content or some French text that you need to have shortened, you can select the respective language from the menu.

Once you click on the language, the text on the tool’s interface will automatically translate. This can help you find the options you need easily.

AI Summarizer has various useful post-processing features

When the provided text is summarized and the output is provided in the right side box, there are a number of options that you can use.

You can click on the “Show Bullets” button to view the summary in the form of bullets rather than paragraphs

You can click on the “Best Line” button to view the most descriptive line from the whole summary. It can be a good option if you just want to encapsulate the whole crux of the essay

AI Summarizer allows you to upload and download files easily

Another great feature that you can use with this tool is the quick file uploads and downloads.

Before you get started with the process, you can upload a file from your local storage so that it gets quickly imported without needless copy-pasting.

And once you’re done, you can do the same process in reverse. You can download the file to your local storage instead of copying it all and then pasting it into a Word file or something.

While this was an in-depth review of the AI summarizer offered by Summarizer.org, there are other tools that are offered in the domain as well. Let us take a brief look at each of them as well and see if they perform well before ending this post.

Other Tools offered by Summarizer.org

Following are the other tools that are provided by Summarizer.org on top of the AI summarizer. We’re also adding a brief review of each of the other tools in the list below.

AI Paraphrasing Tool

The AI paraphrasing tool that is offered by Summarizer.org is a free-to-use tool that can rephrase the given content in an instant. It offers 4 different paraphrasing modes, namely Smooth, Creative, Shorten, and AI Paraphrase.

The tool says it improves the readability and clarity of the provided text while paraphrasing, which is why we provided it with the following text to see if it does what it claims.

Education is the process by which a person either acquires or delivers some knowledge to another person. It is also where someone develops essential skills to learn social norms. However, the main goal of education is to help individuals live life and contribute to society when they become older. There are multiple types of education but traditional schooling plays a key role in measuring the success of a person. Besides this, education also helps to eliminate poverty and provides people the chance to live better lives.

The output that the tool provided us can be seen in the screenshot attached below.

The paraphrased version is quite good and the tool does in fact fulfill its claims. We’d say it is a 9/10 paraphrasing tool considering it provides accurate results and is free for everyone.

AI Essay Writer

The AI Essay Writer by Summarizer.org claims that it can generate any type of essay on any given topic. It allows the users to select the type of essay that they want the tool to generate.

Some of the types of essays that the tool claims to generate are:

Basic

Descriptive

Narrative

Persuasive

Comparative

Users can also set the length of the essay they want from the tool once they’ve provided it with the title and selected the type.

For our testing, we gave it the title “The effects of global warming on the world” to generate a medium-length essay. Here’s what it came up with as a result.

The essay that the tool generated is almost 1000 words so you’re not going to see it fully in the screenshot. That being said, the output is quite good and will definitely work for you even if you’re a student.

AI Story Generator

The tool that we’d like to discuss and briefly review next is the AI Story Generator by Summarizer.org.

The tool says that it can generate a story on any given topic and in whatever genre the user chooses. Some of the genres available for story generation by the tool are:

Original

Classic

Humor

SciFi

Romance

Thriller

Horror

Realism

Besides the genre selection, users can also choose the length of the story and the creativity level that they want the tool to apply, i.e., innovative, visionary, conservative, imaginative, or inspired.

For our testing, we gave the tool the topic “Whispers of Destiny” and here’s the output generated by it.

We’d say the output is quite good and the story perfectly fits the topic. Considering this, you can use this tool without hesitation.

AI Conclusion Generator

The last tool offered by Summarizer.org that we’d like to discuss in this post is the AI Conclusion Generator. As the name suggests, it can generate a conclusion passage for any text you provide.

This can come in handy, especially for bloggers and students, as they often have to write blog posts and essays. A conclusion is an important part of both these write-ups, and if you’re a student or a blogger, you don’t have to write it manually anymore.

That being said, let us now test this tool by providing it with a blog post titled “Navigating the Ethical Landscape of Artificial Intelligence: Balancing Innovation and Responsibility”. Here’s the output that the tool provided us.

We can see from the image provided above that the tool generated a perfect conclusion for us based on the blog post we provided. This makes it a great tool and we’d give it a solid 8/10.

Now that we’ve covered all the other tools as well, this brings us to the end of our review post.

Final Verdict

And with that, our review comes to an end. In summation, here is how we can sum up Summarizer.org:

It offers fairly good tools that are free to access. The AI Summarizer does have some issues in the output but with a bit of editing, they can be resolved. The other tools are quite good and their outputs can be used without editing.

#A.I. & Neural Networks news#ai#air#alien#artificial#Artificial Intelligence#Blog#box#chatGPT#content#creativity#Editing#education#effects#eyes#Features#Food#forest#form#generative#generative ai#generator#Global#Global Warming#Google#GPT#Hallucination#hallucinations#how#human

0 notes

Note

Hey, Zooble told me you could Tell me more about Tax evasion, please teach me oh wise one

Tax evasion is the illegal act of deliberately avoiding paying taxes owed to the government. This fraudulent activity often involves misrepresenting income, inflating deductions, hiding money in offshore accounts, or failing to report cash transactions. By doing so, individuals and businesses can evade their financial obligations to the state, undermining public trust and depriving governments of necessary revenue to fund public services like healthcare, education, and infrastructure. Tax evasion can take many forms, from small-scale underreporting by individuals to large, sophisticated schemes orchestrated by corporations or wealthy entities. The consequences of tax evasion are significant, including hefty fines, legal penalties, and potential imprisonment. Moreover, it creates an unfair burden on law-abiding taxpayers, who are left to compensate for the lost revenue. Governments worldwide combat tax evasion through rigorous audits, stricter reporting requirements, and international cooperation to trace illicit financial flows. Despite these efforts, tax evasion remains a global challenge, with many offenders exploiting loopholes in tax laws and leveraging advanced technologies to obscure their activities. Addressing this issue requires a combination of legal reform, technological innovation, and public awareness to ensure fairness in taxation and maintain the integrity of economic systems. -Chat GPT

In the meantime here’s the definition of grass

Grass is a versatile and resilient plant that plays an essential role in ecosystems around the world. It belongs to the Poaceae family, one of the largest and most widespread plant families on Earth, and includes thousands of species ranging from tiny blades to towering bamboos. Grass covers vast areas, from prairies and savannas to urban lawns and sports fields, providing food, shelter, and oxygen for countless organisms. Its dense root systems stabilize soil, prevent erosion, and improve water retention, making it a vital component of healthy landscapes. In agriculture, grasses like wheat, rice, and corn are staple crops that sustain much of the world’s population, while wild grasses support grazing animals and contribute to biodiversity. Grass also holds cultural and recreational significance, serving as a space for play, gatherings, and aesthetic beauty in parks and gardens. Its ability to regenerate quickly after being cut or grazed demonstrates its remarkable adaptability. Despite its commonplace appearance, grass is a silent powerhouse of the natural world, deeply intertwined with human and environmental well-being. Now go touch some. -Chat GPT

Eat up

#the amazing digital circus#tadc#tadc jax#the amazing digital circus jax#ask blog#send asks#asks open#jax#send me asks#asks#jax=lazy#ask anon#ask answered#ask response#tumblr asks#ask anything#ask#anon ask#answered asks#ask me anything#tadc ask blog#ic post#my asks#jax amazing digital circus#jax tadc#jax the amazing digital circus#jax was here#ask jax#jax the rabbit#anon asks

11 notes

Text

baking contest w/ the avengers!!

type of writing: headcanons / scenario

word count: 1k

request: yes / no

original request: OMG CAN U PLS DO THE AVENGERS IF THEY HAD LIKE A COOKING OR BAKING CONTEST?

dynamic: avengers x teen!reader (teenage avenger series)

characters: reader, scott lang, nick fury, clint barton, harley keener, peter parker, miles morales, tony stark, pietro maximoff etc

a/n: HECK YEAH I CAN!!!! i loved this idea sm i was so excited to get this request :D i'm getting back into writing so sorry if it's a lil bad lol. also guys i'm gonna open requests again so feel free to submit!! i have a lot of muse for spiderverse stuff atm hehe so i may post again today!! tysm, hope u enjoy!!!

taglist: @shefollowedthestars @thecloudedmind @ayohitmanddaeng

(fill out this form to be on my taglist!)

-----------------------✰----------------------

so there’s this thing that the avengers do

in order to do team bonding

they’ll assign partners in the beginning of the year

& each month, a new set of partners will choose something to do

and it’s always super fun

like that’s how u ended up at the trampoline park last month

& how scott ended up with a broken arm rip king

so this month had to be something a little less dangerous

kinda funny when u think about it like it’s literally the avengers they’re in dangerous situations all the time

and while you wanted to do something different, certain ~forces~ kept preventing that

like y’all were watching a movie a couple weeks ago

and fury came on the screen

how he could hack into it idk hes nick fury dude he can do anything

but he just looked at the camera and said “no more dumbass trampoline parks”

HAH

so yeah it had to be something tame

anyway so this month was you and scott!!!!

best duo ever!!!!!!

so you had to plan what to do

& scott refused to go skydiving bc that was your first choice

smh scott it would be so fun!!!!

his arm was still broken & he said that was why he wouldn’t go but like…. scott we know ur a scaredy-cat

anyway you were trying to decide when suddenly he was like

“y/n!!!! i totally forgot! the great british baking show just premiered and i promised clint we could watch it together!”

and that gave you an idea

scott LOVED it

but y’all needed a couple things before

first of all, u needed baking supplies

when i say baking supplies i mean BAKING SUPPLIES

there’s like a thousand avengers at this point bruh :’)

scott almost got one of those instacart orders for it but u hated the thought of an instacart person getting ur crazy order

so it was store time :D

let’s just say tony’s credit card was used very well that day 😛

then it was time to pick teams

not everyone had to participate

wanda said she wanted in

so pietro joined too which was slightly concerning

the man literally burnt a bowl of cereal once

and ur probably thinking “how—”

EXACTLY

only you and harley saw it and honestly it rendered u both speechless

tony joined too

but you and scott made sure he knew that there could be NO robots

vision asked to be a judge

scott said “vis, we really appreciate that but… uh… don’t you like not eat?”

“ah! you are correct, scott. i do not consume food in the traditional way. however, given my vast knowledge & global database, i do believe that i would be a very good judge of presentation and overall ingredient chemistry.”

“alright, you do that buddy!”

also off topic but why do i just know that tony would give vision the nickname “chat gpt”

sorry i had to get that out ANYWAYY

you got a few more people to participate

sam and bucky wanted to be a team, and harley peter & miles wanted to be a team too

yknow what that was fine by you

so the day came.

you had turned one of the empty conference rooms into a crazy kitchen setup

thx party city for the confetti & balloons!!! ;)

in came your loyal hosts, scott & clint

(clint begged you and scott to let him host, he kept using a british accent until you said yes & just trust me it was good that he finally stopped)

you, natasha, and vision were the taste & presentation judges

you surveyed scott’s & your work, pretty proud of how it turned out

“ALRIGHTY THEN, READY, SET, OFF THE BLIMEY!!”

vision shot you a quizzical look, but you just shook your head.

scott & clint rly were a…. hosting duo

yep, the most… hosting duo of all time

the hostiest hosters to ever host

omg the funniest thing was that they kept eating the cookie dough from harley peter & miles’ station

they literally had to push them away

peter & miles webbed their hands shut HAHA

everyone else seemed to be doing pretty well though

aside from their usual arguing, bucky & sam seemed to actually be making something good

wanda was perfect as per usual

and pietro was zipping around the kitchen, causing tony’s flour to rise up in his face

steve came over, blowing a whistle and pointing at pietro

you and scott had enlisted him to be the referee

yes, cooking shows don’t normally have referees, but think abt the ppl we’re dealing with here 😀

anyways finally time was up!!!

but you and scott still had a trick up your sleeves.

“and now presenting our special guest judge… GIVE IT UP FOR NICK FURY!!”

yes that’s right, he had said yes to this

after you promised to finish a mission report for him

and bought him some new eyepatches

which was why he was wearing a navy blue one complete with rhinestones

pietro was up first, and he placed four slices of chocolate cake in front of all the judges.

“i gotta say p, this actually looks really good!” you spoke, and he beamed.

natasha didn’t look so sure

“as y/n says, it does look alright on the outside. however, it does seem like there’s some sort of… strange ingredient in the chemical makeup… i am going to analyze for a moment.” said vision

“aw, let’s just eat the damn thing already!” fury spoke, and so you all did.

“mm, it’s good!!” you said, and natasha nodded in agreement.

but fury did not have the same reaction.

he had stopped chewing, and his eye had narrowed. he was giving pietro a death stare.

“uhm… fury? what is … jolly wrong with you?” scott asked, his british accent wavering.

“yeah… guv’nr?” said clint.

“who the hell puts hot sauce in a damn chocolate cake. you better start runnin’ maximoff, because i’m comin’ for you!!” fury spoke, getting progressively louder.

“that one was supposed to be for y/n- i mean vision! yeah! oops. um…” pietro spoke, before disappearing from the room in a quick streak.

after that, fury left.

and that's why now cooking/baking competitions are banned on the premises of SHIELD!!

-----------------------✰----------------------

#mcu headcanons#marvel headcanons#marvel#mcu#mae's requests#avengers headcanons#bucky barnes#sam wilson#steve rogers#tony stark#pietro maximoff#scott lang#clint barton#nick fury

112 notes

Text

If you love our planet, stop using ai.

this is a topic I tiptoe around a LOT considering for a while, I used ai. I’d use c.ai, I used grammarly generative ai, and it’s important to know the environmental repercussions of ai. Especially now that America (one of the biggest producers of greenhouse gases) has pulled out of the Paris Agreement.

Rapid development and deployment of powerful generative AI models comes with environmental consequences, including increased electricity demand and water consumption. By 2026, it’s estimated that ai will use 4% of electric energy (for reference, 4% of energy powers ALL of Japan.)

In 2019, University of Massachusetts Amherst researchers trained several large language models and found that training a single AI model can emit over 626,000 pounds of CO2, equivalent to the emissions of five cars over their lifetimes.

A more recent study reported that training GPT-3 with 175 billion parameters consumed 1287 MWh of electricity, and resulted in carbon emissions of 502 metric tons, equivalent to driving 112 gasoline powered cars for a year.

The more we overshoot what natural processes can remove in a given year, the faster the atmospheric concentration of carbon dioxide rises. In the 1960s, the global growth rate of atmospheric carbon dioxide was roughly 0.8 ± 0.1 ppm per year. Over the next half century, the annual growth rate tripled, reaching 2.4 ppm per year during the 2010s. The annual rate of increase in atmospheric carbon dioxide over the past 60 years is about 100 times faster than previous natural increases, such as those that occurred at the end of the last ice age 11,000-17,000 years ago.

If you use c.ai? Go find a discord server to roleplay in, try finding a community on apps like amino.

There are other ways, we don’t have to kill our earth like this.

#ai#ai is stupid#ai is a plague#ai is dangerous#earth#pollution#stop using ai#earth is dying#most of this is a splice collage of articles because 75% of people will NOT go read what I linked.#I hope this gets my point across#we are all fucked#and we are going to die#climate crisis#climate change#climate action#please reblog#please repost#do anything#interact#blaze this#it’s important#fuck trump#trump is a threat to democracy#trump is a coward#climate justice#politics#world politics#earth day#ai will kill us#ai is bad

3 notes

Text

Acorns Investments can rescue you from a huge Government Failure

Acorns Investments (a membership — auto-trading stock market account) has been around for several years now and is showing its members the better side of investing in stocks.

Your deposits are made for you automatically whenever you purchase something using a credit card or debit card that you register with your account. Whenever you purchase something with this card, your total purchase price is rounded up to the nearest dollar. This could be as little as a few pennies or as much as 99 cents. In other words, this is money you’ll never miss. I think of it like loose change that falls out of pockets every day, or money that we waste every day on donuts or that five dollar cup of coffee just because we’re nearby.

SO — I ALWAYS ASK — where is the risk? There’s very little. AND the best part is you are making money in the stock market as it appreciates over time. YOU are NOT wasting your time watching the ups and downs of your investment portfolio due to that day’s news. Instead, artificially intelligent bots are trading your account for you, and these things can know more about the current onslaught of events than any single person, including you.

$3.00 per month is all you pay, and this automatic trading account, which deposits money that you would have wasted, can grow into the hundreds of thousands of dollars by the time you retire OR SOONER, because there are other ways of getting risk free capital into your account and you’ll learn all about it with my short video:

youtube

GIVE IT A LOOK, or GO DIRECT: Share.Acorns.com/marketingcircles SIGN UP TODAY and get a web site just like mine that will earn you even MORE!

#global warming#ai chat gpt#climate crisis#ai#best audible books#best science fiction movies#investing#free investing#stocks#stock market#stock market investing#otherpeoplesmoney#other people's money#opm#risk free investment#risk free stock market investing#guaranteed winners#best stock picks#top 10 stocks#top ten stocks#make money in the stock market#day trading ideas#stock market ideas#Youtube

0 notes