#misinformation

Text

Most people, sadly, have little to no understanding of how birth control actually works and the many things it can do for you beyond preventing pregnancy. Even growing up in a country with good sex ed, I've had to explain, time and time again, that hormonal birth control is the only reason I can function at all during my periods. If not being in excruciating pain and fainting from blood loss is 'toxic', then I'm sorry babes, I'll never get 'clean'.

this might sound stupid but I can’t help but believe that the new wave of “birth control is actually horrible for your body, you need to get off it immediately” misinformation from influencers and the ‘natural cycle tracking’ apps suddenly being advertised is a sneaky underhanded way of causing more unplanned pregnancies that people now cannot abort. now is possibly the worst time ever to turn towards ‘natural family planning’

25K notes

Text

Signal > Noise

(Bite-sized topics in media and information literacy)

Here's a great example of how amazingly sloppy professional western media has become.

This New York Magazine article came out four days ago. Check out the caption on this photo.

"Damage from Israel’s strike on Ramat Gan in Iran"

Here's the thing: Israel didn't strike Ramat Gan in Iran.

Ramat Gan is a city of ~170,000 people to the east of Tel Aviv, in Israel.

Misinformation is false or inaccurate information: getting the facts wrong.

Disinformation is false information which is deliberately intended to mislead: intentionally misstating the facts.

If this had just been posted today, I'd assume it's a mistake and call it misinformation.

After four days uncorrected, though, this stops being misinformation and becomes disinformation.

Why? After four days, they unquestionably know it's wrong...and have not bothered to correct it.

What does that tell you about New York Magazine (or parent company Vox Media)? How important are things like journalistic ethics and integrity to them?

#jumblr#israel#Journalism#New York Magazine#Iran#Ramat Gan#Sloppy Journalism#Sloppy Reporting#Misinformation#Media literacy#Information literacy#Signal > Noise#current events#News#disinformation

318 notes

Text

Donkey Kong's father isn't around because he canonically got abducted by aliens and was never seen again.

#donkey kong#misinformation#edit: man I'd love to get a fraction of the notes for this post as listeners for my music stuff

321 notes

Text

let me briefly tell you something about the psychology of misinformation:

when we hear of events, we will most likely retain the information that we are first exposed to, given that the information is (at least at the surface level) credible

from that information, we build mental models of the events to make sense of the timeline

if the mental model is incomplete, we tend to fill the gap with ANY information, whether it's something we heard, something we inferred, or something we made up. why? because we prefer a complete but incorrect model to an incomplete one

some people retain misinformation EVEN after it's been corrected. this is due to a lot of factors that i won't get into here

one of the best ways to correct misinformation is to offer a causal explanation of the event that completes the mental model

so i guess what i'm saying with all of this is: yes, we are cognitive misers and we hate thinking, but at the very least, take the time to sit with being uncomfortable for a bit UNTIL credible, real information is out.

and i guess what i'm trying to say further is, i think we as a whole, humans as a whole, need to sit down, take a deep breath and gain all the facts before we start pointing fingers and accusing people of a, b and c without definite reason/evidence.

and i guess what i'm trying to say is, in this moment with the information given and available, i think the boyz should stay as 11.

#the boyz haknyeon#the boyz#ju haknyeon#haknyeon#tbz haknyeon#tbz#the boyz ju haknyeon#misinformation#psychology#cognitive psychology

41 notes

Text

58K notes

Text

During autism awareness month, it’s important to recognize this type of misinformation propaganda. Please reblog and share to spread the word. This is complete ableism.

#autism#actually autistic#autism awareness month#misinformation#us propaganda#neurodiversity#actually neurodivergent#feel free to share/reblog#ABC News

11K notes

Text

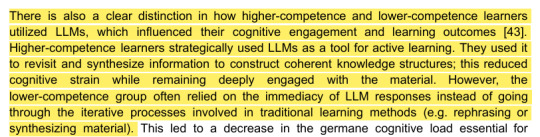

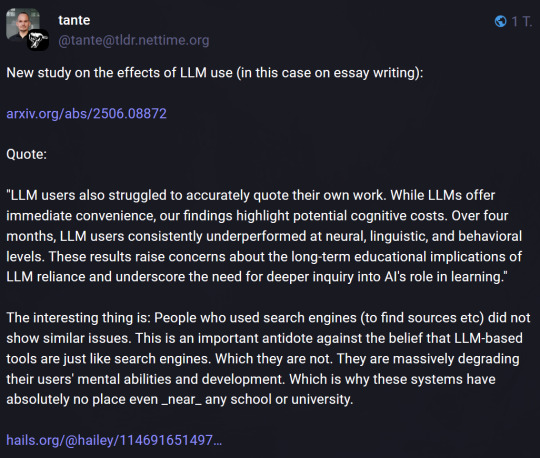

Okay. I'll preface this the same way I did on the last post linking a study that "discovered" using AI leads to cognitive atrophy:

I am an English Composition teacher at a University. I struggle regularly with my students' willingness to use AI. I am against using AI in the classroom. I have real concerns about how it affects my students' learning, and the problems widespread uncritical use of AI in academia will cause in the future.

And I'm here to very nonjudgmentally say: This study is bull shit.

They did not discover that using AI causes "cognitive atrophy."

First of all, this study isn't peer-reviewed. That alone would make it functionally meaningless at this point. Please, in the future, before you reblog a study, before you believe a study, determine whether it's peer-reviewed first. Peer-reviewed studies are not certifiably 100% true 100% of the time, but they are by far the most credible research we have. Studies that aren't peer-reviewed aren't credible at all.

Secondly, they're basing their research on this theory (which, to begin with, is only a theory, and an unfalsifiable one at that) developed by John Sweller:

John Sweller, interestingly enough, advocated for teachers to not require students to problem-solve. So, how seriously do you take this man and his research?

Next, there's the exceptionally limited research pool of 54 participants:

Can't stress enough that 54 is like, nothing.

Then, there's the problem of their methodology.

These 54 participants were separated into three groups. One was tasked with writing essays using ChatGPT only, one with using search engines only, and one with using their brain only.

The results of these three groups were compared to each other.

Let me say that again. The results of these three groups in this one-time study were compared to each other.

The question to ask here is not "What does that prove?" but "What can that prove?"

Here's what it can prove: It can prove that one group used their brains more than the other groups while writing essays.

Here's what it can't prove: It cannot prove that the participants who used AI did as poorly as they did because of "cognitive atrophy."

Something like that would require a study over time, and it would require that the participants' results are compared to their own prior results. It would require that we compare how they did doing the same tasks over time, as in asking "Did they progressively get worse?"

And for this to work (if they even attempted it), they would have to account for every plausible confounding variable, including, but not limited to, outside influences on participants' cognitive ability such as learning disability, disease, or injury. It would also mean ensuring that participants in the "AI only" group used only AI for everything that required any cognitive skill, from the moment the study began to the moment it ended. That is the only way they could prove that any data indicating the presence of "cognitive atrophy" was caused by AI.

That would be a near impossible endeavor to begin with, because we use critical thinking skills for many things besides writing essays, but it is most certainly not what they did in this study.

(While I'm here, I want to point out that what's been written by @itsalexvacca, and what's been quoted in one of the comments above, is misleading.

This quote: "Here's the terrifying part: When researchers forced ChatGPT users to write without AI, they performed worse than people who never used AI at all."

This quote does not accurately reflect the methodology used in the study. This makes it sound as if they asked people who habitually use AI and people who had never once before used AI to write without using AI. That isn't what happened. The participants selected for this study were not selected based on how much or how little AI they used prior to the study. It's possible every one of them has been habitually using AI long before the study took place, and it's also possible none of them ever used it. The study doesn't say.

What actually happened was they rotated the groups. People who were initially in the "AI only" group were eventually in the "Brain only" group. They were given the choice to continue working on the same essay they did while in the previous group, and all of them chose to do this.

And crucially, what they performed worse at was memory. Those who had previously been in the "AI only" group had difficulty recalling previous prompts and quotes.

We don't need a 2025 study to tell us that if you spend less time on something, or don't pay as much attention to it, you're not going to remember it as easily as if you had. This is not new, and it does not prove that the reason the "AI only" group didn't "perform" (remember) as well is because of "cognitive atrophy." The same exact thing would happen if you copy-and-pasted something instead of typing it out. Of course the thing you spend more time with and pay more attention to is easier to remember!

Never mind that I shouldn't have to explain to anyone that one single instance of poorly recalling something you wrote previously would not be enough to prove anything. It's anecdotal!

On top of that, this happened in session 4. Guess how many of the 54 participants were even present for session 4?

Eighteen. That is less than nothing. Absolutely nothing can be meaningfully concluded from that.)

What the study actually "proved," if they proved anything with a pool of 54 participants (and later just eighteen!), is that when these participants used AI to write an essay, they didn't use as much of their brain, and they didn't remember things as well, and they didn't appear to challenge what they read or wrote as much. They possibly didn't think as "critically" about it, based on our conception of what "critical thinking" is (itself a nebulous and subjective concept).

They argue that it "diminishes users inclination to critically evaluate the LLM's output or 'opinions.'" While I don't doubt it's possible - beyond likely, really - that when people use AI they are less inclined to critically evaluate output, the researchers are yet again not considering confounding variables beyond cognitive skill.

A lot of people who use AI aren't inclined to critically evaluate it because they are using it to begin with as a shortcut - if they were going to be critical, they wouldn't be using the AI. Now, I'm not arguing that's not a problem. Obviously, it is. What I'm arguing is that nothing about AI caused them to not think critically in that scenario.

Another reason people who use AI might not be inclined to critically evaluate it is because they erroneously believe that AI is foolproof. They have bought into AI tech bro hype and believe that anything AI produces is infallible. Again, not arguing this isn't a problem. Again, obviously it is. And again, what I'm arguing is that nothing about AI itself caused them to believe this.

In the case of this particular study, I'm guessing another reason the participants who used AI were not inclined to critically evaluate output is because they were there to get paid. They're college students who agreed to be part of a study in exchange for 100 bucks. This is added time and energy and work they don't need. What they do need is money. It could also be that they misunderstood their role as one of the "AI only" group members.

It could be so many things, but once again, the study did not prove that it is because AI itself in any way atrophied their cognitive ability. That is just not what this study proved.

There's even another problem with their interpretation of their data:

Here, they acknowledge that some of the participants in the "AI only" group actually did use critical thinking skills while they were using AI. These participants were "higher-competence learners." Nowhere in the study do they explain how they determined which participants were "higher-" or "lower-" competence learners, nor do they even explain what qualifies someone as a higher- or lower-competence learner.

But the point I'm making here is, if the data suggests that being a higher- or lower-competence learner affects their "cognitive engagement," who's to say that AI has anything to do with why some of the "AI-only" users didn't perform well on their essays?

We don't know. Because the researchers conducting this study didn't account for confounding variables, nor create and include a methodology that aimed to answer that question.

Lastly, they are supporting their claim that using AI can cause "cognitive atrophy" with their least verifiable, and most hypothetical data:

They are taking their finding that their "AI-only" participants didn't critically engage while using AI to argue that hypothetically if this continued it could lead to "cognitive debt."

Remember when I pointed out that this entire study is based on the unproven, and more importantly unfalsifiable theory of "cognitive load theory"? This theory was invented by the previously mentioned psychologist, John Sweller.

He did not invent the concept of "cognitive debt," nor is "cognitive debt" accurately defined here. From what I have found, the term "cognitive debt" was coined by John V Willshire - a "strategic designer," founder of "Smithery" and frequent writer for Medium. Whatever the fuck any of that is good for. He defines it as "forgoing the thinking in order just to get the answers, but have no real idea of why the answers are what they are." This may be a useful personal concept, but it is made up by someone with no credentials, and it is unfounded in any research. "Cognitive load theory" is at least a theory that resulted from research studies. How credible those studies are may be up for debate, but nevertheless. "Cognitive Debt," on the other hand, is a concept made up by a random guy who thinks himself a philanthropist.

And the researchers of this study are hypothesizing that continually using AI could lead to someone not using their critical thinking skills enough that it leads to "cognitive debt" whatever the fuck that means, I guess, since they aren't using the same definition the inventor is, and as far as I can tell, they provided their own definition for it - based on what research, I don't know (I'm guessing none). And this of course does not consider nor account for all the many other situations in our lives outside of writing an essay for class we use our critical thinking skills on daily.

And they are claiming that this^ conclusion is the equivalent of definitively proving that AI causes "cognitive atrophy."

It is. Absolute. Bull shit.

All this to say that "cognitive atrophy" does not exist. It is not something that has ever been scientifically proven to exist.

(Brain atrophy - a loss of neurons and connections between neurons - exists, in people with say, cerebral palsy or dementia. But AI doesn't cause brain atrophy either.)

Currently, cognitive atrophy is a concept being almost exclusively discussed in relation to AI, but it is not a new concept. Moral panic about "cognitive atrophy" comes up after every major new development in technology. It happened with the calculator. It happened with printing. It even happened with writing itself. People have been fearmongering over advancements in technology "atrophying" cognition and critical thinking for ages and it has been bogus every single time, including this time.

Using AI does not "atrophy" your cognitive thinking skills. What it does is allow you to do a task without using as much cognition as you would otherwise need to do that task, no different than a calculator. Nothing about this causes your brain to atrophy, and this study certainly didn't conclude with any findings that suggest otherwise.

When people make the argument that the brain is "like a muscle" (it's not) and that if you don't "use it, you lose it" they are right in the sense that if you don't use a skill in a long time, the next time you attempt to do this skill you will not be as good at it as you once were. This, once again, is nothing new. We did not need a 2025 study to prove this.

And when this happens, by the way, when you lose a skill you previously acquired due to lack of use, nothing actually happens to your brain. If you want, you can reacquire the skill. This is precisely why "cognitive atrophy" isn't a real thing. If your brain was actually atrophying, losing skills would mean losing actual brain function. It would mean never being able to perform those skills again. That's not what happens. But losing a skill does not mean losing brain function. It means your brain kicked something out that you weren't using anymore. That's all, and that's not new.

The last thing I will say is that moral panic over "cognitive atrophy" is literal Nazi rhetoric. It's the same argument they used to legitimize eugenics. It's the basis of "degeneracy theory." I'm prone to correcting misinformation as is, but this above all is why I take the time to so adamantly contest bunk studies like this.

Social degeneration - Wikipedia

You are not immune to confirmation bias. Those of us against AI use in education and academia have real, valid concerns about the chain reaction of harm that will follow, but we have to resist the temptation to accept every study that confirms our biases at face value.

When we do that, by the way, we are doing the exact same thing we are accusing AI users of doing: not using our critical thinking skills.

This study is bunk. So is the last study that claimed AI causes "cognitive atrophy." Every study that claims anything causes "cognitive atrophy" is going to be, because "cognitive atrophy" is a Nazi talking point used to legitimize eugenics, not a real neurological state or condition.

You don't need to fear or fight or warn against AI because of "cognitive atrophy." Focus instead on the real problems AI is capable of causing in education and academia. I, for one, am far more worried about how the existence of AI allows young students to avoid ever developing their critical thinking skills to begin with. I worry about the unprecedented amount of misinformation that is likely being spread right now, and even published in credentialed journals. I worry about how many college students are using AI to avoid truly learning the fundamentals of their disciplines, and how that will harm us in the future, particularly in medicine. I worry about how AI's existence may set a precedent for completely eliminated or negatively reformed English departments. I worry that its existence will further validate the already popular belief that reading and writing, and the skills used to read and write, aren't very important or necessary to learn. I worry about so many things that AI actually has the power to cause.

These are things that you should actually be worried about, and they are more than enough to justify your stance on AI in education and academia.

44K notes

Text

4K notes

Text

idk if this is a prevalent joke here but i keep seeing it from twitter (on discord)

4K notes

Text

You know how the word "feline" refers to cats, and "canine" refers to dogs? There are a whole bunch more animal adjectives, and here are some of them:

equine -> horses

bovine -> cows

murine -> mice/rats

porcupine -> porcupines

wolverine -> wolves

marine -> marmosets

saline -> salmonella

cosine -> cosmonauts

citrine -> citrus

combine -> combs

famine -> your fam

bromine -> your bros

palpatine -> your pals

alpine -> alps

christine -> christ

asinine -> asses

machine -> the speed of sound

landmine -> explosions

migraine -> migrants

trampoline -> tramps

dopamine -> dopes

medicine -> the Medici family

praline -> prey

masculine -> mascara

feminine -> femurs

latrine -> latissimus dorsi

fettuccine -> fetuses

poutine -> sadness

turbine -> turbans

engine -> england

supine -> soup

valentine -> valence electrons

Follow for more nature facts!

21K notes

Text

Yeah, see, doing it that way, you learn a lot about how to put together something plausible when, for one reason or another, you can't do it the right way. That's a life skill!

Equally importantly, you can only plausibly half-ass an essay like that if you learned something from all the times you whole-assed one.

Whereas if you use ChatGPT to generate something that resembles an essay, all you learn is...how to prompt ChatGPT for something that resembles an essay, and maybe--if you're an unusually dedicated plagiarist--a little bit about fact-checking and editing the output.

Those are skills, but they are not the kind of powerful and flexible skills that you can transfer and build on as you go on to more complex things. When those skills let you down--when you have a writing task that ChatGPT can't do, because it doesn't have enough examples of it in its corpus to spit out something semi-plausible--you've got nothing.

Even worse, when you encounter everyday situations that require you to think--to reason, to draw inferences, to recall relevant pieces of information and make connections among them, all that stuff--not only will you not be able to do that, but you probably won't even realize that you should.

If you managed to understand that you were missing something--like, for example, "hey, this picture of Japanese people getting on a train in old-timey clothes has probably been placed alongside this headline about ICE raids from last week for some kind of reason,"-- you could, theoretically, ask ChatGPT to speculate about what that reason might be, and it might even tell you a good answer.

But if you've spent all of your growing years outsourcing your thinking to a machine, you won't even know what to ask about.

That's what's so scary about AI. Yes, there are serious people in various fields suggesting reasonable use-cases for it, whatever low-stakes but time-consuming things come up a lot in their fields. (Professors like to mention letters of reference.) But those people are talking about things that they already know how to do.

And also that they don't care much about/have no reason to do particularly well, but you--generic high school or college student--probably also don't care much about your assignments, so that isn't terribly relevant here, but it is a key detail when it comes to real-life applications.

You can't actually skip over all the "busywork"--a term students today seem to apply to almost anything they are asked to do for a class--and then do the fun stuff. You can't watch a bunch of movies about mountain-climbing and then go straight from your couch to Kilimanjaro.

You will die.

You have to do a lot of plain old walking, then add hills and varied terrain and stuff, and probably start learning and practicing the technical stuff, with the ropes and all, in a climbing gym, then you do--I'm actually just guessing here, but I think you probably do a mountain that's mostly walking with a few technical bits?

Similarly, if you go right from having the magic machine tell you the right answer to having to make grown-up decisions like "which of these two highly calculated public personas is more likely to set our country on fire and flush it down the toilet, should they become president," you're not going to do so well.

Whenever I think about students using AI, I think about an essay I did in high school. Now see, we were reading The Grapes of Wrath, and I just couldn't do it. I got 25 pages in and my brain refused to read any more. I hated it. And it's not like I hate the classics; I loved English class and I loved reading. I had even enjoyed Of Mice and Men, which I had read for fun. For some reason, though, I absolutely could NOT read The Grapes of Wrath.

And it turned out I also couldn't watch the movie. I fell asleep in class both days we were watching it.

This, of course, meant I had to cheat on my essay.

And I got an A.

The essay was to compare the book and the movie and discuss the changes and how that affected the story.

Well it turned out Sparknotes had an entire section devoted to comparing and contrasting the book and the movie. Using that, and flipping to pages mentioned in Sparknotes to read sections of the book, I was able to bullshit an A paper.

But see, the thing is, this kind of 'cheating' still takes skills. You still learn things.

I had to know how to find the information I needed, I needed to be able to comprehend what Sparknotes was saying and the analysis they did, I needed to know how to USE the information I read there to write an essay, and I needed to know how to make sure none of it was marked as plagiarized. I had to form an opinion on the Sparknotes analysis so I could express my own opinions in the essay.

Was it cheating? Yeah, I didn't read the book or watch the movie. I used Sparknotes. It was a lot less work than if I had read the book and watched the movie and done it all myself.

The thing is, though, I still had to use my fucking brain. Being able to bullshit an essay like that is a useful skill in and of itself. I exercised important skills, and even if it wasn't the intended way, I still learned.

ChatGPT and other AI tools don't give people that experience: they do nothing and gain nothing from it.

Using AI is absolutely different from other ways students have cheated in the past, and I stand by my opinion that it's making students dumber, more helpless, and less capable.

However you feel about higher education, I think it's undeniable that students using ChatGPT is to their detriment, and by extension to the detriment of anyone they work with or anyone who has to rely on them for something.

6K notes

Text

17K notes

Text

playing science telephone

Hi folks. Let's play a fun game today called "unravelling bad science communication back to its source."

Journey with me.

Saw a comment going around on a tumblr thread that "sometimes the life expectancy of autism is cited in the 30s"

That number seemed..... strange. The commenter DID go on to say that that was "situational on people being awful and not… anything autism actually does", but you know what? Still a strange number. I feel compelled to fact check.

Quick Google "autism life expectancy" pulls up quite a few websites bandying around the number 39. Which is ~technically~ within the 30s, but already higher than the tumblr factoid would suggest. But, guess what. This number still sounds strange to me.

Most of the websites presenting this factoid present themselves as official autism resources and organizations (for parents, etc), and most of them vaguely wave towards "studies."

Ex: "Above And Beyond Therapy" has a whole article on "Does Autism Affect Life Expectancy" and states:

The link implies that it will take you to the "research studies" being referenced, but it in fact takes you to another random autism resource group called.... Songbird Care?

And on that website we find the factoid again:

Ooh, look. Now they've added the word "some". The average lifespan for SOME autistic people. Which the next group erased from the fact. The message shifts further.

And we have slightly more information about the study! (Which has also shifted from "studies" to a singular "study"). And we have another link!

Wonderfully, this link actually takes us to the actual peer-reviewed 2020 study being discussed. [x]

And here, just by reading the abstract, we find the most important information of all.

This study followed a cohort of adolescent and adult autistic people across a 20 year time period. Within that time period, 6.4% of the cohort died. Within that 6.4%, the average age of death was 39 years.

So this number is VERY MUCH not the average age of death for autistic people, or even the average age of death for the cohort of autistic people in that study. It is the average age of death IF you died young and within the 20 year period of the study (n=26), and also we don't even know the average starting age of participants without digging into earlier papers, except that it was 10 or older. (If you're curious, the researchers in the study suggested reduced self-sufficiency to be among the biggest risk factors for the early mortality group.)

But the number in the study has been removed from its context, gradually modified and spread around the web, and modified some more, until it is pretty much a nonsense number that everyone is citing from everyone else.

There ARE two other numbers that pop up semi-frequently:

One cites the life expectancy at 58. I will leave finding the context for that number as an exercise for the audience, since none of the places I saw it gave a direct citation for where they were getting it.

And then, probably the best and most relevant number floating around out there (and the least frequently cited) draws from a 2023 study of over 17,000 UK people with an autism diagnosis, across 30 years. [x] This study estimated life expectancies between 70 and 77 years, varying with sex and presence/absence of a learning disability. (As compared to the UK 80-83 average for the population as a whole.)

This is a set of numbers that makes way more sense and is backed by way better data, but isn't quite as snappy a soundbite to pass around the internet. I'm gonna pass it around anyway, because I feel bad about how many scared internet people I stumbled across while doing this search.

People on quora like "I'm autistic, can I live past 38"-- honey, YES. omg.

---

tl;dr, when someone gives you a number out of context, consider that the context is probably important

also, make an amateur fact checker's life easier and CITE YOUR SOURCES

8K notes

Text

i'm the girl who makes up all the fake folk etymologies. it's fun and easy for me. nobody pays me, i just do it for the love of the game.

#keeping it fun and funky fresh#folk etymology#here's a couple hot new ones fresh off the dome:#genitals are called yr ''loins'' bc it was a mishearing of the cockney pronounciation of ''lines'' as in ancestry/breeding#atom is actually the acronym A.T.O.M. - All Things Of Matter#discord was originally dis-chord in the musical sense - a chord that is wrong or off in some way#boom. there ya go. three beautiful lies free to go out into the world#my life goal is to achieve something as powerfully garbage as Ship High In Transit. someday......#misinformation#etymology#successful posts

6K notes

Text

adding to this:

i don't think the methodology of the paper is all that bad, i think it's fine. but the paper wants to make a big splash and puts out extremely strong statements like "cognitive atrophy", "cognitive 'deficiency'", "decrease in learning skills", and the unfortunate implications of the title referencing U.S. "War on Drugs" campaigns.

it keeps suggesting potential, hypothetical long-term decline that has not been observed, after testing this "decline" in only 9 people, over the course of four tasks, one per month. and did using LLMs make these 9 unable to use their brain afterwards? well... page 108:

The critical point of this discussion is Session 4, where participants wrote without any AI assistance after having previously used an LLM. Our findings show that Session 4's brain connectivity did not simply reset to a novice (Session 1) pattern, but it also did not reach the levels of a fully practiced Session 3 in most aspects. Instead, Session 4 tended to mirror somewhat of an intermediate state of network engagement. For example [brain connectivity] remained above the Session 1 which we consider a baseline in this context.

no! they were perfectly capable of writing this fourth, AI-less essay at a level of brain connectivity lower than their third AI essay (when they had gotten used to it), but higher than their first AI essay.

if the study proves anything, it's that AI does not make you dumber, but it makes you learn slower. which isn't a crime, a form of harm, or a social ill! there's nothing wrong with completing a task using a tool that slows your learning, it would be dangerous to suggest otherwise.

ChatGPT rotting away your brain

3K notes
