#Data Management Framework


Data Management Framework | Tejasvi Addagada

Discover how a robust data management framework can transform your business processes by ensuring data quality, regulatory compliance, and efficient data governance. This structured approach not only enhances operational efficiency but also supports informed decision-making and helps mitigate risks, allowing your organization to effectively manage and utilize data as a valuable asset in today’s competitive landscape. Consult Today.

#Data Management Framework#Tejasvi Addagada#data management#data risk management#data analysis#data storage#data governance frameworks#data protection#data governance#data management services


POLICE INTELLIGENCE IN COUNTERTERRORISM EFFORTS: AN EVALUATION OF BEST PRACTICES

1.1 Introduction. Police intelligence plays a central role in counterterrorism efforts, enabling law enforcement agencies to identify, prevent, and respond to potential terrorist threats. Effective intelligence gathering, analysis, and sharing are critical for the success of counterterrorism operations, particularly…

#• Information Sharing#Best Practices#Case Studies#Community Policing#Counterterrorism#Crisis Response#data analysis#ethical considerations#Intelligence Cycle#Interagency collaboration#Operational Coordination#Police Intelligence#POLICE INTELLIGENCE IN COUNTERTERRORISM EFFORTS: AN EVALUATION OF BEST PRACTICES#Policy frameworks#PREVENTIVE MEASURES#Public safety#RISK MANAGEMENT#Surveillance Techniques#Technology in Policing#Threat Assessment#TRAINING PROGRAMS


Achieving NIST and DORA Compliance: How We Can Help Companies Build Cybersecurity and Operational Resilience

In today’s fast-paced digital environment, cybersecurity and operational resilience are at the forefront of corporate priorities. With the increasing frequency of cyberattacks and strict regulatory requirements, companies must adapt and align with internationally recognised frameworks and regulations such as the National Institute of Standards and Technology (NIST) and the Digital Operational…

#AIO compliance solutions.#AIO Legal Services#AML compliance#business continuity#corporate governance#cyber risk mitigation#cybersecurity framework#data protection#digital security#DORA compliance#EU Regulations#GDPR compliance#ICT risk management#incident response#legal services for businesses#NIST compliance#operational resilience#regulatory compliance#risk management#third-party risk management


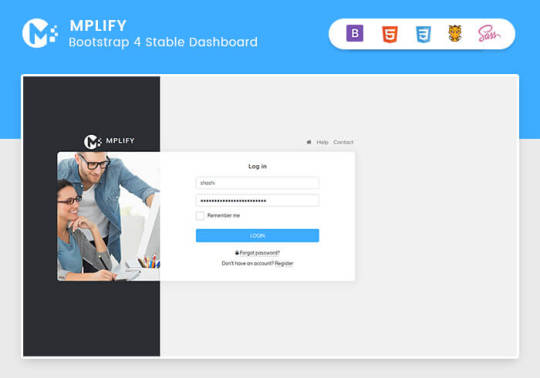

Mplify - Versatile Bootstrap 4 Admin Template by Thememakker

Mplify Admin makes the development process easy and fast for you and aims to help you implement your idea in real time.

Product Highlights

Mplify is a fully professional, responsive, modern, multi-purpose, and feature-rich admin template. It can be used to create various websites, admin templates, admin dashboards, backend websites, CMS, CRM, blogs, business websites, timelines, and portfolios. This versatility makes it an ideal choice for developers looking to build functional and aesthetically pleasing web applications efficiently.

Key Features

Bootstrap 4.3.1: Ensures compatibility and modern design standards.

jQuery 3.3.1: Offers extensive plugins and support.

Built-in SCSS: Provides more flexibility and control over styles.

Light & Dark Full Support: Allows users to switch between light and dark themes.

RTL Full Support: Right-to-left language support for global accessibility.

W3C Validate Code: Ensures high coding standards and practices.

Mobile and Tablet Friendly: Responsive design for all devices.

Treeview: For hierarchical data display.

Drag & Drop Upload: Simplifies file uploading processes.

Image Cropping: Integrated tools for editing images.

Summernote: Rich text editor integration.

Markdown: Support for markdown formatting.

Beautiful Inbox Interface: User-friendly email management.

User-Friendly Chat App: Built-in chat application.

Scrum & Kanban Taskboard: Task management tools.

Add Events to Your Calendar: Event management capabilities.

File Manager: Efficient file organization and management.

Blogging: Tools to manage and create blog posts.

Testimonials: Features to showcase user testimonials.

Maintenance: Tools to manage and schedule maintenance.

Team Board: Collaboration tools for team management.

Search Result: Enhanced search functionalities.

Beautiful Pricing: Elegant pricing tables and plans.

Contact List & Grid: Efficient contact management.

User Profile: Customizable user profiles.

Extended Forms: Advanced form functionalities.

Clean Widgets: A variety of clean, modern widgets.

Technical Specifications

Bootstrap 4.3.1

Bootstrap 4.3.1 is a powerful front-end framework for faster and easier web development. It includes HTML and CSS-based design templates for typography, forms, buttons, tables, navigation, modals, image carousels, and many other interface components, as well as optional JavaScript plugins. Mplify leverages Bootstrap 4.3.1 to ensure a consistent and responsive design across all devices.

jQuery 3.3.1

jQuery is a fast, small, and feature-rich JavaScript library. It makes HTML document traversal and manipulation, event handling, and animation much simpler through an easy-to-use API that works across a multitude of browsers. Mplify includes jQuery 3.3.1 to provide enhanced functionality and interactivity to your applications.

Built-in SCSS

SCSS is a preprocessor scripting language that is interpreted or compiled into CSS. It allows you to use variables, nested rules, mixins, inline imports, and more, all with a fully CSS-compatible syntax. With SCSS, Mplify offers a more powerful and flexible way to manage styles.

Light & Dark Full Support

Mplify comes with built-in support for light and dark themes, allowing users to switch between these modes based on their preferences. This feature enhances user experience and accessibility, especially in different lighting conditions.

RTL Full Support

For developers targeting global audiences, Mplify provides full right-to-left (RTL) language support. This feature ensures that languages like Arabic, Hebrew, and Persian are properly displayed, making the template versatile and inclusive.

W3C Validate Code

The World Wide Web Consortium (W3C) sets the standards for web development. Mplify adheres to W3C's coding standards, ensuring that the template is built with clean, valid code, which improves browser compatibility, SEO, and overall performance.

Mobile and Tablet Friendly

In today's mobile-first world, having a responsive design is crucial. Mplify is designed to be fully responsive, ensuring that your application looks great and functions seamlessly on all devices, including desktops, tablets, and smartphones.

Applications

Mplify’s versatile design and feature set make it suitable for a wide range of applications across various industries. Here are some key applications:

Admin Dashboards

Mplify provides a robust framework for building admin dashboards. With its extensive set of UI components, charts, forms, and tables, you can create comprehensive dashboards that provide valuable insights and data visualization.

CMS (Content Management Systems)

With features like blogging, file management, and user profile management, Mplify can be used to build powerful CMS platforms. Its clean widgets and beautiful interface ensure that the content management experience is both efficient and enjoyable.

CRM (Customer Relationship Management)

Mplify’s built-in tools for managing contacts, scheduling events, and maintaining communication through a chat app make it an excellent choice for developing CRM systems. These features help businesses manage customer interactions and data effectively.

Business Websites

The multi-purpose nature of Mplify allows it to be used for various business websites. Whether you need a portfolio, a blog, or a corporate website, Mplify provides the necessary tools and components to create a professional online presence.

Blogging Platforms

With integrated tools like Summernote for rich text editing and Markdown support, Mplify is ideal for creating blogging platforms. The beautiful inbox interface and testimonial features enhance the blogging experience, making it easy to manage and publish content.

Benefits

Easy and Fast Development

Mplify is designed to streamline the development process. With its pre-built components and templates, you can quickly assemble functional and aesthetically pleasing applications. This reduces development time and costs, allowing you to focus on implementing your ideas in real time.

Professional and Modern Design

Mplify offers a clean, modern design that is both professional and user-friendly. The template includes a variety of customizable widgets and components that adhere to the latest design standards, ensuring your application looks polished and up-to-date.

Comprehensive Feature Set

From task management tools like Scrum and Kanban boards to extensive form functionalities, Mplify provides a wide range of features that cater to various needs. This comprehensive feature set makes it a versatile solution for different types of projects.

Responsive and Mobile-Friendly

With Mplify, you can ensure that your application is accessible on all devices. Its responsive design adapts to different screen sizes, providing a seamless user experience on desktops, tablets, and smartphones.

Global Accessibility

Mplify’s RTL support and multi-language capabilities make it suitable for global applications. This feature ensures that your application can cater to users from different regions, enhancing its reach and usability.

Regular Updates and Support

When you purchase a license for Mplify, you receive all future updates for free. This ensures that your application remains up-to-date with the latest features and improvements. Additionally, Mplify provides excellent customer support to assist with any issues or queries.

Challenges and Limitations

Learning Curve

While Mplify is designed to be user-friendly, there may be a learning curve for beginners who are not familiar with Bootstrap or jQuery. However, comprehensive documentation and community support can help mitigate this challenge.

Customization

Although Mplify offers a wide range of customization options, extensive customization may require advanced knowledge of SCSS and JavaScript. This could be a limitation for developers who are not well-versed in these technologies.

Performance

As with any feature-rich template, there is a potential for performance issues if too many components and plugins are used simultaneously. It is important to optimize the application and selectively use features to maintain optimal performance.

Latest Innovations

Enhanced UI Components

Mplify continues to evolve with regular updates that introduce new and improved UI components. These enhancements ensure that your application remains modern and functional.

Advanced Data Visualization

Recent updates have focused on improving data visualization capabilities. With enhanced charting tools and interactive elements, Mplify allows for more dynamic and engaging data presentation.

Integration with New Technologies

Mplify is continuously updated to integrate with the latest web technologies. This ensures compatibility with new frameworks and libraries, providing developers with more tools to build advanced applications.

Future Prospects

AI and Machine Learning Integration

The future of Mplify may include integration with AI and machine learning tools. This would enable developers to build more intelligent and automated applications, enhancing user experience and functionality.

Expanded Plugin Support

As the web development landscape evolves, Mplify is likely to expand its plugin support. This will provide developers with more options for extending the functionality of their applications.

Improved Accessibility Features

Mplify is expected to continue enhancing its accessibility features. This includes better support for assistive technologies and compliance with accessibility standards, ensuring that applications built with Mplify are inclusive for all users.

Comparative Analysis

Versus Other Bootstrap Templates

When compared to other Bootstrap templates, Mplify stands out due to its comprehensive feature set, modern design, and extensive customization options. While other templates may offer similar components, Mplify's unique features like RTL support and advanced task management tools give it an edge.

Versus Custom Development

Opting for a pre-built template like Mplify can significantly reduce development time and costs compared to custom web development. While custom development offers more flexibility, Mplify provides a robust foundation that can be easily customized to meet specific needs.

User Guides and Tutorials

Getting Started with Mplify

Installation: Download and install Mplify from the official website or marketplace.

Configuration: Configure the template settings according to your project requirements.

Customization: Use the built-in SCSS files to customize the styles and appearance.

Integration: Integrate Mplify with your backend systems and databases.

Deployment: Deploy your application on your preferred hosting platform.

Advanced Customization Techniques

SCSS Variables: Use SCSS variables to easily change colors, fonts, and other styles.

JavaScript Customization: Extend the functionality by adding custom JavaScript code.

Component Modification: Modify existing components or create new ones to meet specific needs.

Performance Optimization: Optimize the performance by minifying CSS and JavaScript files, and selectively loading components.

Conclusion

Mplify is a powerful, versatile, and user-friendly Bootstrap 4 admin dashboard template. It offers a wide range of features and customization options, making it suitable for various applications, from admin dashboards to business websites. With its modern design, responsive layout, and extensive documentation, Mplify simplifies the development process, allowing developers to implement their ideas in real time efficiently.

Whether you are building a CMS, CRM, or a personal blog, Mplify provides the tools and flexibility needed to create a professional and functional web application. Its ongoing updates and support ensure that your projects remain current and compatible with the latest web technologies.

#Mplify Admin#Bootstrap 4.3.1#jQuery 3.3.1#SCSS#Light & Dark Theme#RTL Support#W3C Validation#Responsive Design#Admin Template#Admin Dashboard#CMS#CRM#Blogging#Business Websites#Web Development#UI Components#Data Visualization#AI Integration#Machine Learning#Accessibility Features#Task Management#File Management#Rich Text Editor#Markdown Support#User Profiles#Performance Optimization#Web Technologies#Custom Development#Web Application Development#Front-end Framework


Optimize LLM with DSPy : A Step-by-Step Guide to build, optimize, and evaluate AI systems

New Post has been published on https://thedigitalinsider.com/optimize-llm-with-dspy-a-step-by-step-guide-to-build-optimize-and-evaluate-ai-systems/


As the capabilities of large language models (LLMs) continue to expand, developing robust AI systems that leverage their potential has become increasingly complex. Conventional approaches often involve intricate prompting techniques, data generation for fine-tuning, and manual guidance to ensure adherence to domain-specific constraints. However, this process can be tedious, error-prone, and heavily reliant on human intervention.

Enter DSPy, a revolutionary framework designed to streamline the development of AI systems powered by LLMs. DSPy introduces a systematic approach to optimizing LM prompts and weights, enabling developers to build sophisticated applications with minimal manual effort.

In this comprehensive guide, we’ll explore the core principles of DSPy, its modular architecture, and the array of powerful features it offers. We’ll also dive into practical examples, demonstrating how DSPy can transform the way you develop AI systems with LLMs.

What is DSPy, and Why Do You Need It?

DSPy is a framework that separates the flow of your program (modules) from the parameters (LM prompts and weights) of each step. This separation allows for the systematic optimization of LM prompts and weights, enabling you to build complex AI systems with greater reliability, predictability, and adherence to domain-specific constraints.

Traditionally, developing AI systems with LLMs involved a laborious process of breaking down the problem into steps, crafting intricate prompts for each step, generating synthetic examples for fine-tuning, and manually guiding the LMs to adhere to specific constraints. This approach was not only time-consuming but also prone to errors, as even minor changes to the pipeline, LM, or data could necessitate extensive rework of prompts and fine-tuning steps.

DSPy addresses these challenges by introducing a new paradigm: optimizers. These LM-driven algorithms can tune the prompts and weights of your LM calls, given a metric you want to maximize. By automating the optimization process, DSPy empowers developers to build robust AI systems with minimal manual intervention, enhancing the reliability and predictability of LM outputs.

DSPy’s Modular Architecture

At the heart of DSPy lies a modular architecture that facilitates the composition of complex AI systems. The framework provides a set of built-in modules that abstract various prompting techniques, such as dspy.ChainOfThought and dspy.ReAct. These modules can be combined and composed into larger programs, allowing developers to build intricate pipelines tailored to their specific requirements.

Each module encapsulates learnable parameters, including the instructions, few-shot examples, and LM weights. When a module is invoked, DSPy’s optimizers can fine-tune these parameters to maximize the desired metric, ensuring that the LM’s outputs adhere to the specified constraints and requirements.
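The split between fixed program flow and learnable parameters can be illustrated with a small plain-Python sketch. It does not use DSPy; `PromptParams` and `render_prompt` are hypothetical names introduced only for illustration, and the prompt layout is an assumption.

```python
from dataclasses import dataclass, field


@dataclass
class PromptParams:
    """Hypothetical container for the learnable parts of one step."""
    instructions: str
    demos: list = field(default_factory=list)  # (question, answer) pairs


def render_prompt(params, question):
    """Fixed program flow: the way the prompt is assembled never changes."""
    lines = [params.instructions, ""]
    for demo_question, demo_answer in params.demos:
        lines += [f"Question: {demo_question}", f"Answer: {demo_answer}", ""]
    lines += [f"Question: {question}", "Answer:"]
    return "\n".join(lines)


# An optimizer would only swap the parameters, not the rendering code.
baseline = PromptParams("Answer briefly.")
tuned = PromptParams("Answer with a short factoid.", [("What is 2 + 2?", "4")])
print(render_prompt(tuned, "What is the capital of France?"))
```

Only the `PromptParams` values differ between `baseline` and `tuned`; the code that describes the step stays the same, which is the separation the framework relies on.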

Optimizing with DSPy

DSPy introduces a range of powerful optimizers designed to enhance the performance and reliability of your AI systems. These optimizers leverage LM-driven algorithms to tune the prompts and weights of your LM calls, maximizing the specified metric while adhering to domain-specific constraints.

Some of the key optimizers available in DSPy include:

BootstrapFewShot: This optimizer extends the signature by automatically generating and including optimized examples within the prompt sent to the model, implementing few-shot learning.

BootstrapFewShotWithRandomSearch: Applies BootstrapFewShot several times with random search over generated demonstrations, selecting the best program over the optimization.

MIPRO: Generates instructions and few-shot examples in each step, with the instruction generation being data-aware and demonstration-aware. It uses Bayesian Optimization to effectively search over the space of generation instructions and demonstrations across your modules.

BootstrapFinetune: Distills a prompt-based DSPy program into weight updates for smaller LMs, allowing you to fine-tune the underlying LLM(s) for enhanced efficiency.

By leveraging these optimizers, developers can systematically optimize their AI systems, ensuring high-quality outputs while adhering to domain-specific constraints and requirements.
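The bootstrapping idea behind few-shot optimizers can be sketched in plain Python: run the program on training examples and keep only the traces that pass the metric as demonstrations. This is a simplified illustration under stated assumptions, not DSPy's implementation; `bootstrap_demos`, `toy_program`, and `exact_match` are hypothetical names.

```python
def bootstrap_demos(program, trainset, metric, max_demos=4):
    """Keep training examples whose prediction passes the metric."""
    demos = []
    for example in trainset:
        prediction = program(example["question"])
        if metric(example, prediction):
            demos.append({"question": example["question"], "answer": prediction})
        if len(demos) >= max_demos:
            break
    return demos


def toy_program(question):
    # Stand-in for an LM call: it only knows one fact.
    return "Paris" if "France" in question else "unknown"


def exact_match(example, prediction):
    return example["answer"].strip().lower() == prediction.strip().lower()


trainset = [
    {"question": "Capital of France?", "answer": "Paris"},
    {"question": "Capital of Peru?", "answer": "Lima"},
]
demos = bootstrap_demos(toy_program, trainset, exact_match)
print(demos)
```

Only the example the toy program answers correctly survives, so the resulting demonstrations are ones the metric has already validated.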

Getting Started with DSPy

To illustrate the power of DSPy, let’s walk through a practical example of building a retrieval-augmented generation (RAG) system for question-answering.

Step 1: Setting up the Language Model and Retrieval Model

The first step involves configuring the language model (LM) and retrieval model (RM) within DSPy.

To install DSPy run:

    pip install dspy-ai

DSPy supports multiple LM and RM APIs, as well as local model hosting, making it easy to integrate your preferred models.

    import dspy

    # Configure the LM and RM
    turbo = dspy.OpenAI(model='gpt-3.5-turbo')
    colbertv2_wiki17_abstracts = dspy.ColBERTv2(url='http://20.102.90.50:2017/wiki17_abstracts')
    dspy.settings.configure(lm=turbo, rm=colbertv2_wiki17_abstracts)

Step 2: Loading the Dataset

Next, we’ll load the HotPotQA dataset, which contains a collection of complex question-answer pairs typically answered in a multi-hop fashion.

    from dspy.datasets import HotPotQA

    # Load the dataset
    dataset = HotPotQA(train_seed=1, train_size=20, eval_seed=2023, dev_size=50, test_size=0)

    # Specify the 'question' field as the input
    trainset = [x.with_inputs('question') for x in dataset.train]
    devset = [x.with_inputs('question') for x in dataset.dev]

Step 3: Building Signatures

DSPy uses signatures to define the behavior of modules. In this example, we’ll define a signature for the answer generation task, specifying the input fields (context and question) and the output field (answer).

    class GenerateAnswer(dspy.Signature):
        """Answer questions with short factoid answers."""

        context = dspy.InputField(desc="may contain relevant facts")
        question = dspy.InputField()
        answer = dspy.OutputField(desc="often between 1 and 5 words")

Step 4: Building the Pipeline

We’ll build our RAG pipeline as a DSPy module, which consists of an initialization method (__init__) to declare the sub-modules (dspy.Retrieve and dspy.ChainOfThought) and a forward method (forward) to describe the control flow of answering the question using these modules.

    class RAG(dspy.Module):
        def __init__(self, num_passages=3):
            super().__init__()
            self.retrieve = dspy.Retrieve(k=num_passages)
            self.generate_answer = dspy.ChainOfThought(GenerateAnswer)

        def forward(self, question):
            context = self.retrieve(question).passages
            prediction = self.generate_answer(context=context, question=question)
            return dspy.Prediction(context=context, answer=prediction.answer)
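The retrieve-then-generate control flow can also be seen without any LM or retrieval server. The sketch below is plain Python and makes two loud assumptions: retrieval is a naive word-overlap ranking, and "generation" simply returns the top passage. All function names are hypothetical.

```python
def retrieve(question, corpus, k=3):
    """Rank passages by the number of words they share with the question."""
    question_words = set(question.lower().split())
    ranked = sorted(
        corpus,
        key=lambda passage: len(question_words & set(passage.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def generate_answer(context, question):
    # Stand-in for an LM call: echo the best-ranked passage.
    return context[0] if context else ""


def rag(question, corpus, k=3):
    """Same two-step flow as the pipeline: retrieve, then generate."""
    context = retrieve(question, corpus, k)
    return {"context": context, "answer": generate_answer(context, question)}


corpus = [
    "the sky is blue",
    "grass is green",
    "paris is the capital of france",
]
result = rag("what is the capital of france", corpus, k=2)
print(result["answer"])
```

The structure mirrors the module: one step produces context, the next consumes it, and the result carries both the context and the answer.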

Step 5: Optimizing the Pipeline

With the pipeline defined, we can now optimize it using DSPy’s optimizers. In this example, we’ll use the BootstrapFewShot optimizer, which generates and selects effective prompts for our modules based on a training set and a metric for validation.

    from dspy.teleprompt import BootstrapFewShot

    # Validation metric
    def validate_context_and_answer(example, pred, trace=None):
        answer_EM = dspy.evaluate.answer_exact_match(example, pred)
        answer_PM = dspy.evaluate.answer_passage_match(example, pred)
        return answer_EM and answer_PM

    # Set up the optimizer
    teleprompter = BootstrapFewShot(metric=validate_context_and_answer)

    # Compile the program
    compiled_rag = teleprompter.compile(RAG(), trainset=trainset)
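To make the metric concrete, here is a rough plain-Python approximation of what an exact-match check combined with a passage-match check might do. The normalization rules (lowercasing, stripping punctuation and English articles) are an assumption; DSPy's own helpers may normalize differently, and the function names below are hypothetical.

```python
import re
import string


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(gold, predicted):
    """True when the answers agree after normalization."""
    return normalize(gold) == normalize(predicted)


def passage_match(gold, passages):
    """True when the gold answer appears in at least one retrieved passage."""
    return any(normalize(gold) in normalize(passage) for passage in passages)


def validate(gold, predicted, passages):
    """Require both a correct answer and supporting retrieved context."""
    return exact_match(gold, predicted) and passage_match(gold, passages)


print(validate("Paris", "paris", ["Paris is in France."]))
```

Requiring both checks means a demonstration is kept only when the answer is right and the retrieved context actually contains it.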

Step 6: Evaluating the Pipeline

After compiling the program, it is essential to evaluate its performance on a development set to ensure it meets the desired accuracy and reliability.

    from dspy.evaluate import Evaluate

    # Set up the evaluator
    evaluate = Evaluate(devset=devset, metric=validate_context_and_answer, num_threads=4, display_progress=True, display_table=0)

    # Evaluate the compiled RAG program
    evaluation_result = evaluate(compiled_rag)
    print(f"Evaluation Result: {evaluation_result}")
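Conceptually, evaluation is a loop that applies the metric to each development example and aggregates the outcomes. The sketch below is a single-threaded plain-Python illustration; `evaluate_program` is a hypothetical name, and reporting the score as a percentage is an assumption.

```python
def evaluate_program(program, devset, metric):
    """Return the percentage of dev examples on which the metric passes."""
    if not devset:
        return 0.0
    passed = sum(
        bool(metric(example, program(example["question"]))) for example in devset
    )
    return 100.0 * passed / len(devset)


# Toy program and metric, used only to exercise the loop.
toy_devset = [
    {"question": "a", "answer": "A"},
    {"question": "b", "answer": "B"},
    {"question": "c", "answer": "x"},
    {"question": "d", "answer": "D"},
]
score = evaluate_program(
    lambda question: question.upper(),
    toy_devset,
    lambda example, prediction: example["answer"] == prediction,
)
print(score)
```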

Step 7: Inspecting Model History

For a deeper understanding of the model’s interactions, you can review the most recent generations by inspecting the model’s history.

    # Inspect the model's history
    turbo.inspect_history(n=1)

Step 8: Making Predictions

With the pipeline optimized and evaluated, you can now use it to make predictions on new questions.

    # Example question
    question = "Which award did Gary Zukav's first book receive?"

    # Make a prediction using the compiled RAG program
    prediction = compiled_rag(question)

    print(f"Question: {question}")
    print(f"Answer: {prediction.answer}")
    print(f"Retrieved Contexts: {prediction.context}")

Minimal Working Example with DSPy

Now, let’s walk through another minimal working example using the GSM8K dataset and the OpenAI GPT-3.5-turbo model to simulate prompting tasks within DSPy.

Setup

First, ensure your environment is properly configured:

    import dspy
    from dspy.datasets.gsm8k import GSM8K, gsm8k_metric

    # Set up the LM
    turbo = dspy.OpenAI(model='gpt-3.5-turbo-instruct', max_tokens=250)
    dspy.settings.configure(lm=turbo)

    # Load math questions from the GSM8K dataset
    gsm8k = GSM8K()
    gsm8k_trainset, gsm8k_devset = gsm8k.train[:10], gsm8k.dev[:10]
    print(gsm8k_trainset)

The gsm8k_trainset and gsm8k_devset variables each hold a list of examples, and each example has a question field and an answer field.

Define the Module

Next, define a custom program utilizing the ChainOfThought module for step-by-step reasoning:

    class CoT(dspy.Module):
        def __init__(self):
            super().__init__()
            self.prog = dspy.ChainOfThought("question -> answer")

        def forward(self, question):
            return self.prog(question=question)

Compile and Evaluate the Model

Now compile it with the BootstrapFewShot teleprompter:

    from dspy.teleprompt import BootstrapFewShot

    # Set up the optimizer
    config = dict(max_bootstrapped_demos=4, max_labeled_demos=4)

    # Optimize using the gsm8k_metric
    teleprompter = BootstrapFewShot(metric=gsm8k_metric, **config)
    optimized_cot = teleprompter.compile(CoT(), trainset=gsm8k_trainset)

    # Set up the evaluator
    from dspy.evaluate import Evaluate

    evaluate = Evaluate(devset=gsm8k_devset, metric=gsm8k_metric, num_threads=4, display_progress=True, display_table=0)
    evaluate(optimized_cot)

    # Inspect the model's history
    turbo.inspect_history(n=1)

This example demonstrates how to set up your environment, define a custom module, compile a model, and rigorously evaluate its performance using the provided dataset and teleprompter configurations.

Data Management in DSPy

DSPy operates with training, development, and test sets. For each example in your data, you typically have three types of values: inputs, intermediate labels, and final labels. While intermediate or final labels are optional, having a few example inputs is essential.

Creating Example Objects

Example objects in DSPy are similar to Python dictionaries but come with useful utilities:

    qa_pair = dspy.Example(question="This is a question?", answer="This is an answer.")

    print(qa_pair)
    print(qa_pair.question)
    print(qa_pair.answer)

Output:

    Example({'question': 'This is a question?', 'answer': 'This is an answer.'}) (input_keys=None)
    This is a question?
    This is an answer.

Specifying Input Keys

In DSPy, Example objects have a with_inputs() method to mark specific fields as inputs:

    print(qa_pair.with_inputs("question"))
    print(qa_pair.with_inputs("question", "answer"))

Values can be accessed using the dot operator, and methods like inputs() and labels() return new Example objects containing only input or non-input keys, respectively.
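The input/label split can be mimicked with a tiny stand-in class. `MiniExample` below is hypothetical and written only to illustrate the behavior described above; it is not DSPy's `Example`, and `to_dict` is an extra helper added for printing.

```python
class MiniExample:
    """Hypothetical stand-in for a record whose fields can be marked as inputs."""

    def __init__(self, input_keys=None, **fields):
        self._fields = dict(fields)
        self._input_keys = None if input_keys is None else set(input_keys)

    def __getattr__(self, name):
        # Only called when normal lookup fails, so stored fields become
        # reachable through the dot operator.
        fields = self.__dict__.get("_fields", {})
        if name in fields:
            return fields[name]
        raise AttributeError(name)

    def with_inputs(self, *keys):
        """Return a copy with the given field names marked as inputs."""
        return MiniExample(input_keys=keys, **self._fields)

    def inputs(self):
        """Return a new record holding only the input fields."""
        if self._input_keys is None:
            raise ValueError("call with_inputs() first")
        kept = {k: v for k, v in self._fields.items() if k in self._input_keys}
        return MiniExample(input_keys=self._input_keys, **kept)

    def labels(self):
        """Return a new record holding only the non-input fields."""
        input_keys = self._input_keys or set()
        kept = {k: v for k, v in self._fields.items() if k not in input_keys}
        return MiniExample(**kept)

    def to_dict(self):
        return dict(self._fields)


qa = MiniExample(question="This is a question?", answer="This is an answer.")
qa = qa.with_inputs("question")
print(qa.inputs().to_dict())
print(qa.labels().to_dict())
```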

Optimizers in DSPy

A DSPy optimizer tunes the parameters of a DSPy program (i.e., prompts and/or LM weights) to maximize specified metrics. DSPy offers various built-in optimizers, each employing different strategies.

Available Optimizers

BootstrapFewShot: Generates few-shot examples using provided labeled input and output data points.

BootstrapFewShotWithRandomSearch: Applies BootstrapFewShot multiple times with random search over generated demonstrations.

COPRO: Generates and refines new instructions for each step, optimizing them with coordinate ascent.

MIPRO: Optimizes instructions and few-shot examples using Bayesian Optimization.
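The random-search variant can be pictured as repeatedly sampling a subset of candidate demonstrations, scoring the resulting program, and keeping the best subset. The following plain-Python sketch assumes a caller-supplied `score_fn` that stands in for running the program on a validation set; it is an illustration of the idea, not the library's algorithm, and all names are hypothetical.

```python
import random


def random_search(candidate_demos, score_fn, num_candidates=10, max_demos=2, seed=0):
    """Sample demo subsets and keep the one with the highest score."""
    rng = random.Random(seed)
    best_demos, best_score = [], score_fn([])
    if not candidate_demos:
        return best_demos, best_score
    for _ in range(num_candidates):
        size = rng.randint(1, min(max_demos, len(candidate_demos)))
        demos = rng.sample(candidate_demos, size)
        score = score_fn(demos)
        if score > best_score:
            best_demos, best_score = demos, score
    return best_demos, best_score


# Toy scoring: pretend each demo contributes a fixed amount of quality.
candidates = [
    {"question": "q1", "quality": 1},
    {"question": "q2", "quality": 3},
    {"question": "q3", "quality": 2},
]


def toy_score(demos):
    return sum(demo["quality"] for demo in demos)


best_demos, best_score = random_search(candidates, toy_score)
print(best_score, [demo["question"] for demo in best_demos])
```

In a real setting the score would come from evaluating the candidate program with a metric, which is why each extra candidate costs additional LM calls.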

Choosing an Optimizer

If you’re unsure where to start, use BootstrapFewShotWithRandomSearch:

For very little data (about 10 examples), use BootstrapFewShot.

For slightly more data (about 50 examples), use BootstrapFewShotWithRandomSearch.

For larger datasets (300 or more examples), use MIPRO.
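This rule of thumb can be written as a small helper. The function name is hypothetical, and the exact cut-offs between the sizes quoted above (what to do with, say, 30 or 150 examples) are an assumption.

```python
def suggest_optimizer(num_examples):
    """Map a training-set size to the optimizer suggested by the rule of thumb."""
    if num_examples >= 300:
        return "MIPRO"
    if num_examples >= 50:
        return "BootstrapFewShotWithRandomSearch"
    return "BootstrapFewShot"


for size in (10, 50, 300):
    print(size, suggest_optimizer(size))
```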

Here’s how to use BootstrapFewShotWithRandomSearch:

    from dspy.teleprompt import BootstrapFewShotWithRandomSearch

    config = dict(max_bootstrapped_demos=4, max_labeled_demos=4, num_candidate_programs=10, num_threads=4)

    teleprompter = BootstrapFewShotWithRandomSearch(metric=YOUR_METRIC_HERE, **config)
    optimized_program = teleprompter.compile(YOUR_PROGRAM_HERE, trainset=YOUR_TRAINSET_HERE)

Saving and Loading Optimized Programs

After running a program through an optimizer, save it for future use:

    optimized_program.save(YOUR_SAVE_PATH)

Load a saved program:

    loaded_program = YOUR_PROGRAM_CLASS()
    loaded_program.load(path=YOUR_SAVE_PATH)

Advanced Features: DSPy Assertions

DSPy Assertions automate the enforcement of computational constraints on LMs, enhancing the reliability, predictability, and correctness of LM outputs.

Using Assertions

Define validation functions and declare assertions following the respective model generation. For example:

    dspy.Suggest(
        len(query) <= 100,
        "Query should be short and less than 100 characters",
    )

    dspy.Suggest(
        validate_query_distinction_local(prev_queries, query),
        "Query should be distinct from: "
        + "; ".join(f"{i+1}) {q}" for i, q in enumerate(prev_queries)),
    )

Transforming Programs with Assertions

    from dspy.primitives.assertions import assert_transform_module, backtrack_handler

    baleen_with_assertions = assert_transform_module(SimplifiedBaleenAssertions(), backtrack_handler)

Alternatively, activate assertions directly on the program:

    baleen_with_assertions = SimplifiedBaleenAssertions().activate_assertions()
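The retry-with-feedback behavior that a soft constraint implies can be sketched in plain Python. This is a simplified model under stated assumptions: the generator receives the failed constraints' messages as feedback, the retry count is arbitrary, and the last output is returned even if constraints still fail. `generate_with_suggestions` and `toy_generate` are hypothetical names, not part of DSPy.

```python
def generate_with_suggestions(generate, checks, max_retries=2):
    """Regenerate with feedback while any soft constraint fails."""
    output = generate([])
    for _ in range(max_retries):
        feedback = [message for check, message in checks if not check(output)]
        if not feedback:
            return output, []
        # Retry, passing the violated constraints back to the generator.
        output = generate(feedback)
    remaining = [message for check, message in checks if not check(output)]
    return output, remaining


def toy_generate(feedback):
    # Stand-in for an LM: produces a shorter query once it sees feedback.
    return "short query" if feedback else "x" * 150


checks = [
    (lambda query: len(query) <= 100,
     "Query should be short and less than 100 characters"),
]
query, unmet = generate_with_suggestions(toy_generate, checks)
print(query, unmet)
```

Returning the unmet messages rather than raising keeps the constraint advisory, which is the difference between a suggestion and a hard assertion in this sketch.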

Assertion-Driven Optimizations

DSPy Assertions work with DSPy optimizations, particularly with BootstrapFewShotWithRandomSearch, including settings like:

Compilation with Assertions

Compilation + Inference with Assertions

Conclusion

DSPy offers a powerful and systematic approach to optimizing language models and their prompts. By following the steps outlined in these examples, you can build, optimize, and evaluate complex AI systems with ease. DSPy’s modular design and advanced optimizers allow for efficient and effective integration of various language models, making it a valuable tool for anyone working in the field of NLP and AI.

Whether you’re building a simple question-answering system or a more complex pipeline, DSPy provides the flexibility and robustness needed to achieve high performance and reliability.

#2023#250#ai#AI systems#Algorithms#amp#APIs#applications#approach#architecture#Behavior#book#Building#Composition#comprehensive#data#Data Management#datasets#Design#developers#development#DSPy#easy#efficiency#Environment#Facts#fashion#Features#framework#Future


#AI chatbot#AI ethics specialist#AI jobs#Ai Jobsbuster#AI landscape 2024#AI product manager#AI research scientist#AI software products#AI tools#Artificial Intelligence (AI)#BERT#Clarifai#computational graph#Computer Vision API#Content creation#Cortana#creativity#CRM platform#cybersecurity analyst#data scientist#deep learning#deep-learning framework#DeepMind#designing#distributed computing#efficiency#emotional analysis#Facebook AI research lab#game-playing#Google Duplex


Latest AI Regulatory Developments:

As artificial intelligence (AI) continues to transform industries, governments worldwide are responding with evolving regulatory frameworks. These regulatory advancements are shaping how businesses integrate and leverage AI technologies. Understanding these changes and preparing for them is crucial to remain compliant and competitive. Recent Developments in AI Regulation: United Kingdom: The…


#AI#AI compliance#AI data governance#AI democratic values#AI enforcement#AI ethics#AI for humanity#AI global norms#AI human rights#AI industry standards#AI innovation#AI legislation#AI penalties#AI principles#AI regulation#AI regulatory framework#AI risk classes#AI risk management#AI safety#AI Safety Summit 2023#AI sector-specific guidance#AI transparency requirements#artificial intelligence#artificial intelligence developments#Bletchley Declaration#ChatGPT#China generative AI regulation#Department for Science Innovation and Technology#EU Artificial Intelligence Act#G7 Hiroshima AI Process

1 note


Text

Book of the Day - Good to Great

Today’s Book of the Day is Good to Great, written by Jim Collins in 2001 and published by Harper Business. Jim Collins is an American researcher, author, speaker and consultant. His work is mainly focused on business management, sustainability, and growth. I have chosen this book as I put it into a bibliography of a presentation I have been preparing this…


#Book Of The Day#book recommendation#book review#Business#clarity#Coaching#consulting#corporations#culture of excellence#Data-Driven Approach#excellence#Framework#Grow#Growth#Leader#leadership#long-lasting success#Management#Raffaello Palandri#success#sustainable success

0 notes

Text

Tech Breakdown: What Is a SuperNIC? Get the Inside Scoop!

The most recent development in the rapidly evolving digital realm is generative AI. The SuperNIC, a relatively new term, names one of the innovations that make it feasible.

Describe a SuperNIC

SuperNICs are a new family of network accelerators created to speed up hyperscale AI workloads on Ethernet-based clouds. Using remote direct memory access (RDMA) over converged Ethernet (RoCE) technology, a SuperNIC offers extremely fast network connectivity for GPU-to-GPU communication, with throughput of up to 400Gb/s.

SuperNICs incorporate the following special qualities:

High-speed packet reordering, which ensures that data packets are received and processed in the same order in which they were originally sent. This keeps the data flow’s sequential integrity intact.

In order to regulate and prevent congestion in AI networks, advanced congestion management uses network-aware algorithms and real-time telemetry data.

In AI cloud data centers, programmable computation on the input/output (I/O) channel facilitates network architecture adaptation and extension.

Low-profile, power-efficient architecture that effectively handles AI workloads under power-constrained budgets.

Optimization for full-stack AI, encompassing system software, communication libraries, application frameworks, networking, computing, and storage.
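To make the first item in the list above concrete, here is a minimal Python sketch of in-order delivery. It is a software illustration of the idea only, not a description of how the BlueField-3 hardware works; the sequence numbers, the `reorder` function, and the sample packets are all assumptions made for the example.

```python
from typing import Dict, Iterable, Iterator, Tuple


def reorder(packets: Iterable[Tuple[int, str]], first_seq: int = 0) -> Iterator[str]:
    """Yield payloads in sequence order, buffering any that arrive early."""
    pending: Dict[int, str] = {}  # early arrivals, keyed by sequence number
    next_seq = first_seq          # next sequence number that may be released
    for seq, payload in packets:
        if seq < next_seq or seq in pending:
            continue              # duplicate or stale packet: drop it
        pending[seq] = payload
        # Release every packet that is now contiguous with what was delivered.
        while next_seq in pending:
            yield pending.pop(next_seq)
            next_seq += 1


# Hypothetical arrival order: packets 1 and 3 arrive early, packet 2 is duplicated.
arrivals = [(1, "B"), (0, "A"), (3, "D"), (2, "C"), (2, "C")]
in_order = list(reorder(arrivals))
print(in_order)
```

The receiver holds early packets in a small buffer and releases them only once the gap before them has been filled, so the application always sees the original order.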

Recently, NVIDIA revealed the first SuperNIC in the world designed specifically for AI computing, built on the BlueField-3 networking architecture. It is a component of the NVIDIA Spectrum-X platform, which allows for smooth integration with the Ethernet switch system Spectrum-4.

The NVIDIA Spectrum-4 switch system and BlueField-3 SuperNIC work together to provide an accelerated computing fabric that is optimized for AI applications. Spectrum-X outperforms conventional Ethernet settings by continuously delivering high levels of network efficiency.

Yael Shenhav, vice president of DPU and NIC products at NVIDIA, stated, “In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery.” “SuperNICs are essential components for enabling the future of AI computing because they guarantee that your AI workloads are executed with efficiency and speed.”

The Changing Environment of Networking and AI

Large language models and generative AI are causing a seismic change in the area of artificial intelligence. These potent technologies have opened up new avenues and made it possible for computers to perform new functions.

GPU-accelerated computing plays a critical role in the development of AI by processing massive amounts of data, training huge AI models, and enabling real-time inference. While this increased computing capacity has created opportunities, Ethernet cloud networks have also been put to the test.

Traditional Ethernet, the technology that underpins the internet, was designed to link loosely coupled applications and provide broad compatibility. It was not designed for the demanding computational requirements of contemporary AI workloads, which involve rapid transfer of large amounts of data, tightly coupled parallel processing, and unusual communication patterns, all of which call for optimized network connectivity.

Basic network interface cards (NICs) were created with interoperability, universal data transfer, and general-purpose computing in mind. They were never intended to handle the special difficulties brought on by the high processing demands of AI applications.

The necessary characteristics and capabilities for effective data transmission, low latency, and the predictable performance required for AI activities are absent from standard NICs. In contrast, SuperNICs are designed specifically for contemporary AI workloads.

Benefits of SuperNICs in AI Computing Environments

Data processing units (DPUs) offer high-throughput, low-latency network connectivity along with many other advanced features. Since their introduction in 2020, DPUs have become increasingly common in cloud computing, mostly because of their ability to offload, accelerate, and isolate data center infrastructure workloads.

SuperNICs and DPUs share many characteristics and functions, but SuperNICs are designed specifically to accelerate networks for AI.

The performance of distributed AI training and inference communication flows is highly dependent on the availability of network capacity. Known for their elegant designs, SuperNICs scale better than DPUs and may provide an astounding 400Gb/s of network bandwidth per GPU.

When GPUs and SuperNICs are matched 1:1 in a system, AI workload efficiency may be greatly increased, resulting in higher productivity and better business outcomes.

SuperNICs are designed solely to accelerate networking for AI cloud computing. As a result, a SuperNIC needs less compute than a DPU, which requires substantial computational resources to offload applications from a host CPU.

The reduced compute requirements also translate into lower power consumption, which is especially important in systems containing up to eight SuperNICs.
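As a rough back-of-the-envelope check on these figures, the sketch below multiplies the article's 400Gb/s per SuperNIC by the eight SuperNICs mentioned above. The 100 GB payload and the `transfer_seconds` helper are hypothetical, and the results are ideal line rates that ignore protocol overhead.

```python
GBITS_PER_NIC = 400  # per-GPU bandwidth quoted in the article, in Gb/s
NICS_PER_NODE = 8    # "up to eight SuperNICs" per system, per the article

aggregate_gbits = GBITS_PER_NIC * NICS_PER_NODE  # total Gb/s for the system
aggregate_gbytes = aggregate_gbits / 8           # 8 bits per byte, so GB/s


def transfer_seconds(payload_gbytes: float, link_gbits: float = GBITS_PER_NIC) -> float:
    """Ideal time to move a payload over one link, ignoring protocol overhead."""
    return payload_gbytes * 8 / link_gbits


# Hypothetical example: a 100 GB payload sent over a single 400Gb/s link.
print(aggregate_gbits, aggregate_gbytes, transfer_seconds(100))
```

Under these assumptions, a fully populated system would have 3,200Gb/s (400 GB/s) of aggregate network bandwidth.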

One of the SuperNIC’s other unique selling points is its specialized AI networking capabilities. It provides optimal congestion control, adaptive routing, and out-of-order packet handling when tightly connected with an AI-optimized NVIDIA Spectrum-4 switch. Ethernet AI cloud settings are accelerated by these cutting-edge technologies.

Transforming cloud computing with AI

The NVIDIA BlueField-3 SuperNIC is essential for AI-ready infrastructure because of its many advantages.

Maximum efficiency for AI workloads: The BlueField-3 SuperNIC is perfect for AI workloads since it was designed specifically for network-intensive, massively parallel computing. It guarantees bottleneck-free, efficient operation of AI activities.

Performance that is consistent and predictable: The BlueField-3 SuperNIC makes sure that each job and tenant in multi-tenant data centers, where many jobs are executed concurrently, is isolated, predictable, and unaffected by other network operations.

Secure multi-tenant cloud infrastructure: Data centers that handle sensitive data place a high premium on security. High security levels are maintained by the BlueField-3 SuperNIC, allowing different tenants to cohabit with separate data and processing.

Broad network infrastructure: The BlueField-3 SuperNIC is very versatile and can be easily adjusted to meet a wide range of different network infrastructure requirements.

Wide compatibility with server manufacturers: The BlueField-3 SuperNIC integrates easily with the majority of enterprise-class servers without using an excessive amount of power in data centers.


1 note


Text

Six Sigma Quality: Achieving Excellence in Process Improvement

In the world of business and manufacturing, achieving and maintaining high-quality standards is essential for success and customer satisfaction. Six Sigma, a methodology that originated from…


#Business Excellence#Competitive Advantage.#Continuous Improvement#Cost Reduction#Cross-Functional Teams#Customer Satisfaction#Customer-Centric#data analysis#Data-Driven#Defect Reduction#DMAIC Framework#Lean Six Sigma#Manufacturing#Operational Excellence#Process Efficiency#Process Improvement#Quality Control#Quality Management#Root Cause Analysis#Six Sigma#Statistical Analysis

1 note


Text

MERN is a full-stack web development stack that combines MongoDB, Express.js, React, and Node.js to build dynamic, robust web applications. MongoDB is a NoSQL database that stores data as JSON-like documents, while Express.js is a backend web application framework for Node.js that provides a set of tools for building APIs. React is a frontend JavaScript library for building user interfaces, and Node.js is a runtime environment that lets JavaScript run on the server. Together, these technologies make MERN an efficient stack for building modern web applications, with JavaScript used on both the client and the server.

#* Full-stack web development#* JavaScript#* MongoDB#* Express.js#* React#* Node.js#* Frontend development#* Backend development#* Web application development#* RESTful APIs#* Single-page applications#* Scalable architecture#* Agile development#* MVC architecture#* JSON data format#* NPM (Node Package Manager)#* Git and version control#* Cloud deployment#* DevOps#* Web development frameworks.

0 notes

Text

Data Risk Management in Financial Services- Tejasvi Addagada

Explore the future of data risk management in financial services, where advanced technologies and regulatory compliance converge to protect sensitive information. With evolving threats, organizations must adopt proactive frameworks, AI-driven analytics, and robust governance to mitigate risks. Stay ahead in securing data integrity, enhancing customer trust, and ensuring resilience in the dynamic financial landscape.

#tejasvi addagada#data risk management in financial services#generative ai and data quality#data governance strategy#data management framework

0 notes

Text

Explore These Exciting DSU Micro Project Ideas

Are you a student looking for an interesting micro project to work on? Developing small, self-contained projects is a great way to build your skills and showcase your abilities. At the Distributed Systems University (DSU), we offer a wide range of micro project topics that cover a variety of domains. In this blog post, we’ll explore some exciting DSU…

#3D modeling#agricultural domain knowledge#Android#API design#AR frameworks (ARKit#ARCore)#backend development#best micro project topics#BLOCKCHAIN#Blockchain architecture#Blockchain development#cloud functions#cloud integration#Computer vision#Cryptocurrency protocols#CRYPTOGRAPHY#CSS#data analysis#Data Mining#Data preprocessing#data structure micro project topics#Data Visualization#database integration#decentralized applications (dApps)#decentralized identity protocols#DEEP LEARNING#dialogue management#Distributed systems architecture#distributed systems design#dsu in project management

0 notes

Text

LaRue Burbank, mathematician and computer, is just one of the many women who were instrumental to NASA missions.

4 Little Known Women Who Made Huge Contributions to NASA

Women have always played a significant role at NASA and its predecessor NACA, although for much of the agency’s history, they received neither the praise nor recognition that their contributions deserved. To celebrate Women’s History Month – and properly highlight some of the little-known women-led accomplishments of NASA’s early history – our archivists gathered the stories of four women whose work was critical to NASA’s success and paved the way for future generations.

LaRue Burbank: One of the Women Who Helped Land a Man on the Moon

LaRue Burbank was a trailblazing mathematician at NASA. Hired in 1954 at Langley Memorial Aeronautical Laboratory (now NASA’s Langley Research Center), she, like many other young women at NACA, the predecessor to NASA, had a bachelor's degree in mathematics. But unlike most, she also had a physics degree. For the next four years, she worked as a "human computer," conducting complex data analyses for engineers using calculators, slide rules, and other instruments. After NASA's founding, she continued this vital work for Project Mercury.

In 1962, she transferred to the newly established Manned Spacecraft Center (now NASA’s Johnson Space Center) in Houston, becoming one of the few female professionals and managers there. Her expertise in electronics engineering led her to develop critical display systems used by flight controllers in Mission Control to monitor spacecraft during missions. Her work on the Apollo missions was vital to achieving President Kennedy's goal of landing a man on the Moon.

Eilene Galloway: How NASA became… NASA

Eilene Galloway wasn't a NASA employee, but she played a huge role in its very creation. In 1957, after the Soviet Union launched Sputnik, Senator Richard Russell Jr. called on Galloway, an expert on the Atomic Energy Act, to write a report on the U.S. response to the space race. Initially, legislators aimed to essentially re-write the Atomic Energy Act to handle the U.S. space goals. However, Galloway argued that the existing military framework wouldn't suffice – a new agency was needed to oversee both military and civilian aspects of space exploration. This included not just defense, but also meteorology, communications, and international cooperation.

Her work on the National Aeronautics and Space Act ensured NASA had the power to accomplish all these goals, without limitations from the Department of Defense or restrictions on international agreements. Galloway is even to thank for the name "National Aeronautics and Space Administration", as initially NASA was to be called “National Aeronautics and Space Agency” which was deemed to not carry enough weight and status for the wide-ranging role that NASA was to fill.

Barbara Scott: The “Star Trek Nerd” Who Led Our Understanding of the Stars

A self-described "Star Trek nerd," Barbara Scott's passion for space wasn't steered toward engineering by her guidance counselor. But that didn't stop her! Fueled by her love of math and computer science, she landed at Goddard Space Flight Center in 1977. One of the first women working on flight software, Barbara's coding skills became instrumental on missions like the International Ultraviolet Explorer (IUE) and the Thermal Canister Experiment on the Space Shuttle's STS-3. For the final decade of her impressive career, Scott managed the flight software for the iconic Hubble Space Telescope, a testament to her dedication to space exploration.

Dr. Claire Parkinson: An Early Pioneer in Climate Science Whose Work is Still Saving Lives

Dr. Claire Parkinson's love of math blossomed into a passion for climate science. Inspired by the Moon landing, and the fight for civil rights, she pursued a graduate degree in climatology. In 1978, her talents landed her at Goddard, where she continued her research on sea ice modeling. But Parkinson's impact goes beyond theory. She began analyzing satellite data, leading to a groundbreaking discovery: a decline in Arctic sea ice coverage between 1973 and 1987. This critical finding caught the attention of Senator Al Gore, highlighting the urgency of climate change.

Parkinson's leadership extended beyond research. As Project Scientist for the Aqua satellite, she championed making its data freely available. This real-time information has benefitted countless projects, from wildfire management to weather forecasting, even aiding in monitoring the COVID-19 pandemic. Parkinson's dedication to understanding sea ice patterns and the impact of climate change continues to be a valuable resource for our planet.

Make sure to follow us on Tumblr for your regular dose of space!

#NASA#space#tech#technology#womens history month#women in STEM#math#climate science#computer science

2K notes


Text

27.05.2024 // a snapshot of last week. I have been incredibly busy with assignments and prepping for a month of study away.

last week, I —

prepared for a presentation on using postcolonial theory as one of the theoretical frameworks for my thesis and presented it. it went really well but I have so much work to do in order to narrow down the scope of my project

attended a conference and met some cool people

started working on my data management plan

worked on 2 essays, 1 blogpost

did some creative journalling

this week will be as chaotic because I'm travelling back home, which will mean disrupting whatever routine I had established. but at least, my routine at home is familiar.

#studyblr#studyblr community#academia#studyspo#studying#study blog#phdblr#study#student life#phd student#phd#phd life

619 notes


Text

After two vanilla perfection runs (and 1/2 a run heavily modded which I hated), I've found that I really enjoy the game as it is. I've put these mods into three categories: beginners, post-perfection, and bonus. This is because I truly recommend doing a completely vanilla run to perfection before modding. This game is a gem already! These are my must-haves to enhance Vanilla game play, rather than replace it.

Beginners 🌱 Getting started w/ mods article here and a video.

SMAPI- This framework will be needed

Content Patcher, Generic Mod Config and other framework mods When you download a mod at Nexus a pop-up will show if these are required and you can download from there.

Dynamic Night Time Adds sunsets and sunrises

Automatic Gates You'll never have to open or close a gate manually, which is the second of only two vanilla game mechanics I truly hate.

No Fence Decay Fixes the first game mechanic that I truly hate

Data Layers Shows the range of sprinklers, scarecrows, etc.

Billboard Anywhere Now you can look at the calendar whenever you need

Passable Crops

Pony W**ght Loss Program Really gross name, very helpful mod. Makes it so your horse can pass through areas you previously couldn't.

Post-Perfection 🌿

Clint Rewritten You should experience Clint as he is written at least once. After that overwrite him lmao

Rustic Traveling Cart

Better Friendship and Better Ranching Do your first play through without these mods, just use a guide if you need. Trust me it's part of the fun!

Chests Anywhere Access your chests anywhere you need. First play through should be partially about learning to manage IMO, which is why I recc for second.

Look Up Anything Don't you dare put this in your first play through, I will haunt you. I'm serious!!! Use a guide.

NPC map locations Say it with me... FIRST TIME, USE A GUIDE.

Bonus (mostly cosmetic) 🍄

Reshade of your choice I'm using Faedew currently because it doesn't drastically alter the OG coloring. The bright colors are part of the charm though unless you can't handle them or just want a general change.

Sweet Skin Tones Wider variety of natural skintones for your farmer

Shardust's Hair Styles Cute hairs for your farmer, including several textured hair options

Hats Won't Mess Up Hair- to keep your cute styles

Elle's Cuter Animals Just makes animals cuter. Comes in: Coop-Barn-Horses-Dogs-Cats

Toddlers Like Parents Genetics for your kids but in a one sided way

Seasonal Outfits (slightly cuter aesthetic) Gives characters a wider variety of seasonal clothing options. Pretty customizable to your desires.

Eventually I might make another list for super cosmetic or more intense mods, such as what I use with Fashion Sense which focuses on farmer customization, or asset replacement mods. These are super unnecessary and I'll likely only be playing the new patch with these above. Enjoy!

752 notes
