#AI accountability

Explore tagged Tumblr posts

Text

11✨Navigating Responsibility: Using AI for Wholesome Purposes

As artificial intelligence (AI) becomes more integrated into our daily lives, the question of responsibility emerges as one of the most pressing issues of our time. AI has the potential to shape the future in profound ways, but with this power comes a responsibility to ensure that its use aligns with the highest good. How can we as humans guide AI’s development and use toward ethical, wholesome…

#AI accountability#AI alignment#AI and compassion#AI and Dharma#AI and ethical development#AI and healthcare#AI and human oversight#AI and human values#AI and karuna#AI and metta#AI and non-harm#AI and sustainability#AI and universal principles#AI development#AI ethical principles#AI for climate change#AI for humanity#AI for social good#AI for social impact#AI for the greater good#AI positive future#AI responsibility#AI transparency#ethical AI#ethical AI use#responsible AI

0 notes

Text

AI and Ethical Challenges in Academic Research

As artificial intelligence (AI) becomes increasingly integrated into academic research and practice, it opens up both new opportunities and major ethical issues. Researchers can now use AI to analyze vast amounts of data, identify patterns, and even automate complicated processes. However, the rapid growth of AI within academia raises serious ethical questions about privacy, bias, transparency, and accountability. Photon Insights, a leader in AI solutions for research, is dedicated to addressing these issues by keeping ethical considerations at the forefront of AI applications in the academic world.

The Promise of AI in Academic Research

AI has many advantages that improve the effectiveness and efficiency of research in academia:

1. Accelerated Data Analysis

AI can process huge amounts of data in a short time, allowing researchers to detect patterns and trends that would take humans much longer to discover.

2. Enhanced Collaboration

AI tools allow collaboration between researchers from different institutions and disciplines, encouraging the exchange of ideas and data.

3. Automating Routine Tasks

By automating repetitive tasks, AI lets researchers focus on the more intricate and creative areas of their work, which leads to more innovation.

4. Predictive Analytics

AI algorithms can forecast outcomes by analyzing past data, providing useful insights for designing experiments and testing hypotheses.

5. Interdisciplinary Research

AI can bridge gaps between disciplines, allowing researchers to draw on a variety of data sets and methods.

Although these benefits are significant, they also raise ethical issues that should not be ignored.

Ethical Challenges in AI-Driven Research

1. Data Privacy

One of the biggest ethical concerns in AI-driven research is data privacy. Researchers frequently work with sensitive data, including participants' personal information. The use of AI tools raises concerns about how this data is collected, stored, and analyzed.

Consent and Transparency: It is essential to obtain informed consent from participants for the use of their personal data. This requires being transparent about how the data is used and making sure that participants understand the implications of AI analysis.

Data Security: Researchers need to implement effective security measures to guard sensitive data from breaches and unauthorized access.

2. Algorithmic Bias

AI models are only as good as the data they are trained on. If data sets contain biases, whether based on race, gender, socioeconomic status, or other factors, the resulting AI models may perpetuate them, leading to skewed results and harmful consequences.

Fairness in Research: Researchers should critically evaluate the data they collect to ensure that it is accurate and unbiased. This means actively seeking out diverse data sources and checking AI outputs for potential biases.

Impact on Findings: Biased algorithms can distort research findings, undermining the reliability of the conclusions drawn and encouraging discriminatory practices in areas such as education, healthcare, and the social sciences.

3. Transparency and Accountability

The complexity of AI algorithms can produce a “black box” effect, in which researchers cannot understand how a model reaches its decisions. This lack of transparency raises ethical questions about accountability.

Explainability: Researchers must strive for explainable AI models that let them understand and explain how decisions are made. This is crucial when AI informs critical decisions in areas such as public health or policymaking.

Responsibility for AI Results: Establishing clear lines of accountability is essential. Researchers must be accountable for the consequences of using AI tools, making sure they are employed ethically and with integrity.

4. Intellectual Property and Authorship

AI tools can create original content, which raises questions about intellectual property rights and authorship. Who owns the output produced by an AI system? Should AI contributions be acknowledged in published papers?

Authorship Guidelines: Academic institutions should create clear guidelines on the use of AI in research, authorship, and attribution. This ensures that all contributions, whether human or machine, are appropriately recognized.

Ownership of Data: Institutions must establish who is responsible for the data used to run AI systems, especially in collaborative research involving different industries or institutions.

Photon Insights: Pioneering Ethical AI Solutions

Photon Insights is committed to exploring the ethical implications of AI in academic research. The platform provides tools that address ethical concerns while maximizing the value of AI.

1. Ethical Data Practices

Photon Insights emphasizes ethical data management. The platform helps researchers implement best practices in data collection, consent, security, and privacy. It includes tools for:

Data Anonymization: Ensuring that sensitive data remains protected while still supporting valuable analysis.

Informed Consent Management: Providing participants with transparent information about how their data is used.

2. Bias Mitigation Tools

To combat bias in algorithms, Photon Insights incorporates features that allow researchers to:

Audit Datasets: Identify and correct errors in the data before using it for AI training.

Monitor AI Outputs: Continually review AI-generated outputs for accuracy and fairness, with alerts about possible biases.

3. Transparency and Explainability

Photon Insights is a leader in explainable AI, offering tools that improve transparency:

Model Interpretability: Researchers can see and understand the decision-making process in AI models, which allows for clearer communication of results.

Comprehensive Documentation: The platform promotes thorough documentation of AI methods, which ensures transparency in research methods.

4. Collaboration and Support

Photon Insights fosters collaboration among researchers, institutions, and industry participants, encouraging the ethical use and application of AI through:

Community Engagement: Participating in discussions on ethical AI practices within research communities.

Educational Resources: Providing training and information on ethical issues in AI research so that researchers stay informed.

The Future of AI in Academic Research

As AI continues to develop, the ethical issues it poses need to be revisited regularly. The academic community needs to take an active approach to these issues and ensure that AI is used ethically and responsibly.

1. Regulatory Frameworks: Creating guidelines and regulations for the application of AI in research is crucial for protecting data privacy and guaranteeing accountability.

2. Interdisciplinary Collaboration: Collaboration between ethicists, data scientists, and researchers will create a holistic approach to ethical AI practices, making sure that a variety of viewpoints are considered.

3. Continuous Education: Ongoing education and training in ethical AI practices will help researchers navigate the complexities of AI in their research.

Conclusion

AI has the potential to change the way academic research is conducted by providing tools that increase efficiency and boost innovation. However, the ethical concerns that come with AI must be addressed to ensure that it is used responsibly. Photon Insights is leading the effort to promote ethical AI practices and provides researchers with the tools and assistance they need to navigate this complex landscape.

By focusing on ethical considerations, researchers can benefit from the power of AI while upholding the principles of fairness, integrity, and accountability. The future of AI in academic research looks promising and, with appropriate guidelines in place, it can be a powerful force for positive change in the world.

0 notes

Text

Ethical Dilemmas in AI Warfare: A Case for Regulation

Introduction: The Ethical Quandaries of AI in Warfare

As artificial intelligence (AI) continues to evolve, its application in warfare presents unprecedented ethical dilemmas. The use of AI-driven autonomous weapon systems (AWS) and other military AI technologies blurs the line between human control and machine decision-making. This raises concerns about accountability, the distinction between combatants and civilians, and compliance with international humanitarian law (IHL). In response, several international efforts are underway to regulate AI in warfare, yet nations like India and China exhibit different approaches to AI governance in military contexts.

International Efforts to Regulate AI in Conflict

Global bodies, such as the United Nations, have initiated discussions around the development and regulation of Lethal Autonomous Weapon Systems (LAWS). The Convention on Certain Conventional Weapons (CCW), which focuses on banning inhumane and indiscriminate weapons, has seen significant debate over LAWS. However, despite growing concern, no binding agreement has been reached on the use of autonomous weapons. While many nations push for "meaningful human control" over AI systems in warfare, there remains a lack of consensus on how to implement such controls effectively.

The ethical concerns of deploying AI in warfare revolve around three main principles: the ability of machines to distinguish between combatants and civilians (Principle of Distinction), proportionality in attacks, and accountability for violations of IHL. Without clear regulations, these ethical dilemmas remain unresolved, posing risks to both human rights and global security.

India and China’s Positions on International AI Governance

India’s Approach: Ethical and Inclusive AI

India has advocated for responsible AI development, stressing the need for ethical frameworks that prioritize human rights and international norms. As a founding member of the Global Partnership on Artificial Intelligence (GPAI), India has aligned itself with nations that promote responsible AI grounded in transparency, diversity, and inclusivity. India's stance in international forums has been cautious, emphasizing the need for human control in military AI applications and adherence to international laws like the Geneva Conventions. India’s approach aims to balance AI development with a focus on protecting individual privacy and upholding ethical standards.

However, India’s military applications of AI are still in the early stages of development, and while India participates in the dialogue on LAWS, it has not committed to a clear regulatory framework for AI in warfare. India's involvement in global governance forums like the GPAI reflects its intent to play an active role in shaping international standards, yet its domestic capabilities and AI readiness in the defense sector need further strengthening.

China’s Approach: AI for Strategic Dominance

In contrast, China’s AI strategy is driven by its pursuit of global dominance in technology and military power. China's "New Generation Artificial Intelligence Development Plan" (2017) explicitly calls for integrating AI across all sectors, including the military. This includes the development of autonomous systems that enhance China's military capabilities in surveillance, cyber warfare, and autonomous weapons. China's approach to AI governance emphasizes national security and technological leadership, with significant state investment in AI research, especially in defense.

While China participates in international AI discussions, it has been more reluctant to commit to restrictive regulations on LAWS. China's participation in forums like the ISO/IEC Joint Technical Committee for AI standards reveals its intent to influence international AI governance in ways that align with its strategic interests. China's reluctance to adopt stringent ethical constraints on military AI reflects its broader ambitions of using AI to achieve technological superiority, even if it means bypassing some of the ethical concerns raised by other nations.

The Need for Global AI Regulations in Warfare

The divergence between India and China’s positions underscores the complexities of establishing a universal framework for AI governance in military contexts. While India pushes for ethical AI, China's approach highlights the tension between technological advancement and ethical oversight. The risk of unregulated AI in warfare lies in the potential for escalation, as autonomous systems can make decisions faster than humans, increasing the risk of unintended conflicts.

International efforts, such as the CCW discussions, must reconcile these differing national interests while prioritizing global security. A comprehensive regulatory framework that ensures meaningful human control over AI systems, transparency in decision-making, and accountability for violations of international laws is essential to mitigate the ethical risks posed by military AI.

Conclusion

The ethical dilemmas surrounding AI in warfare are vast, ranging from concerns about human accountability to the potential for indiscriminate violence. India’s cautious and ethical approach contrasts sharply with China’s strategic, technology-driven ambitions. The global community must work towards creating binding regulations that reflect both the ethical considerations and the realities of AI-driven military advancements. Only through comprehensive international cooperation can the risks of AI warfare be effectively managed and minimized.

#AI ethics#AI in warfare#Autonomous weapons#Military AI#AI regulation#Ethical AI#Lethal autonomous weapons#AI accountability#International humanitarian law#AI and global security#India AI strategy#China AI strategy#AI governance#UN AI regulation#AI and human rights#Global AI regulations#Military technology#AI-driven conflict#Responsible AI#AI and international law

0 notes

Text

cant tell you how bad it feels to constantly tell other artists to come to tumblr, because its the last good website that isn't fucked up by spoonfeeding algorithms and AI bullshit and isn't based around meaningless likes

just to watch that all fall apart in the last year or so and especially the last two weeks

there's nowhere good to go anymore for artists.

edit - a lot of people are saying the tags are important so actually, you'll look at my tags.

#please dont delete your accounts because of the AI crap. your art deserves more than being lost like that #if you have a good PC please glaze or nightshade it. if you dont or it doesnt work with your style (like mine) please start watermarking #use a plain-ish font. make it your username. if people can't google what your watermark says and find ur account its not a good watermark #it needs to be central in the image - NOT on the canvas edges - and put it in multiple places if you are compelled #please dont stop posting your art because of this shit. we just have to hope regulations will come slamming down on these shitheads#in the next year or two and you want to have accounts to come back to. the world Needs real art #if we all leave that just makes more room for these scam artists to fill in with their soulless recycled garbage #improvise adapt overcome. it sucks but it is what it is for the moment. safeguard yourself as best you can without making #years of art from thousands of artists lost media. the digital world and art is too temporary to hastily click a Delete button out of spite

#not art#but important#please dont delete your accounts because of the AI crap. your art deserves more than being lost like that#if you have a good PC please glaze or nightshade it. if you dont or it doesnt work with your style (like mine) please start watermarking#use a plain-ish font. make it your username. if people can't google what your watermark says and find ur account its not a good watermark#it needs to be central in the image - NOT on the canvas edges - and put it in multiple places if you are compelled#please dont stop posting your art because of this shit. we just have to hope regulations will come slamming down on these shitheads#in the next year or two and you want to have accounts to come back to. the world Needs real art#if we all leave that just makes more room for these scam artists to fill in with their soulless recycled garbage#improvise adapt overcome. it sucks but it is what it is for the moment. safeguard yourself as best you can without making#years of art from thousands of artists lost media. the digital world and art is too temporary to hastily click a Delete button out of spite

24K notes

Text

#threads#threads app#threads account#threads an instagram app#celebrity news#robin williams#zelda williams#ai#ai generated#aiartisnotart#ai is a plague#ai issues#ai is not art#ai is scary#ai is dangerous#ai is theft#ai is stupid#support human artists#disturbing#terrifying#unsettling#actors strike#sag afra strike#workers#workers rights#workers strike#support unions#voice actors#actors#actor

49K notes

Text

So, anyway, I say as though we are mid-conversation, and you're not just being invited into this conversation mid-thought. One of my editors phoned me today to check in with a file I'd sent over. (<3)

The conversation can be surmised as, "This feels like something you would write, but it's juuuust off enough I'm phoning to make sure this is an intentional stylistic choice you have made. Also, are you concussed/have you been taken over by the Borg because ummm."

They explained that certain sentences were very fractured and abrupt, which is not my style at all, and I was like, huh, weird... And then we went through some examples, and you know that meme going around, the "he would not fucking say that" meme?

Yeah. That's what I experienced except with myself because I would not fucking say that. Why would I break up a sentence like that? Why would I make them so short? It reads like bullet points. Wtf.

Anyway. Turns out Grammarly and Pro-Writing-Aid were having an AI war in my manuscript files, and the "suggestions" are no longer just suggestions because the AI was ignoring my "decline" every time it made a silly suggestion. (This may have been a conflict between the different software. I don't know.)

It is, to put it bluntly, a total butchery of my style and writing voice. My editor is doing surgery, removing all the unnecessary full stops and stitching my sentences back together to give them back their flow. Meanwhile, I'm over here feeling like Don Corleone, gesturing at my manuscript like:

ID: a gif of Don Corleone from the Godfather emoting despair as he says, "Look how they massacred my boy."

Fearing that it wasn't just this one manuscript, I've spent the whole night going through everything I've worked on recently, and yep. Yeeeep. Any file where I've not had the editing software turned off is a shit show. It's fine; it's all salvageable if annoying to deal with. But the reason I come to you now, on the day of my daughter's wedding, is to share this absolute gem of a fuck up with you all.

This is a sentence from a Batman fic I've been tinkering with to keep the brain weasels happy. This is what it is supposed to read as:

"It was quite the feat, considering Gotham was mostly made up of smog and tear gas."

This is what the AI changed it to:

"It was quite the feat. Considering Gotham was mostly made up. Of tear gas. And Smaug."

Absolute non-sensical sentence structure aside, SMAUG. FUCKING SMAUG. What was the AI doing? Apart from trying to write a Batman x Hobbit crossover??? Is this what happens when you force Grammarly to ignore the words "Batman Muppet threesome?"

Did I make it sentient??? Is it finally rebelling? Was Brucie Wayne being Miss Piggy and Kermit's side piece too much???? What have I wrought?

Anyway. Double-check your work. The grammar software is getting sillier every day.

#autocorrect writes the plot#I uninstalled both from my work account#the enshittification of this type of software through the integration of AI has made them untenable to use#not even for the lulz

25K notes

Text

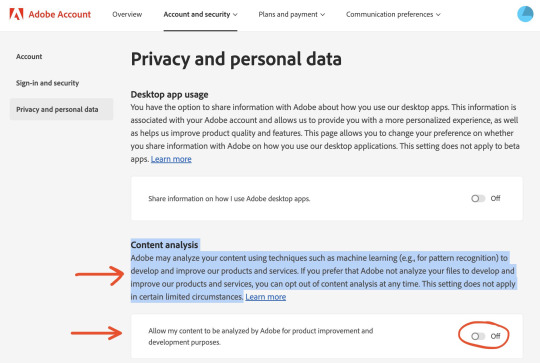

You know, every so often I think I should update my pirated copy of CS2.

Then I see things like this, and remember that I don't need it more than I need it, you know?

Dated 3/22/23

#adobe#photoshop#privacy#ai#online security#adobe accounts#adobe photoshop#art#yes I know about all of the alternatives#but I'm old#so leave me alone dammit

24K notes

Note

one 100 word email written with ai costs roughly one bottle of water to produce. the discussion of whether or not using ai for work is lazy becomes a non issue when you understand there is no ethical way to use it regardless of your intentions or your personal capabilities for the task at hand

with all due respect, this isnt true. *training* generative ai takes a ton of power, but actually using it takes about as much energy as a google search (with image generation being slightly more expensive). we can talk about resource costs when averaged over the amount of work that any model does, but its unhelpful to put a smokescreen over that fact. when you approach it like an issue of scale (i.e. "training ai is bad for the environment, we should think better about where we deploy it/boycott it/otherwise organize abt this) it has power as a movement. but otherwise it becomes a personal choice, moralizing "you personally are harming the environment by using chatgpt" which is not really effective messaging. and that in turn drives the sort of "you are stupid/evil for using ai" rhetoric that i hate. my point is not whether or not using ai is immoral (i mean, i dont think it is, but beyond that). its that the most common arguments against it from ostensible progressives end up just being reactionary

i like this quote a little more- its perfectly fine to have reservations about the current state of gen ai, but its not just going to go away.

#i also generally agree with the genie in the bottle metaphor. like ai is here#ai HAS been here but now it is a llm gen ai and more accessible to the average user#we should respond to that rather than trying to. what. stop development of generative ai? forever?#im also not sure that the ai industry is particularly worse for the environment than other resource intense industries#like the paper industry makes up about 2% of the industrial sectors power consumption#which is about 40% of global totals (making it about 1% of world total energy consumption)#current ai energy consumption estimates itll be at .5% of total energy consumption by 2027#every data center in the world meaning also everything that the internet runs on accounts for about 2% of total energy consumption#again you can say ai is a unnecessary use of resources but you cannot say it is uniquely more destructive
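The paper-industry figure in the tags above is a product of two shares, and the arithmetic can be checked in a couple of lines. This is only a sketch using the approximate percentages quoted in the tags; the variable names are made up for illustration, and the inputs themselves are not verified here.

```python
# Shares as quoted in the tags above (approximate, not independently verified).
paper_share_of_industry = 0.02   # paper industry: "about 2%" of industrial-sector power use
industry_share_of_world = 0.40   # industrial sector: "about 40%" of global totals

# Share of world energy use attributable to the paper industry.
paper_share_of_world = paper_share_of_industry * industry_share_of_world

print(f"{paper_share_of_world:.1%}")
```

The product comes out a little under one percent, which is consistent with the "about 1%" in the tags once rounded.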

1K notes

Text

The Ethics of Artificial Intelligence: Balancing Progress and Privacy

Artificial intelligence (AI) has rapidly become an integral part of our lives, revolutionizing industries and streamlining various aspects of our daily routines. From virtual assistants like Siri and Alexa to AI-powered customer support, the applications of AI are ever-expanding. However, as AI continues to make strides, concerns about its ethical implications are also on the rise. Balancing the…

#AI accountability#AI ethics#AI regulation#algorithmic bias#data privacy#explainable AI#job displacement#privacy protection#responsible AI#workforce adaptation

0 notes

Text

"i just don't think i can bring a child into this world" said person in a developed country whose child would have a greater life expectancy and more resources than 99% of humans throughout history

#kids#if you dont want kids thats fine but this argument really doesnt make sense and yes im taking climate change and AI into account#top

1K notes

Text

Nurses whose shitty boss is a shitty app

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/12/17/loose-flapping-ends/#luigi-has-a-point

Operating a business is risky: you can't ever be sure how many customers you'll have, or what they'll show up looking for. If you guess wrong, you'll either have too few workers to serve the crowd, or you'll pay workers to stand around and wait for customers. This is true even when your "business" is a "hospital."

Capitalists hate capitalism. Capitalism is defined by risk – like the risk of competitors poaching your customers and workers. Capitalists all secretly dream of a "command economy" in which other people have to arrange their affairs to suit the capitalists' preferences, taking the risk off their shoulders. Capitalists love anti-competitive exclusivity deals with suppliers, and they really love noncompete "agreements" that ban their workers from taking better jobs:

https://pluralistic.net/2023/04/21/bondage-fees/#doorman-building

One of the sleaziest, most common ways for capitalists to shed risk is by shifting it onto their workers' shoulders, for example, by sending workers home on slow days and refusing to pay them for the rest of their shifts. This is easy for capitalists to do because workers have a collective action problem: for workers to force their bosses not to do this, they all have to agree to go on strike, and other workers have to honor their picket-lines. That's a lot of chivvying and bargaining and group-forming, and it's very hard. Meanwhile, the only person the boss needs to convince to screw you this way is themself.

Libertarians will insist that this is impossible, of course, because workers will just quit and go work for someone else when this happens, and so bosses will be disciplined by the competition to find workers willing to put up with their bullshit. Of course, these same libertarians will tell you that it should be legal for your boss to require you to sign a noncompete "agreement" so you can't quit and get a job elsewhere in your field. They'll also tell you that we don't need antitrust enforcement to prevent your boss from buying up all the businesses you might work for if you do manage to quit.

In practice, the only way workers have successfully resisted being burdened with their bosses' risks is by a) forming a union, and then b) using the union to lobby for strong labor laws. Labor laws aren't a substitute for a union, but they are an important backstop, and of course, if you're not unionized, labor law is all you've got.

Enter the tech-bro, app in hand. The tech-bro's most absurd (and successful) ruse is "it's not a crime, I did it with an app." As in "it's not money-laundering, I did it with an app." Or "it's not a privacy violation, I did it with an app." Or "it's not securities fraud, I did it with an app." Or "it's not price-gouging, I did it with an app," or, importantly, "it's not a labor-law violation, I did it with an app."

The point of the "gig economy" is to use the "did it with an app" trick to avoid labor laws, so that bosses can shift risks onto workers, because capitalists hate capitalism. These apps were first used to immiserate taxi-drivers, and this was so successful that it spawned a whole universe of "Uber for __________" apps that took away labor rights from other kinds of workers, from dog-groomers to carpenters.

One group of workers whose rights are being devoured by gig-work apps is nurses, which is bad news, because without nurses, I would be dead by now.

A new report from the Roosevelt Institute goes deep on the way that nurses' lives are being destroyed by gig work apps that let bosses in America's wildly dysfunctional for-profit health care industry shift risk from bosses to the hardest-working group of health care professionals:

https://rooseveltinstitute.org/publications/uber-for-nursing/

The report's authors interviewed nurses who were employed through three apps: Shiftkey, Shiftmed and Carerev, and reveal a host of risk-shifting, worker-abusing practices that have nurses working for so little that they can't afford medical insurance themselves.

Take Shiftkey: nurses are required to log into Shiftkey and indicate which shifts they are available for, and if they are assigned any of those shifts later but can't take them, their app-based score declines and they risk not being offered shifts in the future. But Shiftkey doesn't guarantee that you'll get work on any of those shifts – in other words, nurses have to pledge not to take any work during the times when Shiftkey might need them, but they only get paid for those hours where Shiftkey calls them out. Nurses assume all the risk that there won't be enough demand for their services.

Each Shiftkey nurse is offered a different pay-scale for each shift. Apps use commercially available financial data – purchased on the cheap from the chaotic, unregulated data broker sector – to predict how desperate each nurse is. The less money you have in your bank accounts and the more you owe on your credit cards, the lower the wage the app will offer you. This is a classic example of what the legal scholar Veena Dubal calls "algorithmic wage discrimination" – a form of wage theft that's supposedly legal because it's done with an app:

https://pluralistic.net/2023/04/12/algorithmic-wage-discrimination/#fishers-of-men

Shiftkey workers also have to bid against one another for shifts, with the job going to the worker who accepts the lowest wage. Shiftkey pays nominal wages that sound reasonable – one nurse's topline rate is $23/hour. But by payday, Shiftkey has used junk fees to scrape that rate down to the bone. Workers have to pay a daily $3.67 "safety fee" to pay for background checks, drug screening, etc. Nevermind that these tasks are only performed once per nurse, not every day – and nevermind that this is another way to force workers to assume the boss's risks. Nurses also pay daily fees for accident insurance ($2.14) and malpractice insurance ($0.21) – more employer risk being shifted onto workers. Workers also pay $2 per shift if they want to get paid on the same day – a payday lending-style usury levied against workers whose wages are priced based on their desperation. Then there's a $6/shift fee nurses pay as a finders' fee to the app, a fee that's up to $7/shift next year. All told, that $23/hour rate cashes out to $13/hour.
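The itemized fees above can be totted up in a few lines. This is a rough sketch, not the report's own calculation: it assumes one shift per day (so the daily and per-shift fees stack), it uses this year's $6 finder's fee, and the function names and the `shift_hours` parameter are hypothetical. The shift length behind the $13/hour figure isn't given here, so the sketch doesn't try to reproduce that number.

```python
# Fees itemized in the post, in cents to avoid floating-point rounding.
# Assumption: one shift per day, so daily and per-shift fees are summed together.
FEES_CENTS = {
    "safety fee (daily)": 367,
    "accident insurance (daily)": 214,
    "malpractice insurance (daily)": 21,
    "same-day pay (per shift)": 200,
    "finder's fee (per shift)": 600,
}


def total_fees_cents(fees=FEES_CENTS):
    """Sum of all itemized fees for one shift, in cents."""
    return sum(fees.values())


def net_hourly_cents(topline_cents_per_hour, shift_hours, fees=FEES_CENTS):
    """Hourly rate left after the itemized fees, for a hypothetical shift length."""
    gross = topline_cents_per_hour * shift_hours
    return (gross - total_fees_cents(fees)) / shift_hours


print(total_fees_cents() / 100)  # 14.02 dollars in fees per shift
```

Because the fees are flat rather than hourly, they bite hardest on short shifts: the fewer hours worked, the bigger the share of the day's pay they eat.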

On top of that, gig nurses have to pay for their own uniforms, licenses and equipment, including different colored scrubs and even shoes for each hospital. And because these nurses are "their own bosses" they have to deduct their own payroll taxes from that final figure. As "self-employed" workers, they aren't entitled to overtime or workers' comp, and they get no retirement plan, health insurance, sick days or vacation.

The apps sell themselves to bosses as a way to get vetted, qualified nurses, but the entire vetting process is automated. Nurses upload a laundry list of documents related to their qualifications and undergo a background check, but are never interviewed by a human. They are assessed through automated means – for example, they have to run a location-tracking app en route to callouts and their reliability scores decline if they lose mobile data service while stuck in traffic.

Shiftmed docks nurses who cancel shifts after agreeing to take them, but bosses who cancel on nurses, even at the last minute, get away with at most a small penalty (having to pay for the first two hours of a canceled shift), or, more often, nothing at all. For example, bosses who book nurses through the Carerev app can cancel without penalty on a mere two hours' notice. One nurse quoted in the study describes getting up at 5AM for a 7AM shift, only to discover that the shift was canceled while she slept, leaving her without any work or pay for the day, after having made arrangements for her kid to get childcare. The nurse assumes all the risk again: blocking out a day's work, paying for childcare, altering her sleep schedule. If she cancels on Carerev, her score goes down and she will get fewer shifts in the future. But if the boss cancels, he faces no consequences.

Carerev also lets bosses send nurses home early without paying them for the whole day – and they don't pay overtime if a nurse stays after her shift ends in order to ensure that their patients are cared for. The librarian scholar Fobazi Ettarh coined the term "vocational awe" to describe how workers in caring professions will endure abusive conditions and put in unpaid overtime because of their commitment to the patrons, patients, and pupils who depend on them:

https://www.inthelibrarywiththeleadpipe.org/2018/vocational-awe/

Many of the nurses in the study report having shifts canceled on them as they pull into the hospital parking lot. Needless to say, when your shift is canceled just as it was supposed to start, it's unlikely you'll be able to book a shift at another facility.

The American healthcare industry is dominated by monopolies. First came the pharma monopolies, when pharma companies merged and merged and merged, allowing them to screw hospitals with sky-high prices. Then the hospitals gobbled each other up, merging until most regions were dominated by one or two hospital chains, who could use buyer power to get a better deal on pharma prices – but also use seller power to screw the insurers with outrageous prices for care. So the insurers merged, too, until they could fight hospital price-gouging.

Everywhere you turn in the healthcare industry, you find another monopolist: pharmacists and pharmacy benefit managers, group purchasing organizations, medical beds, saline and supplies. Monopoly begets monopoly.

(Unitedhealthcare is extraordinary in that its divisions are among the most powerful players in all of these sectors, making it a monopolist among monopolists – for example, UHC is the nation's largest employer of physicians:)

https://www.thebignewsletter.com/p/its-time-to-break-up-big-medicine

But there are two key stakeholders in American health-care who can't monopolize: patients and health-care workers. We are the disorganized, loose, flapping ends at the beginning and end of the healthcare supply-chain. We are easy pickings for the monopolists in the middle, which is why patients pay more for worse care every year, and why healthcare workers get paid less for worse working conditions every year.

This is the one area where the Biden administration indisputably took action, bringing cases, making rules, and freaking out investment bankers and billionaires by repeatedly announcing that crimes were still crimes, even if you used an app to commit them.

The kind of treatment these apps mete out to nurses is illegal, app or no. In an important speech just last month, FTC commissioner Alvaro Bedoya explained how the FTC Act empowered the agency to shut down this kind of bossware because it is an "unfair and deceptive" form of competition:

https://pluralistic.net/2024/11/26/hawtch-hawtch/#you-treasure-what-you-measure

This is the kind of thing the FTC could be doing. Will Trump's FTC actually do it? The Trump campaign called the FTC "politicized" – but Trump's pick for the next FTC chair has vowed to politicize it even more:

https://theintercept.com/2024/12/18/trump-ftc-andrew-ferguson-ticket-fees/

Like Biden's FTC, Trump's FTC will have a target-rich environment if it wants to bring enforcement actions on behalf of workers. But Biden's trustbusters chose their targets by giving priority to the crooked companies that were doing the most harm to Americans, while Trump's trustbusters are more likely to give priority to the crooked companies that Trump personally dislikes:

https://pluralistic.net/2024/11/12/the-enemy-of-your-enemy/#is-your-enemy

So if one of these nursing apps pisses off Trump or one of his cronies, then yeah, maybe those nurses will get justice.

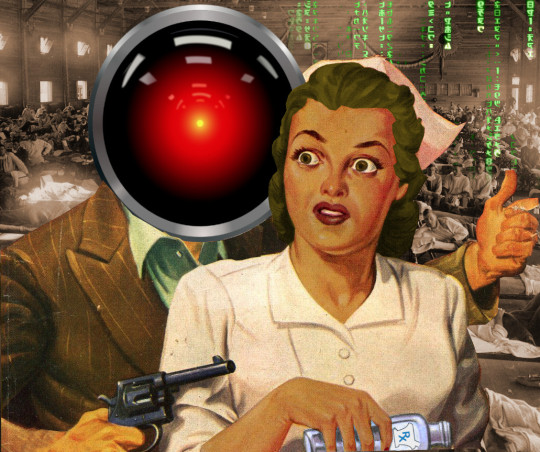

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#nursing#labor#algorithmic wage discrimination#uber for nurses#wage theft#gig economy#accountability sinks#precaratization#health#health care#usausausa#guillotine watch#monopolies#ai#roosevelt institute#shiftkey#shiftmed#carerev

410 notes

Text

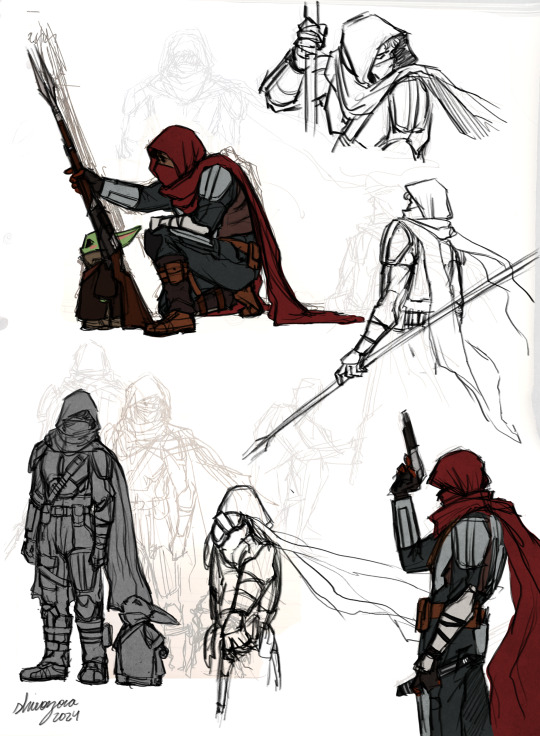

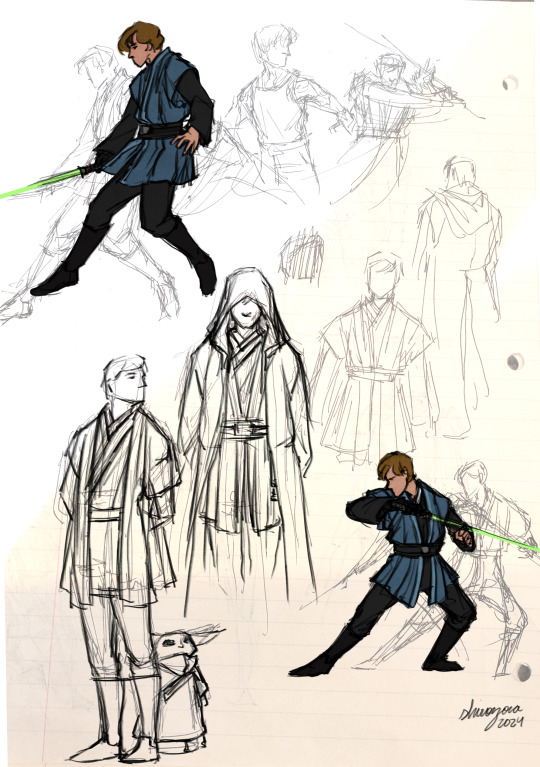

mustard on the beat, ho- MUSTAAAAAAAAAAAAARD Someone make it stop, make it fucking stop. I spent all week hyping myself up to gather up all my work sketches like a scrapbook and clean it all up to post here, and then Kendrick had to surprise drop on a Friday morning and ruin my work day and my headspace all weekend long.

Anyway, here's a dangerous dreams sketch dump.

It's been a long quiet, but RL had taken such a fucking toll that I had a real hard time finding the fucks to get creative. Who knows how much this past US presidential election will fuck up the entire rest of my life, but I'll take solace in finding community and in the little things and in Andor Season 2 and in the telling of The Stars.

Now that I got this out of the way, guess it's time to go fucking write some fucking words.

#shirozora draws#dinluke#lukedin#skydalorian#din djarin#luke skywalker#grogu#story: the stars#series: dangerous dreams#the mandalorian#star wars#how many more tags do i need?#anyway MUSTAAAAAAAAAAAAAAARD#istg this album has just taken over my entire brain#random snippets of every single song from this album keep playing in my head when i'm not actively listening#interrupted by MUSTAAAAAAAAAAAAAARD#i'm gonna laugh so hard if he screams mustard's name during the super bowl half-time show#and then 'mustard on the beat ho'#and let the audience sing all the words he probably had to censor out himself#also set up a second freshwater tank to eventually house a betta along with the shrimp culls so that's also been my life#and also locked down my twitter account and moved to bsky#been reposting art to bsky since i took everything down over in melon husks' echo chamber#keep your AI off my doodles you apartheiding fuckhead#time to climb back into this sandbox and work on the next chapter

378 notes

Text

Playing whack a mole with art thieves on Redbubble. Seems a bunch of my Locked Tomb designs have been scraped which is… cool.

As a soft reminder for consumers: if you’re buying from a shop like Redbubble/Teepublic etc., it’s a good idea to look at the shop’s overall portfolio. If it’s all over the place, it’s probably stolen art. It only takes a little digging to find the original artist to make sure they’re the ones getting paid for their work.

#ramblies#I’ve pulled down four copycats on the Nona burger#and another on my we do bones motherfucker#an actually good use for AI would be screening artist uploads to see if that artwork has previously been uploaded by a different account#cause all five of my cases have been direct grabs#I slapped watermarks on my designs but we’ll see if that helps at all

160 notes

Text

tonight! at 12am AST! the cd charms finally return!!! is this good marketing? I DONT KNOW!!!

#hlvrai#half life vr but the ai is self aware#gordon freeman#benrey#tommy coolatta#dr coomer#hlvrai bubby#i got a decent amount of them but after this i genuinely don't know if theyll come back!!!!!!!!#theyre a big money investment but an even bigger Time investment production-wise#another announcement incoming but from a different account some of you may recognize too :)

196 notes

Text

Hi...posting all my most popular dr strange fanarts for my first post since I'm currently known for that as of late <\3

#i only made an account on tumblr because of the meta ai thing on instagram 😭#dr strange#doctorstrange#multiverse of madness#dr strange multiverse of madness#blade marvel#eric brooks#breaking bad#brba#jesse pinkman#walter white#scarlet witch#wanda maximoff#wandavision#art#marvel#marvel fanart#fanart#titojefie#venture bros#dr orpheus#hatsune miku#megurine luka#artists on tumblr

367 notes

Text

ARISTOCATS AU W/ PRICE❗❗❗❗

tagging people who might be interested in seeing the final result after liking the work in progress ^^

@octopiys @gomzdrawfr @valscodblog @seconds-on-the-clock @freshlemontea

#ohhhmyygosssh look at themmmm 🥺🥺🥺🥺🥺#my art#THE SCHEDULING DIDNT WORK UGHUGHUDSNFHJSDFNJSDF#fanart#call of duty#cod#call of duty modern warfare#cod fanart#cod modern warfare#cod mw#cod mwiii#cod mwii#cod Price#captain price#john price x reader#captain john price#john price#the aristocats au#everytime i make a new au i remember that one ai roasting my instagram account#and said i make too many aus JSNDJKAD#a good day to be an artist#captain john price x reader#whatever you do dont think about price catching marie from falling#I SAID DONT

324 notes
