#direct preference

Text

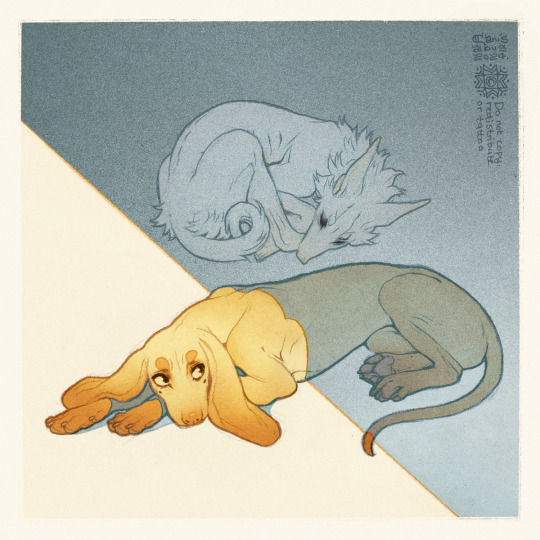

✦ Siesta ✦

#own art#own characters#CanisAlbus#art#artists on tumblr#Vasco#Machete#sighthound#dogs#canine#animals#Vasco's golden fur looks it's best in direct sunlight#Machete prefers shade because he burns easily and his eyes are sensitive to bright lights#I love light yellow I see yellow and I go HRAAAH

18K notes

Text

Putting things back together..

#tf2#lil pootis#blu team#blu medic#engiespy#tf2 medic#tf2 spy#tf2 engineer#blu spy#he loves his job#mfw i appreciate my medic but would still prefer to avoid direct eye contact (no reason in particular)

8K notes

Photo

Gentlemen Prefer Nature Girls (Doris Wishman, 1963)

4K notes

Text

🍑

#Pick him up and swing around#Pick him up and throw at somone#Deploy at a preferred direction#There are so many ways of weilding Steve Rogers#Bucky knows all the controls and failsafe#It's totally doable#steve rogers#stevebucky#james barns#james buchanan barnes#white wolf#stucky#steve bucky#steve and bucky#winter solider

171 notes

Text

ATEEZ(에이티즈) - 'Lemon Drop': Jongho

#ATEEZ#jonghoooo my guyyyyy#styling that works with his preferences and remains on theme? 10/10 we love it#i love when he gets to dance a lil harder in a comeback cause he starts making his stank faces when he's dancing i love that so much#can't wait for all the focus cameras for him imma add 1000 views my darn self#definitely feel like he was helping direct behind the scenes on this one. seems like he'd have fun messin with them with this one.#“it'd be better if you look this way then turn this way. Watch me.”#atz#ateez lemon dropping the killer visuals#jongho#kpop#8 makes 1 team#에이티니#에이티즈#종호#kq entertainment#ateez gifs#atiny#ateez lemon drop#golden hour pt 3#golden hour part 3#jongho gifs#choi jongho

146 notes

Note

hiiii can i request wheelchair user riz :)))) np ofc

-@transgenderfabianseacaster

YES PLEASE !!!!

#opened up the flood gates for the wheelchair user Riz headcanons I’ve been stirring up since I started FH#so in fy riz is already using mobility aids for his chronic fatigue but doesn’t prefer the wheelchair because the one he has is a-#secondhand hospital chair and the handles make it easy for people to direct him around which he HATES#so for most of fy he uses a cane (his dad’s old one that he thinks adds on to his cool detective aesthetic)#but in sy he essentially develops an autoimmune disease caused by kalina#(because he was born with her his body doesn’t know how to function once he’s cured and attacks itself in response. I’ve thought an it a lot#but it’s too much to put in tags)#it causes him a lot of pain and fatigue so he uses his Kalvaxus money to buy himself a better chair! yay!#he used to fall out a ton for various reasons so his mom made him get straps#I also spent like 20 minutes trying to figure out if being digitigrade would effect the structure of the chair? I came to zero conclusions#anyways#riz gukgak#fantasy high#d20#dimension 20#fhsy spoilers#undescribed#request#my art

158 notes

Text

I am still obsessed with how fucking rude Armand's little script notes were. "We need an animation here to convey how bad the hoarding was," bitch that is your boyfriend you are selling to his death. It's that necessary for you to let a whole theatre of humans know how bad his depression cave got?

#press says iwtv#interview with the vampire#admittedly armand is a weirdo who might be doing it for love of the game (directing his fucky little plays)#but he was exacting. he was mad.#possibly at louis possibly at lestat for preferring the depression hoarder because i will note that mr. lioncourt also broke laws and he#was supposed to make it out alive#anyway why the change of heart?#i'm sure it'll be covered in season three but lol i think it should be more coherent now

446 notes

Text

I like how I gave Frida two flags when she only needed one, meanwhile David has to squish both flags together. To be fair, it’s just like Frida to be incredibly organised and bring multiple backup flags, and for David to panic and not do that.

#which direction is his transgenderism? don’t ask me complicated questions#look all I know is that little guy ain’t cis#we as a fandom do make him mtf a lot which is AWESOME#but it’s also really sweet to imagine him as ftm#especially since he grew up with Frida#I imagine she helped him a lot when transitioning when they were little kids#frida is totally the kind of little kid to angrily correct adults misgendering people#David is probably too shy to correct people but frida is not#Hilda probably doesn’t know what being trans is until she moves to Trolberg and she thinks it’s so cool when she finds out#also nb Hilda! I think she uses she/they#she’s just like yeah I’m sort of a girl and I’m proud of that but I’m also definitely nothing close to a girl#like she doesn’t have a preference for either#I could see her using any pronouns other than he#I don’t think she’d use he#idk why#anyways those r just my headcanons happy pride!#hilda#hilda the series#netflix hilda#hilda netflix#art#my art#digital art#fanart#drawing#Hilda (Hilda)#David hilda#Hilda david#frida hilda#Hilda frida

439 notes

Text

study..? w flirty doods

#forgot to post this#gasp !!! same direction bangs#and wow wdym their Xs complement each other#hazbin hotel#charlie morningstar#vaggie#exorcist vaggie#hazbin hotel lute#chaggie#luggie#fallenwings#mybe art#small edit: if you prefer chaggie over luggie or vice versa it's ok but I really don't wanna know which one you think is the best#so please keep these kind of comments to yourself. thank you!

260 notes

Text

An angsty little comic I made for chapter 8 of my lem/sleeper fic, Carving Through the Dark :)

#i said it on my wip post and I’ll say it again#lem is in a slutty little tank top for PLOT REASONS not just because I prefer him that way#now did I purposely direct the plot in a way that he’d take some of his clothes off?#yeah#arrest me#carving through the dark fic#citizen sleeper#slem#lem citizen sleeper#sleeper citizen sleeper#extractor citizen sleeper#clarks art#my art#fanart#artists on tumblr#procreate

103 notes

Text

your secret boyfriend, luke, gets jealous over harry styles

words: 987

request fill: anon ask!

genre: fluff

tw: luke's petty, that's all.

author’s note: a short and sweet little luke fic!

“Wanna see my dressing room?”

Luke’s head snaps around as soon as the words fall upon his ears, eyes darting around to find your figure. Seeing as the Take Me Home tour with One Direction was his first big gig as an artist, he was eager to bring you along to experience it with him.

But now, watching as the star of the show, Harry Styles— with his brunet curls tousled perfectly and lean, muscular arms caging your body in against the speaker you sat on— casually invited you backstage, he wasn’t entirely sure it was the right decision.

“What back there is worth my time?..” You probe, looking over his shoulder at the fluorescent-lit hallway that led backstage. Harry laughs dryly and looks off to the side for a moment, thinking about the question for a second.

He glances back at you with a playful glint in his emerald green eyes. “You’ll have to see for yourself.”

Luke’s grip on the neck of his guitar tightens and he clears his throat into the microphone. “Check, check. One two, one two,” he says, a little louder than necessary. The man at the sound booth gives him a thumbs up from backstage, but Luke doesn’t notice it. His eyes stay trained on the way Harry seems to lean too comfortably into you as he talks. “Check, check,” he repeats louder, bringing his slender hands to the mic and pulling it closer to his mouth. The feedback buzzes in the air and garners the attention of any listeners, including Harry and yourself.

You give the boy a curt smile and a thumbs up, reassuring him that his audio was indeed working. Luke didn’t care about that, though. He just wanted your attention off of Harry.

“You sound great, Lu!” You call out from stage right, still leaning against the speaker with the boy’s arms on either side of you. You couldn’t deny that Harry was hot— you understood why he was considered such a heartthrob— but at the moment, you weren’t registering how the position could’ve looked compromising to your boyfriend. Even worse, your relationship wasn’t public yet. The boys knew, management knew, and his family knew. That was it.

Luke gives you a tight-lipped smile in response, eyes then traveling to Harry to give him a less-than-approving look. The brunet doesn’t get the message, though, and continues to make conversation. “Lu? S’ that some childhood nickname you gave him?” Harry teases.

“You could say.” Your answer amuses him. Girls that didn’t throw themselves at Harry were few and far between, but he enjoyed the challenge of getting them to break. “Where’s mine then?” His fingers prod a sensitive spot in your ribcage and you squeal. Keeling over, you try to squirm away from his fingers. “Huh? Where’s my nickname?”

From the center of the stage, Luke leans forward to try and follow your movement. The sound of your collective laughter didn’t exactly make him feel very comfortable. Your laughter alone brought butterflies to his stomach, but it wasn’t him making you laugh and that was the problem. Breaking the silence of the ambience once again, he strikes his pick against the strings and the sound reverberates loudly through the arena. Even the staff working around them stop in place to give him a confused, slightly irritated look. Just as he planned, the surprise sound breaks the two of you up.

Harry glances over irately and Luke, satisfied with himself, gives him a smug look. “Check,” he announces into the microphone after the chord slowly decrescendos.

“You don’t have to burst our eardrums for soundcheck,” Harry quips with a discontented scoff.

“You don’t have to have your hands all over her to have a conversation,” Luke retorts, leaning into the microphone so that the entire crew’s gaze now falls on you and Harry.

With a bruised ego, the boy steps back a little from you and gives you room to breathe. Even your cheeks heat up; you can’t imagine how Harry feels. With a little huff, Luke runs his hand through his blond quiff tiredly. “You’re all good, boys. You can exit the stage,” a stage manager calls out to them from underneath his headset. The other boys take their time setting their gear down and grabbing a drink, but Luke hurriedly sets his guitar down, steps away from the mic, and heads towards you.

Since he’d been called out, Harry had been less touchy-feely than before, but he still stuck around you. He seemed adamant about showing you his dressing room, letting you meet the other members of One Direction or any other excuse he could find to bring you with him backstage, and that pissed Luke off.

Wrapping his arms around your upper torso, Luke looms over you from behind. “Sorry about earlier, mate. Just trying to get the mic working properly.” He offers Harry a half-assed smile before letting his hands wander to your hips, giving them a reaffirming squeeze. “You havin’ fun backstage, baby?..” He stoops down a little to hear you properly as you respond. “Harry was just about to bring me to the dressing rooms!..” You tilt your head to the side, pecking his cheek sweetly. He hums at your words.

“Yeah? That sounds like fun! Mind if I join you two?” With an excited nod, you head towards the hallway backstage, the dingy lighting humming above you. Following close behind, Luke drapes his arm over your shoulder and gazes over his shoulder at Harry proudly. His hand travels down to your ass, which he knows Harry is staring at intently, and tucks into your back pocket, leaving only his middle finger out for Harry to see. Harry clears his throat uncomfortably.

“What’re you doing?” You raise an eyebrow at Luke and he shrugs, directing his vision back in front of him with his dimples on full display.

“Hm? Nothin’. Don’t worry ‘bout it.”

#requests#5 seconds of summer#5sos imagine#5sos fanfic#5sos preference#5sos smut#5sos#5sos x reader#luke hemmings#5sos luke#luke 5sos#long way home#english love affair#5sos groupies#5sos leaked#michael 5sos#michael clifford#5sos michael#fetus luke hemmings#fetus michael clifford#5sos calum#calum 5sos#calum hood#fetus calum hood#ashton irwin x reader#5sos ashton#ashton 5sos#ashton irwin#fetus ashton irwin#fetus one direction

107 notes

Text

“Friendship” in the Revolutionary Girl Utena light novels

#rgu#revolutionary girl utena#touga kiryuu#saionji kyouichi#utena tenjou#anthy himemiya#wakaba shinohara#tbh might become a diehard rgu light novels fan#i honestly loved the expansion on saionji and touga#but miki and kozue I’m not sure… some interesting things here and there but i prefer the direction in the show#honestly same impressions as after the revolution manga. loved touga and saionji. miki and kozue meh which is so sad#give me more good kozue pleaaase

88 notes

Text

I wonder how many people really love and relate to Alisaie because they too were the girl who struggled to understand how folks around her could act like suffering and selfishness and hivemind behavior was normal and their softness was called a weakness and so they built up an abrasive exterior to force people to take them seriously and now they don't know how to be vulnerable without feeling embarrassed or cringe even though they still feel things so deeply it's almost suffocating...

#it's me#i feel like i really understand why ali is always trying to prove herself#how strong and capable she is#that she isn't just emotional or impulsive#like i stated on another post alisaie's “violence” is more related to both her desire to be taken seriously#and also her preference for direct action rather than politics#she's a protector and defendor#and she is deeply frustrated by the way the world turned out#and i think she partially has felt very lonely in that for a long time#she just wants people to do right by each other#and she hates to see suffering and injustice#and she maybe hates even more that the general public kind of just falls in line#alisaie#alisaie leveilleur

295 notes

Text

Zayn's transformation 2011-2025

because he's so fckn beautiful god help me

#i can talk about this all day#zayn#zayn malik#1d#one direction#1dir#his doe eyes never changed#so hot and cute at the same time i cant#just step on me at this point#or i step on you whichever you prefer

71 notes

Text

oh hey that reminds me, i didn't put my Shigadeku animation here

set in a universe where he survived and was rehabilitated

#shigadeku#dekushiga#shigaraki tomura#izuku midoriya#fan animation#my animation#if i recall when i tried to post this after making it it never uploaded. i TRIED#btw i dont care what direction the name goes in i prefer characters to switch and/or flip#my art

117 notes

Text

last year in my sketchbook: can representational art be more sustainable for me if i take it less seriously? can i balance the two? | my art

#(can i imbue some of my passions into the academic side of art? will that help to bring me balance?)#the stuff i make now is a direct result of this stuff although it looks nowhere near this stuff. i think that was the question i didnt know#that i was asking myself as i was doing this whole series.#i love these dearly but they felt somehow impersonal. very important for me realizing i dont super prefer creating this kind of stuff#i think i was creating with an audience in mind and i dont like doing that anymore#got really into how each spread made shadows onto the next. how visible it all was and how i couldnt hide between them#really loved the confrontation of using ink at this time#traditional art#sketchbook tour#mine#ok to rb#my art#please dont repost without credit pleeease :p#artists on tumblr#art

296 notes
