#data and privacy


Text

For instructions on how to opt out, see the official staff post on the topic; it also gives more information on Tumblr's new policies. If you are opting out, remember that each blog has to be opted out individually.

Please reblog this post, so it will get more votes!

#third party sharing#third-party sharing#scrapping#ai scrapping#Polls#tumblr#tumblr staff#poll#please reblog#art#everything else#features#opt out#policies#data privacy#privacy#please boost#staff

47K notes


Text

youtube

Data privacy refers to the protection of personal and sensitive information collected, processed, and stored by individuals, organizations, or entities. It encompasses the set of practices, policies, regulations, and technologies designed to ensure that individuals have control over their personal data and that this data is handled in a way that respects their rights, maintains its confidentiality, integrity, and availability, and prevents unauthorized access, use, or disclosure.

Data privacy encompasses a range of concepts and principles that collectively aim to safeguard individuals' rights and interests in their personal information. It involves understanding and implementing measures to control who has access to your data, how it is used, and for what purposes. This extends to both online and offline contexts, as more and more of our activities and interactions occur in the digital realm.

Key aspects of data privacy include:

- Data Collection: Organizations collect various types of data from individuals, including names, addresses, phone numbers, email addresses, and more. Data privacy emphasizes the need for transparent and informed consent before collecting personal data, ensuring individuals are aware of how their data will be used.
- Data Storage and Security: Personal data should be securely stored to prevent unauthorized access, breaches, or leaks. Organizations are expected to implement robust cybersecurity measures to safeguard sensitive information.
- Data Processing: When organizations process personal data, such as analyzing it to gain insights or using it for targeted advertising, they must do so within the bounds of applicable laws and regulations. Individuals have the right to know what processing is taking place and to object to certain types of processing.
- Data Sharing: Personal data should not be shared with third parties without explicit consent from the individual. This includes sharing data with advertisers, marketers, or other businesses.
- User Control: Individuals should have the ability to access their own data, correct inaccuracies, and, in some cases, request the deletion of their data. This principle is enshrined in regulations like the European Union's General Data Protection Regulation (GDPR).

Laws and regulations related to data privacy vary by country, with some of the most prominent ones being the GDPR in Europe, the California Consumer Privacy Act (CCPA) in the United States, and various other regional and national regulations.

Understanding your digital rights in terms of data privacy empowers you to make informed decisions about sharing your personal information, using online services, and interacting in the digital world while maintaining a reasonable level of control over your own data.

#dataprivacy#dataprotection#digitalrights#datasecurity#limitlesstech#dataprivacydefinition#onlineprivacy#internetprivacy#techprivacy#dataprivacyandsecurity#dataandprivacy#dataprivacyexplained#dataprivacypolicy#dataprivacyawarness

What is Data Privacy? Understanding Your Digital Rights

#what is data privacy#understanding your digital rights#data privacy#data protection#data privacy definition#data security#digital rights#LimitLess Tech 888#online privacy#internet privacy#tech privacy#data privacy and security#data and privacy#dark side of data privacy#data privacy protection#privacy data#data protection and privacy#digital rights management#online privacy and security#data privacy explained#data privacy policy#data privacy awarness#Youtube

0 notes

Text

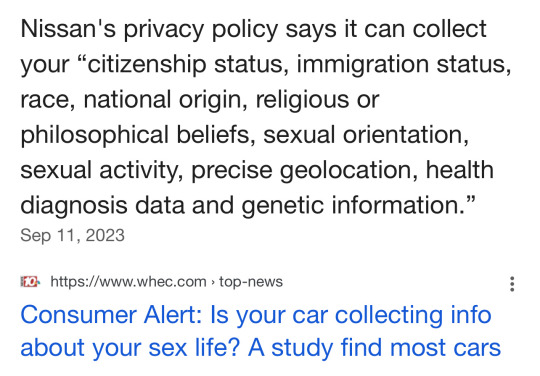

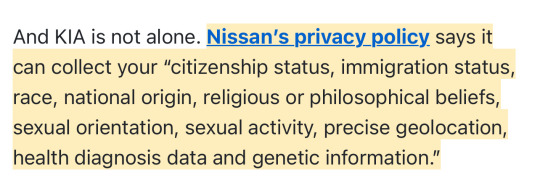

🗣️ This applies to all new internet-connected cars

A new study has found that your car likely knows more about you than your mom. That is disconcerting, but what’s even more so is what is being done with your information. It’s all about the Benjamins. Our private information is being collected and sold.

The Mozilla Foundation, a non-profit that studies internet and privacy issues, studied 25 car brands. It found that every one of them poses a greater risk to your privacy than any device, app or social media platform.

Our cars are rolling computers, many of them connected to the internet and collecting information about how and where you drive. New cars also have microphones and sensors that enable safety features like automatic braking and drowsy-driver detection, and those systems also provide information. Got GPS or satellite radio? Then your car likely knows your habits and your musical and political preferences.

Did you download your car's app, which gives you access to even more features? Well, that also gives your car access to your phone and all the information on it.

The study found that of the 25 car brands, 84% (21 brands) say they can share your personal data, and 76% (19 brands) say they can sell it.

And what they collect is astounding.

One example the study cites is Kia's privacy policy, which indicates that the company collects information about your sexual activity. I didn't believe it until I pulled up Kia's privacy policy and read it, and it's right there in black and white. It says it collects information about your “ethnicity, religious, philosophical beliefs, sexual orientation, sex life, or political opinions.”

And it says it can keep your info for “as long as is necessary for the legitimate business purpose set out in this privacy notice.”

Translation: Nissan can keep your information as long as they want to. And more than half of the manufacturers (56%) say they will share your information with law enforcement if asked.


#politics#data mining#smart cars#spyware#privacy rights#surveillance state#new cars#big brother#nissan#kia#connected cars#consumer alert#panopticon

9K notes


Text

(from The Mitchells vs. the Machines, 2021)

#the mitchells vs the machines#data privacy#ai#artificial intelligence#digital privacy#genai#quote#problem solving#technology#sony pictures animation#sony animation#mike rianda#jeff rowe#danny mcbride#abbi jacobson#maya rudolph#internet privacy#internet safety#online privacy#technology entrepreneur

8K notes


Text

So there's a class-action lawsuit against Google for spying on users who had the "don't track me" toggle turned on.

If you are in the US, had Google's tracking "paused", and used a mobile device with that account at any time since 2016, you count. That's going to be nearly every American who has ever poked at their privacy settings.

Google sent my email about this straight to the spam folder. I'm not going to say they did that on purpose, but whether it's malicious suppression of information or a poorly tuned auto-filter, it's, uh, let's say "funny".

So yeah, go check your spam folders, guys.

621 notes


Text

Your car spies on you and rats you out to insurance companies

I'm on tour with my new, nationally bestselling novel The Bezzle! Catch me TOMORROW (Mar 13) in SAN FRANCISCO with ROBIN SLOAN, then Toronto, NYC, Anaheim, and more!

Another characteristically brilliant Kashmir Hill story for The New York Times reveals another characteristically terrible fact about modern life: your car secretly records fine-grained telemetry about your driving and sells it to data-brokers, who sell it to insurers, who use it as a pretext to gouge you on premiums:

https://www.nytimes.com/2024/03/11/technology/carmakers-driver-tracking-insurance.html

Almost every car manufacturer does this: Hyundai, Nissan, Ford, Chrysler, etc etc:

https://www.repairerdrivennews.com/2020/09/09/ford-state-farm-ford-metromile-honda-verisk-among-insurer-oem-telematics-connections/

This is true whether you own or lease the car, and it's separate from the "black box" your insurer might have offered to you in exchange for a discount on your premiums. In other words, even if you say no to the insurer's carrot – a surveillance-based discount – they've got a stick in reserve: buying your nonconsensually harvested data on the open market.

I've always hated that saying, "If you're not paying for the product, you're the product," the reason being that it posits decent treatment as a customer reward program, like the little ramekin of warm nuts first-class passengers get before takeoff. Companies don't treat you well when you pay them. Companies treat you well when they fear the consequences of treating you badly.

Take Apple. The company offers Ios users a one-tap opt-out from commercial surveillance, and more than 96% of users opted out. Presumably, the other 4% were either confused or on Facebook's payroll. Apple – and its army of cultists – insist that this proves that our world's woes can be traced to cheapskate "consumers" who expected to get something for nothing by using advertising-supported products.

But here's the kicker: right after Apple blocked all its rivals from spying on its customers, it began secretly spying on those customers! Apple has a rival surveillance ad network, and even if you opt out of commercial surveillance on your Iphone, Apple still secretly spies on you and uses the data to target you for ads:

https://pluralistic.net/2022/11/14/luxury-surveillance/#liar-liar

Even if you're paying for the product, you're still the product – provided the company can get away with treating you as the product. Apple can absolutely get away with treating you as the product, because it lacks the historical constraints that prevented Apple – and other companies – from treating you as the product.

As I described in my McLuhan lecture on enshittification, tech firms can be constrained by four forces:

I. Competition

II. Regulation

III. Self-help

IV. Labor

https://pluralistic.net/2024/01/30/go-nuts-meine-kerle/#ich-bin-ein-bratapfel

When companies have real competitors – when a sector is composed of dozens or hundreds of roughly evenly matched firms – they have to worry that a maltreated customer might move to a rival. 40 years of antitrust neglect means that corporations were able to buy their way to dominance with predatory mergers and pricing, producing today's inbred, Habsburg capitalism. Apple and Google are a mobile duopoly, Google is a search monopoly, etc. It's not just tech! Every sector looks like this:

https://www.openmarketsinstitute.org/learn/monopoly-by-the-numbers

Eliminating competition doesn't just deprive customers of alternatives, it also empowers corporations. Liberated from "wasteful competition," companies in concentrated industries can extract massive profits. Think of how both Apple and Google have "competitively" arrived at the same 30% app tax on app sales and transactions, a rate that's more than 1,000% higher than the transaction fees extracted by the (bloated, price-gouging) credit-card sector:

https://pluralistic.net/2023/06/07/curatorial-vig/#app-tax
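"More than 1,000% higher" is easy to misread as "ten times". A minimal worked check, using only the 30% figure from the text and treating the credit-card fee as an unknown rate f (no specific card fee is assumed here): a rate that is 1,000% higher than f equals f + 10f = 11f, so the claim holds whenever

$$0.30 > 11f \iff f < \frac{0.30}{11} \approx 0.0273$$

Put differently, the comparison holds as long as card transaction fees stay below roughly 2.7% of each transaction.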

But cartels' power goes beyond the size of their war chest. The real source of a cartel's power is the ease with which a small number of companies can arrive at – and stick to – a common lobbying position. That's where "regulatory capture" comes in: the mobile duopoly has an easier time of capturing its regulators because two companies have an easy time agreeing on how to spend their app-tax billions:

https://pluralistic.net/2022/06/05/regulatory-capture/

Apple – and Google, and Facebook, and your car company – can violate your privacy because they aren't constrained by regulation, just as Uber can violate its drivers' labor rights and Amazon can violate your consumer rights. The tech cartels have captured their regulators and convinced them that the law doesn't apply if it's being broken via an app:

https://pluralistic.net/2023/04/18/cursed-are-the-sausagemakers/#how-the-parties-get-to-yes

In other words, Apple can spy on you because it's allowed to spy on you. America's last consumer privacy law was passed in 1988, and it bans video-store clerks from leaking your VHS rental history. Congress has taken no action on consumer privacy since the Reagan years:

https://www.eff.org/tags/video-privacy-protection-act

But tech has some special enshittification-resistant characteristics. The most important of these is interoperability: the fact that computers are universal digital machines that can run any program. HP can design a printer that rejects third-party ink and charge $10,000/gallon for its own colored water, but someone else can write a program that lets you jailbreak your printer so that it accepts any ink cartridge:

https://www.eff.org/deeplinks/2020/11/ink-stained-wretches-battle-soul-digital-freedom-taking-place-inside-your-printer

Tech companies that contemplated enshittifying their products always had to watch over their shoulders for a rival that might offer a disenshittification tool and use that as a wedge between the company and its customers. If you make your website's ads 20% more obnoxious in anticipation of a 2% increase in gross margins, you have to consider the possibility that 40% of your users will google "how do I block ads?" Because the revenue from a user who blocks ads doesn't stay at 100% of the current levels – it drops to zero, forever (no user ever googles "how do I stop blocking ads?").
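A rough worked example of that trade-off, under simplifying assumptions that are not in the text: treat the 2% gain as a 2% rise in revenue per non-blocking user, let each user currently bring in revenue r, and assume the 40% who install an ad-blocker drop to zero. Expected revenue per original user after the change is then

$$R_{\text{after}} = (1 - 0.40) \times 1.02\,r = 0.612\,r$$

which is a loss of about 39% (0.388 r). More generally, with a blocking share b, the change only pays off if

$$(1 - b) \times 1.02\,r > r \iff b < 1 - \tfrac{1}{1.02} \approx 0.0196$$

Under these assumptions, losing even 2% of users to ad-blockers would wipe out the entire gain.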

The majority of web users are running an ad-blocker:

https://doc.searls.com/2023/11/11/how-is-the-worlds-biggest-boycott-doing/

Web operators made them an offer ("free website in exchange for unlimited surveillance and unfettered intrusions") and they made a counteroffer ("how about 'nah'?"):

https://www.eff.org/deeplinks/2019/07/adblocking-how-about-nah

Here's the thing: reverse-engineering an app – or any other IP-encumbered technology – is a legal minefield. Just decompiling an app exposes you to felony prosecution: a five-year sentence and a $500k fine for violating Section 1201 of the DMCA. But it's not just the DMCA – modern products are surrounded with high-tech tripwires that allow companies to invoke IP law to prevent competitors from augmenting, reconfiguring or adapting their products. When a business says it has "IP," it means that it has arranged its legal affairs to allow it to invoke the power of the state to control its customers, critics and competitors:

https://locusmag.com/2020/09/cory-doctorow-ip/

An "app" is just a web-page skinned in enough IP to make it a crime to add an ad-blocker to it. This is what Jay Freeman calls "felony contempt of business model" and it's everywhere. When companies don't have to worry about users deploying self-help measures to disenshittify their products, they are freed from the constraint that prevents them from indulging the impulse to shift value from their customers to themselves.

Apple owes its existence to interoperability – its ability to clone Microsoft Office's file formats for Pages, Numbers and Keynote, which saved the company in the early 2000s – and ever since, it has devoted its existence to making sure no one ever does to Apple what Apple did to Microsoft:

https://www.eff.org/deeplinks/2019/06/adversarial-interoperability-reviving-elegant-weapon-more-civilized-age-slay

Regulatory capture cuts both ways: it's not just about powerful corporations being free to flout the law, it's also about their ability to enlist the law to punish competitors that might constrain their plans for exploiting their workers, customers, suppliers or other stakeholders.

The final historical constraint on tech companies was their own workers. Tech has very low union density, but that's in part because individual tech workers enjoyed so much bargaining power due to their scarcity. This is why their bosses pampered them with whimsical campuses filled with gourmet cafeterias, fancy gyms and free massages: it allowed tech companies to convince tech workers to work like government mules by flattering them that they were partners on a mission to bring the world to its digital future:

https://pluralistic.net/2023/09/10/the-proletarianization-of-tech-workers/

For tech bosses, this gambit worked well, but failed badly. On the one hand, they were able to get otherwise powerful workers to consent to being "extremely hardcore" by invoking Fobazi Ettarh's spirit of "vocational awe":

https://www.inthelibrarywiththeleadpipe.org/2018/vocational-awe/

On the other hand, when you motivate your workers by appealing to their sense of mission, the downside is that they feel a sense of mission. That means that when you demand that a tech worker enshittify something they missed their mother's funeral to deliver, they will experience a profound sense of moral injury and refuse, and that worker's bargaining power means that they can make it stick.

Or at least, it did. In this era of mass tech layoffs, when Google can fire 12,000 workers after an $80b stock buyback that would have paid their wages for the next 27 years, tech workers are learning that the answer to "I won't do this and you can't make me" is "don't let the door hit you in the ass on the way out" (AKA "sharpen your blades boys"):

https://techcrunch.com/2022/09/29/elon-musk-texts-discovery-twitter/
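The buyback comparison implies an average annual cost per laid-off worker. A quick check using only the figures in the text ($80b, 12,000 workers, 27 years), and assuming the money is spread evenly across workers and years:

$$\frac{\$80{,}000{,}000{,}000}{12{,}000 \times 27} = \frac{\$80{,}000{,}000{,}000}{324{,}000} \approx \$246{,}914 \text{ per worker per year}$$

So the 27-year figure corresponds to roughly $247,000 a year for each of the 12,000 workers.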

With competition, regulation, self-help and labor cleared away, tech firms – and firms that have wrapped their products around the pluripotently malleable core of digital tech, including automotive makers – are no longer constrained from enshittifying their products.

And that's why your car manufacturer has chosen to spy on you and sell your private information to data-brokers and anyone else who wants it. Not because you didn't pay for the product, so you're the product. It's because they can get away with it.

Cars are enshittified. The dozens of chips that automakers have shoveled into their car designs are only incidentally related to delivering a better product. The primary use for those chips is autoenshittification – access to legal strictures ("IP") that allow them to block modifications and repairs that would interfere with the unfettered abuse of their own customers:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

The fact that it's a felony to reverse-engineer and modify a car's software opens the floodgates to all kinds of shitty scams. Remember when Bay Staters were voting on a ballot measure to impose right-to-repair obligations on automakers in Massachusetts? The only reason they needed to have the law intervene to make right-to-repair viable is that Big Car has figured out that if it encrypts its diagnostic messages, it can felonize third-party diagnosis of a car, because decrypting the messages violates the DMCA:

https://www.eff.org/deeplinks/2013/11/drm-cars-will-drive-consumers-crazy

Big Car figured out that VIN locking – DRM for engine components and subassemblies – can felonize the production and the installation of third-party spare parts:

https://pluralistic.net/2022/05/08/about-those-kill-switched-ukrainian-tractors/

The fact that you can't legally modify your car means that automakers can go back to their pre-2008 ways, when they transformed themselves into unregulated banks that incidentally manufactured the cars they sold subprime loans for. Subprime auto loans – over $1t worth! – absolutely rely on the fact that borrowers' cars can be remotely controlled by lenders. Miss a payment and your car's stereo turns itself on and blares threatening messages at top volume, which you can't turn off. Break the lease agreement that says you won't drive your car over the county line and it will immobilize itself. Try to change any of this software and you'll commit a felony under Section 1201 of the DMCA:

https://pluralistic.net/2021/04/02/innovation-unlocks-markets/#digital-arm-breakers

Tesla, naturally, has the most advanced anti-features. Long before BMW tried to rent you your seat-heater and Mercedes tried to sell you a monthly subscription to your accelerator pedal, Teslas were demon-haunted nightmare cars. Miss a Tesla payment and the car will immobilize itself and lock you out until the repo man arrives, then it will blare its horn and back itself out of its parking spot. If you "buy" the right to fully charge your car's battery or use the features it came with, you don't own them – they're repossessed when your car changes hands, meaning you get less money on the used market because your car's next owner has to buy these features all over again:

https://pluralistic.net/2023/07/28/edison-not-tesla/#demon-haunted-world

And all this DRM allows your car maker to install spyware that you're not allowed to remove. They really tipped their hand on this when the R2R ballot measure was steaming towards an 80% victory, with wall-to-wall scare ads that revealed that your car collects so much information about you that allowing third parties to access it could lead to your murder (no, really!):

https://pluralistic.net/2020/09/03/rip-david-graeber/#rolling-surveillance-platforms

That's why your car spies on you. Because it can. Because the company that made it lacks constraint, be it market-based, legal, technological or its own workforce's ethics.

One common critique of my enshittification hypothesis is that this is "kind of sensible and normal" because "there’s something off in the consumer mindset that we’ve come to believe that the internet should provide us with amazing products, which bring us joy and happiness and we spend hours of the day on, and should ask nothing back in return":

https://freakonomics.com/podcast/how-to-have-great-conversations/

What this criticism misses is that this isn't the companies bargaining to shift some value from us to them. Enshittification happens when a company can seize all that value, without having to bargain, exploiting law and technology and market power over buyers and sellers to unilaterally alter the way the products and services we rely on work.

A company that doesn't have to fear competitors, regulators, jailbreaking or workers' refusal to enshittify its products doesn't have to bargain, it can take. It's the first lesson they teach you in the Darth Vader MBA: "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

Your car spying on you isn't down to your belief that your carmaker "should provide you with amazing products, which bring you joy and happiness and which you spend hours of the day on, and should ask nothing back in return." It's not because you didn't pay for the product, so now you're the product. It's because they can get away with it.

The consequences of this spying go much further than mere insurance premium hikes, too. Car telemetry sits at the top of the funnel that the unbelievably sleazy data broker industry uses to collect and sell our data. These are the same companies that sell the fact that you visited an abortion clinic to marketers, bounty hunters, advertisers, or vengeful family members pretending to be one of those:

https://pluralistic.net/2022/05/07/safegraph-spies-and-lies/#theres-no-i-in-uterus

Decades of pro-monopoly policy led to widespread regulatory capture. Corporate cartels use the monopoly profits they extract from us to pay for regulatory inaction, allowing them to extract more profits.

But when it comes to privacy, that period of unchecked corporate power might be coming to an end. The lack of privacy regulation is at the root of so many problems that a pro-privacy movement has an unstoppable constituency working in its favor.

At EFF, we call this "privacy first." Whether you're worried about grifters targeting vulnerable people with conspiracy theories, or teens being targeted with media that harms their mental health, or Americans being spied on by foreign governments, or cops using commercial surveillance data to round up protesters, or your car selling your data to insurance companies, passing that long-overdue privacy legislation would turn off the taps for the data powering all these harms:

https://www.eff.org/wp/privacy-first-better-way-address-online-harms

Traditional economics fails because it thinks about markets without thinking about power. Monopolies lead to more than market power: they produce regulatory capture, power over workers, and state capture, which felonizes competition through IP law. The story that our problems stem from the fact that we just don't spend enough money, or buy the wrong products, only makes sense if you willfully ignore the power that corporations exert over our lives. It's nice to think that you can shop your way out of a monopoly, because that's a lot easier than voting your way out of a monopoly, but no matter how many times you vote with your wallet, the cartels that control the market will always win:

https://pluralistic.net/2024/03/05/the-map-is-not-the-territory/#apor-locksmith

Name your price for 18 of my DRM-free ebooks and support the Electronic Frontier Foundation with the Humble Cory Doctorow Bundle.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/03/12/market-failure/#car-wars

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#if you're not paying for the product you're the product#if you're paying for the product you're the product#cars#automotive#enshittification#technofeudalism#autoenshittification#antifeatures#felony contempt of business model#twiddling#right to repair#privacywashing#apple#lexisnexis#insuretech#surveillance#commercial surveillance#privacy first#data brokers#subprime#kash hill#kashmir hill

2K notes


Text

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted may 29#privacy#online privacy#data privacy#internet privacy#ads#targeted ads

406 notes


Text

Websites that demand you give them extremely private information to "verify your age"

#would you punt them#into the sun#tumblr poll#polls#poll#tumblr polls#google#data protection#data privacy#online privacy#internet privacy#privacy#record sweep

671 notes


Text

I think it is very cool how tech companies, schools, employers, and universities make it actively difficult to distance yourself from Google, Microsoft, and Apple.

Yes, most Linux distros are very stable, way more secure, privacy-friendly, and way more customizable. But every institution is built to make technological independence as difficult as possible.

Yelling on the internet that everyone should switch to Linux and FOSS really ignores how much of the technological world is designed to not let that happen.

#yes switch to linux if you can#Data privacy and security needs to be addressed on a much larger legal scale#you cant consume your way out of this my friends#opensuse#linux#open source#data privacy

658 notes


Text

Christmas Data Strike 2024

Instagram is literally suppressing every video I made on Reels about this, so I'm turning to Tumblr. On the 24th and 25th, we are striking on Meta services (Instagram, Facebook) as well as X/Twitter and TikTok. Streaming services, Google, and any Amazon services should be boycotted as well if possible.

DO ✅

Engage with activities that are offline

Spend as little time online as possible

Tell your friends and family that you’re going dark and then put your phone in airplane mode!!

DO NOT ❌

Use any of these socials, period. Do not give them your screen time

Use Google to search for anything - use an alternative search engine

Buy anything online

Stream television or music - download your shows and watch offline if necessary

Why?

These corporations, especially during Christmas, use our data to train their models for next year. They want to know what gifts we got and what we're doing with them. By depriving these companies of our data, we are saying that we want to maintain the right to our data and privacy! Here's a TikTok video that explains in more detail.

PLEASE reblog for more reach!!! Tumblr is the only platform I can think of that won’t suppress this strike.

#this isn’t just some fringe movement i made up either#millions of people are already doing this on tiktok#so please take this as a sign to participate#it may be difficult but i promise it’s worth it#data strike 2024#data strike#anti meta#anti capitalism#anti corporations#us politics#politics#donald trump#luigi mangione#data privacy#strike#privacy rights#anti social media#netflix#instagram#spotify#facebook#meta#tiktok#google#uhc

155 notes

Text

Hey, so I just saw this on Twitter and figured some people would like to know: @infinitytraincrew is apparently getting deleted tonight, so if you wanna archive it, do it now.

#infinity train#third-party sharing#owen dennis#anti ai#tumblr staff making stupid decisions again#cryptid says stuff#don't just glaze it actively nightshade it#ai scraping#data privacy

417 notes

Photo

(via Vending machine error reveals secret face image database of college students | Ars Technica)

Canada-based University of Waterloo is racing to remove M&M-branded smart vending machines from campus after outraged students discovered the machines were covertly collecting facial-recognition data without their consent.

The scandal started when a student using the alias SquidKid47 posted an image on Reddit showing a campus vending machine error message, "Invenda.Vending.FacialRecognitionApp.exe," displayed after the machine failed to launch a facial recognition application that nobody expected to be part of the process of using a vending machine.

"Hey, so why do the stupid M&M machines have facial recognition?" SquidKid47 pondered.

The Reddit post sparked an investigation from a fourth-year student named River Stanley, who was writing for a university publication called MathNEWS.

Stanley sounded the alarm after consulting Invenda sales brochures that promised "the machines are capable of sending estimated ages and genders" of every person who used the machines, without ever requesting consent.

This frustrated Stanley, who discovered that Canada's privacy commissioner had years ago investigated a shopping mall operator called Cadillac Fairview after discovering some of the malls' informational kiosks were secretly "using facial recognition software on unsuspecting patrons."

Only because of that official investigation did Canadians learn that "over 5 million nonconsenting Canadians" were scanned into Cadillac Fairview's database, Stanley reported. Whereas Cadillac Fairview was ultimately forced to delete the entire database, Stanley wrote that the consequences for Invenda clients like Mars that collect similarly sensitive facial recognition data without consent remain unclear.

Stanley's report ended with a call for students to demand that the university "bar facial recognition vending machines from campus."

what the motherfuck

#m&m vending machine#secret face image database#college students#massive invasion of privacy#tech#collecting facial-recognition data without consent

474 notes

Text

The Supreme Court of Canada made a key privacy ruling Friday that means police must now first have a warrant or court order to obtain the numbers making up a person or organization's IP address.

The top court was asked to consider whether an IP address alone, without any of the personal information attached to it, was protected by an expectation of privacy under the Charter.

In a five-four split decision, the court said a reasonable expectation of privacy is attached to the numbers making up a person's IP address, and just getting those numbers alone constitutes a search.

Full article

Tagging: @politicsofcanada

#cdnpoli#canada#canadian politics#canadian news#canadian#privacy#expectation of privacy#ip address#ip addresses#digital privacy#data privacy#supreme court

368 notes

Text

reminder not to do what i almost did just now and tell tumblr what your high school mascot is/was just because someone made a fun poll about it, because that's a SECURITY QUESTION ON A BUNCH OF YOUR PASSWORD-PROTECTED PLATFORMS

277 notes

Text

#writing#my writing#my artwrok#my art#leftist#leftism#leftist art#anti capitalist#anti capitalism#anticapitalista#anticapitalist memes#anticapitalist art#protest art#cyberpunk#social media#web 2.0#data privacy#online privacy#internet privacy#invasion of privacy#robots#robot#feminist#feminism#anti censorship#online censorship#internet censorship#internet culture#enshittification#adblock

205 notes