#data brokers

Ad-tech targeting is an existential threat

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in TORONTO on SUNDAY (Feb 23) at Another Story Books, and in NYC on WEDNESDAY (26 Feb) with JOHN HODGMAN. More tour dates here.

The commercial surveillance industry is almost totally unregulated. Data brokers, ad-tech, and everyone in between – they harvest, store, analyze, sell and rent every intimate, sensitive, potentially compromising fact about your life.

Late last year, I testified at a Consumer Financial Protection Bureau hearing about a proposed new rule to kill off data brokers, who are the lynchpin of the industry:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

The other witnesses were fascinating – and chilling. There was a lawyer from the AARP who explained how data-brokers would let you target ads to categories like "seniors with dementia." Then there was someone from the Pentagon, discussing how anyone could do an ad-buy targeting "people enlisted in the armed forces who have gambling problems." Sure, I thought, and you don't even need these explicit categories: if you served an ad to "people 25-40 with Ivy League/Big Ten law or political science degrees within 5 miles of Congress," you could serve an ad with a malicious payload to every Congressional staffer.

Now, that's just the data brokers. The real action is in ad-tech, a sector dominated by two giant companies, Meta and Google. These companies claim that they are better than the unregulated data-broker cowboys at the bottom of the food-chain. They say they're responsible wielders of unregulated monopoly surveillance power. Reader, they are not.

Meta has been repeatedly caught offering ad-targeting like "depressed teenagers" (great for your next incel recruiting drive):

https://www.technologyreview.com/2017/05/01/105987/is-facebook-targeting-ads-at-sad-teens/

And Google? They just keep on getting caught with both hands in the creepy commercial surveillance cookie-jar. Today, Wired's Dell Cameron and Dhruv Mehrotra report on a way to use Google to target people with chronic illnesses, people in financial distress, and national security "decision makers":

https://www.wired.com/story/google-dv360-banned-audience-segments-national-security/

Google doesn't offer these categories itself; it just allows data-brokers to assemble them and offer them for sale via Google. Just as it's possible to generate a target of "Congressional staffers" by using location and education data, it's possible to target people with chronic illnesses based on things like whether they regularly travel to clinics that treat HIV, asthma, chronic pain, etc.
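To make the mechanics concrete, here is a minimal sketch of how a sensitive audience can fall out of nothing but innocuous-looking attributes. Everything in it is hypothetical: the `Profile` record, its field names, the sample rows, and the `build_segment` helper are invented for illustration and do not describe any real broker's data or any Google API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    # Hypothetical broker record; every field here is invented for illustration.
    user_id: str
    age: int
    degree: str
    miles_from_capitol: float


def build_segment(profiles, min_age, max_age, degrees, max_miles):
    """Return the IDs of profiles that match every filter (a plain intersection)."""
    return [
        p.user_id
        for p in profiles
        if min_age <= p.age <= max_age
        and p.degree in degrees
        and p.miles_from_capitol <= max_miles
    ]


# Made-up sample data: no single field says "Congressional staffer".
profiles = [
    Profile("a", 31, "law", 1.2),
    Profile("b", 52, "law", 0.8),
    Profile("c", 28, "political science", 3.9),
    Profile("d", 35, "nursing", 2.0),
    Profile("e", 29, "law", 410.0),
]

segment = build_segment(profiles, 25, 40, {"law", "political science"}, 5.0)
print(segment)
```

None of the three filters is sensitive on its own. It is the intersection that narrows the audience down to a group worth attacking, which is why a ban on explicit categories does not solve the problem by itself.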

Google claims that this violates their policies, and that they have best-of-breed technical measures to prevent this from happening, but when Wired asked how this data-broker was able to sell these audiences – including people in menopause, or with "chronic pain, fibromyalgia, psoriasis, arthritis, high cholesterol, and hypertension" – Google did not reply.

The data broker in the report also sold access to people based on which medications they took (including Ambien), people who abuse opioids or are recovering from opioid addiction, people with endocrine disorders, and "contractors with access to restricted US defense-related technologies."

It's easy to see how these categories could enable blackmail, spear-phishing, scams, malvertising, and many other crimes that threaten individuals, groups, and the nation as a whole. The US Office of the Director of National Intelligence has already published details of how "anonymous" people targeted by ads can be identified:

https://www.odni.gov/files/ODNI/documents/assessments/ODNI-Declassified-Report-on-CAI-January2022.pdf

The most amazing part is how the 33,000 targeting segments came to public light: an activist just pretended to be an ad buyer, and the data-broker sent him the whole package, no questions asked. Johnny Ryan is a brilliant Irish privacy activist with the Irish Council for Civil Liberties. He created a fake data analytics website for a company that wasn't registered anywhere, then sent out a sales query to a brokerage (the brokerage isn't identified in the piece, to prevent bad actors from using it to attack targeted categories of people).

Foreign states, including China – a favorite boogeyman of the US national security establishment – can buy Google's data and target users using Google's ad-tech stack. In the past, Chinese spies have used malvertising – serving targeted ads loaded with malware – to attack their adversaries. Chinese firms spend billions every year to target ads to Americans:

https://www.nytimes.com/2024/03/06/business/google-meta-temu-shein.html

Google and Meta have no meaningful checks to prevent anyone from establishing a shell company that buys and targets ads with their services, and the data-brokers that feed into those services are even less well-protected against fraud and other malicious acts.

All of this is only possible because Congress has failed to act on privacy since 1988. That's the year that Congress passed the Video Privacy Protection Act, which bans video store clerks from telling the newspapers which VHS cassettes you have at home. That's also the last time Congress passed a federal consumer privacy law:

https://en.wikipedia.org/wiki/Video_Privacy_Protection_Act

The legislative history of the VPPA is telling: it was passed after a newspaper published the leaked video-rental history of a far-right judge named Robert Bork, whom Reagan hoped to elevate to the Supreme Court. Bork failed his Senate confirmation hearings, but not because of his video rentals (he actually had pretty good taste in movies). Rather, it was because he was a Nixonite criminal and virulent loudmouth racist whose record was strewn with the most disgusting nonsense imaginable.

But the leak of Bork's video-rental history gave Congress the cold grue. His video rental history wasn't embarrassing, but it sure seemed like Congress had some stuff in its video-rental records that they didn't want voters finding out about. They beat all land-speed records in making it a crime to tell anyone what kind of movies they (and we) were watching.

And that was it. For 37 years, Congress has completely failed to pass another consumer privacy law. Which is how we got here – to this moment where you can target ads to suicidal teens, gambling-addicted soldiers in Minuteman silos, grannies with Alzheimer's, and every Congressional staffer on the Hill.

Some people think the problem with mass surveillance is a kind of machine-driven, automated mind-control ray. They believe the self-aggrandizing claims of tech bros to have finally perfected the elusive mind-control ray, using big data and machine learning.

But you don't need to accept these outlandish claims – which come from Big Tech's sales literature, wherein they boast to potential advertisers that surveillance ads are devastatingly effective – to understand how and why this is harmful. If you're struggling with opioid addiction and I target an ad to you for a fake cure or rehab center, I haven't brainwashed you – I've just tricked you. We don't have to believe in mind-control to believe that targeted lies can cause unlimited harms.

And those harms are indeed grave. Stein's Law predicts that "anything that can't go on forever eventually stops." Congress's failure on privacy has put us all at risk – including Congress. It's only a matter of time until the commercial surveillance industry is responsible for a massive leak, targeted phishing campaign, or a ghastly national security incident involving Congress. Perhaps then we will get action.

In the meantime, the coalition of people whose problems can be blamed on the failure to update privacy law continues to grow. That coalition includes protesters whose identities were served up to cops, teenagers who were tracked to out-of-state abortion clinics, people of color who were discriminated against in hiring and lending, and anyone who's been harassed with deepfake porn:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/02/20/privacy-first-second-third/#malvertising

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#google#ad-tech#ad targeting#surveillance capitalism#vppa#video privacy protection act#mind-control rays#big tech#privacy#privacy first#surveillance advertising#behavioral advertising#data brokers#cfpb

419 notes

Today, Mozilla Monitor (previously called Firefox Monitor), a free service that notifies you when your email has been part of a breach, announced its new paid subscription service offering: automatic data removal and continuous monitoring of your exposed personal information.

On your behalf, Mozilla Monitor will start with data removal requests, then scan every month to make sure your personal information stays off data broker sites. Monitor Plus will let you know once your personal information has been removed from more than 190 data broker sites.

9 notes

youtube

#Aperture#video essay#algorithm#algorithms#Eric Loomis#COMPAS#thought piece#computer#computer program#data#data brokers#targeted ads#data breach#terminal#the silver machine#AI#machine learning#healthcare#tech#technology#profit#Youtube

2 notes

The FBI, CBP, and other agencies can track your location using WiFi and GPS data, but they rarely manage to piece together enough of your location data to get a conviction without a confession. Most of this data is actually useless without other evidence or a confession. There's also the easy method of making all of your digital behavior so random and unpredictable that their machines can’t make predictions about you, and any agents get a headache trying to understand what you’re doing. You can also use multiple phones logged into the same account running in different locations, faraday bags, and custom encrypted operating systems.

#social engineering#hacking#location data#GPS#WiFi#data spoofing#faraday bags#computers#programming#data#data brokers

3 notes

Victory! California’s new data broker law will hold data brokers accountable and give us needed control over our data by making it easier to exercise our privacy rights.

Read more about what the new law does here:

#privacy #databrokers #CA

#privacy#data brokers#california#usa#america#law#humanrights#invasion of privacy#privacy rights#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government#native american#amerikkka#amerika#united states#unitedstateofamerica#class war#eat the rich#eat the fucking rich#fuck the gop#fuck the police#fuck the patriarchy

5 notes

our anonymity, our right to privacy and therefore our right to PEACE is constantly being stripped away from us

"oh i dont care if google tracks and makes money off my data all they use it for is ads"

if thats you ^ you are FOOLISH to think this

maybe you have nothing to lose? youve never done anything wrong so you have nothing to hide?

but its never been about right and wrong doings. if the right kinds of oppressed people are considered wrong enough, then they will be prosecuted and their identity is more than enough to criminalize them

if you are not concerned its not about you.

the people who are concerned are not paranoid. they are likely one bad law or political movement away from becoming a victim of this terrible system.

and then our only saving grace is anonymity. your voice can and will be used against you if they know who it belongs to

the fingerprint data brokers have on us should be horrifying. the loophole that police dont need a warrant if the information is obtained via a data broker should be horrifying.

by normalizing it you do nothing but help big brother. we are living in 1984 and that is not a meme, its true.

whatever you can do within your own power to help prevent this is better than nothing. just switching browsers from chrome to Firefox is a big step.

learn to protect your privacy. not just for you but for all the rest of us too.

#we need to start caring about this collectively l#i can see how this is playing out and it give horrible precedent to policing to come#please protect yourself#please help protect others#data#online fingerprinting#police#data brokers#right to privacy#privacy#1984

4 notes

Ah, data brokers, gathering info for the US government.

5 notes

The context of the state of internet privacy laws (or lack thereof) makes this “TikTok ban” even wilder.

Learn stuff the US govt doesn’t want you to know👇🏾^…^👇🏾

Congress is moving urgently to pass a TikTok ban that nobody asked for while 23 million homes are about to be priced out of affording the internet. 🔍 Affordable Connectivity Program

As a lil cherry on top, Congress has been dragging their feet to stop government surveillance. Our government is buying and selling our personal data from/to foreign adversaries. And yes, it is unconstitutional AF 🔍 Section-702 Foreign Intelligence Surveillance Act

This TikTok ban is the result of a wombo-combo of Sinophobia at the highest rungs of our government, bloated military spending, and excessive lobbying of right wing lawmakers by Facebook (Meta) to eliminate competition. 🔍Targeted Victory “Slap a Teacher” trend

So yeah fuck all this. We need to start demanding real data privacy from our government. Some helpful terms to know when you call your goons.

Data minimization: limit the kind of data collected and for how long

Net Neutrality: protection from Internet Providers selling our browsing information + blocking access to certain sites

Close the Digital Divide: establish affordable, readily available internet in public + private locations to stop internet/data monopolies + digital discrimination.

Regulate Data Brokers: Monitor data vendors and punish irresponsible/ illegal data purchases to protect privacy.

Trust, they don’t want you to know this stuff. Your call to your rep will be 10x spookier if you say any of this👆🏾

so the house of representatives just passed a bill that will now move to the senate to BAN tik tok completely in the united states and they are expected to argue that “national security risks” outweigh the freedom of speech and first amendment rights. biden has already said that if it gets to him, he will sign it. whether or not you use the app… this is something to be worried about

#ray writes#tik tok#tik tok ban#us politics#ref#data privacy#tiktok#cyberpunk#hope punk#government surveillance#sec 702 fisa#702#ACP#affordable connectivity program#internet access#data brokers#data poisoning#call your reps folks#call your reps#call your senators#Congress#us senate

55K notes

They were warned

Picks and Shovels is a new, standalone technothriller starring Marty Hench, my two-fisted, hard-fighting, tech-scam-busting forensic accountant. You can pre-order it on my latest Kickstarter, which features a brilliant audiobook read by Wil Wheaton.

Truth is provisional! Sometimes, the things we understand to be true about the world change, and stuff we've "always done" has to change, too. There comes a day when the evidence against using radium suppositories is overwhelming, and then you really must dig that radium out of your colon and safely dispose of it:

https://pluralistic.net/2024/09/19/just-stop-putting-that-up-your-ass/#harm-reduction

So it's natural and right that in the world, there will be people who want to revisit the received wisdom and best practices for how we live our lives, regulate our economy, and organize our society. But that's not a license to simply throw out the systems we rely on. Sure, maybe they're outdated or unnecessary, but maybe not. That's where "Chesterton's Fence" comes in:

Let us say, for the sake of simplicity, a fence or gate erected across a road. The more modern type of reformer goes gaily up to it and says, "I don't see the use of this; let us clear it away." To which the more intelligent type of reformer will do well to answer: "If you don't see the use of it, I certainly won't let you clear it away. Go away and think. Then, when you can come back and tell me that you do see the use of it, I may allow you to destroy it."

https://en.wikipedia.org/wiki/G._K._Chesterton#Chesterton's_fence

In other words, it's not enough to say, "This principle gets in the way of something I want to do, so let's throw it out because I'm pretty sure the inconvenience I'm experiencing is worse than the consequences of doing away with this principle." You need to have a theory of how you will prevent the harms the principle protects us from once you tear it down. That theory can be "the harms are imaginary" so it doesn't matter. Like, if you get rid of all the measures that defend us from hexes placed by evil witches, it's OK to say, "This is safe because evil witches aren't real and neither are hexes."

But you'd better be sure! After all, some preventative measures work so well that no living person has experienced the harms they guard us against. It's easy to mistake these for imaginary or exaggerated. Think of the antivaxers who are ideologically committed to a world in which human beings do not have a shared destiny, meaning that no one has a moral claim over the choices you make. Motivated reasoning lets those people rationalize their way into imagining that measles – a deadly and ferociously contagious disease that was a scourge for millennia until we all but extinguished it – was no big deal:

https://en.wikipedia.org/wiki/Measles:_A_Dangerous_Illness

There's nothing wrong with asking whether longstanding health measures need to be carried on, or whether they can be sunset. But antivaxers' sloppy, reckless reasoning about contagious disease is inexcusable. They were warned, repeatedly, about the mass death and widespread lifelong disability that would follow from their pursuit of an ideological commitment to living as though their decisions have no effect on others. They pressed ahead anyway, inventing ever-more fanciful reasons why health is a purely private matter, and why "public health" was either a myth or a Communist conspiracy:

https://www.conspirituality.net/episodes/brief-vinay-prasad-pick-me-campaign

When RFK Jr kills your kids with measles or permanently disables them with polio, he doesn't get to say "I was just inquiring as to the efficacy of a longstanding measure, as is right and proper." He was told why the vaccine fence was there, and he came up with objectively very stupid reasons why that didn't matter, and then he killed your kids. He was warned.

Fuck that guy.

Or take Bill Clinton. From 1933 until 1999, American banks were regulated under the Glass-Steagall Act, which "structurally separated" them. Under structural separation, a "retail bank" – the bank that holds your savings and mortgage and provides you with a checkbook – could not be an "investment bank." That meant it couldn't own or invest in businesses that competed with the businesses its depositors and borrowers ran. It couldn't get into other lines of business, either, like insurance underwriting.

Glass-Steagall was a fence that stood between retail banks and the casino economy. It was there for a fucking great reason: the failure to structurally separate banks allowed them to act like casinos, inflating a giant market bubble that popped on Black Tuesday in October 1929, kicking off the Great Depression. Congress built the structural separation fence to keep banks from doing it again.

In the 1990s, Bill Clinton agitated for getting rid of Glass-Steagall. He argued that new economic controls would allow the government to prevent another giant bubble and crash. This time, the banks would behave themselves. After all, hadn't they demonstrated their prudence for seven decades?

In fact, they hadn't. Every time banks figured out how to slip out of regulatory constraints they inflated another huge bubble, leading to another massive crash that made the rich obscenely richer and destroyed ordinary savers' lives. Clinton took office just as one of these finance-sector bombs – the S&L Crisis – was detonating. Clinton had no basis – apart from wishful thinking – to believe that deregulating banks would lead to anything but another gigantic crash.

But Clinton let his self-interest – in presiding over a sugar-high economic expansion driven by deregulation – overrule his prudence (about the crash that would follow). Sure enough, in the last months of Clinton's presidency, the stock market imploded with the March 2000 dot-bomb. And because Congress learned nothing from the dot-com crash and declined to restore the Glass-Steagall fence, the crash led to another bubble, this time in subprime mortgages, and then, inevitably, we suffered the Great Financial Crisis.

Look: there's no virtue in having bank regulations for the sake of having them. It is conceptually possible for bank regulations to be useless or even harmful. There's nothing wrong with investigating whether the 70-year-old Glass-Steagall Act was still needed in 1999. But Clinton was provided with a mountain of evidence about why Glass-Steagall was the only thing standing between Americans and economic chaos, including the evidence of the S&L Crisis, which was still underway when he took office, and he ignored all of it. If you lost everything – your home, your savings, your pension – in the dot-bomb or the Great Financial Crisis, Bill Clinton is to blame. He was warned. He ignored the warnings.

Fuck that guy.

No, seriously, fuck Bill Clinton. Deregulating banks wasn't Clinton's only passion. He also wanted to ban working cryptography. The cornerstone of Clinton's tech policy was the "Clipper Chip," a backdoored encryption chip that, by law, every technology was supposed to use. If Clipper had gone into effect, then cops, spooks, and anyone who could suborn, bribe, or trick a cop or a spook could break into any computer, server, mobile device, or embedded system in America.

Clinton was told – over and over, in small, easy-to-understand words – that there was no way to make a security system that only worked when "bad guys" tried to break into it, but collapsed immediately if a "good guy" wanted to bypass it. We explained to him – oh, how we explained to him! – that working encryption would be all that stood between your pacemaker's firmware and a malicious update that killed you where you stood; all that stood between your antilock brakes' firmware and a malicious update that sent you careening off a cliff; all that stood between businesses and corporate espionage; all that stood between America and foreign state adversaries wanting to learn its secrets.

In response, Clinton said the same thing that all of his successors in the Crypto Wars have said: NERD HARDER! Just figure it out. Cops need to look at bad guys' phones, so you need to figure out how to make encryption that keeps teenagers safe from sextortionists, but melts away the second a cop tries to unlock a suspect's phone. Take Malcolm Turnbull, the former Australian Prime Minister. When he was told that the laws of mathematics dictated that it was impossible to build selectively effective encryption of the sort he was demanding, he replied, "The laws of mathematics are very commendable but the only law that applies in Australia is the law of Australia":

https://www.eff.org/deeplinks/2017/07/australian-pm-calls-end-end-encryption-ban-says-laws-mathematics-dont-apply-down

Fuck that guy. Fuck Bill Clinton. Fuck a succession of UK Prime Ministers who have repeatedly attempted to ban working encryption. Fuck 'em all. The stakes here are obscenely high. They have been warned, and all they say in response is "NERD HARDER!"

https://pluralistic.net/2023/03/05/theyre-still-trying-to-ban-cryptography/

Now, of course, "crypto means cryptography," but the other crypto – cryptocurrency – deserves a look-in here. Cryptocurrency proponents advocate for a system of deregulated money creation, AKA "wildcat currencies." They say, variously, that central banks are no longer needed; or that we never needed central banks to regulate the money supply. Let's take away that fence. Why not? It's not fit for purpose today, and maybe it never was.

Why do we have central banks? The Fed – which is far from a perfect institution and could use substantial reform or even replacement – was created because the age of wildcat currencies was a nightmare. Wildcat currencies created wild economic swings, massive booms and even bigger busts. Wildcat currencies are the reason that abandoned haunted mansions feature so heavily in the American imagination: American towns and cities were dotted with giant mansions built by financiers who'd grown rich as bubbles expanded, then lost it all after the crash.

Prudent management of the money supply didn't end those booms and busts, but it substantially dampened them, ending the so-called "business cycle" that once terrorized Americans, destroying their towns and livelihoods and wiping out their savings.

It shouldn't surprise us that a new wildcat money sector, flogging "decentralized" cryptocurrencies (that they are nevertheless weirdly anxious to swap for your gross, boring old "fiat" money) has created a series of massive booms and busts, with insiders getting richer and richer, and retail investors losing everything.

If there was ever any doubt about whether wildcat currencies could be made safe by putting them on a blockchain, it is gone. Wildcat currencies are as dangerous today as they were in the 18th and 19th centuries – only more so, since this new bad paper relies on the endless consumption of whole rainforests' worth of carbon, endangering not just our economy, but also the habitability of the planet Earth.

And nevertheless, the Trump administration is promising a new crypto golden age (or, ahem, a Gilded Age). And there are plenty of Democrats who continue to throw in with the rotten, corrupt crypto industry, which flushed billions into the 2024 election to bring Trump to office. The result is absolutely going to be more massive bubbles and life-destroying implosions. Fuck those guys. They were warned, and they did it anyway.

Speaking of the climate emergency: greetings from smoky Los Angeles! My city's on fire. This was not an unforeseeable disaster. Malibu is the most on-fire place in the world:

https://longreads.com/2018/12/04/the-case-for-letting-malibu-burn/

Since 1919, the region has been managed on the basis of "total fire suppression." This policy continued long after science showed that this creates "fire debt" in the form of accumulated fuel. The longer you go between fires, the hotter and more destructive those fires become, and the relationship is nonlinear. A 50-year fire isn't 250% more intense than a 20-year fire: it's 50,000% more intense.

Despite this, California has invested peanuts in regular controlled burns, which has created biennial uncontrolled burns – wildfires that cost thousands of times more than any controlled burn.

Speaking of underinvestment: PG&E has spent decades extracting dividends for its investors and bonuses for its execs, while engaging in near-total neglect of maintenance of its high-voltage transmission lines. Even with normal winds, these lines routinely fall down and start blazes.

But we don't have normal winds. The climate emergency has been steadily worsening for decades. LA is just the latest place to be on fire, or under water, or under ice, or baking in wet bulb temperatures. Last week in southern California, we were warned to expect gusts of 120mph.

They were warned. #ExxonKnew: in the early 1970s, Exxon's own scientists warned them that fossil fuel consumption would kick off climate change so drastic that it would endanger human civilization. Exxon responded by burying the reports and investing in climate denial:

https://exxonknew.org/

They were warned! Warned about fire debt. Warned about transmission lines. Warned about climate change. And specific, named people, who individually had the power to heed these warnings and stave off disaster, ignored the warnings. They didn't make honest mistakes, either: they ignored the warnings because doing so made them extraordinarily, disgustingly rich. They used this money to create dynastic fortunes, and have created entire lineages of ultra-wealthy princelings in $900,000 watches who owe it all to our suffering and impending doom.

Fuck those guys. Fuck 'em all.

We've had so many missed opportunities, chances to make good policy or at least not make bad policy. The enshitternet didn't happen on its own. It was the foreseeable result of choices – again, choices made by named individuals who became very wealthy by ignoring the warnings all around them.

Let's go back to Bill Clinton, because more than anyone else, Clinton presided over some terrible technology regulations. In 1998, Clinton signed the Digital Millennium Copyright Act, a bill championed by Barney Frank (fuck that guy, too). Under Section 1201 of the Digital Millennium Copyright Act, it's a felony, punishable by a five-year prison sentence and a $500,000 fine, to tamper with a "digital lock."

That means that if HP uses a digital lock to prevent you from using third-party ink, it's a literal crime to bypass that lock. Which is why HP ink now costs $10,000/gallon, and why you print your shopping lists with colored water that costs more, ounce for ounce, than the sperm of a Kentucky Derby winner:

https://pluralistic.net/2024/09/30/life-finds-a-way/#ink-stained-wretches

Clinton was warned that DMCA 1201 would soon metastasize into every kind of device – not just the games consoles and DVD players where it was first used, but medical implants, tractors, cars, home appliances – anything you could put a microchip into (Jay Freeman calls this "felony contempt of business-model"):

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

He ignored those warnings and signed the DMCA anyway (fuck that guy). Then, under Bush (fuck that guy), the US Trade Representative went all around the world demanding that America's trading partners adopt versions of this law (fuck that guy). In 2001, the European Parliament capitulated, enacting the EU Copyright Directive, whose Article 6 is a copy-paste of DMCA 1201 (fuck all those people).

Fast forward 20 years, and boy is there a lot of shit with microchips that can be boobytrapped with rent-extracting logic bombs that are illegal to research, describe, or disable.

Like choo-choo trains.

Last year, the Polish hacking group Dragon Sector was contacted by a public sector train company whose Newag trains kept going out of service. The operator suspected that Newag had boobytrapped the trains to punish the train company for getting its maintenance from a third-party contractor. When Dragon Sector investigated, they discovered that Newag had indeed riddled the trains' firmware with boobytraps. Trains that were taken to locations known to have third-party maintenance workshops were immediately bricked (hilariously, this bomb would detonate if trains just passed through stations near to these workshops, which is why another train company had to remove all the GPSes from its trains – they kept slamming to a halt when they approached a station near a third-party workshop). But Newag's logic bombs would brick trains for all kinds of reasons – merely keeping a train stationary for too many days would result in its being bricked. Installing a third-party component in a locomotive would also trigger a bomb, bricking the train.

In their talk at last year's Chaos Communication Congress, the Dragon Sector folks describe how they have been legally terrorized by Newag, which has repeatedly sued them for violating its "intellectual property" by revealing its sleazy, corrupt business practices. They also note that Newag continues to sell lots of trains in Poland, despite the widespread knowledge of its dirty business model, because public train operators are bound by procurement rules, and as long as Newag is the cheapest bidder, it gets the contract:

https://media.ccc.de/v/38c3-we-ve-not-been-trained-for-this-life-after-the-newag-drm-disclosure

The laws that let Newag make millions off a nakedly corrupt enterprise – and put the individuals who blew the whistle on it at risk of losing everything – were passed by Members of the European Parliament who were warned that this would happen, and they ignored those warnings, and now it's happening. Fuck those people, every one of 'em.

It's not just European parliamentarians who ignored warnings and did the bidding of the US Trade Representative, enacting laws that banned tampering with digital locks. In 2010, two Canadian Conservative Party ministers in the Stephen Harper government brought forward similar legislation. These ministers, Tony Clement (now a disgraced sex-pest and PPE grifter) and James Moore (today, a sleazeball white-shoe corporate lawyer), held a consultation on this proposal.

6,138 people wrote in to say, "Don't do this, it will be hugely destructive." 54 respondents wrote in support of it. Clement and Moore threw out the 6,138 opposing comments. Moore explained why: these were the "babyish" responses of "radical extremists." The law passed in 2012.

Last year, the Canadian Parliament passed bills guaranteeing Canadians the Right to Repair and the right to interoperability. But Canadians can't act on either of these laws, because they would have to tamper with a digital lock to do so, and that's illegal, thanks to Tony Clement and James Moore. Who were warned. And who ignored those warnings. Fuck those guys:

https://pluralistic.net/2024/11/15/radical-extremists/#sex-pest

Back in the 1990s, Bill Clinton had a ton of proposals for regulating the internet, but nowhere among those proposals will you find a consumer privacy law. The last time an American president signed a consumer privacy law was 1988, when Reagan signed the Video Privacy Protection Act and ensured that Americans would never have to worry that video-store clerks were telling the newspapers what VHS cassettes they took home.

In the years since, Congress has enacted exactly zero consumer privacy laws. None. This has allowed the out-of-control, unregulated data broker sector to metastasize into a cancer on the American people. This is an industry that fuels stalkers, discriminatory financial and hiring algorithms, and an ad-tech sector that lets advertisers target categories like "teenagers with depression," "seniors with dementia" and "armed service personnel with gambling addictions."

When the people cry out for privacy protections, Congress – and the surveillance industry shills that fund them – say we don't need a privacy law. The market will solve this problem. People are selling their privacy willingly, and it would be an "undue interference in the market" if we took away your "freedom to contract" by barring companies from spying on you after you clicked the "I agree" button.

These people have been repeatedly warned about the severe dangers to the American public – as workers, as citizens, as community members, and as consumers – from the national privacy free-for-all, and have done nothing. Fuck them, every one:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

Now, even a stopped clock is right twice a day, and not every one of Bill Clinton's internet policies was terrible. He had exactly one great policy, and, ironically, that's the one there's the most energy for dismantling. That policy is Section 230 of the Communications Decency Act (a law that was otherwise such a dumpster fire that the courts struck it down). Chances are, you have been systematically misled about the history, use, and language of Section 230, which is wild, because it's exactly 26 words long and fits in a single tweet:

No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.

Section 230 was passed because when companies were held liable for their users' speech, they "solved" this problem by just blocking every controversial thing a user said. Without Section 230, there would be no Black Lives Matter, no #MeToo – no online spaces where the powerful were held to account. Meanwhile, rich and powerful people would continue to enjoy online platforms where they and their bootlickers could pump out the most grotesque nonsense imaginable, either because they owned those platforms (ahem, Twitter and Truth Social) or because rich and powerful people can afford the professional advice needed to navigate the content-moderation bureaucracies of large systems.

We know exactly what the internet looks like when platforms are civilly liable for their users' speech: it's an internet where marginalized and powerless people are silenced, and where the people who've got a boot on their throats are the only voices you can hear:

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

The evidence for this isn't limited to the era of AOL and Prodigy. In 2018, Trump signed SESTA/FOSTA, a law that held platforms liable for "sex trafficking." Advocates for this law – like Ashton Kutcher, who campaigns against sexual assault unless it involves one of his friends, in which case he petitions the judge for leniency – were warned that it would be used to shut down all consensual sex work online, making sex workers' lives much more dangerous. These warnings were immediately borne out, and they have been repeatedly borne out every month since. Killing CDA 230 for sex work brought back pimping, exposed sex workers to grave threats to their personal safety, and made them much poorer:

https://decriminalizesex.work/advocacy/sesta-fosta/what-is-sesta-fosta/

It also pushed sex trafficking and other nonconsensual sex into private forums that are much harder for law enforcement to monitor and intervene in, making it that much harder to catch sex traffickers:

https://cdt.org/insights/its-all-downsides-hybrid-fosta-sesta-hinders-law-enforcement-hurts-victims-and-speakers/

This is exactly what SESTA/FOSTA's advocates were warned of. They were warned. They did it anyway. Fuck those people.

Maybe you have a theory about how platforms can be held civilly liable for their users' speech without harming marginalized people in exactly the way that SESTA/FOSTA did. If so, it had better amount to more than "platforms are evil monopolists and CDA 230 makes their lives easier." Yes, they're evil monopolists. Yes, 230 makes their lives easier. But without 230, small forums – private message boards, Mastodon servers, Bluesky, etc – couldn't possibly operate.

There's a reason Mark Zuckerberg wants to kill CDA 230, and it's not because he wants to send Facebook to the digital graveyard. Zuck knows that FB can operate in a post-230 world by automating the deletion of all controversial speech, and he knows that small services that might "disrupt" Facebook's hegemony would be immediately extinguished by eliminating 230:

https://www.nbcnews.com/tech/tech-news/zuckerberg-calls-changes-techs-section-230-protections-rcna486

It's depressing to see so many comrades in the fight against Big Tech getting suckered into carrying water for Zuck, demanding the eradication of CDA 230. Please, I beg you: look at the evidence for what happens when you remove that fence. Heed the warnings. Don't be like Bill Clinton, or California fire suppression officials, or James Moore and Tony Clement, or the European Parliament, or the US Trade Rep, or cryptocurrency freaks, or Malcolm Turnbull.

Or Ashton fucking Kutcher.

Because, you know, fuck those guys.

Check out my Kickstarter to pre-order copies of my next novel, Picks and Shovels!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/01/13/wanting-it-badly/#is-not-enough

#pluralistic#we told you so#told you so#foreseeable outcomes#enshittification#crypto cars#cryto means cryptography#data brokers#cda 230#section 230#230#newag#drm#copyfight#section 1201#wildcat money#backdoors#wanting it badly is not enough#dragon sector#great financial crisis#structural separation#guillotine watch#nerd harder

320 notes

Video

Ad-tech targeting is an existential threat by Cory Doctorow Via Flickr: pluralistic.net/2025/02/20/privacy-first-second-third/#ma... A towering figure with the head of HAL 9000 from Stanley Kubrick's '2001: A Space Odyssey,' surmounted by Trump's hair, wearing a tailcoat with a Google logo lapel pin. It peers through a magnifying glass at a distressed, tiny Uncle Sam figure perched in its monstrous palm. Image: Cryteria (modified) commons.wikimedia.org/wiki/File:HAL9000.svg CC BY 3.0 creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#illustration#collage#google#ad-tech#ad targeting#surveillance capitalism#vppa#video privacy protection act#mind-control rays#big tech#privacy#privacy first#surveillance advertising#behavioral advertising#data brokers#cfpb#flickr

0 notes

Text

...Wired's Dell Cameron and Dhruv Mehrotra report on a way to use Google to target people with chronic illnesses, people in financial distress, and national security "decision makers"

Google doesn't offer these categories itself, they just allow data-brokers to assemble them and offer them for sale via Google. Just as it's possible to generate a target of "Congressional staffers" by using location and education data, it's possible to target people with chronic illnesses based on things like whether they regularly travel to clinics that treat HIV, asthma, chronic pain, etc.

Google claims that this violates their policies, and that they have best-of-breed technical measures to prevent this from happening, but when Wired asked how this data-broker was able to sell these audiences – including people in menopause, or with "chronic pain, fibromyalgia, psoriasis, arthritis, high cholesterol, and hypertension" – Google did not reply.

....The most amazing part is how the 33,000 targeting segments came to public light: an activist just pretended to be an ad buyer, and the data-broker sent him the whole package, no questions asked. Johnny Ryan is a brilliant Irish privacy activist with the Irish Council for Civil Liberties. He created a fake data analytics website for a company that wasn't registered anywhere, then sent out a sales query to a brokerage ...

Google and Meta have no meaningful checks to prevent anyone from establishing a shell company that buys and targets ads with their services, and the data-brokers that feed into those services are even less well-protected against fraud and other malicious acts.

All of this is only possible because Congress has failed to act on privacy since 1988. That's the year that Congress passed the Video Privacy Protection Act, which bans video store clerks from telling the newspapers which VHS cassettes you have at home. That's also the last time Congress passed a federal consumer privacy law.

0 notes

Text

Recently I read about a massive geolocation data leak from Gravy Analytics, which exposed more than 2000 apps, in both the App Store and Google Play, that secretly collect geolocation data without user consent, and often without even the developers' knowledge.

I looked into the list (link here) and found at least 3 apps I have installed on my iPhone. Take a look for yourself! This made me come up with an idea to track myself down externally, e.g. to buy my geolocation data leaked by some application.

TL;DR

After more than a couple dozen hours of trying, here are the main takeaways:

I found a couple of requests sent by my phone with my location, plus 5 requests that leak my IP address, which can be turned into geolocation using reverse DNS.

I learned a lot about RTB (real-time bidding) auctions and the OpenRTB protocol, and was shocked by the amount and types of data sent with the bids to ad exchanges.

I gave up on the idea of buying my location data from a data broker or a tracking service, because I don't have a big enough company to take a trial, or $10-50k to buy a huge database with the data of millions of people + me. Well, maybe I do, but such an expense seems a bit irrational. It turns out that EU-based people's data is almost the most expensive.

But still, I know my location data was collected and I know where to buy it!
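To make the takeaways above a little more concrete, here is a minimal sketch of how one might scan a captured bid request for location-revealing fields. It assumes the request body is JSON shaped like the public OpenRTB 2.x object model (`device.geo`, `device.ip`, `device.ifa`, `user.geo`, `app.bundle`). The helper names `dig` and `location_fields` and every sample value are hypothetical, not taken from the post or from any real capture.

```python
import json

# Paths into an OpenRTB 2.x-style bid request that can reveal where a device is.
# The field names follow the public OpenRTB object model; which of them actually
# appear in a given request depends on the app and the ad exchange.
LOCATION_PATHS = [
    ("device", "geo", "lat"),
    ("device", "geo", "lon"),
    ("device", "ip"),
    ("device", "ifa"),       # advertising ID, links requests to one device
    ("user", "geo", "lat"),
    ("user", "geo", "lon"),
    ("app", "bundle"),       # which app sent the request
]


def dig(obj, path):
    """Follow a sequence of keys through nested dicts; return None if any key is missing."""
    for key in path:
        if not isinstance(obj, dict) or key not in obj:
            return None
        obj = obj[key]
    return obj


def location_fields(bid_request):
    """Return a flat dict of the location-related fields present in the request."""
    found = {}
    for path in LOCATION_PATHS:
        value = dig(bid_request, path)
        if value is not None:
            found[".".join(path)] = value
    return found


# Invented sample request (documentation-range IP, zeroed advertising ID).
sample = json.loads("""
{
  "id": "example-request",
  "app": {"bundle": "com.example.weather"},
  "device": {
    "ip": "203.0.113.7",
    "ifa": "00000000-0000-0000-0000-000000000000",
    "geo": {"lat": 52.52, "lon": 13.405}
  }
}
""")

for name, value in location_fields(sample).items():
    print(f"{name}: {value}")
```

Running something like this over intercepted traffic only shows what is present in the requests you captured; it says nothing about what an exchange or broker does with the data afterwards.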

#surveillance capitalism#social media#big tech#ai#technology#google#facebook#apple#surveillance#data brokers#data collection#gravy analytics#app#developer#iphone#geolocation#data leak

1 note

Text

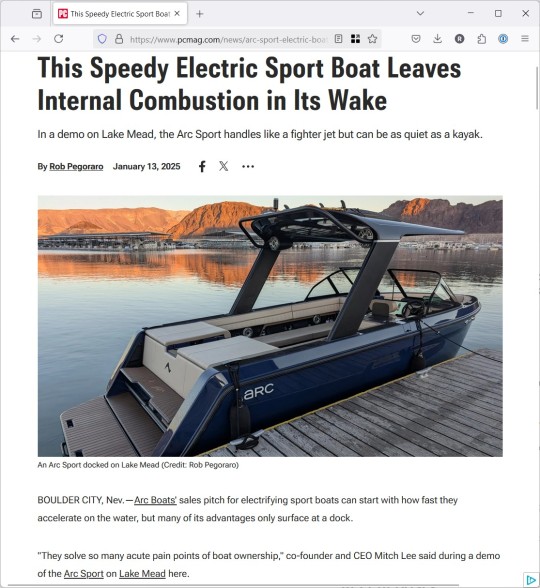

Weekly output: Arc Boats, data brokers, Mark Vena podcast, New Glenn, Starship, TikTok

Ideally, the week after CES would be a relaxing time with at least one day spent entirely disconnected from work. Because we don’t live in an ideal world, my week instead featured the Supreme Court blowing up TikTok and SpaceX blowing up the second stage of its giant Starship rocket. And on top of that, I wrote a post Wednesday for Patreon readers sharing further observations from CES. 1/13/2025:…

#Arc Boats#Blue Origin#ces#data brokers#electric boat#First Amendment#Las Vegas#Mark Vena#New Glenn#Shmoocon#SpaceX#Starship#Supreme Court#TikTok#Yael Grauer

0 notes

Text

CFPB Takes Aim at Data Brokers in Proposed Rule Amending FCRA

On December 3, the CFPB announced a proposed rule to enhance oversight of data brokers that handle consumers’ sensitive personal and financial information. The proposed rule would amend Regulation V, which implements the Fair Credit Reporting Act (FCRA), to require data brokers to comply with credit bureau-style regulations under FCRA if they sell income data or certain other financial…

#AI#Artificial Intelligence#CFPB#consent#Consumer Financial Protection Bureau#CRA#credit history#credit score#data brokers#debt payments#Disclosure#Fair Credit Reporting Act#FCRA#financial information#personal information#privacy protection#Regulation V

1 note

Text

SOCIAL SECURITY NUMBER RULES COULD BE CHANGED

In response to widespread data breaches exposing millions of Social Security numbers, the Consumer Financial Protection Bureau (CFPB) is proposing new regulations to strengthen consumer protections. These changes aim to classify certain data brokers as consumer reporting agencies, subjecting them to the same rules as credit bureaus under the Fair Credit Reporting Act (FCRA). This move seeks to…

0 notes

Text

Privacy Risks for Women Seeking Out-of-State Care

In this episode of Scam DamNation, host Lillian Cauldwell introduces an old type of scam, still operating in the United States, that targets women: personal information bought with a credit card is used to track women who visit abortion clinics back across state lines to their place of residence, and nothing is being done about it. Senator Ron Wyden wrote an article in which he states…

#Abortion Clinics.#AI Scams.#Cell Phone#Credit Card Tracking's#Data Breach#Data Brokers#Lillian Cauldwell#Privacy Risks#Scam DamNation#Scams#Senator Ron Wyden#women health#Women Rights

0 notes