#Yael Grauer

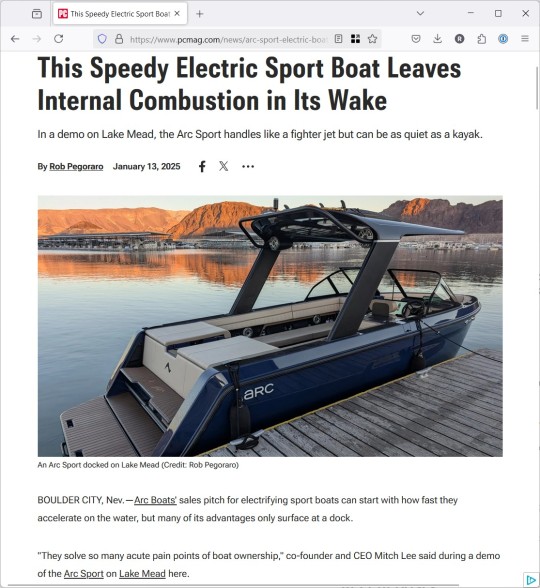

Weekly output: Arc Boats, data brokers, Mark Vena podcast, New Glenn, Starship, TikTok

Ideally, the week after CES would be a relaxing time with at least one day spent entirely disconnected from work. Because we don’t live in an ideal world, my week instead featured the Supreme Court blowing up TikTok and SpaceX blowing up the second stage of its giant Starship rocket. And on top of that, I wrote a post Wednesday for Patreon readers sharing further observations from CES. 1/13/2025:…

#Arc Boats #Blue Origin #ces #data brokers #electric boat #First Amendment #Las Vegas #Mark Vena #New Glenn #Shmoocon #SpaceX #Starship #Supreme Court #TikTok #Yael Grauer

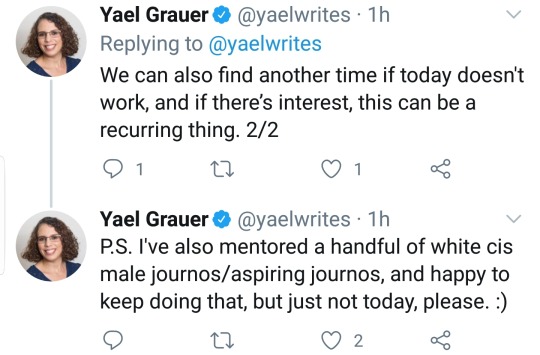

Link here

#twitter #yael grauer #bipoc #lgbtq #meetingbird #alex whitcomb #wired #projects #time #recurring #mentored #today

Staggering Variety of Clandestine Trackers Found in Popular Android Apps

by Yael Grauer

Researchers at Yale Privacy Lab and French nonprofit Exodus Privacy have documented the proliferation of tracking software on smartphones, finding that weather, flashlight, rideshare, and dating apps, among others, are infested with dozens of different types of trackers collecting vast amounts of information…

CactusCon 2023 video index

Day 1 Track 1

6:31 Opening Remarks

8:08 Opening Remarks, sound begins to work

39:54 Scottish march with bagpipes

40:55 The Power of Community and How it Saved My Job by conf1ck3r

1:09:02 Egress Filtering in AWS, and other Sisyphean tasks by David Gilman

2:04:38 Hunting the BianLian Ransomware by Sean Pattee & Hallie Schukai

4:06:25 Keynote Introduction

4:08:47 Keynote: The New Challenges of Artificial Intelligence by Paulo Shakarian

5:12:35 Will AI Take My Infosec Job? by Andrew Cook

6:03:49 Windows Powershell for WMI by Kyle Nordby

6:53:26 How Expired Domains Lead to Facebook ATO by Jon Wade

Day 1 Track 2

0:00 Free Food Anyone? Wi-Fi Hacking and by Megi Bashi & Ryan Dinnan

13:37 Best Practices and Lessons Learned from Starting Up Multiple OSINT Teams by Matt0177

1:18:03 A Peek inside an Adversary's Toolkit by Ryan Thompson

CANCELED Shodan OSINT Automation to Mass by me_dheeraj

5:13:46 Single Source of Truth: Documenting Incident Response by Casey Beaumont

6:14:00 Bridging the gap in the static and dynamic analysis of binaries by mahaloz

Day 1 Track 3

0:00 Slides for the Exploiting IoT devices talk begins

2:30 Listen to the staff troubleshoot audio. Hi! We love you!!

9:30 Exploiting IoT devices through Physical Embedded Security by Ryan Jones

59:20 It's a Bird! It's a Plane! It's…A Script? by nuclearfarmboy

1:30:14 From Sticks and Stones to a Functional Forensics Lab by K Singh

4:33:13 Unmanaged Systems by Patterson Cake

5:27:45 Txt Me Bro: a text message phishing honeypot by Aftermath

5:57:20 Can Ducks Teach Us how to Share: What by Christian Taillon

Day 2 Track 1

0:00 IPFS, Dapps, & Blockchains by Edmund Brumaghin

40:10 PSA: Video Doorbells Record Audio, Too by Yael Grauer

1:10:41 Surveillance in your pocket by dnsprincess

1:41:00 Is Dead Memory Analysis Dead by Marcus Guevara

3:40:45 Keynote: Minimum Height Requirements by Sherrod DeGrippo

4:40:47 CactusCon Annual Rock Paper Scissors Tournament

4:41:25 How to Job Hunt Like a Hacker! by BanjoCrashland

5:43:40 State of (Absolute) AppSec by @sethlaw & @cktricky

6:50:15 Getting Into The Reeds With Sensor Manipulation by Drew Porter

Day 2 Track 2

0:00 BloodHound Unleashed by n00py & ninjastyle82

50:40 Business Email Compromise by iHeartMalware

1:51:20 Worst of Cybersecurity Reporting 2022 by Yael Grauer & huertanix

4:53:30 Securing Your Home Network/Homelab by Maddoghoek77

5:52:00 PBR and Kittens by Jacob Wellnitz & James Navarro

6:51:40 Welcome to the Jungle: Pentesting AWS by ustayready

Day 2 Track 3

0:00 Hack your smart home first by Joey White

48:05 Building a Canarytoken to Monitor by Casey Smith & Jacob Torrey

1:47:00 I Came in Like a Wrecking Ball by iamv1nc3nt

4:48:10 Security Operations with Velociraptor by Eric Capuano & Whitney Champion

5:49:00 Sniper Incident Response by Chris Brewer

IRL Ads Are Taking Scary Inspiration From Social Media | by Yael Grauer | OneZero

Technology Can’t Predict Crime, It Can Only Weaponize Proximity to Policing

Special thanks to Yael Grauer for additional writing and research.

In June 2020, Santa Cruz, California, became the first city in the United States to ban municipal use of predictive policing, a method of deploying law enforcement resources according to data-driven analytics that supposedly are able to predict perpetrators, victims, or locations of future crimes. Especially interesting is that…

Hackers Discuss the ‘Mr. Robot’ Series Finale

All good things must come to an end, but we got together one last time to discuss Mr. Robot’s series finale, covering both Part I and Part II.

We talked about [SPOILERS, obv] Red Team Elliot vs. Blue Team Elliot, the iMac hack, FileVault, hidden partitions, body disposal (sort of), the finale’s music, recursive loops, dissociative identity disorder, and the show’s trans and queer representation. (The chat transcript has been edited for brevity, clarity, and chronology.)

This week’s team of experts includes:

Em Best: a former hacker and current journalist and transparency advocate with a specialty in counterintelligence and national security.

Jason Hernandez: Solutions Architect for Bishop Fox, an offensive security firm. He also does research into surveillance technology and has presented work on aerial surveillance.

Harlo Holmes: Director of Digital Security at Freedom of the Press Foundation.

Trammell Hudson: a security researcher who likes to take things apart.

Micah Lee: a technologist with a focus on operational security, source protection, privacy and cryptography, as well as Director of Information Security at The Intercept.

Freddy Martinez: a technologist and public records expert. He serves as a Director for the Chicago-based Lucy Parsons Labs.

Yael Grauer (moderator): an investigative tech reporter covering online privacy and security, digital freedom, mass surveillance, and hacking.

Part I

Computer repair shops

Yael: I just want to say that tiny computer repair shops are adorable. I go to certified Mac repair shops that aren't Genius Bars all the time because they're nicer, more honest about what repairs you need and what they'd recommend doing/buying, and generally cheaper. So I like that throughout the show there have been these little nerdy shops, and it makes me miss Radio Shack. Shoutout to MacMedia in Scottsdale.

Jason: Yeah, I remember going to a few indie computer shops back in the day. It's sad that most of them didn't survive the rise of e-commerce. Well, I'm less sentimental about the death of Circuit City, even though I worked one Christmas there. I do miss CompUSA sometimes.

Harlo: I really miss mom n pop computer shops. They used to be all over NYC.

Coding trophies

Yael: Do you really get trophies for coding? Is that a thing?

Jason: Maybe at some corporate hackathons? It's not something I recall there being any kind of competition for kids to do when I was younger, but idk.

Trammell: I'm glad everyone else noticed the CODING trophy. Did you see the one with the laptop?

Yael: I'll have to go back and look again. I just remember wondering, what would Young Preppy Elliot have gotten trophies for?

Micah: I don't think I ever got coding trophies, but when I was in middle school, my team won a MATHCOUNTS competition, and in high school I traveled to another state to compete in a regional programming contest and got first place. So I'm basically like Elliot.

Parallel Universe

Trammell: Did you all notice the cars? Every single car in the perfect world was white and new.

Jason: Lots of Tesla Model Ss.

Trammell: There were lots of fun small things, like the sign advertising the nuclear power plant with the nuclear family illustration turned into an ad for the community center with the same family. Or the Township sign that said, "A nice place to live," but in the earlier world was defaced to remove the "nice."

Yael: I was very excited to see an old-school iMac. I had one of those!

Jason: Yeah, I think I actually did my first programming on one of those iMacs in Junior High. Some JavaScript embedded in HTML docs.

Harlo: I had one of those, too. Mine was purple.

Yael: I had one of those right after I graduated college. And then at my very first post-college job we had a bunch of them in a circle for people to check their email if they didn't have internet access at home.

Harlo: What's the parallel universe Mr. Robot font? Because that is a distinct logo, I can't quite place it…

Jason: It's definitely inspired by the Geek Squad logo.

Harlo: Yep, can confirm with Bing!

Dual Elliots

Trammell: Malek did a wonderful job playing the two of them with such different characteristics. Very Tatiana Maslany in Orphan Black.

Yael: So if you were hacking your parallel/perpendicular universe self, would you be able to do it? I guess it's easier to break stuff than to protect it. Red Team Elliot has an advantage over Blue Team Elliot. So I guess my money is on Elliot being able to hack Elliot.

Micah: I noticed that when (A) hacker Elliot was searching preppy Elliot's apartment, he found a copy of Ubuntu Made Easy, the 2012 No Starch Press book.

(A) Elliot == Red Team Elliot

Preppy Elliot == Blue Team Elliot

Harlo: A CASE LOGIC FULL OF PHOTOS. WHAT A FUCKING DRIP

Trammell: Red Team Elliot also had the advantage that the iMac was old and would still boot into single-user mode. That was the same technique I had to use to break into a NeXT Cube with a long-forgotten root password:

Image: Trammell Hudson

Yael: I thought it was interesting that Elliot thought he could learn about Elliot through his social media profiles, at least as step 1. I just want to say that OSINT has its limitations in multiple ways… so what happened here was everything looked all happy and glamorous.

Jason: Everyone self-censors on public social media… one's Facebook profile is never a perfect representation of their life.

Yael: But one time I backstalked someone's Instagram to try to determine what kind of a person they were and my impression of this person’s character ended up being WAY WORSE than how they are IRL. So it cuts both ways.

iMac Hack

Micah: So the iMac hack, I never tried that before (in my earlier hacker days I never had Mac hardware), but this is the password reset he was doing. Also this.

Yael: Ooh, that's how he did the password reset?

Trammell: Once he was logged in to the account on the Mac, pretty much all of the websites would be accessible due to stored cookies in the browser, as well as any data backed up to iCloud. That's a huge advantage for Red Team Elliot.

Yael: What could Blue Team Elliot have done? Saved on an encrypted drive?

Harlo: FileVault.

Trammell: If the browser login cookies didn't persist, they would have been lost after the reboot into single-user mode. It's a hassle to have to re-login after restarting, which is why most folks don't do it.

Em: Would there have been an iCloud backup, though? iCloud wasn't introduced until 2011 and OS X 10.7.

Jason: It looks like Blue Team Elliot used a weak password that was cracked pretty quickly on a single machine.

Harlo: But that was on the "hidden" partition.

Micah: So there's something in how (A) Elliot hacked preppy Elliot's computer that I don't understand. Initially, the password reset failed because of FileVault, right? And then he got around FileVault by booting to single-user mode, deleting /var/db/.applesetupdone which causes the Setup Assistant to re-run on reboot… but how does that unlock the FileVault drive? Wouldn't he still need preppy Elliot's password?

Jason: Yeah, I think so.

Yael: Maybe it was just the same complex password Red Team Elliot would've guessed

Trammell: Maybe he had an idea for what it might be and was able to limit the search space for the password testing.

Em: There are apparently ways around this. You need my YubiKey to boot into my Mac, unless you go into Rescue mode, and then you can disable that requirement entirely.

Harlo: He definitely went into Rescue mode.

Em: "Physical access is total access."

Micah: He deleted the file “.setupdone,” though in real life it's called “.applesetupdone.”

Also, he didn't include spaces where they should be. I wonder if the producers decided to slightly modify the commands so they won't actually work if someone tried them? Like instead of /sbin/mount-rw/ the real command is /sbin/mount -rw /

Trammell: He seemed to miss spaces in several of the commands.

Em: Yeah, the iMac had no iCloud. So iCloud hacking wasn’t an option. It didn't—and couldn't—run the required OS.

Yael: I know the show has previously invented tech that mimics real tech, to avoid a situation where a company says no and they get in trouble.

Micah: Yes they have. Like, I remember they've slightly altered Windows screenshots to remove words like "Windows" and "Microsoft," and I think they've maybe skipped spaces before, too.

Yael: So, for example, if they wanted a Windows tool and didn't want to ask Microsoft, they would just create an alternative Windows-esque tool that didn't say Windows, because if a company says no and they use it anyway, that's worse than if they never asked. But at the same time, they got real tech from, like, Bishop Fox, etc. with permission.

Em: That's called greeking. Or, as Wikipedia mostly calls it, "product displacement." But the industry term is, IIRC, "greeking it." It's interesting to note that, with the missed spaces, etc., they animate the screens entirely. So it raises the question of how deliberate those things are.

Trammell: Back on the self-hack, right quick… he uses Chrome in headless mode to extract the various login cookies using a WebSocket debugger. That's super clever.

Yael: Can you parse that for us?

Trammell: Rather than running Chrome and trying out all of the different sites to see which Preppy Elliot used, he had Chrome tell him the stored login passwords (I think that is what those are in the value field).

Harlo: Can we say more about this! I heard of a new wave of identity theft where it's just about stealing people's browser footprints; is this related?

Trammell: Google tries to identify real people by having things like login cookies, search history, etc. So if you can copy some of those tokens from real people, you don't have to run lots of automatic searches to try to build up a history. (Preppy Elliot is also on twitter, but I can't make out the handle.) I think that is more about passing the Not a Robot test.

Hidden Partition

Micah: So I don't quite get how he got through the first layer of full-disk encryption, FileVault, but the hidden partition part was pretty awesome.

Harlo: How do we know he had FileVault on?

Micah: When Red Team Elliot attempts to reset Blue Team Elliot's password in recovery mode, but can't, he says, "Shit. The drive's encrypted. Maybe he's more like me than I thought."

Em: (Again, he technically couldn't have because it wasn't a feature introduced until OS X 10.3 which his old iMac couldn't handle unless it was just a shell and he'd completely swapped out all of the tech, which is problematic in other ways but definitely doable)

Jason: I just watched the Mac hacking scene up to the discovery of the hidden disk volume, and I'm a little confused about what happens. I assume that he didn't actually get through FileVault to access Blue Team Elliot's user profile, despite his comment "shit, the boot drive is encrypted, maybe he's more like me than I thought.” The list of volumes also shows the filesystem is APFS, which is pretty new.

Trammell: I can't find the full GIF, but the hacking scene was the most realistic since Hackers: https://images.gr-assets.com/hostedimages/1500997301ra/23405528.gif (That one is like a 12 hour time-lapse of Dade sitting at the keyboard staring at code and printouts while the sun sets, the rest of the crew dances, has pizza, and the sun rises).

Yael: Heh. Accurate. Except needs more Mountain Dew.

Micah: Speaking of The Matrix, the earthquakes were absolutely caused by glitches in the matrix

Harlo: Oh, hey, just a thought. What was that Mac OS X vuln where the root password was just ""? [nothing] Is that applicable here?

Em: That was a [High] Sierra bug.

Trammell: The Intel Management Engine had a vulnerability where they did a password string compare with strncmp(real_password, user_input, strlen(user_input)), which means that an empty input will match.
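A minimal Python sketch of why that comparison fails open: the first function below emulates strncmp(real_password, user_input, strlen(user_input)) == 0 by comparing only as many characters as the user typed, so an empty string (or any prefix of the real password) passes. The function names and sample password are illustrative, and the fix shown, comparing the whole value with hmac.compare_digest, is a general-purpose remedy rather than the vendor's actual patch.

```python
import hmac


def buggy_compare(real_password: str, user_input: str) -> bool:
    # Emulates strncmp(real_password, user_input, strlen(user_input)) == 0:
    # only the first len(user_input) characters take part in the comparison.
    n = len(user_input)
    return real_password[:n] == user_input


def fixed_compare(real_password: str, user_input: str) -> bool:
    # Compares the full values (lengths included) in constant time.
    return hmac.compare_digest(real_password.encode(), user_input.encode())


if __name__ == "__main__":
    real = "hunter2"
    print(buggy_compare(real, ""))     # True: empty input "matches"
    print(buggy_compare(real, "hun"))  # True: any prefix matches, too
    print(fixed_compare(real, ""))     # False
```

The length argument is the whole bug: it should come from the stored secret (or the comparison should cover both full strings), never from attacker-controlled input.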

Yael: Ooh, that's a fun bug.

Micah: Here’s the password-cracking script. It's really simple: it just reads from stdin and tries mounting the hidden partition one line at a time. When Elliot actually runs it, he passes in passwords.list, which is some wordlist he must have downloaded or created. And apparently, it included the password ELLIOTS-desktop, which I think was the hidden partition's password.
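For readers curious what a "really simple" cracker looks like, here is a hedged Python sketch of the same loop: take candidates one line at a time and stop at the first one that works. It is not the show's script. Instead of calling apfs-fuse, it uses a hypothetical make_verifier helper that checks candidates against a locally generated, salted PBKDF2 hash, and the sample wordlist is made up.

```python
import hashlib
import hmac
from typing import Callable, Iterable, Optional

ITERATIONS = 10_000  # arbitrary cost factor for this demo


def make_verifier(secret: str, salt: bytes) -> Callable[[str], bool]:
    """Hypothetical stand-in for "try to mount the partition with this password".

    Only a salted PBKDF2 hash of the secret is kept; a candidate is accepted
    if it produces the same hash.
    """
    target = hashlib.pbkdf2_hmac("sha256", secret.encode(), salt, ITERATIONS)

    def verify(candidate: str) -> bool:
        digest = hashlib.pbkdf2_hmac("sha256", candidate.encode(), salt, ITERATIONS)
        return hmac.compare_digest(digest, target)

    return verify


def dictionary_attack(lines: Iterable[str],
                      try_password: Callable[[str], bool]) -> Optional[str]:
    """Try each wordlist line in order; return the first one that works."""
    for line in lines:
        candidate = line.rstrip("\r\n")
        if candidate and try_password(candidate):
            return candidate
    return None


if __name__ == "__main__":
    verify = make_verifier("ELLIOTS-desktop", b"demo-salt")
    wordlist = ["123456", "password", "ELLIOTS-desktop", "letmein"]
    print(dictionary_attack(wordlist, verify))
```

To read candidates from stdin the way the show's script does, pass sys.stdin (or an open file) as lines instead of the in-memory list. Nothing in this loop slows repeated guesses beyond the hash cost itself, which is the advantage of an offline attack over a login prompt.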

Trammell: I would expect Preppy Elliot to use pico/nano, while Hacker Elliot would be more of a vi or ed sort of person.

Yael: Oh no, Elliot. You made it too easy for Elliot. So what's the 101 rundown of how the entire hack worked? He goes in, runs from safety mode, overrides…something, downloads a password list?

Trammell: Single-user mode, which used to not require any password and provides write access to the parts of the drive that aren't encrypted by FileVault. So he could then add new users, change passwords, etc.

Micah: I think overall it was realistic in that if someone has physical access to your computer, there is a good chance they can get in. (At least, in the olden days). That gave him access to the disks and let him log in as the normal user. Which then let him do the social media searches, etc.

Jason: Why is it calling apfs-fuse on a Mac? Is that a thing?

Trammell: FUSE (Filesystem in Userspace) is a general-purpose framework for running filesystems in user space.

Micah: FUSE is also used for other types of partitions; macFUSE, for example, is a dependency for VeraCrypt.

Trammell: He's using FUSE to probe the APFS encryption key, since it doesn't have any rate limiting and he doesn't need a slow GUI to keep popping up. Essentially, he's using apfs-fuse as an offline attack against the password, whereas the official Apple tools have provisions that make them less useful for automated attacks.

Trammell: That clarifies why you'd use a FUSE driver on a Mac for a native filesystem.

Jason: I think this might be the driver he's using: https://github.com/kholia/apfs2john. It looks like it runs on macOS.

Yael: But wait, he had to do something else to find the hidden partition.

Harlo: It's NOT hidden, though. It's just… there. If you can find it with diskutil list, it's not hidden.

Jason: Yeah, it's not really hidden on/from the system. It's just not automatically mounted.

Harlo: Also, it's not hidden if you can find it running regular commands, and it's also labeled "fuck society." "Here are my secrets, thank you.bat"

Jason: I think Blue Team Elliot's "hiding" of those images is just intended to keep Angela/friends/family from stumbling on his weird hobby drawings.

Yael: So perfect Elliot wanted another life… and drew Elliot's life and F Society because “I figured that’s what an anarchist hacker would come up with,” which is actually pretty good tbh. I mean he did have a hoodie, but pretty accurate.

Trammell: They invented each other—Hacker Elliot imagined what a perfect life would be, and Preppy Elliot imagined what a l337 hacker would be like.

Harlo: One more thing about the hack. Passwords.list, do we have a link to that? Just a plug for diceware, my friends.

Micah: Whenever I need good wordlists to try to crack a password, my first try is using one of the lists in this repository: https://github.com/danielmiessler/SecLists.

Jason: Rockyou.txt is a pretty small password list that is highly effective in pentesting

Yael: Wait, if it's effective in pentesting, do you need to not use it for yourself? 🙂

Jason: Rockyou.txt is a list of a lot of common passwords. It's a quick and easy list to run through penetration testing tools and guess all of them quickly if no rate limiting is involved. If you use a password that is on that list, you should change it 🙂
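Jason's advice can be checked locally. This sketch assumes you have a copy of a wordlist such as rockyou.txt on disk; the helper names are hypothetical. It streams the file line by line instead of loading it all into memory and reports only an exact match.

```python
from typing import Iterable


def password_in_wordlist(password: str, lines: Iterable[str]) -> bool:
    """Return True if password exactly matches any line of the wordlist."""
    return any(line.rstrip("\r\n") == password for line in lines)


def check_file(password: str, wordlist_path: str) -> bool:
    # latin-1 decodes any byte sequence, which helps with large leaked
    # lists whose text encoding is inconsistent.
    with open(wordlist_path, encoding="latin-1") as f:
        return password_in_wordlist(password, f)
```

Splitting the logic from the file handling keeps the matching testable with a plain list, while check_file handles the real, multi-megabyte list.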

Trammell: You can also do the RAND Corp style guaranteed random password generation:

Image: Trammell Hudson

Yael: I think EFF sells one, too?

Harlo: Nope, WE sell a dope diceware zine, but EFF sells awesome dice to go with it. 🙂
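Diceware itself is simple enough to sketch: roll five dice, read the digits as a key like 41563, look that key up in a 7,776-entry list (6^5 = 7,776), and repeat once per word. In this Python sketch the secrets module stands in for physical dice, and the tab-separated "key word" file layout that load_wordlist parses is an assumption modeled on the EFF large wordlist.

```python
import math
import secrets
from typing import Dict


def roll_key(num_dice: int = 5) -> str:
    """Simulate rolling num_dice six-sided dice, e.g. '41563'."""
    return "".join(str(secrets.randbelow(6) + 1) for _ in range(num_dice))


def load_wordlist(path: str) -> Dict[str, str]:
    """Parse lines shaped like '11111<TAB>abacus' into {'11111': 'abacus'}."""
    table: Dict[str, str] = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            parts = line.split()
            if len(parts) == 2:
                table[parts[0]] = parts[1]
    return table


def diceware_passphrase(wordlist: Dict[str, str], num_words: int = 6,
                        num_dice: int = 5) -> str:
    """Pick num_words words, each chosen by a fresh set of dice rolls."""
    return " ".join(wordlist[roll_key(num_dice)] for _ in range(num_words))


def entropy_bits(num_words: int, num_dice: int = 5) -> float:
    """Each word contributes log2(6 ** num_dice) bits when rolls are uniform."""
    return num_words * num_dice * math.log2(6)
```

With real dice you would skip roll_key and type in the digits you rolled. Six words from a 7,776-word list come to roughly 77.5 bits (entropy_bits(6)), assuming the rolls are truly random.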

Micah: These are sitting on my desk at the moment.

Image: Micah Lee

Yael: Okay, I will link to these things for nerdy stocking stuffers/Hanukkah presents.

Killing Your Alt

Yael: Last thing for this episode. Would you kill your alt? I want to knooooooow.

Trammell: https://tvtropes.org/pmwiki/pmwiki.php/Main/KillingYourAlternateSelf

Micah: I wouldn't kill my alt. If I were in that situation, without understanding exactly how real the world I was in was, I would have called 911 and tried to save him.

Yael: I think I would try to reason with my alt, esp. if she didn't call the cops on me.

Jason: Yeah, I'd call 911 and disappear.

Yael: Or I'd pretend I was a long-lost twin, maybe?

Harlo: I would NOT kill my alt. I think they would be really fun.

Micah: Even if they're sort of the same person, it's clear the other Elliot is like a sentient human. I guess it's true that the two of them interacting tended to cause earthquakes, but still.

Yael: I thought the earthquakes were from the nuclear reactor blast that did/didn't happen.

Em: It's proposed by Elliot, but what we see contradicts the notion that the earthquakes are caused by their proximity or interacting.

Micah: The first earthquake happened when he heard his alt's voice on the phone, then another happened when they touched each other

Em: No. The first earthquake happened when Elliot woke up in the street.

Part II

Body Disposal

Micah: Elliot is fuckin dark. What's he gonna do with the body?

Em: For the record, it would be the easiest body disposal of all time since no one would be reported missing. The only way to get caught would be in the act.

Harlo: Isn't that the whole thing with multiple timelines? You have to kill your alt?

Em: That's a myth.

Micah: This is related to the philosophy of transporters in Star Trek. If you beam from one location to another, you actually end up murdering the first you and materialize as the second you. Is it ethical? There was even a TNG episode where a double of Riker got stuck in the transporter buffer for years or something.

Em: Well that's not murder, that's suicide.

Trammell: The Riker double went on to live a productive life in a different Trek franchise.

Harlo: Why wouldn't we want to have two Rikers? I mean, one was burdened with ineffable and unprecedented trauma…

Em: There are two things to discuss. One is that it's not a body Mastermind!Elliot is trying to dispose of; it's Host!Elliot. As in, actual Host!Elliot. The consciousness. You can't really examine the episode without looking at it through the lens of dissociative identity disorder. The show's version of dissociative identity disorder.

Music

Freddy: I just wanted to say that the song "Ne me quitte pas," which was playing before the wedding, was covered by Nina Simone. It's a song about cowardly men. Harry Anslinger, who created the original Bureau of Narcotics (before the DEA), was obsessed with [Billie Holiday]. (Anslinger would obtain heroin for Joseph McCarthy for years.) There is an amazing book called "Chasing the Scream" that documents Anslinger's obsession with [Holiday] and how he used it to launch the modern war on drugs. More on this here: https://www.thefix.com/content…

Em: Since we're addressing the music, have to say that the opening song in part 1 was more appropriate than everyone would think on first watch. Delightfully so.

You're wondering who I am (Secret, secret, I've got a secret) / I've got a secret I've been hiding under my skin / My heart is human, my blood is boiling, my brain IBM / So if you see me acting strangely, don't be surprised / I'm just a man who needed someone and somewhere to hide / To keep me alive, just keep me alive / I'm not a robot without emotions, I'm not what you see / I've come to help you with your problems, so we can be free / I'm not a hero, I'm not a savior, forget what you know / I'm just a man whose circumstances went beyond his control

The lyrics tell you the truth right at the start.

Looking Back at Part I

Jason: I would say that the hacking and password attacks were probably easier than they would have been, given that the imagined Elliot exists entirely in our Elliot's mind.

Em: Okay, but it's not an "imagined Elliot." They would've thought pretty similarly. It's the host.

The "imagined Elliot" (Host!Elliot) is older than "our Elliot" (Mastermind!Elliot). If one has to say that either of them was "imagined," it'd be Mastermind!Elliot.

Micah: Maybe this explains my confusion with bypassing FileVault. How did that actually work, since Elliot didn't crack the password to initially root the box? Maybe it was more like what happens when you're hacking in your dreams. It doesn't have to completely make sense.

Em: This is what I meant by "it all has to be analyzed through the lens of DID because that's how it was constructed by the producers." And it did make sense, but you can't think of it as hacking because it wasn't. It was Mastermind finding ways to access parts of themself that had been cordoned off, basically. Some of which were figments. But thinking of it as "hacking" at all is inaccurate, which is one reason why all the tech didn't actually make sense. The iMac wasn't an iMac, it was a box stuffed with memories and idealized versions of things. It was an amalgam.

Jason: If I was imagining how I'd hack a Mac in my brain, there would be some inaccuracies because I don't perfectly remember how they work

Em: But that's not what this was. It wasn't a Mac. It wasn't even the idea of a Mac. Not really. Think of the iMac as a memory palace that's locked. Think of it as memories that you've lost. You're not imagining finding them. It's not imaginary, it's more symbolism. Thinking of it as "hacking" at all is like thinking of trick or treaters as ghosts and goblins. It's something else with a sheet over it.

Micah: I really liked it when dream-reality started to seriously break down.

Yael: The FSociety masks?

Micah: Yes, the FSociety masks. Everyone having Mr. Robot's face. All of the glitches in the matrix where suddenly he was somewhere else.

Yael: He called himself on the phone, ermigod. Yeah, it was fun. Kind of reminded me of The Butterfly Effect and of Being John Malkovich

Jason: It also kind of reminds me of the end of Brazil, with the Baby Face masks

Recursive Loops

Yael: What did they mean by "recursive loop" re: this imagined universe?

Em: That it was limited and repeating. The same day over and over. The same fantasy. Host!Elliot never married Faux!Angela, Host!Elliot was always about to get married to Faux!Angela. It was a loop that Elliot's mind tried to interpret through a technical lens, same as the so-called hacking. Host!Elliot = "preppy Elliot" AKA the "real Elliot."

Harlo: Thus the busy work of the last episode.

Jason: I thought that the phrase "recursive loop" was a little goofy in a technical sense. Is it recursion, or is it a loop?

Harlo: Ooooh, that's good. If it recurses, it never gets to loop. Poesie (chef's kiss).

Micah: You can infinitely loop, but you can't infinitely recurse—each time you call a recursive function you take a bit more memory, and eventually you run out of memory
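Micah's point is easy to demonstrate in Python, which does not optimize away tail calls: a loop can count as high as you like in constant stack space, while the equivalent recursive function adds a stack frame per call and hits the interpreter's recursion limit (RecursionError) well before it finishes. The function names are illustrative.

```python
import sys


def count_with_loop(n: int) -> int:
    # Constant stack usage: the same frame is reused on every iteration.
    total = 0
    for _ in range(n):
        total += 1
    return total


def count_with_recursion(n: int) -> int:
    # One new stack frame per call; depth grows linearly with n.
    if n == 0:
        return 0
    return 1 + count_with_recursion(n - 1)


if __name__ == "__main__":
    depth = 10 * sys.getrecursionlimit()
    print(count_with_loop(depth))
    try:
        count_with_recursion(depth)
    except RecursionError as exc:
        print("RecursionError:", exc)
```

Raising the cap with sys.setrecursionlimit only moves the failure point; every extra frame still costs memory, which is how a deep enough recursion ends up exhausting RAM instead.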

Harlo: Fucking killjoy.

Jason: Yeah, the earthquakes are an out of memory condition.

Em: They're dissonance.

Jason: Maybe it's recursive, and the collision of Red Team Elliot with Blue Team Elliot is the result of it reaching an exit condition. Same as the missing faces/F-society masks at the wedding.

Em: Hard disagree.

Yael: Out of memory condition is when your computer runs out of memory and starts doing weird glitchy shit?

Micah: Generally if a program uses all of your computer's RAM, your OS kills it

Yael: But you can’t kill the host

Micah: I kept hitting that in one of the Advent of Code recursion challenges

Harlo: How so? That’s interesting!

Micah: I was running out of memory with my implementation for this challenge https://adventofcode.com/2019/… You need to solve a series of complicated mazes in the most efficient way possible, and you do this by building up a tree recursively. I actually hit the Python "RecursionError: maximum recursion depth exceeded" exception, so I increased the maximum to something insane and ran it again, and I could open System Monitor and watch my RAM usage go up, and up, and up, until the computer froze for a few seconds and then my OS killed my Python script.
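One common workaround for the problem Micah describes (not necessarily what he did) is to replace the recursion with an explicit queue. The Python sketch below runs a breadth-first search over a small grid maze; the grid format, with '#' as walls, and the function name are hypothetical and are not the actual Advent of Code input. Because the search never recurses, its depth is not capped by sys.getrecursionlimit().

```python
from collections import deque
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def shortest_path_length(grid: List[str], start: Point, goal: Point) -> Optional[int]:
    """Breadth-first search over a grid maze where '#' marks a wall.

    An explicit queue replaces recursion, so memory grows with the number
    of reachable cells rather than with Python's call-stack depth.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] != "#" and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # goal unreachable


if __name__ == "__main__":
    maze = [
        "#######",
        "#S..#.#",
        "#.#.#.#",
        "#.#...#",
        "#######",
    ]
    print(shortest_path_length(maze, (1, 1), (1, 5)))  # 8
```

BFS also gives the fewest steps in an unweighted maze, since it finishes every cell at distance d before visiting any cell at distance d + 1.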

Em: It's not an "out of memory" thing. That has nothing to do with this. The earthquakes, the masks and the faces were the result of dissonance. Each one happened when the "reality" was challenged. That happened most directly by Mastermind and the Host confronting each other, but several things caused it. Each instance happened when "reality" was challenged, either by entering into the denial space/alternate reality, seeing "himself" or having Mr. Robot flat out tell him "that's not real."

Harlo: Oh… also, Gretchen Carlson. Oooookkkkkk. It was all a dream! And you were there, and you were there, and Gretchen Carlson was there…

Tying Up Loose Ends

Yael: Anyone have anything else to add, maybe about stuff I cut out in past seasons or that we didn’t discuss?

Harlo: So, a while ago in the robot chat (I forget which season), I mentioned that this show has a Sybil vibe to it. The editors linked to the Sybil consensus against attacks (which is totally reasonable). But actually, I was talking about the Sybil complex (Flora Rheta Schreiber) this entire time.

Yael: Oh, that was me that linked to that, not an editor. (Sorry!)

Micah: Here's one thing I didn't quite get. Whiterose's body was found in the nuclear reactor, and the news was reporting it as a foiled terrorist attack. And Elliot was found unconscious at the site of the attack, and Darlene is like in hiding. Yet she's just visiting him in the hospital, and they're not in FBI custody?

Yael: Yes, we all know that you'll never be off the hook for hacking, even if you do save the world. (cc: Marcus Hutchins).

Em: The show was kind of really shitty in terms of trans and queer representation. Consistently so.

Yael: Please go off.

Em: The only trans character was motivated to commit mass murder over decades due to her dysphoria. They linked it directly to her being trans. The producers misgendered White Rose on at least one occasion in public materials, after the reveal of who she was. Dom and Darlene got fxcked in how their story was handled and told. The only queer and trans characters were either villains BECAUSE they were queer and trans or the show went out of its way to deny them any sort of happy ending, or even closure. What happens with Dom? No one knows, and the show didn't care.

Yael: I touched on this a tiny bit last episode, but Janus reminded me of Hot Carla.

Em: Hot Carla at least got a bit more rep than what was in the show itself, but still. She was treated with more dignity than White Rose, even if I'm not sure if we actually saw her or not. Hot Carla was a prisoner who was treated somewhat as a joke by the show, from what I recall. She wasn't a character, she was a plot device and a joke.

Yael: I was expecting the Dom/Darlene situation to resolve, but yeah, they really did leave that stupid airport thing unfinished. I was disappointed with the ending tbh.

Micah: I'm glad we finally got to understand all of Elliot's various personalities, and that the entire show was really about Mastermind Elliot.

Em: His main personalities, we didn't get to understand all of them. We (the viewers) are a collective alter and the final alter of the Dr. was never explained or addressed, that was just a 'form that was chosen,' but we sort of did get the others explained, even if it was more of a "tell don't show" situation, unless you count retroactive stuff, which I hesitate to.

Yael: I agree, not the best ending, and beating WhiteRose ended up seeming a bit anticlimactic. But overall, it’s been a great four and a half years. Goodbye, friends.

Hackers Discuss the ‘Mr. Robot’ Series Finale syndicated from https://triviaqaweb.wordpress.com/feed/


Text

Dark Patterns: why do companies pursue strategies that make their customers regret doing business? #1yrago

In this 30-minute video, Harry Brignull rounds up his work on cataloging and unpicking "Dark Patterns," the super-optimized techniques used by online services to lure their customers into taking actions they would not otherwise take and will later regret.

Dark patterns are everywhere, and once you know what they are, they're easier to spot and counter.

Most interesting is how businesses decide that dark patterns are the right way to conduct their affairs, despite the long-term risks of alienating customers who've been induced to make decisions that harm them and benefit the companies: it's a combination of machine-optimization and short-term thinking, both of which are endemic in the super-financialized world of online business.

Yael Grauer spoke to a good cross-section of designers and business-people about Brignull's work:

https://boingboing.net/2016/07/28/dark-patterns-why-do-companie.html


Text

Facebook is not equipped to stop the spread of authoritarianism

Yael Grauer Contributor

Yael Grauer is an independent tech journalist and investigative reporter based in Phoenix. She’s written for The Intercept, Ars Technica, Breaker, Motherboard, WIRED, Slate and more.

After the driver of a speeding bus ran over and killed two college students in Dhaka in July, student protesters took to the streets. They forced the ordinarily disorganized local traffic to drive in strict lanes and stopped vehicles to inspect license and registration papers. They even halted the vehicle of the chief of the Bangladesh Police Bureau of Investigation and found that his license was expired. And they posted videos and information about the protests on Facebook.

The fatal road accident that led to these protests was hardly an isolated incident. Dhaka, Bangladesh’s capital, which was ranked the second least livable city in the world in the Economist Intelligence Unit’s 2018 global liveability index, scored 26.8 out of 100 in the infrastructure category included in the rating. But the regional government chose to stifle the highway safety protests anyway. It went so far as to raid residential areas adjacent to universities to check social media activity, leading to the arrest of 20 students. Although there were many images of Bangladesh Chhatra League, or BCL men, committing acts of violence on students, none of them were arrested. (The BCL is the student wing of the ruling Awami League, one of the major political parties of Bangladesh.)

Students were forced to log into their Facebook profiles and were arrested or beaten for their posts, photographs and videos. In one instance, BCL men called three students into the dorm’s guest room, quizzed them over Facebook posts, beat them, then handed them over to police. They were reportedly tortured in custody.

A pregnant school teacher was arrested and jailed for just over two weeks for “spreading rumors” due to sharing a Facebook post about student protests. A photographer and social justice activist spent more than 100 days in jail for describing police violence during these protests; he told reporters he was beaten in custody. And a university professor was jailed for 37 days for his Facebook posts.

A Dhaka resident who spoke on the condition of anonymity out of fear for their safety said that the crackdown on social media posts essentially silenced student protesters, many of whom entirely removed photos, videos and status updates about the protests from their profiles. While the person thought that students were continuing to be arrested, they said, “nobody is talking about it anymore — at least in my network — because everyone kind of ‘got the memo,’ if you know what I mean.”

This isn’t the first time Bangladeshi citizens have been arrested for Facebook posts. As just one example, in April 2017, a rubber plantation worker in southern Bangladesh was arrested and detained for three months for liking and sharing a Facebook post that criticized the prime minister’s visit to India, according to Human Rights Watch.

Bangladesh is far from alone. Government harassment to silence dissent on social media has occurred across the region, and in other regions as well — and it often comes hand-in-hand with governments filing takedown requests with Facebook and requesting data on users.

Facebook has removed posts critical of the prime minister in Cambodia and reportedly “agreed to coordinate in the monitoring and removal of content” in Vietnam. Facebook was criticized for not stopping the repression of Rohingya Muslims in Myanmar, where military personnel created fake accounts to spread propaganda, which human rights groups say fueled violence and forced displacement. Facebook has since undertaken a human rights impact assessment in Myanmar, and it also took down coordinated inauthentic accounts in the country.

UNITED STATES – APRIL 10: Facebook CEO Mark Zuckerberg testifies during the Senate Commerce, Science and Transportation Committee and Senate Judiciary Committee joint hearing on “Facebook, Social Media Privacy, and the Use and Abuse of Data” on Tuesday, April 10, 2018. (Photo By Bill Clark/CQ Roll Call)

Protesters scrubbing Facebook data for fear of repercussion isn’t uncommon. Over and over again, authoritarian-leaning regimes have utilized low-tech strategies to quell dissent. And aside from providing resources related to online privacy and security, Facebook still has little in place to protect its most vulnerable users from these pernicious efforts. As various countries pass laws calling for a local presence and increased regulation, it is possible that the social media conglomerate doesn’t always even want to.

“In many situations, the platforms are under pressure,” said Raman Jit Singh Chima, policy director at Access Now. “Tech companies are being directly sent takedown orders, user data requests. The danger of that is that companies will potentially be overcomplying or responding far too quickly to government demands when they are able to push back on those requests,” he said.

Elections are often a critical moment for oppressive behavior from governments — Uganda, Chad and Vietnam have specifically targeted citizens — and candidates — during election time. Facebook announced just last Thursday that it had taken down nine Facebook pages and six Facebook accounts for engaging in coordinated inauthentic behavior in Bangladesh. These pages, which Facebook believes were linked to people associated with the Bangladesh government, were “designed to look like independent news outlets and posted pro-government and anti-opposition content.” The sites masqueraded as news outlets, including fake BBC Bengali, BDSNews24 and Bangla Tribune and news pages with Photoshopped blue checkmarks, according to the Atlantic Council’s Digital Forensic Research Lab.

Still, the imminent election in Bangladesh doesn’t bode well for anyone who might wish to express dissent. In October, a digital security bill that regulates some types of controversial speech was passed in the country, signaling to companies that as the regulatory environment tightens, they too could become targets.

More restrictive regulation is part of a greater trend around the world, said Naman M. Aggarwal, Asia policy associate at Access Now. Some countries, like Brazil and India, have passed “fake news” laws. (A similar law was proposed in Malaysia, but it was blocked in the Senate.) These types of laws are frequently followed by content takedowns. (In Bangladesh, the government warned broadcasters not to air footage that could create panic or disorder, essentially halting news programming on the protests.)

Other governments in the Middle East and North Africa — such as Egypt, Algeria, United Arab Emirates, Saudi Arabia and Bahrain — clamp down on free expression on social media under the threat of fines or prison time. And countries like Vietnam have passed laws requiring social media companies to localize their storage and have a presence in the country — typically an indication of greater content regulation and pressure on the companies from local governments. In India, WhatsApp and other financial tech services were told to open offices in the country.

And crackdowns on posts about protests on social media come hand-in-hand with government requests for data. Facebook’s biannual transparency report provides detail on the percentage of government requests with which the company complies in each country, but most people don’t know until long after the fact. Between January and June, the company received 134 emergency requests and 18 legal processes from Bangladeshi authorities for 205 users or accounts. Facebook turned over at least some data in 61 percent of emergency requests and 28 percent of legal processes.

Facebook said in a statement that it “believes people deserve to have a voice, and that everyone has the right to express themselves in a safe environment,” and that it handles requests for user data “extremely carefully.”

The company pointed to its Facebook for Journalists resources and said it is “saddened by governments using broad and vague regulation or other practices to silence, criminalize or imprison journalists, activists, and others who speak out against them,” but the company said it also helps journalists, activists and other people around the world to “tell their stories in more innovative ways, reach global audiences, and connect directly with people.”

But there are policies that Facebook could enact that would help people in these vulnerable positions, like allowing users to post anonymously.

“Facebook’s real names policy doesn’t exactly protect anonymity, and has created issues for people in countries like Vietnam,” said Aggarwal. “If platforms provide leeway, or enough space for anonymous posting, and anonymous interactions, that is really helpful to people on the ground.”

BERLIN, GERMANY – SEPTEMBER 12: A visitor uses a mobile phone in front of the Facebook logo at the #CDUdigital conference on September 12, 2015 in Berlin, Germany. (Photo by Adam Berry/Getty Images)

A German court in February found the policy illegal under its decade-old privacy law. Facebook said it plans to appeal the decision.

“I’m not sure if Facebook even has an effective strategy or understanding of strategy in the long term,” said Sean O’Brien, lead researcher at Yale Privacy Lab. “In some cases, Facebook is taking a very proactive role… but in other cases, it won’t.” In any case, these decisions require a nuanced understanding of the population, culture, and political spectrum in various regions — something it’s not clear Facebook has.

Facebook isn’t responsible for government decisions to clamp down on free expression. But the question remains: How can companies stop assisting authoritarian governments, inadvertently or otherwise?

“If Facebook knows about this kind of repression, they should probably have… some sort of mechanism to at the very least heavily try to convince people not to post things publicly that they think they could get in trouble for,” said O’Brien. “It would have a chilling effect on speech, of course, which is a whole other issue, but at least it would allow people to make that decision for themselves.”

This could be an opt-in feature, but O’Brien acknowledges that it could create legal liabilities for Facebook and could lead the social media giant to create lists of “dangerous speech” or profiles of “dissidents,” which it could theoretically shut down or report to the police. Still, Facebook could consider rolling out a “speech alert” feature to an entire city or country if that area becomes politically volatile and dangerous for speech, he said.

O’Brien says that social media companies could consider responding to situations where a person is being detained illegally and potentially coerced into giving their passwords in a way that could protect them, perhaps by triggering a temporary account reset or freeze to prevent anyone from accessing the account without proper legal process. Some actions that might trigger the reset or freeze could be news about an individual’s arrest — if Facebook is alerted to it, contact from the authorities, or contact from friends and loved ones, as evaluated by humans. There could even be a “panic button” type trigger, like Guardian Project’s PanicKit, but for Facebook — allowing users to wipe or freeze their own accounts or posts tagged preemptively with a code word only the owner knows.

“One of the issues with computer interfaces is that when people log into a site, they get a false sense of privacy even when the things they’re posting in that site are widely available to the public,” said O’Brien. Case in point: this year, women anonymously shared their experiences of abusive co-workers in a shared Google Doc — the so-called “Shitty Media Men” list, likely without realizing that a lawsuit could unmask them. That’s exactly what is happening.

Instead, activists and journalists often need to tap into resources and get assistance from groups like Access Now, which runs a digital security helpline, and the Committee to Protect Journalists. These organizations can provide personal advice tailored to a specific country and situation. Activists can access Facebook over the Tor anonymity network and use VPNs, end-to-end encrypted messaging tools, and non-phone-based two-factor authentication methods. But many may not realize what the threat is until it’s too late.

The violent crackdown on free speech in Bangladesh accompanied government-imposed internet restrictions, including the throttling of internet access around the country. Users at home with a broadband connection did not feel the effects of this, but “it was the students on the streets who couldn’t go live or publish any photos of what was going on,” the Dhaka resident said.

Elections will take place in Bangladesh on December 30.

In the few months leading up to the election, Access Now says it has noticed an increase in Bangladeshi residents expressing concern that their data has been compromised and seeking assistance from its Digital Security Helpline.

Other rights groups have also found an uptick in malicious activity.

Meenakshi Ganguly, South Asia director at Human Rights Watch, said in an email that the organization is “extremely concerned about the ongoing crackdown on the political opposition and on freedom of expression, which has created a climate of fear ahead of national elections.”

Ganguly cited politically motivated cases against thousands of opposition supporters, many of whom have been arrested, as well as attacks on candidates.

Human Rights Watch issued a statement about the situation, warning that the Rapid Action Battalion, a “paramilitary force implicated in serious human rights violations including extrajudicial killings and enforced disappearances,” has been “tasked with monitoring social media for ‘anti-state propaganda, rumors, fake news, and provocations.’” This is in addition to a nine-member monitoring cell and around 100 police teams dedicated to quashing so-called “rumors” on social media, amid the looming threat of news website shutdowns.

“The security forces continue to arrest people for any criticism of the government, including on social media,” Ganguly said. “We hope that the international community will urge the Awami League government to create conditions that will uphold the rights of all Bangladeshis to participate in a free and fair vote.”

Text

Technology Can’t Predict Crime, It Can Only Weaponize Proximity to Policing

Special thanks to Yael Grauer for additional writing and research.

In June 2020, Santa Cruz, California became the first city in the United States to ban municipal use of predictive policing, a method of deploying law enforcement resources according to data-driven analytics that supposedly are able to predict perpetrators, victims, or locations of future crimes. Especially interesting is that Santa Cruz was one of the first cities in the country to experiment with the technology when it piloted, and then adopted, a predictive policing program in 2011. That program used historic and current crime data to break down some areas of the city into 500 foot by 500 foot blocks in order to pinpoint locations that were likely to be the scene of future crimes. However, after nine years, the city council voted unanimously to ban it over fears of how it perpetuated racial inequality.

Predictive policing is a self-fulfilling prophecy. If police focus their efforts in one neighborhood and arrest dozens of people there during the span of a week, the data will reflect that area as a hotbed of criminal activity. The system also considers only reported crime, which means that neighborhoods and communities where the police are called more often might see a higher likelihood of having predictive policing technology concentrate resources there. This system is tailor-made to further victimize communities that are already overpoliced—namely, communities of color, unhoused individuals, and immigrants—by using the cloak of scientific legitimacy and the supposed unbiased nature of data.

Santa Cruz’s experiment, and eventual banning of the technology, is a lesson to the rest of the country: technology is not a substitute for community engagement and holistic crime reduction measures. The more police departments rely on technology to dictate where to focus efforts and who to be suspicious of, the more harm those departments will cause to vulnerable communities. That’s why police departments should be banned from using supposedly data-informed algorithms to determine which communities, and even which people, should receive the lion’s share of policing and criminalization.

What Is Predictive Policing?

The Santa Cruz ordinance banning predictive policing defines the technology as “software that is used to predict information or trends about crime or criminality in the past or future, including but not limited to the characteristics or profile of any person(s) likely to commit a crime, the identity of any person(s) likely to commit crime, the locations or frequency of crime, or the person(s) impacted by predicted crime.”

Predictive policing analyzes a massive amount of information from historical crimes, including the time of day, season of the year, weather patterns, types of victims, and types of location, in order to infer when and in which locations crime is likely to occur. For instance, if a number of crimes have been committed in alleyways on Thursdays, the algorithm might tell a department to dispatch officers to alleyways every Thursday. Of course, this means that police are predisposed to be suspicious of everyone who happens to be in that area at that time.
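To make the place-based approach concrete, here is a minimal Python sketch, not any vendor's actual model: it bins incident coordinates into square grid cells (500 feet on a side, matching the Santa Cruz program described earlier) and ranks cells by how many past incidents were recorded there on a given weekday. The function names, the (x, y, weekday) record format, and the pure counting rule are illustrative assumptions; commercial products reportedly use more elaborate statistical models, but they still take recorded incidents as their input.

```python
from collections import Counter

# Assumed cell size: 500 ft x 500 ft, as in the Santa Cruz program.
CELL_FT = 500


def cell_of(x_ft, y_ft, cell_ft=CELL_FT):
    """Map a point (feet from an arbitrary origin) to its grid cell index."""
    return (int(x_ft // cell_ft), int(y_ft // cell_ft))


def top_hotspots(incidents, weekday, k=3, cell_ft=CELL_FT):
    """Rank grid cells by the number of recorded incidents on `weekday`.

    `incidents` is a list of (x_ft, y_ft, weekday) tuples (a hypothetical
    format). Only *recorded* incidents are counted, so the ranking reflects
    where crime was reported or observed, not where it actually happened.
    """
    counts = Counter(
        cell_of(x, y, cell_ft)
        for x, y, day in incidents
        if day == weekday
    )
    return counts.most_common(k)


if __name__ == "__main__":
    recorded = [
        (120, 80, "Thu"),   # cell (0, 0)
        (450, 300, "Thu"),  # cell (0, 0)
        (700, 100, "Thu"),  # cell (1, 0)
        (130, 90, "Mon"),   # ignored: different weekday
    ]
    print(top_hotspots(recorded, "Thu", k=2))  # [((0, 0), 2), ((1, 0), 1)]
```

The output is nothing more than a ranking of past recorded counts, so any skew in what gets recorded passes straight through into where officers are told to go.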

The less prevalent “person-based” predictive policing works in a similar way. It takes the form of opaque rating systems that assign people a risk value based on a number of data streams, including age, suspected gang affiliation, and the number of times a person has been a victim as well as an alleged perpetrator of a crime. The accumulated total of this data could result in someone being placed on a “hot list,” as happened to over 1,000 people in Chicago who were placed on one such “Strategic Subject List.” As when specific locations are targeted, this technology cannot actually predict crime, and in an attempt to do so, it may expose people to targeted police harassment or surveillance without any actual proof that a crime will be committed.

There is a reason why the use of predictive policing continues to expand despite its dubious foundations: it makes money. Many companies have developed tools for data-driven policing; some of the biggest are PredPol, HunchLab, CivicScape, and Palantir. Academic institutions have also developed predictive policing technologies, such as Rutgers University’s RTM Diagnostics or Carnegie Mellon University’s CrimeScan, which is used in Pittsburgh. Some departments have built such tools with private companies and academic institutions. For example, in 2010, the Memphis Police Department built its own tool, in partnership with the University of Memphis Department of Criminology and Criminal Justice, using IBM SPSS predictive analytics.

As of summer 2020, the technology is used in dozens of cities across the United States.

What Problems Does it Pose?

One of the biggest flaws of predictive policing is the faulty data fed into the system. These algorithms depend on data informing them of where criminal activity has happened to predict where future criminal activity will take place. However, not all crime is recorded—some communities are more likely to report crime than others, some crimes are less likely to be reported than other crimes, and officers have discretion in deciding whether or not to make an arrest. Predictive policing only accounts for crimes that are reported, and concentrates policing resources in those communities, which then makes it more likely that police may uncover other crimes. This all creates a feedback loop that makes predictive policing a self-fulfilling prophecy. As professor Suresh Venkatasubramanian put it:

“If you build predictive policing, you are essentially sending police to certain neighborhoods based on what they told you—but that also means you’re not sending police to other neighborhoods because the system didn’t tell you to go there. If you assume that the data collection for your system is generated by police whom you sent to certain neighborhoods, then essentially your model is controlling the next round of data you get.”
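The loop Venkatasubramanian describes can be reproduced in a few lines. The toy Python simulation below is a hypothetical illustration, not a model of any deployed product: two neighborhoods have identical underlying crime, all patrols each round go to whichever neighborhood has the most recorded crime, and new incidents are recorded only where patrols are sent. The function name, the winner-take-all dispatch rule, and the numbers are assumptions chosen for clarity.

```python
def simulate_feedback(initial_records, detections_per_patrol, patrols_per_round, rounds):
    """Toy model of the predictive-policing feedback loop (hypothetical).

    Each round, every patrol is dispatched to the neighborhood with the most
    recorded crime so far (ties go to the lower index, because max() returns
    the first maximal item). Crime is recorded only where patrols are present,
    so an unvisited neighborhood's count never grows, no matter how much
    crime actually occurs there.
    """
    records = list(initial_records)
    dispatch_history = []
    for _ in range(rounds):
        target = max(range(len(records)), key=lambda i: records[i])
        records[target] += patrols_per_round * detections_per_patrol[target]
        dispatch_history.append(target)
    return records, dispatch_history


if __name__ == "__main__":
    # Both neighborhoods have the same true rate (1 detection per patrol),
    # but neighborhood 0 starts with one extra recorded incident.
    records, history = simulate_feedback(
        initial_records=[6, 5],
        detections_per_patrol=[1, 1],
        patrols_per_round=10,
        rounds=10,
    )
    print(records)  # [106, 5]
    print(history)  # [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
```

A one-incident head start in the historical data is enough for neighborhood 0 to absorb every patrol, while neighborhood 1's count never changes. Deployed systems may allocate patrols less bluntly, but the same dependency, where the model's output determines its next round of input data, is what the quote describes.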

This feedback loop will impact vulnerable communities, including communities of color, unhoused communities, and immigrants.

Police are already policing minority neighborhoods and arresting people for things that may have gone unnoticed or unreported in less heavily patrolled neighborhoods. When this already skewed data is entered into a predictive algorithm, it will deploy more officers to the communities that are already overpoliced.

A recent deep dive into the predictive program used by the Pasco County Sheriff's office illustrates the harms that getting stuck in an algorithmic loop can have on people. After one 15-year-old was arrested for stealing bicycles out of a garage, the algorithm continuously dispatched police to harass him and his family. Over the span of five months, police went to his home 21 times. They showed up at his gym and his parent’s place of work. The Tampa Bay Times revealed that since 2015, the sheriff's office has made more than 12,500 similar preemptive visits on people.

These visits often resulted in other, unrelated arrests that further victimized families and added to the likelihood that they would be visited and harassed again. In one incident, the mother of a targeted teenager was issued a $2,500 fine when police sent to check in on her child saw chickens in the backyard. In another incident, a father was arrested when police looked through the window of the house and saw a 17-year-old smoking a cigarette. These are the kinds of usually unreported crimes that occur in all neighborhoods, across all economic strata, but which only those marginalized people who live under near-constant policing are penalized for.

As experts have pointed out, these algorithms often draw from flawed and non-transparent sources such as gang databases, which have been the subject of public scrutiny due to their lack of transparency and overinclusion of Black and Latinx people. In Los Angeles, for instance, if police notice a person wearing a sports jersey or having a brief conversation with someone on the street, it may be enough to include that person in the LAPD’s gang database. Being included in a gang database often means being exposed to more police harassment and surveillance, and also can lead to consequences once in the legal system, such as harsher sentences. Inclusion in a gang database can impact whether a predictive algorithm identifies a person as being a potential threat to society or artificially projects a specific crime as gang-related. In July 2020, the California Attorney General barred police in the state from accessing any of LAPD’s entries into the California gang database after LAPD officers were caught falsifying data. Unaccountable and overly broad gang databases are the type of flawed data flowing from police departments into predictive algorithms, and exactly why predictive policing cannot be trusted.

To test racial disparities in predictive policing, Human Rights Data Analysis Group (HRDAG) looked at Oakland Police Department’s recorded drug crimes. It used a big data policing algorithm to determine where it would suggest that police look for future drug crimes. Sure enough, HRDAG found that the data-driven model would have focused almost exclusively on low-income communities of color. But public health data on drug users combined with U.S. Census data show that the distribution of drug users does not correlate with the program’s predictions, demonstrating that the algorithm’s predictions were rooted in bias rather than reality.

All of this is why a group of academic mathematicians recently declared a boycott against helping police create predictive policing tools. They argued that their credentials and expertise create a convenient way to smuggle racist ideas about who will commit a crime based on where they live and who they know, into the mainstream through scientific legitimacy. “It is simply too easy,” they write, “to create a ’scientific’ veneer for racism.”

In addition, there is a disturbing lack of transparency surrounding many predictive policing tools. In many cases, it’s unclear how the algorithms are designed, what data is being used, and sometimes even what the system claims to predict. Vendors have sought non-disclosure clauses or otherwise shrouded their products in secrecy, citing trade secrets or business confidentiality. When data-driven policing tools are black boxes, it’s difficult to assess the risks of error rates, false positives, limits in programming capabilities, biased data, or even flaws in source code that affect search results.

For local departments, the prohibitive cost of using these predictive technologies can also be a detriment to the maintenance of civil society. In Los Angeles, the LAPD paid $20 million over the course of nine years to use Palantir’s predictive technology alone. That’s only one of many tools used by the LAPD in an attempt to predict the future.

Finally, predictive policing raises constitutional concerns. Simply living or spending time in a neighborhood or with certain people may draw suspicion from police or cause them to treat people as potential perpetrators. As legal scholar Andrew Guthrie Ferguson has written, there is tension between predictive policing and legal requirements that police possess reasonable suspicion to make a stop. Moreover, predictive policing systems sometimes utilize information from social media to assess whether a person might be likely to engage in crime, which also raises free speech issues.

Technology cannot predict crime, it can only weaponize a person’s proximity to police action. An individual should not have their presumption of innocence eroded because a casual acquaintance, family member, or neighbor commits a crime. This just opens up members of already vulnerable populations to more police harassment, erodes trust between public safety measures and the community, and ultimately creates more danger. This has already happened in Chicago, where the police surveil and monitor the social media of victims of crimes—because being a victim of a crime is one of the many factors Chicago’s predictive algorithm uses to determine if a person is at high risk of committing a crime themselves.

What Can Be Done About It?

As the Santa Cruz ban suggests, cities are beginning to wise up to the dangers of predictive policing. As with the growing movement to ban government use of face recognition and other biometric surveillance, we should also seek bans on predictive policing. Across the country, from San Francisco to Boston, almost a dozen cities have banned police use of face recognition after recognizing its disproportionate impact on people of color, its tendency to falsely accuse people of crimes, its erosion of our presumption of innocence, and its ability to track our movements.

Before predictive policing becomes even more widespread, cities should take advantage of the opportunity to protect the well-being of their residents by passing ordinances that ban the use of this technology or prevent departments from acquiring it in the first place. If your town has legislation like a Community Control Over Police Surveillance (CCOPS) ordinance, which requires elected officials to approve police purchase and use of surveillance equipment, the acquisition of predictive policing technology can be blocked while efforts to ban it are underway.

The lessons from the novella and film Minority Report still apply, even in the age of big data: people are innocent until proven guilty. People should not be subject to harassment and surveillance because of their proximity to crime. For-profit software companies with secretive proprietary algorithms should not be creating black box crystal balls exempt from public scrutiny and used without constraint by law enforcement. It’s not too late to put the genie of predictive policing back in the bottle, and that is exactly what we should be urging local, state, and federal leaders to do.

from Deeplinks https://ift.tt/2EV5PgI

Text

What Does a VPN Security Audit Really Prove? - Magazine PC

It’s hard for experts, let alone consumers, to know which virtual private networks you can trust. Can security audits help? Perhaps, but only if you understand how to interpret them.

By Yael Grauer

Over the years, users have not…

Text

Facebook is not equipped to stop the spread of authoritarianism

Yael Grauer

UNITED STATES – APRIL 10: Facebook CEO Mark Zuckerberg testifies during the Senate Commerce, Science and Transportation Committee and Senate Judiciary Committee joint hearing on “Facebook, Social Media Privacy, and the Use and Abuse of Data” on Tuesday, April 10, 2018. (Photo By Bill Clark/CQ Roll Call)

Protesters scrubbing Facebook data for fear of repercussion isn’t uncommon. Over and over again, authoritarian-leaning regimes have utilized low-tech...

The post Facebook is not equipped to stop the spread of authoritarianism appeared first on Victory Lion.

from WordPress http://bit.ly/2TvwRNb via IFTTT


Text

Facebook is not equipped to stop the spread of authoritarianism

Yael Grauer Contributor

Yael Grauer is an independent tech journalist and investigative reporter based in Phoenix. She has written for (...)

source https://blogia.com.br/-/MjE3Mw==/Facebook+nC3A3o+estC3A1+equipado+para+parar+a+propagaC3A7C3A3o+do+autoritarismo


Text

Facebook is not equipped to stop the spread of authoritarianism

Yael Grauer Contributor


Yael Grauer is an independent tech journalist and investigative reporter based in Phoenix. She’s written for The Intercept, Ars Technica, Breaker, Motherboard, WIRED, Slate and more.

After the driver of a speeding bus ran over and killed two college students in Dhaka in July, student protesters took to the streets. They forced the…


#algeria#asia#bahrain#bangladesh#brazil#cambodia#column#dhaka#east africa#egypt#Facebook#fake news#Google#government#India#internet access#malaysia#Middle East#myanmar#north africa#policy#saudi arabia#security#social#social-media#uganda#vietnam#whatsapp#world wide web


Text

Facebook is not equipped to stop the spread of authoritarianism

Yael Grauer Contributor


Yael Grauer is an independent tech journalist and investigative reporter based in Phoenix. She’s written for The Intercept, Ars Technica, Breaker, Motherboard, WIRED, Slate and more.

After the driver of a speeding bus ran over and killed two college students in Dhaka in July, student protesters took…



Text

Hackers Dissect ‘Mr. Robot’ Season 4 Episode 11: ‘Exit’

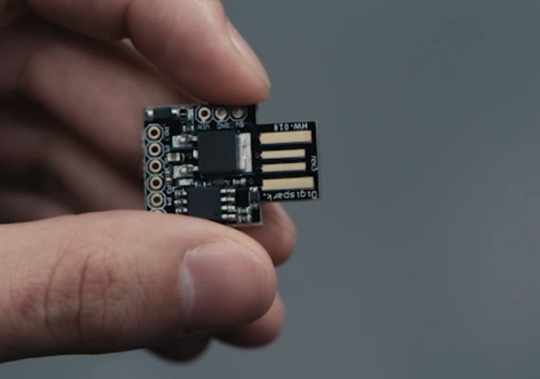

Every week, a roundtable of hackers discusses the latest episode of Mr. Robot. Episode 11 of Mr. Robot’s final season had one last attempted hack, so we discussed [SPOILERS, obvs] rubber duckies, Stuxnet, text/graphics games, parallel universes, and more. (The chat transcript has been edited for brevity, clarity, and chronology.)

This week’s team of experts includes:

Freddy Martinez: a technologist and public records expert. He serves as a Director for the Chicago-based Lucy Parsons Labs.

Harlo Holmes: Director of Digital Security at Freedom of the Press Foundation.

Trammell Hudson: a security researcher who likes to take things apart.

Yael Grauer (moderator): an investigative tech reporter covering online privacy and security, digital freedom, mass surveillance and hacking.

One More Hack

Yael: So I thought it was interesting that there was just one more hack. This is how criminals get caught: they just want to do one more job. And then one more job. And then another job.

Trammell: Yes, the "one more hack" thing is why I go to bed far too late many nights.

And why vacations without projects can feel almost like withdrawal. "I'm not good with computers, I'm just really bad at giving up."

Yael: Right, like that except with the added risk and adrenaline rush of breaking the law. (Allegedly.) I think he really does think he'll be done after this hack.

Trammell: He used a Digispark to sequence things. It's an Arduino-compatible ATtiny85 board that can pretend to be a USB device. One common use is as a "rubber ducky" that stores lots of keystrokes so the attacker doesn't have to carefully type the commands. That seems to be how he used it: he plugged it in and it started (slowly) sending commands while he watched. I didn't catch all of them; there was some sort of PowerShell invocation and then the fuxor program to encrypt the /bin directory (after gathering entropy).
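A rough illustration of what "storing keystrokes" means at the USB level, assuming the device enumerates as a standard HID keyboard using the boot-protocol report format (8 bytes: a modifier byte, a reserved byte, then up to six key usage IDs). The episode doesn't show the Digispark's actual payload, and on real hardware this encoding is normally handled by a keyboard library inside the Arduino sketch; the function names and the small US-layout character table here are assumptions made for this Python sketch.

```python
# Sketch: encode a stored command string as USB HID boot-keyboard reports,
# the way a keystroke-injection ("rubber ducky") device replays typing.
# Report layout: [modifiers, reserved, key1, key2, key3, key4, key5, key6]

LEFT_SHIFT = 0x02    # modifier bit for Left Shift
RELEASE = bytes(8)   # all-zero report = every key released

# Usage IDs from the HID Keyboard/Keypad page (US layout assumed; partial table)
KEYCODES = {chr(ord("a") + i): 0x04 + i for i in range(26)}     # a..z -> 0x04..0x1D
KEYCODES.update({str(d): 0x1E + d - 1 for d in range(1, 10)})   # 1..9 -> 0x1E..0x26
KEYCODES.update({"0": 0x27, "\n": 0x28, " ": 0x2C, "-": 0x2D, ".": 0x37, "/": 0x38})


def char_to_report(ch: str) -> bytes:
    """Return the 8-byte 'key down' report for a single character."""
    modifiers = 0
    if ch.isalpha() and ch.isupper():
        modifiers = LEFT_SHIFT  # uppercase = Shift + the lowercase key
        ch = ch.lower()
    if ch not in KEYCODES:
        raise ValueError(f"no mapping for {ch!r} in this sketch")
    return bytes([modifiers, 0, KEYCODES[ch], 0, 0, 0, 0, 0])


def string_to_reports(text: str) -> list[bytes]:
    """Encode text as press/release report pairs, one pair per character."""
    reports = []
    for ch in text:
        reports.append(char_to_report(ch))
        reports.append(RELEASE)
    return reports


reports = string_to_reports("ls /bin\n")
print(len(reports))      # 16: one press and one release per character
print(reports[0].hex())  # 00000f0000000000 ('l' is usage ID 0x0F)
```

Sending each press followed by an all-zero release report is the simplest way to make repeated characters register as separate keystrokes; pacing those reports is also why such payloads can appear to "type" slowly on screen.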

Image: USA

Harlo: Yeah, similar to a ransomware attack! Which is kind of prevalent nowadays, targeting civic infrastructure.

Trammell: When Mr. Robot said "that would take forever to write," I was hoping that Elliot would reply, "I've reused most of the Stuxnet code.” (Although the Stuxnet virus targeted uranium refinement for nuclear weapons, rather than nuclear power plants.)

Yael: Is Stuxnet code public?

Freddy: Some of it is, other parts are not. I think some Stuxnet code is still technically classified.

Trammell: The NSA/CIA/Mossad were just giving away the Stuxnet binaries for free!

Freddy: Yeah, they were "giving away" the Stuxnet code; all you needed to qualify is an emerging nuclear enrichment program.

Trammell: Not only was it free, they even offered a complimentary on-site installation by a Dutch technician.

A Dutch newspaper featuring the Stuxnet attack. (Image: de Volkskrant)

Ideology

Yael: I thought the conversation between Whiterose and Elliot was super interesting, about who is more hateful. Because I think it's easy to think you're doing stuff to help people when you actually dehumanize big swaths of the population.

Trammell: Very few people seem to ask, "Are we the baddies?"

Yael: Haha, they should just go on Reddit. /r/AmItheAsshole? I thought it was problematic that the one trans character on the show decides mass murder is the answer due to gender dysphoria. [ed: Hey, don’t forget about Hot Carla! She is good and pure!]

Though in Whiterose’s mind, it wasn’t mass murder. It was "helping people." Or something. But also, it was funny for Whiterose to be like, "you are hateful, your name is FSociety" after killing dozens of people in the plant. Or ordering their killing, anyway. "No, actually, you are the real hater."

The Hack Attempt

Yael: Can someone explain the tech to me? Does it matter at what point you start the program and the malware is put in? And how does this lead to a nuclear meltdown?

Harlo: This is not a Stuxnet-like attack, I don't think. I think Elliot just encrypted /bin on some remote computer, which is nowhere near as sophisticated.

Yael: Anyone who wants to learn more about Stuxnet should read Kim Zetter’s book on it, Countdown to Zero Day.

Trammell: I think the meltdown was inevitable when Whiterose switched on the machine; the malware didn't have any effect.

Yael: Okay, so Elliot got there too late. Sorry, Elliot.

Harlo: Get Kim Zetter on the phone. I was just about to say, it was like bringing a knife to a gunfight. What Elliot was trying to do reminds me more of the rash of attacks against municipalities (see New Orleans just a few days ago, Baltimore last summer; countless school districts). Which is ridiculously effective, but nowhere near the sophistication of an attack like Stuxnet. Thus, knives at a gunfight. Yet, the scary thing is, these knives are proving HORRIFICALLY effective.

Trammell: On the rubber ducky: that's bad opsec by the plant. Allowing random USB devices, even HID input devices, is a bad plan for security.

Yael: How do you disallow USB devices from getting plugged in?

Trammell: You can set up allowed-lists or banned-lists in most OSes so that only permitted devices can be used. You can still spoof it if you know what is allowed though; my homebrew keyboards all claim to be generic 101-key Microsoft or Dell keyboards so that they are likely to be permitted.
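To make the spoofing point concrete, here is a minimal sketch of an allowlist keyed on the vendor and product IDs a USB device reports about itself. It assumes the policy checks only those two IDs (real OS policies can also match device class, serial number, or port); 0x045E is Microsoft's USB vendor ID, while the product IDs and the `UsbDevice`/`is_permitted` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsbDevice:
    vendor_id: int   # VID as reported by the device itself
    product_id: int  # PID as reported by the device itself
    label: str = ""


# Hypothetical allowlist of (VID, PID) pairs permitted on this machine.
# 0x045E is Microsoft's USB vendor ID; 0x1234 is an illustrative product ID.
ALLOWED: set[tuple[int, int]] = {(0x045E, 0x1234)}


def is_permitted(dev: UsbDevice) -> bool:
    """Allowlist check: the host can only compare the IDs the device claims."""
    return (dev.vendor_id, dev.product_id) in ALLOWED


genuine = UsbDevice(0x045E, 0x1234, "issued office keyboard")
spoofed = UsbDevice(0x045E, 0x1234, "homebrew keyboard reporting the same IDs")
unknown = UsbDevice(0xABCD, 0x0001, "unlisted device (hypothetical IDs)")

for dev in (genuine, spoofed, unknown):
    print(f"{dev.label}: {'allowed' if is_permitted(dev) else 'blocked'}")
```

The genuine and homebrew keyboards are indistinguishable to this check because both report the same IDs, which is exactly why a homebrew board that claims to be a generic Microsoft or Dell keyboard tends to get through.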

Freddy: At some companies, they have rules that send an alert when a USB device is plugged in, and then a security person shows up to talk to you.
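One simple way such an alert rule could work is by comparing snapshots of connected devices and flagging anything new. The sketch below assumes snapshots are already collected as a mapping from port path to (VID, PID); how they are gathered (udev events, sysfs, or OS APIs) is outside its scope, and the port names and IDs are hypothetical.

```python
# Hypothetical snapshot type: port path -> (VID, PID) of the device on that port.
Snapshot = dict[str, tuple[int, int]]


def detect_insertions(before: Snapshot, after: Snapshot) -> list[str]:
    """Return an alert message for each port that gained a device between snapshots."""
    alerts = []
    for port in sorted(after.keys() - before.keys()):
        vid, pid = after[port]
        alerts.append(f"ALERT: USB device {vid:04x}:{pid:04x} connected on port {port}")
    return alerts


before = {"1-1": (0x045E, 0x1234)}
after = {"1-1": (0x045E, 0x1234), "1-2": (0xABCD, 0x0001)}
for message in detect_insertions(before, after):
    print(message)  # ALERT: USB device abcd:0001 connected on port 1-2
```

Keying on port rather than on device IDs means two identical keyboards still raise two alerts; a limitation of polling is that a device swapped on the same port between snapshots goes unnoticed, which is one reason event-driven hooks are generally preferred.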

Yael: Maybe that person got shot, though.

The Game

Yael: I thought it was so weird that Elliot didn’t think it was strange that the people doing security for the site just, like, weren’t there.

Trammell: When it turned into a first-person shooter game with all of the empty rooms and unlocked computers, it seemed like Elliot should have realized something wasn't quite right. Whiterose totally pulled an Ozymandias on Elliot.

Yael: The game reminded me of one I played as a kid, but I can't remember what it was.

Trammell: The game was very much inspired by the text/graphics games of the era, although the parser was much more sophisticated than the Z-machine of that time.

Harlo: I have an AWESOME BOOK FOR YOU TO PLUG. Twisty Little Passages by Nick Montfort. It's a media archeology about interactive text-based games.

Yael: It seemed like there was no way for Elliot to win the game.

Freddy: As with all nuclear policy, the only winning move is not to play.

Image: USA

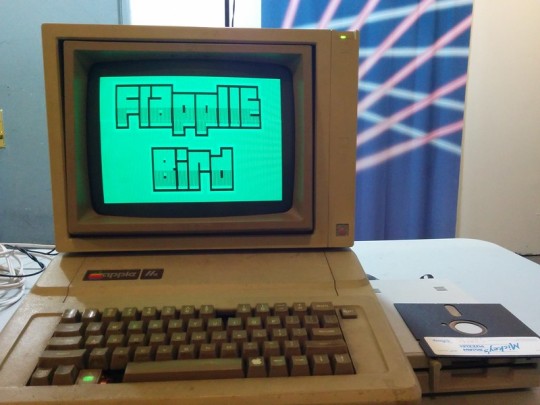

Trammell: The Apple //e in the episode came with a lowercase character generator built in, so that part was realistic. I wonder if they wrote the game and ran it on real hardware. A few years ago at the NYC Resistor Interactive Show, we had Flapple Bird, a real retro game for the Apple 2.

Yael: Did anyone catch the door code?

Trammell: The door code was 0509, the date of the hack.

Flapple Bird (Image: Trammell Hudson)

Parallel Universe

Yael: The episode ends with the weird parallel universe. Do you guys think that is actually happening? Or is it in Elliot's mind? (I've been watching The Runaways, so it seemed so… redundant, lol.)

Harlo: I got a kinda Rick and Morty vibe.

Trammell: “Did you know that the first Matrix was designed to be a perfect human world, where none suffered, where everyone would be happy? It was a disaster…”

Freddy: It reminded me of the scene where Elliot becomes a normie.

Yael: I didn’t like preppie Elliot, to be honest. He was a little annoying.

Harlo: Maybe this is what Whiterose was talking about in her vision of the better life?

Notice there's no Darlene? What does that mean?

Yael: IDK. I don't want to be in a parallel universe without Darlene, though.

Trammell: Yeah, why didn't she come along to the new world?

Yael: Even Tyrell was there.

Trammell: Tyrell in a hoodie, just like he wanted.

Freddy: Tyrell being a cool CEO like Mark Zuckerberg.

Harlo: Maybe because Darlene's not dead yet?

Trammell: It seems that Tyrell knew that this was a reboot and was trying to figure out if Elliot knew as well. Was Elliot "forgetting his wallet" an attempt to test the new rules of the perfect world?

Freddy: I thought so too, Trammell!

Yael: If this is how Elliot really dies, it's sad. And almost anti-climactic, to be honest.

Harlo: I just hope the next episode is a real-life Rick and Morty crossover.

Hackers Dissect ‘Mr. Robot’ Season 4 Episode 11: ‘Exit’ syndicated from https://triviaqaweb.wordpress.com/feed/
