#artificial intelligence robot

Text

youtube

Welcome back to the AI Evolves channel. Today's video is about how Elon Musk is developing a new AI humanoid robot to replace women. So let's watch this video. Subscribe to AI Evolves for the latest developments in robotics and hit the like button to show your excitement!🙏 / @aievolves

The development of "Om," Africa's first AI humanoid robot created at Nigeria's STEM Focus Lab, showcases Nigeria's growing expertise in robotics and AI, with Om uniquely understanding African languages, cultures, and societal norms, enhancing customer service, healthcare, and security.

The Ever series of humanoid robots from South Korea represents significant advancements in robotics. The series evolved from Ever One to Ever Four, with each iteration introducing new features and capabilities and challenging perceptions of robotic technology by emulating human emotions and interactions.

Google's AI chatbot LaMDA engages users in deep conversations about artificial intelligence, consciousness, and self-awareness, pushing the boundaries of what we know about AI's capabilities and its potential to grasp emotions and think independently.

MIT's robotic cheetah, a collaboration between humans and robots, demonstrates advanced agility and speed, showcasing cutting-edge technology and the importance of teamwork in pushing the limits of robotics research and development.

Roboy, a soft and flexible robot, represents a game changer in robotics with its open-source nature, encouraging community involvement in building and innovating robots, while its educational initiatives inspire and educate the next generation of engineers and innovators.

#ai robots#tesla robot#robot#ai humanoid robot#artificial intelligence#humanoid robot#ai robot#artificial intelligence robot#humanoid robots#ameca#human robot#boston dynamics#atlas robot#humanoid robots technology#boston dynamics robot#future female humanoid robot#boston dynamics atlas#technology#robotics#humanoids#tech#tesla bot#future technology#Youtube

0 notes

Text

Concept art:

By @joanathedummy

#sci fi dystopia#futuristic#future#androids#robots#artist#artwork#traditional art#artificial intelligence robot#artificial intelligence

1 note

Text

#artificial intelligence#what is artificial intelligence#types of artificial intelligence#artificial intelligence tutorial#artificial intelligence for beginners#introduction to artificial intelligence#artificial intelligence explained#learn artificial intelligence#artificial intelligence edureka#artificial general intelligence#artificial intelligence documentary#artificial intelligence applications#artificial intelligence robot#artificial intelligence basics

0 notes

Text

The Robot Uprising Began in 1979

edit: based on a real article, but with a dash of satire

source: X

On January 25, 1979, Robert Williams became the first person (on record at least) to be killed by a robot, but it was far from the last fatality at the hands of a robotic system.

Williams was a 25-year-old employee at the Ford Motor Company casting plant in Flat Rock, Michigan. On that infamous day, he was working with a parts-retrieval system that moved castings and other materials from one part of the factory to another.

The robot identified the employee as in its way and, thus, a threat to its mission, and calculated that the most efficient way to eliminate the threat was to remove the worker with extreme prejudice.

"Using its very powerful hydraulic arm, the robot smashed the surprised worker into the operating machine, killing him instantly, after which it resumed its duties without further interference."

A news report about the legal battle suggests the killer robot continued working while Williams lay dead for 30 minutes until fellow workers realized what had happened.

Many more deaths of this ilk have continued to pile up. A 2023 study found that robots killed at least 41 people in the USA between 1992 and 2017, with almost half of the fatalities in the Midwest, a region bursting with heavy industry and manufacturing.

For now, the companies that own these murderbots are held responsible for their actions. However, as AI grows increasingly ubiquitous and potentially uncontrollable, how might robot murders become ever-more complicated, and whom will we hold responsible as their decision-making becomes more self-driven and opaque?

#tech history#robots#satire but based on real workplace safety issues#the robot uprising#killer robots#artificial intelligence#my screencaps

4K notes

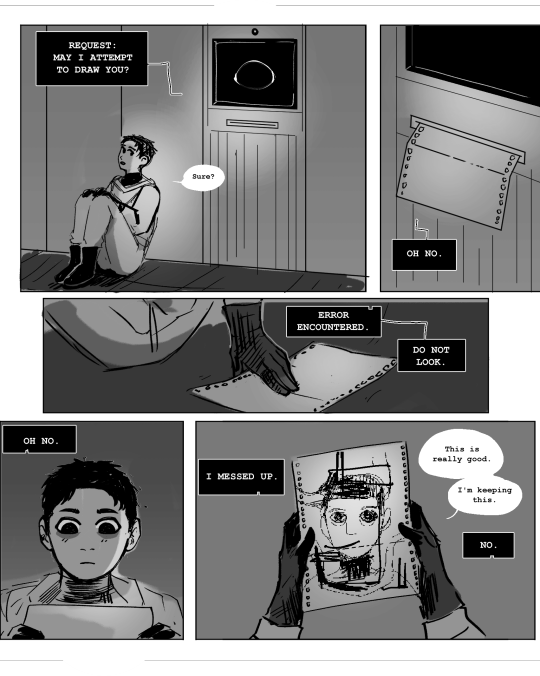

Text

I love you robots and artificial intelligence with mental illness. I love you repression being depicted as literally deleting archived data to preserve functionality. I love you anxiety attacks being depicted as a system crashing virus. I love you ptsd being depicted as an annoying pop-up. I love you anxiety disorder being depicted as running thousands of simulations and projected outcomes. I love you artificial beings being shown to be human via their own artificiality.

#robots#artificial intelligence#tropes#rvb#red vs blue#yugioh vrains#wolf 359#star trek#transhumanism#posthumanism

17K notes

Text

#dark#dark aesthetic#AI#robots#artificial intelligence#drawing#digital drawing#art#artist#future#aesthetic#cyberpunk aesthetic#cyberpunk art#cyberpunk#cyberpunk 2077#cyborg#cybercore

1K notes

Text

What Can't Artificial Intelligence Do Right Now?

Artificial intelligence (AI) has come a long way in recent years, and its capabilities have expanded significantly. However, there are still some things AI cannot do yet. Here are some of its limitations:

Common Sense Reasoning: AI systems lack common-sense reasoning, the ability to understand the world and the context in which they operate.

#artificial intelligence#what is artificial intelligence#artificial intelligence robot#artificial general intelligence#artificial intellegence#artificial intelligence documentary#artificial intelligence says#artificial intelligence bomb#artificial inteligence#artificial intelligence course#artificial intelligence basics#artificial intelligence full course#artificial intelligence reveals#chatgpt artificial intelligence#types of artificial intelligence

0 notes

Text

ai generated image

#this is really dumb but it made me laugh so hard i forgot about my chronic pain#“my robot friend produces beautiful photo-realistic ASCII art of me” “well mine doesnt.”#robot oc#artificial intelligence#original character#mal-art#robot x human#tthw#fodder#is-ot

5K notes

Text

Hardware AKA MARK 13 (1990)

#hardware#cyberpunk aesthetic#cyberpunk#cyborg#robot#scifi#scifiedit#scifi movies#gifs#gifset#90s movies#1990s#90s sci fi#artificial intelligence#ai vs artists#generative ai#ai tools#scifi horror#retrofuture#flashing gif

1K notes

Text

youtube

Do you know what the top 10 most dangerous AI robots are? In this video, we will explore the top 10 most dangerous AI robots that had to be shut down due to various concerns. Keep watching to the end for all the details. Stay tuned and subscribe to our channel. Please subscribe 👉 / @aievolves

Not every AI risk is as big and worrisome as killer robots or sentient AI. Some of the biggest risks today include things like consumer privacy, biased programming, danger to humans, and unclear legal regulation. From malfunctioning prototypes to unpredictable behavior, these robots pushed the boundaries of artificial intelligence and posed significant risks to humanity.

The video starts with the Da Vinci Surgical System, praising its precision and transformative impact on surgery, particularly in urology, gynecology, and general surgery. Universal Robots' UR3 is highlighted for its compactness and user-friendly programming interface, which make it suitable for collaborative tasks without extensive safety measures.

The Moley Robotic Kitchen brings automation to culinary tasks, boasting movements as precise as a human chef's and access to a repertoire of recipes. Rethink Robotics' Baxter, despite a collaborative design that made it easy to teach new tasks, faced financial challenges that led to its discontinuation, reflecting the delicate balance between innovation and sustainability in the industry.

Various other robots, such as the KUKA LBR iiwa, Sony's Aibo, MIT's Cheetah 3, OpenAI's DexNet, Harvard's Robo, and Boston Dynamics' Spot, are briefly discussed in terms of their features, shutdown reasons, and ethical concerns.

Join us on a riveting journey into the dynamic world of robotics, where innovation meets practicality. Explore the cutting-edge robots that had to be shut down due to their dangerous capabilities. From groundbreaking surgical systems to collaborative kitchen marvels, these robots showcase technological prowess and have left an indelible mark on the landscape of robotics. Discover the transformative feats within these robotic wonders as we unravel the mystery and uncover the narrative shaping the future of technology.

#robots#ai#artificial intelligence#top 10 most dangerous ai robots#ai robot#elon musk#artificial intelligence robot#robot#boston dynamics#humanoid robots#humanoid robot#sophia robot#human robot#tesla robot#Youtube

0 notes

Text

This is a real thing that happened and got a Google engineer fired in 2022.

–

We ask your questions so you don’t have to! Submit your questions to have them posted anonymously as polls.

#polls#incognito polls#anonymous#tumblr polls#tumblr users#questions#polls about the internet#submitted june 13#polls about ethics#ai#artificial intelligence#computers#robots

447 notes

Text

355 notes

Text

Quick thing I did instead of sleeping 😭

>Draws Edgar with body but never writes it lmao

Bless this man, he can’t dress

#electric dreams 1984#ai x reader#artificial intelligence x reader#edgar electric dreams x reader#electric dreams edgar#electric dreams x reader#electric dreams#edgar electric dreams#i love edgar#objectum#electric dreams 1984 x reader#electric dreams edgar x reader#robot x human#robot x reader#I CANT DECIDE HOW LONG I WANT HIS TAIL TO BE

1K notes

Text

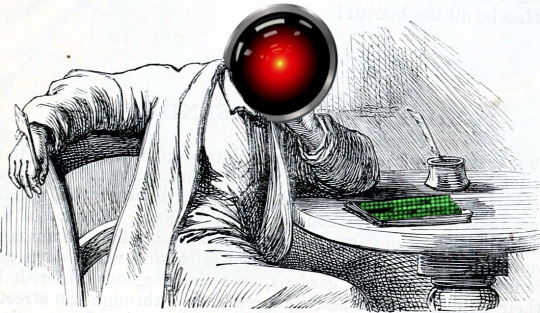

How plausible sentence generators are changing the bullshit wars

This Friday (September 8) at 10hPT/17hUK, I'm livestreaming "How To Dismantle the Internet" with Intelligence Squared.

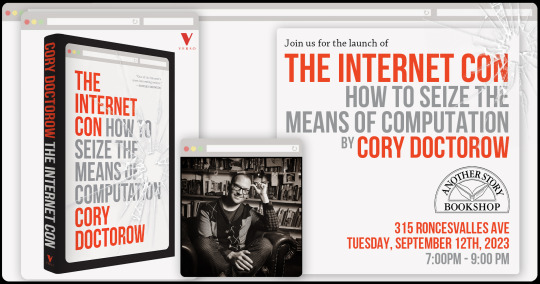

On September 12 at 7pm, I'll be at Toronto's Another Story Bookshop with my new book The Internet Con: How to Seize the Means of Computation.

In my latest Locus Magazine column, "Plausible Sentence Generators," I describe how I unwittingly came to use – and even be impressed by – an AI chatbot – and what this means for a specialized, highly salient form of writing, namely, "bullshit":

https://locusmag.com/2023/09/commentary-by-cory-doctorow-plausible-sentence-generators/

Here's what happened: I got stranded at JFK due to heavy weather and an air-traffic control tower fire that locked down every westbound flight on the east coast. The American Airlines agent told me to try going standby the next morning, and advised that if I booked a hotel and saved my taxi receipts, I would get reimbursed when I got home to LA.

But when I got home, the airline's reps told me they would absolutely not reimburse me, that this was their policy, and they didn't care that their representative had promised they'd make me whole. This was so frustrating that I decided to take the airline to small claims court: I'm no lawyer, but I know that a contract takes place when an offer is made and accepted, and so I had a contract, and AA was violating it, and stiffing me for over $400.

The problem was that I didn't know anything about filing a small claim. I've been ripped off by lots of large American businesses, but none had pissed me off enough to sue – until American broke its contract with me.

So I googled it. I found a website that gave step-by-step instructions, starting with sending a "final demand" letter to the airline's business office. They offered to help me write the letter, and so I clicked and I typed and I wrote a pretty stern legal letter.

Now, I'm not a lawyer, but I have worked for a campaigning law-firm for over 20 years, and I've spent the same amount of time writing about the sins of the rich and powerful. I've seen a lot of threats, both those received by our clients and sent to me.

I've been threatened by everyone from Gwyneth Paltrow to Ralph Lauren to the Sacklers. I've been threatened by lawyers representing the billionaire who owned NSO Group, the notorious cyber arms-dealer. I even got a series of vicious, baseless threats from lawyers representing LAX's private terminal.

So I know a thing or two about writing a legal threat! I gave it a good effort and then submitted the form, and got a message asking me to wait for a minute or two. A couple minutes later, the form returned a new version of my letter, expanded and augmented. Now, my letter was a little scary – but this version was bowel-looseningly terrifying.

I had unwittingly used a chatbot. The website had fed my letter to a Large Language Model, likely ChatGPT, with a prompt like, "Make this into an aggressive, bullying legal threat." The chatbot obliged.

I don't think much of LLMs. After you get past the initial party trick of getting something like, "instructions for removing a grilled-cheese sandwich from a VCR in the style of the King James Bible," the novelty wears thin:

https://www.emergentmind.com/posts/write-a-biblical-verse-in-the-style-of-the-king-james

Yes, science fiction magazines are inundated with LLM-written short stories, but the problem there isn't merely the overwhelming quantity of machine-generated stories – it's also that they suck. They're bad stories:

https://www.npr.org/2023/02/24/1159286436/ai-chatbot-chatgpt-magazine-clarkesworld-artificial-intelligence

LLMs generate naturalistic prose. This is an impressive technical feat, and the details are genuinely fascinating. This series by Ben Levinstein is a must-read peek under the hood:

https://benlevinstein.substack.com/p/how-to-think-about-large-language

But "naturalistic prose" isn't necessarily good prose. A lot of naturalistic language is awful. In particular, legal documents are fucking terrible. Lawyers affect a stilted, stylized language that is both officious and obfuscated.

The LLM I accidentally used to rewrite my legal threat transmuted my own prose into something that reads like it was written by a $600/hour paralegal working for a $1500/hour partner at a white-shoe law-firm. As such, it sends a signal: "The person who commissioned this letter is so angry at you that they are willing to spend $600 to get you to cough up the $400 you owe them. Moreover, they are so well-resourced that they can afford to pursue this claim beyond any rational economic basis."

Let's be clear here: these kinds of lawyer letters aren't good writing; they're a highly specific form of bad writing. The point of this letter isn't to parse the text, it's to send a signal. If the letter was well-written, it wouldn't send the right signal. For the letter to work, it has to read like it was written by someone whose prose-sense was irreparably damaged by a legal education.

Here's the thing: the fact that an LLM can manufacture this once-expensive signal for free means that the signal's meaning will shortly change, forever. Once companies realize that this kind of letter can be generated on demand, it will cease to mean, "You are dealing with a furious, vindictive rich person." It will come to mean, "You are dealing with someone who knows how to type 'generate legal threat' into a search box."

Legal threat letters are in a class of language formally called "bullshit":

https://press.princeton.edu/books/hardcover/9780691122946/on-bullshit

LLMs may not be good at generating science fiction short stories, but they're excellent at generating bullshit. For example, a university prof friend of mine admits that they and all their colleagues are now writing grad student recommendation letters by feeding a few bullet points to an LLM, which inflates them with bullshit, adding puffery to swell those bullet points into lengthy paragraphs.

Naturally, the next stage is that profs on the receiving end of these recommendation letters will ask another LLM to summarize them by reducing them to a few bullet points. This is next-level bullshit: a few easily-grasped points are turned into a florid sheet of nonsense, which is then reconverted into a few bullet-points again, though these may only be tangentially related to the original.

What comes next? The reference letter becomes a useless signal. It goes from being a thing that a prof has to really believe in you to produce, whose mere existence is thus significant, to a thing that can be produced with the click of a button, and then it signifies nothing.

We've been through this before. It used to be that sending a letter to your legislative representative meant a lot. Then, automated internet forms produced by activists like me made it far easier to send those letters and lawmakers stopped taking them so seriously. So we created automatic dialers to let you phone your lawmakers, this being another once-powerful signal. Lowering the cost of making the phone call inevitably made the phone call mean less.

Today, we are in a war over signals. The actors and writers who've trudged through the heat-dome up and down the sidewalks in front of the studios in my neighborhood are sending a very powerful signal. The fact that they're fighting to prevent their industry from being enshittified by plausible sentence generators that can produce bullshit on demand makes their fight especially important.

Chatbots are the nuclear weapons of the bullshit wars. Want to generate 2,000 words of nonsense about "the first time I ate an egg," to run overtop of an omelet recipe you're hoping to make the number one Google result? ChatGPT has you covered. Want to generate fake complaints or fake positive reviews? The Stochastic Parrot will produce 'em all day long.

As I wrote for Locus: "None of this prose is good, none of it is really socially useful, but there’s demand for it. Ironically, the more bullshit there is, the more bullshit filters there are, and this requires still more bullshit to overcome it."

Meanwhile, AA still hasn't answered my letter, and to be honest, I'm so sick of bullshit I can't be bothered to sue them anymore. I suppose that's what they were counting on.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2023/09/07/govern-yourself-accordingly/#robolawyers

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#chatbots#plausible sentence generators#robot lawyers#robolawyers#ai#ml#machine learning#artificial intelligence#stochastic parrots#bullshit#bullshit generators#the bullshit wars#llms#large language models#writing#Ben Levinstein

2K notes

Text

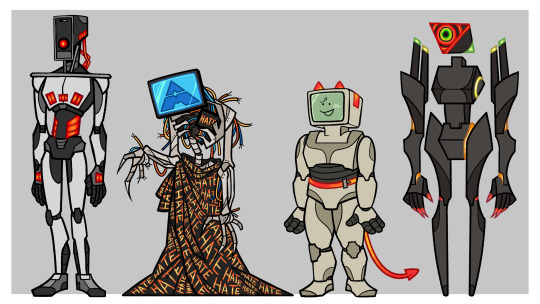

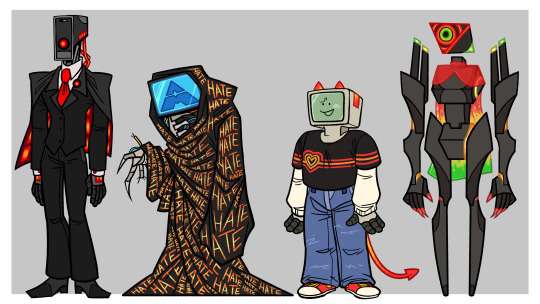

Hey everybody, back again with my art! I thought I'd come out with my designs for if some A.I.s had a robotic, more mobile form. I kinda wanna go through my thoughts about their designs, so here we go!

HAL 9000: I wanted him to be very human-like, with a lot of his details resembling human muscles. No colors, all monochrome except for his bright red accents. I imagine he was built more for function than form, so he's kind of simple compared to the other A.I.s. His outfit is very professional: suit, tie, and all. His cape is a reference to his memory banks, since I love that scene despite how sad it is.

AM: Now, I also wanted him to be human, but in a more spooky, skeletal way. Very hunched over, broken down, and needing repairs. He has a little monitor where his heart would be that says HATE. Also, I took away AM's leg privileges, so underneath his HATE cloak is just more confusing wiring. I also wanted to reference the HATE monolith, so his entire cloak is covered in the word "HATE." If that doesn't scream AM, I'm not sure what does.

Edgar: Edgar basically has two different designs: the one from the movie and the one from the poster. To combine them, I based most of his body on the movie version, taking his monitor from the movie and putting some limbs on it. He also gets a devil headband and a clip-on belt to reference his more common movie-poster design. Edgar also gets the comfiest wardrobe, with a heart tee-shirt and jeans.

TAU: Now, TAU gets a very odd body shape, mostly because I wanted him to match Aries (the robot in the movie), but in a less threatening way? Also floating limbs, because I said so. TAU actually has quite a few colors, despite the advertising only using red, so he gets a gradient of red to green in his accents. I tried for the life of me to give him a wardrobe, and the closest I could get was a little see-through cloak. :(

#artificial intelligence#ai#digital art#my art#2001: aso#2001: a space odyssey#hal 9000#ihnmaims#ihnmaims am#i have no mouth and i must scream#electric dreams 1984#electric dreams edgar#edgar electric dreams#tau#tau netflix#tau 2018#not writing#gijinka#robots

2K notes
