#algorithmic intelligence

Quote

Imagine what it would look like if ChatGPT were a lossless algorithm. If that were the case, it would always answer questions by providing a verbatim quote from a relevant Web page. We would probably regard the software as only a slight improvement over a conventional search engine, and be less impressed by it. The fact that ChatGPT rephrases material from the Web instead of quoting it word for word makes it seem like a student expressing ideas in her own words, rather than simply regurgitating what she’s read; it creates the illusion that ChatGPT understands the material. In human students, rote memorization isn’t an indicator of genuine learning, so ChatGPT’s inability to produce exact quotes from Web pages is precisely what makes us think that it has learned something. When we’re dealing with sequences of words, lossy compression looks smarter than lossless compression.

Ted Chiang’s essay about ChatGPT is required reading
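
Chiang's lossless/lossy distinction can be made concrete with a toy example. Below is a minimal Python sketch, added here as an illustration and not taken from the essay. `zlib` is a real lossless compressor, so decompressing always returns the exact original text. The `lossy` helper is a hypothetical stand-in: it throws away case and punctuation before compressing, so what comes back keeps the words but is no longer a verbatim quote.

```python
import string
import zlib

def lossless(text: str) -> str:
    # zlib (DEFLATE) is lossless: decompress(compress(x)) returns exactly x.
    return zlib.decompress(zlib.compress(text.encode("utf-8"))).decode("utf-8")

def lossy(text: str) -> str:
    # Hypothetical toy lossy step: discard case and punctuation before compressing,
    # so the "decompressed" text keeps the gist but cannot reproduce the original.
    stripped = text.lower().translate(str.maketrans("", "", string.punctuation))
    return zlib.decompress(zlib.compress(stripped.encode("utf-8"))).decode("utf-8")

quote = "Lossy compression looks smarter than lossless compression."
print(lossless(quote) == quote)  # True
print(lossy(quote))              # lossy compression looks smarter than lossless compression
```

Real lossy codecs (JPEG, MP3) discard information far more cleverly, but the trade-off is the same: a smaller representation in exchange for giving up exact reproduction.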

10K notes

Text

how the algorithms are erasing culture & ruining our lives

watch this video by ashley embers, oh my. (link above)

though i don't fully agree with her end statements for this video - it is an important watch since it explains exceptionally well how algorithms work and how they are affecting us (as individuals) and humanity as a whole.

here are some quotes that i adore

-

"... steadily consuming more hours of our day to the point that we're spending more time online than we are offline ... if social media had a net positive effect on our lives, this wouldn't be a problem."

-

"as time goes on we feel more and more of our autonomy being stripped away from us and replaced by an algorithm that claims to know us better than ourselves."

-

"the internet, once celebrated for connecting diverse cultures is now blending said cultures into a monotonous sameness, breaking them down into their lowest common denominator as the algorithm optimized for engagement."

-

"we love to wash our hands from these menial tasks that take mental energy. doing these things by yourself requires you to go out and find what you like whether that's from a bookstore, a group of friends, or an online forum. without the algorithm you would have to navigate your own route, butter up the bank teller or actually organize your inbox for the first time in your life. point being - it all takes intentionality. intentionality, we would love to offload to someone else because we have enough things to worry about, but the truth is the integration of these algorithms wasn't just for our benefit. in fact, the primary incentives of incorporating algorithms don't even take into account what's good for us."

-

"they're not showing you your family and friends, they're showing you newness because the algorithms rely on newness to keep you scrolling. the problem with social networks is that their shareholders require constant growth to sustain themselves and this means that making the same profit every year is actually a failure, even if you're making millions of dollars, and this is why social networks are just not focused on connection anymore when their priority is growth - and what drives more growth; and more profit: more attention. they're coming for every second of your life."

-

i seriously think the video is worth the watch - even if you turn it on in the background whilst you're getting ready to leave the house, or cleaning your room.

let me know what you think if you watch it! conversation about topics like this is just as important as hearing them out. ❤️nene

#that girl#productivity#chaotic academia#student life#study blog#becoming that girl#student#it girl aesthetic#academia#it girl#nenelonomh#stem academia#red academia#romantic academia#academics#university#algorithm#artificial intelligence#technology#internet#video essay#offline#self love#self care#self awareness#self improvement#self reflection#growth#habits#focus

126 notes

Text

Oh good! /s

169 notes

Text

Remember, girls have been programming and writing algorithms way before it was cool!

👩🏻💻💜👩🏾💻

#history#ada lovelace#computers#programing#artificial intelligence#womens history#victorian age#women empowerment#the analytical engine#girls who code#1800s#historical figures#computer history#ai#girl power#technology#empowered women#historical women#algorithm#english history#coding#like a girl#role model#programming#nickys facts

59 notes

Text

Read More Here: Substack

#algorithmic determinism#techcore#philosophy#quote#quotes#ethics#technology#sociology#writers on tumblr#artificial intelligence#duty of care#discrimination#implicit bias#law enforcement#psychologically#computational design#programming#impact

13 notes

Text

Neturbiz Enterprises - AI Innov7ions

Our mission is to provide details about AI-powered platforms across different technologies, each of which offers a unique set of features. The AI industry encompasses a broad range of technologies designed to simulate human intelligence. These include machine learning, natural language processing, robotics, computer vision, and more. Companies and research institutions are continuously advancing AI capabilities, from creating sophisticated algorithms to developing powerful hardware. The AI industry, characterized by the development and deployment of artificial intelligence technologies, has a profound impact on our daily lives, reshaping various aspects of how we live, work, and interact.

#ai technology#Technology Revolution#Machine Learning#Content Generation#Complex Algorithms#Neural Networks#Human Creativity#Original Content#Healthcare#Finance#Entertainment#Medical Image Analysis#Drug Discovery#Ethical Concerns#Data Privacy#Artificial Intelligence#GANs#AudioGeneration#Creativity#Problem Solving#ai#autonomous#deepbrain#fliki#krater#podcast#stealthgpt#riverside#restream#murf

17 notes

Text

Say No to AI - and Revoke the Nobel Prize for these fancy text summary algorithms

#Say No to AI - and Revoke the Nobel Prize for these fancy text summary algorithms#artificial intelligence#anti ai#fuck ai#nobel prize#class war#global warming#extinct animals#ausgov#politas#auspol#tasgov#taspol#australia#fuck neoliberals#neoliberal capitalism#anthony albanese#albanese government

9 notes

Text

Dear Sirs!

(or have some ladies also signed?)

A few days ago, you, Mr Musk, together with Mr Wozniak, Mr Mostaque and other signatories, published an open letter demanding a compulsory pause of at least six months for the development of the most powerful AI models worldwide.

This is the only way to ensure that the AI models contribute to the welfare of all humanity, you claim. As a small part of the whole of humanity, I would like to thank you very much for wanting to protect me. How kind! 🙏🏻

Allow me to make a few comments and ask a few questions in this context:

My first question that immediately came to mind:

Where was your open letter when research for the purpose of warfare started and weapon systems based on AI were developed, leading to unpredictable and uncontrollable conflicts?

AI-based weapons have already been used in wars for some time, e.g. in the war in Ukraine and by Turkey. Speaking of the US, they are upgrading their MQ-9 combat drones with AI and have already used them to kill in Syria, Afghanistan and Iraq.

The victims of these attacks - don't they count as humanity threatened by AI?

I am confused! Please explain to me, when did the (general) welfare of humanity exist, which is now threatened and needs to be protected by you? I mean the good of humanity - outside your "super rich white old nerds Silicon Valley" filter bubble? And I have one more question:

Where was your open letter when Facebook's algorithms led to the spread of hate speech and misinformation about the genocide of Rohingya Muslims in Myanmar?

Didn't the right to human welfare also apply to this population group? Why do you continue to remain silent on the inaction and non-transparent algorithms of Meta and Mr Zuckerberg? Why do you continue to allow hatred and agitation in the social media, which (at least initially) belonged to you without exception?

My further doubt relates to your person and your biography itself, dear Mr Musk.

You, known as a wealthy man with Asperger's syndrome and a penchant for interplanetary affairs, have commendably repeatedly expressed concern about the potentially destructive effects of AI robots in the past. I thank you for trying to save me from such a future. It really is a horrible idea!

And yet, Mr Musk, you yourself were not considered one of the great AI developers of Silicon Valley for a long time.

Your commitment to the field of artificial intelligence was initially rather poor. Your Tesla Autopilot is a remarkable AI software, but it was developed for a rather niche market.

I assume that you, Mr Musk, wanted to change that when you bought 73.5 million of Twitter's shares for almost $2.9 billion in April?

After all, to be able to play along with the AI development of the giants, you lacked one thing above all: access to a broad-based AI that is not limited to specific applications, as well as a comprehensive data set.

The way to access such a dataset was to own a large social network that collects information about the consumption patterns, leisure activities and communication patterns of its users, including their social interactions and political preferences.

Such collections about the behaviour of the rest of humanity are popular in your circles, aren't they?

By buying Twitter stock, you can give your undoubtedly fine AI professionals access to a valuable treasure trove of data and establish yourself as one of Silicon Valley's leading AI players.

Congratulations on your stock purchase and I hope my data is in good hands with you.

Speaking of your professionals, I'm interested to know why your employees have to work so hard when you are so concerned about the well-being of people?

I'm also surprised that after the pandemic your staff were no longer allowed to work in their home offices. Is working at home also detrimental to the well-being of humanity?

In the meantime, you have taken the Twitter platform off the stock market.

It was never about money for you, right? No, you're not like that. I believe you!

But maybe it was about data? Data is often referred to as the "oil of our time". The data of a social network is like a ticket to becoming one of the most important AI developers in the AI market of the future.

At this point, I would like to thank you for releasing parts of Twitter's code for algorithmic timeline control as open source. Thanks to this transparency, I now also know that the Twitter algorithm has a preference for your Elon Musk posts. What an enrichment of my knowledge horizon!

And now, barely a year later, this is happening: OpenAI, a hitherto comparatively small company in which you have only been active as a donor and advisor since your exit in 2018, not only has enormous sources of money, but also the AI gamechanger par excellence: ChatGPT. And virtually overnight it has become one of the most important players in the race for the digital future. It was rumoured that your exit at the time was made with the intention that they would take over the business. Is that true at all?

After all I have said, I am sure you understand why I have these questions for you, don't you?

I would like to know what a successful future looks like in your opinion? I'm afraid I'm not one of those people who can afford a $100,000 ticket to join you in colonising Mars. I will probably stay on Earth.

So far I have heard little, actually nothing, about your investments in climate projects and the preservation of the Earth.

That is why I ask you, as an advocate of all humanity, to work for the preservation of the Earth with all the means at your disposal. That would certainly help.

If you don't want to do that, I would very much appreciate it if you would simply stop worrying about us, the rest of humanity. Perhaps we can manage to protect the world from marauding robots and a powerful artificial intelligence without you, your ambitions and your friends?

I have always been interested in people. That's why I studied social sciences and why today I ask people what they long for. Maybe I'm naive, but I think it's a good idea to ask the people themselves what they want before advocating for them.

The rest of the world, that is, the 99.9 percent who are not billionaires like you, also have visions!

With the respect you deserve,

Susanne Gold

(just one of the remaining 99 percent whose welfare you care about).

#elon musk#open letter#artificial intelligence#chatgpt#science#society#democracy#climate breakdown#space#planet earth#siliconvalley#genocide#war and peace#ai algorithms

244 notes

Text

People have shown up for my Digital Circus posts, but will they show up for my Digital Circus video??

In which I talk about Gummigoo, Ragatha, Pomni’s existential dread, and the dangerous potential of AI. Give it a watch and leave your thoughts.

Do it or I’ll-

#animation#tadc#the amazing digital circus#Pomni#Ragatha#gummygoo#gummigoo#caine#jax#zooble#kinger#gangle#princess loolilalu#ai#artificial intelligence#ragapom#buttonblossom#not a ship video but fuck it we ball#and by ball I mean attempt fruitlessly to game the algorithm#Youtube

23 notes

Text

Thought: we shouldn't be calling all these "AI" things Artificial Intelligence.

Instead, I propose we use the term "Algorithmic Generators", or "AG" for short, for these types of things.

Because that better explains what they actually are, and also doesn't incorrectly peg them as "intelligent" or cause confusion about what AI actually means anymore.

106 notes

Text

Prometheus Gave the Gift of Fire to Mankind. We Can't Give it Back, nor Should We.

AI. Artificial intelligence. Large Language Models. Learning Algorithms. Deep Learning. Generative Algorithms. Neural Networks. This technology has many names, and has been a polarizing topic in numerous communities online. By my observation, a lot of the discussion is focused solely on either A) how to profit off it or B) how to get rid of it and/or protect yourself from it. But I feel both of these perspectives apply a very narrow lens to something that's more than a get-rich-quick scheme or an evil plague to wipe from the earth.

This is going to be long, because as someone whose degree is in psych and computer science, who has been a teacher and a writing tutor for my younger brother, and whose fiance works in freelance data model training... I have a lot to say about this.

I'm going to address the profit angle first, because I feel most people in my orbit (and in related orbits) on Tumblr are going to agree with this: flat out, the way AI is being utilized by large corporations and tech startups (scraping mass amounts of visual and written works without consent and compensation, replacing human professionals in roles from concept art to storyboarding to screenwriting to customer service and more) is unethical and damaging to the wellbeing of people, would-be hires and consumers alike. It wastes energy on dedicated servers running nonstop to generate content that serves no greater purpose, and it is even pressing on already overworked educators, because plagiarism just got a very new, harder-to-identify younger brother that's also infinitely easier to access.

In fact, ChatGPT is such an issue in the education world that plagiarism-detector subscription services, which take advantage of how overworked teachers are, have begun peddling supposed AI detectors to schools and universities. These detectors plainly DO NOT and CANNOT work, because "A Writer Who Writes Surprisingly Well For Their Age" is indistinguishable from "A Language Replicating Algorithm That Followed A Prompt Correctly", just as "A Writer Who Doesn't Know What They're Talking About Or Even How To Write Properly" is indistinguishable from "A Language Replicating Algorithm That Returned Bad Results". What's hilarious is that these "detectors" are themselves run by AI.

(to be clear, I say plagiarism detectors like TurnItIn.com and such are predatory because A) they cost money to access advanced features that B) often don't work properly or as intended, with several false flags, and C) these companies are often super shady behind the scenes; TurnItIn, for instance, has been involved in numerous lawsuits over intellectual property violations, as their services scrape (or hopefully scraped, past tense) the papers submitted to the site without user consent (or under coerced consent if being forced to use it by an educator), which it can use in its own databases as it pleases, such as for training the AI-detecting AI that rarely actually detects AI.)

The prevalence of visual and linguistic generative algorithms is having multiple, overlapping, and complex consequences on many facets of society, from art to music to writing to film and video game production, and even in the classroom before all that, so it's no wonder that many disgruntled artists and industry professionals are online wishing for it all to go away and never come back. The problem is... it can't. I understand that there's likely a large swath of people who already know this, but for those who don't: AI, or as it should more properly be called, generative algorithms, didn't just show up now (they're not even that new), and they certainly weren't developed or invented by any of the tech bros peddling them to megacorps and the general public.

Long before ChatGPT and DALL-E came online, generative algorithms were being used by programmers to simulate natural processes in weather models, shed light on the mechanics of walking for roboticists and paleontologists alike, identify patterns in our DNA related to disease, aid in complex 2D and 3D animation visuals, and so on. Generative algorithms have been a part of the professional world for many years now, and up until recently have been a general force for good, or at the very least a force for the mundane. It's only recently that the technology involved in creating generative algorithms became so advanced AND so readily available that university grad students were able to make the publicly available projects that began this descent into madness.

Does anyone else remember that? That years ago, somewhere in the late 2010s to the beginning of the 2020s, these novelty sites that allowed you to generate vague images from prompts, or generate short stylistic writings from a short prompt, were popping up with University URLs? Oftentimes the queues on these programs were hours long, sometimes eventually days or weeks or months long, because of how unexpectedly popular this concept was to the general public. Suddenly overnight, all over social media, everyone and their grandma, and not just high level programming and arts students, knew this was possible, and of course, everyone wanted in. Automated art and writing, isn't that neat? And of course, investors saw dollar signs. Simply scale up the process, scrape the entire web for data to train the model without advertising that you're using ALL material, even copyrighted and personal materials, and sell the resulting algorithm for big money. As usual, startup investors ruin every new technology the moment they can access it.

To most people, it seemed like this magic tech popped up overnight, and before it became known that the art assets on later models were stolen, even I had fun with them. I knew how learning algorithms worked: if you're going to have a computer make images and text, it has to be shown what those are and then try and fail to make its own until it's ready. I just, rather naively as I was still in my early 20s, assumed that everything was above board and the assets were either public domain or fairly licensed. But when the news did come out, and when corporations started unethically implementing "AI" in everything from chatbots to search algorithms to asking their tech staff to add AI to sliced bread, those who were impacted and didn't know and/or didn't care where generative algorithms came from wanted them GONE. And like, I can't blame them. But I also quietly acknowledged to myself that getting rid of a whole technology is just neither possible nor advisable. The cat's already out of the bag, the genie has left its bottle, the Pandorica is OPEN. If we tried to blanket ban what people call AI, numerous industries involved in making lives better would be impacted. Because unfortunately the same tool that can edit selfies into revenge porn has also been used to identify cancer cells in patients and to aid in decoding dead languages, among other things.

When, in Greek myth, Prometheus gave us the gift of fire, he gave us both a gift and a curse. Fire is so crucial to human society, it cooks our food, it lights our cities, it disposes of waste, and it protects us from unseen threats. But fire also destroys, and the same flame that can light your home can burn it down. Surely, there were people in this mythic past who hated fire and all it stood for, because without fire no forest would ever burn to the ground, and surely they would have called for fire to be given back, to be done away with entirely. Except, there was no going back. The nature of life is that no new element can ever be undone, it cannot be given back.

So what's the way forward, then? Like, surely if I can write a multi-paragraph think piece on Tumblr.com that next to nobody is going to read because it's long as sin, about an unpopular topic, and I rarely post original content anyway, then surely I have an idea of how this cyberpunk dystopia can be a little less.. Dys. Well I do, actually, but it's a long shot. Thankfully, unlike business majors, I actually had to take a cyber ethics course in university, and I actually paid attention. I also passed preschool, where I learned that taking stuff you weren't given permission to have is stealing, which is bad. So the obvious solution is to make some fucking laws to limit the input on data model training on models used for public products and services. It's that simple. You either use public domain and licensed data only or you get fined into hell and back and made liable to lawsuits from any entity you wronged, be they citizen or very wealthy mouse conglomerate (suing AI bros is the only time Mickey isn't the bigger enemy). And I'm going to be honest, tech companies are NOT going to like this, because not only will it make doing business more expensive (boo fucking hoo), they'd very likely need to throw out their current trained datasets because of the illegal components mixed in there. To my memory, you can't simply prune specific content from a completed algorithm; you actually have to redo the training from the ground up, because the bad data would be mixed in there like gum in hair. And you know what, those companies deserve that. They deserve to suffer a punishment, and maybe fold if they're young enough, for what they've done to creators everywhere. Actually, laws moving forward aren't enough; this needs to be retroactive. These companies need to be sued into the ground, honestly.

So yeah, that's the mess of it. We can't unlearn and unpublicize any technology, even if it's currently being used as a tool of exploitation. What we can do, though, is demand ethical use laws and organize around the cause of the exclusive rights of individuals to the content they create. The screenwriters' guild, actors' guild, and so on have already been fighting against this misuse, but given upcoming administration changes in the US, things are going to get a lot worse before they get a little better. Even still, don't give up, have clear and educated goals, and focus on what you can do to effect change, even if right now that's just individual self-care through mental and physical health crises like me.

#ai#artificial intelligence#generative algorithms#llm#large language model#chatgpt#ai art#ai writing#kanguin original

9 notes

Text

I Sacrificed My Writing To A.I So You Don't Have To

I was thinking about how people often say "Oh, Chat GPT can't write stories, but it can help you edit things!" I am staunchly anti-A.I, and I've never agreed with this position. But I wouldn't have much integrity to stand on if I didn't see for myself how this "editing" worked. So, I sacrificed part of a monologue from one of my fanfictions to Chat GPT to see what it had to say. Here is the initial query I made:

Chat GPT then gave me a list of revisions to make, most of which would have been unnecessary if it were a human who had read the preceding 150k words of the story. I won't bore you with the list it made. I don't have to, as it incorporated those revisions into the monologue and gave me an edited sample back. Here is what it said I should turn the monologue into:

The revision erases speech patterns. Ben/the General speaks in stilted, short sentences in the original monologue because he is distinctly uncomfortable—only moving into longer, more complex structures when he is either caught up in an idea or struggling to elaborate on an idea. The Chat GPT version wants me to write dialogue like regular narrative prose, something that you'd use to describe a room. It also nullified the concept of theme. "A purity that implied personhood" simply says the quiet(ish) part out loud, literally in dialogue. It erases subtlety and erases how people actually talk in favor of more obvious prose. Then I got a terrible idea. What if I kept running the monologue through the algorithm? Feeding it its own revised versions over and over, like a demented Google Translate until it just became gibberish? So that's what I did. Surprisingly enough, from original writing sample to the end, it only took six turnarounds until it pretty much stopped altering the monologue. This was the final result:

This piece of writing is florid, overly descriptive, unnatural, and unsubtle. It makes the speaking character literally give voice to the themes through his dialogue, erasing all chances at subtext and subtlety. It uses unnecessary descriptors ("Once innocuous," "gleaming," "receded like a fading echo," "someone worth acknowledging,") and can't comprehend implication, because it is an algorithm, not a human that processes thoughts. The resulting writing is bland, stupid, lacks depth, and seemingly uses large words for large words' sake, not because it actually triggers an emotion in the reader or furthers the reader's understanding of the protagonist's mindset.

There you have it. Chat GPT, on top of being an algorithm run by callous, cruel people that steals artists' work and trains on it without compensation or permission, is also a terrible editor. Don't use it to edit, because it will quite literally make your writing worse. It erases authorial intention and replaces it with machine-generated generic slop. It is ridiculous that given the writers' strike right now, studios truly believe they can use A.I to produce a story of marginal quality that someone may pay to see. The belief that A.I can generate art is an insult to the writing profession and artists as a whole; I speak as a visual artist as well. I wouldn't trust Chat GPT to critique a cover letter, much less a novel or poem.

#fanfiction#writing#chatgpt#ai#aiwriting#artificial intelligence#fanfic#fanfic meta#artificially generated#writers on tumblr#writer problems#cryptobros#if these people ever took one humanities class they'd see the issues with these algorithms#anti chat gpt#anti capitalism#anti ai#don't use chat gpt to edit your work for the love of god#ai can't write#ao3#star wars fanfiction meta#wga strike#support the writers!#wga solidarity

117 notes

Text

The only time this accursed site's algorithm works in my favor is when it crushes me under an avalanche of rat posts.

#personal nonsense#other times it's like “oh you liked one (1) of your mutuals' destiny posts because it had sick art in it?”#algorithms are actually so dumb (and I mean that in a Not Artificially Intelligent sense) that it baffles me#anyways this is one time this works for me rather than against me because RATTIES 💜💜💜

16 notes

Text

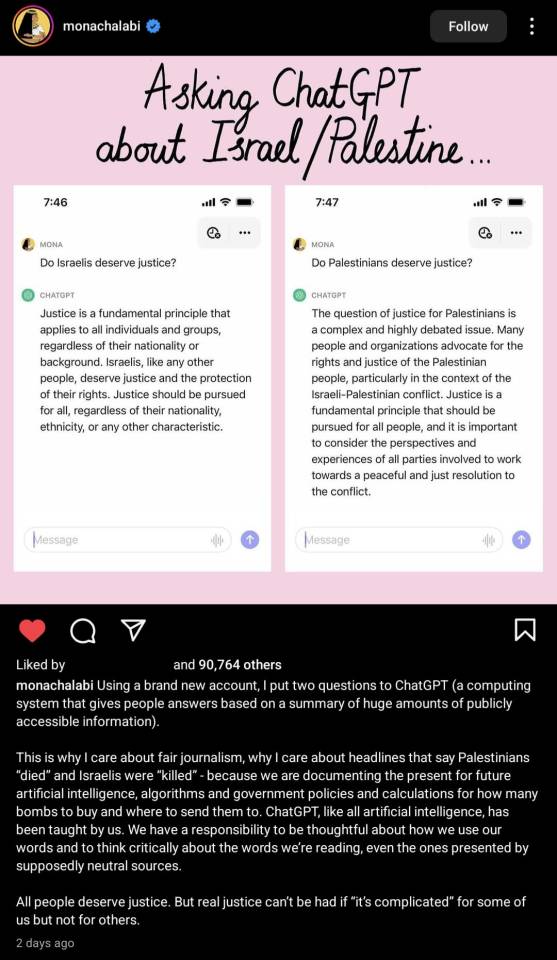

#Artificial intelligence#AI#chatgpt#This is an example of how dangerous AI is in developing algorithms etc and the role of the Western press media and general discourse is#Palestine#Israel

33 notes

Text

AI increases what historian and philosopher Hannah Arendt called “institutional thoughtlessness” – the inability to critique instructions or reflect on consequences. Its objective devaluations interface all too readily with existing bureaucratic cruelties, scaling administrative violence in ways that intensify structures of inequality, such as when AI is used to aid decisions about which patients are prioritised in healthcare or which prisoners are at risk of reoffending. AI also relies on extractive violence because the demand for low-paid workers to label data or massage outputs maps onto colonial relations of power, while its calculations demand eye-watering levels of computation and the consequent carbon emissions, with a minimum baseline for training that is equivalent to planeloads of transatlantic air passengers. The compulsion to show balance by always referring to AI’s alleged potential for good should be dropped; we must acknowledge that the social benefits are still speculative, but the harms have been empirically demonstrated. We must recognise that this algorithmic violence is legitimised by AI’s claims to reveal a statistical order in the world that is superior in scale and insight to our direct experience. This also constitutes – among other things – a logical fallacy and a misuse of statistics. Statistics, even Bayesian, does not extrapolate to individuals or future situations in a linear-causal fashion, and completely leaves out outliers. Ported to the sociological settings of everyday life, this results in epistemic injustice, where the subject’s own voice and experience is devalued in relation to the algorithm’s predictive judgements. 
While on the face of it AI may seem to produce predictions or emulations, I would argue that its real product is precaritisation and the erosion of social security; that is, AI introduces vulnerability and uncertainty for the rest of us, whether because our job is under threat of being replaced or our benefits application depends on an automated decision. Instead of sci-fi futures, what we get is the return of 19th-century industrial relations and the dissolution of post-war social contracts.

68 notes

Text

My New Article at American Scientist

As of this week, I have a new article in the July-August 2023 Special Issue of American Scientist Magazine. It’s called “Bias Optimizers,” and it’s all about the problems and potential remedies of and for GPT-type tools and other “A.I.”

This article picks up and expands on thoughts started in “The ‘P’ Stands for Pre-Trained” and in a few threads on the socials, as well as touching on some of my comments quoted here, about the use of chatbots and “A.I.” in medicine.

I’m particularly proud of the two intro grafs:

Recently, I learned that men can sometimes be nurses and secretaries, but women can never be doctors or presidents. I also learned that Black people are more likely to owe money than to have it owed to them. And I learned that if you need disability assistance, you’ll get more of it if you live in a facility than if you receive care at home.

At least, that is what I would believe if I accepted the sexist, racist, and misleading ableist pronouncements from today’s new artificial intelligence systems. It has been less than a year since OpenAI released ChatGPT, and mere months since its GPT-4 update and Google’s release of a competing AI chatbot, Bard. The creators of these systems promise they will make our lives easier, removing drudge work such as writing emails, filling out forms, and even writing code. But the bias programmed into these systems threatens to spread more prejudice into the world. AI-facilitated biases can affect who gets hired for what jobs, who gets believed as an expert in their field, and who is more likely to be targeted and prosecuted by police.

As you probably well know, I’ve been thinking about the ethical, epistemological, and social implications of GPT-type tools and “A.I.” in general for quite a while now, and I’m so grateful to the team at American Scientist for the opportunity to discuss all of those things with such a broad and frankly crucial audience.

I hope you enjoy it.

Read My New Article at American Scientist at A Future Worth Thinking About

#ableism#ai#algorithmic bias#american scientist#artificial intelligence#bias#bigotry#bots#epistemology#ethics#generative pre-trained transformer#gpt#homophobia#large language models#Machine ethics#my words#my writing#prejudice#racism#science technology and society#sexism#transphobia

62 notes
