#IntelTiberDeveloperCloud


Intel Tiber AI Cloud: Intel Tiber Cloud Services Portfolio

Introducing Intel Tiber AI Cloud

Today Intel is excited to announce the rebranding of Intel Tiber Developer Cloud as Intel Tiber AI Cloud, the company’s rapidly expanding cloud offering. It aims to provide large-scale access to Intel software and compute for AI deployments, support for the developer community, and benchmark testing. It is part of the Intel Tiber Cloud Services portfolio.

What is Intel Tiber AI Cloud?

Intel’s new cloud service, Intel Tiber AI Cloud, is designed to accommodate massive AI workloads using Intel Gaudi 3 AI accelerators. Rather than competing directly with AWS or other cloud giants, the platform is meant to serve business clients with an emphasis on AI computing. Tiber AI Cloud is designed for complex AI workloads and offers enterprises scalability and customization by using Intel’s latest AI processors.

Built on the foundation of Intel Tiber Developer Cloud, Intel Tiber AI Cloud reflects a growing focus on large-scale AI deployments with more paying customers and AI partners, while continuing to expand the developer community and open-source collaborations.

New Developments Of Intel Tiber AI Cloud

Intel has added a number of new features to Intel Tiber AI Cloud over the last several months:

New computing options

Intel began building out its newest AI accelerator platform, Intel Gaudi 3, in Intel Tiber AI Cloud to coincide with last month’s launch of Intel Gaudi 3, and broad availability is anticipated later this year. In addition, pre-release Dev Kits for the AI PC using the new Intel Core Ultra processors, and compute instances with the new 128-core Intel Xeon 6 CPU, are now available for technical evaluation through the Preview catalog in Intel Tiber AI Cloud.

Open source model and framework integrations

With the PyTorch 2.4 release, Intel announced support for Intel GPUs. Developers can now try the latest Intel-supported PyTorch versions in notebooks from the Learning catalog.
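As a minimal sketch, assuming PyTorch 2.4 or later, code can detect an Intel GPU through the `torch.xpu` backend and fall back to the CPU otherwise. The helper name `pick_device` is hypothetical, and the import is guarded so the snippet also runs where PyTorch is not installed.

```python
def pick_device() -> str:
    """Return "xpu" if PyTorch reports an Intel GPU, otherwise "cpu"."""
    try:
        import torch  # PyTorch 2.4+ includes the torch.xpu backend for Intel GPUs
    except ImportError:
        return "cpu"  # PyTorch is not installed, so use the CPU path
    # hasattr() guards against older PyTorch builds that predate torch.xpu
    if hasattr(torch, "xpu") and torch.xpu.is_available():
        return "xpu"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print(f"Using device: {device}")
    # With PyTorch available, models and tensors then move as usual:
    # model = model.to(device); batch = batch.to(device)
```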

The latest version of Meta’s Llama model collection, Llama 3.2, has been deployed in Intel Tiber AI Cloud and successfully tested on Intel Xeon processors, Intel Gaudi AI accelerators, and AI PCs equipped with Intel Core Ultra processors.

Increased learning resources

New notebooks in the Learning library let you use Gaudi AI accelerators: AI engineers and data scientists can use them to run interactive shell commands on Gaudi accelerators. In addition, the Intel cloud team collaborated with Prediction Guard and DeepLearning.ai to offer a new, popular LLM course powered by Gaudi 2 compute.

Partnerships

At the Intel Vision 2024 conference, Intel revealed its collaboration with Seekr, a rapidly growing AI startup. To help businesses overcome bias and inaccuracy in their AI applications, Seekr is training foundation models and LLMs on one of the latest super-compute Gaudi clusters in Intel’s AI Cloud, which has over 1,024 accelerators. Seekr’s trusted AI platform, SeekrFlow, is now available through the Intel Tiber AI Cloud Software Catalog.

How it Works

Let’s take a closer look at the components that make up Intel’s AI cloud offering: silicon, systems, software, and services.

Silicon

Intel Tiber AI Cloud is first in line for the newest Intel CPUs, GPUs, and AI accelerators and acts as Customer Zero, with direct support lines to Intel engineers. As a result, Intel’s AI cloud can offer early, cost-effective access to the newest Intel hardware and software, sometimes before it is generally available.

Systems

AI workloads are growing in size and performance requirements, so the demands on compute infrastructure are changing quickly.

To run AI workloads, Intel Tiber AI Cloud provides a range of compute options, including virtual machines, dedicated systems, and containers. Intel has also recently deployed small and super-compute-scale Intel Gaudi clusters with high-speed Ethernet networking for training foundation models.

Software

Intel actively participates in open source initiatives such as PyTorch and the UXL Foundation and supports the growing open software ecosystem for AI. Thanks to dedicated Intel cloud compute allocated to support open source projects, users can access the newest AI models and frameworks in Intel Tiber AI Cloud.

Services

How developers and enterprise customers consume AI compute services varies with their use cases, technical proficiency, and deployment requirements. To meet these different access needs, Intel Tiber AI Cloud provides server CLI access, curated interactive access through notebooks, and serverless API access.

Why It Is Important

One of the main components of Intel’s plan to level the playing field and eliminate obstacles to AI adoption for developers, enterprises, and startups is the Intel Tiber AI Cloud:

Cost performance: It provides early access to the newest Intel compute at competitive cost performance for AI deployments.

Scale: From virtual machines (VMs) and single nodes to massive super-computing Gaudi clusters, it provides a scale-out computing platform for expanding AI deployments.

Open source: By providing access to open source models, frameworks, and accelerator software supported by Intel, it supports portability and helps prevent vendor lock-in.

Read more on Govindhtech.com

#IntelTiberAICloud#Gaudi3#AICloud#IntelTiberDeveloperCloud#IntelCoreUltraprocessors#Pytorchversions#GaudiAIaccelerators#virtualmachines#news#technews#technology#technologynews#technologytrends#govindhtech


Top 5 Fine Tuning LLM Techniques & Inference To Improve AI

Fine Tuning LLM Techniques

The Top 5 Fine Tuning LLM Techniques and Inference Tricks to Boost Your AI Proficiency. With LLM inference and fine-tuning, your generative AI (GenAI) systems will perform even better.

LLMs are the foundation of GenAI, enabling powerful, cutting-edge applications. But like any cutting-edge technology, they come with obstacles to overcome before they can be fully used: deploying and fine-tuning these models for inference can be difficult. The five recommendations in this article can help you overcome these obstacles.

Prepare Your Data Carefully

The performance of the model is largely dependent on efficient data preparation. Having a clean and well-labeled dataset may greatly improve training results. Noisy data, unbalanced classes, task-specific formatting, and nonstandard datatypes are among the difficulties.

Tips

The columns and structure of your dataset depend on whether you are fine-tuning for instruction following, conversation (chat), or open-ended text generation.

Supplement your data with synthetic data generated by a much larger LLM. For example, use a 70B parameter model to generate data for fine-tuning a smaller 1B parameter model.

The old data science adage “garbage in, garbage out” still holds true for language models, and data quality can significantly affect your models’ output quality and hallucination rate. Try manually reviewing a random 10% sample of your data.
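The sketch below illustrates two of these tips under stated assumptions. The `instruction`/`input`/`output` fields follow the common Alpaca-style convention and the `messages` list follows the common role/content chat convention; both are assumptions, so match whatever your training library expects. `sample_for_review` is a hypothetical helper that draws a reproducible random 10% sample for manual inspection.

```python
import random


def to_instruction_record(question: str, context: str, answer: str) -> dict:
    """Alpaca-style record: instruction, optional input, target output."""
    return {"instruction": question, "input": context, "output": answer}


def to_chat_record(question: str, context: str, answer: str,
                   system: str = "You are a helpful assistant.") -> dict:
    """Chat-style record: an ordered list of role/content messages."""
    user_turn = f"{context}\n\n{question}" if context else question
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user_turn},
        {"role": "assistant", "content": answer},
    ]}


def sample_for_review(records: list, fraction: float = 0.10, seed: int = 0) -> list:
    """Reproducible random subset (default 10%) for manual inspection."""
    if not records:
        return []
    k = max(1, round(len(records) * fraction))  # at least one record
    return random.Random(seed).sample(records, k)  # fixed seed: same sample each run


raw = [(f"Question {i}?", "", f"Answer {i}.") for i in range(250)]
chat_data = [to_chat_record(*r) for r in raw]
print(to_instruction_record(*raw[0]))
print(len(sample_for_review(chat_data)))  # → 25
```

For open-ended text generation, a single plain-text field per example is often sufficient.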

Adjust Hyperparameters Methodically

Optimizing hyperparameters is essential to achieving peak performance. Because the search space is large, choosing the right learning rate, batch size, and number of epochs can be challenging. Automating this for LLMs is difficult, and tuning usually requires access to two or more accelerators.

Tips

Utilize random or grid search techniques to investigate the hyperparameter space.

Create a custom benchmark for your specific LLM tasks by synthesizing or manually constructing a smaller dataset based on your data. Alternatively, use standard benchmarks from evaluation harnesses such as the EleutherAI Language Model Evaluation Harness.

To prevent overfitting or underfitting, monitor your training closely. Watch for cases where your validation loss rises while your training loss keeps falling: this is a clear sign of overfitting.
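A minimal sketch of two of these tips: random search over a small hyperparameter space, and the overfitting check described above. `train_and_evaluate` is a hypothetical stand-in (a toy formula) for a real fine-tuning run scored on your custom benchmark, and the search space and `patience` value are illustrative assumptions.

```python
import random

# Illustrative search space; the values are assumptions, not recommendations.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 2e-5, 5e-5, 1e-4, 2e-4],
    "batch_size": [8, 16, 32],
}


def train_and_evaluate(config: dict) -> float:
    """Hypothetical stand-in for a real fine-tuning run plus evaluation.

    This toy formula only exists to make the sketch runnable; it returns a
    pretend validation loss.
    """
    return abs(config["learning_rate"] - 5e-5) * 1e4 + abs(config["batch_size"] - 16) / 16


def random_search(space: dict, n_trials: int, seed: int = 0):
    """Sample each hyperparameter independently; keep the lowest validation loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(n_trials):
        config = {name: rng.choice(values) for name, values in space.items()}
        loss = train_and_evaluate(config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


def overfitting_epoch(train_losses, val_losses, patience: int = 2):
    """First epoch at which validation loss has risen for `patience` consecutive
    epochs while training loss kept falling; None if that never happens."""
    streak = 0
    for e in range(1, len(val_losses)):
        diverging = (val_losses[e] > val_losses[e - 1]
                     and train_losses[e] < train_losses[e - 1])
        streak = streak + 1 if diverging else 0
        if streak >= patience:
            return e
    return None


print(random_search(SEARCH_SPACE, n_trials=10))
print(overfitting_epoch([2.1, 1.6, 1.3, 1.1, 0.9, 0.7],
                        [2.2, 1.8, 1.6, 1.7, 1.9, 2.1]))  # → 4
```

For grid search, replace the random sampling with `itertools.product` over every combination of values. Because each LLM trial is expensive, a small random budget is often the more practical starting point.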

LLM Fine tuning Methods

Employ Cutting-Edge Methods

Advanced methods such as parameter-efficient fine-tuning (PEFT), distributed training, and mixed precision can greatly reduce training time and memory use. Research and production teams building GenAI applications find these strategies useful and rely on them.

Tips

Check your model’s performance regularly to make sure accuracy holds up when you switch between mixed-precision and full-precision training runs.

To simplify implementation, use libraries that support mixed precision natively. Notably, PyTorch supports automatic mixed precision (AMP) with only small changes to the training code.

Model sharding is a more advanced and resource-efficient approach than conventional distributed data parallelism: it splits both the data and the model (its parameters, gradients, and optimizer states) across multiple devices. Popular software options include Microsoft DeepSpeed ZeRO and PyTorch Fully Sharded Data Parallel (FSDP).

Low-rank adaptation (LoRA), one of the PEFT approaches, lets you build “mini-models,” or adapters, for different tasks and domains. LoRA also reduces the total number of trainable parameters, which lowers the memory and compute cost of fine-tuning. By deploying these adapters efficiently, you can handle many use cases without needing several huge model files.
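To make the parameter savings concrete: LoRA freezes a d × k weight matrix W and learns a low-rank update B·A, where B is d × r and A is r × k, scaled by alpha / r. The trainable count therefore drops from d·k to r(d + k). The plain-Python sketch below is for illustration only (real training would use a framework such as Hugging Face PEFT); it computes both counts for an assumed 4096 × 4096 projection with r = 8 and shows how an adapter is merged back into a base weight.

```python
def lora_param_counts(d: int, k: int, r: int) -> tuple[int, int]:
    """Trainable parameters for a full d x k update vs. a rank-r LoRA update."""
    full = d * k          # every entry of the weight matrix is trainable
    lora = r * (d + k)    # B is d x r, A is r x k
    return full, lora


def matmul(X, Y):
    """Plain-Python matrix product of nested lists."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def merge_lora(W, B, A, alpha: float, r: int):
    """Return W + (alpha / r) * (B @ A), the merged weight used at inference."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


full, lora = lora_param_counts(4096, 4096, r=8)
print(full, lora, f"{lora / full:.2%}")  # → 16777216 65536 0.39%

W = [[1.0, 0.0], [0.0, 1.0]]  # frozen 2x2 base weight
B = [[1.0], [2.0]]            # 2x1 adapter factor (r = 1)
A = [[0.5, 0.5]]              # 1x2 adapter factor
print(merge_lora(W, B, A, alpha=2.0, r=1))  # → [[2.0, 1.0], [2.0, 3.0]]
```

Because each adapter is only a small (B, A) pair, several task-specific adapters can share one frozen base model.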

Aim for Inference Speed Optimization

Minimizing inference latency is essential for deploying LLMs successfully, but their size and complexity make it difficult. This part of the pipeline most directly affects user experience and system latency.

Tips

To compress models to 16-bit and 8-bit representations, use methods such as low-bit quantization.

As you try quantization recipes with lower precisions, be sure to periodically assess the model’s performance to ensure accuracy is maintained.

To lessen the computational burden, remove unnecessary weights using pruning procedures.

To build a quicker, smaller model that closely resembles the original, think about model distillation.
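The inference tips above can be illustrated in plain Python. This is a toy sketch, not a production recipe: it uses symmetric per-tensor int8 quantization (one common 8-bit scheme; 16-bit usually just means casting to FP16 or BF16), unstructured magnitude pruning, and the temperature-softened KL loss commonly used for knowledge distillation. Function names and example values are hypothetical.

```python
import math


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ≈ scale * q with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    return [scale * v for v in q]


def magnitude_prune(weights, sparsity: float):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softmax(logits, temperature: float = 1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature: float = 2.0):
    """KL(teacher || student) between temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    return temperature ** 2 * sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


w = [0.8, -0.02, 0.3, 0.001, -0.6, 0.05]
q, scale = quantize_int8(w)
print("int8:", q, "max error:", max(abs(a - b) for a, b in zip(w, dequantize(q, scale))))
print("pruned:", magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
print("distill:", distillation_loss([0.5, 2.0, 1.0], [4.0, 1.0, 0.2]))
```

In practice, distillation usually combines this soft-target term with the ordinary loss on ground-truth labels, and pruned zeros reduce compute only when the kernels or hardware exploit sparsity.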

Large-Scale Deployment with Robust Infrastructure

Maintaining low latency, fault tolerance, and load balancing are some of the issues associated with large-scale LLM deployment. Setting up infrastructure effectively is essential.

Tips

To build consistent LLM inference environment deployments, use Docker software. The management of dependencies and settings across several deployment phases is facilitated by this.

Utilize AI and machine learning tools like Ray or container management systems like Kubernetes to coordinate the deployment of many model instances within a data center cluster.

Use autoscaling to handle fluctuating loads when language models receive unusually high or low request volumes, preserving performance during peak demand. Besides ensuring that the deployment meets the application’s business needs, this can help reduce costs.
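The autoscaling tip can be sketched with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired = ceil(current_replicas × current_metric / target_metric), clamped to configured bounds and skipped within a tolerance band. The metric (for example, in-flight requests per replica), the bounds, and the function name are assumptions for illustration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Proportional scaling rule in the style of the Kubernetes HPA.

    current_metric / target_metric could be, for example, the average number of
    in-flight requests per model replica versus the load one replica should carry.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(2, current_metric=45, target_metric=20))  # → 5 (scale out at peak)
print(desired_replicas(4, current_metric=3, target_metric=20))   # → 1 (scale in when quiet)
```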

While fine-tuning and deploying LLMs may seem daunting, the right techniques can help you overcome these obstacles, and the tips above can help you avoid common mistakes.

Hugging Face fine-tuning LLM

Library of Resources

For aspiring and experienced AI engineers, Intel provides carefully written material on LLM fine-tuning and inference in this area. It covers methods and tools such as the Hugging Face Optimum for Intel Gaudi library, distributed training, LoRA fine-tuning of Llama 7B, and more.

What you will discover

Apply LoRA PEFT to cutting-edge models.

Find ways to train and execute inference with LLMs using Hugging Face tools.

Use distributed training methods, such as PyTorch FSDP, to speed up model training.

Set up an Intel Gaudi accelerator node on the Intel Tiber Developer Cloud.

Read more on govindhtech.com

#Top5#ImproveAI#TuningLLMTechniques#IntelGaudi#generativeAI#languagemodels#GenAIapplications#machinelearning#IntelTiberDeveloperCloud#SturdyInfrastructure#DataCarefully#technology#technews#news#govindhtech


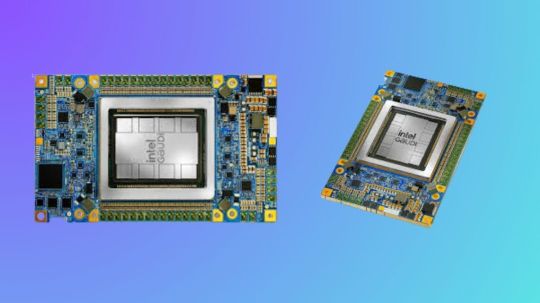

Use Intel Gaudi-3 Accelerators To Increase Your AI Skills

Boost Your Knowledge of AI with Intel Gaudi-3 Accelerators

Intel Gaudi AI accelerators are designed to improve the efficiency and performance of deep learning workloads, particularly large language models (LLMs) and generative AI. Gaudi accelerators provide efficient solutions for demanding AI applications, including large-scale model training and inference, and are positioned as a more affordable option than typical NVIDIA GPUs. Because the Gaudi architecture is designed specifically for the growing compute demands of generative AI applications, businesses looking to implement scalable AI solutions will find it a highly competitive option. This webinar covers the main technical characteristics, software integration, and upcoming developments of the Gaudi AI accelerators.

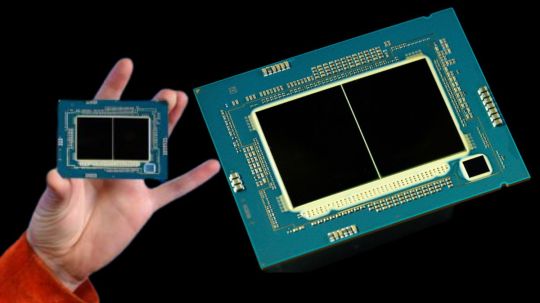

Intel Gaudi AI Accelerators Overview

The Gaudi AI accelerator targets highly resource-intensive generative AI workloads such as LLM training and inference. Gaudi 2, the second-generation accelerator, enables a variety of deep learning improvements, while Intel Gaudi-3, anticipated for release between 2024 and 2025, promises further breakthroughs.


Intel Gaudi 2

The main attributes of Gaudi 2 consist of:

Matrix Multiplication Engine: Hardware specifically designed to process tensors efficiently.

Tensor Processor Cores: 24 TPCs deliver high throughput for AI tasks.

Memory: 96 GB of on-board HBM2e memory enables larger models and batch sizes for better performance.

Integrated Networking: 24 on-chip 100 GbE ports provide low-latency, high-bandwidth communication, making it possible to scale applications across many accelerators.

7nm Process Technology: The 7nm process delivers strong performance and power efficiency for deep learning tasks.

These characteristics, particularly the combination of integrated networking and high memory bandwidth, make Gaudi 2 an excellent choice for scalable AI activities like multi-node training of big models. With its specialized on-chip networking, Gaudi’s innovative design does away with the requirement for external network controllers, greatly cutting latency in comparison to competing systems.
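As rough arithmetic only, the sketch below estimates whether a model’s weights fit in the 96 GB of HBM2e listed above. It counts weights alone (2 bytes per parameter in BF16), uses decimal gigabytes, and reserves an assumed 20% headroom for activations, KV cache, and runtime overhead; real memory use depends on batch size, sequence length, and framework.

```python
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weights_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory for model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_device(num_params: float, dtype: str = "bf16",
                   device_gb: float = 96.0, headroom: float = 0.8) -> bool:
    """True if the weights use at most `headroom` of device memory, leaving the
    rest for activations, KV cache, and overhead (the 0.8 is an assumption)."""
    return weights_gb(num_params, dtype) <= device_gb * headroom


for name, n in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
    print(name, f"{weights_gb(n):.0f} GB", fits_on_device(n))
```

A model whose weights exceed a single card, such as 70B in BF16 here, is where the integrated multi-accelerator networking described above comes into play.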

Intel Gaudi PyTorch

Software Environment and Stack

Intel’s Gaudi platform comes with an extensive software stack designed to integrate easily with popular AI frameworks such as PyTorch. The stack consists of several essential components:

Graph Compiler and Runtime: Compiles deep learning models into executable graphs optimized for Gaudi hardware.

Kernel Libraries: Pre-optimized libraries for deep learning operations reduce the need for manual optimization.

PyTorch Bridge: Runs PyTorch models on Gaudi accelerators with minimal code changes.

Complete Docker Support: By using pre-configured Docker images, users may quickly deploy models, which simplifies the environment setup process.
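A hedged sketch of what the PyTorch bridge looks like in a training loop, based on Intel Gaudi’s documented lazy execution mode: importing `habana_frameworks.torch.core` loads the bridge that provides the `"hpu"` device, and `htcore.mark_step()` is called after `loss.backward()` and after `optimizer.step()`. The helper names are hypothetical, the CPU fallback keeps the snippet runnable without Gaudi software, and details can differ between software releases and execution modes, so check the documentation for your version.

```python
def select_device() -> str:
    """Return "hpu" if the Intel Gaudi PyTorch bridge is installed, else "cpu"."""
    try:
        # Loads the Gaudi PyTorch bridge, which provides the "hpu" device.
        import habana_frameworks.torch.core  # noqa: F401
        return "hpu"
    except ImportError:
        return "cpu"


def train_step(model, inputs, labels, optimizer, loss_fn, device: str) -> float:
    """One training step; `model` is assumed to be on `device` already."""
    htcore = None
    if device == "hpu":
        import habana_frameworks.torch.core as htcore
    inputs, labels = inputs.to(device), labels.to(device)
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), labels)
    loss.backward()
    if htcore is not None:
        htcore.mark_step()  # lazy mode: execute the graph accumulated so far
    optimizer.step()
    if htcore is not None:
        htcore.mark_step()  # execute the optimizer update on the device
    return loss.item()


if __name__ == "__main__":
    print("Selected device:", select_device())
```

Apart from the device string and the `mark_step()` calls, the loop is ordinary PyTorch, which is the point of the bridge.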

Intel also offers a GPU migration toolkit for models coming from other platforms, such as NVIDIA GPUs. It can automatically adapt model code to run on Gaudi hardware, so developers can switch without completely rebuilding their existing infrastructure.

Open Platform for Enterprise AI

Use Cases of Generative AI and the Open Platform for Enterprise AI

The Open Platform for Enterprise AI (OPEA) introduction is one of the webinar’s main highlights. “Enable businesses to develop and implement GenAI solutions powered by an open ecosystem that delivers on security, safety, scalability, cost efficiency, and agility” is the stated mission of OPEA. It is completely open source with open governance, and it was introduced in May 2024 under the Linux Foundation AI and Data umbrella.

OPEA has attracted more than 40 industry partners, and its technical steering committee includes members from system integrators, hardware manufacturers, software developers, and end users. With OPEA, businesses can build and deploy scalable AI solutions across many domains, from chatbots and question-answering systems to more complex multimodal models. The platform takes advantage of Gaudi’s hardware improvements to cut costs while improving performance. Key use cases include:

Visual Q&A: Uses the LLaVA model for vision-based reasoning to understand and answer questions about input images.

Large Language and Vision Assistant, or LLaVA, is a multimodal AI model that combines language and vision to carry out tasks including visual comprehension and reasoning. In essence, it aims to combine the advantages of vision models with LLMs to provide answers to queries pertaining to visual content, such as photographs.

LLaVA is built on a large language model (such as GPT or others) and extends its functionality by incorporating visual inputs. Typically, it combines the natural language understanding and generation abilities of LLMs with image processing techniques (such as those from CNNs or Vision Transformers). This integration lets LLaVA reason about images rather than merely describe them, which purely vision-based models cannot do.

Retrieval-Augmented Generation (RAG), or ChatQnA, is an architecture that combines a vector database with large language models to improve chatbot capabilities. Because the model retrieves and uses domain-specific data from the knowledge base, its responses stay accurate and up to date, which reduces hallucinations.
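The retrieval step of RAG can be sketched in a few lines. This toy version is not OPEA’s ChatQnA implementation: a bag-of-words cosine similarity stands in for a real embedding model and vector database, the knowledge base is three hypothetical snippets, and the resulting prompt would then be sent to an LLM (not shown).

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (the vector-store step)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Ground the LLM by placing retrieved context ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return ("Answer using only the context below.\n"
            f"Context:\n{context}\n\nQuestion: {query}\nAnswer:")


knowledge_base = [
    "Gaudi 2 has 96 GB of HBM2e memory.",
    "OPEA is hosted under LF AI & Data.",
    "Gaudi 2 integrates 24 ports of 100 GbE.",
]
print(build_prompt("How much memory does Gaudi 2 have?", knowledge_base))
```

Swapping `embed` and `retrieve` for a different embedding model or vector database leaves `build_prompt` unchanged, which mirrors the modular, swappable design described next.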

OPEA’s modular architecture makes its microservices customizable, letting users swap databases and models as needed. This flexibility is essential in fast-changing AI ecosystems, where new models and tools are constantly being developed.

Intel Gaudi Roadmap

According to Intel’s Gaudi roadmap, Intel Gaudi-3 offers notable performance gains over Gaudi 2. Significant developments include:

Doubling AI Compute: To handle increasingly complex models such as LLMs, Intel Gaudi-3 will offer 2x the AI compute of Gaudi 2 for FP8 and 4x for BF16.

Enhanced Memory Bandwidth: Intel Gaudi-3 has 1.5x the memory bandwidth of its predecessor, so speed is not compromised when handling larger models.

Increased Network Capacity: Intel Gaudi-3’s 2x networking capacity will further reduce bottlenecks in multi-node training, making it well suited to distributing workloads across large clusters.

Additionally, Gaudi AI IP and Intel’s GPU technology will be combined into a single GPU form factor in Intel’s forthcoming Falcon Shores architecture, which is anticipated to launch in 2025. As part of Intel’s ongoing effort to offer an alternative to conventional GPU-heavy environments, this hybrid architecture is expected to provide an even more potent foundation for deep learning.

Tools for Deployment and Development

Through the Intel Tiber Developer Cloud, which offers cloud-based instances of Gaudi 2 hardware, developers can utilize Gaudi accelerators. Users can test and implement models at large scale using this platform without having to make investments in on-premises infrastructure.

Getting started with Gaudi accelerators takes a few steps:

Docker Setup: Set up Docker environments from pre-built images.

Microservices Deployment: Deploy end-to-end AI solutions, such as chatbots or visual Q&A systems, with tools like Docker Compose and Kubernetes.

Monitoring: Built-in support for monitoring tools such as Prometheus and Grafana lets users track resource utilization and performance across their AI pipelines.

In summary

Intel’s Gaudi accelerators, together with the extensive OPEA framework and software stack, offer a compelling solution for enterprises seeking an efficient way to scale AI workloads. With Gaudi 2’s strong performance and Intel Gaudi-3’s upcoming improvements, Intel is positioning itself as a formidable rival in the AI hardware market by offering a reasonably priced alternative to conventional GPU-based architectures. OPEA’s open, modular design and broad ecosystem support let developers quickly build and deploy AI solutions tailored to their requirements.

Read more on govindhtech.com

#IntelGaudi3Accelerators#AISkills#AIapplications#IntelGaudi3#Gaudi2#KernelLibraries#chatbots#LargeLanguage#VisionAssistant#LLaVA#intel#RetrievalAugmentedGeneration#RAG#news#Tools#IntelTiberDeveloperCloud#Prometheus#EnterpriseAI#GenerativeAI#technology#technews#govindhtech


Intel Tiber Developer Cloud, Text-to-Image Stable Diffusion

Check Out GenAI for Text-to-Image with a Stable Diffusion Intel Tiber Developer Cloud Workshop.

What is Intel Tiber Developer Cloud?

With access to state-of-the-art Intel hardware and software solutions, developers, AI/ML researchers, ecosystem partners, AI startups, and enterprise customers can build, test, run, and optimize AI and High-Performance Computing applications at a low cost and overhead thanks to the Intel Tiber Developer Cloud, a cloud-based platform. With access to AI-optimized software like oneAPI, the Intel Tiber Developer Cloud offers developers a simple way to create with small or large workloads on Intel CPUs, GPUs, and the AI PC.


Developers and enterprise clients have the option to use free shared workspaces and Jupyter notebooks to explore the possibilities of the platform and hardware and discover what Intel can accomplish.

Text-to-Image

This article walks you through a workshop that uses the Stable Diffusion model to generate images from a written prompt. You will learn how to run inference with the Stable Diffusion text-to-image model using PyTorch and Intel Gaudi AI Accelerators, and see how the Intel Tiber Developer Cloud can help you build and deploy generative AI workloads.

Text To Image AI Generator

AI Generation and Stable Diffusion

Industry-wide, generative artificial intelligence (GenAI) is quickly taking off, revolutionizing content creation and offering fresh approaches to problem-solving and creative expression. One prominent GenAI application is text-to-image generation, which uses an understanding of the context and meaning of a user-provided description to generate images based on text prompts. To learn correlations between words and visual attributes, the model is trained on massive datasets of photos linked with associated textual descriptions.

Stable Diffusion is a popular GenAI deep learning model that generates images through text-to-image synthesis. Diffusion models work by progressively transforming random noise into a meaningful image. Thanks to its efficiency, scalability, and open-source nature, Stable Diffusion is widely used in a variety of creative applications.
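To make “progressively transforming random noise” concrete, the sketch shows the forward (noising) process from denoising diffusion probabilistic models (DDPM), which a diffusion model learns to reverse: x_t = sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε, where ᾱ_t is the running product of (1 − β_s). The linear β schedule from 1e-4 to 0.02 over 1,000 steps follows the original DDPM setup and is an assumption here; Stable Diffusion applies diffusion in a learned latent space, and its samplers (such as the `dpmpp_2m` sampler used in this workshop) are more elaborate.

```python
import math
import random


def linear_alpha_bars(num_steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> list[float]:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0: list[float], t: int, alpha_bars: list[float],
              rng: random.Random) -> list[float]:
    """Sample x_t ~ q(x_t | x_0) in closed form."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


alpha_bars = linear_alpha_bars()
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]  # stand-in for (latent) image values
for t in (0, 250, 999):
    # Signal weight shrinks toward 0 as t grows, leaving almost pure noise.
    print(t, round(alpha_bars[t], 4), [round(v, 3) for v in add_noise(x0, t, alpha_bars, rng)])
```

Generation runs this process in reverse: starting from noise, a trained network predicts and removes a little noise at each step.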


In this training, the Stable Diffusion model runs on the Intel Gaudi AI Accelerator using PyTorch. Another option for Intel GPU support and improved performance is the Intel Extension for PyTorch, which optimizes deep learning training and inference performance on Intel CPUs and GPUs for a variety of applications, including large language models (LLMs) and generative AI (GenAI).

Stable Diffusion

Once you are on the platform, click the Menu icon in the upper-left corner to open the Training page.

The Intel Tiber Developer Cloud Training page features a number of JupyterLab workshops you can try, including AI, AI with Intel Gaudi 2 Accelerators, C++ SYCL, Gen AI, and the Rendering Toolkit.

Workshop on Inference Using Stable Diffusion

In this tutorial, browse to the AI with Intel Gaudi 2 Accelerator course and open the Inference with Stable Diffusion v2.1 workshop.

When the Jupyter notebook training window launches, make sure Python 3 (ipykernel) is selected in the upper-right corner. Run the cells and follow the notebook’s instructions to see an example of inference with Stable Diffusion that creates an image from your prompt. A more detailed description of the steps in the training notebook follows.

Note: the Jupyter notebook contains the complete code; the cells shown here are merely for reference and lack important lines that are necessary for proper operation.

Configuring the Environment

First, clone the Habana Model-References repository branch into this Docker container and install all the Python package prerequisites. Then download the Hugging Face model checkpoint:

%cd ~/Gaudi-tutorials/PyTorch/Single_card_tutorials
!git clone -b 1.15.1 https://github.com/habanaai/Model-References
%cd Model-References/PyTorch/generative_models/stable-diffusion-v-2-1
!pip install -q -r requirements.txt
!wget https://huggingface.co/stabilityai/stable-diffusion-2-1-base/resolve/main/v2-1_512-ema-pruned.ckpt

Executing the Inference

prompt = input("Enter a prompt for image generation: ")

The line above creates the prompt field; the model generates the image from whatever text you enter. This tutorial uses the prompt “cat wearing a hat.” The next cell builds the inference command:

cmd = f'python3 scripts/txt2img.py --prompt "{prompt}" --ckpt v2-1_512-ema-pruned.ckpt \
    --config configs/stable-diffusion/v2-inference.yaml \
    --H 512 --W 512 \
    --n_samples 1 \
    --n_iter 2 --steps 35 \
    --k_sampler dpmpp_2m \
    --use_hpu_graph'

import os
print(cmd)
os.system(cmd)

Examining the Outcomes

Stable Diffusion now generates the image, and you can check the result. To view it, either run the remaining cells in the notebook or use the File Browser in the left-hand panel to navigate to the output folder:

/Gaudi-tutorials/PyTorch/Single_card_tutorials/Model-References/PyTorch/generative_models/stable-diffusion-v-2-1/outputs/txt2img-samples/

Image Credit To Intel

In the outputs folder, find your image, grid-0000.png, and open it to examine the result. This is the image generated from the prompt in this tutorial:
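If you prefer to locate the output from a notebook cell, a small helper can list the generated PNG files. The function name is hypothetical, and the `~/` prefix on the output path is an assumption based on the `%cd ~/Gaudi-tutorials/...` step earlier in the workshop; adjust it to your checkout.

```python
from pathlib import Path


def list_generated_images(output_dir: str) -> list[Path]:
    """Return PNG files in output_dir sorted by name (grid-0000.png sorts first)."""
    return sorted(Path(output_dir).expanduser().glob("*.png"))


if __name__ == "__main__":
    # Assumed location, mirroring the folder shown above.
    out = ("~/Gaudi-tutorials/PyTorch/Single_card_tutorials/Model-References/"
           "PyTorch/generative_models/stable-diffusion-v-2-1/outputs/txt2img-samples")
    for image in list_generated_images(out):
        print(image)
```

Inside Jupyter, `IPython.display.Image(filename=...)` can render one of the listed files inline.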

After completing the tasks in the notebook, you will have been introduced to the capabilities of GenAI and Stable Diffusion on Intel Gaudi AI Accelerators, including PyTorch, model inference, and prompt engineering.

Read more on govindhtech.com

#IntelTiberDeveloper#TexttoImage#StableDiffusion#IntelCPU#aipc#IntelTiberDeveloperCloud#aiml#IntelGaudiAI#Workshop#genai#Python#GenerativeAI#IntelGaudi#generativeartificialintelligence#technology#technews#news#GenAI#ai#govindhtech


Intel Webinar: Expert Guidance to Implement LLMs

How Prediction Guard Uses Intel Gaudi 2 AI Accelerators to Provide Reliable AI.

Intel webinar

As enterprises increasingly adopt open-source tools and software for large language models (LLMs) and generative AI, it is necessary to discuss the key strategies and technologies for building safe, scalable, and effective LLMs for business applications. In this Intel webinar, Rahul Unnikrishnan Nair, Engineering Lead at Intel Liftoff for Startups, and Daniel Whitenack, Ph.D., founder of Prediction Guard, walk through the key topics of implementing LLMs with open models, protecting data privacy, and maintaining high accuracy.


Intel AI webinar

Important Conditions for Enterprise LLM Adoption

The Intel webinar identifies three essential elements for successful enterprise LLM adoption: using open models, protecting data privacy, and maintaining high accuracy. Open models such as Mistral and Llama 3 give enterprises more control and customization because they can obtain the model weights and access the inference code. Closed models, by contrast, are accessed through APIs and offer no insight into their underlying processes.

Businesses that handle sensitive data such as PHI and PII must protect data privacy, and HIPAA compliance is typically essential in these scenarios. High accuracy is also crucial. It requires robust processes for comparing LLM outputs with ground truth data to reduce problems such as hallucinations, where the model produces erroneous or misleading information even though the output is grammatical and coherent.

Obstacles in Closed Models

Closed models, such as those offered by Cohere and OpenAI, have several drawbacks. Because businesses cannot observe how their inputs and outputs are handled, biases and errors can go unnoticed. Without transparency, users may experience latency variations and moderation failures without knowing why they occur. Prompt injection attacks pose serious security threats because they can manipulate closed models into exposing confidential information. These problems highlight why open models matter for enterprise applications.

Prediction Guard

The Method Used by Prediction Guard

Prediction Guard’s platform addresses these issues by combining safe hosting, strong security measures, and performance enhancements. For security, models are hosted in private environments inside the Intel Tiber Developer Cloud, and Intel Gaudi 2 AI accelerators are used to improve speed and reduce costs. Input filters mask or replace PII before it reaches the LLM and block prompt injections, while output validators check the factual consistency of LLM outputs against ground truth data.
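As an illustration of the input-filter idea only (this is not Prediction Guard’s implementation), a minimal regex-based filter can replace recognizable PII with placeholder tags before a prompt is sent to the model. The patterns are hypothetical and deliberately simple; production PII detection needs much broader coverage and is often model-based.

```python
import re

# Hypothetical, deliberately simple patterns. Order matters: SSN runs before
# PHONE so that an SSN is not mislabeled as a phone number.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(prompt: str) -> str:
    """Replace matched PII with placeholder tags before the prompt reaches the LLM."""
    for label, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


print(mask_pii("Email jane.doe@example.com or call +1 (555) 123-4567 about SSN 123-45-6789."))
# → Email [EMAIL] or call [PHONE] about SSN [SSN].
```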


During the optimization phase (September 2023 to April 2024), the team added load balancing across multiple Gaudi 2 machines, improved prompt processing performance by bucketing and padding similar-sized prompts, and switched to the TGI Gaudi framework for easier model server administration.

During the current growth phase (April 2024 to the present), Prediction Guard moved to a Kubernetes-based architecture in Intel Tiber Developer Cloud that combines CPU and Gaudi node groups. The team also implemented deployment automation, performance and uptime monitoring, and integration with Cloudflare for DDoS protection and CDN services.

Performance and Financial Gains

Switching to Gaudi 2 produced notable gains. Compared with its earlier GPU systems, Prediction Guard achieved a 10x reduction in compute costs and a 2x increase in throughput for enterprise applications. Its time-to-first-token latency of under 200 ms places it at the top of industry performance rankings. These gains came without sacrificing performance, demonstrating Gaudi 2’s scalability and cost-effectiveness.

Technical Analysis and Suggestions

The presenters stressed that access to an LLM API alone is not enough for a robust enterprise AI solution. Thorough validation against ground truth data is needed to ensure the outputs are correct and reliable. Data management is a crucial factor in AI system design, because integrating sensitive data requires robust privacy and security safeguards. Prediction Guard offers other developers a blueprint for optimizing Gaudi 2 usage through a staged approach: validate core functionality first, then gradually scale and optimize based on performance data and user feedback.
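One simple way to score outputs against ground truth is token-overlap F1, the metric popularized by SQuAD-style question-answering evaluation. It is used here as a swapped-in stand-in, not as Prediction Guard’s validator: lexical overlap is a crude proxy for factual consistency, and the 0.8 threshold and function names are assumptions. The wrong answer below still scores about 0.59, which is why production validators tend to rely on stronger, often model-based, checks.

```python
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a model output and a ground-truth answer."""
    pred, ref = tokens(prediction), tokens(reference)
    common = sum((Counter(pred) & Counter(ref)).values())  # shared tokens, with counts
    if common == 0:
        return 0.0
    precision, recall = common / len(pred), common / len(ref)
    return 2 * precision * recall / (precision + recall)


def validate(outputs, references, threshold: float = 0.8):
    """Return (output, score) pairs whose F1 against the reference is too low."""
    scored = [(o, token_f1(o, r)) for o, r in zip(outputs, references)]
    return [(o, s) for o, s in scored if s < threshold]


refs = ["Gaudi 2 has 96 GB of HBM2e memory."]
outs = ["Gaudi 2 ships with 32 GB of GDDR memory."]
print(validate(outs, refs))  # flagged: score is about 0.59
```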

Additional Information on Technical Execution

During the initial migration phase, handling static shapes required configuring the model servers to pad prompts of varying length to predefined sizes, which optimized memory and compute utilization. Dynamic batching, which processes a window of requests together, increased throughput and reduced latency.
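Dynamic batching can be sketched as a pure function over request arrival times, which keeps the grouping logic easy to test. The batch-size limit, the 50 ms window, and the names are hypothetical; serving frameworks such as TGI implement their own, more sophisticated batching.

```python
def form_batches(arrivals: list[float], max_batch_size: int = 4,
                 max_wait: float = 0.05) -> list[list[int]]:
    """Group request indices into batches.

    A batch opens at its first request and closes when it holds
    `max_batch_size` requests or when the next request arrives more than
    `max_wait` seconds after the batch opened. `arrivals` must be sorted.
    """
    batches, current, opened_at = [], [], None
    for i, t in enumerate(arrivals):
        if current and (len(current) == max_batch_size or t - opened_at > max_wait):
            batches.append(current)  # close the full or expired batch
            current = []
        if not current:
            opened_at = t  # this request opens a new window
        current.append(i)
    if current:
        batches.append(current)
    return batches


times = [0.00, 0.01, 0.02, 0.03, 0.04, 0.20, 0.21]
print(form_batches(times))  # → [[0, 1, 2, 3], [4], [5, 6]]
```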

During the optimization phase, load balancing across multiple Gaudi 2 servers was deployed to distribute traffic and prevent bottlenecks. Grouping input prompts into size-based buckets and padding within each bucket further improved performance. Switching to the TGI Gaudi framework simplified model server management.
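The bucketing step can be sketched as follows, assuming hypothetical bucket boundaries of 128, 256, 512, and 1,024 tokens (the source does not list the actual sizes). Each prompt goes to the smallest bucket that fits it and is padded only up to that bucket's length.

```python
from bisect import bisect_left
from collections import defaultdict

BUCKETS = [128, 256, 512, 1024]  # hypothetical bucket boundaries, in tokens


def bucket_for(length: int, buckets: list[int] = BUCKETS) -> int:
    """Return the smallest bucket size that can hold a prompt of `length` tokens."""
    i = bisect_left(buckets, length)
    if i == len(buckets):
        raise ValueError(f"prompt of {length} tokens exceeds the largest bucket {buckets[-1]}")
    return buckets[i]


def group_into_buckets(prompts: list[list[int]], pad_id: int = 0,
                       buckets: list[int] = BUCKETS) -> dict[int, list[list[int]]]:
    """Group prompts by bucket and right-pad each one to its bucket's length."""
    grouped: dict[int, list[list[int]]] = defaultdict(list)
    for tokens in prompts:
        size = bucket_for(len(tokens), buckets)
        grouped[size].append(tokens + [pad_id] * (size - len(tokens)))
    return dict(grouped)
```

The trade-off behind this design: padding everything to one maximum length wastes compute on short prompts, while exact per-prompt lengths produce many distinct shapes. A handful of buckets keeps the number of shapes small while limiting padding waste.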

In the scaling phase, an Intel Kubernetes Service (IKS) cluster combining CPU and Gaudi node groups enabled a scalable, robust deployment. Automated deployment procedures and monitoring systems helped maintain high availability and performance. Tuning inference parameters and managing key-value (KV) caches maximized model serving efficiency.
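Managing the KV cache matters because its memory footprint grows linearly with both batch size and sequence length. The back-of-the-envelope sketch below assumes a standard decoder-only transformer with grouped-query attention; the Llama-3-8B-like shape used in the example (32 layers, 8 KV heads, head dimension 128, bf16) is an illustrative assumption, not a configuration reported in the webinar.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int, bytes_per_elem: int = 2) -> int:
    """Memory held by the KV cache: one key and one value tensor per layer,
    each of shape [batch_size, num_kv_heads, seq_len, head_dim]."""
    per_token_per_layer = 2 * num_kv_heads * head_dim * bytes_per_elem  # 2 = key + value
    return per_token_per_layer * num_layers * seq_len * batch_size


def max_batch_for_budget(budget_bytes: int, num_layers: int, num_kv_heads: int,
                         head_dim: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    """Largest batch whose full-length KV cache fits in `budget_bytes`
    (ignores model weights, activations, and allocator overhead)."""
    per_sequence = kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, 1, bytes_per_elem)
    return budget_bytes // per_sequence


if __name__ == "__main__":
    # Illustrative Llama-3-8B-like shape (assumed): 32 layers, 8 KV heads, head_dim 128, bf16.
    print(kv_cache_bytes(32, 8, 128, seq_len=2048, batch_size=1))  # 268435456 bytes (256 MiB)
    print(max_batch_for_budget(16 * 1024**3, 32, 8, 128, seq_len=2048))  # 64
```

Under these assumptions, each 2,048-token sequence needs 256 MiB of cache, so a 16 GiB cache budget supports at most 64 concurrent full-length sequences.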

Useful Implementation Advice

Developers and businesses that want to build similar AI solutions should start with open models to retain control and customization options. They must also ensure that sensitive data is handled securely and in compliance with applicable regulations. Successful deployment requires a staged approach to optimization: start with core functionality, then progressively improve performance based on measurements and feedback. Finally, frameworks such as TGI Gaudi and Optimum Habana can streamline optimization and integration.

In summary


Prediction Guard's comprehensive approach, developed in partnership with Intel, shows how businesses can implement scalable, efficient, and secure AI solutions. By using Intel Gaudi 2 and Intel Tiber Developer Cloud to address key concerns around control, customization, data protection, and accuracy, Prediction Guard provides a strong foundation for enterprise AI adoption. The technical insights and practical recommendations from the Intel webinar give developers and businesses valuable guidance for navigating the challenges of LLM adoption.

Read more on govindhtech.com

#IntelWebinar#ExperiencedAssistance#Llama3#ImplementLLM#Largelanguagemodels#IntelLiftoff#IntelTiberDeveloperCloud#PredictionGuard#IntelGaudi2#KubernetesService#AIsolutions#dataprotection#technology#technews#news#govindhtech


The Veda App Resolves Asia's Education Challenges

Asia’s Educational Challenges Solved by the Veda App.

As the school year draws to a close, the Veda app will continue to serve more than a million students in Brunei, Japan, and hundreds of schools across Nepal.

Developed by Intel Liftoff program member InGrails Software, the Veda app is a cloud-based, all-in-one school and college administration solution that replaces the many separate systems educational institutions have historically needed.

The Veda App Solutions

By automating administrative duties, the Veda app helps schools streamline their operations, provide parents with real-time information, and improve student instruction.

Administrative tasks such as payroll, inventory management, billing and finance, and academics (classes, tests, reports, assignments, attendance, alerts, and so on) are now automated on a single platform. The COVID-19 pandemic also made schools more aware of the need to improve their remote learning options.

Because the platform supports asynchronous learning, teachers and students can engage with the curriculum at different times and from different places. This lets learners continue their studies despite outside disruptions.

Technological Accessibility

Although schools have similar requirements, their technological proficiency varies. The platform makes instructional tools easier to access and use.

Veda gives schools easy-to-use tools that apply data and visualization to save time and support decisions with a lasting effect on their students, helping schools change the way they deliver education.

The Role of Intel Liftoff

The Veda team and InGrails credit the Intel Liftoff program with helping them develop their product. They also credit its mentorship and resources, such as Intel Tiber Developer Cloud, with helping them refine their technology and optimize the platform for efficiency and scalability by incorporating artificial intelligence.

Through a series of virtual workshops, team members learned new techniques for improving system performance while managing system load. Going forward, they plan to use the hardware resources available on the Intel developer platform to keep innovating their product and to stay true to their goal of helping education make the best possible use of rapidly changing technology.

Veda founder Nirdesh Dwa says, "They are now an all-in-one cloud-based school software and digital learning system for growing, big and ambitious names in education."

The Impact

By delivering a single unified solution, Veda has significantly changed the education sector. More than 1,200 schools serving 1.3 million students in Nepal, Japan, and Brunei have benefited from simplified processes, an average 30% increase in parent engagement, and better instruction driven by data insights.

Education’s Future in Asia and Africa

The Veda platform is now expanding into Central Africa, Southeast Asia, and the Middle East and North Africa. The team is also pursuing its goal of fully integrating AI capabilities, such as social-emotional learning (SEL) tools and decision support systems, to build even more supportive and productive learning environments by mid-2025.

Their mission is to keep pushing the boundaries of innovation and to give educators the resources they need to thrive in an increasingly digital world.

Future plans for Veda include integrating AI to build a decision support system based on instructor input and to predict students' aptitude for advanced coursework.

An all-inclusive MIS and digital learning platform for schools and colleges

They are the greatest all-in-one cloud-based educational software and digital learning platform for rapidly expanding, well-known, and aspirational educational brands.

Designed to Be Used by All

With the mobile app, available on Android and iOS, parents and students can access all the information they need and study directly from their devices.

Designed for parents without technical experience, this comprehensive solution gives them everything they need to know about their children and the school, including important details such as bills.

Using their mobile devices, students can access resources, complete assignments and online courses, and much more.

Features

Veda is all you need to put your school or college on autopilot. It takes care of every aspect of education, freeing you to focus on what really matters: supporting students and securing their future.

Why choose Veda as your school's ERP?

Superior product quality, first-rate customer support, industry experience, and ever-expanding expertise make Veda the ideal software for schools and colleges.

Image Credit To Intel

More than one thousand universities and institutions vouch for it.

99% of clients have renewed for over six years.

Market leader in 45 Nepalese districts

Superior after-sales support

Up and running within ten days of the agreed-upon date

Veda MIS & E-learning

Enabling online education in more than a thousand schools. With a single school administration platform, Veda provides Zoom-integrated online classes, automatic attendance, assignments with annotation, subjective and objective exams, learning materials, online admissions, and online fee payment.

Image Credit To Intel

Your software should reflect the uniqueness of your institution

All kinds of educational institutions, including private, public, foreign, and Montessori schools as well as universities, use the proven Veda school management system.

What Is Veda?

“Veda” is a comprehensive platform for digital learning and school management. Veda helps schools automate daily operations and activities; enables efficient, economical communication and information sharing among staff, parents, and school administration; and lets schools centrally store, retrieve, and analyze the data produced by various school processes.

Read more on govindhtech.com

#VedaApp#ResolvedAsia#Challenges#IntelLiftoff#RegardingEducation#IntelLiftoffprogram#intel#IntelTiberDeveloperCloud#AIcapabilities#analyzingdata#learningplatform#AndroidiOS#mobiledevices#technology#technews#news#govindhtech
