
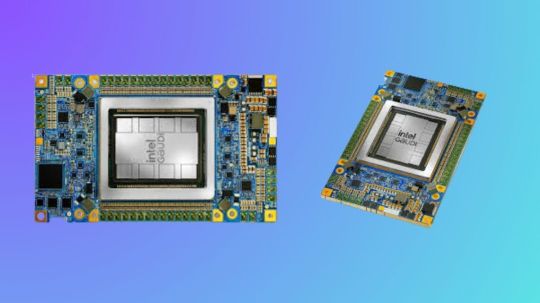

Use Intel Gaudi-3 Accelerators To Increase Your AI Skills

Boost Your Knowledge of AI with Intel Gaudi-3 Accelerators

Intel Gaudi AI accelerators are designed to improve the efficiency and performance of deep learning workloads, particularly large language models (LLMs) and generative artificial intelligence (AI). Gaudi accelerators target demanding AI applications such as large-scale model training and inference, and Intel positions them as a more affordable alternative to comparable NVIDIA GPUs. Because the Gaudi architecture is designed specifically for the growing compute demands of generative AI, it is a highly competitive option for businesses looking to deploy scalable AI solutions. The webinar summarized here covers the main technical characteristics, software integration, and upcoming developments of the Gaudi AI accelerators.

Intel Gaudi AI Accelerators Overview

The Gaudi AI accelerator targets highly resource-intensive generative AI workloads such as LLM training and inference. Gaudi 2, the second-generation accelerator, delivers a range of deep learning enhancements, while Intel Gaudi-3, expected to be released between 2024 and 2025, promises further advances.


Intel Gaudi 2

The main attributes of Gaudi 2 consist of:

Matrix Multiplication Engine: Dedicated hardware for efficient tensor operations.

Tensor Processor Cores: 24 cores deliver high throughput for AI tasks.

HBM2e Memory: 96 GB of on-board HBM2e memory enables larger models and batch sizes for better performance.

Integrated Networking: 24 on-chip 100 GbE ports provide low-latency, high-bandwidth communication, making it possible to scale applications across many accelerators.

7nm Process Technology: The 7nm process delivers strong performance and power efficiency for deep learning tasks.

These characteristics, particularly the combination of integrated networking and high memory bandwidth, make Gaudi 2 well suited to scalable AI work such as multi-node training of large models. Because networking is built into the chip, Gaudi does not need external network controllers, which Intel says greatly reduces latency compared with competing systems.
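The figures listed above support some quick sizing arithmetic. The sketch below assumes 1 GB = 10^9 bytes and counts model weights only, so real training or inference jobs (activations, optimizer state, KV cache) need considerably more memory. The model sizes and data types are illustrative choices, not figures from the webinar.

```python
# Quick sizing arithmetic for one Gaudi 2 card, from the figures listed above.
HBM_GB = 96             # on-board HBM2e capacity
PORTS = 24              # on-chip Ethernet ports
PORT_GBIT_PER_S = 100   # 100 GbE per port, per direction

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params: float, dtype: str) -> bool:
    """True if the weights alone fit in one card's HBM (ignores activations, KV cache, etc.)."""
    return weights_gb(num_params, dtype) <= HBM_GB


def aggregate_port_gbyte_per_s() -> float:
    """Raw aggregate port bandwidth per direction in GB/s (8 bits per byte)."""
    return PORTS * PORT_GBIT_PER_S / 8


if __name__ == "__main__":
    for params in (7e9, 70e9):
        for dtype in ("bf16", "fp8"):
            print(f"{params / 1e9:.0f}B params, {dtype}: "
                  f"{weights_gb(params, dtype):.0f} GB, fits={weights_fit(params, dtype)}")
    print(f"aggregate networking: {aggregate_port_gbyte_per_s():.0f} GB/s per direction")
```

For example, a 70-billion-parameter model needs about 140 GB for its BF16 weights alone. That is more than one card holds, and this is where the integrated networking for multi-card scaling becomes important.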

Intel Gaudi PyTorch

Software Environment and Stack

Intel’s Gaudi platform comes with an extensive software stack designed to integrate easily with popular AI frameworks such as PyTorch. Its essential components are:

Graph Compiler and Runtime: Compiles deep learning models into executable graphs optimized for Gaudi hardware.

Kernel Libraries: Provide pre-optimized kernels for common deep learning operations, reducing the need for manual optimization.

PyTorch Bridge: Lets PyTorch models run on Gaudi accelerators with minimal code changes.

Complete Docker Support: Pre-configured Docker images let users deploy models quickly and simplify environment setup.

For models coming from other platforms, such as NVIDIA GPUs, Intel also offers a GPU migration toolkit. It automatically adapts model code to run on Gaudi hardware, so developers can make the switch without completely rebuilding their existing infrastructure.

Generative AI Use Cases and the Open Platform for Enterprise AI

The Open Platform for Enterprise AI (OPEA) introduction is one of the webinar’s main highlights. “Enable businesses to develop and implement GenAI solutions powered by an open ecosystem that delivers on security, safety, scalability, cost efficiency, and agility” is the stated mission of OPEA. It is completely open source with open governance, and it was introduced in May 2024 under the Linux Foundation AI and Data umbrella.

It has attracted more than 40 industry partners, and its technical steering committee includes members from system integrators, hardware manufacturers, software developers, and end users. With OPEA, businesses can build and deploy scalable AI solutions in a variety of fields, from chatbots and question-answering systems to more intricate multimodal models. The platform takes advantage of Gaudi’s hardware improvements to cut costs while improving performance. Important use cases include:

Visual Q&A: Uses the LLaVA model for vision-based reasoning to understand and answer questions about input images.

Large Language and Vision Assistant, or LLaVA, is a multimodal AI model that combines language and vision to carry out tasks including visual comprehension and reasoning. In essence, it aims to combine the advantages of vision models with LLMs to provide answers to queries pertaining to visual content, such as photographs.

LLaVA builds on a large language model (the original release used Vicuna, a fine-tuned LLaMA model) and extends it with visual inputs. It pairs the LLM’s language understanding and generation abilities with an image encoder (a CLIP Vision Transformer in the original release). Thanks to this integration, LLaVA can reason about images rather than only describe them, which purely vision-based models cannot do.
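To make the fusion step concrete, here is a toy sketch. It assumes the design of the original LLaVA release, where a single learned linear layer maps vision-encoder patch features into the LLM’s token-embedding space (LLaVA-1.5 replaced it with a small MLP). The dimensions and numbers are invented for illustration and are far smaller than in a real model.

```python
# Toy illustration of LLaVA-style fusion: image-patch features from a vision
# encoder are projected into the LLM's token-embedding space and placed in
# front of the text-token embeddings.
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def project_patches(patches: List[Vector], w: Matrix, b: Vector) -> List[Vector]:
    """Apply the linear projection W x + b to every image-patch feature."""
    return [[y + b_i for y, b_i in zip(matvec(w, p), b)] for p in patches]


def build_llm_input(patches: List[Vector], w: Matrix, b: Vector,
                    text_embeddings: List[Vector]) -> List[Vector]:
    """Sequence the LLM sees: projected image tokens first, then text tokens."""
    return project_patches(patches, w, b) + text_embeddings


if __name__ == "__main__":
    # Hypothetical sizes: 3-dim vision features, 2-dim LLM embeddings.
    W = [[1.0, 0.0, 1.0],
         [0.0, 1.0, 0.0]]
    b = [0.0, 0.5]
    image_patches = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
    text = [[0.1, 0.2], [0.3, 0.4]]  # stand-in embeddings for a question
    seq = build_llm_input(image_patches, W, b, text)
    print(len(seq), seq[0])  # 4 [4.0, 2.5]
```

Because the projected image tokens live in the same space as word embeddings, the LLM can attend to them exactly as it attends to text. That is what lets it answer questions about the image.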

ChatQnA: A Retrieval-Augmented Generation (RAG) architecture that combines a vector database with large language models to improve chatbot capabilities. The model retrieves domain-specific data from a knowledge base and grounds its answers in that data, which keeps responses accurate and up to date and reduces hallucinations.
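The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration, not OPEA’s ChatQnA code. The three-dimensional embeddings are hand-written stand-ins for an embedding model’s output, a Python dict plays the role of the vector database, and the resulting prompt would be sent to an LLM, which is not shown.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]

# Hypothetical "vector database": id -> (embedding, passage text).
STORE: Dict[str, Tuple[Vector, str]] = {
    "doc1": ([1.0, 0.0, 0.0], "Gaudi 2 has 96 GB of HBM2e memory."),
    "doc2": ([0.0, 1.0, 0.0], "OPEA launched under LF AI & Data."),
    "doc3": ([0.9, 0.1, 0.0], "Gaudi 2 has 24 on-chip 100 GbE ports."),
}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: Vector, store: Dict[str, Tuple[Vector, str]],
             k: int = 2) -> List[str]:
    """Return the k passages whose embeddings are most similar to the query."""
    ranked = sorted(store.values(), key=lambda item: cosine(query_vec, item[0]),
                    reverse=True)
    return [text for _, text in ranked[:k]]


def build_prompt(question: str, passages: List[str]) -> str:
    """Ground the LLM by putting the retrieved passages in its prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (f"Answer using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {question}\nAnswer:")


if __name__ == "__main__":
    query_embedding = [1.0, 0.0, 0.0]  # pretend embedding of the question
    passages = retrieve(query_embedding, STORE)
    print(build_prompt("How much memory does Gaudi 2 have?", passages))
```

Telling the model to answer only from the supplied context is what ties its output to the knowledge base instead of to whatever it memorized during training.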

OPEA’s modular architecture makes its microservices customizable, letting users swap out databases and models as needed. This flexibility matters in fast-moving AI ecosystems, where new models and tools are constantly being developed.
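The swap-a-component idea can be sketched with structural interfaces. In OPEA the components run as separate microservices behind network APIs. This single-process sketch only illustrates the principle, and every class in it (`KeywordRetriever`, `StubGenerator`, `ChatPipeline`) is hypothetical rather than part of OPEA.

```python
from typing import List, Protocol


class Retriever(Protocol):
    def retrieve(self, query: str) -> List[str]: ...


class Generator(Protocol):
    def generate(self, prompt: str) -> str: ...


class KeywordRetriever:
    """Hypothetical stand-in for a vector-database microservice."""

    def __init__(self, docs: List[str]) -> None:
        self.docs = docs

    def retrieve(self, query: str) -> List[str]:
        # Naive word overlap instead of vector search, to keep the sketch small.
        words = set(query.lower().split())
        return [d for d in self.docs if words & set(d.lower().split())]


class StubGenerator:
    """Hypothetical stand-in for an LLM-serving microservice."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def generate(self, prompt: str) -> str:
        return f"{self.model_name} saw {len(prompt.splitlines())} lines"


class ChatPipeline:
    """Depends only on the two interfaces, so either part can be replaced."""

    def __init__(self, retriever: Retriever, generator: Generator) -> None:
        self.retriever = retriever
        self.generator = generator

    def ask(self, question: str) -> str:
        passages = self.retriever.retrieve(question)
        prompt = "\n".join(passages + [question])
        return self.generator.generate(prompt)


if __name__ == "__main__":
    docs = ["Gaudi 2 has 96 GB HBM2e", "OPEA is open source"]
    pipeline = ChatPipeline(KeywordRetriever(docs), StubGenerator("model-a"))
    print(pipeline.ask("what is opea"))
    pipeline.generator = StubGenerator("model-b")  # swap the model, nothing else
    print(pipeline.ask("what is opea"))
```

The pipeline never names a concrete retriever or model, so replacing either one is a one-line change. That is the property the modular microservice design aims for.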

Intel Gaudi Roadmap

According to Intel’s Gaudi roadmap, Intel Gaudi-3 delivers notable performance gains over Gaudi 2. Among the significant developments are:

Doubling AI Compute: To handle increasingly complex models such as LLMs, Intel Gaudi-3 will offer 2x the AI compute of Gaudi 2 for FP8 and 4x for BF16.

Enhanced Memory Bandwidth: Intel Gaudi-3 has 1.5x the memory bandwidth of its predecessor, helping it maintain speed when handling larger models.

Increased Network Capacity: Intel Gaudi-3’s 2x networking capacity further reduces bottlenecks in multi-node training, making it well suited to distributing workloads across large clusters.
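A simple roofline model helps show why both the compute gains and the bandwidth gains matter. The sketch below is an idealized estimate that uses only the ratios quoted above. It normalizes Gaudi 2’s peak compute and memory bandwidth to 1.0 at a given precision and ignores networking, software efficiency and real peak figures, so its outputs are upper bounds rather than benchmark predictions.

```python
def roofline_speedup(intensity: float, compute_ratio: float,
                     bandwidth_ratio: float) -> float:
    """Ideal speedup of a kernel on the new chip versus the baseline chip.

    Baseline peak compute and bandwidth are normalized to 1.0, so `intensity`
    (FLOPs per byte moved) is expressed relative to the baseline's ridge point.
    Attainable throughput = min(peak compute, bandwidth * intensity).
    """
    if intensity <= 0:
        raise ValueError("intensity must be positive")
    baseline = min(1.0, intensity)
    new = min(compute_ratio, bandwidth_ratio * intensity)
    return new / baseline


if __name__ == "__main__":
    # BF16 comparison using the quoted ratios: 4x compute, 1.5x bandwidth.
    for intensity in (0.5, 2.0, 10.0):
        print(intensity, roofline_speedup(intensity, 4.0, 1.5))
    # 0.5 -> 1.5 (bandwidth-bound), 2.0 -> 3.0, 10.0 -> 4.0 (compute-bound)
```

Under this model, memory-bound work such as small-batch LLM token generation gains at most the 1.5x bandwidth improvement. Only sufficiently compute-bound work approaches the full 4x BF16 (or 2x FP8) gain.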

Additionally, Intel’s forthcoming Falcon Shores architecture, expected to launch in 2025, will combine Gaudi AI IP and Intel’s GPU technology in a single GPU form factor. This hybrid architecture is part of Intel’s ongoing effort to offer an alternative to conventional GPU-heavy environments, and it is expected to provide an even more capable foundation for deep learning.

Tools for Deployment and Development

Developers can access Gaudi accelerators through the Intel Tiber Developer Cloud, which offers cloud-based Gaudi 2 instances. The platform lets users test and deploy models at scale without investing in on-premises infrastructure.

Getting started with Gaudi accelerators takes a few steps:

Docker Setup: Set up Docker environments from pre-built images.

Microservices Deployment: Deploy end-to-end AI solutions, such as chatbots or visual Q&A systems, with tools like Docker Compose and Kubernetes.

Monitoring: Built-in support for monitoring tools such as Prometheus and Grafana lets users track resource utilization and performance across their AI pipelines.
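Prometheus collects metrics by periodically scraping an HTTP endpoint that serves plain text in its exposition format, and Grafana then charts the stored series. The sketch below renders that format by hand to show what a scrape returns. The metric name, label and readings are hypothetical, this is not Intel’s exporter, and a real service would normally use the official `prometheus_client` library instead.

```python
# Minimal renderer for the Prometheus text exposition format (gauges only).
from typing import Dict, List, Tuple

Labels = Dict[str, str]


def _escape_label_value(value: str) -> str:
    # Label values must escape backslash, double quote and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def _escape_help(text: str) -> str:
    # HELP text escapes backslash and newline.
    return text.replace("\\", "\\\\").replace("\n", "\\n")


def render_gauge(name: str, help_text: str,
                 samples: List[Tuple[Labels, float]]) -> str:
    """Render one gauge metric family the way a scrape endpoint would serve it."""
    lines = [f"# HELP {name} {_escape_help(help_text)}", f"# TYPE {name} gauge"]
    for labels, value in samples:
        if labels:
            pairs = ",".join(f'{k}="{_escape_label_value(v)}"'
                             for k, v in sorted(labels.items()))
            lines.append(f"{name}{{{pairs}}} {value}")
        else:
            lines.append(f"{name} {value}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical metric name and readings for two accelerator cards.
    print(render_gauge(
        "accelerator_utilization_ratio",
        "Fraction of time the accelerator was busy.",
        [({"device": "0"}, 0.85), ({"device": "1"}, 0.4)],
    ), end="")
```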

In summary

Intel’s Gaudi accelerators, together with the extensive OPEA framework and software stack, offer a compelling solution for enterprises seeking an efficient way to scale AI workloads. With Gaudi 2’s strong performance and Intel Gaudi-3’s upcoming improvements, Intel is establishing itself as a formidable rival in the AI hardware market by offering a reasonably priced alternative to conventional GPU-based architectures. OPEA’s open, modular design and broad ecosystem support let developers quickly build and deploy AI solutions tailored to their specific requirements.

Read more on govindhtech.com

#IntelGaudi3Accelerators#AISkills#AIapplications#IntelGaudi3#Gaudi2#KernelLibraries#chatbots#LargeLanguage#VisionAssistant#LLaVA#intel#RetrievalAugmentedGeneration#RAG#news#Tools#IntelTiberDeveloperCloud#Prometheus#EnterpriseAI#GenerativeAI#technology#technews#govindhtech


MediaTek Teases LLM Demo with its Dimensity Chipsets

As generative AI's influence on the consumer tech industry widens, we've seen a growing number of brands, manufacturers, and companies make impressive strides in innovation, at least as far as AI is concerned. Not one to be outdone, MediaTek announced that it will showcase its upcoming LLM demo at Mobile World Congress (MWC) 2024, scheduled to take place later this month in Barcelona. Part of MediaTek's official announcement reads: "Last August, we announced that we are working closely to leverage Llama 2, Meta’s open-source Large Language Model (LLM), as part of our ongoing investment in creating technology and an ecosystem that enables the future of AI... At Mobile World Congress 2024, MediaTek will demonstrate an optimized Llama 2 Generative AI application on-device using MediaTek’s APU edge hardware acceleration on the Dimensity 9300 and 8300 for the first time."

MediaTek adds that it will use its latest APUs and NeuroPilot AI platform, working in tandem with Llama 2, to let generative AI apps run directly on-device instead of in the cloud. Given how generative AI on mobile devices currently works, this does seem a more promising approach. For example, Google's AI-based Magic Editor feature on its Pixel phones often requires users to back up a photo to the cloud before they can edit it. MediaTek states that on-device generative AI is the better scenario because it enables seamless performance, better privacy and security, lower latency, and the ability to work without an internet connection. Running Llama 2 on-device also requires powerful chipsets to handle the workload, and according to the company, its Dimensity 9300 and 8300 mobile chipsets are ideal for the job.

As for the chips, MediaTek states that both Dimensity SoCs can support Llama 2 7B applications. The Dimensity 9300 in particular is tailor-made for generative AI features: its 7th-gen APU handles hardware-accelerated generative AI on mobile devices, and MediaTek claims the 9300 delivers 8x faster transformer-based generative AI, 2x faster integer and floating-point compute, 45% better power efficiency, and support for on-device LoRA Fusion. MWC 2024 attendees interested in MediaTek's upcoming AI work can see the demo at the company's booth, 3D10 in Hall 3.
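MediaTek does not explain what "LoRA Fusion" involves. If it refers to the common technique of merging a Low-Rank Adaptation (LoRA) adapter into a model's base weights, the merged matrix is W' = W + (alpha / r) * B A, and inference afterwards costs the same as with the base model alone. Here is a minimal pure-Python sketch of that merge under this assumption, using made-up 2x2 values:

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (m x n) and an (n x p) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(w: Matrix, b_up: Matrix, a_down: Matrix,
               alpha: float, rank: int) -> Matrix:
    """Fold a LoRA adapter into base weights: W + (alpha / rank) * (B @ A).

    Shapes: w is d_out x d_in, b_up is d_out x rank, a_down is rank x d_in.
    """
    scale = alpha / rank
    delta = matmul(b_up, a_down)
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]   # base weight (identity, for readability)
    B = [[1.0], [2.0]]             # 2 x 1 up-projection
    A = [[0.5, 0.0]]               # 1 x 2 down-projection
    merged = merge_lora(W, B, A, alpha=2.0, rank=1)
    print(merged)  # [[2.0, 0.0], [2.0, 1.0]]
    x = [[1.0], [1.0]]  # input as a column vector
    print(matmul(merged, x))  # [[2.0], [3.0]]
```

The merged layer produces the same output as running the base path and the adapter path separately and adding them. Merging is attractive on a phone because it removes the extra adapter multiplications at inference time.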
