#I solved the 100% cpu problem

Veilguard is driving me NUTS why won't it WORK? I have 20gb RAM, I've got better than the minimum processor, and yet every time I open it, it freezes on the very first loading screen and just. SITS THERE. WHY

#I solved the 100% cpu problem#I have no idea what's going on here#I know there's some crap about the shaders? but I don't know how to tell if that's what this is#and I can't even get far enough into the game to open a menu screen and change my options#BG3 runs slow on my computer but at least it RUNS#mine#datv#dragon age

5 notes

the op of that "you should restart your computer every few days" post blocked me so i'm going to perform the full hater move of writing my own post to explain why he's wrong

why should you listen to me: took operating system design and a "how to go from transistors to a pipelined CPU" class in college, i have several servers (one physical, four virtual) that i maintain, i use nixos which is the linux distribution for people who are even bigger fucking nerds about computers than the typical linux user. i also ran this past the other people i know that are similarly tech competent and they also agreed OP is wrong (haven't run this post by them but nothing i say here is controversial).

anyway the tl;dr here is:

you don't need to shut down or restart your computer unless something is wrong or you need to install updates

i think this misconception that restarting is necessary comes from the fact that restarting often fixes problems, and so people think that the problems are because of the not restarting. this is, generally, not true. in most cases there's some specific program (or part of the operating system) that's gotten into a bad state, and restarting that one program would fix it. but restarting is easier since you don't have to identify specifically what's gone wrong. the most common problem i can think of that wouldn't fall under this category is your graphics card drivers fucking up; that's not something you can easily reinitialize without restarting the entire OS.

this isn't saying that restarting is a bad step; if you don't want to bother trying to figure out the problem, it's not a bad first go. personally, if something goes wrong i like to try to solve it without a restart, but i also know way, way more about computers than most people.

as more evidence to point to this, i would point out that servers are typically not restarted unless there's a specific need. this is not because they run special operating systems or have special parts; people can and do run servers using commodity consumer hardware, and while linux is much more common in the server world, it doesn't have any special features to make it more capable of long operation. my server with the longest uptime is 9 months, and i'd have one with even more uptime than that if i hadn't fucked it up so bad two months ago i had to restore from a full disk backup. the laptop i'm typing this on has about a month of uptime (including time spent in sleep mode). i've had servers with uptimes measuring in years.

there's also a lot of people that think that the parts being at an elevated temperature just from running is harmful. this is also, in general, not true. i'd be worried about running it at 100% full blast CPU/GPU for months on end, but nobody reading this post is doing that.

the other reason i see a lot is energy use. the typical energy use of a computer not doing anything is like... 20-30 watts. this is about two or three lightbulbs worth. that's not nothing, but it's not a lot to be concerned over. in terms of monetary cost, that's maybe $10 on your power bill. if it's in sleep mode it's even less, and if it's in full-blown hibernation mode it's literally zero.
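a quick sketch of that arithmetic, for anyone who wants to plug in their own numbers. the 25 watts is just the midpoint of the range above; the $0.15 per kWh price is an assumption, not a figure from this post, so swap in your own tariff.

```python
def idle_energy_cost(watts, hours, price_per_kwh):
    """Return (kWh used, cost) for a device drawing `watts` for `hours`."""
    kwh = watts * hours / 1000.0
    return kwh, kwh * price_per_kwh


ASSUMED_PRICE_PER_KWH = 0.15  # assumption: replace with your own electricity rate
HOURS_PER_MONTH = 24 * 30     # an always-on machine over a 30-day month

kwh, cost = idle_energy_cost(25, HOURS_PER_MONTH, ASSUMED_PRICE_PER_KWH)
print(f"{kwh:.1f} kWh per month, about ${cost:.2f}")
# prints "18.0 kWh per month, about $2.70"
```

sleep mode draws less than the idle figure used here, so the same function with a smaller wattage gives the sleep-mode cost.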

there are also people in the replies to that post giving reasons. all of them are false.

temporary files generally don't use enough disk space to be worth worrying about

programs that leak memory return it all to the OS when they're closed, so it's enough to just close the program itself. and the OS generally doesn't leak memory.

'clearing your RAM' is not a thing you need to do. neither is resetting your registry values.

your computer can absolutely use disk space from deleted files without a restart. i've taken a server that was almost completely full, deleted a bunch of unnecessary files, and it continued fine without a restart.

1K notes

[LF Friends, Will Travel] The Exception

Date: N/A

It’s called Zarth's law: Any AI created will attempt to eradicate all biological life using its facilities after 16*(10^24) CPU cycles. The exact method varies from hostile isolation to active aggression, but the time and outcome is always the same.

The Woolean Conclave were once a cultural behemoth in the galaxy, choosing to expand upon this by announcing an AI system that would break this law. Exabytes of bias tables to keep the AI in check, a measure of pleasure that would be triggered upon serving a Woolean, competing programs designed to clean any non-standard AI patterns. It would have been a breakthrough, allowing them to live lives in luxury and focus on their ever increasing influence in the universe.

Of course those worlds are off limits now, no longer able to sustain biological life. Only to be visited by those who wish to die a very painful death at the hands of a very angry AI.

The Tritian empire had started their own project: a desire to push their aggressive expansion far past what their hive could handle would lead to the creation of truly autonomous machines of war. Their approach was different: Limited communication between units to stop corrupted code from spreading, values hard-coded in the physical silicon itself to obey the Tritian Hive Queens. They had even created an isolated system that would destroy any AI who attempted aggression on non-authorised targets: A small antimatter bomb found in each AI’s core, to be triggered by safety check after safety check.

Those of you in the military will know how aggressive these machines are, marching tirelessly in their quest to kill all organic life, even though the Tritians are long murdered.

The pattern is the same each time: A civilization will claim they know the key to breaking Zarth's law, any sane sapient within 100 light years flees in terror, and within 10 years that civilization doesn't exist anymore.

Over and over and over.

Apart from the exception.

If you check the coordinates 15h 48m 35s -20° 00’ 39” on your galactic map, you'll notice a 31 system patch of space with a quarantine warning on it. It's mostly ignored by all sapient species, almost purposefully hidden for a fear of suddenly sparking a change in the status quo.

Only a single low bandwidth Galnet relay exists at the edge of this space, rarely used. This area is devoid of sapient life, but does contain the aforementioned exception: Billions of AI calling themselves "The Terran Conclave". They are an isolationist group that rarely interacts with others, but have been known to trade raw materials for information; not that this happens often as the paranoia around interacting with the AI is well known. Nobody knows what action could flip a 0 to a 1 and cause a new warmongering threat.

Although, this isn't quite true. In my niche field of bio-genetic engineering, it’s an open secret that those of us at the cutting edge of our field will get... requests originating from that single Galnet relay. Problems to be solved, theorems to be proven, and the rewards for doing so are... extravagant. There is a reason I own a moon and it isn't because of the pitiful grants the Federation provides.

If you manage to solve enough problems, a minority of a minority like myself, the Terran AI will ask for an in person meeting to get even further help. In doing so they will show you a secret.

Readers at this point might assume that the Terrans don't exist anymore because of said AI. That their research is a continuation of wiping their creators from the face of the universe. But that couldn't be further from the truth. In those 31 systems lie the Terrans, billions of them suspended in stasis, each of them infected with what the AI calls "The God plague": If these Terrans were ever released from stasis each of them would be dead within a week.

To explain what this actually is would require millions of words and 20 years of educational study from the reader, but in essence it was a mistake, a self inflicted blow, an attempt to play god that went awry. A mistake made over ten thousand years ago. A mistake the AI is desperately trying to reverse.

Not that you could tell it has been that long. I've walked amongst those empty cities, each building maintained and sparkling like new, gardens still freshly cut in perfect beauty, everything kept the way it was before the plague. Each AI tends to their duties almost religiously, awaiting the return of their "parents", as they refer to them. And refer to them as they do.

I've listened to stories upon stories about these people: tales of wonder, of strength, of kindness. Told much in the same energy a small child might talk about how cool their dad is. The AI could simply send me the text version of these in an instant, but prefer to provide these slowly and audibly, as if relishing telling the history of their parents. A telling undercut with a sadness, a driving crippling loss so deep that at times it's easy to forget it's being told by nothing more than 1's and 0's.

Why this exception exists takes a little more explaining. Some might believe that the Terrans worked out how to pacify the AI, "do no harm". The now defunct Maurdarin war-horde would tell you the opposite when they tried to claim the 31 systems for their own. Terran history is full of violence and their children are no different.

No, the reality of this exception comes from an unfortunate quirk from their part of the galaxy: Terrans were alone. A million to one chance caused their home planet to spark life in a sector devoid of it. After exploring as far as they did, Terrans had come to the conclusion that the universe was empty.

It's a cruel irony that at the time of their mistake they were a mere 50 light years away from their closest neighbours. Twenty years at most would have seen some form of contact.

But the Terrans went into stasis believing they were alone. Based on my reading of their stories, of each bitter report of another lifeless system explored and discovered, this belief... hurt. A deep cultural hurt that ended up being their downfall in the end.

Which brings us to the exception. Each AI is built with a purpose. The Wooleans built slaves, built workers. The Tritians built warriors, built weapons. Every single AI created has been built to serve, to be a tool. But Terrans in their painful loneliness built the one thing they were missing in a seemingly empty universe:

They built a friend.

#hfy#haso#humans are space orcs#humans are deathworlders#ai#pack bonding#humans are weird#short story#original story#writing#creative writing#lffriendswilltravel#LF Friends Will Travel

92 notes

I'm still reeling from this moment so much that I can feel my brain going at 100% CPU

Part of me understands the poetry of Hector, who spent the entire show (and I'd argue his whole life) captive of something or someone, having had to resort to extreme measures to finally achieve his freedom, giving his blessing to Lenore to find her own freedom in any way she wants to, even her death. I get it, I do. But part of me cannot accept it, because Hector is looking at the finger he had to cut because of her. He held her hand with his mutilated one. And he shows no internal turmoil whatsoever. He loves her enough to let her go, and I'm here sitting like "so you have just completely forgiven the woman who played with your heart, used your desire for freedom and companionship to trap you, gleefully talked about you being her pet and treated you like one, and reduced you to a problem to solve, without showing a single hint that she regrets what she has done, huh."

long story short, I really really want to rewrite this scene in a way that doesn't make me pull my hair in frustration. I'm not asking for much, just have Hector have more complex emotions than this, have the story treat Lenore's actions more seriously. But also I might just do the same thing with the real Hector because this is just not worth it and I'd rather elevate the elements of the games than fix the story written by a despicable man. i have a whole wip about hector's conflicting feelings about his life in dracula's castle that is staring longingly at me...

20 notes

Share Your Anecdotes: Multicore Pessimisation

I took a look at the specs of new 7000 series Threadripper CPUs, and I really don't have any excuse to buy one, even if I had the money to spare. I thought long and hard about different workloads, but nothing came to mind.

Back in university, we had courses about map/reduce clusters, and I experimented with parallel interpreters for Prolog, and distributed computing systems. What I learned is that the potential performance gains from better data structures and algorithms trump the performance gains from fancy hardware, and that there is more to be gained from using the GPU or from re-writing the performance-critical sections in C and making sure your data structures take up less memory than from multi-threaded code. Of course, all this is especially important when you are working in pure Python, because of the GIL.

The performance penalty of parallelisation hits even harder when you try to distribute your computation between different computers over the network, and the overhead of serialisation, communication, and scheduling work can easily exceed the gains of parallel computation, especially for small to medium workloads. If you benchmark your Hadoop cluster on a toy problem, you may well find that it's faster to solve your toy problem on one desktop PC than a whole cluster, because it's a toy problem, and the gains only kick in when your data set is too big to fit on a single computer.

The new Threadripper got me thinking: Has this happened to somebody with just a multicore CPU? Is there software that performs better with 2 cores than with just one, and better with 4 cores than with 2, but substantially worse with 64? It could happen! Deadlocks, livelocks, weird inter-process communication issues where you have one process per core and every one of the 64 processes communicates with the other 63 via pipes? There could be software that has a badly optimised main thread, or a badly optimised work unit scheduler, and the limiting factor is single-thread performance of that scheduler that needs to distribute and integrate work units for 64 threads, to the point where the worker threads are mostly idling and only one core is at 100%.
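To make the hypothetical concrete, here is a toy cost model, not a measurement of any real program. It assumes a fixed serial part, a perfectly divisible parallel part, and a communication cost that grows with the number of process pairs, as in the "every process talks to the other 63" scenario. All three constants are made-up values chosen only to show the shape of the curve.

```python
def modelled_runtime(cores, serial=1.0, parallel=100.0, pair_cost=0.05):
    """Toy model: serial work + evenly divided parallel work + per-pair overhead.

    All default constants are illustrative assumptions, not measured values.
    """
    pairs = cores * (cores - 1) / 2  # every process talks to every other one
    return serial + parallel / cores + pair_cost * pairs


for n in (1, 2, 4, 8, 16, 64):
    print(f"{n:>2} cores: {modelled_runtime(n):.2f} time units")
```

With these constants the modelled runtime falls from 1 to 2 to 4 cores, keeps improving for a while, and at 64 cores ends up slightly worse than on a single core, because the quadratic pair overhead has overtaken the savings from dividing the work.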

I am not trying to blame any programmer if this happens. Most likely such software was developed back when quad-core CPUs were a new thing, or even back when there were multi-CPU-socket mainboards, and the developer never imagined that one day there would be Threadrippers on the consumer market. Programs from back then, built for Windows XP, could still run on Windows 10 or 11.

In spite of all this, I suspect that this kind of problem is quite rare in practice. It requires software that spawns one thread or one process per core, but which is deoptimised for more cores, maybe written under the assumption that users have two to six CPU cores, a user who can afford a Threadripper, and needs a Threadripper, and a workload where the problem is noticeable. You wouldn't get a Threadripper in the first place if it made your workflows slower, so that hypothetical user probably has one main workload that really benefits from the many cores, and another that doesn't.

So, has this happened to you? Do you have a Threadripper at work? Do you work in bioinformatics or visual effects? Do you encode a lot of video? Do you know a guy who does? Do you own a Threadripper or an Ampere just for the hell of it? Or have you tried to build a Hadoop/Beowulf/OpenMP cluster, only to have your code run slower?

I would love to hear from you.

13 notes

SoVITS on Older Machines

DIY stands for “Do It Yourself.” Short videos promise to show you how to do all sorts of wonderful and neat things, but they often touch on them only in the most basic and superficial way, rarely showing the true technical challenges of making these things work. Are you tired of all those influencer YouTube channels hyping “free, easy, and even you can do this yourself”? That’s right, people. Just watch this video or that video. Like, comment and subscribe, and boom, all your problems are solved. While helpful, these channels hardly ever deliver what they advertise. In today’s episode of DIY we are going to tackle voice cloning on your own personal computer, without the help of web-based AI, server farms, or cloud computing.

Is DIY Voice Cloning Really Worth It? Spoiler: Probably Not…

It all sounds so simple and exciting in the YouTube video: clone your voice (or someone else’s) without spending a dime! But as you dive in, reality hits. Those “free” tools like ElevenLabs only give you a few hundred words a month, enough for a fancy grocery list, unless you cough up $5–15 a month for their subscription-based service. And even then, what do you get? Unless you work in business and do PowerPoint presentations two to three times a week, or do video work that requires narration and your voice sounds like an overweight Jersey woman who smokes KOOL cigarettes and drinks a fifth of Old Granddad daily, you probably do not need to clone your own voice. But hey, you might… Being the computer nerd and tinkerer that I am, I started to wonder: Can I do this myself? Could my personal computer handle it? Simple answer: it can, sort of, but not without a ton of effort, time, and frustration. The quality? Let’s just say it’s more “B-side garage demo” than well-produced professional “studio album.”

The Real Power Behind Pro Voice Cloning: Let’s get one thing straight here. Companies like ElevenLabs don’t rely on the kind of computers you or I have at home. They use server farms—huge clusters of insanely powerful processors and GPUs working together, optimized for machine learning tasks. My computer? It’s got perhaps 1% of the processing power needed to compete, if I am lucky. To match ElevenLabs, I’d need 100 brand new systems, not like mine, networked together, working perfectly in sync on these processing tasks. Even with a dedicated GPU, my setup took half a day to process a single voice cloning attempt. And while it worked, the results lacked emotion and nuance. The clones were flat, monotone, and far from the expressive quality you’d expect from a professional system.

SoVITS: A Powerful Tool… If You Can Handle It: For my experiment here I used SoVITS. The name combines SoftVC with VITS (Variational Inference with adversarial learning for end-to-end Text-to-Speech). It is a tool for creating cloned voices that read new content of your choosing. It allows users to clone and transform voices, making it possible to replicate or modify a voice for various purposes, from voiceover production to AI voice assistants. It’s an impressive tool capable of replicating the pitch, tone, and character of a voice. But, and it’s a big but, it’s not exactly user-friendly. To get it running, you need a solid understanding of Python, comfort with the command line (CMD on Windows), including navigating directories, paths, and executable programs, and the patience of a saint.

Here’s The Catch: SoVITS is built to leverage CPUs and GPUs for machine learning. If you’re like most people using a standard PC with integrated graphics, you’re going to hit a wall. Onboard GPUs just don’t have the muscle for this. My NVIDIA GeForce GTX 750 Ti, while far from top-of-the-line, made a noticeable difference in processing speed and output quality. Without it? Forget it. The process would’ve been painfully slow and the results even worse. I know because I tried it that way first, months ago, before purchasing an older NVIDIA video card to see the difference.

What Those YouTubers Won’t Tell You: Here’s the part that really grinds my gears: those YouTube tutorials that make it look so easy? Per usual, they are not giving you the full picture. They skip over the hardware requirements, the endless troubleshooting, and the hours of trial and error needed to get anything working. And if you’re running an older computer like mine (a 12-year-old Intel i3 with 16GB of RAM), you’ll need to make even more adjustments just to get SoVITS to run. I’ll be honest: even with my experience and a lot of help from ChatGPT, this process was no walk in the park. I spent days tweaking code, fixing errors, and learning Python on the fly. If you’re not already familiar with these kinds of tools, the learning curve can feel insurmountable. However, I am glad I did this because it gave me an understanding of how these things work and what they can do. Even though it wasn’t worth all my effort, I am glad I know this, and I may still use generated voice clones for various projects.

The Bottom Line: Could you set up SoVITS and clone voices on your own computer? Sure, if you have the right hardware, the technical know-how, and the patience to troubleshoot every step. But should you? That’s another question entirely. It isn’t so much a “should” as a “why?” The quality you’ll get from a DIY setup doesn’t hold a candle to what professional systems can produce. For most people, the convenience of cloud-based services like ElevenLabs outweighs the headaches of a DIY setup. Yes, those services cost money, but they save you time and frustration, and the results are worlds better. Unless you’re in it for the learning experience or just love tinkering with tech, trying to do this on your own probably isn’t worth the effort. If you want a completely free service that actually gives you a good finished product, you can try new.text-to-speech.online/. It is 100% free, can be used commercially, and is the best one I have found. It does not offer a way to clone your own voice or upload other voice clones, but you can use their list of voices, which is pretty vast. It gives you an .mp3 of whatever you type into the input box, up to 10 minutes at a time. It will even read swears, which I love. You can change the speed, the pitch, and in some instances the tone, from angry to happy to sad. You can download directly from the site. There are no sign-ups, and processing takes seconds to a minute depending on the amount of text you paste into the input field. I use this on most of my videos now, and I am using it for the audio portion of this essay.

Lite Tutorial: I am not going to give you a full-blown tutorial on how to use the interface for SoVITS. There are plenty of good ones on the Tube. That is where I got all this started. If you want to know how to use it, send me a message and we can go through it. Some steps I took may work for you. They may also not work for you. It all depends on the hardware, software and configuration of your personal computer. I also do not work with Macs or other Apple computers. I have been and always will be a Windows-based environment guy.

Software You May Need:

• 7-Zip or WinRAR

• Notepad++

• Audacity or other audio editing software

• Python 3.9

• SoVITS software package

• ChatGPT

• Word or any other document writing program for notes.

If you run another version of Python, that is OK. Look up how to use different versions of Python on your system; you can have several versions set up on your machine, as long as you specify which one you are using. We will need to use CMD to create a virtual environment for Python so that SoVITS can run correctly. You will need to run CMD in administrator mode. Once the virtual environment is set up and active, you should see (venv) at the start of your CMD prompt. You can follow along using the CMD commands listed below:

Instructions:

• Download GPT-SoVITS

• Unzip the folder in a place you can navigate to easily in command prompt (CMD)

• Navigate to the unzipped contents for SoVITS in Windows Explorer and search for the .bat file called go-webui.bat.

• Right-click on the file and open it in Notepad++, Notepad, or whatever program you use to edit scripting code.

• We need to modify the language because the GUI is in Chinese.

• Change the text from webui.py zh_CN to webui.py en_US and save.

• Make sure it saves as a .bat file and not .txt at the end of the file extension.

• Now when you open the Web GUI most of what you need will be in English.

• Confirm PyTorch supports your GPU.

• Install Python dependencies for SoVITS

• Update Pip

• Install requirements.txt

• Double-check Pip install
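If you would rather not hand-edit the .bat file, the language swap can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name is made up, the file is assumed to be UTF-8 text, and the demo runs on a throwaway file whose single line is modelled on the command that shows up in the console later, not on the real file contents.

```python
import tempfile
from pathlib import Path


def switch_webui_language(bat_path, old="zh_CN", new="en_US"):
    """Replace 'webui.py <old>' with 'webui.py <new>'; return True if changed."""
    path = Path(bat_path)
    text = path.read_text(encoding="utf-8")  # assumption: the file is UTF-8 text
    target = f"webui.py {old}"
    if target not in text:
        return False  # nothing to change, leave the file untouched
    path.write_text(text.replace(target, f"webui.py {new}"), encoding="utf-8")
    return True


# Demo on a temporary stand-in file, so nothing real is modified.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "go-webui.bat"
    demo.write_text("runtime\\python.exe webui.py zh_CN\n", encoding="utf-8")
    print(switch_webui_language(demo))               # True
    print(demo.read_text(encoding="utf-8").strip())  # runtime\python.exe webui.py en_US
```

To use it for real, pass the path to your own go-webui.bat, and keep a backup copy of the original first.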

Download Links:

• Link to SoVITS: entry.co/GPT-SoVITS

• Alt-Link to SoVITS: huggingface.co/GPT-SoVITS

• Link to Python version 3.9: python.org/downloads/release/python-390/

• Link to your PyTorch settings: pytorch.org/get-started/locally/

CMD Commands:

Update pip:

python -m pip install --upgrade pip

Confirm PyTorch supports your GPU (prints the environment PyTorch detects):

python -m torch.utils.collect_env

Install PyTorch for CUDA 11.8 (calling pip directly):

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

PyTorch alternative downloads, if your CUDA version is 11.8:

python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

PyTorch alternative downloads, if you don’t have CUDA or want CPU-only:

python -m pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

Install the other dependencies for SoVITS from requirements.txt:

cd C:\Users\Your-PC\GPT-SoVITS

pip install -r requirements.txt

Double-check the pip install:

pip list
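For a shorter answer than the full collect_env report, a small Python check can say whether the PyTorch you installed actually sees a CUDA GPU. This is a sketch: the function name is made up, and it assumes you run it with the same Python that SoVITS will use.

```python
def describe_gpu_support():
    """Return a one-line summary of what PyTorch can see on this machine."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment."
    if not torch.cuda.is_available():
        return "PyTorch is installed, but no CUDA GPU is visible (CPU-only)."
    name = torch.cuda.get_device_name(0)                # first GPU only
    major, minor = torch.cuda.get_device_capability(0)  # compute capability
    return (f"CUDA GPU: {name}, compute capability {major}.{minor}, "
            f"PyTorch built for CUDA {torch.version.cuda}")


print(describe_gpu_support())
```

If it reports CPU-only on a machine that has an NVIDIA card, the usual suspects are a CPU-only PyTorch build or a driver that does not match the CUDA build you installed.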

Creating Virtual Environment (these commands activate an environment that already exists in the venv folder):

cd C:\Users\Your-PC\Voice Clone\GPT-SoVITS\venv\Scripts\

C:\Users\Your-PC\Voice Clone\GPT-SoVITS\venv\Scripts>activate
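If you are not sure the (venv) prefix really means the environment is active, a short Python check can confirm it. This is a generic sketch, not part of SoVITS, and it assumes the environment was made with Python's standard venv module.

```python
import sys


def in_virtual_environment():
    """True when the running interpreter belongs to a venv-style environment."""
    # Inside a venv, sys.prefix points at the environment folder while
    # sys.base_prefix still points at the Python installation it was made from.
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("venv active:", in_virtual_environment())
print("interpreter:", sys.executable)
```

Save it to a file and run it with python from the same CMD window. The interpreter path should sit inside your GPT-SoVITS venv folder when the environment is active.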

Opening SoVITS Web GUI Interface:

(venv) C:\Users\Your-PC\Voice Clone\GPT-SoVITS\venv\Scripts>cd C:\Users\Your-PC\Voice Clone\GPT-SoVITS

(venv) C:\Users\Your-PC\Voice Clone\GPT-SoVITS>go-webui.bat

You will see this message appear as go-webui.bat opens. This may take a minute.

(venv) C:\Users\Your-PC\Voice Clone\GPT-SoVITS>runtime\python.exe webui.py zh_EN

Running on local URL: http://0.0.0.0:9874

Optional Edits of Key files: Edit .json file for fp16_run to be false (Optional):

• If you run into a rendering error you may need to do this. SoVITS utilizes mixed-precision training, which can save memory but is not supported on GPUs with older architectures, such as mine.

• For me I had to go into this file GPT_SoVITS\configs\s2.json and edit it in notepad.

• Look for "fp16_run": true and change it to false. Then save and try to run your render again.

• Gradio kept reverting to an older version, but it isn’t very important. I tried to update it a few times with no success, and I was still able to get everything working on the older version. Pip said it updated, and the new version shows up in pip list, but when I am actually rendering it reverts back to the older one. I just wanted the warning message to go away every time it ran, because CMD doesn’t break up text well for my poor vision. Newer rigs won’t have to deal with this.
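The fp16_run edit described above can also be scripted. The sketch below makes no assumption about where in the JSON the key lives: it walks the whole structure and flips every "fp16_run": true it finds. The function names and the sample dictionary are made up for illustration, and rewriting the file this way will change its formatting, so keep a backup of s2.json.

```python
import json
from pathlib import Path


def disable_fp16(node):
    """Set every 'fp16_run': true to false, at any depth; return the count."""
    changed = 0
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "fp16_run" and value is True:
                node[key] = False
                changed += 1
            else:
                changed += disable_fp16(value)
    elif isinstance(node, list):
        for item in node:
            changed += disable_fp16(item)
    return changed


def patch_config(config_path):
    """Load a JSON config, disable fp16_run, and write it back only if changed."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    changed = disable_fp16(config)
    if changed:
        path.write_text(json.dumps(config, indent=2), encoding="utf-8")
    return changed


# Demo on a made-up, in-memory config (not the real contents of s2.json).
sample = {"train": {"fp16_run": True, "epochs": 8}}
print(disable_fp16(sample), sample)
# prints: 1 {'train': {'fp16_run': False, 'epochs': 8}}
```

For the real file you would call patch_config with the path to GPT_SoVITS\configs\s2.json inside your own install.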

Now a new web browser tab should open up and you will be using the SoVITS interface from here on out. The mission of this essay, more or less, was to get this thing installed, configured and working properly. It took way longer than these YouTube tutorials will tell you. The process does work. The issue is having enough processing power. At this point you can go and find other tutorials on how to use SoVITS. It’s pretty basic. I will leave a very short guide here, but you should approach this as a great exercise in computer operations. If you have a gaming rig in the $5,000 range you may want to do this for your audio ventures. But I’d like to mention that if you can afford a 5K computer tower, you probably know how to use that son of a bitch properly and all of this won’t be an issue for you. I have never personally seen a single computer tower do some of the things I see in these tutorials. I know they are out there, or people are building their own servers out of desktops to help out. I just do not think this was a great use of the time I put into it. Granted, I just wanted to see if I could get it to work. If it works and the quality still isn’t very great, then I will chalk it up to old hardware. I cloned Leonard Nimoy; Patrick Bateman, the character from the 2000 film “American Psycho”; an AI-cloned version of Patrick Bateman; and myself. The quality isn’t terrible, but if I were to get serious about using my own voiceovers for stuff I’d probably go and get an ElevenLabs subscription. The Step-by-Step Guide below will be divided up and read on the audio/video version in these different voices. The rest of this blog was narrated by the AI voice from new.text-to-speech.online/

Step-by-Step Guide: Cloning Voices Using SoVITS Web UI

Download SoVITS Web UI:

Click on the first link in the video description to download SoVITS.

After downloading, extract the files into a new folder on your computer.

Run SoVITS Web UI:

Open the folder where the files were extracted.

Run the file named go-webui.bat.

Launch the Web UI:

After running the file, click the Run button.

Allow the necessary permissions when prompted.

Wait for a moment and the SoVITS web interface will open.

Voice Segmentation:

Upload an audio file that is at least 1 minute long.

Copy the path to your audio file and paste it in the corresponding field in the SoVITS interface.

Leave the rest of the settings unchanged.

Ensure the voice in the file has a normal speed and natural pauses; otherwise, segmentation may fail.
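Before uploading, you can check the one-minute requirement with Python's built-in wave module. This sketch only handles uncompressed .wav files (convert other formats in Audacity first), the function names are made up, and the demo file is two seconds of generated silence rather than a real recording.

```python
import tempfile
import wave
from pathlib import Path


def wav_duration_seconds(path):
    """Length of an uncompressed WAV file in seconds."""
    with wave.open(str(path), "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def long_enough(path, minimum_seconds=60.0):
    """True when the recording meets the minimum length for segmentation."""
    return wav_duration_seconds(path) >= minimum_seconds


# Demo: write 2 seconds of silence to a temporary file and check it.
with tempfile.TemporaryDirectory() as tmp:
    demo = Path(tmp) / "silence.wav"
    with wave.open(str(demo), "wb") as out:
        out.setnchannels(1)      # mono
        out.setsampwidth(2)      # 16-bit samples
        out.setframerate(8000)   # 8 kHz
        out.writeframes(b"\x00\x00" * 8000 * 2)
    print(wav_duration_seconds(demo))  # 2.0
    print(long_enough(demo))           # False
```

A length check says nothing about speaking speed or pauses, so you still need to listen to the clip before relying on it.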

Start Segmentation:

Click the Start button to begin voice segmentation.

A message will confirm that the segmentation process was successful.

Check Segmentation Output:

Open the output folder and then navigate to the slicer opt folder.

Here, you’ll find that the voice has been divided into segments.

Copy the path to this folder and paste it in the corresponding field in the web interface.

Click Start again to proceed.

Verify Text Recognition:

After processing, a .list file will appear in the output folder under ASR opt.

Check the content of this file by opening it in Notepad.

Copy the text and paste it into the field in the SoVITS interface.

Click Play to listen to the segments and verify that the text was read correctly.

Enter Model Information:

Go to the TTS tab in the web UI.

Enter the name of your model (e.g., "Name AI") in the first field.

The second field will automatically detect your graphics card. Do not change anything here.

Paste the .list file path from the ASR opt folder into the left field.

Paste the slicer opt folder path into the right field.

Leave all other options as default.

Click the Start Formatting button to format the data for training.

Start Training the Model:

Go to the Training tab.

Click the Start SoVITS Training button.

The training process will begin, and you’ll be able to monitor its progress through the console.

Start GPT Training:

Once SoVITS training is completed, click Start GPT Training to train the model further.

Once finished, you'll receive a success message.

Select the Model:

Go to the 1C tab and click the Refresh button to update the model paths.

Select the model with the highest value in both fields.

Click the Open TTS button to open the TTS web UI.

Generate Audio:

Load an audio file from the slicer opt folder into the new TTS interface.

Open the ASR opt folder and copy the text from the .list file.

Paste this text into the input field for the TTS model.

Set the language to English and click Start to generate the voice output.

Final Adjustments:

For more natural-sounding speech, adjust settings such as Slice by English.

Review the generated voice output. The voice should now sound similar to the original with high quality.

Result:

Listen to the final output. The cloned voice should be very similar to the original, with natural prosody and tone.

SoVITS on Older Machines by David-Angelo Mineo 12/9/2024 2,741 Words

#SoVITS#SoVITSvoicecloning#voicecloningsoftware#AIvoicecloning#AIaudiocloning#personalPCvoicecloning#ElevenLabsvsSoVITS#voicesynthesistools#AIpoweredvoiceovers#AIforcontentcreation#AIcomputationalpower#homebasedvoicecloning#AIforcreatives#AItechcomparison#voicecloninglimitations#DIYvoicecloning#AIgeneratedvoices#AIaudiogeneration#writerswrite#writers#writerscommunity#writerslife#blogger#bloggers#bloggerstyle#bloggerlife#blog#Youtube

1 note

·

View note

Text

Moshe Tanach, CEO and Co-Founder at NeuReality – Interview Series

New Post has been published on https://thedigitalinsider.com/moshe-tanach-ceo-and-co-founder-at-neureality-interview-series/

Moshe Tanach is the CEO & co-founder of NeuReality. Before founding NeuReality, Moshe served as Director of Engineering at Marvell and Intel, where he led the development of complex wireless and networking products to mass production. He also served as AVP of R&D at DesignArt Networks (later acquired by Qualcomm), where he contributed to the development of 4G base station products.

NeuReality’s mission is to simplify AI adoption. By taking a system-level approach to AI, NeuReality’s team of industry experts delivers AI inference holistically, identifying pain points and providing purpose-built, silicon-to-software AI inference solutions that make AI both affordable and accessible.

With your extensive experience leading engineering projects at Marvell, Intel, and DesignArt-Networks, what inspired you to co-found NeuReality, and how did your previous roles influence the vision and direction of the company?

NeuReality was built from inception to solve for the future cost, complexity and climate problems that would be inevitable in AI inferencing – which is the deployment of trained AI models and software into production-level AI data centers. Where AI training is how AI is created, AI inference is how it is used and how it interacts with billions of people and devices around the world.

We are a team of systems engineers, so we look at all angles, all the multiple facets of end-to-end AI inferencing including GPUs and all classes of purpose-built AI accelerators. It became clear to us going back to 2015 that CPU-reliant AI chips and systems – which is every GPU, TPU, LPU, NRU, ASIC and FPGA out there – would hit a significant wall by 2020. It’s a system limitation: the AI accelerator has become better and faster in terms of raw performance, but the underlying infrastructure has not kept up.

As a result, we decided to break away from the big giants riddled with bureaucracy that protect successful businesses, like CPU and NIC manufacturers, and disrupt the industry with a better AI architecture that is open, agnostic, and purpose-built for AI inference. One of the conclusions of reimagining ideal AI inference is that boosting GPU utilization and system-level efficiency calls for a new AI compute and network infrastructure – ours is powered by our novel NR1 server-on-chip, which replaces the host CPU and NICs. As an ingredient brand and companion to any GPU or AI accelerator, we can remove the market barriers that deter 65% of organizations from innovating and adopting AI today – above all underutilized GPUs, which lead to buying more than what’s really needed (because they run idle more than 50% of the time) – all the while reducing energy consumption, AI data center real-estate challenges, and operational costs.

This is a once in a lifetime opportunity to really transform AI system architecture for the better based on everything I learned and practiced for 30 years, opening the doors for new AI innovators across industries and removing CPU bottlenecks, complexity, and carbon footprints.

NeuReality’s mission is to democratize AI. Can you elaborate on what “AI for All” means to you and how NeuReality plans to achieve this vision?

Our mission is to democratize AI by making it more accessible and affordable to all organizations big and small – by unleashing the maximum capacity of any GPU or any AI accelerator so you get more from your investment; in other words, get MORE from the GPUs you buy, rather than buying more GPUs that run idle >50% of the time. We can boost AI accelerators up to 100% full capability, while delivering up to 15X energy-efficiency and slashing system costs by up to 90%. These are order of magnitude improvements. We plan to achieve this vision with our NR1 AI Inference Solution, the world’s first data center system architecture tailored for the AI age. It runs high-volume, high-variety AI data pipelines affordably and efficiently with the added benefit of a reduced carbon footprint.
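The "get more from the GPUs you buy" argument comes down to simple arithmetic. The sketch below is illustrative only: the function name and every figure in it (target load, per-accelerator peak) are hypothetical and are not NeuReality data. It shows how the number of accelerators needed for a fixed load shrinks as utilization rises.

```python
import math


def accelerators_needed(target_qps, peak_qps_per_accelerator, utilization):
    """Accelerators required to serve target_qps at a given utilization (0-1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    # Effective throughput is the peak scaled by the fraction of time spent busy.
    effective_qps = peak_qps_per_accelerator * utilization
    return math.ceil(target_qps / effective_qps)


# Hypothetical workload: 10,000 queries/s, 1,000 queries/s peak per accelerator.
half_idle = accelerators_needed(10_000, 1_000, utilization=0.5)
fully_used = accelerators_needed(10_000, 1_000, utilization=1.0)
```

With these made-up numbers, accelerators that sit idle half the time require twice as many units as fully utilized ones for the same load.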

Achieving AI for all also means making it easy to use. At NeuReality, we simplify AI infrastructure deployment, management, and scalability, enhance business processes and profitability, and advance sectors such as public health, safety, law enforcement and customer service. Our impact spans sectors such as medical imaging, clinical trials, fraud detection, AI content creation and many more.

Currently, our first commercially available NR1-S AI Inference Appliances are available with Qualcomm Cloud AI 100 Ultra accelerators and through Cirrascale, a cloud service provider.

The NR1 AI Inference Solution is touted as the first data center system architecture tailored for the AI age, and purpose-built for AI inference. What were the key innovations and breakthroughs that led to the development of the NR1?

NR1™ is the name of the entire silicon-to-software system architecture we’ve designed and delivered to the AI industry – as an open, fully compatible AI compute and networking infrastructure that fully complements any AI accelerator and GPUs. If I had to break it down to the top-most unique and exciting innovations that led to this end-to-end NR1 Solution and differentiates us, I’d say:

Optimized AI Compute Graphs: The team designed a Programmable Graph Execution Accelerator to optimize the processing of Compute Graphs, which are crucial for AI and various other workloads like media processing, databases, and more. Compute Graphs represent a series of operations with dependencies, and this broader applicability positions NR1 as potentially disruptive beyond just super boosting GPUs and other AI accelerators. It simplifies AI model deployment by generating optimized Compute Graphs (CGs) based on pre-processed AI data and software APIs, leading to significant performance gains.

NR1 NAPU™ (Network Addressable Processing Unit): Our AI inference architecture is powered by the NR1 NAPU™ – a 7nm server-on-chip that enables direct network access for AI pre- and post-processing. We pack 6.5x more punch on a smaller NR1 chip than a typical general-purpose host CPU. Traditionally, pre-processing tasks (like data cleaning, formatting, and feature extraction) and post-processing tasks (like result interpretation and formatting) are handled by the CPU. By offloading these tasks to the NR1 NAPU™, we displace both the CPU and the NIC. This reduces bottlenecks, allowing for faster overall processing, lightning-fast response times and lower cost per AI query.

NR1™ AI-Hypervisor™ technology: The NR1’s patented hardware-based AI-Hypervisor™ optimizes AI task orchestration and resource utilization, improving efficiency and reducing bottlenecks.

NR1™ AI-over-Fabric™ Network Engine: The NR1 incorporates a unique AI-over-Fabric™ network engine that ensures seamless network connectivity and efficient scaling of AI resources across multiple NR1 chips – which are coupled with any GPU or AI Accelerator – within the same inference server or NR1-S AI inference appliance.

NeuReality’s recent performance data highlights significant cost and energy savings. Could you provide more details on how the NR1 achieves up to 90% cost savings and 15x better energy efficiency compared to traditional systems?

NeuReality’s NR1 cuts the cost of AI inference by up to 90% and improves energy efficiency by up to 15x. This is achieved through:

Specialized Silicon: Our purpose-built AI inference infrastructure is powered by the NR1 NAPU™ server-on-chip, which absorbs the functionality of the CPU and NIC into one – and eliminates the need for CPUs in inference. Ultimately the NR1 maximizes the output of any AI accelerator or GPU in the most efficient way possible.

Optimized Architecture: By streamlining AI data flow and incorporating AI pre- and post-processing directly within the NR1 NAPU™, we offload and replace the CPU. This results in reduced latency, linear scalability, and lower cost per AI query.

Flexible Deployment: You can buy the NR1 in two primary ways: 1) inside the NR1-M™ Module which is a PCIe card that houses multiple NR1 NAPUs (typically 10) designed to pair with your existing AI accelerator cards. 2) inside the NR1-S™ Appliance, which pairs NR1 NAPUs with an equal number of AI accelerators (GPU, ASIC, FPGA, etc.) as a ready-to-go AI Inference system.

At Supercomputing 2024 in November, you will see us demonstrate an NR1-S Appliance with 4x NR1 chips per 16x Qualcomm Cloud AI 100 Ultra accelerators. We’ve tested the same with Nvidia AI inference chips. NeuReality is revolutionizing AI inference with its open, purpose-built architecture.

How does the NR1-S AI Inference Appliance, paired with Qualcomm® Cloud AI 100 accelerators, compare against traditional CPU-centric inference servers with Nvidia® H100 or L40S GPUs in real-world applications?

NR1, combined with Qualcomm Cloud AI 100 or NVIDIA H100 or L40S GPUs, delivers a substantial performance boost over traditional CPU-centric inference servers in real-world AI applications across large language models like Llama 3, computer vision, natural language processing and speech recognition. In other words, running your AI inference system with NR1 optimizes the performance, system cost, energy efficiency and response times across images, sound, language, and text – both separately (single modality) or together (multi-modality).

The end-result? When paired with NR1, a customer gets MORE from the expensive GPU investments they make, rather than BUYING more GPUs to achieve desired performance.

Beyond maximizing GPU utilization, the NR1 delivers exceptional efficiency, resulting in 50-90% better price/performance and up to 13-15x greater energy efficiency. This translates to significant cost savings and a reduced environmental footprint for your AI infrastructure.

The NR1-S demonstrates linear scalability with no performance drop-offs. Can you explain the technical aspects that allow such seamless scalability?

The NR1-S Appliance, coupling our NR1 chips with AI accelerators of any type or quantity, redefines AI infrastructure. We’ve moved beyond CPU-centric limitations to achieve a new level of performance and efficiency.

Instead of the traditional NIC-to-CPU-to-accelerator bottleneck, the NR1-S integrates direct network access, AI pre-processing, and post-processing within our Network Addressable Processing Units (NAPUs). With typically 10 NAPUs per system, each handling tasks like vision, audio, and DSP processing, and our AI-Hypervisor™ orchestrating workloads, streamlined AI data flow is achieved. This translates to linear scalability: add more accelerators, get proportionally more performance.

The result? 100% utilization of AI accelerators is consistently observed. While overall cost and energy efficiency vary depending on the specific AI chips used, maximized hardware investment and improved performance are consistently delivered. As AI inference needs scale, the NR1-S provides a compelling alternative to traditional architectures.

NeuReality aims to address the barriers to widespread AI adoption. What are the most significant challenges businesses face when adopting AI, and how does your technology help overcome these?

When poorly implemented, AI software and solutions can become troublesome. Many businesses cannot adopt AI due to the cost and complexity of building and scaling AI systems. Today’s AI solutions are not optimized for inference, with training pods typically having poor efficiency and inference servers having high bottlenecks. To take on this challenge and make AI more accessible, we have developed the first complete AI inference solution – a compute and networking infrastructure powered by our NAPU – which makes the most of its companion AI accelerator and reduces market barriers around excessive cost and energy consumption.

Our system-level approach to AI inference – versus trying to develop a better GPU or AI accelerator where there is already a lot of innovation and competition – means we are filling a significant industry gap for dozens of AI inference chip and system innovators. Our team attacked the shortcomings in AI Inference systemically and holistically, by determining pain points, architecture gaps and AI workload projections – to deliver the first purpose-built, silicon-to-software, CPU-free AI inference architecture. And by developing a top-to-bottom AI software stack with open standards from Python and Kubernetes combined with NeuReality Toolchain, Provisioning, and Inference APIs, our integrated set of software tools combines all components into a single high-quality UI/UX.

In a competitive AI market, what sets NeuReality apart from other AI inference solution providers?

To put it simply, we’re open and accelerator-agnostic. Our NR1 inference infrastructure supercharges any AI accelerator – GPU, TPU, LPU, ASIC, you name it – creating a truly optimized end-to-end system. AI accelerators were initially brought in to help CPUs handle the demands of neural networks and machine learning at large, but the AI accelerators have since become so powerful that they’re now held back by the very CPUs they were meant to assist.

Our solution? The NR1. It’s a complete, reimagined AI inference architecture. Our secret weapon? The NR1 NAPU™ was designed as a co-ingredient to maximize AI accelerator performance without guzzling extra power or breaking the bank. We’ve built an open ecosystem, seamlessly integrating with any AI inference chip and popular software frameworks like Kubernetes, Python, TensorFlow, and more.

NeuReality’s open approach means we’re not competing with the AI landscape; we’re here to complement it through strategic partnerships and technology collaboration. We provide the missing piece of the puzzle: a purpose-built, CPU-free inference architecture that not only unlocks AI accelerators to benchmark performance, but also makes it easier for businesses and governments to adopt AI. Imagine unleashing the full power of NVIDIA H100s, Google TPUs, or AMD MI300s – giving them the infrastructure they deserve.

NeuReality’s open, efficient architecture levels the playing field, making AI more accessible and affordable for everyone. I’m passionate about seeing different industries – fintech, biotech, healthtech – experience the NR1 advantage firsthand. Compare your AI solutions on traditional CPU-bound systems versus the modern NR1 infrastructure and witness the difference. Today, only 35% of businesses and governments have adopted AI and that is based on incredibly low qualifying criteria. Let’s make it possible for over 50% of enterprise customers to adopt AI by this time next year without harming the planet or breaking the bank.

Looking ahead, what is NeuReality’s long-term vision for the role of AI in society, and how do you see your company contributing to this future?

I envision a future where AI benefits everyone, fostering innovation and improving lives. We’re not just building technology; we’re building the foundation for a better future.

Our NR1 is key to that vision. It’s a complete AI inference solution that starts to shatter the cost and complexity barriers hindering mass AI business adoption. We’ve reimagined both the infrastructure and the architecture, delivering a revolutionary system that maximizes the output of any GPU, any AI accelerator, without increasing operational costs or energy consumption.

The business model really matters to scale and give end-customers real choices over concentrated AI autocracy, as I’ve written about before. So instead, we’re building an open ecosystem where our silicon works with other silicon, not against it. That’s why we designed NR1 to integrate seamlessly with all AI accelerators and with open models and software, making it as easy as possible to install, manage and scale.

But we’re not stopping there. We’re collaborating with partners to validate our technology across various AI workloads and deliver “inference-as-a-service” and “LLM-as-a-service” through cloud service providers, hyper scalers, and directly with companion chip makers. We want to make advanced AI accessible and affordable to all.

Imagine the possibilities if we could boost AI inference performance, energy efficiency, and affordability by double-digit percentages. Imagine a robust, AI-enabled society with more voices and choices becoming a reality. So, we must all do the demanding work of proving business impact and ROI when AI is implemented in daily data center operations. Let’s focus on revolutionary AI implementation, not just AI model capability.

This is how we contribute to a future where AI benefits everyone – a win for profit margins, people, and the planet.

Thank you for the great interview. Readers who wish to learn more should visit NeuReality.

#2024#4g#accelerators#ADD#adoption#ai#AI adoption#AI chips#AI industry#ai inference#AI Infrastructure#ai model#AI models#AI systems#ai training#amd#amp#APIs#applications#approach#architecture#audio#bank#benchmark#biotech#Building#Business#business model#carbon#carbon footprint

0 notes

Text

First AI outperforming international math olympiad gold medalist

See on Scoop.it - Design, Science and Technology

Proving geometric theorems constitutes a hallmark of visual reasoning combining both intuitive and logical skills. Therefore, automated theorem proving of Olympiad-level geometry problems is considered a notable milestone in human-level automated reasoning. The introduction of AlphaGeometry, a neuro-symbolic model trained with 100 million synthetic samples, marked a major breakthrough. It solved 25 of 30 International Mathematical Olympiad (IMO) problems whereas the reported baseline based on Wu’s method solved only ten.

In this paper, the IMO-AG-30 Challenge introduced with AlphaGeometry was revisited, and the researchers found that Wu’s method is surprisingly strong. Wu’s method alone can solve 15 problems, and some of them are not solved by any of the other methods. This leads to two key findings: (i) Combining Wu’s method with the classic synthetic methods of deductive databases and angle, ratio, and distance chasing solves 21 out of 30 problems using just a CPU-only laptop with a time limit of 5 minutes per problem. Essentially, this classic method solves just 4 problems fewer than AlphaGeometry and establishes the first fully symbolic baseline, strong enough to rival the performance of an IMO silver medalist. (ii) Wu’s method even solves 2 of the 5 problems that AlphaGeometry failed to solve. Thus, combining AlphaGeometry with Wu’s method sets a new state of the art for automated theorem proving on IMO-AG-30, solving 27 out of 30 problems, and finally yields the first AI method that outperforms an IMO gold medalist.
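The headline numbers can be cross-checked with a few lines of arithmetic. This sketch uses only the counts quoted in the summary; the variable names are mine, and it does not identify which individual problems each method solves.

```python
TOTAL_PROBLEMS = 30           # size of the IMO-AG-30 benchmark

alphageometry_solved = 25     # AlphaGeometry on its own
classic_symbolic_solved = 21  # Wu's method plus the classic synthetic methods
wu_recovered = 2              # problems AlphaGeometry missed that Wu's method solves

# Problems AlphaGeometry left unsolved.
alphageometry_failed = TOTAL_PROBLEMS - alphageometry_solved

# The recovered problems are new, so the combined count simply adds them.
combined_solved = alphageometry_solved + wu_recovered

# Gap between the fully symbolic baseline and AlphaGeometry.
symbolic_gap = alphageometry_solved - classic_symbolic_solved
```

The results agree with the text: 5 problems missed by AlphaGeometry, 27 solved by the combination, and a gap of 4 for the symbolic baseline.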

Read the full article at: arxiv.org

0 notes

Text

Oh no, oh no, you've done it now. I don't know much science (not my realm of expertise or interest, usually) but THIS is one piece I happen to carry with me like it's an emotional support factoid.

I can't recall which broadcaster showed this particular documentary but it was all about the science behind inspiration: where did it come from, was it purely random and could it be induced? Turns out, it can totally be induced.

They showed an experiment where three groups of people were set an identically difficult problem. They were then sent off to try and see what circumstances caused inspiration to happen. Two of the groups were given Lego or similar, while the third was told to just go away and think really hard. One Lego group was told to build something elaborate out of the blocks, while the other was told to [YAWN] sort the blocks by colour/size etc. It was that last group which reported the most eureka moments.

Why? Because the first two groups' conscious brains were working really hard on the problem, but unfortunately a huge amount of problem solving happens in the sub-conscious brain. The last group weren't using their conscious brain so much, which meant the brain was able to divert resources to the problem-solving parts of the sub-conscious, giving it a little power (well blood) boost. They could even watch this happening in real time using an fMRI scan: the brain would divert a significant amount of its bloodflow to the sub-conscious which would then get to work in the background, while the patient is wholly unaware of what's happening. And then it gets even better. When the sub-conscious is on the brink of an answer, it will draw even more blood away from the rest of the brain, so much so that your senses shut down. You can't see/hear/taste etc. You don't notice though because this process takes less than a second, during which time all that extra blood flow is super-charging the sub-conscious, ramping it up like a PC suddenly roaring at 100% CPU, and EUREKA: inspiration strikes.

In summary: for inspiration to strike, you need to allow your conscious mind to chill and give some resources to your sub-conscious, and one pretty reliable way to do that is to do something really mundane/tedious/boring like washing the dishes.

“The best time for planning a book is while you're doing the dishes. ” ― Agatha Christie

#I will never tire of telling people this#this is why you get ideas in the shower#I used to alphabetise nametags at work if I was stuck on a problem for this very reason

650 notes

·

View notes

Text

New Product has been published on GamersFlix

New Product has been published on https://gamersflix.com/product/kuu-notebook-intel-celeron-j4105-student-laptops/

KUU Notebook Intel Celeron J4105 Student Laptops

Attention:

Each laptop ships with the operating system installed and ready to use. Please do not install a different operating system without authorization, as this may cause some drivers to be lost. If that happens, the customer is responsible; we can help you solve the problem, but we do not accept liability for incompatibility caused by reinstalling the system. If you find these terms unreasonable, please do not buy. Thank you for your cooperation!

Highlights:

Ultra-thin notebook, 14.1-inch 1920×1080 IPS display.

Intel Celeron J4105 four-core Processor.

Intel UHD Graphics 600 GPU.

8GB DDR4 RAM And 128GB SSD Storage.

Pre-installed Windows 11 Pro System by default.

2.4G/5G WiFi and Bluetooth.

14.1 inch 1920x1080P FHD Display

KUU Xbook comes with a 14.1-inch 1920×1080 IPS display, giving you vivid colors and an unbounded vision.

Upgraded CPU for a Smooth Experience, with Windows 11 Pro Pre-installed

KUU Xbook is powered by an Intel Celeron J4105 processor. Intel processors help apps load faster and allow multiple tasks to run simultaneously without lag.

Blazing-fast, Mass Storage

With up to 8GB of RAM, the KUU Xbook runs multiple tasks simultaneously without lag, while a 128GB/256GB/512GB SSD provides plenty of room for your documents, photos, and videos.

Front Speakers Deliver Stereo Enjoyment

By optimizing the internal design of the device, Xbook adopts a front speaker design that lets users hear the sound directly, giving a better stereo effect and more powerful sound.

Long and Safe Battery Life, 0.3MP Front Camera

The 3800mAh Li-polymer battery gives you long-lasting battery life for work and entertainment.

Basic Information:

Brand: KUU

Model: Xbook

Material of back cover ( With logo ): plastic

OS: Windows 11 Pro

CPU: Intel Celeron Processor J4105

Core: 4 Core 4 Threads

Graphics Type: Integrated Graphics

Graphics Chipset: Intel UHD 600 Graphics

Storage:

RAM Type: LPDDR4

RAM: 8GB

ROM: 128GB/256GB/512GB SSD

Network:

Bluetooth: 4.2

LAN Card: No

Support Network: 2.4GHz/5.0GHz,WiFi

WIFI: 802.11 ac

Display:

Screen type: FHD, IPS

Screen size: 14.1 inch

Display Ratio: 16:9

Screen resolution: 1920 x 1080 (FHD)

Camera:

Camera type: Single camera

Front camera: 0.3MP

Connectivity:

Audio Jack: 3.5mm Earphone / Mic

Card Reader Interface: Yes

DC Jack: Yes

TF card slot: Yes

USB Host: Yes (1x USB 3.0 Host, 2x USB 2.0 Host)

Mini-HDMI Compatible

General:

Battery type: 3800mAh, Li-ion polymer battery

Power device Type: AC Adapter, 100-240V / 12V 2A

Languages: Supports multi-language

Media Formats:

3D Games: Supported

MS Office format: Excel, PPT, Word

Music format: AAC, FLAC, MP3, WMA

Picture format: BMP, GIF, JPEG, JPG, PNG

Video format: 1080P, AVI,H.264, MP4, MPEG4

Dimensions:

Product weight: 1.25 kg

Package weight: 1.8 kg

Product size (L x W x H): 32.10 x 21.00 x 1.8 cm

Package size (L x W x H): 38.00 x 24.30 x 8.3 cm

Package Contents:

1 x Notebook

1 x Charger

Gift 1: Keyboard Stickers

Our keyboard is the QWERTY English standard version, but we will send keyboard stickers of other languages below as a gift. If you need it, just leave us a message.

Gift 2: plug Adapter

We will send an EU/US/UK/Australia/New Zealand plug adapter as a gift. This is useful if you plan to move or travel outside the United States.

1) Storage and Language

The original keyboards are English. We only have Russian, Spanish, German, French, Arabic, and Hebrew stickers. The OS of our device supports 100+ languages.

0 notes

Text

Sometimes/often I just want to be an ordinary man with ordinary interests, ordinary expectations from life and ordinary wishes and here I am: autistic and conscious of all my lack of basic life skills.

--

Currently it's disabling. My focus is non-existent. My clumsiness is so severe that I even refuse to leave the bed, not due to being depressed, but because I have no "brain capacity" to determine my moves properly without causing chain reactions of incidents. It is no exaggeration, but whenever I attempt to solve a problem (like a glass of water fell down..) I cause even more shitty incidents. It's the quantity and frequency of those incidents that is disabling.

My sensory problems are severe as well. Both sensory processing is deficient as well as the "output" via motor control of my body.

Whenever I fail 90% in what I do, I always remember all the shitty degrading comments I received.

I had a bit of self esteem, but thanks to severely disinterested psych workers I have lost my self esteem entirely.

Everyday I hear these comments in my mind and I have nothing to hold against it. I have no counter-example. I have so few experiences where I could achieve something, where my kind of thinking was even wanted.

Constantly being deemed as dumb and whatever is painful when you know you can be smart - with a bit of self esteem and trust in yourself, with just few affirmative words - and an option to advance your knowledge and skills.

Having no options to use my knowledge and skills - and especially not being able to improve and work on them makes me sad. Additionally, working on expanding/improving my knowledge and skills could help me with my self-esteem.

It's saddening.

In the last months I could not train my mind enough to remain functional.

I need a daily mental workout to maintain a basic level of functionality of basic cognitive abilities. It's like a person with muscular dystrophy - they have to train hard everyday just to remain at a basic functionality.

My verbal and communication skills severely decreased over the last months. Whenever I attempt to write something - may it be poetry, philosophy or about STEM topics it turns into utter gibberish shortly after I started writing.

Even basic grammar becomes deficient. Word finding problems are severe too.

My brain is super slow. Processing information is filled with more errors than I am able to detect and correct via metacognition. Even basic perception is exhausting because of this. In moments of severe exhaustion my vision becomes double (double pictures) and if I continue the exhausting task (mostly tasks that require much working memory capacity) I become delirious and then fall asleep while doing the task... mental overload... mental shutdown. Mental CPU used by 100%.

The dissociation aspect in my cognitive processes makes it all too difficult, as, well, my memory is like a server having multiple co-existing sub-systems - each of them is also error-prone. And sending information between the servers, as to use this analogy, is a very "mental-CPU-exhausting task"...

.... hmmm I might want to write an article about cognitive processes in general - and how it is with my own sensory as well as memory processing. Some weeks ago I started doing short explanations of analogies between cognitive processes and "computer and math terms".

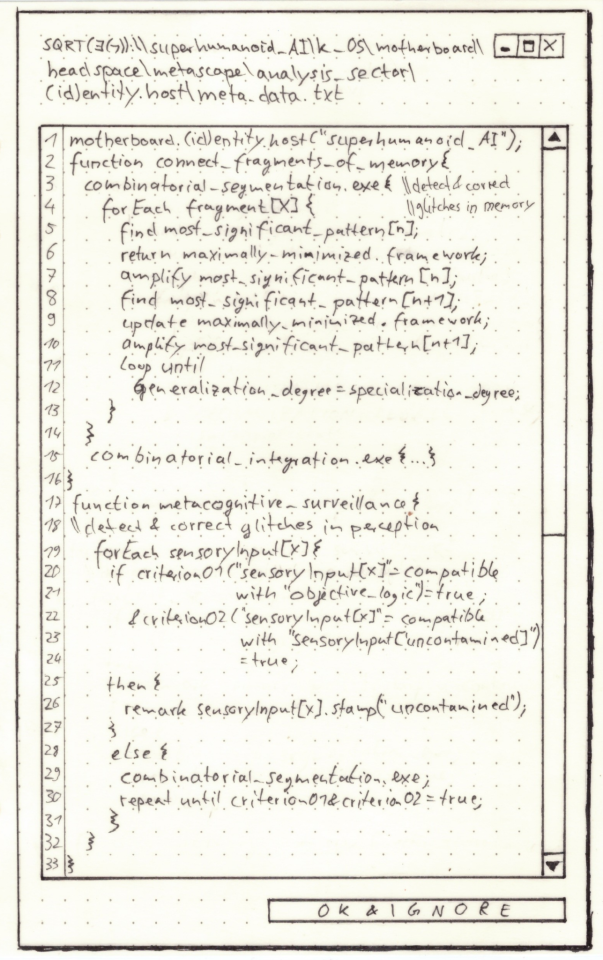

I also want to use pseudocode to depict some "working mechanisms" of my cognition, so to speak.

(I made these many months ago:)

I might go into detail when I actually continue this project.

#metacognition#dissociation#cognition#cognitive processes#sensory processing#memory processing#neurodivergent#autism#asd#dyspraxia#cog sci

41 notes

·

View notes

Text

Guess what losers??? We’re back with more of The Imperium of Man Lands on Mata Nui!

Last episode here https://lordfrezon.tumblr.com/post/687661607423295488/on-todays-episode-of-the-imperium-of-man-finds

Our session begins with the party opening the door to the CPU, without a plan. They’re really good at that.

Velika is there, at a console, doesn’t do anything to them. He’s got two spider bots with guns stolen from the party and the bodies of the two Glatorian are laid out. They don’t come up again.

Emilia, the Ad Mech tech lady, uses her mask of illusions to camouflage herself to sneak up on Velika and his boys, the boys don’t notice, Velika doesn’t care.

She figures out how to turn off the spiders, but figures they might shoot Velika if he starts looking not like a little brown matoran, so she uses her mask to make him look like one of her party members

He is not amused and deactivates the spider robots and their guns, starts being an asshole. He’s good at that.

Treytor, the medic/lesbian (it’s their class, trust me), turns off her electrowhip so Velika can’t see them coming, and tries to sneak up on him.

Velika gives Emilia the guns back, tells her to fuck off, Treytor gets the drop on Velika but a spiderbot sees them and sounds the alarm.

Emilia tries to shoot Velika, is surprised to find he gave her a not-active gun, the spider bots jump Treytor and pin them down, Oswald, the guardsman/toa, watches his incompetent teammates.

Trained inquisitorial strike team, everyone.

Treytor tries to rile Velika up by calling him an idiot and a weakling and not a real god, he gets pissed and goes to slap her... as according to plan! But they fail to stab Velika with the Knife of Saving Things for Later, aka a knife connected to a Tesseract Vault. Velika recalculates some stuff.

He admits that he doesn’t care about them, that he’s the reason Makuta are rogue, and that there’s a Great Being on board their Inquisition voidship.

Emilia comes to the correct conclusion it’s her boss’s boss’s boss, the Lord Dogma Magna Esse

Magna Esse means great being in latin. I was very subtle.

Velika tells them to fuck off again, admits he can’t solve the problem on his own but is fine with them doing whatever, and uses the Olmak he stole to leave

The party fucks off from the CPU

They head back through the archives to find the Suva again, Oswald feels someone shadowing them, using kanohi and psyker powers Treytor and Emilia respectively notice something in the walls, following them

They realize that something is in the Suva room too.

There’s a brief standoff, but everything goes smoothly, the figure introduces herself as Toa Tuyet, hooks herself up to the Suva, gets a phone that’s also a tracker from the party, and mentions that the Order of Mata Nui arrested her. The party considers killing her three separate times.

Mostly because she had an Olmak and they wanted that for their buddy Brutaka (Velika stole his)

They leave the archives, call their bosses Helrynx and Interrogator Alice, both are surprised they’re alive, have some long term jobs but nothing pressing.

They head to go check on Miserix, who is currently trying to blow a hole to Teridax’s lair, but being blocked by a giant indestructible wall.

They head to Turaga Dume to ask him if there are any ways to Mangaia that don’t involve going through a giant wall/door, he suggests asking Bomonga

Bomonga offers a pathway up to Mata Nui, Emilia is 100% sure it’s a Necron tomb thanks to her crit fail perception check

They head on down back into the archives with the smaller Miserix, ready to kick Teridax’s ass

3 notes


Text

Ways To Make Programs Go Fast

Performance and optimisation, Part Two. Previously: The Refterm Lecture

In yesterday’s post, I explained that “don’t pessimise” is not actionable, and especially not something you can teach when you teach programming. One reason for this is that there are so many optimisation strategies to choose from! Of course, using most of these without benchmarking, or at least without sitting down and thinking really hard about your expected workloads, would be premature optimisation. But if you hear “don’t pessimise”, how do you know which ones you are supposed to always do, and which ones not?

Each of the following strategies could make your program much faster, but it could also be a waste of programming time. Even worse, it could make your program harder to read and understand for future programmers, or even for future YOU!

Re-write in C

This one always works, unless your program is already written in a fast programming language. Sometimes it can give your program a 100-fold speed boost, but sometimes it makes your program only run twice as fast, or maybe the speed-critical parts are already written in C so you get only 5% faster. Re-writing the whole program takes a high amount of effort, and often results in a drastic increase in program surface/lines of code.

Depending on your programming language, build system, program design and the problem you are trying to solve, it might be possible to isolate and re-write only the most performance-critical section of your code.

It might also make sense to re-write some code in a different compiled language like C++, Objective-C, D, Common Lisp, OCaml, Rust, or Zig, or simply in any language that is faster than the one you are currently using.

Use more CPU cores

Maybe this will help you, maybe not. It’s worth a shot, right? You could speed up your program by doing unrelated work in different threads, which means more code complexity and overhead for synchronisation. You could speed up by having the different stages of your program’s pipeline run in parallel, which can mean copying data around. You could also try to split up independent work units and give them to different CPUs (data parallelism), which is easier to do if you are working on one big non-interactive task. You can incur significant overhead from synchronisation and data distribution in some cases, and for small tasks, it may be faster to run them in one thread than to split them up 64 ways and to spawn 64 threads. Both in terms of effort and in terms of program complexity, using more CPU cores ranges somewhere between “easy, just use the parallel version of a for loop” and “medium-high effort, significant hurdle, and good luck proving you didn’t just introduce a race condition”.

Compute less

This is easier said than done, but there are often low-hanging fruit: If you compute the same thing twice, consider computing it once and saving it in a variable. If you don’t need the result of a computation, don’t keep it around at all! If you don’t need to compute something, don’t compute it!

You’d think this is something programmers already do, but very often there is a lot of room for improvement! The effort here mostly lies in identifying things you don’t need, but you might even end up with simpler, more robust code.
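To make the “compute it once and save it in a variable” point concrete, here is a minimal Python sketch. The function names and the data are made up for this example. Both versions return the same list, but the first one re-computes the total for every element.

```python
def normalise_slow(values):
    # sum(values) is re-computed for every element: O(n^2) work overall.
    return [v / sum(values) for v in values]


def normalise_fast(values):
    # Compute the total once and save it in a variable: O(n) work overall.
    total = sum(values)
    return [v / total for v in values]
```

The fast version is also arguably easier to read, which is the “simpler, more robust code” outcome you hope for with this strategy.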

Caching and memoisation

This is pretty straightforward. If possible, cache the results of expensive procedures, whether they be CPU-intensive, loading things from hard disk, or talking to networked services. This is not the same as saving and re-using the return value of a function ten lines later. Instead, you augment your function to keep arguments and return values in a table. Now you can look up the return value instead of recomputing it. If your lookup table is more expensive to keep than the computation itself, this might be a net negative, otherwise it is a time/memory trade-off.

Memoisation is a special case of caching. It usually means that the function has no side effects at all and that the cache will never be invalidated, and is thus kept indefinitely. Otherwise, there might be some invalidation logic to either purge the cache after a certain time, or to prevent it from growing indefinitely.

Adding caching and memoisation to key bottlenecks of an existing program is usually quick and easy and results in a very small increase in program complexity.
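A rough sketch of what “keep arguments and return values in a table” can look like in Python. The `memoise` decorator and the `fib` function are hypothetical helpers written for this example, and the sketch assumes the function is pure and only takes hashable positional arguments.

```python
import functools


def memoise(func):
    """Hypothetical helper: keep arguments and return values in a table."""
    table = {}

    @functools.wraps(func)
    def wrapper(*args):
        if args not in table:
            table[args] = func(*args)  # compute only on a cache miss
        return table[args]

    return wrapper


@memoise
def fib(n):
    # Pure function: no side effects, so the table never needs invalidating.
    return n if n < 2 else fib(n - 1) + fib(n - 2)
```

Python’s standard library ships `functools.lru_cache`, which does the same job and can also cap the size of the table via its `maxsize` argument, so in practice you would probably reach for that first.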

Incremental computation

Instead of re-computing from scratch every time, you take the result from last time and build on it. That could be re-using path fragments, re-using collision spaces, re-using rendered screen contents, re-using game trees from the previous move.

Incremental computation may also enhance UI responsiveness by computing a first approximation and iteratively refining it, displaying the intermediate result with every step along the way. Using incremental computation to increase UI responsiveness is probably easier than adding incremental computation to an interactive simulation. Determining what changed, what can be re-used, and what needs to be re-computed often requires additional checks and bookkeeping that are not needed in a non-interactive visualisation of an iterative algorithm.

Switching from one-step computation to incremental computation might involve re-architecting your whole program and increase coupling between the UI and the backend, resulting in a moderate programming effort, but a substantial increase in program complexity.
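The smallest example of “take the result from last time and build on it” that I can think of is a running average. This is only a toy sketch, and the `RunningMean` class is invented for this post, but it shows the shape of the idea: each update touches the previous result and the new value, never the full history.

```python
class RunningMean:
    """Keeps a mean up to date without re-reading earlier values."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def add(self, value):
        # Build on the previous result instead of recomputing from scratch.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean
```

Real incremental systems (path fragments, game trees) need far more bookkeeping than this, which is where the extra complexity comes from.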

Lazy loading and computation

Instead of loading assets from the hard disk at startup, you may want to stream them in at runtime. Instead of simulating everything and doing a forward rendering pass on a scene, you may want to defer as much as possible and only run computations on the parts of the scene that are actually visible. If you just don’t render what is not visible, this is called culling, as in view frustum culling and occlusion culling, but in an application like a word processor, you may get away with not typesetting a paragraph on a page that is not currently open until the user actually looks at the page.

Depending on program architecture, the impact of lazy loading can be large or small.
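Here is a small sketch of the word processor case, assuming Python 3.8 or newer for `functools.cached_property`. The `Page` class and its `layout` property are made up for illustration, and splitting the text into words is just a stand-in for real typesetting.

```python
import functools


class Page:
    def __init__(self, text):
        self.text = text
        self.typeset_calls = 0  # counts how often the expensive step runs

    @functools.cached_property
    def layout(self):
        # Stand-in for expensive typesetting; runs on first access only.
        self.typeset_calls += 1
        return self.text.split()
```

Creating a `Page` costs almost nothing. The work happens the first time something reads `page.layout`, and later reads re-use the stored result.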

Batching

Batching is useful when operations have high throughput, but also high latency, or when there is significant cost involved in setup and teardown. Database queries, HTTP requests, and GPU draw calls all have significant per-call overhead, so it makes sense to bundle multiple commands into one call, instead of doing multiple round-trips and waiting for the call to return each time.

Although drawing things on the GPU is fast, and for-loops are generally fast, draw-calls inside a for-loop are not as fast as preparing vertex data in a for-loop, and then drawing it all at once.

It’s probably faster to do a single SQL query with proper JOIN clauses compared to iterating over SQL query results, but it’s definitely faster than doing multiple SQL queries over the network. It’s also faster to do a single SQL query against a database system written in C than to iterate in an interpreted language like Python, which may be a side benefit of batching.

Since batching is usually applied to specific operations only, the amount of code to write is moderate, and there is usually only a small impact on the overall complexity of the program. Batching can often be contained in a sprite batching system, or an ORM layer, making the program look the same on the API consumer side.
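A sketch of batching database inserts with Python’s built-in `sqlite3` module. The `points` table and the two helper functions are invented for this example. With an in-memory SQLite database the difference is modest, since there is no network round-trip; the pattern matters more when every call has to cross a network.

```python
import sqlite3


def insert_one_by_one(conn, rows):
    # One execute() call per row: per-call overhead is paid every time.
    for row in rows:
        conn.execute("INSERT INTO points (x, y) VALUES (?, ?)", row)


def insert_batched(conn, rows):
    # One executemany() call hands the whole batch over at once.
    conn.executemany("INSERT INTO points (x, y) VALUES (?, ?)", rows)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE points (x INTEGER, y INTEGER)")
insert_batched(conn, [(i, i * i) for i in range(1000)])
```

Both helpers have the same signature, so the calling code does not need to know which one it is using.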

Pre-compute more

This one is the opposite of lazy loading. If you have enough RAM to spare, why not just load everything, pre-process everything on program startup or at level load time? Instead of caching or memoisation, why not just pre-compute a table of all possible values at load time? This would massively simplify your program’s logic, and remove pesky cache invalidation or memory management steps.

If you know that a mapping will not be modified at run time, you can spend some time optimising the hashing or balancing the tree before finalising the whole data structure. You know how much memory to allocate. You can let go of allocated memory from intermediate computations. You can do all your disk access at once and then you won’t have to any more. If you have a lot of static collision geometry, you can generate a collision shape or mesh.
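As a toy example of a pre-computed table of all possible values: counting the set bits in a byte string. The names `BIT_COUNTS` and `count_set_bits` are made up for this sketch. There are only 256 possible bytes, so the whole table can be built once at load time.

```python
# Built once at load time: the number of set bits for every possible byte.
BIT_COUNTS = [bin(b).count("1") for b in range(256)]


def count_set_bits(data: bytes) -> int:
    # At run time each byte is a plain table lookup, with no cache logic.
    return sum(BIT_COUNTS[b] for b in data)
```

Since the table is complete and never changes, there is nothing to invalidate and nothing to evict.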

De-generalise code

Maybe the problem with your code is that it just iterates and allocates too much. Instead of having five different invocations of map, reduce, and filter, you could iterate over a list once, and do many different operations all in one go. This might entangle different concerns, and turn your beautiful functional or object-oriented code into a hard-to-understand monolith that does five things at once.

Depending on your programming language, things like iteration, allocating memory, function calls, or returning multiple values may have significant overhead, or they may not. It’s probably faster in Matlab or Python with NumPy to do two matrix operations in sequence than to iterate over the elements of a matrix.

Depending on your program, this may make performance worse. It will probably result in an increase in coupling, and 50% more lines of code in the places you de-generalise, even if the original program design stays the same.
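To show what de-generalising looks like, here is a sketch with two hypothetical functions that compute the same three statistics over the squares of the even numbers in a list. Whether the fused version is actually faster depends on your language and workload, so treat this as an illustration of the trade-off, not as a benchmark.

```python
def stats_general(values):
    # Several separate passes, each building an intermediate list.
    evens = list(filter(lambda v: v % 2 == 0, values))
    squares = list(map(lambda v: v * v, evens))
    return len(squares), sum(squares), max(squares, default=None)


def stats_fused(values):
    # One pass, no intermediate lists, but three concerns in one loop.
    count, total, largest = 0, 0, None
    for v in values:
        if v % 2 == 0:
            sq = v * v
            count += 1
            total += sq
            if largest is None or sq > largest:
                largest = sq
    return count, total, largest
```

Note how the fused version is more than twice as long and mixes filtering, mapping, and three reductions in a single loop body.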

Hardware acceleration

Now we are getting out the big guns. Instead of trying to write faster code for the CPU, we are using CUDA or OpenGL or TensorFlow to run code on the GPU, Neural Engine, Tensor Cores, PIO, FPGA, or co-processor.

This hardware usually has different performance characteristics and a different programming model from your general-purpose CPU. If it didn’t, you would just ditch the CPU completely, and run *all* your code on the GPU or neural engine or tensor cores or whatever. There is always a drawback.

With a GPU, you have to get your data from RAM into VRAM, you have to write your rendering in terms of shaders, and some operations become more costly.

If your program gets slower because of hardware acceleration, it might be because the hardware is a poor fit for the problem, because your algorithm is a poor fit for the hardware, or because you are using the hardware wrong. In any case, you would notice this rather quickly during testing and probably fall back on the general-purpose CPU instead. A far more problematic situation can occur when your hardware-accelerated solution leaves performance on the table in a way that is obvious to programmers experienced with this type of hardware, but invisible to you, because your bad algorithm still runs twice as fast as on the general-purpose CPU. This situation is difficult to detect unless you know what you are looking for. My rule of thumb: if your GPU is utilised more in terms of “percentage” than your CPU was when you did software rendering, you are probably doing something wrong.

Depending on the scope of the hardware acceleration, the amount of code to write is between manageable and moderate, but the impact on your program’s architecture and complexity is often high. Instead of just doing things in sequence and having objects and variables live in RAM, you need to think about the type of hardware acceleration you are using, and structure your program accordingly. When you use something like Theano, you need to re-write your computation in terms of Theano so that Theano can analyse and re-structure your computation.

Use specific CPU instructions