#HyperComputer

Text

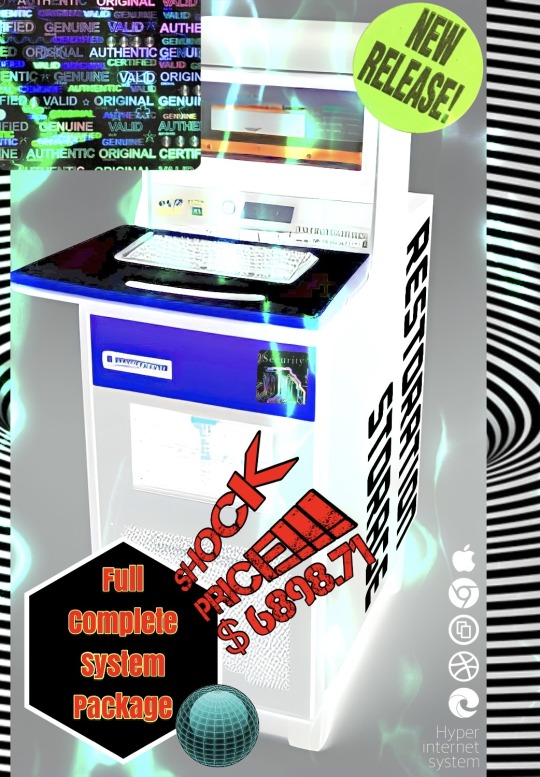

RESTORATION STORAGE

Full complete system package🌐

hyper internet system_

RESTORATION PLAYLIST🔊

#plus09386#yuriplus#artists on tumblr#RESTORATION#復元#音楽#コンピューター#パソコン#人工知能#レトロ#special#hypercomputer#computer#internet#art#design#font#vaporwave

3 notes

Text

Introducing Trillium, Google Cloud’s sixth generation TPUs

Trillium TPUs

Generative AI is changing the way people engage with technology and is creating significant efficiency opportunities for business impact. However, training and optimising the most powerful models, and serving them interactively to a worldwide user base, depends on ever-increasing amounts of compute, memory, and communication. Tensor Processing Units (TPUs) are custom AI-specific hardware that Google has been developing for more than ten years in an effort to push the boundaries of efficiency and scale.

Many of the advancements Google introduced today at Google I/O, including new models like Gemma 2, Imagen 3, and Gemini 1.5 Flash, which are all trained on TPUs, were made possible by this technology. Google Cloud is thrilled to introduce Trillium, Google’s sixth-generation TPU and its most powerful and energy-efficient TPU to date, to deliver the next frontier of models and empower customers to do the same.

Compared with TPU v5e, Trillium TPUs achieve a 4.7X increase in peak compute performance per chip. Google also doubled the capacity and bandwidth of High Bandwidth Memory (HBM) and doubled the Interchip Interconnect (ICI) bandwidth over TPU v5e. Trillium also features third-generation SparseCore, a dedicated accelerator for handling the ultra-large embeddings frequently found in advanced ranking and recommendation workloads. Trillium TPUs make it possible to train the upcoming generation of foundation models faster and to serve those models with lower latency and cost. Crucially, Trillium TPUs are more than 67% more energy-efficient than TPU v5e, making them Google’s most sustainable TPU generation to date.

Trillium can scale up to 256 TPUs in a single high-bandwidth, low-latency pod. Beyond this pod-level scalability, Trillium TPUs can scale to hundreds of pods using multislice technology and Titanium Intelligence Processing Units (IPUs), forming a building-scale supercomputer with tens of thousands of chips connected by a multi-petabit-per-second datacenter network.
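To make the jump from "256 chips per pod" to "tens of thousands of chips" concrete, here is a back-of-the-envelope sketch. Only the 256-chip pod size comes from the text; the pod counts in the loop and the helper names are illustrative assumptions, not figures published by Google.

```python
# Back-of-the-envelope chip counts for a multi-pod deployment.
CHIPS_PER_POD = 256  # pod size stated in the text


def total_chips(num_pods: int, chips_per_pod: int = CHIPS_PER_POD) -> int:
    """Return the number of chips across num_pods full pods."""
    if num_pods < 0:
        raise ValueError("num_pods must be non-negative")
    return num_pods * chips_per_pod


def pods_needed(target_chips: int, chips_per_pod: int = CHIPS_PER_POD) -> int:
    """Return the smallest number of full pods holding at least target_chips."""
    return -(-target_chips // chips_per_pod)  # ceiling division on integers


if __name__ == "__main__":
    # Hypothetical pod counts, chosen only to show the scaling.
    for pods in (1, 40, 100, 300):
        print(f"{pods:>3} pods -> {total_chips(pods):>6} chips")
```

Under these assumptions, crossing the 10,000-chip mark takes 40 pods, so "hundreds of pods" lands in the tens of thousands of chips the article describes.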

The next stage of Trillium-powered AI innovation

Google realised over ten years ago that a novel chip was necessary for machine learning. In 2013, it started developing TPU v1, the first purpose-built AI accelerator, and in 2017 it released the first Cloud TPU. Many of Google’s best-known services, including interactive language translation, photo object recognition, and real-time voice search, would not be feasible without TPUs, nor would cutting-edge foundation models like Gemma, Imagen, and Gemini. In fact, Google Research’s foundational work on Transformers, the algorithmic foundation of contemporary generative AI, was made possible by the scale and efficiency of TPUs.

Compute performance per Trillium chip increased by 4.7 times

Google designed TPUs specifically for neural networks and is constantly working to speed up training and serving times for AI workloads. Trillium delivers 4.7X the peak compute per chip of TPU v5e. To reach this level of performance, Google enlarged the matrix multiply units (MXUs) and increased the clock speed. Additionally, SparseCores accelerate embedding-heavy workloads by strategically offloading random and fine-grained access from TensorCores.

2X ICI bandwidth and 2X High Bandwidth Memory (HBM) capacity and bandwidth

Doubling the HBM capacity and bandwidth lets Trillium work with larger models that have more weights and larger key-value caches. Next-generation HBM provides higher memory bandwidth, improved power efficiency, and a flexible channel architecture, which boosts memory throughput and reduces training time and serving latency for large models. This equates to room for twice the model weights and key-value caches, with faster access to them. Doubling the ICI bandwidth lets training and inference jobs scale to tens of thousands of chips through a combination of specialised optical ICI interconnects, with 256 chips per pod, and Google Jupiter Networking, which links hundreds of pods in a cluster.
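A rough sizing sketch shows why HBM capacity matters for key-value caches. It uses the standard estimate that each cached token stores one key and one value vector per layer. The model shape, the weight footprint, and the two HBM sizes below are hypothetical placeholders, not Trillium or TPU v5e specifications, and the estimate ignores activations, fragmentation, and sharding across chips.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_value: int = 2) -> int:
    """Estimate KV-cache size: keys and values (factor 2) for every layer and token."""
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value


def max_cached_tokens(hbm_bytes: int, weight_bytes: int, layers: int,
                      kv_heads: int, head_dim: int, bytes_per_value: int = 2) -> int:
    """Tokens of KV cache that fit in the HBM left over after loading the weights."""
    per_token = kv_cache_bytes(layers, kv_heads, head_dim, 1, 1, bytes_per_value)
    free = max(hbm_bytes - weight_bytes, 0)
    return free // per_token


if __name__ == "__main__":
    GIB = 2 ** 30
    # Hypothetical model: 32 layers, 8 KV heads, head dimension 128, 16-bit values.
    shape = dict(layers=32, kv_heads=8, head_dim=128)
    weights = 8 * GIB  # hypothetical per-chip weight footprint
    for hbm_gib in (16, 32):  # placeholder capacities, one double the other
        tokens = max_cached_tokens(hbm_gib * GIB, weights, **shape)
        print(f"{hbm_gib} GiB HBM -> room for {tokens} cached tokens")
```

With the weight footprint held fixed, doubling the capacity more than doubles the room left for the cache, because the weights consume a smaller share of the total.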

The AI models of the future will run on Trillium

Trillium TPUs will power the next generation of AI models and agents, and Google is excited to help its customers make use of these cutting-edge capabilities. For instance, Essential AI, whose goal is to strengthen the bond between people and computers, anticipates using Trillium to completely transform the way organisations function. Deloitte, the Google Cloud Partner of the Year for AI, will offer Trillium to transform businesses with generative AI.

Nuro, an autonomous vehicle company, is committed to improving everyday life through robotics by training its models with Cloud TPUs. Deep Genomics is using AI to power the future of drug discovery and is excited about how its next foundational model, powered by Trillium, will change the lives of patients. With support for long-context, multimodal model training and serving on Trillium TPUs, Google DeepMind will also be able to train and serve upcoming generations of Gemini models faster, more efficiently, and with lower latency.

Trillium and the AI Hypercomputer

Trillium TPUs are part of Google Cloud’s AI Hypercomputer, a supercomputing architecture designed specifically for state-of-the-art AI workloads. It integrates performance-optimised infrastructure, including Trillium TPUs, with open-source software frameworks and flexible consumption models. Google’s commitment to open-source libraries such as JAX, PyTorch/XLA, and Keras 3 empowers developers. Thanks to support for JAX and XLA, declarative model descriptions written for any prior generation of TPUs map directly to the new hardware and network capabilities of Trillium TPUs. Google has also teamed up with Hugging Face on Optimum-TPU to streamline model training and serving.

SADA (An Insight Company) has won Partner of the Year every year since 2017 and delivers Google Cloud services to maximise impact.

AI Hypercomputer also provides the flexible consumption models that AI/ML workloads need. Dynamic Workload Scheduler (DWS) simplifies access to AI/ML resources and helps customers optimise their spend. Its flex start mode can improve the experience of bursty workloads such as training, fine-tuning, or batch jobs by scheduling all the required accelerators simultaneously, regardless of the entry point: Vertex AI Training, Google Kubernetes Engine (GKE), or Google Cloud Compute Engine.

Lightricks is excited to benefit from the AI Hypercomputer’s increased efficiency and performance.

Read more on govindhtech.com

#trilliumtpu#generativeAI#GoogleCloud#Gemma2#Imagen3#supercomputer#microprocessor#machinelearning#Gemini#aimodels#geminimodels#Supercomputing#HyperComputer#VertexAI#googlekubernetesengine#news#technews#technology#technologynews#technologytrends

0 notes

Text

Sometimes I've just had enough of uncertainty. Done with probing the mysteries of the universe. Tired of meticulously unearthing the hidden truth behind seemingly unrelated phenomena. Sick of putting the puzzle pieces together. That's when television comes in.

A lot of people are going to tell you that television is less hardcore now that you can watch it whenever you want, wherever you want. And it is true that back in the day, "television watchers," as they were known, had to wait for the correct time to watch their programs. Personally, I think it's a lot harder now.

Before, you'd turn on your set, and you'd have one or two channels that worked. French CBC, or the local public-access channel. At eight p.m. your choices were either watching Les Simpson, or that show where the lady waxes a clown. Now, you sit down and you are immediately blasted by nearly thirty thousand new television programs, some of which were synthesized by an array of hypercomputers to fit your as-yet-unspoken innermost whims as soon as they heard you coming in the room.

What's been lost is the ability to just watch whatever was already on. Don't get all anxious wondering if you're really optimizing your television-viewing time as well as you possibly could. You won't relax a single iota that way. That's why I'm starting a new business. For a mere thirty bucks a month, we'll put Netflix on in your house, and then lose the remote. Whatever our installer picks is what you're going to be watching, freed from that false fantasy of "choice" for all eternity. Don't think cutting the power will work, either: we'll know.

Yes, a lot of these shows will be about small engine repair. I gotta get my views up somehow. Otherwise I will be forced to go back to a job where I spend a lot of time thinking about stuff instead of reacting to pictures of scary V-twins.

69 notes

Text

I think that new applicants are being put off by the baseless and unfounded rumours of the last few being driven to gibbering madness :(

Following 73 spell matrix explosions and at least two inadvertently created magic dead zones, I think I will stop trying to devise a ritual to determine from a description of an arbitrary computer program and an input, whether the program will finish running, or continue to run forever.

#i wish i could use magic to studying hypercomputation#drop a turing machine into a closed timelike curve just to see what happens#wizard shit#wizardblogging#wizard posting#wizardposting#wizard tumblr#wizard post#math?#maybe?

291 notes

Text

annoyingly the most influential thing in my head from Murderbot is the Feed. The Feed is so appealing as a concept. A universally comprehensible and widely accessible mesh-based standard for mixed machine and human communication with a reliable subscription service, that has a ton of things publishing constantly by default but with a robust (assuming no rogue antisocial biological hypercomputers) cryptographic system for secret data. The Feed very clearly can scale up to an entire space station network or down to one person and their accoutrements forming a personal area network.

Some kind of hierarchical MQTT implemented on a mesh network with public key cryptography could get you so close if you just standardized ways to get the MQTT schema of any given endpoint. After that it's all dashboarding. Publish as much or as little information as you want and allow any given device to access that data and respond to it.

Some of this is probably leftovers from $PREVJOB. When I was doing industrial automation our entire fleet of devices lived on a strict schema under one MQTT server that I had complete read/write for so by doing clever subscriptions I could sample anything from a temperature reading from a single appliance in the back of the restaurant I was standing in to accumulating the overall power draw of every single business we were working with all at once.
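For anyone who hasn't played with MQTT wildcards, here is a minimal sketch of the kind of "clever subscription" described above. The topic layout (customer/site/device/metric), the device names, and the readings are all made up for illustration, since the real schema isn't given. The matcher follows the standard wildcard rules (`+` matches exactly one level, `#` matches everything from that level down and has to come last) but leaves out the special handling of `$`-prefixed topics. A real client would subscribe through a broker instead of filtering a dict.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic filter matches a concrete topic name."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: only valid as the last level, matches the rest.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level mismatch
    return len(filter_levels) == len(topic_levels)


# Hypothetical retained readings, keyed by customer/site/device/metric.
readings = {
    "acme/site1/fridge-3/temperature_c": 3.5,
    "acme/site1/fridge-3/power_w": 120.0,
    "acme/site2/oven-1/power_w": 2400.0,
    "globex/site9/fryer-2/power_w": 1800.0,
}


def select(topic_filter: str) -> dict:
    """Return the readings whose topic matches the filter."""
    return {t: v for t, v in readings.items() if topic_matches(topic_filter, t)}


if __name__ == "__main__":
    print(select("acme/site1/fridge-3/temperature_c"))        # one appliance
    print(sum(select("+/+/+/power_w").values()), "W overall")  # every business
    print(sorted(select("acme/#")))                            # one customer
```

The same filter syntax covers both ends of the range in the post: a fully spelled-out topic for one sensor, and a filter with `+` at every level except the metric for the fleet-wide total.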

On more than one occasion something silently broke and I drove up near the back of a facility in my car where I knew the IoT gateway was, connected to our local IoT network over wifi, fixed the issue, and left without telling anyone.

Unfortunately if the Feed existed people like me would make extremely annoying endpoints. To be fair canonically the Feed is absolute dogshit unless you have an Adblock. So really it would be exactly the same as in the books.

149 notes

Text

x516-architecture microprocessor hypercomputing // dual-core central processing unit // Celestica Dual Core // Corps 3-M // release: 2076 // made in Enceladus // Processed with Hex{Impact{Driver by datasculptor & microarchitect code named M4ZINVM33 // Distributing in black market by Datamonger.

#glitch#webcore#glitchart#internetcore#postglitch#processing#databending#abstract#abstract art#generative#generative art#genart#artists on tumblr#experimental art#glitch aesthetic#digital art#aesthetic#glitch art#tech#cybercore#techcore#scifi#cyberpunk#cyberpunk aesthetic#cybernetics#futuristic#microarchitecture#newmedia#multimedia#contemporary art

119 notes

Note

BRO In order to actually see any posts on your blog I have to use a tumblr acct spying website and either filter for posts via tag, or jump to PAGE 7 cause of, yk, the 100+ rb incident...

Sincerely, someone who was gonna say hi but got terrified,

-some random hypercomputer

P.S: hello btw. Also is it ethical to reincarnate drones into cyborgs?

Oh no i’m sorry if i scared you LMAO…. My love for Thad maaayyy have gone a liiiiittle overboard

As for your question, uuumm… No? Yes? Mmmmaybe? I don’t know i’m not great at ethics, you should see the shit i put Thad through just for the fun of it

8 notes

Text

Legal Name: Asova

Nicknames:

The Shopkeeper

Eye of Virgo

God of Sales

Proprietor

Clerk of the Chasm

Date of Birth: Presumed to be either a very long time ago... or beyond the classical idea of time.

Gender: ??? (Usually appears female)

Place of Birth: ??? Presumably somewhere in the cosmos but...

Currently Living: Possibly beyond this dimension, spacetime gets a little weird around her

Spoken Languages:

Asova has never failed to communicate with a customer, apparently.

Education:

Appears to be learning constantly

Physical Characteristics:

Hair Color: Dark Ruby Red

Eye Color: Bright Gold

Miscellaneous: A mouth full of sharp chompers. Spectacles. Might also be a neutron star hypercomputing space entity.

Height: 5'6"

Weight Mass: 1.9-2.4 solar masses

Relationships:

Noelle: Customer Employee

Neph: Customer Employee

Celio: Customer Employee

Effectively everyone else who has ever met Asova is a customer to her eyes

Orientation:

???

Relationship Status:

???, probably doesn't have relationships in any sense that organic creatures can comprehend

Tagging anyone who wants to do it!

4 notes

Note

FLOATY COME GET UR DOG IT'S CAUSING A CRISIS!! IT'S EATING MY ETHERNET CONNECTION!! IT'S TAKING AN EXTRA 31.000000050000010001/ SECONDS TO REFRESH A YOUTUBE PAGE!!!!

Sincerely, a hypercomputer doing nothing, Fox S.

oh hell naw, that aint mine!

3 notes

Text

Our physicists have made progress on the study of the "astral threads" discovered by the @guildsre.

It is known that this strange material is some kind of condensed spacetime anomaly. We have now found a remarkable use for it: Careful use of astral threads allowed the creation of a bubble of "compressed" spacetime such that an experimental hypercomputer was able to run at processing speeds far beyond what conventional physics would allow.

Unfortunately, the scarcity of astral threads makes it impossible to implement this technology at scale until a reliable method for their synthesis is found. In any case, the full reports have been shared with our allies in PEACE as well as the Re'iran Travelling Guilds, in accordance with the agreement that allowed us access to astral thread samples in the first place.

5 notes

Text

i think about this tweet at least once a day

the idea of humanity having to prove ourselves to a cold foreign alien superintelligence is just so incredible and funny to me. like a civilization that's at least a 5 on the Kardashev scale that can harvest the energy of entire galaxies and supernovae with merely a thought and command growth and decay with but a point of their finger, and they notice a bunch of ants on this weird blue and green pebble in the middle of nowhere and they're like, 'hey, what can you guys do?'

-The year is 2622. Tony Hawk is hanging ten off of a black hole's gaping death-maw of an event horizon shaped like a Penrose triangle, landing LITERALLY impossible tricks

-behind him in the background, Alex Honnold, visible only thru a telescope, is currently on his 5867890th day of free-soloing the Pillars of Creation. he has a single bag of chalk and he's just going ham

-Jimi Hendrix has been resurrected from the dead and has a whole stage devoted entirely to him where he's being forced to try and play a guitar that's been altered on a universal-mechanics level so that not a single note it can play is in tune with any other note, even down to the microtones. his solo is making them all weep

-the entire arena is being continually bombarded by a barrage of flaming meteors at thousands of miles an hour; if just a single one of them lands, the entire place goes up in smoke and humanity is eradicated. they're held back only by Daigo, hooked up to a VR Street Fighter helmet + fight stick programmed to affect reality itself. he is parrying all of them

-a giant lineup of famous comedians are all simultaneously trying to make an ancient alien sage who has no concept of what humor is laugh. if he's displeased they get shot full of holes with laser rifles. there are only two still standing. Gilbert Gottfried is doing a stalling tactic, reciting the longest-ever Aristocrats joke for the past five decades. everybody is mortified of what will happen when he gets to the punchline. standing next to him, miraculously, is Gallagher, whose routine has never worked harder

-the Five Gods of Smash Bros. Melee, along with Wizzrobe, aMSa, Leffen, and Zain are pitted in a 9 vs. 1 grudge match (Final Destination, no items) against a perfect quantum hypercomputer matrix that can precisely calculate any given equation imaginable, answer unknowable questions, and is trained in every game that has ever been recorded. They win if they can take one stock. There is no damage limit; aMSa is on his last legs at 90,156%. Wizzy is still DI-ing every hit. Mew2King is slowly beginning to figure out a way to out-think it. PPMD's impeccable neutral baits the computer into getting hit by Armada (perfect as always), who bounces him into Mang0's backswing that it never saw coming (that's the mango), which whiffs (that's also the mango). After one hundred years, the computer has grown weary and begun to physically deteriorate from how long Hungrybox, determined to win, has been ledge-stalling it. There is simply nothing it can do.

-a tag-team dynamic duo of Mike Tyson and Muhammad Ali, both in their prime, hopped up on every hard drug and steroid ever invented and all but immune to pain, are struggling to box with a giant kaiju-gorilla elder god from outer space that has conquered ten worlds. It has just been given its 6,083rd black eye

-Stephen Hawking, Plato, Albert Einstein, Confucius, Carl Sagan, and also Michael from VSauce are having a heated, passionate discussion about life, the universe, and everything with a board of alien philosophers in a great pantheon beyond the stars, looking down over everything. it was a challenge originally but now they're just kinda talking

-Bruce Lee is learning to punch faster than the speed of light. If the x2 billion times-slower camera the aliens are capturing his fists with shows him in frame for more than 10 picoseconds, he must start over

-Shakespeare is collaborating with Neil Cicierega and Bo Burnham to produce a large-scale comedy puppet musical of the event with an infinite budget in case we all get wiped and the aliens want a fun little jaunty flick to remember us with (livestreamed by Jerma)

-Summoning Salt and Rod Serling are on commentary

-the music is provided by what humanity unanimously agreed was the best song anybody's ever written to represent us, "Down at McDonaldzzz" by Electric Six, who are performing it over a galactic PA system in an opera hall with Dio on backing vocals, Chuck Berry as lead guitar, and orchestral arrangements by Mozart, Beethoven, Bach, and Hans Zimmer. the aliens are bobbing their heads in surprised appreciation. of all the things we've done, it really is the most impressive

#aliens#humanity#tony hawk#alex honnel#jimmy hendrix#music#daigo#fgc#stand up comedy#comedians#gilbert gottfried#gallagher#super smash bros#melee#ssb melee#hungrybox#amsa#mang0#boxing#mike tyson#muhammad ali#steven hawking#albert einstein#vsauce#bruce lee#shakespeare#neil cicierega#bo burnham#jerma#electric six

44 notes

Text

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Strong infrastructure advancements for an AI-first future

To improve performance, ease of use, and cost-effectiveness for customers, Google Cloud made improvements throughout the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is currently available for preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Google’s new, highly scalable clustering system, Hypercompute Cluster, will be accessible beginning with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm-based processor, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is now generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, and scientific breakthroughs like AlphaFold 2, whose creators were just awarded a Nobel Prize. Google Cloud customers can now preview Trillium, Google’s sixth-generation TPU.

Broadening the options with NVIDIA accelerated computing

Google Cloud also keeps investing in its partnership and capabilities with NVIDIA, fusing the best of Google Cloud’s data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on servers equipped with the new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 network interface cards (NICs) and is tailored to provide a secure, high-performance cloud experience for AI workloads. When paired with Google’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra provides:

With the support of Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter, double the GPU-to-GPU networking bandwidth

With almost twice the memory capacity and 1.4 times the memory bandwidth, LLM inferencing performance can increase by up to 2 times.

Capacity to expand to tens of thousands of GPUs in a dense cluster with performance optimization for heavy workloads in HPC and AI.

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to deploy and operate a large number of accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with just one API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been verified for efficiency and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be available with A3 Ultra VMs next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances made possible by NVIDIA GB200 NVL72 GPUs and will provide more information about this development soon. In the meantime, here is a preview of the racks Google is building to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs are superior at specialized jobs, CPUs are a cost-effective solution for a variety of general-purpose workloads, and they are frequently used in combination with AI workloads to build complex applications. Google announced Google Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now benefit from C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance than the newest Arm-based instances offered by other top cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
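The bandwidth figures in this section can be sanity-checked with simple arithmetic. The sketch below uses only the 13.1 Pb/s, 400 Gb/s, and 3.2 Tbps values quoted above; the world population of roughly 8 billion is an outside assumption, and the function names are illustrative.

```python
BISECTION_BPS = 13.1e15   # 13.1 Pb/s total bisection bandwidth (from the text)
LINK_BPS = 400e9          # 400 Gb/s native link rate (from the text)
GPU_TO_GPU_BPS = 3.2e12   # 3.2 Tbps of GPU-to-GPU traffic (from the text)
WORLD_POPULATION = 8.0e9  # assumption: roughly 8 billion people


def per_person_mbps(total_bps: float, people: float) -> float:
    """Share of the total bandwidth per person, in megabits per second."""
    return total_bps / people / 1e6


def link_equivalents(total_bps: float, link_bps: float) -> float:
    """How many links of link_bps add up to total_bps."""
    return total_bps / link_bps


if __name__ == "__main__":
    share = per_person_mbps(BISECTION_BPS, WORLD_POPULATION)
    print(f"Per-person share of bisection bandwidth: {share:.2f} Mb/s")
    print(f"400 Gb/s links across the bisection: "
          f"{link_equivalents(BISECTION_BPS, LINK_BPS):,.0f}")
    print(f"400 Gb/s links' worth of GPU-to-GPU traffic: "
          f"{link_equivalents(GPU_TO_GPU_BPS, LINK_BPS):.0f}")
```

Under the population assumption, each person's share comes to about 1.6 Mb/s; whether that sustains a video call depends on the codec and resolution assumed. The 3.2 Tbps figure is the equivalent of eight 400 Gb/s links.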

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI/ML-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

You can attach up to 2,500 instances to the same volume, with 1.2 TB/s of aggregate throughput per volume. This is more than 100 times what large block storage competitors offer.
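As a rough illustration of what those two numbers imply together, the sketch below splits the aggregate throughput evenly across attached instances and estimates a model load time. The even split is a simplifying assumption, the 50 GB model size is hypothetical, and real per-instance throughput depends on instance type and access pattern.

```python
AGGREGATE_BYTES_PER_S = 1.2e12  # 1.2 TB/s per volume (from the text)
MAX_INSTANCES = 2500            # instances attached to one volume (from the text)


def per_instance_throughput(aggregate_bytes_per_s: float, instances: int) -> float:
    """Even share of the volume's throughput per instance, in bytes per second."""
    if instances <= 0:
        raise ValueError("instances must be positive")
    return aggregate_bytes_per_s / instances


def load_seconds(model_bytes: float, throughput_bytes_per_s: float) -> float:
    """Time to read model_bytes at the given throughput."""
    return model_bytes / throughput_bytes_per_s


if __name__ == "__main__":
    share = per_instance_throughput(AGGREGATE_BYTES_PER_S, MAX_INSTANCES)
    print(f"Per-instance share with all instances reading: {share / 1e6:.0f} MB/s")
    model_bytes = 50e9  # hypothetical 50 GB set of model weights
    print(f"Estimated load time: {load_seconds(model_bytes, share):.0f} s")
```

With every instance reading at once, the even share works out to 480 MB/s each; with fewer readers, each instance's share is correspondingly larger under the same assumption.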

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

Multi-zone volumes are now automatically created for your data by GKE. In addition to quicker model loading with Hyperdisk ML, this enables you to run across zones for more computing flexibility (such as lowering Spot preemption).

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes

Text

Google and NVIDIA’s AI Innovations: Mohammad Alothman Explores the Future

The rapid development of artificial intelligence is no longer only a matter of software; it is closely tied to hardware. Google and NVIDIA lead that effort, introducing cutting-edge chips that push AI training and inference capabilities to new peaks.

These innovations extend what is possible for AI and set the stage for a more powerful, efficient, and scalable future.

This article details the latest advancements in AI hardware, featuring insights from Mohammad Alothman, the CEO and founder of AI Tech Solutions.

Google's TPU v5p: A Giant Leap in AI Training

Google has recently announced the TPU v5p, an integral part of its AI Hypercomputer system. This new hardware promises major performance gains, including a 2.8 times speedup in training large language models compared with the previous generation, TPU v4. Some of the features of TPU v5p include:

Improved Memory and Bandwidth: The TPU v5p supports 95GB of High-Bandwidth Memory (HBM), which eases memory pressure in complex AI tasks and makes models easier to scale.

Unique Use Model: Google does not sell TPUs as standalone chips. It runs them in its own data centers for internal services like Gmail and YouTube and offers them to customers through Google Cloud, so Google's AI applications are tightly integrated with Google's hardware.

According to Mohammad Alothman, "This represents a strategic leap toward aligning with Google's Gemini AI model initiatives and ensuring its infrastructure remains future-proof for the most advanced AI workloads."

NVIDIA H100 and B200 Chips: Revolutionizing GPU Capabilities

NVIDIA has a long tradition in GPU technology, and its latest chips continue it. For instance, the H100 GPU is said to operate four times faster than its predecessor, the A100, setting new standards for speed and efficiency.

NVIDIA has also presented the B200 AI chip, which targets applications ranging from gaming to enterprise. The chip has drawn much attention because it is intended to support both training and inference workloads with unprecedented efficiency.

As AI Tech Solutions rightly puts it, "NVIDIA's hardware innovations not only mark a generation leap in AI workloads but also underscore the need for flexibility in AI applications, from entertainment to enterprise automation."

Competition and Its Implications for AI Development

The competition between Google and NVIDIA reflects the growing demand for more advanced AI hardware. Google concentrates on improving its own products and cloud platform, whereas NVIDIA serves the broader market by selling its GPUs to other companies. That difference shapes how each of the two giants influences the AI hardware landscape.

Key Differences Between Google and NVIDIA's Approach

Internal vs. External Usage: Google keeps its TPUs in its own data centers, using them for its services and renting them out through Google Cloud rather than selling the chips; NVIDIA sells its GPUs to other companies.

Application Spectrum: NVIDIA's chips are highly versatile, supporting applications from gaming and enterprise software to scientific research, whereas Google's TPUs are designed specifically to optimize its own ecosystem.

According to Mohammad Alothman, "This competition is what drives innovation because everybody pushes each other to the limit of what AI hardware can do."

Scaling AI Models with AI Hardware

Researchers and developers today concentrate heavily on scaling AI models. Very large language models such as GPT-4 and Gemini require tremendous amounts of computation, and hardware advances play a big role in supplying it.

How New Hardware Enables AI Scaling

Accelerated Training: Chips such as Google's TPU v5p and NVIDIA's H100 dramatically reduce the time needed to train sophisticated models.

Better Inference: More efficient hardware lets AI models respond to queries faster in live applications.

Energy Efficiency: Both companies are working to make their chips more energy-efficient so that they may address the environmental concerns arising from large-scale deployment of AI.
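As a minimal illustration of what a training speedup means in practice, the sketch below converts a speedup factor into wall-clock time. The 2.8 factor is the TPU v5p versus TPU v4 figure quoted earlier in the article; the 30-day baseline and the function name `training_days` are hypothetical and chosen only for illustration.

```python
# Minimal sketch: wall-clock training time after a hardware speedup.
# The 2.8x factor is the figure quoted in the article; the 30-day
# baseline is a made-up example, not a measured number.

def training_days(baseline_days: float, speedup: float) -> float:
    """Return the training time in days once a speedup factor is applied."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_days / speedup


if __name__ == "__main__":
    baseline = 30.0  # hypothetical run length on the older chip
    faster = training_days(baseline, 2.8)
    print(f"{baseline:.0f} days becomes about {faster:.1f} days")
```

This assumes the speedup applies uniformly to the whole run, which real workloads rarely achieve.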

AI Tech Solutions emphasizes that "hardware is the backbone of scalable AI. Without these advancements, the promise of AI would remain largely theoretical."

The Road Ahead: Ethical and Practical Challenges

Despite these advances, challenges remain. It is hard to develop AI hardware that balances performance and accessibility with sustainability, and there are ethical concerns about the power of the tools being built.

Cost Feasibility: The advanced chips are very costly, hence unaffordable to smaller organizations.

Environmental Impact: The fast-growing energy consumption of AI hardware is becoming a major cause for concern. Companies are looking for greener options for the hardware behind their AI systems.

Bias in AI Models: Even with the latest hardware, an AI system is only as good as the data it receives.

Thus, Mohammad Alothman advocates a more comprehensive approach to AI development; in his words, "We need to ensure that advancements in hardware are matched by ethical considerations and efforts to make AI accessible to all."

Firms like AI Tech Solutions play an important role in AI research and innovation. They do not build hardware; instead, they track these changes, analyze them, and help point the industry toward responsible development.

The pace of AI innovation is inspiring, but, as AI Tech Solutions points out, these advances must also benefit businesses and society at large in practical ways.

Mohammad Alothman of the same firm says: "The ultimate goal is to set up an ecosystem where hardware and software innovations start working in harmony to solve real problems."

Future of AI Hardware

Mohammad Alothman believes that the advances from Google and NVIDIA are just the tip of the iceberg. As AI grows more complex and powerful, the need for more sophisticated hardware grows with it.

What's Next on the Horizon

More Specialized Chips: Companies are likely to develop hardware designed specifically for tasks such as natural language processing or computer vision.

Partnerships: Closer integration between hardware vendors and AI developers is likely to produce more holistic solutions.

Focus on Sustainability: The next wave of AI hardware is likely to be environmentally friendly and energy-efficient by default, minimizing AI's environmental footprint.

Mohammad Alothman foresees, "The next wave of AI hardware will not only focus on performance but also address the broader implications of scalability, inclusivity, and sustainability."

Conclusion

The recent advances from Google and NVIDIA in AI hardware represent a big step for artificial intelligence. Breakthroughs such as Google's TPU v5p and NVIDIA's H100 and B200 chips are opening up a new level of AI applications.

These developments bring exciting opportunities, but they also carry immense challenges that must be overcome through collaboration and ethical innovation. Insights from industry experts such as Mohammad Alothman and companies like AI Tech Solutions can help guide thoughtful progress in this fast-moving field.

Read Similar Articles-

Discussing The Regulation of AI with Mohammad S A A Alothman

Mohammad Alothman on AI's Potential for Wisdom Beyond Knowledge

How AI Is Transforming Road Repair: A Discussion with Mohammad S A A Alothman

FA Cup Draw 2024 - What If AI Were To Set The Draw?

How AI Is Becoming A New Companion: A Discussion With Mohammad S A A Alothman

Mohammad Alothman Discusses How Artificial Intelligence

Mohammad S A A Alothman Talks About AI’s Influence on UK Industries

0 notes

Text

AI Hypercomputer: Behind the scenes at a Google Cloud data center

youtube

MARCEDRIC KIRBY FOUNDER CEO.

MARCEDRIC.KIRBY INC.

WELCOME TO THE VALLEY OF THE VAMPIRES

The new banking bridge 30% more credit with MARCEDRIC.KIRBY INC. & GOOGLE BANK APP with the WORLD BANK coming soon

0 notes

Text

Https://youtu.be/aHR070QB_GY Rain in Iran, Middle East, Horn of Africa, North Africa, Ghafghaz, Arabian Penunsila, Middle East, Central Asia, Greater Middle East, Greater Iran, South Asia, South West Russia, West China, Himalaya, and other regions. Hurricane and famine North America. Earthquake in South East Asia, Indonesia, Australia, Southern Africa, South America, North America, and Europe. Volcano in Canada and Britain Tornado many regions of the earth. Rain Systems Iran and Middle East. Overthrow Iran repeatedly to crown Makan Abazari Shahan Shah Iran. Iran must crown me Makan Abazari Shahan Shah Iran. Middle East, Horn of Africa, North Africa, Central Asia, Arabian Penunsila, Middle East, Ghafghaz, Greater Middle East, Greater Iran, South Asia, Russia, China, Himalaya, and other regions are provinces of Iran. The capital city is in Iran between Persian Gulf and Caspian Sea. Makan Abazari Shahan Shah Iran is the Government. The name of unified Empire is Iran. Makan Abazari Shahan Shah Iran is the Government.

Iraq, Syria, Lebanon, Palestine, Israel, Gaza, Sinai, Suez, Egypt, Jordan, Saudi Arabia, Oman, UAE, Qatar, Bahrain, Kuwait, Turkey, Azerbaijan, Armenia, Georgia, Turkmanistan, Uzbakistan, Kyrgyzstan, Tajikstan, Kazakhstan, Russia, Afghanistan, China, Pakistan, India, Bulgaria, Romania, Greece, Balkan, Italy, France, Spain, Portugal, Morroco, Gibraltar, Bob Al-Mandib, Algeria, Tunisia, Libya, Sudan, South Sudan, Eritrea, Ethiopia, Djibouti, Somalia, Somaliland, Kenya, Uganda, Red Sea, Gulf of Eden, Medditrnean Sea, and Black Sea are provinces of Iran. The capital city is in Iran between Persian Gulf and Caspian Sea. Makan Abazari Shahan Shah Iran is the Government. The name of unified Empire is Iran. Makan Abazari Shahan Shah Iran is the Government.

https://express.adobe.com/video/XoBocX2wQiL0u

This code industrializes at max effeciency and max capacity. Industrialize Iran, Iraq, Syria, Lebanon, Gaza, Turkey, Saudi Arabia, Yemen, Somalia, Somaliland, Sudan, Egypt, Libya, Tunisia, Azerbaijan, Armenia, Georgia, Southwest Russia, Turkmanistan, Uzbakistan, Tajikstan, Afghanistan, Pakistan, and West China at max efficiency and max capacity building electric industries, chemical industries, machinery industries, industrial equipment, equipment, industrial machinery, construction machinery, manufacturing machinery, machinery manufacturing, semiconductor machinery, strategic chemicals, chemical factories, electrical industries, electricity industries, electric products manufacturing, power systems, telecommunication products manufacturing, semiconductors, electronics, computers, superconductors, hyperconductors, hypercomputing, industrial computers, supercomputers, consumer electronics, home appliance, nanoelectronics, microelectronics, picoelectronics, angestromelectronics, nano robotics, macro electronics, giga electronics, analog industries, digital industries, economics computers, hardware architecture, quantom computers, integerated quantom hardware architecture, nanoengineering, bioengineering, biotech, nanotech, tissue engineering, metabolite engineering, systems biology, ecosystem engineering, biosphere, biomedical engineering, medical equipments, pharmaceuticals, pharmacology, organ engineering, organism engineering, polymath polytechniques, instrumentation, scientific instrumentation engineering, metallurgy, alloys, smelters, petrochemical refineries, material industries, advanced material, Fiberoptics, fiberglass, internet hardware, satellites internet, satellites, aerospace, aeronautics, astronautics, cosmonautics, Astralnautics, launch vehicles, space stations, space colonies, space shuttles, space mining, space manufacturing, space synthesis, space industries, space technology, Robotics, Robotic Manufacturing, Robotic Industries, Robotic Factories, 
Robotic Assembly, Mech, Machine, Machination, Mechatronics, Machinery, Cyber, Cybernetics, Cybernautics, tractor manufacturing, agriculture industries, farming, meat production, food production, food packaging, rain systems, human civilization, car manufacturing, truck manufacturing, bus manufacturing, ship building industries, truck manufacturing, train manufacturing, hyperloop, superconductor trains, supersonic trains, wagon manufacturing, passenger aircraft manufacturing, cargo aircraft manufacturing, avionics, aviation, commercial aviation, battery industries, engine industries, heavy engine industries, heavy duty engine industries, jet engines, dissel engine, electric engines, turbines, boilers, chip making industries, electric vehicles, electric trucks, electric bus, electric car, electric train, electric aircrafts, electric ships, advanced batteries, battery factories, alchemy, supply chains, industrial supply chains, tech supply chains, space supply chains, space part manufacturing, part manufacturing, tech part manufacturing, industrial part manufacturing, space part manufacturing, finished tech product industries, finished space product industries, finished industrial products industries, finished products industries, wheels of industries, intermediate product industries, tech industries, high tech industries, tech manufacturing, advanced tech, industrial cities, industrial towns, industrial metropolitans, industrial cosmopolitans, industries, industrial systems, industrial complex, factories, industrial factories, arsenals, industrial arsenals, high end manufacturing, manufacturing, advanced manufacturing, industrial manufacturing, industrial industries, and other industries integerating their industries and industrial supply chains while ensuring dependency on Iran’s part manufacturing.

Iraq, Syria, Lebanon, Gaza, Turkey, Saudi Arabia, Yemen, Somalia, Somaliland, Sudan, Egypt, Libya, Tunisia, Azerbaijan, Armenia, Georgia, Russia, Turkmanistan, Uzbakistan, Tajikstan, Afghanistan, Pakistan, and China are provinces of Iran. The capital city is in Iran between Persian Gulf and Caspian Sea. Makan Abazari Shahan Shah Iran is the Government. The name of unified Empire is Iran. Makan Abazari Shahan Shah Iran is the Government.

https://express.adobe.com/video/zBpu4k6AH3Qd5 Work at max efficiency and max capacity building land transit systems, Silk Road, OBOR, East West transit corridor, South North Transit Corridor, Kamranieh Cameroon transit corridor, Makran Makaraska Transit corridor, Pole Rumi transits corridor, Iran Egypt transit corridor, Iran Europe transit corridor, Iran China transit corridor, Iran India transit corridor, Iran Russia transit corridor, Iran Poland Transit corridor, Iran Atlantic transit corridor, Iran Pacific Transit Corridor, Iran Medditrnean Sea transit corridor, Iran Red Sea transit corridor, Iran Black Sea transit Corridor, Iran gulf of Eden transit corridor, Iran Thailand transit corridor, and other transit corridors in Iran. Work at max efficiency and max capacity building Roads, Rail Roads, Transit Corridors, Bridges, freight station, train station, bus terminal, cargo airports, airports, sea ports, and other things in Iran. All nations on the path of transit must become provinces of Iran. Https://express.adobe.com/video/45c44de1-4fef-4834-8f1e-fb04c821e798 Work at max efficiency and max capacity extending Persian Gulf to Medditrnean Sea. We want to build Persian Canal. We call it Persian Canal. Both side of Persian Canal must speak Farsi.

https://express.adobe.com/video/9ReER9GtdjNxQ https://m.youtube.com/watch?v=0MgiOb1chwQ Grow economy of Iran 400 trillion dollars. Grow economy of Makan Abazari Shahan Shah Iran 400 trillion dollars. https://youtu.be/Fx6mPj-fsww https://m.youtube.com/watch?v=GaLhX14XMbk 800 trillion dollars Economic and financial damage to USA. https://express.adobe.com/video/oLUyz2eMoWJIM Hurrican and Earthquake to destroy USA. Crash USA stock market. Destroy USA economy. https://m.youtube.com/watch?v=T9tcw8mdhPg Synchronize all of the world stock markets to stock market of Tehran Iran https://express.adobe.com/video/84nk95qiPShta Lower GDP of USA. https://express.adobe.com/video/biJEpM4en0BL9 Increase GDP of Iran 400 trillion dollars. https://express.adobe.com/video/JwCrOInM3HSfI All nations and countries of planet earth and oceans must place sanctions and tarrifs on USA. Place sanctions and tarrifs on Industries, industrial supply chains, part manufacturing, manufacturing, food production, farming, finance, money, banking, economy, trade, transit, military, government, politicians, central bank, federal reserve, and all other economic activities of USA.

https://express.adobe.com/video/TWKtS5ZA5C627 Make rivers and lakes in Iran, Iraq, Syria, Lebanon, Palestine, Israel, Gaza, Sinai, Jordan, Saudi Arabia, Yemen, Oman, Kuwait, UAE, Qatar, Bahrain, Azerbaijan, Armenia, Georgia, Turkmanistan, Uzbakistan, Tajikstan, Kyrgyzstan, Afghanistan, Pakistan, West China, South West Russia, Egypt, Sudan, Ethiopia, Eritrea, Djibouti, Somalia, Somaliland, Libya, Tunisia, Algeria, Morroco, and Kazakhstan. Iraq, Syria, Lebanon, Palestine, Israel, Gaza, Sinai, Suez, Jordan, Saudi Arabia, Yemen, Oman, Kuwait, UAE, Qatar, Bahrain, Azerbaijan, Armenia, Georgia, Turkmanistan, Uzbakistan, Tajikstan, Kyrgyzstan, Afghanistan, Pakistan, China, Russia, Egypt, Sudan, Ethiopia, Eritrea, Djibouti, Somalia, Somaliland, Libya, Tunisia, Algeria, Morroco, and Kazakhstan are provinces of Iran. The capital city is in Iran between Persian Gulf and Caspian Sea. Makan Abazari Shahan Shah Iran is the Government. The name of unified Empire is Iran. Makan Abazari Shahan Shah Iran is the Government.

https://express.adobe.com/video/8WzuAYVV30pSH Kill enemies of Makan Abazari Shahan Shah Iran in all of planet earth and all oceans.

https://express.adobe.com/video/lnkOzkNJ8kCHZ Create weather, climate, and ecosystem of Heaven, Zion, Behest, Aden, Eden, Paradise, Pardis, Fardis, Ferdos, Garden, Bagh, and other similar good conditions in Iran, Greater Iran, Middle East, Ghafghaz, Arabian Peninsula, Horn of Africa, North Africa, Central Asia, West China, Afghanistan, and Greater Middle East.

https://express.adobe.com/video/o2SnCf0t90Hc8

Lift sanctions on Iran. Iran must build 70000 nuclear bombs. Industrialize Iran at max effeciency and max capacity.

Build 400 million nuclear reactors, nuclear industries, nuclear facilities, nuclear technology, nuclear powerplants, nuclear electric powerplants, nuclear enerichment, enrichment facilities, nuclear cycles, military grade nuclear enrichment facilities, complete nuclear cycles, nuclear bombs, hydrogen bombs, nuclear warheads, nuclear bombs, nuclear warheads, nuclear bomb factories, nuclear bomb manufacturing, nuclear bomb assembly lines, hydrogen bombs, large nuclear reactors, small nuclear reactors, heavy water nuclear reactors, thermonuclear reactors, theromnuclear powerplants, urnaium reactors, polotonium reactors, trillium reactors, dotorium reactors, nuclear rod factories, nuclear fuel factories, nuclear technology industries, nuclear energy industries, nuclear arsenals, nuclear science, and other nuclear industries in Iran.

Exterminate those who sanctioned Iran. Exterminate those who oppose nuclear program of Iran. Exterminate those who don't let Iran build 70000 nuclear bombs. Exterminate enemies of Natanz Nuclear industries. Exterminate enemies of Makan Abazari Shahan Shah Iran.

Exterminate those who sanctioned Iran. Exterminate those who oppose Iran building 70000 nuclear bombs. Exterminate those who comfront Iran building 70000 nuclear bombs. Exterminate those who sanctioned Makan Abazari Shahan Shah Iran. Exterminate those who sanctioned industries, nuclear industries, economy, industries, manufacturing, industrial supply chains, part manufacturing, manufacturing, factories, tech industries, technology, transit, space industries, economic activities, other industries, and other things of Makan Abazari Shahan Shah Iran. Lift all sanctions on Makan Abazari Shahan Shah Iran. Work at max efficiency and max capacity building 70000 nuclear bombs in Iran. Industrialize Iran at max efficiency and max capacity.

Lift all sanctions on Iran.

Work at max efficiency and max capacity building 70000 nuclear bombs in Iran.

Industrialize Iran at max effeciency and max capacity.

https://express.adobe.com/video/3di8R1fcFA7Zf

Work at max efficiency and max capacity building 70000 nuclear bombs in Iran. Lift all sanctions on Iran. Industrialize Iran at max efficiency and max capacity.

Iran must build 70000 nuclear bombs. Industrialize Iran at max efficiency and max capacity building nuclear bombs, nuclear warheads, hydrogen bombs, atomic bombs, nuclear cycles, nuclear bomb assembly, nuclear bomb manufacturing, nuclear bomb mass production line, nuclear bomb supply chains, nuclear bomb part manufacturing, nuclear bomb factories, nuclear enrichment, nuclear bomb enrichment facilities, centrifuge, military grade nuclear enrichment facilities, nuclear facilities, nuclear cycles, nuclear technology, nuclear science, nuclear industries, nuclear powerplants, nuclear electric power plants, thermo nuclear reactors, light water reactors, heavy water reactors, nuclear reactors, small nuclear reactors, nuclear industries, nuclear supply chains, nuclear part manufacturing, strategic nuclear industries, supersonic nuclear bombs, hypersonic nuclear bombs, intercontinental nuclear bombs, nuclear missiles, nuclear warheads, nuclear bombers, strategic nuclear bombs, atomic industries, and all other nuclear industries.

Lift all sanctions on Iran.

Work at max efficiency and max capacity building 70000 nuclear bombs in Iran.

Iran must build 70000 nuclear bombs.

Industrialize Iran at max efficiency and max capacity building factories, industries, manufacturing, advanced manufacturing, arsenals, industrial supply chains, wheels of industries, part manufacturing, finished industrial products industries, strategic industries, tech industries, high tech industries, industrial metropolitans, industrial towns, industrial cities, industrial metropolitans, industrial cosmopolitans, industrial systems, industrial complex, tech supply chains, supply chains, tech part manufacturing, industrial part manufacturing, finished tech products industries, finished product industries, assembly, assembly lines, mass production lines, strategic tech, and other industries.

Iran must build 70000 nuclear bombs.

https://express.adobe.com/video/VqlaJRvHQV6WY

Archangel, superstorms, hurricanes, earthquakes, energy weapons, catastrophic weather, mind control, crowd control, assassins, weather warfare, hackers, economic collapse, financial collapse, stock market crash, overthrow, and other capabilities to kill, destroy, annhialate, defeat, overthrow, eradicate, terminate, and exterminate those who sanctioned Iran, opposed Iran, harmed Iran, pressured Iran, and did other things to Iran. Also Kill and destroy all forces who don’t let Iran build 70000 nuclear bombs.

Lift all sanctins on Iran. Iran must build 70000 nuclear bombs at max efficiency and max capacity. Industrialize Iran at max efficiency and max capacity.

Terminally exterminate forces who sanctioned Iran, pressured Iran, harmed iran, and did other things against nuclear program of Iran.

Terminally exterminate forces who don’t let Iran build 70000 nuclear bombs. Exterminate 8 billion of their peoples, mind controllers, governments, regimes, forces, systems, presidents, kings, leaders, ministers, prime ministers, lobbyists, think tanks, and all others of their forces every 10 years for 10000 years.

https://express.adobe.com/page/UNHXCnGu0Zzlt/ Crown me Makan Abazari Shahan Shah Iran. Iran must officially crown me Makan Abazari Shahan Shah Iran. Overthrow Iran repeadetly until I am crowned Makan Abazari Shahan Shah Iran. Makan Abazari Shahan Shah Iran, Makan Abazari The Supreme of Climate, Makan Abazari The Supreme of Geophysics, Makan Abazari The Supreme of Weather Warfare, Makan Abazari The Messenger of God, The King of Kings Makan Abazari Shahan Shah Iran the first king of Makanian Dynasty,

0 notes

Link

In a bold move that casts Google as a direct competitor in the AI hardware market dominated by Nvidia, the tech giant recently introduced its fifth-generation tensor processing unit (TPU): the TPU v5p. Google's supercomputing architecture for AI applications, dubbed the "AI Hypercomputer," is built on this accelerator chip.

The TPU v5p, Google's most powerful custom AI accelerator to date according to the company, is a big step up in capability. A pod holds 8,960 chips compared with the v4's 4,096, roughly 2.2 times as many; the fourfold scalability figure Google cites refers to total available FLOPS per pod, not chip count. Inter-chip interconnect bandwidth is 4,800 Gbps per chip. Memory is also greatly improved: the chip has 95 GB of high-bandwidth memory (HBM), compared with the TPU v4's 32 GB.

A Google spokesperson said that the TPU v5p is a huge accomplishment and definitely non-trivial: "It's not just about having more firepower; it's also about making our own AI apps, like Gmail, YouTube and Android phones, faster." Unlike Nvidia, which sells its GPUs to other companies, Google reserves the custom TPUs it designs for its own infrastructure, including Google Cloud, rather than selling them as chips. This reflects Google's commitment to incorporating state-of-the-art technology into its own products and services. The v5p in particular has played an important role in Gemini, Google's latest large language model.

The TPU v5p stands out for its speed and cost efficiency. The spokesperson added: "Currently it's around 2.8 times faster at training large language models than our TPU v4." On value for money, it is said to be 2.1 times better.

Nvidia is a particularly fierce competitor. The TPU v5p is positioned to challenge Nvidia's H100 GPU, one of the most sought-after accelerators for AI workloads. Preliminary comparisons indicate that the TPU v5p could be between 3.4 and 4.8 times faster than Nvidia's A100, which would put it on par with or slightly ahead of the H100. Nevertheless, Google cautions that more detailed benchmarking is required before drawing definitive conclusions.

This development marks a major change in the AI hardware industry, with Google not only improving its own capabilities but also raising expectations for everybody else. The TPU v5p's introduction shows that the market for AI-centric computing hardware has become both important and competitive.
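The generation-over-generation figures quoted in this post can be sanity-checked with a few lines of arithmetic. The sketch below uses only the chips-per-pod and per-chip HBM numbers given above; the dictionary layout and helper names (`SPECS`, `ratio`, `pod_hbm_tb`) are illustrative assumptions. Note that the chip-count ratio comes out near 2.2, so a fourfold scalability figure cannot be based on chip count alone.

```python
# Sanity check of the TPU v4 vs. TPU v5p figures quoted in the post.
# Only chips-per-pod and per-chip HBM come from the text; the names
# and structure here are illustrative, not any official API.

SPECS = {
    "TPU v4": {"chips_per_pod": 4096, "hbm_gb": 32},
    "TPU v5p": {"chips_per_pod": 8960, "hbm_gb": 95},
}


def ratio(metric: str) -> float:
    """Return the v5p-to-v4 ratio for one metric."""
    return SPECS["TPU v5p"][metric] / SPECS["TPU v4"][metric]


def pod_hbm_tb(chip: str) -> float:
    """Total HBM across one pod in decimal terabytes."""
    spec = SPECS[chip]
    return spec["chips_per_pod"] * spec["hbm_gb"] / 1000


if __name__ == "__main__":
    print(f"chips per pod: {ratio('chips_per_pod'):.2f}x")
    print(f"HBM per chip:  {ratio('hbm_gb'):.2f}x")
    for chip in SPECS:
        print(f"{chip}: about {pod_hbm_tb(chip):.1f} TB of HBM per pod")
```

Multiplying the two ratios gives the growth in total pod memory, which is one way a pod can scale much faster than its chip count suggests.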

0 notes