#Data Protection Act


Text

Data Protection Act attacks RTI, putting democracy and transparency in danger; fine of 500 crore to be imposed

Delhi News: The Digital Personal Data Protection Act, 2023, brought in by the Modi government at the Centre, is being seen as a major step toward weakening the Right to Information Act (RTI Act, 2005). Under this new law, the government can gain the right to conceal personal data, which has opened the way to imposing heavy fines on the investigative journalists and RTI activists who expose corruption…

#500 Crore Penalty#bank fraud#Corruption Policies#Data Protection Act#Digital Data Processing#Investigative Journalism#Modi government#personal data#Prashant Kumar#Ration Card Fraud#rti act 2005#Supreme Court#Voter List Manipulation

0 notes

Text

Digital Personal Data Protection Act: Shaping India’s AI-driven fintech sector

The Data Protection Act is crucial in shaping India’s evolving AI-driven fintech sector. With increasing reliance on digital technologies, the act aims to safeguard personal data and ensure privacy in financial transactions. It balances innovation and consumer protection, addressing the challenges posed by AI, while fostering trust and security in India’s rapidly growing digital economy.

0 notes

Text

India's DPDPA: What you need to know

Introduction: Provide a compelling introduction to the topic in this section. Explain that India's Digital Personal Data Protection Act (DPDPA), a key piece of legislation, addresses privacy and security issues in a digital age where personal data is shared and processed constantly.

Background to DPDPA: Dig deeper into the history of the DPDPA. Discuss the history of India's data protection laws, including any gaps or previous attempts that led to a comprehensive law. Explain why the proliferation of technologies and the rise of data-driven business necessitated the legislation.

DPDPA Key Provisions: The section should include a detailed breakdown of all the DPDPA's essential components. Each provision should be discussed in detail.

Data processing principles: Explain principles that guide lawful processing of data, such as transparency, purpose limitation and data minimization.

Rights of data subjects: Discuss rights such as the right of individuals to access and correct inaccurate data and the right to erasure.

Data breach notification obligations: Explain the obligations for organizations to promptly and transparently report data breaches.

Explain the DPDPA's rules on international data transfers.

Data protection officers (DPOs): Discuss their role in ensuring that the DPDPA is adhered to and the required qualifications for this position.

Impact of the DPDPA on Business: Give a detailed analysis of the DPDPA's impact on businesses in India. Discuss the burden of compliance, possible financial consequences, and the operational changes required. Discuss how organizations can adjust their data handling practices in order to comply with the DPDPA and avoid penalties.

Comparison to GDPR: The purpose of this section is to provide a detailed comparison between the DPDPA and the European Union's General Data Protection Regulation (GDPR). Compare the similarities and differences between the DPDPA and GDPR, including data subject rights and processing principles, as well as jurisdiction and enforcement. Talk about how companies operating in India and Europe need to navigate the dual regulatory frameworks.

Challenges & Concerns: Examine the challenges and concerns relating to the DPDPA. Discussions can include issues like compliance complexity, data localization requirements, and possible conflicts with other laws or regulations. Use real-world case studies or examples to illustrate the challenges.

The Data Protection Board's Role: Describe the Data Protection Board of India and its functions. Describe the role of the Board in enforcing the DPDPA. This includes investigating data breaches, performing audits and issuing sanctions. Discuss the possible impact of the Board on data protection in India.

The Road ahead: A look at the future of Indian data protection. Discuss the expected developments such as updates to DPDPA and evolving technologies in data privacy. Analyze how the DPDPA could impact India's digital industry, innovation and international data trade agreements.

Conclusion: Reiterate the main points of the article, and emphasize how important it is for individuals, organizations, and businesses in India to understand and comply with the DPDPA. Encourage readers to keep up to date with data protection issues and to adapt proactively to an ever-changing landscape.

#dpdpa#digital data protection#data protection act#personal data protection act#digital personal data protection act

0 notes

Text

How to Ensure Data Protection Compliance in Ghana

Data Protection

Data protection refers to the rules and practices put in place to guard against abuse, unauthorized access, and disclosure of sensitive personal information. Securing the data is crucial in today's increasingly digital and interconnected world, where enormous amounts of data are collected and shared, to safeguard individual privacy and win over customers, clients, and other stakeholders.

The basic goal of data protection is to make sure that data is handled, gathered, and stored securely and legally. To prevent cyberattacks, data breaches, and the unauthorized use of information, numerous organizational, technological, and legal procedures must be put in place.

Ghana's Data Protection Act: The Data Protection Act was passed in 2012 to regulate the processing of personal data. It also created the Data Protection Commission to oversee compliance with data protection rules.

The scope and applicability of the Act: The Act applies to all processors and data controllers operating in Ghana, regardless of their size or industry.

Penalties for non-compliance: Serious infractions of The Data Protection Act may result in jail time, fines, or other sanctions.

The Fundamental Ideas in Data Protection:

Consent and purpose limitation: Obtaining a person's express consent before gathering any personal information, and using the data only for the purposes for which it was collected.

Data minimization and accuracy: Keeping only the information that is necessary while making sure it is up to date and accurate.

Information Security and Storage Limitations: Limiting the amount of time that data is retained and putting robust security measures in place to prevent unauthorized access, disclosure, or loss of information.

Personal Rights and Access: Upholding individuals' privacy rights to request access to, correction of, and erasure of their data.
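As a rough illustration of the data minimization and storage limitation ideas above, the following Python sketch keeps only the fields a stated purpose needs and flags records that have been held longer than a retention period. The field names, the purpose-to-fields mapping, and the 365-day retention period are all assumptions made for the example; the Act does not prescribe them.

```python
from datetime import date, timedelta

# Hypothetical mapping of processing purposes to the fields they need.
ALLOWED_FIELDS = {
    "delivery": {"name", "address", "phone"},
    "newsletter": {"name", "email"},
}

# Hypothetical retention period; pick one that fits your own legal basis.
RETENTION = timedelta(days=365)


def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of the record with only the fields the purpose needs."""
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def is_expired(collected_on: date, today: date) -> bool:
    """True when the record has been held longer than the retention period."""
    return today - collected_on > RETENTION


# Made-up example record.
customer = {
    "name": "Ama Mensah",
    "email": "ama@example.com",
    "address": "12 Ring Road, Accra",
    "phone": "+233 20 000 0000",
    "national_id": "GHA-000000000-0",
}

print(minimize(customer, "newsletter"))
print(is_expired(date(2023, 1, 1), today=date(2024, 6, 1)))
```

Tying each purpose to an explicit list of fields makes it harder for extra data, such as the national ID here, to drift into processes that do not need it.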

Ensuring Data Protection Compliance:

Appointing a Data Protection Officer: Appointing a Data Protection Officer (DPO) who will be responsible for overseeing data protection practices and ensuring compliance throughout the organization.

Implementing Data Protection Impact Assessments: Conduct assessments regularly to identify and resolve any potential threats to and vulnerabilities in data security.

The implementation of security measures: Encryption, access control, and firewalls are all security measures that are put in place to safeguard data from attacks and other security lapses.

Training for employees on data protection: Educating staff members on the fundamentals of data protection policies, practices, and standards to promote a culture of compliance.
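The access control measure mentioned above can be sketched in a few lines of Python. This is a toy, in-memory example under stated assumptions: the `RecordStore` class, the user and record identifiers, and the shape of the audit log are all hypothetical, and a real system would rely on a database and an identity provider instead.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RecordStore:
    """Hypothetical in-memory store with per-record read permissions."""
    records: dict      # record_id -> record contents
    permissions: dict  # record_id -> set of user ids allowed to read it
    audit_log: list = field(default_factory=list)

    def read(self, user_id: str, record_id: str) -> dict:
        allowed = user_id in self.permissions.get(record_id, set())
        # Log every attempt, including refused ones, so misuse can be reviewed.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "record": record_id,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{user_id} may not read {record_id}")
        return self.records[record_id]


store = RecordStore(
    records={"r1": {"name": "Kofi"}},
    permissions={"r1": {"nurse_a"}},
)

store.read("nurse_a", "r1")       # permitted
try:
    store.read("clerk_b", "r1")   # refused, but still logged
except PermissionError as error:
    print(error)

# Refused attempts are the entries worth reviewing first.
print([entry for entry in store.audit_log if not entry["allowed"]])
```

Logging refused attempts as well as successful ones is a deliberate choice here: it gives the organization something to investigate when someone tries to open a record they have no reason to see.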

Responding to and reporting a data breach:

Planning the Response to a Data Breach: Create a thorough plan to respond to data breaches quickly and successfully.

Notifying the appropriate parties and those affected: In the event of a breach, notify the Data Protection Commission and the people affected in order to reduce the risk.

Future Data Breach Mitigation: It is possible to enhance data security and prevent future security breaches by using the lessons learned from past instances.

Data Transfer and Cross-Border Compliance:

When transferring data outside of Ghana, make sure the individuals concerned have consented and that the data is transferred securely.

Putting mechanisms such as Standard Contractual Clauses (SCCs) in place provides adequate safeguards for data that is transferred across borders.

Building customer trust is key to data protection, business prosperity, and profitability:

Loyalty and Trust: Demonstrating a dedication to data security to win clients' trust and loyalty.

Avoiding legal consequences: Respecting the rules on data protection will help you avoid costly legal penalties and reputational damage.

Reputation management: Keeping your business's reputation intact by safeguarding consumer data and responding to data breaches.

Conclusion:

Data protection in Ghana is a continuing journey. Establishing a more secure digital environment and safeguarding the fundamental right to privacy for all Ghanaians requires cooperation between the government, corporations, and people. By remaining vigilant and proactive in addressing data privacy issues, Ghana can establish itself as a responsible and reliable member of the global digital economy.

#data protection in Ghana#data protection ghana#data privacy laws#personal data security#data protection act

0 notes

Text

Ad-tech targeting is an existential threat

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in TORONTO on SUNDAY (Feb 23) at Another Story Books, and in NYC on WEDNESDAY (26 Feb) with JOHN HODGMAN. More tour dates here.

The commercial surveillance industry is almost totally unregulated. Data brokers, ad-tech, and everyone in between – they harvest, store, analyze, sell and rent every intimate, sensitive, potentially compromising fact about your life.

Late last year, I testified at a Consumer Financial Protection Bureau hearing about a proposed new rule to kill off data brokers, who are the lynchpin of the industry:

https://pluralistic.net/2023/08/16/the-second-best-time-is-now/#the-point-of-a-system-is-what-it-does

The other witnesses were fascinating – and chilling. There was a lawyer from the AARP who explained how data-brokers would let you target ads to categories like "seniors with dementia." Then there was someone from the Pentagon, discussing how anyone could do an ad-buy targeting "people enlisted in the armed forces who have gambling problems." Sure, I thought, and you don't even need these explicit categories: if you served an ad to "people 25-40 with Ivy League/Big Ten law or political science degrees within 5 miles of Congress," you could serve an ad with a malicious payload to every Congressional staffer.

Now, that's just the data brokers. The real action is in ad-tech, a sector dominated by two giant companies, Meta and Google. These companies claim that they are better than the unregulated data-broker cowboys at the bottom of the food-chain. They say they're responsible wielders of unregulated monopoly surveillance power. Reader, they are not.

Meta has been repeatedly caught offering ad-targeting like "depressed teenagers" (great for your next incel recruiting drive):

https://www.technologyreview.com/2017/05/01/105987/is-facebook-targeting-ads-at-sad-teens/

And Google? They just keep on getting caught with both hands in the creepy commercial surveillance cookie-jar. Today, Wired's Dell Cameron and Dhruv Mehrotra report on a way to use Google to target people with chronic illnesses, people in financial distress, and national security "decision makers":

https://www.wired.com/story/google-dv360-banned-audience-segments-national-security/

Google doesn't offer these categories itself, they just allow data-brokers to assemble them and offer them for sale via Google. Just as it's possible to generate a target of "Congressional staffers" by using location and education data, it's possible to target people with chronic illnesses based on things like whether they regularly travel to clinics that treat HIV, asthma, chronic pain, etc.

Google claims that this violates their policies, and that they have best-of-breed technical measures to prevent this from happening, but when Wired asked how this data-broker was able to sell these audiences – including people in menopause, or with "chronic pain, fibromyalgia, psoriasis, arthritis, high cholesterol, and hypertension" – Google did not reply.

The data broker in the report also sold access to people based on which medications they took (including Ambien), people who abuse opioids or are recovering from opioid addiction, people with endocrine disorders, and "contractors with access to restricted US defense-related technologies."

It's easy to see how these categories could enable blackmail, spear-phishing, scams, malvertising, and many other crimes that threaten individuals, groups, and the nation as a whole. The US Office of the Director of National Intelligence has already published details of how "anonymous" people targeted by ads can be identified:

https://www.odni.gov/files/ODNI/documents/assessments/ODNI-Declassified-Report-on-CAI-January2022.pdf

The most amazing part is how the 33,000 targeting segments came to public light: an activist just pretended to be an ad buyer, and the data-broker sent him the whole package, no questions asked. Johnny Ryan is a brilliant Irish privacy activist with the Irish Council for Civil Liberties. He created a fake data analytics website for a company that wasn't registered anywhere, then sent out a sales query to a brokerage (the brokerage isn't identified in the piece, to prevent bad actors from using it to attack targeted categories of people).

Foreign states, including China – a favorite boogeyman of the US national security establishment – can buy Google's data and target users through Google's ad-tech stack. In the past, Chinese spies have used malvertising – serving targeted ads loaded with malware – to attack their adversaries. Chinese firms spend billions every year to target ads to Americans:

https://www.nytimes.com/2024/03/06/business/google-meta-temu-shein.html

Google and Meta have no meaningful checks to prevent anyone from establishing a shell company that buys and targets ads with their services, and the data-brokers that feed into those services are even less well-protected against fraud and other malicious acts.

All of this is only possible because Congress has failed to act on privacy since 1988. That's the year that Congress passed the Video Privacy Protection Act, which bans video store clerks from telling the newspapers which VHS cassettes you have at home. That's also the last time Congress passed a federal consumer privacy law:

https://en.wikipedia.org/wiki/Video_Privacy_Protection_Act

The legislative history of the VPPA is telling: it was passed after a newspaper published the leaked video-rental history of a far-right judge named Robert Bork, whom Reagan hoped to elevate to the Supreme Court. Bork failed his Senate confirmation hearings, but not because of his video rentals (he actually had pretty good taste in movies). Rather, it was because he was a Nixonite criminal and virulent loudmouth racist whose record was strewn with the most disgusting nonsense imaginable.

But the leak of Bork's video-rental history gave Congress the cold grue. His video rental history wasn't embarrassing, but it sure seemed like Congress had some stuff in its video-rental records that they didn't want voters finding out about. They beat all land-speed records in making it a crime to tell anyone what kind of movies they (and we) were watching.

And that was it. For 37 years, Congress has completely failed to pass another consumer privacy law. Which is how we got here – to this moment where you can target ads to suicidal teens, gambling-addicted soldiers in Minuteman silos, grannies with Alzheimer's, and every Congressional staffer on the Hill.

Some people think the problem with mass surveillance is a kind of machine-driven, automated mind-control ray. They believe the self-aggrandizing claims of tech bros to have finally perfected the elusive mind-control ray, using big data and machine learning.

But you don't need to accept these outlandish claims – which come from Big Tech's sales literature, wherein they boast to potential advertisers that surveillance ads are devastatingly effective – to understand how and why this is harmful. If you're struggling with opioid addiction and I target an ad to you for a fake cure or rehab center, I haven't brainwashed you – I've just tricked you. We don't have to believe in mind-control to believe that targeted lies can cause unlimited harms.

And those harms are indeed grave. Stein's Law predicts that "anything that can't go on forever eventually stops." Congress's failure on privacy has put us all at risk – including Congress. It's only a matter of time until the commercial surveillance industry is responsible for a massive leak, targeted phishing campaign, or a ghastly national security incident involving Congress. Perhaps then we will get action.

In the meantime, the coalition of people whose problems can be blamed on the failure to update privacy law continues to grow. That coalition includes protesters whose identities were served up to cops, teenagers who were tracked to out-of-state abortion clinics, people of color who were discriminated against in hiring and lending, and anyone who's been harassed with deepfake porn:

https://pluralistic.net/2023/12/06/privacy-first/#but-not-just-privacy

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/02/20/privacy-first-second-third/#malvertising

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#google#ad-tech#ad targeting#surveillance capitalism#vppa#video privacy protection act#mind-control rays#big tech#privacy#privacy first#surveillance advertising#behavioral advertising#data brokers#cfpb

526 notes


Text

It would be great if we had an equivalent to the Data Protection Act here in the USA.

#Data Protection Act#Privacy Online#Online Privacy#Personal Data Protection#Privacy Rights#Online Security

2 notes


Photo

Always rejecting intimacy <<|?? (Patreon)

#Doodles#SCII#ZEX#DAX#At least it's fairly well-earned this time - ZEX is being irresponsible and DAX loves to correct him on that front#For the record - ZEX is now immunized against catching hanahaki again#At least until his affections turn elsewhere lol but considering how infatuated he is with the Captain ♥#And even then he wouldn't be at risk of catching DAX's strain because it's self-inflicted! Yaayyy#But they don't know that! ZEX is putting an awful lot on the line for the possibility of playing test subject for DAX's sake#Very interesting how much he's willing to gamble to see his Sub-Commander well hm ♪#ZEX's selfishness is probably one of my favourite points about him - if he had the hard data to back up that he'd get DAX's strain hmmm#I wonder if he'd be quite so willing to be reinfected - but :) His own experiences - his self-centered viewpoint and confidence and pride#He was fine last time! Surely nothing bad would happen to him! He'd be fine this time too!#That vagueity gives him space to play in#And he's still acting selfishly here as well! Trying to take matters into his own arms without considering DAX's feelings!#Ahh <3 I love him ♥#He's also not particularly partial to DAX trying to protect him when /he's/ the one trying to protect DAX!#Their little mismatches in trying to do right by each other and stopped by the other haha <3#Poor DAX lost his breath just from pushing ZEX away wehh#Usually so strong! Physically even more than ZEX! Though ZEX is more clever hehe <3#But now with the roots in his lungs he can't even push him away fully#Gah what a lovely metaphor <3

4 notes


Text

profits over people — the corporate hellscape of internet censorship, safety, privacy, data mining…

#capitalism#internet censorship#internet#data mining#profit over people#apple#microsoft#kosa#chrome#google#privacy#privacy protection#internet privacy#monopoly#european union#eu#corporate hellscape#kids online safety act#2024

11 notes


Text

I actually have a fic idea but lc is a show that's like. you will never ever have all the information and context until the end. and I am a writer who writes best and more confidently when I have all the info and context at my fingertips. so now I'm just like 🧍♂️

anyway. ramble in the tags

#mine musings#not tagging etc etc#it's an AU so it shouldn't even matter actually. but. whatever. i'll still try to write it. it'll take a while#it's more like character exploration anyway. a role reversal (my favorite kind of au)#i.e. what would the emma case look like if cxs is the one who keeps timelooping to save lg?#it's not a power swap or personality swap so i think it'll be an interesting exploration of the limits of their personalities#for example: in this au i think lg is still protective of cxs and acts as the guide. but he's closer to og!timeline lg#so i'm thinking that he's still very principled but perhaps less strict about doing small deviations from the timeline#cxs is still empathetic and reckless and i think that would actually get worse in a timelooping cxs#since he's the possessor he rationalizes to himself that he gets to shield lg from the messy parts of an operation#and how this self-matyrdom pulls at the fragile trust they have. because their partnership is never equal when someone is timelooping#i'm thinking in like the emma case this all comes to a head when emma gets the text from her parents#in S1 lg tells him “it's better not to look”#i think in this au. cxs would have already honed his acting skills and be like “lg. does she check the phone?”#and lg who is protective but a little naive and not as strict with rules is like#cxs looks so sad :( he's been missing his parents lately :( emma doesn't see the text until tomorrow but...#this probably won't change the timeline too much... right? i think cxs needs to feel loved right now :) “yes she checks her phone”#and cxs is like “... are you sure?”#lg: “yes i'm sure”#and then post-dive cxs finds out emma dies but he doesn't tell lg :) he just keeps it to himself :)#bc it's his job to handle all the messy parts :) like the emotions of their clients. their regrets and obsessions. their fates#in his mind. the more lg knows the more he tries to sacrifice himself to save cxs. so it's important that lg is kept in the dark#something something actor/scriptwriter metaphors idk still working on the idea#just. role reversal shiguang... cxs who keeps timelooping bc he has abandonment issues so he can't handle lg dying...#lg basically is like 9S from nier automata who always dooms himself by learning the truth#this could've been a read more instead of a tag essay i'm sorry. i keep forgetting that feature. i am a yapper in the tags#cxs after dragging lg out for dinner so he doesn't catch the news: “hey lg. we followed the script to a tee right?”#“i didn't forget any lines or anything?”#lg (confused) (lying): “yes. aside from getting the financial data part. we did everything right.”#cxs: “okay 😊 i trust you 😊 past or future let them be”

4 notes


Text

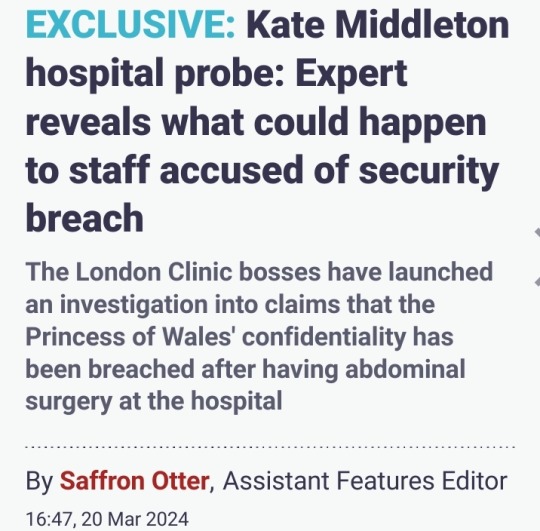

Hospital staff embroiled in a privacy probe involving the Princess of Wales will likely be facing disciplinary action, an expert has warned.

The Mirror revealed an investigation is underway at the world-renowned The London Clinic into claims Catherine's confidentiality was breached while she was a patient in January.

At least one member of staff was said to have been caught trying to access the 42-year-old's medical notes.

The future Queen had abdominal surgery at the London hospital in January and stayed for a fortnight, as she recovered before returning home to Windsor.

The allegations are the latest blow to hit Catherine, whose absence from public life over the past two months has led to wild conspiracy theories on social media about her whereabouts and health.

Now, an employment expert has outlined the likely next steps for accused staff, while a data protection expert has suggested Catherine could well claim compensation.

Employment partner Tracey Guest at law firm Slater Heelis told the Mirror:

"Any hospital employee who has accessed Catherine's private medical records, without any proper work reason to do so, is at risk of being dismissed due to gross misconduct.

Previous cases for dismissal relating to confidential information have held that it is important for employers to have policies in place, which make it abundantly clear to employees that unauthorised interference with computers/accessing confidential information unnecessarily will carry severe penalties.

No doubt all hospital employees will have been given contracts of employment where confidential information is a key term.

And it is likely that the hospital will have policies in place to make it clear that unlawfully accessing patient confidential information is likely to amount to gross misconduct."

The next steps to follow will depend on the alleged employee's years of service at the clinic. Tracey continued:

"If an employee has two or more years' service, the hospital will need to follow a fair procedure prior to dismissing an employee, otherwise they will be at risk of a claim for unfair dismissal.

This means that the hospital should require the employee to attend an investigation meeting, where the allegations are put to the employee and the employee is given a chance to respond and put forward any explanation/deny the allegations.

If the Investigating Officer decides that there is a case to answer, the employee must then be required to attend a disciplinary meeting.

The employee should be advised in advance in writing of the disciplinary allegations against them and warned that a possible outcome may be dismissal.

The employee should also be given the right to be accompanied to the disciplinary meeting by a fellow employee or trade union representative of their choice.

If an employee is dismissed, they should be given the right to appeal the decision."

It is likely that accessing medical records without any proper work reason is also a breach of data protection, and these allegations would also be discussed with the employee concerned, Tracey explained.

Meanwhile, the employees' alleged actions causing reputational damage to the hospital will also be assessed.

"Given the publicity surrounding this matter, this allegation would be genuine and could provide a further reason to warrant dismissal for gross misconduct (subject to the findings of any appropriate investigation and disciplinary)," Tracey added, before suggesting:

"Any employee involved in accessing medical records without a proper reason to do so may be best advised to resign, in order to avoid having a dismissal on their records."

The clinic's boss said that all appropriate investigatory, regulatory and disciplinary steps will be taken when looking at alleged data breaches.

Al Russell said in a statement:

"Everyone at the London Clinic is acutely aware of our individual, professional, ethical and legal duties with regards to patient confidentiality.

We take enormous pride in the outstanding care and discretion we aim to deliver for all our patients that put their trust in us every day.

We have systems in place to monitor management of patient information and, in the case of any breach, all appropriate investigatory, regulatory and disciplinary steps will be taken.

There is no place at our hospital for those who intentionally breach the trust of any of our patients or colleagues."

It is a criminal offence for any staff in an NHS or private healthcare setting to access the medical records of a patient without the consent of the organisation's data controller.

Looking at somebody's private medical records without permission can result in prosecution from the Information Commissioner's Office in the UK.

A spokesperson for the data watchdog said:

"We can confirm that we have received a breach report and are assessing the information provided."

Jon Baines, Senior Data Protection Specialist at Mishcon de Reya, outlined what this would mean and suggested that Catherine could claim for compensation.

"Any investigation by the ICO is likely to consider whether a criminal offence might have been committed by an individual or individuals," he began.

"Section 170 of the Data Protection Act 2018 says that a person commits an offence if they obtain or disclose personal data 'without the consent of the controller.'

Here, the controller will be the clinic itself.

"Although there are defences available to someone charged with the offence (such as that they reasonably believed they had the right to 'obtain' the personal data, or on grounds of public interest), such defences are unlikely to apply where someone knowingly accesses patient notes for no valid or justifiable reason."

Mr Baines explained that the offence is punishable only by a fine.

In England and Wales, although the maximum fine is unlimited, there is no possibility of any custodial sentence.

"A further area of potential investigation for the ICO will be whether the clinic itself complied with its obligations under the UK GDPR to have 'appropriate technical or organisational measures' in place to keep personal data secure," the data expert continued.

"Serious failures to comply with that obligation could lead to civil monetary penalties from the ICO, to a maximum of £17.5m although, in reality, given that such civil fines must be proportionate, it is rare that such large sums are even considered by the ICO.

Individuals, such as - in this case - The Princess of Wales, can also bring claims for compensation under the UK GDPR, and for 'misuse of private information', where their data protection and privacy rights have been infringed."

Mr Baines added:

"Whatever the outcome from the ICO, anyone working in an environment where they might have access to personal data, particularly of a sensitive nature, should be aware that there are potential criminal law implications arising from unauthorised access.

Any organisation holding such information should ensure it has appropriate measures in place to prevent, or at least reduce the risk, of such access."

Earlier today, a health minister said police have "been asked to look at" whether staff at The London Clinic attempted to access the Princess of Wales' private medical records.

MP Maria Caulfield, who is a nurse serving as Parliamentary Under-Secretary of State for Mental Health and Women's Health Strategy, said there could be “hefty implications” if it turns out anyone accessed the notes without permission, including prosecution or fines.

When questioned whether it should be dealt with as a police matter, Ms Caulfield told LBC:

“Whether they take action is a matter for them. But the Information Commissioner can also take prosecutions, can also issue fines, the NMC (Nursing and Midwifery Council), other health regulators can strike you off the register if the breach is serious enough.

So there are particularly hefty implications if you are looking at notes for medical records that you should not be looking at.”

Reassuring listeners, she also told Times Radio:

"For any patient, you want to reassure your listeners that there are strict rules in place around information governance about being able to look at notes even within the trust or a community setting.

You can't just randomly look at any patient's notes. It's taken extremely seriously, both by the information commissioner but also your regulator.

So the NMC (Nursing and Midwifery Council), if as a nurse, you are accessing notes that you haven't got permission to access, they would take enforcement action against that. So it's extremely serious.

And I want to reassure patients that their notes have those strict rules apply to them as they do for the Princess of Wales."

When it announced her surgery, Kensington Palace refused to confirm what Catherine was being treated for, but later confirmed the condition was non-cancerous.

An official statement read:

"Her Royal Highness The Princess of Wales was admitted to The London Clinic yesterday for planned abdominal surgery.

The surgery was successful and it is expected that she will remain in hospital for ten to fourteen days, before returning home to continue her recovery."

The Palace also made clear that it wanted to keep her health matters private, adding:

"Based on the current medical advice, she is unlikely to return to public duties until after Easter. The Princess of Wales appreciates the interest this statement will generate.

She hopes that the public will understand her desire to maintain as much normality for her children as possible; and her wish that her personal medical information remains private.

Kensington Palace will, therefore, only provide updates on Her Royal Highness' progress when there is significant new information to share.

The Princess of Wales wishes to apologise to all those concerned for the fact that she has to postpone her upcoming engagements.

She looks forward to reinstating as many as possible, as soon as possible."

Amid swirling speculation about the Princess' whereabouts, Catherine was seen out in public with Prince William for the first time at the weekend.

The couple, dressed in sportswear, were spotted walking with shopping bags at a farm shop close to their home on the Windsor estate.

#Princess of Wales#Catherine Princess of Wales#Catherine Middleton#Kate Middleton#British Royal Family#The London Clinic#NHS#Information Commissioner's Office#Data Protection Act 2018#MP Maria Caulfield#Kensington Palace#medical records access#medical data breach#abdominal surgery#Nursing and Midwifery Council

3 notes

Text

Best Data Privacy Services Providers in 2023 - Digital Personal Data Protection Act 2023

JISA Softech is recognized as one of the best Data Privacy Services Providers in 2023, offering solutions aligned with the Digital Personal Data Protection Act 2023. Their services ensure secure handling of sensitive data through advanced encryption, access control, and regulatory compliance. With a focus on privacy-first architecture, JISA Softech helps businesses stay ahead of evolving data protection laws. Choose JISA Softech for trusted and future-ready data privacy solutions. Read more - https://www.jisasoftech.com/dpdp-act-2023/

#Digital Personal Data Protection Act 2023#jisa softech#Best Data Privacy Services Providers in 2023#DPDP#data security#dpdp act 2023

0 notes

Text

Implementing India’s Digital Personal Data Protection (DPDP) Act 2023 – A Practical Compliance Framework for Businesses

Many professionals today are navigating the complexities of data protection as we embrace India’s Digital Personal Data Protection (DPDP) Act 2023. This blog post provides a comprehensive overview of the Act and creates a practical compliance framework tailored for businesses like yours. I aim to equip you with the tools needed to understand your obligations and the penalties for non-compliance while highlighting the benefits of data protection for your organization. Join me in exploring how we can turn regulatory challenges into opportunities for growth.

Table of Contents

Overview of the DPDP Act 2023

Compliance Framework for Businesses

Data Subject Rights

Data Protection Impact Assessments

Roles and Responsibilities

Penalties and Enforcement

Final Words

Overview of the DPDP Act 2023

Before delving into the specific provisions, it is vital to understand that the Digital Personal Data Protection (DPDP) Act 2023 represents a significant advancement in India’s approach to personal data regulation. This legislation seeks to establish a comprehensive framework that safeguards the personal data of individuals while promoting the responsible use of technology in a rapidly digitalizing world. I believe that this act not only aligns with global data protection standards, but also empowers individuals by reinforcing their rights concerning their personal information.

Key Provisions of the Act

Among the key provisions of the DPDP Act 2023 are the establishment of strict consent requirements for the processing of personal data and the emphasis on data minimization. As you navigate through these provisions, you’ll notice that the act stipulates that consent must be informed, free, and specific, giving individuals greater control over their data. Additionally, organizations are mandated to limit data collection to only what is necessary for the intended purpose, thereby fostering a more responsible data ecosystem.

Objectives and Scope

The primary objective of the DPDP Act 2023 is to protect the fundamental rights of individuals regarding their personal data while facilitating the growth of the digital economy. It aims to balance the interests of the individuals the data relates to (Data Principals, in the Act’s terms) and the organizations that process it, in a manner that promotes transparency, accountability, and trust. The act applies to entities processing digital personal data within India, as well as to processing outside India that is connected with offering goods or services to individuals in India.

A significant aspect of the DPDP Act 2023 is its broad scope that emphasizes accountability for organizations violating its provisions. By imposing stringent penalties for non-compliance, the act encourages businesses to foster a culture of data protection and privacy by design. You will find it imperative to understand that not only does the act protect individuals, but it also positions businesses to enhance their reputational standing and gain consumer trust through adherence to robust data governance practices.

Read more: Implementing India’s Digital Personal Data Protection (DPDP) Act 2023 – A Practical Compliance Framework for Businesses

0 notes

Text

India’s DPDP Act Explained: What Every Startup Founder Must Know

Imagine you’ve built a fantastic app that collects users’ names, emails, and preferences to recommend local events. Suddenly, regulators knock on your door asking for your data-handling processes. Panic, right? The DPDP Act aims to prevent this scenario by setting clear rules and giving individuals control over their data. Let’s dive into the essentials so you’re prepared, not panicked. What Is…

#Cybersecurity Standards#data provacy#Digital Personal Data Protection#DPDP Act#DPDP Act 2023#legal#privacy

0 notes

Text

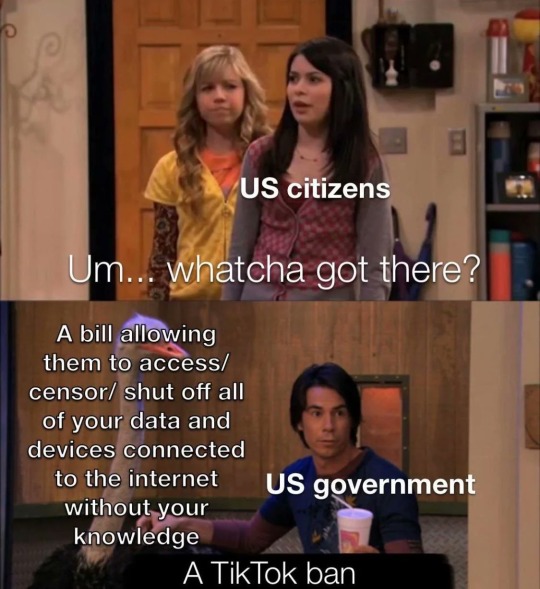

An open letter to the President & U.S. Congress

The DATA Act and the RESTRICT Act are un-American

I'm alarmed by the Ban TikTok discussion & the RESTRICT Act. We're a democratic country with a First Amendment that guarantees free expression. How does banning a social media platform abide by that principle? Especially since the US government condemns authoritarian governments in other parts of the world for blocking US-based social networks. Examples:

When Nigeria banned Twitter for seven months beginning in June 2021, the U.S. condemned it, reiterating its support for "the fundamental human right of free expression & access to information as a pillar of democracy."

Individuals responsible for the blocking of social media applications in Iran were condemned as "engaging in censorship activities that prohibit, limit, or penalize the exercise of freedom of expression or assembly by citizens of Iran."

When American digital platforms have been banned or severely restricted by governments--including the Chinese Communist Party, Pakistan, & Uganda--seeking to silence & obstruct the open flow of communication & information, the US calls these entities out for it. So why are we doing the same?

TikTok is a red herring. The DATA Act & the RESTRICT Act are very broad & could lead to other apps or communications services with connections to foreign countries being banned in the US. The stated intention is to target apps/services that pose a threat to national security; the way it's currently written raises serious human & civil rights concerns that should be far more important to you.

Caitlin Vogus says: "Any bill that would allow the US government to ban an online service that facilitates Americans' speech raises serious First Amendment concerns…" And those concerns will impact marginalized & oppressed people & groups more.

The "reasoning" behind Ban TikTok is not sound. The racist fearmongering around China is bad enough. Worse, the core of the argument -- data being collected & shared & used against people -- describes a problem with ALL social media. Why isn't Congress focusing on that? The apps on your phone (Facebook, Messenger, Instagram, Twitter) are constantly monitoring you & sending information about you to data brokers, info that can be easily tied to you as an individual despite claims that all the data is "anonymized".

Congress should be addressing the larger problem & not one social network. Restricting what data they can collect about users & forbidding them from selling that data will address the issue with TikTok, too.

I urge you to kill the DATA Act & the RESTRICT Act. They need to be tossed out & more measured legislation proposed in their place that addresses the foundational problems of social media apps & services & the data they collect & who they share it with & how they & other entities use that data.

I know that's not as easy or sexy as Ban TikTok! But it does address our Constitutional rights to assemble & to free expression. That's far more important than knee-jerk reactions & bandwagon jumping.

▶ Created on March 31, 2023 by K T

Text SIGN PNSIMC to 50409

#KT#PNSIMC#resistbot#RESTRICT Act#TikTok Ban#Freedom Of Speech#First Amendment#Data Privacy#Civil Rights#Open Internet#Social Media Freedom#Digital Rights#Online Censorship#Data Protection#Surveillance#Free Expression#Information Access#Tech Policy#Human Rights#Data Broker#Social Media Regulation#Free Speech Crisis#Internet Freedom#Censorship#Government Control#Privacy Rights#Resist Censorship#Fight For Privacy#Privacy Protection#Save TikTok

92K notes

Text

Shifting $677m from the banks to the people, every year, forever

I'll be in TUCSON, AZ from November 8-10: I'm the GUEST OF HONOR at the TUSCON SCIENCE FICTION CONVENTION.

"Switching costs" are one of the great underappreciated evils in our world: the more it costs you to change from one product or service to another, the worse the vendor, provider, or service you're using today can treat you without risking your business.

Businesses set out to keep switching costs as high as possible. Literally. Mark Zuckerberg's capos send him memos chortling about how Facebook's new photos feature will punish anyone who leaves for a rival service with the loss of all their family photos – meaning Zuck can torment those users for profit and they'll still stick around so long as the abuse is less bad than the loss of all their cherished memories:

https://www.eff.org/deeplinks/2021/08/facebooks-secret-war-switching-costs

It's often hard to quantify switching costs. We can tell when they're high: say, if your landlord ties your internet service to your lease (splitting the profits with a shitty ISP that overcharges and underdelivers), the switching cost of getting a new internet provider is the cost of moving house. We can tell when they're low, too: you can switch from one podcatcher program to another just by exporting your list of subscriptions from the old one and importing it into the new one:

https://pluralistic.net/2024/10/16/keep-it-really-simple-stupid/#read-receipts-are-you-kidding-me-seriously-fuck-that-noise

But sometimes, economists can get a rough idea of the dollar value of high switching costs. For example, a group of economists working for the Consumer Financial Protection Bureau calculated that the hassle of changing banks is costing Americans at least $677m per year (see page 526):

https://files.consumerfinance.gov/f/documents/cfpb_personal-financial-data-rights-final-rule_2024-10.pdf

The CFPB economists used a very conservative methodology, so the number is likely higher, but let's stick with that figure for now. The switching costs of changing banks – determining which bank has the best deal for you, then transferring over your account histories, cards, payees, and automated bill payments – are costing everyday Americans more than half a billion dollars, every year.

Now, the CFPB wasn't gathering this data just to make you mad. They wanted to do something about all this money – to find a way to lower switching costs, and, in so doing, transfer all that money from bank shareholders and executives to the American public.

And that's just what they did. A newly finalized Personal Financial Data Rights rule will allow you to authorize third parties – other banks, comparison shopping sites, brokers, anyone who offers you a better deal, or helps you find one – to request your account data from your bank. Your bank will be required to provide that data.

I loved this rule when they first proposed it:

https://pluralistic.net/2024/06/10/getting-things-done/#deliverism

And I like the final rule even better. They've really nailed this one, even down to the fine-grained details where interop wonks like me get very deep into the weeds. For example, a thorny problem with interop rules like this one is "who gets to decide how the interoperability works?" Where will the data-formats come from? How will we know they're fit for purpose?

This is a super-hard problem. If we put the monopolies whose power we're trying to undermine in charge of this, they can easily cheat by delivering data in uselessly obfuscated formats. For example, when I used California's privacy law to force Mailchimp to provide a list of all the mailing lists I've been signed up for without my permission, they sent me thousands of folders containing more than 5,900 spreadsheets listing their internal serial numbers for the lists I'm on, with no way to find out what these lists are called or how to get off of them:

https://pluralistic.net/2024/07/22/degoogled/#kafka-as-a-service

So if we're not going to let the companies decide on data formats, who should be in charge of this? One possibility is to require the use of a standard, but again, which standard? We can ask a standards body to make a new standard, which they're often very good at, but not when the stakes are high like this. Standards bodies are very weak institutions that large companies are very good at capturing:

https://pluralistic.net/2023/04/30/weak-institutions/

Here's how the CFPB solved this: they listed out the characteristics of a good standards body, listed out the data types that the standard would have to encompass, and then told banks that so long as they used a standard from a good standards body that covered all the data-types, they'd be in the clear.

Once the rule is in effect, you'll be able to go to a comparison shopping site and authorize it to go to your bank for your transaction history, and then tell you which bank – out of all the banks in America – will pay you the most for your deposits and charge you the least for your debts. Then, after you open a new account, you can authorize the new bank to go back to your old bank and get all your data: payees, scheduled payments, payment history, all of it. Switching banks will be as easy as switching mobile phone carriers – just a few clicks and a few minutes' work to get your old number working on a phone with a new provider.

This will save Americans at least $677 million, every year. Which is to say, it will cost the banks at least $677 million every year.

Naturally, America's largest banks are suing to block the rule:

https://www.americanbanker.com/news/cfpbs-open-banking-rule-faces-suit-from-bank-policy-institute

Of course, the banks claim that they're only suing to protect you, and the $677m annual transfer from their investors to the public has nothing to do with it. The banks claim to be worried about bank-fraud, which is a real thing that we should be worried about. They say that an interoperability rule could make it easier for scammers to get at your data and even transfer your account to a sleazy fly-by-night operation without your consent. This is also true!

It is obviously true that a bad interop rule would be bad. But it doesn't follow that every interop rule is bad, or that it's impossible to make a good one. The CFPB has made a very good one.

For starters, you can't just authorize anyone to get your data. Eligible third parties have to meet stringent criteria and vetting. These third parties are only allowed to ask for the narrowest slice of your data needed to perform the task you've set for them. They aren't allowed to use that data for anything else, and as soon as they've finished, they must delete your data. You can also revoke their access to your data at any time, for any reason, with one click – none of this "call a customer service rep and wait on hold" nonsense.

What's more, if your bank has any doubts about a request for your data, they are empowered to (temporarily) refuse to provide it, until they confirm with you that everything is on the up-and-up.

I wrote about the lawsuit this week for EFF's Deeplinks blog:

https://www.eff.org/deeplinks/2024/10/no-matter-what-bank-says-its-your-money-your-data-and-your-choice

In that article, I point out the tedious, obvious ruses of securitywashing and privacywashing, where a company insists that its most abusive, exploitative, invasive conduct can't be challenged because that would expose their customers to security and privacy risks. This is such bullshit.

It's bullshit when printer companies say they can't let you use third party ink – for your own good:

https://arstechnica.com/gadgets/2024/01/hp-ceo-blocking-third-party-ink-from-printers-fights-viruses/

It's bullshit when car companies say they can't let you use third party mechanics – for your own good:

https://pluralistic.net/2020/09/03/rip-david-graeber/#rolling-surveillance-platforms

It's bullshit when Apple says they can't let you use third party app stores – for your own good:

https://www.eff.org/document/letter-bruce-schneier-senate-judiciary-regarding-app-store-security

It's bullshit when Facebook says you can't independently monitor the paid disinformation in your feed – for your own good:

https://pluralistic.net/2021/08/05/comprehensive-sex-ed/#quis-custodiet-ipsos-zuck

And it's bullshit when the banks say you can't change to a bank that charges you less, and pays you more – for your own good.

CFPB boss Rohit Chopra is part of a cohort of Biden enforcers who've hit upon a devastatingly effective tactic for fighting corporate power: they read the law and found out what they're allowed to do, and then did it:

https://pluralistic.net/2023/10/23/getting-stuff-done/#praxis

The CFPB was created in 2010 with the passage of the Consumer Financial Protection Act, which specifically empowers the CFPB to make this kind of data-sharing rule. Back when the CFPA was in Congress, the banks howled about this rule, whining that they were being forced to share their data with their competitors.

But your account data isn't your bank's data. It's your data. And the CFPB is gonna let you have it, and they're gonna save you and your fellow Americans at least $677m/year – forever.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/11/01/bankshot/#personal-financial-data-rights

#pluralistic#Consumer Financial Protection Act#cfpa#Personal Financial Data Rights#rohit chopra#finance#banking#personal finance#interop#interoperability#mandated interoperability#standards development organizations#sdos#standards#switching costs#competition#cfpb#consumer finance protection bureau#click to cancel#securitywashing#oligarchy#guillotine watch

466 notes

Text

India's big leap in cybersecurity: now among the top 10 countries, Ashwini Vaishnaw announces #News #HindiNews #IndiaNews #RightNewsIndia

#Ashwini Vaishnaw#Cert-IN#cyber attacks 2024#cyber security India#Digital Personal Data Protection Act#fruit export India#i4c#India top 10 cyber security ranking#Indian Cyber Crime Coordination Centre#Jitin Prasada

0 notes