#AutoML Deep Learning

Explore tagged Tumblr posts

Text

AutoML: Automation and Efficiency in Machine Learning

AutoML is changing how companies and data scientists approach machine learning challenges. By automating complex tasks, AutoML makes it easier to build, deploy, and optimize machine learning models with less human intervention. In this article, we explore what AutoML is, how it works, its main advantages, and the cases…

#Aprendizado Automático#Automação Machine Learning#AutoML#AutoML código#AutoML Deep Learning#AutoML exemplos#AutoML Explicação#AutoML Framework#AutoML Google Cloud#AutoML no AWS#AutoML no Azure#AutoML no mercado#AutoML no negócio#AutoML para iniciantes#AutoML passo a passo#AutoML plataformas#AutoML Python#AutoML treinamento#AutoML Tutorial#AutoML VS tradicional#Benefícios do AutoML#Como funciona AutoML#Como usar AutoML#Desenvolvendo com AutoML#Ferramentas AutoML#Google AutoML#Inteligência artificial#Machine Learning#Modelos AutoML#O que é AutoML

0 notes

Text

LightAutoML: AutoML Solution for a Large Financial Services Ecosystem

New Post has been published on https://thedigitalinsider.com/lightautoml-automl-solution-for-a-large-financial-services-ecosystem/


Although AutoML rose to popularity only a few years ago, the earliest work on it dates back to the early 1990s, when researchers published the first papers on hyperparameter optimization. AutoML gained the attention of ML developers in 2014, when ICML organized the first AutoML workshop. One major focus of AutoML over the years has been the hyperparameter search problem, in which an array of optimization methods is used to find the best-performing hyperparameters in a large hyperparameter space for a particular machine learning model. Another common approach is to estimate the probability that a particular hyperparameter value is optimal for a given model, using Bayesian methods that traditionally draw on historical data from previously estimated models and other datasets. In addition to hyperparameter optimization, other methods try to select the best model from a space of modeling alternatives.

In this article, we will cover LightAutoML, an AutoML system developed primarily for a large European financial services company and its ecosystem. The framework is deployed across various applications, and it builds high-quality machine learning models at a level comparable to that of experienced data scientists. The LightAutoML framework attempts to make the following contributions. First, it was developed specifically for the ecosystem of a large European financial and banking institution. Second, owing to its architecture, it is able to outperform state-of-the-art AutoML frameworks on several open benchmarks as well as on ecosystem applications. Third, its performance is also compared against models tuned manually by data scientists, and the results indicate stronger performance by LightAutoML.

This article covers the LightAutoML framework in depth: its mechanism, methodology, and architecture, along with a comparison against state-of-the-art frameworks. So let’s get started.

Although researchers first started working on AutoML in the early and mid 1990s, it has attracted most of its attention over the last few years. Prominent industrial solutions that build machine learning models automatically include Amazon’s AutoGluon, DarwinAI, H2O.ai, IBM Watson AI, Microsoft AzureML, and many more. Most of these frameworks implement a general-purpose AutoML solution that develops ML-based models automatically across different classes of applications in financial services, healthcare, education, and other fields. The key assumption behind this horizontal, generic approach is that the process of developing models automatically remains identical across all applications. The LightAutoML framework instead takes a vertical approach: it is not generic, but caters to the needs of individual applications, in this case a large financial institution, and focuses on the requirements and characteristics of that complex ecosystem.

First, the LightAutoML framework provides fast and near-optimal hyperparameter search. Although the model does not optimize these hyperparameters directly, it does manage to deliver satisfactory results. Furthermore, the model keeps the balance between speed and hyperparameter optimization dynamic, so that it is optimal on small problems and fast enough on larger ones. Second, the LightAutoML framework purposefully limits the range of machine learning models to only two types, linear models and GBMs (gradient boosted decision trees), instead of implementing large ensembles of different algorithms. The primary reason for this limit is to speed up the execution time of the framework without hurting performance for the given type of problem and data. Third, the LightAutoML framework presents a unique method of choosing preprocessing schemes for the different features used in the models, based on certain selection rules and meta-statistics. The LightAutoML framework is evaluated on a wide range of open data sources across a wide range of applications.

LightAutoML: Methodology and Architecture

The LightAutoML framework consists of modules known as Presets, which are dedicated to end-to-end model development for typical machine learning tasks. At present, the LightAutoML framework supports four Preset modules. First, the TabularAutoML Preset focuses on solving classical machine learning problems defined on tabular datasets. Second, the White-Box Preset implements simple interpretable algorithms, such as Logistic Regression over WoE (Weight of Evidence) encoding and discretized features, to solve binary classification tasks on tabular data. Implementing simple interpretable algorithms is a common practice to model the probability of an application owing to the interpretability constraints posed by different factors. Third, the NLP Preset is capable of combining tabular data with NLP (Natural Language Processing) tools, including pre-trained deep learning models and specific feature extractors. Finally, the CV Preset works with image data with the help of some basic tools. It is important to note that although LightAutoML supports all four Presets, the framework only uses TabularAutoML in the production-level system.

The typical pipeline of the LightAutoML framework is included in the following image.

Each pipeline contains three components. The first is the Reader, an object that receives the task type and raw data as input, performs crucial metadata calculations, cleans the initial data, and figures out the data manipulations to be performed before fitting different models. Next, the LightAutoML inner datasets contain CV iterators and metadata that implement validation schemes for the datasets. The third component is the set of machine learning pipelines that are stacked and/or blended to get a single prediction. A machine learning pipeline within the LightAutoML architecture is one or more machine learning models that share a single data validation and preprocessing scheme. The preprocessing step may have up to two feature selection steps and a feature engineering step, or it may be empty if no preprocessing is needed. The ML pipelines can be computed independently on the same datasets and then blended together using averaging (or weighted averaging). Alternatively, a stacking ensemble scheme can be used to build multi-level ensemble architectures.
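The blending step described above can be sketched in a few lines. This is only a minimal illustration of averaging and weighted averaging, not LightAutoML's own blender; the function name `blend` and the example scores are hypothetical.

```python
from typing import List, Optional, Sequence


def blend(predictions: Sequence[Sequence[float]],
          weights: Optional[Sequence[float]] = None) -> List[float]:
    """Blend per-pipeline predictions row by row using a (weighted) average.

    `predictions[k][i]` is the score that pipeline k assigns to row i.
    With no weights, every pipeline counts equally (plain averaging).
    """
    if not predictions:
        raise ValueError("need predictions from at least one pipeline")
    if weights is None:
        weights = [1.0] * len(predictions)
    if len(weights) != len(predictions):
        raise ValueError("one weight per pipeline is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    n_rows = len(predictions[0])
    if any(len(p) != n_rows for p in predictions):
        raise ValueError("all pipelines must score the same rows")
    return [
        sum(w * p[i] for w, p in zip(weights, predictions)) / total
        for i in range(n_rows)
    ]


# Two hypothetical pipelines (say, a linear model and a GBM) scoring two rows.
linear_scores = [0.2, 0.8]
gbm_scores = [0.4, 0.6]
print(blend([linear_scores, gbm_scores]))                      # plain average
print(blend([linear_scores, gbm_scores], weights=[3.0, 1.0]))  # weighted average
```

In a real system the weights would normally be chosen on validation data rather than set by hand.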

LightAutoML Tabular Preset

Within the LightAutoML framework, TabularAutoML is the default pipeline. It solves three types of tasks on tabular data: binary classification, regression, and multi-class classification, for a wide array of performance metrics and loss functions. The TabularAutoML component takes as input a table with four kinds of columns: categorical features, numerical features, timestamps, and a single target column with class labels or a continuous value. One of the primary objectives behind the design of LightAutoML was to create a tool for fast hypothesis testing, which is a major reason why the framework avoids brute-force methods for pipeline optimization and focuses only on efficient techniques and models that work across a wide range of datasets.

Auto-Typing and Data Preprocessing

To handle different types of features in different ways, the model needs to know each feature type. When there is a single task with a small dataset, the user can specify each feature type manually. However, this is no longer viable in situations that involve hundreds of tasks with datasets containing thousands of features. For the TabularAutoML Preset, the LightAutoML framework needs to map features into three classes: numeric, category, and datetime. One simple and obvious solution is to use the column data types as the feature types: map float/int columns to numeric features, map timestamps (or strings that can be parsed as timestamps) to datetime, and map everything else to category. However, this mapping is not the best, because category columns frequently have numeric data types.
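The naive dtype-based mapping, and the reason it falls short, can be shown with a short sketch. The helper names, the `%Y-%m-%d` timestamp format, and the example columns below are assumptions made for illustration; they are not part of LightAutoML.

```python
from datetime import datetime
from typing import Dict, List


def _parses_as_timestamp(value: str, fmt: str = "%Y-%m-%d") -> bool:
    """Return True if the string matches the assumed timestamp format."""
    try:
        datetime.strptime(value, fmt)
        return True
    except ValueError:
        return False


def naive_feature_type(values: List[object]) -> str:
    """Map a column to 'numeric', 'datetime', or 'category' from its value types alone."""
    non_null = [v for v in values if v is not None]
    if non_null and all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in non_null
    ):
        return "numeric"
    if non_null and all(
        isinstance(v, datetime) or (isinstance(v, str) and _parses_as_timestamp(v))
        for v in non_null
    ):
        return "datetime"
    return "category"


# Hypothetical table: branch_code is really a category stored as integers.
columns: Dict[str, List[object]] = {
    "income": [1200.5, 830.0, None],
    "opened": ["2021-03-01", "2020-11-15", "2019-07-30"],
    "segment": ["retail", "vip", "retail"],
    "branch_code": [101, 205, 101],
}
for name, values in columns.items():
    print(name, "->", naive_feature_type(values))
```

Here `branch_code` is typed as numeric even though it identifies a branch, which is exactly the failure mode the paragraph describes.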

Validation Schemes

Validation schemes are a vital component of AutoML frameworks: data in industry changes over time, and this drift undermines the IID (independent and identically distributed) assumption when developing a model. AutoML frameworks use validation schemes to estimate model performance, to search for hyperparameters, and to generate out-of-fold predictions. The TabularAutoML pipeline implements three validation schemes:

KFold Cross Validation: KFold Cross Validation is the default validation scheme for the TabularAutoML pipeline including GroupKFold for behavioral models, and stratified KFold for classification tasks.

Holdout Validation: The Holdout validation scheme is implemented if the holdout set is specified.

Custom Validation Schemes: Custom validation schemes can be created by users depending on their individual requirements. Custom Validation Schemes include cross-validation, and time-series split schemes.
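To make the schemes listed above concrete, here is a minimal sketch of how K-fold and time-series splits can be generated as index lists. It is a generic illustration rather than LightAutoML's iterator code; the function names are hypothetical, and the time-series variant assumes rows are already sorted by time.

```python
from typing import Iterator, List, Tuple

Split = Tuple[List[int], List[int]]  # (train indices, validation indices)


def kfold_indices(n_samples: int, n_folds: int) -> Iterator[Split]:
    """Plain K-fold: each contiguous block serves as the validation fold once."""
    if not 2 <= n_folds <= n_samples:
        raise ValueError("need 2 <= n_folds <= n_samples")
    # Spread the remainder over the first folds so sizes differ by at most one.
    sizes = [n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
             for i in range(n_folds)]
    start = 0
    for size in sizes:
        valid = list(range(start, start + size))
        train = list(range(start)) + list(range(start + size, n_samples))
        yield train, valid
        start += size


def time_series_splits(n_samples: int, n_splits: int) -> Iterator[Split]:
    """Expanding window: train on everything before a block, validate on that block."""
    block = n_samples // (n_splits + 1)
    if n_splits < 1 or block == 0:
        raise ValueError("not enough samples for the requested number of splits")
    for i in range(1, n_splits + 1):
        train_end = i * block
        valid_end = n_samples if i == n_splits else train_end + block
        yield list(range(train_end)), list(range(train_end, valid_end))


for train, valid in kfold_indices(5, 2):
    print("kfold      ", train, valid)
for train, valid in time_series_splits(6, 2):
    print("time series", train, valid)
```

The time-series split never validates on rows that precede the training rows, which matters when the data drifts over time.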

Feature Selection

Although feature selection is a crucial part of developing models to industry standards, since it reduces inference and model implementation costs, most AutoML solutions pay little attention to it. In contrast, the TabularAutoML pipeline implements three feature selection strategies: no selection, importance cutoff selection, and importance-based forward selection. Importance cutoff selection is the default. There are two main ways to estimate feature importance: split-based tree importance and permutation importance of a GBM (gradient boosted decision tree) model. Importance cutoff selection aims to reject features that do not help the model, reducing the number of features without hurting performance, which may speed up both training and inference.

The above image compares different selection strategies on binary bank datasets.
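Importance cutoff selection, the default strategy described in this section, reduces to a simple filter once importances are available. The sketch below assumes the importance scores have already been computed (for example by permutation importance); the function name, the cutoff of zero, and the feature names are hypothetical.

```python
from typing import Dict, List


def importance_cutoff(importances: Dict[str, float], cutoff: float = 0.0) -> List[str]:
    """Keep features whose importance is strictly above the cutoff, most important first."""
    kept = [name for name, score in importances.items() if score > cutoff]
    return sorted(kept, key=lambda name: importances[name], reverse=True)


# Hypothetical importances: a negative value means shuffling the feature did not hurt the model.
scores = {"income": 0.42, "age": 0.17, "row_id": 0.0, "noise": -0.01}
print(importance_cutoff(scores))
```

Features at or below the cutoff are dropped before the final models are trained, which is where the savings in training and inference time come from.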

Hyperparameter Tuning

The TabularAutoML pipeline implements different approaches to tune hyperparameters on the basis of what is tuned.

Early Stopping Hyperparameter Tuning selects the number of iterations for all models during the training phase.

Expert System Hyperparameter Tuning is a simple way to set hyperparameters for models in a satisfactory fashion. It prevents the final model from scoring much lower than hard-tuned models.

Tree-structured Parzen Estimator (TPE) tuning is used for GBM (gradient boosted decision tree) models. The default choice in the LightAutoML pipeline is a mixed tuning strategy: for each GBM framework, LightAutoML trains two models. The first gets expert hyperparameters, and the second is fine-tuned to fit into the time budget.

Grid Search Hyperparameter Tuning is implemented in the TabularAutoML pipeline to fine-tune the regularization parameters of a linear model alongside early stopping, and warm start.

The model tunes all parameters by maximizing the metric function, which is either defined by the user or the default for the task being solved.
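The grid search with early stopping mentioned for linear models can be sketched as a walk over an ordered grid that stops once the metric no longer improves. This is a generic illustration under stated assumptions, not LightAutoML's tuner: `grid_search_early_stop`, `patience`, and the toy metric are hypothetical, and warm starting is left out.

```python
import math
from typing import Callable, Optional, Sequence, Tuple


def grid_search_early_stop(grid: Sequence[float],
                           score_fn: Callable[[float], float],
                           patience: int = 2) -> Tuple[Optional[float], float]:
    """Walk an ordered grid, maximizing score_fn; stop after `patience` steps without improvement."""
    best_value, best_score = None, float("-inf")
    stale = 0
    for value in grid:
        score = score_fn(value)
        if score > best_score:
            best_value, best_score = value, score
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                break  # later grid points are assumed unlikely to help
    return best_value, best_score


def toy_score(c: float) -> float:
    """Stand-in for a validation metric; peaks at a regularization strength of 0.1."""
    return -(math.log10(c) + 1.0) ** 2


grid = [1e-3, 1e-2, 1e-1, 1.0, 10.0, 100.0, 1000.0]
best_c, best_metric = grid_search_early_stop(grid, toy_score)
print(best_c, best_metric)
```

With this toy metric the search evaluates five of the seven grid points and then stops, which is the time saving early stopping is meant to provide.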

LightAutoML: Experiment and Performance

To evaluate its performance, the TabularAutoML Preset within the LightAutoML framework is compared against existing open source solutions across various tasks, and the results show stronger performance for LightAutoML. First, the comparison is carried out on the OpenML benchmark, which comprises 35 binary and multiclass classification datasets. The following table summarizes the comparison of the LightAutoML framework against existing AutoML systems.

As can be seen, the LightAutoML framework outperforms all other AutoML systems on 20 datasets within the benchmark. The following table contains the detailed per-dataset comparison, which indicates that LightAutoML delivers different performance on different classes of tasks. For binary classification tasks, LightAutoML falls short in performance, whereas for tasks with a large amount of data, it delivers superior performance.

The following table compares the performance of the LightAutoML framework against other AutoML systems on 15 bank datasets covering a variety of binary classification tasks. As can be observed, LightAutoML outperforms all other AutoML solutions on 12 out of 15 datasets, a win rate of 80%.

Final Thoughts

In this article we have talked about LightAutoML, an AutoML system developed primarily for a large European financial services company and its ecosystem. The framework is deployed across various applications, and it builds high-quality machine learning models at a level comparable to that of experienced data scientists. Owing to its architecture, LightAutoML is able to outperform state-of-the-art AutoML frameworks on several open benchmarks as well as on ecosystem applications. Its performance was also compared against models tuned manually by data scientists, and the results indicated stronger performance by the LightAutoML framework.

#ai#Algorithms#Amazon#applications#approach#architecture#Art#Article#Artificial Intelligence#attention#autoML#banking#benchmark#benchmarks#binary#box#Building#change#classes#classical#columns#comparison#continuous#CV#data#data validation#datasets#dates#Decision Tree#Deep Learning

0 notes

Photo

Nvidia has announced the availability of DGX Cloud on Oracle Cloud Infrastructure. DGX Cloud is a fast, easy and secure way to deploy deep learning and AI applications. It is the first fully integrated, end-to-end AI platform that provides everything you need to train and deploy your applications.

#AI#Automation#Data Infrastructure#Enterprise Analytics#ML and Deep Learning#AutoML#Big Data and Analytics#Business Intelligence#Business Process Automation#category-/Business & Industrial#category-/Computers & Electronics#category-/Computers & Electronics/Computer Hardware#category-/Computers & Electronics/Consumer Electronics#category-/Computers & Electronics/Enterprise Technology#category-/Computers & Electronics/Software#category-/News#category-/Science/Computer Science#category-/Science/Engineering & Technology#Conversational AI#Data Labelling#Data Management#Data Networks#Data Science#Data Storage and Cloud#Development Automation#DGX Cloud#Disaster Recovery and Business Continuity#enterprise LLMs#Generative AI

0 notes

Text

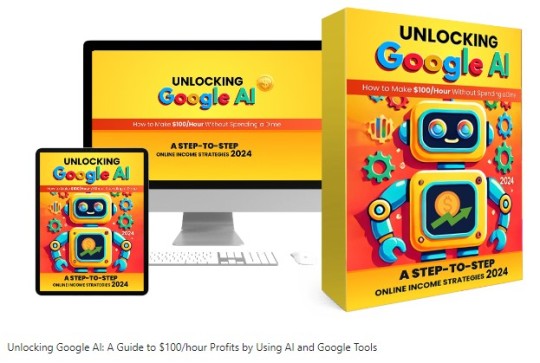

🔓 Unlocking Google AI Review ✅ Your Gateway to Advanced Artificial Intelligence Tools! 🚀🤖🌍

Google AI is one of the most accessible and powerful sets of AI tools and resources available, providing a wide array of solutions that can benefit developers, businesses, educators, and everyday users. Through platforms like Google Cloud AI, TensorFlow, Vertex AI, and Google’s AI-powered tools for productivity (like Google Workspace), Google makes it easier for everyone to leverage the latest advancements in artificial intelligence. Here’s how to unlock and make the most out of Google AI’s capabilities!

👉 Click Here to Get Instant Access to Unlocking Google AI 🖰 >>

🔍 Overview:

Unlocking Google AI is a guide or platform aimed at helping users maximize the potential of Google’s AI tools and services. This package offers insights, tips, and strategies to integrate Google AI technology into various applications, from business to personal productivity. Whether you’re looking to automate tasks, improve data analysis, or create intelligent solutions, Unlocking Google AI provides you with the foundational knowledge and practical skills to take full advantage of Google’s cutting-edge AI tools. 📊✨


🚀 Key Features

Detailed Walkthrough of Google AI Tools: Learn how to use Google’s AI-driven applications, including Google Cloud AI, Google Machine Learning, TensorFlow, and natural language processing tools. This comprehensive guide makes advanced AI technology accessible for both beginners and seasoned users. 💼📘

Practical AI Integration Tips: Unlocking Google AI provides step-by-step instructions for incorporating AI into everyday tasks. You’ll gain insights into automating processes, optimizing workflows, and improving decision-making through AI-driven data analytics. 🧠🔄

Real-World Applications: Learn how to implement AI in a variety of fields, from customer service and marketing to data science and project management. This feature is ideal for professionals and business owners looking to see tangible benefits from AI integration. 📈🌍

Beginner-Friendly and Advanced Content: Whether you’re new to AI or looking to expand your technical skills, Unlocking Google AI offers a range of content levels, ensuring a comfortable learning pace while also offering deep dives for advanced users. 🚀💻

Tips for Ethical and Responsible AI Use: This guide covers the ethical considerations around AI, helping users understand how to use Google AI responsibly. Perfect for anyone aiming to leverage AI with a focus on fairness, transparency, and ethical responsibility. 🌱🤝

🔧 Why Use Unlocking Google AI?

This guide is valuable for professionals, entrepreneurs, students, and tech enthusiasts who want to leverage Google’s AI capabilities to streamline workflows, gain insights, and remain competitive in an AI-driven world. By making complex AI tools approachable, it empowers users to unlock Google’s AI potential without requiring an extensive technical background. 📅💼


🛠️ Core Google AI Tools and Platforms:

Google Cloud AI ☁️

TensorFlow 🧠

Vertex AI 🔧

Google Workspace AI Tools 📊✍️

Google AI Experiments 🎨

✅ Benefits of Unlocking Google AI:

Scalability: Google AI tools are designed to scale, making them suitable for projects of any size.

User-Friendly: With platforms like Vertex AI and AutoML, users can create and deploy machine learning models without needing extensive ML expertise.

Extensive Documentation & Resources: Google provides tutorials, case studies, and community support, making it easier to get started and grow.

Cost-Efficient: Many tools offer free or cost-effective options, especially for smaller projects and developers in their early stages.


🚀 How to Get Started with Google AI:

Create a Google Cloud Account: Start by signing up for Google Cloud, which offers a free trial with credits that you can use to explore tools like Vertex AI and other Cloud AI services.

Explore TensorFlow Resources: TensorFlow provides comprehensive documentation, tutorials, and community resources, making it an excellent entry point for hands-on AI learning.

Try Google AI Experiments: Visit Google AI Experiments to get a feel for AI capabilities through fun and interactive projects that require no prior experience.

Leverage AI in Google Workspace: If you’re using Google Workspace, activate AI features like Smart Compose in Gmail or Explore in Google Sheets to see how they can boost productivity.

Experiment with Vertex AI AutoML: Use Vertex AI’s AutoML feature to start building models without in-depth coding knowledge, perfect for small businesses or non-technical users.

🔥 Final Thoughts:

Unlocking Google AI can be transformative, providing tools that make complex AI more approachable and scalable for various users. Whether you're a developer, entrepreneur, or simply someone curious about AI, Google AI’s suite of tools makes it easy to harness advanced artificial intelligence to enhance projects, streamline workflows, and innovate with data.


#GoogleAI#MachineLearning#AIforEveryone#Innovation#DigitalTransformation#marketing#affiliatemarketing#onlinemarketing#review#reviews#software#preview#make money as an affiliate#make money tips#digitalmarketing#unlocking google ai review#unlocking google ai review & bonus#unlocking google ai course review#unlocking google ai demo#unlocking google ai bonus#unlocking google ai bonuses#unlocking google ai course demo#unlocking google ai course preview#unlocking google ai course scam#unlocking google ai training course#unlocking google ai scam#unlocking google ai#unlocking google ai preview#google#unlocking google ai review plan

2 notes


Text

Empowering Insights and Intelligence: Navigating the Cutting-Edge of Data Analytics and AI

By Hack Fuel Team

In a world where data reigns supreme, the fusion of Data Analytics and Artificial Intelligence (AI) is forging a path towards unprecedented insights and transformative intelligence. The dynamic interplay of these two technologies is reshaping industries and propelling businesses into a realm of unparalleled efficiency, innovation, and strategic decision-making. Join us on an exploratory journey through the latest frontiers of data analytics and AI, as we delve into the remarkable features that are reshaping the landscape and driving a new era of progress.

1. Illuminating Complexity: The Rise of Cognitive Analytics

Experience the evolution of data interpretation through Cognitive Analytics – a groundbreaking approach that marries AI with advanced analytics. This synergy unlocks the hidden value within both structured and unstructured data, providing profound insights into customer behavior, market trends, and predictive modeling. To further delve into the advantages and best practices of Cognitive Analytics, check out the comprehensive guide provided by Digital Transformation and Platform Engineering on Medium: Cognitive Analytics: Advantages and Best Practices.

2. AI Unveiled: The Era of Explainable Intelligence

Lift the veil on AI decision-making with Explainable AI, a revolutionary advancement that empowers businesses to comprehend and trust AI-generated insights. Dive deep into the inner workings of AI models, unraveling their decision rationale and enhancing transparency. With Explainable AI, you’re equipped to navigate complex AI outputs, ensure compliance, and make informed choices with confidence.

3. AutoML: Data Science for All

Democratize data science with AutoML, a game-changing feature that empowers individuals from diverse backgrounds to engage in machine learning. Witness the fusion of automation and data analysis as AutoML streamlines the model-building process, enabling you to harness predictive analytics, uncover hidden patterns, and drive innovation – regardless of your technical prowess. For a practical illustration of this optimization, you can visit HackFuel.cloud, a prime example of a platform that has seamlessly integrated dark mode to enhance user experience and engagement: HackFuel.cloud.

4. Edge AI: Data Power at Your Fingertips

Embark on a journey to the edge of innovation with Edge AI – where real-time analytics and AI converge at the source. Witness the birth of instant decision-making, revolutionizing industries like IoT, and more. Experience heightened data security, reduced latency, and a world where intelligence is available exactly when and where you need it. Discover how Edge AI is reshaping the landscape of machine learning and operational efficiency: Machine Learning Operations: Edge AI.

5. Quantum Computing: Redefining the Frontiers of Possibility

Peer into the future of data analytics and AI with Quantum Computing – a technology that promises exponential leaps in computational power. Uncover how quantum supremacy is poised to reshape data-intensive tasks, transform AI model training, and usher in a new era of optimization, simulation, and discovery.

6. Precision in Prediction: AI-Powered Predictive Maintenance

Experience a paradigm shift in industrial operations with AI-driven Predictive Maintenance. Witness the power of data analytics in foreseeing equipment failures, optimizing maintenance schedules, and revolutionizing efficiency. Discover how AI is minimizing downtime, maximizing resources, and paving the way for a new era of operational excellence.

Conclusion: Forging Ahead into the Data-Driven Frontier

With each feature – Cognitive Analytics, Explainable AI, AutoML, Federated Learning, Edge AI, Quantum Computing, NLP Advancements, and Predictive Maintenance – we journey into uncharted territories, where the synergy of data and AI transforms industries, amplifies decision-making, and opens doors to boundless possibilities. Welcome to the future where insights and intelligence converge, and where the power of data shapes a world of limitless opportunities.

2 notes


Text

Introduction to AI Platforms

AI Platforms are powerful tools that allow businesses to automate complex tasks, provide real-time insights, and improve customer experiences. With their ability to process massive amounts of data, AI platforms can help organizations make more informed decisions, enhance productivity, and reduce costs.

These platforms incorporate advanced algorithms such as machine learning, natural language processing (NLP), and computer vision to analyze data through neural networks and predictive models. They offer a broad range of capabilities such as chatbots, image recognition, sentiment analysis, and recommendation engines.

Choosing the right AI platform is imperative for businesses that wish to stay ahead of the competition. Each platform has its strengths and weaknesses which must be assessed when deciding on a vendor. Moreover, an AI platform’s ability to integrate with existing systems is critical in effectively streamlining operations.

The history of AI platforms dates back to the 1950s, with the development of early artificial intelligence research. However, over time these technologies have evolved considerably – thanks to advancements in computing power and big data analytics. While still in their infancy stages just a few years ago – today’s AI platforms have matured into complex and feature-rich solutions designed specifically for business use cases.

Ready to have your mind blown and your workload lightened? Check out the best AI platforms for businesses and say goodbye to manual tasks:

Popular Commercial AI Platforms

To explore the top AI platforms and make informed decisions, you need to know the benefits each platform offers. With IBM Watson, Google Cloud AI Platform, Microsoft Azure AI Platform, and Amazon SageMaker in focus, this section shows the unique advantages each platform provides for various industries and cognitive services.

IBM Watson

The Innovative AI Platform by IBM:

Transform your business with the dynamic cognitive computing technology of IBM Watson. Enhance decision-making, automate operations, and accelerate the growth of your organization with this powerful tool.

Additional unique details about the platform:

IBM Watson’s artificial intelligence streamlines workflows and personalizes experiences while enhancing predictive capabilities. The open-source ecosystem allows developers and businesses alike to integrate their innovative applications seamlessly.

Suggested implementation strategies:

1) Leverage Watson’s data visualization tools to understand and analyze complex data sets. 2) Utilize Watson’s natural language processing capabilities for sentiment analysis, keyword identification, or contextual understanding.

By incorporating IBM Watson’s versatile machine learning functions into your operations, you can gain valuable insights into customer behavior patterns, track industry trends, improve decision-making abilities, and eventually boost revenue. Google’s AI platform is so powerful, it knows what you’re searching for before you do.

Google Cloud AI Platform

The AI platform provided by Google Cloud is an exceptional tool for businesses that specialize in delivering machine learning services. It provides a broad array of functionalities tailored to meet the diverse demands of clients all over the world.

The following table summarizes the features and capabilities offered by the Google Cloud AI Platform:

| Features | Capabilities |
| --- | --- |
| Data Management & Pre-processing | Large-scale data processing; data integration and analysis tools; deep learning frameworks; data versioning tools |
| Model Training | Scalable training; AutoML tools; advanced tuning configurations; distributed training on CPU/GPU/TPU |
| Prediction | High-performance responses within seconds; accurate predictions resulting from models trained using large-scale datasets |
| Monitoring | Real-time model supervision and adjustment; comprehensive monitoring, management, and optimization of models across various stages including deployment |

One unique aspect of the Google Cloud AI platform is its prominent role in enabling any developer, regardless of their prior experience with machine learning, to build sophisticated models. This ease of use accelerates experimentation and fosters innovation.

Finally, it is worth noting that according to a study conducted by International Business Machines Corporation (IBM), brands that adopted AI for customer support purposes experienced 40% cost savings while improving customer satisfaction rates by 90%.


2 notes


Text

What Are the Most Popular AI Development Tools in 2025?

As artificial intelligence (AI) continues to evolve, developers have access to an ever-expanding array of tools to streamline the development process. By 2025, the landscape of AI development tools has become more sophisticated, offering greater ease of use, scalability, and performance. Whether you're building predictive models, crafting chatbots, or deploying machine learning applications at scale, the right tools can make all the difference. In this blog, we’ll explore the most popular AI development tools in 2025, highlighting their key features and use cases.

1. TensorFlow

TensorFlow remains one of the most widely used tools in AI development in 2025. Known for its flexibility and scalability, TensorFlow supports both deep learning and traditional machine learning workflows. Its robust ecosystem includes TensorFlow Extended (TFX) for production-level machine learning pipelines and TensorFlow Lite for deploying models on edge devices.

Key Features:

Extensive library for building neural networks.

Strong community support and documentation.

Integration with TensorFlow.js for running models in the browser.

Use Case: Developers use TensorFlow to build large-scale neural networks for applications such as image recognition, natural language processing, and time-series forecasting.

2. PyTorch

PyTorch continues to dominate the AI landscape, favored by researchers and developers alike for its ease of use and dynamic computation graph. In 2025, PyTorch remains a top choice for prototyping and production-ready AI solutions, thanks to its integration with ONNX (Open Neural Network Exchange) and widespread adoption in academic research.

Key Features:

Intuitive API and dynamic computation graphs.

Strong support for GPU acceleration.

TorchServe for deploying PyTorch models.

Use Case: PyTorch is widely used in developing cutting-edge AI research and for applications like generative adversarial networks (GANs) and reinforcement learning.

3. Hugging Face

Hugging Face has grown to become a go-to platform for natural language processing (NLP) in 2025. Its extensive model hub includes pre-trained models for tasks like text classification, translation, and summarization, making it easier for developers to integrate NLP capabilities into their applications.

Key Features:

Open-source libraries like Transformers and Datasets.

Access to thousands of pre-trained models.

Easy fine-tuning of models for specific tasks.

Use Case: Hugging Face’s tools are ideal for building conversational AI, sentiment analysis systems, and machine translation services.

4. Google Cloud AI Platform

Google Cloud AI Platform offers a comprehensive suite of tools for AI development and deployment. With pre-trained APIs for vision, speech, and text, as well as AutoML for custom model training, Google Cloud AI Platform is a versatile option for businesses.

Key Features:

Integrated AI pipelines for end-to-end workflows.

Vertex AI for unified machine learning operations.

Access to Google’s robust infrastructure.

Use Case: This platform is used for scalable AI applications such as fraud detection, recommendation systems, and voice recognition.

5. Azure Machine Learning

Microsoft’s Azure Machine Learning platform is a favorite for enterprise-grade AI solutions. In 2025, it remains a powerful tool for developing, deploying, and managing machine learning models in hybrid and multi-cloud environments.

Key Features:

Automated machine learning (AutoML) for rapid model development.

Integration with Azure’s data and compute services.

Responsible AI tools for ensuring fairness and transparency.

Use Case: Azure ML is often used for predictive analytics in sectors like finance, healthcare, and retail.

6. DataRobot

DataRobot simplifies the AI development process with its automated machine learning platform. By abstracting complex coding requirements, DataRobot allows developers and non-developers alike to build AI models quickly and efficiently.

Key Features:

AutoML for quick prototyping.

Pre-built solutions for common business use cases.

Model interpretability tools.

Use Case: Businesses use DataRobot for customer churn prediction, demand forecasting, and anomaly detection.

7. Apache Spark MLlib

Apache Spark’s MLlib is a powerful library for scalable machine learning. In 2025, it remains a popular choice for big data analytics and machine learning, thanks to its ability to handle large datasets across distributed computing environments.

Key Features:

Integration with Apache Spark for big data processing.

Support for various machine learning algorithms.

Seamless scalability across clusters.

Use Case: MLlib is widely used for recommendation engines, clustering, and predictive analytics in big data environments.

8. AWS SageMaker

Amazon’s SageMaker is a comprehensive platform for AI and machine learning. In 2025, SageMaker continues to stand out for its robust deployment options and advanced features, such as SageMaker Studio and Data Wrangler.

Key Features:

Built-in algorithms for common machine learning tasks.

One-click deployment and scaling.

Integrated data preparation tools.

Use Case: SageMaker is often used for AI applications like demand forecasting, inventory management, and personalized marketing.

9. OpenAI API

OpenAI’s API remains a frontrunner for developers building advanced AI applications. With access to state-of-the-art models like GPT and DALL-E, the OpenAI API empowers developers to create generative AI applications.

Key Features:

Access to cutting-edge AI models.

Flexible API for text, image, and code generation.

Continuous updates with the latest advancements in AI.

Use Case: Developers use the OpenAI API for applications like content generation, virtual assistants, and creative tools.

10. Keras

Keras is a high-level API for building neural networks and has remained a popular choice in 2025 for its simplicity and flexibility. It ships with TensorFlow as tf.keras and, since Keras 3, also runs on JAX and PyTorch backends, which makes it easy to experiment with different architectures.

Key Features:

User-friendly API for deep learning.

Modular design for easy experimentation.

Support for multi-GPU and TPU training.

Use Case: Keras is used for prototyping neural networks, especially in applications like computer vision and speech recognition.

Conclusion

In 2025, AI development tools are more powerful, accessible, and diverse than ever. Whether you’re a researcher, a developer, or a business leader, the tools mentioned above cater to a wide range of needs and applications. By leveraging these cutting-edge platforms, developers can focus on innovation while reducing the complexity of building and deploying AI solutions.

As the field of AI continues to evolve, staying updated on the latest tools and technologies will be crucial for anyone looking to make a mark in this transformative space.


Why Should DevOps Be a Key Part of Your IT Strategy in 2024?

Cloud computing continues to revolutionize the way businesses operate, providing scalable, flexible, and cost-efficient solutions for organizations of all sizes. In 2024, the competition among cloud service providers is fiercer than ever, with leading players innovating to offer new features, improved performance, and better integrations.

If you're exploring cloud services for your business or project, knowing the top providers can help you make an informed decision. Let’s dive into the most popular cloud service providers in 2024 and what sets them apart.

1. Amazon Web Services (AWS): The Market Leader

Overview: Amazon Web Services (AWS) remains the dominant force in the cloud industry. With its extensive range of services, global infrastructure, and continuous innovation, AWS caters to businesses of all sizes, from startups to multinational enterprises.

Key Features:

Broad Service Portfolio: Over 200 services covering compute, storage, networking, AI, machine learning, and more.

Global Reach: Data centers in over 30 regions and 100+ availability zones.

Custom Solutions: Flexible solutions tailored to industries like healthcare, finance, and gaming.

AWS is particularly popular for its reliability and scalability, making it the go-to choice for businesses with complex workloads.

2. Microsoft Azure: A Strong Contender

Overview: Microsoft Azure continues to grow as a leading cloud provider, offering deep integrations with Microsoft's ecosystem. For enterprises already using tools like Office 365 or Dynamics 365, Azure provides a seamless experience.

Key Features:

Hybrid Cloud Capabilities: Azure Arc enables businesses to manage resources across on-premises, multi-cloud, and edge environments.

AI and Data Analytics: Advanced tools for machine learning, data visualization, and predictive analytics.

Developer-Friendly: Comprehensive support for developers with tools like Visual Studio and GitHub integrations.

Azure is favored by enterprises for its compatibility with Microsoft products and its focus on hybrid cloud solutions.

3. Google Cloud Platform (GCP): The Innovator

Overview: Google Cloud Platform (GCP) is known for its leadership in AI, machine learning, and data analytics. It’s a preferred choice for developers and organizations aiming for innovation and modern tech solutions.

Key Features:

AI and ML Excellence: Managed services like AutoML and Vertex AI, plus first-class support for TensorFlow, the open-source framework that originated at Google.

Big Data Expertise: Tools like BigQuery simplify data warehousing and analytics.

Sustainability Focus: Carbon-neutral operations and a commitment to renewable energy.

GCP stands out for its cutting-edge technology, making it ideal for businesses in AI-driven industries.

4. Oracle Cloud Infrastructure (OCI): Enterprise-Grade Cloud

Overview: Oracle Cloud Infrastructure (OCI) has carved out a niche for itself in 2024, particularly among enterprise customers. With a focus on databases and enterprise applications, OCI is a strong choice for companies managing large-scale operations.

Key Features:

Database Leadership: Oracle’s Autonomous Database sets benchmarks in automation and efficiency.

Security and Compliance: Robust tools to ensure data security and meet compliance standards.

High Performance: Advanced compute and networking capabilities for demanding workloads.

OCI is a natural choice for businesses already invested in Oracle solutions or seeking enterprise-grade reliability.

5. IBM Cloud: Focused on Hybrid and AI

Overview: IBM Cloud continues to lead in hybrid cloud solutions, helping businesses bridge the gap between on-premises and cloud environments. With a strong emphasis on AI and automation, IBM Cloud appeals to enterprises modernizing their operations.

Key Features:

Hybrid Cloud Expertise: Red Hat OpenShift integration for seamless hybrid cloud management.

Watson AI: Advanced AI tools for automation, customer insights, and operational efficiency.

Security Leadership: Industry-leading encryption and compliance certifications.

IBM Cloud is particularly attractive to businesses prioritizing hybrid deployments and AI-driven operations.

6. Alibaba Cloud: The Rising Global Player

Overview: Alibaba Cloud, the largest cloud provider in Asia, is rapidly expanding its presence worldwide. With competitive pricing and robust offerings, it’s a strong option for businesses targeting the Asian market.

Key Features:

Asia-Centric Solutions: Tailored services for businesses operating in the Asia-Pacific region.

E-Commerce Integration: Tools for scaling and optimizing e-commerce platforms.

AI and IoT: Comprehensive services for AI, IoT, and edge computing.

Alibaba Cloud is a great choice for businesses seeking reliable solutions in the Asian market or leveraging e-commerce platforms.

7. Other Notable Mentions

DigitalOcean: Popular among startups and developers for its simplicity and affordable pricing.

Linode (Akamai): Known for its focus on developers and small businesses.

Tencent Cloud: A key player in China, offering services tailored for gaming and entertainment.

How to Choose the Right Cloud Provider?

When choosing a cloud provider, consider the following factors:

Business Needs: Define your use cases, from web hosting to AI, and match them with the provider’s strengths.

Budget: Compare pricing models to find a cost-effective solution for your workloads.

Scalability: Ensure the provider can grow with your business.

Global Reach: Choose a provider with data centers in regions critical to your operations.

Support and Ecosystem: Evaluate the provider’s support options and integrations with your existing tools.
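One way to make the factors above comparable is a simple weighted scoring matrix. The sketch below is only an illustration: the provider names, the 1 to 5 scores, and the weights are all hypothetical placeholders, and a real evaluation would substitute your own criteria and measurements.

```python
def rank_providers(scores, weights):
    """Return (provider, weighted total) pairs, highest total first."""
    totals = {
        name: sum(weights[criterion] * value for criterion, value in criteria.items())
        for name, criteria in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Hypothetical weights (they sum to 1.0) reflecting how much each factor matters.
weights = {
    "business_fit": 0.30,
    "budget": 0.25,
    "scalability": 0.20,
    "global_reach": 0.15,
    "support": 0.10,
}

# Hypothetical 1-5 scores for three placeholder providers.
scores = {
    "Provider A": {"business_fit": 4, "budget": 3, "scalability": 5, "global_reach": 5, "support": 4},
    "Provider B": {"business_fit": 5, "budget": 4, "scalability": 4, "global_reach": 3, "support": 3},
    "Provider C": {"business_fit": 3, "budget": 5, "scalability": 3, "global_reach": 2, "support": 4},
}

ranking = rank_providers(scores, weights)
for name, total in ranking:
    print(f"{name}: {total:.2f}")
```

Changing the weights is the point of the exercise: a budget-sensitive team might raise the budget weight and see the order of the placeholder providers change.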

Conclusion: The Future of Cloud Computing in 2024

As cloud computing continues to evolve, providers are racing to offer innovative, scalable, and secure solutions. AWS, Azure, and GCP lead the pack, while specialized providers like Oracle Cloud and Alibaba Cloud cater to niche needs. By understanding the unique strengths of each provider, you can make an informed decision and unlock the full potential of cloud computing for your business.



Exploring the Next Frontier of Data Science: Trends and Innovations Shaping the Future

Data science has evolved rapidly over the past decade, transforming industries and reshaping how we interact with data. As the field continues to grow, new trends and innovations are emerging that promise to further revolutionize how data is used to make decisions, drive business growth, and solve global challenges. In this blog, we will explore the future of data science and discuss the key trends and technologies that are likely to shape its trajectory in the coming years.

Key Trends in the Future of Data Science:

Artificial Intelligence (AI) and Machine Learning Advancements: The integration of artificial intelligence (AI) and machine learning (ML) with data science is one of the most significant trends shaping the future of the field. AI algorithms are becoming more sophisticated, capable of automating complex tasks and improving decision-making in real time. Machine learning models are expected to become more interpretable and transparent, allowing businesses and researchers to better understand how predictions are made. As AI continues to improve, its role in automating data analysis, pattern recognition, and decision-making will become even more profound, enabling more intelligent systems and solutions across industries.

Explainable AI (XAI): As machine learning models become more complex, understanding how these models make decisions has become a crucial issue. Explainable AI (XAI) refers to the development of models that can provide human-understandable explanations for their decisions and predictions. This is particularly important in fields like healthcare, finance, and law, where decision transparency is critical. The future of data science will likely see a rise in demand for explainable AI, which will help increase trust in automated systems and enable better collaboration between humans and machines.
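One common model-agnostic explanation technique is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal illustration in plain Python; the "black box" model, its income threshold, and the random data are hypothetical stand-ins, not anything from a specific XAI library.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows the model classifies correctly."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild each row (a tuple) with feature j replaced by a shuffled value.
        shuffled = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances

# Hypothetical black box: approves when feature 0 ("income") exceeds 50
# and ignores feature 1 entirely.
def toy_model(row):
    return 1 if row[0] > 50 else 0

data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 100), data_rng.uniform(0, 100)) for _ in range(200)]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
print(importances)
```

The ignored feature gets an importance of exactly zero because shuffling it cannot change any prediction, while the feature the model relies on shows a clear accuracy drop.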

Edge Computing and Data Processing: Edge computing is poised to change how data is processed and analyzed in real time. With the rise of Internet of Things (IoT) devices and the increasing volume of data being generated at the "edge" of networks (e.g., on smartphones, wearables, and sensors), there is a growing need to process data closer to where it is collected, rather than sending it to centralized cloud servers. Edge computing enables faster data processing, reduced latency, and more efficient use of bandwidth. In the future, data science will increasingly rely on edge computing for applications like autonomous vehicles, smart cities, and real-time healthcare monitoring.

Automated Machine Learning (AutoML): AutoML is another trend that will shape the future of data science. AutoML tools are designed to automate the process of building machine learning models, making it easier for non-experts to develop predictive models without needing deep technical knowledge. These tools automate tasks such as feature selection, hyperparameter tuning, and model evaluation, significantly reducing the time and expertise required to build machine learning models. As AutoML tools become more advanced, they will democratize access to data science and enable more organizations to leverage the power of machine learning.
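To make the hyperparameter tuning and model evaluation steps concrete, here is a deliberately small sketch of what such a tool automates: it tries several values of k for a k-nearest-neighbours classifier and keeps the one with the best validation accuracy. The synthetic data, the candidate list, and all function names are assumptions made for illustration; real AutoML systems search far larger spaces with smarter strategies.

```python
import random

def squared_distance(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def knn_predict(train, point, k):
    """Majority vote among the k nearest training points."""
    nearest = sorted(train, key=lambda item: squared_distance(item[0], point))[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0

def evaluate(train, valid, k):
    """Validation accuracy for a given k."""
    hits = sum(1 for point, label in valid if knn_predict(train, point, k) == label)
    return hits / len(valid)

def tune_k(train, valid, candidates):
    """Score every candidate k and return the best one with all scores."""
    scores = {k: evaluate(train, valid, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores

# Synthetic two-class data: class 0 is centred at (0, 0), class 1 at (2, 2).
rng = random.Random(0)
data = [((rng.gauss(2 * label, 1.0), rng.gauss(2 * label, 1.0)), label)
        for label in (0, 1) for _ in range(100)]
rng.shuffle(data)
train, valid = data[:150], data[150:]

# Odd values of k avoid tied votes in a two-class problem.
best_k, scores = tune_k(train, valid, candidates=[1, 3, 5, 7, 9])
print(best_k, scores[best_k])
```

The same loop structure generalizes: swap the candidate list for a search space over several hyperparameters, and the hold-out split for cross-validation.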

Data Privacy and Security Enhancements: As data collection and analysis grow, so do concerns over privacy and security. Data breaches and misuse of personal information have raised significant concerns, especially with regulations like GDPR and CCPA in place. In the future, data science will focus more on privacy-preserving techniques such as federated learning, differential privacy, and homomorphic encryption. These technologies allow models to be trained and data to be analyzed without exposing sensitive information, ensuring that data privacy is maintained while still leveraging the power of data for analysis and decision-making.
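Of the techniques named above, differential privacy is the easiest to show in a few lines. The sketch below applies the Laplace mechanism to a counting query: a count changes by at most 1 when one person is added or removed, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy for that single query. The ages list and the choice of epsilon are hypothetical.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample, drawn as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)

def private_count(values, predicate, epsilon, rng):
    """Noisy count of values matching predicate (a count has sensitivity 1)."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical records; five of them are over 40.
ages = [23, 35, 41, 52, 67, 29, 48, 73]

rng = random.Random(7)
noisy = private_count(ages, lambda age: age > 40, epsilon=0.5, rng=rng)
print(round(noisy, 2))
```

A smaller epsilon means more noise and stronger privacy; repeated queries consume privacy budget, which this sketch does not track.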

Quantum Computing and Data Science: Quantum computing, while still in its early stages, has the potential to revolutionize data science. For certain classes of problems, quantum computers could perform calculations that would be impossible or take an impractically long time on classical computers. For example, quantum algorithms could accelerate some of the optimization and simulation tasks behind machine learning that currently require huge amounts of computational power. Practical, large-scale quantum hardware does not exist yet, but the technology holds promise for fields like drug discovery, financial modeling, and cryptography, all of which rely heavily on data science.

Emerging Technologies Impacting Data Science:

Natural Language Processing (NLP) and Language Models: Natural Language Processing (NLP) has made huge strides in recent years, with language models like GPT-4 and BERT showing remarkable capabilities in understanding and generating human language. In the future, NLP will become even more integral to data science, allowing machines to understand and process unstructured language data (like text and speech) more effectively. Applications of NLP include sentiment analysis, chatbots, customer service automation, and language translation. As these technologies advance, businesses will be able to gain deeper insights from textual data, enabling more personalized customer experiences and improved decision-making.

Data Democratization and Citizen Data Science: Data democratization refers to the movement toward making data and data analysis tools accessible to a broader range of people, not just data scientists. In the future, more organizations will embrace citizen data science, where employees with little to no formal data science training can use tools to analyze data and generate insights. This trend is driven by the growing availability of user-friendly tools, AutoML platforms, and self-service BI dashboards. The future of data science will see a shift toward empowering more people to participate in data-driven decision-making, thus accelerating innovation and making data science more inclusive.

Augmented Analytics: Augmented analytics involves the use of AI, machine learning, and natural language processing to enhance data analysis and decision-making processes. It helps automate data preparation, analysis, and reporting, allowing businesses to generate insights more quickly and accurately. In the future, augmented analytics will play a major role in improving business intelligence platforms, making them smarter and more efficient. By using augmented analytics, organizations will be able to harness the full potential of their data, uncover hidden patterns, and make better, more informed decisions.

Challenges to Address in the Future of Data Science:

Bias in Data and Algorithms: One of the most significant challenges that data science will face in the future is ensuring fairness and mitigating bias in data and algorithms. Data models are often trained on historical data, which can carry forward past biases, leading to unfair or discriminatory outcomes. As data science continues to grow, it will be crucial to develop methods to identify and address bias in data and algorithms to ensure that machine learning models make fair and ethical decisions.
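A first step toward identifying bias is simply measuring it. The sketch below computes one widely used check, the demographic parity gap: the difference between the highest and lowest rate of positive predictions across groups. The predictions and group labels are hypothetical, and this single metric is only one of several fairness definitions that can conflict with each other.

```python
def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs for ten applicants in two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, round(gap, 2))
```

Here group "a" is selected 80% of the time and group "b" 20% of the time, a gap of 0.6 that would warrant a closer look at the training data and features.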

Ethical Use of Data: With the increasing amount of personal and sensitive data being collected, ethical considerations in data science will become even more important. Questions around data ownership, consent, and transparency will need to be addressed to ensure that data is used responsibly. As the field of data science evolves, there will be a growing emphasis on establishing clear ethical guidelines and frameworks to guide the responsible use of data.

Conclusion: The future of data science is full of exciting possibilities, with advancements in AI, machine learning, quantum computing, and other emerging technologies set to reshape the landscape. As data science continues to evolve, it will play an even more central role in solving complex global challenges, driving business innovation, and enhancing our daily lives. While challenges such as data privacy, ethical concerns, and bias remain, the future of data science holds enormous potential for positive change. Organizations and individuals who embrace these innovations will be well-positioned to thrive in an increasingly data-driven world.


Google Cloud AI: Revolutionizing Artificial Intelligence for Businesses

Google Cloud AI is at the forefront of technological innovation, offering advanced artificial intelligence solutions that transform how companies operate, innovate, and serve their customers. The platform combines the power of machine learning, data analytics, and automation to deliver customized, highly efficient capabilities. In this article, we will explore how Google Cloud AI…



Data Science with Generative AI Online Training: Generative AI Tools Every Data Scientist Should Know

The rise of data science with generative AI training has transformed how professionals solve problems, generate insights, and create innovative solutions. By leveraging cutting-edge tools, data scientists can automate processes, enhance creativity, and drive data-driven strategies. For those aspiring to excel in this field, enrolling in a data science with generative AI course is a game-changer, equipping learners with the skills to navigate complex AI landscapes. This article explores essential generative AI tools, their benefits, and actionable tips for mastering them through comprehensive data science with generative AI online training.

What is Data Science with Generative AI?

Data Science with Generative AI combines the principles of data science—data analysis, predictive modeling, and statistical computations—with the capabilities of generative AI. Generative AI enables machines to produce new content, such as images, text, and music, by learning from large datasets. Through data science with generative AI courses, learners gain expertise in integrating these powerful technologies to create innovative applications.

Importance of Generative AI in Data Science

Automation of Repetitive Tasks: Generative AI accelerates data cleaning, feature engineering, and visualization tasks.

Enhanced Creativity: From generating synthetic datasets to creating AI-driven designs, the scope is limitless.

Improved Decision-Making: Tools powered by generative AI enhance the accuracy of predictive analytics.

Cost Efficiency: Automating data workflows with generative AI reduces operational costs.

By enrolling in a data science with generative AI training program, professionals can harness these advantages while staying ahead in a competitive job market.

Key Generative AI Tools Every Data Scientist Should Know

GPT Models (Generative Pre-trained Transformers)

GPT models, like OpenAI’s GPT-4, are foundational for text generation, summarization, and translation. These models are widely used in chatbots, content creation, and natural language processing (NLP).

Tips:

Use GPT tools for creating automated reports and summarizing datasets.

Experiment with fine-tuning GPT models for industry-specific applications.

DALL·E and Similar Image Generators

These tools create realistic images based on textual descriptions. They are invaluable in marketing, design, and data visualization.

Tips:

Integrate these tools into projects requiring AI-driven infographics or visualizations.

Experiment with synthetic image generation for data augmentation in machine learning.

Deep Dream and Neural Style Transfer Tools

These tools enhance images or create artistic transformations. They are useful in fields like media, advertising, and creative analytics.

Tips:

Apply these tools to improve visual storytelling in presentations.

Use them for prototyping designs in creative data projects.

AutoML (Automated Machine Learning) Platforms

AutoML platforms like Google AutoML simplify complex machine learning tasks, from model training to deployment.

Tips:

Start with pre-built models to save time.

Leverage AutoML to democratize AI by enabling non-technical users to build effective models.

GANs (Generative Adversarial Networks)

GANs are pivotal in generating synthetic data for training machine learning models.

Tips:

Use GANs for solving data scarcity problems by generating synthetic samples.

Explore GAN-based models for advanced projects, such as anomaly detection.

Benefits of Learning Generative AI Tools in a Course Setting

Structured Learning Path: A data science with generative AI course provides a well-organized curriculum to master generative AI tools.

Hands-on Practice: Courses often include practical projects to apply concepts.

Expert Guidance: Access to instructors ensures doubts are resolved efficiently.

Collaboration Opportunities: Working with peers enhances learning and broadens perspectives.

Tips for Excelling in Data Science with Generative AI Online Training

Stay Updated: Follow AI research papers, updates, and tools to stay ahead.

Practice Regularly: Implement concepts from the data science with generative AI training into real-world projects.

Focus on Applications: Understand the practical applications of each tool to bridge the gap between theory and practice.

Engage in Communities: Join forums and groups to learn from peers and industry experts.

Build a Portfolio: Showcase your skills through projects demonstrating your proficiency in generative AI.

Applications of Generative AI in Data Science

Text Analytics: Automated report generation, sentiment analysis, and customer feedback summaries.

Predictive Modeling: Accurate forecasting and risk analysis through synthetic data.

Creative Projects: AI-driven marketing campaigns and innovative visual designs.

Healthcare Analytics: Simulating patient outcomes or generating synthetic medical data for analysis.

Finance: Fraud detection using GANs and automated report generation with GPT models.

Why Choose a Data Science with Generative AI Course?

A data science with generative AI course bridges the gap between theory and industry application. From understanding AI fundamentals to mastering advanced tools, these courses empower learners to navigate complex challenges efficiently. Through structured data science with generative AI training, professionals gain access to real-world scenarios, making them industry-ready.

Conclusion

Mastering data science with generative AI tools is no longer optional—it’s a necessity for professionals aiming to excel in data-driven industries. Enrolling in a data science with generative AI course equips learners with essential skills, ensuring they remain competitive in an ever-evolving field. By leveraging tools like GPT, DALL·E, and GANs, data scientists can drive innovation, enhance decision-making, and create impactful solutions. With a commitment to continuous learning and practice, achieving success in this transformative domain is well within reach.

Visualpath: Advance your career with the Data Science with Generative AI Course in Hyderabad. Gain hands-on training, real-world skills, and certification. Enroll today for the best Data Science Course. We provide training to individuals globally, including in the USA and UK.

Call on: +91 9989971070

Course Covered:

Data Science, Programming Skills, Statistics and Mathematics, Data Analysis, Data Visualization, Machine Learning, Big Data Handling, SQL, Deep Learning and AI

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Blog link: https://visualpathblogs.com/

Visit us: https://www.visualpath.in/online-data-science-with-generative-ai-course.html



Emerging Trends in Data Science: A Guide for M.Sc. Students

The field of data science is advancing rapidly, continuously shaped by new technologies, methodologies, and applications. For students pursuing an M.Sc. in Data Science, staying abreast of these developments is essential to building a successful career. Emerging trends like artificial intelligence (AI), deep learning, data ethics, edge computing, and automated machine learning (AutoML) are redefining the scope and responsibilities of data science professionals. Programs like those offered at Suryadatta College of Management, Information Research & Technology (SCMIRT) prepare students not only by teaching foundational skills but also by encouraging awareness of these cutting-edge advancements. Here’s a guide to the current trends and tips on how M.Sc. students can adapt and thrive in this dynamic field.

Current Trends in Data Science

Artificial Intelligence (AI) and Deep Learning: AI is perhaps the most influential trend impacting data science today. With applications in computer vision, natural language processing (NLP), and predictive analytics, AI is becoming a critical skill for data scientists. Deep learning, a subset of AI, uses neural networks with multiple layers to process vast amounts of data, making it a powerful tool for complex pattern recognition. SCMIRT’s program provides exposure to AI and deep learning, ensuring that students understand both the theoretical foundations and real-world applications of these technologies.

Data Ethics and Privacy: As the use of data expands, so do concerns over data privacy and ethics. Companies are increasingly expected to handle data responsibly, ensuring user privacy and avoiding biases in their models. Data ethics has become a prominent area of focus for data scientists, requiring them to consider how data is collected, processed, and used. Courses on data ethics at SCMIRT equip students with the knowledge to build responsible models and promote ethical data practices in their future careers.

Edge Computing: Traditional data processing happens in centralized data centers, but edge computing moves data storage and computation closer to the data source. This trend is particularly important for applications where real-time data processing is essential, such as IoT devices and autonomous vehicles. For data science students, understanding edge computing is crucial for working with decentralized systems that prioritize speed and efficiency.

Automated Machine Learning (AutoML): AutoML is revolutionizing the data science workflow by automating parts of the machine learning pipeline, such as feature selection, model selection, and hyperparameter tuning. This allows data scientists to focus on higher-level tasks and decision-making, while machines handle repetitive processes. AutoML is especially beneficial for those looking to build models quickly, making it a valuable tool for students entering fast-paced industries.
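The model selection step mentioned above can be sketched with k-fold cross-validation: fit each candidate on part of the data, score it on the held-out part, and keep the candidate with the lowest average error. The two candidate models, the synthetic data, and every name below are illustrative assumptions, not the internals of any particular AutoML product.

```python
import random

def fit_mean(xs, ys):
    """Baseline model: always predict the training mean."""
    mean = sum(ys) / len(ys)
    return lambda x: mean

def fit_line(xs, ys):
    """Ordinary least-squares line through the training points."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + slope * (x - mx)

def cross_val_mse(fit, xs, ys, folds=5):
    """Average held-out mean squared error over contiguous folds."""
    n = len(xs)
    errors = []
    for f in range(folds):
        test_idx = set(range(f * n // folds, (f + 1) * n // folds))
        train_x = [x for i, x in enumerate(xs) if i not in test_idx]
        train_y = [y for i, y in enumerate(ys) if i not in test_idx]
        model = fit(train_x, train_y)
        fold_error = sum((model(xs[i]) - ys[i]) ** 2 for i in test_idx) / len(test_idx)
        errors.append(fold_error)
    return sum(errors) / folds

# Synthetic data: y is roughly 3x + 2 with unit-variance Gaussian noise.
rng = random.Random(1)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [3.0 * x + 2.0 + rng.gauss(0, 1.0) for x in xs]

candidates = {"mean_baseline": fit_mean, "linear": fit_line}
cv_scores = {name: cross_val_mse(fit, xs, ys) for name, fit in candidates.items()}
best = min(cv_scores, key=cv_scores.get)
print(best, cv_scores)
```

On this data the linear model wins by a wide margin, since the baseline cannot capture the trend; with real data the candidates are usually much closer and the cross-validated comparison matters more.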

These trends are transforming data science by expanding its applications and pushing the boundaries of what’s possible. For M.Sc. students, understanding and working with these trends is critical to becoming versatile, capable professionals.

Impact of These Trends on the Field

The rise of these trends is reshaping the roles and expectations within data science:

AI and Deep Learning Expertise: As more industries adopt AI solutions, data scientists are expected to have expertise in these areas. Familiarity with deep learning frameworks, like TensorFlow and PyTorch, is becoming a standard requirement for many advanced data science roles.

Ethical Awareness: Data scientists today must be vigilant about ethical considerations, particularly as governments introduce stricter regulations on data privacy. Understanding frameworks like GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) is essential, and data scientists are increasingly being tasked with ensuring compliance.

Adaptability to Decentralized Data Processing: The shift to edge computing is prompting companies to look for data scientists with knowledge of distributed systems and cloud services. This trend creates opportunities in fields where real-time processing is critical, such as healthcare and autonomous vehicles.

Efficiency through Automation: AutoML allows data scientists to streamline their workflows and build prototypes faster. This trend is leading to a shift in roles, where professionals focus more on strategy and business impact rather than model building alone.

Adapting as a Student

To succeed in this rapidly evolving landscape, M.Sc. students must adopt a proactive approach to learning and development. Here are some tips on how to stay updated with these trends:

Enroll in Specialized Courses: Many M.Sc. programs, like those at SCMIRT, offer courses tailored to emerging technologies. Enrolling in electives focused on AI, deep learning, and big data can provide a solid foundation in the latest trends.

Participate in Research and Projects: Engaging in research projects allows students to apply theoretical knowledge to real-world scenarios. Projects related to data ethics, edge computing, and AutoML are particularly relevant and can provide valuable hands-on experience.

Utilize Online Resources: Platforms like Coursera, Udacity, and edX offer courses on the latest technologies and tools in data science. Supplementing your coursework with these online resources can help you stay ahead in the field.

Attend Conferences and Workshops: Events like the IEEE International Conference on Data Science or regional workshops are great opportunities to learn from experts and network with peers. SCMIRT encourages students to participate in such events, where they can gain insights into industry trends and emerging tools.

Follow Industry Publications and Blogs: Resources like Medium, Towards Data Science, and KDnuggets regularly publish articles on the latest trends in data science. Reading these publications helps students stay informed and engaged with the field.

As data science continues to evolve, emerging trends such as AI, deep learning, data ethics, edge computing, and AutoML are reshaping the landscape of the field. For M.Sc. students, understanding these trends and adapting to them is essential for building a successful career. Programs like those at Suryadatta College of Management, Information Research & Technology (SCMIRT) equip students with the knowledge and skills needed to navigate these changes, blending a strong academic foundation with exposure to real-world applications. By adopting a continuous learning mindset and staying engaged with the latest developments, M.Sc. students can position themselves as adaptable, innovative professionals ready to make an impact in the ever-evolving world of data science.


Title: Unlocking Insights: A Comprehensive Guide to Data Science

Introduction

Overview of Data Science: Define data science and its importance in today’s data-driven world. Explain how it combines statistics, computer science, and domain expertise to extract meaningful insights from data.

Purpose of the Guide: Outline what readers can expect to learn, including key concepts, tools, and applications of data science.

Chapter 1: The Foundations of Data Science

What is Data Science?: Delve into the definition and scope of data science.

Key Concepts: Introduce core concepts like big data, data mining, and machine learning.

The Data Science Lifecycle: Describe the stages of a data science project, from data collection to deployment.

Chapter 2: Data Collection and Preparation

Data Sources: Discuss various sources of data (structured vs. unstructured) and the importance of data quality.

Data Cleaning: Explain techniques for handling missing values, outliers, and inconsistencies.

Data Transformation: Introduce methods for data normalization, encoding categorical variables, and feature selection.
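As a small illustration of the cleaning and transformation steps listed in this chapter, the sketch below uses plain Python on invented sample values. The helper names (`impute_mean`, `min_max_normalize`, `one_hot`) are hypothetical; in practice a library such as Pandas or Scikit-learn would usually handle these steps.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly so the smallest is 0 and the largest is 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot(categories):
    """Encode each category as a 0/1 vector, with columns in sorted order."""
    levels = sorted(set(categories))
    return [[1 if c == level else 0 for level in levels] for c in categories]


# Invented sample data: one age is missing.
ages = [20, None, 40, 30]
cities = ["Pune", "Chennai", "Pune", "Delhi"]

clean_ages = impute_mean(ages)               # fill the gap with the mean
scaled_ages = min_max_normalize(clean_ages)  # rescale to [0, 1]
encoded = one_hot(cities)                    # columns: Chennai, Delhi, Pune

print(clean_ages)
print(scaled_ages)
print(encoded)
```

Mean imputation is only one of several options for missing values; dropping rows or using the median may be better when the data contains outliers.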

Chapter 3: Exploratory Data Analysis (EDA)

Importance of EDA: Highlight the role of EDA in understanding data distributions and relationships.

Visualization Tools: Discuss tools and libraries (e.g., Matplotlib, Seaborn, Tableau) for data visualization.

Statistical Techniques: Introduce basic statistical methods used in EDA, such as correlation analysis and hypothesis testing.
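Correlation analysis, mentioned above, can be sketched in a few lines. The example below computes the Pearson correlation coefficient by hand on invented numbers (hours studied against test scores); the function name `pearson` and the data are assumptions made for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of the spreads."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Invented sample data for illustration.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 70, 72]

r = pearson(hours, scores)
print(round(r, 3))
```

A value near 1 indicates a strong positive linear relationship and a value near -1 a strong negative one. Values near 0 suggest no linear relationship, though a nonlinear one may still exist.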

Chapter 4: Machine Learning Basics

What is Machine Learning?: Define machine learning and its categories (supervised, unsupervised, reinforcement learning).

Key Algorithms: Provide an overview of popular algorithms, including linear regression, decision trees, clustering, and neural networks.

Model Evaluation: Discuss metrics for evaluating model performance (e.g., accuracy, precision, recall) and techniques like cross-validation.
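The evaluation metrics named above follow directly from the counts of correct and incorrect predictions. Here is a minimal sketch for a binary classifier, using invented labels; the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for a binary classifier."""
    tp = fp = fn = tn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # true positive
        elif pred == positive:
            fp += 1  # false positive
        elif truth == positive:
            fn += 1  # false negative
        else:
            tn += 1  # true negative
    return {
        "accuracy": (tp + tn) / len(y_true),
        # Of everything predicted positive, how much really was positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything really positive, how much did the model find?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Invented labels for illustration.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 0, 0]

metrics = classification_metrics(y_true, y_pred)
print(metrics)
```

Here the model finds only half of the real positives (recall 0.5) even though most of its predictions are right, which is why accuracy alone can be misleading.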

Chapter 5: Advanced Topics in Data Science

Deep Learning: Introduce deep learning concepts and frameworks (e.g., TensorFlow, PyTorch).

Natural Language Processing (NLP): Discuss the applications of NLP and relevant techniques (e.g., sentiment analysis, topic modeling).

Big Data Technologies: Explore tools and frameworks for handling large datasets (e.g., Hadoop, Spark).

Chapter 6: Applications of Data Science

Industry Use Cases: Highlight how various industries (healthcare, finance, retail) leverage data science for decision-making.

Real-World Projects: Provide examples of successful data science projects and their impact.

Chapter 7: Tools and Technologies for Data Science

Programming Languages: Discuss the significance of Python and R in data science.

Data Science Libraries: Introduce key libraries (e.g., Pandas, NumPy, Scikit-learn) and their functionalities.

Data Visualization Tools: Provide an overview of tools used for creating impactful visualizations.

Chapter 8: The Future of Data Science

Trends and Innovations: Discuss emerging trends such as AI ethics, automated machine learning (AutoML), and edge computing.

Career Pathways: Explore career opportunities in data science, including roles like data analyst, data engineer, and machine learning engineer.

Conclusion

Key Takeaways: Summarize the main points covered in the guide.

Next Steps for Readers: Encourage readers to continue their learning journey, suggest resources (books, online courses, communities), and provide tips for starting their own data science projects.



The Future of Intelligence: Exploring the Transformative Power of Cloud AI

The world has become a global village, and artificial intelligence (AI) now powers many of the tools behind our daily activities, from voice commands to recommendations. Yet many organisations and individuals that use AI, or hope to, run into the same problems: heavy infrastructure requirements, the need for specialists, and difficulty scaling. This is where Cloud AI comes in: a model of AI as a service that no longer demands the upfront investment it once did. So what is Cloud AI, and why is it changing the way we think about intelligence? Let’s dive in.

Understanding Cloud AI

At its simplest, Cloud AI means accessing AI tools and services through a cloud platform. Because the provider hosts the hardware and the models, an organization does not need dedicated on-site hardware or a team of data scientists to train models, which puts AI within reach of anyone with internet access. Widely available offerings include Google Cloud AI, Amazon Web Services (AWS) AI, Microsoft Azure AI, and the AI services from IBM. Many of their capabilities are exposed as APIs that can be integrated into existing systems regardless of an organization’s level of technical expertise.

Why Cloud AI is a Game Changer

There are three key reasons why Cloud AI is transforming the landscape:

Cost-Effectiveness: Traditional AI systems are computationally intensive and therefore require large investments in hardware. With Cloud AI, users pay only for the services they use, which makes it more affordable. The cloud provider takes care of the underlying hardware and software, including security and system upgrades.

Scalability: Whether the user is a small, scrappy startup testing the waters with deep learning or an established enterprise serving millions of users, Cloud AI can be scaled up or down to match demand. This lets organizations bring products and services to market without being held back by infrastructure bottlenecks.

Accessibility: One of the major barriers to AI adoption has been the expertise it requires. Cloud AI lowers that barrier by offering pre-configured solutions that let people without programming skills use complex, powerful AI.

Key Applications of Cloud AI

The versatility of Cloud AI is vast, touching numerous industries and transforming business processes. Here are some of the most impactful applications:

Machine Learning (ML) Models

Most AI technologies rely on some form of machine learning, yet building and training models from scratch is challenging. Many cloud services offer pre-trained models that can be used as needed. For instance, Google Cloud’s AutoML lets clients create custom models for tasks such as image and text classification through a graphical interface rather than through code. This makes it easier for new and small businesses to adopt ML for operations such as customer classification, risk management, and recommendations.

Natural Language Processing (NLP)

NLP covers text analysis and the ability of machines to understand and produce human language, both written and spoken. Cloud AI services now provide dependable NLP tools that businesses can use as building blocks for language translation, sentiment analysis, and text summarization. This is especially helpful in customer service: think of self-learning chatbots that answer questions, or tools that analyze customer feedback to improve user satisfaction.

Computer Vision

With Cloud AI, companies can also take advantage of computer vision without heavy spending on infrastructure. The main uses are face identification, object recognition, and more advanced video analysis. For instance, AWS offers a service named Rekognition that processes images and videos to detect objects, text within images, and more. Computer vision is applied in retail, healthcare, and security, and in creating more personalized customer touchpoints.

Speech Recognition

Speech-to-text has evolved considerably, and Cloud AI offers it as a service, which makes it more accessible. Speech recognition transcribes voice and audio into written text, which helps users with disabilities and enables new kinds of interfaces. Google Cloud Speech-to-Text, for instance, is a popular service for converting audio to text, and it supports uses ranging from voice assistants to customer-service transcription.

Data Analysis and Business Insights

Cloud AI makes it possible to process and analyze large volumes of data in real time. This helps businesses spot trends, detect anomalies, and make concrete decisions based on the results. For example, Microsoft Azure’s Cognitive Services offer analytical tools that can help companies analyze customer behavior, set better prices for their goods and services, or avoid possible inefficiencies.

Benefits of Cloud AI for Businesses

Cloud AI offers several significant benefits that many enterprises will find particularly appealing as the world becomes increasingly reliant on technology.