# Google AI APIs


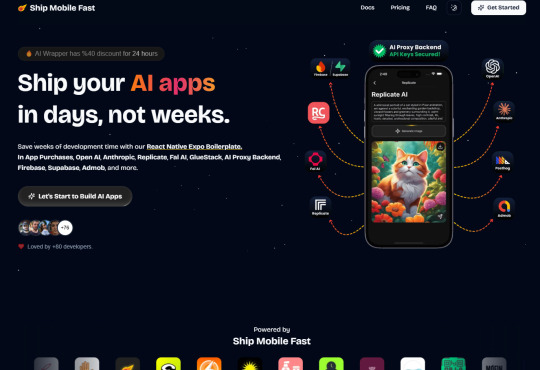

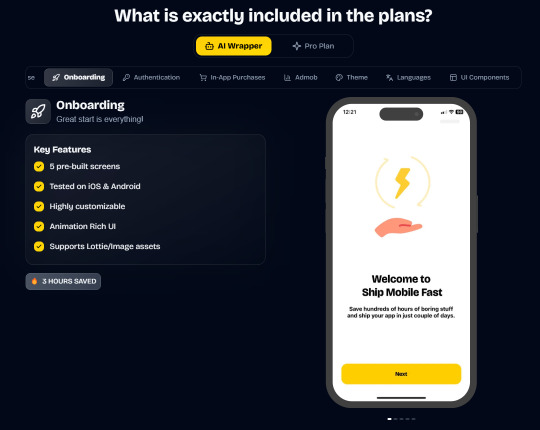

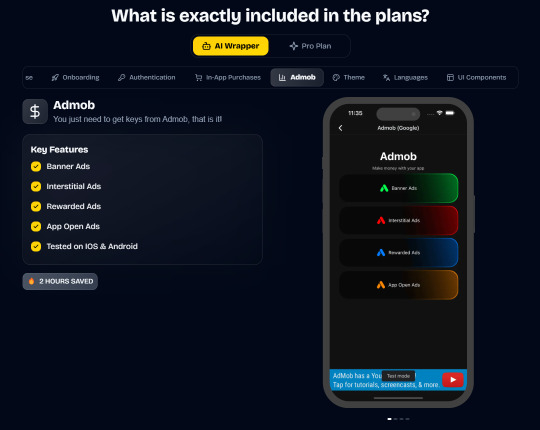

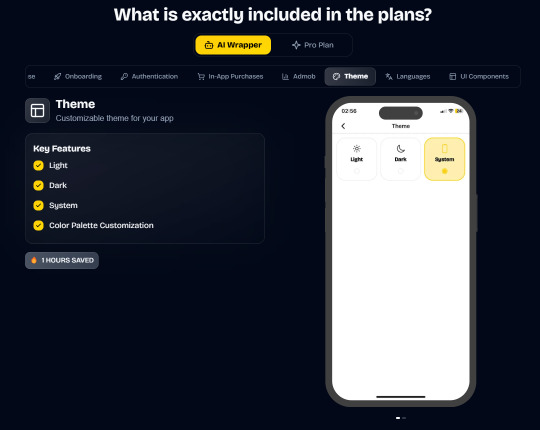

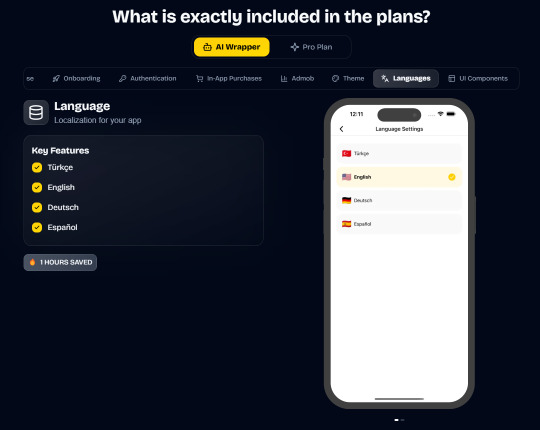

Ship Mobile Fast

Ship your AI apps in days, not weeks.

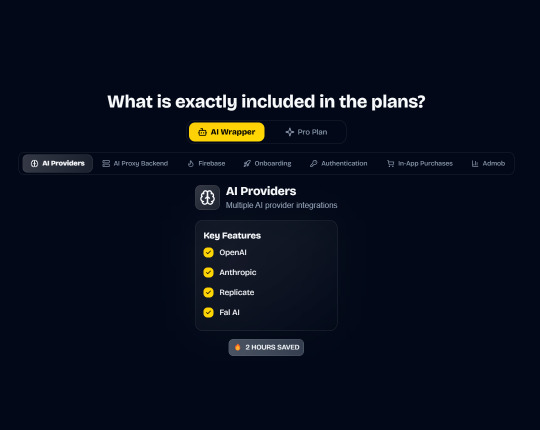

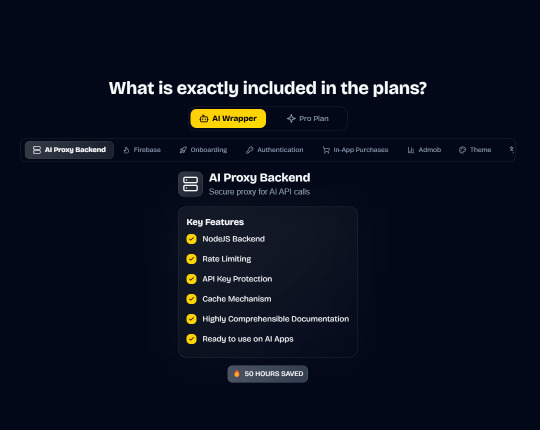

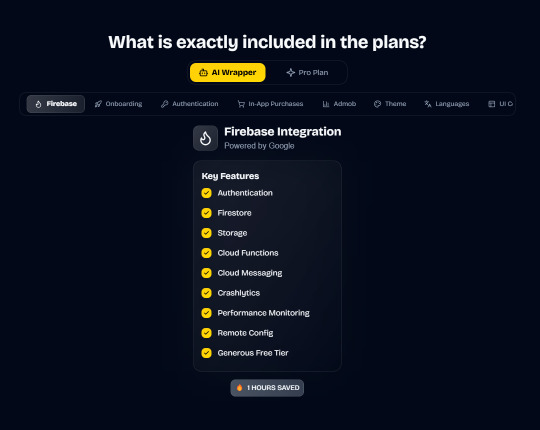

Save weeks of development time with our React Native Expo boilerplate: in-app purchases, OpenAI, Anthropic, Replicate, Fal AI, GlueStack, AI Proxy Backend, Firebase, Supabase, AdMob, and more.

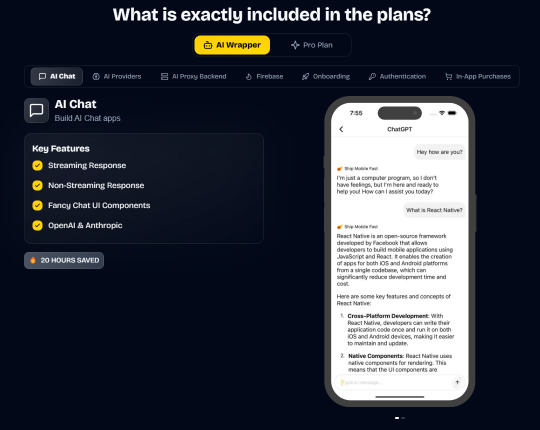

Ship Mobile Fast AI Wrapper is Live!🔥

For those who want to build AI applications…

Now, you can create the apps you envision in just 1-2 days.😎

Integrations with OpenAI, Anthropic, Replicate, and Fal AI. Protect your API keys from being stolen with AI Proxy Backend.
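The general idea behind an API-key proxy is that the mobile app never holds the provider key. The app sends its request to your own backend, and the backend attaches the key before forwarding the request. Below is a minimal stdlib sketch of the forwarding step. It is not the boilerplate's actual AI Proxy Backend. `build_upstream_request` and the `OPENAI_API_KEY` variable name are hypothetical, and OpenAI's chat completions URL is used only as an example of a Bearer-token API.

```python
import json
import os
import urllib.request

# Example upstream: OpenAI's chat completions endpoint, which authenticates
# with a Bearer token. Other providers with server-held keys work the same way.
UPSTREAM_URL = "https://api.openai.com/v1/chat/completions"


def build_upstream_request(client_body: dict) -> urllib.request.Request:
    """Build the provider request for a JSON payload received from the app.

    Hypothetical helper: the app sends only the body. The provider key is read
    from the proxy server's environment (OPENAI_API_KEY is an assumed name) and
    is never shipped inside the app binary.
    """
    api_key = os.environ["OPENAI_API_KEY"]
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    data = json.dumps(client_body).encode("utf-8")
    return urllib.request.Request(UPSTREAM_URL, data=data, headers=headers, method="POST")


# Actually sending it would be: urllib.request.urlopen(build_upstream_request(body))
```

A real proxy also has to authenticate the app's users and rate-limit them. Without that, anyone who finds the proxy URL can spend your key's quota just as easily as if the key had leaked.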


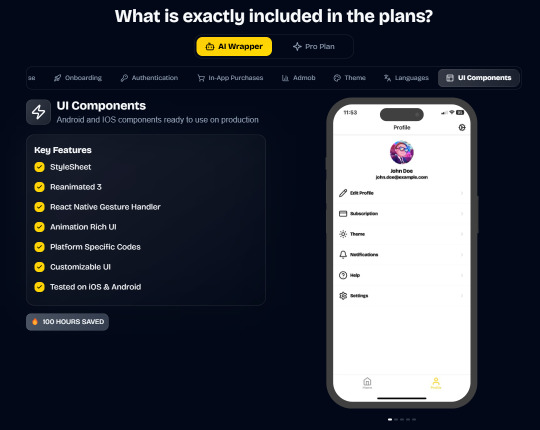

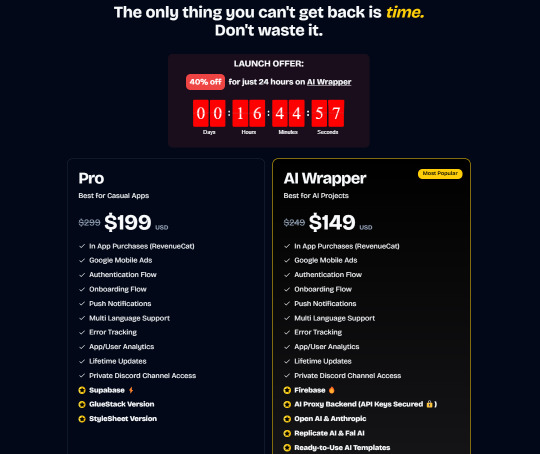

Pro (Best for Casual Apps):

- In-App Purchases (RevenueCat)
- Google Mobile Ads
- Authentication Flow
- Onboarding Flow
- Push Notifications
- Multi-Language Support
- Error Tracking
- App/User Analytics
- Lifetime Updates
- Private Discord Channel Access
- Supabase⚡️
- GlueStack Version
- StyleSheet Version


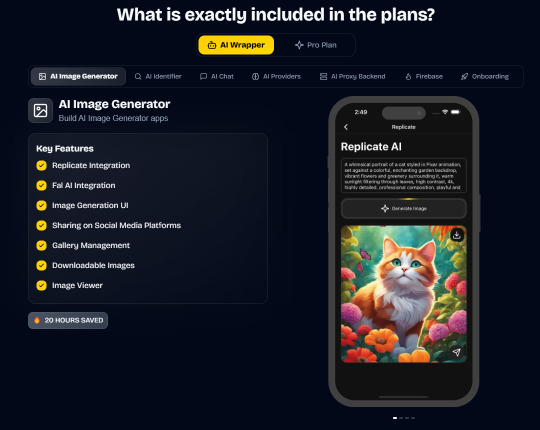

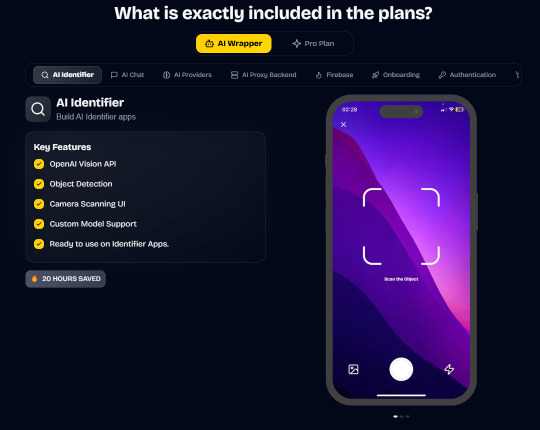

AI Wrapper (Best for AI Projects):

- In-App Purchases (RevenueCat)
- Google Mobile Ads
- Authentication Flow
- Onboarding Flow
- Push Notifications
- Multi-Language Support
- Error Tracking
- App/User Analytics
- Lifetime Updates
- Private Discord Channel Access
- Firebase🔥
- AI Proxy Backend (API Keys Secured🔒)
- OpenAI & Anthropic
- Replicate AI & Fal AI
- Ready-to-Use AI Templates


Ship Mobile Fast: https://shipmobilefast.com/?aff=1nLNm

Telegram: ahmetmertugrul

#ai#app#ship#mobile#fast#openai#claude#deepseek#proxy#api#admob#ads#firebase#supabase#falai#backend#ui#ux#replicate#revenuecat#google#expo#boilerplate#template#wrapper


Gemini in Looker: AI-Driven Insights & Streamlined Development

Gemini in Looker brings conversational, visual, AI-powered data discovery and integration.

Google's trusted semantic model underpins accurate, reliable insights in the AI era. Today at Google Cloud Next '25, Google is announcing a major step toward making Looker the most powerful data analysis and exploration platform: powerful new AI capabilities and a new reporting experience.

Conversational analytics, powered by Google's latest Gemini models and natural language, is now available to all platform users. Looker also has a redesigned reporting experience that improves data storytelling and exploration. All Looker customers can use both.

Modern organisations need AI to find patterns, predict trends, and inspire intelligent action. Looker reports and Gemini make business intelligence easier and more accessible. This frees analysts to focus on more impactful work, empowers enterprise users, and reduces the workload on data teams.

Looker's semantic layer ensures everyone works from a single source of truth. Gemini in Looker and Google's AI now automate analysis and surface intelligent insights, which speeds up data-driven decisions across the organisation.

All Looker users can now utilise Gemini

To make sophisticated AI-powered business intelligence (BI) accessible, Google introduced Gemini in Looker at Google Cloud Next ’24. This collection of assistants lets customers ask questions of their data in plain language. It also speeds up data modelling, building charts and presentations, and more.

Since then, these capabilities have been in preview. Now that the product is more accurate and mature, they are being made available to all platform users. Conversational Analytics uses natural language queries to deliver data insights, while Visualisation Assistant makes it easy to configure charts and visualisations for dashboards using natural language.

Formula Assistant provides powerful on-the-fly calculated fields and instant ad hoc analysis; Automated Slide Generation creates insightful and instantaneous text summaries of your data to create impactful presentations; and LookML Code Assistant simplifies code creation by suggesting dimensions, groups, measures, and more.

In preview, the Code Interpreter for Conversational Analytics lets business users perform complex procedures and get projections.

Conversational Analytics API

Google also released the Conversational Analytics API to extend conversational analytics beyond Gemini in Looker. Developers can now embed natural-language query capabilities directly into custom apps, internal tools, or workflows. These capabilities are backed by secure data access and by scalable, reliable data modelling that adapts to changing requirements.

The API lets you build custom BI agent experiences using Google's advanced AI models (NL2SQL, RAG, and VizGen) together with Looker's semantic model for accuracy. Developers can use this functionality to build user-friendly data experiences, simplify complex natural-language analysis, and share insights from these conversations.

Introducing Looker reports

Self-service analysis empowers line-of-business users and fosters teamwork. Looker reports integrate Looker Studio's powerful visualisation and reporting tools into the main Looker platform, boosting its appeal and use.

Looker reports improve data storytelling, discovery, and connectivity. They offer native Looker content, direct connections to Google Sheets and Microsoft Excel data, first-party connectors, and ad hoc access to various data sources.

Interactive reports are easier than ever to make. Looker reports include a large collection of templates and visualisations, extensive design options, real-time collaboration features, and the popular drag-and-drop interface.

The new reporting environment coexists with Looker dashboards and Explores within Looker's governed framework. Importantly, Gemini in Looker integrates with Looker reports, so conversational analytics also works in the new reporting environment.

Continuous integration ensures faster, more dependable development

Google Cloud is automating SQL and LookML testing and validation through its acquisition of Spectacles.dev, which enables faster and more reliable development cycles. Strong CI/CD practices build confidence in data by keeping the semantic model precise and consistent, which is critical for AI-powered BI.

Together, Looker reports, the Conversational Analytics API, Gemini in Looker, and native continuous integration features form an AI-for-BI platform. The goal is to make powerful AI, accurate insights, and a data-driven culture easier to achieve than ever.

Attend Google Cloud Next to see Gemini in Looker in action and hear how complete AI for BI can turn your data into a competitive advantage. After the event, you can still catch the AI for BI session.

#technology#technews#govindhtech#news#technologynews#AI#artifical intelligence#Gemini Looker#Gemini#Looker reports#Google cloud Looker#Gemini in Looker#Analytics API


Le api non vedono il rosso (Bees Don't See Red) by Giorgio Scianna: a review by Alessandria today

One evening like any other, Giulio comes home from the office and finds a crowd of journalists in front of the gate of his house in Pavia. Something serious has happened, something that will turn his life upside down. On the other side of Italy, a self-driving car has struck and killed a little girl. Nobody was at the wheel, yet the car decided the fate of that small life. Giulio, an engineer…

#AI e responsabilità#Alessandria today#colpa e responsabilità#Einaudi#famiglia e tecnologia#filosofia del diritto#futuro della tecnologia#futuro e moralità#Giorgio Scianna#giudizio e colpa#Google News#ier Carlo Lava#ingegneria etica#Intelligenza artificiale#intelligenza artificiale e morale#italianewsmedia.com#Le api non vedono il rosso#libri da leggere#libri nuovi 2025#macchina a guida autonoma#narrativa civile#narrativa contemporanea#narrativa italiana#narrativa psicologica#Narrativa sociale#progresso e conseguenze#Recensione libro#recensioni libri#romanzi con domande etiche#romanzi italiani consigliati


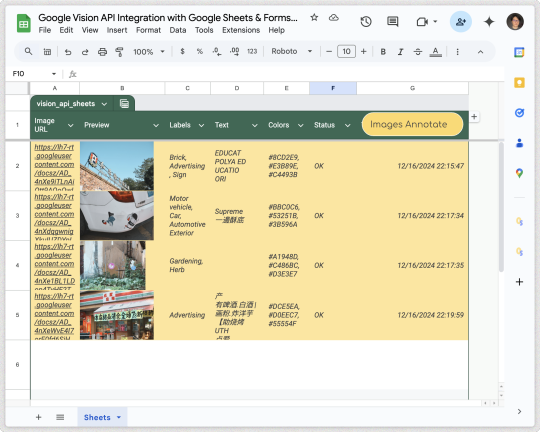

#ashtonfei#vision ai#vision api#google vision#text detection#image labeling#google apps script#google sheets


Web scraping with AI takes data extraction to the next level. By combining machine learning and natural language processing, AI-powered scrapers can intelligently extract relevant information from complex, dynamic websites with minimal setup. These tools adapt to changes in site structure, ensure high accuracy, and automate data cleaning for actionable insights.

#serpapi#serphouse#seo#google serp api#serpdata#serp scraping api#api#google#google search api#bing#web scraping#ai


Experiment #2.0 Concluded: A Shift in Focus Towards a New AI Venture

A few weeks ago, I shared my excitement about Experiment #2.0: building a multi-platform app for the Google Gemini API competition. It was an ambitious project with a tight deadline, aiming to revolutionize how we achieve long-term goals. Today, I’m announcing a change in direction. I’ve decided not to participate in the competition. Why the Change? While the app idea held immense potential, I…

#AI#AI Venture#Artificial Intelligence#Entrepreneurship#Experiment#Google Gemini API#Lessons Learned#New Project#Personal Growth#Pivot#Software Development#Startup


#AI chatbot#AI ethics specialist#AI jobs#Ai Jobsbuster#AI landscape 2024#AI product manager#AI research scientist#AI software products#AI tools#Artificial Intelligence (AI)#BERT#Clarifai#computational graph#Computer Vision API#Content creation#Cortana#creativity#CRM platform#cybersecurity analyst#data scientist#deep learning#deep-learning framework#DeepMind#designing#distributed computing#efficiency#emotional analysis#Facebook AI research lab#game-playing#Google Duplex


Filestore update: GKE stateful workload improvements

Filestore is widely used by clients running stateful apps on Google Kubernetes Engine (GKE). See this blog for background information on the advantages of combining the two.

Filestore, Google Cloud's fully managed file storage, is a multi-reader, multi-writer solution that is independent of compute virtual machines (VMs). This makes it resilient to VM failures or changes.

Filestore's Enterprise and Zonal (formerly High Scale) tiers are built on a modern platform. That platform uses a scale-out architecture and was designed from the ground up for performance, availability, and durability. To ensure high durability within a zone, customer data is stored twice, and Zonal provides a 99.9% availability SLA. Enterprise is a regional service with a 99.99% availability SLA that replicates data synchronously across three zones within a region. In addition, Google lowered Filestore prices in October by up to 25% for the Zonal, Enterprise, and Basic HDD tiers (Basic SSD pricing is unchanged). No action is needed, because the reduced prices are applied automatically.

Filestore is a fully managed service integrated with GKE's CSI driver, with new features, functionality, and GKE integrations added regularly. Read on to learn about three newly released features that are now generally available.
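The gap between the two SLAs is easier to see as a downtime budget. The sketch below does the arithmetic under one stated assumption: a 30-day month. The actual Filestore SLA defines its own measurement period and exclusions, so this only illustrates what "99.9%" versus "99.99%" means.

```python
# Length of the measurement window. A 30-day month is an assumption here;
# the actual Filestore SLA defines its own measurement period and exclusions.
MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # 43,200 minutes


def allowed_downtime_minutes(sla_percent: float,
                             period_minutes: int = MINUTES_PER_30_DAY_MONTH) -> float:
    """Downtime an availability target still permits over one period."""
    return period_minutes * (1 - sla_percent / 100)


for tier, sla in [("Zonal", 99.9), ("Enterprise", 99.99)]:
    print(f"{tier}: {sla}% -> {allowed_downtime_minutes(sla):.2f} minutes per 30-day month")
```

This prints about 43.20 minutes for Zonal and 4.32 minutes for Enterprise. Each additional "nine" cuts the permitted downtime by a factor of ten.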

1. Higher capacity (up to 100 TiB) for Filestore Zonal with the CSI driver

Used with GKE's new CSI driver, Google's high-capacity Zonal tier scales capacity and performance linearly up to 100 TiB per instance to match high-capacity, high-performance needs. The integration starts at 10 TiB. Large-scale AI/ML training frameworks that need a file interface, such as PyTorch and TensorFlow, can benefit from Filestore Zonal's high throughput (up to 26 GiB/s). Zonal also supports 1,000 NFS connections per 10 TiB and non-disruptive upgrades. With up to 10,000 concurrent NFS connections, it can serve large-scale GKE deployments and demanding multi-writer AI/ML workloads.

The CSI driver already supported Filestore Enterprise and Filestore Basic, and it now supports Filestore Zonal as well. You can therefore choose the Filestore tier that best matches your capacity, performance, and data-protection needs (such as backups and snapshots). Keep in mind that Filestore Enterprise offers non-disruptive upgrades, while the Basic tier does not.
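The connection figures scale with capacity: 1,000 connections per 10 TiB, from 10 TiB up to 100 TiB, gives the 10,000-connection ceiling. The sketch below does that arithmetic. It assumes connections are granted per whole 10 TiB block, which is our simplification because the post does not say how partial blocks are counted. `max_nfs_connections` is a hypothetical helper, not a Filestore API.

```python
MIN_CAPACITY_TIB = 10       # the integration starts at 10 TiB
MAX_CAPACITY_TIB = 100      # up to 100 TiB per instance
CONNECTIONS_PER_10_TIB = 1_000


def max_nfs_connections(capacity_tib: int) -> int:
    """NFS connection ceiling for a Filestore Zonal instance (sketch).

    Assumption: connections are granted per whole 10 TiB block, so a 55 TiB
    instance is treated like 50 TiB. The real rounding rule may differ.
    """
    if not MIN_CAPACITY_TIB <= capacity_tib <= MAX_CAPACITY_TIB:
        raise ValueError(
            f"capacity must be {MIN_CAPACITY_TIB}-{MAX_CAPACITY_TIB} TiB, got {capacity_tib}"
        )
    return (capacity_tib // 10) * CONNECTIONS_PER_10_TIB


for size in (10, 50, 100):
    print(f"{size} TiB -> up to {max_nfs_connections(size):,} NFS connections")
```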

2. Backups

Google is pleased to announce CSI-integrated backups for Filestore Enterprise.

Customers can now protect Filestore Enterprise volumes with the Volume Snapshots API, just as they already could for Filestore Basic volumes. Note that the VolumeSnapshot API's name is somewhat misleading. Despite what the name suggests, it is a mechanism for backing up data rather than a local file-system snapshot. The procedure for starting a backup through the API is the same for Filestore Basic and Enterprise.

Once you have backups of your existing Enterprise volumes, you can use a snapshot as the data source for new volumes. In other words, you can now back up and restore your instances directly through the CSI driver. This feature is available for single-share deployments on the Enterprise tier. Enterprise multishare configurations do not currently support the Volume Snapshots API.
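Starting a backup through the API means creating a standard Kubernetes `VolumeSnapshot` that points at an existing PVC, plus a `VolumeSnapshotClass` for the Filestore CSI driver. The sketch below builds both objects as Python dicts and prints them as a JSON `List` that `kubectl apply -f -` accepts. The `type: backup` class parameter reflects our reading of GKE's Filestore backup documentation and should be checked there. The names `filestore-backup`, `shared-data`, and `shared-data-backup` are hypothetical.

```python
import json

FILESTORE_CSI_DRIVER = "filestore.csi.storage.gke.io"


def backup_snapshot_class(name: str) -> dict:
    """VolumeSnapshotClass that asks the Filestore CSI driver for backups."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshotClass",
        "metadata": {"name": name},
        "driver": FILESTORE_CSI_DRIVER,
        "deletionPolicy": "Delete",
        # Assumption based on GKE's Filestore docs: "type: backup" makes the
        # snapshot a Filestore backup (as the post notes, not a local snapshot).
        "parameters": {"type": "backup"},
    }


def volume_snapshot(name: str, namespace: str, pvc_name: str, class_name: str) -> dict:
    """VolumeSnapshot that backs up an existing PersistentVolumeClaim."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "volumeSnapshotClassName": class_name,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


if __name__ == "__main__":
    # Hypothetical names. kubectl accepts JSON: python backup.py | kubectl apply -f -
    items = [
        backup_snapshot_class("filestore-backup"),
        volume_snapshot("shared-data-backup", "default", "shared-data", "filestore-backup"),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```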
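Restoring uses the standard Kubernetes `dataSource` field on a new PersistentVolumeClaim, which names the `VolumeSnapshot` to restore from. In the sketch below, the `enterprise-rwx` StorageClass name is an assumption meant to stand for a single-share Filestore Enterprise class, since multishare classes cannot restore from snapshots. The `1Ti` default size is also illustrative. As usual in Kubernetes, the requested size should be at least that of the backed-up volume, and the snapshot must live in the same namespace as the new claim.

```python
import json


def restored_pvc(name: str, namespace: str, snapshot_name: str,
                 storage_class: str = "enterprise-rwx", size: str = "1Ti") -> dict:
    """PersistentVolumeClaim whose initial contents come from a VolumeSnapshot.

    storage_class defaults to "enterprise-rwx", assumed here to be a single-share
    Filestore Enterprise StorageClass. size must be at least the backed-up size.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "accessModes": ["ReadWriteMany"],  # NFS lets many pods mount it
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
            "dataSource": {
                "apiGroup": "snapshot.storage.k8s.io",
                "kind": "VolumeSnapshot",
                "name": snapshot_name,
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical names; pipe the JSON to: kubectl apply -f -
    print(json.dumps(restored_pvc("shared-data-restored", "default", "shared-data-backup"), indent=2))
```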

3. Lower-capacity multishares in Filestore Enterprise

Google introduced multishare instances last year. They let users split a 1 TiB instance into several 100 GiB persistent volumes to improve storage utilization, and they have proven popular with GKE and Filestore Enterprise customers.

Google is now releasing the next iteration of this multishare capability, available only through the GKE CSI driver on the Enterprise tier. The minimum share (persistent volume) capacity is now as low as 10 GiB (down from 100 GiB), and you can divide an Enterprise instance into as many as 80 shares (up from 10).

Enterprise multishares give GKE stateful workloads persistent storage that is high-performance and highly durable (99.99% SLA). It is also efficient, allowing full instance utilization with up to 80 shares per instance. Find out more here about how to use the enhanced multishares functionality to make your GKE storage more efficient.
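The new limits are a 10 GiB minimum share and at most 80 shares per instance, with shares that must fit in the instance's capacity. The sketch below checks a proposed layout against those limits. It only illustrates the arithmetic. On GKE, the CSI driver decides how shares are packed onto instances. `check_multishare_layout` is a hypothetical helper.

```python
MIN_SHARE_GIB = 10             # new minimum share size (was 100 GiB)
MAX_SHARES_PER_INSTANCE = 80   # new maximum share count (was 10)


def check_multishare_layout(instance_gib: int, share_sizes_gib: list[int]) -> list[str]:
    """Return the reasons a proposed share layout breaks the stated limits.

    An empty list means the layout fits. Sketch only: on GKE the CSI driver
    decides how shares are packed onto instances.
    """
    problems = []
    if len(share_sizes_gib) > MAX_SHARES_PER_INSTANCE:
        problems.append(f"{len(share_sizes_gib)} shares exceeds the limit of {MAX_SHARES_PER_INSTANCE}")
    too_small = [s for s in share_sizes_gib if s < MIN_SHARE_GIB]
    if too_small:
        problems.append(f"{len(too_small)} share(s) below the {MIN_SHARE_GIB} GiB minimum")
    total = sum(share_sizes_gib)
    if total > instance_gib:
        problems.append(f"shares total {total} GiB but the instance has {instance_gib} GiB")
    return problems


# A 1 TiB (1,024 GiB) instance: 80 shares of 12 GiB fit, 100 shares of 10 GiB do not.
print(check_multishare_layout(1024, [12] * 80))
print(check_multishare_layout(1024, [10] * 100))
```

Under the previous limits, the same 1 TiB instance topped out at 10 shares of 100 GiB.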

Stateful workloads, the Filestore way

Filestore is a fully managed NFS storage solution integrated with GKE. Google continues to work on enabling large-scale, demanding GKE stateful workloads such as AI/ML training. To that end, it has added the Filestore Zonal CSI integration, made the Volume Snapshots API available for Filestore Enterprise, and provided more granular persistent volume support for Filestore Enterprise multishares.

Read more on Govindhtech.com

#GKE#GoogleCloud#Filestore#Zonal#CSIDriver#AI#ML#Google#SLA#technews#technology#Snapshots#API#GoogleKubernetesEngine#govindhtech


Google Gen AI SDK, Gemini Developer API, and Python 3.13

A Technical Overview and Compatibility Analysis 🧠

TL;DR: Google Gen AI SDK + Gemini API + Python 3.13 Integration 🚀

🔍 Overview: Google’s Gen AI SDK and Gemini Developer API provide cutting-edge tools for working with generative AI across text, images, code, audio, and video. The SDK offers a unified interface to interact with Gemini models via both the Developer API and Vertex AI 🌐.

🧰 SDK…
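The "unified interface" means the same `genai.Client` object talks to either back end, depending on how it is constructed. The sketch below picks the constructor arguments from environment-style settings and sends one prompt. The variable names follow the `google-genai` SDK's documented environment variables as we understand them. The `us-central1` fallback and the `gemini-2.0-flash` model name are assumptions, so substitute whatever your project uses. `client_kwargs` is a hypothetical helper.

```python
import os


def client_kwargs(env: dict) -> dict:
    """Choose arguments for google.genai.Client from environment-style settings.

    Gemini Developer API: authenticate with an API key.
    Vertex AI: authenticate with a Google Cloud project and location.
    The us-central1 fallback is an assumption, not an SDK default.
    """
    if env.get("GOOGLE_GENAI_USE_VERTEXAI", "").lower() in ("1", "true"):
        return {
            "vertexai": True,
            "project": env["GOOGLE_CLOUD_PROJECT"],
            "location": env.get("GOOGLE_CLOUD_LOCATION", "us-central1"),
        }
    return {"api_key": env["GEMINI_API_KEY"]}


def main() -> None:
    """Send one prompt; needs `pip install google-genai` and valid credentials."""
    from google import genai

    client = genai.Client(**client_kwargs(dict(os.environ)))
    response = client.models.generate_content(
        model="gemini-2.0-flash",  # assumed model name; use any model you can access
        contents="In one sentence, what is the Gemini Developer API?",
    )
    print(response.text)
```

As far as we know, the SDK can also read these variables itself when `genai.Client()` is called with no arguments. Passing them explicitly just makes the back-end choice visible in code. Call `main()` only once credentials are set up.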

#AI development#AI SDK#AI tools#cloud AI#code generation#deep learning#function calling#Gemini API#generative AI#Google AI#Google Gen AI SDK#LLM integration#multimodal AI#Python 3.13#Vertex AI


"Artists have finally had enough with Meta’s predatory AI policies, but Meta’s loss is Cara’s gain. An artist-run, anti-AI social platform, Cara has grown from 40,000 to 650,000 users within the last week, catapulting it to the top of the App Store charts.

Instagram is a necessity for many artists, who use the platform to promote their work and solicit paying clients. But Meta is using public posts to train its generative AI systems, and only European users can opt out, since they’re protected by GDPR laws. Generative AI has become so front-and-center on Meta’s apps that artists reached their breaking point.

“When you put [AI] so much in their face, and then give them the option to opt out, but then increase the friction to opt out… I think that increases their anger level — like, okay now I’ve really had enough,” Jingna Zhang, a renowned photographer and founder of Cara, told TechCrunch.

Cara, which has both a web and mobile app, is like a combination of Instagram and X, but built specifically for artists. On your profile, you can host a portfolio of work, but you can also post updates to your feed like any other microblogging site.

Zhang is perfectly positioned to helm an artist-centric social network, where they can post without the risk of becoming part of a training dataset for AI. Zhang has fought on behalf of artists, recently winning an appeal in a Luxembourg court over a painter who copied one of her photographs, which she shot for Harper’s Bazaar Vietnam.

“Using a different medium was irrelevant. My work being ‘available online’ was irrelevant. Consent was necessary,” Zhang wrote on X.

Zhang and three other artists are also suing Google for allegedly using their copyrighted work to train Imagen, an AI image generator. She’s also a plaintiff in a similar lawsuit against Stability AI, Midjourney, DeviantArt and Runway AI.

“Words can’t describe how dehumanizing it is to see my name used 20,000+ times in MidJourney,” she wrote in an Instagram post. “My life’s work and who I am—reduced to meaningless fodder for a commercial image slot machine.”

Artists are so resistant to AI because the training data behind many of these image generators includes their work without their consent. These models amass such a large swath of artwork by scraping the internet for images, without regard for whether or not those images are copyrighted. It’s a slap in the face for artists – not only are their jobs endangered by AI, but that same AI is often powered by their work.

“When it comes to art, unfortunately, we just come from a fundamentally different perspective and point of view, because on the tech side, you have this strong history of open source, and people are just thinking like, well, you put it out there, so it’s for people to use,” Zhang said. “For artists, it’s a part of our selves and our identity. I would not want my best friend to make a manipulation of my work without asking me. There’s a nuance to how we see things, but I don’t think people understand that the art we do is not a product.”

This commitment to protecting artists from copyright infringement extends to Cara, which partners with the University of Chicago’s Glaze project. By using Glaze, artists who manually apply Glaze to their work on Cara have an added layer of protection against being scraped for AI.

Other projects have also stepped up to defend artists. Spawning AI, an artist-led company, has created an API that allows artists to remove their work from popular datasets. But that opt-out only works if the companies that use those datasets honor artists’ requests. So far, HuggingFace and Stability have agreed to respect Spawning’s Do Not Train registry, but artists’ work cannot be retroactively removed from models that have already been trained.

“I think there is this clash between backgrounds and expectations on what we put on the internet,” Zhang said. “For artists, we want to share our work with the world. We put it online, and we don’t charge people to view this piece of work, but it doesn’t mean that we give up our copyright, or any ownership of our work.”"

Read the rest of the article here:

https://techcrunch.com/2024/06/06/a-social-app-for-creatives-cara-grew-from-40k-to-650k-users-in-a-week-because-artists-are-fed-up-with-metas-ai-policies/


Anon wrote: hello! thank you for running this blog. i hope your vacation was well-spent!

i am an enfp in the third year of my engineering degree. i had initially wanted to do literature and become an author. however, due to the job security associated with this field, my parents got me to do computer science, specialising in artificial intelligence. i did think it was the end of my life at the time, but eventually convinced myself otherwise. after all, i could still continue reading and writing as hobbies.

now, three years in, i am having the same thoughts again. i've been feeling disillusioned from the whole gen-ai thing due to art theft issues and people using it to bypass - dare i say, outsource - creative work. also, the environmental impact of this technology is astounding. yet, every instructor tells us to use ai to get information that could easily be looked up in textbooks or google. what makes it worse is that i recently lost an essay competition to a guy who i know for a fact used chatgpt.

i can't help feeling that by working in this industry, i am becoming a part of the problem. at the same time, i feel like a conservative old person who is rejecting modern technology and griping about 'the good old days'.

another thing is that college work is just so all-consuming and tiring that i've barely read or written anything non-academic in the past few years. quitting my job and becoming a writer a few years down the road is seeming more and more like a doomed possibility.

i've been trying to do what i can at my level. i write articles about ethical considerations in ai for the college newsletter. i am in a technical events club, and am planning out an artificial intelligence introductory workshop for juniors where i will include these topics, if approved by the superiors.

from what i've read on your blog, it doesn't seem like you have a very high opinion of ai, either, but i've only seen you address it in terms of writing. i'd like to know, are there any ai applications that you find beneficial? i think that now that i am here, i could try to make a difference by working on projects that actually help people, rather than use some chatgpt api to do the same things, repackaged. i just felt like i need the perspective of someone who thinks differently than all those around me. not in a 'feed my tunnel-vision' way, but in a 'tell me i'm not stupid' way.

----------------------

It's kind of interesting (in the "isn't life wacky?" sort of way) that you chose the one field that has the potential to decimate the field you actually wanted to be in. I certainly understand your inner conflict and I'll give you my personal views, but I don't know how much they will help your decision making.

I'm of course concerned about the ramifications on writing not just because I'm a writer but because, from the perspective of education and personal growth, I understand the enormous value of writing skills. Learning to write analytically is challenging. I've witnessed many people meet that challenge bravely, and in the process, they became much more intelligent and thoughtful human beings, better able to contribute positively to society. So, it pains me to see the attitude of "don't have to learn it cuz the machine does it". However, writing doesn't encompass my full view on AI.

I wouldn't necessarily stereotype people who are against new technology as "old and conservative", though some of them are. My parents taught me to be an early adopter of new tech, but it doesn't mean I don't have reservations about it. I think, psychologically, the main reason people resist is because of the real threat it poses. Historically, we like to gloss over the real human suffering that results from technological advancement. But it is a reasonable and legitimate response to resist something that threatens your livelihood and even your very existence.

For example, it is already difficult enough to make a living in the arts, and AI just might make it impossible. Even if you do come up with something genuinely creative and valuable, how are you going to make a living with it? As soon as creative products are digitized, they just get scraped up, regurgitated, and disseminated to the masses with no credit or compensation given to the original creator. It's cannibalism. Cannibalism isn't sustainable.

I wonder if people can seriously imagine a society where human creativity in the arts has been made obsolete and people only have exposure to AI creation. There are plenty of people who don't fully grasp the value of human creativity, so they wouldn't mind it, but I would personally consider it to be a kind of hell.

I occasionally mention that my true passion is researching "meaning" and how people come to imbue their life with a sense of meaning. Creativity has a major role to play in 1) almost everything that makes life/living feel worthwhile, 2) generating a culture that is worth honoring and preserving, and 3) building a society that is worthy of devoting our efforts to.

Living in a capitalist society that treats people as mere tools of productivity and treats education as a mere means to a paycheck already robs us of so much meaning. In many ways, AI is a logical result of that mindset, of trying to "extract" whatever value humans have left to offer, until we are nothing but empty shells.

I don't think it's a coincidence that AI comes out of a society that devalues humanity to the point where a troubling portion of the population suffers marginalization, mental disorder, and/or feels existentially empty. Many of the arguments I've heard from AI proponents about how it can improve life sound to me like they're actually going to accelerate spiritual starvation.

Existential concerns are serious enough, before we even get to the environmental concerns. For me, environment is the biggest reason to be suspicious of AI and its true cost. I think too many people are unaware of the environmental impact of computing and networking in general, let alone running AI systems. I recently read about how much energy it takes to store all the forgotten chats, memes, and posts on social media. AI ramps up carbon emissions dramatically and wastes an already dwindling supply of fresh water.

Can we really afford a mass experiment with AI at a time when we are already hurtling toward climate catastrophe? When you think about how much AI is used for trivial entertainment or pointless busywork, it doesn't seem worth the environmental cost. I care about this enough that I try to reduce my digital footprint. But I'm just one person and most of the population is trending the other way.

With respect to integrating AI into personal life or everyday living, I struggle to see the value, often because those who might benefit the most are the ones who don't have access. Yes, I've seen some people have success with using AI to plan and organize, but I also always secretly wonder at how their life got to the point of needing that much outside help. Sure, AI may help with certain disadvantages such as learning or physical disabilities, but this segment of the population is usually the last to reap the benefits of technology.

More often than not, I see people using AI to lie, cheat, steal, and protect their own privilege. It's particularly sad for me to see people lying to themselves, e.g., believing that they're smart for using AI when they're actually making themselves stupider, or thinking that an AI companion can replace real human relationship.

I continue to believe that releasing AI into the wild, without developing proper safeguards, was the biggest mistake made so far. The revolts at OpenAI prove, once again, that companies cannot be trusted to regulate themselves. Tech companies need a constant stream of data to feed the beast and they're willing to sacrifice our well-being to do it. It seems the only thing we can do as individuals is stop offering up our data, but that's not going to happen en masse.

Even though you're aware of these issues, I want to mention them for those who aren't, and for the sake of emphasizing just how important it is to regulate AI and limit its use to the things that are most likely to produce a benefit to humanity, in terms of actually improving quality of human life in concrete terms.

In my opinion, the most worthwhile place to use AI is medicine and medical research. For example, aggregating and analyzing information for doctors, assisting surgeons with difficult procedures, and coming up with new possibilities for vaccines, treatments, and cures is where I'd like to see AI shine. I'd also love to see AI applied to:

- scientific research, to help scientists sort, manage, and process huge amounts of information
- educational resources, to help learners find quality information more efficiently, rather than feeding them misinformation
- engineering and design, to build more sustainable infrastructure
- space exploration, to find better ways of traveling through space or surviving on other planets
- statistical analysis, to help policymakers take a more objective look at whether solutions are actually working as intended, as opposed to being blinded by wishful thinking, bias, hubris, or ideology (I recognize this point is controversial since AI can be biased as well)

Even though you work in the field, you're still only one person, so you don't have that much more power than anyone else to change its direction. There's no putting the worms back in the can at this point. I agree with you that, for the sake of your well-being, staying in the field means choosing your work carefully. However, if you want to work for an organization that doesn't sacrifice people at the altar of profit, it might be slim pickings and the pay might not be great. Staying true to your values can be costly too.


Google Flight Price Tracking helps users monitor flight prices in real time, allowing them to track changes and receive notifications when fares drop. It's a convenient tool for finding the best deals and planning trips more affordably.

#serphouse#seo#google serp api#serpdata#serp scraping api#api#google#google search api#bing#ai content#content creator#focus


Major technology companies, including Google, Apple, and Discord, have been enabling people to quickly sign up to harmful “undress” websites, which use AI to remove clothes from real photos to make victims appear to be “nude” without their consent. More than a dozen of these deepfake websites have been using login buttons from the tech companies for months.

A WIRED analysis found 16 of the biggest so-called undress and “nudify” websites using the sign-in infrastructure from Google, Apple, Discord, Twitter, Patreon, and Line. This approach allows people to easily create accounts on the deepfake websites—offering them a veneer of credibility—before they pay for credits and generate images.

While bots and websites that create nonconsensual intimate images of women and girls have existed for years, the number has increased with the introduction of generative AI. This kind of “undress” abuse is alarmingly widespread, with teenage boys allegedly creating images of their classmates. Tech companies have been slow to deal with the scale of the issues, critics say, with the websites appearing highly in search results, paid advertisements promoting them on social media, and apps showing up in app stores.

“This is a continuation of a trend that normalizes sexual violence against women and girls by Big Tech,” says Adam Dodge, a lawyer and founder of EndTAB (Ending Technology-Enabled Abuse). “Sign-in APIs are tools of convenience. We should never be making sexual violence an act of convenience,” he says. “We should be putting up walls around the access to these apps, and instead we're giving people a drawbridge.”

The sign-in tools analyzed by WIRED, which are deployed through APIs and common authentication methods, allow people to use existing accounts to join the deepfake websites. Google’s login system appeared on 16 websites, Discord’s appeared on 13, and Apple’s on six. X’s button was on three websites, with Patreon and messaging service Line’s both appearing on the same two websites.

WIRED is not naming the websites, since they enable abuse. Several are part of wider networks and owned by the same individuals or companies. The login systems have been used despite the tech companies broadly having rules that state developers cannot use their services in ways that would enable harm or harassment, or invade people’s privacy.

After being contacted by WIRED, spokespeople for Discord and Apple said they have removed the developer accounts connected to the websites. Google said it will take action against developers when it finds its terms have been violated. Patreon said it prohibits accounts that allow explicit imagery to be created, and Line confirmed it is investigating but said it could not comment on specific websites. X did not reply to a request for comment about the way its systems are being used.

In the hours after Jud Hoffman, Discord vice president of trust and safety, told WIRED it had terminated the websites’ access to its APIs for violating its developer policy, one of the undress websites posted in a Telegram channel that authorization via Discord was “temporarily unavailable” and claimed it was trying to restore access. That undress service did not respond to WIRED’s request for comment about its operations.

Rapid Expansion

Since deepfake technology emerged toward the end of 2017, the number of nonconsensual intimate videos and images being created has grown exponentially. While videos are harder to produce, the creation of images using “undress” or “nudify” websites and apps has become commonplace.

“We must be clear that this is not innovation, this is sexual abuse,” says David Chiu, San Francisco’s city attorney, who recently filed a lawsuit against undress and nudify websites and their creators. Chiu says the 16 websites his office’s lawsuit focuses on have had around 200 million visits in the first six months of this year alone. “These websites are engaged in horrific exploitation of women and girls around the globe. These images are used to bully, humiliate, and threaten women and girls,” Chiu alleges.

The undress websites operate as businesses, often running in the shadows—proactively providing very few details about who owns them or how they operate. Websites run by the same people often look similar and use nearly identical terms and conditions. Some offer more than a dozen different languages, demonstrating the worldwide nature of the problem. Some Telegram channels linked to the websites have tens of thousands of members each.

The websites are also under constant development: They frequently post about new features they are producing, with one claiming its AI can customize how women’s bodies look and allow “uploads from Instagram.” The websites generally charge people to generate images and can run affiliate schemes to encourage people to share them; some have pooled together into a collective to create their own cryptocurrency that could be used to pay for images.

A person identifying themself as Alexander August, the CEO of one of the websites, responded to WIRED, saying they “understand and acknowledge the concerns regarding the potential misuse of our technology.” The person claims the website has put in place various safety mechanisms to prevent images of minors being created. “We are committed to taking social responsibility and are open to collaborating with official bodies to enhance transparency, safety, and reliability in our services,” they wrote in an email.

The tech company logins are often presented when someone tries to sign up to the site or clicks on buttons to try generating images. It is unclear how many people have used the login methods, and most websites also allow people to create accounts with just their email address. However, of the websites reviewed, the majority had implemented the sign-in APIs of more than one technology company, with Sign-In With Google being the most widely used. When this option is clicked, prompts from the Google system say the website will get people’s name, email addresses, language preferences, and profile picture.

Google’s sign-in system also reveals some information about the developer accounts linked to a website. For example, four websites are linked to one Gmail account; another six websites are linked to another. “In order to use Sign in with Google, developers must agree to our Terms of Service, which prohibits the promotion of sexually explicit content as well as behavior or content that defames or harasses others,” says a Google spokesperson, adding that “appropriate action” will be taken if these terms are broken.

Other tech companies that had sign-in systems being used said they have banned accounts after being contacted by WIRED.

Hoffman from Discord says that as well as taking action on the websites flagged by WIRED, the company will “continue to address other websites we become aware of that violate our policies.” Apple spokesperson Shane Bauer says it has terminated multiple developers’ licenses with Apple, and that Sign In With Apple will no longer work on their websites. Adiya Taylor, corporate communications lead at Patreon, says it prohibits accounts that allow or fund access to external tools that can produce adult materials or explicit imagery. “We will take action on any works or accounts on Patreon that are found to be in violation of our Community Guidelines.”

As well as the login systems, several of the websites displayed the logos of Mastercard or Visa, suggesting the cards could be used to pay for their services. Visa did not respond to WIRED’s request for comment, while a Mastercard spokesperson says “purchases of nonconsensual deepfake content are not allowed on our network,” and that it takes action when it detects or is made aware of any instances.

On multiple occasions, tech companies and payment providers have taken action against AI services allowing people to generate nonconsensual images or video after media reports about their activities. Clare McGlynn, a professor of law at Durham University who has expertise in the legal regulation of pornography and sexual violence and abuse online, says Big Tech platforms are enabling the growth of undress websites and similar sites by not proactively taking action against them.

“What is concerning is that these are the most basic of security steps and moderation that are missing or not being enforced,” McGlynn says of the sign-in systems being used, adding that it is “wholly inadequate” for companies to react when journalists or campaigners highlight how their rules are being easily dodged. “It is evident that they simply do not care, despite their rhetoric,” McGlynn says. “Otherwise they would have taken these most simple steps to reduce access.”


Experiment #2.2 Doubling Down: Two Google Gemini AI Apps in 30 Days – My Journey

Hello everyone! 👋 Yesterday, I shared my pivot from my initial app idea due to a saturated market. This led me to explore new horizons with the Google Gemini API. Today, I’m thrilled to announce an even bolder challenge: developing two apps in the next 30 days!

Two Apps, Two Purposes

Public Project: Your Guide to AI App Development. My original concept, a goal-setting app, will continue…

#30-Day Challenge#AI App Development#AI-Powered Apps#App Development Challenge#App Development Process#Behind the Scenes#Building in Public#Goal-Setting Apps#Google AI Tools#Google Gemini API#Indie Developer#Patreon Exclusive#Solo Developer#Startup Journey#Tech Entrepreneur


Google Gemini: The Ultimate Guide to the Most Advanced AI Model Ever


Google Gemini: A Revolutionary AI Model that Can Shape the Future of Technology and Society

Artificial intelligence (AI) is one of the most exciting and rapidly evolving fields of technology today. From personal assistants to self-driving cars, AI is transforming various aspects of our lives and society. However, the current state of AI is still far from achieving human-like intelligence and…


#AI ethics#AI model#AI research#API integration#artificial intelligence#business#creative content generation#discovery#Education#google gemini#language model#learning#marketing#memory#multimodal AI#personal assistants#planning#productivity tools#scientific research#tool integration
