#CSIDriver


Filestore update: GKE stateful workload improvements

Filestore is widely used by clients running stateful apps on Google Kubernetes Engine (GKE). See this blog for background information on the advantages of combining the two.

Fully managed file storage from Google Cloud, Filestore is a multi-reader, multi-writer solution that is independent of computing virtual machines (VMs), making it resistant to VM failures or changes.

Filestore’s Enterprise and Zonal (previously known as High Scale) tiers are built on Filestore’s modern platform, which uses a scale-out architecture and was designed from the ground up for performance, availability, and durability. To guarantee high durability inside a zone, customer data is stored twice, and Zonal provides a 99.9% availability SLA. Enterprise is a regional service that offers 99.99% availability and replicates data synchronously across three zones within a region. Even better, Google lowered Filestore prices in October by up to 25% for the Zonal, Enterprise, and Basic HDD tiers (Basic SSD pricing stays the same). No action is needed, as the new, reduced prices are applied automatically.

Filestore is a fully managed service that is integrated with GKE through the GKE CSI driver, with new features, functionality, and GKE integrations added on a regular basis. Read on to discover three newly released features that are now generally available.

1. CSI driver support for Filestore Zonal high capacity (100TiB)

Google’s high-capacity Zonal tier, combined with GKE’s new CSI driver integration, scales capacity and performance linearly to match your high-capacity and high-performance needs, up to 100TiB per instance. The integration starts at 10TiB. Large-scale AI/ML training frameworks such as PyTorch and TensorFlow that require a file interface can benefit from Filestore Zonal’s high throughput (up to 26GiB/s). It also offers non-disruptive updates and 1,000 NFS connections per 10TiB. With up to 10,000 concurrent NFS connections, it supports large-scale GKE deployments and demanding multi-writer AI/ML workloads.
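The connection figures above can be turned into a quick back-of-the-envelope calculation. This is only a sketch: `zonal_nfs_connections` is a hypothetical helper (not a gcloud or GKE command), and it assumes the limit grows in whole 10TiB steps, which the text implies but does not state outright.

```shell
#!/bin/sh
# Sketch: estimate the NFS connection limit of a Filestore Zonal instance,
# assuming 1,000 connections per full 10 TiB of capacity (assumption: any
# partial 10 TiB step is rounded down).
zonal_nfs_connections() {
    capacity_tib=$1
    # The GKE CSI integration described above covers 10 TiB to 100 TiB.
    if [ "$capacity_tib" -lt 10 ] || [ "$capacity_tib" -gt 100 ]; then
        echo "capacity must be between 10 and 100 TiB" >&2
        return 1
    fi
    echo $(( capacity_tib / 10 * 1000 ))
}

zonal_nfs_connections 10    # smallest high-capacity instance
zonal_nfs_connections 100   # largest instance
```

At the two ends of the range this gives 1,000 and 10,000 connections, matching the numbers quoted above.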

The CSI driver integrations that Google previously offered for Filestore Enterprise and Filestore Basic are now joined by a CSI driver integration for Filestore Zonal. You can now select the ideal Filestore tier according to your needs for capacity, performance, and data protection (such as backups and snapshots). Keep in mind that while the Filestore Basic tier does not offer non-disruptive upgrades, Filestore Enterprise does.

2. Backups

Google is pleased to announce the release of CSI-integrated backup for Filestore Enterprise.

Clients can now safeguard Filestore Enterprise volumes in the same manner that they were able to safeguard their data on Filestore Basic volumes using the Volume Snapshots API. It’s crucial to note that the VolumeSnapshot API’s name is a little deceptive because, unlike what the name suggests, it’s a means for backing up data rather than a local file system snapshot. For both Filestore Basic and Enterprise, the procedure for initiating a backup via the API is the same.

Once you have backups of your existing Enterprise volumes, you can use a snapshot as a data source to create new volumes. This new capability lets you back up and restore your instances straight from the CSI driver. The feature is offered for single-share deployments on the Enterprise tier. At this time, Enterprise Multishare configurations do not support the Volume Snapshots API.

3. Smaller-capacity multishares in Filestore Enterprise

Google’s efficient multishare instances from last year, which let users split a 1TiB instance into several 100GiB persistent volumes to increase storage utilization, are well-liked by GKE and Filestore Enterprise clients.

Google is pleased to present the next iteration of its multishare capability, which is only accessible via the GKE CSI driver on the Enterprise tier. With multishares, you can start with a minimum share (persistent volume) capacity as low as 10GiB (down from 100GiB previously), and you can divide your Enterprise instance into as many as 80 shares (up from 10 shares previously).

Persistent storage for your GKE stateful workloads with Enterprise Multishares is not only very performant and highly durable (99.99% SLA), but it’s also very efficient, providing complete instance utilization of up to 80 shares per instance. Find out more about how to use the enhanced multishares functionality to boost the effectiveness of your GKE storage here.
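To see why the smaller minimum share size matters, the limits quoted above can be compared with a little arithmetic. This is a minimal sketch: `max_shares` is a hypothetical helper, and it assumes a 1TiB instance is 1,024GiB and that every share is created at the minimum size.

```shell
#!/bin/sh
# Sketch: how many minimum-size shares fit on one Enterprise instance.
# Arguments: instance size in GiB, share size in GiB, per-instance share cap.
max_shares() {
    instance_gib=$1
    share_gib=$2
    share_cap=$3
    # Shares that fit by capacity alone (integer division rounds down).
    by_capacity=$(( instance_gib / share_gib ))
    # The per-instance share limit wins if it is the smaller number.
    if [ "$by_capacity" -gt "$share_cap" ]; then
        echo "$share_cap"
    else
        echo "$by_capacity"
    fi
}

max_shares 1024 100 10   # previous limits: 100GiB minimum, 10 shares
max_shares 1024 10 80    # new limits: 10GiB minimum, 80 shares
```

Under these assumptions a 1TiB instance goes from at most 10 persistent volumes to at most 80, with the share cap (not capacity) becoming the limiting factor.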

Stateful workloads, the Filestore way

Filestore is a fully managed NFS storage solution that is integrated with GKE. Google is still working to enable large-scale, demanding GKE stateful workloads like AI/ML training, and has added the Filestore Zonal CSI integration, made the Volume Snapshot API available for Filestore Enterprise, and provided more granular Filestore Enterprise Multishare persistent volume support.

Read more on Govindhtech.com


Red Hat OpenShift Container Platform is a powerful platform created to provide IT organizations and developers with a hybrid cloud application platform. With this secure and scalable platform, you can deploy containerized applications with minimal configuration and management overhead. In this article we look at how you can perform an upgrade from OpenShift 4.8 to OpenShift 4.9.

OpenShift Container Platform 4.9 is supported on Red Hat Enterprise Linux CoreOS (RHCOS) 4.9, as well as on Red Hat Enterprise Linux (RHEL) 8.4 and 7.9. Red Hat recommends you run RHCOS machines on the control plane nodes, and either RHCOS or RHEL 8.4+/7.9 on the compute machines.

The Kubernetes version used in OpenShift Container Platform 4.9 is 1.22. In Kubernetes 1.22, a significant number of deprecated v1beta1 APIs were removed. OpenShift Container Platform 4.8.14 introduced a requirement that an administrator provide a manual acknowledgment before the cluster can be upgraded from OpenShift Container Platform 4.8 to 4.9. This helps to prevent issues after an upgrade to OpenShift Container Platform 4.9, where removed APIs are still in use by workloads and components in the cluster.
Removed Kubernetes APIs in OpenShift 4.9

The following is a list of the deprecated v1beta1 APIs that are removed in OpenShift Container Platform 4.9, which uses Kubernetes 1.22:

| Resource | API | Notable changes |
|---|---|---|
| APIService | apiregistration.k8s.io/v1beta1 | No |
| CertificateSigningRequest | certificates.k8s.io/v1beta1 | Yes |
| ClusterRole | rbac.authorization.k8s.io/v1beta1 | No |
| ClusterRoleBinding | rbac.authorization.k8s.io/v1beta1 | No |
| CSIDriver | storage.k8s.io/v1beta1 | No |
| CSINode | storage.k8s.io/v1beta1 | No |
| CustomResourceDefinition | apiextensions.k8s.io/v1beta1 | Yes |
| Ingress | extensions/v1beta1 | Yes |
| Ingress | networking.k8s.io/v1beta1 | Yes |
| IngressClass | networking.k8s.io/v1beta1 | No |
| Lease | coordination.k8s.io/v1beta1 | No |
| LocalSubjectAccessReview | authorization.k8s.io/v1beta1 | Yes |
| MutatingWebhookConfiguration | admissionregistration.k8s.io/v1beta1 | Yes |
| PriorityClass | scheduling.k8s.io/v1beta1 | No |
| Role | rbac.authorization.k8s.io/v1beta1 | No |
| RoleBinding | rbac.authorization.k8s.io/v1beta1 | No |
| SelfSubjectAccessReview | authorization.k8s.io/v1beta1 | Yes |
| StorageClass | storage.k8s.io/v1beta1 | No |
| SubjectAccessReview | authorization.k8s.io/v1beta1 | Yes |
| TokenReview | authentication.k8s.io/v1beta1 | No |
| ValidatingWebhookConfiguration | admissionregistration.k8s.io/v1beta1 | Yes |
| VolumeAttachment | storage.k8s.io/v1beta1 | No |

Table: v1beta1 APIs removed from Kubernetes 1.22

You are required to migrate manifests and API clients to use the v1 API version. More information on migrating deprecated APIs can be found in the official Kubernetes documentation.

1) Evaluate OpenShift 4.8 Cluster for removed APIs

It is the responsibility of the Kubernetes/OpenShift administrator to properly evaluate all workloads and other integrations to identify where APIs removed in Kubernetes 1.22 are in use. Ensure you’re on OpenShift 4.8 before trying to perform an upgrade to 4.9.
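Besides checking the live cluster, manifests kept in a repository can be scanned for the API versions in the table above. The following is a rough sketch rather than an official tool: `find_removed_apis` is a hypothetical helper, and it matches on `apiVersion` alone, so a hit is only a candidate (a group/version may also serve kinds that are not in the table).

```shell
#!/bin/sh
# API group/versions taken from the removed-APIs table above.
REMOVED_APIS='admissionregistration.k8s.io/v1beta1
apiextensions.k8s.io/v1beta1
apiregistration.k8s.io/v1beta1
authentication.k8s.io/v1beta1
authorization.k8s.io/v1beta1
certificates.k8s.io/v1beta1
coordination.k8s.io/v1beta1
extensions/v1beta1
networking.k8s.io/v1beta1
rbac.authorization.k8s.io/v1beta1
scheduling.k8s.io/v1beta1
storage.k8s.io/v1beta1'

# Recursively list manifest lines whose apiVersion is one of the removed ones.
# Output format is grep's usual file:line:text.
find_removed_apis() {
    dir=$1
    # Join the list into a regex alternation: a|b|c
    alternation=$(printf '%s' "$REMOVED_APIS" | tr '\n' '|')
    # Anchor on the whole apiVersion line so that, for example,
    # snapshot.storage.k8s.io/v1beta1 is not reported as storage.k8s.io/v1beta1.
    grep -rnE "^[[:space:]]*apiVersion:[[:space:]]*[\"']?(${alternation})[\"']?[[:space:]]*\$" "$dir"
}
```

Running `find_removed_apis ./manifests` (the directory name is just an example) prints each offending file and line; an empty result with a non-zero exit status means nothing matched.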
The following command helps you identify the OCP release:

    $ oc get clusterversions.config.openshift.io
    NAME      VERSION   AVAILABLE   PROGRESSING   SINCE   STATUS
    version   4.8.15    True        False         5h45m   Cluster version is 4.8.15

You can then use the APIRequestCount API to track API requests and see whether any of them use one of the removed APIs. The following command can be used to identify APIs that will be removed in a future release but are currently in use. Focus on the REMOVEDINRELEASE output column.

    $ oc get apirequestcounts
    NAME                           REMOVEDINRELEASE   REQUESTSINCURRENTHOUR   REQUESTSINLAST24H
    ingresses.v1beta1.extensions   1.22               2                       364

The results can be filtered further by using the -o jsonpath option:

    oc get apirequestcounts -o jsonpath='{range .items[?(@.status.removedInRelease!="")]}{.status.removedInRelease}{"\t"}{.metadata.name}{"\n"}{end}'

Example output:

    $ oc get apirequestcounts -o jsonpath='{range .items[?(@.status.removedInRelease!="")]}{.status.removedInRelease}{"\t"}{.metadata.name}{"\n"}{end}'
    1.22    ingresses.v1beta1.extensions

With the API identified, you can examine the APIRequestCount resource for a given API version to help identify which workloads are using the API.

    oc get apirequestcounts <resource>.<version>.<group> -o yaml

Example for the ingresses.v1beta1.extensions API:

    $ oc get apirequestcounts ingresses.v1beta1.extensions
    NAME                           REMOVEDINRELEASE   REQUESTSINCURRENTHOUR   REQUESTSINLAST24H
    ingresses.v1beta1.extensions   1.22               3                       365

Migrate instances of removed APIs

For more information on migrating removed Kubernetes APIs, see the Deprecated API Migration Guide in the Kubernetes documentation.

2) Acknowledge Upgrade to OpenShift Container Platform 4.9

After the evaluation and migration of removed Kubernetes APIs to v1 is complete, as an OpenShift administrator you can provide the acknowledgment required to proceed.

WARNING: It is the sole responsibility of the administrator to ensure all uses of removed APIs are resolved and the necessary migrations performed before providing this administrator acknowledgment.

Run the following command to acknowledge that you have completed the evaluation and the cluster can be upgraded to OpenShift Container Platform 4.9:

    oc -n openshift-config patch cm admin-acks --patch '{"data":{"ack-4.8-kube-1.22-api-removals-in-4.9":"true"}}' --type=merge

Expected command output:

    configmap/admin-acks patched

3) Begin Upgrade from OpenShift 4.8 to 4.9

Log in to the OpenShift Web Console and navigate to Administration → Cluster Settings → Details.

Click on “Channel” and update the channel to fast-4.9 or stable-4.9. Select fast-4.9 or stable-4.9 from the list of available channels.
The channel can also be changed from the command line with the following command syntax:

    oc patch clusterversion version --type json -p '[{"op": "add", "path": "/spec/channel", "value": "<channel>"}]'

Where <channel> is replaced with one of:

    stable-4.9
    fast-4.9
    candidate-4.9

New cluster updates should be visible now. Use the “Update” link to initiate the upgrade from OpenShift 4.8 to OpenShift 4.9.

Select the new version of OpenShift 4.9 that you’ll be upgrading to. The update process to OpenShift Container Platform 4.9 should begin shortly.

You can also check the upgrade status from the CLI:

    $ oc get clusterversions.config.openshift.io
    NAME      VERSION   AVAILABLE   PROGRESSING   SINCE   STATUS
    version   4.8.15    True        True          2m26s   Working towards 4.9.0: 71 of 735 done (9% complete)

Once all the upgrades are completed, you have an OpenShift cluster at version 4.9.

List all the available MachineHealthCheck resources to ensure everything is in a healthy state.

    $ oc get machinehealthcheck -n openshift-machine-api
    NAME                              MAXUNHEALTHY   EXPECTEDMACHINES   CURRENTHEALTHY
    machine-api-termination-handler   100%

Check cluster components:

    $ oc get cs
    W1110 20:39:28.838732 2592723 warnings.go:70] v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE                         ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-3               Healthy   {"health":"true","reason":""}
    etcd-1               Healthy   {"health":"true","reason":""}
    etcd-0               Healthy   {"health":"true","reason":""}
    etcd-2               Healthy   {"health":"true","reason":""}

List all cluster operators and review current versions.

    $ oc get co
    NAME                                       VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE   MESSAGE
    authentication                             4.9.5     True        False         False      32h
    baremetal                                  4.9.5     True        False         False      84d
    cloud-controller-manager                   4.9.5     True        False         False      20d
    cloud-credential                           4.9.5     True        False         False      84d
    cluster-autoscaler                         4.9.5     True        False         False      84d
    config-operator                            4.9.5     True        False         False      84d
    console                                    4.9.5     True        False         False      37d
    csi-snapshot-controller                    4.9.5     True        False         False      84d
    dns                                        4.9.5     True        False         False      84d
    etcd                                       4.9.5     True        False         False      84d
    image-registry                             4.9.5     True        False         False      84d
    ingress                                    4.9.5     True        False         False      84d
    insights                                   4.9.5     True        False         False      84d
    kube-apiserver                             4.9.5     True        False         False      84d
    kube-controller-manager                    4.9.5     True        False         False      84d
    kube-scheduler                             4.9.5     True        False         False      84d
    kube-storage-version-migrator              4.9.5     True        False         False      8d
    machine-api                                4.9.5     True        False         False      84d
    machine-approver                           4.9.5     True        False         False      84d
    machine-config                             4.9.5     True        False         False      8d
    marketplace                                4.9.5     True        False         False      84d
    monitoring                                 4.9.5     True        False         False      20d
    network                                    4.9.5     True        False         False      84d
    node-tuning                                4.9.5     True        False         False      8d
    openshift-apiserver                        4.9.5     True        False         False      20d
    openshift-controller-manager               4.9.5     True        False         False      7d8h
    openshift-samples                          4.9.5     True        False         False      8d
    operator-lifecycle-manager                 4.9.5     True        False         False      84d
    operator-lifecycle-manager-catalog         4.9.5     True        False         False      84d
    operator-lifecycle-manager-packageserver   4.9.5     True        False         False      20d
    service-ca                                 4.9.5     True        False         False      84d
    storage                                    4.9.5     True        False         False      84d

Check that all nodes are available and in a healthy state.

    $ oc get nodes

Conclusion

In this blog post we performed an upgrade of OpenShift from version 4.8 to 4.9. Ensure all Operators previously installed through Operator Lifecycle Manager (OLM) are updated to their latest version in their latest channel as selected during the upgrade. Also ensure that all machine config pools (MCPs) are running and not paused. For any issues experienced during the upgrade you can reach out to us through our comments section.
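Scanning a list of 30-odd cluster operators by eye is error-prone, so the post-upgrade operator check can be scripted. The sketch below is an illustration only: `unhealthy_operators` is a hypothetical helper that reads the plain-text output of `oc get co` on standard input and assumes the column order shown above (NAME, VERSION, AVAILABLE, PROGRESSING, DEGRADED).

```shell
#!/bin/sh
# Print the name of every cluster operator that is not at the expected
# version, is not Available, is still Progressing, or is Degraded.
# Input: the plain-text table printed by `oc get co`, on stdin.
unhealthy_operators() {
    expected_version=$1
    awk -v want="$expected_version" '
        NR == 1 { next }     # skip the header row
        NF == 0 { next }     # skip blank lines
        $2 != want || $3 != "True" || $4 != "False" || $5 != "False" { print $1 }
    '
}
```

Against a live cluster this would be used as `oc get co | unhealthy_operators 4.9.5`; no output means every operator reports the expected version and a healthy state.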


Kubernetes 1.16 released

Finally it’s the weekend. Peace and quiet to indulge yourself in a new Kubernetes release! Many others have beaten me to it, and great overviews are available from various sources.

The most exciting thing for me in Kubernetes 1.16 is the graduation of many alpha CSI features to beta. This removes the friction of tinkering with the feature gates on either the kubelet or API server, which is a pet peeve of mine and makes me moan out loud when I find out something doesn't work because of it.

TL;DR

All these features have already been demonstrated with the HPE CSI Driver for Kubernetes, it starts about 7 minutes in, I’ve fast forwarded it for you.

At the Helm

Let’s showcase these graduated features with the newly released HPE CSI Driver for Kubernetes. Be warned, issues ahead. Helm is not quite there yet on Kubernetes 1.16, a fix to deploy Tiller on your cluster is available here. Next issue up is that the HPE CSI Driver Helm chart is not yet compatible with Kubernetes 1.16. I’m graciously and temporarily hosting a copy on my GitHub account.

Create a values.yaml file:

    backend: 192.168.1.10 # This is your Nimble array
    username: admin
    password: admin
    servicePort: "8080"
    serviceName: nimble-csp-svc
    fsType: xfs
    accessProtocol: "iscsi"
    storageClass:
      create: false

Helm your way on your Kubernetes 1.16 cluster:

    helm repo add hpe https://drajen.github.io/co-deployments-116
    helm install --name hpe-csi hpe/hpe-csi-driver --namespace kube-system -f values.yaml

In my examples repo I’ve dumped a few declarations that I used to walk through these features. When I'm referencing a YAML file name, this is where to find it.

VolumePVCDataSource

This is a very useful capability when you’re interested in creating a clone of an existing PVC in the current state. I’m surprised to see this feature mature to beta before VolumeSnapshotDataSource which has been around for much longer.

Assuming you have an existing PVC named “my-pvc”:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc-clone
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 32Gi
      dataSource:
        kind: PersistentVolumeClaim
        name: my-pvc
      storageClassName: my-storageclass

Let’s cuddle:

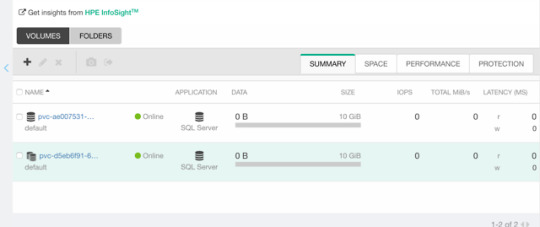

    $ kubectl create -f pvc.yaml
    persistentvolumeclaim/my-pvc created
    $ kubectl create -f pvc-clone.yaml
    persistentvolumeclaim/my-pvc-clone created
    $ kubectl get pvc
    NAME           STATUS   VOLUME          CAPACITY   STORAGECLASS      AGE
    my-pvc         Bound    pvc-ae0075...   10Gi       my-storageclass   34s
    my-pvc-clone   Bound    pvc-d5eb6f...   10Gi       my-storageclass   14s

On the Nimble array, we can indeed observe we have a clone of the dataSource.

ExpandCSIVolumes and ExpandInUsePersistentVolumes

This is indeed a very welcome addition to be promoted, as the lack of it has been among the top complaints from users. This is stupid easy to use. Simply edit or patch your existing PVC to expand your PV.

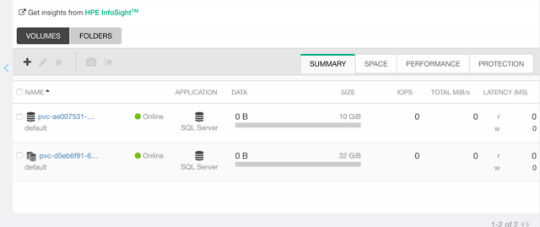

    $ kubectl patch pvc/my-pvc-clone -p '{"spec": {"resources": {"requests": {"storage": "32Gi"}}}}'
    persistentvolumeclaim/my-pvc-clone patched
    $ kubectl get pv
    NAME           CAPACITY   CLAIM                  STORAGECLASS      AGE
    pvc-d5eb6...   32Gi       default/my-pvc-clone   my-storageclass   9m25s

Yes, you can expand clones, no problem.
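If you expand volumes often, typing the nested JSON by hand gets old quickly. Here is a small sketch of a wrapper that builds the same patch document used above; `pvc_resize_patch` is a hypothetical helper name, and the character check is only a rough sanity filter, not a full validation of Kubernetes quantities.

```shell
#!/bin/sh
# Build the strategic-merge patch that requests a new size for a PVC.
# Prints the JSON on stdout; fails if the size looks malformed.
pvc_resize_patch() {
    size=$1
    case $size in
        ''|*[!0-9A-Za-z.]*)
            echo "invalid size: '$size'" >&2
            return 1
            ;;
    esac
    printf '{"spec": {"resources": {"requests": {"storage": "%s"}}}}\n' "$size"
}

pvc_resize_patch 32Gi
```

The output can be passed straight to the command from the example, as in `kubectl patch pvc/my-pvc-clone -p "$(pvc_resize_patch 32Gi)"`.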

CSIInlineVolume

One of my favorite features of our legacy FlexVolume is the ability to create Inline Ephemeral Clones for CI/CD pipelines: create a point-in-time copy of a volume, do some work and/or tests on it, and dispose of it. Leave no trace behind.

If this is something you’d like to walk through, there are a few prerequisite steps here. The Helm chart does not create the CSIDriver object. It needs to be applied first:

    apiVersion: storage.k8s.io/v1beta1
    kind: CSIDriver
    metadata:
      name: csi.hpe.com
    spec:
      podInfoOnMount: true
      volumeLifecycleModes:
        - Persistent
        - Ephemeral

Next, the current behavior (subject to change) is that you need a secret for the CSI driver in the namespace you’re deploying to. This is a oneliner to copy from “kube-system” to your current namespace.

    $ kubectl get -nkube-system secret/nimble-secret -o yaml | \
        sed -e 's/namespace: kube-system//' | \
        kubectl create -f-

Now, assume we have deployed a MariaDB and have that running elsewhere. This example clones the actual Nimble volume. In essence, the volume may reside on a different Kubernetes cluster or be hosted on a bare-metal server or virtual machine.

For clarity, the Deployment I’m cloning this volume from is using a secret, I’m using that same secret hosted in dep.yaml.

    apiVersion: v1
    kind: Pod
    metadata:
      name: mariadb-ephemeral
    spec:
      containers:
        - image: mariadb:latest
          name: mariadb
          env:
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mariadb
                  key: password
          ports:
            - containerPort: 3306
              name: mariadb
          volumeMounts:
            - name: mariadb-persistent-storage
              mountPath: /var/lib/mysql
      volumes:
        - name: mariadb-persistent-storage
          csi:
            driver: csi.hpe.com
            nodePublishSecretRef:
              name: nimble-secret
            volumeAttributes:
              cloneOf: pvc-ae007531-e315-4b81-b708-99778fa1ba87

The magic sauce here is of course the .volumes.csi stanza where you specify the driver and your volumeAttributes. Any Nimble StorageClass parameter is supported in volumeAttributes.

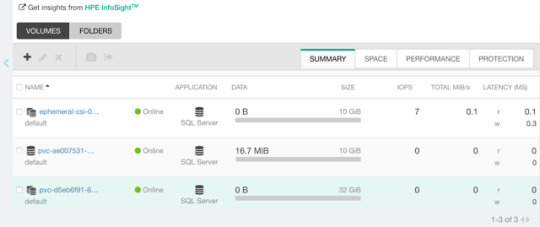

Once cuddled, you can observe the volume on the Nimble array.

CSIBlockVolume

I’ve visited this feature before in my Frankenstein post where I cobbled together a corosync and pacemaker cluster running as a workload on Kubernetes backed by a ReadWriteMany block device.

A tad bit more mellow example is the same example we used for the OpenShift demos in the CSI driver beta video (fast forwarded).

Creating a block volume is very simple (if the driver supports it). By default, volumes are created with the attribute volumeMode: Filesystem. Simply switch this to Block:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc-block
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 32Gi
      volumeMode: Block
      storageClassName: my-storageclass

Once cuddled, you may reference the PVC as any other PVC, but pay attention to the .spec.containers stanza:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
    spec:
      containers:
        - name: ioping
          image: hpestorage/ioping
          command: [ "ioping" ]
          args: [ "/dev/xvda" ]
          volumeDevices:
            - name: data
              devicePath: /dev/xvda
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: my-pvc-block

Normally you would specify volumeMounts and mountPath for a PVC created with volumeMode: Filesystem.

Running this particular Pod using ioping would indeed indicate that we connected a block device:

    kubectl logs my-pod -f
    4 KiB <<< /dev/xvda (block device 32 GiB): request=1 time=3.71 ms (warmup)
    4 KiB <<< /dev/xvda (block device 32 GiB): request=2 time=1.73 ms
    4 KiB <<< /dev/xvda (block device 32 GiB): request=3 time=1.32 ms
    4 KiB <<< /dev/xvda (block device 32 GiB): request=4 time=1.06 ms
    ^C
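If you want a single number out of a log like this, a few lines of awk will do. This is a quick sketch, not part of ioping: `ioping_avg_ms` is a hypothetical helper, it skips the warm-up request, and it assumes every `time=` value is reported in milliseconds as in the log above.

```shell
#!/bin/sh
# Average the per-request latency from ioping log lines read on stdin.
# Assumption: all time= values use the same unit (ms in the log above).
ioping_avg_ms() {
    awk '
        /\(warmup\)/ { next }                  # ignore the warm-up request
        {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^time=/) {
                    split($i, kv, "=")         # kv[2] holds the number
                    sum += kv[2]
                    n++
                }
            }
        }
        END { if (n > 0) printf "%.2f\n", sum / n }
    '
}
```

Piping the pod log into it, for example `kubectl logs my-pod | ioping_avg_ms`, prints the mean of the non-warm-up requests; for the four requests shown above that is (1.73 + 1.32 + 1.06) / 3, about 1.37 ms.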

For competitors who landed on this blog in awe looking for Nimble weaknesses, the response time you see above is a Nimble Virtual Array running on my five year old laptop.

So, that was “it” for our graduated storage features! I'm looking forward to Kubernetes 1.17 already.

Release mascot!

I’m a sucker for logos and mascots. Congrats to the Kubernetes 1.16 release team.


There’s no denying the vast popularity of Kubernetes. The newest version is here with the release of Kubernetes 1.18. In total, it includes 38 enhancements, many of which have graduated to GA. Let’s take a look at what this powerhouse brings to the table with version 1.18.

Kubernetes’ first release of 2020 arrived on March 25. Kubernetes version 1.18 includes a grand total of 38 enhancements, graduating many features up the rungs from alpha to beta to stable.

Earlier this year, the Cloud Native Computing Foundation revealed data suggesting that 78% of survey respondents use Kubernetes in production. There’s no shortage of k8s. While there are 109 tools in the CNCF Cloud Native Landscape, 89% use different forms of Kubernetes.

Serverside Apply enters Beta part 2

Two versions ago, Serverside Apply entered its beta phase. This is now being extended in 1.18 as it enters the second stage of beta. Included in its second beta phase is the ability to track and manage changes made to fields of new Kubernetes objects. With this update, you can keep an eye on changes made to your resources.

Topology Manager update

The Topology Manager feature moves up to beta in 1.18. According to the announcement blog, this feature “enables NUMA alignment of CPU and devices (such as SR-IOV VFs) that will allow your workload to run in an environment optimized for low-latency.”

Changes to Ingress

Some new changes for Ingress arrive. First, there is a new pathType field and a new IngressClass resource. Second, the IngressClass resource deprecates the kubernetes.io/ingress.class annotation.

Windows CSI support moves to alpha

Another new feature moves up and enters alpha. From the release blog: CSI proxy enables non-privileged (pre-approved) containers to perform privileged storage operations on Windows. CSI drivers can now be supported in Windows by leveraging CSI proxy.

In SIG Storage, several features move up to general availability in 1.18. Raw Block Support, Volume Cloning, and the CSIDriver Kubernetes API Object have all moved to GA. Moving to alpha is the Recursive Volume Ownership OnRootMismatch Option.

Graduating to stable

The following enhancements have all made the journey and are officially stable in 1.18. A round of applause for:

- Taint Based Eviction
- kubectl diff
- CSI Block storage support
- API Server dry run
- Pass Pod information in CSI calls
- Support Out-of-Tree vSphere Cloud Provider
- Support GMSA for Windows workloads
- Skip attach for non-attachable CSI volumes
- PVC cloning
- Moving kubectl package code to staging
- RunAsUserName for Windows
- AppProtocol for Services and Endpoints
- Extending Hugepage Feature
- client-go signature refactor to standardize options and context handling
- Node-local DNS cache

Upgrade, ahoy!

1.18 release logo, designed by Maru Lango.

Set sail and upgrade via GitHub and download using kubeadm. Binary downloads for v1.18.0 are available here.

Currently, there are no known listed issues for v1.18. However, be sure to read the urgent upgrade notes before undertaking the journey to the latest edition. They list notable changes that users should be aware of in kube-apiserver, kubelet, kubectl, and client-go, along with a number of deprecations, API changes, and breaking changes.

View the changelog and release notes for a comprehensive list of everything new. Keep up with all future announcements and new releases by following the Google Group.

http://damianfallon.blogspot.com/2020/03/kubernetes-118-includes-38-enhancements.html
