#AutoML Framework


AutoML: Automation and Efficiency in Machine Learning

The term AutoML is revolutionizing the way companies and data scientists tackle machine learning challenges. By automating complex tasks, AutoML makes it easier to create, deploy, and optimize machine learning models with less human intervention. In this article, we will explore what AutoML is, how it works, its main advantages, and the cases…

#Automatic Learning#Machine Learning Automation#AutoML#AutoML code#AutoML Deep Learning#AutoML examples#AutoML Explanation#AutoML Framework#AutoML Google Cloud#AutoML on AWS#AutoML on Azure#AutoML in the market#AutoML in business#AutoML for beginners#AutoML step by step#AutoML platforms#AutoML Python#AutoML training#AutoML Tutorial#AutoML VS traditional#Benefits of AutoML#How AutoML works#How to use AutoML#Developing with AutoML#AutoML Tools#Google AutoML#Artificial intelligence#Machine Learning#AutoML Models#What is AutoML


Accelerating AI Advancements Via Unveiling the Top AutoML Frameworks | USAII®

Discover the game-changing AutoML frameworks propelling AI professionals into the future. Uncover the best tools and techniques and stay ahead of the curve with the latest innovations in AutoML.

Read more: https://bit.ly/3OR700s

AI professionals, AutoML frameworks, auto ml frameworks, best automl framework, AI tools, Automated Machine Learning, automated ml, best automl libraries python, automl deep learning, automated machine learning tools, machine learning application developers, AI developers


Introduction to AI Platforms

AI Platforms are powerful tools that allow businesses to automate complex tasks, provide real-time insights, and improve customer experiences. With their ability to process massive amounts of data, AI platforms can help organizations make more informed decisions, enhance productivity, and reduce costs.

These platforms incorporate advanced techniques such as machine learning, natural language processing (NLP), and computer vision, often analyzing data with neural networks and other predictive models. They offer a broad range of capabilities such as chatbots, image recognition, sentiment analysis, and recommendation engines.

Choosing the right AI platform is imperative for businesses that wish to stay ahead of the competition. Each platform has strengths and weaknesses that must be assessed when choosing a vendor. Moreover, an AI platform’s ability to integrate with existing systems is critical to streamlining operations effectively.

The history of AI platforms dates back to the 1950s and the earliest artificial intelligence research. Since then, these technologies have evolved considerably, thanks to advances in computing power and big data analytics. Although they were still in their infancy just a few years ago, today’s AI platforms have matured into complex, feature-rich solutions designed specifically for business use cases.

Ready to have your mind blown and your workload lightened? Check out the best AI platforms for businesses and say goodbye to manual tasks:

Popular Commercial AI Platforms

To explore the top AI platforms and make informed decisions, you need to know the benefits each platform offers. With IBM Watson, Google Cloud AI Platform, Microsoft Azure AI Platform, and Amazon SageMaker in focus, this section shows the unique advantages each platform provides for various industries and cognitive services.

IBM Watson

The Innovative AI Platform by IBM:

Transform your business with the dynamic cognitive computing technology of IBM Watson. Enhance decision-making, automate operations, and accelerate the growth of your organization with this powerful tool.

Additional unique details about the platform:

IBM Watson’s artificial intelligence streamlines workflows, personalizes experiences, and enhances predictive capabilities. Its ecosystem, which supports open-source tools, allows developers and businesses alike to integrate their own applications seamlessly.

Suggested implementation strategies:

1) Leverage Watson’s data visualization tools to understand and analyze complex data sets.

2) Use Watson’s natural language processing capabilities for sentiment analysis, keyword identification, or contextual understanding.

By incorporating IBM Watson’s versatile machine learning functions into your operations, you can gain valuable insights into customer behavior patterns, track industry trends, improve decision-making abilities, and eventually boost revenue.

Google’s AI platform is so powerful, it knows what you’re searching for before you do.

Google Cloud AI Platform

Google Cloud’s AI platform is an exceptional tool for businesses that specialize in delivering machine learning services. It provides a broad array of functionality tailored to the diverse demands of clients all over the world.

The following table summarizes the features and capabilities offered by the Google Cloud AI Platform:

| Features | Capabilities |
| --- | --- |
| Data Management & Pre-processing | Large-scale data processing; data integration and analysis tools; deep learning frameworks; data versioning tools |
| Model Training | Scalable training; AutoML tools; advanced tuning configurations; distributed training on CPU/GPU/TPU |
| Prediction | High-performance responses within seconds; accurate predictions from models trained on large-scale datasets |
| Monitoring | Real-time model supervision and adjustment; comprehensive monitoring, management, and optimization of models across various stages, including deployment |

One distinctive aspect of the Google Cloud AI Platform is its focus on enabling developers, including those with little prior machine learning experience, to build sophisticated models. This ease of use accelerates experimentation and fosters innovation.

Finally, it is worth noting that according to a study conducted by International Business Machines Corporation (IBM), brands that adopted AI for customer support purposes experienced 40% cost savings while improving customer satisfaction rates by 90%.


Python Trends 2025: What's Hot in the World of Programming

Python continues to be one of the most influential programming languages in 2025, driving innovation in fields like artificial intelligence (AI), data science, and web development. Its simplicity, versatility, and robust community make it a favorite among developers, from beginners to experts. As technology evolves, Python trends are shaping the programming landscape, enabling businesses and individuals to create smarter, faster, and more efficient solutions.

At LJ Projects, we strive to stay ahead of these trends, helping businesses and developers leverage Python for cutting-edge projects. Here’s a look at what’s hot in Python in 2025 and how these trends are transforming programming.

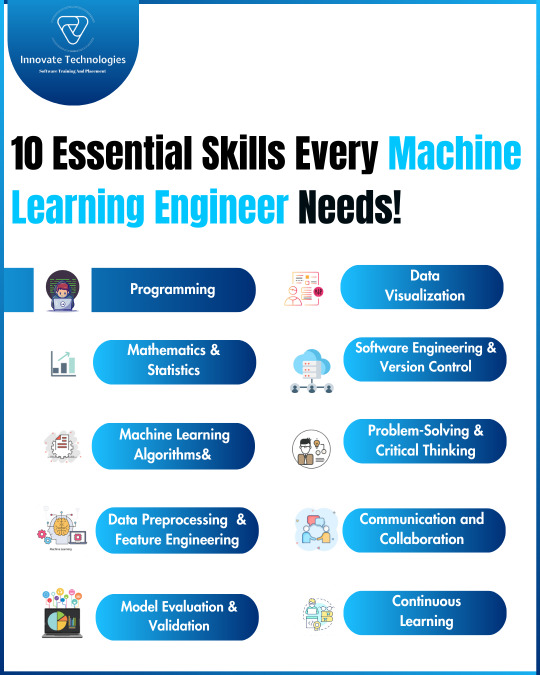

1. Python in AI and Machine Learning

Python has long been the backbone of AI and machine learning (ML), and in 2025, its role is more prominent than ever. The language continues to dominate this field thanks to its powerful libraries and frameworks, such as TensorFlow, PyTorch, and Scikit-learn. Key trends include:

AutoML Tools: Python libraries are increasingly offering automated machine learning tools, making it easier for non-experts to develop ML models.

Explainable AI: Python tools like SHAP and LIME are helping developers understand and explain AI decisions, a critical need for building trust in AI systems.

Edge AI: Python is playing a pivotal role in deploying AI models on edge devices like IoT gadgets and smartphones, enhancing real-time decision-making.
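SHAP, mentioned above, is built on Shapley values from cooperative game theory. As a rough illustration of that idea (not the SHAP library's API), the sketch below computes exact Shapley values for a small hypothetical scoring function by enumerating every coalition of features, replacing features outside a coalition with assumed baseline values. Exact enumeration grows as 2^n in the number of features, which is why SHAP relies on approximations and model-specific algorithms in practice.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values explaining model(x) relative to model(baseline).

    Features outside a coalition are set to their baseline value.
    Cost grows as 2**n, so this is only practical for a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition of this size that excludes feature i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                with_i = [x[j] if (j in coalition or j == i) else baseline[j] for j in range(n)]
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


# Hypothetical model: a linear term plus an interaction between features 1 and 2
def model(f):
    return 2.0 * f[0] + 1.0 * f[1] * f[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

Here the linear term is credited entirely to feature 0, the interaction term is split evenly between features 1 and 2, and the values sum to `model(x) - model(baseline)`, the "efficiency" property that makes Shapley-based explanations additive.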

2. Data Science and Big Data Integration

As organisations rely more on data-driven decisions, Python remains the go-to language for data science. Its libraries like Pandas, NumPy, and Matplotlib are evolving to handle larger datasets with greater efficiency. Notable trends include:

Real-Time Analytics: Python’s frameworks are enabling the processing of real-time data streams, crucial for industries like finance, healthcare, and logistics.

Cloud Integration: Python’s compatibility with cloud platforms such as AWS, Google Cloud, and Azure makes it a key player in big data and analytics.

Interactive Dashboards: Python tools like Dash and Streamlit are making it easier to build real-time, interactive dashboards for visualising complex data.
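Much of real-time analytics comes down to keeping statistics over a sliding window as events arrive. A minimal standard-library sketch of a rolling mean that updates in constant time per event (the window size and event values are hypothetical):

```python
from collections import deque


class RollingMean:
    """Mean over the most recent `window` values, updated in O(1) per event."""

    def __init__(self, window):
        if window <= 0:
            raise ValueError("window must be positive")
        self.values = deque(maxlen=window)
        self.total = 0.0

    def update(self, value):
        if len(self.values) == self.values.maxlen:
            # The deque will evict its oldest value on append; remove it from the sum first
            self.total -= self.values[0]
        self.values.append(value)
        self.total += value
        return self.total / len(self.values)


stream = [10.0, 12.0, 11.0, 15.0, 14.0]  # hypothetical event values
rm = RollingMean(window=3)
means = [rm.update(v) for v in stream]
print(means)
```

For very long streams, periodically recomputing `total` from the deque avoids accumulated floating-point drift.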

3. Python for Web Development

Python’s versatility extends to web development, where it continues to simplify the process of creating powerful, scalable applications. Trends for 2025 include:

Asynchronous Frameworks: Frameworks like FastAPI, together with Python’s built-in asyncio library, are gaining traction for building faster, high-performance web applications.

API-First Development: Python frameworks like Flask and Django are evolving to support API-first strategies, streamlining backend development for mobile and web apps.

Integration with Frontend Frameworks: Python is increasingly being paired with modern frontend frameworks like React and Vue.js for full-stack development.
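The speed-up from asynchronous frameworks comes from an event loop that interleaves many I/O-bound tasks on one thread. The sketch below uses plain `asyncio` rather than any web framework, and simulates I/O with `asyncio.sleep`; the `fetch` function and its delays are hypothetical stand-ins for database or HTTP calls.

```python
import asyncio
import time


async def fetch(name, delay):
    # Stand-in for an I/O-bound call (database query, HTTP request, ...)
    await asyncio.sleep(delay)
    return f"{name} done"


async def main():
    start = time.perf_counter()
    # The three "requests" wait concurrently instead of one after another
    results = await asyncio.gather(
        fetch("users", 0.2),
        fetch("orders", 0.2),
        fetch("inventory", 0.2),
    )
    elapsed = time.perf_counter() - start
    return results, elapsed


results, elapsed = asyncio.run(main())
print(results, f"{elapsed:.2f}s")
```

The total wait is roughly the longest single delay rather than the sum of all three, and `asyncio.gather` returns results in the order the coroutines were passed.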

4. Python in Cybersecurity

Python’s ease of use and extensive library support make it a natural choice for cybersecurity applications. In 2025, Python trends in this domain include:

Automated Threat Detection: Python’s integration with AI and ML is enabling advanced threat detection systems, helping organizations stay ahead of cyberattacks.

Penetration Testing Tools: Libraries like Scapy, along with Python wrappers for tools like Nmap, are simplifying network testing and vulnerability assessments.

Secure Application Development: Python frameworks are increasingly incorporating built-in security features, helping developers create safer applications.
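Automated threat detection often starts from simple statistical baselines before ML models are layered on top. As a hedged illustration (the hourly failed-login counts and the 3-standard-deviation threshold are assumptions, not a recommended setting), this sketch flags hours whose counts deviate sharply from historical behaviour:

```python
from statistics import mean, stdev


def flag_anomalies(history, recent, threshold=3.0):
    """Return (index, count, z) for recent counts whose z-score vs. history exceeds threshold."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        raise ValueError("history has no variation; z-scores are undefined")
    flagged = []
    for i, count in enumerate(recent):
        z = (count - mu) / sigma
        if abs(z) > threshold:
            flagged.append((i, count, round(z, 2)))
    return flagged


# Hypothetical failed-login counts per hour
history = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
recent = [5, 6, 48, 4]
alerts = flag_anomalies(history, recent)
print(alerts)
```

Only the hour with 48 failed logins is flagged; a production system would typically combine many such signals and learn thresholds from data.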

5. Python in Blockchain and Web3

Python’s adaptability has positioned it as a major player in the rapidly evolving world of blockchain and Web3 technologies. Key developments include:

Smart Contracts: Tools like web3.py are making it easier to develop and interact with blockchain smart contracts.

Blockchain Analytics: Python libraries are being used to analyse and visualise blockchain transactions, enhancing transparency and trust in decentralised systems.

Decentralised Applications (dApps): Python frameworks are evolving to support scalable, secure dApp development.

6. Automation and DevOps

Automation remains one of Python’s strongest applications, and in 2025, its role in DevOps is expanding. Key trends include:

Infrastructure as Code (IaC): Python-based tools like Ansible, often used alongside tools like Terraform, are streamlining infrastructure management.

CI/CD Pipelines: Python scripts are being widely used to automate testing and deployment pipelines, ensuring faster, more reliable software releases.

Task Automation: From automating routine tasks to managing complex workflows, Python remains a critical tool for improving productivity.
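A CI/CD stage is frequently just a script that runs commands in order and stops at the first failure. A minimal sketch using `subprocess` (the stage names and commands are placeholders; a real pipeline would invoke its actual linter, test runner, and build tooling):

```python
import subprocess
import sys


def run_pipeline(stages):
    """Run (name, command) stages in order; stop at the first non-zero exit code."""
    for name, cmd in stages:
        print(f"==> {name}: {' '.join(cmd)}")
        result = subprocess.run(cmd, capture_output=True, text=True)
        if result.returncode != 0:
            print(f"Stage '{name}' failed:\n{result.stderr}")
            return name  # name of the failed stage
    return None  # every stage passed


# Placeholder stages; sys.executable keeps the example portable
stages = [
    ("lint", [sys.executable, "-c", "print('lint ok')"]),
    ("test", [sys.executable, "-c", "assert 1 + 1 == 2"]),
    ("build", [sys.executable, "-c", "print('build ok')"]),
]
failed = run_pipeline(stages)
print("pipeline passed" if failed is None else f"pipeline failed at {failed}")
```

When run inside a CI system, the script would normally end with a non-zero exit code (for example `sys.exit(1)`) on failure so the pipeline is marked as failed.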

Why Python is Thriving in 2025

Python’s sustained success is due to several factors:

Community Support: Python’s global community ensures constant updates, new libraries, and frameworks, keeping it relevant across industries.

Ease of Learning: Its straightforward syntax makes Python accessible to beginners while remaining powerful for advanced users.

Versatility: Python’s ability to adapt to new technologies and integrate with other languages ensures its ongoing popularity.

Conclusion

Python continues to be a driving force in programming, shaping the future of technology with innovative applications across industries. From AI and data science to cybersecurity and blockchain, Python trends in 2025 highlight its versatility and importance in solving modern challenges.

At LJ Projects, we are committed to helping businesses and developers harness the full potential of Python to stay competitive and innovative. Whether you’re looking to build AI solutions, create secure applications, or streamline processes, Python is the language of the future. Stay ahead of the curve and explore the limitless possibilities Python offers in 2025.

#Python2025#ProgrammingTrends#PythonCommunity#TechInnovation#FutureOfProgramming#PythonDevelopers#CodingTrends#AIandPython#ProgrammingFuture#PythonUpdates#TechTrends2025#PythonForBeginners#AdvancedPython#MachineLearningWithPython#CodeTheFuture


MLOps Consulting Services: Revolutionizing Machine Learning Operations

MLOps Consulting Services | Goognu

In the age of data-driven decision-making, machine learning (ML) has emerged as a transformative technology for businesses across industries. However, harnessing its full potential requires a structured approach to managing machine learning operations efficiently. This is where MLOps Consulting Services come into play, bridging the gap between development and operations to ensure seamless workflows, automation, and robust security. Goognu, a trusted name in MLOps consulting, provides tailored solutions to empower organizations in optimizing their ML ecosystems.

Understanding MLOps Consulting Services

Machine learning operations, or MLOps, encompass practices that streamline and enhance the lifecycle of machine learning models, from development to deployment and beyond. Goognu’s MLOps Consulting Services aim to help businesses adopt industry best practices, assess their current ML maturity, and develop a roadmap for continuous improvement. Whether you’re starting your ML journey or looking to refine existing processes, Goognu’s services ensure efficiency, scalability, and innovation.

Key offerings include:

Automating ML pipelines to enhance productivity.

Leveraging AutoML platforms for streamlined model development.

Ensuring reliable model training, deployment, and maintenance.

Providing scalable tools and resources for diverse business needs.

Enhancing workflows for seamless machine learning operations.

The Goognu Advantage in MLOps Consulting Services

Goognu stands out as a reliable partner for businesses seeking to optimize their machine learning operations. Here’s why Goognu is a preferred choice:

1. Expertise Across the ML Lifecycle

Goognu’s team of seasoned professionals manages every aspect of the ML lifecycle, from data preparation to model serving and monitoring. Their expertise ensures that models are deployed efficiently, perform reliably, and adapt to changing business requirements.

2. Customized Solutions for Unique Needs

Recognizing that no two businesses are the same, Goognu tailors its solutions to meet specific client requirements. By combining open-source tools with robust commercial frameworks, they provide flexible setups that deliver optimal results while keeping costs under control.

3. Commitment to Security

In today’s digital landscape, security is paramount. Goognu employs strong encryption and comprehensive security measures to protect data at every stage of the ML lifecycle—whether it’s in use, transit, or storage.

4. Cost-Effective Operations

Goognu offers flexible deployment options, including cloud, on-premises, and hybrid setups. This approach improves cost efficiency and helps avoid vendor lock-in, allowing businesses to adapt and grow without constraints.

5. 24/7 Support

Machine learning operations require continuous monitoring and prompt issue resolution. Goognu provides round-the-clock support, ensuring that clients have access to assistance whenever needed.

Core Services Offered by Goognu

Goognu’s MLOps Consulting Services encompass a wide range of capabilities designed to help businesses achieve their machine learning objectives efficiently:

1. Aligning ML with Business Goals

Successful machine learning initiatives must align with overarching business objectives. Goognu ensures that ML strategies are purpose-built to drive measurable results and foster innovation.

2. Data Preparation and Management

High-quality data is the backbone of effective machine learning. Goognu excels in data preparation and management, ensuring that datasets are clean, structured, and ready for model training.

3. Model Training and Evaluation

Training and evaluating models are critical to their success. Goognu leverages advanced tools and techniques to ensure models meet performance benchmarks and business requirements.

4. Model Serving and Monitoring

Deploying models is just the beginning. Goognu provides robust solutions for serving and monitoring models in production, ensuring consistent performance, timely updates, and reduced downtime.

Goognu’s Approach to MLOps Consulting

Goognu adopts a comprehensive approach to MLOps consulting, focusing on key areas that drive efficiency and innovation:

Automating Workflows

By automating infrastructure, data preparation, and routine tasks, Goognu accelerates ML workflows. This enables teams to focus on strategic initiatives and innovation.

End-to-End Development Support

From initial model design to deployment and scaling, Goognu provides end-to-end support using the latest tools and technologies. This reduces the need for in-house expertise, allowing businesses to concentrate on core operations.

Flexible Toolsets

Goognu solutions integrate open-source tools with commercial frameworks, creating powerful, user-friendly setups tailored to client needs. This flexibility ensures seamless operations and adaptability.

Cost Optimization

Flexible deployment options (cloud, on-premises, and hybrid) allow businesses to manage costs effectively while maintaining operational excellence. Goognu’s approach ensures that ML projects remain financially viable.

Collaboration and Organization

Efficient data management and automated processes foster collaboration among team members. By simplifying workflows, Goognu helps businesses achieve project goals with ease.

Top-Tier Security

Strong encryption and stringent security measures safeguard sensitive data throughout its lifecycle. Goognu’s commitment to security builds trust and ensures compliance with industry standards.

Transformative Impact of MLOps Consulting Services

Goognu’s MLOps Consulting Services have delivered significant value to businesses across industries. By optimizing machine learning operations, clients have achieved:

Faster time-to-market for ML solutions.

Reduced operational costs through automation.

Improved model performance and reliability.

Enhanced decision-making with timely insights.

Client Success Stories

Several organizations have transformed their machine learning operations with Goognu’s support:

An IT company achieved a 40% reduction in deployment time by optimizing their ML pipelines with Goognu.

A healthcare provider enhanced patient outcomes by improving model accuracy and reliability.

A financial services firm implemented Goognu’s MLOps framework to achieve scalability and compliance, realizing significant cost savings.

The Growing Importance of MLOps

As machine learning becomes a cornerstone of modern business strategies, the need for efficient MLOps practices continues to grow. Key challenges that organizations face include:

Managing complex workflows and infrastructure.

Controlling the high costs associated with ML operations.

Addressing security concerns around sensitive data.

MLOps Consulting Services address these challenges by:

Automating repetitive and time-consuming tasks.

Providing scalable, adaptable solutions.

Ensuring robust security measures.

By adopting MLOps best practices, businesses can unlock the full potential of their machine learning initiatives, driving innovation and competitive advantage.

Partner with Goognu for MLOps Excellence

Goognu is committed to helping organizations succeed in their machine learning endeavors. With comprehensive expertise, tailored solutions, and unwavering support, Goognu’s MLOps Consulting Services empower businesses to optimize their operations and achieve their goals.


Which DSML tool supports automated machine learning workflows?

Automated Machine Learning (AutoML) workflows simplify the process of building and deploying machine learning models by automating tasks like feature selection, model training, and hyperparameter tuning. Several tools support these workflows, with popular options including H2O.ai, Google Cloud AutoML, and Azure Machine Learning.
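At its core, the loop these tools automate is: propose candidate models and hyperparameters, score each on held-out data, and keep the best. The standard-library sketch below shows that loop with a hypothetical search space (k-nearest-neighbours with different `k` values and distance metrics) on a small synthetic dataset, scored by 5-fold cross-validation. It is not how H2O, Google Cloud AutoML, or Azure Machine Learning are implemented; those tools search far larger spaces with smarter strategies and also automate feature engineering.

```python
import math
import random
from collections import Counter
from itertools import product

DISTANCES = {
    "euclidean": math.dist,
    "manhattan": lambda a, b: sum(abs(p - q) for p, q in zip(a, b)),
}


def knn_predict(train, query, k, metric):
    """Classify `query` by majority vote among its k nearest training points."""
    dist = DISTANCES[metric]
    neighbours = sorted(train, key=lambda row: dist(row[0], query))[:k]
    return Counter(label for _, label in neighbours).most_common(1)[0][0]


def cross_val_accuracy(data, k, metric, folds=5):
    """Mean accuracy of k-NN across `folds` contiguous splits of `data`."""
    fold_size = len(data) // folds
    scores = []
    for f in range(folds):
        test = data[f * fold_size:(f + 1) * fold_size]
        train = data[:f * fold_size] + data[(f + 1) * fold_size:]
        correct = sum(knn_predict(train, x, k, metric) == y for x, y in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)


def auto_select(data, search_space):
    """Score every configuration in the search space; return the best (accuracy, k, metric)."""
    results = [
        (cross_val_accuracy(data, k, metric), k, metric)
        for k, metric in product(search_space["k"], search_space["metric"])
    ]
    return max(results)  # highest CV accuracy wins; ties resolved by tuple ordering


# Hypothetical synthetic data: two noisy 2-D clusters labelled "a" and "b"
rng = random.Random(0)
data = [((rng.gauss(0, 1), rng.gauss(0, 1)), "a") for _ in range(50)]
data += [((rng.gauss(3, 1), rng.gauss(3, 1)), "b") for _ in range(50)]
rng.shuffle(data)

search_space = {"k": [1, 3, 5, 7], "metric": ["euclidean", "manhattan"]}
best_acc, best_k, best_metric = auto_select(data, search_space)
print(f"best: k={best_k}, metric={best_metric}, cv accuracy={best_acc:.2f}")
```

Exhaustive grid search is the simplest strategy; AutoML platforms typically replace it with random search, Bayesian optimization, or ensembling of the top candidates.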

H2O.ai offers a comprehensive AutoML platform that supports feature engineering, algorithm selection, and model evaluation. Its open-source framework, H2O, is widely used for predictive analytics and is compatible with R and Python.

Google Cloud AutoML enables businesses to build custom machine learning models with minimal expertise, focusing on tasks like image recognition, text analysis, and translation. It’s particularly beneficial for integrating ML with cloud-based applications.

Azure Machine Learning provides a robust AutoML service that automates much of the model development lifecycle, from preprocessing to deployment. Its integration with Microsoft tools makes it ideal for enterprise environments.

These tools help reduce the complexity of machine learning, allowing professionals to focus on insights rather than manual tuning. If you're aspiring to explore these technologies, enrolling in a data science and machine learning course can provide you with the foundational knowledge and hands-on experience to excel.


Generative AI in the Cloud: Best Practices for Seamless Integration

Generative AI, a subset of artificial intelligence capable of producing new and creative content, has seen widespread adoption across industries. From generating realistic images to creating personalized marketing content, its potential is transformative. However, deploying and managing generative AI applications can be resource-intensive and complex. Cloud computing has emerged as the ideal partner for this technology, providing the scalability, flexibility, and computing power required.

This blog explores best practices for seamlessly integrating generative AI development services with cloud consulting services, ensuring optimal performance and scalability.

1. Understanding the Synergy Between Generative AI and Cloud Computing

Why Generative AI Needs the Cloud

Generative AI models are data-intensive and require substantial computational resources. For instance, training models like GPT or image generators like DALL-E involves processing large datasets and optimizing billions of parameters. Cloud platforms provide:

Scalability: Dynamically adjust resources based on workload demands.

Cost Efficiency: Pay-as-you-go models to avoid high upfront infrastructure costs.

Accessibility: Centralized storage and computing make AI resources accessible globally.
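To make "billions of parameters" concrete: just storing the weights takes the number of parameters multiplied by the bytes per parameter. This back-of-the-envelope sketch covers weights only (training needs considerably more memory for gradients, optimizer state, and activations), and the 7-billion-parameter size is simply an example.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype):
    """Memory needed to hold the model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp32", "fp16", "int8"):
    print(f"7B params in {dtype}: {weight_memory_gb(7e9, dtype):.0f} GB")
```

This prints 28, 14, and 7 GB respectively, which is why such models are usually trained and served on cloud instances with large accelerator memory.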

How Cloud Consulting Services Add Value

Cloud consulting services help businesses:

Design architectures tailored to AI workloads.

Optimize cost and performance through resource allocation.

Navigate compliance and security challenges.

2. Choosing the Right Cloud Platform for Generative AI

Factors to Consider

When selecting a cloud platform for generative AI, focus on the following factors:

GPU and TPU Support: Look for platforms offering high-performance computing instances optimized for AI.

Storage Capabilities: Generative AI models require fast and scalable storage.

Framework Compatibility: Ensure the platform supports AI frameworks like TensorFlow, PyTorch, or Hugging Face.

Top Cloud Platforms for Generative AI

AWS (Amazon Web Services): Offers SageMaker for AI model training and deployment.

Google Cloud: Features AI tools like Vertex AI and TPU support.

Microsoft Azure: Provides Azure AI and machine learning services.

IBM Cloud: Known for its AI lifecycle management tools.

Cloud Consulting Insight

A cloud consultant can assess your AI workload requirements and recommend the best platform based on budget, scalability needs, and compliance requirements.

3. Best Practices for Seamless Integration

3.1. Define Clear Objectives

Before integrating generative AI with the cloud:

Identify use cases (e.g., content generation, predictive modeling).

Outline KPIs such as performance metrics, scalability goals, and budget constraints.

3.2. Optimize Model Training

Training generative AI models is resource-heavy. Best practices include:

Preprocessing Data in the Cloud: Use cloud-based tools for cleaning and organizing training data.

Distributed Training: Leverage multiple nodes for faster training.

AutoML Tools: Simplify model training using tools like Google Cloud AutoML or Amazon SageMaker Autopilot.
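Data-parallel distributed training splits each batch across nodes, has every node compute gradients on its own shard, and averages those gradients before updating the shared model. The toy sketch below (a one-parameter linear model with squared-error loss, hypothetical data, and workers simulated in a single process) shows that averaging equal-sized shard gradients reproduces the full-batch gradient:

```python
def gradient(w, batch):
    """d/dw of mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, batch, num_workers, lr):
    """Simulate one synchronous data-parallel SGD step.

    Assumes len(batch) is divisible by num_workers so every shard has the same size.
    """
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    # Each worker computes a gradient on its own shard (in parallel in a real system)
    local_grads = [gradient(w, shard) for shard in shards]
    # All-reduce: average the local gradients so every worker applies the same update
    avg_grad = sum(local_grads) / num_workers
    return w - lr * avg_grad, avg_grad


batch = [(x, 3.0 * x) for x in range(1, 9)]  # hypothetical data with true slope 3
w = 0.0
_, avg_grad = data_parallel_step(w, batch, num_workers=4, lr=0.01)
print(avg_grad, gradient(w, batch))  # equal when shards are the same size

for _ in range(50):
    w, _ = data_parallel_step(w, batch, num_workers=4, lr=0.01)
print(round(w, 3))  # approaches the true slope of 3.0
```

Frameworks such as PyTorch's DistributedDataParallel perform the averaging step with collective communication across machines; the arithmetic is the same.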

3.3. Adopt a Cloud-Native Approach

Design generative AI solutions with cloud-native principles:

Use containers (e.g., Docker) for portability.

Orchestrate workloads with Kubernetes for scalability.

Employ serverless computing to eliminate server management.

3.4. Implement Efficient Resource Management

Cloud platforms charge based on usage, so resource management is critical.

Use spot instances or reserved instances for cost savings.

Automate scaling to match resource demand.

Monitor usage with cloud-native tools like AWS CloudWatch or Google Cloud Monitoring.
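Automated scaling typically follows a proportional rule. The Kubernetes Horizontal Pod Autoscaler, for example, documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). A simplified sketch of that rule clamped to minimum and maximum bounds (the bounds and utilization numbers are hypothetical, and the real autoscaler also applies a tolerance and stabilization windows):

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    desired = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU utilization against a 60% target: scale out
print(desired_replicas(4, 90, 60))   # 6
# Quiet period: 4 replicas at 15% against a 60% target: scale in
print(desired_replicas(4, 15, 60))   # 1
```

The clamp keeps a traffic spike from launching unbounded (and unbudgeted) capacity, which ties automated scaling back to cost control.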

3.5. Focus on Security and Compliance

Generative AI applications often handle sensitive data. Best practices include:

Encrypt data at rest and in transit.

Use Identity and Access Management (IAM) policies to restrict access.

Comply with regulations and standards like GDPR, HIPAA, or SOC 2.
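IAM policies restrict access by evaluating each request against a set of rules. The sketch below is a simplified, hypothetical evaluator in the spirit of AWS IAM's documented evaluation order (an explicit deny overrides any allow, and anything not explicitly allowed is denied). The action and resource names are made up, and real IAM evaluation involves many more policy types and conditions.

```python
from fnmatch import fnmatchcase

# Hypothetical policy for a model-training role
POLICY = [
    {"effect": "Allow", "action": "storage:Read*", "resource": "datasets/*"},
    {"effect": "Allow", "action": "storage:Write", "resource": "checkpoints/*"},
    {"effect": "Deny", "action": "storage:*", "resource": "datasets/pii/*"},
]


def is_allowed(policy, action, resource):
    """Explicit Deny wins, then explicit Allow; otherwise deny by default."""
    matches = [
        stmt["effect"]
        for stmt in policy
        if fnmatchcase(action, stmt["action"]) and fnmatchcase(resource, stmt["resource"])
    ]
    if "Deny" in matches:
        return False
    return "Allow" in matches


print(is_allowed(POLICY, "storage:ReadObject", "datasets/images/cat.png"))  # True
print(is_allowed(POLICY, "storage:ReadObject", "datasets/pii/users.csv"))   # False
print(is_allowed(POLICY, "storage:Delete", "checkpoints/step-100"))         # False
```

Deny-by-default means a forgotten rule fails closed, which is the safer failure mode for sensitive training data.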

3.6. Test Before Full Deployment

Run pilot projects to:

Assess model performance on real-world data.

Identify potential bottlenecks in cloud infrastructure.

Gather feedback for iterative improvement.

4. The Role of Cloud Consulting Services in Integration

Tailored Cloud Architecture Design

Cloud consultants help design architectures optimized for AI workloads, ensuring high availability, fault tolerance, and cost efficiency.

Cost Management and Optimization

Consultants analyze usage patterns and recommend cost-saving strategies like reserved instances, discounts, or rightsizing resources.

Performance Tuning

Cloud consultants monitor performance and implement strategies to reduce latency, improve model inference times, and optimize data pipelines.

Ongoing Support and Maintenance

From updating AI frameworks to scaling infrastructure, cloud consulting services provide end-to-end support, ensuring seamless operation.

5. Case Study: Generative AI in the Cloud

Scenario: A marketing agency wanted to deploy a generative AI model to create personalized ad campaigns for clients.

Challenges:

High computational demands for training models.

Managing fluctuating workloads during campaign periods.

Ensuring data security for client information.

Solution:

Cloud Platform: Google Cloud was chosen for its TPU support and scalability.

Cloud Consulting: Consultants designed a hybrid cloud solution combining on-premises resources with cloud-based training environments.

Implementation: Auto-scaling was configured to handle workload spikes, and AI pipelines were containerized for portability.

Results:

40% cost savings compared to an on-premises solution.

50% faster campaign deployment times.

Enhanced security through end-to-end encryption.

6. Emerging Trends in Generative AI and Cloud Integration

6.1. Edge AI and Generative Models

Generative AI is moving towards edge devices, allowing real-time content creation without relying on centralized cloud servers.
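Running generative models on edge devices usually means shrinking them. One common technique (not named in the text above) is post-training quantization of weights to 8-bit integers. A minimal symmetric, per-tensor int8 sketch on hypothetical weights:

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= scale * q, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range used here
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to approximate float weights."""
    return [scale * v for v in q]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Each weight now needs 1 byte instead of 4, and the rounding error per weight is at most `scale / 2`; production toolchains refine this with per-channel scales and calibration data.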

6.2. Multi-Cloud Strategies

Businesses are adopting multi-cloud setups to avoid vendor lock-in and optimize performance.

6.3. Federated Learning in the Cloud

Cloud platforms are enabling federated learning, allowing AI models to learn from decentralized data sources while maintaining privacy.
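A widely used aggregation rule in federated learning is Federated Averaging (FedAvg): each client trains locally on its own data, and the server combines only the resulting parameters, weighting each client by its number of training examples. A minimal sketch of the server-side step, with hypothetical client updates:

```python
def fed_avg(client_updates):
    """Weighted average of client parameter vectors.

    client_updates: list of (params, num_examples); raw data never leaves the clients.
    """
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(params[i] * n for params, n in client_updates) / total
        for i in range(dim)
    ]


# Hypothetical parameters reported by three clients after a round of local training
updates = [
    ([0.10, 1.00], 100),
    ([0.30, 0.80], 300),
    ([0.20, 0.90], 100),
]
global_params = fed_avg(updates)
print(global_params)
```

The client with 300 examples pulls the global model furthest toward its parameters; in a full system the server would send `global_params` back to clients for the next round.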

6.4. Green AI Initiatives

Cloud providers are focusing on sustainable AI practices, offering carbon-neutral data centers and energy-efficient compute instances.

7. Future Outlook: Generative AI and Cloud Services

The integration of generative AI development services with cloud consulting services will continue to drive innovation. Businesses that embrace best practices will benefit from:

Rapid scalability to meet growing demands.

Cost-effective deployment of cutting-edge AI solutions.

Enhanced security and compliance in a competitive landscape.

With advancements in both generative AI and cloud technologies, the possibilities for transformation are endless.

Conclusion

Integrating generative AI with cloud computing is not just a trend—it’s a necessity for businesses looking to innovate and scale. By leveraging the expertise of cloud consulting services, organizations can ensure seamless integration while optimizing costs and performance.

Adopting the best practices outlined in this blog will help businesses unlock the full potential of generative AI in the cloud, empowering them to create, innovate, and thrive in a rapidly evolving digital landscape.


KNIME Analytics Platform

KNIME Analytics Platform: Open-Source Data Science and Machine Learning for All

In the world of data science and machine learning, KNIME Analytics Platform stands out as a powerful and versatile solution that is accessible to technical and non-technical users alike. Built on an open-source foundation, KNIME provides a flexible, visual workflow interface that lets users create, deploy, and manage data science projects. Whether used by individual data scientists or entire enterprise teams, KNIME supports the full data science lifecycle, from data integration and transformation to machine learning and deployment.

Empowering Data Science with a Visual Workflow Interface

At the heart of KNIME’s appeal is its drag-and-drop interface, which allows users to design workflows without writing code. This visual approach democratizes data science: business analysts, data scientists, and engineers can collaborate and build analytics workflows together. KNIME’s modular architecture also lets users extend its functionality through a large library of nodes, extensions, and community-contributed components, making it one of the most flexible platforms for data science and machine learning.

Key Features of KNIME Analytics Platform

KNIME’s feature set addresses a wide range of data science needs:

- Data Preparation and ETL: KNIME provides robust tools for data integration, cleansing, and transformation, supporting both structured and unstructured data sources. Its ETL (Extract, Transform, Load) capabilities are highly customizable, making it easy to prepare data for analysis.
- Machine Learning and AutoML: KNIME comes with a suite of built-in machine learning algorithms, allowing users to build models directly within the platform. It also offers Automated Machine Learning (AutoML) capabilities that simplify tasks like model selection and hyperparameter tuning, so users can develop effective models quickly.
- Explainable AI (XAI): With the growing importance of model transparency, KNIME provides tools for explainability and interpretability, such as feature impact analysis and interactive visualizations. These tools help users understand how models make predictions, which builds trust and supports decision-making in regulated industries.
- Integration with External Tools and Libraries: KNIME integrates with popular machine learning libraries and tools, including TensorFlow, H2O.ai, and scikit-learn, and can run Python and R scripts. Advanced users can therefore combine KNIME’s workflow environment with external libraries, expanding the platform’s modeling and analytical capabilities.
- Big Data and Cloud Extensions: KNIME offers extensions for big data processing, supporting frameworks like Apache Spark and Hadoop. It also integrates with cloud providers, including AWS, Google Cloud, and Microsoft Azure, making it suitable for organizations with cloud-based data architectures.
- Model Deployment and Management with KNIME Server: For enterprise users, KNIME Server adds capabilities for model deployment, automation, and monitoring. It lets teams deploy models to production environments and supports collaboration by allowing multiple users to work on projects concurrently.

Diverse Applications Across Industries

KNIME Analytics Platform is used across many industries for a wide range of applications:

- Customer Analytics and Marketing: KNIME enables businesses to perform customer segmentation, sentiment analysis, and predictive marketing, helping companies deliver personalized experiences and optimize marketing strategies.
- Financial Services: In finance, KNIME is used for fraud detection, credit scoring, and risk assessment, where accurate predictions and data integrity are essential.
- Healthcare and Life Sciences: KNIME supports healthcare providers and researchers with applications such as outcome prediction, resource optimization, and patient data analytics.
- Manufacturing and IoT: The platform’s capabilities in anomaly detection and predictive maintenance make it well suited to manufacturing and IoT applications, where data-driven insights are key to operational efficiency.

Deployment Flexibility and Integration Capabilities

KNIME’s flexibility extends to its deployment options. KNIME Analytics Platform is available as a free, open-source desktop application, while KNIME Server provides enterprise-level features for deployment, collaboration, and automation. Support for Docker containers also lets organizations deploy models in various environments, including hybrid and cloud setups. In addition, KNIME integrates with databases, data lakes, business intelligence tools, and external libraries, allowing it to function as a core component of a company’s data architecture.

Pricing and Community Support

KNIME offers both free and commercial licensing options. The open-source KNIME Analytics Platform is free to use, making it an attractive option for data science teams looking to minimize costs while maximizing capabilities. For organizations that need advanced deployment, monitoring, and collaboration, KNIME Server is available through a subscription-based model.

The KNIME community is an integral part of the platform’s success. With an active forum, numerous tutorials, and a repository of workflows on KNIME Hub, users can find solutions to common challenges, share their work, and build on contributions from other users. KNIME also offers dedicated support and learning resources through KNIME Learning Hub and KNIME Academy, giving users access to continuous training.

Conclusion

KNIME Analytics Platform is a robust, flexible, and accessible data science tool that lets users design, deploy, and manage data workflows without extensive coding. From data preparation and machine learning to deployment and interpretability, its capabilities make it a valuable asset for organizations across industries. With its open-source foundation, active community, and enterprise-ready features, KNIME provides a scalable solution for data-driven decision-making and a compelling option for any organization looking to integrate data science into its operations.
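In KNIME, the Extract, Transform, Load steps described above are built visually as connected nodes. As a rough code analogue, and not KNIME’s own API, the following Python sketch runs the same three stages with the standard library: it extracts rows from CSV text, transforms them (trimming and lower-casing names, dropping rows with a missing amount, casting types), and loads the result into an in-memory SQLite table. The sample data and the `sales` table name are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a file or database extract.
RAW_CSV = """region,amount
 north ,120.5
south,
 east,80
north,40
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: clean region names, drop rows with no amount, cast amounts to float."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip incomplete records
        cleaned.append((row["region"].strip().lower(), float(amount)))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a SQLite table."""
    conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
totals = dict(conn.execute("SELECT region, SUM(amount) FROM sales GROUP BY region"))
print(totals)
```

Keeping each stage as a separate function mirrors the node-per-step structure of a visual workflow, so any single stage can be swapped out without touching the others.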

#AutomatedMachineLearning#AutoML#dataintegration#datapreparation#datascienceplatform#datatransformation#datawrangling#ETL#KNIME#KNIMEAnalyticsPlatform#machinelearning#open-sourceAI

Emerging Trends in Data Science: A Guide for M.Sc. Students

The field of data science is advancing rapidly, continuously shaped by new technologies, methodologies, and applications. For students pursuing an M.Sc. in Data Science, staying abreast of these developments is essential to building a successful career. Emerging trends like artificial intelligence (AI), deep learning, data ethics, edge computing, and automated machine learning (AutoML) are redefining the scope and responsibilities of data science professionals. Programs like those offered at Suryadatta College of Management, Information Research & Technology (SCMIRT) prepare students not only by teaching foundational skills but also by encouraging awareness of these cutting-edge advancements. Here’s a guide to the current trends and tips on how M.Sc. students can adapt and thrive in this dynamic field.

Current Trends in Data Science

Artificial Intelligence (AI) and Deep Learning: AI is perhaps the most influential trend in data science today. With applications in computer vision, natural language processing (NLP), and predictive analytics, AI is becoming a critical skill for data scientists. Deep learning, a subset of machine learning (itself a branch of AI), uses neural networks with multiple layers to process vast amounts of data, making it a powerful tool for complex pattern recognition. SCMIRT’s program provides exposure to AI and deep learning, ensuring that students understand both the theoretical foundations and real-world applications of these technologies.
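To make "multiple layers" concrete, here is a minimal sketch of a forward pass through a two-layer neural network in plain Python. The weights are arbitrary illustrative values, not trained ones; real deep learning work would use a framework such as TensorFlow or PyTorch and learn the weights from data.

```python
def relu(x):
    """Rectified linear unit: the nonlinearity applied between layers."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

# Illustrative (untrained) parameters: 2 inputs -> 3 hidden units -> 1 output.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b1 = [0.0, 0.0, -1.0]
W2 = [[1.0, 2.0, -0.5]]
b2 = [0.1]

def forward(x):
    hidden = dense(x, W1, b1, activation=relu)  # layer 1 with a ReLU nonlinearity
    return dense(hidden, W2, b2)[0]             # layer 2: a single linear output

print(forward([2.0, 1.0]))
```

Stacking more `dense` calls with nonlinearities in between is what makes a network "deep"; training would adjust `W1`, `b1`, `W2`, and `b2` to reduce prediction error.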

Data Ethics and Privacy: As the use of data expands, so do concerns over data privacy and ethics. Companies are increasingly expected to handle data responsibly, ensuring user privacy and avoiding biases in their models. Data ethics has become a prominent area of focus for data scientists, requiring them to consider how data is collected, processed, and used. Courses on data ethics at SCMIRT equip students with the knowledge to build responsible models and promote ethical data practices in their future careers.
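Many fairness criteria exist, and they can conflict with one another. One of the simplest checks for bias is demographic parity: compare the rate of positive predictions across groups. The sketch below computes each group’s positive rate and the largest gap between groups; the predictions and group labels are made up for illustration.

```python
from collections import defaultdict

def positive_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for pred, group in zip(predictions, groups):
        counts[group][0] += pred
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}

def demographic_parity_difference(predictions, groups):
    """Largest gap in positive-prediction rate between any two groups (0 means parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical loan-approval predictions for applicants in groups "a" and "b".
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))
print(demographic_parity_difference(preds, groups))
```

A large gap does not prove discrimination on its own, but it flags a model for closer review of how its data was collected and labeled.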

Edge Computing: Traditional data processing happens in centralized data centers, but edge computing moves data storage and computation closer to the data source. This trend is particularly important for applications where real-time data processing is essential, such as IoT devices and autonomous vehicles. For data science students, understanding edge computing is crucial for working with decentralized systems that prioritize speed and efficiency.
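A minimal sketch of the edge-computing idea: instead of streaming every raw reading to a data center, a device summarizes a window of readings locally and forwards only a compact summary plus any readings that cross an alert threshold. The threshold, the readings, and the message format are hypothetical.

```python
from statistics import mean

ALERT_THRESHOLD = 90.0  # hypothetical limit, e.g. a temperature in degrees Celsius

def summarize_on_device(readings, threshold=ALERT_THRESHOLD):
    """Reduce a window of raw sensor readings to a small message for the central server."""
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": [r for r in readings if r > threshold],  # only unusual values are sent in full
    }

window = [71.2, 70.8, 72.5, 95.1, 71.9, 70.4]
message = summarize_on_device(window)
print(message)  # one small dict goes upstream instead of every raw reading
```

The trade-off is that the central system only sees what the device chose to send, so the summary has to be designed around the decisions that need to be made downstream.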

Automated Machine Learning (AutoML): AutoML is revolutionizing the data science workflow by automating parts of the machine learning pipeline, such as feature selection, model selection, and hyperparameter tuning. This allows data scientists to focus on higher-level tasks and decision-making, while machines handle repetitive processes. AutoML is especially beneficial for those looking to build models quickly, making it a valuable tool for students entering fast-paced industries.
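The hyperparameter-tuning part of AutoML is, at its core, a search loop: propose a configuration, train, score it on held-out data, and keep the best. The sketch below shows only that piece, as an exhaustive grid search over `k` for a tiny k-nearest-neighbours classifier written in plain Python. Real AutoML tools search much larger spaces (several model families, preprocessing steps) with smarter strategies such as Bayesian optimization; the data and candidate values here are hypothetical.

```python
import math
from collections import Counter

# Hypothetical training data: (features, label). The point (2, 2) is a noisy label.
TRAIN = [((0, 0), 0), ((1, 0), 0), ((0, 1), 0), ((1, 1), 0),
         ((5, 5), 1), ((6, 5), 1), ((5, 6), 1), ((6, 6), 1), ((2, 2), 1)]
VALID = [((1.9, 1.8), 0), ((5.5, 5.5), 1), ((0.5, 0.5), 0), ((4.6, 4.4), 1)]

def knn_predict(point, train, k):
    """Majority vote among the k training points closest to `point`."""
    nearest = sorted(train, key=lambda item: math.dist(point, item[0]))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(k):
    """Fraction of validation points the k-NN model classifies correctly."""
    hits = sum(knn_predict(x, TRAIN, k) == y for x, y in VALID)
    return hits / len(VALID)

# The search step: score every candidate configuration and keep the best one.
candidates = [1, 3, 5]
scores = {k: accuracy(k) for k in candidates}
best_k = max(candidates, key=scores.get)
print(scores, "best k =", best_k)
```

With `k = 1` the noisy training point misleads the prediction for a nearby validation point, and larger `k` smooths it out; the search discovers this automatically, which is the kind of repetitive work AutoML takes off a data scientist’s hands.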

These trends are transforming data science by expanding its applications and pushing the boundaries of what’s possible. For M.Sc. students, understanding and working with these trends is critical to becoming versatile, capable professionals.

Impact of These Trends on the Field

The rise of these trends is reshaping the roles and expectations within data science:

AI and Deep Learning Expertise: As more industries adopt AI solutions, data scientists are expected to have expertise in these areas. Familiarity with deep learning frameworks, like TensorFlow and PyTorch, is becoming a standard requirement for many advanced data science roles.

Ethical Awareness: Data scientists today must be vigilant about ethical considerations, particularly as governments introduce stricter regulations on data privacy. Understanding frameworks like GDPR (General Data Protection Regulation) and HIPAA (Health Insurance Portability and Accountability Act) is essential, and data scientists are increasingly being tasked with ensuring compliance.

Adaptability to Decentralized Data Processing: The shift to edge computing is prompting companies to look for data scientists with knowledge of distributed systems and cloud services. This trend creates opportunities in fields where real-time processing is critical, such as healthcare and autonomous vehicles.

Efficiency through Automation: AutoML allows data scientists to streamline their workflows and build prototypes faster. This trend is leading to a shift in roles, where professionals focus more on strategy and business impact rather than model building alone.

Adapting as a Student

To succeed in this rapidly evolving landscape, M.Sc. students must adopt a proactive approach to learning and development. Here are some tips on how to stay updated with these trends:

Enroll in Specialized Courses: Many M.Sc. programs, like those at SCMIRT, offer courses tailored to emerging technologies. Enrolling in electives focused on AI, deep learning, and big data can provide a solid foundation in the latest trends.

Participate in Research and Projects: Engaging in research projects allows students to apply theoretical knowledge to real-world scenarios. Projects related to data ethics, edge computing, and AutoML are particularly relevant and can provide valuable hands-on experience.

Utilize Online Resources: Platforms like Coursera, Udacity, and edX offer courses on the latest technologies and tools in data science. Supplementing your coursework with these online resources can help you stay ahead in the field.

Attend Conferences and Workshops: Events like the IEEE International Conference on Data Science or regional workshops are great opportunities to learn from experts and network with peers. SCMIRT encourages students to participate in such events, where they can gain insights into industry trends and emerging tools.

Follow Industry Publications and Blogs: Resources like Medium, Towards Data Science, and KDnuggets regularly publish articles on the latest trends in data science. Reading these publications helps students stay informed and engaged with the field.

As data science continues to evolve, emerging trends such as AI, deep learning, data ethics, edge computing, and AutoML are reshaping the field. For M.Sc. students, understanding and adapting to these trends is essential for building a successful career. Programs like those at Suryadatta College of Management, Information Research & Technology (SCMIRT) equip students with the knowledge and skills needed to navigate these changes, blending a strong academic foundation with exposure to real-world applications. By adopting a continuous learning mindset and staying engaged with the latest developments, M.Sc. students can position themselves as adaptable, innovative professionals ready to make an impact in the ever-evolving world of data science.

Title: Unlocking Insights: A Comprehensive Guide to Data Science

Introduction

Overview of Data Science: Define data science and its importance in today’s data-driven world. Explain how it combines statistics, computer science, and domain expertise to extract meaningful insights from data.

Purpose of the Guide: Outline what readers can expect to learn, including key concepts, tools, and applications of data science.

Chapter 1: The Foundations of Data Science

What is Data Science?: Delve into the definition and scope of data science.

Key Concepts: Introduce core concepts like big data, data mining, and machine learning.

The Data Science Lifecycle: Describe the stages of a data science project, from data collection to deployment.

Chapter 2: Data Collection and Preparation

Data Sources: Discuss various sources of data (structured vs. unstructured) and the importance of data quality.

Data Cleaning: Explain techniques for handling missing values, outliers, and inconsistencies.

Data Transformation: Introduce methods for data normalization, encoding categorical variables, and feature selection.
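The cleaning and transformation steps outlined in Chapter 2 can be illustrated with a short standard-library sketch: median imputation for missing values, an interquartile-range (IQR) rule for flagging outliers, min-max normalization, and one-hot encoding of a categorical column. These are common choices rather than the only ones, and the sample values are hypothetical.

```python
from statistics import median, quantiles

def impute_median(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def iqr_outliers(values, factor=1.5):
    """Flag values outside [Q1 - factor*IQR, Q3 + factor*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - factor * iqr, q3 + factor * iqr
    return [v for v in values if v < low or v > high]

def min_max(values):
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(categories):
    """Encode each category as a 0/1 vector, one position per distinct (sorted) category."""
    levels = sorted(set(categories))
    return levels, [[1 if c == level else 0 for level in levels] for c in categories]

ages = [23, None, 31, 27, 29, 120]  # None = missing value, 120 = suspicious entry
ages = impute_median(ages)
print(ages)
print(iqr_outliers(ages))
print(min_max([0, 5, 10]))
print(one_hot(["red", "blue", "red"]))
```

Median imputation is used here because, unlike the mean, it is not pulled upward by the suspicious value of 120.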

Chapter 3: Exploratory Data Analysis (EDA)

Importance of EDA: Highlight the role of EDA in understanding data distributions and relationships.

Visualization Tools: Discuss tools and libraries (e.g., Matplotlib, Seaborn, Tableau) for data visualization.

Statistical Techniques: Introduce basic statistical methods used in EDA, such as correlation analysis and hypothesis testing.
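Correlation analysis in EDA usually starts with Pearson’s coefficient: the covariance of two variables divided by the product of their standard deviations. A minimal sketch with made-up data follows; Python 3.10 and later also provide `statistics.correlation`, which computes the same quantity.

```python
import math

def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

ad_spend = [1, 2, 3, 4, 5]  # hypothetical weekly ad spend
sales = [2, 4, 5, 4, 5]     # hypothetical weekly sales
print(round(pearson(ad_spend, sales), 3))
```

A value near 1 or -1 indicates a strong linear relationship and a value near 0 a weak one; it says nothing about causation, which is why EDA pairs it with hypothesis testing and domain knowledge.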

Chapter 4: Machine Learning Basics

What is Machine Learning?: Define machine learning and its categories (supervised, unsupervised, reinforcement learning).

Key Algorithms: Provide an overview of popular algorithms, including linear regression, decision trees, clustering, and neural networks.

Model Evaluation: Discuss metrics for evaluating model performance (e.g., accuracy, precision, recall) and techniques like cross-validation.
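The evaluation metrics named in Chapter 4 follow directly from counts of true and false positives and negatives. In its simplest form, k-fold cross-validation partitions the data so that every example is used for validation exactly once. A plain-Python sketch with hypothetical labels:

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives, false negatives, true negatives for 0/1 labels."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn

def metrics(y_true, y_pred):
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return {
        "accuracy": (tp + tn) / len(y_true),
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # of predicted positives, share correct
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # of actual positives, share found
    }

def k_fold_indices(n, k):
    """Yield (train, validation) index lists; each index is validated exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1]
print(metrics(y_true, y_pred))
for train, val in k_fold_indices(len(y_true), k=3):
    print("train:", train, "validate:", val)
```

In practice the data are usually shuffled (or stratified by class) before splitting into folds; this sketch uses contiguous folds to keep the idea visible.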

Chapter 5: Advanced Topics in Data Science

Deep Learning: Introduce deep learning concepts and frameworks (e.g., TensorFlow, PyTorch).

Natural Language Processing (NLP): Discuss the applications of NLP and relevant techniques (e.g., sentiment analysis, topic modeling).

Big Data Technologies: Explore tools and frameworks for handling large datasets (e.g., Hadoop, Spark).
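Hadoop MapReduce is built around, and Spark generalizes, the map, shuffle, and reduce pattern. The sketch below runs that pattern on a single machine in plain Python as a word count. It illustrates the programming model only, not the distribution, fault tolerance, or storage layers those frameworks provide.

```python
from collections import defaultdict
from itertools import chain

def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input record."""
    return [(word.lower(), 1) for word in line.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key (here, by summing)."""
    return {key: sum(values) for key, values in groups.items()}

documents = ["big data tools", "Spark and Hadoop process big data"]
counts = reduce_phase(shuffle(chain.from_iterable(map(map_phase, documents))))
print(counts)
```

Because each map call sees only one record and each reduce call sees only one key, both phases can run in parallel across many machines, which is what lets these frameworks scale to very large datasets.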

Chapter 6: Applications of Data Science

Industry Use Cases: Highlight how various industries (healthcare, finance, retail) leverage data science for decision-making.

Real-World Projects: Provide examples of successful data science projects and their impact.

Chapter 7: Tools and Technologies for Data Science

Programming Languages: Discuss the significance of Python and R in data science.

Data Science Libraries: Introduce key libraries (e.g., Pandas, NumPy, Scikit-learn) and their functionalities.

Data Visualization Tools: Overview of tools used for creating impactful visualizations.

Chapter 8: The Future of Data Science

Trends and Innovations: Discuss emerging trends such as AI ethics, automated machine learning (AutoML), and edge computing.

Career Pathways: Explore career opportunities in data science, including roles like data analyst, data engineer, and machine learning engineer.

Conclusion

Key Takeaways: Summarize the main points covered in the guide.

Next Steps for Readers: Encourage readers to continue their learning journey, suggest resources (books, online courses, communities), and provide tips for starting their own data science projects.

Advancing Technology: The New Developments in Data Science, AI, and ML Training

As industries become increasingly data-driven, demand for professionals skilled in data science, AI, and machine learning (ML) is at an all-time high. These fields are reshaping business processes, creating more intelligent systems, and driving innovation across sectors such as healthcare, finance, and retail. Because these technologies evolve rapidly, professionals need to stay current through comprehensive data science, AI, and ML training programs to remain competitive in their careers.

New Trends in Data Science, AI, and ML

New trends are transforming how data science, AI, and ML are used across industries. Explainable AI (XAI) focuses on making machine learning models more transparent, helping build trust in AI-driven decisions. This is crucial for fields like healthcare and autonomous driving, where understanding AI decisions is vital. Additionally, Federated Learning allows machine learning models to train across multiple devices without sharing raw data, addressing privacy concerns in sensitive industries like healthcare and finance, where data security is essential.
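A minimal sketch of the federated idea, in the spirit of federated averaging (FedAvg): each client fits a model on its own data and shares only the fitted parameters, and the server combines them, weighted by each client’s number of examples. To stay short, each client here fits a one-parameter model (y ≈ w * x by least squares) in closed form. The client data are hypothetical, and real systems repeat this over many rounds with gradient-based local training.

```python
def local_fit(xs, ys):
    """Least-squares slope for y ≈ w * x, computed on one client's private data."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)

def federated_average(updates):
    """Server step: average client weights, weighted by how many examples each client holds."""
    total = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total

# Hypothetical stand-ins for private datasets held on separate devices.
clients = {
    "hospital_a": ([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]),  # local slope 2
    "hospital_b": ([1.0, 2.0], [3.0, 6.0]),            # local slope 3
}

# Each client sends only (weight, number_of_examples) to the server, never raw records.
updates = [(local_fit(xs, ys), len(xs)) for xs, ys in clients.values()]
global_w = federated_average(updates)
print(global_w)
```

Weighting by example count keeps a small client from pulling the shared model as hard as a large one. Sharing parameters instead of data reduces, but does not by itself eliminate, privacy risk, which is why federated learning is often combined with secure aggregation or differential privacy.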

The Role of Automation and AutoML

Automation is revolutionizing data science and AI workflows. AutoML (Automated Machine Learning) is a game-changer that allows developers to automate tasks such as feature engineering, model selection, and hyperparameter tuning, significantly reducing the time and expertise required to develop high-performing models. AutoML is particularly beneficial for businesses that want to implement machine learning solutions but lack the in-house expertise to build models from scratch. Moreover, automation enables faster deployment and scalability, allowing organizations to stay ahead of competitors and respond quickly to market demands.

Additionally, tools like DataRobot and Google’s AutoML are empowering non-technical teams to harness the power of AI and ML without deep coding knowledge. Professionals who undergo data science, AI, and ML training gain hands-on experience with these automation tools, preparing them for real-world applications and equipping them to lead innovation in their organizations.

Why Data Science, AI, and ML Training is Essential

With rapid technological advancements, continuous learning is essential for professionals in data science, AI, and ML. Training programs have evolved beyond basic tools like Python and TensorFlow to cover new frameworks, ethical considerations, and innovations in deep learning and neural networks. Training also emphasizes data governance, which is crucial as companies handle increasing amounts of sensitive data; understanding how to manage and secure large datasets is vital for any data professional. Data science, AI, and ML training equips professionals to apply these technologies in practical scenarios, from personalized recommendations to predictive modeling for business efficiency.

Preparing for the Future with Data Science, AI, and ML Training

In an era of rapid technological change, investing in data science, AI, and ML training is essential to staying competitive. These programs equip professionals with the latest tools and techniques while preparing them to implement cutting-edge solutions in their respective industries. Web Age Solutions offers comprehensive training, ensuring participants gain the expertise necessary to excel in this rapidly evolving landscape and drive future advancements in their fields. This investment helps professionals future-proof their careers while positioning organizations for sustained success in a data-driven world.

For more information visit: https://www.webagesolutions.com/courses/data-science-ai-ml-training

Leading the Future: Top 10 Companies in Artificial Intelligence

Artificial intelligence (AI) is transforming the world with solutions that enhance operations, automate tasks, and drive innovation across industries. As AI technology continues to evolve, businesses seek the best artificial intelligence companies to help them stay ahead of the curve. This blog highlights the top 10 AI companies at the forefront of the field, with Vee Technologies leading the list due to its pioneering AI solutions and expertise.

1. Vee Technologies

Vee Technologies stands as a leader among artificial intelligence companies, providing innovative AI solutions tailored to multiple industries, including healthcare, finance, and manufacturing.

From predictive analytics to robotic process automation (RPA) and natural language processing (NLP), Vee Technologies delivers cutting-edge AI technology that optimizes processes and improves decision-making. Its focus on real-world applications makes it one of the best AI companies for businesses seeking transformative solutions.

2. IBM

IBM offers Watson AI, a suite of AI services including machine learning, natural language processing (NLP), and analytics. IBM’s AI services are widely used in healthcare, finance, and customer service industries to automate workflows, generate insights, and build conversational bots.

Key AI Services:

Watson Assistant (Conversational AI)

Watson Studio (Machine Learning)

NLP tools for business insights

AI-powered data analytics

3. Google — Cloud AI & TensorFlow

Google is a pioneer in AI with products like Google Cloud AI and the open-source machine learning library, TensorFlow. Its AI services power everything from search engines to virtual assistants. Google also focuses on AI for healthcare and smart devices.

Key AI Services:

TensorFlow (ML framework)

Google Cloud AI & Vertex AI (AI solutions on the cloud)

Google Assistant (Voice AI)

AutoML (Custom ML models)

4. Microsoft — Azure AI

Microsoft’s Azure AI platform offers a range of cloud-based AI tools, enabling businesses to build intelligent applications. Microsoft integrates AI into productivity tools like Office 365 and Teams, enhancing automation and collaboration.

Key AI Services:

Azure Machine Learning

Computer Vision and Speech Services

Power BI (AI-powered analytics)

Azure OpenAI Service

5. Amazon — AWS AI

Amazon provides powerful AI tools through AWS AI, which helps companies build intelligent applications. Amazon also uses AI in its own products, such as the Alexa voice assistant and the recommendation engines behind its retail store.

Key AI Services:

Amazon SageMaker (Machine Learning)

Amazon Lex (Chatbots)

Amazon Rekognition (Image analysis)

Amazon Polly (Text-to-Speech)

6. OpenAI — GPT and DALL-E

OpenAI is known for developing state-of-the-art AI models like GPT and DALL-E. These tools are transforming industries by enabling content generation, code writing, and advanced NLP capabilities.

Key AI Services:

GPT (Conversational AI)

DALL-E (Image generation from text)

Codex (AI-powered coding)

7. Meta — AI for Social Platforms and Metaverse

Meta integrates AI technology into its platforms like Facebook, Instagram, and WhatsApp. The company is also using AI to develop immersive experiences for the metaverse.

Key AI Services:

AI algorithms for content recommendations

Computer vision for image recognition

AI-powered chat and moderation tools

Research in augmented reality (AR) and virtual reality (VR)

8. Tesla — AI for Autonomous Driving

Tesla relies heavily on AI technology for its self-driving cars and smart manufacturing processes. Tesla’s AI models are designed to enable fully autonomous vehicles.

Key AI Services:

Full Self-Driving (FSD) AI

Neural Networks for vehicle safety

AI-powered manufacturing automation

9. NVIDIA — AI Hardware and Software

NVIDIA is a leader in providing hardware and software solutions for AI development. Its GPUs are widely used in AI research and deep learning applications. NVIDIA’s platforms power everything from gaming to autonomous vehicles.

Key AI Services:

GPUs for AI and ML training

CUDA (Parallel computing platform)

NVIDIA Omniverse (AI for virtual environments)

Deep learning libraries (e.g., cuDNN, TensorRT)

10. SAP — AI for Enterprise Solutions

SAP integrates AI into its enterprise software to optimize operations and drive business transformation. AI capabilities within SAP help automate tasks, forecast trends, and improve customer engagement.

Key AI Services:

AI-powered ERP and CRM tools

Predictive analytics and forecasting

Intelligent automation in business processes

AI-enabled chatbots and virtual assistants

Conclusion

These companies are shaping the future of AI technology, each offering specialized solutions to meet business needs. From cloud AI platforms and predictive analytics to automation and autonomous systems, their innovations are driving the adoption of AI services across industries. Leading the list is Vee Technologies, which stands out for its ability to deliver real-world AI solutions that drive success across industries. Partnering with the right AI company can help businesses unlock new opportunities and thrive in a rapidly evolving landscape.

The Future of Data Science: Emerging Trends and Innovations

Data science is a rapidly evolving field that continues to shape how businesses and organizations operate. As technology advances and new challenges emerge, several key trends and innovations are poised to influence the future of data science. Here’s a look at some of the most promising developments on the horizon:

1. Integration of Artificial Intelligence and Machine Learning

The integration of Artificial Intelligence (AI) and Machine Learning (ML) with data science is set to deepen, driving more sophisticated analyses and predictions. AI and ML algorithms will continue to enhance the capabilities of data science, enabling more accurate predictions, automating complex tasks, and providing deeper insights. Innovations in areas like reinforcement learning and neural networks will further expand the potential applications of data science in fields ranging from finance to healthcare.

2. Advanced Data Analytics Platforms

The rise of advanced data analytics platforms is transforming how data is processed and analyzed. Cloud-based platforms are increasingly offering scalable solutions for managing large volumes of data and running complex analyses. Additionally, tools that integrate real-time analytics with streaming data will become more prevalent, allowing businesses to make instantaneous decisions based on the latest information. These platforms will also facilitate better collaboration among data scientists by providing shared workspaces and integrated tools.

3. Growth of Edge Computing

Edge computing is emerging as a crucial trend in data science, particularly with the proliferation of Internet of Things (IoT) devices. By processing data closer to the source — at the edge of the network — this approach reduces latency and bandwidth usage, enabling faster and more efficient data analysis. Edge computing will become increasingly important for applications that require real-time processing, such as autonomous vehicles, smart cities, and industrial IoT.

4. Enhanced Data Privacy and Security

As data science continues to evolve, so does the need for robust data privacy and security measures. Innovations in encryption, anonymization, and secure multi-party computation will play a critical role in protecting sensitive data and ensuring compliance with privacy regulations. The development of privacy-preserving techniques, such as federated learning, will allow organizations to train models on decentralized data without compromising individual privacy.
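Secure multi-party computation covers many protocols. One of the simplest building blocks is additive secret sharing, which lets several parties learn the sum of their private values without revealing the individual values. The sketch below is a toy, assuming honest participants and using Python’s `secrets` module for randomness; it is not a production protocol.

```python
import secrets

MODULUS = 2**61 - 1  # all arithmetic is done modulo a large number

def share(value, n_parties):
    """Split `value` into n random-looking shares that sum to `value` modulo MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values):
    """Each party splits its value into shares; each party adds up the shares it receives."""
    n = len(private_values)
    all_shares = [share(v, n) for v in private_values]          # row i: shares made by party i
    partial_sums = [sum(row[j] for row in all_shares) % MODULUS  # party j sums what it received
                    for j in range(n)]
    return sum(partial_sums) % MODULUS  # combining partial sums reveals only the total

salaries = [52_000, 61_000, 58_500]  # hypothetical private inputs
print(secure_sum(salaries))
```

Any single share is uniformly random on its own, so no party learns another party’s value from the shares it receives; only the combined total is revealed.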

5. Expansion of Explainable AI (XAI)

Explainable AI (XAI) is gaining traction as a response to the growing need for transparency in AI and ML models. As AI systems become more complex, understanding how they make decisions is crucial for building trust and ensuring ethical use. Advances in XAI will provide clearer insights into how models arrive at their predictions, helping stakeholders interpret results and address potential biases.
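One widely used, model-agnostic XAI technique is permutation feature importance: shuffle one input feature and measure how much the model’s error grows. The sketch uses a fixed, hand-written stand-in for a trained model and hypothetical data so that the result is easy to check; with a real model, its prediction function would take the place of `model`.

```python
import random

def model(row):
    """Stand-in for a trained model: depends strongly on feature 0 and not at all on feature 1."""
    return 3.0 * row[0] + 0.0 * row[1]

def mse(rows, targets):
    """Mean squared error of the model on the given rows."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, feature, repeats=20, seed=0):
    """Average increase in error after randomly shuffling one feature's column."""
    rng = random.Random(seed)
    baseline = mse(rows, targets)
    increases = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:feature] + [v] + r[feature + 1:] for r, v in zip(rows, column)]
        increases.append(mse(shuffled, targets) - baseline)
    return sum(increases) / repeats

rows = [[1.0, 5.0], [2.0, 1.0], [3.0, 4.0], [4.0, 2.0], [5.0, 3.0]]
targets = [3.0, 6.0, 9.0, 12.0, 15.0]
for f in (0, 1):
    print(f"feature {f}: importance {permutation_importance(rows, targets, f):.2f}")
```

Feature 1 scores zero because the model ignores it, while shuffling feature 0 destroys its predictions. This kind of evidence helps stakeholders see which inputs actually drive a model’s decisions.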

6. Adoption of Automated Machine Learning (AutoML)

Automated Machine Learning (AutoML) is streamlining the process of building and deploying ML models by automating repetitive tasks such as feature selection, hyperparameter tuning, and model selection. AutoML tools are making it easier for non-experts to develop effective models and enabling data scientists to focus on more strategic aspects of their work. As AutoML technology matures, it will further democratize access to advanced data science capabilities.

7. Emphasis on Ethical AI and Data Governance

With the growing impact of AI and data science, ethical considerations and data governance will become increasingly important. Organizations will need to establish frameworks for responsible AI use, including guidelines for fairness, accountability, and transparency. Data governance practices will also need to address issues related to data quality, ownership, and compliance, ensuring that data is used ethically and in accordance with regulations.

8. Development of Advanced Data Visualization Techniques

The future of data science will see continued advancements in data visualization, making it easier to interpret and communicate complex data insights. Innovations in interactive visualizations, augmented reality (AR), and virtual reality (VR) will provide new ways to explore and present data, enhancing the ability to convey insights and drive decision-making.

Transforming Predictive Maintenance with Sriya Expert Index++ (SXI++): A New Era of Accuracy and Efficiency

In the ever-evolving world of manufacturing and industrial operations, predictive maintenance has become a critical focus area, where the ability to accurately forecast equipment failure and optimize tool usage can significantly impact operational efficiency and cost savings. The Sriya Expert Index++ (SXI++) is a cutting-edge innovation that revolutionizes predictive maintenance by applying advanced artificial intelligence and machine learning algorithms to transform complex, multi-dimensional data into actionable insights.

Understanding the Sriya Expert Index++ (SXI++):

The Sriya Expert Index++ is a dynamic score or index derived from a proprietary formula that integrates the power of 10 AI-ML algorithms. This index acts as a super feature, representing all the critical features in a dataset as a single, weighted score. The uniqueness of SXI++ lies in its ability to simplify multi-dimensional, challenging problems into more manageable two-dimensional solutions, enabling clearer interpretation and more effective decision-making.

SXI++ dynamically adjusts the weights of its algorithms in response to real-time data inputs, ensuring that the most significant features receive the necessary emphasis. This adaptability is crucial in manufacturing environments, where equipment and operational conditions can change rapidly, and timely responses are essential to prevent costly downtime.

SXI++ also incorporates a proprietary deep neural network, which allows the system to keep improving its precision, classification, and scoring through experience rather than relying solely on traditional algorithms. As a result, its precision and reliability improve over time, making it an increasingly powerful tool for predictive maintenance.
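The SXI++ formula is proprietary and unpublished, so the following is not SXI++. It is only a generic illustration of the broader idea described above: combining several models’ scores into one weighted index and re-weighting the models as new data arrives. The sketch uses inverse-error weighting, a common textbook choice; the three model scores and their recent errors are hypothetical.

```python
def inverse_error_weights(recent_errors, eps=1e-9):
    """Give each model a weight proportional to 1 / (its recent error); weights sum to 1."""
    raw = [1.0 / (e + eps) for e in recent_errors]
    total = sum(raw)
    return [r / total for r in raw]

def combined_index(model_scores, weights):
    """Collapse several model outputs into a single weighted score."""
    return sum(s * w for s, w in zip(model_scores, weights))

scores = [0.80, 0.60, 0.90]  # hypothetical outputs of three models for one machine
errors = [0.10, 0.40, 0.20]  # hypothetical recent error of each model
weights = inverse_error_weights(errors)
print([round(w, 3) for w in weights], round(combined_index(scores, weights), 3))

# If new data shows the second model has become more accurate, its weight rises automatically.
weights = inverse_error_weights([0.10, 0.05, 0.20])
print([round(w, 3) for w in weights])
```

Recomputing the weights from a rolling window of recent errors is one simple way to make such an index adapt to changing operating conditions.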

SXI++’s Impact on Predictive Maintenance:

The application of SXI++ in predictive maintenance is transformative. By simplifying and streamlining complex datasets, SXI++ enables organizations to predict tool wear and failure with greater accuracy. For instance, a recent application used a synthetic milling-process dataset from Kaggle to predict Tool Use Time, a critical factor in determining when maintenance should be performed. The dataset comprised 10,000 rows and 13 features describing various aspects of the milling process. An AutoML approach, using algorithms such as XGBoost and Random Forest, achieved an accuracy of 82%; with SXI++ integrated, accuracy rose to 97%.

[Figures: Result; Correlation Graph]

These metrics underscore SXI++'s capability to not only enhance prediction accuracy but also to focus on the most significant factors affecting Tool Use Time. By providing a clear and accurate representation of the factors influencing tool wear, SXI++ empowers maintenance teams to make informed decisions, reducing the likelihood of unexpected breakdowns and extending the operational lifespan of equipment.

Moreover, SXI++ offers a comprehensive decision-making framework through current and target decision trees. These visual representations of the factors influencing tool wear allow maintenance teams to pinpoint potential issues and take targeted actions to prevent them. By concentrating on the most critical features, SXI++ helps organizations optimize maintenance schedules, reduce downtime, and improve overall operational efficiency.

Top Azure Services for Data Analytics and Machine Learning

In today’s data-driven world, mastering powerful cloud tools is essential. Microsoft Azure offers a suite of cloud-based services designed for data analytics and machine learning, and getting trained on these services can significantly boost your career. Whether you're looking to build predictive models, analyze large datasets, or integrate AI into your applications, Azure provides the tools you need. Here’s a look at some of the top Azure services for data analytics and machine learning, and how Microsoft Azure training can help you leverage these tools effectively.

1. Azure Synapse Analytics

Formerly known as Azure SQL Data Warehouse, Azure Synapse Analytics is a unified analytics service that brings together big data processing and data warehousing. Specialized Microsoft Azure training can help you make full use of its capabilities.

Features:

Integrates with Azure Data Lake Storage for scalable storage.

Supports both serverless and provisioned resources for cost-efficiency.

Provides seamless integration with Power BI for advanced data visualization.

Use Cases: Data warehousing, big data analytics, and real-time data processing.

Training Benefits: Microsoft Azure training will help you understand how to set up and optimize Azure Synapse Analytics for your organization’s specific needs.

2. Azure Data Lake Storage (ADLS)

Azure Data Lake Storage is optimized for high-performance analytics on large datasets. Proper training in Microsoft Azure can help you manage and utilize this service more effectively.

Features:

Optimized for large-scale data processing.

Supports hierarchical namespace for better organization.

Integrates with Azure Synapse Analytics and Azure Databricks.

Use Cases: Big data storage, complex data processing, and analytics on unstructured data.

Training Benefits: Microsoft Azure training provides insights into best practices for managing and analyzing large datasets with ADLS.
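A toy model can show why a hierarchical namespace matters. In a flat object namespace, a "directory" is only a shared name prefix, so renaming it means rewriting every object under that prefix. With a hierarchical namespace, the directory is a real node and the rename is a single metadata operation. The classes below are hypothetical and do not use the ADLS SDK. They only count the operations each approach needs.

```python
class FlatStore:
    """Flat namespace: directories are just name prefixes like 'raw/2024/a.csv'."""

    def __init__(self, paths):
        self.objects = {p: b"" for p in paths}

    def rename_dir(self, old, new):
        ops = 0
        for path in list(self.objects):  # snapshot keys before mutating
            if path.startswith(old + "/"):
                # Every object under the prefix has to be rewritten under the new name.
                self.objects[new + path[len(old):]] = self.objects.pop(path)
                ops += 1
        return ops


class HierStore:
    """Hierarchical namespace: directories are real nodes in a tree."""

    def __init__(self, paths):
        self.root = {}
        for p in paths:
            *dirs, leaf = p.split("/")
            node = self.root
            for d in dirs:
                node = node.setdefault(d, {})
            node[leaf] = b""

    def rename_dir(self, old, new):
        # Sketch assumption: old and new live in the same parent directory.
        *parents, old_name = old.split("/")
        new_name = new.split("/")[-1]
        node = self.root
        for d in parents:
            node = node[d]
        node[new_name] = node.pop(old_name)  # relink one directory node
        return 1  # a single metadata operation


paths = [f"raw/2024/part-{i}.csv" for i in range(1000)]
print(FlatStore(paths).rename_dir("raw/2024", "raw/archive-2024"))  # 1000
print(HierStore(paths).rename_dir("raw/2024", "raw/archive-2024"))  # 1
```

The gap grows with the number of files in the directory, which is why analytics jobs that write to a staging folder and then rename it benefit from the hierarchical model.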

3. Azure Machine Learning

Azure Machine Learning offers a comprehensive suite for building, training, and deploying machine learning models. Enrolling in Microsoft Azure training can give you the expertise needed to harness its full potential.

Features:

Automated Machine Learning (AutoML) for faster model development.

MLOps capabilities for model management and deployment.

Integration with Jupyter Notebooks and popular frameworks like TensorFlow and PyTorch.

Use Cases: Predictive modeling, custom machine learning solutions, and AI-driven applications.

Training Benefits: Microsoft Azure training will equip you with the skills to efficiently use Azure Machine Learning for your projects.
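At its core, AutoML automates a search loop: try candidate models or hyperparameter settings, score each one on held-out data, and keep the best. The sketch below shows only that loop. It chooses `k` for a tiny k-nearest-neighbours regressor by validation mean squared error. It does not use the Azure Machine Learning SDK, and services such as Azure Machine Learning's AutoML also handle featurization, many algorithm families, and ensembling. All names here are hypothetical.

```python
import random


def knn_predict(train, x, k):
    # Average the targets of the k training points closest to x.
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def validation_mse(train, valid, k):
    # Mean squared error of the k-NN model on the held-out rows.
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in valid) / len(valid)


def auto_select(data, candidate_ks, valid_frac=0.25, seed=0):
    """Score every candidate configuration and return the best one plus all scores."""
    rows = list(data)
    random.Random(seed).shuffle(rows)  # reproducible train/validation split
    n_valid = max(1, int(len(rows) * valid_frac))
    valid, train = rows[:n_valid], rows[n_valid:]
    scores = {k: validation_mse(train, valid, k) for k in candidate_ks if k <= len(train)}
    best_k = min(scores, key=scores.get)
    return best_k, scores


data = [(x, 2.0 * x) for x in range(40)]
best_k, scores = auto_select(data, [1, 3, 5, 10])
print(best_k, scores)
```

A production AutoML run widens the search space and uses cross-validation or smarter search strategies, but the "evaluate candidates on held-out data, keep the winner" contract is the same.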

4. Azure Databricks

Azure Databricks is an Apache Spark-based analytics platform that facilitates collaborative work among data scientists, data engineers, and business analysts. Microsoft Azure training can help you leverage its full potential.

Features:

Fast, interactive, and scalable big data analytics.

Unified analytics platform that integrates with Azure Data Lake and Azure Synapse Analytics (formerly Azure SQL Data Warehouse).

Built-in collaboration tools for shared workspaces and notebooks.

Use Cases: Data engineering, real-time analytics, and collaborative data science projects.

Training Benefits: Microsoft Azure training programs can teach you how to use Azure Databricks effectively for collaborative data analysis.

5. Azure Cognitive Services

Azure Cognitive Services (now part of Azure AI services) provides AI APIs that make it easy to add intelligent features to your applications. With Microsoft Azure training, you can integrate these services seamlessly.

Features:

Includes APIs for computer vision, speech recognition, language understanding, and more.

Easy integration with existing applications through REST APIs.

Customizable models for specific business needs.

Use Cases: Image and speech recognition, language translation, and sentiment analysis.

Training Benefits: Microsoft Azure training will guide you on how to incorporate Azure Cognitive Services into your applications effectively.
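The REST integration mentioned above can be sketched with Python's standard library. This assumes the Text Analytics v3.1 sentiment route (`/text/analytics/v3.1/sentiment`) and the `Ocp-Apim-Subscription-Key` header. Newer Language resources also expose an `analyze-text` API, so check the current reference before relying on this route. The endpoint and key are placeholders, and the helper name `build_sentiment_request` is hypothetical. The function only builds the request, so it can be inspected without network access or credentials.

```python
import json
import urllib.request


def build_sentiment_request(endpoint, key, texts, language="en"):
    """Build (but do not send) a POST request for sentiment analysis."""
    body = {
        "documents": [
            {"id": str(i), "language": language, "text": t}
            for i, t in enumerate(texts, start=1)
        ]
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/text/analytics/v3.1/sentiment",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,  # resource key (placeholder)
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_sentiment_request(
    "https://example-resource.cognitiveservices.azure.com/",  # placeholder endpoint
    "YOUR-KEY",
    ["The new dashboard is great.", "Setup took far too long."],
)
# Sending it requires a real resource and key:
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
```

Keeping request construction separate from sending makes the payload easy to unit-test, and the same pattern applies to the other REST-based AI APIs.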

6. Azure HDInsight

Azure HDInsight is a fully managed cloud service that simplifies big data processing using popular open-source frameworks. Microsoft Azure training can help you get the most out of this service.

Features:

Supports big data technologies like Hadoop, Spark, and Hive.

Integrates with Azure Data Lake and Azure Synapse Analytics (formerly Azure SQL Data Warehouse).

Scalable and cost-effective with pay-as-you-go pricing.

Use Cases: Big data processing, data warehousing, and real-time stream processing.

Training Benefits: Microsoft Azure training will teach you how to deploy and manage HDInsight clusters for efficient big data processing.
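Hadoop, one of the frameworks HDInsight runs, popularized the MapReduce programming model. The sketch below runs the map, shuffle, and reduce phases of a word count in a single Python process to show the data flow. On a real cluster the framework distributes these phases across nodes. The function names are illustrative and are not part of any Hadoop or HDInsight API.

```python
from collections import defaultdict
from itertools import chain


def map_phase(line):
    # Emit a (word, 1) pair for every word in one input line.
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs):
    # Group emitted values by key, as the framework does between map and reduce.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    # Combine the values for each key; for word count, that is a sum.
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines):
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


print(word_count(["big data big", "Data lake"]))
```

Because each map call sees only one line and each reduce call sees only one key, both phases can run in parallel on different machines, which is what makes the model scale.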

7. Azure Stream Analytics

Azure Stream Analytics enables real-time data stream processing. Proper Microsoft Azure training can help you set up and manage real-time analytics pipelines effectively.

Features:

Real-time data processing with low-latency and high-throughput capabilities.

Integration with Azure Event Hubs and Azure IoT Hub for data ingestion.

Outputs results to Azure Blob Storage, Power BI, and other destinations.

Use Cases: Real-time data analytics, event monitoring, and IoT data processing.

Training Benefits: Microsoft Azure training programs cover how to use Azure Stream Analytics to build efficient real-time data pipelines.
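Azure Stream Analytics jobs are written in a SQL-like query language, where a windowed aggregate uses a clause such as `GROUP BY TumblingWindow(second, 10)`. The plain-Python sketch below only illustrates what a tumbling window computes: events are bucketed into fixed, non-overlapping intervals and aggregated per interval. It is not the Stream Analytics runtime. The function name and the `(timestamp_seconds, value)` event shape are assumptions, and the sketch labels each window by its start time.

```python
from collections import defaultdict


def tumbling_window_avg(events, window_seconds):
    """Average (timestamp_seconds, value) events per fixed, non-overlapping window.

    Returns {window_start: average_value}, ordered by window start.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, value in events:
        start = (ts // window_seconds) * window_seconds  # window this event falls into
        sums[start] += value
        counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}


readings = [(0, 20.0), (4, 22.0), (9, 24.0), (10, 30.0), (17, 34.0), (25, 40.0)]
print(tumbling_window_avg(readings, 10))  # {0: 22.0, 10: 32.0, 20: 40.0}
```

A streaming engine produces the same per-window results incrementally as events arrive, and also has to deal with late and out-of-order events, which this batch sketch ignores.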

8. Power BI

While not strictly an Azure service (it is part of Microsoft's Power Platform), Power BI integrates seamlessly with Azure services for advanced data visualization and business intelligence. Microsoft Azure training can help you use Power BI effectively in conjunction with Azure.

Features:

Interactive reports and dashboards.

Integration with Azure Synapse Analytics, Azure Data Lake, and other data sources.

AI-powered insights and natural language queries.

Use Cases: Business intelligence, data visualization, and interactive reporting.

Training Benefits: Microsoft Azure training will show you how to integrate and leverage Power BI for impactful data visualization.

Conclusion

Mastering Microsoft Azure’s suite of services for data analytics and machine learning can transform how you handle and analyze data. Enrolling in Microsoft Azure training will provide you with the skills and knowledge to effectively utilize these powerful tools, leading to more informed decisions and innovative solutions.

Explore Microsoft Azure training options to gain expertise in these services and enhance your career prospects in the data analytics and machine learning fields. Whether you’re starting out or looking to deepen your knowledge, Azure training is your gateway to unlocking the full potential of cloud-based data solutions.


Automated Machine Learning has become essential in data-driven decision-making, allowing domain experts to use machine learning without requiring considerable statistical knowledge. Nevertheless, a major obstacle that many current AutoML systems enc… #AI #ML #Automation
