#AI in Semiconductor Industry

Text

Machine learning applications in semiconductor manufacturing

Machine Learning Applications in Semiconductor Manufacturing: Revolutionizing the Industry

The semiconductor industry is the backbone of modern technology, powering everything from smartphones and computers to autonomous vehicles and IoT devices. As the demand for faster, smaller, and more efficient chips grows, semiconductor manufacturers face increasing challenges in maintaining precision, reducing costs, and improving yields. Enter machine learning (ML)—a transformative technology that is revolutionizing semiconductor manufacturing. By leveraging ML, manufacturers can optimize processes, enhance quality control, and accelerate innovation. In this blog post, we’ll explore the key applications of machine learning in semiconductor manufacturing and how it is shaping the future of the industry.

Predictive Maintenance

Semiconductor manufacturing involves highly complex and expensive equipment, such as lithography machines and etchers. Unplanned downtime due to equipment failure can cost millions of dollars and disrupt production schedules. Machine learning enables predictive maintenance by analyzing sensor data from equipment to predict potential failures before they occur.

How It Works: ML algorithms process real-time data from sensors, such as temperature, vibration, and pressure, to identify patterns indicative of wear and tear. By predicting when a component is likely to fail, manufacturers can schedule maintenance proactively, minimizing downtime.

Impact: Predictive maintenance reduces equipment downtime, extends the lifespan of machinery, and lowers maintenance costs.
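Here is a rough illustration of the idea. The sketch below fits a straight line to a hypothetical vibration trend and extrapolates when it will cross an alarm threshold. The linear wear model, the readings, and the threshold are all assumptions made for illustration. Production systems typically use richer models trained on many recorded failures.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def hours_until_threshold(hours, vibration, threshold):
    """Estimate remaining operating hours before the vibration trend reaches `threshold`.

    Assumes wear grows roughly linearly with operating hours (a simplification).
    Returns None if the fitted trend is flat or improving.
    """
    slope, intercept = fit_line(hours, vibration)
    if slope <= 0:
        return None
    crossing_hour = (threshold - intercept) / slope
    return max(0.0, crossing_hour - hours[-1])


# Hypothetical readings (mm/s RMS), logged once per operating hour.
hours = [0, 1, 2, 3, 4]
vibration = [1.0, 1.2, 1.4, 1.6, 1.8]
print(hours_until_threshold(hours, vibration, threshold=3.0))  # about 6 hours
```

The maintenance window would then be scheduled inside that estimate, with some margin, rather than after a breakdown.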

Defect Detection and Quality Control

Defects in semiconductor wafers can lead to significant yield losses. Traditional defect detection methods rely on manual inspection or rule-based systems, which are time-consuming and prone to errors. Machine learning, particularly computer vision, is transforming defect detection by automating and enhancing the process.

How It Works: ML models are trained on vast datasets of wafer images to identify defects such as scratches, particles, and pattern irregularities. Deep learning algorithms, such as convolutional neural networks (CNNs), excel at detecting even the smallest defects with high accuracy.

Impact: Automated defect detection improves yield rates, reduces waste, and ensures consistent product quality.
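A CNN learns stacks of small convolution filters from labeled wafer images. The sketch below shows only the core operation. It slides a single hand-set 3x3 filter over a tiny synthetic grayscale patch to flag isolated bright particles. The filter is a Laplacian-style spot detector chosen as an assumption for illustration, not a trained model.

```python
# Laplacian-style kernel: strongly positive where a pixel is brighter than all
# eight neighbours, zero over flat regions. Hand-set here, not learned.
SPOT_KERNEL = [
    [-1, -1, -1],
    [-1, 8, -1],
    [-1, -1, -1],
]


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` without padding (cross-correlation, as CNN layers do)."""
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out = []
    for i in range(h - kh + 1):
        row = []
        for j in range(w - kw + 1):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


def find_spots(image, threshold):
    """Return (row, col) input positions whose filter response exceeds `threshold`."""
    response = conv2d_valid(image, SPOT_KERNEL)
    # Offset by 1: a 3x3 "valid" output is shifted one pixel relative to the input.
    return [
        (i + 1, j + 1)
        for i, row in enumerate(response)
        for j, value in enumerate(row)
        if value > threshold
    ]


# Hypothetical 5x5 patch of an inspection image containing one bright particle.
patch = [[0.0] * 5 for _ in range(5)]
patch[2][2] = 1.0
print(find_spots(patch, threshold=4.0))  # [(2, 2)]
```

A real CNN learns many such filters, nonlinearities, and a classifier on top, rather than relying on one fixed kernel and threshold.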

Process Optimization

Semiconductor manufacturing involves hundreds of intricate steps, each requiring precise control of parameters such as temperature, pressure, and chemical concentrations. Machine learning optimizes these processes by identifying the optimal settings for maximum efficiency and yield.

How It Works: ML algorithms analyze historical process data to identify correlations between input parameters and output quality. Techniques like reinforcement learning can dynamically adjust process parameters in real time to achieve the desired outcomes.

Impact: Process optimization reduces material waste, improves yield, and enhances overall production efficiency.
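The first step described above is finding which inputs move output quality. It can be sketched by ranking parameters according to their Pearson correlation with a quality metric across historical runs. The parameter names and numbers are hypothetical. Correlation does not establish causation, and this sketch does not cover the reinforcement-learning control loop.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def rank_parameters(params, quality):
    """Sort parameter names by |correlation| with the quality metric, strongest first."""
    scored = [(name, pearson(values, quality)) for name, values in params.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical history of four process runs (illustrative parameter names).
runs = {
    "pressure_mtorr": [1.0, 3.0, 2.0, 4.0],
    "chuck_temp_c": [1.0, 2.0, 3.0, 4.0],
}
film_uniformity = [2.0, 4.0, 6.0, 8.0]
for name, r in rank_parameters(runs, film_uniformity):
    print(f"{name}: r = {r:.2f}")
```

Parameters that rank highly are candidates for designed experiments, not automatic setpoint changes.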

Yield Prediction and Improvement

Yield—the percentage of functional chips produced from a wafer—is a critical metric in semiconductor manufacturing. Low yields can result from various factors, including process variations, equipment malfunctions, and environmental conditions. Machine learning helps predict and improve yields by analyzing complex datasets.

How It Works: ML models analyze data from multiple sources, including process parameters, equipment performance, and environmental conditions, to predict yield outcomes. By identifying the root causes of yield loss, manufacturers can implement targeted improvements.

Impact: Yield prediction enables proactive interventions, leading to higher productivity and profitability.
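One simple way to turn historical lot data into a yield prediction is k-nearest-neighbour regression. It predicts a new lot's yield as the average yield of the most similar past lots. The sketch assumes the features have already been scaled to comparable units. The feature choice and data are hypothetical.

```python
import math


def knn_predict_yield(history, query, k=3):
    """Predict yield (%) for `query` as the mean yield of its k nearest historical lots.

    history: list of (feature_tuple, yield_percent) pairs.
    Features are assumed pre-scaled so Euclidean distance is meaningful.
    """
    by_distance = sorted(history, key=lambda lot: math.dist(lot[0], query))
    nearest = by_distance[:k]
    return sum(y for _, y in nearest) / len(nearest)


# Hypothetical lots: (scaled etch time, scaled chamber pressure) -> yield %.
past_lots = [
    ((0.0, 0.0), 90.0),
    ((0.0, 1.0), 92.0),
    ((1.0, 0.0), 94.0),
    ((10.0, 10.0), 50.0),
]
print(knn_predict_yield(past_lots, (0.0, 0.0), k=3))  # 92.0
```

The outlying low-yield lot is ignored here because it is far from the query in feature space. This is also why comparing a poor lot's nearest neighbours can hint at the conditions behind the loss.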

Supply Chain Optimization

The semiconductor supply chain is highly complex, involving multiple suppliers, manufacturers, and distributors. Delays or disruptions in the supply chain can have a cascading effect on production schedules. Machine learning optimizes supply chain operations by forecasting demand, managing inventory, and identifying potential bottlenecks.

How It Works: ML algorithms analyze historical sales data, market trends, and external factors (e.g., geopolitical events) to predict demand and optimize inventory levels. Predictive analytics also helps identify risks and mitigate disruptions.

Impact: Supply chain optimization reduces costs, minimizes delays, and ensures timely delivery of materials.
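Demand forecasting can start from something as simple as exponential smoothing, which weights recent periods more heavily than older ones. The sketch below pairs it with a basic order-up-to rule. The smoothing factor, lead time, safety stock, and SKU are illustrative assumptions. Real planners usually add trend and seasonality terms.

```python
def smoothed_forecast(demand_history, alpha=0.3):
    """Simple exponential smoothing: next-period forecast from past demand.

    alpha in (0, 1]: higher values react faster to recent changes.
    """
    level = demand_history[0]
    for observed in demand_history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def order_quantity(demand_history, on_hand, lead_time_periods, safety_stock, alpha=0.3):
    """Order enough to cover forecast demand over the lead time plus a safety buffer."""
    forecast = smoothed_forecast(demand_history, alpha)
    target_level = forecast * lead_time_periods + safety_stock
    return max(0.0, target_level - on_hand)


# Hypothetical weekly demand for one consumable SKU.
weekly_demand = [100, 120, 110, 130, 150]
print(round(smoothed_forecast(weekly_demand), 1))
print(round(order_quantity(weekly_demand, on_hand=200, lead_time_periods=4, safety_stock=50), 1))
```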

Advanced Process Control (APC)

Advanced Process Control (APC) is critical for maintaining consistency and precision in semiconductor manufacturing. Machine learning enhances APC by enabling real-time monitoring and control of manufacturing processes.

How It Works: ML models analyze real-time data from sensors and equipment to detect deviations from desired process parameters. They can automatically adjust settings to maintain optimal conditions, ensuring consistent product quality.

Impact: APC improves process stability, reduces variability, and enhances overall product quality.
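A long-standing APC building block in fabs is the EWMA (exponentially weighted moving average) run-to-run controller. It is a classic, non-ML technique, and ML models can supply its inputs or help tune it. The sketch below assumes a linear process model, output = offset + gain x recipe, with a known gain. After each run it re-estimates the drifting offset and picks the next recipe to hit the target. The gain, weight, and numbers are illustrative assumptions.

```python
class EwmaRunToRunController:
    """EWMA run-to-run controller for an assumed linear process: y = offset + gain * u."""

    def __init__(self, gain, target, initial_offset=0.0, weight=0.3):
        self.gain = gain              # assumed known process sensitivity
        self.target = target          # desired output (e.g. etch depth in nm)
        self.offset = initial_offset  # running estimate of the drifting intercept
        self.weight = weight          # EWMA weight in (0, 1]; higher = faster but noisier

    def next_recipe(self):
        """Recipe setting that would hit the target if the offset estimate is right."""
        return (self.target - self.offset) / self.gain

    def update(self, recipe, measured):
        """Blend the offset implied by the latest measurement into the estimate."""
        observed_offset = measured - self.gain * recipe
        self.offset = self.weight * observed_offset + (1 - self.weight) * self.offset


def simulate(runs=30):
    """Run the controller against a hypothetical tool whose true offset is 5.0."""
    true_offset, true_gain = 5.0, 2.0
    controller = EwmaRunToRunController(gain=true_gain, target=25.0, weight=0.5)
    for _ in range(runs):
        recipe = controller.next_recipe()
        measured = true_offset + true_gain * recipe  # noise-free for clarity
        controller.update(recipe, measured)
    return controller.next_recipe(), controller.offset


print(simulate())  # recipe approaches 10.0, offset estimate approaches 5.0
```

A smaller weight filters out measurement noise better but reacts more slowly to genuine tool drift.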

Design Optimization

The design of semiconductor devices is becoming increasingly complex as manufacturers strive to pack more functionality into smaller chips. Machine learning accelerates the design process by optimizing chip layouts and predicting performance outcomes.

How It Works: ML algorithms analyze design data to identify patterns and optimize layouts for performance, power efficiency, and manufacturability. Generative design techniques can even create novel chip architectures that meet specific requirements.

Impact: Design optimization reduces time-to-market, lowers development costs, and enables the creation of more advanced chips.
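Layout optimization is often framed as minimizing a cost such as total wirelength. The sketch below uses simulated annealing, a classic non-ML placement heuristic that learned placers are often compared against. It swaps cells between fixed slots to minimize half-perimeter wirelength (HPWL). The cells, nets, cooling schedule, and seed are hypothetical.

```python
import math
import random


def hpwl(placement, nets):
    """Half-perimeter wirelength: sum of each net's bounding-box width plus height."""
    total = 0
    for net in nets:
        xs = [placement[cell][0] for cell in net]
        ys = [placement[cell][1] for cell in net]
        total += (max(xs) - min(xs)) + (max(ys) - min(ys))
    return total


def anneal_placement(cells, slots, nets, steps=2000, start_temp=5.0, seed=0):
    """Swap-based simulated annealing; returns the best placement seen and its HPWL."""
    rng = random.Random(seed)
    placement = dict(zip(cells, slots))  # assumes len(cells) == len(slots)
    cost = hpwl(placement, nets)
    best, best_cost = dict(placement), cost
    for step in range(steps):
        temp = start_temp * (1 - step / steps) + 1e-9  # linear cooling
        a, b = rng.sample(cells, 2)
        placement[a], placement[b] = placement[b], placement[a]
        new_cost = hpwl(placement, nets)
        delta = new_cost - cost
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = dict(placement), cost
        else:
            placement[a], placement[b] = placement[b], placement[a]  # undo the swap
    return best, best_cost


cells = ["A", "B", "C", "D"]
slots = [(0, 0), (1, 0), (2, 0), (3, 0)]
nets = [("A", "C"), ("C", "B"), ("B", "D")]  # a chain A-C-B-D
initial = hpwl(dict(zip(cells, slots)), nets)
best, best_cost = anneal_placement(cells, slots, nets)
print(initial, best_cost)
```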

Fault Diagnosis and Root Cause Analysis

When defects or failures occur, identifying the root cause can be challenging due to the complexity of semiconductor manufacturing processes. Machine learning simplifies fault diagnosis by analyzing vast amounts of data to pinpoint the source of problems.

How It Works: ML models analyze data from multiple stages of the manufacturing process to identify correlations between process parameters and defects. Techniques like decision trees and clustering help isolate the root cause of issues.

Impact: Faster fault diagnosis reduces downtime, improves yield, and enhances process reliability.
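The decision-tree idea can be illustrated with its smallest case, a one-level tree (decision stump). The search tries every parameter and threshold, looking for the split that best separates defective lots from good ones, scored by Gini impurity. A full tree repeats this search recursively on each side. The parameters and lot data here are hypothetical.

```python
def gini(labels):
    """Gini impurity for binary labels (1 = defective, 0 = good)."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)  # equals 1 - p**2 - (1 - p)**2


def best_split(rows, labels):
    """Find the (parameter, threshold) split with the lowest weighted Gini impurity.

    rows: list of dicts mapping parameter name -> measured value, one per lot.
    Returns (name, threshold, weighted_impurity), or None if no split exists.
    """
    n = len(labels)
    best = None
    for name in rows[0]:
        values = sorted({row[name] for row in rows})
        # Candidate thresholds sit halfway between consecutive distinct values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            left = [lab for row, lab in zip(rows, labels) if row[name] <= threshold]
            right = [lab for row, lab in zip(rows, labels) if row[name] > threshold]
            score = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or score < best[2]:
                best = (name, threshold, score)
    return best


# Hypothetical lots: chamber temperature and pressure, with a defect flag per lot.
lots = [
    {"chamber_temp_c": 300, "pressure_mtorr": 1},
    {"chamber_temp_c": 301, "pressure_mtorr": 2},
    {"chamber_temp_c": 302, "pressure_mtorr": 1},
    {"chamber_temp_c": 340, "pressure_mtorr": 2},
    {"chamber_temp_c": 341, "pressure_mtorr": 1},
    {"chamber_temp_c": 342, "pressure_mtorr": 2},
]
defective = [0, 0, 0, 1, 1, 1]
print(best_split(lots, defective))  # ('chamber_temp_c', 321.0, 0.0)
```

A clean split like this points engineers toward a suspect parameter. It is a lead to investigate, not proof of the root cause.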

Energy Efficiency and Sustainability

Semiconductor manufacturing is energy-intensive, with significant environmental impacts. Machine learning helps reduce energy consumption and improve sustainability by optimizing resource usage.

How It Works: ML algorithms analyze energy consumption data to identify inefficiencies and recommend energy-saving measures. For example, they can optimize the operation of HVAC systems and reduce idle time for equipment.

Impact: Energy optimization lowers operational costs and reduces the environmental footprint of semiconductor manufacturing.
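Spotting idle time can be as simple as scanning a tool's power log for long low-draw stretches and totalling the energy they used. The threshold, minimum duration, and one-minute sampling below are assumptions. In practice, the idle baseline differs by tool type.

```python
def idle_energy_kwh(power_kw, idle_threshold_kw, min_minutes, minutes_per_sample=1):
    """Energy (kWh) drawn during idle stretches lasting at least `min_minutes`.

    A sample counts as idle when its draw is at or below `idle_threshold_kw`.
    Idle stretches shorter than `min_minutes` are ignored as normal between-step pauses.
    """
    total_kwh = 0.0
    run = []
    # A final infinite sample acts as a sentinel that closes any trailing idle run.
    for sample in list(power_kw) + [float("inf")]:
        if sample <= idle_threshold_kw:
            run.append(sample)
            continue
        if len(run) * minutes_per_sample >= min_minutes:
            total_kwh += sum(run) * minutes_per_sample / 60
        run = []
    return total_kwh


# Hypothetical one-minute power log for a single tool (kW).
log = [10, 10, 2, 2, 2, 10, 2]
print(idle_energy_kwh(log, idle_threshold_kw=3, min_minutes=3))  # 0.1
```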

Accelerating Research and Development

The semiconductor industry is driven by continuous innovation, with new materials, processes, and technologies being developed regularly. Machine learning accelerates R&D by analyzing experimental data and predicting outcomes.

How It Works: ML models analyze data from experiments to identify promising materials, processes, or designs. They can also simulate the performance of new technologies, reducing the need for physical prototypes.

Impact: Faster R&D cycles enable manufacturers to bring cutting-edge technologies to market more quickly.

Challenges and Future Directions

While machine learning offers immense potential for semiconductor manufacturing, there are challenges to overcome. These include the need for high-quality data, the complexity of integrating ML into existing workflows, and the shortage of skilled professionals. However, as ML technologies continue to evolve, these challenges are being addressed through advancements in data collection, model interpretability, and workforce training.

Looking ahead, the integration of machine learning with other emerging technologies, such as the Internet of Things (IoT) and digital twins, will further enhance its impact on semiconductor manufacturing. By embracing ML, manufacturers can stay competitive in an increasingly demanding and fast-paced industry.

Conclusion

Machine learning is transforming semiconductor manufacturing by enabling predictive maintenance, defect detection, process optimization, and more. As the industry continues to evolve, ML will play an increasingly critical role in driving innovation, improving efficiency, and ensuring sustainability. By harnessing the power of machine learning, semiconductor manufacturers can overcome challenges, reduce costs, and deliver cutting-edge technologies that power the future.

#semiconductor manufacturing#Machine learning in semiconductor manufacturing#AI in semiconductor industry#Predictive maintenance in chip manufacturing#Defect detection in semiconductor wafers#Semiconductor process optimization#Yield prediction in semiconductor manufacturing#Advanced Process Control (APC) in semiconductors#Semiconductor supply chain optimization#Fault diagnosis in chip manufacturing#Energy efficiency in semiconductor production#Deep learning for semiconductor defects#Computer vision in wafer inspection#Reinforcement learning in semiconductor processes#Semiconductor yield improvement using AI#Smart manufacturing in semiconductors#AI-driven semiconductor design#Root cause analysis in chip manufacturing#Sustainable semiconductor manufacturing#IoT in semiconductor production#Digital twins in semiconductor manufacturing

Text

The Birth of an Industry: Fairchild’s Pivotal Role in Shaping Silicon Valley

In the late 1950s, the Santa Clara Valley of California witnessed a transformative convergence of visionary minds, daring entrepreneurship, and groundbreaking technological advancements. At the heart of this revolution was Fairchild Semiconductor, a pioneering company whose innovative spirit, entrepreneurial ethos, and technological breakthroughs not only defined the burgeoning semiconductor industry but also indelibly shaped the region’s evolution into the world-renowned Silicon Valley.

A seminal 1967 promotional film, featuring Dr. Harry Sello and Dr. Jim Angell, offers a fascinating glimpse into Fairchild’s revolutionary work on integrated circuits (ICs), a technology that would soon become the backbone of the burgeoning tech industry. By demystifying IC design, development, and applications, Fairchild exemplified its commitment to innovation and knowledge sharing, setting a precedent for the collaborative and open approach that would characterize Silicon Valley’s tech community. Specifically, Fairchild’s introduction of the planar process and the first monolithic IC in 1959 marked a significant technological leap, with the former enhancing semiconductor manufacturing efficiency by up to 90% and the latter paving the way for the miniaturization of electronic devices.

Beyond its technological feats, Fairchild’s entrepreneurial ethos, nurtured by visionary founders Robert Noyce and Gordon Moore, served as a blueprint for subsequent tech ventures. The company’s talent attraction and nurturing strategies, including competitive compensation packages and intrapreneurship encouragement, helped establish the region as a magnet for innovators and risk-takers. This, in turn, laid the foundation for the dense network of startups, investors, and expertise that defines Silicon Valley’s ecosystem today. Notably, Fairchild’s presence spurred the development of supporting infrastructure, including the expansion of Stanford University’s research facilities and the establishment of specialized supply chains, further solidifying the region’s position as a global tech hub. By 1965, the area witnessed a surge in tech-related employment, with jobs increasing by over 300% compared to the previous decade, a direct testament to Fairchild’s catalyzing effect.

The trajectory of Fairchild Semiconductor, including its challenges and eventual transformation, intriguingly parallels the broader narrative of Silicon Valley’s growth. The company’s decline under later ownership and its subsequent re-emergence underscore the region’s inherent capacity for reinvention and adaptation. This resilience, initially embodied by Fairchild’s pioneering spirit, has become a hallmark of Silicon Valley, enabling the region to navigate the rapid evolution of the tech industry with unparalleled agility.

What future innovations will emerge from the valley, leveraging the foundations laid by pioneers like Fairchild, to shape the global technological horizon in the decades to come?

Dr. Harry Sello and Dr. Jim Angell: The Design and Development Process of the Integrated Circuit (Fairchild Semiconductor Corporation, October 1967)

Robert Noyce: The Development of the Integrated Circuit and Its Impact on Technology and Society (The Computer Museum, Boston, May 1984)

Tuesday, December 3, 2024

#silicon valley history#tech industry origins#entrepreneurial ethos#innovation and technology#california santa clara valley#integrated circuits#semiconductor industry development#promotional film#ai assisted writing#machine art#Youtube#lecture

Text

DeepSeek spells the end of the dominance of Big Data and Big AI, not the end of Nvidia. Its focus on efficiency jump-starts the race for small AI models based on lean data, consuming slender computing resources. The probable impact of DeepSeek’s low-cost and free state-of-the-art AI model will be the reorientation of U.S. Big Tech away from relying exclusively on their “bigger is better” competitive orientation and the accelerated proliferation of AI startups focused on “small is beautiful.”

The Wall Street stock market reacted the wrong way, to the wrong thing.

Without Nvidia, DeepSeek can't survive, because it uses specific Nvidia chips that are not currently under the US export ban.

Text

#GPU Market#Graphics Processing Unit#GPU Industry Trends#Market Research Report#GPU Market Growth#Semiconductor Industry#Gaming GPUs#AI and Machine Learning GPUs#Data Center GPUs#High-Performance Computing#GPU Market Analysis#Market Size and Forecast#GPU Manufacturers#Cloud Computing GPUs#GPU Demand Drivers#Technological Advancements in GPUs#GPU Applications#Competitive Landscape#Consumer Electronics GPUs#Emerging Markets for GPUs

Text

How the Future Looks Working Together with Infineon

https://www.futureelectronics.com/blog/article/how-the-future-looks-working-together-with-infineon/

Infineon's Kate Pritchard and Future Electronics' Riccardo Collura sit down for an in-depth discussion on Wide Bandgap products and how our partnership is sure to power up new designs. https://youtu.be/I3LqvfhvjrA

#ai#future electronics#WT#Infineon#Wide Bandgap#Power Electronics#Electronics Innovation#Semiconductor Solutions#Electronics Design#FutureTech#Electronics Industry#Tech Discussion#Youtube

Text

https://electronicsbuzz.in/alphawave-semi-unveils-ai-connectivity-advances-at-ecoc-2024/

#semiconductor#technology#AI#innovations#industry#collaboration#powerelectronics#powermanagement#powersemiconductor

Text

Shocking 3 Reasons Why Nvidia Lost Its Crown as World's Most Valuable Company

Why Nvidia lost its crown as the world’s most valuable company is a story of rapid stock movement and market volatility. The semiconductor giant’s stock plummeted by 6.7% over two days, resulting in a $200 billion market value loss and positioning Apple and Microsoft ahead in market capitalization.

Shocking 3 Reasons Why Nvidia Lost Its Crown

1. Nvidia’s Meteoric Rise Made It Vulnerable to…

#AI impact#Apple#enterprise software#market cap#market volatility#Microsoft#profit-taking#semiconductor industry#stock decline#why Nvidia

Text

Discover the latest semiconductor industry trends shaping 2024 in the UK. From advanced semiconductors to 5G and AI integration, our article explores key dynamics driving this essential sector. Stay ahead in technology - read now and join the future of innovation!

#UK semiconductor industry 2024#advanced semiconductors#sustainability in tech#5G technology#AI in semiconductors#AGAS

Text

AI hasn't improved in 18 months. It's likely that this is it. There is currently no evidence the capabilities of ChatGPT will ever improve. It's time for AI companies to put up or shut up.

I'm just re-iterating this excellent post from Ed Zitron, but it's not left my head since I read it and I want to share it. I'm also taking some talking points from Ed's other posts. So basically:

We keep hearing AI is going to get better and better, but these promises seem to be coming from a mix of companies engaging in wild speculation and lying.

ChatGPT, the industry-leading large language model, has not materially improved in 18 months. For something that claims to be getting exponentially better, it sure is the same shit.

Hallucinations appear to be an inherent aspect of the technology. Since it's based on statistics and ai doesn't know anything, it can never know what is true. How could I possibly trust it to get any real work done if I can't rely on its output? If I have to fact-check everything it says, I might as well do the work myself.

For "real" ai that does know what is true to exist, it would require us to discover new concepts in psychology, math, and computing, which OpenAI is not working on, and seemingly no other ai companies are either.

OpenAI has seemingly already slurped up all the data from the open web. ChatGPT 5 would take 5x more training data than ChatGPT 4 to train. Where is this data coming from, exactly?

Since improvement appears to have ground to a halt, what if this is it? What if ChatGPT 4 is as good as LLMs can ever be? What use is it?

As Jim Covello, a leading semiconductor analyst at Goldman Sachs, said (on page 10, and that's big finance so you know they only care about money): if tech companies are spending a trillion dollars to build up the infrastructure to support ai, what trillion-dollar problem is it meant to solve? AI companies have a unique talent for burning venture capital, and it's unclear if OpenAI will be able to survive more than a few years unless everyone suddenly adopts it all at once. (Hey, didn't crypto and the metaverse also require spontaneous mass adoption to make sense?)

There is no problem that current ai is a solution to. Consumer tech is basically solved, normal people don't need more tech than a laptop and a smartphone. Big tech have run out of innovations, and they are desperately looking for the next thing to sell. It happened with the metaverse and it's happening again.

In summary:

AI hasn't materially improved since the launch of ChatGPT 4, which wasn't that big of an upgrade over 3.

There is currently no technological roadmap for ai to become better than it is. (As Jim Covello said in the Goldman Sachs report, the evolution of smartphones was openly planned years ahead of time.) The current problems are inherent to the current technology, and nobody has indicated that any way to solve them is in the pipeline. We have likely reached the limits of what LLMs can do, and they still can't do much.

Don't believe AI companies when they say things are going to improve from where they are now before they provide evidence. It's time for the AI shills to put up, or shut up.

Text

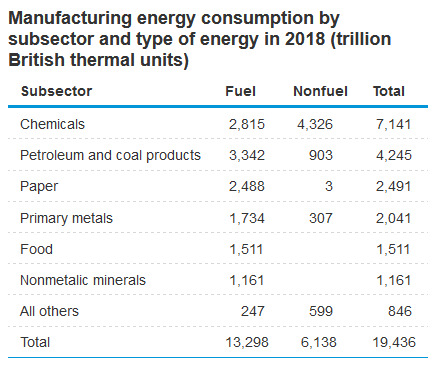

largest industrial consumers of energy:

largest industrial consumers of water:

gosh, it sure seems suspicious to me that people are spending all this time online arguing about the energy and water consumption of things that are simply not represented on these lists! (AI)

finer points that are too much to ask of a tumblr audience below

google, samsung, taiwan semiconductor, intel, and facebook are among the largest companies consuming energy (globally, in contrast to the US statistics shown above). this is a piddling use of statistics because they are vastly outnumbered by the sheer number of companies in the industries listed above -- because they have largely monopolized their industries, which is why we've imposed sanctions on computing component imports, why we geopolitically oppose one-china policy on taiwan, why we're doing wargames in the south china sea, why we're crying about the russian nuclear submarine docked in cuba, and why we pulled out of the largest nuclear disarmament project in history -- all because we have no manufacturing capability. however, if you look closely, building out two parallel manufacturing industries for the same product actually consumes more energy than simply doing a business deal with communists, but that would be bad for the optics of the great american myth

gallons per dollar output is not the same as total overall, e.g. wineries rank highly because their product is more expensive per gallon consumed than others, but statista was the best available source. if you want EPA data you have to reckon with the fact that they separate direct water withdrawal from use from the public water supply

this is FAR kinder than the first draft, where i said the reason y'all do this is because you book precisely zero paychecks over Writing Stories For Societal Change and you're upset at the prospect of computing getting passable at it because it threatens to interrupt the cashflow you already do not have and shatter the illusion that you're a helpless baby who can't do anything to improve your circumstances except Imagine Things. the term for this is "feigned innocence" and i flatly do not accept it from people who have all the capability in the world to know better

Text

more incredible reporting from the bbc

Mr Ma's downfall had preceded a broader crackdown on China's tech industry.

Companies came to face much tighter enforcement of data security and competition rules, as well as state control over important digital assets.

Other companies across the private sector, ranging from education to real estate, also ended up being targeted in what came to be known as the "common prosperity" campaign.

The measures put in place by the common prosperity policies were seen by some as a way to rein in the billionaire owners of some of China's biggest companies, to instead give customers and workers more of a say in how firms operate and distribute their earnings.

enforcing laws is a crackdown apparently

Instead of a return to an era of unregulated growth, some analysts believe Monday's meeting signalled an attempt to steer investors and businesses toward Mr Xi's national priorities.

The Chinese president has been increasingly emphasising policies that the government has referred to as "high-quality development" and "new productive forces".

Such ideas have been used to reflect a switch from what were previously fast drivers of growth, such as property and infrastructure investment, towards high-end industries such as semiconductors, clean energy and AI.

The goal is to achieve "socialist modernisation" by 2035 - higher living standards for everyone, and an economy driven by advanced manufacturing and less reliant on imports of foreign technology.

Mr Xi knows that to get there he will need the private sector fully on board.

"Rather than marking the end of tech sector scrutiny, [Jack Ma's] reappearance suggests that Beijing is pivoting from crackdowns to controlled engagement," an associate professor at the University of Technology Sydney, Marina Zhang told the BBC.

"While the private sector remains a critical pillar of China's economic ambitions, it must align with national priorities - including self-reliance in key technologies and strategic industries."

controlling the national bourgeois and forcing them to supplicate to the communist party and weaponizing them to develop society in pursuit of attaining greater and greater living standards? waoh basedbasedbasedbasedbasedbasedbasedbased

21 February 2025

Text

Revolutionizing the Semiconductor Industry with AI: EinnoSys Insights

Welcome to EinnoSys, where innovation meets intelligence! Today, we’re diving into the transformative impact of artificial intelligence (AI) on the semiconductor manufacturing landscape.

The AI-Driven Revolution

As the demand for faster and more efficient semiconductors skyrockets, AI technologies are stepping in to streamline manufacturing processes. By leveraging machine learning algorithms, companies can optimize production schedules, predict equipment failures, and reduce waste, leading to significant cost savings.

Smart Manufacturing

AI enhances real-time data analysis during the manufacturing process. With advanced analytics, we can identify anomalies in production lines, ensuring high-quality standards and minimizing defects. This not only improves yield but also accelerates time-to-market for new chips.
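A traditional baseline for flagging such anomalies is a Shewhart-style control chart. It learns the mean and spread of a metric from an in-control baseline, then flags new points outside the mean ± 3 standard deviations. ML-based detectors are often judged against this kind of baseline. The metric and readings below are hypothetical.

```python
import statistics


def control_limits(baseline, k=3.0):
    """Lower/upper limits at mean +/- k sample standard deviations of in-control data."""
    mean = statistics.fmean(baseline)
    spread = statistics.stdev(baseline)
    return mean - k * spread, mean + k * spread


def flag_anomalies(baseline, new_points, k=3.0):
    """Indices of new readings that fall outside the control limits."""
    low, high = control_limits(baseline, k)
    return [i for i, x in enumerate(new_points) if not low <= x <= high]


# Hypothetical film-thickness readings (nm) from a stable period, then new wafers.
baseline = [10.0, 10.1, 9.9, 10.0, 10.2, 9.8]
print(flag_anomalies(baseline, [10.05, 11.0, 9.0]))  # [1, 2]
```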

Design Optimization

AI isn’t just for manufacturing; it’s also revolutionizing chip design. Machine learning models can simulate various design parameters, helping engineers create more efficient architectures that push the limits of performance. This synergy between AI and design leads to breakthroughs in semiconductor technology.

AI-Powered Supply Chains

The semiconductor supply chain is complex and often unpredictable. AI algorithms can forecast demand trends and manage inventory more effectively, helping companies respond to market fluctuations and avoid shortages.

Looking Ahead

The future of AI in the semiconductor industry is bright. As we continue to explore these technologies at EinnoSys, we’re excited to contribute to a more efficient, sustainable, and innovative semiconductor ecosystem.

Join us as we navigate this fascinating intersection of AI and semiconductors! Follow us for more insights, updates, and industry trends.

#artificial intelligence semiconductors#machine learning semiconductor#AI in Semiconductor Industry#semiconductors ai#semiconductors for ai

Text

Semiconductors: The Driving Force Behind Technological Advancements

The semiconductor industry is a crucial part of our modern society, powering everything from smartphones to supercomputers. The industry is a complex web of global interests, with multiple players vying for dominance.

Taiwan has long been the dominant player in the semiconductor industry, with Taiwan Semiconductor Manufacturing Company (TSMC) accounting for 54% of the global foundry market in 2020. TSMC's dominance is due in part to the company's expertise in semiconductor manufacturing, as well as its strategic location in Taiwan. Taiwan's proximity to China and its well-developed infrastructure make it an ideal location for semiconductor manufacturing.

However, Taiwan's dominance also brings challenges. TSMC faces strong competition from other semiconductor manufacturers, including those from China and South Korea. In addition, Taiwan's semiconductor industry is heavily dependent on imports, which can make it vulnerable to supply chain disruptions.

China is rapidly expanding its presence in the semiconductor industry, with the government investing heavily in research and development (R&D) and manufacturing. China's semiconductor industry is led by companies such as SMIC and Tsinghua Unigroup, which are rapidly expanding their capacity. However, China's industry still lags behind Taiwan's in terms of expertise and capacity.

South Korea is another major player in the semiconductor industry, with companies like Samsung and SK Hynix owning a significant market share. South Korea's semiconductor industry is known for its expertise in memory chips such as DRAM and NAND flash. However, the industry is heavily dependent on imports, which can make it vulnerable to supply chain disruptions.

The semiconductor industry is experiencing significant trends, including the growth of the Internet of Things (IoT), the rise of artificial intelligence (AI), and the increasing demand for 5G technology. These trends are driving semiconductor demand, which is expected to continue to grow in the coming years.

However, the industry also faces major challenges, including a shortage of skilled workers, the increasing complexity of semiconductor manufacturing and the need for more sustainable and environmentally friendly manufacturing processes.

To overcome the challenges facing the industry, it is essential to invest in research and development, increase the availability of skilled workers and develop more sustainable and environmentally friendly manufacturing processes. By working together, governments, companies and individuals can ensure that the semiconductor industry remains competitive and sustainable, and continues to drive innovation and economic growth in the years to come.

Chip War, the Race for Semiconductor Supremacy (2023) (TaiwanPlus Docs, October 2024)

Dr. Keyu Jin, a tenured professor of economics at the London School of Economics and Political Science, argues that many in the West misunderstand China’s economic and political models. She maintains that China became the most successful economic story of our time by shifting from primarily state-owned enterprises to an economy more focused on entrepreneurship and participation in the global economy.

Dr. Keyu Jin: Understanding a Global Superpower - Another Look at the Chinese Economy (Wheeler Institute for Economy, October 2024)

Dr. Keyu Jin: China's Economic Prospects and Global Impact (Global Institute For Tomorrow, July 2024)

The following conversation highlights the complexity and nuance of Xi Jinping's ideology and its relationship to traditional Chinese thought, and emphasizes the importance of understanding the internal dynamics of the Chinese Communist Party and the ongoing debates within the Chinese system.

Dr. Kevin Rudd: On Xi Jinping - How Xi's Marxist Nationalism Is Shaping China and the World (Asia Society, October 2024)

Tuesday, October 29, 2024

#semiconductor industry#globalization#technology#innovation#research#development#sustainability#economic growth#documentary#ai assisted writing#machine art#Youtube#presentation#discussion#china#taiwán#south korea

Note

What are some of the coolest computer chips ever, in your opinion?

Hmm. There are a lot of chips, and a lot of different things you could call a Computer Chip. Here's a few that come to mind as "interesting" or "important", or, if I can figure out what that means, "cool".

If your favourite chip is not on here, honestly it probably deserves to be, and I either forgot or I classified it more under "general ICs" instead of "computer chips" (e.g. 555, LM, 4000, 7400 series chips, those last three each capable of filling a book on their own). The 6502 is not here because I do not know much about the 6502; I was neither an Apple nor a BBC Micro type of kid. I am also not 70 years old, so as much as I love the DEC Alphas, I have never so much as breathed on one.

Disclaimer for writing this mostly out of my head and/or ass at one in the morning, do not use any of this as a source in an argument without checking.

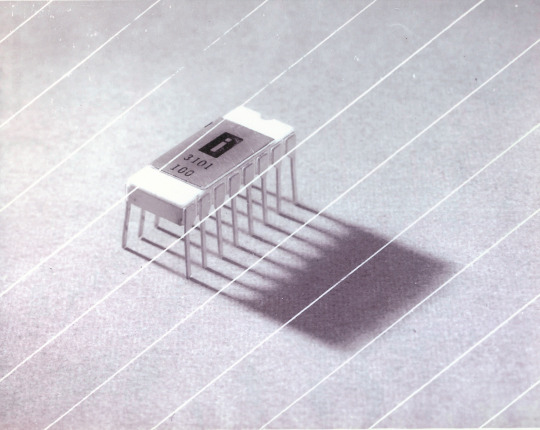

Intel 3101

So I mean, obvious shout, the Intel 3101, a 64-bit chip from 1969, and Intel's first ever product. You may look at that, and go, "wow, 64-bit computing in 1969? That's really early" and I will laugh heartily and say no, that's not 64-bit computing, that is 64 bits of SRAM memory.

This one is cool because it's cute. Look at that. This thing was completely hand-designed by engineers drawing the shapes of transistor gates on sheets of overhead transparency and exposing pieces of crudely spun silicon to light in a """"cleanroom"""" that would cause most modern fab equipment to swoon like a delicate Victorian lady. Semiconductor manufacturing was maturing at this point but a fab still had more in common with a darkroom for film development than with the mega expensive building sized machines we use today.

As that link above notes, these things were really rough and tumble, and designs were being updated on the scale of weeks as Intel learned, well, how to make chips at an industrial scale. They weren't the first company to do this (in the 60's you could run a chip fab out of a sufficiently well-sealed garage), but they were busy building the background that would lead to the next sixty years.

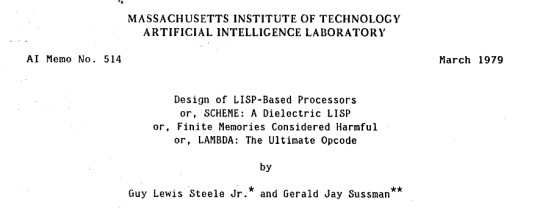

Lisp Chips

This is a family of utterly bullshit prototype processors that failed to be born in the whirlwind days of AI research in the 70's and 80's.

Lisps, a very old but exceedingly clever family of functional programming languages, were the language of choice for AI research at the time. Lisp compilers and interpreters had all sorts of tricks for compiling Lisp down to instructions, and also the hardware was frequently being built by the AI researchers themselves with explicit aims to run Lisp better.

The illogical conclusion of this was a series of attempts to implement Lisp right in silicon, with no translation layer.

Yeah, that is Sussman himself on this paper.

These never left the lab. There have since been dozens of abortive attempts to make Lisp Chips happen, because the idea is so extremely attractive to a certain kind of programmer, the most recent big one being a pile of weird designs aimed at running OpenGenera. I bet you there are no fewer than four members of r/lisp who have bought an Icestick FPGA in the past year with the explicit goal of writing their own Lisp Chip. It will fail, because this is a terrible idea, but damn if it isn't cool.

There were many more chips that bridged this gap, stuff designed by or for Symbolics (like the Ivory series of chips, or the processor in the 3600) to go into their Lisp machines and exploit the up-and-coming field of microcode optimization to improve Lisp performance, but sadly there are no known working true Lisp Chips in the wild.

Zilog Z80

Perhaps the most important chip that ever just kinda hung out. The Z80 was almost, almost the basis of The Future. The Z80 is bizarre. It is a software-compatible clone of the Intel 8080 (really a superset, with extra instructions bolted on), which is to say that it runs the same instructions implemented in a completely different way.

This is, a strange choice, but it was the right one somehow, because through the 80's and 90's practically every single piece of technology made in Japan contained at least one, maybe two Z80's, even if there was no readily apparent reason why it should have one (or two). I will defer to Cathode Ray Dude here: what follows is a joke, but only barely.

The Z80 is the basis of the MSX, the IBM PC of Japan, which was produced through a system of hardware and software licensing to third party manufacturers by Microsoft of Japan which was exactly as confusing as it sounds. The result is that the Z80, originally intended for embedded applications, ended up forming the basis of an entire alternate branch of the PC family tree.

It is important to note that the Z80 is boring. It is a normal-ass chip but it just so happens that it ended up being the focal point of like a dozen different industries all looking for a cheap, easy to program chip they could shove into Appliances.

Effectively everything that happened to the Intel 8080 happened to the Z80 and then some. Black market clones, reverse engineered Soviet compatibles, licensed second party manufacturers, hundreds of semi-compatible bastard half-sisters made by anyone with a fab, used in everything from toys to industrial machinery, still persisting to this day as an embedded processor that is probably powering something near you quietly and without much fuss. If you have one of those old TI-86 calculators, that's a Z80. Oh also a horrible hybrid Z80/8080 from Sharp powered the original Game Boy.

I was going to try and find a picture of a Z80 by just searching for it and look at this mess! There's so many of these things.

I mean the CP/M computers. The ZX Spectrum, I almost forgot that one! I can keep making this list go! So many bits of the Tech Explosion of the 80's and 90's are powered by the Z80. I was not joking when I said that you sometimes found more than one Z80 in a single computer, because you might use one Z80 to run the computer and another Z80 to run a specialty peripheral like a video toaster or music synthesizer. Everyone imaginable has had their hand on the Z80 ball at some point in time or another. Z80-based devices probably launched several dozen hardware companies that persist to this day, and I have no idea which ones because there were so goddamn many.

The Z80 eventually got super efficient due to process shrinks, so it turns up in weird laptops and handhelds! Zilog and the Z80 persist to this day like some kind of crocodile beast; you can go to RS Components and buy a brand new piece of Z80 silicon clocked at 20MHz. There's probably a couple in a car somewhere near you.

Pentium (P6 microarchitecture)

Yeah, I am going to bring up the Hackers chip. The Pentium P6 series is currently remembered for being the chip that Acid Burn geeks out over in Hackers (1995) instead of making out with her boyfriend, but it is actually noteworthy IMO for being one of the first mainstream chips to start pulling serious tricks on the system running it.

The P6 microarchitecture comes out swinging with like four or five tricks to get around the numerous problems with x86 and deploys them all at once. It has superscalar pipelining, it has out-of-order execution on a RISC-like micro-op core hiding behind the x86 decoder, it has branch prediction, it has a bunch of zany mathematical optimizations. None of these are new per se, but this is the first time you're really seeing them all at once on a chip that was going into PC's.

Without these improvements it's possible Intel would have been beaten out by one of its competitors, maybe Power or SPARC or whatever you call the thing that runs on the Motorola 68k. Hell, even MIPS could have beaten the ageing cancerous mistake that was x86. But by discovering the power of lying to the computer, Intel managed to speed up x86 by implementing it in a sensible instruction set in the background, allowing them to do all the same clever pipelining and optimization that was happening with RISC without having to give up their stranglehold on the desktop market. Without the P6 we live in a very, very different world from a computer hardware perspective.

From this falls many of the bizarre speculative-execution bugs that plague modern computers, because when you're doing your optimization on the fly in the chip, with a second, smaller unix hidden inside your processor, eventually you're not going to be cryptographically secure.

RISC is very clearly better for, most things. You can find papers stating this as far back as the 70's, when they start doing pipelining for the first time and are like "you know, pipelining is a lot easier if you have a few small instructions instead of ten thousand massive ones."

x86 only persists to this day because Intel cemented their lead and they happened to use x86. True RISC cuts out the middleman of hyperoptimizing microcode on the chip, but if you can't do that because you've girlbossed too close to the sun, as Intel had in the late 80's, you have to do something.
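To make the "implementing it in a sensible instruction set in the background" trick a bit more concrete, here's a toy Python sketch of a front end splitting one x86-style instruction with a memory operand into simple load/compute/store micro-ops. It's a hypothetical model, not how the P6 actually encodes anything: the `MicroOp` type, the `t0`/`t1` temp registers, the `[addr]` operand syntax and the `decode` function are all made up for illustration, and only two-operand ALU ops are handled.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical set of two-operand ALU ops this toy decoder understands.
ALU_OPS = {"ADD", "SUB", "AND", "OR", "XOR"}


@dataclass(frozen=True)
class MicroOp:
    op: str      # micro-op kind, e.g. "LOAD", "ADD", "STORE"
    dst: str     # destination register or memory address
    srcs: tuple  # source registers / addresses


def decode(instr: str) -> list[MicroOp]:
    """Split a toy 'OP dst, src' instruction into RISC-like micro-ops.

    Memory operands are written as [addr]. This is an illustration of
    the idea, not a real x86 decoder.
    """
    mnemonic, operands = instr.split(maxsplit=1)
    mnemonic = mnemonic.upper()
    if mnemonic not in ALU_OPS:
        raise ValueError(f"toy decoder does not handle {mnemonic}")
    dst, src = (part.strip() for part in operands.split(","))

    uops: list[MicroOp] = []
    # A memory source gets loaded into a temporary register first.
    if src.startswith("["):
        uops.append(MicroOp("LOAD", "t1", (src[1:-1],)))
        src = "t1"
    if dst.startswith("["):
        addr = dst[1:-1]
        # Read-modify-write on memory becomes load, compute, store.
        uops.append(MicroOp("LOAD", "t0", (addr,)))
        uops.append(MicroOp(mnemonic, "t0", ("t0", src)))
        uops.append(MicroOp("STORE", addr, ("t0",)))
    else:
        # Register destination: a single ALU micro-op.
        uops.append(MicroOp(mnemonic, dst, (dst, src)))
    return uops


if __name__ == "__main__":
    for text in ("add eax, ebx", "sub eax, [0x20]", "add [0x1000], eax"):
        print(text, "->", [(u.op, u.dst, u.srcs) for u in decode(text)])
```

In this toy, a register-only `add eax, ebx` stays one simple op while `add [0x1000], eax` turns into three, which is roughly the point: the back end only ever sees small, uniform operations it can pipeline and reorder.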

The Future

This gets us to like the year 2000. I have more chips I find interesting or cool, but from here it's mostly microcontrollers, partly because the desktop side gets pretty monotonous once Intel basically wins for a while. I might pick that up later. Also, if this post gets any longer it'll be annoying to scroll past. Here is a sample from a post I have had in my drafts since May:

I have some notes on the weirdo PowerPC stuff that shows up here. It's mostly interesting because of where it goes, not what it is. A lot of it ends up in games consoles. Some of it goes into mainframes. There is some of it in space. Really got around, PowerPC did.

Text

Silicon Valley's GPU Wars: NVIDIA and Its Challengers

The battle for computing supremacy is heating up! Our latest analysis dives deep into how NVIDIA maintains its dominant position in the GPU market while facing growing competition from tech giants and innovative newcomers.

With roughly 80% of the discrete GPU market and 70% of data center GPUs, NVIDIA's reign seems secure. But competitors aren't standing still - AMD's competitive pricing and performance gains, Intel's ambitious GPU plans, and specialized players like Qualcomm and Broadcom are all vying for their piece of the rapidly expanding AI and high-performance computing pie.

From gaming rigs to AI data centers, the stakes have never been higher. Which company has the edge in innovation? How are they positioning themselves for the AI revolution? And what does this mean for consumers and investors?

Read the full analysis on our blog!

#nvidia#intel#amd#broadcom#qualcomm#snapdragon#gpu#artificial intelligence#ai generated#technology#semiconductor

Text

Infineon: Introduction to solid-state isolators and relays

https://www.futureelectronics.com/blog/article/how-the-future-looks-working-together-with-infineon/ . Infineon's Kate Pritchard and Future Electronics' Riccardo Collura sit down for an in-depth discussion on Wide Bandgap products and how our partnership is sure to power up new designs. https://youtu.be/I3LqvfhvjrA

#ai#future electronics#WT#Infineon#Wide Bandgap#Power Electronics#Electronics Innovation#Semiconductor Solutions#Electronics Design#FutureTech#Electronics Industry#Tech Discussion#Youtube