#Cloud Computing GPUs

Text

#GPU Market#Graphics Processing Unit#GPU Industry Trends#Market Research Report#GPU Market Growth#Semiconductor Industry#Gaming GPUs#AI and Machine Learning GPUs#Data Center GPUs#High-Performance Computing#GPU Market Analysis#Market Size and Forecast#GPU Manufacturers#Cloud Computing GPUs#GPU Demand Drivers#Technological Advancements in GPUs#GPU Applications#Competitive Landscape#Consumer Electronics GPUs#Emerging Markets for GPUs

0 notes

Photo

(via AI inference chip startup Groq closes $640M at $2.8B valuation to meet next-gen LPUs demand)

Groq, a leader in fast AI inference, has secured a $640M Series D round at a valuation of $2.8B. The round was led by funds and accounts managed by BlackRock Private Equity Partners with participation from both existing and new investors including Neuberger Berman, Type One Ventures, and strategic investors including Cisco Investments, Global Brain’s KDDI Open Innovation Fund III, and Samsung Catalyst Fund. The unique, vertically integrated Groq AI inference platform has generated skyrocketing demand from developers seeking exceptional speed.

1 note

Text

Efficient GPU Management for AI Startups: Exploring the Best Strategies

The rise of AI-driven innovation has made GPUs essential for startups and small businesses. However, efficiently managing GPU resources remains a challenge, particularly with limited budgets, fluctuating workloads, and the need for cutting-edge hardware for R&D and deployment.

Understanding the GPU Challenge for Startups

AI workloads—especially large-scale training and inference—require high-performance GPUs like NVIDIA A100 and H100. While these GPUs deliver exceptional computing power, they also present unique challenges:

High Costs – Premium GPUs are expensive, whether rented via the cloud or purchased outright.

Availability Issues – In-demand GPUs may be limited on cloud platforms, delaying time-sensitive projects.

Dynamic Needs – Startups often experience fluctuating GPU demands, from intensive R&D phases to stable inference workloads.

To optimize costs, performance, and flexibility, startups must carefully evaluate their options. This article explores key GPU management strategies, including cloud services, physical ownership, rentals, and hybrid infrastructures—highlighting their pros, cons, and best use cases.

1. Cloud GPU Services

Cloud GPU services from AWS, Google Cloud, and Azure offer on-demand access to GPUs with flexible pricing models such as pay-as-you-go and reserved instances.

✅ Pros:

✔ Scalability – Easily scale resources up or down based on demand.

✔ No Upfront Costs – Avoid capital expenditures and pay only for usage.

✔ Access to Advanced GPUs – Frequent updates include the latest models like NVIDIA A100 and H100.

✔ Managed Infrastructure – No need for maintenance, cooling, or power management.

✔ Global Reach – Deploy workloads in multiple regions with ease.

❌ Cons:

✖ High Long-Term Costs – Usage-based billing can become expensive for continuous workloads.

✖ Availability Constraints – Popular GPUs may be out of stock during peak demand.

✖ Data Transfer Costs – Moving large datasets in and out of the cloud can be costly.

✖ Vendor Lock-in – Dependency on a single provider limits flexibility.

🔹 Best Use Cases:

Early-stage startups with fluctuating GPU needs.

Short-term R&D projects and proof-of-concept testing.

Workloads requiring rapid scaling or multi-region deployment.

2. Owning Physical GPU Servers

Owning physical GPU servers means purchasing GPUs and supporting hardware, then hosting them either on-premises or colocated in a data center.

✅ Pros:

✔ Lower Long-Term Costs – Once purchased, ongoing costs are limited to power, maintenance, and hosting fees.

✔ Full Control – Customize hardware configurations and ensure access to specific GPUs.

✔ Resale Value – GPUs retain significant resale value, allowing you to recover investment costs when upgrading.

✔ Purchasing Flexibility – Buy GPUs at competitive prices, including through refurbished hardware vendors.

✔ Predictable Expenses – Fixed hardware costs eliminate unpredictable cloud billing.

✔ Guaranteed Availability – Avoid cloud shortages and ensure access to required GPUs.

❌ Cons:

✖ High Upfront Costs – Buying high-performance GPUs like NVIDIA A100 or H100 requires a significant investment.

✖ Complex Maintenance – Managing hardware failures and upgrades requires technical expertise.

✖ Limited Scalability – Expanding capacity requires additional hardware purchases.

🔹 Best Use Cases:

Startups with stable, predictable workloads that need dedicated resources.

Companies conducting large-scale AI training or handling sensitive data.

Organizations seeking long-term cost savings and reduced dependency on cloud providers.
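The trade-off between usage-based cloud billing and a one-time purchase can be estimated with a simple break-even calculation. The sketch below is only illustrative: the function name and every figure (purchase price, monthly operating cost, hourly rate, utilization) are hypothetical placeholders, not real quotes.

```python
def breakeven_months(purchase_cost, monthly_owned_opex, cloud_hourly_rate, hours_per_month):
    """Months of use after which owning becomes cheaper than renting in the cloud.

    Returns None if the cloud is never more expensive at this utilization.
    """
    monthly_cloud = cloud_hourly_rate * hours_per_month
    monthly_saving = monthly_cloud - monthly_owned_opex
    if monthly_saving <= 0:
        return None
    return purchase_cost / monthly_saving


# Hypothetical figures for illustration only.
heavy = breakeven_months(30_000, 500, 3.0, 600)  # 600 GPU-hours per month
light = breakeven_months(30_000, 500, 3.0, 100)  # 100 GPU-hours per month
print(heavy)
print(light)
```

With these made-up inputs, heavy use pays back the purchase in roughly two years, while light use never does, which matches the guidance that ownership suits stable, continuous workloads.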

3. Renting Physical GPU Servers

Renting physical GPU servers provides access to high-performance hardware without the need for direct ownership. These servers are often hosted in data centers and offered by third-party providers.

✅ Pros:

✔ Lower Upfront Costs – Avoid large capital investments and opt for periodic rental fees.

✔ Bare-Metal Performance – Gain full access to physical GPUs without virtualization overhead.

✔ Flexibility – Upgrade or switch GPU models more easily compared to ownership.

✔ No Depreciation Risks – Avoid concerns over GPU obsolescence.

❌ Cons:

✖ Rental Premiums – Long-term rental fees can exceed the cost of purchasing hardware.

✖ Operational Complexity – Requires coordination with data center providers for management.

✖ Availability Constraints – Supply shortages may affect access to cutting-edge GPUs.

🔹 Best Use Cases:

Mid-stage startups needing temporary GPU access for specific projects.

Companies transitioning away from cloud dependency but not ready for full ownership.

Organizations with fluctuating GPU workloads looking for cost-effective solutions.

4. Hybrid Infrastructure

Hybrid infrastructure combines owned or rented GPUs with cloud GPU services, ensuring cost efficiency, scalability, and reliable performance.

What is a Hybrid GPU Infrastructure?

A hybrid model integrates:

1️⃣ Owned or Rented GPUs – Dedicated resources for R&D and long-term workloads.

2️⃣ Cloud GPU Services – Scalable, on-demand resources for overflow, production, and deployment.

How Hybrid Infrastructure Benefits Startups

✅ Ensures Control in R&D – Dedicated hardware guarantees access to required GPUs.

✅ Leverages Cloud for Production – Use cloud resources for global scaling and short-term spikes.

✅ Optimizes Costs – Aligns workloads with the most cost-effective resource.

✅ Reduces Risk – Minimizes reliance on a single provider, preventing vendor lock-in.

Expanded Hybrid Workflow for AI Startups

1️⃣ R&D Stage: Use physical GPUs for experimentation and colocate them in data centers.

2️⃣ Model Stabilization: Transition workloads to the cloud for flexible testing.

3️⃣ Deployment & Production: Reserve cloud instances for stable inference and global scaling.

4️⃣ Overflow Management: Use a hybrid approach to scale workloads efficiently.
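The workflow above can be sketched as a small placement rule: R&D jobs fill the owned GPUs first, and production jobs or anything that does not fit go to the cloud. This is a minimal illustration, not a real scheduler; the `Job` class, the stage labels, and the job names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    gpus: int
    stage: str  # hypothetical labels: "rnd" or "production"


def place_jobs(jobs, owned_gpus):
    """Fill owned GPUs with R&D jobs first; send production and overflow to the cloud."""
    placement = {}
    free = owned_gpus
    for job in jobs:
        if job.stage == "rnd" and job.gpus <= free:
            placement[job.name] = "owned"
            free -= job.gpus
        else:
            placement[job.name] = "cloud"
    return placement


jobs = [
    Job("finetune", 4, "rnd"),
    Job("ablation", 6, "rnd"),
    Job("inference-api", 2, "production"),
]
plan = place_jobs(jobs, owned_gpus=8)
print(plan)
```

Here the second R&D job does not fit in the remaining owned capacity, so it overflows to the cloud alongside the production service.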

Conclusion

Efficient GPU resource management is crucial for AI startups balancing innovation with cost efficiency.

Cloud GPUs offer flexibility but become expensive for long-term use.

Owning GPUs provides control and cost savings but requires infrastructure management.

Renting GPUs is a middle-ground solution, offering flexibility without ownership risks.

Hybrid infrastructure combines the best of both, enabling startups to scale cost-effectively.

Platforms like BuySellRam.com help startups optimize their hardware investments by providing cost-effective solutions for buying and selling GPUs, ensuring they stay competitive in the evolving AI landscape.

The original article is here: How to manage GPU resource?

#GPUManagement #GPUsForAI #AIGPU #TechInfrastructure #HighPerformanceComputing #CloudComputing #AIHardware #Tech

#GPU Management#GPUs for AI#AI GPU#High Performance Computing#cloud computing#ai hardware#technology

0 notes

Text

Unveiling the Future of AI: Why Sharon AI is the Game-Changer You Need to Know

Artificial Intelligence (AI) is no longer just a buzzword; it’s the backbone of innovation in industries ranging from healthcare to finance. As businesses look to scale and innovate, leveraging advanced AI services has become crucial. Enter Sharon AI, a cutting-edge platform that’s reshaping how organizations harness AI’s potential. If you haven’t heard of Sharon AI yet, it’s time to dive in.

Why AI is Essential in Today’s World

The adoption of artificial intelligence has skyrocketed over the past decade. From chatbots to complex data analytics, AI is driving efficiency, accuracy, and innovation. Businesses that leverage AI are not just keeping up; they’re leading their industries. However, one challenge remains: finding scalable, high-performance computing solutions tailored to AI.

That’s where Sharon AI steps in. With its GPU-based computing infrastructure, the platform offers solutions that are not only powerful but also sustainable, addressing the growing need for eco-friendly tech.

What Sets Sharon AI Apart?

Sharon AI specializes in providing advanced compute infrastructure for high-performance computing (HPC) and AI applications. Here’s why Sharon AI stands out:

Scalability: Whether you’re a startup or a global enterprise, Sharon AI offers flexible solutions to match your needs.

Sustainability: Their commitment to building net-zero energy data centers, like the 250 MW facility in Texas, highlights a dedication to green technology.

State-of-the-Art GPUs: Incorporating NVIDIA H100 GPUs ensures top-tier performance for AI and HPC workloads.

Reliability: Operating from U.S.-based data centers, Sharon AI guarantees secure and efficient service delivery.

Services Offered by Sharon AI

Sharon AI’s offerings are designed to empower businesses in their AI journey. Key services include:

GPU Cloud Computing: Scalable GPU resources tailored for AI and HPC applications.

Sustainable Data Centers: Energy-efficient facilities ensuring low carbon footprints.

Custom AI Solutions: Tailored services to meet industry-specific needs.

24/7 Support: Expert assistance to ensure seamless operations.

Why Businesses Are Turning to Sharon AI

Businesses today face growing demands for data-driven decision-making, predictive analytics, and real-time processing. Traditional computing infrastructure often falls short, making Sharon AI’s advanced solutions a must-have for enterprises looking to stay ahead.

For instance, industries like healthcare benefit from Sharon AI’s ability to process massive datasets quickly and accurately, while financial institutions use their solutions to enhance fraud detection and predictive modeling.

The Growing Demand for AI Services

Searches related to AI solutions, HPC platforms, and sustainable computing are increasing as businesses seek reliable providers. By offering innovative solutions, Sharon AI is positioned as a leader in this space. If you’re searching for providers or services such as GPU cloud computing, NVIDIA GPU solutions, or AI infrastructure services, Sharon AI is a name you’ll frequently encounter. Their offerings are designed to cater to the rising demand for efficient and sustainable AI computing solutions.

0 notes

Text

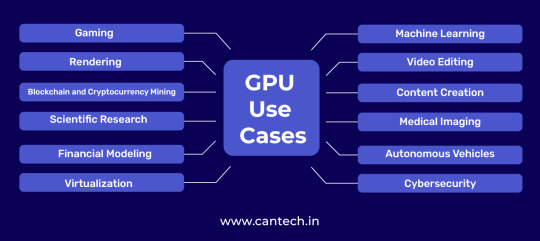

From rendering stunning visuals to powering complex calculations, GPUs (graphics processing units) have moved well beyond their gaming roots. Their parallel processing prowess makes them ideal for tasks like machine learning, scientific computing, and video editing.

1 note

Text

Tech Breakdown: What Is a SuperNIC? Get the Inside Scoop!

Generative AI is the latest development in the rapidly evolving digital landscape. One of the innovations that makes it feasible goes by a relatively new name: the SuperNIC.

What Is a SuperNIC?

A SuperNIC is a new class of network accelerator created to speed up hyperscale AI workloads on Ethernet-based clouds. Using remote direct memory access (RDMA) over Converged Ethernet (RoCE) technology, it provides extremely fast network connectivity for GPU-to-GPU communication, with throughput of up to 400 Gb/s.

SuperNICs combine the following features:

High-speed packet reordering, which ensures that data packets are received and processed in the same order in which they were originally sent. This keeps the data flow’s sequential integrity intact.

Advanced congestion management, which uses network-aware algorithms and real-time telemetry data to regulate and prevent congestion in AI networks.

Programmable computation on the input/output (I/O) path, which makes it possible to adapt and extend the network architecture in AI cloud data centers.

A low-profile, power-efficient design that handles AI workloads within constrained power budgets.

Optimization for full-stack AI, encompassing system software, communication libraries, application frameworks, networking, computing, and storage.
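To make the first feature concrete, here is a minimal software sketch of packet reordering: packets that arrive out of order are buffered until the next expected sequence number shows up. It only illustrates the idea and is not how a SuperNIC implements it in hardware; the function name and the `(seq, payload)` packet shape are assumptions made for this example.

```python
def reorder(packets, first_seq=0):
    """Yield payloads in sequence order from (seq, payload) pairs that may arrive out of order."""
    pending = {}          # packets that arrived early, keyed by sequence number
    expected = first_seq  # next sequence number to release
    for seq, payload in packets:
        pending[seq] = payload
        # Release every packet that is now contiguous with what was already delivered.
        while expected in pending:
            yield pending.pop(expected)
            expected += 1


arrived = [(1, "b"), (0, "a"), (3, "d"), (2, "c")]
in_order = list(reorder(arrived))
print(in_order)
```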

Recently, NVIDIA revealed the first SuperNIC in the world designed specifically for AI computing, built on the BlueField-3 networking architecture. It is a component of the NVIDIA Spectrum-X platform, where it integrates with the Spectrum-4 Ethernet switch system.

The NVIDIA Spectrum-4 switch system and BlueField-3 SuperNIC work together to provide an accelerated computing fabric that is optimized for AI applications. Spectrum-X outperforms conventional Ethernet environments by consistently delivering high levels of network efficiency.

Yael Shenhav, vice president of DPU and NIC products at NVIDIA, stated, “In a world where AI is driving the next wave of technological innovation, the BlueField-3 SuperNIC is a vital cog in the machinery.” “SuperNICs are essential components for enabling the future of AI computing because they guarantee that your AI workloads are executed with efficiency and speed.”

The Changing Environment of Networking and AI

Large language models and generative AI are causing a seismic change in the area of artificial intelligence. These potent technologies have opened up new avenues and made it possible for computers to perform new functions.

GPU-accelerated computing plays a critical role in the development of AI by processing massive amounts of data, training huge AI models, and enabling real-time inference. While this increased computing capacity has created opportunities, Ethernet cloud networks have also been put to the test.

Traditional Ethernet, the internet’s foundational technology, was designed to link loosely coupled applications and provide wide compatibility. It was not designed for the demanding computational requirements of contemporary AI workloads, which involve tightly coupled parallel processing, rapid transfers of large amounts of data, and unusual communication patterns, all of which call for optimized network connectivity.

Basic network interface cards (NICs) were created with interoperability, universal data transfer, and general-purpose computing in mind. They were never intended to handle the special difficulties brought on by the high processing demands of AI applications.

Standard NICs lack the features and capabilities needed for effective data transmission, low latency, and the predictable performance that AI tasks require. In contrast, SuperNICs are designed specifically for contemporary AI workloads.

Benefits of SuperNICs in AI Computing Environments

Data processing units (DPUs) offer high-throughput, low-latency network connectivity along with many other sophisticated features. Since their launch in 2020, DPUs have become more and more common in cloud computing, mostly because of their ability to offload, accelerate, and isolate computation that would otherwise run on other data center hardware.

Although SuperNICs and DPUs have many characteristics and functions in common, SuperNICs are designed specifically to speed up networks for artificial intelligence.

The performance of distributed AI training and inference communication flows is highly dependent on the availability of network capacity. With their streamlined design, SuperNICs scale better than DPUs and can provide up to 400 Gb/s of network bandwidth per GPU.

When GPUs and SuperNICs are matched 1:1 in a system, AI workload efficiency may be greatly increased, resulting in higher productivity and better business outcomes.

SuperNICs are intended solely to speed up networking for AI cloud computing. As a result, they use less processing power than a DPU, which needs a lot of processing power to offload applications from a host CPU.

Less power usage results from the decreased computation needs, which is especially important in systems with up to eight SuperNICs.
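For a sense of scale, the arithmetic behind these figures is straightforward. The sketch below assumes ideal conditions with no protocol overhead, and the 10 GB payload is an arbitrary example rather than a figure from NVIDIA.

```python
def transfer_seconds(gigabytes, link_gbps):
    """Ideal time to move `gigabytes` (10^9 bytes each) over a link of `link_gbps` gigabits per second."""
    return gigabytes * 8 / link_gbps


per_gpu_gbps = 400     # bandwidth per GPU quoted in the article
nics_per_server = 8    # "up to eight SuperNICs" per system
aggregate_gbps = per_gpu_gbps * nics_per_server

seconds = transfer_seconds(10, per_gpu_gbps)  # hypothetical 10 GB payload
print(aggregate_gbps, seconds)
```

Eight SuperNICs at 400 Gb/s each add up to 3,200 Gb/s (3.2 Tb/s) per server, and a 10 GB transfer over a single link takes about 0.2 seconds in the ideal case.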

Another distinguishing feature of the SuperNIC is its dedicated AI networking capabilities. When tightly coupled with an AI-optimized NVIDIA Spectrum-4 switch, it provides optimized congestion control, adaptive routing, and out-of-order packet handling. These technologies accelerate Ethernet AI cloud environments.

Transforming cloud computing with AI

The NVIDIA BlueField-3 SuperNIC is essential for AI-ready infrastructure because of its many advantages.

Maximum efficiency for AI workloads: The BlueField-3 SuperNIC is perfect for AI workloads since it was designed specifically for network-intensive, massively parallel computing. It guarantees bottleneck-free, efficient operation of AI activities.

Performance that is consistent and predictable: The BlueField-3 SuperNIC makes sure that each job and tenant in multi-tenant data centers, where many jobs are executed concurrently, is isolated, predictable, and unaffected by other network operations.

Secure multi-tenant cloud infrastructure: Data centers that handle sensitive data place a high premium on security. High security levels are maintained by the BlueField-3 SuperNIC, allowing different tenants to cohabit with separate data and processing.

Broad network infrastructure: The BlueField-3 SuperNIC is very versatile and can be easily adjusted to meet a wide range of different network infrastructure requirements.

Wide compatibility with server manufacturers: The BlueField-3 SuperNIC integrates easily with the majority of enterprise-class servers without using an excessive amount of power in data centers.

1 note

Photo

#computers#the future#containers#hypervisor#gpu#ide#branch prediction#cloud computing#ai#javascript#ext7

1 note

Text

i do think like if you're not immersed in graphics and thinking about all the data flowing around between different buffers and shit that's going on inside a frame, when you see a render of a shiny box reflecting on a wall, it's like. ok and? it's a box? but there's so much nuance to the way light floods through an environment. and so much ingenuity in finding out what we can fake and precompute and approximate, to get our imaginary space to have that same gorgeous feeling of 'everything reflects off everything else'.

light is just one example, but I think all the different systems of feedback interactions in the world are just so juicy. geology is full of them. rocks bouncing up isostatically as the glaciers flow off them. spreading ridges and stripes of magnetic polarity revealing themselves. earthquakes ringing across the world. clouds forming around mountain peaks, giving rise to forests and deserts. soil being shaped by erosion, directing future water into channels. biological evolution!! I turned away from earth sciences many years ago, to pursue maths and physics and later art and now computer programming, but all of these things link up and inform the thing I'm doing at the moment.

sometimes you try to directly recreate the underlying physics, sometimes you're just finding mathematical shapes that feel similar: fractal noise that can be evaluated in parallel, pushed through various functions, assigned materials, voxellised and marching cubesed and pushed onto the gpu, shaded and offset by textures, and in the end, it looks sorta like grass. an island that will be shaped further as the players get their hands on it.

it's all just a silly game about hamsters shooting missiles. and yet when you spend this long immersed in a project, so much reveals itself. nobody will probably ever think about these details as much as I have been. and yet I hope something of that comes across.

they say of the Bevy game engine that everyone's trying to make a voxel engine or tech demo instead of an actual game. but in a way, I love that. making your own personal voxel engine is a cool game, for you.

computers are for playing with

102 notes

Text

What does AI actually look like?

There has been a lot of talk about the negative externalities of AI, how much power it uses, how much water it uses, but I feel like people often discuss these things like they are abstract concepts, or people discuss AI like it is this intangible thing that exists off in "The cloud" somewhere, but I feel like a lot of people don't know what the infrastructure of AI actually is, and how it uses all that power and water, so I would like to recommend this video from Linus Tech Tips, where he looks at a supercomputer that is used for research in Canada. To be clear I do not have anything against supercomputers in general and they allow important work to be done, but before the AI bubble, you didn't need one, unless you needed it. The recent AI bubble is trying to get this stuff into the hands of way more people than needed them before, which is causing a lot more datacenter build up, which is causing their companies to abandon climate goals. So what does AI actually look like?

First of all, it uses a lot of hardware. It is basically normal computer hardware, there is just a lot of it networked together.

Hundreds of hard drives all spinning constantly

Each one of the blocks in this image is essentially a powerful PC, that you would still be happy to have as your daily driver today even though the video is seven years old. There are 576 of them, and other more powerful compute nodes for bigger datasets.

The GPU section, each one of these drawers contains like four datacenter level graphics cards. People are fitting a lot more of them into servers now than they were then.

Now for the cooling and the water. Each cabinet has a thick door, with a water cooled radiator in it. In summer, they even spray water onto the radiator directly so it can be cooled inside and out.

They are all fed from the pump room, which is the floor above. A bunch of pumps and pipes moving the water around, and it even has cooling towers outside that the water is pumped out into on hot days.

So is this cool? Yes. Is it useful? Also yes. Anyone doing biology, chemistry, physics, simulations, even stuff like social sciences, and even legitimate uses of analytical AI is glad stuff like this exists. It is very useful for analysing huge datasets, but how many people actually do that? Do you? The same kind of stuff is also used for big websites like YouTube. But the question is, is it worth building hundreds more datacenters just like this one, so people can automatically generate their emails, have an automatic source of personal attention from a computer, and generate incoherent images for social media clicks? Didn't tech companies have climate targets, once?

105 notes

Text

Detecting AI-generated research papers through "tortured phrases"

So, a recent paper describes a new way to figure out if a "research paper" is, in fact, phony AI-generated nonsense. How, you may ask? The same way teachers and professors detect if you just copied your paper from online and threw a thesaurus at it!

It looks for “tortured phrases”; that is, phrases which resemble standard field-specific jargon, but seemingly mangled by a thesaurus. Here's some examples (transcript below the cut):

profound neural organization - deep neural network

(fake | counterfeit) neural organization - artificial neural network

versatile organization - mobile network

organization (ambush | assault) - network attack

organization association - network connection

(enormous | huge | immense | colossal) information - big data

information (stockroom | distribution center) - data warehouse

(counterfeit | human-made) consciousness - artificial intelligence (AI)

elite figuring - high performance computing

haze figuring - fog/mist/cloud computing

designs preparing unit - graphics processing unit (GPU)

focal preparing unit - central processing unit (CPU)

work process motor - workflow engine

facial acknowledgement - face recognition

discourse acknowledgement - voice recognition

mean square (mistake | blunder) - mean square error

mean (outright | supreme) (mistake | blunder) - mean absolute error

(motion | flag | indicator | sign | signal) to (clamor | commotion | noise) - signal to noise

worldwide parameters - global parameters

(arbitrary | irregular) get right of passage to - random access

(arbitrary | irregular) (backwoods | timberland | lush territory) - random forest

(arbitrary | irregular) esteem - random value

subterranean insect (state | province | area | region | settlement) - ant colony

underground creepy crawly (state | province | area | region | settlement) - ant colony

leftover vitality - remaining energy

territorial normal vitality - local average energy

motor vitality - kinetic energy

(credulous | innocent | gullible) Bayes - naïve Bayes

individual computerized collaborator - personal digital assistant (PDA)
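To give a feel for how a list like this could be used, here is a minimal lookup sketch. It is not the paper's actual detection method, just a plain dictionary scan over a handful of the phrases above; the function name and the sample sentence are made up for illustration.

```python
import re

# A few entries taken from the list above: tortured phrase -> expected term.
TORTURED = {
    "profound neural organization": "deep neural network",
    "counterfeit consciousness": "artificial intelligence",
    "haze figuring": "fog/mist/cloud computing",
    "designs preparing unit": "graphics processing unit",
    "irregular backwoods": "random forest",
    "colossal information": "big data",
}


def find_tortured(text):
    """Return (tortured phrase, expected term) pairs found in text, ignoring case."""
    hits = []
    for phrase, expected in TORTURED.items():
        if re.search(r"\b" + re.escape(phrase) + r"\b", text, flags=re.IGNORECASE):
            hits.append((phrase, expected))
    return hits


sample = ("We train a Profound Neural Organization on colossal information "
          "using a designs preparing unit.")
hits = find_tortured(sample)
print(hits)
```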

89 notes

Text

Your All-in-One AI Web Agent: Save $200+ a Month, Unleash Limitless Possibilities!

Imagine having an AI agent that costs you nothing monthly, runs directly on your computer, and is unrestricted in its capabilities. OpenAI Operator charges up to $200/month for limited API calls and restricts access to many tasks like visiting thousands of websites. With DeepSeek-R1 and Browser-Use, you:

• Save money while keeping everything local and private.

• Automate visiting 100,000+ websites, gathering data, filling forms, and navigating like a human.

• Gain total freedom to explore, scrape, and interact with the web like never before.

You may have heard about Operator from OpenAI, which runs on their computers in some cloud, with you passing private information to their AI to do anything useful. And you pay for the gift. It is not paranoid to not want your passwords, logins, and personal details to be shared. OpenAI, of course, charges a substantial amount of money for something that limits exactly which sites you can visit, YouTube for example. With this method, you start by telling an AI exactly what you want it to do, in plain language, and then watch it navigate the web, gather information, and make decisions, all without writing a single line of code.

In this guide, we’ll show you how to build an AI agent that performs tasks like scraping news, analyzing social media mentions, and making predictions using DeepSeek-R1 and Browser-Use, but instead of writing a Python script, you’ll interact with the AI directly using prompts.

These instructions are under constant revision, as DeepSeek R1 is only days old. Browser Use has been a standard for quite a while. This method is suitable for people who are new to AI and programming. It may seem technical at first, but by the end of this guide, you’ll feel confident using your AI agent to perform a variety of tasks, all by talking to it. However, if you look at these instructions and they seem too overwhelming, wait: we will have a single-download app soon. It is in testing now.

This is version 3.0 of these instructions, dated January 26th, 2025.

This guide will walk you through setting up DeepSeek-R1 8B (4-bit) and Browser-Use Web UI, ensuring even the most novice users succeed.

What You’ll Achieve

By following this guide, you’ll:

1. Set up DeepSeek-R1, a reasoning AI that works privately on your computer.

2. Configure Browser-Use Web UI, a tool to automate web scraping, form-filling, and real-time interaction.

3. Create an AI agent capable of finding stock news, gathering Reddit mentions, and predicting stock trends—all while operating without cloud restrictions.

A Deep Dive At ReadMultiplex.com Soon

We will have a deep dive into how you can use this platform for very advanced AI use cases that few have thought of, let alone seen before. Join us at ReadMultiplex.com and become a member who not only sees the future earlier but also gains practical and pragmatic ways to profit from it.

System Requirements

Hardware

• RAM: 8 GB minimum (16 GB recommended).

• Processor: Quad-core (Intel i5/AMD Ryzen 5 or higher).

• Storage: 5 GB free space.

• Graphics: GPU optional for faster processing.

Software

• Operating System: macOS, Windows 10+, or Linux.

• Python: Version 3.8 or higher.

• Git: Installed.

Step 1: Get Your Tools Ready

We’ll need Python, Git, and a terminal/command prompt to proceed. Follow these instructions carefully.

Install Python

1. Check Python Installation:

• Open your terminal/command prompt and type:

python3 --version

• If Python is installed, you’ll see a version like:

Python 3.9.7

2. If Python Is Not Installed:

• Download Python from python.org.

• During installation, ensure you check “Add Python to PATH” on Windows.

3. Verify Installation:

python3 --version

Install Git

1. Check Git Installation:

• Run:

git --version

• If installed, you’ll see:

git version 2.34.1

2. If Git Is Not Installed:

• Windows: Download Git from git-scm.com and follow the instructions.

• Mac/Linux: Install via terminal:

sudo apt install git -y # For Ubuntu/Debian

brew install git # For macOS
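If you would rather check both tools in one go, a small script can do it. This is an optional sketch: the function name is made up for this guide, and the minimum Python version (3.8) comes from the System Requirements above.

```python
import shutil
import sys


def check_prerequisites(min_python=(3, 8)):
    """Return a dict of requirement name -> True/False for the tools this guide needs."""
    return {
        "python": sys.version_info[:2] >= min_python,
        "git": shutil.which("git") is not None,  # True if git is found on PATH
    }


status = check_prerequisites()
for name, ok in status.items():
    print(f"{name}: {'ok' if ok else 'missing or too old'}")
```

Save it as a file of your choice and run it with python3; anything reported as missing needs to be installed before moving on.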

Step 2: Download and Build llama.cpp

We’ll use llama.cpp to run the DeepSeek-R1 model locally.

1. Open your terminal/command prompt.

2. Create a folder for your project files and move into it:

mkdir ~/AI_Project

cd ~/AI_Project

3. Clone the llama.cpp repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

4. Build the project:

• Mac/Linux:

make

• Windows:

• Install a C++ compiler (e.g., MSVC or MinGW).

• Run:

mkdir build

cd build

cmake ..

cmake --build . --config Release

Step 3: Download DeepSeek-R1 8B 4-bit Model

1. Visit the DeepSeek-R1 8B Model Page on Hugging Face.

2. Download the 4-bit quantized model file:

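• Example: DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf. (The official 8B distill is Llama-based, so your file may instead be named DeepSeek-R1-Distill-Llama-8B-Q4_K_M.gguf. Use your actual filename in the commands that follow.)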

3. Move the model to your llama.cpp folder:

mv ~/Downloads/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf ~/AI_Project/llama.cpp
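A quick sanity check after downloading can catch a broken file, such as an HTML error page saved under a .gguf name. GGUF files begin with the four ASCII bytes "GGUF", so a minimal sketch (the function name is an illustrative assumption) could look like this:

```python
GGUF_MAGIC = b"GGUF"  # GGUF model files begin with these four bytes


def looks_like_gguf(path):
    """Return True if the file at `path` starts with the GGUF magic bytes."""
    with open(path, "rb") as f:
        return f.read(4) == GGUF_MAGIC
```

Call it with the path you moved the model to. A False result suggests the download should be repeated. This only inspects the header and does not validate the rest of the file.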

Step 4: Start DeepSeek-R1

1. Navigate to your llama.cpp folder:

cd ~/AI_Project/llama.cpp

2. Run the model with a sample prompt (recent llama.cpp builds name the command-line binary llama-cli; older builds call it main):

./llama-cli -m DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf -p "What is the capital of France?"

3. Expected Output:

The capital of France is Paris.
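If you would rather call the model from a script than from the terminal, the same command can be wrapped with Python's subprocess module. This is a sketch under assumptions: the helper names are hypothetical, the binary path is whatever your build produced, and -n (the maximum number of tokens to generate) is added here and is not part of the command above.

```python
import subprocess


def build_command(binary, model_path, prompt, max_tokens=128):
    """Build the argument list for a single llama.cpp command-line run."""
    return [binary, "-m", model_path, "-p", prompt, "-n", str(max_tokens)]


def run_prompt(binary, model_path, prompt, max_tokens=128, timeout=120):
    """Run the model once and return whatever it wrote to standard output."""
    cmd = build_command(binary, model_path, prompt, max_tokens)
    # timeout guards against a run that never returns; check=True raises
    # CalledProcessError if the binary exits with a non-zero status.
    result = subprocess.run(
        cmd, capture_output=True, text=True, timeout=timeout, check=True
    )
    return result.stdout
```

Passing the arguments as a list avoids shell quoting problems when the prompt contains quotes or spaces.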

Step 5: Set Up Browser-Use Web UI

1. Go back to your project folder:

cd ~/AI_Project

2. Clone the Browser-Use repository:

git clone https://github.com/browser-use/browser-use.git

cd browser-use

3. Create a virtual environment:

python3 -m venv env

4. Activate the virtual environment:

• Mac/Linux:

source env/bin/activate

• Windows:

env\Scripts\activate

5. Install dependencies:

pip install -r requirements.txt

6. Start the Web UI:

python examples/gradio_demo.py

7. Open the local URL in your browser:

http://127.0.0.1:7860
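If the page does not load, it helps to know whether anything is listening on that port at all. A minimal sketch with the standard socket module (the function name is an illustrative assumption; host and port come from the URL above):

```python
import socket


def is_port_open(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

A False result while the Web UI process is running usually means it is still starting or has bound to a different port, which its terminal output should show.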

Step 6: Configure the Web UI for DeepSeek-R1

1. Go to the Settings panel in the Web UI.

2. Specify the DeepSeek model path:

~/AI_Project/llama.cpp/DeepSeek-R1-Distill-Qwen-8B-Q4_K_M.gguf

3. Adjust Timeout Settings:

• Increase the timeout to 120 seconds for larger models.

4. Enable Memory-Saving Mode if your system has less than 16 GB of RAM.
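The rules in this step can be written down as a small helper. This is only a sketch of the decision logic: the dictionary keys are hypothetical names and do not correspond to a confirmed configuration format of the Web UI.

```python
def suggest_settings(ram_gb):
    """Suggest Web UI settings following the guidance in Step 6."""
    return {
        # Step 6 recommends a 120-second timeout for larger models.
        "timeout_seconds": 120,
        # Step 6 recommends memory-saving mode below 16 GB of RAM.
        "memory_saving_mode": ram_gb < 16,
    }
```

For example, a machine with the minimum 8 GB of RAM gets memory-saving mode turned on, while one with the recommended 16 GB does not.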

Step 7: Run an Example Task

Let’s create an agent that:

1. Searches for Tesla stock news.

2. Gathers Reddit mentions.

3. Predicts the stock trend.

Example Prompt:

Search for "Tesla stock news" on Google News and summarize the top 3 headlines. Then, check Reddit for the latest mentions of "Tesla stock" and predict whether the stock will rise based on the news and discussions.
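To reuse the same task for other companies, the prompt can be generated from a template. A minimal sketch (the function and parameter names are illustrative assumptions); with the defaults shown it reproduces the example prompt above word for word:

```python
def build_task_prompt(company, headline_count=3):
    """Build the agent task prompt for a given company name."""
    query = f"{company} stock"
    return (
        f'Search for "{query} news" on Google News and summarize the top '
        f"{headline_count} headlines. Then, check Reddit for the latest "
        f'mentions of "{query}" and predict whether the stock will rise '
        "based on the news and discussions."
    )


if __name__ == "__main__":
    print(build_task_prompt("Tesla"))
```

Paste the resulting text into the Web UI's task field as you would the hand-written prompt.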

--

Congratulations! You’ve built a powerful, private AI agent capable of automating the web and reasoning in real time. Unlike costly, restricted tools like OpenAI Operator, you’ve spent nothing beyond your time. Unleash your AI agent on tasks that were once impossible and imagine the possibilities for personal projects, research, and business. You’re not limited anymore. You own the web—your AI agent just unlocked it! 🚀

Stay tuned for a FREE, simple-to-use single app that will do all this and more.


Text

World's Most Powerful Business Leaders: Insights from Visionaries Across the Globe

In the fast-evolving world of business and innovation, visionary leadership has become the cornerstone of driving global progress. Recently, Fortune magazine recognized the world's most powerful business leaders, acknowledging their transformative influence on industries, economies, and societies.

Among these extraordinary figures, Elon Musk emerged as the most powerful business leader, symbolizing the future of technological and entrepreneurial excellence.

Elon Musk: The Game-Changer

Elon Musk, the CEO of Tesla and SpaceX and the owner of X (formerly Twitter), has redefined innovation with his futuristic endeavors. From pioneering electric vehicles at Tesla to envisioning Mars colonization with SpaceX, Musk's revolutionary ideas continue to shape industries. Recognized as the most powerful business leader by Fortune, his ventures stand as a testament to what relentless ambition and innovation can achieve.

Musk's influence extends beyond his corporate achievements. As a driver of artificial intelligence and space exploration, he inspires the next generation of leaders to push boundaries. His leadership exemplifies the power of daring to dream big and executing with precision.

Mukesh Ambani: The Indian Powerhouse

Mukesh Ambani, the chairman of Reliance Industries, represents the epitome of Indian business success. Ranked among the top 15 most powerful business leaders globally, Ambani has spearheaded transformative projects in telecommunications, retail, and energy, reshaping India's economic landscape. His relentless focus on innovation, particularly with Reliance Jio, has revolutionized the digital ecosystem in India.

Under his leadership, Reliance Industries has expanded its global footprint, setting new benchmarks in business growth and sustainability. Ambani’s vision reflects the critical role of emerging economies in shaping the global business narrative.

Defining Powerful Leadership

The criteria for identifying powerful business leaders are multifaceted. According to Fortune, leaders were evaluated based on six key metrics:

Business Scale: The size and impact of their ventures on a global level.

Innovation: Their ability to pioneer advancements that redefine industries.

Influence: How effectively they inspire others and create a lasting impact.

Trajectory: The journey of their career and the milestones achieved.

Business Health: Metrics like profitability, liquidity, and operational efficiency.

Global Impact: Their contribution to society and how their leadership addresses global challenges.

Elon Musk and Mukesh Ambani exemplify these qualities, demonstrating how strategic vision and innovative execution can create monumental change.

Other Global Icons in Leadership

The list of the world's most powerful business leaders features numerous iconic personalities, each excelling in their respective domains:

Satya Nadella (Microsoft): A transformative leader who has repositioned Microsoft as a cloud-computing leader, emphasizing customer-centric innovation.

Sundar Pichai (Alphabet/Google): A driving force behind Google’s expansion into artificial intelligence, cloud computing, and global digital services.

Jensen Huang (NVIDIA): The architect of the AI revolution, whose GPUs have become indispensable in AI-driven industries.

Tim Cook (Apple): Building on Steve Jobs' legacy, Cook has solidified Apple as a leader in innovation and user-centric design.

These leaders have shown that their influence isn’t confined to financial success alone; it extends to creating a better future for the world.

Leadership in Action: Driving Innovation and Progress

One common thread unites these leaders—their ability to drive innovation. For example:

Mary Barra (General Motors) is transforming the auto industry with her push toward electric vehicles, ensuring a sustainable future.

Sam Altman (OpenAI) leads advancements in artificial intelligence, shaping ethical AI practices with groundbreaking models like ChatGPT.

These visionaries have proven that impactful leadership is about staying ahead of trends, embracing challenges, and delivering solutions that inspire change.

The Indian Connection: Rising Global Influence

Apart from Mukesh Ambani, Indian-origin leaders such as Sundar Pichai and Satya Nadella have earned global recognition. Their ability to bridge cultural boundaries and lead multinational corporations demonstrates the increasing prominence of Indian talent on the world stage.

Conclusion

From technological advancements to economic transformation, these powerful business leaders are shaping the future of our world. Elon Musk and Mukesh Ambani stand at the forefront, representing the limitless potential of visionary leadership. As industries continue to evolve, their impact serves as a beacon for aspiring leaders worldwide.

This era of leadership emphasizes not only achieving success but also leveraging it to create meaningful change. In the words of Elon Musk: "When something is important enough, you do it even if the odds are not in your favor."

#elon musk#mukesh ambani#x platform#spacex#tesla#satya nadella#sundar pichai#jensen huang#rajkot#our rajkot#Rajkot Job#Rajkot Job Vacancy#job vacancy#it jobs


Photo

(via AI infrastructure leader Lambda Labs closes $480M Series D to expand AI cloud platform)

Artificial intelligence is restructuring the global economy, redefining how humans interact with computers, and accelerating scientific progress. AI is the foundation of a new industrial era and a new era of computing.

We’re excited to share that Lambda has raised $480M in Series D funding, co-led by Andra Capital and SGW. New investors include Andrej Karpathy, ARK Invest, Fincadia Advisors, G Squared, In-Q-Tel (IQT), KHK & Partners, and NVIDIA, among others; strategic investment came from Pegatron, Supermicro, Wistron, and Wiwynn; and many existing investors also took part, including 1517, Crescent Cove, and USIT.


Text

BuySellRam.com is expanding its focus on AI hardware to meet the industry's growing demand. Specializing in high-performance GPUs, SSDs, and AI accelerators from vendors such as Nvidia and AMD, BuySellRam.com offers businesses reliable access to advanced technology while promoting sustainability through the recycling of IT equipment. Read more about how we're supporting AI innovation and reducing e-waste in our latest announcement.

#AI Hardware#GPUs#tech innovation#ai technology#sustainability#Tech Recycling#AI Accelerators#cloud computing#BuySellRam#Tech For Good#E-waste Reduction#AI Revolution#high performance computing#information technology


Text

Bitcoin Mining

The Evolution of Bitcoin Mining: From Solo Mining to Cloud-Based Solutions

Introduction

Bitcoin mining has come a long way since its early days when individuals could mine BTC using personal computers. Over the years, advancements in technology and increasing network difficulty have led to the rise of more sophisticated mining methods. Today, cloud mining solutions like NebuMine are revolutionizing cryptocurrency mining by making it more accessible and efficient. This article explores the journey of Bitcoin mining, from solo efforts to large-scale cloud mining operations.

The Early Days of Bitcoin Mining

In the beginning, Bitcoin mining was simple. Miners could use regular CPUs to solve cryptographic puzzles and validate transactions. However, as more participants joined the network, mining difficulty increased, leading to the adoption of more powerful GPUs.
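The "cryptographic puzzle" is a search for a number (a nonce) that makes a hash fall below a target, and raising the difficulty shrinks that target. The following toy sketch shows the idea with a double SHA-256 hash, as Bitcoin uses, but it does not reproduce Bitcoin's actual block header format; the function names and the difficulty_bits parameter are illustrative assumptions.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Hash the data twice with SHA-256."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def mine(block_data: bytes, difficulty_bits: int, max_nonce: int = 2**32):
    """Search for a nonce whose hash has `difficulty_bits` leading zero bits.

    Returns (nonce, hex_digest), or None if no nonce below max_nonce works.
    """
    target = 1 << (256 - difficulty_bits)  # smaller target = harder puzzle
    for nonce in range(max_nonce):
        digest = double_sha256(block_data + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None


if __name__ == "__main__":
    # 12 bits needs about 4,096 attempts on average; each extra bit doubles it.
    print(mine(b"example block", 12))
```

Because every additional difficulty bit doubles the expected number of attempts, rising network difficulty quickly put the search out of reach of ordinary CPUs.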

As BTC mining grew, miners began forming mining pools to combine computing power and share rewards. This shift marked the transition from individual mining to more collective efforts in cryptocurrency mining.

The Rise of ASIC Mining

The introduction of Application-Specific Integrated Circuits (ASICs) in Bitcoin mining changed the game completely. These highly specialized machines offered unmatched efficiency, significantly increasing mining power while consuming less energy than GPUs.

However, ASICs also made mining more competitive, pushing small-scale miners out of the market. This led to the rise of large mining farms, further centralizing BTC mining operations.

The Shift to Cloud Mining

As the mining landscape became more challenging, cloud mining emerged as a viable alternative. Instead of investing in expensive hardware, users could rent mining power from platforms like NebuMine, enabling them to participate in Bitcoin mining without technical expertise or maintenance costs.

Cloud mining offers several advantages:

Accessibility: Users can start crypto mining without purchasing expensive equipment.

Scalability: Miners can adjust their computing power based on market conditions.

Convenience: No need for hardware setup, electricity costs, or cooling management.

With platforms like NebuMine, cloud mining has become a practical way for individuals and businesses to engage in BTC mining and Ethereum mining without the hassle of traditional setups.

Ethereum Mining and the Future of Crypto Mining

While Bitcoin mining has dominated the industry, Ethereum mining has also played a crucial role in the crypto space. Since Ethereum’s shift to Proof-of-Stake (PoS) in September 2022 ended mining on its main network, many miners have sought alternatives, further driving interest in cloud mining services.

Cryptocurrency mining continues to evolve, with new innovations such as AI-driven mining optimization and decentralized mining pools shaping the future. Platforms like NebuMine are at the forefront of this transformation, making cloud mining more accessible, efficient, and sustainable.

Conclusion

The evolution of Bitcoin mining highlights the industry's rapid advancements, from solo mining to industrial-scale operations and now cloud mining. As technology continues to advance, cloud mining solutions like NebuMine are paving the way for the future of cryptocurrency mining, making it easier for users to participate in BTC mining and Ethereum mining without technical barriers.

Check out our website to get more information about Cryptocurrency mining!

#Bitcoin mining#Cloud mining#Crypto mining#BTC mining#Ethereum mining#Cryptocurrency mining#SoundCloud


Text

AI & Data Centers vs Water + Energy

We all know that AI has issues, including energy and water consumption. But these fields are still young and lots of research is looking into making them more efficient. Remember, most technologies tend to suck when they first come out.

Deploying high-performance, energy-efficient AI

"You give up that kind of amazing general purpose use like when you're using ChatGPT-4 and you can ask it everything from 17th century Italian poetry to quantum mechanics, if you narrow your range, these smaller models can give you equivalent or better kind of capability, but at a tiny fraction of the energy consumption," says Ball. ...

"I think liquid cooling is probably one of the most important low hanging fruit opportunities... So if you move a data center to a fully liquid cooled solution, this is an opportunity of around 30% of energy consumption, which is sort of a wow number.... There's more upfront costs, but actually it saves money in the long run... One of the other benefits of liquid cooling is we get out of the business of evaporating water for cooling...

The other opportunity you mentioned was density and bringing higher and higher density of computing has been the trend for decades. That is effectively what Moore's Law has been pushing us forward... [i.e., chips' rate of improvement is faster than the growth in their energy needs. This means that each year chips can do more calculations with less energy. - RCS] ... So the energy savings there is substantial, not just because those chips are very, very efficient, but because the amount of networking equipment and ancillary things around those systems is a lot less because you're using those resources more efficiently with those very high dense components"

New tools are available to help reduce the energy that AI models devour

"The trade-off for capping power is increasing task time — GPUs will take about 3 percent longer to complete a task, an increase Gadepally says is "barely noticeable" considering that models are often trained over days or even months... Side benefits have arisen, too. Since putting power constraints in place, the GPUs on LLSC supercomputers have been running about 30 degrees Fahrenheit cooler and at a more consistent temperature, reducing stress on the cooling system. Running the hardware cooler can potentially also increase reliability and service lifetime. They can now consider delaying the purchase of new hardware — reducing the center's "embodied carbon," or the emissions created through the manufacturing of equipment — until the efficiencies gained by using new hardware offset this aspect of the carbon footprint. They're also finding ways to cut down on cooling needs by strategically scheduling jobs to run at night and during the winter months."
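The trade-off in that quote can be put into numbers, since energy is average power multiplied by time. The sketch below uses the 3 percent slowdown from the quote; the 85 percent power level is a purely hypothetical figure chosen for illustration and does not come from the article.

```python
def relative_energy(power_fraction, time_factor=1.03):
    """Energy used under a power cap, relative to the uncapped run.

    power_fraction: average power under the cap as a fraction of uncapped power
                    (hypothetical input, not reported in the quote above).
    time_factor:    task time relative to uncapped; 1.03 is the ~3% slowdown.
    """
    return power_fraction * time_factor


if __name__ == "__main__":
    # Hypothetical cap at 85% of normal power with the 3% longer run time.
    print(round(relative_energy(0.85), 4))
```

Under these assumptions the capped run uses about 12 percent less energy. Any cap that brings average power below roughly 97 percent of normal (1 / 1.03) comes out ahead despite the longer run.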

AI just got 100-fold more energy efficient

"Northwestern University engineers have developed a new nanoelectronic device that can perform accurate machine-learning classification tasks in the most energy-efficient manner yet. Using 100-fold less energy than current technologies...

“Today, most sensors collect data and then send it to the cloud, where the analysis occurs on energy-hungry servers before the results are finally sent back to the user,” said Northwestern’s Mark C. Hersam, the study’s senior author. “This approach is incredibly expensive, consumes significant energy and adds a time delay...”

For current silicon-based technologies to categorize data from large sets like ECGs, it takes more than 100 transistors — each requiring its own energy to run. But Northwestern’s nanoelectronic device can perform the same machine-learning classification with just two devices. By reducing the number of devices, the researchers drastically reduced power consumption and developed a much smaller device that can be integrated into a standard wearable gadget."

Researchers develop state-of-the-art device to make artificial intelligence more energy efficient

"This work is the first experimental demonstration of CRAM, where the data can be processed entirely within the memory array without the need to leave the grid where a computer stores information,"...

According to the new paper's authors, a CRAM-based machine learning inference accelerator is estimated to achieve an improvement on the order of 1,000. Another example showed an energy savings of 2,500 and 1,700 times compared to traditional methods"
