#shor’s algorithm

Bitcoin in a Post Quantum Cryptographic World

Quantum computing, once a theoretical concept, is now an impending reality. The development of quantum computers poses significant threats to the security of many cryptographic systems, including Bitcoin. Cryptographic algorithms currently used in Bitcoin and similar systems may become vulnerable to quantum computing attacks, leading to potential disruptions in the blockchain ecosystem. The question arises: What will be the fate of Bitcoin in a post-quantum cryptographic world?

Bitcoin relies on two cryptographic primitives: the Elliptic Curve Digital Signature Algorithm (ECDSA) and the SHA-256 hash function. ECDSA is used to sign transactions, which proves that the spender controls the coins being spent. SHA-256 is used in the proof-of-work mechanism, which secures the ordering of blocks and thereby prevents double-spending. Both are affected by powerful quantum computers, though not to the same degree.

Quantum Threat to Bitcoin

Quantum computers exploit superposition and entanglement to solve certain problems far faster than classical computers can. Shor's Algorithm, a quantum algorithm for factoring integers and computing discrete logarithms, could break ECDSA by deriving the private key from the public key, something that is computationally infeasible with current computing technology. Grover's Algorithm, another quantum algorithm, offers a quadratic speedup in the search for a nonce, which could weaken the proof-of-work mechanism.

Post-Quantum Cryptography

In a post-quantum world, Bitcoin and similar systems must adapt to maintain their security. This is where post-quantum cryptography (PQC) enters the scene. PQC refers to cryptographic algorithms (usually public-key algorithms) that are thought to be secure against an attack by a quantum computer. These algorithms provide a promising direction for securing Bitcoin and other cryptocurrencies against the quantum threat.

Bitcoin in the Post Quantum World

Adopting a quantum-resistant algorithm is a potential solution to the quantum threat. Bitcoin could potentially transition to a quantum-resistant cryptographic algorithm via a hard fork, a radical change to the blockchain protocol that makes previously invalid blocks/transactions valid (or vice-versa). Such a transition would require a complete consensus in the Bitcoin community, a notoriously difficult achievement given the decentralized nature of the platform.

Moreover, the Bitcoin protocol could be updated with quantum-resistant signature schemes based on lattice-based, code-based, multivariate polynomial, or hash-based cryptography. These cryptosystems are believed to withstand quantum attacks, including those that use Shor's Algorithm.

Additionally, Bitcoin could integrate quantum key distribution (QKD), a secure communication method using a cryptographic protocol involving components of quantum mechanics. It enables two parties to produce a shared random secret key known only to them, which can be used to encrypt and decrypt messages.

Conclusion

In conclusion, the advent of quantum computers does indeed pose a threat to Bitcoin's security. However, with the development of post-quantum cryptography, there are potential solutions to this problem. The future of Bitcoin in a post-quantum world is likely to depend on how quickly and effectively these new cryptographic methods can be implemented. The key is to be prepared and proactive to ensure the longevity of Bitcoin and other cryptocurrencies in the face of this new quantum era.

While the quantum threat may seem daunting, it also presents an opportunity - an opportunity to improve, to innovate, and to adapt. After all, the essence of survival lies in the ability to adapt to change. In the end, Bitcoin, like life, will find a way.

#ko-fi#kofi#geeknik#nostr#art#blog#writing#bitcoin#btc#ecdsa#sha256#shor’s algorithm#quantum computing#superposition#entanglement#quantum mechanics#quantum physics#crypto#cryptocurrency#cryptography#encryption#futurism

Post Quantum Security: Future-Proof Data From Quantum Threats

What becomes of encryption today when quantum computers are capable of cracking it? As we race toward a future driven by the next generation of technology, one urgent question hangs over cybersecurity. The solution is to use Post Quantum Security, a new generation of cryptography capable of resisting the brute power of quantum computing.

In a world where sensitive information — from personal identities to national secrets — is increasingly vulnerable, Post Quantum Security is not just an upgrade, but a necessity. This article explores how to future-proof your data and systems against quantum threats.

Understanding the Quantum Threat Landscape

The Birth of Quantum Computing

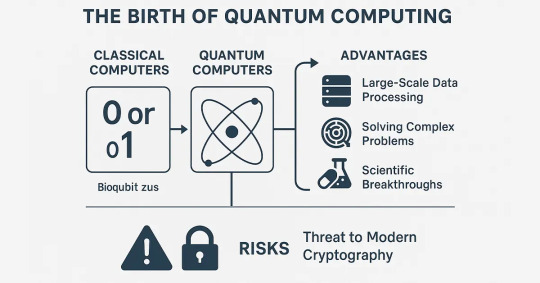

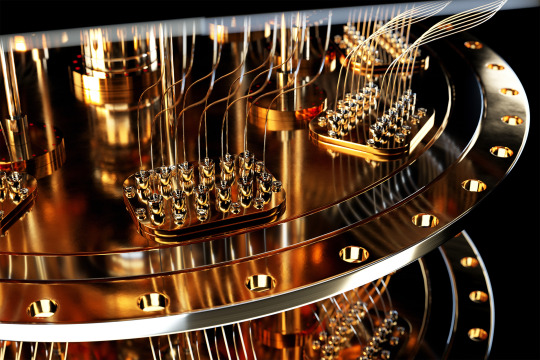

Quantum computing is quickly evolving from scientific theory into a practical technology. Classical computers store information in binary digits that are either 0 or 1, whereas quantum computers operate on qubits, which can occupy several states simultaneously owing to superposition and can be correlated with one another through entanglement. As a result, these machines can perform certain advanced computations exceptionally quickly.

Quantum computing's strength lies in solving certain problems that are too complicated for even the best classical supercomputers. In particular, quantum computers are expected to help with complex logistics tasks, the analysis of molecular structures for new medicines, and financial forecasting.

But with that potential comes a warning: quantum computing puts modern cryptographic systems at serious risk. The same computational power that makes quantum computers a breakthrough for scientific research could also compromise the classical encryption methods that underpin our online security.

Implications for Current Cryptography

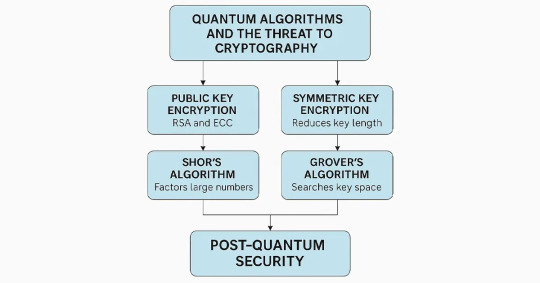

Modern digital infrastructure rests on public-key cryptography, with RSA and ECC among the most widely deployed systems. These systems rely on problems that are hard for conventional computers: factoring huge numbers or solving discrete logarithm problems. These are exactly the kinds of problems that a quantum computer running the right algorithms can solve efficiently.

Shor’s Algorithm, created in 1994, is likely the most famous quantum algorithm that targets RSA and ECC directly. It can factor big numbers exponentially quicker than the best-known classical algorithms. Once sufficiently large, error-corrected quantum computers exist (often called cryptographically relevant quantum computers, a different milestone from “quantum supremacy”), they will be able to decrypt secure communications, financial transactions, and private information that are now thought to be secure.

Another significant algorithm, Grover’s Algorithm, doesn’t break symmetric cryptosystems but lowers their security level significantly. It effectively halves the number of bits of security a symmetric key provides, so 256-bit keys would offer only 128-bit security in the quantum age. Although less devastating than Shor’s impact on asymmetric cryptography, Grover’s Algorithm does call for a reconsideration of key sizes in the dominant symmetric encryption algorithms.

This new threat makes post quantum security solutions an immediate need. Unlike conventional cryptographic practices, post quantum security is about designing and deploying algorithms that remain secure even when quantum computing resources are available. These algorithms are based on hard mathematical problems that quantum computers are not known to solve efficiently.
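The halving can be written out as simple arithmetic. This is a rough sketch that treats Grover’s Algorithm as needing about the square root of the number of candidate keys and ignores constant factors and hardware overheads:

```latex
\[
N = 2^{k}\ \text{candidate keys}
\quad\Longrightarrow\quad
\text{Grover evaluations} \approx \sqrt{N} = 2^{k/2},
\qquad
k = 256 \;\Rightarrow\; 2^{128}.
\]
```

By the same estimate, a 128-bit key would be left with roughly 64 bits of security, which is why longer symmetric keys are usually recommended.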

The Urgency for Transition

Post quantum security is not a theoretical concept; it’s an urgent, real-life issue. Large-scale quantum computers capable of breaking RSA or ECC don’t exist yet, but progress is steady. The collaborative efforts of governments, corporations, and research bodies across the globe to create quantum technology suggest that these machines could soon become real.

Organizations urgently need to secure their digital infrastructure before quantum technology becomes available. Transitioning to post quantum security protocols is difficult and time-consuming: it involves not only swapping cryptographic algorithms but also rewriting software, hardware, and communication protocols. Waiting for fully functional quantum computers may expose systems and data to attacks.

Further, encrypted data stolen today can be stored and decrypted later, once quantum computers develop the capability. This is the “harvest now, decrypt later” attack. As a countermeasure, post quantum security ensures that valuable information is safeguarded both today and in the foreseeable future.

The U.S. National Institute of Standards and Technology (NIST) has been standardizing quantum-resistant algorithms through its Post-Quantum Cryptography Standardization Project, and it published its first finalized standards (FIPS 203, 204, and 205) in August 2024. Organizations can align their security approach with international best practices for post quantum security by using NIST-recommended solutions.

What Is Post Quantum Security?

Post Quantum Security refers to cryptographic methods that are resistant to attacks carried out by entities that can run large quantum computers. This category includes quantum-resistant hash algorithms, encryption algorithms, and digital signature algorithms. These systems are being standardized by efforts such as NIST’s Post-Quantum Cryptography Project.

Embracing Post Quantum Security helps ensure that long-lived sensitive information, such as medical records or financial contracts, remains protected even if it is intercepted today and targeted for decryption in a future quantum world.

ncog.earth incorporates Post Quantum Security into its core blockchain protocol. It provides data security for decades in a post-quantum secure environment.

Core Principles of Post Quantum Security

Below are the fundamental principles that constitute this new field and how they interact with each other to protect digital property during the era of quantum computing.

1. Quantum-Resistant Algorithms

Post quantum security is centered on quantum-resistant algorithms, which are designed to withstand both traditional attacks and attacks performed with a quantum computer. These algorithms don’t depend on the hardness assumptions behind classical schemes like RSA or ECC, which quantum algorithms such as Shor’s can break. Post quantum security instead depends on mathematical problems that are suitable for cryptography and are believed to be hard even for a large quantum computer.

Among the most promising quantum-resistant solutions are:

Lattice-based cryptography: Lattice-based cryptosystems rely on the computational hardness of problems such as the Shortest Vector Problem (SVP) and the Learning With Errors (LWE) problem. They are believed to resist both classical and quantum attacks and are thus well positioned to protect encryption, digital signatures, and key exchanges.

Hash-based cryptography: Built on extensively studied hash functions, this method generates secure digital signatures. Because its security rests only on the one-way and collision-resistance properties of the hash function, it is considered very conservative even against quantum attackers.

Code-based cryptography: Built on error-correcting codes, this method has stood the test of time for more than four decades. It is well established for encryption in a post quantum security scenario, although its public keys tend to be large.

Multivariate polynomial cryptography: The security of these schemes depends on the difficulty of solving systems of multivariate quadratic equations, which is believed to be intractable for both quantum and classical computers.

Deployment and selection of these algorithms are being standardized by bodies such as NIST, which is standardizing a suite of post-quantum cryptographic algorithms to ease global implementation of post quantum security protocols.
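To make the hash-based family listed above more concrete, here is a minimal sketch of a Lamport one-time signature, one of the oldest hash-based constructions. It is an illustration only: the function names are hypothetical, each key pair must sign exactly one message, and standardized hash-based schemes are considerably more elaborate.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    """SHA-256 digest, the only primitive this scheme needs."""
    return hashlib.sha256(data).digest()


def keygen():
    # Private key: 256 pairs of random 32-byte secrets.
    # Public key: the hash of every secret.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(H(a), H(b)) for a, b in sk]
    return sk, pk


def message_bits(message: bytes):
    # The 256 bits of the message digest, most significant bit first.
    digest = H(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(sk, message: bytes):
    # Reveal one secret per digest bit. Reusing sk for a second
    # message would leak more secrets and break security.
    return [sk[i][bit] for i, bit in enumerate(message_bits(message))]


def verify(pk, message: bytes, signature) -> bool:
    bits = message_bits(message)
    return all(H(sig) == pk[i][bit] for i, (bit, sig) in enumerate(zip(bits, signature)))


sk, pk = keygen()
sig = sign(sk, b"pay 1 coin to Alice")
print(verify(pk, b"pay 1 coin to Alice", sig))
```

Forging a signature would require inverting the hash function, which is why schemes of this kind are thought to hold up against quantum attackers.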

2. Forward Secrecy

Forward secrecy is another important property in post quantum security. In the conventional paradigm, an attacker who obtains a long-term private key may be able to decrypt both past and future communications. That is especially risky in a quantum setting, where encrypted data harvested today could be broken by quantum computers in the future.

Forward secrecy addresses this issue by ensuring that even if a long-term key is eventually compromised, past messages cannot be decrypted after the fact. The method is to generate a fresh ephemeral key for each session, discard it afterward, and make sure session keys cannot be derived from one another. Forward secrecy matters not just for private individuals but also for protecting business networks, government files, and financial networks.

Essentially, forward secrecy reconfigures the way we approach long-term data secrecy, particularly considering the danger represented by “harvest now, decrypt later” attacks facilitated by quantum advancements. In post quantum security, it’s a solid pillar of real future-proofing.

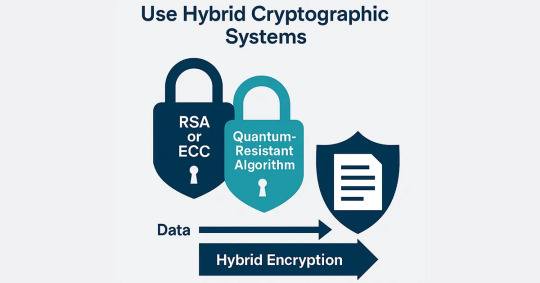

3. Hybrid Cryptographic Models

Because the timeline of quantum progress is uncertain, many organizations are taking a hybrid approach that combines classical and post-quantum cryptography. These models are designed to close the security gap during the transition phase, when traditional algorithms exist side by side with post quantum security algorithms.

In a hybrid method, two sets of algorithms are run in parallel. One set offers backwards compatibility with current infrastructures, and the other offers resistance to future quantum attacks. As a simple example, an end-to-end communication channel could employ RSA in conjunction with a lattice-based encryption scheme. This means that even if the classical part is compromised in the future, the post quantum security layer still protects the underlying data.

This method has some advantages:

Gradual roll-out: Businesses can deploy systems gradually without affecting current services.

Redundancy: Having several levels of encryption offers a backup in case one algorithm is compromised.

Testing ground: Testing the deployment of quantum-resilient tools identifies real-world problems and solutions before mass deployment.

Hybrid deployments are not long-term, but they are essential stepping stones. With increasing trust and confidence in post quantum security technology, hybrid deployments will ultimately make way for fully quantum-resistant architectures.
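The hybrid idea described in this section can be sketched as a key combiner: the session key is derived from both a classical shared secret and a post-quantum shared secret, so an attacker has to recover both. The snippet below is a minimal sketch under stated assumptions. The `combine_secrets` function and the `hybrid-demo` label are hypothetical, random bytes stand in for the outputs of a real classical key exchange and a real lattice-based KEM, and real protocols define their own combiners.

```python
import hashlib
import hmac
import secrets


def combine_secrets(classical_secret: bytes, pq_secret: bytes,
                    context: bytes = b"hybrid-demo") -> bytes:
    """Derive one 32-byte session key from two independent shared secrets."""
    # Length-prefix each input so the concatenation is unambiguous.
    material = b"".join(
        len(s).to_bytes(2, "big") + s for s in (classical_secret, pq_secret)
    )
    # HMAC-SHA256 keyed with a context label acts as a simple KDF here.
    return hmac.new(context, material, hashlib.sha256).digest()


# Stand-ins (assumption): in practice these would come from, for example,
# an ECDH exchange and a lattice-based key encapsulation.
classical_secret = secrets.token_bytes(32)
pq_secret = secrets.token_bytes(32)

session_key = combine_secrets(classical_secret, pq_secret)
print(session_key.hex())
```

Because both secrets feed the derivation, learning only the classical secret (for instance, with a future quantum computer) does not reveal the session key.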

Why Businesses Must Act Now

In the unfolding digital age, while cyberattacks increase in scale and sophistication, quantum computing’s emergence is at once a dazzling technological advancement and an intrinsic cybersecurity challenge. To companies — particularly those holding sensitive customer data or conducting business in highly regulated industries — the time to prepare is not in the future but today. Post-quantum security is no longer an idealistic aspiration but rather a concrete reality. Forward-thinking companies need to take action today to ensure that they will be able to withstand future cryptographic shocks.

Quantum Threats Are Nearer Than They Seem

The power of quantum computers is that they can use superposition and entanglement to outperform classical machines in certain kinds of mathematical operations. The advantages that such technology could offer to drug discovery, logistics, and machine learning are substantial, but the same power also undermines the encryption that protects secure digital communication, financial systems, medical records, and contracts.

Algorithms such as RSA and ECC, the basis of modern public-key cryptography, could be made obsolete by quantum algorithms such as Shor’s. In practical terms, that would mean information currently encrypted under these systems could be decrypted in a practical amount of time if a sufficiently large quantum computer existed. The effect would be disastrous for companies: large-scale data breaches, litigation, and erosion of customer confidence.

That’s why post quantum security must be implemented far ahead of the wholesale arrival of quantum computing. Companies that delay are not only taking a risk — they’re deliberately putting their businesses, data, and reputations in long-term jeopardy.

Data Longevity Brings the Risk into the Present

One of the least considered aspects of quantum risk is data longevity. Whereas real-time data loses its value quickly, some forms of data, such as medical histories, legal papers, government agreements, and intellectual property, remain valuable for years or decades. If a cyberattacker got hold of encrypted information today, they would be unable to decipher it. But once quantum power arrives, the same information could be read. This is the scenario behind attacks referred to as “harvest now, decrypt later”.

On this front, post quantum security plays a twofold role: it safeguards against the threat at hand and also against attempts to decrypt in the future. For businesses tasked with securing data over the long term, such as insurance providers, banks, and law firms, acting pre-emptively is critical. Delaying the implementation of quantum-resistant practices creates a time bomb in which stored encrypted files could be cracked open at any moment.

Consumer confidence is perhaps the most precious asset of the online marketplace. With high-profile breaches becoming the norm, customers are growing more attuned to where their information lives and how it is secured. Companies that act early to publish quantum security standards communicate that they are serious about leading-edge data protection and customer privacy. This method contributes to the creation and preservation of a reliable brand and earns the loyalty of the clients.

Additionally, regulatory landscapes are changing. Data privacy legislation such as the GDPR, HIPAA, and CCPA demands robust data security procedures, and compliance frameworks are likely to follow soon with requirements that address quantum-age attacks. Adopting post quantum security today allows organizations to get ahead of future compliance mandates and avoid the high cost of a rushed migration later.

Smooth Transition Through Strategic Planning

Post quantum migration is not a flip-of-the-switch transition — it requires thoughtful planning, testing, and staged deployment. Businesses must assess their existing cryptographic infrastructure, identify vulnerable endpoints, and decide which quantum-resistant algorithms will best meet their operational needs.

Fortunately, most businesses are not beginning from the ground up. Hybrid crypto designs, which blend conventional and quantum-resistant solutions, permit phased and secure adoption without sacrificing current system performance or compatibility. A phase-by-phase rollout causes as little disruption as possible while securing important information at each stage.

Businesses must also invest in training and awareness programs. Decision-makers, developers, and cybersecurity staff must understand the effects of quantum risk and the pragmatic actions that minimize it. Companies that build post quantum security into their cybersecurity roadmaps now will not lag behind when the quantum era is in full force.

Building Quantum-Resilient Ecosystems

The truth is, cybersecurity is not an isolated practice. A majority of businesses employ third-party suppliers, cloud computing providers, and digital platforms that touch or have access to sensitive information. Maintaining a secure digital environment therefore requires discussing post quantum security with partners and suppliers.

Leading organizations are now starting to assess their supply chains and expect quantum-readiness from their partners. Organizations can minimize systemic vulnerabilities and foster increased collective resilience by establishing post-quantum security as an expectation within ecosystems.

Integrating Post Quantum Security into Your Infrastructure

With continued advances in quantum computing, the danger that it poses to classical cryptographic schemes grows more serious. Companies and enterprises cannot afford to wait. They need a transparent and systematic adoption of post quantum security protocols into existing IT infrastructures so that data confidentiality, integrity, and availability are preserved in the age of quantum computing. This has to be done with a strategic roadmap covering technology and operations transformation across the digital landscape.

For this transition to be made possible, organizations can utilize a multi-step process that will establish quantum resilience without disrupting essential services. Below, we outline the four main steps of incorporating post-quantum security in your infrastructure.

Audit Your Current Cryptographic Inventory

Before making any change, companies must define the scope and use of their current cryptographic assets. Any effective post quantum security project is based on a thorough cryptographic inventory. This process entails determining where and how cryptographic algorithms are used: in SSL/TLS certificates, database encryption, secure email, authentication protocols, digital signatures, API gateways, mobile applications, VPNs, and IoT devices.

This audit must encompass:

Encryption Algorithms Used: Assess if systems are using RSA, ECC, or other vulnerable algorithm-based encryption.

Key Sizes and Expiration Policies: Document existing key sizes and assess how often they are being replaced.

Cryptographic Libraries and APIs: Check for dependencies and assess compatibility with quantum-resistant alternatives.

Certificate Authorities and Issuance Policies: Examine methods of distributing, retaining, and withdrawing digital certificates.

When such data is analyzed, it is possible to identify weak points and establish priorities for strategic transition to post-quantum security. Notably, the process helps organizations to review the data lifecycle and determine long-term sensitive data that needs to be secured today against tomorrow’s quantum decryption power.
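The prioritization described above can be sketched as a small inventory script. Everything in it is illustrative: the `CryptoAsset` fields, the sample systems, the labels, and the 10-year lifetime threshold are assumptions chosen for the example, not recommendations from any standard.

```python
from dataclasses import dataclass

# Public-key algorithms whose underlying problems Shor's algorithm solves.
SHOR_VULNERABLE = {"RSA", "ECDSA", "ECDH", "DH", "DSA"}


@dataclass
class CryptoAsset:
    system: str               # where the algorithm is used
    algorithm: str            # e.g. "RSA", "ECDH", "AES"
    key_bits: int
    data_lifetime_years: int  # how long the protected data stays sensitive


def assess(asset: CryptoAsset) -> str:
    """Return a coarse migration priority (labels are hypothetical)."""
    if asset.algorithm in SHOR_VULNERABLE:
        # Long-lived data is exposed to "harvest now, decrypt later".
        return "migrate-first" if asset.data_lifetime_years >= 10 else "migrate"
    if asset.algorithm == "AES" and asset.key_bits < 256:
        # Grover's algorithm roughly halves effective symmetric key strength.
        return "review-key-size"
    return "ok"


inventory = [
    CryptoAsset("public web TLS", "RSA", 2048, 1),
    CryptoAsset("patient records archive", "ECDH", 256, 25),
    CryptoAsset("database encryption", "AES", 128, 5),
    CryptoAsset("backup encryption", "AES", 256, 10),
]

for asset in inventory:
    print(f"{asset.system}: {assess(asset)}")
```

A real audit would pull this data from certificate stores, configuration files, and dependency manifests rather than a hand-written list.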

Select Quantum-Resistant Algorithms

After identifying your cryptographic footprint, the next step is to select quantum-resistant alternatives. NIST has run a multi-year standardization effort to evaluate and recommend post-quantum cryptography algorithms. These algorithms are designed to resist attack by both classical and quantum computers and include key encapsulation mechanisms and digital signature schemes.

Among the most promising contributions to come out of the NIST project are:

CRYSTALS-Kyber (for public-key encryption and key encapsulation; standardized as ML-KEM in FIPS 203)

CRYSTALS-Dilithium (for digital signatures; standardized as ML-DSA in FIPS 204)

FALCON and SPHINCS+ (signature schemes with differing performance profiles; SPHINCS+ is standardized as SLH-DSA in FIPS 205)

In choosing algorithms, implementers must consider performance, resource efficiency, implementation complexity, and how well the chosen solution fits their infrastructure. For instance, low-power IoT devices may need lightweight algorithms, whereas high-performance servers can handle more demanding computations.

Selection of the best algorithms is immensely important for effective post quantum security integration. Future-proofing should also be a consideration for security teams as quantum-resistant cryptography develops. Modular architecture solutions for cryptography allow for a seamless switch or upgrade of algorithms as new standards become available.

Use Hybrid Cryptographic Systems

It is not necessary to move from classical to quantum-resistant cryptography in a single step. One of the most recommended practices while transitioning to post quantum security is the use of hybrid cryptographic systems. These combine current (classical) cryptographic algorithms with quantum-safe algorithms to provide a multi-layered defense.

In a hybrid system, data is encrypted with both RSA (or ECC) and a quantum-resistant algorithm. This makes the system both backward compatible and future-proof against quantum attacks. Although quantum computers cannot yet break today’s classical encryption, hybrid encryption protects data from future exposure, which is most beneficial for sensitive data with a long shelf life.

Hybrid deployments also enable companies to pilot post-quantum-safe algorithms in real-world use without removing trusted current defenses. Phasing in post quantum security in this manner prevents service disruption, lowers operational risk, and enables incremental testing and hardening.

Key libraries and frameworks are increasingly adding hybrid support. For example:

OpenSSL (with quantum-safe branches)

Cloudflare and Amazon Web Services, which have begun to experiment with post-quantum TLS deployments

Mozilla and Google, pioneering early hybrid deployments in their browsers

These initial hybrid adoption attempts show the growing traction for post-quantum security and offer in-the-wild blueprints for companies willing to make the transition.

Upgrade Key Management Systems (KMS)

The effectiveness of encryption depends on how responsibly its keys are managed. Infrastructures that are quantum-resilient need updates to legacy Key Management Systems (KMS) to accommodate the special needs of post quantum security…

#post quantum#post quantum security#technology#ncog#post quantum blockchain#post quantum encryption#post quantum cryptography#decentralized database#quantum computer#quantum computing#quantum computers#post quantum secure blockchain#rsa#shor algorithm#quantum-resistant cryptography#ECC

Shor’s Algorithm Explained: How Quantum Computing Breaks RSA

Shor’s Algorithm is one of the most celebrated quantum algorithms in theoretical computer science — and for good reason. It provides an exponential speedup for integer factorization, directly threatening the widely used RSA encryption scheme. In this deep technical dive, we’ll explore exactly how Shor’s Algorithm works, why it’s efficient on a quantum computer, and what makes this possible (yes, the Quantum Fourier Transform plays a central role). Read More: https://abhisheyk-gaur.medium.com/shors-algorithm-explained-how-quantum-computing-breaks-rsa-294afa875dc2
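As a rough illustration of the classical half of the algorithm, the sketch below turns the order of a number modulo N into factors of N. The quantum computer's job is the order-finding step; here a brute-force loop stands in for it, which only works for tiny N. The function names are hypothetical and the example is not taken from the linked article.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    This loop stands in for the quantum period-finding step, which is
    the part a quantum computer performs efficiently. Requires gcd(a, n) == 1.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a: int, n: int):
    """Classical post-processing: turn the order of a mod n into factors."""
    common = gcd(a, n)
    if common != 1:
        return common, n // common   # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None                  # odd order: retry with another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                  # a^(r/2) is -1 mod n: retry with another a
    p = gcd(half - 1, n)
    return p, n // p


print(factor_from_order(7, 15))  # (3, 5)
```

For N = 15 and a = 7 the order is 4, so 7^2 mod 15 = 4 and gcd(4 - 1, 15) = 3 yields the factors 3 and 5.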

Quantum computers leverage the principles of **quantum mechanics** (superposition, entanglement, and interference) to solve certain problems exponentially faster than classical computers. While still in early stages, they have transformative potential in multiple fields:

### **1. Cryptography & Cybersecurity**

- **Breaking Encryption**: Shor’s algorithm can factor large numbers quickly, threatening RSA and ECC encryption (forcing a shift to **post-quantum cryptography**).

- **Quantum-Safe Encryption**: Quantum Key Distribution (QKD) enables theoretically unhackable communication (e.g., BB84 protocol).

### **2. Drug Discovery & Material Science**

- **Molecular Simulation**: Modeling quantum interactions in molecules to accelerate drug design (e.g., protein folding, catalyst development).

- **New Materials**: Discovering superconductors, better batteries, or ultra-strong materials.

### **3. Optimization Problems**

- **Logistics & Supply Chains**: Solving complex routing (e.g., traveling salesman problem) for airlines, shipping, or traffic management.

- **Financial Modeling**: Portfolio optimization, risk analysis, and fraud detection.

### **4. Artificial Intelligence & Machine Learning**

- **Quantum Machine Learning (QML)**: Speeding up training for neural networks or solving complex pattern recognition tasks.

- **Faster Data Search**: Grover’s algorithm can search unsorted databases quadratically faster.

### **5. Quantum Chemistry**

- **Precision Chemistry**: Simulating chemical reactions at the quantum level for cleaner energy solutions (e.g., nitrogen fixation, carbon capture).

### **6. Climate & Weather Forecasting**

- **Climate Modeling**: Simulating atmospheric and oceanic systems with higher accuracy.

- **Energy Optimization**: Improving renewable energy grids or fusion reactor designs.

### **7. Quantum Simulations**

- **Fundamental Physics**: Testing theories in high-energy physics (e.g., quark-gluon plasma) or condensed matter systems.

### **8. Financial Services**

- **Option Pricing**: Monte Carlo simulations for derivatives pricing (quantum speedup).

- **Arbitrage Opportunities**: Detecting market inefficiencies faster.

### **9. Aerospace & Engineering**

- **Aerodynamic Design**: Optimizing aircraft shapes or rocket propulsion systems.

- **Quantum Sensors**: Ultra-precise navigation (e.g., GPS-free positioning).

### **10. Breakthroughs in Mathematics**

- **Solving Unsolved Problems**: Faster algorithms for algebraic geometry, topology, or number theory.

#future#cyberpunk aesthetic#futuristic#futuristic city#cyberpunk artist#cyberpunk city#cyberpunkart#concept artist#digital art#digital artist#quantum computers#the future of quantum computers#futuristic theory

I discovered a variant of Shor's algorithm that allows me to collapse all the timelines where I don't successfully crack someone's egg. I'm keeping this one to myself tho

Toward a code-breaking quantum computer

New Post has been published on https://thedigitalinsider.com/toward-a-code-breaking-quantum-computer/

The most recent email you sent was likely encrypted using a tried-and-true method that relies on the idea that even the fastest computer would be unable to efficiently break a gigantic number into factors.

Quantum computers, on the other hand, promise to rapidly crack complex cryptographic systems that a classical computer might never be able to unravel. This promise is based on a quantum factoring algorithm proposed in 1994 by Peter Shor, who is now a professor at MIT.

But while researchers have taken great strides in the last 30 years, scientists have yet to build a quantum computer powerful enough to run Shor’s algorithm.

As some researchers work to build larger quantum computers, others have been trying to improve Shor’s algorithm so it could run on a smaller quantum circuit. About a year ago, New York University computer scientist Oded Regev proposed a major theoretical improvement. His algorithm could run faster, but the circuit would require more memory.

Building off those results, MIT researchers have proposed a best-of-both-worlds approach that combines the speed of Regev’s algorithm with the memory-efficiency of Shor’s. This new algorithm is as fast as Regev’s, requires fewer quantum building blocks known as qubits, and has a higher tolerance to quantum noise, which could make it more feasible to implement in practice.

In the long run, this new algorithm could inform the development of novel encryption methods that can withstand the code-breaking power of quantum computers.

“If large-scale quantum computers ever get built, then factoring is toast and we have to find something else to use for cryptography. But how real is this threat? Can we make quantum factoring practical? Our work could potentially bring us one step closer to a practical implementation,” says Vinod Vaikuntanathan, the Ford Foundation Professor of Engineering, a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL), and senior author of a paper describing the algorithm.

The paper’s lead author is Seyoon Ragavan, a graduate student in the MIT Department of Electrical Engineering and Computer Science. The research will be presented at the 2024 International Cryptology Conference.

Cracking cryptography

To securely transmit messages over the internet, service providers like email clients and messaging apps typically rely on RSA, an encryption scheme invented by MIT researchers Ron Rivest, Adi Shamir, and Leonard Adleman in the 1970s (hence the name “RSA”). The system is based on the idea that factoring a 2,048-bit integer (a number with 617 digits) is too hard for a computer to do in a reasonable amount of time.
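The dependence of RSA on factoring can be made concrete with a toy example. The sketch below uses deliberately tiny primes (61 and 53, chosen only for illustration) and hypothetical helper names; real keys use primes of around 1,024 bits each, for which the trial-division step is hopeless on a classical computer.

```python
from math import gcd

def toy_rsa_keypair(p, q, e=17):
    """Build a toy RSA key from two small primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert gcd(e, phi) == 1, "e must be coprime to (p-1)(q-1)"
    d = pow(e, -1, phi)  # modular inverse; needs Python 3.8+
    return (n, e), d

def factor_by_trial_division(n):
    """Factor n the slow classical way; only feasible for tiny n."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")

# The key owner publishes (n, e) and keeps d secret.
(n, e), d = toy_rsa_keypair(61, 53)
message = 65
ciphertext = pow(message, e, n)

# An attacker who can factor n rebuilds the private exponent from public data.
p, q = factor_by_trial_division(n)
recovered_d = pow(e, -1, (p - 1) * (q - 1))
recovered_message = pow(ciphertext, recovered_d, n)
```

Everything the attacker uses here is public except the factors of n, which is why a fast factoring method would undo the scheme.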

That idea was flipped on its head in 1994 when Shor, then working at Bell Labs, introduced an algorithm which proved that a quantum computer could factor quickly enough to break RSA cryptography.

“That was a turning point. But in 1994, nobody knew how to build a large enough quantum computer. And we’re still pretty far from there. Some people wonder if they will ever be built,” says Vaikuntanathan.

It is estimated that a quantum computer would need about 20 million qubits to run Shor’s algorithm. Right now, the largest quantum computers have around 1,100 qubits.

A quantum computer performs computations using quantum circuits, just like a classical computer uses classical circuits. Each quantum circuit is composed of a series of operations known as quantum gates. These quantum gates utilize qubits, which are the smallest building blocks of a quantum computer, to perform calculations.

But quantum gates introduce noise, so having fewer gates would improve a machine’s performance. Researchers have been striving to enhance Shor’s algorithm so it could be run on a smaller circuit with fewer quantum gates.

That is precisely what Regev did with the circuit he proposed a year ago.

“That was big news because it was the first real improvement to Shor’s circuit from 1994,” Vaikuntanathan says.

The quantum circuit Shor proposed has a size proportional to the square of the size (the number of bits) of the number being factored. That means if one were to factor a 2,048-bit integer, the circuit would need millions of gates.

Regev’s circuit requires significantly fewer quantum gates, but it needs many more qubits to provide enough memory. This presents a new problem.
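The trade-off can be put into rough numbers. The sketch below assumes the commonly quoted asymptotic scalings, with constants and logarithmic factors dropped: about n² gates and n qubits for Shor's circuit, and about n^1.5 gates and n^1.5 qubits for Regev's, where n is the bit length. The function names are hypothetical and the outputs are orders of magnitude, not resource estimates.

```python
def shor_scaling(bits):
    """Rough scaling for Shor's circuit (constants and log factors ignored)."""
    return {"gates": bits ** 2, "qubits": bits}

def regev_scaling(bits):
    """Rough scaling for one run of Regev's circuit (same caveats)."""
    return {"gates": round(bits ** 1.5), "qubits": round(bits ** 1.5)}

bits = 2048
shor = shor_scaling(bits)
regev = regev_scaling(bits)
print(f"Shor : ~{shor['gates']:,} gates, ~{shor['qubits']:,} qubits")
print(f"Regev: ~{regev['gates']:,} gates, ~{regev['qubits']:,} qubits")
```

Under these assumptions the gate count falls by a factor of roughly the square root of n while the qubit count rises by the same factor, which is the memory problem described here.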

“In a sense, some types of qubits are like apples or oranges. If you keep them around, they decay over time. You want to minimize the number of qubits you need to keep around,” explains Vaikuntanathan.

He heard Regev speak about his results at a workshop last August. At the end of his talk, Regev posed a question: Could someone improve his circuit so it needs fewer qubits? Vaikuntanathan and Ragavan took up that question.

Quantum ping-pong

To factor a very large number, a quantum circuit would need to run many times, performing operations that involve computing powers, like 2 to the power of 100.

But computing such large powers is costly and difficult to perform on a quantum computer, since quantum computers can only perform reversible operations. Squaring a number is not a reversible operation, so each time a number is squared, more quantum memory must be added to compute the next square.

The MIT researchers found a clever way to compute exponents using a series of Fibonacci numbers that requires simple multiplication, which is reversible, rather than squaring. Their method needs just two quantum memory units to compute any exponent.

“It is kind of like a ping-pong game, where we start with a number and then bounce back and forth, multiplying between two quantum memory registers,” Vaikuntanathan adds.
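The ping-pong idea can be sketched classically. The code below is only an analogue written for illustration, not the construction from the paper: it keeps two registers, alternately multiplies one into the other, and so walks the exponent up the Fibonacci sequence without ever squaring a register. The function names are hypothetical.

```python
def fibonacci_power(a, k, modulus):
    """Return a**F_k mod modulus (with F_1 = F_2 = 1) by bouncing
    multiplications between two registers; nothing is ever squared."""
    x = y = a % modulus            # x holds a^F_1, y holds a^F_2
    for step in range(k - 2):
        if step % 2 == 0:
            x = (x * y) % modulus  # x now holds the next Fibonacci power
        else:
            y = (y * x) % modulus  # y now holds the next Fibonacci power
    return x if k % 2 == 1 else y

def undo_multiply(x, y, modulus):
    """Reverse one step x <- x*y by multiplying with the inverse of y.
    This works whenever y is coprime to the modulus."""
    return (x * pow(y, -1, modulus)) % modulus

modulus = 1_000_003
result = fibonacci_power(2, 10, modulus)   # F_10 = 55, so this is 2**55 mod modulus
```

Each step only multiplies one register by the other, and `undo_multiply` shows why such a step can be run backwards, which is the property a quantum circuit needs.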

They also tackled the challenge of error correction. The circuits proposed by Shor and Regev require every quantum operation to be correct for their algorithm to work, Vaikuntanathan says. But error-free quantum gates would be infeasible on a real machine.

They overcame this problem using a technique to filter out corrupt results and only process the right ones.

The end-result is a circuit that is significantly more memory-efficient. Plus, their error correction technique would make the algorithm more practical to deploy.

“The authors resolve the two most important bottlenecks in the earlier quantum factoring algorithm. Although still not immediately practical, their work brings quantum factoring algorithms closer to reality,” adds Regev.

In the future, the researchers hope to make their algorithm even more efficient and, someday, use it to test factoring on a real quantum circuit.

“The elephant-in-the-room question after this work is: Does it actually bring us closer to breaking RSA cryptography? That is not clear just yet; these improvements currently only kick in when the integers are much larger than 2,048 bits. Can we push this algorithm and make it more feasible than Shor’s even for 2,048-bit integers?” says Ragavan.

This work is funded by an Akamai Presidential Fellowship, the U.S. Defense Advanced Research Projects Agency, the National Science Foundation, the MIT-IBM Watson AI Lab, a Thornton Family Faculty Research Innovation Fellowship, and a Simons Investigator Award.

#2024#ai#akamai#algorithm#Algorithms#approach#apps#artificial#Artificial Intelligence#author#Building#challenge#classical#code#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computers#computing#conference#cryptography#cybersecurity#defense#Defense Advanced Research Projects Agency (DARPA)#development#efficiency#Electrical Engineering&Computer Science (eecs)#elephant#email

5 notes

Text

Quantum Computing and Data Science: Shaping the Future of Analysis

In the ever-evolving landscape of technology and data-driven decision-making, I find two cutting-edge fields that stand out as potential game-changers: Quantum Computing and Data Science. Each on its own has already transformed industries and research, but when combined, they hold the power to reshape the very fabric of analysis as we know it.

In this blog post, I invite you to join me on an exploration of the convergence of Quantum Computing and Data Science, and together, we'll unravel how this synergy is poised to revolutionize the future of analysis. Buckle up; we're about to embark on a thrilling journey through the quantum realm and the data-driven universe.

Understanding Quantum Computing and Data Science

Before we dive into their convergence, let's first lay the groundwork by understanding each of these fields individually.

A Journey Into the Emerging Field of Quantum Computing

Quantum computing is a field born from the principles of quantum mechanics. At its core lies the qubit, a fundamental unit that can exist in multiple states simultaneously, thanks to the phenomenon known as superposition. This property enables quantum computers to process vast amounts of information in parallel, making them exceptionally well-suited for certain types of calculations.

Data Science: The Art of Extracting Insights

On the other hand, Data Science is all about extracting knowledge and insights from data. It encompasses a wide range of techniques, including data collection, cleaning, analysis, and interpretation. Machine learning and statistical methods are often used to uncover meaningful patterns and predictions.

The Intersection: Where Quantum Meets Data

The fascinating intersection of quantum computing and data science occurs when quantum algorithms are applied to data analysis tasks. This synergy allows us to tackle problems that were once deemed insurmountable due to their complexity or computational demands.

The Promise of Quantum Computing in Data Analysis

Limitations of Classical Computing

Classical computers, with their binary bits, have their limitations when it comes to handling complex data analysis. Many real-world problems require extensive computational power and time, making them unfeasible for classical machines.

Quantum Computing's Revolution

Quantum computing has the potential to rewrite the rules of data analysis. It promises to solve problems previously considered intractable by classical computers. Optimization tasks, cryptography, drug discovery, and simulating quantum systems are just a few examples where quantum computing could have a monumental impact.

Quantum Algorithms in Action

To illustrate the potential of quantum computing in data analysis, consider Grover's search algorithm. Classical search over n unsorted items takes O(n) steps in the worst case, while Grover's algorithm needs only about O(√n) queries, a quadratic speedup. Shor's factoring algorithm, another quantum marvel, threatens to break widely used public-key encryption methods, raising questions about the future of cybersecurity.
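To see the quadratic speedup in action, here is a small classical simulation of Grover's algorithm that tracks the amplitudes directly. It is a sketch for intuition only (a real quantum computer does not store this list), and the function name is hypothetical.

```python
import math

def grover_success_probability(num_items, marked_index):
    """Simulate Grover's search on a list of amplitudes.
    Returns (iterations used, probability of measuring the marked item)."""
    state = [1 / math.sqrt(num_items)] * num_items   # uniform superposition
    iterations = math.floor(math.pi / 4 * math.sqrt(num_items))
    for _ in range(iterations):
        state[marked_index] = -state[marked_index]   # oracle: flip the marked sign
        mean = sum(state) / num_items
        state = [2 * mean - amp for amp in state]    # diffusion: reflect about the mean
    return iterations, state[marked_index] ** 2

iterations, probability = grover_success_probability(64, marked_index=42)
print(iterations, round(probability, 4))
```

With 64 items the loop runs 6 times, against an average of about 32 checks for a classical scan, and the marked item is then measured with probability above 99%.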

Challenges and Real-World Applications

Current Challenges in Quantum Computing

While quantum computing shows great promise, it faces numerous challenges. Quantum bits (qubits) are extremely fragile and susceptible to environmental factors. Error correction and scalability are ongoing research areas, and practical, large-scale quantum computers are not yet a reality.

Real-World Applications Today

Despite these challenges, quantum computing is already making an impact in various fields. It's being used for simulating quantum systems, optimizing supply chains, and enhancing cybersecurity. Companies and research institutions worldwide are racing to harness its potential.

Ongoing Research and Developments

The field of quantum computing is advancing rapidly. Researchers are continuously working on developing more stable and powerful quantum hardware, paving the way for a future where quantum computing becomes an integral part of our analytical toolbox.

The Ethical and Security Considerations

Ethical Implications

The power of quantum computing comes with ethical responsibilities. The potential to break encryption methods and disrupt secure communications raises important ethical questions. Responsible research and development are crucial to ensure that quantum technology is used for the benefit of humanity.

Security Concerns

Quantum computing also brings about security concerns. Current encryption methods, which rely on the difficulty of factoring large numbers, may become obsolete with the advent of powerful quantum computers. This necessitates the development of quantum-safe cryptography to protect sensitive data.

Responsible Use of Quantum Technology

The responsible use of quantum technology is of paramount importance. A global dialogue on ethical guidelines, standards, and regulations is essential to navigate the ethical and security challenges posed by quantum computing.

My Personal Perspective

Personal Interest and Experiences

Now, let's shift the focus to a more personal dimension. I've always been deeply intrigued by both quantum computing and data science. Their potential to reshape the way we analyze data and solve complex problems has been a driving force behind my passion for these fields.

Reflections on the Future

From my perspective, the fusion of quantum computing and data science holds the promise of unlocking previously unattainable insights. It's not just about making predictions; it's about truly understanding the underlying causality of complex systems, something that could change the way we make decisions in a myriad of fields.

Influential Projects and Insights

Throughout my journey, I've encountered inspiring projects and breakthroughs that have fueled my optimism for the future of analysis. The intersection of these fields has led to astonishing discoveries, and I believe we're only scratching the surface.

Future Possibilities and Closing Thoughts

What Lies Ahead

As we wrap up this exploration, it's crucial to contemplate what lies ahead. Quantum computing and data science are on a collision course with destiny, and the possibilities are endless. Achieving quantum supremacy, broader adoption across industries, and the birth of entirely new applications are all within reach.

In summary, the convergence of Quantum Computing and Data Science is an exciting frontier that has the potential to reshape the way we analyze data and solve problems. It brings both immense promise and significant challenges. The key lies in responsible exploration, ethical considerations, and a collective effort to harness these technologies for the betterment of society.

#data visualization#data science#big data#quantum computing#quantum algorithms#education#learning#technology

4 notes

Text

youtube

Quantum Computing vs. Crypto: Is Your Digital Wallet at Risk?

Imagine waking up to news that your Bitcoin wallet could be emptied in seconds. This isn't science fiction; it's the very real and looming threat of quantum computing colliding with blockchain technology. In this essential episode from Tech AI Vision, we dive deep into the critical question: Can crypto truly survive the quantum threat?

We explore how powerful quantum computers, with their qubits, superposition, and entanglement, are rapidly advancing to compromise the complex cryptographic codes that protect your Bitcoin, Ethereum, and other digital assets. You'll learn about key algorithms like Shor's, which targets public key encryption, and Grover's, which affects hash functions – both vital to blockchain security.

Discover the unsettling "Harvest Now, Decrypt Later" threat and what it means for your investments today. We'll also cover the crucial developments in post-quantum cryptography and what proactive steps every crypto owner, investor, and developer needs to take right now to protect their digital future. Quantum computing and cryptocurrency are no longer just future concepts; they are current realities. Don't miss this definitive guide to understanding and preparing for the quantum computing crypto crash. Stay updated, stay safe, and help build a stronger future for blockchain in this new era.

#QuantumComputing#CryptoSecurity#Blockchain#Cybersecurity#Bitcoin#Ethereum#QuantumThreat#PostQuantumCryptography#DigitalAssets#TechAIVision#CryptoNews#FutureOfFinance#Youtube

1 note

Text

Quantum Computing Revolution: Why It Changes Everything #shorts

youtube

With quantum computing growing at an alarming rate, the underpinnings of current-day blockchain security are under an unprecedented threat. In this revealing episode from Tech AI Vision, we delve into the critical question: Can crypto survive quantum computing? Learn how quantum computers, fueled by qubits, superposition, and entanglement, threaten to compromise the cryptographic algorithms defending Bitcoin, Ethereum, and other cryptocurrencies. From Shor's algorithm breaking public key encryption to Grover's algorithm weakening hash functions, the implications are enormous. Discover the "Harvest Now, Decrypt Later" threat, post-quantum cryptography, and proactive measures every crypto owner and developer needs to take now. If you're a blockchain aficionado, crypto investor, or technology futurist, this in-depth dive into the relationship between crypto and quantum computing will change your mindset about digital security. Quantum computing and cryptocurrency are no longer an issue of the future but a reality today. Stay updated, stay safe, and contribute to building a strong future for blockchain in the era of quantum computing. Don't miss this definitive guide to weathering the quantum computing crypto crash.

#quantumcomputing#crypto#blockchainsecurity#postquantumcryptography#shorsalgorithm#groversalgorithm#bitcoin#ethereum#digitalwallet#cryptothreat#futureofcrypto#quantumvscrypto#technews#cybersecurity#decentralizedfinance#Youtube

0 notes

Text

Quantum Communications 2025: New Inflexible Encryption

Quantum communication (QComm) uses quantum physics to transmit data securely and is growing fast. It is expected to reach a critical point by 2025, with the promise of unbreakable encryption and rapid data transport. Its potential for groundbreaking discoveries, along with the technological and infrastructure obstacles that could hinder widespread adoption, is drawing the attention of major technology businesses, governments, and academic institutions.

Knowing Quantum Communication

QComm data is protected by entanglement, superposition, and quantum teleportation. Instead of classical bits, quantum communication uses qubits carried by small particles such as photons, electrons, protons, and ions. A qubit can be in a superposition of 0 and 1; when measured, it “collapses” into 0 or 1.

Due to quantum entanglement, a measurement on one particle is instantly correlated with the state of its partner regardless of distance, the effect at the heart of the Einstein-Podolsky-Rosen (EPR) paradox. Quantum state teleportation uses entangled qubits to transfer a quantum state, but it also needs an ordinary classical channel, so no information travels faster than light.

The most important QComm features are PQC and QKD.

QKD secures data and key exchange. Quantum Key Distribution sends qubits, together with classical bits, via satellites or fiber-optic connections. When Alice and Bob exchange qubits to produce a shared random bit string, an eavesdropper (Eve) who measures or intercepts the qubits introduces errors that Alice and Bob can detect. This detection is possible because the no-cloning theorem and the Heisenberg uncertainty principle make it impossible to copy or measure an unknown quantum state without disturbing it.

If the Quantum Bit Error Rate (QBER) exceeds a threshold, Alice and Bob suspect tampering, discard the key, and start a new key exchange. BB84 and E91 are popular QKD protocols. In principle QKD offers stronger security guarantees than standard encryption, but side-channel attacks and source authentication remain open problems. QKD is regarded as quantum communication's “first generation”.
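The way eavesdropping shows up in the QBER can be sketched with a purely classical simulation of BB84. This is an idealised model written for illustration (no channel noise, a simple intercept-and-resend attacker, a hypothetical function name), not a description of any deployed system.

```python
import random

def bb84_qber(num_qubits, eavesdrop, seed=0):
    """Simulate BB84 sifting and return the error rate on the sifted key."""
    rng = random.Random(seed)
    kept = errors = 0
    for _ in range(num_qubits):
        alice_bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        bit, basis = alice_bit, alice_basis      # the state travelling on the channel
        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != basis:
                bit = rng.randint(0, 1)          # wrong basis: Eve's result is random
            basis = eve_basis                    # Eve resends in her own basis
        bob_basis = rng.randint(0, 1)
        bob_bit = bit if bob_basis == basis else rng.randint(0, 1)
        if bob_basis == alice_basis:             # sifting: keep matching bases only
            kept += 1
            errors += bob_bit != alice_bit
    return errors / kept

print("QBER without Eve:", bb84_qber(20000, eavesdrop=False))
print("QBER with Eve   :", round(bb84_qber(20000, eavesdrop=True), 3))
```

In this model an undisturbed channel gives an error rate of zero, while the intercept-and-resend attack pushes it to roughly 25%, far above any sensible threshold.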

Post-Quantum Cryptography (PQC): These quantum-resistant cryptography (QRC) techniques protect against large-scale quantum computers. Run on a sufficiently large quantum computer, Peter Shor's 1994 algorithm could break most public-key encryption protocols, including Rivest-Shamir-Adleman (RSA), far faster than any known classical method. PQC algorithms are based on mathematical problems that quantum computers are expected to struggle with, and lattice cryptography is a common foundation. QKD is hardware-dependent and a long-term solution, while Post-Quantum Cryptography is software-based and can be deployed in the short term.

Problems and Obstacles

Despite these advances, quantum communication still faces severe barriers to popular application.

Scalability and Distance: Photon loss in fiber-optic cables limits transmission distance, although quantum repeaters are being developed to extend the range. Building a global quantum network requires expensive infrastructure.

Cost and Commercial Viability: QComm systems require complex and expensive hardware like photon detectors, dilution refrigerators, quantum repeaters, and quantum memories, most of which are currently in development with unpredictable supply chains. Over $1 billion USD is expected to be spent developing a quantum internet over 10 to 15 years. Quantum solutions are expensive, so organisations are hesitant to utilise them until costs drop and benefits become clear.

Quantum Decoherence and Error Correction: External disturbances degrade the information stored in quantum particles, a phenomenon called quantum decoherence. Despite ongoing quantum error correction research, effective error correction is still a long-term goal.

Governments struggle to reconcile innovation and national security as quantum encryption standards change frequently. Standards gaps hamper PQC-QKD interoperability. US, China, and EU export bans on quantum technology components for military application hamper international cooperation and information exchange.

Supply Chain Constraints: QComm hardware development requires rare and exotic raw materials like semiconductors, rare earth metals, and critical minerals, which are scarce and processed mostly in China.

Skill Shortfall: The worldwide shortage of quantum technology skills, to which India is no exception, calls for specialised curricula and qualified professors. Developing a qualified workforce is challenging when career options are unclear.

Although PQC is a nearer-term measure, its methods, many of which rely on current lattice-based encryption, may be broken over time. PQC algorithms also need more processing power, which may slow performance and make side-channel attacks harder to stop.

Future Hopes

Researchers predict quantum network development to accelerate through government, IT, and academic cooperation. To improve communication reliability, quantum teleportation, quantum error correction, and AI-driven quantum system optimisation are expected. Governments may also adopt clearer laws and international agreements to regulate cross-border quantum communication and encryption.

Healthcare, banking, and defence will use quantum communication as the technology improves and becomes cheaper. Quantum communication will require consistent investment, strategic planning, transnational collaboration, and shrewd geopolitical manoeuvring to overcome current obstacles and avert supply chain concerns. The coming years will show whether it can clear these obstacles and reach its full potential.

#Quantumcommunication#Qubits#phenomenon#QuantumKeyDistribution#quantummechanics#QuantumBit#QuantumResistantCryptographic#PostQuantumCryptography#quantumnetwork#QComm#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

0 notes

Text

DevOps with Quantum-Safe Security: Building Future-Ready Software Pipelines

As the digital age advances, so does the potential threat from emerging technologies. One of the most pressing concerns for future-ready businesses is the advent of quantum computing—and its potential to break traditional cryptographic algorithms. While quantum computing promises immense computational power, it also poses a risk to today’s data security. In response, companies are beginning to integrate quantum-safe security into their DevOps pipelines to secure systems proactively. This marks the evolution of DevOps from not only being fast and scalable but also resilient to future cybersecurity threats.

Combining DevOps consulting services with quantum-safe security offers businesses a robust framework for secure, continuous delivery, even in the face of tomorrow’s threats.

Understanding Quantum-Safe Security in DevOps

Quantum-safe security—also called post-quantum cryptography—refers to cryptographic algorithms that are resistant to quantum attacks. Algorithms like RSA and ECC, which are widely used today, can be compromised by quantum computers running Shor’s algorithm. In a DevOps environment, these cryptographic schemes are embedded in source code, APIs, certificates, and deployment scripts.

DevOps teams, therefore, must begin replacing or upgrading these algorithms using quantum-safe alternatives like lattice-based cryptography. Transitioning these practices into CI/CD pipelines through DevOps managed services helps ensure that every update, build, and release is encrypted with future-proof security.

Why DevOps Needs to Adapt Now

Although widespread quantum computing is still a few years away, the data we encrypt today may be vulnerable in the future. Hackers can adopt a “store now, decrypt later” strategy, capturing encrypted data today to decode it with quantum machines tomorrow.

Integrating DevOps consulting and managed cloud services ensures that security updates, key rotations, and quantum-safe encryption become part of automated pipelines—minimizing manual intervention and reducing the time to fix vulnerabilities.

Example: Consider a global bank managing millions of transactions daily across cloud environments. By partnering with a DevOps provider focused on quantum-safe practices, they automated the implementation of lattice-based encryption into their microservices architecture, ensuring customer data remains protected even against future computational threats.

Key Areas Where DevOps Supports Quantum-Resistant Architectures

1. CI/CD Integration: Quantum-safe encryption algorithms are being integrated into build and release pipelines. Automated testing ensures compatibility and performance benchmarks are met before deployment.

2. Secure Configuration Management: Secrets management tools like HashiCorp Vault are being enhanced with quantum-safe cryptography. DevOps teams use automated playbooks to rotate and deploy keys securely across multiple environments.

3. Monitoring and Compliance: DevOps pipelines now include continuous compliance checks to verify that software artifacts meet evolving cybersecurity standards, such as those set by NIST for post-quantum cryptography.
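As one illustration of the kind of compliance check described in the list above, the sketch below scans configuration or source text for names of algorithms that Shor's algorithm would break. The pattern list, function name, and sample configuration are all hypothetical; a real pipeline step would need a broader inventory and a way to handle false positives.

```python
import re

# Hypothetical, non-exhaustive list of quantum-vulnerable public-key identifiers.
QUANTUM_VULNERABLE = re.compile(
    r"\b(RSA|ECDSA|ECDH|DSA|secp256k1|prime256v1)\b", re.IGNORECASE
)

def find_vulnerable_algorithms(text):
    """Return (line number, matched name) pairs for every flagged identifier."""
    findings = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for match in QUANTUM_VULNERABLE.finditer(line):
            findings.append((line_number, match.group(0)))
    return findings

sample_config = """tls_key_type: RSA-2048
signature: ecdsa-sha256
kem: ML-KEM-768
"""

for line_number, name in find_vulnerable_algorithms(sample_config):
    print(f"line {line_number}: {name} is not quantum-safe")
```

A check like this could fail a build when new findings appear, which keeps the migration visible without blocking work on code that has already been reviewed.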

Expert Quotes to Keep in Mind

As noted by Michelle Thompson, a global expert on quantum computing risks:

“Quantum computing changes the rules of security. The time to rethink DevOps pipelines is now.”

Similarly, Bruce Schneier, a well-known cryptographer, states:

“Don’t wait for quantum computers to be a threat—design for them now, and your systems will be ready later.”

These insights underscore the importance of being proactive rather than reactive when building modern DevOps practices.

The Role of DevOps Services in Quantum Security Transformation

Businesses that adopt devops services and solutions now can future-proof their systems while maintaining development agility. Whether it's updating APIs, securing endpoints, or automating key rotations, a DevOps strategy infused with quantum-safe security is crucial.

Such transformation isn’t possible without the right guidance. Leveraging DevOps consulting services ensures enterprises are not only following best practices today but also preparing for the cryptographic standards of tomorrow.

Conclusion: Securing the Future of Continuous Delivery

Quantum-safe security is no longer optional. It is a necessity for enterprises that want to secure their data pipelines in an increasingly complex world. By integrating DevOps practices with quantum-resilient technologies, organizations can develop, deploy, and monitor applications with full confidence that they are protected—now and in the future.

Please visit Cloudastra Technology if you would like to read more content or explore our services. Cloudastra offers future-ready DevOps consulting services designed to keep your systems secure and efficient in the face of tomorrow’s quantum threats.

0 notes

Text

Quantum Computing in Cybersecurity

Quantum computing is emerging as a transformative force in the field of cybersecurity. Unlike classical computers, which rely on bits, quantum computers use qubits—units that can exist in multiple states simultaneously due to the principles of superposition and entanglement. These unique capabilities allow quantum computers to perform certain calculations exponentially faster than traditional machines, posing both unprecedented opportunities and serious threats to modern cryptographic systems.

One of the most significant impacts of quantum computing lies in its potential to break widely used encryption methods. Algorithms like RSA, ECC (Elliptic Curve Cryptography), and DSA underpin much of the world’s digital security. These algorithms are based on problems that are hard for classical computers to solve, such as integer factorization and discrete logarithms. However, Shor’s algorithm, a quantum algorithm, can solve these problems efficiently. Once large-scale quantum computers become available, they could decrypt sensitive information secured under these systems, rendering current public-key cryptography obsolete.
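The link between Shor's algorithm and factoring can be shown classically. Shor's quantum step finds the order r of a number a modulo N (the smallest r with a^r ≡ 1 mod N); the rest is ordinary arithmetic. In the sketch below the order is found by brute force, which is exactly the part a quantum computer would speed up, and the function names are hypothetical.

```python
from math import gcd

def multiplicative_order(a, n):
    """Smallest r > 0 with a**r % n == 1 (brute force; assumes gcd(a, n) == 1)."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n using the order of a; returns None for an unlucky a."""
    common = gcd(a, n)
    if common != 1:
        return common, n // common       # a already shares a factor with n
    r = multiplicative_order(a, n)
    if r % 2 == 1:
        return None                      # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                      # trivial square root: pick another a
    p = gcd(half - 1, n)
    return p, n // p

print(multiplicative_order(7, 15))       # order of 7 modulo 15
print(factor_from_order(15, 7))          # factors recovered from that order
```

For N = 15 and a = 7 the order is 4, and the greatest common divisor of 7² − 1 and 15 gives the factor 3. The discrete logarithm problems behind ECC and DSA fall to a closely related quantum period-finding routine.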

To address this challenge, researchers are rapidly developing post-quantum cryptography (PQC)—encryption algorithms that are believed to be resistant to quantum attacks. The U.S. National Institute of Standards and Technology (NIST) is actively leading efforts to standardize such quantum-resistant algorithms. Examples include lattice-based cryptography, hash-based signatures, and code-based encryption, which are designed to withstand attacks even from quantum computers. These new methods aim to protect data not only today but also in the future, anticipating a world where quantum technology is mainstream.

On the other hand, quantum computing also presents new tools for enhancing cybersecurity. Quantum Key Distribution (QKD) is a secure communication method that uses quantum mechanics to encrypt and transmit keys in such a way that any attempt to eavesdrop would be immediately detectable. QKD enables the creation of unbreakable encryption under ideal conditions, making it a promising technology for ultra-secure communication between government, military, and financial institutions.

However, the integration of quantum computing into cybersecurity is not without challenges. The practical deployment of quantum-safe protocols requires extensive changes to existing infrastructure. Moreover, current quantum computers are still in their early stages—known as Noisy Intermediate-Scale Quantum (NISQ) devices—which limits their immediate threat potential. Nonetheless, the concept of "harvest now, decrypt later" is a real concern, where adversaries collect encrypted data today with the intention of decrypting it using quantum systems in the future.

In conclusion, quantum computing is reshaping the landscape of cybersecurity, offering both disruptive threats and novel defenses. Preparing for this future involves a dual strategy: developing and deploying quantum-resistant cryptographic standards while exploring secure quantum-enhanced technologies like QKD. Governments, organizations, and researchers must collaborate to ensure a smooth and secure transition into the quantum era, safeguarding data in a world where computing power is no longer limited by classical constraints.

#QuantumComputing #Cybersecurity #EmergingTech#QuantumThreat #ShorsAlgorithm #EncryptionBreakthrough#PostQuantumCryptography #QuantumSafe #NISTStandards#QuantumKeyDistribution #QKD #UnbreakableEncryption#QuantumRisks #NISQ #DataSecurity#QuantumFuture #SecureTransition #QuantumCybersecurity The Scientist Global Awards

0 notes

Text

Post Quantum Algorithm: Securing the Future of Cryptography

Can current encryption survive the quantum future? With the arrival of quantum computing, classical encryption techniques face a growing threat of compromise. Post quantum algorithms have emerged as a vital solution. Designed to shield data from quantum attacks, this technology aims to keep digital information secure for decades to come. Unlike classic cryptographic schemes, these algorithms are built to resist even sophisticated quantum attacks. But how do they work, and why are they important? In this article, we’ll explore how post-quantum algorithms are reshaping the cybersecurity landscape and what that means for the future of encryption.

What is a Post Quantum Algorithm?

A post quantum algorithm is a cryptographic technique designed to protect sensitive information from the processing power of quantum computers. Classic public-key schemes such as RSA or ECC can be cracked by a quantum computer running algorithms like Shor’s. Post quantum algorithms instead rely on maths problems that are believed to be hard for both quantum and classical systems. Quantum computers use qubits to process information in new ways, and that is what endangers current encryption.

To counter this, post-quantum solutions employ methods such as lattice-based encryption, code-based cryptography, and hash-based signatures. These are long-term security frameworks intended to keep data safe even once large quantum computers are available to attack cryptographic algorithms.

Why We Need Post Quantum Algorithms Today

Although quantum computers capable of breaking today’s encryption are not yet available, early adoption of post-quantum algorithms is essential. Encryption has to protect not only today’s data but tomorrow’s too. An attacker can capture encrypted data today and decrypt it once quantum technology matures.

Adopting a post quantum algorithm today helps keep information protected over the long term. Government agencies, banks, and medical providers are already transitioning to quantum-resistant systems.

Types of Post Quantum Algorithms

There are several kinds of post quantum algorithms, each with its own strengths:

Lattice-based Cryptography: Lattice-based cryptography is widely seen as the most promising family. Its security rests on lattice problems that no known algorithm, even on a highly capable quantum computer, can solve quickly. Lattice-based schemes support both digital signatures and encryption, and they are relatively fast. Because they are so versatile, they are leading candidates for standardization.

Hash-based Cryptography: Hash-based cryptography is used primarily for digital signatures. These schemes inherit their security from conventional cryptographic hash functions and are safe against known quantum attacks. They are very secure and mature, but they provide signatures rather than encryption, and their large signatures and slow performance limit general use. They are best suited to tasks such as signing firmware and software updates.

Multivariate Polynomial Cryptography: Multivariate polynomial cryptography is built on systems of polynomial equations in many variables. These schemes offer compact signatures and fast verification, which is advantageous in resource-limited settings such as embedded systems.

Code-based Cryptography: Code-based cryptography has been studied for decades and uses error-correcting codes to encrypt and protect information. It provides very good security against quantum attacks and is particularly suitable for encryption applications. Although code-based cryptosystems have large public keys, their long record of resisting attack makes them a popular choice for protecting information in the long term.
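To make the hash-based idea concrete, here is a minimal Python sketch of a Lamport one-time signature, one of the oldest hash-based constructions. It is an illustration only, not one of the schemes being standardized. The function names (`keygen`, `sign`, `verify`) are made up for this example, and each key pair may safely sign only one message.

```python
import hashlib
import secrets


def keygen(bits=256):
    """Generate a Lamport key pair for signing one SHA-256 digest."""
    # Private key: two random 32-byte values for every bit of the digest.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(bits)]
    # Public key: the SHA-256 hash of each private value.
    pk = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in sk]
    return sk, pk


def digest_bits(message):
    """Return the 256 bits of SHA-256(message), most significant bit first."""
    digest = hashlib.sha256(message).digest()
    return [(byte >> (7 - i)) & 1 for byte in digest for i in range(8)]


def sign(sk, message):
    """Reveal one private value per digest bit (value 0 or value 1)."""
    return [pair[bit] for pair, bit in zip(sk, digest_bits(message))]


def verify(pk, message, signature):
    """Hash each revealed value and compare it with the matching public hash."""
    bits = digest_bits(message)
    if len(signature) != len(bits):
        return False
    return all(
        hashlib.sha256(value).digest() == pair[bit]
        for value, pair, bit in zip(signature, pk, bits)
    )
```

Security here depends only on the hash function, which is why such schemes are considered safe against known quantum attacks. The cost is visible too: one signature is 256 values of 32 bytes each, about 8 KB, which matches the size concern raised above.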

How Post-Quantum Algorithms Work

A post quantum algorithm is built on mathematical problems that are hard for quantum computers to solve, so it resists both classical and quantum attacks. One example, lattice-based cryptography, uses vectors in high-dimensional space. The underlying lattice problems remain very hard to solve even for highly powerful quantum processors.

All of the proposed algorithms are tested extensively for performance, key size, and resistance to known quantum attacks. The National Institute of Standards and Technology (NIST) coordinates the worldwide effort to test and standardize the algorithms. The resulting standards will replace current systems that are vulnerable and offer long-term information security in a world where quantum computers are readily available and extremely powerful.
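As a rough illustration of the lattice idea, the sketch below encrypts a single bit with a toy learning-with-errors (LWE) scheme in the style of Regev. Everything in it is an assumption made for demonstration: the parameters `N`, `M`, and `Q` are far too small to be secure, the function names are invented, and Python’s `random` module is not a cryptographic random source. Real schemes use much larger, carefully chosen parameters.

```python
import random

# Toy parameters (assumed for illustration only, not secure).
N, M, Q = 16, 32, 3329  # secret length, number of samples, modulus


def keygen(rng):
    """Return (secret, public_key) where public_key = (A, b = A*s + e mod Q)."""
    s = [rng.randrange(Q) for _ in range(N)]
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    e = [rng.choice((-1, 0, 1)) for _ in range(M)]  # small noise terms
    b = [(sum(a * x for a, x in zip(row, s)) + err) % Q for row, err in zip(A, e)]
    return s, (A, b)


def encrypt(pk, bit, rng):
    """Add up a random subset of samples and shift by Q/2 to encode a 1."""
    A, b = pk
    r = [rng.randrange(2) for _ in range(M)]  # random 0/1 selection vector
    u = [sum(r[i] * A[i][j] for i in range(M)) % Q for j in range(N)]
    v = (sum(r[i] * b[i] for i in range(M)) + bit * (Q // 2)) % Q
    return u, v


def decrypt(s, ciphertext):
    """Remove the secret's contribution; what remains is noise plus the bit."""
    u, v = ciphertext
    d = (v - sum(x * y for x, y in zip(u, s))) % Q
    # d lies near 0 for bit 0 and near Q/2 for bit 1 (total noise is at most M).
    return 1 if Q // 4 < d < 3 * Q // 4 else 0
```

Without the secret vector `s`, an attacker sees only noisy linear equations, and recovering `s` from them is the kind of problem believed to be hard for both classical and quantum computers. With `s`, the noise is small enough to be rounded away during decryption.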

Real-World Applications of Post-Quantum Algorithms

The use of post quantum algorithms is not just theory. Many organizations have already started using them:

Finance: Organisations in the finance sector employ quantum-resistant cryptography to protect confidential financial transactions and customer data. Financial information must stay confidential for decades, and quantum-safe encryption protects it from future attacks. Banks and payment processors are piloting post-quantum approaches and building them into core security solutions.

Healthcare: The integrity and confidentiality of medical records form the basis of the healthcare business. Healthcare organizations and hospitals are adopting quantum-secure encryption to protect patient data that must stay private for decades. Health information is retained for many years, and post-quantum methods help ensure that it will not be exposed by future computing breakthroughs.

Government: Government departments manage national security information that may be useful for many decades. Therefore, they are leading the adoption of post-quantum technologies, primarily for secure communication and sensitive documents. Military, intelligence, and diplomatic operations are investing in quantum-resistant technologies to prepare for the future.

Cloud Services: Cloud service providers are deploying quantum-resistant encryption. Because cloud infrastructure handles everything from document storage to software services, providers have to protect data both in transit and at rest. Cloud giants are experimenting with hybrid approaches that combine classical and post-quantum encryption, so data stays protected even if one of the two is broken.
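One common way to build such a hybrid is to run a classical key exchange and a post-quantum one side by side, then derive the session key from both shared secrets. The sketch below shows only that final combining step, using HKDF (RFC 5869) built from Python’s standard `hmac` module. The function names and the `info` label are hypothetical, and the two input secrets stand in for the outputs of real key exchanges, which are not implemented here.

```python
import hashlib
import hmac


def hkdf_sha256(ikm, salt, info, length=32):
    """HKDF (RFC 5869) with SHA-256: extract a key, then expand it."""
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract step
    okm, block, counter = b"", b"", 1
    while len(okm) < length:  # expand step
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def hybrid_session_key(classical_secret, pq_secret):
    """Derive one session key from both secrets.

    classical_secret: placeholder for, e.g., an ECDH shared secret.
    pq_secret: placeholder for, e.g., a post-quantum KEM shared secret.
    Both are assumed to have fixed lengths, so plain concatenation is unambiguous.
    """
    return hkdf_sha256(
        classical_secret + pq_secret,
        salt=b"\x00" * 32,          # all-zero salt, the RFC 5869 default
        info=b"hybrid-demo-v1",     # hypothetical context label
    )
```

Because the derived key depends on both inputs, an attacker has to break the classical exchange and the post-quantum one to recover it.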

Post Quantum Security in the Modern World

Security means more than encrypting information; it means anticipating threats. That is where post quantum security comes in. With billions of devices connected and ever more data being exchanged, organizations need to think ahead. A single quantum attack could expose millions of records. By adopting a post-quantum algorithm today, companies build resilience for tomorrow.

Transitioning to Post Quantum Algorithms: Challenges Ahead

The transition to a post quantum algorithm presents a series of challenges for contemporary organizations. The majority of today’s digital architectures depend on quantum-vulnerable encryption algorithms such as RSA or ECC. Replacing those systems with quantum-resistant technology requires a lot of time, capital, and extensive testing. Post-quantum techniques tend to have larger keys and greater computational overhead, which can affect performance, particularly on older hardware.

To manage this transition, companies have to start with proper risk analysis. They should identify the systems that handle sensitive or long-lived data and upgrade those first. A clear migration timeline helps the process run smoothly. By starting early and adopting hybrid cryptography, companies can migrate their systems gradually and stay ahead of quantum attacks without sacrificing security.
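One simple way to rank systems for migration is a rule of thumb often attributed to Michele Mosca: data is at risk if the years it must stay confidential plus the years needed to migrate exceed the years until a capable quantum computer exists. The sketch below applies that rule. The `System` class, the function names, and any figures you feed it are hypothetical and would come from an organization’s own estimates.

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    shelf_life_years: float   # how long the data must stay confidential
    migration_years: float    # estimated time to move to quantum-resistant crypto


def exposure(system, years_to_quantum):
    """Years of exposure; a positive value means the data is at risk."""
    return system.shelf_life_years + system.migration_years - years_to_quantum


def prioritize(systems, years_to_quantum):
    """Return the at-risk systems, most exposed first."""
    at_risk = [s for s in systems if exposure(s, years_to_quantum) > 0]
    return sorted(at_risk, key=lambda s: exposure(s, years_to_quantum), reverse=True)
```

For example, with an assumed 12 years until a capable quantum computer, patient records that must stay private for 25 years and take 3 years to migrate are already exposed by 16 years, while a short-lived session cache does not make the list at all.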

Governments and Global Efforts Toward Quantum Safety

Governments across the globe are actively working to counter the risks of quantum computing. They recognize that tomorrow’s encryption must be quantum-resistant. Organizations such as the National Institute of Standards and Technology (NIST) spearhead global initiatives, notably the Post-Quantum Cryptography Standardization Process. Its aim is to identify the best post quantum algorithms for worldwide use.

In parallel, nations fund academic research and work with private technology companies to develop quantum-resistant digital infrastructure. For these advances to be effective, global cooperation is necessary. Governments need to collaborate in developing transparent policies, raising awareness, and providing education on quantum-safe practices. These steps will determine the future of secure communications and data protection.

Understanding Post Quantum Encryption Technologies

Post quantum encryption uses quantum-resistant methods to encrypt digital information. It works together with a post quantum algorithm, which protects encrypted information so that no one, not even an attacker with a quantum computer, can access it. Whether the data is email, financial records, or government documents, encryption is an essential part of data protection. Companies embracing quantum-safe encryption today will be tomorrow’s leaders.

The Evolution of Cryptography with Post Quantum Cryptography

Post quantum cryptography is the future of secure communication. Traditional cryptographic systems based on problems like factorization will no longer be secure once large quantum computers exist. Post quantum algorithm…

#post quantum cryptography#post quantum encryption#post quantum blockchain#post quantum secure blockchain#ncog#post quantum#post quantum securityu#tumblr


Text

Quantum Threat to Bitcoin? Google Flags Encryption Risk

Key Notes

Google researcher reveals quantum computers could break Bitcoin encryption sooner than expected.

Bitcoin’s elliptic curve cryptography (ECC) is vulnerable to quantum attacks like Shor’s algorithm.

Current quantum hardware isn’t powerful enough yet but progress is rapidly accelerating.

Craig Gidney, a Quantum AI researcher at Google, warned that Bitcoin’s encryption faces growing risks…


Text

The Quantum Shield: A Tale of Tomorrow's Cybersecurity

In the heart of New Delhi, under the glass towers of the tech district, Dr. Anika Sharma stood in the dimly lit control room of QNu Labs, India’s pioneering quantum secure communication company. The humming of servers filled the air, but Anika’s focus was on a glowing terminal displaying streams of quantum-generated random numbers. These weren’t just numbers; they were the future of global security, a barrier against the looming threat of quantum computers.

It was 2025, and the world was on the cusp of a cryptographic revolution. Anika, a physicist turned cybersecurity visionary, had spent years researching quantum key distribution (QKD), a technology that promised unbreakable encryption by harnessing the strange properties of quantum mechanics. Her company’s two products, the Tropos Quantum Random Number Generator (QRNG) and the Armos QKD system, were designed to protect critical data from quantum attacks. State-sponsored hackers were already intercepting encrypted data, biding their time until quantum computers could crack it open like a digital Pandora’s box.

Anika’s journey began a decade ago, during her PhD, when Edward Snowden’s 2013 leaks exposed the Tempora project, a British operation that siphoned 20 petabytes of data daily from global optical fibers. The revelation that the NSA had reportedly paid the security company RSA $10 million to make a weakened random number generator the default in its products shocked her. If even the most trusted systems could be compromised, what hope was there for global privacy? Then came a bombshell in 2020: “The Intelligence Coup of the Century,” a report revealing how the CIA and West German intelligence had secretly decrypted communications from over 60 countries for decades. These betrayals fueled Anika’s mission to build a quantum shield.

Her reverie was interrupted by a ping from her tablet. It was a message from General Vikram Singh, a high-ranking official in India’s Ministry of Defence. QNu Labs had caught the ministry’s attention after a standout presentation at Defense Expo 2020, where Anika and her team, alongside Bharat Electronics Limited, demonstrated a quantum channel for real-time key distribution. The general’s message was urgent: “Dr. Sharma, we need to discuss QKD deployment for our optical fiber networks.”

The next morning, Anika arrived at a heavily guarded military base, her laptop loaded with simulations of QKD-secured networks. General Singh, a grizzled veteran with strategic foresight, greeted her. “The world’s changing, Doctor,” he said, his voice grave. “Quantum computers are no longer science fiction. Shor’s algorithm could break our encryption in minutes once they scale up. Our defense secrets, our communications, everything is at risk.”

Anika nodded, pulling up a diagram of a secure optical fiber ring network powered by Armos. “This is where QKD comes in,” she explained. “It uses quantum properties of light to create and share encryption keys between two parties. Let’s call them Alice and Bob. If an eavesdropper, Eve, tries to intercept the key, the quantum state collapses, alerting us instantly. The keys are then used with one-time pad encryption or AES for unbreakable security.”

The general leaned forward. “And what about the Tropos system?”

“Tropos generates truly random keys using a quantum source,” Anika replied. “Unlike classical random number generators, which can be predictable, Tropos leverages quantum uncertainty for perfect entropy. It’s the backbone of secure key generation.”

General Singh’s eyes narrowed. “We’ve got optical fiber backhauls connecting our bases. They’re critical for tactical communications. Can your tech secure them dynamically?”

“Absolutely,” Anika said. “Armos enables real-time key distribution and management, replacing outdated manual systems. It’s crypto-agile, meaning it integrates with existing infrastructure without disruption. We can secure your networks, from cantonments to battlefield communications, against quantum threats.”

As they spoke, Anika’s mind flashed to a darker scenario: a future Y2Q event, the “Years to Quantum” moment when quantum computers render today’s encryption obsolete. She envisioned hackers decrypting troves of stolen data—national defense secrets, financial records, personal communications—unleashing a “crypto apocalypse.” But with QKD, that future could be averted.

The meeting ended with a handshake and a promise to pilot QNu’s technology in a secure OFC ring network. As Anika left the base, she felt a surge of purpose. The world was racing toward a quantum showdown, but her team was ready. QNu Labs wasn’t just building technology—they were forging a quantum shield to protect humanity’s digital future.

Source : Finance express LINK : Dark Side of Quantum Computers A Lurking Threat to National Security, Quantum Cryptography Blog - QNu Labs

@sruniversity


Text

Quantum Cyber Threats Are Coming—Is Your Encryption Ready?

Are You Ready for the Quantum Cyber Threat?

Quantum computing is poised to revolutionize industries, but it also poses one of the biggest threats to cybersecurity. Once quantum systems mature, today’s encryption methods—RSA, ECC, and others—could be cracked in hours using algorithms like Shor’s.

Cybercriminals are already harvesting encrypted data in anticipation of a quantum future ("Harvest Now, Decrypt Later"), placing sensitive government, financial, and healthcare data at risk.