#magnetic fusion energy computer center

Text

A new and unique fusion reactor comes together due to global research collaboration

Like atoms coming together to release their power, fusion researchers worldwide are joining forces to solve the world's energy crisis. Harnessing the power of fusing plasma as a reliable energy source for the power grid is no easy task, requiring global contributions.

The Princeton Plasma Physics Laboratory (PPPL) is leading several efforts on this front, including collaborating on the design and development of a new fusion device at the University of Seville in Spain. The SMall Aspect Ratio Tokamak (SMART) strongly benefits from PPPL computer codes as well as the Lab's expertise in magnetics and sensor systems.

"The SMART project is a great example of us all working together to solve the challenges presented by fusion and teaching the next generation what we have already learned," said Jack Berkery, PPPL's deputy director of research for the National Spherical Torus Experiment-Upgrade (NSTX-U) and principal investigator for the PPPL collaboration with SMART. "We have to all do this together or it's not going to happen."

Manuel Garcia-Munoz and Eleonora Viezzer, both professors at the Department of Atomic, Molecular and Nuclear Physics of the University of Seville as well as co-leaders of the Plasma Science and Fusion Technology Lab and the SMART tokamak project, said PPPL seemed like the ideal partner for their first tokamak experiment. The next step was deciding what kind of tokamak they should build.

"It needed to be one that a university could afford but also one that could make a unique contribution to the fusion landscape at the university scale," said Garcia-Munoz. "The idea was to put together technologies that were already established: a spherical tokamak and negative triangularity, making SMART the first of its kind. It turns out it was a fantastic idea."

SMART should offer easy-to-manage fusion plasma

Triangularity refers to the shape of the plasma relative to the tokamak. The cross section of the plasma in a tokamak is typically shaped like the capital letter D. When the straight part of the D faces the center of the tokamak, it is said to have positive triangularity. When the curved part of the plasma faces the center, the plasma has negative triangularity.
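As a rough illustration of the sign convention, the sketch below traces a D-shaped cross section with the Miller parameterization, a common analytic description of tokamak plasma boundaries, and then measures triangularity as the inward shift of the plasma's highest point divided by the minor radius. The function names and the geometry values (major radius, minor radius, elongation) are illustrative assumptions, not SMART's design parameters.

```python
import math


def miller_boundary(major_radius, minor_radius, elongation, triangularity, n=3600):
    """Return (R, Z) points on a Miller-parameterized plasma boundary.

    R(theta) = R0 + a * cos(theta + asin(delta) * sin(theta))
    Z(theta) = kappa * a * sin(theta)
    """
    points = []
    for i in range(n):
        theta = 2.0 * math.pi * i / n
        r = major_radius + minor_radius * math.cos(
            theta + math.asin(triangularity) * math.sin(theta)
        )
        z = elongation * minor_radius * math.sin(theta)
        points.append((r, z))
    return points


def upper_triangularity(points):
    """Measure triangularity from boundary points: (R_geo - R_top) / a."""
    r_max = max(r for r, _ in points)
    r_min = min(r for r, _ in points)
    r_geo = 0.5 * (r_max + r_min)   # geometric center of the cross section
    a = 0.5 * (r_max - r_min)       # minor radius
    r_top, _ = max(points, key=lambda p: p[1])  # highest point of the plasma
    return (r_geo - r_top) / a


# Hypothetical geometry, chosen only to show the sign flip.
positive = miller_boundary(0.4, 0.25, 1.8, 0.4)
negative = miller_boundary(0.4, 0.25, 1.8, -0.4)
print("positive shape:", round(upper_triangularity(positive), 3))
print("negative shape:", round(upper_triangularity(negative), 3))
```

With positive triangularity the top of the plasma sits closer to the center of the machine than the midpoint of the cross section does; with negative triangularity it sits farther out, which mirrors the D.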

Garcia-Munoz said negative triangularity should offer enhanced performance because it can suppress instabilities that expel particles and energy from the plasma, preventing damage to the tokamak wall.

"It's a potential game changer with attractive fusion performance and power handling for future compact fusion reactors," he said. "Negative triangularity has a lower level of fluctuations inside the plasma, but it also has a larger divertor area to distribute the heat exhaust."

The spherical shape of SMART should make it better at confining the plasma than it would be if it were doughnut shaped. The shape matters significantly in terms of plasma confinement. That is why NSTX-U, PPPL's main fusion experiment, isn't squat like some other tokamaks: the rounder shape makes it easier to confine the plasma. SMART will be the first spherical tokamak to fully explore the potential of a particular plasma shape known as negative triangularity.

PPPL's expertise in computer codes proves essential

PPPL has a long history of leadership in spherical tokamak research. The University of Seville fusion team first contacted PPPL to implement SMART in TRANSP, simulation software developed and maintained by the Lab. Dozens of facilities use TRANSP, including private ventures such as Tokamak Energy in England.

"PPPL is a world leader in many, many areas, including fusion simulation; TRANSP is a great example of their success," said Garcia-Munoz.

Mario Podesta, formerly of PPPL, was integral to helping the University of Seville determine the configuration of the neutral beams used for heating the plasma. That work culminated in a paper published in the journal Plasma Physics and Controlled Fusion.

Stanley Kaye, director of research for NSTX-U, is now working with Diego Jose Cruz-Zabala, EUROfusion Bernard Bigot Researcher Fellow, from the SMART team, using TRANSP "to determine the shaping coil currents necessary for attaining their design plasma shapes of positive triangularity and negative triangularity at different phases of operation." The first phase, Kaye said, will involve a "very basic" plasma. Phase two will have neutral beams heating the plasma.

Separately, other computer codes were used for assessing the stability of future SMART plasmas by Berkery, former undergraduate intern John Labbate, who is now a grad student at Columbia University, and former University of Seville graduate student Jesús Domínguez-Palacios, who has now moved to an American company. A new paper in Nuclear Fusion by Domínguez-Palacios discusses this work.

Designing diagnostics for the long haul

The collaboration between SMART and PPPL also extended into one of the Lab's core areas of expertise: diagnostics, which are devices with sensors to assess the plasma. Several such diagnostics are being designed by PPPL researchers. PPPL physicists Manjit Kaur and Ahmed Diallo, together with Viezzer, are leading the design of SMART's Thomson scattering diagnostic, for example.

This diagnostic will precisely measure the plasma electron temperature and density during fusion reactions, as detailed in a new paper published in the journal Review of Scientific Instruments. These measurements will be complemented with ion temperature, rotation and density measurements provided by a suite of diagnostics known as charge exchange recombination spectroscopy, developed by Alfonso Rodriguez-Gonzalez, a graduate student at the University of Seville, together with Cruz-Zabala and Viezzer.

"These diagnostics can run for decades, so when we design the system, we keep that in mind," said Kaur. When developing the designs, it was important the diagnostic can handle temperature ranges SMART might achieve in the next few decades and not just the initial, low values, she said.

Kaur designed the Thomson scattering diagnostic from the start of the project, selecting and procuring its different subparts, including the laser she felt best fits the job. She was thrilled to see how well the laser tests went when Gonzalo Jimenez and Viezzer sent her photos from Spain. The test involved setting up the laser on a bench and shooting it at a piece of special parchment that the researchers call "burn paper." If the laser is designed just right, the burn marks will be circular with relatively smooth edges.

"The initial laser test results were just gorgeous," she said. "Now, we eagerly await receiving other parts to get the diagnostic up and running."

James Clark, a PPPL research engineer whose doctoral thesis focused on Thomson scattering systems, was later brought on to work with Kaur. "I've been designing the laser path and related optics," Clark explained. In addition to working on the engineering side of the project, Clark has also helped with logistics, deciding how and when things should be delivered, installed and calibrated.

PPPL's Head of Advanced Projects Luis Delgado-Aparicio, together with Marie Skłodowska-Curie fellow Joaquin Galdon-Quiroga and University of Seville graduate student Jesus Salas-Barcenas, are leading efforts to add two other kinds of diagnostics to SMART: a multi-energy, soft X-ray (ME-SXR) diagnostic and spectrometers.

The ME-SXR will also measure the plasma's electron temperature and density but using a different approach than the Thomson scattering system. The ME-SXR will use sets of small electronic components called diodes to measure X-rays. Combined, the Thomson scattering diagnostic and the ME-SXR will comprehensively analyze the plasma's electron temperature and density.

By looking at the different frequencies of light inside the tokamak, the spectrometers can provide information about impurities in the plasma, such as oxygen, carbon and nitrogen. "We are using off-the-shelf spectrometers and designing some tools to put them in the machine, incorporating some fiber optics," Delgado-Aparicio said. Another new paper published in the Review of Scientific Instruments discusses the design of this diagnostic.

PPPL Research Physicist Stefano Munaretto worked on the magnetic diagnostic system for SMART, with the field work led by University of Seville graduate student Fernando Puentes del Pozo.
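Magnetic sensors of this kind are commonly pickup coils, which rely on Faraday's law: a changing magnetic field B through a coil of N turns and area A induces a voltage V = -N A dB/dt, so the field is recovered by integrating the measured voltage over time. The sketch below illustrates that data-analysis step on a synthetic signal. The coil parameters, sampling interval, test waveform and function names are all hypothetical; nothing here is taken from the SMART design.

```python
import math


def coil_voltage(field_samples, dt, turns, area):
    """Voltage induced in a pickup coil, V = -N * A * dB/dt (finite differences)."""
    return [
        -turns * area * (field_samples[i + 1] - field_samples[i]) / dt
        for i in range(len(field_samples) - 1)
    ]


def recover_field(voltages, dt, turns, area, initial_field=0.0):
    """Integrate the coil voltage in time to reconstruct the magnetic field."""
    field = [initial_field]
    for v in voltages:
        field.append(field[-1] - v * dt / (turns * area))
    return field


# Hypothetical coil and a synthetic 50 Hz, 0.1 T test field.
DT = 1e-5      # sampling interval in seconds
TURNS = 100    # number of windings
AREA = 1e-4    # coil cross section in square meters

true_field = [0.1 * math.sin(2.0 * math.pi * 50.0 * i * DT) for i in range(2001)]
voltages = coil_voltage(true_field, DT, TURNS, AREA)
recovered = recover_field(voltages, DT, TURNS, AREA, initial_field=true_field[0])

max_error = max(abs(a - b) for a, b in zip(true_field, recovered))
print("largest reconstruction error (T):", max_error)
```

A real signal chain also has to deal with amplifier offsets and integrator drift, which this idealized example leaves out.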

"The diagnostic itself is pretty simple," said Munaretto. "It's just a wire wound around something. Most of the work involves optimizing the sensor's geometry by getting its size, shape and length correct, selecting where it should be located and all the signal conditioning and data analysis involved after that." The design of SMART's magnetics is detailed in a new paper also published in Review of Scientific Instruments.

Munaretto said working on SMART has been very fulfilling, with much of the team working on the magnetic diagnostics made up of young students with little previous experience in the field. "They are eager to learn, and they work a lot. I definitely see a bright future for them."

Delgado-Aparicio agreed. "I enjoyed quite a lot working with Manuel Garcia-Munoz, Eleonora Viezzer and all of the other very seasoned scientists and professors at the University of Seville, but what I enjoyed most was working with the very vibrant pool of students they have there," he said.

"They are brilliant and have helped me quite a bit in understanding the challenges that we have and how to move forward toward obtaining first plasmas."

Researchers at the University of Seville have already run a test in the tokamak, displaying the pink glow of argon when heated with microwaves. This process helps prepare the tokamak's inner walls for a far denser plasma contained at a higher pressure. While technically, that pink glow is from a plasma, it's at such a low pressure that the researchers don't consider it their real first tokamak plasma. Garcia-Munoz says that will likely happen in the fall of 2024.

IMAGE: The SMall Aspect Ratio Tokamak (SMART) is being built at the University of Seville in Spain, in collaboration with Princeton Plasma Physics Laboratory. Credit: University of Seville


Photo

I wanted to start design work on Hugo’s ship but realized I needed to know what a standard Lamp ship’s insides looked like, so here is that! More info under the cut.

Lamp Ships all tend to have some common room types, found in everything from the smallest scout ships to the kilometer-long mining ships. Even in Hivemind ships these spaces are more colourful than one would think, due to how even non-sentient Lamps respond to colour.

Servers and Brain Room:

Lamp Ships use the same brain cores as the much smaller units, but linked together into huge networks that run through a massive biological/mechanical system in the ship’s center. This system is what allows Lamp ships to jump into the between as well as send FTL messages through this Subspace (Spectrum Lamps refer to this as “sing”).

They also have what Lamps call “dumb computers” or “thinking rocks”: large silicon-based computers that act as servers and archives. These run some of the more mundane tasks around the ship. Surprisingly, these computers are programmed in binary and function much like human digital computers.

Both are heavily liquid-cooled (see the blue piping) and are some of the most shielded parts of a ship.

Kitchen and Greenroom:

Lamps have fairly simple digestive tracts, so ships often have some basic cooking systems on board. This is also one of the rooms where the difference between Spectrum and Hivemind ships is the largest: Spectrum Lamps care more about how good or interesting a meal is, while a Hive ship just wants the max nutrition per energy spent on the food. Most kitchens are equipped with a food printer, a simple oven and a microwave, as well as freeze driers and cold storage.

The Greenroom pictured is a rack system often used for fast-growing fruits and “filter” plants.

Hallway:

Lamp ships have circular hallways that run between the ship’s thick hull and the interior living spaces. Most have a mix of small workstations, access points and storage. As pictured here, yellow is commonly used as the colour for doors and “floors”. Ships tend to use acceleration to produce “gravity”, but if they are using rotation or planetary forces to produce a “down”, whatever wall or ceiling has become the floor will become yellow.

Fusion Engine room B:

Lamp Ships have at least three engines. The main anti-matter reactor, which is used in Jumps and for the main thruster, is pictured as the purple lantern-like structure at the very front of the ship.

Powering the rest of the ship’s systems, and serving as backup for the main reactor, are two fusion engines. These rooms tend to be very purple, a common warning colour, due to the high power and magnetic fields in these areas.

“MedBay”:

In Hivemind ships these rooms are not so much true med-bays but are instead used to regrow the flesh bodies of Lamp units who have “died” or to make new limbs. In Spectrum ships these rooms have much more medical equipment, so medicine is not a choice between nothing at all and melting off your current body and probably dying.

Engineering:

Depending on the size of the ship, the Engineering floors can have everything from laser cutters and metal 3D printers to looms and skin printers, producing anything a Lamp ship may need to repair itself in space. Most also have a small “Replicator”: a system that can use the Lamps’ massive energy systems to move around and change atoms into new chemicals. These are normally used to produce rarer elements from simple ones, or harder-to-make chemicals. Even with two fusion engines, these systems use a lot of power and need deep knowledge of chemistry to use outside of the presets.

#lamp alien #Lamp aliens #Pilot Light #speculative biology #bioship edition #alien #worldbuilding #space ships #Lamp Ships #watercolour #environment #very tiny thumbnails #like these are maybe the size of a match box


Text

The tenured engineers of 2024

New Post has been published on https://thedigitalinsider.com/the-tenured-engineers-of-2024/

In 2024, MIT granted tenure to 11 faculty members across the School of Engineering. This year’s tenured engineers hold appointments in the departments of Aeronautics and Astronautics, Chemical Engineering, Civil and Environmental Engineering, Electrical Engineering and Computer Science (EECS, which reports jointly to the School of Engineering and MIT Schwarzman College of Computing), Mechanical Engineering, and Nuclear Science and Engineering.

“My heartfelt congratulations to the 11 engineering faculty members on receiving tenure. These faculty have already made a lasting impact in the School of Engineering through both advances in their field and their dedication as educators and mentors,” says Anantha Chandrakasan, chief innovation and strategy officer, dean of engineering, and the Vannevar Bush Professor of Electrical Engineering and Computer Science.

This year’s newly tenured engineering faculty include:

Adam Belay, associate professor of computer science and principal investigator at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), works on operating systems, runtime systems, and distributed systems. He is particularly interested in developing practical methods for microsecond-scale computing and cloud resource management, with many applications relating to performance and computing efficiency within large data centers.

Irmgard Bischofberger, Class of 1942 Career Development Professor and associate professor of mechanical engineering, is an expert in the mechanisms of pattern formation and instabilities in complex fluids. Her research reveals new insights into classical understanding of instabilities and has wide relevance to physical systems and industrial processes. Further, she is dedicated to science communication and generates exquisite visualizations of complex fluidic phenomena from her research.

Matteo Bucci serves as the Esther and Harold E. Edgerton Associate Professor of nuclear science and engineering. His research group studies two-phase heat transfer mechanisms in nuclear reactors and space systems, develops high-resolution, nonintrusive diagnostics and surface engineering techniques to enhance two-phase heat transfer, and creates machine-learning tools to accelerate data analysis and conduct autonomous heat transfer experiments.

Luca Carlone, the Boeing Career Development Professor in Aeronautics and Astronautics, is head of the Sensing, Perception, Autonomy, and Robot Kinetics Laboratory and principal investigator at the Laboratory for Information and Decision Systems. His research focuses on the cutting edge of robotics and autonomous systems research, with a particular interest in designing certifiable perception algorithms for high-integrity autonomous systems and developing algorithms and systems for real-time 3D scene understanding on mobile robotics platforms operating in the real world.

Manya Ghobadi, associate professor of computer science and principal investigator at CSAIL, builds efficient network infrastructures that optimize resource use, energy consumption, and availability of large-scale systems. She is a leading expert in networks with reconfigurable physical layers, and many of the ideas she has helped develop are part of real-world systems.

Zachary (Zach) Hartwig serves as the Robert N. Noyce Career Development Professor in the Department of Nuclear Science and Engineering, with a co-appointment at MIT’s Plasma Science and Fusion Center. His current research focuses on the development of high-field superconducting magnet technologies for fusion energy and accelerated irradiation methods for fusion materials using ion beams. He is a co-founder of Commonwealth Fusion Systems, a private company commercializing fusion energy.

Admir Masic, associate professor of civil and environmental engineering, focuses on bridging the gap between ancient wisdom and modern material technologies. He applies his expertise in the fields of in situ and operando spectroscopic techniques to develop sustainable materials for construction, energy, and the environment.

Stefanie Mueller is the TIBCO Career Development Professor in the Department of EECS. Mueller has a joint appointment in the Department of Mechanical Engineering and is a principal investigator at CSAIL. She develops novel hardware and software systems that give objects new capabilities. Among other applications, her lab creates health sensing devices and electronic sensing devices for curved surfaces; embedded sensors; fabrication techniques that enable objects to be trackable via invisible marker; and objects with reprogrammable and interactive appearances.

Koroush Shirvan serves as the Atlantic Richfield Career Development Professor in Energy Studies in the Department of Nuclear Science and Engineering. He specializes in the development and assessment of advanced nuclear reactor technology. He is currently focused on accelerating innovations in nuclear fuels, reactor design, and small modular reactors to improve the sustainability of current and next-generation power plants. His approach combines multiple scales, physics and disciplines to realize innovative solutions in the highly regulated nuclear energy sector.

Julian Shun, associate professor of computer science and principal investigator at CSAIL, focuses on the theory and practice of parallel and high-performance computing. He is interested in designing algorithms that are efficient in both theory and practice, as well as high-level frameworks that make it easier for programmers to write efficient parallel code. His research has focused on designing solutions for graphs, spatial data, and dynamic problems.

Zachary P. Smith, Robert N. Noyce Career Development Professor and associate professor of chemical engineering, focuses on the molecular-level design, synthesis, and characterization of polymers and inorganic materials for applications in membrane-based separations, which is a promising aid for the energy industry and the environment, from dissolving olefins found in plastics or rubber, to capturing smokestack carbon dioxide emissions. He is a co-founder and chief scientist of Osmoses, a startup aiming to commercialize membrane technology for industrial gas separations.


Text

Tests show high-temperature superconducting magnets are ready for fusion

New Post has been published on https://sunalei.org/news/tests-show-high-temperature-superconducting-magnets-are-ready-for-fusion/

In the predawn hours of Sept. 5, 2021, engineers achieved a major milestone in the labs of MIT’s Plasma Science and Fusion Center (PSFC), when a new type of magnet, made from high-temperature superconducting material, achieved a world-record magnetic field strength of 20 tesla for a large-scale magnet. That’s the intensity needed to build a fusion power plant that is expected to produce a net output of power and potentially usher in an era of virtually limitless power production.

The test was immediately declared a success, having met all the criteria established for the design of the new fusion device, dubbed SPARC, for which the magnets are the key enabling technology. Champagne corks popped as the weary team of experimenters, who had labored long and hard to make the achievement possible, celebrated their accomplishment.

But that was far from the end of the process. Over the ensuing months, the team tore apart and inspected the components of the magnet, pored over and analyzed the data from hundreds of instruments that recorded details of the tests, and performed two additional test runs on the same magnet, ultimately pushing it to its breaking point in order to learn the details of any possible failure modes.

All of this work has now culminated in a detailed report by researchers at PSFC and MIT spinout company Commonwealth Fusion Systems (CFS), published in a collection of six peer-reviewed papers in a special edition of the March issue of IEEE Transactions on Applied Superconductivity. Together, the papers describe the design and fabrication of the magnet and the diagnostic equipment needed to evaluate its performance, as well as the lessons learned from the process. Overall, the team found, the predictions and computer modeling were spot-on, verifying that the magnet’s unique design elements could serve as the foundation for a fusion power plant.

Enabling practical fusion power

The successful test of the magnet, says Hitachi America Professor of Engineering Dennis Whyte, who recently stepped down as director of the PSFC, was “the most important thing, in my opinion, in the last 30 years of fusion research.”

Before the Sept. 5 demonstration, the best-available superconducting magnets were powerful enough to potentially achieve fusion energy — but only at sizes and costs that could never be practical or economically viable. Then, when the tests showed the practicality of such a strong magnet at a greatly reduced size, “overnight, it basically changed the cost per watt of a fusion reactor by a factor of almost 40 in one day,” Whyte says.

“Now fusion has a chance,” Whyte adds. Tokamaks, the most widely used design for experimental fusion devices, “have a chance, in my opinion, of being economical because you’ve got a quantum change in your ability, with the known confinement physics rules, about being able to greatly reduce the size and the cost of objects that would make fusion possible.”

The comprehensive data and analysis from the PSFC’s magnet test, as detailed in the six new papers, has demonstrated that plans for a new generation of fusion devices — the one designed by MIT and CFS, as well as similar designs by other commercial fusion companies — are built on a solid foundation in science.

The superconducting breakthrough

Fusion, the process of combining light atoms to form heavier ones, powers the sun and stars, but harnessing that process on Earth has proved to be a daunting challenge, with decades of hard work and many billions of dollars spent on experimental devices. The long-sought, but never yet achieved, goal is to build a fusion power plant that produces more energy than it consumes. Such a power plant could produce electricity without emitting greenhouse gases during operation, while generating very little radioactive waste. Fusion’s fuel, a form of hydrogen that can be derived from seawater, is virtually limitless.

But to make it work requires compressing the fuel at extraordinarily high temperatures and pressures, and since no known material could withstand such temperatures, the fuel must be held in place by extremely powerful magnetic fields. Producing such strong fields requires superconducting magnets, but all previous fusion magnets have been made with a superconducting material that requires frigid temperatures of about 4 degrees above absolute zero (4 kelvins, or about -269 degrees Celsius). In the last few years, a newer material nicknamed REBCO, for rare-earth barium copper oxide, was added to fusion magnets, and allows them to operate at 20 kelvins, a temperature that, despite being only 16 kelvins warmer, brings significant advantages in terms of material properties and practical engineering.

Taking advantage of this new higher-temperature superconducting material was not just a matter of substituting it in existing magnet designs. Instead, “it was a rework from the ground up of almost all the principles that you use to build superconducting magnets,” Whyte says. The new REBCO material is “extraordinarily different than the previous generation of superconductors. You’re not just going to adapt and replace, you’re actually going to innovate from the ground up.” The new papers in Transactions on Applied Superconductivity describe the details of that redesign process, now that patent protection is in place.

A key innovation: no insulation

One of the dramatic innovations, which had many others in the field skeptical of its chances of success, was the elimination of insulation around the thin, flat ribbons of superconducting tape that formed the magnet. Like virtually all electrical wires, conventional superconducting magnets are fully protected by insulating material to prevent short-circuits between the wires. But in the new magnet, the tape was left completely bare; the engineers relied on REBCO’s much greater conductivity to keep the current flowing through the material.

“When we started this project, in let’s say 2018, the technology of using high-temperature superconductors to build large-scale high-field magnets was in its infancy,” says Zach Hartwig, the Robert N. Noyce Career Development Professor in the Department of Nuclear Science and Engineering. Hartwig has a co-appointment at the PSFC and is the head of its engineering group, which led the magnet development project. “The state of the art was small benchtop experiments, not really representative of what it takes to build a full-size thing. Our magnet development project started at benchtop scale and ended up at full scale in a short amount of time,” he adds, noting that the team built a 20,000-pound magnet that produced a steady, even magnetic field of just over 20 tesla — far beyond any such field ever produced at large scale.

“The standard way to build these magnets is you would wind the conductor and you have insulation between the windings, and you need insulation to deal with the high voltages that are generated during off-normal events such as a shutdown.” Eliminating the layers of insulation, he says, “has the advantage of being a low-voltage system. It greatly simplifies the fabrication processes and schedule.” It also leaves more room for other elements, such as more cooling or more structure for strength.

The magnet assembly is a slightly smaller-scale version of the ones that will form the donut-shaped chamber of the SPARC fusion device now being built by CFS in Devens, Massachusetts. It consists of 16 plates, called pancakes, each bearing a spiral winding of the superconducting tape on one side and cooling channels for helium gas on the other.

But the no-insulation design was considered risky, and a lot was riding on the test program. “This was the first magnet at any sufficient scale that really probed what is involved in designing and building and testing a magnet with this so-called no-insulation no-twist technology,” Hartwig says. “It was very much a surprise to the community when we announced that it was a no-insulation coil.”

Pushing to the limit … and beyond

The initial test, described in previous papers, proved that the design and manufacturing process not only worked but was highly stable — something that some researchers had doubted. The next two test runs, also performed in late 2021, then pushed the device to the limit by deliberately creating unstable conditions, including a complete shutoff of incoming power that can lead to a catastrophic overheating. Known as quenching, this is considered a worst-case scenario for the operation of such magnets, with the potential to destroy the equipment.

Part of the mission of the test program, Hartwig says, was “to actually go off and intentionally quench a full-scale magnet, so that we can get the critical data at the right scale and the right conditions to advance the science, to validate the design codes, and then to take the magnet apart and see what went wrong, why did it go wrong, and how do we take the next iteration toward fixing that. … It was a very successful test.”

That final test, which ended with the melting of one corner of one of the 16 pancakes, produced a wealth of new information, Hartwig says. For one thing, they had been using several different computational models to design the magnet and predict various aspects of its performance, and for the most part, the models agreed in their overall predictions and were well-validated by the series of tests and real-world measurements. But in predicting the effect of the quench, the model predictions diverged, so it was necessary to get the experimental data to evaluate the models’ validity.

“The highest-fidelity models that we had predicted almost exactly how the magnet would warm up, to what degree it would warm up as it started to quench, and where the resulting damage to the magnet would be,” he says. As described in detail in one of the new reports, “That test actually told us exactly the physics that was going on, and it told us which models were useful going forward and which to leave by the wayside because they’re not right.”

Whyte says, “Basically we did the worst thing possible to a coil, on purpose, after we had tested all other aspects of the coil performance. And we found that most of the coil survived with no damage,” while one isolated area sustained some melting. “It’s like a few percent of the volume of the coil that got damaged.” And that led to revisions in the design that are expected to prevent such damage in the actual fusion device magnets, even under the most extreme conditions.

Hartwig emphasizes that a major reason the team was able to accomplish such a radical new record-setting magnet design, and get it right the very first time and on a breakneck schedule, was thanks to the deep level of knowledge, expertise, and equipment accumulated over decades of operation of the Alcator C-Mod tokamak, the Francis Bitter Magnet Laboratory, and other work carried out at PSFC. “This goes to the heart of the institutional capabilities of a place like this,” he says. “We had the capability, the infrastructure, and the space and the people to do these things under one roof.”

The collaboration with CFS was also key, he says, with MIT and CFS combining the most powerful aspects of an academic institution and private company to do things together that neither could have done on their own. “For example, one of the major contributions from CFS was leveraging the power of a private company to establish and scale up a supply chain at an unprecedented level and timeline for the most critical material in the project: 300 kilometers (186 miles) of high-temperature superconductor, which was procured with rigorous quality control in under a year, and integrated on schedule into the magnet.”

The integration of the two teams, those from MIT and those from CFS, also was crucial to the success, he says. “We thought of ourselves as one team, and that made it possible to do what we did.”

0 notes

Text

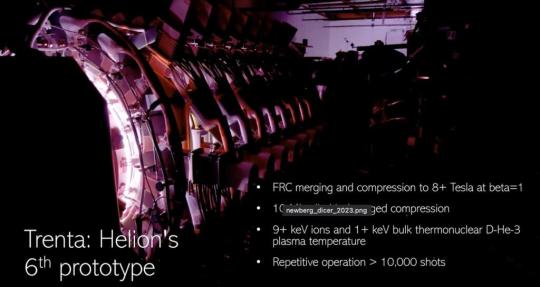

Helion Energy Commits to Fusion Energy Delivery to Microsoft in 2028

Helion Energy will provide Microsoft (MSFT.O) with electricity from nuclear fusion in about five years. It is the first power purchase agreement for commercial nuclear fusion energy.

Helion Energy (based in Washington State near Microsoft’s head office) agreed to provide Microsoft with at least 50 megawatts of electricity from its planned first fusion power plant, starting in 2028. Fifty megawatts is enough electricity to power a data center or factory, said David Kirtley, Helion’s CEO.

Founded in 2013, Helion has launched a multi-billion-dollar effort to produce electricity from fusion, the nuclear reaction that powers the sun and stars.

Helion Energy has received over $570 million in funding and commitments for another $1.7 billion to develop commercial nuclear fusion.

Helion has performed thousands of tests with its sixth prototype, called Trenta. In 2021, Trenta reached 100 million degrees C, the temperature at which they could run a commercial reactor. Magnetic compression fields exceeded 10 T, ion temperatures surpassed 8 keV, and electron temperatures exceeded 1 keV. They reported ion densities up to 3 × 10^22 ions/m^3 and confinement times of up to 0.5 ms.
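As a quick consistency check on the Trenta figures above, the sketch below converts 100 million degrees C into a thermal energy in keV and multiplies the quoted peak density, ion temperature, and confinement time into a density-temperature-time product. The function names are illustrative, and the product assumes all three peak values occurred in the same shot, which the text does not state, so it is best read as an upper bound.

```python
# Boltzmann constant in eV per kelvin (CODATA value).
BOLTZMANN_EV_PER_K = 8.617333262e-5


def celsius_to_kev(temp_c: float) -> float:
    """Convert a temperature in degrees C to the thermal energy k_B*T in keV."""
    temp_k = temp_c + 273.15
    return BOLTZMANN_EV_PER_K * temp_k / 1e3


def triple_product(density_per_m3: float, temp_kev: float, tau_s: float) -> float:
    """Density x temperature x confinement time, in keV*s/m^3."""
    return density_per_m3 * temp_kev * tau_s


# 100 million degrees C expressed as an energy.
reactor_temp_kev = celsius_to_kev(100e6)

# Peak values quoted for Trenta; ASSUMED simultaneous for this estimate.
trenta_upper_bound = triple_product(3e22, 8.0, 0.5e-3)

print(f"100 million C is about {reactor_temp_kev:.2f} keV")
print(f"Triple product upper bound: {trenta_upper_bound:.2e} keV*s/m^3")
```

The conversion gives roughly 8.6 keV, which lines up with the statement that ion temperatures surpassed 8 keV.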

7th prototype Polaris

Helion’s seventh-generation prototype, Project Polaris, was in development in 2021, with completion expected in 2024. The device was expected to increase the pulse rate from one pulse every 10 minutes to one pulse per second for short periods. This prototype is the first of its kind to be able to heat fusion plasma up to temperatures greater than 100 million degrees C. Polaris is 25% larger than Trenta to ensure that ions do not damage the vessel walls.

Helion’s plan with Polaris is to try to demonstrate net electricity from fusion. They also plan to demonstrate helium-3 production through deuterium-deuterium fusion.

The plan with Polaris is to pulse at a higher repetition rate during continuous operations.

8th prototype Antares

As of January 2022, an eighth prototype, Antares, is in the design stage.

Helium-3 is an ultra-rare isotope of helium that is difficult to find on Earth and is used in quantum computing and critical medical imaging. Helion produces helium-3 by fusing deuterium in its plasma accelerator, utilizing a patented high-efficiency closed-fuel cycle. Scientists have even discussed going to the Moon to mine helium-3, where it can be found in much higher abundance. Helion’s new process means we can produce helium-3 on Earth.

Helion’s cost of electricity production is projected to be $0.01 per kWh without assuming any economies of scale from mass production, carbon credits, or government incentives.
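To put the 50 megawatts and the projected $0.01 per kWh side by side, here is a minimal sketch of the yearly arithmetic. The capacity factor parameter is an assumption added for illustration; the text gives no figure for how much of the year the plant would run, so the default of 1.0 (continuous output) is only a ceiling. The result is a production cost, not a price Microsoft would pay.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def annual_energy_kwh(power_mw: float, capacity_factor: float = 1.0) -> float:
    """Energy delivered in one year, in kWh, for a plant of the given size."""
    return power_mw * 1000 * HOURS_PER_YEAR * capacity_factor


def annual_cost_usd(power_mw: float, usd_per_kwh: float,
                    capacity_factor: float = 1.0) -> float:
    """Yearly production cost at a flat cost per kWh."""
    return annual_energy_kwh(power_mw, capacity_factor) * usd_per_kwh


# Figures from the text: 50 MW and a projected $0.01 per kWh.
energy = annual_energy_kwh(50)
cost = annual_cost_usd(50, 0.01)

print(f"Energy per year: {energy / 1e6:.0f} GWh")
print(f"Production cost per year: ${cost / 1e6:.2f} million")
```

Under those assumptions, 50 MW running all year is about 438 GWh, and at the projected cost that is roughly $4.4 million per year.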

Nextbigfuture interviewed Helion executives a few years ago and has reported on Helion’s plans before. Helion and all other nuclear fusion companies have missed target dates in the past. The only fusion companies that have not missed target dates are those that are too new.

All current nuclear fusion projects are less capable than the first EBR-1 fission reactor from 1951. However, technological and scientific breakthroughs can happen. Breakthroughs often do not happen. Nuclear fusion projects might succeed or might not. Many normal large projects fail. Multi-billion dollar skyscrapers and building projects fail for reasons other than difficult science. Big companies and big projects can fail, and they can be late and miss deadlines. The SLS rocket is an example of a project going massively over budget and behind schedule.

December 2022 Update on Helion Energy Science

Helion Fusion CEO David Kirtley presented an update on the work as of December 2022.

They are working on their seventh-generation prototype system and they have had over 10,000 shots with the sixth-generation system. They create and form plasmas and accelerate two plasmas to merge at supersonic speeds. They do not inject beams, and they operate in pulsed mode. They do not hold the plasma for long times like the tokamak approaches.

The VC-funded seventh prototype should generate electricity. They have $500 million in funding and commitments of another $2 billion if the seventh prototype achieves its technical goals.

The 6th system is working at about 10 keV (10 thousand electron volts), with over 10,000 shots. The 7th system will work at 20 keV, and they want to have it operating by the end of 2023. The 8th or 9th systems are meant to get to the ideal operating level of 100 keV.

It is taking about 2-4 years to make and start operating each new prototype.

They have computational models of their science work and computationally modeled the scaling of the system.

Nextbigfuture has monitored all nuclear fusion programs and advanced nuclear fission systems. Based upon my comprehensive analysis, Helion Fusion is one of the most promising of these programs.

The Operation of Helion Fusion Summarized

Scaling and Science Foundation

Brian Wang is a Futurist Thought Leader and a popular Science blogger with 1 million readers per month. His blog Nextbigfuture.com is ranked #1 Science News Blog. It covers many disruptive technology and trends including Space, Robotics, Artificial Intelligence, Medicine, Anti-aging Biotechnology, and Nanotechnology.

Known for identifying cutting edge technologies, he is currently a Co-Founder of a startup and fundraiser for high potential early-stage companies. He is the Head of Research for Allocations for deep technology investments and an Angel Investor at Space Angels.

A frequent speaker at corporations, he has been a TEDx speaker, a Singularity University speaker and guest at numerous interviews for radio and podcasts. He is open to public speaking and advising engagements.

0 notes

Text

Once again, adding context to a post, this time from Vault Of The Atomic Space Age.

Lawrence Berkeley National Laboratory, in a Throwback Thursday post, captions this:

A Cray-1 supercomputer arrives at the Magnetic Fusion Energy Computer Center in May 1978

Oddly, this is a scan of a (slightly wonky) page, which does make the photo easier to track as it makes its way around the internet. That said, I’m not so interested that I care to track down the book (especially as the chances are it’s an LBL internal pamphlet).

#image#reblog#caption#supercomputer#1978#lawrence berkeley lab#magnetic fusion energy computer center#science#context#cray-1

1K notes

Text

Scientists propose solution to long-puzzling fusion problem

Physicist Stephen Jardin with images from his proposed solution. Credit: Elle Starkman/PPPL Office of Communications/Kiran Sudarsanan

The paradox startled scientists at the U.S. Department of Energy’s (DOE) Princeton Plasma Physics Laboratory (PPPL) more than a dozen years ago. The more heat they beamed into a spherical tokamak, a magnetic facility designed to reproduce the fusion energy that powers the sun and stars, the less the central temperature increased.

Big mystery

“Normally, the more beam power you put in, the higher the temperature gets,” said Stephen Jardin, head of the theory and computational science group that performed the calculations, and lead author of a proposed explanation published in Physical Review Letters. “So this was a big mystery: Why does this happen?”

Solving the mystery could contribute to efforts around the world to create and control fusion on Earth to produce a virtually inexhaustible source of safe, clean and carbon-free energy to generate electricity while fighting climate change. Fusion combines light elements in the form of plasma to release massive amounts of energy.

Through recent high-resolution computer simulations, Jardin and colleagues showed what can cause the temperature to stay flat or even decrease in the center of the plasma that fuels fusion reactions, even as more heating power is beamed in. Increasing the power also raises pressure in the plasma to the point where the plasma becomes unstable and the plasma motion flattens out the temperature, they found.

“These simulations likely explain an experimental observation made over 12 years ago,” Jardin said. “The results indicate that when designing and operating spherical tokamak experiments, care must be taken to ensure that the plasma pressure does not exceed certain critical values at certain locations in the [facility],” he said. “And we now have a way of quantifying these values through computer simulations.”

The findings highlight a key hurdle for researchers to avoid when seeking to reproduce fusion reactions in spherical tokamaks—devices shaped more like cored apples than more widely used doughnut-shaped conventional tokamaks. Spherical devices produce cost-effective magnetic fields and are candidates to become models for a pilot fusion power plant.

The researchers simulated past experiments on the National Spherical Torus Experiment (NSTX), the flagship fusion facility at PPPL that has since been upgraded, and where the puzzling plasma behavior had been observed. The results largely paralleled those found on the NSTX experiments.

“Through NSTX we got the data and through a DOE program called SciDAC [Scientific Discovery through Advanced Computing] we developed the computer code that we used,” Jardin said.

Physicist and co-author Nate Ferraro of PPPL said, “The SciDAC program was absolutely instrumental in developing the code.”

Discovered mechanism

The discovered mechanism caused heightened pressure at certain locations to break up the nested magnetic surfaces formed by the magnetic fields that wrap around the tokamak to confine the plasma. The breakup flattened the temperature of the electrons inside the plasma and thereby kept the temperature in the center of the hot, charged gas from rising to fusion-relevant levels.

“So what we now think is that when raising the injected beam power you’re also increasing the plasma pressure, and you get to a certain point where the pressure starts destroying the magnetic surfaces near the center of the tokamak,” Jardin said, “and that’s why the temperature stops going up.”

This mechanism might be general in spherical tokamaks, he said, and the possible destruction of surfaces must be taken into account when future spherical tokamaks are planned.

Jardin plans to continue investigating the process to better understand the destruction of magnetic surfaces and why it appears more likely in spherical than conventional tokamaks. He has also been invited to present his findings to the annual meeting of the American Physical Society-Division of Plasma Physics (APS-DPP) in October, where early career scientists could be recruited to take up the issue and flesh out the details of the proposed mechanism.

More information: S. C. Jardin et al, Ideal MHD Limited Electron Temperature in Spherical Tokamaks, Physical Review Letters (2022). DOI: 10.1103/PhysRevLett.128.245001

Provided by Princeton Plasma Physics Laboratory

Citation: Scientists propose solution to long-puzzling fusion problem (2022, July 13) retrieved 14 July 2022 from https://phys.org/news/2022-07-scientists-solution-long-puzzling-fusion-problem.html

New post published on: https://livescience.tech/2022/07/14/scientists-propose-solution-to-long-puzzling-fusion-problem/

0 notes

Photo

Stars

Stars are the most widely recognized astronomical objects, and represent the most fundamental building blocks of galaxies. The age, distribution, and composition of the stars in a galaxy trace the history, dynamics, and evolution of that galaxy. Moreover, stars are responsible for the manufacture and distribution of heavy elements such as carbon, nitrogen, and oxygen, and their characteristics are intimately tied to the characteristics of the planetary systems that may coalesce about them. Consequently, the study of the birth, life, and death of stars is central to the field of astronomy.

How do stars form?

Stars are born within the clouds of gas and dust scattered throughout most galaxies. A familiar example of such a dust cloud is the Orion Nebula.

Turbulence deep within these clouds gives rise to knots with sufficient mass that the gas and dust can begin to collapse under its own gravitational attraction. As the cloud collapses, the material at the center begins to heat up. Known as a protostar, it is this hot core at the heart of the collapsing cloud that will one day become a star.

Three-dimensional computer models of star formation predict that the spinning clouds of collapsing gas and dust may break up into two or three blobs; this would explain why the majority of the stars in the Milky Way are paired or in groups of multiple stars.

As the cloud collapses, a dense, hot core forms and begins gathering dust and gas. Not all of this material ends up as part of a star — the remaining dust can become planets, asteroids, or comets or may remain as dust.

In some cases, the cloud may not collapse at a steady pace. In January 2004, an amateur astronomer, James McNeil, discovered a small nebula that appeared unexpectedly near the nebula Messier 78, in the constellation of Orion. When observers around the world pointed their instruments at McNeil's Nebula, they found something interesting — its brightness appears to vary. Observations with NASA's Chandra X-ray Observatory provided a likely explanation: the interaction between the young star's magnetic field and the surrounding gas causes episodic increases in brightness.

Main Sequence Stars

A star the size of our Sun requires about 50 million years to mature from the beginning of the collapse to adulthood. Our Sun will stay in this mature phase (on the main sequence as shown in the Hertzsprung-Russell Diagram) for approximately 10 billion years.

Stars are fueled by the nuclear fusion of hydrogen to form helium deep in their interiors. The outflow of energy from the central regions of the star provides the pressure necessary to keep the star from collapsing under its own weight, and the energy by which it shines.

As shown in the Hertzsprung-Russell Diagram, Main Sequence stars span a wide range of luminosities and colors, and can be classified according to those characteristics. The smallest stars, known as red dwarfs, may contain as little as 10% the mass of the Sun and emit only 0.01% as much energy, glowing feebly at temperatures between 3000 and 4000 K. Despite their diminutive nature, red dwarfs are by far the most numerous stars in the Universe and have lifespans of tens of billions of years.

On the other hand, the most massive stars, known as hypergiants, may be 100 or more times more massive than the Sun, and have surface temperatures of more than 30,000 K. Hypergiants emit hundreds of thousands of times more energy than the Sun, but have lifetimes of only a few million years. Although extreme stars such as these are believed to have been common in the early Universe, today they are extremely rare - the entire Milky Way galaxy contains only a handful of hypergiants.
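The link between brightness and lifespan can be roughed out with a simple scaling: a star's fuel supply grows with its mass, and it burns that fuel at a rate set by its luminosity, so lifetime goes roughly as mass divided by luminosity. The sketch below applies that to the figures in the text, anchored to the Sun's 10 billion years. It assumes every star burns the same fraction of its mass, which is not true in detail, and the hypergiant luminosity of 300,000 Suns is an illustrative pick from "hundreds of thousands".

```python
SUN_LIFETIME_YEARS = 10e9  # main-sequence lifetime quoted for the Sun


def main_sequence_lifetime_years(mass_msun: float, luminosity_lsun: float) -> float:
    """Crude lifetime estimate: fuel (mass) divided by burn rate (luminosity).

    Inputs are in solar units. Assumes the same burnable fraction of mass
    for every star, so treat the result as order-of-magnitude only.
    """
    if mass_msun <= 0 or luminosity_lsun <= 0:
        raise ValueError("mass and luminosity must be positive")
    return SUN_LIFETIME_YEARS * mass_msun / luminosity_lsun


# Red dwarf from the text: 10% of the Sun's mass, 0.01% of its output.
red_dwarf = main_sequence_lifetime_years(0.10, 1e-4)

# Hypergiant: 100 solar masses; 3e5 solar luminosities is an ASSUMED value.
hypergiant = main_sequence_lifetime_years(100, 3e5)

print(f"Red dwarf:  {red_dwarf:.1e} years")
print(f"Hypergiant: {hypergiant:.1e} years")
```

The hypergiant comes out at a few million years, matching the text. The red dwarf estimate lands far above tens of billions of years, so the scaling is best taken as showing the direction of the trend, not as a precise figure.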

Stars and Their Fates

In general, the larger a star, the shorter its life, although all but the most massive stars live for billions of years. When a star has fused all the hydrogen in its core, nuclear reactions cease. Deprived of the energy production needed to support it, the core begins to collapse into itself and becomes much hotter. Hydrogen is still available outside the core, so hydrogen fusion continues in a shell surrounding the core. The increasingly hot core also pushes the outer layers of the star outward, causing them to expand and cool, transforming the star into a red giant.

If the star is sufficiently massive, the collapsing core may become hot enough to support more exotic nuclear reactions that consume helium and produce a variety of heavier elements up to iron. However, such reactions offer only a temporary reprieve. Gradually, the star's internal nuclear fires become increasingly unstable - sometimes burning furiously, other times dying down. These variations cause the star to pulsate and throw off its outer layers, enshrouding itself in a cocoon of gas and dust. What happens next depends on the size of the core.

Average Stars Become White Dwarfs

For average stars like the Sun, the process of ejecting their outer layers continues until the stellar core is exposed. This dead, but still ferociously hot stellar cinder is called a White Dwarf. White dwarfs, which are roughly the size of our Earth despite containing the mass of a star, once puzzled astronomers - why didn't they collapse further? What force supported the mass of the core? Quantum mechanics provided the explanation. Pressure from fast moving electrons keeps these stars from collapsing. The more massive the core, the denser the white dwarf that is formed. Thus, the smaller a white dwarf is in diameter, the larger it is in mass! These paradoxical stars are very common - our own Sun will be a white dwarf billions of years from now. White dwarfs are intrinsically very faint because they are so small and, lacking a source of energy production, they fade into oblivion as they gradually cool down.

This fate awaits only those stars with a mass up to about 1.4 times the mass of our Sun. Above that mass, electron pressure cannot support the core against further collapse. Such stars suffer a different fate as described below.
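The inverse relation between a white dwarf's mass and its size can be sketched with the textbook scaling for a star held up by non-relativistic electron pressure, where radius falls as the cube root of mass. The reference point used here (0.6 solar masses at one Earth radius) is an assumption chosen to match the "roughly the size of our Earth" description, and the scaling stops being reliable as the mass approaches the 1.4 solar mass limit.

```python
def white_dwarf_radius(mass_msun: float,
                       ref_mass_msun: float = 0.6,
                       ref_radius_earth: float = 1.0) -> float:
    """Approximate white dwarf radius in Earth radii.

    Uses R proportional to M**(-1/3), the scaling for non-relativistic
    electron degeneracy pressure. The reference mass and radius are
    illustrative assumptions, not measured values from the text.
    """
    if mass_msun <= 0:
        raise ValueError("mass must be positive")
    return ref_radius_earth * (mass_msun / ref_mass_msun) ** (-1.0 / 3.0)


for mass in (0.4, 0.6, 0.8, 1.0, 1.2):
    print(f"{mass:.1f} solar masses -> {white_dwarf_radius(mass):.2f} Earth radii")
```

Each step up in mass gives a smaller radius, which is the paradox the paragraph describes.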

Supernovae Leave Behind Neutron Stars or Black Holes

Main sequence stars over eight solar masses are destined to die in a titanic explosion called a supernova. A supernova is not merely a bigger nova. In a nova, only the star's surface explodes. In a supernova, the star's core collapses and then explodes. In massive stars, a complex series of nuclear reactions leads to the production of iron in the core. Having achieved iron, the star has wrung all the energy it can out of nuclear fusion - fusion reactions that form elements heavier than iron actually consume energy rather than produce it. The star no longer has any way to support its own mass, and the iron core collapses. In just a matter of seconds the core shrinks from roughly 5000 miles across to just a dozen, and the temperature spikes 100 billion degrees or more. The outer layers of the star initially begin to collapse along with the core, but rebound with the enormous release of energy and are thrown violently outward. Supernovae release an almost unimaginable amount of energy. For a period of days to weeks, a supernova may outshine an entire galaxy. Likewise, all the naturally occurring elements and a rich array of subatomic particles are produced in these explosions. On average, a supernova explosion occurs about once every hundred years in the typical galaxy. About 25 to 50 supernovae are discovered each year in other galaxies, but most are too far away to be seen without a telescope.

Neutron Stars

If the collapsing stellar core at the center of a supernova contains between about 1.4 and 3 solar masses, the collapse continues until electrons and protons combine to form neutrons, producing a neutron star. Neutron stars are incredibly dense - similar to the density of an atomic nucleus. Because it contains so much mass packed into such a small volume, the gravitation at the surface of a neutron star is immense.
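To get a feel for those numbers, the sketch below packs 1.4 solar masses into a sphere a dozen miles across and works out the average density and surface gravity. Taking the "dozen miles" of the collapsed core as the neutron star's diameter is an assumption, and the nuclear density used for comparison is an approximate, commonly quoted value.

```python
import math

G = 6.674e-11              # gravitational constant, m^3 kg^-1 s^-2
SOLAR_MASS_KG = 1.989e30
METERS_PER_MILE = 1609.344
NUCLEAR_DENSITY = 2.3e17   # kg/m^3, approximate density of an atomic nucleus
EARTH_GRAVITY = 9.81       # m/s^2


def mean_density(mass_kg: float, radius_m: float) -> float:
    """Average density of a uniform sphere, in kg/m^3."""
    volume = 4.0 / 3.0 * math.pi * radius_m ** 3
    return mass_kg / volume


def surface_gravity(mass_kg: float, radius_m: float) -> float:
    """Newtonian surface gravity, in m/s^2 (ignores relativistic corrections)."""
    return G * mass_kg / radius_m ** 2


# ASSUMED: 1.4 solar masses in a sphere 12 miles in diameter.
mass = 1.4 * SOLAR_MASS_KG
radius = 12 * METERS_PER_MILE / 2

density = mean_density(mass, radius)
gravity = surface_gravity(mass, radius)

print(f"Mean density: {density:.2e} kg/m^3 "
      f"({density / NUCLEAR_DENSITY:.1f}x nuclear density)")
print(f"Surface gravity: {gravity:.2e} m/s^2 "
      f"({gravity / EARTH_GRAVITY:.1e}x Earth's)")
```

The density comes out within a factor of a few of nuclear density, and the surface gravity is on the order of a hundred billion times Earth's.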

Neutron stars also have powerful magnetic fields, which can accelerate atomic particles around their magnetic poles, producing powerful beams of radiation. Those beams sweep around like massive searchlight beams as the star rotates. If such a beam is oriented so that it periodically points toward the Earth, we observe it as regular pulses of radiation that occur whenever the magnetic pole sweeps past the line of sight. In this case, the neutron star is known as a pulsar.

Black Holes

If the collapsed stellar core is larger than three solar masses, it collapses completely to form a black hole: an infinitely dense object whose gravity is so strong that nothing can escape its immediate proximity, not even light. Since photons are what our instruments are designed to see, black holes can only be detected indirectly. Indirect observations are possible because the gravitational field of a black hole is so powerful that any nearby material - often the outer layers of a companion star - is caught up and dragged in. As matter spirals into a black hole, it forms a disk that is heated to enormous temperatures, emitting copious quantities of X-rays and Gamma-rays that indicate the presence of the underlying hidden companion.
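How close is "immediate proximity"? For a non-rotating black hole, the standard answer is the Schwarzschild radius, r = 2GM/c^2, inside which not even light can escape. The formula is not named in the text above; it is brought in here as the usual way to put a number on that boundary. The sketch evaluates it for the three solar mass threshold mentioned.

```python
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0        # speed of light in vacuum, m/s
SOLAR_MASS_KG = 1.989e30


def schwarzschild_radius_m(mass_msun: float) -> float:
    """Radius of the event horizon of a non-rotating black hole, in meters."""
    if mass_msun <= 0:
        raise ValueError("mass must be positive")
    return 2 * G * mass_msun * SOLAR_MASS_KG / C ** 2


# The three solar mass threshold from the text.
radius_3_msun = schwarzschild_radius_m(3)

print(f"3 solar masses -> horizon radius of about {radius_3_msun / 1000:.1f} km")
```

That works out to a radius of a little under 9 km, so a core of three solar masses would sit behind a horizon smaller than a city.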

From the Remains, New Stars Arise

The dust and debris left behind by novae and supernovae eventually blend with the surrounding interstellar gas and dust, enriching it with the heavy elements and chemical compounds produced during stellar death. Eventually, those materials are recycled, providing the building blocks for a new generation of stars and accompanying planetary systems.

Credit and reference: science.nasa.gov

image credit: ESO, NASA, ESA, Hubble

#space#espaço#universo#universe#galaxy#galaxia#astronomia#astronomy#astrophysics#sun#sol#estrela#star#element#nebula#whitedwarf#anãbranca#blackhole#buraconegro#neutronstar#estreladeneutrons#po#poeira#dust#gas

5K notes

Text

There’s Only One Way To Beat The Speed Of Light

https://sciencespies.com/news/theres-only-one-way-to-beat-the-speed-of-light/

In our Universe, there are a few rules that everything must obey. Energy, momentum, and angular momentum are always conserved whenever any two quanta interact. The physics of any system of particles moving forward in time is identical to the physics of that same system reflected in a mirror, with particles exchanged for antiparticles, where the direction of time is reversed. And there’s an ultimate cosmic speed limit that applies to every object: nothing can ever exceed the speed of light, and nothing with mass can ever reach that vaunted speed.

Over the years, people have developed very clever schemes to try and circumvent this last limit. Theoretically, they’ve introduced tachyons as hypothetical particles that could exceed the speed of light, but tachyons are required to have imaginary masses, and do not physically exist. Within General Relativity, sufficiently warped space could create alternative, shortened pathways over what light must traverse, but our physical Universe has no known wormholes. And while quantum entanglement can create “spooky” action at a distance, no information is ever transmitted faster than light.

But there is one way to beat the speed of light: enter any medium other than a perfect vacuum. Here’s the physics of how it works.

Light is nothing more than an electromagnetic wave, with in-phase oscillating electric and magnetic fields perpendicular to the direction of light’s propagation. The shorter the wavelength, the more energetic the photon, but the more susceptible it is to changes in the speed of light through a medium.

And1mu / Wikimedia Commons

Light, you have to remember, is an electromagnetic wave. Sure, it also behaves as a particle, but when we’re talking about its propagation speed, it’s far more useful to think of it not only as a wave, but as a wave of oscillating, in-phase electric and magnetic fields. When it travels through the vacuum of space, there’s nothing to restrict those fields from traveling with the amplitude they’d naturally choose, defined by the wave’s energy, frequency, and wavelength. (Which are all related.)

But when light travels through a medium — that is, any region where electric charges (and possibly electric currents) are present — those electric and magnetic fields encounter some level of resistance to their free propagation. Of all the things that are free to change or remain the same, the property of light that remains constant is its frequency as it moves from vacuum to medium, from a medium into vacuum, or from one medium to another.

If the frequency stays the same, however, that means the wavelength must change, and since frequency multiplied by wavelength equals speed, that means the speed of light must change as the medium you’re propagating through changes.
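As a minimal sketch of that relation, the snippet below holds the frequency fixed and rescales the wavelength and speed by a refractive index. The index `n`, the function name `light_in_medium`, and the sample values (550 nm light, n = 1.33 for water) are illustrative assumptions, not figures from the article.

```python
# Illustrative sketch: n (refractive index) and the sample values are assumptions.
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s (exact by definition)

def light_in_medium(vacuum_wavelength_m: float, n: float) -> dict:
    """Return frequency, wavelength, and speed of light inside a medium of index n."""
    frequency = C_VACUUM / vacuum_wavelength_m  # unchanged when crossing the boundary
    speed = C_VACUUM / n                        # light is slower inside the medium
    wavelength = vacuum_wavelength_m / n        # wavelength shrinks by the same factor
    return {"frequency_hz": frequency, "wavelength_m": wavelength, "speed_m_s": speed}

green_in_water = light_in_medium(550e-9, 1.33)
print(green_in_water)

# Frequency times wavelength still equals the (now lower) speed.
product = green_in_water["frequency_hz"] * green_in_water["wavelength_m"]
assert abs(product - green_in_water["speed_m_s"]) < 1e-3
```

Because the frequency is computed once from the vacuum wavelength and never modified, the only quantities that respond to the medium are the wavelength and the speed.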

Schematic animation of a continuous beam of light being dispersed by a prism. Note how the wave nature of light is both consistent with and a deeper explanation of the fact that white light can be broken up into differing colors.

Wikimedia Commons user LucasVB

One spectacular demonstration of this is the refraction of light as it passes through a prism. White light, like sunlight, is made up of light of a continuous, wide variety of wavelengths. Longer wavelengths, like red light, have lower frequencies, while shorter wavelengths, like blue light, have higher frequencies. In a vacuum, all wavelengths travel at the same speed: frequency multiplied by wavelength equals the speed of light. Bluer light carries more energy per photon, and so its electric and magnetic fields are stronger than those of redder light.

When you pass this light through a dispersive medium like a prism, all of the different wavelengths respond slightly differently. The more energy you have in your electric and magnetic fields, the greater the effect they experience from passing through a medium. The frequency of all light remains unchanged, but the wavelength of higher-energy light shortens by a greater amount than lower-energy light.

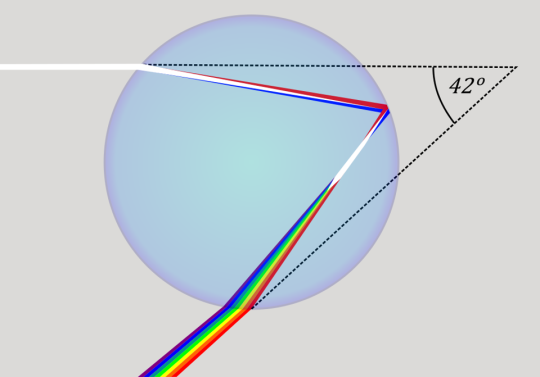

As a result, even though all light travels slower through a medium than vacuum, redder light slows by a slightly smaller amount than blue light, leading to many fascinating optical phenomena, such as the existence of rainbows as sunlight breaks into different wavelengths as it passes through water drops and droplets.
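To put rough numbers on this, the sketch below compares the speed of red and blue light in water. The two refractive indices are approximate, assumed values chosen for illustration (they are not from the article), and `speed_in_medium` is a hypothetical helper name.

```python
C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s

# Rough, assumed refractive indices of water for illustration only.
APPROX_INDEX_WATER = {"red": 1.331, "blue": 1.337}

def speed_in_medium(n: float) -> float:
    """Speed of light in a medium with refractive index n."""
    return C_VACUUM / n

speeds = {color: speed_in_medium(n) for color, n in APPROX_INDEX_WATER.items()}
for color, v in speeds.items():
    print(f"{color}: {v:,.0f} m/s ({100 * v / C_VACUUM:.2f}% of c)")

# Red light keeps slightly more of its vacuum speed than blue light does.
assert speeds["red"] > speeds["blue"]
```

The difference between the two speeds is well under one percent, yet it is enough to separate the colors when sunlight refracts through a droplet.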

When light transitions from vacuum (or air) into a water droplet, it first refracts, then reflects off of the back, and at last refracts back into vacuum (or air). The angle that the incoming light makes with the outgoing light always peaks at an angle of 42 degrees, explaining why rainbows always make the same angle on the sky.

KES47 / Wikimedia Commons / Public Domain

In the vacuum of space, however, light has no choice — irrespective of its wavelength or frequency — but to travel at one speed and one speed only: the speed of light in a vacuum. This is also the speed that any form of pure radiation, such as gravitational radiation, must travel at, and also the speed, under the laws of relativity, that any massless particle must travel at.

But most particles in the Universe have mass, and as a result, they have to follow slightly different rules. If you have mass, the speed of light in a vacuum is still your ultimate speed limit, but rather than being compelled to travel at that speed, it’s instead a limit that you can never attain; you can only approach it.

The more energy you put into your massive particle, the closer it can move to the speed of light, but it must always travel more slowly. The most energetic particles ever made on Earth, which are protons at the Large Hadron Collider, can travel incredibly close to the speed of light in a vacuum: 299,792,455 meters per second, or 99.999999% of the speed of light.
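A short sketch can check that figure with special relativity. It assumes a proton energy of 6.5 TeV (an assumed beam energy, not stated in the article) and the proton rest energy of about 0.938272 GeV; `speed_deficit` is a hypothetical helper that returns how far below the vacuum speed of light the particle travels.

```python
import math

C_VACUUM = 299_792_458.0          # speed of light in vacuum, m/s
PROTON_REST_ENERGY_GEV = 0.938272  # proton rest energy, GeV

def speed_deficit(total_energy_gev: float, rest_energy_gev: float) -> float:
    """Return c - v in m/s for a particle with the given total and rest energy."""
    gamma = total_energy_gev / rest_energy_gev   # Lorentz factor
    beta = math.sqrt(1.0 - 1.0 / gamma**2)       # v / c
    # Compute (1 - beta) as (1/gamma^2) / (1 + beta) to avoid subtracting
    # two nearly equal floating-point numbers.
    one_minus_beta = (1.0 / gamma**2) / (1.0 + beta)
    return C_VACUUM * one_minus_beta

# Assumed beam energy: 6.5 TeV per proton.
deficit = speed_deficit(6500.0, PROTON_REST_ENERGY_GEV)
print(f"{deficit:.2f} m/s below the speed of light in a vacuum")
```

Under these assumptions the proton falls short of the vacuum speed of light by roughly 3 m/s, in line with the 299,792,455 m/s quoted above.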

Time dilation (L) and length contraction (R) show how time appears to run slower and distances appear to get smaller the closer you move to the speed of light. As you approach the speed of light, clocks dilate towards time not passing at all, while distances contract down to infinitesimal amounts.

WIKIMEDIA COMMONS USERS ZAYANI (L) AND JROBBINS59 (R)

No matter how much energy we pump into those particles, we can only add more “9s” to the right of that decimal place, however. We can never reach the speed of light.

Or, more accurately, we can never reach the speed of light in a vacuum. That is, the ultimate cosmic speed limit of 299,792,458 m/s is unattainable for massive particles, and simultaneously is the speed that all massless particles must travel at.

But what happens, then, if we travel not through a vacuum, but through a medium instead? As it turns out, when light travels through a medium, its electric and magnetic fields feel the effects of the matter that they pass through. This has the effect, when light enters a medium, of immediately changing the speed at which light travels. This is why, when you watch light enter or leave a medium, or transition from one medium to another, it appears to bend. The light, while free to propagate unrestricted in a vacuum, has its propagation speed and its wavelength depend heavily on the properties of the medium it travels through.
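The bending described here is usually quantified with Snell's law, n1 sin(θ1) = n2 sin(θ2), which the article does not name but which follows from the change in speed at the boundary. The sketch below applies it; the function name and the sample indices (air at 1.0, glass at 1.5) are assumptions for illustration.

```python
import math
from typing import Optional

def refraction_angle_deg(incidence_deg: float, n_from: float, n_to: float) -> Optional[float]:
    """Angle of the refracted ray from the surface normal, via Snell's law.

    Returns None when no refracted ray exists (total internal reflection).
    """
    sine = (n_from / n_to) * math.sin(math.radians(incidence_deg))
    if abs(sine) > 1.0:
        return None
    return math.degrees(math.asin(sine))

# Light entering glass (assumed n = 1.5) from air (assumed n = 1.0) at 30 degrees.
angle = refraction_angle_deg(30.0, 1.0, 1.5)
print(f"refracted angle: {angle:.2f} degrees")
```

The ray bends toward the normal when it enters the slower medium and away from it when it leaves, which is why the beam in a prism changes direction twice.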

Light passing from a negligible medium through a dense medium, exhibiting refraction. Light comes in from the lower right, strikes the prism and partially reflects (top), while the remainder is transmitted through the prism (center). The light that passes through the prism appears to bend, as it travels at a slower speed than the light traveling through air did earlier. When it re-emerges from the prism, it refracts once again, returning to its original speed.

Wikimedia Commons user Spigget

However, particles suffer a different fate. If a high-energy particle that was originally passing through a vacuum suddenly finds itself traveling through a medium, its behavior will be different than that of light.

First off, it won’t experience an immediate change in momentum or energy, as the electric and magnetic forces acting on it — which change its momentum over time — are negligible compared to the amount of momentum it already possesses. Rather than bending instantly, as light appears to, its trajectory changes can only proceed in a gradual fashion. When particles first enter a medium, they continue moving with roughly the same properties, including the same speed, as before they entered.

Second, the big events that can change a particle’s trajectory in a medium are almost all direct interactions: collisions with other particles. These scattering events are tremendously important in particle physics experiments, as the products of these collisions enable us to reconstruct whatever it is that occurred back at the collision point. When a fast-moving particle collides with a set of stationary ones, we call these “fixed target” experiments, and they’re used in everything from creating neutrino beams to giving rise to antimatter particles that are critical for exploring certain properties of nature.

Here, a proton beam is shot at a deuterium target in the LUNA experiment. The rate of nuclear fusion at various temperatures helped reveal the deuterium-proton cross-section, which was the most uncertain term in the equations used to compute and understand the net abundances that would arise at the end of Big Bang Nucleosynthesis. Fixed-target experiments have many applications in particle physics.

LUNA Collaboration/Gran Sasso

But the most interesting fact is this: particles that move slower than light in a vacuum, but faster than light in the medium that they enter, are actually breaking the speed of light. This is the one and only real, physical way that particles can exceed the speed of light. They can’t ever exceed the speed of light in a vacuum, but can exceed it in a medium. And when they do, something fascinating occurs: a special type of radiation — Cherenkov radiation — gets emitted.
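The condition can be written as a threshold: a particle outruns light in a medium of refractive index n once its speed exceeds c/n. The sketch below computes that threshold and the matching kinetic energy for an electron in water. The index 1.33, the electron rest energy of 0.511 MeV, and the function names are assumptions introduced for illustration.

```python
import math

ELECTRON_REST_ENERGY_MEV = 0.511  # electron rest energy, MeV

def threshold_beta(n: float) -> float:
    """Minimum v/c at which a particle outruns light in a medium of index n."""
    return 1.0 / n

def threshold_kinetic_energy_mev(rest_energy_mev: float, n: float) -> float:
    """Kinetic energy at which a particle reaches the speed of light in the medium."""
    beta = threshold_beta(n)
    gamma = 1.0 / math.sqrt(1.0 - beta**2)
    return (gamma - 1.0) * rest_energy_mev

n_water = 1.33  # assumed refractive index of water
print(f"threshold speed: {threshold_beta(n_water):.3f} c")
print(f"electron threshold: {threshold_kinetic_energy_mev(ELECTRON_REST_ENERGY_MEV, n_water):.3f} MeV")
```

With these inputs an electron needs only about a quarter of an MeV of kinetic energy to exceed the speed of light in water, far below the energies typical of radioactive decays and reactor environments.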

Named for its discoverer, Pavel Cherenkov, it’s one of those physics effects that was first noted experimentally, before it was ever predicted. Cherenkov was studying radioactive samples that had been prepared, and some of them were being stored in water. The radioactive preparations seemed to emit a faint, bluish-hued light, and even though Cherenkov was studying luminescence — where gamma-rays would excite these solutions, which would then emit visible light when they de-excited — he was quickly able to conclude that this light had a preferred direction. It wasn’t a fluorescent phenomenon, but something else entirely.

Today, that same blue glow can be seen in the water tanks surrounding nuclear reactors: Cherenkov radiation.

The RA-6 (Republica Argentina 6) experimental nuclear reactor, in operation, showing the characteristic Cherenkov radiation from the faster-than-light-in-water particles emitted. As these particles travel faster than light does in this medium, they emit radiation to shed energy and momentum, which they’ll continue to do until they drop below the speed of light.

Centro Atomico Bariloche, via Pieck Darío

Where does this radiation come from?

When you have a very fast particle traveling through a medium, that particle will generally be charged, and the medium itself is made up of positive (atomic nuclei) and negative (electrons) charges. The charged particle, as it travels through this medium, has a chance of colliding with one of the particles in there, but since atoms are mostly empty space, the odds of a collision are relatively low over short distances.

Instead, the particle has an effect on the medium that it travels through: it causes the particles in the medium to polarize (like charges repel and opposite charges attract) in response to the charged particle that’s passing through. Once the charged particle is out of the way, however, those electrons return to their ground state, and those transitions cause the emission of light. Specifically, they cause the emission of blue light in a cone-like shape, where the geometry of the cone depends on the particle’s speed and the speed of light in that particular medium.
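The cone geometry follows the standard Cherenkov relation cos(θ) = 1/(nβ), where β is the particle's speed as a fraction of c and n is the refractive index of the medium. The sketch below evaluates it; the function name and the sample values (water at n = 1.33, a particle at 0.999c) are assumptions for illustration.

```python
import math
from typing import Optional

def cherenkov_angle_deg(beta: float, n: float) -> Optional[float]:
    """Half-angle of the Cherenkov cone relative to the particle's direction.

    Returns None when the particle is not faster than light in the medium.
    """
    if beta * n <= 1.0:
        return None  # below threshold: no Cherenkov light is emitted
    return math.degrees(math.acos(1.0 / (n * beta)))

n_water = 1.33  # assumed refractive index of water
print(cherenkov_angle_deg(0.999, n_water))  # fast particle: wide cone
print(cherenkov_angle_deg(0.70, n_water))   # slower than light in water: None
```

The cone opens wider as the particle's speed rises and closes to zero at the threshold, so measuring the angle gives a handle on the particle's speed.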

This animation showcases what happens when a relativistic, charged particle moves faster than light in a medium. The interactions cause the particle to emit a cone of radiation known as Cherenkov radiation, which is dependent on the speed and energy of the incident particle. Detecting the properties of this radiation is an enormously useful and widespread technique in experimental particle physics.

Own work / H. Seldon / public domain

This is an enormously important property in particle physics, as it’s this very process that allows us to detect the elusive neutrino. Neutrinos hardly ever interact with matter at all. However, on the rare occasions that they do, they only impart their energy to one other particle.

What we can do, therefore, is to build an enormous tank of very pure liquid: liquid that doesn’t radioactively decay or emit other high-energy particles. We can shield it very well from cosmic rays, natural radioactivity, and all sorts of other contaminating sources. And then, we can line the outside of this tank with what are known as photomultiplier tubes: tubes that can detect a single photon, triggering a cascade of electronic reactions enabling us to know where, when, and in what direction a photon came from.

With large enough detectors, we can determine many properties about every neutrino that interacts with a particle in these tanks. The Cherenkov radiation that results, produced so long as the particle “kicked” by the neutrino exceeds the speed of light in that liquid, is an incredibly useful tool for measuring the properties of these ghostly cosmic particles.