#kubernetes testing


Tips to Boost Release Confidence in Kubernetes

Software development takes a lot of focus and practice, and many newcomers find the thought of releasing a product into the world a bit daunting. All kinds of worries and fears can crop up before release, and even when unfounded, doubt can make it difficult to pull the trigger.

If you’re using a platform like Kubernetes to deploy and release your next project, the tips below can boost your confidence and help you get your product out for the world to enjoy:

Work With a Mentor

Having a mentor on your side can be a big confidence booster when it comes to Kubernetes. Mentors not only provide guidance and advice but can also boost your confidence by sharing stories of their own trials. Finding a mentor who specializes in Kubernetes is ideal if this is the container orchestration system you’re working with, but a mentor with experience releasing any type of software product can be beneficial.

Take a Moment Away From Your Project

In any type of intensive development project, it can be easy to lose sight of the bigger picture. Many developers find themselves working longer hours as the release of a product grows near, and this can contribute to stress, worry and doubt.

When possible, take some time to step away from your work for a bit. If you can put your project down for a few days to get your mind off of things, this will provide you with some time to relax and come back to your project with a fresh set of eyes and a clear mind.

Ask for a Review

You can also ask trusted friends and colleagues to review your work before release. This may not be a full-on bug hunt, but it can give you confidence that the core functionality works and that no glaring issues exist. You can also ask for general feedback, but be careful not to let the opinions of others sway you from your overall mission of developing a stellar product that fulfills your vision.



Manager - Lead Data & AI Platforms Security Engineer

Job title: Manager – Lead Data & AI Platforms Security Engineer

Company: KPMG

Job description: containerized applications (Docker and Kubernetes security) Experienced in securing APIs and Web Applications. Experience… Microsoft Identity and Access Administrator SC-300 CISSP To discuss this or wider Audit roles with our recruitment team…

Expected salary:

Location: London

Job date: Wed, 25 Jun…

#artificial intelligence#audio-dsp#Azure#Backend#business-intelligence#cleantech#cloud-native#Cybersecurity#data-science#dotnet#Ecommerce#edtech#embedded-systems#ethical AI#game-dev#gcp#healthtech#Kubernetes Administrator#mobile-development#Networking#qa-testing#quantum computing#regtech#remote-jobs#site-reliability#technical-writing#uk-jobs#ux-design#visa-sponsorship


#TechKnowledge Have you heard of Containerization?

Swipe to discover what it is and how it can impact your digital security! 🚀

👉 Stay tuned for more simple and insightful tech tips by following us.

🌐 Learn more: https://simplelogic-it.com/

💻 Explore the latest in #technology on our Blog Page: https://simplelogic-it.com/blogs/

✨ Looking for your next career opportunity? Check out our #Careers page for exciting roles: https://simplelogic-it.com/careers/

#techterms#technologyterms#techcommunity#simplelogicit#makingitsimple#techinsight#techtalk#containerization#application#development#testing#deployment#devops#docker#kubernets#openshift#scalability#security#knowledgeIispower#makeitsimple#simplelogic#didyouknow


A Comprehensive Guide to Building Microservices with Node.js

Introduction: The microservices architecture has become a popular approach for developing scalable and maintainable applications. Unlike monolithic architectures, where all components are tightly coupled, microservices allow you to break down an application into smaller, independent services that can be developed, deployed, and scaled independently. Node.js, with its asynchronous, event-driven…


Driving Innovation: A Case Study on DevOps Implementation in BFSI Domain

In Banking, Financial Services, and Insurance (BFSI), technology plays a pivotal role in driving innovation, efficiency, and customer satisfaction. However, for one BFSI company, the journey toward digital excellence was fraught with challenges in its software development and maintenance processes. With a diverse portfolio of applications and a significant portion outsourced to external vendors, the company grappled with inefficiencies that threatened its operational agility and competitiveness. Within this portfolio, 15 core applications were identified as critical to the company’s operations, highlighting the urgency for transformative action.

Aspirations for the Future:

Looking ahead, the company envisioned a future state built around a mature DevSecOps environment. This encompassed several key objectives:

Near-zero Touch Pipeline: Automating product development processes for infrastructure provisioning, application builds, deployments, and configuration changes.

Matured Source-code Management: Implementing robust source-code management processes, complete with review gates, to uphold quality standards.

Defined and Repeatable Release Process: Instituting a standardized release process fortified with quality and security gates to minimize deployment failures and bug leakage.

Modernization: Embracing the latest technological advancements to drive innovation and efficiency.

Common Processes Among Vendors: Establishing standardized processes to enhance understanding and control over the software development lifecycle (SDLC) across different vendors.

Challenges Along the Way:

The path to realizing this vision was beset with challenges, including:

Lack of Source Code Management

Absence of Documentation

Lack of Common Processes

Missing CI/CD and Automated Testing

No Branching and Merging Strategy

Inconsistent Sprint Execution

These challenges collectively hindered the company’s ability to achieve optimal software development, maintenance, and deployment processes. They underscored the critical need for foundational practices such as source code management, documentation, and standardized processes to be addressed comprehensively.

Proposed Solutions:

To overcome these obstacles and pave the way for transformation, the company proposed a phased implementation approach:

Stage 1: Implement Basic DevOps: Commencing with the implementation of fundamental DevOps practices, including source code management and CI/CD processes, for a select group of applications.

Stage 2: Modernization: Progressing towards a more advanced stage involving microservices architecture, test automation, security enhancements, and comprehensive monitoring.

To Expand Your Awareness: https://devopsenabler.com/contact-us

Injecting Security into the SDLC:

Recognizing the paramount importance of security, dedicated measures were introduced to fortify the software development lifecycle. These encompassed:

Security by Design

Secure Coding Practices

Static and Dynamic Application Security Testing (SAST/DAST)

Software Component Analysis

Security Operations

Realizing the Outcomes:

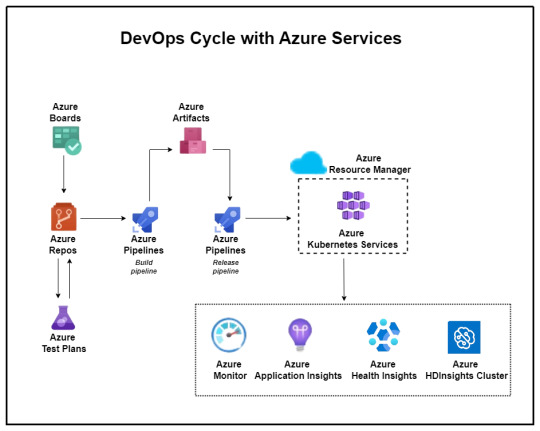

The proposed solution yielded promising outcomes aligned closely with the company’s future aspirations. Leveraging Microsoft Azure’s DevOps capabilities, the company witnessed:

Establishment of common processes and enhanced visibility across different vendors.

Implementation of Azure DevOps for organized version control, sprint planning, and streamlined workflows.

Automation of builds, deployments, and infrastructure provisioning through Azure Pipelines and Automation.

Improved code quality, security, and release management processes.

Transition to microservices architecture and comprehensive monitoring using Azure services.

The BFSI company embarked on a transformative journey towards establishing a mature DevSecOps environment. This journey, marked by challenges and triumphs, underscores the critical importance of innovation and adaptability in today’s rapidly evolving technological landscape. As the company continues to evolve and innovate, the adoption of DevSecOps principles will serve as a cornerstone in driving efficiency, security, and ultimately, the delivery of superior customer experiences in the dynamic realm of BFSI.

Contact Information:

Phone: 080-28473200 / +91 8880 38 18 58


Address: DevOps Enabler & Co, 2nd Floor, F86 Building, ITI Limited, Doorvaninagar, Bangalore 560016.

#BFSI#DevSecOps#software development#maintenance#technology stack#source code management#CI/CD#automated testing#DevOps#microservices#security#Azure DevOps#infrastructure as code#ARM templates#code quality#release management#Kubernetes#testing automation#monitoring#security incident response#project management#agile methodology#software engineering


NARWEB (5)

In today's era of rapidly advancing technology, data management and cloud systems are critically important for businesses. Narweb aims to simplify business processes by offering its users reliable and innovative solutions. In this article, we dive into the details of Narweb's services: the ease of file sharing with Nextcloud, scalability with Kubernetes, and the advantages of containerization with Docker. With its innovative software and modern infrastructure, Narweb helps businesses gain efficiency while keeping data security at the forefront.

Nextcloud

As a cloud storage solution that you host yourself, Nextcloud lets you keep your data secure. Thanks to its user-friendly interface, you can access your files from anywhere and share them easily.

By integrating it with Kubernetes, you can increase the scalability of your Nextcloud instance and achieve high availability. This is an important advantage for maximizing the user experience.

Managing data security and privacy yourself is one of Nextcloud's biggest advantages. Users gain more control by installing Nextcloud on their own servers.

In addition to all this, at Narweb we can further improve your Nextcloud experience by offering custom configuration and support services. Store your data securely, and don't get lost in the cloud world with Nextcloud!

Kubernetes

Kubernetes is a critical platform for modern application deployment. By using Kubernetes to manage your Nextcloud instance, you can both increase scalability and optimize performance. Working together with Docker, Kubernetes simplifies the management of container-based applications. By offering easily automated deployment and scaling, it helps you use system resources more efficiently.

The combination of Nextcloud, Kubernetes, and Docker lets you provide an uninterrupted experience for storage and data access. With Kubernetes's load balancing, fault tolerance, and automated update features, your data stays secure and accessible at all times. You also gain more flexibility and control over application management.

To get started, click here for more information and to learn how to integrate Kubernetes and Docker with your Nextcloud instance.

Docker

Docker is a powerful platform that allows applications to be developed and deployed in an isolated environment using container technology. When Nextcloud runs on Kubernetes, it benefits from the flexibility and portability Docker provides, which simplifies installing and managing the system.

With Docker, developers can build their applications quickly and move smoothly from test environments to production. When you run your Nextcloud application on Kubernetes, Docker containers give you the advantage of scalability.

The combination of Nextcloud, Kubernetes, and Docker offers an ideal solution for modern application development and deployment. With this range of technologies, you can optimize your workflows and enrich the user experience.


What is an open source platform used for?

Open Source Development

An open source platform is software whose source code is freely available to the public. This means anyone can view, use, modify, and share the code. Open source platforms are widely used across industries because they offer flexibility, cost savings, and community-driven innovation. Open source development has become a go-to approach for building scalable and secure digital solutions.

Common Uses of Open Source Platforms -

1. Web and App Development

Open source platforms like WordPress, Drupal, and Joomla are widely used for building websites. Developers can customize these platforms to meet specific business needs. Similarly, frameworks such as React, Angular, and Laravel facilitate the development of dynamic, feature-rich web applications.

2. Operating Systems and Servers

Linux is one of the most well-known open-source operating systems. Many companies use Linux-based servers for hosting websites and applications because they are secure, fast, and cost-effective. These platforms are maintained by communities that constantly improve performance and patch vulnerabilities.

3. Cloud Computing

Tools like OpenStack and Kubernetes are open source platforms that support cloud infrastructure. They help businesses manage their storage, networking, and computing resources efficiently while keeping operating costs low.

4. Software Testing and DevOps

Open source tools such as Selenium (for test automation) and Jenkins (for continuous integration) are staples of DevOps environments. These tools support automated testing, faster deployments, and seamless collaboration.

5. Data Management and Analytics

Platforms like Hadoop, Apache Spark, and PostgreSQL facilitate the processing and management of large datasets. These open source solutions are widely used in sectors such as healthcare, finance, and e-commerce to draw insights from data.

Why Do Businesses Prefer Open Source?

Open source platforms are cost-effective, highly customizable, and backed by active communities. They reduce dependency on a single vendor and offer transparency in security and performance. Providers such as Suma Soft, IBM, and Cyntexa specialize in delivering tailored solutions through open source development, helping businesses stay flexible, secure, and future-ready in a competitive market.

#it services#technology#saas#software#saas development company#saas technology#digital transformation


Build the Future of Tech: Enroll in the Leading DevOps Course Online Today

In a global economy where speed, security, and scalability are measures of success, DevOps has emerged as the beating heart of contemporary IT operations. Businesses are no longer recruiting only developers or only sysadmins; employers need DevOps professionals who can seamlessly bridge both worlds.

If you're ready to accelerate your career and become indispensable in the tech world, now is an ideal time to sign up for a DevOps course online. ReferMe Group's AWS DevOps Course is designed to get you there faster.

Why DevOps? Why Now?

The need for DevOps professionals is growing quickly. Industry reports regularly list job titles such as DevOps Engineer, Cloud Architect, and Site Reliability Engineer among the best-paying and most secure careers in technology today.

Why? Because DevOps helps businesses to:

Deploy faster using continuous integration and delivery (CI/CD)

Boost reliability and uptime

Automate everything, from infrastructure to testing

Scale apps with ease on cloud platforms like AWS

And individuals who develop these skills are rapidly becoming the pillars of today's tech teams.

Why Learn DevOps Online?

Learning DevOps online provides more than convenience; it provides freedom. Whether you are a full-time professional, a student, or a career changer, online learning allows you:

✅ To learn at your own pace

✅ To access world-class instructors anywhere

✅ To develop real-world, project-based skills

✅ To prepare for globally recognized certifications

✅ To join a growing network of DevOps learners and mentors

It’s professional-grade training—without the classroom limitations.

What Makes ReferMe Group’s DevOps Course Stand Out?

The AWS DevOps Course from ReferMe Group isn’t just a course—it’s a career accelerator. Here's what sets it apart:

Hands-On Labs & Projects: You’ll work on live AWS environments and build end-to-end DevOps pipelines using tools like Jenkins, Docker, Terraform, Git, Kubernetes, and more.

Training from Experts: Learn from experienced industry experts who have used DevOps at scale.

Resume-Reinforcing Certifications: Train to clear AWS and DevOps certification exams confidently.

Career Guidance: From resume creation to interview preparation, we prepare you for jobs, not just course completion.

Lifetime Access: Come back to the content anytime with future upgrades covered.

Who Should Take This Course?

This DevOps course is ideal for:

Software Developers looking to move into deployment and automation

IT Professionals who want to upskill in cloud infrastructure

System Admins transitioning to new-age DevOps careers

Career changers entering the high-demand cloud and DevOps space

Students and recent graduates seeking a future-proof skill set

No experience in DevOps? No worries. We take you from the basics to advanced tools.

Final Thoughts: Your DevOps Journey Starts Here

As businesses continue to move to the cloud and automate their pipelines, DevOps engineers are no longer a nicety—they're a necessity. Investing in a high-quality DevOps course online provides you with the skills, certification, and confidence to compete and succeed in today's tech industry.

Start building your future today.

Join ReferMe Group's AWS DevOps Course today and become the architect of tomorrow's technology.


A Comprehensive Guide to Deploy Azure Kubernetes Service with Azure Pipelines

Azure Kubernetes Service (AKS) is a powerful orchestration platform for containerized applications in the continuously evolving world of cloud-native technologies. Pair it with Azure Pipelines for consistent CI/CD workflows that accelerate the DevOps process. This guide walks through deploying to Azure Kubernetes Service with Azure Pipelines and offers tips to help engineers build container deployments that work. It also discusses how DevOps consulting services can help you automate this process.

Understanding the Foundations

Kubernetes has become the preferred tool for deploying and running containerized apps in today's fast-moving software development environment. AKS builds on it to scale, monitor, and orchestrate containerized workloads. Before going further, let’s look at the fundamentals.

Azure Kubernetes Service: A managed Kubernetes platform that simplifies container orchestration. It takes over much of the hassle of managing a Kubernetes cluster so that developers can focus on building applications instead of infrastructure. By leveraging AKS, organizations can:

Deploy and scale containerized applications on demand.

Implement robust infrastructure management

Reduce operational overhead

Ensure high availability and fault tolerance.

Azure Pipelines: The CI/CD Backbone

Azure Pipelines is a tool for automated code building, testing, and deployment. Combined with Azure Kubernetes Service, it helps teams build deployment pipelines in line with the modern DevOps mindset. Azure Pipelines integrates with repositories (GitHub, Azure Repos, etc.) and automates the application build and deployment.

Spiral Mantra DevOps Consulting Services

So, if you’re a beginner in DevOps or want to scale your organization’s capabilities, DevOps consulting services by Spiral Mantra can be a game changer. Its skilled professionals can help businesses implement CI/CD pipelines and provide guidance on containerization and cloud-native development.

Now let’s move on to creating a deployment pipeline for Azure Kubernetes Service.

Prerequisites

Before starting, make sure you meet the following prerequisites:

Azure Subscription: To run an AKS cluster, you need an Azure subscription. Create one if you don’t already have it.

Azure CLI: The Azure CLI lets you administer resources such as AKS clusters from the command line.

A Professional Team: You will need a team with the technical knowledge to set up the pipeline. Hire DevOps developers from us if you don’t have one yet.

Kubernetes Cluster: Deploy an AKS cluster with the Azure Portal or an ARM template. This is the cluster your pipeline will deploy to.

Docker: Since you’re deploying containers, you need Docker installed locally to build and push container images.

Step-by-Step Deployment Process

Step 1: Begin with Creating an AKS Cluster

Start by setting up an AKS cluster with the Azure CLI or the Azure Portal. Once the cluster is ready, containerize your application: create a Dockerfile that specifies your application's runtime environment. This step ensures that the same code runs consistently across different environments.
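As a rough illustration of the CLI route in Step 1, the sketch below assembles the Azure CLI commands that create a cluster and fetch its credentials. The resource group and cluster names (`demo-rg`, `demo-aks`) and the node count are placeholder assumptions, not values from this guide, and the commands are only built and printed here, not executed.

```python
import shlex


def aks_create_command(resource_group: str, cluster_name: str, node_count: int = 2) -> list[str]:
    """Build (but do not run) the `az aks create` command for a small cluster."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--node-count", str(node_count),
        "--generate-ssh-keys",
    ]


def aks_get_credentials_command(resource_group: str, cluster_name: str) -> list[str]:
    """Build the command that merges the cluster's kubeconfig so kubectl can reach it."""
    return [
        "az", "aks", "get-credentials",
        "--resource-group", resource_group,
        "--name", cluster_name,
    ]


if __name__ == "__main__":
    # Hypothetical names; replace them with your own resource group and cluster.
    for cmd in (
        aks_create_command("demo-rg", "demo-aks"),
        aks_get_credentials_command("demo-rg", "demo-aks"),
    ):
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to review them before running them in a shell where you are already signed in with the Azure CLI.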

Step 2: Setting Up Your Pipelines

You can set up a pipeline either in a new project or in an existing one; both paths are described below.

Create a New Project

Begin by signing in to your Azure DevOps account; on the screen that appears, select the drop-down icon.

Now, select the Create New Project option or continue with an existing project.

Finally, add the repositories (from either GitHub or Azure Repos) that contain your application code.

For an Existing Project

From your existing project, go to Pipelines and then select Create Pipeline.

On the next screen, select the repository that contains the application code.

Choose either the YAML pipeline or the starter pipeline. (Note: The YAML pipeline is more flexible and is recommended for advanced workflows.)

Then define the pipeline configuration by editing your YAML file in Azure DevOps.

Step 3: Set Up Your Automatic Continuous Deployment (CD)

In the next step, automate the deployment process to speed up your CI/CD workflows. The easiest and most common approach is to create a YAML file, for example deployment.yaml, that defines the main Kubernetes resources, including deployments, pods, and services.

Once the deployment YAML is in place, the pipeline triggers the Kubernetes deployment automatically whenever code is pushed.
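To make the resources named in Step 3 more concrete, here is a minimal sketch that builds a Deployment and a Service as plain Python dictionaries and prints them as JSON, which kubectl accepts as well as YAML. The application name `web`, the image `example.azurecr.io/web:1.0.0`, the port, and the `LoadBalancer` service type are all illustrative assumptions, not values from this guide.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int = 2, port: int = 80) -> dict:
    """Describe a Deployment whose pods run a single container."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, port: int = 80) -> dict:
    """Describe a Service that routes traffic to the Deployment's pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical application name and image reference.
    for manifest in (
        deployment_manifest("web", "example.azurecr.io/web:1.0.0"),
        service_manifest("web"),
    ):
        print(json.dumps(manifest, indent=2))
```

Pods are not declared separately in this sketch: the Deployment's pod template describes them, and Kubernetes creates the pods from it.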

Step 4: Automate the CI/CD Workflow

The final step is to make sure the pipeline runs smoothly every time new code is pushed. With the right CI/CD integration, the workflow continuously builds, tests, and deploys, ensuring that applications stay up to date in every AKS environment.
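One common way to keep each environment current, though not necessarily the approach the author has in mind, is to tag every image with the identifier of the pipeline run that built it and write that reference into the manifest before deploying. The sketch below assumes a hypothetical registry (`example.azurecr.io`), container name (`web`), and build ID (`1234`).

```python
import copy


def image_reference(registry: str, repository: str, build_id: str) -> str:
    """Compose an image reference whose tag identifies a single pipeline run."""
    return f"{registry}/{repository}:{build_id}"


def with_image(manifest: dict, container_name: str, image: str) -> dict:
    """Return a copy of a Deployment manifest with one container's image replaced."""
    updated = copy.deepcopy(manifest)  # leave the caller's manifest untouched
    for container in updated["spec"]["template"]["spec"]["containers"]:
        if container["name"] == container_name:
            container["image"] = image
    return updated


if __name__ == "__main__":
    # Hypothetical base manifest, trimmed to the fields this sketch touches.
    base = {
        "kind": "Deployment",
        "spec": {"template": {"spec": {"containers": [
            {"name": "web", "image": "example.azurecr.io/web:latest"}
        ]}}},
    }
    image = image_reference("example.azurecr.io", "web", "1234")
    deployed = with_image(base, "web", image)
    print(deployed["spec"]["template"]["spec"]["containers"][0]["image"])
```

Because the tag changes with every run, applying the updated manifest gives Kubernetes a new pod template and therefore triggers a rollout, which a fixed tag such as `latest` would not do on its own.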

Best Practices for AKS and Azure Pipelines Integration

1. Infrastructure as Code (IaC)

- Utilize Terraform or Azure Resource Manager templates

- Version control infrastructure configurations

- Ensure consistent and reproducible deployments

2. Security Considerations

- Implement container scanning

- Use private container registries

- Regular security patch management

- Network policy configuration

3. Performance Optimization

- Implement horizontal pod autoscaling

- Configure resource quotas

- Use node pool strategies

- Optimize container image sizes
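For the horizontal pod autoscaling item above, the core scaling rule documented for Kubernetes is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The sketch below applies that rule and clamps the result to a replica range; the default bounds of 1 and 10 are assumptions for illustration, and the real controller's tolerance band and stabilization window are deliberately left out.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the basic HPA scaling rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 pods averaging 30% CPU against a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

Working through the rule by hand like this can help when choosing a target utilization and replica bounds for a workload.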

Common Challenges and Solutions

Network Complexity

Utilize Azure CNI for advanced networking

Implement network policies

Configure service mesh for complex microservices

Persistent Storage

Use Azure Disk or Files

Configure persistent volume claims

Implement storage classes for dynamic provisioning
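As a sketch of the persistent storage items above, the snippet below builds a PersistentVolumeClaim that requests a dynamically provisioned volume through a storage class. The claim name, the size, and the class name `managed-csi` are assumptions for illustration; check which storage classes your cluster actually offers (for example with `kubectl get storageclass`) before using one.

```python
import json


def pvc_manifest(name: str, size_gi: int, storage_class: str = "managed-csi") -> dict:
    """Describe a claim for a volume that a single node mounts read-write."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Naming a storage class asks the cluster to provision the volume on demand.
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": f"{size_gi}Gi"}},
        },
    }


if __name__ == "__main__":
    # Hypothetical claim name and size.
    print(json.dumps(pvc_manifest("app-data", 5), indent=2))
```

A pod then mounts the volume by referring to the claim's name, so the application never needs to know which disk backs it.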

Conclusion

Deploying to Azure Kubernetes Service through well-designed pipelines provides a clear, repeatable approach to application delivery. By embracing these practices, DevOps consulting companies like Spiral Mantra offer transformative solutions that foster agile and scalable approaches. Our expert DevOps consulting services redefine technological infrastructure by offering comprehensive cloud strategies and Kubernetes containerization with advanced CI/CD integration.

Let’s connect and talk about your cloud migration needs


Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

They build secure, transparent, and unchangeable systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotics process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity


Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware


Guide on How to Build Microservices

Microservices are quickly becoming the go-to architectural and organizational strategy for application development. The days of monolithic approaches are behind us as software becomes more complex. Microservices provide greater flexibility and software resilience while creating a push for innovation.

At their core, microservices are smaller, independent processes that communicate with one another through a well-designed interface and a lightweight API. In this guide, we'll provide a quick breakdown of how to build simple microservices.

Create Your Services

To perform Kubernetes microservices testing, you need to create services. The goal is to have independent containerized services and establish a connection that allows them to communicate.

Keep things simple by developing two web applications. Many developers experimenting with microservices use the iconic "Hello World" test to understand how this architecture works.

Start by building your file structure. You'll need a directory, subdirectories and files to set up the blueprint for your microservices application. A true microservices application involves a great deal of code, but to keep things simple for experimentation, you can use prewritten code and directory structures.

The first service you should create is your "hello-world-service." This Flask-based application has two endpoints. The first is the welcome page. It includes a button to test the connection to your second service. The second endpoint communicates with your second service.

The second service is the REST-based "welcome service." It delivers a message, allowing you to test that your two services communicate effectively.

The concept is simple: You have one service that you can interact with directly and a second service with the sole function of delivering a message. Using Kubernetes microservices testing, you can force the first service to send a GET request to your REST-based second service.
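As a rough illustration of the two services described above, here is a minimal sketch. It swaps Flask for Python's built-in http.server so it runs without extra dependencies, and it uses a plain link where the guide describes a button. The handler names, the "/test-connection" path and the JSON layout are assumptions made for this example, not part of the original project.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Ignore any system proxy settings so loopback requests stay local.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class QuietHandler(BaseHTTPRequestHandler):
    """Shared helpers: silence request logging and send a complete response."""

    def log_message(self, format, *args):
        pass

    def reply(self, status, content_type, body):
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


class WelcomeHandler(QuietHandler):
    """Second service: its sole function is to deliver a message."""

    def do_GET(self):
        body = json.dumps({"message": "Hello World"}).encode("utf-8")
        self.reply(200, "application/json", body)


def make_hello_handler(welcome_url):
    """First service: a welcome page plus an endpoint that calls the second service."""

    class HelloHandler(QuietHandler):
        def do_GET(self):
            if self.path == "/":
                page = '<a href="/test-connection">test connection</a>'
                self.reply(200, "text/html", page.encode("utf-8"))
            elif self.path == "/test-connection":
                # GET request from the first service to the second one.
                with OPENER.open(welcome_url, timeout=5) as resp:
                    message = json.load(resp)["message"]
                self.reply(200, "text/plain", message.encode("utf-8"))
            else:
                self.reply(404, "text/plain", b"not found")

    return HelloHandler


def start(handler):
    """Run a server on a free local port in a background thread."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), handler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def demo():
    welcome = start(WelcomeHandler)
    hello = start(make_hello_handler(f"http://127.0.0.1:{welcome.server_port}/"))
    try:
        url = f"http://127.0.0.1:{hello.server_port}/test-connection"
        with OPENER.open(url, timeout=5) as resp:
            return resp.read().decode("utf-8")
    finally:
        for server in (hello, welcome):
            server.shutdown()
            server.server_close()


if __name__ == "__main__":
    print(demo())
```

Both services run in one process here purely for convenience. In a real setup each would live in its own container, and the first service would reach the second through a service name rather than a loopback address.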

Containerizing

Before testing, you must make your microservices independent from the hosting environment. To do that, you encapsulate them in Docker containers. You can then set the build path for each service, put them in a running state and start testing.

The result should be a simple web-based application. When you click the "test connection" button, your first service will connect to the second, delivering your "Hello World" message.

Explore the future of scalability – click to harness the power of our Kubernetes platform!

#kubernetes platform#kubernetes preview environments#kubernetes microservices testing#microservice local development environments


Azure Cloud Engineer

Job title: Azure Cloud Engineer Company: Ashdown Group Job description: for this role you must be an accomplished cloud engineer with Azure Administrator Associate accreditation. The purpose of the role… Infrastructure services, including Azure Active Directory and Azure AD connect, and will be fully conversant with Azure Kubernetes… Expected salary: £80000 per year Location: London Job date:…

#Android#artificial intelligence#Azure#Blockchain#Broadcast#Cybersecurity#data-engineering#embedded-systems#generative AI#govtech#healthtech#it-consulting#Kubernetes Administrator#legaltech#metaverse#mobile-development#Networking#no-code#power-platform#product-management#prompt-engineering#Python#qa-testing#regtech#scrum#solutions-architecture#system-administration#uk-jobs#vr-ar


Cloud-Native Development in the USA: A Comprehensive Guide

Introduction

Cloud-native development is transforming how businesses in the USA build, deploy, and scale applications. By leveraging cloud infrastructure, microservices, containers, and DevOps, organizations can enhance agility, improve scalability, and drive innovation.

As cloud computing adoption grows, cloud-native development has become a crucial strategy for enterprises looking to optimize performance and reduce infrastructure costs. In this guide, we’ll explore the fundamentals, benefits, key technologies, best practices, top service providers, industry impact, and future trends of cloud-native development in the USA.

What is Cloud-Native Development?

Cloud-native development refers to designing, building, and deploying applications optimized for cloud environments. Unlike traditional monolithic applications, cloud-native solutions utilize a microservices architecture, containerization, and continuous integration/continuous deployment (CI/CD) pipelines for faster and more efficient software delivery.

Key Benefits of Cloud-Native Development

1. Scalability

Cloud-native applications can dynamically scale based on demand, ensuring optimal performance without unnecessary resource consumption.

2. Agility & Faster Deployment

By leveraging DevOps and CI/CD pipelines, cloud-native development accelerates application releases, reducing time-to-market.

3. Cost Efficiency

Organizations only pay for the cloud resources they use, eliminating the need for expensive on-premise infrastructure.

4. Resilience & High Availability

Cloud-native applications are designed for fault tolerance, ensuring minimal downtime and automatic recovery.

5. Improved Security

Built-in cloud security features, automated compliance checks, and container isolation enhance application security.
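To make the scalability benefit above more concrete, here is a small sketch of one common scaling rule. Kubernetes' Horizontal Pod Autoscaler documents its core calculation as desired replicas = ceil(current replicas * current metric / target metric). The function name and the minimum and maximum bounds below are assumptions chosen for illustration, and the real autoscaler adds tolerances and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Scale the replica count in proportion to observed vs. target load."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale out.
    print(desired_replicas(4, 90, 60))
    # 4 replicas at 30% average CPU with a 60% target: scale in.
    print(desired_replicas(4, 30, 60))
```

The same proportional rule covers both directions: load above the target adds replicas, and load below it removes them, which is how unnecessary resource consumption is avoided.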

Key Technologies in Cloud-Native Development

1. Microservices Architecture

Microservices break applications into smaller, independent services that communicate via APIs, improving maintainability and scalability.

2. Containers & Kubernetes

Technologies like Docker and Kubernetes allow for efficient container orchestration, making application deployment seamless across cloud environments.

3. Serverless Computing

Platforms like AWS Lambda, Azure Functions, and Google Cloud Functions eliminate the need for managing infrastructure by running code in response to events.

4. DevOps & CI/CD

Automated build, test, and deployment processes streamline software development, ensuring rapid and reliable releases.

5. API-First Development

APIs enable seamless integration between services, facilitating interoperability across cloud environments.
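As a rough sketch of the event-driven model behind the serverless platforms listed above, the function below follows the handler(event, context) shape used by AWS Lambda's Python runtime. The "name" field in the event and the layout of the returned dictionary are assumptions for illustration; each platform and trigger defines its own event format.

```python
import json


def handler(event, context):
    """Invoked by the platform once per event; no server is managed by the author."""
    name = event.get("name", "World")  # "name" is a hypothetical event field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello {name}"}),
    }


if __name__ == "__main__":
    # Local call with a hand-written event; the context object is not used here.
    print(handler({"name": "Ada"}, None))
```

Because the function holds no state between calls, the platform is free to run as many copies as incoming events require.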

Best Practices for Cloud-Native Development

1. Adopt a DevOps Culture

Encourage collaboration between development and operations teams to ensure efficient workflows.

2. Implement Infrastructure as Code (IaC)

Tools like Terraform and AWS CloudFormation help automate infrastructure provisioning and management.

3. Use Observability & Monitoring

Employ logging, monitoring, and tracing solutions like Prometheus, Grafana, and ELK Stack to gain insights into application performance.

4. Optimize for Security

Embed security best practices in the development lifecycle, using tools like Snyk, Aqua Security, and Prisma Cloud.

5. Focus on Automation

Automate testing, deployments, and scaling to improve efficiency and reduce human error.

Top Cloud-Native Development Service Providers in the USA

1. AWS Cloud-Native Services

Amazon Web Services offers a comprehensive suite of cloud-native tools, including AWS Lambda, ECS, EKS, and API Gateway.

2. Microsoft Azure

Azure’s cloud-native services include Azure Kubernetes Service (AKS), Azure Functions, and DevOps tools.

3. Google Cloud Platform (GCP)

GCP provides Kubernetes Engine (GKE), Cloud Run, and Anthos for cloud-native development.

4. IBM Cloud & Red Hat OpenShift

IBM Cloud and OpenShift focus on hybrid cloud-native solutions for enterprises.

5. Accenture Cloud-First

Accenture helps businesses adopt cloud-native strategies with AI-driven automation.

6. ThoughtWorks

ThoughtWorks specializes in agile cloud-native transformation and DevOps consulting.

Industry Impact of Cloud-Native Development in the USA

1. Financial Services

Banks and fintech companies use cloud-native applications to enhance security, compliance, and real-time data processing.

2. Healthcare

Cloud-native solutions improve patient data accessibility, enable telemedicine, and support AI-driven diagnostics.

3. E-commerce & Retail

Retailers leverage cloud-native technologies to optimize supply chain management and enhance customer experiences.

4. Media & Entertainment

Streaming services utilize cloud-native development for scalable content delivery and personalization.

Future Trends in Cloud-Native Development

1. Multi-Cloud & Hybrid Cloud Adoption

Businesses will increasingly adopt multi-cloud and hybrid cloud strategies for flexibility and risk mitigation.

2. AI & Machine Learning Integration

AI-driven automation will enhance DevOps workflows and predictive analytics in cloud-native applications.

3. Edge Computing

Processing data closer to the source will improve performance and reduce latency for cloud-native applications.

4. Enhanced Security Measures

Zero-trust security models and AI-driven threat detection will become integral to cloud-native architectures.

Conclusion

Cloud-native development is reshaping how businesses in the USA innovate, scale, and optimize operations. By leveraging microservices, containers, DevOps, and automation, organizations can achieve agility, cost-efficiency, and resilience. As the cloud-native ecosystem continues to evolve, staying ahead of trends and adopting best practices will be essential for businesses aiming to thrive in the digital era.


What are the latest trends in the IT job market?

Introduction

The IT job market is changing quickly, driven by new technology, shifting employer needs, and the growth of remote work.

For jobseekers, understanding these trends is crucial to positioning themselves as strong candidates in a highly competitive landscape.

This blog looks at the current IT job market. It offers insights into job trends and opportunities. You will also find practical strategies to improve your chances of getting your desired role.

Whether you’re in the midst of a job search or considering a career change, this guide will help you navigate the complexities of the job hunting process and secure employment in today’s market.

Section 1: Understanding the Current IT Job Market

Recent Trends in the IT Job Market

The IT sector is booming, with consistent demand for skilled professionals in various domains such as cybersecurity, cloud computing, and data science.

The COVID-19 pandemic accelerated the shift to remote work, further expanding the demand for IT roles that support this transformation.

Employers are increasingly looking for candidates with expertise in AI, machine learning, and DevOps as these technologies drive business innovation.

According to industry reports, job opportunities in IT will continue to grow, with the most substantial demand focused on software development, data analysis, and cloud architecture.

It’s essential for jobseekers to stay updated on these trends to remain competitive and tailor their skills to current market needs.

Recruitment efforts have also become more digitized, with many companies adopting virtual hiring processes and online job fairs.

This creates both challenges and opportunities for job seekers to showcase their talents and secure interviews through online platforms.

Remote Work and IT

The surge in remote work opportunities has transformed the job market. Many IT companies now offer fully remote or hybrid roles, which appeal to professionals seeking greater flexibility.

While remote work has increased access to job opportunities, it has also intensified competition, as companies can now hire from a global talent pool.

Section 2: Choosing the Right Keywords for Your IT Resume

Keyword Optimization: Why It Matters

With more employers using Applicant Tracking Systems (ATS) to screen resumes, it’s essential for jobseekers to optimize their resumes with relevant keywords.

These systems scan resumes for specific words related to the job description and only advance the most relevant applications.

To increase the chances of your resume making it through the initial screening, jobseekers must identify and incorporate the right keywords into their resumes.

When searching for jobs in IT, it’s important to tailor your resume for specific job titles and responsibilities. Keywords like “software engineer,” “cloud computing,” “data security,” and “DevOps” can make a huge difference.

By strategically using keywords that reflect your skills, experience, and the job requirements, you enhance your resume’s visibility to hiring managers and recruitment software.

Step-by-Step Keyword Selection Process

Analyze Job Descriptions: Look at several job postings for roles you’re interested in and identify recurring terms.

Incorporate Specific Terms: Include technical terms related to your field (e.g., Python, Kubernetes, cloud infrastructure).

Use Action Verbs: Keywords like “developed,” “designed,” or “implemented” help demonstrate your experience in a tangible way.

Test Your Resume: Use online tools to see how well your resume aligns with specific job postings and make adjustments as necessary.

Section 3: Customizing Your Resume for Each Job Application

Why Customization is Key

One size does not fit all when it comes to resumes, especially in the IT industry. Jobseekers who customize their resumes for each job application are more likely to catch the attention of recruiters. Tailoring your resume allows you to emphasize the specific skills and experiences that align with the job description, making you a stronger candidate. Employers want to see that you’ve taken the time to understand their needs and that your expertise matches what they are looking for.

Key Areas to Customize:

Summary Section: Write a targeted summary that highlights your qualifications and goals in relation to the specific job you’re applying for.

Skills Section: Highlight the most relevant skills for the position, paying close attention to the technical requirements listed in the job posting.

Experience Section: Adjust your work experience descriptions to emphasize the accomplishments and projects that are most relevant to the job.

Education & Certifications: If certain qualifications or certifications are required, make sure they are easy to spot on your resume.

Section 4: Reviewing and Testing Your Optimized Resume

Proofreading for Perfection

Before submitting your resume, it’s critical to review it for accuracy, clarity, and relevance. Spelling mistakes, grammatical errors, or outdated information can reflect poorly on your professionalism.

Additionally, make sure your resume is easy to read and visually organized, with clear headings and bullet points. If possible, ask a peer or mentor in the IT field to review your resume for content accuracy and feedback.

Testing Your Resume with ATS Tools

After optimizing your resume for keywords, test it using online tools that simulate an ATS. This allows you to see how well your resume aligns with specific job descriptions and identify areas for improvement.

Many tools will give you a match score, showing you how likely your resume is to pass an ATS scan. From here, you can fine-tune your resume to increase its chances of making it to the recruiter’s desk.
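To give a feel for what a match score measures, here is a toy sketch that checks which keywords from a job posting appear in a resume. Real ATS products are proprietary and considerably more sophisticated, so the function name, the scoring rule and the sample text are all assumptions made for illustration.

```python
import re


def match_score(resume_text, keywords):
    """Return (percentage of keywords found, list of missing keywords)."""
    text = resume_text.lower()
    found = []
    for keyword in keywords:
        # Match whole words or phrases only, ignoring case.
        pattern = r"(?<!\w)" + re.escape(keyword.lower()) + r"(?!\w)"
        if re.search(pattern, text):
            found.append(keyword)
    missing = [keyword for keyword in keywords if keyword not in found]
    score = round(100 * len(found) / len(keywords)) if keywords else 0
    return score, missing


if __name__ == "__main__":
    resume = "Developed Python services and deployed them on Kubernetes."
    posting_keywords = ["Python", "Kubernetes", "cloud infrastructure", "DevOps"]
    score, missing = match_score(resume, posting_keywords)
    print(score, missing)
```

The list of missing keywords is the useful part: it shows which terms from the posting you might add, provided they honestly reflect your experience.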

Section 5: Trends Shaping the Future of IT Recruitment

Embracing Digital Recruitment

Recruiting has undergone a significant shift towards digital platforms, with job fairs, interviews, and onboarding now frequently taking place online.

This transition means that jobseekers must be comfortable navigating virtual job fairs, remote interviews, and online assessments.

As IT jobs increasingly allow remote work, companies are also using technology-driven recruitment tools like AI for screening candidates.

Jobseekers should also leverage platforms like LinkedIn to increase visibility in the recruitment space. Keeping your LinkedIn profile updated, networking with industry professionals, and engaging in online discussions can all boost your chances of being noticed by recruiters.

Furthermore, participating in virtual job fairs or IT recruitment events provides direct access to recruiters and HR professionals, enhancing your job hunt.

FAQs

1. How important are keywords in IT resumes?

Keywords are essential in IT resumes because they help your resume pass through Applicant Tracking Systems (ATS), which scan resumes for specific terms related to the job. Without the right keywords, your resume may not reach a human recruiter.

2. How often should I update my resume?

It’s a good idea to update your resume regularly, especially when you gain new skills or experience. Also, customize it for every job application to ensure it aligns with the job’s specific requirements.

3. What are the most in-demand IT jobs?

Some of the most in-demand IT jobs include software developers, cloud engineers, cybersecurity analysts, data scientists, and DevOps engineers.

4. How can I stand out in the current IT job market?

To stand out, jobseekers should focus on tailoring their resumes, building strong online profiles, networking, and keeping up-to-date with industry trends. Participation in online forums, attending webinars, and earning industry-relevant certifications can also enhance visibility.

Conclusion

The IT job market continues to offer exciting opportunities for jobseekers, driven by technological innovations and changing work patterns.

By staying informed about current trends, customizing your resume, using keywords effectively, and testing your optimized resume, you can improve your job search success.

Whether you are new to the IT field or an experienced professional, leveraging these strategies will help you navigate the competitive landscape and secure a job that aligns with your career goals.


The Best Software Training Institute in Chennai for IT Courses

Looking for the best software training institute in Chennai? Trendnologies offers top-rated IT courses with industry-oriented training and placement assistance to help you grow in your career.

In today’s digital world, technology continues to advance rapidly, creating a demand for skilled professionals who are equipped to tackle real-world challenges. Whether you’re just starting your career or looking to advance your skill set, a reputable training institute can make all the difference. Among the top choices in Chennai, Trendnologies stands out as one of the best software training institutes offering specialized IT courses. With a solid reputation for excellence, Trendnologies provides students with hands-on experience and industry-relevant skills needed to excel in the tech world.

Why Choose Trendnologies for Your Software Training in Chennai?

Industry-Relevant Courses

Trendnologies offers a wide range of software training programs designed to keep pace with the latest trends in the IT industry. The courses are designed by industry experts who ensure that the syllabus is up-to-date and aligned with the demands of employers.

Software Development: Java, Python, C++, PHP, and Full Stack Development.

Web Development: HTML, CSS, JavaScript, ReactJS, Angular.

Data Science & Machine Learning: Data Analytics, Python for Data Science, Artificial Intelligence, Big Data.

Mobile App Development: Android, iOS, Flutter, and React Native.

Cloud Computing & DevOps: AWS, Azure, Google Cloud, Kubernetes, Docker.

Cybersecurity: Ethical Hacking, Network Security, Penetration Testing.

Experienced Trainers

At Trendnologies, the trainers are professionals with years of experience in the IT industry. They bring real-world insights into the classroom, ensuring that students not only learn theoretical concepts but also acquire practical skills. The trainers use hands-on projects, case studies, and industry-specific examples to make the learning process more interactive and effective.

Whether you are learning programming languages like Java and Python or diving into emerging fields like data science and machine learning, the experienced trainers at Trendnologies provide valuable guidance that will prepare you for real-world challenges.

Hands-on Training Approach

One of the most important aspects of IT training is hands-on experience. At Trendnologies, students are given access to a variety of tools and technologies that they will use in their careers. The institute emphasizes practical learning, which is why the courses include live projects, coding challenges, and industry simulations. By working on real projects, students gain the confidence to handle complex tasks in their professional careers.

The hands-on training approach helps students bridge the gap between theoretical knowledge and practical application, making them job-ready from day one.

Flexible Learning Options

Understanding that each student’s learning style and schedule are different, Trendnologies offers a variety of learning options. You can choose between:

Classroom Training: Traditional in-person training, where students can interact with instructors and fellow learners.

Online Training: For those who prefer the flexibility of learning from home, Trendnologies offers live online training with the same level of interactivity as classroom sessions.

Weekend Training: For professionals who want to upgrade their skills while working, weekend classes provide a flexible learning option without interrupting work schedules.

Personalized Attention

Trendnologies is committed to providing personalized attention to each student. Small batch sizes ensure that every learner receives one-on-one guidance and support from instructors. Whether you are struggling with a specific topic or need help with a project, the trainers are always available to offer assistance.

This approach ensures that all students, regardless of their learning pace or skill level, receive the necessary attention to succeed in their courses.

Courses Offered at Trendnologies

Trendnologies offers a wide range of software training programs catering to various fields of IT. Whether you are interested in software development, data science, or cloud computing, there’s a course that fits your career goals.

Software Development Courses

Java Training: Java is one of the most popular programming languages, widely used in software development, web development, and mobile app development. Trendnologies offers in-depth training in Java programming, covering basic concepts to advanced topics such as frameworks, multi-threading, and database connectivity.

Python Training: Python is a versatile and beginner-friendly programming language. Trendnologies offers hands-on training in Python, focusing on its applications in web development, data analysis, and automation.

Full Stack Development: Full stack developers are in high demand, as they are skilled in both front-end and back-end development. Trendnologies offers a comprehensive Full Stack Development course that covers front-end technologies like HTML, CSS, and JavaScript, as well as back-end technologies like Node.js, MongoDB, and Express.js.

Data Science & Machine Learning

Data Science with Python: This course is perfect for those looking to enter the field of data science. It covers topics such as data preprocessing, statistical analysis, and data visualization using Python libraries like Pandas, NumPy, and Matplotlib.

Machine Learning: Trendnologies offers specialized training in machine learning, covering algorithms, data modeling, and real-world applications. Students will learn how to build machine learning models using tools like Scikit-learn and TensorFlow.

Cloud Computing & DevOps

AWS Training: As cloud computing grows in popularity, demand is rising for skills in AWS (Amazon Web Services), a leading cloud platform. Trendnologies offers training in AWS services, including EC2, S3, Lambda, and more, preparing students for AWS certifications.

DevOps Training: This course focuses on integrating software development and IT operations to streamline the software development lifecycle. Students will learn about CI/CD pipelines, automation tools, containerization with Docker, and container orchestration with Kubernetes.

Mobile App Development

Android Development: Trendnologies offers Android app development courses that teach students how to build mobile applications for Android using Java and Kotlin.

iOS Development: For those interested in building apps for Apple devices, Trendnologies provides iOS development training using Swift and Objective-C.

Student Testimonials and Success Stories

Over the years, Trendnologies has helped thousands of students achieve their career goals. Here’s what some of them have to say:

“I enrolled in the Full Stack Development course at Trendnologies, and the learning experience has been amazing! The trainers were knowledgeable, and the hands-on projects helped me understand the concepts in depth. I secured a job as a Full Stack Developer within two months of completing the course.” – Rajesh K., Full Stack Developer

“The Data Science training at Trendnologies was top-notch. The trainers were very experienced and guided me through every step of the learning process. I now work as a Data Scientist at a leading tech company.” – Priya R., Data Scientist

Conclusion

When it comes to quality software training in Chennai, Trendnologies stands out as the best choice. The institute’s industry-relevant courses, experienced trainers, flexible learning options, and placement assistance ensure that students are fully prepared for the job market. Whether you’re a beginner or an experienced professional looking to upskill, Trendnologies provides the perfect platform to help you achieve your career goals in the IT industry.

Enroll at Trendnologies today and take the first step toward a successful career in technology.
