#architecture principles

Video

youtube

Liskov Substitution Principle Tutorial with Java Coding Example for Begi...

Hello friends, a new #video on #liskovsubstitutionprinciple #solidprinciples with a #Java #coding #example has been published on the #codeonedigest #youtube channel. Learn #lsp #principle #programming #coding with codeonedigest.

@java #java #awscloud @awscloud @AWSCloudIndia #Cloud #CloudComputing @YouTube #youtube #liskovsubstitutionprinciple #liskovsubstitutionprinciplesolid #lsp #lspprinciple #liskovsubstitutionprinciple #liskov #liskovprinciple #solidprinciples #solidprinciplesinterviewquestions #solidprinciplesjavainterviewquestions #solidprinciplesreact #solidprinciplesinandroid #solidprinciplestutorial #solidprinciplesexplained #solidprinciplesjava #singleresponsibilityprinciple #openclosedprinciple #liskovsubstitutionprinciple #interfacesegregationprinciple #dependencyinversionprinciple #objectorientedprogramming #objectorienteddesignandmodelling #objectorienteddesign #objectorienteddesignsoftwareengineering #objectorienteddesigninterviewquestions #objectorienteddesignandanalysis #objectorienteddesigninjava #objectorienteddesignmodel #objectorienteddesignapproach #objectorienteddesignparadigm #objectorienteddesignquestions

#youtube#solid#solid principle#liskov substitution#liskov substitution principle#object oriented programming#oops#java#design principles#design patterns#software design#architecture principles

2 notes

Text

I was just reminded that the art collective Forensic Architecture exists and once again I’m disgusted.

For those of you who don’t know, it’s a collective of various artists who play at forensic science, conduct “forensic investigations”, and then make art exhibits of their “results”. Their reports and exhibits will make statements such as “the evidence shows that X is linked to Y” but the statistical output that they share will show something like a 5% confidence in the match.

That's right. They make art exhibits of their "investigations".

You want to talk about fandomizing tragedy? Making “forensic investigations” into art exhibits is the bougiest version I can think of, and it's only to serve an echelon of people who enjoy that kind of stuff. If any of the people in this art collective had a background in forensic science they would have taken ethics courses that would tell them how horrid putting on an art exhibit like this actually is. You don't honor the victims by putting on an art show for the rich and powerful to gasp and faint over so that you can fundraise for your next show.

Their founder has even stated that they’re not in forensics but “counter-forensics” and “counter-investigation”. They eschew the practices and norms of the scientific community in favor of telling their own version of investigative “truth”. They’ve even gone so far as to quote post-truth philosophies in their work, including the controversial Nietzsche quote about there being no facts, only interpretations. Both are dangerous philosophies to hold in forensic science, as they present the evidence as subjective rather than objective. This is why they're an art collective and not the forensic science research group they purport to be: they're rejecting objective scientific outcomes for subjective interpretation.

You can go to the group's website and they have profiles on all of their team members. Almost every person is labeled as a "researcher", but once you click on their profile it quickly tells you that they're an artist, designer, activist, or some combination of the three. No mention of any scientific background whatsoever. That indicates their ability to actually conduct forensic science research is not great, as they don't have any training or education in the methods involved. In fact, their entire program and personnel are out of an arts college with no science programs or faculty outside of anthropology.

That's weird, right?

A group that supposedly made a new discipline of forensic science, according to them, has no members with actual backgrounds in forensic science or scientific disciplines relating to it?

None of the team member profiles detail any scientific background that would be relevant to forensics outside of a few people with engineering and computer science degrees. Neither of the aforementioned disciplines typically trains you in forensic practices anyway unless you take certain courses. Because these profiles are public, you can also check their LinkedIn profiles and find the CVs for each member. Guess what? No forensic science or relevant scientific backgrounds are listed there either.

But for some reason this art collective has received funding from governments and NGOs for "creating" a new discipline of forensic science. They're a "trusted" source for forensic investigations. That's worrying. That's terrifying.

I'm a forensic scientist, and turning an objective field based upon methodology and empirically supported practice into one that is subjective and throws out the empirical aspects is terrifying. Everyone should have klaxons going off in their head whenever they see Forensic Architecture's name appear in a publication. I've reviewed a few of their "investigations" and they are rife with bad practice, manipulation, and misinformation. In fact, it appears that they present their work in art exhibits more than they testify to it in court, due to their methods being questionable and their intent being not to help the investigation but to be a "counter-investigation" that can be judged by the court of public opinion.

What do I mean by this? In many of their investigations the collective does not actively have personnel at the scene, meaning they are not getting first-hand physical evidence and measurements. Now, it's not always possible to be there personally, and as such you rely upon crime scene techs, investigators, and other personnel to collect this stuff. Typically, if you're a consultant or outside firm, you are getting the evidence after it has been collected for analysis. You want the physical evidence in your hands as much as possible so that you can analyze it properly. Sometimes you have to request going to the scene yourself to get the measurements and evidence you need. Honestly, the worst type of evidence to receive is digital images of the scene, as you are now having to analyze something a general investigator, who likely does not have specialized training, took a picture of.

In situations where you cannot have the physical evidence for analysis and you are left with only photographs, a forensic expert should be tempering their responses and conclusions. You cannot confidently come to conclusions based simply on looking at photos. This is something that is hammered home repeatedly by forensic programs and professionals.

In the case of warzone crime scene analysis, as FA typically does, they are, typically, not collecting evidence first hand from the scene, nor are they receiving evidence secondarily from actual trained investigators (when they are there first hand they also rely excessively upon expensive technology instead of best practices). They rely upon third party photos and satellite imagery to do their analysis.

Time and time again, forensic experts who rely solely upon digital photos and media to make their analysis get ripped apart by a good lawyer. Being confident in conclusions based upon photographs is the easiest way to lose your credibility. But again, the art collective playing forensic scientist primarily puts their work in art exhibits where they are not scrutinized by experts. Hell, I don't think I've ever seen them present at one of our professional conferences nationally or internationally (I would love to be a fly on the wall when that happens).

And finally, if this were an actual credible scientific group that produced credible investigations and had created a brand new field with methodology that stood up to scrutiny, there would be publications in the forensic journals detailing this. Especially from the "creator" of the field, Eyal Weizman.

Guess what there isn't?

But in the end, all they’re actually doing is crime scene reconstruction by people who want to cosplay as forensic scientists.

(for more reading on the group see this article that highlights issues with FA from another perspective https://www.artnews.com/art-in-america/features/forensic-architecture-fake-news-1234661013/)

#Forensic architecture#forensic science#Forensic Architecture is not made of forensic scientist but of artists#Forensic Architecture admittedly does not follow established forensic practices and principles#This is the group that Western Activists will share as “proof” for the “crimes” of Israel#Their rejection of scientific methodology is all you need to know about the veracity of their “proof”#They use tertiary evidence in their analysis and very rarely provide an actual report on their methodology - which is horrific#FA is being used by antisemites as an “authority” and they should be summarily ignored for poor scientific practice

56 notes

Text

🌲The Beauty of TTP 2 part three🌲

#pics(edits) made by me :)#assassin1513#video games#gaming#games#mystical#the talos principle 2#the talos principle#talos principle 2#talos#talos principle#game screenshots#screenshots#screenshot#virtual photography#nature#trees#architecture#minimalist#minimalistic design#nature and culture#puzzle#landscape#infinity#green#concrete#flowers

46 notes

Text

osamu mikumo: World's Squishiest Wizard

#i'm trying to write a fic with him and rinji doing Man Things#(getting into increasingly worse and stupider situations while stuck in the jungle with no practical knowledge of the terrain)#and somehow the dynamic i have them in is#'man who has a sword he made himself for no good reason and worked out how to use it from first principles'#and the world's squishiest wizard except there's no magic so he mostly runs around trying and failing to climb the architecture.#writing osamu is fun because i have expanded his canon love of bridges into him knowing stuff about architecture.#he thinks about moshe safdie a lot because of Habitat 67#which has the Afto Aesthetic imo.#world trigger#osamu mikumo#rinji amatori#see it works because they're both committed to their goals to the point of insanity but also. chika is not there.#they cannot pretend that the whole thing isn't about trying to run away anymore.#so what even is the goal if they can't pretend that it was about her? what happens when they have to face their own selfishness?

9 notes

Text

looking at solarpunk art and concepts for reference and like as awesome as it is i feel like so many of these people don't super understand the reality of what would make this kind of solarpunk/ecofuturist future a reality

#you. solarpunk artist. do you know what a windcatcher is#you! solarpunk artist! do you know any principles of low-impact and sustainable architecture!#you!!! solarpunk artist!!! can you design a solarpunk or humanist future around existing architecture which exists all around us!!!#leo rambles#not to mention so much of this is AI generated which is like. darkly ironic#i won't claim to be any kind of sustainability or engineering expert but like as nice as these theoretical futures seem#they're harder to envision and also i want to see how things work#SHOW ME the systems for energy generation!!! show me the indoor climate control. i want to know more

13 notes

Photo

Sea Ranch principles

7 notes

Text

"winter isn't just snow clearly op has never experienced [something I've experienced in my climate]"

what do you think the fucking "desolation" means shit for brains

haters will see a winter enjoyer and say "why are you going outside it's cold and boring" and not even see the beauty in desolation and stillness 🙄

#Also comments like 'must be nice having a rich family to pay for a warm house'#Like I pay for my own rent in a cheap ass apartment that only barely maintains heat#And honestly probably maintains heat more because it's half in the ground than good architectural principles#Like c'mon now

96K notes

Text

Architecture is about the important stuff. Whatever that is. - Ralph Johnson

0 notes

Text

CSSWG Minutes Telecon (2024-12-04): Just Use Grid vs. Display: Masonry

New Post has been published on https://thedigitalinsider.com/csswg-minutes-telecon-2024-12-04-just-use-grid-vs-display-masonry/

The CSS Working Group (CSSWG) meets weekly (or close to it) to discuss and quickly resolve issues from their GitHub that would otherwise be lost in the back-and-forth of forum conversation. While each meeting brings interesting conversation, this past Wednesday (December 4th) was special. The CSSWG met to try and finally squash a debate that has been going on for five years: whether Masonry should be a part of Grid or a separate system.

I’ll try to summarize the current state of the debate, but if you are looking for the long version, I recommend reading CSS Masonry & CSS Grid by Geoff and Choosing a Masonry Syntax in CSS by Miriam Suzanne.

In 2017, it was frequently asked whether Grid could handle masonry layouts: layouts where the columns (or the rows) can hold unevenly sized items without gaps in between. While this is just one of several possibilities with masonry, you can think of the layout popularized by Pinterest.

In 2020, Firefox released a prototype in which masonry was integrated into the CSS Grid layout module. The main voice against it was Rachel Andrew, arguing that it should be its own, separate thing. Since then, the debate has escalated with two proposals from Apple and Google, arguing for and against a grid-integrated syntax, respectively.

There were some technical worries about a grid-masonry implementation that have since been resolved. What you have to know is this: right now, it’s a matter of syntax. To be specific, the questions are

a. which syntax is easier for authors to learn, and

b. how this decision might impact possible future developments in one or both models (or CSS in general).

In the meantime, the W3C Technical Architecture Group (TAG) was asked for input on the issue, which prompted an effort to unify the two proposals. Both sides have brought strong arguments to the table over a series of posts, and in the following meeting, they were asked to lay out those arguments once again in a presentation, with the hope of reaching a consensus.

Remember that you can subscribe to and read the full minutes on W3C.org.

The Battle of PowerPoints

Alison Maher, representing Google and an advocate of implementing Masonry as a new display value, opened the meeting with a presentation. The main points were:

Several properties behave differently between masonry and grid.

Better defaults when setting display: masonry, something that Rachel Andrew recently argued for.

There was an argument against display: masonry since fallbacks would be lengthier to implement, whereas with a grid-integrated syntax the fallback to grid is already there. Alison Maher refutes this, arguing that “needing one is a temporary problem, so [we] should focus on the future,” and that “authors should make explicit fallback, to avoid surprises.”

“Positioning in masonry is simpler than grid, it’s only placed in 1 axis instead of 2.”

Shorthands are also better: “Grid shorthand is complicated, hard to use. Masonry shorthand is easier because don’t need to remember the order.”

“Placement works differently in grid vs masonry” and “alignment is also very different”

There will be “other changes for submasonry/subgrid that will lead to divergences.”

“Integrating masonry into grid will lead to spec bloat, will be harder to teach, and lead to developer confusion.”

alisonmaher: “Conclusion: masonry should be a separate display type”

Jen Simmons, representing the WebKit team and an advocate of the “Just Use Grid” approach, followed with another presentation. On this side, the main points were:

Author learning could be skewed since “a new layout type creates a separate tool with separate syntax that’s similar but not the same as what exists […]. They’re familiar but not quite the same”

The Chrome proposal would add around 10 new properties. “We don’t believe there’s a compelling argument to add so many new properties to CSS.”

“Chromium argues that their new syntax is more understandable. We disagree, just use grid-auto-flow“

“When you layout rows in grid, template syntax is a bit different — you stack the template names to physically diagram the names for the rows. Just Use Grid re-uses this syntax exactly; but new masonry layout uses the column syntax for rows”

“Other difference is the auto-flow — grid’s indicates the primary fill direction, Chrome believes this doesn’t make sense and changed it to match the orientation of lines”

“Chrome argues that new display type allows better defaults — but the defaults propose aren’t good […] it doesn’t quite work as easily as claimed [see article] requires deep understanding of autosizing”

“Easier to switch, e.g. at breakpoints or progressive enhancement”

“Follows CSS design principles to re-use what already exists”

The TAG review

After two presentations with compelling arguments, Lea Verou (also a member of the TAG) followed with their input.

lea: We did a TAG review on this. My opinion is fully reflected there. I think the arguments WebKit team makes are compelling. We thought not only should masonry be part of grid, but should go further. A lot of arguments for integrating is that “grid is too hard”. In that case we should make grid things easier. Complex things are possible, but simple things are not so easy.

Big part of Google’s argument is defaults, but we could just have smarter defaults; there is precedent for this in CSS if we decided that would help ergonomics. We agree that switching between grid vs. masonry is common. Grid might be a slightly better fallback than nothing, but that’s a minor argument because people can use @supports. Introducing all these new properties increases the API surface that authors need to learn. Less they can port over. Even if we say we will be disciplined, experience shows that we won’t. Even if not intentional, accidental. DRY: don’t have multiple sources of truth.

One of the arguments against masonry in grid is that grids are 2D, but actually in graphic design grids were often 1D. I agree that most masonry use cases need simpler grids than general grid use cases, but that means we should make those grids easier to define for both grid and masonry. The more we looked into this, the more we realized there are 3 different layout modes that give you 2D arrangement of children. We recommended not just making masonry part of grid, but finding better ways of integrating what we already have. Could we come up with a shorthand that sets grid-auto-flow and flex-direction, and promote that for layout direction in general? Then authors only need to learn one control for it.

The debate

With everything laid out on the table, all that remained was to hear what the other members had to say.

oriol: Problem with Jen Simmons’s reasoning. She said the proposed masonry-direction property would be new syntax that doesn’t match grid-auto-flow property, but this property matches flex-direction property so instead of trying to be close to grid, tries to be close to flexbox. Closer to grid is a choice, could be consistent with different things.

astearns: One question I asked is, has anyone changed their mind on which proposal they support? I personally have. I thought that a separate display property made a lot more sense in terms of designing the feature, and I was very daunted by the idea that we’d have to consider both grid and masonry for any new development in either; that seemed sticky to me. But the TAG argument convinced me that we should do the work of integrating these things.

TabAtkins: Thanks for setting that up for me, because I’m going to refute the TAG argument! I think they’re wrong in this case. You can draw a lot of surface-level connections between Grid and Masonry, and Flexbox, and other hypothetical layouts, but when you actually look at the details of how they work and the behaviors each one is capable of, they’re pretty distinct. If you try to combine them, it would be an unholy mess of conflicting constraints (e.g. flexing in items of masonry or grid), or you’d have a weird mish-mash of “the 2D layout, but if you call it a flex you get access to these properties, call it grid, access to these other properties.”

Concrete example: the “pillar” example mentioned in the WebKit blog post wasn’t compatible with the base concepts in masonry and flex, because it wants a shared block formatting context; grid etc. have different formatting contexts and can’t use floats.

lea: actually, the TAG argument was that layout seems to actually be a continuum, and syntax should accommodate that rather than forcing one of two extremes (current flex vs current grid).

The debate went back and forth until there was an attempt to set a general north star to follow.

jyasskin: Wanted to emphasize a couple aspects of the TAG review. It seems really nice to keep the property from the Chrome proposal that you don’t have to learn both; you can just learn to do masonry without learning all of Grid. Even if that’s in a unified system, perhaps still define a masonry shorthand, and have it set grid properties.

jensimmons: To create a simple masonry-style layout in Grid, you just need 3 lines of code (4 with a gap). It’s quite simple.

jyasskin: Most consensus part of TAG feedback was to share properties whenever possible. Not necessary to share the same ‘display’ values; could define different ‘display’ values but share the properties. One thing we didn’t like about unified proposal was grid-auto-flow in the unified proposal, where some values were ignored. Yeah, this is the usability point I’m pounding on

Another Split Decision

Despite all this, it looked like nobody was giving way, and the debate seemed stuck once again:

astearns: I’m not hearing a way forward yet. At some point, one of the camps is going to have to concede in order to move this forward.

lea: What if we do a straw poll. Not to decide, but to figure out how far are we from consensus?

The votes were cast and the results were… split.

florian: though we could still not reach consensus, I want to thank both sides for presenting clear arguments, densely packed, well delivered. I will go back to the presentations, and revisit some points, it really was informative to present the way it was.

That’s all, folks: a split decision! There isn’t a clear preference for either of the two proposals, and implementing something with such mixed opinions is something nobody would approve. After a little over five years of debate, I think this meeting is yet another good sign that a new proposal addressing the concerns of both sides should be considered, but that’s just a personal opinion. To me, masonry (or whatever name it may be) is an important step in CSS layout that may shape future layouts. It shouldn’t be rushed, so until then, I am more than happy to wait for a proposal that satisfies both sides.

#2024#ADD#amp#API#apple#approach#architecture#arrangement#Article#Articles#author#Blog#Children#chrome#chromium#code#columns#concrete#CSS#CSS Grid#csswg#december#Design#design principles#details#Developer#development#Developments#direction#display

0 notes

Text

ARCHITECTURAL DESIGN - ORDERING PRINCIPLES

consider these principles "fundamental ideas" that serve as the foundation for a system. these principles can structure your design and bring order to the layout of a building.

AXIS

SYMMETRY

HIERARCHY

TRANSFORMATION

DATUM

RHYTHM

PATTERN

REPETITION

#order#architecture#design#principles#axis#hierarchy#symmetry#transformation#datum#rhythm#pattern#repetition

0 notes

Text

How to Effectively Apply Behavioral Economics for Consumer Engagement?

I never thought behavioral economics would revolutionize my marketing strategy.

But here I am, telling you how it changed everything.

It all started when our company's engagement rates plummeted. We were losing customers faster than we could acquire them.

That's when I stumbled upon behavioral economics.

I began by implementing subtle changes. We reframed our pricing strategy using the decoy effect.

Suddenly, our premium package became more attractive. Sales increased by 15% in the first month.

Next, we tapped into loss aversion. Our email campaigns highlighted what customers might miss out on. Open rates soared from 22% to 37%.

But the real game-changer was social proof. We showcased user testimonials prominently on our website. Conversion rates jumped by 28%.

As we delved deeper, we encountered challenges. Some team members worried about ethical implications.

Were we manipulating consumers?

We addressed this by prioritizing transparency.

Every nudge we implemented was designed to benefit both the customer and our business.

This approach not only eased internal concerns but also built trust with our audience.

The results spoke for themselves. Overall engagement increased by 45% within six months. Customer retention improved by 30%.

But it wasn't just about numbers. We were creating meaningful connections. Customers felt understood and valued.

Looking back, I realize behavioral economics isn't about tricks or gimmicks. It's about understanding human behavior and using that knowledge to create win-win situations.

So, how can you improve your consumer engagement using behavioral economics?

Start by observing your customers' behaviors. What motivates them? What holds them back?

Use these insights to craft strategies that resonate.

Remember, the goal is to guide, not manipulate.

How are you applying behavioral economics in your business?

#behavioral economics#consumer psychology#marketing strategy#customer engagement#neuromarketing#decision making#consumer behavior#nudge theory#choice architecture#framing effect#loss aversion#social proof#anchoring#scarcity principle#cognitive biases#consumer insights#behavioral science#customer retention#conversion optimization#user experience#A/B testing#persuasion techniques#emotional marketing#brand loyalty#customer journey#pricing strategies#consumer decision making#marketing psychology#behavioral marketing#SubhamDas

0 notes

Text

🌲The Beauty of TTP 2 part two🌲

#pics(edits) made by me :)#assassin1513#video games#gaming#games#the talos principle 2#the talos principle#talos principle 2#talos#principles#architecture#minimalist#minimalistic design#screenshots#game screenshots#screenshot#virtual photography#nature#talos principle#beton#structure#puzzle#history#statues#statue

43 notes

Text

The way the government treats homeless people and panhandlers ought to be evidence enough on its own that empathy doesn't always lead to ethical behavior. Middle- and upper-class people get uncomfortable seeing homeless people because seeing someone in a bad state triggers an uncomfortable empathic response. They feel bad seeing people suffering. But people respond by trying to remove the source of their discomfort just as often as they respond with compassion.

That's why cities respond with hostile architecture and brutality. They just need to make the problem invisible, and people stop complaining.

Empathy is very useful, but it's no substitute for actual ethical principles.

12K notes

Text

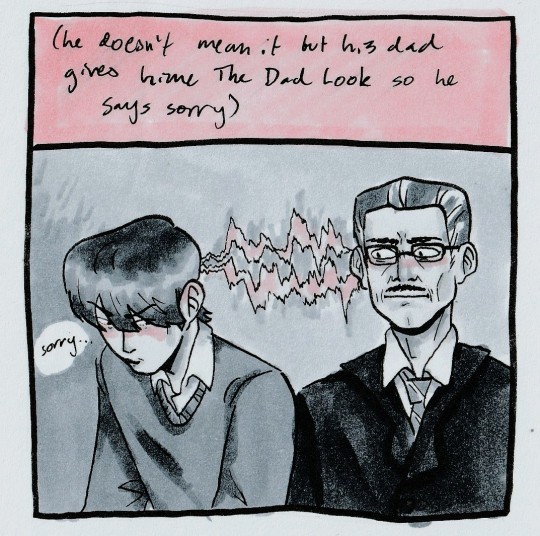

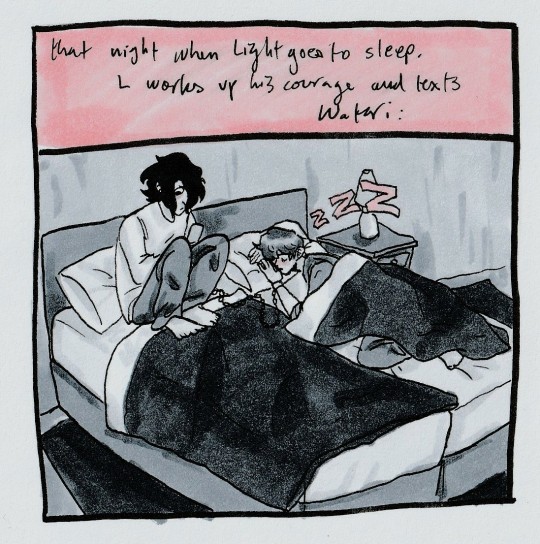

The fanon about L & Watari's relationship is one of my favorite things around here that are also most definitely true. Later in life L & Light would act exactly, and I mean exactly like this.

The faultless honesty; The buddy-buddy between geniuses how you'd only see it amongst scientists at global conferences, mixed with an hour of hesitation because 'I Care Him but he needs to know...'

Chef kiss. (Sorry for the tag essay. That's a lie I'm not)

funny dumb comic made using this post

#no i mean this#the way watari takes care of L's principles. calling him Ryuzaki even when they're certainly alone#how L approaches him in the first place as watari seems to be the only person who he entrusts with his emotions.#they've both done questionable shit for not entirely selfish reasons. they both have that same creative madness inside & both enjoy similar#things over which they bond. the aesthetic (even though i probably made that up but L grew up at Wammy's which likely instilled comfort in#grandfather clocks old architecture & vintage tea sets. WHICH *IS* CANON MIND YOU)#in every canon piece where they interact it's just natural. reason why i dislike when fanfiction use wammy as point for lawlight angst(💀?)#it's just silly. like L thinks he doesn't know & frantically attempts to hide & it's comedic when you realize that there is no way he Doesn't#Plus mop-haired L#bby girl#death note#my writing

8K notes

Text

Maximize Your Growth: Unlock The Power Of Zero Trust Architecture

Boosting Scalability and Growth: The Quantifiable Impact of Zero Trust Architecture on Organizations

Amid rising security breaches, the limitations of traditional cybersecurity models highlight the need for a robust, adaptive framework. Zero Trust Architecture (ZTA), operating on the principle of "trust no one, verify everything," offers enhanced protection aligned with modern tech trends like remote work and IoT. This article explores ZTA's core components, implementation strategies, and transformative impacts for stronger cyber defenses.

Understanding Zero Trust Architecture

Zero Trust Architecture is a cybersecurity strategy that revolves around the belief that organizations should not automatically trust anything inside or outside their perimeters. Instead, they must verify anything and everything trying to connect to their systems before granting access. This approach protects modern digital environments by leveraging network segmentation, preventing lateral movement, providing Layer 7 threat prevention, and simplifying granular user-access control.

Core Principles of Zero Trust

Explicit Verification: Regardless of location, every user, device, application, and data flow is authenticated and authorized under the strictest possible conditions. This ensures that security does not rely on static, network-based perimeters.

Least Privilege Access: Users are only given access to the resources needed to perform their job functions. This minimizes the risk of attackers accessing sensitive data through compromised credentials or insider threats.

Micro-segmentation: The network is divided into secure zones, and security controls are enforced on a per-segment basis. This limits an attacker's ability to move laterally across the network.

Continuous Monitoring: Zero Trust systems continuously monitor and validate the security posture of all owned and associated devices and endpoints. This helps detect and respond to threats in real time.
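To make the four principles above more concrete, here is a minimal Python sketch of a per-request access decision. It is an illustration under stated assumptions, not a reference implementation: the `AccessRequest`, `Policy`, and `decide` names, the boolean identity and device-posture signals, and the example user, segment, and resource names are all hypothetical. A real deployment would draw these signals from an identity provider and a device-management system.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AccessRequest:
    """A single request for a resource, evaluated from scratch every time."""
    user: str
    mfa_verified: bool      # hypothetical identity signal
    device_compliant: bool  # hypothetical device-posture signal
    source_segment: str     # network segment the request comes from
    resource: str


@dataclass
class Policy:
    # Least privilege: each user may reach only the resources listed here.
    grants: dict[str, set[str]] = field(default_factory=dict)
    # Micro-segmentation: each resource lists the segments allowed to reach it.
    segment_rules: dict[str, set[str]] = field(default_factory=dict)


def decide(policy: Policy, req: AccessRequest) -> tuple[bool, str]:
    """Return (allowed, reason); anything not explicitly allowed is denied."""
    # Explicit verification: identity and device posture must both pass.
    if not req.mfa_verified:
        return False, "identity not verified"
    if not req.device_compliant:
        return False, "device out of compliance"
    # Least privilege: deny unless the user holds an explicit grant.
    if req.resource not in policy.grants.get(req.user, set()):
        return False, "no grant for resource"
    # Micro-segmentation: the request must come from an allowed segment.
    if req.source_segment not in policy.segment_rules.get(req.resource, set()):
        return False, "segment not allowed"
    return True, "allowed"


policy = Policy(
    grants={"alice": {"payroll-db"}},
    segment_rules={"payroll-db": {"finance-apps"}},
)
print(decide(policy, AccessRequest("alice", True, True, "finance-apps", "payroll-db")))
# (True, 'allowed')

# Same user and resource, but the device has since fallen out of compliance:
print(decide(policy, AccessRequest("alice", True, False, "finance-apps", "payroll-db")))
# (False, 'device out of compliance')
```

Because `decide` runs on every request rather than once at login, a change in device posture takes effect on the very next request. That is one simple way to read the continuous-monitoring principle; production systems typically add logging and risk scoring on top.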

Historical Development

With the advent of mobile devices, cloud technology, and the dissolution of conventional perimeters, Zero Trust offered a more realistic model of cybersecurity that reflects the modern, decentralized network environment.

Zero Trust Architecture reshapes how we perceive and implement cybersecurity measures in an era where cyber threats are ubiquitous and evolving. By understanding these foundational elements, organizations can better plan and transition towards a Zero Trust model, reinforcing their defenses against sophisticated cyber threats comprehensively and adaptively.

The Need for Zero Trust Architecture

No matter how spooky the expression 'zero trust' might sound, we must acknowledge that the rapidly advancing technology landscape has dramatically transformed how businesses operate, creating new vulnerabilities and increasing the complexity of maintaining secure environments. The rising frequency and sophistication of cyber-attacks necessitate a shift from traditional security models to more dynamic, adaptable frameworks like Zero Trust Architecture. Here, we explore why this shift is not just beneficial but essential.

Limitations of Traditional Security Models

Traditional security models often operate under the premise of a strong perimeter defense, commonly referred to as the "castle-and-moat" approach. This method assumes that threats can be kept out by fortifying the outer defenses. However, this model falls short in several ways:

Perimeter Breach: Once a breach occurs, the attacker has relatively free rein over the network, leading to potentially widespread damage.

Insider Threats: It inadequately addresses insider threats, where the danger comes from within the network—either through malicious insiders or compromised credentials.

Network Perimeter Dissolution: The increasing adoption of cloud services and remote workforces has blurred the boundaries of traditional network perimeters, rendering perimeter-based defenses less effective.

Rising Cybersecurity Challenges

At the same time, the threat landscape itself has grown more hostile:

Increased Data Breaches: The number of data breaches has risen sharply in recent years, with billions of records exposed annually across organizations of all sizes.

Cost of Data Breaches: The average cost of a data breach has risen, significantly impacting the financial health of affected organizations.

Zero Trust: The Ultimate Response to Modern Challenges

Zero Trust Architecture arose to address the vulnerabilities inherent in modern network environments:

Remote Work: With more talent working remotely, traditional security boundaries became obsolete. Zero Trust ensures secure access regardless of location.

Cloud Computing: As more data and applications move to the cloud, Zero Trust provides rigorous access controls that secure cloud environments effectively.

Advanced Persistent Threats (APTs): Zero Trust's continuous verification model is well suited to detecting and mitigating sophisticated attacks that rely on long-term infiltration strategies.

The Shift to Zero Trust

Organizations increasingly recognize the limitations of traditional security measures and are shifting toward Zero Trust principles. Several needs drive this transition:

Enhance Security Posture: Implement robust, flexible security measures that adapt to the evolving IT landscape.

Minimize Attack Surfaces: Limit the potential entry points for attackers, thereby reducing overall risk.

Improve Regulatory Compliance: Meet stringent data protection regulations that demand advanced security measures.

In the face of ever-evolving threats and changing business practices, it becomes clear that Zero Trust Architecture does more than meet an immediate need.

By adopting Zero Trust, organizations can not only withstand current threats more effectively but also position themselves to adapt to future challenges in the cybersecurity landscape. This proactive approach is critical to maintaining the integrity and resilience of modern digital enterprises.

Critical Components of Zero Trust Architecture

Zero Trust Architecture (ZTA) redefines security by systematically addressing the challenges of a modern digital ecosystem. The architecture comprises several vital components that ensure robust protection against internal and external threats. Understanding these components provides insight into how Zero Trust operates and why it is effective.

Multi-factor Authentication (MFA)

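A cornerstone of Zero Trust is Multi-factor Authentication (MFA), which enhances security by requiring multiple proofs of identity before granting access. Unlike traditional security that might rely solely on passwords, MFA can include a combination of:

Something you know: a password or PIN.

Something you have: a hardware token, smart card, or one-time code from an authenticator app.

Something you are: a biometric such as a fingerprint or facial scan.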

By integrating MFA, organizations significantly reduce the risk of unauthorized access due to credential theft or simple password breaches.
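One common possession factor is a time-based one-time password (TOTP) from an authenticator app. The sketch below implements TOTP as specified in RFC 6238 (HOTP from RFC 4226 applied to a 30-second time counter) using only the Python standard library. It assumes the shared secret was provisioned when the user enrolled the app; the function names and the one-step drift window are illustrative choices, not a prescribed design.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    msg = struct.pack(">Q", counter)                  # 8-byte big-endian counter
    digest = hmac.new(key, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                        # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(key: bytes, at: float, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time-step counter."""
    return hotp(key, int(at // step), digits)

def verify_second_factor(key: bytes, submitted: str, now: Optional[float] = None,
                         window: int = 1, step: int = 30) -> bool:
    """Accept codes from the current step +/- `window` steps to tolerate clock drift."""
    now = time.time() if now is None else now
    for drift in range(-window, window + 1):
        candidate = totp(key, now + drift * step, step)
        if hmac.compare_digest(candidate, submitted):  # constant-time comparison
            return True
    return False
```

With the RFC test secret `b"12345678901234567890"`, `totp(key, 59, digits=8)` yields `"94287082"`, matching the published RFC 6238 test vector. A real deployment would also reject reuse of a code that has already been accepted.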

Least Privilege Access Control

At the heart of the Zero Trust model is the principle of least privilege, which dictates that users and devices only get the minimum access necessary for their specific roles. This approach limits the potential damage from compromised accounts and reduces the attack surface within an organization. Implementing least privilege requires:

Rigorous user and entity behavior analytics (UEBA) to understand typical access patterns.

Dynamic policy enforcement to adapt permissions based on the changing context and risk level.
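A minimal sketch of least privilege with dynamic enforcement follows. It assumes a hypothetical role-to-permission table and a `risk_score` between 0 and 1 supplied by some behavior-analytics system; the 0.5 and 0.8 thresholds are arbitrary illustrations. Unknown roles receive nothing, and permissions shrink as risk rises.

```python
# Hypothetical role -> permission mapping: only what each job function needs.
ROLE_PERMISSIONS = {
    "support": {"tickets:read", "tickets:write"},
    "finance": {"invoices:read", "invoices:write"},
    "auditor": {"invoices:read", "tickets:read"},
}

def effective_permissions(role: str, risk_score: float) -> set[str]:
    """Narrow a role's baseline permissions according to the current risk level."""
    granted = ROLE_PERMISSIONS.get(role, set())  # unknown role -> nothing (deny by default)
    if risk_score >= 0.8:
        return set()                             # high risk: block all access
    if risk_score >= 0.5:
        return {p for p in granted if p.endswith(":read")}  # elevated risk: read-only
    return set(granted)

def is_allowed(role: str, permission: str, risk_score: float) -> bool:
    return permission in effective_permissions(role, risk_score)
```

For example, a finance user keeps `invoices:read` but loses `invoices:write` when the risk score reaches 0.6, so an anomalous session can still work with reduced blast radius.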

Microsegmentation

Microsegmentation divides network resources into separate, secure zones. Each zone requires separate authentication and authorization to access, which prevents an attacker from moving laterally across the network even if they breach one segment. This strategy is crucial in minimizing the impact of an attack by:

Isolating critical resources and sensitive data from broader network access.

Applying tailored security policies specific to each segment's function and sensitivity.
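The sketch below illustrates microsegmentation as an explicit allow-list between zones. The segment names, address ranges, and ports are hypothetical. Any flow not listed is denied, so a compromised web server cannot reach the database directly even though both sit on the same network.

```python
from ipaddress import ip_address, ip_network
from typing import Optional

# Hypothetical segments, each a separate zone with its own address range.
SEGMENTS = {
    "web": ip_network("10.10.1.0/24"),
    "app": ip_network("10.10.2.0/24"),
    "db": ip_network("10.10.3.0/24"),
}

# Explicit allow-list of (source segment, destination segment, destination port).
ALLOWED_FLOWS = {
    ("web", "app", 8443),
    ("app", "db", 5432),
}

def segment_of(ip: str) -> Optional[str]:
    """Return the name of the segment containing `ip`, or None if it is unmanaged."""
    addr = ip_address(ip)
    for name, net in SEGMENTS.items():
        if addr in net:
            return name
    return None

def flow_permitted(src_ip: str, dst_ip: str, port: int) -> bool:
    """Default deny: only flows on the allow-list may cross segment boundaries."""
    src, dst = segment_of(src_ip), segment_of(dst_ip)
    if src is None or dst is None:
        return False
    return (src, dst, port) in ALLOWED_FLOWS
```

An attacker who lands on a web host would have to compromise the app tier next, and authenticate there, before getting any path to the database.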

Continuous Monitoring and Validation

Zero Trust insists on continuously monitoring and validating all devices and user activities within its environment. This proactive stance ensures that anomalies or potential threats are quickly identified and responded to. Key aspects include:

Real-time threat detection using advanced analytics, machine learning, and AI.

Automated response protocols that can isolate threats and mitigate damage without manual intervention.
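As a simple stand-in for the analytics described above, the sketch below compares a metric against its historical baseline using a z-score and quarantines the device automatically when the value is far outside normal. The metric (for instance, megabytes uploaded per hour), the three-standard-deviation threshold, and the in-memory `quarantined` set are all assumptions; production systems would use richer models and a real isolation mechanism.

```python
import statistics

def is_anomalous(history: list[float], current: float, threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` standard deviations from the baseline."""
    mean = statistics.fmean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        return current != mean          # flat baseline: any change is unusual
    return abs(current - mean) / stdev > threshold

# Hypothetical stand-in for a network access control system's isolation list.
quarantined: set[str] = set()

def monitor(device_id: str, history: list[float], current: float) -> None:
    """Automated response: isolate the device without waiting for a human."""
    if is_anomalous(history, current):
        quarantined.add(device_id)
```

With a baseline of `[100, 102, 98, 101, 99]`, a reading of 101 passes while a reading of 250 triggers isolation.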

Device Security

In Zero Trust, security extends beyond the user to their devices. Every device attempting to access resources must be secured and authenticated, including:

Verifying that devices meet security standards before they can connect.

Continuously assessing device health to detect potential compromises or anomalies.
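A device posture check can be sketched as a list of named tests that must all pass. The minimum OS version, patch age limit, and posture fields below are hypothetical; real values would come from an endpoint management agent. Returning the failed checks, rather than a bare yes/no, lets the system explain a denial and guide remediation.

```python
from dataclasses import dataclass

# Hypothetical minimum standards a device must meet before it may connect.
MIN_OS_VERSION = (14, 2)
MAX_DAYS_SINCE_PATCH = 30

@dataclass
class DevicePosture:
    os_version: tuple[int, int]
    disk_encrypted: bool
    firewall_enabled: bool
    days_since_patch: int

def posture_failures(d: DevicePosture) -> list[str]:
    """Return the failed checks; an empty list means the device is compliant."""
    failures = []
    if d.os_version < MIN_OS_VERSION:   # tuples compare element by element
        failures.append("os_outdated")
    if not d.disk_encrypted:
        failures.append("disk_not_encrypted")
    if not d.firewall_enabled:
        failures.append("firewall_disabled")
    if d.days_since_patch > MAX_DAYS_SINCE_PATCH:
        failures.append("patches_overdue")
    return failures
```

Running the same check on every access request, not just at enrollment, is what turns it into continuous device health assessment.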

Integration of Security Policies and Governance

Implementing Zero Trust requires a cohesive integration of security policies and governance frameworks that guide the deployment and operation of security measures. This integration helps in:

Standardizing security protocols across all platforms and environments.

Ensuring compliance with regulatory requirements and internal policies.

Implementing Zero Trust Components

Implementing Zero Trust involves assessing needs, defining policies, and integrating solutions, requiring cross-departmental collaboration. This proactive approach creates a resilient security posture, adapting to evolving threats and transforming security strategy.

Implementing Zero Trust Architecture

Implementing Zero Trust Architecture (ZTA) is a strategic endeavor that requires careful planning, a detailed understanding of existing systems, and a clear roadmap for integration. The following steps outline how to deploy Zero Trust in an organization while keeping the transition smooth and delivering practical security improvements.

Step 1: Define the Protect Surface

The first step in implementing Zero Trust is to identify and define the 'protect surface': the critical data, assets, applications, and services that need protection. This step involves:

Data Classification: Identify where sensitive data resides, how it moves, and who accesses it.

Asset Management: Catalog and manage hardware, software, and network resources to understand the full scope of the digital environment.
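The outcome of this step can be thought of as a filtered inventory. In the sketch below, the `Asset` fields, the four classification labels, and the rule that only "confidential" and "restricted" assets form the protect surface are illustrative assumptions; each organization defines its own scheme.

```python
from dataclasses import dataclass, field

@dataclass
class Asset:
    name: str
    kind: str             # e.g. "data", "application", "service"
    classification: str   # e.g. "public", "internal", "confidential", "restricted"
    accessed_by: set[str] = field(default_factory=set)  # who or what touches it

# Hypothetical rule: these classifications belong in the protect surface.
SENSITIVE = {"confidential", "restricted"}

def protect_surface(inventory: list[Asset]) -> list[Asset]:
    """Keep only the assets whose compromise would matter most."""
    return [a for a in inventory if a.classification in SENSITIVE]
```

Recording `accessed_by` alongside each asset gives the next step, mapping transaction flows, a starting point.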

Step 2: Map Transaction Flows

Understanding how data and requests flow within the network is crucial. Mapping transaction flows helps with:

Identifying legitimate traffic patterns: This aids in designing policies that allow normal business processes while blocking suspicious activities.

Establishing baselines for network behavior: Anomalies from these baselines can be quickly detected and addressed.
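A minimal sketch of baselining, assuming flows are recorded as hypothetical `(source, destination, port)` tuples from flow logs during a learning period. Flows seen at least `min_count` times are treated as normal, and anything else is surfaced for review. The threshold is arbitrary, and a learned baseline should be reviewed by a human, since traffic from an attacker already inside the network would otherwise be learned as "normal".

```python
from collections import Counter

def build_baseline(observed_flows, min_count: int = 3) -> set:
    """Treat flows seen at least `min_count` times during learning as legitimate."""
    counts = Counter(observed_flows)
    return {flow for flow, n in counts.items() if n >= min_count}

def unexpected_flows(baseline: set, new_flows) -> list:
    """Return flows outside the baseline, in first-seen order, without duplicates."""
    seen = set()
    out = []
    for flow in new_flows:
        if flow not in baseline and flow not in seen:
            seen.add(flow)
            out.append(flow)
    return out
```

The resulting baseline also doubles as a draft allow-list for the segmentation rules designed in the next step.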

Step 3: Architect a Zero Trust Network

With a clear understanding of the protect surface and transaction flows, the next step is to design the network architecture based on Zero Trust principles:

Microsegmentation: Design network segments based on the sensitivity and requirements of the data they contain.

Least Privilege Access Control: Implement strict access controls and enforce them consistently across all environments.

Step 4: Create a Zero Trust Policy

Zero Trust policies dictate how identities and devices access resources, including:

Policy Engine Creation: Develop a policy engine that uses dynamic security rules to make access decisions based on a trust algorithm.

Automated Rules and Compliance: Utilize automation to enforce policies efficiently and ensure compliance with regulatory standards.
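The sketch below shows one simple shape a policy engine can take: an ordered list of rules where the first match wins and no match means deny. The `Context` fields, the `trust_score` (standing in for the output of a trust algorithm), the 0.6 cutoff, and the rule names are all hypothetical. Returning the name of the matching rule alongside the decision gives an audit trail that supports compliance reporting.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Context:
    user_role: str
    mfa_passed: bool
    device_compliant: bool
    resource: str
    trust_score: float   # hypothetical 0..1 output of a trust algorithm

@dataclass
class Rule:
    name: str
    applies: Callable[[Context], bool]
    decision: str        # "allow" or "deny"

# Evaluated in order; deny rules come first so they cannot be bypassed.
RULES = [
    Rule("block-unverified", lambda c: not c.mfa_passed or not c.device_compliant, "deny"),
    Rule("block-low-trust", lambda c: c.trust_score < 0.6, "deny"),
    Rule("finance-invoices", lambda c: c.user_role == "finance" and c.resource == "invoices", "allow"),
    Rule("staff-wiki", lambda c: c.resource == "wiki", "allow"),
]

def decide(ctx: Context) -> tuple[str, str]:
    """Return (decision, matching rule name); nothing matched means default deny."""
    for rule in RULES:
        if rule.applies(ctx):
            return rule.decision, rule.name
    return "deny", "default-deny"
```

For example, a finance user with MFA and a compliant device but a trust score of 0.4 gets `("deny", "block-low-trust")`, while a support user asking for invoices falls through to `("deny", "default-deny")`.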

Step 5: Monitor and Maintain

Zero Trust requires ongoing evaluation and adaptation to remain effective. Continuous monitoring and maintenance involve:

Advanced Threat Detection: Use behavioral analytics, AI, and machine learning to detect and respond to anomalies in real-time.

Security Posture Assessment: Regularly assess the security posture to adapt to new threats and incorporate technological advancements.

Feedback Loops: Establish mechanisms to learn from security incidents and continuously improve security measures.

Step 6: Training and Culture Change

Implementing Zero Trust affects all aspects of an organization and requires a shift in culture and mindset:

Comprehensive Training: Educate staff about the principles of Zero Trust, their roles within the system, and the importance of security in their daily activities.

Promote Security Awareness: Foster a security-first culture where all employees are vigilant and proactive about security challenges.

Challenges in Implementation

The transition to Zero Trust is not without its challenges:

Complexity in Integration: Integrating Zero Trust with existing IT and legacy systems can be complex and resource-intensive.

Resistance to Change: Operational disruptions and skepticism from stakeholders can impede progress.

Cost Implications: Initial setup, especially in large organizations, can be costly and require significant technological and training investments.

Successfully implementing Zero Trust Architecture demands a comprehensive approach beyond technology, including governance, behavior change, and continuous improvement. By following these steps, organizations can enhance their cybersecurity defenses and build a more resilient and adaptive security posture equipped to handle the threats of a dynamic digital world.

Impact and Benefits of Zero Trust Architecture

Implementing Zero Trust Architecture (ZTA) has far-reaching implications for an organization's cybersecurity posture. This section evaluates the tangible impacts and benefits that Zero Trust provides, supported by data-driven outcomes and real-world applications.

Reducing the Attack Surface

Zero Trust minimizes the organization's attack surface by enforcing strict access controls and network segmentation. With the principle of least privilege, access is granted only based on necessity, significantly reducing the potential pathways an attacker can exploit.

Statistical Impact

Organizations employing Zero Trust principles have observed a marked decrease in the incidence of successful breaches. For instance, a report by Forrester noted that Zero Trust adopters saw a 30% reduction in security breaches.

Case Study

A notable financial institution implemented Zero Trust strategies and reduced the scope of breach impact by 40%, significantly lowering their incident response and recovery costs.

Enhancing Regulatory Compliance

Zero Trust aids in compliance with stringent data protection regulations such as GDPR, HIPAA, and PCI-DSS by providing robust mechanisms to protect sensitive information and report on data access and usage.

Compliance Metrics

Businesses that transition to Zero Trust report higher compliance rates, with improved audit performance due to better visibility and control over data access and usage.

Improving Detection and Response Times

The continuous monitoring component of Zero Trust ensures that anomalies are detected swiftly, enabling quicker response to potential threats. This dynamic approach helps in adapting to emerging threats more effectively.

Operational Efficiency

Studies show that organizations using Zero Trust frameworks have improved their threat detection and response times by up to 50%, enhancing operational resilience.

Cost-Effectiveness

While the initial investment in Zero Trust might be considerable, the architecture can lead to significant cost savings in the long term through reduced breach-related costs and more efficient IT operations.

Economic Benefits

Analysis indicates that organizations implementing Zero Trust save on average 30% in incident response costs due to the efficiency and efficacy of their security operations.

Future-Proofing Security

Zero Trust architectures aim to be flexible and adaptable, which makes them particularly suited to evolving alongside emerging technologies and changing business models, thus future-proofing an organization's security strategy.

Strategic Advantage

Adopting Zero Trust provides a strategic advantage in security management, positioning organizations to quickly adapt to new technologies and business practices without compromising security.

The impacts and benefits of Zero Trust Architecture make a compelling case for its adoption. As the digital landscape continues to evolve, the principles of Zero Trust provide a resilient and adaptable framework that addresses current security challenges and anticipates future threats. By embracing Zero Trust, organizations can significantly enhance their security posture, ensuring robust defense mechanisms that scale with their growth and technological advancements.

Future Trends and Evolution of Zero Trust

Digital transformation brings increasingly sophisticated cybersecurity threats, pushing Zero Trust Architecture (ZTA) to evolve in response to these dynamic challenges. In this final section, we explore future Zero Trust trends, their ongoing development, and the potential challenges organizations may face as they continue to implement this security model.

Evolution of Zero Trust Principles

Zero Trust is not a static model and must continuously be refined as new technologies and threat vectors emerge. Critical areas of evolution include:

Integration with Emerging Technologies

As organizations increasingly adopt technologies like 5G, IoT, and AI, Zero Trust principles must be adapted to secure these environments effectively. For example, the proliferation of IoT devices increases the attack surface, necessitating more robust identity verification and device security measures within a Zero Trust framework.

Advanced Threat Detection Using AI

Artificial Intelligence and Machine Learning will play pivotal roles in enhancing the predictive capabilities of Zero Trust systems. AI can analyze vast amounts of data to detect patterns and anomalies that signify potential threats, enabling proactive threat management and adaptive response strategies.

Challenges in Scaling Zero Trust

As Zero Trust gains visibility, organizations may encounter several challenges:

Future Research and Standardization

Continued research and standardization efforts are needed to address gaps in Zero Trust methodologies and to develop best practices for their implementation. Industry collaboration and partnerships will be vital in creating standardized frameworks that effectively guide organizations in adopting Zero Trust.

Developing Zero Trust Maturity Models

Future efforts could focus on developing maturity models that help organizations assess their current capabilities and guide their progression toward more advanced Zero Trust implementations.

Legal and Regulatory Considerations

As Zero Trust impacts data privacy and security, future legal frameworks must consider how Zero Trust practices align with global data protection regulations. Ensuring compliance while implementing Zero Trust will be an ongoing challenge.

The future of Zero Trust Architecture is one of continual adaptation and refinement. By staying ahead of technological advancements and aligning with emerging security trends, Zero Trust can provide organizations with a robust framework capable of defending against the increasingly sophisticated cyber threats of the digital age. As this journey unfolds, embracing Zero Trust will enhance security and empower organizations to innovate and grow confidently.

Concluding Thoughts:

As cyber threats keep evolving, Zero Trust Architecture (ZTA) emerges as a leading cybersecurity strategy, pivotal for safeguarding organizational assets in an increasingly interconnected world. Implementing Zero Trust not only strengthens security posture but also prompts a significant shift in organizational culture and operational frameworks. How will integrating advanced technologies like AI and blockchain influence the evolution of Zero Trust policies? Can Zero Trust principles keep pace with the rapid expansion of IoT devices across corporate networks?

Furthermore, as Zero Trust principles evolve, questions about their scalability and adaptability remain at the forefront. How will organizations overcome the complexities of deploying Zero Trust across diverse, global infrastructures? Answering these questions will be crucial for organizations seeking to leverage Zero Trust Architecture effectively.

How Coditude can help you

For businesses looking to navigate the complexities of Zero Trust and fortify their cybersecurity measures, partnering with experienced technology providers like Coditude offers a reassuring pathway to success. Coditude's expertise in cutting-edge security solutions can help demystify Zero Trust implementation and tailor a strategy that aligns with your business objectives. Connect with Coditude today to secure your digital assets and embrace the future of cybersecurity with confidence.

#zero trust security#zero trust architecture#zero trust model#zero trust network#zero trust policy#zero trust security model#zero trust principles#what is zero trust architecture#what is zero trust


"What is Universal Design? Learn how accessibility plays a role in web design and which points to keep in mind for the perfect design. So, let's dive into"

#universal design#universal design examples#universal design architecture#universal design principles#UX Design#UI Design#ethicsfirst#habilelabs
