#ai topic

Text

No, but this is actually crazy in the most dystopian sense.

33K notes

Text

THIS ISN'T A ROAST. It's just some thoughts and background on a thing that's been eating at my brainworms for a bit.

haha ok so, for the last few years I've been hearing a lot about how AI can be a useful tool, which i refuse to believe (and even if it is, the damage it causes to artists FAR OUTWEIGHS any benefit).

So, there's this youtuber who covers, like, tech and stuff. When generative AI for images and text came up, they were rightfully poking at the ethical dilemma and the copyright infringement, but STILL made a soft argument about how useful it could be for pitching ideas, concepts, and other things where quick and cheap art could be used (also fuck whoever did those jobs before, i guess lol)

BUT THEN an app finally did the same for music, generating music of indistinguishable quality. Said youtuber makes music, usually vibe check music to play in the background of videos and such.

but see

this time they were frustrated, and made a video about the ethical concerns, how unfair it is, how it destroys the effort and passion of creating and all, and did NOT make an argument about how it could be useful, because now they knew that despite the benefits, if it takes off, their dream is over.

A lot of people are quick to jump to "this can be useful" about generative tools, and very rarely do i see artists, especially digital artists, agreeing with this. And most who do either have no stakes in their careers, aka they're already well off, or have no careers to begin with.

Serious artists KNOW how bad it is, no matter what. The youtuber had no stakes in visual art. They don't make it, and they don't really profit or benefit from making it.

When it came to a thing they DO understand and make, suddenly they understood why it's bad. Why the benefits aren't good enough to justify the disaster it brings to artists' lives, and to humankind's development as a whole.

idk what the point is here, i just got really pissed that they went from being kinda "this is bad but it exists, who knows, could be good if it's not unethical etc" to going fully into "this is bad. like, real bad for everyone here and the music industry".

Wish that understanding came from their kindness, not AI coming with a baseball bat to their knees. Makes me real sad that it came for them too.

#not gonna tell who the youtuber is cuz idk man i don't see ill intent from them#and even so it's not that deep bro#it's like seeing someone go through the same bad thing you're going through#anyway#AI topic#“this tool kills AI” and it's a baseball bat with nails. I am wielding it. I'll swing it.

291 notes

Text

the conversation around generative neural networks is a dumpster fire in a dozen different ways but I think the part that disproportionately frustrates me, like on an irrational pet peeve level, is that nobody in that conversation seems to understand automata theory

back before most of these deep learning techniques were a twinkle in a theorist's eye, back when computing was a lot less engineering and a lot more math, computer scientists had worked out the math of different "classes" of computer system and what kinds of problems they could and couldn't solve

these aren't arbitrary classifications like most taxonomy turns out to be. there are qualitative differences. you can draw hard lines: "it takes class X or above to run programs with Y trait", and "only class X programs or below are guaranteed to have Y trait". and all of those lines have been mathematically proven; if you ever found a counterexample, then we'd be in "math is a lot of bunk" territory and we'd have way bigger things to worry about

this has nothing to do with how fast/slow the computer system goes; it's about "what kinds of program can it run at all". so it includes emulation and such. you can emulate a lower system in a higher one, but not vice versa

at the top of this heap are turing machines, which include most computers we'd bother to build. there are a lot of programs that have been mathematically proven to require at least a turing machine to run. and this class of programs includes a lot of things that humans can do, too
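
to make "turing machine" a bit less abstract, here's a minimal sketch of one in python. it's a toy, not anything from the post: the machine `INCREMENT` is a made-up example that adds 1 to a binary number, and the state names and the `max_steps` budget are illustrative choices, not standard ones. the budget is there because in general you can't know ahead of time whether a machine will halt, so a simulator has to give up at some point.

```python
BLANK = "_"

# Made-up example machine: binary increment.
# transitions[(state, symbol)] = (next_state, symbol_to_write, head_move)
INCREMENT = {
    ("right", "0"): ("right", "0", +1),      # scan right past the digits
    ("right", "1"): ("right", "1", +1),
    ("right", BLANK): ("carry", BLANK, -1),  # hit the end: step back onto the last digit
    ("carry", "1"): ("carry", "0", -1),      # 1 + carry = 0, carry moves left
    ("carry", "0"): ("halt", "1", 0),        # 0 + carry = 1, finished
    ("carry", BLANK): ("halt", "1", 0),      # carried past the left edge: new leading 1
}


def run_tm(transitions, tape_str, state="right", max_steps=10_000):
    """Simulate a one-tape Turing machine until it reaches the 'halt' state."""
    tape = dict(enumerate(tape_str))  # sparse, unbounded tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step budget used up; this machine might never halt")
        symbol = tape.get(head, BLANK)
        state, write, move = transitions[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


print(run_tm(INCREMENT, "1011"))  # 1100
```

the tape being a dict that can grow in either direction is the important bit: that unbounded memory is what separates a turing machine from the weaker classes.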

but with this power come some inevitable restrictions. for example, if you feed a program to a turing machine, there's no way to guarantee that the program will finish; it might get stuck somewhere and loop forever. in fact there are some programs where you straight up can't predict whether they'll ever finish, even if you're looking at the code yourself
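
a small, runnable example of "you can look right at the code and still not know if it finishes": the loop below applies the collatz rule (halve even numbers, triple-and-add-one odd numbers) until it reaches 1. it's been checked for enormous numbers of inputs, but nobody has proven it halts for every positive integer. this only illustrates the flavor of the problem; the actual proof that halting can't be decided in general is turing's diagonal argument, not this example.

```python
def collatz_steps(n: int) -> int:
    """Count the steps for n to reach 1 under the Collatz rule.

    Nobody has proven this loop terminates for every positive n
    (the Collatz conjecture is still open), even though the code is tiny.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


print(collatz_steps(27))  # 111
```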

these two are intrinsically linked: the power to run everything comes with the ability to loop forever. if your program solves a problem that only a turing machine can handle, nothing less will do. and a turing machine is capable of running all such programs, given enough time.

ok. great. what does any of that jargon have to do with AI?

well... the important thing to know is that the machine learning models we're using right now can't loop forever. if they could loop forever they couldn't be trained. for any given input, they'll produce an output in finite time
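
a toy sketch of what "finite time for any input" looks like, assuming a plain feed-forward network: the weights in `LAYERS` are made up, and real models are vastly bigger and fancier, but a single forward pass through the kinds of models the post has in mind is likewise a fixed stack of layers. here the forward pass is a `for` loop over a fixed list, so the number of steps is set by the architecture, never by the input.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def dense(weights, bias, v):
    """Compute weights @ v + bias with plain lists."""
    return [sum(w * x for w, x in zip(row, v)) + b for row, b in zip(weights, bias)]


# Made-up weights for a 2 -> 2 -> 2 -> 1 network.
LAYERS = [
    ([[0.5, -1.0], [1.0, 1.0]], [0.0, 0.1]),
    ([[1.0, 0.0], [-0.5, 2.0]], [0.2, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]


def forward(v):
    """Run the network; returns (output, number_of_layer_steps)."""
    steps = 0
    for weights, bias in LAYERS:  # fixed-length loop: no input can make it run longer
        v = relu(dense(weights, bias, v))
        steps += 1
    return v, steps


for x in ([1.0, 2.0], [-5.0, 100.0], [0.0, 0.0]):
    print(forward(x)[1])  # always 3
```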

which means... well, any program that requires a turing machine to run, or even requires a push-down automaton to run (a weaker type of computer system that can get into infinite loops, but where you can at least check ahead of time whether a program will get stuck), can't be emulated by these systems. they've got to be in the next category down: finite state machines at most, and thus unable to compute, or emulate computation of, programs that inhabit a higher tier
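
the classic textbook example of the line between finite state machines and push-down automata is checking balanced brackets. a push-down automaton gets an unbounded stack, so it handles any nesting depth. give the same procedure only `max_depth` slots of memory and it has finitely many possible states, which makes it a finite state machine, so it's bound to get some deeply nested inputs wrong (this version rejects them). the function names and the `max_depth` parameter are just illustrative.

```python
PAIRS = {")": "(", "]": "["}


def pda_balanced(s: str) -> bool:
    """Push-down style recognizer: an unbounded stack handles any nesting depth."""
    stack = []
    for ch in s:
        if ch in "([":
            stack.append(ch)
        elif ch in PAIRS:
            if not stack or stack.pop() != PAIRS[ch]:
                return False
        else:
            return False
    return not stack


def fsm_balanced(s: str, max_depth: int) -> bool:
    """Same check with a bounded stack.

    A stack of at most max_depth symbols over {'(', '['} has finitely many
    possible contents, so this is a finite state machine in disguise.
    """
    stack = []
    for ch in s:
        if ch in "([":
            if len(stack) == max_depth:
                return False  # out of states: can't represent deeper nesting
            stack.append(ch)
        elif ch in PAIRS:
            if not stack or stack.pop() != PAIRS[ch]:
                return False
        else:
            return False
    return not stack


deep = "(" * 4 + ")" * 4
print(pda_balanced(deep), fsm_balanced(deep, max_depth=3))  # True False
```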

and there is a heck of a lot of stuff we conceptualize as "thinking" that doesn't fit in a finite state machine

...I suspect it will some day be possible for a computer program to be a person. I am absolutely certain that when that day comes, the computer program who's a person would require at least a turing machine to run them

what we have right now isn't that. what we have right now is eye spots on moths, bee orchids, mockingbirds. it might be "artificial intelligence", depending on your definition of "intelligence", but prompt it to do things that we've proven only a turing machine can do, and it will fall over

and the reason I consider this an "irrational pet peeve" and not something more severe? is because this information doesn't actually help solve policy questions! if this is a tool, then we still need to decide how we're going to allow such tools to be built, and used. it's not as simple as a blanket ban, and it's not as simple as letting the output of GNNs fully launder the input, because either of those "simple" solutions is ripe for abuse

but I can't help but feel like the conversation is in part held back by specious "is a GNN a people" arguments on the one hand, and "can a GNN actually replace writers, or is it just fooling execs into thinking it can" arguments on the other, when the answer to both seems to me like it was solved 40 years ago

228 notes

Text

my older teto design redraw ^_^

#my art#i kinda cooked back then ngl#maybe ill reanimate that video make it all topical n stuff#now her mental breakdown will be about ai

2K notes

Text

Tfw you go to your happy place and they’ve partnered with Midjourney

1K notes

Text

every so often i see such an insane opinion on this site that i immediately go to the dash and hover my cursor over the text post button to make a post about it, and then i have to tell myself "the first person to get mad loses" and then i do the right thing and stay my blade

#i'm blessed to not get Actually Mad at fandom opinions these days; usually this sorta thing just occurs over like irl topics#i have to remind myself that my post about it would do nothing and neither did the original stupid post i'm mad at LOL#in this case it was a very very stupid very pathetic pro-ai-art post that just made me barf at how ''uwuuuu poor meeeee'' it was being#shebbz shoutz

195 notes

Text

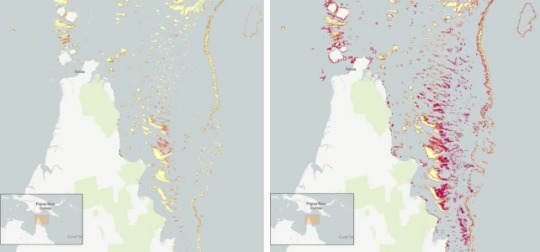

"The world's coral reefs are close to 25 percent larger than we thought. By using satellite images, machine learning and on-ground knowledge from a global network of people living and working on coral reefs, we found an extra 64,000 square kilometers (24,700 square miles) of coral reefs – an area the size of Ireland.

That brings the total size of the planet's shallow reefs (meaning 0-20 meters deep) to 348,000 square kilometers – the size of Germany. This figure represents whole coral reef ecosystems, ranging from sandy-bottomed lagoons with a little coral, to coral rubble flats, to living walls of coral.

Within this 348,000 km² of coral is 80,000 km² where there's a hard bottom – rocks rather than sand. These areas are likely to be home to significant amounts of coral – the places snorkelers and scuba divers most like to visit.

You might wonder why we're finding this out now. Didn't we already know where the world's reefs are?

Previously, we've had to pull data from many different sources, which made it harder to pin down the extent of coral reefs with certainty. But now we have high resolution satellite data covering the entire world – and are able to see reefs as deep as 30 meters down.

Pictured: Geomorphic mapping (left) compared to new reef extent (red shading, right image) in the northern Great Barrier Reef.

[AKA: All the stuff in red on that map is coral reef we did not realize existed!! Coral reefs cover so much more territory than we thought! And that's just one example. (From northern Queensland)]

We coupled this with direct observations and records of coral reefs from over 400 individuals and organizations in countries with coral reefs from all regions, such as the Maldives, Cuba, and Australia.

To produce the maps, we used machine learning techniques to chew through 100 trillion pixels from the Sentinel-2 and Planet Dove CubeSat satellites to make accurate predictions about where coral is – and is not. The team worked with almost 500 researchers and collaborators to make the maps.

The result: the world's first comprehensive map of coral reef extent, and their composition, produced through the Allen Coral Atlas. [You can see the interactive maps yourself at the link!]

The maps are already proving their worth. Reef management agencies around the world are using them to plan and assess conservation work and threats to reefs."

-via ScienceDirect, February 15, 2024

#oceanography#marine biology#marine life#marine science#coral#coral reefs#environment#geography#maps#interactive maps#ai#ai positive#machine learning#conservation news#coral reef#conservation#tidalpunk#good news#hope#full disclosure this is the same topic I published a few days ago#but with a different article/much better headline that makes it clear that this is “throughout the world there are more reefs”#rather than “we just found an absolutely massive reef”#also included one of the maps this time around#bc this is a really big deal and huge sign of hope actually!!!#we were massively underestimating how many coral reefs the world has left!#and now that we know where they are we can do a much better job of protecting them

444 notes

Text

i know some fuckers reupload my art without a care in the world. i've been fighting w this for years now and it was a major reason why i deleted my previous art blog and stopped posting art for a long time. i dont hunt the internet to catch everyone tho, even if it pisses me off greatly.

BUT if anyone ever sees my oc art reuploaded, let me know so i can deal with this. one of my biggest fears is people stealing my ocs/worlds or claiming them, i saw that happen to someone once and its scary as hell. i wont tolerate that w my ocs. literally fuck you.

and regarding the AI ask just now; please dont use my OCs for things such as roleplay or anything.

also i rb sm abt it and thought it's obvious, especially as an artist, what my stance on AI is and what a deep hatred i have for it. do NOT use my things for ANY of that shit.

and, in general while were on the topic of AI bc i see this SO OFTEN: you cant be anti AI and then turn around and use AI writing programs, its all scraped and based on stolen shit. please protect writers as much as artists and VAs.

#fanart of my ocs is ok yes but what i mean is ITS NOT OK TO REUPLOAD MY OCS or use them for personal stuff like RPs !!!!!!!!#im not coming for the anon specifically. this is a general thing and im just terribly upset at the AI shit as a whole#its frustrating me so much. my aunts fiance is a super arrogant useless techbro and its a topic we often discussed#and he couldnt even bring proper explanations that mf was just fumbling for excuses and unable to give me any coherent explanations#(by now i mostly dont even acknowledge him outside of basic greetings bc i dont like him anyway. that guy is an idiot in the worst way)#it rlly gets me from 0 to 100#babbles

267 notes

Text

A message to artists against AI. From @/dannyphantomexe on YouTube.

8 notes

Text

Funniest thing for me about the TS MC is that they've never heard the saying "still waters run deep."

Just b/c it made me wonder what other common sayings they've never heard.

Leander: I hope I can help you learn your way around! You must feel like a fish out of water in Eridia.

MC: The city's not so bad that I feel like I'm choking to death, Leander.

Leander: That's...not quite what I meant?

Here's another one:

Mhin: I found a way into the Senobium. If I'm being honest, it feels too good to be true, but I'm not going to look a gift horse in the mouth.

MC: Is...giving horses as gifts common where you're from?

Mhin: What.

Wait, one more, one more:

Ais: Little birdie told me you broke into the Senobium last night.

MC: You can talk to birds?

Ais: I'm talkin' to you, aren't I, sparrow?

#luckymeme#touchstarved game#touchstarved meme#touchstarved mc#vere#touchstarved vere#leander#touchstarved leander#mhin#touchstarved mhin#ais#touchstarved ais#i love a smart mc esp in a dating sim#but even more than that#i love a smart mc that's stupid about a couple things#just not in the know on some topics

801 notes

Note

Have you seen the AutoGPT framework? It adds scaffolding to LLMs so that they can run indefinitely with a memory store. Would that be Turing complete?

so there are three caveats here:

it's been 20 years since I actually studied this topic, and I've forgotten like 95% of what I learned

a wrapper program that runs an FSM in a loop with extra input from an oracle (the internet) is a lot harder to reason about than an FSM on its own

I found the GitHub for this, but I'm not gonna read that many lines of code for free

all that said, my impression from an initial skim of the AutoGPT codebase is that it's making some pretty extraordinary assumptions about GPT's ability to generate sensible results for the kind of prompts it uses.

it's essentially trying to break down work into bite sized pieces by telling the text generator to:

deepdream a bureaucracy of specialized task runners

handle a user request by writing delegated tasks for members of the bureaucracy

execute those tasks as though you're the recipient member of the bureaucracy

repeat until "done"
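
the loop described above might look roughly like this sketch. it is NOT AutoGPT's code or API: `run_agent`, `generate`, and `max_steps` are hypothetical names, and `generate` stands in for one call to a text generator. the first way this can go wrong hinges on the `max_steps` knob: with a cap, the whole loop is still bounded; with `max_steps=None` it really can run "until done", which might be never.

```python
from typing import Callable, Optional


def run_agent(
    request: str,
    generate: Callable[[str], str],
    max_steps: Optional[int] = None,
) -> list[str]:
    """Hypothetical plan/execute loop (illustrative only, not AutoGPT's code).

    Each iteration makes one bounded call to `generate`, feeding it everything
    produced so far. max_steps=None means "repeat until DONE" with no cap.
    """
    memory: list[str] = [f"REQUEST: {request}"]
    step = 0
    while max_steps is None or step < max_steps:
        output = generate("\n".join(memory))  # one finite-time call per iteration
        memory.append(output)                 # context carried into the next call
        if output.strip().upper() == "DONE":
            break
        step += 1
    return memory


# Usage with a stub "generator" that declares itself done after two tasks.
calls = {"n": 0}


def stub(prompt: str) -> str:
    calls["n"] += 1
    return "DONE" if calls["n"] > 2 else f"task {calls['n']}"


print(run_agent("write a poem", stub))
# ['REQUEST: write a poem', 'task 1', 'task 2', 'DONE']
```

note that the stub decides when it's "done"; whether a real text generator ever reliably gets there is exactly the open question.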

there's a couple different ways that this can go wrong

first off, it's not clear to me whether the above hierarchical breakdown of work is being done in a way that's allowed to loop "until done". if not, we're back to FSMs.

second off, it's not clear to me whether the text generator can generate subtasks competently, the way AutoGPT is requesting them, without already being turing complete. I see a lot of hay being made in the prompts about "explain your reasoning", as context to be passed along to future invocations in order to produce more meaningful results, but answering that prompt requires a level of introspection where I'd be surprised if an FSM was capable of generating a real answer, instead of some "sounds normal" mimic handwave. and if these output fields are garbage, then the inductive argument (that the preserved context makes each output better and not worse) falls apart; you'd get something that maybe has all the physical organs to emulate a turing machine, but miswired such that it'll never actually succeed at computing anything beyond the sum of its parts.

8 notes

Note

Au where Conan is a weed dealer. Cue him sitting inside Kogoro's office and laughing calmly with bloodshot, red eyes while smoking.

taking down the B.O. the "normal" way is taking too long. Conan and Haibara are going to build a criminal empire so large it will chase them out of business and they're going to start it by selling meth without getting caught because who would even suspect them?

#asks#dcmk#detective conan#'it's pride month kids you know what that means' repeating endlessly in my head#shout out to that one case where conan was like 'taking drugs is as evil as committing murder'#also same case where the americans were like 'we're gonna name our restaurant K3 which stands for three Ks' bro.... brooo#sorry for going a bit off topic from the original prompt..#i've started watching breaking bad and i'm really feeling that 'walter white stop lying to your poor wife' post#my art#edogawa conan#haibara ai#agasa hiroshi

1K notes

Text

For all the talk about Arcane and the (rightful) vitriol towards generative AI I see on my dash, I’m kinda surprised that I haven’t seen any discussion of the fact that Viktor invented magical AI with the Hexcore. Hextech that learns is just another way of saying machine learning (the other name for AI; eh, it’s more nuanced than that, but you get it), and Viktor’s plotline basically turns into a standard “scientist consumed by his own AI” plot after that.

#arcane#arcane meta#Viktor arcane#maybe all that discussion was back in the S1 fandom days idk#but with AI such a hot topic the fact that Viktor made magical AI using Jayce’s more standard invention as a foundation#is surprisingly not a line of meta I’ve seen anywhere else#if anything Arcane is a surprisingly anti scientific text on some levels#or rather it deals with the positives and negatives of rapid innovation and societal polarization

118 notes

Text

OpenAI's "o1" reasoning model is incapable by design of saying anything without thinking it over first.

Which results in cute (?) moments like this, where, in response to the single word "Thanks!", it says the same "any more questions" sound bite that all its siblings say, but only after five seconds of careful, verbose inner deliberation

(N.B. the user only gets to see a summary of o1's chain of thought, not the thing itself. The blockquote under "Thought for 5 seconds" is this summary; the original chain of thought was presumably somewhat longer, given that o1 can write ~30 words per second)

#ai tag#btw “Addressing the invariance of divergences” relates to the topic of the conversation before this#and looks like a mistake on the part of their very-janky summarizer model

159 notes

Text

So do we wipe our blogs or what?

877 notes

Text

Why do you always use that to piss me off? ...It makes me happy. We agreed that I'm in charge of the bars. But you come here all the time to watch me. How am I supposed to lead my people? Use your head, okay? Suit yourself. What's the matter? Chen Yi. Chen Yi! [...] Don't make me worry.

Chen Bowen as CHEN YI & Chiang Tien as AI DI KISEKI: DEAR TO ME (2023)

#kiseki: dear to me#kisekiedit#kdtm#kiseki dear to me#ai di x chen yi#chen yi x ai di#nat chen#chen bowen#louis chiang#chiang tien#jiang dian#userspring#userspicy#userrain#uservid#pdribs#userjjessi#*cajedit#*gif#your honor i would like to remind you and the jury that ai di is faking drunk at this time#at the most he is a little tipsy and Definitely pretending to be asleep.#now your honor please observe in the fifth gif ai di slinging his other arm around chen yi's neck. while ''''''asleep''''''#as well as the way it slides back down chen yi's shoulder and how he clearly puts it back to get a better grip#and next your honor i would like to direct your attention to the last gif. and the way ai di's fingers curl when zherui says#'love and admiration are different'. not only do they curl but they pinch. do you see?#as you can see from this evidence he is very aware of the conversation and desperately in need of chen yi's affection and attention#.............and its better than the goddamn darcy hand flex in my Personal Opinion. act your fucking heart out diandian.#and NOW observe the caption. by combining the conversation where chen yi drove off angrily with the one where he comes back for ai di#you can see that the Real reason he was upset was bc ai di was pushing him away#& he came back for him anyway. he just wants to be close to ai di all his actions & feelings are ai di-centered even when the topic is cdy

153 notes
