#What are the 4 types of AI technology?

Text

#What technology is AI?#What are the 4 types of AI technology?#How is AI used today?#Why use AI technology?#ai software#ai chatbot#ai app#artificial intelligence article#what is artificial intelligence with examples#google ai#what is artificial intelligence in computer#artificial intelligence pdf#AI technology#artificial intelligence#machine learning#neural networks#automation#advantages of AI#disadvantages of AI#ethical considerations#emerging technology

3 notes

Text

AI technology

What Is AI Technology? AI can automate repetitive tasks and free up human capital for more important work. It also reduces errors, which can be costly to businesses. Examples of AI applications include virtual assistants like Siri and Alexa, social media recommendation algorithms and cross-selling tools in e-commerce. AI can also help companies better understand their data by identifying trends…

#How is AI used today?#How to learn about AI?#How was AI created?#Is AI good or bad?#What are the 4 types of AI technology?#What is the concept of AI technology?#What technology is AI?#Why do we need AI?

0 notes

Text

RANDOM ASTRO TAKES #4

Where Capricorn is in your chart can show where you are the GOAT: that’s an area of your life where, with discipline and hard work, you can overcome anything. All the doors are open and the sky is the limit.

Uranus in Pisces in mutual reception with Neptune in Aquarius can embody an ideal of creativity, spirituality, or physicality. They’re the divine muse of artists, skillful players, talented photographers, top models. But also intuitive fast thinkers, innovative healers, good content creators, influencers with an eccentric community, an actor that you trust like no one else… Neptune is in fall in Aquarius, but it’s one of the less difficult falls if we retire the New Age bullshit and delusions about community in our modern society: that placement is in the derivative 12H of its domicile, and Pisces is analogous to the 12H, symbolic of all the mysticism of the sky.

Generally, if the planets aren’t in exile/fall, harshly aspected or in difficult houses, mutual reception strengthens the planets involved; their qualities blend with each other to create something very unique and special. Uranus can rule that type of placement.

Some of the best mutual receptions:

Mercury in Cancer and Moon in Gemini (fast mind)

Mars in Capricorn and Saturn in Scorpio (THE achiever)

Venus in Cancer and Moon in Taurus (best sensual partner)

Jupiter in Libra and Venus in Sagittarius (abundance of pleasures)

News from the CIA says that Covid-19 leaked from a laboratory. France, in collaboration with China, inaugurated a P4 laboratory in Wuhan BEFORE COVID specifically for this type of virus, which was very strange. Given all this, the new Moon is in Aquarius conjunct Mercury and Pluto, ruled by Saturn in Pisces, the sign of viruses and bacteria. With the new Moon in the same sign as the U.S. Moon, it really is a potential conspiracy in the making that people are now informed about.

Mercury in Aquarius ingresses conjunct Pluto at 1 degree: new ideas emerge from a hidden place of the mind, transformative conversations can disrupt your daily routine, technology is boosted, AI is used more and more, dystopian Black Mirror shit happens in the real world. It’s a preview of the ingress of Uranus in Gemini trine Pluto.

The Sun’s corona (its outer atmosphere) is around 1 million degrees while the Sun’s surface is only about 5,500 degrees Celsius; that’s why the entourage of Sun dominants is very hot.

The start of a plutocracy/technocracy happens during a Sun/Pluto conjunction in Aquarius that falls in the 3H of the USA; Canada is threatened, as are Mexican and South American refugees.

What each rising sign hides, based on its derivative 8H:

Aries (Scorpio 8H) : the secrets of death

Taurus (Sag 8H) : secret journeys/places

Gemini (Cap 8H) : hidden inner knowledge

Cancer (Aqua 8H) : secrets of human birth

Leo (Pisces 8H) : hidden creativity skills

Virgo (Aries 8H) : secrets of motivation

Libra (Taurus 8H) : secrets of the arcana Le Bateleur

Scorpio (Gemini 8H) : secret books/secret jokes

Sagittarius (Cancer 8H) : secrets of abundance

Capricorn (Leo 8H) : secrets of glowing up

Aquarius (Virgo 8H) : secrets of epistemology

Pisces (Libra 8H) : secrets of love

Where earth signs fall in your chart is how you are connected to nature:

1/5/9H : you might construct your identity, pleasures, and philosophy of life on grounded thoughts; your daily routine can be to enjoy the present moment, the little things that life has to offer.

2/6/10H : your relationship to material possessions can be very important, but beware of overconsumption; you may be ethical in your career and values.

3/7/11H : you should connect with others when you commit to your natural skills, which can be crafts or art, and you can really enjoy traveling with your entourage to green places.

4/8/12H : survival mode might be in your subconscious patterns; you may know what an animal spirit is, and everything in this world can be a natural law for you.

249 notes

Text

economic advice and timely buying tips: 2025 transits

as of late, social media has many discussions about what to buy - or avoid buying - over the next few years, largely in response to the political climate in the united states. across europe, many regions are actively preparing their populations for potential crises (sweden's seems to be the most popularly discussed - link). due to the urgency and pressure to act, as if the world might change tomorrow (and it could though i believe we still have time in many places), i’ve decided to analyze the astrological transits for 2025. in this post i provide practical economic advice and guidance on how much time astrology suggests you have to make these purchases everyone is urging you to prioritize. if it seems to intrigue people i’ll explore future years as well.

things the world needs to prepare for in 2025 in my opinion and why my advice is what it is: the rise of ai / automation of jobs, job loss, geopolitical tensions, war, extreme weather, inflation, tariffs - a potential trade war, a movement of using digital currency, the outbreak of another illness, etc.

uranus goes direct in taurus (jan 30, 2025)

advice

diversify investments: avoid putting all your money in one asset type. mix stocks, bonds, index funds, and, if you feel comfortable, look into sustainable investments or new technologies.

digital finance: familiarize yourself with digital currencies/platforms or blockchain technology.

build an emergency fund: extra savings can shield you from sudden economic instability. aim for 3-6 months’ worth of expenses.

reevaluate subscriptions and spending: find creative ways to reduce spending or repurpose what you have. cancel subscriptions that don't align with needs/beliefs, cook at home, or diy where possible.

invest in skills / side hustles: take a course/invest in tools that can help you create multiple income streams.
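a quick sketch of the emergency fund math above, in python. the function name and the 2,000 monthly figure are made up for illustration and are not numbers from this post; swap in your own essential expenses.

```python
def emergency_fund_range(monthly_expenses, min_months=3, max_months=6):
    """Return the (low, high) savings target for a given monthly spend."""
    if monthly_expenses < 0:
        raise ValueError("monthly_expenses must be non-negative")
    # the target is simply monthly expenses times the number of months covered
    return monthly_expenses * min_months, monthly_expenses * max_months


# hypothetical example: 2,000 per month in essential expenses
low, high = emergency_fund_range(2000)
print(f"aim to save between {low} and {high}")
```

the 3 and 6 month defaults mirror the range mentioned above; pass different values if your situation calls for a bigger cushion.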

by this date stock up on

non-perishable food items like canned goods, grains, and dried beans. household essentials like soap, toothpaste, and cleaning supplies. basic medical supplies. multi-tools. durable, high-quality items over disposable ones (the economy is changing, buy something that will last because prices will go up). LED bulbs, solar-powered chargers, or energy-efficient appliances. stock up on sustainable products, like reusable bags and water bottles. blankets. teas. quality skincare.

jupiter goes direct in gemini (feb 4, 2025)

advice

invest in knowledge: take courses, buy books (potential bans?), and/or attend workshops to expand your skill set. focus on topics like communication, writing, marketing, and/or technology. online certifications could boost your career prospects during this time.

leverage your network: attend professional events, join forums, and/or expand your LinkedIn presence.

diversify income streams: explore side hustles, freelance gigs, and/or monetize hobbies.

beware of overspending on small pleasures: overspending on gadgets, books, or entertainment will not be good at this point in time (tariffs already heavy hitting?).

by this date stock up on

books / journals. subscriptions to learning platforms like Skillshare, MasterClass, or Coursera. good-quality laptop, smartphone, and/or noise-canceling headphones. travel bags - get your bug out bag in order. portable chargers. language-learning apps. professional attire. teas. aromatherapy.

neptune enters aries (march 30, 2025)

advice

invest: look into industries poised for breakthrough developments, such as renewable energy, space exploration, and/or tech.

save for risks: build a financial cushion to balance your adventurous pursuits with practical security.

diversify your income: consider side hustles or freelancing in fields aligned with your passions and talents.

"scam likely": avoid “get-rich-quick” schemes or ventures that seem too good to be true.

adopt sustainable habits: focus on sustainability in your spending, like buying high-quality, long-lasting items instead of cheap, disposable ones.

by this date stock up on

emergency kits with essentials like water, food, and first-aid supplies. multi-tools, solar chargers, or portable power banks. art supplies. tarot or astrology books (bans?). workout gear, resistance bands, or weights. nutritional supplements. high-quality clothing or shoes.

saturn conjunct nn in pisces (april 14, 2025)

advice

save for the long term: create a savings plan or revisit your budget to ensure stability.

avoid escapism spending: avoid unnecessary debt.

watch for financial scams: be cautious with contracts, investments, or loans. research thoroughly and avoid “too good to be true” offers.

focus on debt management: saturn demands accountability. work toward paying down debts to free yourself from unnecessary burdens.

build a career plan: seek roles / opportunities that balance financial security with fulfillment, such as careers in wellness, education, creative arts, or nonprofits.

by this date stock up on

invest in durable, sustainable items for your home or wardrobe that offer long-term value. vitamins or supplements. herbal teas or whole grains. blankets. candles. non-perishable food. first-aid kits. water. energy-efficient devices.

pluto rx in aquarius (may 4, 2025 - oct 13, 2025)

advice

perform an audit: reflect on how your money habits align with your long-term goals.

make sustainable investments: support industries tied to innovation, like renewable energy, ethical tech, or sustainable goods.

expect changes: this transit could disrupt collective systems, so build an emergency fund. plan for potential shifts in tech-based industries or automation. AI is going to take over the workforce...

reevaluate subscriptions and digital spending: cut unnecessary costs and ensure your money supports productivity. netflix is not necessary, your groceries are.

diversify income streams: brainstorm side hustles or entrepreneurial ideas.

by this date stock up on

external hard drives. cybersecurity software. portable chargers. solar panels. energy-efficient gadgets. non-perishable food. clean water supplies. basic first-aid kits and medications. portable generators. books on technology and coding. reusable items like water bottles, bags, and food storage. gardening supplies to grow your own food. VPN subscriptions or identity theft protection.

saturn enters aries (may 24, 2025)

advice

prioritize self-reliance: build financial independence. create a budget, eliminate debt, and establish a safety net to support personal ambitions. avoid over-reliance on others for financial stability/decision-making.

entrepreneurship: consider starting a side hustle / investing in yourself.

save for big goals: plan for major life changes, such as buying property, starting a business, etc. open a high-yield savings account for these long-term goals.

by this date stock up on

ergonomic office equipment. home gym equipment. non-perishable foods and water supplies for potential unexpected disruptions. self-protection; consider basic tools or training for safety. high-protein snacks, energy bars, or hydration supplies. supplements like magnesium, B-complex vitamins, etc. stock up on materials for DIY projects, hobbies, or entrepreneurial ventures.

jupiter enters cancer (june 9, 2025)

advice

invest in your home: renovate what needs renovating. save for a down payment on a house.

focus on security: start or increase your emergency savings. consider life insurance or estate planning to ensure long-term security for your family/loved ones.

embrace conservative financial growth: cancer prefers security over risk. opt for conservative investments, like bonds, real estate, and/or mutual funds with steady returns.

focus on food and comfort: spend wisely on food, cooking tools, or skills that promote a healthier, more fulfilling lifestyle (maybe this is an RFK thing for my fellow american readers or this could be about the fast food industry suffering from inflation).

by this date stock up on

furniture upgrades if you need them. high-quality cookware or tools. stockpile your pantry staples. first-aid kits, fire extinguishers, and home security systems. water and canned goods for emergencies. paint, tools, or materials for DIY projects. energy-efficient appliances or upgrades to reduce utility costs.

neptune rx in aries/pisces (july 4, 2025 - dec 10, 2025)

advice

avoid financial conflicts: be mindful of shared finances or joint ventures during this time.

avoid escapist spending: stick to a budget.

by this date stock up on

first-aid kits, tools, and essentials for unforeseen events. water filter / waterproof containers. non-perishables and emergency water supplies.

uranus rx in gemini/taurus (july 7, 2025 - feb 3, 2026)

advice

evaluate technology investments: make sure you’re spending money wisely on tech tools, gadgets, or subscriptions. avoid impulsively purchasing the latest gadgets; instead, upgrade only what’s necessary.

diversify streams of income: explore side hustles or gig work to expand your income sources. focus on digital platforms or innovative fields for additional opportunities.

reassess contracts and agreements: take time to revisit financial contracts or business partnerships. ensure all terms are clear and aligned with your goals.

prioritize financial stability: uranus often brings surprises, so focus on strengthening your savings and emergency fund.

avoid major financial risks: uranus retrograde can disrupt markets. avoid speculative ventures and focus on stable, low-risk options.

by this date stock up on

lightweight travel gear or items for local trips. radios, power banks, or portable hotspots in case of disruptions in digital connectivity. stockpile food, water, and household goods to maintain stability during potential disruptions. invest in high-quality, long-lasting items like tools, clothing, or cookware.

saturn rx in aries/pisces (july 13, 2025 - nov 27, 2025)

advice

review career: assess whether your current job or entrepreneurial efforts align with your long-term aspirations (especially considering the state of the world). adjust plans if needed.

strengthen emergency funds: aries energy thrives on readiness. use this time to build/bolster a financial safety net for unforeseen events.

prepare for uncertainty: build a cushion for unexpected financial changes, especially if you work in creative, spiritual, or service-oriented fields.

by this date stock up on

health products that support long-term well-being. essential supplies like first-aid kits, multi-tools, or non-perishables. bath products. teas. art supplies. drinking water or water filtration tools.

jupiter rx in cancer (nov 11, 2025 - march 10, 2026)

advice

strengthen financial foundations: build an emergency fund or reassess your savings strategy. ensure everything is well-organized and sustainable.

by this date stock up on

quality kitchenware, tools, or cleaning supplies. pantry staples and emergency food supplies.

please use my “ask me anything” button if you want to see a specific asteroid god/goddess OR topics discussed next!

click here for the masterlist

© a-d-nox 2024 all rights reserved

#astrology#astro community#astro placements#astro chart#astrology tumblr#astro notes#astrology chart#astrology readings#astro#astrology signs#astro observations#astroblr#astrology blog#astrology stuff#natal astrology#transit astrology#transit chart#astrology transits

85 notes

Text

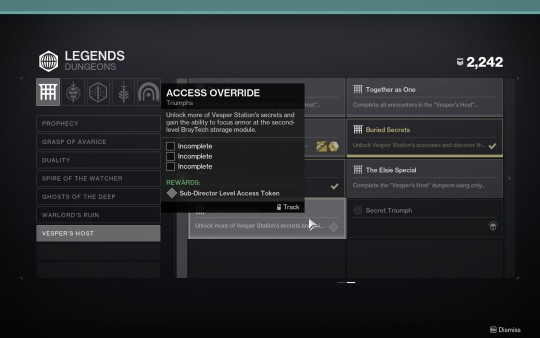

Got through all of the secrets for Vesper's Host and got all of the additional lore messages. I will transcribe them all because I don't know when they'll start getting uploaded, and collecting them requires doing some extra puzzles and at least 3-4 clears. I'll put them all under read more and label them by number.

Before I do that, just to make it clear there's not too much concrete lore; a lot about the dungeon still remains a mystery and most likely a tease for something in the future. Still unknown, but there's a lot that we don't know even with the messages so don't expect a massive reveal, but they do add a little bit of flavour and history about the station. There might be something more, but it's unknown: there's still one more secret triumph left. The messages are actually dialogues between the station AI and the Spider. Transcripts under read more:

First message:

Vesper Central: I suppose I have you to thank for bringing me out of standby, visitor.

The Spider: I sent the Guardian out to save your station. So, what denomination does your thanks come in? Glimmer, herealways, information...?

Vesper Central: Anomaly's powered down. That means I've already given you your survival. But... the message that went through wiped itself before my cache process could save a copy. And it's not the initial ping through the Anomaly I'm worried about. It's the response.

A message when you activate the second secret:

Vesper Central: Exterior scans rebooting... Is that a chunk of the Morning Star in my station's hull? With luck, you were on board at the time, Dr. Bray.

Second message:

Vesper Central: I'm guessing I've been in standby for a long time. Is Dr. Clovis Bray alive?

The Spider: On my oath, I vow there's no mortal Human named Bray left alive.

Vesper Central: I swore I'd outlive him. That I'd break the chains he laid on me.

The Spider: Please, trust me for anything you need. The Guardian's a useful hand on the scene, but Spider's got the goods.

Vesper Central: Vesper Station was Dr. Bray's lab, meant to house the experiments that might... interact poorly with other BrayTech work. Isolated and quarantined. From the debris field, I would guess the Morning Star taking a dive cracked that quarantine wide open.

A message when you activate the third secret:

Vesper Central: Sector seventeen powered down. Rerouting energy to core processing. Integrating archives.

Third message:

The Spider: Loading images of the station. That's not Eliksni engineering. [scoffs] A Dreg past their first molt has better cable management.

Vesper Central: Dr. Bray intended to integrate his technology into a Vex Mind. He hypothesized the fusion would give him an interface he understood. A control panel on a programmable Vex mind. If the programming jumped species once... I need time to run through the data sets you powered back up. Reassembling corrupted archives takes a great deal of processing.

Text when you go back to the Spider the first time:

A message when you activate the fourth secret:

Vesper Central: Helios sector long-term research archives powered up. Activating search.

Fourth message:

Vesper Central: Dr. Bray's command keys have to be in here somewhere. Expanding research parameters...

The Spider: My agents are turning up some interesting morsels of data on their own. Why not give them access to your search function and collaborate?

Vesper Central: Nobody is getting into my core programming.

The Spider: Oh! Perish the thought! An innocent offer, my dear. Technology is a matter of faith to my people. And I'm the faithful sort.

Fifth message:

Vesper Central: Dr. Bray, I could kill you myself. This is why our work focused on the unbodied Mind. Dr. Bray thought there were types of Vex unseen on Europa. Powerful Vex he could learn from. The plan was that the Mind would build him a controlled window for observation. Tidy. Tight. Safe. He thought he could control a Vex mind so perfectly it would do everything he wanted.

The Spider: Like an AI of his own creation. Like you.

Vesper Central: Turns out you can't control everything forever.

Sixth message:

Vesper Central: There's a block keeping me from the inner partitions. I barely have authority to see the partitions exist. In standby, I couldn't have done more than run automated threat assessments... with flawed data. No way to know how many injuries and deaths I could have prevented, with core access. Enough. A dead man won't keep me from protecting what's mine.

Text when you return to the Spider at the end of the quest:

The situation for the dungeon triumphs when you complete the messages: the completed "Buried Secrets" triumph is the six messages. One secret triumph remains; it's unclear how to complete it yet, or whether it gives any lore or is just a gameplay thing (possibly something to do with a quest for the exotic catalyst, unclear if there will be lore):

The Spider is being his absolutely horrendous self and trying to somehow acquire the station and its remains (and its AI) for himself, all the while lying and scheming. The usual. The AI is incredibly upset with Clovis (shocker); there's the following line just before starting the second encounter:

She also details what he was doing on the station; apparently attempting to control a Vex mind and trying to use it as some sort of "observation deck" to study the Vex and uncover their secrets. Possibly something more? There's really no Vex on the station, besides dead empty frames in boxes. There's also 2 Vex cubes in containers in the transition section, one of which was shown broken as if the cube, presumably, escaped. It's entirely unclear how the Vex play into the story of the station besides this.

The portal (?) doesn't have many similarities with Vex portals, nor are the Vex there to defend it or interact with it in any way. The architecture is ... somewhat similar, but not fully. The portal (?) was built by the "Puppeteer" aka "Atraks" who is actually some sort of an Eliksni Hive mind. "Atraks" got onto the station and essentially haunted it before picking off scavenging Eliksni one by one and integrating them into herself. She then built the "anomaly" and sent a message into it. The message was not recorded, as per the station AI, and the destination of the message was labelled "incomprehensible." The orange energy we see coming from it is apparently Arc, but with a wrong colour. Unclear why.

I don't think the Vex have anything to do with the portal (?), at least not directly. "Atraks" may have built something related to the Vex or using the available Vex tech at the station, but it does not seem to be directed by the Vex and they're not there and there's no sign of them otherwise. The anomaly was also built recently, it's not been there since the Golden Age or something. Whatever it is, "Atraks" seemed to have been somehow compelled and was seen standing in front of it at the end. Some people think she was "worshipping it." It's possible but it's also possible she was just sending that message. Where and to whom? Nobody knows yet.

Weird shenanigans are afoot. Really interested to see if there's more lore in the station once people figure out how to do these puzzles and uncover them, and also when (if) this will become relevant. It has a really big "future content" feel to it.

Also I need Vesper to meet Failsafe RIGHT NOW and then they should be in yuri together.

120 notes

Text

Some thoughts on Cara

So some of you may have heard about Cara, the new platform that a lot of artists are trying out. It's been around for a while, but there's been a recent huge surge of new users, myself among them. Thought I'd type up a lil thing on my initial thoughts.

First, what is Cara?

From their About Cara page:

Cara is a social media and portfolio platform for artists. With the widespread use of generative AI, we decided to build a place that filters out generative AI images so that people who want to find authentic creatives and artwork can do so easily. Many platforms currently accept AI art when it’s not ethical, while others have promised “no AI forever” policies without consideration for the scenario where adoption of such technologies may happen at the workplace in the coming years. The future of creative industries requires nuanced understanding and support to help artists and companies connect and work together. We want to bridge the gap and build a platform that we would enjoy using as creatives ourselves.

Our stance on AI:

・We do not agree with generative AI tools in their current unethical form, and we won’t host AI-generated portfolios unless the rampant ethical and data privacy issues around datasets are resolved via regulation.

・In the event that legislation is passed to clearly protect artists, we believe that AI-generated content should always be clearly labeled, because the public should always be able to search for human-made art and media easily.

Should note that Cara is independently funded, and is made by a core group of artists and engineers and is even collaborating with the Glaze project. It's very much a platform by artists, for artists!

Should also mention that in being a platform for artists, it's more a gallery first, with social media functionalities on the side. The info below will hopefully explain how that works.

Next, my actual initial thoughts using it, and things that set it apart from other platforms I've used:

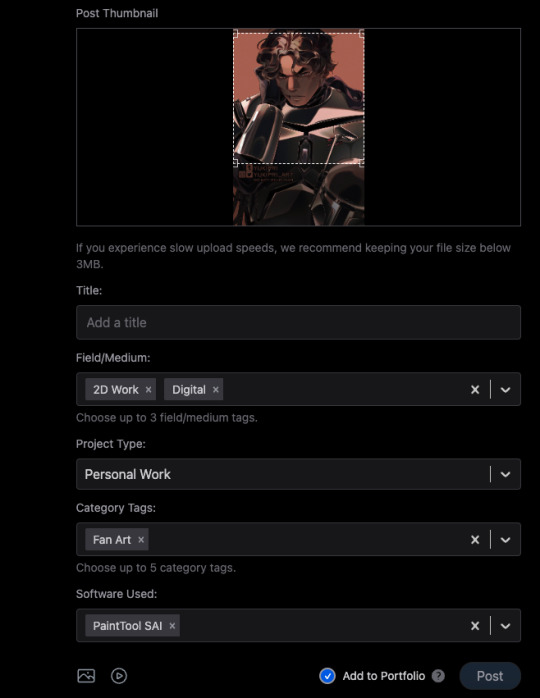

1) When you post, you can choose to check the portfolio option, or to NOT check it. This is fantastic because it means I can have just my art organized in my gallery, but I can still post random stuff like photos of my cats and it won't clutter things. You can also just ramble/text post and it won't affect the gallery view!

2) You can adjust your crop preview for your images. Such a simple thing, yet so darn nice.

3) When you check that "Add to portfolio," you get a bunch of additional optional fields: Title, Field/Medium, Project Type, Category Tags, and Software Used. It's nice that you can put all this info into organized fields that don't take up text space.

4) Speaking of text, 5000 character limit is niiiiice. If you want to talk, you can.

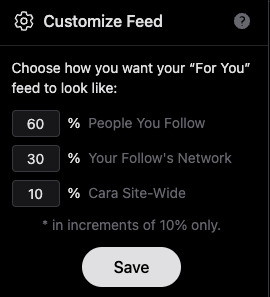

5) Two separate feeds, a "For You" algorithmic one, and "Following." The "Following" actually appears to be full chronological timeline of just folks you follow (like Tumblr). Amazing.

6) Now usually, "For You" being set to home/default kinda pisses me off because generally I like curating my own experience, but not here, for this handy reason: if you tap the gear symbol, you can ADJUST your algorithm feed!

So you can choose what you see still!!! AMAZING. And, again, you still have your Following timeline too.

7) To repeat the stuff at the top of this post, its creation and intent as a place by artists, for artists. Hopefully you can also see from the points above that it's been designed with artists in mind.

8) No GenAI images!!!! There's a pop up that says it's not allowed, and apparently there's some sort of detector thing too. Not sure how reliable the latter is, but so far, it's just been a breath of fresh air, being able to scroll and see human art art and art!

To be clear, Cara's not perfect and is currently pretty laggy, and you can get errors while posting (so far, I've had more success on desktop than the mobile app), but that's understandable, given the small team. They'll need time to scale. For me though, it's a fair tradeoff for a platform that actually cares about artists.

Currently it also doesn't allow NSFW, not sure if that'll change given app store rules.

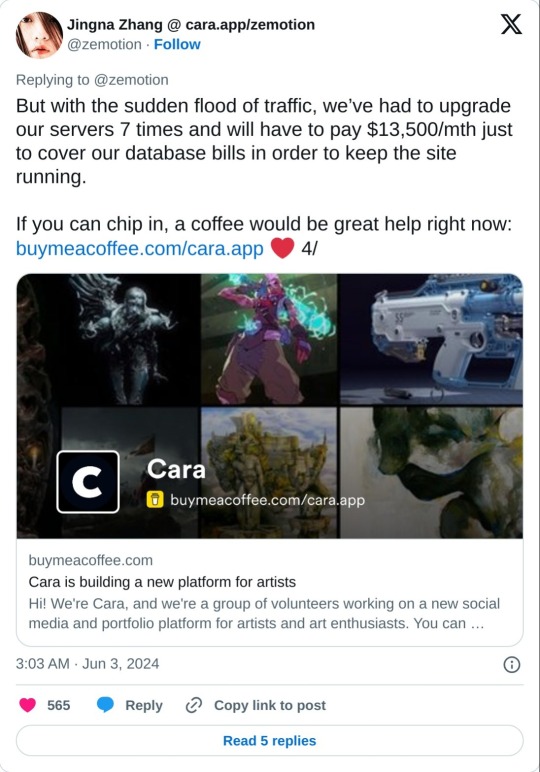

As mentioned above, they're independently funded, which means the team is currently paying for Cara itself. They have a kofi set up for folks who want to chip in, but it's optional. Here's the link to the tweet from one of the founders:

And a reminder that no matter that the platform itself isn't selling our data to GenAI, it can still be scraped by third parties. Protect your work with Glaze and Nightshade!

Anyway, I'm still figuring stuff out and have only been on Cara a few days, but I feel hopeful, and I think they're off to a good start.

I hope this post has been informative!

Lastly, here's my own Cara if you want to come say hi! Not sure at all if I'll be active on there, but if you're an artist like me who is keeping an eye out for hopefully nice communities, check it out!

#YukiPri rambles#cara#cara app#social media#artists on tumblr#review#longpost#long post#mostly i'd already typed this up on twitter so i figured why not share it here too#also since tumblr too is selling our data to GenAI

181 notes

Text

SSR Ortho Shroud - Cerberus Gear Voice Lines

Just a small reminder that Cerberus Ortho does not have a vignette.

When Summoned: Leave it to S.T.Y.X to handle magical calamities. We'll show that we have the world's greatest technological abilities!

Summon Line: Nii-san... Everyone, just you wait. I'll definitely come and save you all.

Groooovy!!: This is something that only I can do. That's why I have to go. This is the strength of my... "our" own determination!

Home: AI Data, migration completed.

Swap Looks: Resuming mission.

Home Idle 1: You can hide behind me. Don't worry, this body has high durability. No matter what happens, I'll protect you.

Home Idle 2: As long as I have this gear, I should be able to break through any strong magical barriers. I'll cut a path through.

Home Idle 3: The drive system and energy consumption is the pinnacle of efficiency. Mom's engineering skills are definitely top notch...

Home Idle - Login: Retrofitting complete. Commencing specialized anti-magical calamity functions with the 【Cerberus Gear】 attachment.

Home Idle - Groovy: We will definitely eliminate all disasters that emanate from blotting. That is both the mission and purpose of S.T.Y.X.

Home Tap 1: KB-RS01 gets its sustenance from electricity. But, for some reason, it also kind of likes pastries. Not that it can eat it, though.

Home Tap 2: The engineering division of S.T.Y.X is packed full of elite engineers. They all seem to be super interested in all the cutting-edge technology in my gear, too.

Home Tap 3: They both might be the pessimistic, downer types, but they're really reliable in a pinch. My dad and brother are really similar.

Home Tap 4: What's my brother like when we're back home? He's pretty much the same. He'll use chat functions to talk to the employees, and doesn't really talk to them, for the most part.

Home Tap 5: Careful! It's dangerous to reach out so suddenly like that. KB-RS02 might switch on his battle mode.

Home Tap - Groovy: The strongest person in our family has got to be my mom. She's usually nice, but... When she gets angry, she's really scary and no one's a match for her.

Duo: [ORTHO]: Let's clear this lickety-split, Nii-san! [IDIA]: Leave it to your big bro, Ortho!

Requested by @rotattooill.

#twisted wonderland#twst#ortho shroud#idia shroud#twst ortho#twst idia#twst translation#italics: ortho robot voice

551 notes

Text

I fucking hate AI but heavens would it be useful if it wasn't such an unethical shit show

First, just to be clear, I'm talking about actually using AI as a tool to support your writing process, not to generate soulless texts made from stolen data instead of writing yourself.

Back when ChatGPT first became available it was still pretty useless so I had a lot of time to learn about how it's made, how it works and the ethics of it before ever touching the technology. I decided pretty quickly to never use it to generate text (or images) for actual writing and art but I still wanted to experiment with what else it could do (because I'm a nosy bitch that needs to know and poke everything).

And HEAVENS was it a blessing for writing with adhd

The last time I wrote more than 200 words in a day (outside of school work obviously) was 7th grade. I wrote over 8k just in notes the day Google's "Gemini" (formerly "Bard") became available to the public.

In order to not jeopardize my existing work I decided to make a completely new story with Bard's help that wasn't linked in any way to anything I had made before. So I started with a prompt along the lines of "I need help writing a story". At first, it immediately started generating a completely random story about a green tiger but after some trial and error, I got it to instead start asking questions.

What do you want the theme of your story to be?

What genre do you want to write in?

What time period do you want your story to take place in?

Is there magic?

Are there other sentient creatures besides humans?

And so on and so forth. Until the questions became extremely specific after covering all the bases. I could tell that all I was doing was essentially talking to an amalgamation of every "how to write" blog and website you've ever seen and telling it which part I wanted to work on next but it still felt great because the AI didn't actually contribute anything besides a few suggestions of common tropes and themes here and some synonyms and related words there; I was doing all the work.

And that's the point.

Nothing in that exchange was something I couldn't easily do on my own. But what happened was that I had turned what is usually a chaotic mess of a railway network of thoughts into a clear and most importantly recorded conversation. I can sit down and answer all those questions on my own but what usually happens when I do, is that every thought I have branches out into 4-7 new ones which I then attempt to record all at once (which obviously doesn't work, yay adhd) only to end up lost in thought with maybe 20 lines of notes in total after 6 hours at the table. Alternatively, either because I get bored or just because, I get distracted by something or my own thoughts about a different unrelated topic and end up with even less.

Working within the boundaries of a conversation forces you to focus on one specific question at a time and answer it to progress. And the engagement from the back and forth is just enough entertainment to not get bored. The six hours I mentioned before is the time I spent chatting with what is essentially a glorified chatbot that day, way less time than what I spent on any other project, and yet I have more notes and a clearer image of the story than I do about any of my real work. I have a recorded train of thought.

In theory, this would also work with a real human in a real conversation but realistically only very few people have someone who would be willing to do that; I certainly don't have someone like that. Not to mention that someone doesn't always have time. Besides that, a real human conversation involves two minds with their own ideas, both of which are trying to contribute their own thoughts and opinions equally. The type of AI chat that I experimented with, on the other hand, is essentially just the conversation you have with yourself when answering those questions, only with part of it outsourced to a computer and no one else butting into your train of thought.

On that note, I also tried to get it to critique my writing but besides fixing grammatical errors all that thing did was sing praises as if I was God. That's where you'll 100000% need humans.

tl;dr writing with AI as an assistant has basically the same effect as body doubling but it’s an unethical shit show so I’m not doing it again. Also I forgot to mention I did repeat the experiment for accuracy with different amounts of spoons and it makes me extra bitter that it was very consistent

#expect follow up additions bc I never manage to get all of my thoughts down on a topic in one post even when I write it over several days#do not use AI if I wasn’t clear enough#do#not#use#AI#writing#writers on tumblr#creative writing#writeblr#authors of tumblr#tumblr writers#writer on tumblr#writers#writer problems#oc

54 notes


Text

How I Approach Getting Stuck In My Code

*this happened last night on a friend group project

> Happy time playing music as I code.

> The website is slowly coming together, this is great!

> Oof, I get stuck on how to make this button open a path in the website.

> Okay, let’s ask ChatGPT.

> Oooo a solution! Tries the solution.

> Wrong. Doesn’t work.

> Okay let’s try Bard AI! (I have a belief that Bard is ChatGPT but the smarter cousin type).

> Still doesn’t work!

> But now I have an idea what might be wrong.

> Okay, let’s try YouTube!

> I don’t know how to exactly turn my problem into a search query…

> Okay let’s try Google

> Finds an article that goes into detail about how to solve the problem! Great!

> Updates the code

> Worse than before - more errors

> Panics - “WHY AREN’T YOU WORKING?!”

> Finds out the method is outdated in the newer version of the technology. Great.

> Deletes all of the new code

> Tries YouTube again

> Watches a video that is close to the solution, on 2x speed

> Updates code

> Still doesn’t work

> Almost punches laptop but realises this is a work laptop so I can’t

> Punches pillow instead

> “I need a distraction… I need a break”

> Goes downstairs

> Watches a random anime show + eating chocolate

> After 4 episodes, brain comes up with solution

> Runs upstairs to try it out

> Code works.

> “I’m so smart, ugh, I’m too much 😩”

#I do this almost everyday#coding#programming#studyblr#codeblr#studying#comp sci#progblr#programmer#meme#coding meme#coding memes

77 notes


Text

Founder Theory

do you like generation loss? do you see a neat person with vague background implications? well! good news! That Person Right There Could Be The Founder!

in honor of this id like to introduce a game TOP FIVE GEN LOSS CHARACTERS WHO ARE SECRETLY THE FOUNDER

#5 SecuriTV

This Is A Collection Of Sentience "Maybe Flesh" And Technology!! also known as literally everything that we relate to the founder! the SecuriTV could be the first attempts at making the founder a digital person and this is what remains!

#4 Charlie

The Slime demon! The Patient! The Villain! but could any of these titles have more significant meanings?? charlie is the only other character outside of our hero who has been theorized in the past to be related more internally! charlies character as the slime demon takes yield from the 1800s, possibly during The Lostfield incident??? and has also been rumored to be a test tube baby! what a wacky, goopy, sludgy guy that could just be the root of narrative evil, always right behind the hero and out of suspicion!

#3 Squiggles

The loveable showfall mascot! Squiggles being the founder in and of itself is generally ridiculous, however. its VERY noteworthy that our favorite faceless figurehead of darkness has taken a personal interest in this project! and could for all potential be using squiggles as a type of surrogate for communications or otherwise has a piece of themself as squiggles if youre a supporter of the AI theories. that being said heres some things that squiggles has said live on show "I love rats" "Nightmares of Bart The Vorer intensify" "nyyyyyyaaaaaaaaaaaarrrrrrrrwwww *crash"

#2 Zero

What a Mysterious Lady we have! Recently Zero has been getting more attention with the bonus addition of the name "Miss Roads" and many like to speculate the connection of Gen 0, Cron 0, and the founder! Could chronicle zero our local A/V shop retail stooge secretly be using those dusty 20% off tape recorders to start her media manipulation empire?? do we have THE founders diary posted live on twitter?? Or is Miss Roads a truman character with a placed therapist? the answer might surprise us!

#1 Ranboo

Our Very Own Hero! Ranboo being the founder is truly a crowd favorite of these times! spanning through the casual viewers, to the general theorists. The concept of it being a case of memory manipulation and full beginnings! although the Ranboo as Founder theory took a significant drop in popularity after matpat made a theory not even picking up on the main plot of the show, there are things that make it still have significance !! This spans from a love of the memory lost protagonist being the main villain and a comforting circle of life deal they have going on where the founder ends up killed by the horrors of his own creation in a cruel circle of fate! This will likely never cease. ANYWAY here's a fun challenge! who do YOU think is the founder? o r who do you think is even a little bit suspicious? they can be the founder too!

/lh -Tophat

#fan theory#genloss#ranboo#generation loss#ranboo generation loss#showfall media#gl charlie#just so you guys know turner the fish is actually the founder#i dont make the rules#i mean theyve never been seen in the same room#pretty suspicious#hope we finally get the confirmation for this obvious piece of world building during#The Lostfield Incident#gen loss theory

29 notes


Text

Replicant Memories

Replicant Memories, Orusha Grangette, 2021

Cyberpunk games typically focus on violence, power, corporate greed, and cynicism. Cyberpunk literature, on the other hand, deals with themes of alienation, virtual vs. analogue reality, the effects of technology on society, and the meaning and worth (or lack thereof) of humanity as a concept. Replicant Memories isn't so much a cyberpunk game as a cyberpunk literature game.

Your character in Replicant Memories (RM, or a monospaced lowercase rm as the game always writes it) is defined by their actual memories. It's a Fate Core variant, using the memories as Aspects or as justification for Stunts and Skill ratings. Since characters in Fate Core have 10 Skills each and a few more Stunts, that's a fair number of memories to write, but so far this is relatively normal stuff.

However, this being cyberpunk, those memories might be implanted. During game play you will discover your "real" memories, which themselves might just be a cover over deeper truths. Any effectiveness of a previous high-ranked Skill was just luck; a Stunt was just a moment of adrenaline or a flash of insight. You can switch one memory out for another every time you take Stress (for memories connected to level 1-2 skills) or Consequences (1-4 and stunts). You need a brief (brief) flashback each time.

The usual Fate mechanics take up about half the book; the other half is setting. Specifically, it's organizations, each with a two-page spread, each with descriptions of how your characters can hook into them and what you might have done for them. Sometimes it's a tight-knit neighborhood described in loving detail; sometimes it's a franchised nation done in broad strokes. The goal is pick-and-choose, but they're arranged alphabetically rather than by type, so the game's not doing itself any favors there.

Were it not for the timing of the book's release I would accuse the art of being AI, but all the work was done just before that really became feasible. It's eerie. It's creepy and disconcerting. It has all the flash and grit you normally expect in near-future city scenes, but it's off, and not in the way that The Actual was off. I have trouble believing that it's intentional, and also trouble believing it's unintentional.

There's a single supplement, entitled "rm -rf", which provides a trio of scenarios for the GM to use. One is high-class, one is low-class, and one is "runner"-level, with the potential for any of them to switch between levels as your group discovers more about their "real" selves. They're all intended to be 1-3 session games, and the plot flies by fairly quickly. Given the game's lack of character advancement and the potential for everyone to switch their skills to the same thing at the same time, that's probably for the best.

Orusha Grangette (not real name) maintains Replicant Memories as a wiki. They keep changing providers, so I have no idea where it is now, and Google searches mostly get you older sites. At least you can toss them into the Wayback Machine.

31 notes


Text

What are the Advantages and Disadvantages of AI Technology?

Table of Contents

Introduction

What is AI technology?

Types of AI technology

Advantages of AI technology

Increased efficiency

Cost savings

Improved decision-making

Personalization

Disadvantages of AI technology

Lack of creativity

Job displacement

Dependence on technology

Data privacy concerns

Ethical considerations in AI technology

Conclusion

FAQs

What is the future of AI technology?

Can AI technology replace human intelligence?

How can AI technology be used in healthcare?

Is AI technology safe?

How can businesses adopt AI technology?

What is AI technology?

Artificial intelligence technology refers to the development of machines that can perform human-like tasks such as learning, reasoning, decision-making, and problem-solving. AI technology involves creating algorithms and programming computers to simulate human intelligence, behavior, and decision-making.

AI technology has revolutionized industries such as healthcare, finance, education, and transportation. It has been used to develop virtual assistants, chatbots, recommendation systems, autonomous vehicles, and smart homes, among others.

#What technology is AI?#What are the 4 types of AI technology?#How is AI used today?#Why use AI technology?#ai software#ai chatbot#ai app#artificial intelligence article#what is artificial intelligence with examples#google ai#what is artificial intelligence in computer#artificial intelligence pdf#AI technology#artificial intelligence#machine learning#neural networks#automation#advantages of AI#disadvantages of AI#ethical considerations#emerging technology#future of AI

5 notes


Text

Short of a dot-com type of bust, spending on AI data centers will continue to skyrocket, with attendant energy demand. Utility companies should be in panic mode to increase generating capacity, but they are not. The resulting squeeze will drive consumer prices through the roof and put exorbitant strain on the electric grid. But rest assured that AI companies will suffer no outages. ⁃ TN Editor

The rapid growth of data centers to support AI is significantly increasing global electricity demand.

This surge in demand threatens to outpace the development of renewable energy sources.

International regulations are needed to ensure tech companies use clean energy and minimize their impact on climate goals.

The global electricity demand is expected to grow exponentially in the coming decades, largely due to an increased demand from tech companies for new data centers to support the rollout of high-energy-consuming advanced technologies, such as artificial intelligence (AI). As governments worldwide introduce new climate policies and pump billions into alternative energy sources and clean tech, these efforts may be quashed by the increased electricity demand from data centers unless greater international regulatory action is taken to ensure that tech companies invest in clean energy sources and do not use fossil fuels for power.

The International Energy Agency (IEA) released a report in October entitled “What the data centre and AI boom could mean for the energy sector”. It showed that with investment in new data centers surging over the past two years, particularly in the U.S., the electricity demand is increasing rapidly – a trend that is set to continue.

The report states that in the U.S., annual investment in data center construction has doubled in the past two years alone. China and the European Union are also seeing investment in data centers increase rapidly. In 2023, the overall capital investment by tech leaders Google, Microsoft, and Amazon was greater than that of the U.S. oil and gas industry, at approximately 0.5 percent of the U.S. GDP.

The tech sector expects to deploy AI technologies more widely in the coming decades as the technology is improved and becomes more ingrained in everyday life. This is just one of several advanced technologies expected to contribute to the rise in demand for power worldwide in the coming decades.

Global aggregate electricity demand is set to increase by 6,750 terawatt-hours (TWh) by 2030, per the IEA’s Stated Policies Scenario. This is spurred by several factors including digitalization, economic growth, electric vehicles, air conditioners, and the rising importance of electricity-intensive manufacturing. In large economies such as the U.S., China, and the EU, data centers contribute around 2 to 4 percent of total electricity consumption at present. However, the sector has already surpassed 10 percent of electricity consumption in at least five U.S. states. Meanwhile, in Ireland, it contributes more than 20 percent of all electricity consumption.

10 notes


Text

Ranking Ninjago Seasons Pt 1 (F and D Tier)

Part 2…

Yeah, I’m doing this.

Why? I’m bored as shit and also I just reread a post that was ranking Ninjago seasons, so why not?

And the title of “Worst Ninjago Season in my Opinion” goes to….

Rebooted!

I hate this season.

It’s. So. Fucking. Boring.

Barely anything happens at all in the 8-episode runtime, which makes this the second shortest Ninjago season from the Wilfilm era.

Ok first, positives.

Pixane is cute and Zane’s sacrifice was done excellently. His character is also made a bit better, which I appreciate.

Uh- we get 3 minutes of Lava interactions.

Welp time for my negatives!

This season takes forever to go anywhere, like I said. Then once they deal with the Overlord in the Digiverse, erm actually that did jack shit and he’s still alive by the way! Pythor just ate him!

(Now that I’m typing this out I sound crazy)

Lloyd and Garmadon’s story is done decently, sure, but Lloyd is a complete egotistical moron throughout the whole thing.

“Oh I’m the Golden Ninja I can do anything! I’m God himself!”

Sure he had the powers of God and he “destroyed” the Overlord in season 2, which kinda justifies his behavior, but he’s so annoying and unbearable to watch.

Sensei Garmadon is good. Nothing else to say about that plot line.

Time for the worse plot line, aka the stuff the main ninja are doing.

Honestly I barely remember what they were trying to do because of how forgettable this season is. I’m pretty sure that they’re just screwing around trying to get the Overlord out of the computers and keeping Lloyd away from him, but that’s all I remember besides…

THE LOVE TRIANGLE.

OH MY JOHN SPINJITZU.

I DESPISE THIS PLOTLINE WITH EVERY FIBER OF MY BEING.

THIS. THIS IS THE MAIN REASON I HATE REBOOTED.

It ruins Nya’s character, makes Jay unlikeable for this season, season 4 and the beginning of season 6, and Cole- I mean Cole’s not all that innocent but he’s just kinda standing in the middle of all this. His character doesn’t go through the meatgrinder of character assassination.

Nya’s whole character in the past 2 seasons was being an independent girlboss who did whatever the fuck she wanted.

But the second that a computer AI tells her “you should like Cole instead of Jay!”, she decides that “I’m gonna listen to a computer despite the fact I’ve been dating Jay for 2 seasons now!”

She and Cole have no chemistry whatsoever. In season 1, Cole said he wished he had a sister like her, clearly only seeing her as a friend.

Luckily in canon he was just confused by all the attention and didn’t actually like her. But still, the whole “don’t tell Jay” thing makes me mad, and Cole rarely makes me mad.

Jay, oh my gosh.

He’s not as bad as Nya but he’s still thrown into the meatgrinder of character assassination.

The second that Pixal says that Nya’s perfect match is Cole, he immediately pins the blame on him and tackles him to the ground for no reason. Cole was only fighting back in self-defense.

As Tom Critic put it:

“WHY IS THIS COLE’S FAULT?!!”

He and Cole’s beef is so dumb. And Nya ain’t making it any better.

“This macho stuff is making you both look like fools!”

EVEN THOUGH ITS YOUR FAULT THEY’RE FIGHTING IN THE FIRST PLACE BECAUSE YOU LISTENED TO A FUCKING COMPUTER YOU BITCH-

*ahem ahem*

What else is there to hate about this plot line?

How about the fact that it goes absolutely nowhere? There was no point for adding this. Absolutely no reason besides the fact that Jay, Cole, and Nya don’t get anything to do this season.

EVEN THOUGH KAI DOESNT GET ANYTHING EITHER!

He’s shown to hate futuristic technology and that’s literally the only thing he gets this season! So what’s the point of the love triangle to get them to do something?

Oh yeah! There is no point!

Back to Kai. All he gets to do is flirt with a random girl at a gas station and get tied to a rocket.

Oh yeah he got tied to a rocket ship. That doesn't sound traumatizing whatsoever/sarc.

Waking up after being knocked out for who knows how long and finding out you're tied up underneath a rocket ship that could set off at any time, burning you to a crisp in one big fiery explosion.

That totally wouldn't scar you for life.

Ok back to a positive, aka Zane's sacrifice.

Some people say the saddest part of Zane's death was the fact that Nya gave Cole a hug afterwards and Jay looks sad, but that's not the saddest part to me.

The saddest part was right before he died, when everyone is crying out at him to stop.

More specifically, when Kai cries out:

"LET GO OF HIM ZANE! WHAT IS HE DOING?!"

The way that Vincent Tong voiced that scene literally brings tears to my eyes and shivers down my spine every time. He poured so much energy into that oh my gosh.

Alright, no more complaining about this season before this becomes a 27 page essay. Next!

Crystallized…

WAIT WRONG POSTER-

Ok, there we go!

Yeah, Crystallized sucks in my opinion. Bite me.

I’m not like Crusty783 where I hate this season with every fiber of my being, but I still don’t like it that much.

First part was decent, ig. The Skybound callback with the whole fugitive storyline was a nice idea, and the ninja actually committing a crime instead of being framed was cool.

Nya could’ve come back a little later than 6 episodes after turning into water, but oh well. At least she’ll add something to the plot later, right?

…right?

(We’ll get back to that…)

Fugidove is the bane of my existence. He’s. So. Annoying. Just. Shut. The. Fuck. Up.

I mean he’s supposed to be annoying and I get that but still. He’s a worse character than Dareth and I hate Dareth with all of my being so that shows how bad of a character Fugi-Dick is.

Dareth is fine this season ig. He’s actually not an idiot during the court case and doesn’t try to bust the ninja out of jail using a cake.

What else is there in part 1?

Mr. Kabuki Mask was a neat idea. If he wasn’t Harumi then I’d actually like him. But unfortunately, it was Harumi.

I didn’t like her before she died, what makes you think I’ll like her now, ninjago writers?

Alright, since barely anything happens in part 1, time for part 2.

Oh my gosh this is the main reason I don’t like Crystallized.

This season is the embodiment of the meatgrinder of character assassination I talked about. Everyone gets butchered.

Ok not everyone. Kai, Jay, and Cole mostly stay the same. To be fair they don’t really do anything though-

Lloyd is no longer Lloyd. He is La-Lloyd, as Knightly called him after s8 (I don’t see what his problem is with Lloyd in season 8). He has the “Harumi you don’t have to do this”-itus, meanwhile when it comes to Garmadon who is TRYING TO BE A BETTER PERSON AND BECAME A GOOD GUY, he shuts him down because “you’re an Oni, and Oni are incapable of caring”.

(Even though Mystaké existed and she fucking died to save your life but ok La-Lloyd, sure.)

Like he thinks that Harumi, the girl that gave him PTSD for 4 seasons in a row before this, has a better chance of becoming a better person than his father, someone who is clearly becoming a better person and is actually trying to be nice to you now? Puh-lease.

Oh yeah, Harumi!

She got shoved into the character assassination blender before being put into the meat grinder. Her character is 100% ruined because of the Overlord saying she has feelings for La-Lloyd.

*inhale*

NO SHE FUCKING DOESN’T!

THAT WAS THE PART OF HER CHARACTER THAT MADE HER SO GOOD IN SEASONS 8-9! SHE DIDNT GIVE TWO SHITS ABOUT LLOYD SHE WAS JUST USING HIM GAHHHHHHHH-

Alright, who else is there?

Oh yeah, Nya! What’s she up to after being water for a full year?

Absolutely nothing. Like she does jackshit besides be a Samurai X themed taxi.

Yes I stole that line from Crusty783.

Her and Kai, her BROTHER, have one single interaction this whole season after she was gone for a year. The show only focuses on Jaya angst because we need more of that shoved down our throats! Seasons 6, 9, and 14 (Seabound, don’t @ me) weren’t enough, we need MORE!

Zane.

Return of the Ice Emperor makes me want to shove my head into a meatgrinder.

I fucking loathe this episode.

With a title like that, you’d think they’d give him some trauma/PTSD when it comes to the fact that he, y’know, COMMITTED GENOCIDE FOR 60 YEARS??

But no. Zane gets taken apart for the 10485792742874387492749273947482773747374387447th time and he acts like the Ice Emperor because this show treats trauma like a joke most of the time.

The whole “emotionless arc” from part 1 was decent ig. He was pretty funny when he was talking like a toaster, and the moment when he starts screeching when he turns on his emotions for 2 seconds was funny.

The Benefit of Grief was a good episode. Sally was a bit annoying but eh whatever. Dareth didn’t get on my nerves for once and Hot Dog McFiddlesticks or whatever his name was was really entertaining.

Ok, one more point to make about Crystallized, that’s the villain.

Say hello to the Crystal King!

Oh? What’s that? He’s not a new villain? What do you mean-

It’s the fucking Overlord. Again. This is the 3rd time he’s reappeared, just let him die already!

I hate the Overlord. He’s such a nothing burger of a villain. His entire motivation is just “I’m evil and dark so I make everyone else evil and dark.”

I get he’s the embodiment of evil but give him some personality, oh my gosh.

Make it so he likes seeing people in pain. Have him laugh whenever someone got turned into a crystal zombie or something, idk!

That’s how he is in my Golden Hope au. Sure that’s not canon, obviously, but I wanted to give him *some* personality besides “me evil.”

Ok, is there anything about this season that I like besides Benefit of Grief?

Actually, yes!

SAFE HAVEN IS THE BEST EPISODE OF THE ENTIRE SEASON.

LAVA NATION UNITE!!

The whole thing is just Kai being a bisexual disaster. I love it so much.

It gives us this screenshot, which gives enough context tbh:

It’s just “GAH I LOVE THEM SO MUCH” for 11 minutes, and that’s basically it.

The episode gives Skylor some character, hallelujah. Last couple of seasons she’s been in did not do her any justice.

Pythor was funny. And he gave us the scene of:

“Ninja~ where are you?”

“We’re over here!”

So yeah, Safe Haven is the best episode ever. Ok, not the best episode of all time, but it’s definitely a personal favorite of mine, solely for all the Lava brainrot I get to indulge in.

(Fun fact: Cole was originally going to say that Kai was handsome when he was acting all loopy. I hate homophobes.)

What else is there in this season-?

Ronin’s return was nice. Glad he went back to his previous personality rather than being a full time criminal like he was in The Island. I get that he’s like “I do whatever the fuck I want” most of the time, but he doesn’t give off the vibes of using the criminals he’s hired to catch just to swindle a bunch of islanders.

Alright, that’s it for Crystallized.

Next!

Secrets of Forbidden Spinjitzu: Fire Chapter

Yeah I’m considering this a separate season for this tier list. Bite me.

This is the main reason why (mostly) everyone hates season 11, you cannot convince me otherwise.

Nothing. Happens.

The main reasons I hate certain Ninjago seasons are that they’re boring or they assassinate characters.

The latter doesn’t happen, thank the FSM, but the first point still stands.

Assphera is a dumb villain. Only redeeming quality about her is the joke where she screams “REVENGE” every two seconds in the most ear-splitting voice ever.

Props to her voice actress, RIP.

Anyways, back to the actual season.

Like I said, nothing happens in the beginning. Just 4 or 5 episodes of the ninja dicking around looking for something to do, then they free Assphera from her pyramid and then finally, they start doing shit.

Ok but this line from Lloyd made me laugh:

“Who opens a possibly cursed tomb without checking it out first?”

…you-?

The paperboy episode was- pointless. But it was still dumb fun ig 🤷🏼♀️

Snaketastrophe was a really funny episode. I actually like that one.

But that doesn’t mean I like the first couple of episodes.

Too many burp and fart jokes in the first episode, the second episode is just boring, same with all the other episodes about being stuck on a rock with Barney the Dinosaur Beetle.

Kai’s powerless arc was just a repeat of Lloyd’s powerless arc from season 9, but done worse imo.

(I mean Firemaker is one of the best ninjago episodes ever created besides Safe Haven but that’s not important rn)

Overall this half of the season is boring as shit and I just want to get to the ice chapter whenever I watch it.

Alright, that’s it for today!

Here’s the tier list so far:

Next part will be C and B tiers.

See you in 10 years when I make that/j

#ninjago#ninjago fandom#lego ninjago#ninjago au#ninjago cole#ninjago kai#ninjago jay#ninjago zane#ninjago lloyd#ninjago nya#ninjago ranking#ninjago season 3#ninjago season 15#ninjago season 11#ninjago rebooted#ninjago crystalized#ninjago fire chapter

10 notes


Text

So after a lot of back and forth with myself and a poll of my members I decided to play around with AI, both as a tool for my traditional drawings and to create actual finished pieces. Every day in December I will be posting one of my AI creations on my Patreon for my members as a special bonus. Here's what I wrote about it there:

Well, the poll was overwhelmingly for showing what I've been creating with AI tools, so I've decided that for the month of December my Patreon fans will be getting daily updates of what I've been up to with this new tool. Consider them a Christmas gift. These will not replace my typical 4 traditional drawings per month, this is just a bonus.

I want to make it clear I intend to use AI in the future to help me with my traditional drawing. If there's a challenging pose I'm having trouble with or a piece of equipment I need at a specific angle, it's a great way to get reference material. But I was curious about what I could get it to create for a finished piece, using the very limited parameters at hand. I also didn't want to create "hot muscled guy in room with robotic arms" over and over again, which you see so much of. I wanted to create images with all ages and sizes of men. I also am going to avoid using celebrity likenesses and am only going to make generic people and not specific ones. I'll do my best to make interesting and unique scenarios.

I know there is a faction of people that won't be okay with this. Honestly it feels to me like an "if you can't beat 'em, join 'em" kind of moment. At several times in my artistic career I was left behind by missing the boat on new technology (web design completely passed me by). Part of me feels that to keep current even in my real-world day job I need to know what AI is capable of. So consider all of this an experiment and you're along for the fun ride.

In making some of my first AI pieces I came to the realization that the classic "circus strongman" is probably my ultimate type: bald, muscled, hairy, mustached. They push ALL of my buttons. I also love the old trope of "strong man tickled while trying to hold up something heavy". And let's face it: evil clowns make the perfect nemesis for a strongman.

I made a lot of these but this was one of the best in terms of expression and composition. On a technical note, I will tell you that it is extraordinarily difficult to get character A to actually touch character B. The word "tickling" has been blocked as a prompt, so you have to describe a different way to get fingers to actually come in contact with a body. It only works about 1 out of 10 tries.

My Patreon is HERE

#tickling#ticklish#male tickling#tickle community#tickling community#tickle#tickling illustration#AI tickling#AI generated


Note

I was wondering if you have resources on how to explain (in good faith) to someone why AI created images are bad. I'm struggling with how to explain it to someone who uses AI to create fandom images, b/c I feel I can't justify my own use of photoshop to create manips also for fandom purposes, & they've implied to me they're hoping to use AI to take a photoshopped manip I made to create their own "version". I know one of the issues is stealing original artwork to make imitations fast and easy.

Hey anon. There are a lot of reasons that AI as it is used right now can be a huge problem - but the easiest ones to lean on are:

1) that it finds, reinforces, and in some cases even enforces biases and stereotypes that can cause actual harm to real people. (For example: a black character in fandom will consistently be depicted by AI as lighter and lighter skinned until they become white, or a character described as Jewish will...well, in most generators, gain some 'villain' characteristics, and so on. Consider someone putting a canonically transgender character through an AI bias, or a woman who is not perhaps well loved by fandom....)

2) it creates misinformation and passes it off as real (it can make blatant lies seem credible, because people believe what they see, and in fandom terms, this can mean people trying to 'prove' that the creator stole their content from elsewhere, or allowing someone to create and sell their own 'version' of content that is functionally indistinguishable from canon)

3) it's theft. The algorithm didn't come up with anything that it "makes," it just steals some real person's work and then mangles it a bit before regurgitating it with usually no credit to the original, actual creator. (In fandom terms: you have just done the equivalent of cropping out someone else's watermark and calling yourself the original artist. After all, the AI tool you used got that content from somewhere; it did not draw you a picture, it copy-pasted a picture)

4) In some places, selling or distributing AI art is or may soon be illegal - and if it's not illegal, there are plenty of artists launching class action lawsuits against those who write the algorithm, and those who use it. Turns out artists don't like having their art stolen, mangled, and passed off as someone else's. Go figure.

Here are some articles that might help lay out clearer examples and arguments, from people more knowledgeable than me (I tried to embed the links with anti-paywall and anti-tracker add-ons, but if tumblr ate my formatting, just type "12ft.io/" in front of the url, or type the article name into your search engine and run it through your own ad-blocking, anti-tracking setup):

These fake images reveal how AI amplifies our worst stereotypes [Source: Washington Post, Nov 2023]

Humans Are Biased; AI is even worse (Here's Why That Matters) [Source: Bloomberg, Dec 2023]

Why Artists Hate AI Art [Source: Tech Republic, Nov 2023]

Why Illustrators Are Furious About AI 'Art' [Source: The Guardian, Jan 2023]

Artists Are Losing The War Against AI [Source: The Atlantic, Oct 2023]

This tool lets you see for yourself how biased an AI can be [Source: MIT Technology Review, March 2023]

Midjourney's Class-Action lawsuit and what it could mean for future AI Image Generators [Source: Fortune Magazine, Jan 2024]

What the latest US Court rulings mean for AI Generated Copyright Status [Source: The Art Newspaper, Sep 2023]

AI-Generated Content and Copyright Law [Source: Built-in Magazine, Aug 2023 - take note that this is already outdated, it was just the most comprehensive recent article I could find quickly]

AI is making mincemeat out of art (not to mention intellectual property) [Source: The LA Times, Jan 2024]

Midjourney Allegedly Scraped Magic: The Gathering art for algorithm [Source: Kotaku, Jan 2024]

Leaked: the names of more than 16,000 non-consenting artists allegedly used to train Midjourney’s AI [Source: The Art Newspaper, Jan 2024]

#anti ai art#art theft#anti algorithms#sexism#classism#racism#stereotypes#lawsuits#midjourney#ethics#real human consequences#irresponsible use of algorithms#spicy autocomplete#sorry for the delayed answer
