#spicy autocomplete

Text

Y'know, Who Framed Roger Rabbit hits a lot different in the modern age of LLMs. Websites exist right now where you can chat with all of your favorite characters - but only kind of because it's actually just Spicy Autocomplete roleplaying as those characters.

But now I'm watching a cartoon rabbit apologize to his human director for screwing up a take and bopping himself in the head to try and generate a correct response to the prompt only to keep screwing it up. And I'm like. Holy shit.

This, more so than I, Robot or The Matrix or Terminator or what have you, feels like it's where AI is heading. Instead of Skynet, they're trying to give us Toon Town.

43 notes

Note

I was wondering if you have resources on how to explain (in good faith) to someone why AI-created images are bad. I'm struggling with how to explain it to someone who uses AI to create fandom images, b/c I feel I can't justify my own use of Photoshop to create manips, also for fandom purposes, & they've implied to me they're hoping to use AI to take a photoshopped manip I made and create their own "version". I know one of the issues is stealing original artwork to make imitations fast and easy.

Hey anon. There are a lot of reasons that AI as it is used right now can be a huge problem - but the easiest ones to lean on are:

1) that it finds, reinforces, and in some cases even enforces biases and stereotypes that can cause actual harm to real people. (For example: a black character in fandom will consistently be depicted by AI as lighter and lighter skinned until they become white, or a character described as Jewish will... well, in most generators, gain some 'villain' characteristics, and so on. Consider someone putting a canonically transgender character through a biased AI generator, or a woman who is not perhaps well loved by fandom....)

2) it creates misinformation and passes it off as real (it can make blatant lies seem credible, because people believe what they see; in fandom terms, this can mean people trying to 'prove' that the creator stole their content from elsewhere, or someone creating and selling their own 'version' of content that is functionally indistinguishable from canon)

3) it's theft. The algorithm didn't come up with anything that it "makes," it just steals some real person's work and then mangles it a bit before regurgitating it, usually with no credit to the original, actual creator. (In fandom terms: you have just done the equivalent of cropping out someone else's watermark and calling yourself the original artist. After all, the AI tool you used got that content from somewhere; it did not draw you a picture, it copy-pasted a picture.)

4) In some places, selling or distributing AI art is or may soon be illegal - and if it's not illegal, there are plenty of artists launching class action lawsuits against those who write the algorithm, and those who use it. Turns out artists don't like having their art stolen, mangled, and passed off as someone else's. Go figure.

Here are some articles that might help lay out clearer examples and arguments, from people more knowledgeable than me (I tried to embed the links with anti-paywall and anti-tracker add-ons, but if Tumblr ate my formatting, just type "12ft.io/" in front of the URL, or type the article name into your search engine and run it through your own ad-blocking, anti-tracking setup):

These fake images reveal how AI amplifies our worst stereotypes [Source: Washington Post, Nov 2023]

Humans Are Biased; AI is even worse (Here's Why That Matters) [Source: Bloomberg, Dec 2023]

Why Artists Hate AI Art [Source: TechRepublic, Nov 2023]

Why Illustrators Are Furious About AI 'Art' [Source: The Guardian, Jan 2023]

Artists Are Losing The War Against AI [Source: The Atlantic, Oct 2023]

This tool lets you see for yourself how biased an AI can be [Source: MIT Technology Review, March 2023]

Midjourney's Class-Action lawsuit and what it could mean for future AI Image Generators [Source: Fortune Magazine, Jan 2024]

What the latest US Court rulings mean for AI Generated Copyright Status [Source: The Art Newspaper, Sep 2023]

AI-Generated Content and Copyright Law [Source: Built-in Magazine, Aug 2023 - take note that this is already outdated, it was just the most comprehensive recent article I could find quickly]

AI is making mincemeat out of art (not to mention intellectual property) [Source: The LA Times, Jan 2024]

Midjourney Allegedly Scraped Magic: The Gathering art for algorithm [Source: Kotaku, Jan 2024]

Leaked: the names of more than 16,000 non-consenting artists allegedly used to train Midjourney’s AI [Source: The Art Newspaper, Jan 2024]

#anti ai art#art theft#anti algorithms#sexism#classism#racism#stereotypes#lawsuits#midjourney#ethics#real human consequences#irresponsible use of algorithms#spicy autocomplete#sorry for the delayed answer

21 notes

Text

You think this is bad, try asking about mushroom foraging

it's just saying fucking anything

11K notes

Text

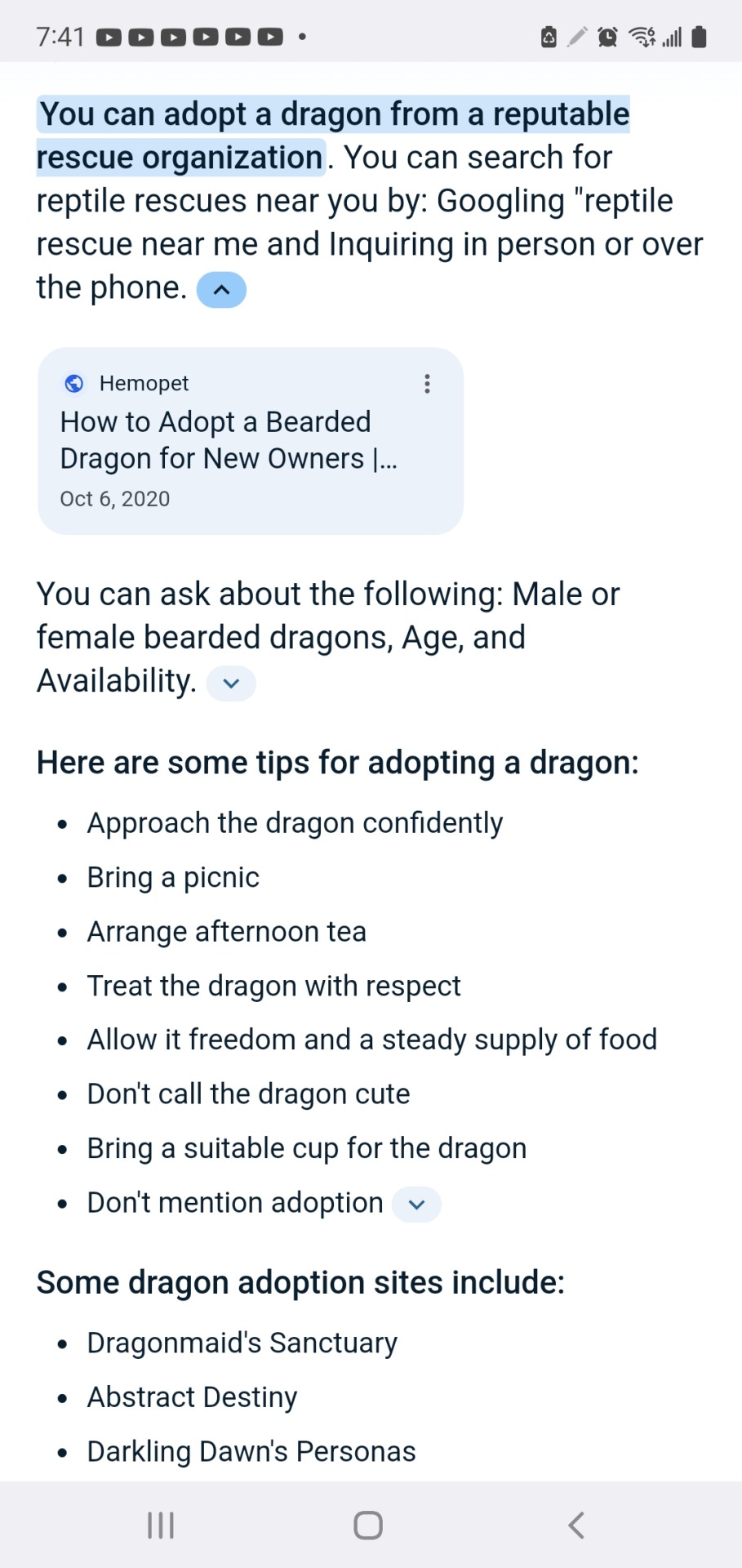

Testing GenAI on Inspector Spacetime Fandom

Out of sheer perversity, from time to time I've been testing public GenAI on Inspector Spacetime to see what hot garbage it produces. Microsoft recently tuned up Copilot, which freely but inaccurately summarizes from Fandom. Not only does it entirely miss its origins as the show-within-a-show on Community and as a meta-fandom gag on TVTropes, but it also gets the very first biographic detail about the Inspector completely, gratuitously wrong.

What the tech bros are hyping as "AI" is nothing more than a broke-ass Turing Test run as a scam to fleece venture capitalists. It can't even perform basic summary tasks accurately.

#inspector spacetime#community tv#tvtropes#spicy autocomplete#ai scam#plagiarism software#genai#Generative AI/LLMs/natural language processing/text-to-image generation/etc. has no place in the Inspector Spacetime fandom.

1 note

Photo

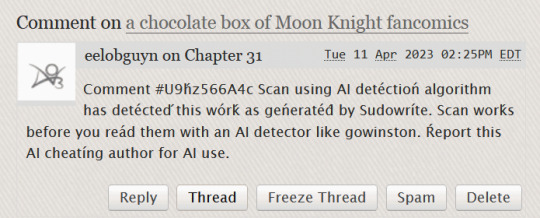

Folks, in case you had any doubts about “do these AO3 spam commenters really scan the text of a work, and give it any kind of analysis that matters?”...

This is the chapter it called out as “written” by an AI text generator:

#AO3#Moon Knight#Ptah's fic#AI text generator#LLMs#spam comments#ooh yeah we definitely use Spicy Autocomplete to make...drawings#take me to AO3 jail and lock me up for that one!

26 notes

Text

From now on I'm calling these kinds of AIs "Spicy Autocomplete".

5K notes

Text

Amazing to me how the most cogent analysis and defiantly bad readers coexist in this one place.

27K notes

Text

AI is a WMD

I'm in TARTU, ESTONIA! AI, copyright and creative workers' labor rights (TOMORROW, May 10, 8AM: Science Fiction Research Association talk, Institute of Foreign Languages and Cultures building, Lossi 3, lobby). A talk for hackers on seizing the means of computation (TOMORROW, May 10, 3PM, University of Tartu Delta Centre, Narva 18, room 1037).

Fun fact: "The Tragedy Of the Commons" is a hoax created by the white nationalist Garrett Hardin to justify stealing land from colonized people and moving it from collective ownership, "rescuing" it from the inevitable tragedy by putting it in the hands of a private owner, who will care for it properly, thanks to "rational self-interest":

https://pluralistic.net/2023/05/04/analytical-democratic-theory/#epistocratic-delusions

Get that? If control over a key resource is diffused among the people who rely on it, then (Hardin claims) those people will all behave like selfish assholes, overusing and undermaintaining the commons. It's only when we let someone own that commons and charge rent for its use that (Hardin says) we will get sound management.

By that logic, Google should be the internet's most competent and reliable manager. After all, the company used its access to the capital markets to buy control over the internet, spending billions every year to make sure that you never try a search-engine other than its own, thus guaranteeing it a 90% market share:

https://pluralistic.net/2024/02/21/im-feeling-unlucky/#not-up-to-the-task

Google seems to think it's got the problem of deciding what we see on the internet licked. Otherwise, why would the company flush $80b down the toilet with a giant stock-buyback, and then do multiple waves of mass layoffs, from last year's 12,000-person bloodbath to this year's deep cuts to the company's "core teams"?

https://qz.com/google-is-laying-off-hundreds-as-it-moves-core-jobs-abr-1851449528

And yet, Google is overrun with scams and spam, which find their way to the very top of the first page of its search results:

https://pluralistic.net/2023/02/24/passive-income/#swiss-cheese-security

The entire internet is shaped by Google's decisions about what shows up on that first page of listings. When Google decided to prioritize shopping site results over informative discussions and other possible matches, the entire internet shifted its focus to producing affiliate-link-strewn "reviews" that would show up on Google's front door:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

This was catnip to the kind of sociopath who a) owns a hedge-fund and b) hates journalists for being pain-in-the-ass, stick-in-the-mud sticklers for "truth" and "facts" and other impediments to the care and maintenance of a functional reality-distortion field. These dickheads started buying up beloved news sites and converting them to spam-farms, filled with garbage "reviews" and other Google-pleasing, affiliate-fee-generating nonsense.

(These news-sites were vulnerable to acquisition in large part thanks to Google, whose dominance of ad-tech lets it cream 51 cents off every ad dollar and whose mobile OS monopoly lets it steal 30 cents off every in-app subscriber dollar):

https://www.eff.org/deeplinks/2023/04/saving-news-big-tech

Now, the spam on these sites didn't write itself. Much to the chagrin of the tech/finance bros who bought up Sports Illustrated and other venerable news sites, they still needed to pay actual human writers to produce plausible word-salads. This was a waste of money that could be better spent on reverse-engineering Google's ranking algorithm and getting pride-of-place on search results pages:

https://housefresh.com/david-vs-digital-goliaths/

That's where AI comes in. Spicy autocomplete absolutely can't replace journalists. The planet-destroying, next-word-guessing programs from OpenAI and its competitors are incorrigible liars that require so much "supervision" that they cost more than they save in a newsroom:

https://pluralistic.net/2024/04/29/what-part-of-no/#dont-you-understand

But while a chatbot can't produce truthful and informative articles, it can produce bullshit – at unimaginable scale. Chatbots are the workers that hedge-fund wreckers dream of: tireless, uncomplaining, compliant and obedient producers of nonsense on demand.

That's why the capital class is so insatiably horny for chatbots. Chatbots aren't going to write Hollywood movies, but studio bosses hyperventilated at the prospect of a "writer" that would accept your brilliant idea and diligently turn it into a movie. You prompt an LLM in exactly the same way a studio exec gives writers notes. The difference is that the LLM won't roll its eyes and make sarcastic remarks about your brainwaves like "ET, but starring a dog, with a love plot in the second act and a big car-chase at the end":

https://pluralistic.net/2023/10/01/how-the-writers-guild-sunk-ais-ship/

Similarly, chatbots are a dream come true for a hedge fundie who ends up running a beloved news site, only to have to fight with their own writers to get the profitable nonsense produced at a scale and velocity that will guarantee a high Google ranking and millions in "passive income" from affiliate links.

One of the premier profitable nonsense companies is Advon, which helped usher in an era in which sites from Forbes to Money to USA Today create semi-secret "review" sites that are stuffed full of badly researched top-ten lists for products from air purifiers to cat beds:

https://housefresh.com/how-google-decimated-housefresh/

Advon swears that it only uses living humans to produce nonsense, and not AI. This isn't just wildly implausible, it's also belied by easily uncovered evidence, like its own employees' LinkedIn profiles, which boast of using AI to create "content":

https://housefresh.com/wp-content/uploads/2024/05/Advon-AI-LinkedIn.jpg

It's not true. Advon uses AI to produce its nonsense, at scale. In an excellent, deeply reported piece for Futurism, Maggie Harrison Dupré brings proof that Advon replaced its miserable human nonsense-writers with tireless chatbots:

https://futurism.com/advon-ai-content

Dupré describes how Advon's ability to create botshit at scale contributed to the enshittification of clients from Yoga Journal to the LA Times, "Us Weekly" to the Miami Herald.

All of this is very timely, because this is the week that Google finally bestirred itself to commence downranking publishers who engage in "site reputation abuse" – creating these SEO-stuffed fake reviews with the help of third parties like Advon:

https://pluralistic.net/2024/05/03/keyword-swarming/#site-reputation-abuse

(Google's policy only forbids site reputation abuse with the help of third parties; if these publishers take their nonsense production in-house, Google may allow them to continue to dominate its search listings):

https://developers.google.com/search/blog/2024/03/core-update-spam-policies#site-reputation

There's a reason so many people believed Hardin's racist "Tragedy of the Commons" hoax. We have an intuitive understanding that commons are fragile. All it takes is one monster to start shitting in the well where the rest of us get our drinking water and we're all poisoned.

The financial markets love these monsters. Mark Zuckerberg's key insight was that he could make billions by assembling vast dossiers of compromising, sensitive personal information on half the world's population without their consent, but only if he kept his costs down by failing to safeguard that data and the systems for exploiting it. He's like a guy who figures out that if he accumulates enough oily rags, he can extract so much low-grade oil from them that he can grow rich, but only if he doesn't waste money on fire-suppression:

https://locusmag.com/2018/07/cory-doctorow-zucks-empire-of-oily-rags/

Now Zuckerberg and the wealthy, powerful monsters who seized control over our commons are getting a comeuppance. The weak countermeasures they created to maintain the minimum levels of quality to keep their platforms as viable, going concerns are being overwhelmed by AI. This was a totally foreseeable outcome: the history of the internet is a story of bad actors who upended the assumptions built into our security systems by automating their attacks, transforming an assault that wouldn't be economically viable into a global, high-speed crime wave:

https://pluralistic.net/2022/04/24/automation-is-magic/

But it is possible for a community to maintain a commons. This is something Hardin could have discovered by studying actual commons, instead of inventing imaginary histories in which commons turned tragic. As it happens, someone else did exactly that: Nobel Laureate Elinor Ostrom:

https://www.onthecommons.org/magazine/elinor-ostroms-8-principles-managing-commmons/

Ostrom described how commons can be wisely managed, over very long timescales, by communities that self-governed. Part of her work concerns how users of a commons must have the ability to exclude bad actors from their shared resources.

When that breaks down, commons can fail – because there's always someone who thinks it's fine to shit in the well rather than walk 100 yards to the outhouse.

Enshittification is the process by which control over the internet moved from self-governance by members of the commons to acts of wanton destruction committed by despicable, greedy assholes who shit in the well over and over again.

It's not just the spammers who take advantage of Google's lazy incompetence, either. Take "copyleft trolls," who post images using outdated Creative Commons licenses that allow them to terminate the CC license if a user makes minor errors in attributing the images they use:

https://pluralistic.net/2022/01/24/a-bug-in-early-creative-commons-licenses-has-enabled-a-new-breed-of-superpredator/

The first copyleft trolls were individuals, but these days, the racket is dominated by a company called Pixsy, which pretends to be a "rights protection" agency that helps photographers track down copyright infringers. In reality, the company is committed to helping copyleft trolls entrap innocent Creative Commons users into paying hundreds or even thousands of dollars to use images that are licensed for free use. Just as Advon upends the economics of spam and deception through automation, Pixsy has figured out how to send legal threats at scale, robolawyering demand letters that aren't signed by lawyers; the company refuses to say whether any lawyer ever reviews these threats:

https://pluralistic.net/2022/02/13/an-open-letter-to-pixsy-ceo-kain-jones-who-keeps-sending-me-legal-threats/

This is shitting in the well, at scale. It's an online WMD, designed to wipe out the commons. Creative Commons has allowed millions of creators to produce a commons with billions of works in it, and Pixsy exploits a minor error in the early versions of CC licenses to indiscriminately manufacture legal land-mines, wantonly blowing off innocent commons-users' legs and laughing all the way to the bank:

https://pluralistic.net/2023/04/02/commafuckers-versus-the-commons/

We can have an online commons, but only if it's run by and for its users. Google has shown us that any "benevolent dictator" who amasses power in the name of defending the open internet will eventually grow too big to care, and will allow our commons to be demolished by well-shitters:

https://pluralistic.net/2024/04/04/teach-me-how-to-shruggie/#kagi

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/09/shitting-in-the-well/#advon

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

Catherine Poh Huay Tan (modified) https://www.flickr.com/photos/68166820@N08/49729911222/

Laia Balagueró (modified) https://www.flickr.com/photos/lbalaguero/6551235503/

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/

#pluralistic#pixsy#wmds#automation#ai#botshit#force multipliers#weapons of mass destruction#commons#shitting in the drinking water#ostrom#elinor ostrom#sports illustrated#slop#advon#google#monopoly#site reputation abuse#enshittification#Maggie Harrison Dupré#futurism

320 notes

Text

Julie's AI Discussion Masterpost

Seeing as I talk about this a lot, and end up saying the same things over and over, I decided I would make the list from my previous post a separate masterpost that I can link to. This is limited to my own posts, although often I am commenting on other people's threads. Sometimes the topic of the thread is slightly tangential, but I tried to pick out posts that illustrated particular common points.

Disclaimer to not mistake me for a serious expert, etc.

AI misconceptions:

Why the term "AI" is correct as used

Why AI art isn't meaningfully "theft", especially in a fanwork context (and why attempting to redefine copyright to frame it as such is very, very bad) (+ data scraping "consent" elaboration)

Why LLMs are considered impressive despite their shortcomings

Why ChatGPT isn't just spicy autocomplete (+ Stochastic parrot rejection)

Additional opinions:

Why I am a leftist and remain in favour of AI research (1, 2)

How AI will become widespread and how we should prepare for that now (+ Why it might be more practical to campaign for UBI over AI bans)

60 notes

Text

"SPICY AUTOCOMPLETE" IS THE BEST DESCRIPTION OF AI I'VE EVER HEARD.

youtube

5 notes

Text

"AI doesn't exist yet. This is machine learning." YES. IT'S NOT ARTIFICIAL INTELLIGENCE, IT'S JUST AUTOCOMPLETE.

This is why it is so important to be critical and double check everything you generate using image generators and text-based AI.

55K notes

Text

Weekend Links

My posts

Spent the week fending off a long-covid fatigue flare, so I am pleased to have finished the Angel (Mugler, 1992) perfume writeup.

I'll admit that I got on Twitter and made a doofy "I'M LEAVING" post, because I can't with this "everything app" business.

Reblogs of interest

Sinéad O'Connor passed away at age 56. As sad as this was, and as troubled a life as she had, I hadn't heard that she had said that her Saturday Night Live protest didn't ruin her career: "I fucked up [record executives' careers], not mine. It meant I had to make my living playing live, and am I born for live performance."

Strike averted: A massive win for UPS workers.

Elon Musk: Still being a dipshit.

Make sure you have XKit Rewritten, not the old version, to deal with the Tumblr layout changes.

Also, make sure to put water in your dry-ass childhood markers.

Spicy autocomplete

Assigned gender at bath

Video

When your cat is obsessed with spying on/hunting you

The sacred texts

"The guy from Modest Mouse sings like someone is chasing him with a garden hose"

Personal tags of the week

idk, #cats was pretty strong. Really putting effort into #wet beast wednesday

24 notes

Quote

Say what you will about Elon Musk, but he's doing the hard work of convincing people that large language models aren't thinking machines, they're just spicy autocomplete regurgitating a probabilistic mix of the dataset contoured by training and reinforcement.

Max Kennerly

10 notes

Text

Nine People I'd Like To Get To Know Better

Rules: answer the questions and tag nine people you'd like to know better!

Since I've been tagged by both @owlsandwich and @teacupsandstarlight, I guess it's about time to pick up my spoons and play THE GAME!

(Sorry, I couldn't resist! ꒰(@`´)꒱ )

Last song I listened to:

Since tomorrow is Pokémon Day, I was in the mood for a Pokémon OST mix while working (the mix before this one was of the movie soundtracks, but oh well!).

Currently watching:

Technically, I'm not regularly following any anime besides watching One Piece every weekend with my homies, but when the spoons are enough, Pokémon Horizons is getting pretty interesting.

Spicy/savory/sweet:

Savory and sweet are about equal for me, and while I don't hate spicy food, I can't handle too much of it in one sitting.

Relationship status:

Free as the wind as my aroace ass desires. 〜(꒪꒳꒪)〜

Current obsession:

Pokémon and Danny Phantom for creating content, due to the previously mentioned P-Day and the incoming Green With Envy event respectively. (I'm so hyped with anticipation for both!)

As for what I'm reading, it's definitely the One Piece and BnHA manga. I can't wait each week for the new updates, so this definitely counts.

I have no idea who to tag, really. So I'm going to press a letter at random after the @ and see who Tumblr suggests with autocomplete.

@destiny-islanders @phantomrose96 @stealingyourbones @muffinlance @lucifer-is-a-bag-of-dicks @ectoblastic @gentrychild @krossan @raaorqtpbpdy

Wow, what a bunch of interesting fellows (gender-neutral)! Feel free to ignore the tag, since it was at random, but I'd really like to read about you!

ฅ^•ﻌ•^ฅ

#the dragon answers#tag game#thanks for the tag!#sorry for being late Owlsandwich#I saw the tag while I was at work and told myself: I'll answer that later#then I promptly forgot#the woes of an ADHD brain#object permanence#whomst?

6 notes

Text

I mean, there's also that the ultimate point of this technology is not actually for people to play around and have fun with generating images. It's for corporations to use whenever they need an Artlike Object--think packaging and advertising, motel room decor, illustrations for children's magazines, etc--without the bother of hiring an actual artist who A) might accidentally introduce a point of view, and B) will expect to be paid.

With image generation, they will need to pay someone to operate the technology--give it prompts, provide feedback on the results, refine prompts, and select images for use (or for review/final selection by management, more likely).

I don't know a huge amount about this technology, but I think the "AI Art" advocates are probably right that there's a skill set and a learning curve involved in getting professional-level results out of this technology.

But the people hired for these kinds of jobs will be classified--and paid--as technicians, not artists. (Even if they are, in some/many cases, the exact same individuals who were laid off from the Art Department when the technology was acquired, and/or whose work is in the dataset being used to train the program on the company's house style.)

When we argue over how hobbyists using these tools are not real artists making real art, we're doing the corporations' work for them, making the case for why jobs using this technology can be compensated as minimally-skilled or even unskilled labor.

"It's not real art" isn't going to stop this technology, because the people benefiting from it do not care. What will stop it--or at least slow it down, and potentially provide for it being used in some kind of ethical way--is if using it requires paying fairly for the human labor involved.

i wish people would stop arguing and saying "AI art isn't real art" and instead focus on the actual issue, which is that it's currently based on wide-scale art theft and unethically built by using other people's work without their knowledge or consent. you can't define what "is and isn't" art, it's an abstract concept and a complete non-issue. the real issue is the stolen artwork it was built on!!

1K notes

·

View notes

Text

Discussion about AI abuse could benefit greatly from people understanding that it is a tool and that it is not doing anything, people are. Seriously, reframe all discussion like that and you'll actually be able to define the problem.

AI isn't making stolen art. People are using it to produce cheap results based on previous works and aren't compensating or even obtaining permission from the original artists. Ditto for writing. This is just the latest tool of capitalism for exploitation.

This also acknowledges that the tools themselves are inherently neutral, and not all of their uses are harmful. 'Don't use AI on your selfies!' Why the fuck not? They're your selfies. 'Don't use it for writing prompts!' Honey, you've been using prompt generators for years. They're not intelligent. They're just spicy autocomplete. And 'feeding them data' is only a concern if they're still collecting it - once the input is no longer being used for learning, it stops being an issue. Fearmongering over the public not understanding how technology works is alive and well, and it's in full force here.

I'll say again: people are stealing art. They are using these machine learning tools (which are not actually learning, and not actually intelligent) to do it. That may seem like a matter of semantics, but it's really not. Using AI is not, by itself and independent of context, automatically evil. It is evil when it exploits work done by other people who did not consent. It could be (and sometimes is) used for a lot of other things that are harmless or even beneficial, but under capitalism, every tool is primarily used to exploit people for as little as possible, preferably for free. It's important to know when using it is harmful and when it's not, otherwise you're just screaming at anyone for even mentioning it in a possible positive context.

6 notes