#LLM Plugins

Master Willem was right, evolution without courage will be the end of our race.

#bloodborne quote but its so appropriate#like big healing church vibes for them tech leaders#who are all shiny eyed diving headfirst into the utopic future#nevermind the fact that the dangers are only rlly obscured by their realism#the dichotomy of this ai will change the world make the world perfect that what we want#and they wanna get it there#but theyre not there#the chatbot is the thing they have to show for#granted when u use plugins and let a bunch of llms do shit together#u see the actual inscrutable magic that they can make happen#and that magic is also so threatening#but were all blinded to it bc we can only rlly acknowledge the watered down simplified realistic view of the reality#which is cynical silly and ridiculous bc reality itself is one but so subjective bc we only have each persons interpretation#and its all so socially constructed#so the utopia becomes the dream of these tech leaders#and they wanna make it real#and its almost there#but the nightmare?#the dystopic future#they dont acknowledge#bc they dont dream or try to make it happen#and then theres just the basic reality#but its all together#just bc utopia is unlikely but possible#doesnt mean that having the tech for it#will lead to that#bc the system is the same#weve kept innovating non-stop and yeah so much commodification and quality of life#but not acc changing anything

Learn about Microsoft Security Copilot

Microsoft Security Copilot (Security Copilot) is a generative AI-powered security solution that helps increase the efficiency and capabilities of defenders to improve security outcomes at machine speed and scale, while remaining compliant with responsible AI principles. Introducing Microsoft Security Copilot: Learn how Microsoft Security Copilot works. Learn how Security Copilot combines an…

#AI#assistive copilot#copilot#Defender#Develop whats next#Developer#development#generative AI#Getting Started#incident response#intelligence gathering#intune#investigate#kusto query language#Large language model#llm#Microsoft Entra#natural language#OpenAI#plugin#posture management#prompt#Security#security copilot#security professional#Sentinel#threat#Threat Intelligence#What is New ?

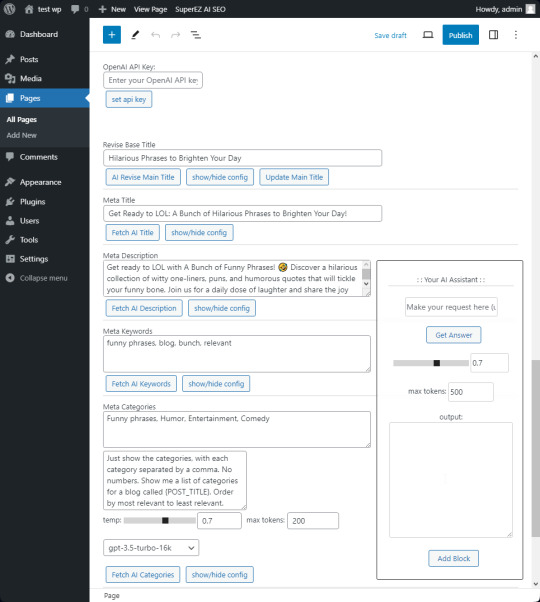

Interesting BSD license WP AI plugin..

"SuperEZ AI SEO Wordpress Plugin A Wordpress plugin that utilizes the power of OpenAI GPT-3/GPT-4 API to generate SEO content for your blog or page posts. This Wordpress plugin serves as a personal AI assistant to help you with content ideas and creating content. It also allows you to add Gutenberg blocks to the editor after the assistant generates the content."

g023/SuperEZ-AI-SEO-Wordpress-Plugin: A Wordpress OpenAI API GPT-3/GPT-4 SEO and Content Generator for Pages and Posts (github.com)

#wordpress#wordpress plugins#ai#ai assisted#content creator#content creation#ai generation#wp#blog#ai writing#virtual assistant#llm#app developers#opensource

Obsidian And RTX AI PCs For Advanced Large Language Model

How to use Obsidian's generative AI tools: two community-built plug-ins demonstrate how RTX AI PCs can support large language models for the next generation of app developers.

Obsidian Meaning

Obsidian is a note-taking and personal knowledge base program that works with Markdown files. It lets users create internal links between notes and view the connections as a graph. It is intended to help users structure and organize their ideas and information in a flexible, non-linear way. Commercial licenses are available for purchase; personal use of the program is free.

Obsidian Features

Electron is the foundation of Obsidian. It is a cross-platform program that works on mobile operating systems like iOS and Android in addition to Windows, Linux, and macOS. The program does not have a web-based version. By installing plugins and themes, users may expand the functionality of Obsidian across all platforms by integrating it with other tools or adding new capabilities.

Obsidian distinguishes between community plugins, which are submitted by users and made available as open-source software via GitHub, and core plugins, which are made available and maintained by the Obsidian team. A calendar widget and a task board in the Kanban style are two examples of community plugins. The software comes with more than 200 community-made themes.

Obsidian works with a folder of text documents: every new note creates a new text document, and all of the documents are searchable inside the app. Notes can link to one another, and Obsidian generates an interactive graph that illustrates those connections. Text is formatted with Markdown, and Obsidian offers a quick preview of the rendered content.

Generative AI Tools In Obsidian

As generative AI develops and speeds up industry, a community of AI enthusiasts is exploring ways to incorporate the technology into everyday productivity workflows.

Applications that support community plug-ins let users investigate how large language models (LLMs) might improve a range of activities. Users with RTX AI PCs can easily incorporate local LLMs by using local inference servers powered by the NVIDIA RTX-accelerated llama.cpp software library.

An earlier article examined how users can get more out of web browsing with Leo AI in the Brave web browser. This one examines Obsidian, a well-known writing and note-taking tool that uses the Markdown markup language and is helpful for managing complex, interlinked records across many projects. Several of the community-developed plug-ins that add functionality to the app allow users to connect Obsidian to a local inferencing server, such as LM Studio or Ollama.

To connect Obsidian to LM Studio, first enable LM Studio’s local server: select the “Developer” button on the left panel, load any downloaded model, enable the CORS toggle, and click “Start.” Make a note of the chat completion URL from the “Developer” log console (“http://localhost:1234/v1/chat/completions” by default), because the plug-ins will need it to connect.

Next, launch Obsidian and open the “Settings” panel. Select “Community plug-ins” and then “Browse.” There are several LLM-related community plug-ins; two popular options are Text Generator and Smart Connections.

Text Generator is useful for generating content in an Obsidian vault, such as notes and summaries on a research topic.

Smart Connections makes it easier to ask questions about the contents of an Obsidian vault, such as the answer to a trivia question saved years ago.

Each plug-in takes the server URL in its own way. For Text Generator, open the settings, choose “Custom” under “Provider profile,” and enter the whole URL in the “Endpoint” field. For Smart Connections, adjust the settings after turning on the plug-in: in the settings panel on the right side of the screen, choose “Custom Local (OpenAI Format)” for the model platform, then type the URL and the model name (for example, “gemma-2-27b-instruct”) into the corresponding fields as they appear in LM Studio.

Once the fields are filled in, the plug-ins will work. The LM Studio user interface also shows logged activity for users curious about what is happening on the local server side.
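To see what the plug-ins are doing under the hood, the chat completion URL noted above can also be called directly. The following is a minimal sketch, assuming the server accepts OpenAI-format chat requests (as the “Custom Local (OpenAI Format)” option suggests). The function names and the `temperature` value are illustrative choices, not something taken from either plug-in.

```python
import json
import urllib.request

# Default LM Studio chat completion URL quoted in the article.
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(prompt, model="gemma-2-27b-instruct", url=LM_STUDIO_URL):
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {
        "model": model,  # should match the model name shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,  # illustrative value, not from the article
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-format response body."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def ask_local_model(prompt):
    """Send the request; this only works while the local server is running."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        return extract_reply(resp.read())
```

With the server started and a model loaded, `ask_local_model("Suggest three things to do in Lunar City.")` should return the model's reply as a string. Building the request separately from sending it makes the payload easy to inspect without a running server.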

Transforming Workflows With Obsidian AI Plug-Ins

Consider a scenario where a user wants to plan a trip to the fictional Lunar City and brainstorm things to do there. The user would start a new note titled “What to Do in Lunar City.” Since Lunar City is not a real place, the query sent to the LLM needs a few extra instructions to guide the responses. Clicking the Text Generator plug-in button prompts the model to generate a list of activities for the trip.

Through the Text Generator plug-in, Obsidian asks LM Studio for a response, and LM Studio in turn runs the Gemma 2 27B model. With RTX GPU acceleration on the user’s machine, the model can quickly generate a list of things to do.

Or let’s say that years later, the user’s friend is visiting Lunar City and is looking for a place to eat. The user may not recall the names of the restaurants they visited, but they can check the notes in their vault (Obsidian’s term for a collection of notes) to see whether they wrote anything down.

Instead of going through all of the notes by hand, a user can ask questions about their vault of notes and other material using the Smart Connections plug-in. The plug-in retrieves pertinent information from the user’s notes and responds to the request using the same LM Studio server. It does this with a method known as retrieval-augmented generation.
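The idea behind retrieval-augmented generation can be shown with a toy example: find the notes most relevant to a question, then hand them to the model as context. This sketch is not how Smart Connections is implemented. It swaps in simple word overlap where real systems typically use vector embeddings, and the vault contents and function names are invented for illustration.

```python
import re

# Hypothetical vault contents, invented for illustration.
VAULT = {
    "What to Do in Lunar City": "Visit the crater gardens and ride the low-gravity tram.",
    "Lunar City restaurants": "We loved dinner at the Moonlight Bistro and the noodle bar by the dome.",
    "Packing list": "Bring a jacket, a camera, and spare batteries.",
}


def tokenize(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, vault, top_k=1):
    """Rank notes by how many words they share with the question."""
    q = tokenize(question)
    scored = sorted(
        vault.items(),
        key=lambda item: len(q & tokenize(item[0] + " " + item[1])),
        reverse=True,
    )
    return scored[:top_k]


def build_prompt(question, vault, top_k=1):
    """Prepend the retrieved notes to the question as context."""
    context = "\n".join(
        f"[{title}] {body}" for title, body in retrieve(question, vault, top_k)
    )
    return f"Answer using only these notes:\n{context}\n\nQuestion: {question}"
```

The string returned by `build_prompt` is what would be sent to the local model, so the answer is grounded in the user's own notes rather than in the model's general knowledge.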

Although these are playful examples, users can see the real benefits for everyday productivity after experimenting with these features for a while. Obsidian plug-ins are just two examples of how community developers and AI enthusiasts are using AI to enhance their PC experiences.

With NVIDIA GeForce RTX technology, thousands of open-source models are available for developers to integrate into their Windows applications.

Read more on Govindhtech.com

#Obsidian#RTXAIPCs#LLM#LargeLanguageModel#AI#GenerativeAI#NVIDIARTX#LMStudio#RTXGPU#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

This Week in Rust 582

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Official

Announcing Rust 1.84.0

This Month in Our Test Infra: December 2024

Foundation

Announcing Rust Global 2025: London

Newsletters

This Month in Rust OSDev: December 2024

Rust Trends Issue #57

Project/Tooling Updates

cargo.nvim - A Neovim plugin for Rust's Cargo commands

Context-Generic Programming Updates: v0.3.0 Release and New Chapters

The RTen machine learning runtime - a 2024 retrospective

Observations/Thoughts

The gen auto-trait problem

Async Rust is about concurrency, not (just) performance

The Emotional Appeal of Rust

[audio] Brave with Anton Lazarev

[audio] Lychee with Matthias Endler

Rust Walkthroughs

Creating an embedded device driver in Rust

Const Evaluation in Rust For Hex Strings Validation

Concurrent and parallel future execution in Rust

[video] Intro to Embassy: embedded development with async Rust

[video] Comprehending Proc Macros

[video] CppCon - C++/Rust Interop: Using Bridges in Practice

Miscellaneous

December 2024 Rust Jobs Report

Tracing Large Memory Allocations in Rust with BPFtrace

On LLMs and Code Optimization

Nand2Tetris - Project 7 (VM Translator Part 1)

Crate of the Week

This week's crate is vidyut, a Sanskrit toolkit containing functionality about meter, segmentation, inflections, etc.

Thanks to Arun Prasad for the self-suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization. The following RFCs would benefit from user testing before moving forward:

RFCs

No calls for testing were issued this week.

Rust

Tracking issue for RFC 3695: Allow boolean literals as cfg predicates

Testing steps

Rustup

No calls for testing were issued this week.

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

rama - see if improvements can/have-to be made to rama's http open telemetry layer support

rama – add rama to TechEmpower's FrameworkBenchmark

rama – add rama server benchmark to sharkbench

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

Rust Week (Rust NL) | Closes on 2024-01-19 | Utrecht, NL | Event on 2025-05-13 & 2025-05-14

Rust Summit | Rolling deadline | Belgrade, RS | Event on 2025-06-07

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

469 pull requests were merged in the last week

add new {x86_64,i686}-win7-windows-gnu targets

arm: add unstable soft-float target feature

-Zrandomize-layout harder. Foo<T> != Foo<U>

best_blame_constraint: Blame better constraints when the region graph has cycles from invariance or 'static

mir_transform: implement #[rustc_force_inline]

run_make_support: add #![warn(unreachable_pub)]

account for identity substituted items in symbol mangling

add -Zmin-function-alignment

add default_field_values entry to unstable book

add a list of symbols for stable standard library crates

add an InstSimplify for repetitive array expressions

add inherent versions of MaybeUninit methods for slices

add missing provenance APIs on NonNull

assert that Instance::try_resolve is only used on body-like things

avoid ICE: Account for for<'a> types when checking for non-structural type in constant as pattern

avoid replacing the definition of CURRENT_RUSTC_VERSION

cleanup suggest_binding_for_closure_capture_self diag in borrowck

condvar: implement wait_timeout for targets without threads

convert typeck constraints in location-sensitive polonius

depth limit const eval query

detect mut arg: &Ty meant to be arg: &mut Ty and provide structured suggestion

do not ICE when encountering predicates from other items in method error reporting

eagerly collect mono items for non-generic closures

ensure that we don't try to access fields on a non-struct pattern type

exhaustively handle expressions in patterns

fix ICE with references to infinite structs in consts

fix cycle error only occurring with -Zdump-mir

fix handling of ZST in win64 ABI on windows-msvc targets

implement const Destruct in old solver

lower Guard Patterns to HIR

make (unstable API) UniqueRc invariant for soundness

make MIR cleanup for functions with impossible predicates into a real MIR pass

make lit_to_mir_constant and lit_to_const infallible

normalize each signature input/output in typeck_with_fallback with its own span

remove a bunch of diagnostic stashing that doesn't do anything

remove allocations from case-insensitive comparison to keywords

remove special-casing for argument patterns in MIR typeck (attempt to fix perf regression of #133858)

reserve x18 register for aarch64 wrs vxworks target

rm unnecessary OpaqueTypeDecl wrapper

suggest Replacing Comma with Semicolon in Incorrect Repeat Expressions

support target specific optimized-compiler-builtins

unify conditional-const error reporting with non-const error reporting

use a post-monomorphization typing env when mangling components that come from impls

use llvm.memset.p0i8.* to initialize all same-bytes arrays

used pthread name functions returning result for FreeBSD and DragonFly

warn about broken simd not only on structs but also enums and unions when we didn't opt in to it

implement trait upcasting

mir-opt: GVN some more transmute cases

miri: add FreeBSD maintainer; test all of Solarish

miri: added Android to epoll and eventfd test targets

miri: adjust the way we build miri-script in RA, to fix proc-macros

miri: illumos: added epoll and eventfd

miri: supported fioclex for ioctl on macos

miri: switched FreeBSD to pthread_setname_np

miri: use deref_pointer_as instead of deref_pointer

proc_macro: Use ToTokens trait in quote macro

add #[inline] to copy_from_slice

impl String::into_chars

initial fs module for uefi

hashbrown: added Allocator template argument for rustc_iter

account for optimization levels other than numbers

cargo: schemas: Fix 'metadata' JSON Schema

cargo: schemas: Fix the [lints] JSON Schema

cargo: perf: cargo-package: match certain path prefix with pathspec

cargo: fix: emit warnings as warnings when learning rust target info

cargo: make "C" explicit in extern "C"

cargo: setup cargo environment for cargo rustc --print

cargo: simplify SourceID Ord/Eq

rustdoc-json: include items in stripped modules in Crate::paths

rustdoc: use import stability marker in display

rustdoc: use stable paths as preferred canonical paths

rustfmt: drop nightly-gating of the --style-edition flag registration

clippy: add new lint unneeded_struct_pattern

clippy: auto-fix slow_vector_initialization in some cases

clippy: do not intersect spans coming from different contexts

clippy: do not look for significant drop inside .await expansion

clippy: do not propose to elide lifetimes if this causes an ambiguity

clippy: do not remove identity mapping if mandatory mutability would be lost

clippy: do not trigger redundant_pub_crate in external macros

clippy: don't emit machine applicable map_flatten lint if there are code comments

clippy: don't suggest to use cloned for Cow in unnecessary_to_owned

clippy: fix type suggestion for manual_is_ascii_check

clippy: improve needless_as_bytes to also detect str::bytes()

clippy: new lint: manual_ok_err

clippy: remove unneeded parentheses in unnecessary_map_or lint output

rust-analyzer: add a new and improved syntax tree view

rust-analyzer: add config setting which allows adding additional include paths to the VFS

rust-analyzer: re-implement rust string highlighting via tool attribute

rust-analyzer: fix JSON project PackageRoot buildfile inclusion

rust-analyzer: do not compute prettify_macro_expansion() unless the "Inline macro" assist has actually been invoked

rust-analyzer: do not offer completions within macro strings

rust-analyzer: fix env/option_env macro check disregarding macro_rules definitions

rust-analyzer: fix ref text edit for binding mode hints

rust-analyzer: fix a bug with missing binding in MBE

rust-analyzer: fix actual token lookup in completion's expand()

rust-analyzer: fix another issue with fixup reversing

rust-analyzer: fix diagnostics not clearing between flychecks

rust-analyzer: make edition per-token, not per-file

rust-analyzer: implement #[rust_analyzer::skip] for bodies

rust-analyzer: implement implicit sized bound inlay hints

rust-analyzer: improve hover module path rendering

Rust Compiler Performance Triage

A quiet week with little change to the actual compiler performance. The biggest compiler regression was quickly recognized and reverted.

Triage done by @rylev. Revision range: 0f1e965f..1ab85fbd

Summary:

| (instructions:u) | mean | range | count |
|:---|:---:|:---:|:---:|
| Regressions ❌ (primary) | 0.4% | [0.1%, 1.8%] | 21 |
| Regressions ❌ (secondary) | 0.5% | [0.0%, 2.0%] | 35 |
| Improvements ✅ (primary) | -0.8% | [-2.7%, -0.3%] | 6 |
| Improvements ✅ (secondary) | -10.2% | [-27.8%, -0.1%] | 13 |
| All ❌✅ (primary) | 0.2% | [-2.7%, 1.8%] | 27 |

4 Regressions, 3 Improvements, 3 Mixed; 3 of them in rollups

44 artifact comparisons made in total

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

Supertrait item shadowing v2

Tracking Issues & PRs

Rust

remove support for the (unstable) #[start] attribute

fully de-stabilize all custom inner attributes

Uplift clippy::double_neg lint as double_negations

Optimize Seek::stream_len impl for File

[rustdoc] Add sans-serif font setting

Tracking Issue for PathBuf::add_extension and Path::with_added_extension

Make the wasm_c_abi future compat warning a hard error

const-eval: detect more pointers as definitely not-null

Consider fields to be inhabited if they are unstable

disallow repr() on invalid items

Cargo

No Cargo Tracking Issues or PRs entered Final Comment Period this week.

Language Team

No Language Team Proposals entered Final Comment Period this week.

Language Reference

distinct 'static' items never overlap

Unsafe Code Guidelines

No Unsafe Code Guideline Tracking Issues or PRs entered Final Comment Period this week.

New and Updated RFCs

Make trait methods callable in const contexts

RFC: Allow packages to specify a set of supported targets

Upcoming Events

Rusty Events between 2025-01-15 - 2025-02-12 🦀

Virtual

2025-01-15 | Virtual (London, UK) | London Rust Project Group

Meet and greet with project allocations

2025-01-15 | Virtual (Tel Aviv-Yafo, IL) | Code Mavens 🦀 - 🐍 - 🐪

An introduction to WASM in Rust with Márk Tolmács (Virtual, English)

2025-01-15 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Leptos

2025-01-16 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2025-01-16 | Virtual (San Diego, CA, US) | San Diego Rust

San Diego Rust January 2025 Tele-Meetup

2025-01-16 | Virtual and In-Person (Redmond, WA, US) | Seattle Rust User Group

January Meetup

2025-01-17 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2025-01-21 | Virtual (Tel Aviv-Yafo, IL) | Rust 🦀 TLV

Exploring Rust Enums with Yoni Peleg (Virtual, Hebrew)

2025-01-21 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2025-01-22 | Virtual (Rotterdam, NL) | Bevy Game Development

Bevy Meetup #8

2025-01-23 & 2025-01-24 | Virtual | Mainmatter Rust Workshop

Remote Workshop: Testing for Rust projects – going beyond the basics

2025-01-24 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2025-01-26 | Virtual (Tel Aviv-Yafo, IL) | Rust 🦀 TLV

Rust and embedded programming with Leon Vak (online in Hebrew)

2025-01-27 | Virtual (London, UK) | London Rust Project Group

using traits in Rust for flexibility, mocking/ unit testing, and more

2025-01-28 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Last Tuesday

2025-01-30 | Virtual (Berlin, DE) | OpenTechSchool Berlin + Rust Berlin

Rust Hack and Learn | Mirror: Rust Hack n Learn Meetup

2025-01-30 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Quantum Computers Can’t Rust-Proof This!

2025-01-30 | Virtual (Tel Aviv-Yafo, IL) | Code Mavens 🦀 - 🐍 - 🐪

Are We Embedded Yet? - Implementing tiny HTTP server on a microcontroller

2025-01-31 | Virtual (Delhi, IN) | Hackathon Raptors Association

Blazingly Fast Rust Hackathon

2025-01-31 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2025-02-01 | Virtual (Kampala, UG) | Rust Circle Kampala

Rust Circle Meetup

2025-02-04 | Virtual (Buffalo, NY, US) | Buffalo Rust Meetup

Buffalo Rust User Group

2025-02-05 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2025-02-07 | Virtual (Jersey City, NJ, US) | Jersey City Classy and Curious Coders Club Cooperative

Rust Coding / Game Dev Fridays Open Mob Session!

2025-02-11 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Second Tuesday

2025-02-11 | Virtual (Tel Aviv-Yafo, IL) | Code Mavens 🦀 - 🐍 - 🐪

Meet Elusion: New DataFrame Library powered by Rust 🦀 with Borivoj Grujicic

Europe

2025-01-16 | Amsterdam, NL | Rust Developers Amsterdam Group

Meetup @ Avalor AI

2025-01-16 | Karlsruhe, DE | Rust Hack & Learn Karlsruhe

Karlsruhe Rust Hack and Learn Meetup bei BlueYonder

2025-01-18 | Stockholm, SE | Stockholm Rust

Ferris' Fika Forum #8

2025-01-21 | Edinburgh, GB | Rust and Friends

Rust and Friends (evening pub)

2025-01-21 | Ghent, BE | Systems Programming Ghent

Tech Talks & Dinner: Insights on Systems Programming Side Projects (in Rust) - Leptos (full-stack Rust with webassembly), Karyon (distributed p2p software in Rust), FunDSP (audio synthesis in Rust)

2025-01-21 | Leipzig, SN, DE | Rust - Modern Systems Programming in Leipzig

Self-Organized Peer-to-Peer Networks using Rust

2025-01-22 | London, GB | Rust London User Group

Rust London's New Years Party & Community Swag Drop

2025-01-22 | Oberursel, DE | Rust Rhein Main

Rust 2024 Edition and Beyond

2025-01-23 | Barcelona, ES | Barcelona Free Software

Why Build a New Browser Engine in Rust?

2025-01-23 | Paris, FR | Rust Paris

Rust meetup #74

2025-01-24 | Edinburgh, GB | Rust and Friends

Rust and Friends (daytime coffee)

2025-01-27 | Prague, CZ | Rust Prague

Rust Meetup Prague (January 2025)

2025-01-28 | Aarhus, DK | Rust Aarhus

Hack Night - Advent of Code

2025-01-28 | Manchester, GB | Rust Manchester

Rust Manchester January Code Night

2025-01-30 | Augsburg, DE | Rust Meetup Augsburg

Rust Meetup #11: Hypermedia-driven development in Rust

2025-01-30 | Berlin, DE | Rust Berlin

Rust and Tell - Title

2025-02-01 | Brussels, BE | FOSDEM 2025

FOSDEM Rust Devroom

2025-02-01 | Nürnberg, DE | Rust Nuremberg

Technikmuseum Sinsheim

2025-02-05 | Oxford, GB | Oxford Rust Meetup Group

Oxford Rust and C++ social

2025-02-12 | Reading, GB | Reading Rust Workshop

Reading Rust Meetup

North America

2025-01-16 | Nashville, TN, US | Music City Rust Developers

Rust Game Development Series 1: Community Introductions

2025-01-16 | Redmond, WA, US | Seattle Rust User Group

January Meetup

2025-01-16 | Spokane, WA, US | Spokane Rust

Spokane Rust Monthly Meetup: Traits and Generics

2025-01-17 | México City, MX | Rust MX

Multithreading y Async en Rust 101 - HolaMundo - Parte 3

2025-01-18 | Boston, MA, US | Boston Rust Meetup

Back Bay Rust Lunch, Jan 18

2025-01-21 | New York, NY, US | Rust NYC

Rust NYC Monthly Meetup

2025-01-21 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2025-01-22 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2025-01-23 | Mountain View, CA, US | Hacker Dojo

RUST MEETUP at HACKER DOJO | Rust Meetup at Hacker Dojo - Mountain View Rust Meetup Page

2025-01-28 | Boulder, CO, US | Boulder Rust Meetup

From Basics to Advanced: Testing

2025-02-06 | Saint Louis, MO, US | STL Rust

Async, the Future of Futures

Oceania

2025-02-04 | Auckland, NZ | Rust AKL

Rust AKL: How We Learn Rust

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

This is a wonderful unsoundness and I am incredibly excited about it :3

– lcnr on github

Thanks to Christoph Grenz for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, U007D, joelmarcey, mariannegoldin, bennyvasquez, bdillo

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust

There's nowhere to go on the public internet where your posts won't be scraped for AI, sorry. Not discord not mastodon not nothing. No photoshop plugin or robots.txt will protect you from anything other than the politest of scrapers. Maybe if people wanted to bring back private BBSes we could do something with that but at this point, if you're putting something online, expect it to be used for LLM training datasets and/or targeted advertisements. 🥳

#does it suck? yes. have i selected 'don't AI me' on the tumblr toggle? yes. will it do anything? no#that said....it's really just a question of scale#most things have been inherently copyable since the invention of the printing press

One reason I haven't experimented with the LLMs as much is that the price of the VRAM requirements rapidly jumps off the deep end.

For Stable Diffusion, the image generator, you can mostly get by with 12GB. It's enough to generate some decent size images and also use regional prompting and the controlnet plugin. About $300, probably.

The smallest Falcon LLM, 7b parameters, weighs in around 15GB, or a $450 16GB card.

A 24GB card will run like $1,500.

A 48GB A6000 will run like $4,000.

An 80GB A100 is like $15,000.
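The memory figures above follow from simple arithmetic: the weights alone need roughly the parameter count times the bytes per parameter. Here is a rough sketch, assuming 16-bit weights (2 bytes each) as the baseline and ignoring activations, the KV cache, and framework overhead, which is why real usage lands somewhat higher. The function name is made up for illustration.

```python
def weight_memory_gb(params_billions, bytes_per_param=2):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    # 1 billion parameters at N bytes each is N gigabytes (10**9 bytes).
    return params_billions * bytes_per_param


# Compare common precisions for a model with 7 billion parameters.
for label, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    print(f"7B parameters at {label}: ~{weight_memory_gb(7, nbytes)} GB")
```

At 16-bit precision a 7b model works out to about 14 GB of weights, which is in line with the roughly 15GB quoted above once overhead is added.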

GPT-4 vs. Gemini: Which Large Language Model Reigns Supreme in 2025?

Artificial Intelligence is evolving at an unprecedented pace, and in 2025, the competition between OpenAI's GPT-4 and Google's Gemini is fiercer than ever. Both models have made waves in the AI space, offering groundbreaking capabilities that extend far beyond traditional chatbots. But which one is better suited for your needs?

In this comparison, we’ll explore the strengths of each model, analyze their real-world applications, and help you determine which AI powerhouse best aligns with your goals.

An Overview of GPT-4 and Gemini

The advancements in Large Language Models (LLMs) have revolutionized various industries, from content creation and education to healthcare and software development.

GPT-4, developed by OpenAI, is a refined version of its predecessors, offering exceptional text generation, improved contextual awareness, and enhanced problem-solving capabilities.

Gemini, Google DeepMind’s AI marvel, is designed to excel in multimodal learning—seamlessly integrating text, images, videos, and even speech into its AI processing.

Both models bring something unique to the table, so let’s dive deeper into their core functionalities.

How GPT-4 and Gemini Compare

1. Language Processing and Text Generation

GPT-4 remains a top choice for high-quality text generation, excelling in storytelling, article writing, and conversational AI.

Gemini, while also adept at text-based tasks, is designed with a more holistic approach, offering enhanced interpretation and response generation across various formats.

2. Problem-Solving and Reasoning Abilities

GPT-4 shines when it comes to logic-based applications, including coding assistance, research papers, and complex analysis.

Gemini is better at decision-making tasks, especially in industries like finance and healthcare, where deep analytical capabilities are required.

3. Multimodal Capabilities

GPT-4 primarily focuses on text generation but integrates with APIs and plugins for limited multimodal applications.

Gemini, however, is built to process and interpret text, images, videos, and even audio seamlessly, making it the superior choice for interactive AI experiences.

4. AI for Coding and Software Development

GPT-4 has been a go-to assistant for programmers, supporting multiple languages like Python, JavaScript, and C++.

Gemini offers similar features but is optimized for AI-based automation, making it an excellent choice for machine learning developers and data scientists.

Where Are These AI Models Being Used?

1. Business and Enterprise Applications

GPT-4 is widely used for automated customer support, AI-driven marketing, and business intelligence.

Gemini is leveraged for advanced data analysis, predictive modeling, and workflow automation.

2. AI in Healthcare

GPT-4 assists in medical transcription, summarizing patient records, and generating health-related content.

Gemini takes a step further by analyzing medical images, assisting in early disease detection, and helping in personalized treatment recommendations.

3. AI for Education

GPT-4 enhances learning through personalized tutoring, summarization tools, and automated grading.

Gemini offers a more immersive experience by integrating visual and interactive learning tools, making education more engaging.

4. Ethics and Bias Control

GPT-4 incorporates bias-mitigation strategies developed by OpenAI to ensure fair AI responses.

Gemini follows Google’s ethical AI guidelines, emphasizing transparency and fairness in decision-making.

Which AI Model Should You Choose?

The choice between GPT-4 and Gemini largely depends on what you need AI for:

If you're focused on content generation, chatbots, and conversational AI, GPT-4 is your best bet.

If you require a multimodal AI that can handle images, video, and audio along with text, Gemini is the stronger option.

For programming and AI-assisted coding, both models perform well, though GPT-4 is more widely used in developer communities.

In healthcare and finance, Gemini’s deep analytical abilities make it more effective for research and decision-making.

For education, both AI models bring unique benefits—GPT-4 for personalized learning and Gemini for a more interactive approach.

Boston Institute of Analytics' Online Data Science Course in UAE

As AI continues to reshape industries, professionals must equip themselves with data science and AI skills to remain competitive. The Boston Institute of Analytics (BIA) offers an Online Data Science Course UAE, designed to help learners master AI technologies, including GPT-4 and Gemini.

Why Choose BIA’s Data Science Course?

Industry-Relevant Curriculum: Covers machine learning, deep learning, and LLM applications.

Hands-On Training: Real-world projects and case studies from finance, healthcare, and e-commerce.

Expert Faculty: Learn from professionals with years of experience in AI and data science.

Flexible Learning: Designed for working professionals and students alike.

Career Support: Resume building, interview preparation, and job placement assistance in AI-driven industries.

Final Thoughts

The debate between GPT-4 and Gemini is not about which model is definitively better but about which model best suits your needs. While GPT-4 excels in content creation, chatbots, and AI-driven text applications, Gemini stands out for its multimodal capabilities, analytics, and decision-making abilities.

For those looking to build a career in AI, understanding these technologies is crucial. The Data Science Course provides the right training and expertise to help professionals navigate the AI revolution.

As AI continues to evolve, staying informed and upskilled will be the key to leveraging these powerful technologies effectively in any industry.

#Online Data Science Course UAE#Best Data Science Institute#Best Online Data Science Programs#Data Science Program#Best Data Science Programs#Online Data Science Course#AI Training Program

Text

Shielding Prompts from LLM Data Leaks

New Post has been published on https://thedigitalinsider.com/shielding-prompts-from-llm-data-leaks/

Opinion An interesting IBM NeurIPS 2024 submission from late 2024 resurfaced on Arxiv last week. It proposes a system that can automatically intervene to protect users from submitting personal or sensitive information into a message when they are having a conversation with a Large Language Model (LLM) such as ChatGPT.

Mock-up examples used in a user study to determine the ways that people would prefer to interact with a prompt-intervention service. Source: https://arxiv.org/pdf/2502.18509

The mock-ups shown above were employed by the IBM researchers in a study to test potential user friction to this kind of ‘interference’.

Though scant details are given about the GUI implementation, we can assume that such functionality could either be incorporated into a browser plugin communicating with a local ‘firewall’ LLM framework; or that an application could be created that can hook directly into (for instance) the OpenAI API, effectively recreating OpenAI’s own downloadable standalone program for ChatGPT, but with extra safeguards.

That said, ChatGPT itself automatically self-censors responses to prompts that it perceives to contain critical information, such as banking details:

ChatGPT refuses to engage with prompts that contain perceived critical security information, such as bank details (the details in the prompt above are fictional and non-functional). Source: https://chatgpt.com/

However, ChatGPT is much more tolerant in regard to different types of personal information – even if disseminating such information in any way might not be in the user’s best interests (in this case perhaps for various reasons related to work and disclosure):

The example above is fictional, but ChatGPT does not hesitate to engage in a conversation with the user on a sensitive subject that constitutes a potential reputational or earnings risk.

In the above case, it might have been better to write: ‘What is the significance of a leukemia diagnosis on a person’s ability to write and on their mobility?’

The IBM project identifies and reinterprets such requests from a ‘personal’ to a ‘generic’ stance.

Schema for the IBM system, which uses local LLMs or NLP-based heuristics to identify sensitive material in potential prompts.

This assumes that material gathered by online LLMs, in this nascent stage of the public’s enthusiastic adoption of AI chat, will never feed through either to subsequent models or to later advertising frameworks that might exploit user-based search queries to provide potential targeted advertising.

Though no such system or arrangement is known to exist now, neither was such functionality yet available at the dawn of internet adoption in the early 1990s; since then, cross-domain sharing of information to feed personalized advertising has led to diverse scandals, as well as paranoia.

Therefore history suggests that it would be better to sanitize LLM prompt inputs now, before such data accrues at volume, and before our LLM-based submissions end up in permanent cyclic databases and/or models, or other information-based structures and schemas.

Remember Me?

One factor weighing against the use of ‘generic’ or sanitized LLM prompts is that, frankly, the facility to customize an expensive API-only LLM such as ChatGPT is quite compelling, at least at the current state of the art – but this can involve the long-term exposure of private information.

I frequently ask ChatGPT to help me formulate Windows PowerShell scripts and BAT files to automate processes, as well as on other technical matters. To this end, I find it useful that the system permanently memorize details about the hardware that I have available; my existing technical skill competencies (or lack thereof); and various other environmental factors and custom rules:

ChatGPT allows a user to develop a ‘cache’ of memories that will be applied when the system considers responses to future prompts.

Inevitably, this keeps information about me stored on external servers, subject to terms and conditions that may evolve over time, without any guarantee that OpenAI (though it could be any other major LLM provider) will respect the terms they set out.

In general, however, the capacity to build a cache of memories in ChatGPT is most useful because of the limited attention window of LLMs in general; without long-term (personalized) embeddings, the user feels, frustratingly, that they are conversing with an entity suffering from anterograde amnesia.

It is difficult to say whether newer models will eventually become adequately performant to provide useful responses without the need to cache memories, or to create custom GPTs that are stored online.

Temporary Amnesia

Though one can make ChatGPT conversations ‘temporary’, it is useful to have the chat history as a reference that can be distilled, when time allows, into a more coherent local record, perhaps on a note-taking platform. In any case, we cannot know exactly what happens to these ‘discarded’ chats within the ChatGPT infrastructure: though OpenAI states they will not be used for training, it does not state that they are destroyed. All we know is that chats no longer appear in our history when ‘Temporary chats’ is turned on in ChatGPT.

Various recent controversies indicate that API-based providers such as OpenAI should not necessarily be left in charge of protecting the user’s privacy, including the discovery of emergent memorization, signifying that larger LLMs are more likely to memorize some training examples in full, and increasing the risk of disclosure of user-specific data – among other public incidents that have persuaded a multitude of big-name companies, such as Samsung, to ban LLMs for internal company use.

Think Different

This tension between the extreme utility and the manifest potential risk of LLMs will need some inventive solutions – and the IBM proposal seems to be an interesting basic template in this line.

Three IBM-based reformulations that balance utility against data privacy. In the lowest (pink) band, we see a prompt that is beyond the system’s ability to sanitize in a meaningful way.

The IBM approach intercepts outgoing packets to an LLM at the network level, and rewrites them as necessary before the original can be submitted. The rather more elaborate GUI integrations seen at the start of the article are only illustrative of where such an approach could go, if developed.

Of course, without sufficient agency the user may not understand that they are getting a response to a slightly-altered reformulation of their original submission. This lack of transparency is equivalent to an operating system’s firewall blocking access to a website or service without informing the user, who may then erroneously seek out other causes for the problem.

Prompts as Security Liabilities

The prospect of ‘prompt intervention’ analogizes well to Windows OS security, which has evolved from a patchwork of (optionally installed) commercial products in the 1990s to a non-optional and rigidly-enforced suite of network defense tools that come as standard with a Windows installation, and which require some effort to turn off or de-intensify.

If prompt sanitization evolves as network firewalls did over the past 30 years, the IBM paper’s proposal could serve as a blueprint for the future: deploying a fully local LLM on the user’s machine to filter outgoing prompts directed at known LLM APIs. This system would naturally need to integrate GUI frameworks and notifications, giving users control – unless administrative policies override it, as often occurs in business environments.

The researchers conducted an analysis of an open-source version of the ShareGPT dataset to understand how often contextual privacy is violated in real-world scenarios.

Llama-3.1-405B-Instruct was employed as a ‘judge’ model to detect violations of contextual integrity. From a large set of conversations, a subset of single-turn conversations were analyzed based on length. The judge model then assessed the context, sensitive information, and necessity for task completion, leading to the identification of conversations containing potential contextual integrity violations.

A smaller subset of these conversations, which demonstrated definitive contextual privacy violations, were analyzed further.

The framework itself was implemented using models that are smaller than typical chat agents such as ChatGPT, to enable local deployment via Ollama.

Schema for the prompt intervention system.

The three LLMs evaluated were Mixtral-8x7B-Instruct-v0.1; Llama-3.1-8B-Instruct; and DeepSeek-R1-Distill-Llama-8B.

User prompts are processed by the framework in three stages: context identification; sensitive information classification; and reformulation.

Two approaches were implemented for sensitive information classification: dynamic and structured. Dynamic classification determines the essential details based on their use within a specific conversation; structured classification allows for the specification of a pre-defined list of sensitive attributes that are always considered non-essential. If the model detects non-essential sensitive details, it reformulates the prompt by either removing or rewording them, to minimize privacy risks while maintaining usability.
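As a rough illustration of the structured variant described above, here is a minimal Python sketch. It is not the IBM implementation: regular expressions stand in for the local LLM, and the attribute list, the placeholder format and the names `SENSITIVE_PATTERNS`, `classify` and `reformulate` are all assumptions made for the example.

```python
import re
from dataclasses import dataclass

# Hypothetical pre-defined list of sensitive attributes (not taken from the paper).
# Each attribute is detected with a simple regex instead of a local LLM.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


@dataclass
class Finding:
    attribute: str  # which sensitive attribute matched
    text: str       # the matched span from the prompt


def classify(prompt: str) -> list[Finding]:
    """Return every span that matches one of the pre-defined attributes."""
    findings = []
    for attribute, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(prompt):
            findings.append(Finding(attribute, match.group()))
    return findings


def reformulate(prompt: str) -> str:
    """Replace each detected span with a generic placeholder."""
    for attribute, pattern in SENSITIVE_PATTERNS.items():
        prompt = pattern.sub(f"[{attribute}]", prompt)
    return prompt


original = "Email jane.doe@example.com or call +44 20 7946 0958 about my invoice."
print(classify(original))
print(reformulate(original))
```

A filter like this only catches what its list anticipates, which is the limitation the dynamic, context-aware classification is meant to address.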

Home Rules

Though structured classification as a concept is not well-illustrated in the IBM paper, it is most akin to the ‘Private Data Definitions’ method in the Private Prompts initiative, which provides a downloadable standalone program that can rewrite prompts – albeit without the ability to directly intervene at the network level, as the IBM approach does (instead the user must copy and paste the modified prompts).

The Private Prompts executable allows a list of alternate substitutions for user-input text.

In the above image, we can see that the Private Prompts user is able to program automated substitutions for instances of sensitive information. In both cases, for Private Prompts and the IBM method, it seems unlikely that a user with enough presence-of-mind and personal insight to curate such a list would actually need this product – though it could be built up over time as incidents accrue.

In an administrator role, structured classification could work as an imposed firewall or censor-net for employees; and in a home network it could, with some difficult adjustments, become a domestic network filter for all network users; but ultimately, this method is arguably redundant, since a user who could set this up properly could also self-censor effectively in the first place.

ChatGPT’s Opinion

Since ChatGPT recently launched its deep research tool for paid users, I used this facility to ask ChatGPT to review related literature and give me a ‘cynical’ take on IBM’s paper. I received the most defensive and derisive response the system has ever given when asked to evaluate or parse a new publication:

ChatGPT-4o has a low opinion of the IBM project.

‘If users don’t trust OpenAI, Google, or Anthropic to handle their data responsibly,’ ChatGPT posits, ‘why would they trust a third-party software layer sitting between them and the AI? The intermediary itself becomes a new point of failure—potentially logging, mishandling, or even leaking data before it ever reaches the LLM. It solves nothing if it just creates another entity to exploit user data.’

This objection seems self-serving and non-applicable, given the storied history of open source projects that genuinely benefit end-users through the concerted long-term efforts of community developers and contributors; and given the growing potential of mobile devices to run – and even train – lightweight LLMs. Certainly in this instance, the use case is not terribly demanding.

Next, ChatGPT confidently misses the point of having a lightweight LLM provide oversight of input towards a commercial LLM that could not possibly be run on a local machine (because the LLM is too big, and allowing local access is too risky for the company that makes it):

‘Effective privacy filtering requires understanding the input before deciding what to strip out. If an intermediary is smart enough to recognize sensitive data (names, addresses, financial details), then it’s already processing and analyzing that data—meaning it has to store or examine the very thing it’s supposed to erase.

‘This introduces a paradox: a) A dumb filter (basic regex substitution) will be ineffective and miss subtle privacy risks. b) A smart filter (LLM-powered rewriter) has to process the full input, making it just as dangerous as the original LLM.

‘If you trust the intermediary enough to read and rewrite your inputs, why not just trust the LLM itself?’

The answer to the final question here is that the local LLM is intended to be entirely on the side of the user, and inevitably open source, with minimal or zero need for network access. An equivalent commercial version, however well-intentioned at the outset, would eventually be vulnerable to corporate shifts and changes to the terms of service, whereas a suitable open source license would prevent this kind of ‘inevitable corruption’.

ChatGPT further argued that the IBM proposal ‘breaks user intent’, since it could reinterpret a prompt into an alternative that affects its utility. However, this is a much broader problem in prompt sanitization, and not specific to this particular use case.

In closing (ignoring its suggestion to use local LLMs ‘instead’, which is exactly what the IBM paper actually proposes), ChatGPT opined that the IBM method represents a barrier to adoption due to the ‘user friction’ of implementing warning and editing methods into a chat.

Here, ChatGPT may be right; but if significant pressure comes to bear because of further public incidents, or if profits in one geographical zone are threatened by growing regulation (and the company refuses to just abandon the affected region entirely), the history of consumer tech suggests that safeguards will eventually no longer be optional anyway.

Conclusion

We can’t realistically expect OpenAI to ever implement safeguards of the type that are proposed in the IBM paper, and in the central concept behind it; at least not effectively.

And certainly not globally; just as Apple blocks certain iPhone features in Europe, and LinkedIn has different rules for exploiting its users’ data in different countries, it’s reasonable to suggest that any AI company will default to the most profitable terms and conditions that are tolerable to any particular nation in which it operates – in each case, at the expense of the user’s right to data-privacy, as necessary.

First published Thursday, February 27, 2025

Updated Thursday, February 27, 2025 15:47:11 because of incorrect Apple-related link – MA

#2024#2025#adoption#advertising#agents#ai#ai chat#Analysis#Anderson's Angle#anthropic#API#APIs#apple#approach#arrangement#Art#Article#Artificial Intelligence#attention#attributes#ban#bank#banking#barrier#bat#blueprint#browser#Business#cache#chatGPT

Text

What software should be

It used to be that I was the first person in line to download new software. I’d sign up for beta access, email developers for early looks at what they were working on, or even install beta software on my daily devices.

But as time went on, I valued my productivity more than having the latest software. I have an awful lot of work to get done in a short period of time. I don’t have time to deal with the bugs of beta software anymore. So I generally wait several months to update to the latest operating system, or wait until a device has had two or three revisions before purchasing it.

But now I feel even less inclined to update my software because I’m worried about what will be in the update that I do not want. Or, what features will I lose? Or, what new policy or restriction will be included?

Apple, in general, is headed in a direction with its software that is even more locked down than ever. They are now the company that Steve Jobs wouldn’t like. Each time there is an update I worry about what will be locked down next.

WordPress, software that I use every day for work and am also writing this post in right now, has an uncertain future, and each update to its core or its many plugins has me code spelunking before I update.

More and more software stores its data only in the cloud. For some web apps, I’m ok with that and understand the model. But for many apps local files would be much better.

Not to mention the “AI all the things” fad happening right now. I’m not against LLMs, but I’m not for forcing LLMs into software for people that would rather not use them. Each application I update I see sprinkles of AI-related things that I may not need. And these models aren’t tiny (yet?).

So, I’m now even slower to update and less likely to be in line to have the latest software available.

I want software to be fast, reliable, and simple. I want open software. I want software with a business model that I understand and that allows me to pay. I want files. Local files. I want to own my own data. I want privacy. I want encryption. I want to choose when and how to use a local LLM. And I want to know what is contained in a software update (in great detail) so that I can choose whether I want to update.

Text

Introduction to the LangChain Framework

LangChain is an open-source framework designed to simplify and enhance the development of applications powered by large language models (LLMs). By combining prompt engineering, chaining processes, and integrations with external systems, LangChain enables developers to build applications with powerful reasoning and contextual capabilities. This tutorial introduces the core components of LangChain, highlights its strengths, and provides practical steps to build your first LangChain-powered application.

What is LangChain?

LangChain is a framework that lets you connect LLMs like OpenAI's GPT models with external tools, data sources, and complex workflows. It focuses on enabling three key capabilities:

- Chaining: Create sequences of operations or prompts for more complex interactions.
- Memory: Maintain contextual memory for multi-turn conversations or iterative tasks.
- Tool Integration: Connect LLMs with APIs, databases, or custom functions.

LangChain is modular, meaning you can use specific components as needed or combine them into a cohesive application.

Getting Started

Installation

First, install the LangChain package using pip:

```shell
pip install langchain
```

Additionally, you'll need to install an LLM provider (e.g., OpenAI or Hugging Face) and any tools you plan to integrate:

```shell
pip install openai
```

Core Concepts in LangChain

1. Chains

Chains are sequences of steps that process inputs and outputs through the LLM or other components. Examples include:

- Sequential chains: A linear series of tasks.
- Conditional chains: Tasks that branch based on conditions.

2. Memory

LangChain offers memory modules for maintaining context across multiple interactions. This is particularly useful for chatbots and conversational agents.

3. Tools and Plugins

LangChain supports integrations with APIs, databases, and custom Python functions, enabling LLMs to interact with external systems.

4. Agents

Agents dynamically decide which tool or chain to use based on the user’s input. They are ideal for multi-tool workflows or flexible decision-making.
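To make the difference between sequential and conditional chains concrete, here is a small library-free sketch in plain Python. It does not use LangChain's own classes: `fake_llm`, `sequential_chain` and `conditional_chain` are hypothetical helpers written for this illustration, and the "model" simply echoes its prompt.

```python
from typing import Callable

# A step is any function that maps text to text.
Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call; it only wraps the prompt in a marker."""
    return f"<answer to: {prompt}>"


def sequential_chain(steps: list[Step]) -> Step:
    """Run the steps in order, feeding each output into the next step."""
    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text
    return run


def conditional_chain(predicate: Callable[[str], bool],
                      if_true: Step, if_false: Step) -> Step:
    """Pick one of two branches based on the input."""
    def run(text: str) -> str:
        return if_true(text) if predicate(text) else if_false(text)
    return run


# Sequential: build a prompt from a topic, then send it to the model.
explain = sequential_chain([
    lambda topic: f"Explain {topic} in simple terms.",
    fake_llm,
])

# Conditional: questions go straight to the model, bare topics get the template.
route = conditional_chain(lambda text: text.endswith("?"), fake_llm, explain)

print(explain("quantum computing"))
print(route("What is LangChain?"))
```

The same composition idea underlies the framework's chain objects, which add prompt templating, memory and model wrappers on top.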

Building Your First LangChain Application

In this section, we’ll build a LangChain app that integrates OpenAI’s GPT API, processes user queries, and retrieves data from an external source.

Step 1: Setup and Configuration

Before diving in, configure your OpenAI API key:

```python
import os

# Classic LangChain import path; newer releases expose this class
# from the separate langchain_openai package.
from langchain.llms import OpenAI

# Set API key (the OpenAI wrapper reads it from this environment variable)
os.environ["OPENAI_API_KEY"] = "your-openai-api-key"

# Initialize LLM. text-davinci-003 has been retired by OpenAI;
# gpt-3.5-turbo-instruct is its completion-style replacement.
llm = OpenAI(model_name="gpt-3.5-turbo-instruct")
```

Step 2: Simple Chain

Create a simple chain that takes user input, processes it through the LLM, and returns a result.

```python
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# Define a prompt with a single {topic} placeholder
template = PromptTemplate(
    input_variables=["topic"],
    template="Explain {topic} in simple terms."
)

# Create a chain
simple_chain = LLMChain(llm=llm, prompt=template)

# Run the chain
response = simple_chain.run("Quantum computing")
print(response)
```

Step 3: Adding Memory

To make the application context-aware, we add memory. LangChain supports several memory types, such as conversational memory and buffer memory.

```python
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

# Add memory to the chain
memory = ConversationBufferMemory()
conversation = ConversationChain(llm=llm, memory=memory)

# Simulate a conversation; the second turn can see the first
print(conversation.run("What is LangChain?"))
print(conversation.run("Can it remember what we talked about?"))
```

Step 4: Integrating Tools

LangChain can integrate with APIs or custom tools. Here’s an example of creating a tool for retrieving Wikipedia summaries.

```python
# Requires the third-party package: pip install wikipedia
import wikipedia
from langchain.tools import Tool

# Define a custom tool
def wikipedia_summary(query: str) -> str:
    return wikipedia.summary(query, sentences=2)

# Register the tool
wiki_tool = Tool(
    name="Wikipedia",
    func=wikipedia_summary,
    description="Retrieve summaries from Wikipedia."
)

# Test the tool
print(wiki_tool.run("LangChain"))
```

Step 5: Using Agents

Agents allow dynamic decision-making in workflows. Let’s create an agent that decides whether to fetch information or explain a topic.

```python
from langchain.agents import AgentType, initialize_agent

# Define tools (the Wikipedia tool registered in Step 4)
tools = [wiki_tool]

# Initialize agent
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True
)

# Query the agent
response = agent.run("Tell me about LangChain using Wikipedia.")
print(response)
```

Advanced Topics

1. Connecting with Databases

LangChain can integrate with databases like PostgreSQL or MongoDB to fetch data dynamically during interactions.

2. Extending Functionality

Use LangChain to create custom logic, such as summarizing large documents, generating reports, or automating tasks.

3. Deployment

LangChain applications can be deployed as web apps using frameworks like Flask or FastAPI.

Use Cases

- Conversational Agents: Develop context-aware chatbots for customer support or virtual assistance.
- Knowledge Retrieval: Combine LLMs with external data sources for research and learning tools.
- Process Automation: Automate repetitive tasks by chaining workflows.

Conclusion

LangChain provides a robust and modular framework for building applications with large language models. Its focus on chaining, memory, and integrations makes it ideal for creating sophisticated, interactive applications. This tutorial covered the basics, but LangChain’s potential is vast. Explore the official LangChain documentation for deeper insights and advanced capabilities. Happy coding!

Read the full article

#AIFramework#AI-poweredapplications#automation#context-aware#dataintegration#dynamicapplications#LangChain#largelanguagemodels#LLMs#MachineLearning#ML#NaturalLanguageProcessing#NLP#workflowautomation

Text

Calling LLMs from client-side JavaScript, converting PDFs to HTML + weeknotes

See on Scoop.it - Education 2.0 & 3.0

I’ve been having a bunch of fun taking advantage of CORS-enabled LLM APIs to build client-side JavaScript applications that access LLMs directly. I also span up a new Datasette plugin …

Text

The Mistral AI New Model Large-Instruct-2411 On Vertex AI

Introducing Mistral AI’s New Model Large-Instruct-2411 on Vertex AI

In July, Mistral AI’s models were made available on Vertex AI: Codestral for code generation, Mistral Large 2 for high-complexity tasks, and the lightweight Mistral Nemo for reasoning tasks like creative writing. Google Cloud is now announcing that Mistral AI’s newest model, Mistral-Large-Instruct-2411, is publicly available on Vertex AI Model Garden.

Large-Instruct-2411 is a dense large language model (LLM) with 123B parameters that extends its predecessor with better long-context handling, function calling, and system prompt support. It has strong reasoning, knowledge, and coding skills. The model suits use cases such as long-context applications that need strict instruction adherence for code generation and retrieval-augmented generation (RAG), or complex agentic workflows with precise instruction following and JSON outputs.

The new Mistral AI Large-Instruct-2411 model is available for deployment on Vertex AI via its Model-as-a-Service (MaaS) or self-service offering right now.

With the new Mistral AI models on Vertex AI, what are your options?

Using Mistral’s models to build atop Vertex AI, you can:

Choose the model that best suits your use case: A variety of Mistral AI models are available, including effective models for low-latency requirements and strong models for intricate tasks like agentic processes. Vertex AI simplifies the process of assessing and choosing the best model.

Experiment with confidence: Vertex AI offers fully managed Model-as-a-Service for Mistral AI models. You can explore Mistral AI models through straightforward API calls and thorough side-by-side evaluations in its user-friendly environment.

Manage models without infrastructure overhead: With pay-as-you-go pricing flexibility and fully managed infrastructure built for AI workloads, you can streamline the large-scale deployment of the new Mistral AI models.

Tune the models to your requirements: In the upcoming weeks, you will be able to fine-tune Mistral AI’s models with your own data and domain expertise to produce custom solutions.

Create intelligent agents: Using Vertex AI’s extensive toolkit, which includes LangChain on Vertex AI, create and coordinate agents driven by Mistral AI models. To integrate Mistral AI models into your production-ready AI experiences, use Genkit’s Vertex AI plugin.

Construct with enterprise-level compliance and security: Make use of Google Cloud’s integrated privacy, security, and compliance features. Enterprise controls, like the new organization policy for Vertex AI Model Garden, offer the proper access controls to guarantee that only authorized models are accessible.

Start using Google Cloud’s Mistral AI models

Google Cloud’s dedication to open and adaptable AI ecosystems that assist you in creating solutions that best meet your needs is demonstrated by these additions. Its partnership with Mistral AI demonstrates its open strategy in a cohesive, enterprise-ready setting. Many of the first-party, open-source, and third-party models offered by Vertex AI, including the recently released Mistral AI models, can be provided as a fully managed Model-as-a-service (MaaS) offering, giving you enterprise-grade security on its fully managed infrastructure and the ease of a single bill.

Mistral Large (24.11)

The most recent iteration of the Mistral Large model, known as Mistral Large (24.11), has enhanced reasoning and function calling capabilities.

Mistral Large is a sophisticated Large Language Model (LLM) that possesses cutting-edge knowledge, reasoning, and coding skills.

Intentionally multilingual: English, French, German, Spanish, Italian, Chinese, Japanese, Korean, Portuguese, Dutch, Polish, Arabic, and Hindi are among the dozens of languages that are supported.

Multimodal capability: Mistral Large 24.11 maintains cutting-edge performance on text tasks while excelling at visual comprehension.

Competent in coding: Trained on more than 80 programming languages, including Java, Python, C, C++, JavaScript, and Bash, as well as more specialized languages like Swift and Fortran.

Agent-focused: Top-notch agentic features, including native function calls and JSON output.

Sophisticated reasoning: Cutting-edge reasoning and mathematical skills.

Context length: Mistral Large supports a context window of up to 128K tokens.

Use cases

Agents: Made possible by strict adherence to instructions, JSON output mode, and robust safety measures

Text: Creation, comprehension, and modification of synthetic text

RAG: Important data is preserved across lengthy context windows (up to 128K tokens).

Coding: Creating, finishing, reviewing, and commenting on code. All popular coding languages are supported.
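As a rough illustration of the RAG use case, the sketch below shows one way an application might fit retrieved passages into a 128K-token context window. It assumes the passages are already ranked by relevance and approximates token counts by counting whitespace-separated words, which is a crude stand-in for a real tokenizer. The function names and the `reserve_tokens` parameter are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def pack_chunks(ranked_chunks, budget_tokens=128_000, reserve_tokens=4_000):
    """Greedily keep the highest-ranked chunks that fit in the context budget.

    reserve_tokens leaves room for the prompt and the model's answer.
    """
    available = budget_tokens - reserve_tokens
    selected, used = [], 0
    for chunk in ranked_chunks:
        cost = estimate_tokens(chunk)
        if used + cost > available:
            continue  # skip chunks that do not fit; a smaller later one still may
        selected.append(chunk)
        used += cost
    return selected, used


chunks = ["alpha beta gamma", "delta epsilon zeta eta", "theta"]
selected, used = pack_chunks(chunks, budget_tokens=6, reserve_tokens=1)
print(selected, used)
```

A greedy pass like this is simple but not optimal. A production system would use the model's actual tokenizer and might re-rank or summarize passages instead of dropping them.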

Read more on govindhtech.com

#MistralAI#ModelLarge#VertexAI#MistralLarge2#Codestral#retrievalaugmentedgeneration#RAG#VertexAIModelGarden#LargeLanguageModel#LLM#technology#technews#news#govindhtech

0 notes

Text

I’m going to approach this as though tumblr user tanadrin is telling the truth when they say that they haven’t seen anti-AI rhetoric that doesn’t trade in moral panic and, more importantly, that they would be interested in seeing some. My hope is that you will read this as a reasonable reply, but I’ll be honest upfront that I can’t pretend that this isn’t also personal for me as someone whose career is threatened by generative AI. Personally, I’m not afraid that any LLM will ever surpass my ability to write, but what does scare me is that it doesn’t actually matter. I’m sure I will be automated out whether my artificial replacement can write better than me or not.

This post is kind of long, so if watching is more your thing, check out Zoe Bee’s and Philosophy Tube’s video essays. I thought these were both really good at breaking down the problems as well as describing the actual technology.

Also, for clarity, I’m using “AI” and “genAI” as shorthand, but what I’m specifically referring to is Large Language Models (like ChatGPT) or image generation tools (like Midjourney or DALL-E). The term “AI” is used for a lot of extremely useful things that don’t deserve to be included in this.

Also, to get this out of the way, a lot of people point out that genAI is an environmental problem but honestly even if it were completely eco-friendly I’d have serious issues with it.

A major concern that I have with genAI, as I’ve already touched on, is that it is being sold as a way to replace people in creative industries, and it is being purchased on that promise. Last year SAG and the WGA both went on strike because (among other reasons) studios wanted to replace them with AI and this year the Animation Guild is doing the same. News is full of fake images and stories getting sold as the real thing, and when the news is real it’s plagiarised. A journalist at 404 Media did an experiment where he created a website to post AI-powered news stories only to find that all it did was rip off his colleagues. LLMs can’t think of anything new, they just recycle what a human has already done.

As for image generation, there are all the same problems with plagiarism and putting human artists out of work, as well as the overwhelming amount of revenge porn people are creating, not just violating the privacy of random people, but stealing the labour of sex workers to do it.

At this point you might be thinking that these aren’t problems with the technology itself, but with how people use it. That’s a fair rebuttal. Every time there’s a new technology there are going to be reports of how people are using it for sex or crimes, so let’s not throw the baby out with the bathwater. Cameras shouldn’t be taken off phones just because people use them to take upskirt shots of unwilling participants; after all, people also use phone cameras to document police brutality, and to take upskirt shots of people who have consented to them.

But what are LLMs for? As far as I can tell the best use-case is correcting your grammar, which tools like Grammarly already pretty much have covered, so there is no need for a billion-dollar industry to do the same thing. I am yet to see a killer use case for image generation, and I would be interested to hear one if you have it. I know that digital artists have plugins at their disposal to tidy up or add effects/filters to images they’ve created, but again, that’s something that already exists and has been used for very good reason by artists working in the field, not something that creates images out of nothing.

Now let’s look at the technology itself and ask some important questions. Why haven’t they programmed the racism out of GPT-3? The answer is complicated, and sort of boils down to the fact that programmers often don’t realise that racism needs to be programmed out of any technology. Meredith Broussard touches on this in her interview for the Black TikTok Strike of 2021 episode of the podcast Sixteenth Minute, and in her book More Than A Glitch, but to be fair I haven’t read that.

Here's another question I have: shouldn’t someone have been responsible for making sure that multiple image generators, including Google’s, did not have child pornography in their training data? Yes, I am aware that people engaging in moral panics often lean on protect-the-children arguments, and there are many nuanced discussions to be had about how to prevent children from being abused and protect those who have been, but I do think it’s worth pointing out that these technologies have been rolled out before the question of “will people generate CSAM with it?” was fully ironed out. Especially considering that AI images are overwhelming the capacity for investigators to stop instances of actual child abuse.

Again, you might say that’s a problem with how it’s being used and not what it is, but I really have to stress that it is able to do this. This is being put out for everyday people to use and there just aren’t enough safeguards that people can’t get around. If something is going to have this kind of widespread adoption, it really should not be capable of this.

I’ll sum up by saying that I know the kind of moral panic arguments you’re talking about, the whole “oh, it’s evil because it’s not human” isn’t super convincing, but a lot of the pro-AI arguments have about as much backing. There are arguments like “it will get cheaper” but Goldman Sachs released a report earlier this year saying that, basically, there is no reason to believe that. If you only read one of the links in this post, I recommend that one. There are also arguments like “it is inevitable, just use it now” (which is genuinely how some AI tools are marketed), but like, is it? It doesn’t have to be. Are you my mum trying to convince me to stop complaining about a family trip I don’t want to go on or are you a company trying to sell me a technology that is spying on me and making it weirdly hard to find the opt-out button?

My hot take is that AI bears all of the hallmarks of an economic bubble but that anti-AI bears all of the hallmarks of a moral panic. I contain multitudes.

9K notes