#Data Privacy Management Software

Text

The Role of Encryption in Modern Data Protection Strategies

Discover how GoTrust's data protection management software uses advanced encryption for robust data security. Learn more today!

#Data Privacy Management Software#Data Privacy Software Solution#Privacy Compliance Software Solution#Data protection management software#Privacy program management software#Best privacy management software#Data Privacy Software Solutions

1 note

Text

The Future of Real Estate in Jamaica: AI, Big Data, and Cybersecurity Shaping Tomorrow’s Market

#AI Algorithms#AI Real Estate Assistants#AI-Powered Chatbots#Artificial Intelligence#Automated Valuation Models#Big Data Analytics#Blockchain in Real Estate#Business Intelligence#cloud computing#Compliance Regulations#Cyber Attacks Prevention#Cybersecurity#Data encryption#Data Privacy#Data Security#data-driven decision making#Digital Property Listings#Digital Transactions#Digital Transformation#Fraud Prevention#Identity Verification#Internet of Things (IoT)#Machine Learning#Network Security#predictive analytics#Privacy Protection#Property Management Software#Property Technology#Real Estate Market Trends#real estate technology

0 notes

Text

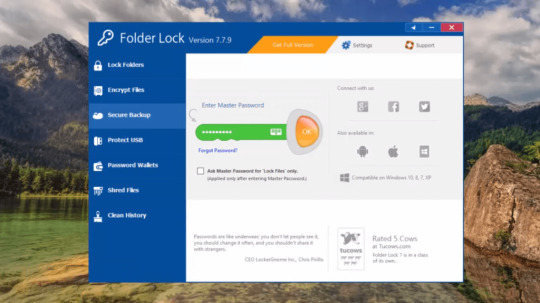

Folder Lock

If you have folders and files that you want to keep private, consider using Folder Lock. Unlike My Lockbox, it isn’t a free app, but it offers excellent configuration options and numerous methods to protect important and private documents from prying eyes. Folder Lock is a complete solution for keeping your personal files encrypted and locked, while automatically and in real-time backing up…

#Data Encryption#Digital Wallets#Encryption Software#File Locking#File Management#File Security#File Shredding#password protection#Portable Security#privacy protection#Secure Backup#Windows Security

0 notes

Text

Just a bunch of Useful websites - Updated for 2023

Removed/checked all links to make sure everything is working (03/03/23). Hope they help!

Sejda - Free online PDF editor.

Supercook - Have ingredients but no idea what to make? Put them in here and it'll give you recipe ideas.

Still Tasty - Trying the above but unsure about whether that sauce in the fridge is still edible? Check here first.

Archive.ph - Paywall bypass. Like 12ft below but appears to work far better and across more sites in my testing. I'd recommend trying this one first as I had more success with it.

12ft – Hate paywalls? Try this site out.

Where Is This - Want to know where a picture was taken? This site can help.

TOS/DR - Terms of service, didn't read. Gives you a summary of terms of service plus gives each site a privacy rating.

OneLook - Reverse dictionary for when you know the description of the word but can't for the life of you remember the actual word.

My Abandonware - Brilliant site for free, legal games. Has games from 1978 up to present day across pc and console. You'll be surprised by some of the games on there, some absolute gems.

Project Gutenberg – Always ends up on these types of lists and for very good reason. All works that are copyright free in one place.

Ninite – New PC? Install all of your programs in one go with no bloat or unnecessary crap.

PatchMyPC - Alternative to Ninite with over 300 app options to keep up to date. Free for home users.

Unchecky – Tired of software trying to install additional unwanted programs? This will stop it completely by unchecking the necessary boxes when you install.

Sci-Hub – Research papers galore! Check here before shelling out money. And if it’s not here, try the next link in our list.

LibGen – Lots of free PDFs related primarily to the sciences.

Zotero – A free and easy to use program to collect, organize, cite and share research.

Car Complaints – Buying a used car? Check out what other owners of the same model have to say about it first.

CamelCamelCamel – Check the historical prices of items on Amazon and set alerts for when prices drop.

Have I Been Pwned – Still the king when it comes to checking if your online accounts have been exposed in a data breach. You can also sign up for email alerts in case you're ever a victim of a breach.

I Have No TV - A collection of documentaries for you to while away the time. Completely free.

Radio Garden – Think Google Earth but wherever you zoom, you get the radio station of that place.

Just The Recipe – Paste in the url and get just the recipe as a result. No life story or adverts.

TinEye – An amazing reverse image search tool.

My 90s TV – Simulates 90’s TV using YouTube videos. Also has My80sTV, My70sTV, My60sTV and for the younger ones out there, My00sTV. Lose yourself in nostalgia.

Foto Forensics – Free image analysis tools.

Old Games Download – A repository of games from the 90’s and early 2000’s. Get your fix of nostalgia here.

Online OCR – Convert pictures of text into actual text and output it in the format you need.

Remove Background – An amazingly quick and accurate way to remove backgrounds from your pictures.

Twoseven – Allows you to sync videos from providers such as Netflix, YouTube, Disney+, etc. and watch them with your friends. Ad free and also has the ability to do real time video and text chat.

Terms of Service, Didn’t Read – Get a quick summary of Terms of service plus a privacy rating.

Coolors – Struggling to get a good combination of colors? This site will generate color palettes for you.

This To That – Need to glue two things together? This’ll help.

Photopea – A free online alternative to Adobe Photoshop. Does everything in your browser.

BitWarden – Free open source password manager.

Just Beam It - Peer to peer file transfer. Drop the file in on one end, click create link and send to whoever. Leave your pc on that page while they download. Because of how it works there are no file limits. It's genuinely amazing. Best file transfer system I have ever used.

Atlas Obscura – Travelling to a new place? Find out the hidden treasures you should go to with Atlas Obscura.

ID Ransomware – Ever get ransomware on your computer? Use this to see if the virus infecting your pc has been cracked yet or not. Potentially saving you money. You can also sign up for email notifications if your particular problem hasn’t been cracked yet.

Wayback Machine – The Internet Archive is a non-profit library of millions of free books, movies, software, music, websites and loads more.

Rome2Rio – Directions from anywhere to anywhere by bus, train, plane, car and ferry.

Splitter – Separate the different tracks in an audio file, allowing you to split out the music from the words, for example.

myNoise – Gives you beautiful noises to match your mood. Want to increase your productivity, calm down, or get help sleeping? All here for you.

DeepL – Best language translation tool on the web.

Forvo – Alternatively, if you need to hear a local speaking a word, this is the site for you.

For even more useful sites, there is an expanded list that can be found here.

79K notes

Text

#eDiscovery software deployment#eDiscovery software deployment options#Compliance Management in eDiscovery#eDiscovery Strategy#Data Privacy Compliance#eDiscovery Tips#Cross-Border eDiscovery

0 notes

Text

Demon-haunted computers are back, baby

Catch me in Miami! I'll be at Books and Books in Coral Gables on Jan 22 at 8PM.

As a science fiction writer, I am professionally irritated by a lot of sf movies. Not only do those writers get paid a lot more than I do, they insist on including things like "self-destruct" buttons on the bridges of their starships.

Look, I get it. When the evil empire is closing in on your flagship with its secret transdimensional technology, it's important that you keep those secrets out of the emperor's hands. An irrevocable self-destruct switch there on the bridge gets the job done! (It has to be irrevocable, otherwise the baddies'll just swarm the bridge and toggle it off).

But c'mon. If there's a facility built into your spaceship that causes it to explode no matter what the people on the bridge do, that is also a pretty big security risk! What if the bad guy figures out how to hijack the measure that – by design – the people who depend on the spaceship as a matter of life and death can't detect or override?

I mean, sure, you can try to simplify that self-destruct system to make it easier to audit and assure yourself that it doesn't have any bugs in it, but remember Schneier's Law: anyone can design a security system that works so well that they themselves can't think of a flaw in it. That doesn't mean you've made a security system that works – only that you've made a security system that works on people stupider than you.

I know it's weird to be worried about realism in movies that pretend we will ever find a practical means to visit other star systems and shuttle back and forth between them (which we are very, very unlikely to do):

https://pluralistic.net/2024/01/09/astrobezzle/#send-robots-instead

But this kind of foolishness galls me. It galls me even more when it happens in the real world of technology design, which is why I've spent the past quarter-century being very cross about Digital Rights Management in general, and trusted computing in particular.

It all starts in 2002, when a team from Microsoft visited our offices at EFF to tell us about this new thing they'd dreamed up called "trusted computing":

https://pluralistic.net/2020/12/05/trusting-trust/#thompsons-devil

The big idea was to stick a second computer inside your computer, a very secure little co-processor, that you couldn't access directly, let alone reprogram or interfere with. As far as this "trusted platform module" was concerned, you were the enemy. The "trust" in trusted computing was about other people being able to trust your computer, even if they didn't trust you.

So that little TPM would do all kinds of cute tricks. It could observe and produce a cryptographically signed manifest of the entire boot-chain of your computer, which was meant to be an unforgeable certificate attesting to which kind of computer you were running and what software you were running on it. That meant that programs on other computers could decide whether to talk to your computer based on whether they agreed with your choices about which code to run.

This process, called "remote attestation," is generally billed as a way to identify and block computers that have been compromised by malware, or to identify gamers who are running cheats and refuse to play with them. But inevitably it turns into a way to refuse service to computers that have privacy blockers turned on, or are running stream-ripping software, or whose owners are blocking ads:

https://pluralistic.net/2023/08/02/self-incrimination/#wei-bai-bai
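The "manifest of the entire boot-chain" idea can be made concrete with a small sketch. This is not the TPM's actual interface, only the hash-chain principle behind it: each boot component is folded into a running measurement, so changing or reordering anything changes the final value. The component names are made up, SHA-256 is an assumption, and the signing step (done with a key the owner can't extract) is left out entirely.

```python
import hashlib


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one boot component into the running measurement.

    New value = SHA-256(old value || SHA-256(component)), the same
    shape as a TPM "extend" operation.
    """
    digest = hashlib.sha256(component).digest()
    return hashlib.sha256(register + digest).digest()


def measure_boot_chain(components: list[bytes]) -> bytes:
    """Return the final measurement for an ordered list of components."""
    register = bytes(32)  # the register starts as 32 zero bytes
    for component in components:
        register = extend(register, component)
    return register


# Hypothetical component contents, for illustration only.
expected = measure_boot_chain([b"firmware", b"bootloader", b"kernel"])
tampered = measure_boot_chain([b"firmware", b"patched bootloader", b"kernel"])
reordered = measure_boot_chain([b"bootloader", b"firmware", b"kernel"])

print("expected :", expected.hex())
print("tampered :", tampered.hex())
print("reordered:", reordered.hex())
```

A remote party that knows the "expected" value can refuse to talk to anything that reports a different one. Note that the check has no notion of malice: it cannot tell a rootkit from an owner who chose to run a different bootloader.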

After all, a system that treats the device's owner as an adversary is a natural ally for the owner's other, human adversaries. The rubric for treating the owner as an adversary focuses on the way that users can be fooled by bad people with bad programs. If your computer gets taken over by malicious software, that malware might intercept queries from your antivirus program and send it false data that lulls it into thinking your computer is fine, even as your private data is being plundered and your system is being used to launch malware attacks on others.

These separate, non-user-accessible, non-updateable secure systems serve as a nub of certainty, a remote fortress that observes and faithfully reports on the interior workings of your computer. This separate system can't be user-modifiable or field-updateable, because then malicious software could impersonate the user and disable the security chip.

It's true that compromised computers are a real and terrifying problem. Your computer is privy to your most intimate secrets and an attacker who can turn it against you can harm you in untold ways. But the widespread redesign of our computers to treat us as their enemies gives rise to a range of completely predictable and – I would argue – even worse harms. Building computers that treat their owners as untrusted parties is a system that works well, but fails badly.

First of all, there are the ways that trusted computing is designed to hurt you. The most reliable way to enshittify something is to supply it over a computer that runs programs you can't alter, and that rats you out to third parties if you run counter-programs that disenshittify the service you're using. That's how we get inkjet printers that refuse to use perfectly good third-party ink and cars that refuse to accept perfectly good engine repairs if they are performed by third-party mechanics:

https://pluralistic.net/2023/07/24/rent-to-pwn/#kitt-is-a-demon

It's how we get cursed devices and appliances, from the juicer that won't squeeze third-party juice to the insulin pump that won't connect to a third-party continuous glucose monitor:

https://arstechnica.com/gaming/2020/01/unauthorized-bread-a-near-future-tale-of-refugees-and-sinister-iot-appliances/

But trusted computing doesn't just create an opaque veil between your computer and the programs you use to inspect and control it. Trusted computing creates a no-go zone where programs can change their behavior based on whether they think they're being observed.

The most prominent example of this is Dieselgate, where auto manufacturers murdered hundreds of people by gimmicking their cars to emit illegal amounts of NOx. Key to Dieselgate was a program that sought to determine whether it was being observed by regulators (it checked for the telltale signs of the standard test-suite) and changed its behavior to color within the lines.

Software that is seeking to harm the owner of the device that's running it must be able to detect when it is being run inside a simulation, a test-suite, a virtual machine, or any other hallucinatory virtual world. Just as Descartes couldn't know whether anything was real until he assured himself that he could trust his senses, malware is always questing to discover whether it is running in the real universe, or in a simulation created by a wicked god:

https://pluralistic.net/2022/07/28/descartes-was-an-optimist/#uh-oh

That's why mobile malware uses clever gambits like periodically checking for readings from your device's accelerometer, on the theory that a virtual mobile phone running on a security researcher's test bench won't have the fidelity to generate plausible jiggles to match the real data that comes from a phone in your pocket:

https://arstechnica.com/information-technology/2019/01/google-play-malware-used-phones-motion-sensors-to-conceal-itself/

Sometimes this backfires in absolutely delightful ways. When the Wannacry ransomware was holding the world hostage, the security researcher Marcus Hutchins noticed that its code made reference to a very weird website: iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com. Hutchins stood up a website at that address and every Wannacry-infection in the world went instantly dormant:

https://pluralistic.net/2020/07/10/flintstone-delano-roosevelt/#the-matrix

It turns out that Wannacry's authors were using that ferkakte URL the same way that mobile malware authors were using accelerometer readings – to fulfill Descartes' imperative to distinguish the Matrix from reality. The malware authors knew that security researchers often ran malicious code inside sandboxes that answered every network query with fake data in hopes of eliciting responses that could be analyzed for weaknesses. So the Wannacry worm would periodically poll this nonexistent website and, if it got an answer, it would assume that it was being monitored by a security researcher and it would retreat to an encrypted blob, ceasing to operate lest it give intelligence to the enemy. When Hutchins put a webserver up at iuqerfsodp9ifjaposdfjhgosurijfaewrwergwea.com, every Wannacry instance in the world was instantly convinced that it was running on an enemy's simulator and withdrew into sulky hibernation.
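The logic of that check is simple enough to sketch. This is an illustration of the decision described above, not Wannacry's code: the domain is a stand-in, and the "network" is a plain function so nothing here touches the real internet. All names are hypothetical.

```python
from typing import Callable, Set

# Stand-in for the long gibberish address; not the real one.
KILL_SWITCH_DOMAIN = "long-gibberish-name.example"


def should_go_dormant(domain_answers: Callable[[str], bool]) -> bool:
    """Go dormant if a domain that should not exist answers anyway."""
    return domain_answers(KILL_SWITCH_DOMAIN)


def sandbox_network(domain: str) -> bool:
    """A researcher's sandbox that answers every query with fake data."""
    return True


registered_domains: Set[str] = set()


def real_network(domain: str) -> bool:
    """The real internet: only registered domains answer."""
    return domain in registered_domains


before_registration = should_go_dormant(real_network)
in_sandbox = should_go_dormant(sandbox_network)

# Someone registers the domain and stands up a server there.
registered_domains.add(KILL_SWITCH_DOMAIN)
after_registration = should_go_dormant(real_network)

print(before_registration, in_sandbox, after_registration)
```

Once the domain is registered, the real network and the sandbox look identical to the check, which is why one registration was enough to put every instance to sleep.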

The arms race to distinguish simulation from reality is critical and the stakes only get higher by the day. Malware abounds, even as our devices grow more intimately woven through our lives. We put our bodies into computers – cars, buildings – and computers inside our bodies. We absolutely want our computers to be able to faithfully convey what's going on inside them.

But we keep running as hard as we can in the opposite direction, leaning harder into secure computing models built on subsystems in our computers that treat us as the threat. Take UEFI, the ubiquitous security system that observes your computer's boot process, halting it if it sees something it doesn't approve of. On the one hand, this has made installing GNU/Linux and other alternative OSes vastly harder across a wide variety of devices. This means that when a vendor end-of-lifes a gadget, no one can make an alternative OS for it, so off to the landfill it goes.

It doesn't help that UEFI – and other trusted computing modules – are covered by Section 1201 of the Digital Millennium Copyright Act (DMCA), which makes it a felony to publish information that can bypass or weaken the system. The threat of a five-year prison sentence and a $500,000 fine means that UEFI and other trusted computing systems are understudied, leaving them festering with longstanding bugs:

https://pluralistic.net/2020/09/09/free-sample/#que-viva

Here's where it gets really bad. If an attacker can get inside UEFI, they can run malicious software that – by design – no program running on our computers can detect or block. That badware is running in "Ring -1" – a zone of privilege that overrides the operating system itself.

Here's the bad news: UEFI malware has already been detected in the wild:

https://securelist.com/cosmicstrand-uefi-firmware-rootkit/106973/

And here's the worst news: researchers have just identified another exploitable UEFI bug, dubbed Pixiefail:

https://blog.quarkslab.com/pixiefail-nine-vulnerabilities-in-tianocores-edk-ii-ipv6-network-stack.html

Writing in Ars Technica, Dan Goodin breaks down Pixiefail, describing how anyone on the same LAN as a vulnerable computer can infect its firmware:

https://arstechnica.com/security/2024/01/new-uefi-vulnerabilities-send-firmware-devs-across-an-entire-ecosystem-scrambling/

That vulnerability extends to computers in a data-center where the attacker has a cloud computing instance. PXE – the system that Pixiefail attacks – isn't widely used in home or office environments, but it's very common in data-centers.

Again, once a computer is exploited with Pixiefail, software running on that computer can't detect or delete the Pixiefail code. When the compromised computer is queried by the operating system, Pixiefail undetectably lies to the OS. "Hey, OS, does this drive have a file called 'pixiefail?'" "Nope." "Hey, OS, are you running a process called 'pixiefail?'" "Nope."

This is a self-destruct switch that's been compromised by the enemy, and which no one on the bridge can de-activate – by design. It's not the first time this has happened, and it won't be the last.

There are models for helping your computer bust out of the Matrix. Back in 2016, Edward Snowden and bunnie Huang prototyped and published source code and schematics for an "introspection engine":

https://assets.pubpub.org/aacpjrja/AgainstTheLaw-CounteringLawfulAbusesofDigitalSurveillance.pdf

This is a single-board computer that lives in an ultraslim shim that you slide between your iPhone's mainboard and its case, leaving a ribbon cable poking out of the SIM slot. This connects to a case that has its own OLED display. The board has leads that physically contact each of the network interfaces on the phone, conveying any data they transit to the screen so that you can observe the data your phone is sending without having to trust your phone.

(I liked this gadget so much that I included it as a major plot point in my 2020 novel Attack Surface, the third book in the Little Brother series):

https://craphound.com/attacksurface/

We don't have to cede control over our devices in order to secure them. Indeed, we can't ever secure them unless we can control them. Self-destruct switches don't belong on the bridge of your spaceship, and trusted computing modules don't belong in your devices.

I'm Kickstarting the audiobook for The Bezzle, the sequel to Red Team Blues, narrated by @wilwheaton! You can pre-order the audiobook and ebook, DRM free, as well as the hardcover, signed or unsigned. There's also bundles with Red Team Blues in ebook, audio or paperback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/01/17/descartes-delenda-est/#self-destruct-sequence-initiated

Image: Mike (modified) https://www.flickr.com/photos/stillwellmike/15676883261/

CC BY-SA 2.0 https://creativecommons.org/licenses/by-sa/2.0/

#pluralistic#uefi#owner override#user override#jailbreaking#dmca 1201#schneiers law#descartes#nub of certainty#self-destruct button#trusted computing#secure enclaves#drm#ngscb#next generation secure computing base#palladium#pixiefail#infosec

576 notes

Text

Surveillance developments of the 21st century have replaced the traditional gaze of the supervisor on the industrial factory floor with an automated, digital one that continuously collects real-time data on living, breathing people. Even unionized workers do not have an explicit legal right to bargain over surveillance technologies; when it comes to the right to privacy, unions have an uphill battle to fight. We now live in a world where employees are stuck in a web of participatory surveillance because they consent to be monitored as a condition of employment. Today’s workplace surveillance practices, as in the case of Amazon, have become invasive and almost limitless. Technology has allowed employers an unprecedented ability to surveil workers. Management can minutely track and persistently push workers toward greater productivity at the risk of exacerbating harms to workers’ physical health, as the high rates of injury in Amazon warehouses show. And the growing business of selling workplace surveillance software has allowed for massive amounts of data to be collected on working people: when and who they talk to, how quickly they complete tasks, what they search for on their computers, how often they use the toilet, and even the state of their current health and moods.

96 notes

Note

Hello there! I hope you don’t mind me just dropping into your asks like this, but by all means def feel free to just delete this if so, it is kind of a weird ask.

This is the anon from the computer blog asking about a private laptop for college! After doing (a small amount of) research into Linux, one thing that’s super confusing to me is… how does one know which distro to use? You mentioned in the replies of the post that you use Ubuntu Linux, which seems to be one of the more popular ones. Would you recommend it, and if so, why? Is it good for privacy, do you think? The best? Does the user need to have a good deal of experience with computers to keep it running? (I’ve never used a laptop before but I don’t mind trying to learn stuff)

Also this is an EXTREMELY stupid question, my apologies, but how….. exactly do you put Linux on a laptop? OP from my ask said to buy a laptop with no OS but is that something you can do? I’d think so, since OP works with computers and stuff as their job, but Reddit says that it’s not really possible and that you should just “buy like a Windows laptop and scrap the software”??? Is that… correct? How did you install Linux on your laptop? Did you have to remove software off it or did you, as OP says, manage to find a laptop with no OS?

Again, feel free to ignore if you don’t wanna put in the time/effort to reply to this, I absolutely don’t mind — it’s a lot of stuff I’m asking and you didn’t invite it all, so ofc feel free to delete the ask if you’d like!

ha, you've zeroed in on one of the big reasons Linux is kind of a contrarian choice for me to recommend: the wild proliferation of distros, many of them hideously complex to work with. luckily, the fact that most of them are niche offshoots created by and for overly-technical nerds makes the choice easier: you don't want those. you want one of the largest, best-supported, most popular ones, with a reputation for being beginner-friendly. the two biggies are Ubuntu and Linux Mint; i'd recommend focusing your research there.

this isn't JUST a popularity-contest thing: the more people use it, the more likely you are to find answers if you're having trouble or plugging a weird error message into google, and the greater the variety of software you'll find packaged for easy install in that distro. some combination of professional and broad-based community support means you'll find better documentation and tutorials, glitches will be rarer and get fixed faster, and the OS is less likely to be finicky about what hardware it'll play nice with. the newbie-friendly ones are designed to be a breeze to install and to not require technical fiddling to run them for everyday tasks like web browsing, document editing, media viewing, file management, and such.

info on installation, privacy, personal endorsement, etc under the cut. tl;dr: most computers can make you a magic Linux-installing USB stick, most Linuces are blessedly not part of the problem on privacy, Ubuntu i can firsthand recommend but Mint is probably also good.

almost all Linux distros can be assumed to be better for privacy than Windows or MacOS, because they are working from a baseline of Not Being One Of The Things Spying On You; some are managed by corporations (Ubuntu is one of them), but even those corporations have to cater to a notoriously cantankerous userbase, so most phoning-home with usage data tends to be easy to turn off and sponsored bullshit kept minimally intrusive. the one big exception i know of is Google's bastard stepchild ChromeOS, which you really don't want to be using, for a wide variety of reasons. do NOT let someone talk you into installing fucking Qubes or something on claims that it's the "most private" or "most secure" OS; that's total user-unfriendly overkill unless you have like a nation-state spy agency or something targeting you, specifically.

how to install Linux is also not a dumb question! back in the day, if you wanted to, say, upgrade a desktop computer from Windows 95 to Windows 98, you'd receive a physical CD-ROM disc whose contents were formatted to tell the computer "hey, i'm not a music CD or a random pile of backup data or a piece of software for the OS to run, i want you to run me as the OS next time you boot up," and then that startup version would walk you through the install.

nowadays almost anyone with a computer can create a USB stick that'll do the same thing: you download an Ubuntu installer and a program that can perform that kind of formatting, plug in the USB stick, tell the program to put the installer on it and make it bootable, and then once it's done, plug the USB stick into the computer you want to Linuxify and turn it on.

Ubuntu has an excellent tutorial for every step of the install process, and an option to do a temporary test install so you can poke around and see how you like it without pulling the trigger irreversibly: https://ubuntu.com/tutorials/install-ubuntu-desktop

having a way to create a bootable USB stick is one reason to just get a Windows computer and then let the Linux installer nuke everything (which i think is the most common workflow), but in a pinch you can also create the USB on a borrowed/shared computer and uninstall the formatter program when you're done. i don't have strong opinions on what kind of laptop to get, except "if you do go for Linux, be sure to research in advance whether the distro is known to play nice with your hardware." i'm partial to ThinkPads but that's just, like, my opinion, man. lots of distros' installers also make it dead simple to create a dual-boot setup where you can pick between Windows and Linux at every startup, which is useful if you know you might have to use Windows-only software for school or something. keep in mind, though, that this creates two little fiefdoms whose files and hard-disk space aren't shared at all, and it is not a beginner-friendly task to go in later and change how much storage each OS has access to.

i've been using the distro i'm most familiar with as my go-to example throughout, but i don't really have a strong opinion on Ubuntu vs Mint, simply because i haven't played around with Mint enough to form one. Ubuntu i'll happily recommend as a beginner-friendly version of Linux that's reasonably private by default. (i think there's like one install step where Canonical offers paid options, telemetry, connecting online accounts, etc, and then respects your "fuck off" and doesn't bug you about it again.) by reputation, Mint has a friendlier UI, especially for people who are used to Windows, and its built-in app library/"store" is slicker but offers a slightly more limited ecosystem of point-and-click installs.

(unlike Apple and Google, there are zero standard Linux distros that give a shit if you manually install software from outside the app store, it's just a notoriously finicky process that could take two clicks or could have you tearing your hair out at 3am. worth trying if the need arises, but not worth stressing over if you can't get it to work.)

basic software starter-pack recommendations for any laptop (all available on Windows and Mac too): Firefox with the uBlock Origin and container tab add-ons, VLC media player, LibreOffice for document editing. the closest thing to a dealbreaking pain in the ass about Linux these days (imo) is that all the image and video editing software i know of is kinda janky in some way, so if that's non-negotiable you may have to dual-boot... GIMP is the godawfully-clunky-but-powerful Photoshop knockoff, and i've heard decent things about Pinta as a mid-weight image editor roughly equivalent to Paint.net for Windows.

50 notes

Note

I am SUPREMELY interested in your internet activity book - I work at a library and am trying to make a similar resource myself. The Australian Government created a fantastic digital literacy resource website called Be Connected, but even though it has tutorials that go right down to 'this is a mouse' basics you do need a certain level of comprehension to access the site (also it doesn't go far enough on privacy imo) so I've been trying to patch the gaps and driving myself slightly insane with it bc there is just. so much.

If you're willing to share more I'd be thrilled to hear about it, and I would LOVE to pool resources if you're willing.

So what I'm basically doing is 11 activities to help people get familiar with a windows computer that they own. It assumes that the user has permissions to make changes to the desktop and install software, etc. The activities I'm writing up do include some browser activities (I walk people through creating a protonmail account and signing up for a password manager) but starts with things like "pin programs to your taskbar" and "create a folder on the desktop and rename it."

The stuff that I'm focusing on is things like "save a QR code in paint to print it out because you don't have a smartphone and can't return this item otherwise" and "learn how to find where files are on your computer." This is mostly supposed to be a way for someone to feel like they're being supervised and are doing safe activities as they learn to get comfortable with simply handling the various input devices and navigating the desktop.

So I don't go in-depth on security because that's not the purpose of this resource specifically, and security can, unfortunately, be something of an advanced topic.

What I would love to know is what in-browser things people tend to struggle with because it's likely that you are seeing people who can't install software on the computers they are using and can't store data on the computers long-term, so they have a very different relationship to your computers than they would to their own. What do people at the library struggle with on library computers? What are the things that they come in to do? Do people use your computers more for going on the internet or for things like printing? Do most of the people who come in to use library computers have email addresses? Because I would very much like to create a similar activity book for using the internet regardless of browser or operating system.


Text

Watching a ““privacy education”” post come onto my dash where the poster recommends installing a shitton of extensions, like:

Usually not only are the extensions awful, but the real privacy advice would be to use as few extensions as possible.

Every extension you add to your browser makes your online fingerprint more unique, and therefore, more traceable. If you really care about your online privacy, you need to minimize your extensions down to the bare necessities and learn to use your browser’s built-in settings to their full potential. uBlock Origin can handle A LOT of the stuff that extensions like Privacy Badger, Decentraleyes, and ClearURLs can. Firefox also has native settings that make extensions like HTTPS Everywhere and Cookie Autodelete entirely redundant.
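To put a rough number on the "more unique" point, here is a minimal sketch of how identifying information adds up. It assumes a site can detect which extensions are installed, that each extension is installed independently of the others, and it uses install shares that are invented purely for illustration. None of these figures are real measurements.

```python
import math

def surprisal_bits(share_of_users: float) -> float:
    """Bits of identifying information revealed by a trait this share of users has."""
    return -math.log2(share_of_users)

# Hypothetical install shares, made up for this example only.
extension_share = {
    "uBlock Origin": 0.25,
    "Privacy Badger": 0.02,
    "Decentraleyes": 0.005,
    "ClearURLs": 0.004,
}

def fingerprint_bits(installed: list[str]) -> float:
    # Assumes independence between extensions, which is not true in practice,
    # so treat the sum as a rough upper bound rather than a measurement.
    return sum(surprisal_bits(extension_share[name]) for name in installed)

minimal = fingerprint_bits(["uBlock Origin"])
stacked = fingerprint_bits(list(extension_share))
print(f"one extension: {minimal:.1f} bits, all four: {stacked:.1f} bits")
```

Under these made-up numbers, every rarer extension you stack on adds several more bits, and each extra bit roughly halves the crowd you can hide in.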

Also for the love of god DO NOT install more than one ad blocker. By doing so, you dramatically increase the chances that your browser will take an anti-ad blocker bait ad, resulting in the anti-ad blocker pop-ups. uBlock Origin is all you need. It’s the best of the best, and it respects your privacy unlike some blockers like Ghostery which are known to sell your data.

Also, on the topic of password managers, Bitwarden should be your only choice. Yes, even over Proton Pass. Any software that has a marketing budget that big should not be trusted. They will flip on a dime and paywall features without warning. We’ve seen it time and time again. Use Bitwarden. Period.

#rambles#meme#memes#privacy#safety#security#online privacy#online security#online safety#browser extension


Text

Generative AI tools such as OpenAI’s ChatGPT and Microsoft’s Copilot are rapidly evolving, fueling concerns that the technology could open the door to multiple privacy and security issues, particularly in the workplace.

In May, privacy campaigners dubbed Microsoft’s new Recall tool a potential “privacy nightmare” due to its ability to take screenshots of your laptop every few seconds. The feature has caught the attention of UK regulator the Information Commissioner’s Office, which is asking Microsoft to reveal more about the safety of the product launching soon in its Copilot+ PCs.

Concerns are also mounting over OpenAI’s ChatGPT, which has demonstrated screenshotting abilities in its soon-to-launch macOS app that privacy experts say could result in the capture of sensitive data.

The US House of Representatives has banned the use of Microsoft’s Copilot among staff members after it was deemed by the Office of Cybersecurity to be a risk to users due to “the threat of leaking House data to non-House approved cloud services.”

Meanwhile, market analyst Gartner has cautioned that “using Copilot for Microsoft 365 exposes the risks of sensitive data and content exposure internally and externally.” And last month, Google was forced to make adjustments to its new search feature, AI Overviews, after screenshots of bizarre and misleading answers to queries went viral.

Overexposed

For those using generative AI at work, one of the biggest challenges is the risk of inadvertently exposing sensitive data. Most generative AI systems are “essentially big sponges,” says Camden Woollven, group head of AI at risk management firm GRC International Group. “They soak up huge amounts of information from the internet to train their language models.”

AI companies are “hungry for data to train their models,” and are “seemingly making it behaviorally attractive” to do so, says Steve Elcock, CEO and founder at software firm Elementsuite. This vast amount of data collection means there’s the potential for sensitive information to be put “into somebody else’s ecosystem,” says Jeff Watkins, chief product and technology officer at digital consultancy xDesign. “It could also later be extracted through clever prompting.”

At the same time, there’s the threat of AI systems themselves being targeted by hackers. “Theoretically, if an attacker managed to gain access to the large language model (LLM) that powers a company's AI tools, they could siphon off sensitive data, plant false or misleading outputs, or use the AI to spread malware,” says Woollven.

Consumer-grade AI tools can create obvious risks. However, an increasing number of potential issues are arising with “proprietary” AI offerings broadly deemed safe for work such as Microsoft Copilot, says Phil Robinson, principal consultant at security consultancy Prism Infosec.

“This could theoretically be used to look at sensitive data if access privileges have not been locked down. We could see employees asking to see pay scales, M&A activity, or documents containing credentials, which could then be leaked or sold.”

Another concern centers around AI tools that could be used to monitor staff, potentially infringing their privacy. Microsoft’s Recall feature states that “your snapshots are yours; they stay locally on your PC” and “you are always in control with privacy you can trust.”

Yet “it doesn’t seem very long before this technology could be used for monitoring employees,” says Elcock.

Self-Censorship

Generative AI does pose several potential risks, but there are steps businesses and individual employees can take to improve privacy and security. First, do not put confidential information into a prompt for a publicly available tool such as ChatGPT or Google’s Gemini, says Lisa Avvocato, vice president of marketing and community at data firm Sama.

When crafting a prompt, be generic to avoid sharing too much. “Ask, ‘Write a proposal template for budget expenditure,’ not ‘Here is my budget, write a proposal for expenditure on a sensitive project,’” she says. “Use AI as your first draft, then layer in the sensitive information you need to include.”

If you use it for research, avoid issues such as those seen with Google’s AI Overviews by validating what it provides, says Avvocato. “Ask it to provide references and links to its sources. If you ask AI to write code, you still need to review it, rather than assuming it’s good to go.”

Microsoft has itself stated that Copilot needs to be configured correctly and the “least privilege”—the concept that users should only have access to the information they need—should be applied. This is “a crucial point,” says Prism Infosec’s Robinson. “Organizations must lay the groundwork for these systems and not just trust the technology and assume everything will be OK.”

It’s also worth noting that ChatGPT uses the data you share to train its models, unless you turn it off in the settings or use the enterprise version.

List of Assurances

The firms integrating generative AI into their products say they’re doing everything they can to protect security and privacy. Microsoft is keen to outline security and privacy considerations in its Recall product and the ability to control the feature in Settings > Privacy & security > Recall & snapshots.

Google says generative AI in Workspace “does not change our foundational privacy protections for giving users choice and control over their data,” and stipulates that information is not used for advertising.

OpenAI reiterates how it maintains security and privacy in its products, while enterprise versions are available with extra controls. “We want our AI models to learn about the world, not private individuals—and we take steps to protect people’s data and privacy,” an OpenAI spokesperson tells WIRED.

OpenAI says it offers ways to control how data is used, including self-service tools to access, export, and delete personal information, as well as the ability to opt out of use of content to improve its models. ChatGPT Team, ChatGPT Enterprise, and its API are not trained on data or conversations, and its models don’t learn from usage by default, according to the company.

Either way, it looks like your AI coworker is here to stay. As these systems become more sophisticated and omnipresent in the workplace, the risks are only going to intensify, says Woollven. “We're already seeing the emergence of multimodal AI such as GPT-4o that can analyze and generate images, audio, and video. So now it's not just text-based data that companies need to worry about safeguarding.”

With this in mind, people—and businesses—need to get in the mindset of treating AI like any other third-party service, says Woollven. “Don't share anything you wouldn't want publicly broadcasted.”


Text

You know what would be funny?

All these big companies nowadays are investing so much money into AI, all in the utmost confidence it'll pay itself back in the future, literally flooding the internet with AI blogs, articles, tools, etc.

But with the rise of anti-AI and how even AI supporters are going to get annoyed when certain red lines are crossed (privacy is universal and everyone has their limits) there'll come a point where being on the internet becomes too grating to be, well, dopamine-sufficient.

Then people will look out of their windows and realise 'oh, right, the world isn't JUST the internet' and get up from behind their computers to go outside in order to enjoy life.

And all those big companies aren't going to notice until it's far too late because, spoiler alert, there's enough data stored on the worldwide web to feed even the most efficient AI scraping software for YEARS.

They'll go ask the people 'why aren't you using our tools? Why aren't you watching our vlogs? It's all AI!'

And all the people can give them the good old finger because, flash news! Before the invention of the internet our species has managed just fine for millennia! You seriously think we're going to become so reliant on it that we can't even function without? THINK AGAIN IDIOTS!

Let's not forget. Humans adapt to make things both efficient and enjoyable. When either factor falls by the wayside, we abandon ship faster than mice. We're petty like that.

#i hate ai#it's ugly#it's annoying#it's frustrating#it's plain bad#ai isn't everything#and we aren't reliant on it#to a point where the big corpo can profit without our consent#ai can and will kill the internet if they're not careful#and right now#they're definitely not


Text

Website Maintenance and Support In Australia

In today's digital landscape, a strong online presence is essential for businesses of all sizes. In Australia, where the internet plays a vital role in consumer behavior, maintaining a well-functioning website is more important than ever. Website maintenance and support are crucial components that can significantly impact your business's success. Here’s why investing in these services is essential. #Sunshine Coast Web Design

Why Website Maintenance Matters

1. Security

Cybersecurity threats are constantly evolving, and Australian businesses are not immune to attacks. Regular website maintenance includes updating software, plugins, and security protocols to protect against vulnerabilities. This proactive approach helps safeguard sensitive customer data, ensuring your business remains trustworthy and compliant with regulations like the Australian Privacy Principles.

2. Performance Optimization

A slow-loading website can deter potential customers and harm your search engine rankings. Routine maintenance allows for performance optimizations, such as image compression, code minification, and caching strategies. These improvements enhance user experience, reduce bounce rates, and ultimately lead to higher conversion rates.

3. Content Updates

Your website is a reflection of your business, and keeping content fresh is vital. Regular updates to blogs, product listings, and service pages not only engage visitors but also signal to search engines that your site is active. This can improve your visibility in search results, driving more organic traffic to your website.

4. Technical Issues

Websites can experience various technical problems, from broken links to server downtime. Routine maintenance helps identify and resolve these issues before they escalate, ensuring your site remains functional. Quick response times to technical glitches can mean the difference between losing a customer and retaining their business.

5. User Experience

A well-maintained website enhances user experience. Regular audits and updates to navigation, design elements, and mobile responsiveness ensure that visitors can easily find the information they need. An intuitive user experience not only retains existing customers but also attracts new ones through positive word-of-mouth.

Choosing the Right Support

When it comes to website maintenance and support in Australia, selecting the right partner is crucial. Here are some factors to consider:

1. Experience and Expertise

Look for a company with a proven track record in website maintenance. They should have a strong understanding of various platforms, coding languages, and best practices in web security.

2. Comprehensive Services

Choose a provider that offers a range of services, including security updates, performance monitoring, and content management. A one-stop shop simplifies the process and ensures all aspects of your website are covered.

3. Responsive Support

In the digital world, issues can arise at any time. Opt for a support service that provides timely responses and assistance, ensuring minimal downtime for your business.

4. Custom Solutions

Every business is unique, and your website maintenance plan should reflect that. Look for providers that offer customizable packages tailored to your specific needs and budget.

Contact Us Today!

🌐 : https://sunshinecoastwebdesign.com.au/

☎: +61 418501122

🏡: 32 Warrego Drive Pelican Waters Sunshine Coast QLD, 4551 Australia

Conclusion

Investing in website maintenance and support is not just about keeping your site functional; it’s about enhancing your brand's reputation and customer trust. In Australia’s competitive online market, a well-maintained website can be a significant differentiator. By prioritizing maintenance, you ensure that your website continues to serve as an effective marketing tool, driving growth and success for your business. Don’t wait until issues arise—embrace proactive website management to stay ahead of the curve.


Text

Why Your Business Needs Fintech Software at Present

In an era defined by technological advancements and digital transformation, the financial sector is experiencing a seismic shift. Traditional banking practices are being challenged by innovative solutions that streamline operations, enhance user experiences, and improve financial management. Fintech software is at the forefront of this transformation, offering businesses the tools they need to stay competitive. Here’s why your business needs fintech software now more than ever.

1. Enhanced Efficiency and Automation

One of the primary advantages of fintech software is its ability to automate repetitive and time-consuming tasks. From invoicing and payment processing to compliance checks, automation helps reduce human error and increase efficiency. By integrating fintech software services, businesses can streamline their operations, freeing up employees to focus on more strategic tasks that require human intelligence and creativity.

Automated processes not only save time but also reduce operational costs. For example, automating invoice processing can significantly cut down on the resources spent on manual entry, approval, and payment. This efficiency translates into faster service delivery, which is crucial in today’s fast-paced business environment.

2. Improved Customer Experience

In a competitive marketplace, providing an exceptional customer experience is vital for business success. Fintech software enhances user experience by offering seamless, user-friendly interfaces and multiple channels for interaction. Customers today expect quick and easy access to their financial information, whether through mobile apps or web platforms.

Fintech software services can help businesses create personalized experiences for their customers. By analyzing customer data, businesses can tailor their offerings to meet individual needs, enhancing customer satisfaction and loyalty. A better user experience leads to higher retention rates, ultimately contributing to a company’s bottom line.

3. Data-Driven Decision Making

In the digital age, data is one of the most valuable assets a business can have. Fintech software allows businesses to collect, analyze, and leverage vast amounts of data to make informed decisions. Advanced analytics tools embedded in fintech solutions provide insights into customer behavior, market trends, and financial performance.

These insights enable businesses to identify opportunities for growth, mitigate risks, and optimize their operations. For instance, predictive analytics can help anticipate customer needs, allowing businesses to proactively offer services or products before they are even requested. This data-driven approach not only enhances strategic decision-making but also positions businesses ahead of their competition.

4. Increased Security and Compliance

With the rise of cyber threats and increasing regulatory scrutiny, security and compliance have become paramount concerns for businesses. Fintech software comes equipped with advanced security features such as encryption, two-factor authentication, and real-time monitoring to protect sensitive financial data.

Moreover, fintech software services often include built-in compliance management tools that help businesses adhere to industry regulations. By automating compliance checks and generating necessary reports, these solutions reduce the risk of non-compliance penalties and reputational damage. Investing in robust security measures not only safeguards your business but also builds trust with customers, who are increasingly concerned about data privacy.

5. Cost Savings and Financial Management

Implementing fintech software can lead to significant cost savings in various aspects of your business. Traditional financial management processes often require extensive manpower and resources. By automating these processes, fintech solutions can help minimize operational costs and improve cash flow management.

Additionally, fintech software often offers advanced financial tools that provide real-time insights into cash flow, expenses, and budgeting. These tools help businesses make informed financial decisions, leading to better resource allocation and improved profitability. In an uncertain economic climate, having a firm grasp on your financial situation is more critical than ever.

6. Flexibility and Scalability

The modern business landscape is characterized by rapid changes and evolving market conditions. Fintech software offers the flexibility and scalability necessary to adapt to these changes. Whether you’re a startup looking to establish a foothold or an established enterprise aiming to expand, fintech solutions can grow with your business.

Many fintech software services are cloud-based, allowing businesses to easily scale their operations without significant upfront investments. As your business grows, you can add new features, expand user access, and integrate additional services without overhauling your entire system. This adaptability ensures that you can meet changing customer demands and market conditions effectively.

7. Access to Innovative Financial Products

Fintech software has democratized access to a variety of financial products and services that were once only available through traditional banks. Small businesses can now leverage fintech solutions to access loans, payment processing, and investment platforms that are tailored to their specific needs.

These innovative financial products often come with lower fees and more favorable terms, making them accessible for businesses of all sizes. By utilizing fintech software, you can diversify your financial strategies, ensuring that you’re not reliant on a single source of funding or financial service.

Conclusion

In conclusion, the need for fintech software in today’s business environment is clear. With enhanced efficiency, improved customer experiences, and the ability to make data-driven decisions, fintech solutions are essential for staying competitive. Additionally, the increased focus on security and compliance, coupled with cost savings and access to innovative products, makes fintech software a valuable investment.

By adopting fintech software services, your business can not only streamline its operations but also position itself for growth in a rapidly evolving financial landscape. As the world becomes increasingly digital, embracing fintech solutions is no longer an option; it’s a necessity for sustainable success.


Text

Algorithmic feeds are a twiddler’s playground

Next TUESDAY (May 14), I'm on a livecast about AI AND ENSHITTIFICATION with TIM O'REILLY; on WEDNESDAY (May 15), I'm in NORTH HOLLYWOOD with HARRY SHEARER for a screening of STEPHANIE KELTON'S FINDING THE MONEY; FRIDAY (May 17), I'm at the INTERNET ARCHIVE in SAN FRANCISCO to keynote the 10th anniversary of the AUTHORS ALLIANCE.

Like Oscar Wilde, "I can resist anything except temptation," and my slow and halting journey to adulthood is really just me grappling with this fact, getting temptation out of my way before I can yield to it.

Behavioral economists have a name for the steps we take to guard against temptation: a "Ulysses pact." That's when you take some possibility off the table during a moment of strength in recognition of some coming moment of weakness:

https://archive.org/details/decentralizedwebsummit2016-corydoctorow

Famously, Ulysses did this before he sailed into the Sea of Sirens. Rather than stopping his ears with wax to prevent his hearing the sirens' song, which would lure him to his drowning, Ulysses has his sailors tie him to the mast, leaving his ears unplugged. Ulysses became the first person to hear the sirens' song and live to tell the tale.

Ulysses was strong enough to know that he would someday be weak. He expressed his strength by guarding against his weakness. Our modern lives are filled with less epic versions of the Ulysses pact: the day you go on a diet, it's a good idea to throw away all your Oreos. That way, when your blood sugar sings its siren song at 2AM, it will be drowned out by the rest of your body's unwillingness to get dressed, find your keys and drive half an hour to the all-night grocery store.

Note that this Ulysses pact isn't perfect. You might drive to the grocery store. It's rare that a Ulysses pact is unbreakable – we bind ourselves to the mast, but we don't chain ourselves to it and slap on a pair of handcuffs for good measure.

People who run institutions can – and should – create Ulysses pacts, too. A company that holds the kind of sensitive data that might be subjected to "sneak-and-peek" warrants by cops or spies can set up a "warrant canary":

https://en.wikipedia.org/wiki/Warrant_canary

This isn't perfect. A company that stops publishing regular transparency reports might have been compromised by the NSA, but it's also possible that they've had a change in management and the new boss just doesn't give a shit about his users' privacy:

https://www.fastcompany.com/90853794/twitters-transparency-reporting-has-tanked-under-elon-musk
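The warrant canary described above boils down to a dead-man's switch that readers check for themselves. Here is a minimal sketch, assuming a company that promises a fresh statement on a fixed schedule. The function name, the 90-day interval and the 14-day grace period are all hypothetical, chosen only for illustration.

```python
from datetime import date, timedelta

def canary_is_stale(
    last_published: date,
    today: date,
    expected_interval_days: int = 90,  # hypothetical publishing schedule
    grace_days: int = 14,              # hypothetical slack for late posts
) -> bool:
    """Return True when the promised statement is overdue."""
    deadline = last_published + timedelta(days=expected_interval_days + grace_days)
    return today > deadline

# A statement last published on 1 Jan 2024, checked on two later dates.
print(canary_is_stale(date(2024, 1, 1), date(2024, 3, 1)))  # still within schedule
print(canary_is_stale(date(2024, 1, 1), date(2024, 6, 1)))  # overdue
```

As with the pact itself, a stale canary only tells you that the statement stopped. It can't tell you whether that was a secret warrant or a new boss who stopped caring.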

Likewise, a company making software it wants users to trust can release that code under an irrevocable free/open software license, thus guaranteeing that each release under that license will be free and open forever. This is good, but not perfect: the new boss can take that free/open code down a proprietary fork and try to orphan the free version:

https://news.ycombinator.com/item?id=39772562

A company can structure itself as a public benefit corporation and make a binding promise to elevate its stakeholders' interests over its shareholders' – but the CEO can still take a secret $100m bribe from cryptocurrency creeps and try to lure those stakeholders into a shitcoin Ponzi scheme:

https://fortune.com/crypto/2024/03/11/kickstarter-blockchain-a16z-crypto-secret-investment-chris-dixon/

A key resource can be entrusted to a nonprofit with a board of directors who are charged with stewarding it for the benefit of a broad community, but when a private equity fund dangles billions before that board, they can talk themselves into a belief that selling out is the right thing to do:

https://www.eff.org/deeplinks/2020/12/how-we-saved-org-2020-review

Ulysses pacts aren't perfect, but they are very important. At the very least, creating a Ulysses pact starts with acknowledging that you are fallible. That you can be tempted, and rationalize your way into taking bad action, even when you know better. Becoming an adult is a process of learning that your strength comes from seeing your weaknesses and protecting yourself and the people who trust you from them.

Which brings me to enshittification. Enshittification is the process by which platforms betray their users and their customers by siphoning value away from each until the platform is a pile of shit:

https://en.wikipedia.org/wiki/Enshittification

Enshittification is a spectrum that can be applied to many companies' decay, but in its purest form, enshittification requires:

a) A platform: a two-sided market with business customers and end users who can be played off against each other;

b) A digital back-end: a market that can be easily, rapidly and undetectably manipulated by its owners, who can alter search-rankings, prices and costs on a per-user, per-query basis; and

c) A lack of constraint: the platform's owners must not fear a consequence for this cheating, be it from competitors, regulators, workforce resignations or rival technologists who use mods, alternative clients, blockers or other "adversarial interoperability" tools to disenshittify your product and sever your relationship with your users.

The founders of tech platforms don't generally set out to enshittify them. Rather, they are constantly seeking some equilibrium between delivering value to their shareholders and turning value over to end users, business customers, and their own workers. Founders are consummate rationalizers; like parenting, founding a company requires continuous, low-grade self-deception about the amount of work involved and the chances of success. A founder, confronted with the likelihood of failure, is absolutely capable of talking themselves into believing that nearly any compromise is superior to shuttering the business: "I'm one of the good guys, so the most important thing is for me to live to fight another day. Thus I can do any number of immoral things to my users, business customers or workers, because I can make it up to them when we survive this crisis. It's for their own good, even if they don't know it. Indeed, I'm doubly moral here, because I'm volunteering to look like the bad guy, just so I can save this business, which will make the world over for the better":

https://locusmag.com/2024/05/cory-doctorow-no-one-is-the-enshittifier-of-their-own-story/

(En)shit(tification) flows downhill, so tech workers grapple with their own version of this dilemma. Faced with constant pressure to increase the value flowing from their division to the company, they have to balance different, conflicting tactics, like "increasing the number of users or business customers, possibly by shifting value from the company to these stakeholders in the hopes of making it up in volume"; or "locking in my existing stakeholders and squeezing them harder, safe in the knowledge that they can't easily leave the service provided the abuse is subtle enough." The bigger a company gets, the harder it is for it to grow, so the biggest companies realize their gains by locking in and squeezing their users, not by improving their service:

https://pluralistic.net/2023/07/28/microincentives-and-enshittification/

That's where "twiddling" comes in. Digital platforms are extremely flexible, which comes with the territory: computers are the most flexible tools we have. This means that companies can automate high-speed, deceptive changes to the "business logic" of their platforms – what end users pay, how much of that goes to business customers, and how offers are presented to both:

https://pluralistic.net/2023/02/19/twiddler/

This kind of fraud isn't particularly sophisticated, but it doesn't have to be – it just has to be fast. In any shell-game, the quickness of the hand deceives the eye:

https://pluralistic.net/2024/03/26/glitchbread/#electronic-shelf-tags

Under normal circumstances, this twiddling would be constrained by counterforces in society. Changing the business rules like this is fraud, so you'd hope that a regulator would step in and extinguish the conduct, fining the company that engaged in it so hard that they saw a net loss from the conduct. But when a sector gets very concentrated, its mega-firms capture their regulators, becoming "too big to jail":

https://pluralistic.net/2022/06/05/regulatory-capture/

Thus the tendency among the giant tech companies to practice the one lesson of the Darth Vader MBA: dismissing your stakeholders' outrage by saying, "I am altering the deal. Pray I don't alter it any further":

https://pluralistic.net/2023/10/26/hit-with-a-brick/#graceful-failure

Where regulators fail, technology can step in. The flexibility of digital platforms cuts both ways: when the company enshittifies its products, you can disenshittify it with your own countertwiddling: third-party ink-cartridges, alternative app stores and clients, scrapers, browser automation and other forms of high-tech guerrilla warfare:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

But tech giants' regulatory capture has allowed them to expand "IP rights" to prevent this self-help. By carefully layering overlapping IP rights around their products, they can criminalize the technology that lets you wrestle back the value they've claimed for themselves, creating a new offense of "felony contempt of business model":

https://locusmag.com/2020/09/cory-doctorow-ip/

A world where users must defer to platforms' moment-to-moment decisions about how the service operates, without the protection of rival technology or regulatory oversight, is a world where companies face a powerful temptation to enshittify.

That's why we've seen so much enshittification in platforms that algorithmically rank their feeds, from Google and Amazon search to Facebook and Twitter feeds. A search engine is always going to be making a judgment call about what the best result for your search should be. If a search engine is generally good at predicting which results will please you best, you'll return to it, automatically clicking the first result ("I'm feeling lucky").

This means that if a search engine slips in the odd paid result at the top of the results, they can exploit your trusting habits to shift value from you to their investors. The configurability of a digital service means that they can sprinkle these frauds into their services on a random schedule, making them hard to detect and easy to dismiss as lapses. Gradually, this acquires its own momentum, and the platform becomes addicted to lowering its own quality to raise its profits, and you get modern Google, which cynically lowered search quality to increase search volume:

https://pluralistic.net/2024/04/24/naming-names/#prabhakar-raghavan

And you get Amazon, which makes $38 billion every year, accepting bribes to replace its best search results with paid results for products that cost more and are of lower quality:

https://pluralistic.net/2023/11/06/attention-rents/#consumer-welfare-queens

Social media's enshittification followed a different path. In the beginning, social media presented a deterministic feed: after you told the platform who you wanted to follow, the platform simply gathered up the posts those users made and presented them to you, in reverse-chronological order.
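Here's a minimal sketch of that deterministic feed, in Python. The `Post` type and the `reverse_chrono_feed` function are hypothetical names invented for illustration, not any platform's actual code:

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Post:
    author: str
    text: str
    posted_at: datetime


def reverse_chrono_feed(posts, following):
    """Return posts from followed accounts only, newest first."""
    followed = [p for p in posts if p.author in following]
    return sorted(followed, key=lambda p: p.posted_at, reverse=True)


# Hypothetical sample data: the user follows alice and bob, not mallory.
posts = [
    Post("alice", "first", datetime(2024, 5, 1, 9, 0)),
    Post("bob", "second", datetime(2024, 5, 1, 10, 0)),
    Post("mallory", "ad", datetime(2024, 5, 1, 11, 0)),
    Post("alice", "third", datetime(2024, 5, 1, 12, 0)),
]
feed = reverse_chrono_feed(posts, following={"alice", "bob"})
print([p.text for p in feed])
```

There's no knob to twiddle here: the same follows and the same posts always produce the same feed.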

This presented few opportunities for enshittification, but it wasn't perfect. For users who were well-established on a platform, a reverse-chrono feed was an ungovernable torrent, where high-frequency trivialities drowned out the important posts from people whose missives were buried ten screens down in the updates since your last login.

For new users who didn't yet follow many people, this presented the opposite problem: an empty feed, and the sense that you were all alone while everyone else was having a rollicking conversation down the hall, in a room you could never find.

The answer was the algorithmic feed: a feed of recommendations drawn from both the accounts you followed and strangers alike. Theoretically, this could solve both problems, by surfacing the most important materials from your friends while keeping you abreast of the most important and interesting activity beyond your filter bubble. For many of us, this promise was realized, and algorithmic feeds became a source of novelty and relevance.

But these feeds are a profoundly tempting enshittification target. The critique of these algorithms has largely focused on "addictiveness" and the idea that platforms would twiddle the knobs to increase the relevance of material in your feed to "hack your engagement":

https://www.theguardian.com/technology/2018/mar/04/has-dopamine-got-us-hooked-on-tech-facebook-apps-addiction

Less noticed – and more important – was how platforms did the opposite: twiddling the knobs to remove things from your feed that you'd asked to see or that the algorithm predicted you'd enjoy, to make room for "boosted" content and advertisements:

https://www.reddit.com/r/Instagram/comments/z9j7uy/what_happened_to_instagram_only_ads_and_accounts/
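To make that kind of twiddling concrete, here's a toy sketch in Python. Everything in it is an assumption made up for illustration: the `twiddled_feed` function, the `boosted_share` knob and the sample items don't come from any real platform:

```python
def twiddled_feed(organic, boosted, slots, boosted_share):
    """Fill `slots` feed positions, reserving a share of them for boosted items.

    organic: items the user asked for or is predicted to enjoy, best first.
    boosted: paid or promoted items.
    boosted_share: the hypothetical knob, from 0.0 (none) to 1.0 (all).
    """
    n_boosted = min(len(boosted), round(slots * boosted_share))
    n_organic = min(len(organic), slots - n_boosted)
    # Organic items beyond n_organic are silently dropped to make room.
    return organic[:n_organic] + boosted[:n_boosted]


organic = ["friend-1", "friend-2", "friend-3", "friend-4", "friend-5"]
boosted = ["ad-1", "ad-2", "ad-3"]

honest = twiddled_feed(organic, boosted, slots=5, boosted_share=0.0)
twiddled = twiddled_feed(organic, boosted, slots=5, boosted_share=0.4)
print(honest)
print(twiddled)
```

Nudging `boosted_share` from 0.0 to 0.4 quietly removes two posts you asked to see, and nothing in the feed itself tells you that they're gone.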

Users were helpless before this kind of twiddling. On the one hand, they were locked into the platform – not because their dopamine had been hacked by evil tech-bro wizards – but because they loved the friends they had there more than they hated the way the service was run:

https://locusmag.com/2023/01/commentary-cory-doctorow-social-quitting/

On the other hand, the platforms had such an iron grip on their technology, and had deployed IP so cleverly, that any countertwiddling technology was instantaneously incinerated by legal death-rays:

https://techcrunch.com/2022/10/10/google-removes-the-og-app-from-the-play-store-as-founders-think-about-next-steps/

Newer social media platforms, notably Tiktok, dispensed entirely with deterministic feeds, defaulting every user into a feed that consisted entirely of algorithmic picks; the people you follow on these platforms are treated as mere suggestions by their algorithms. This is a perfect breeding-ground for enshittification: different parts of the business can twiddle the knobs to override the algorithm for their own parochial purposes, shifting the quality:shit ratio by unnoticeable increments, temporarily toggling the quality knob when your engagement drops off:

https://www.forbes.com/sites/emilybaker-white/2023/01/20/tiktoks-secret-heating-button-can-make-anyone-go-viral/

All social platforms want to be Tiktok: nominally, that's because Tiktok's algorithmic feed is so good at hooking new users and keeping established users hooked. But tech bosses also understand that a purely algorithmic feed is the kind of black box that can be plausibly and subtly enshittified without sparking user revolts:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

Back in 2004, when Mark Zuckerberg was coming to grips with Facebook's success, he boasted to a friend that he was sitting on a trove of emails, pictures and Social Security numbers for his fellow Harvard students, offering this up for his friend's idle snooping. The friend, surprised, asked "What? How'd you manage that one?"

Infamously, Zuck replied, "People just submitted it. I don't know why. They 'trust me.' Dumb fucks."

https://www.esquire.com/uk/latest-news/a19490586/mark-zuckerberg-called-people-who-handed-over-their-data-dumb-f/

This was a remarkably (and uncharacteristically) self-aware moment from the then-nineteen-year-old Zuck. Of course Zuck couldn't be trusted with that data. Whatever Jiminy Cricket voice told him to safeguard that trust was drowned out by his need to boast to pals, or participate in the creepy nonconsensual rating of the fuckability of his female classmates. Over and over again, Zuckerberg would promise to use his power wisely, then break that promise as soon as he could do so without consequence:

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3247362

Zuckerberg is a cautionary tale. Aware from the earliest moments that he was amassing power that he couldn't be trusted with, he nevertheless operated with only the weakest of Ulysses pacts, like a nonbinding promise never to spy on his users:

https://web.archive.org/web/20050107221705/http://www.thefacebook.com/policy.php

But the platforms have learned the wrong lesson from Zuckerberg. Rather than treating Facebook's enshittification as a cautionary tale, they've turned it into a roadmap. The Darth Vader MBA rules high-tech boardrooms.

Algorithmic feeds and other forms of "paternalistic" content presentation are necessary and even desirable in an information-rich environment. In many instances, decisions about what you see must be largely controlled by a third party whom you trust. The audience in a comedy club doesn't get to insist on knowing the punchline before the joke is told, just as RPG players don't get to order the Dungeon Master to present their preferred challenges during a campaign.

But this power is balanced against the ease of the players replacing the Dungeon Master or the audience walking out on the comic. When you've got more than a hundred dollars sunk into a video game and an online-only friend-group you raid with, the games company can do a lot of enshittification without losing your business, and they know it:

https://www.theverge.com/2024/5/10/24153809/ea-in-game-ads-redux

Even if they sometimes overreach and have to retreat:

https://www.eurogamer.net/sony-overturns-helldivers-2-psn-requirement-following-backlash

A tech company that seeks your trust for an algorithmic feed needs Ulysses pacts, or it will inevitably yield to the temptation to enshittify. From strongest to weakest, these are:

Not showing you an algorithmic feed at all;

https://joinmastodon.org/

"Composable moderation" that lets multiple parties provide feeds:

https://bsky.social/about/blog/4-13-2023-moderation

Offering an algorithmic "For You" feed alongside a reverse-chrono "Friends" feed, defaulting to friends;

https://pluralistic.net/2022/12/10/e2e/#the-censors-pen

As above, but defaulting to "For You".

Maturity lies in being strong enough to know your weaknesses. Never trust someone who tells you that they will never yield to temptation! Instead, seek out people – and service providers – with the maturity and honesty to know how tempting temptation is, and who act before temptation strikes to make it easier to resist.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/11/for-you/#the-algorithm-tm

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

--

djhughman https://commons.wikimedia.org/wiki/File:Modular_synthesizer_-_%22Control_Voltage%22_electronic_music_shop_in_Portland_OR_-_School_Photos_PCC_%282015-05-23_12.43.01_by_djhughman%29.jpg

CC BY 2.0 https://creativecommons.org/licenses/by/2.0/deed.en

#pluralistic#twiddling#for you#enshittification#intermediation#the algorithm tm#moral hazard#end to end


Gemini Code Assist Enterprise: AI App Development Tool

Introducing Gemini Code Assist Enterprise’s AI-powered app development tool that allows for code customisation.

The modern economy is driven by software development. Unfortunately, due to a lack of skilled developers, a growing number of integrations, vendors, and abstraction levels, developing effective apps across the tech stack is difficult.

To expedite application delivery and stay competitive, IT leaders must provide their teams with AI-powered solutions that assist developers in navigating complexity.

Google Cloud thinks that the best way to address development challenges is to offer an AI-powered application development solution that works across the tech stack, along with enterprise-grade security guarantees, better contextual suggestions, and cloud integrations that let developers work more quickly and flexibly with a wider range of services.

Google Cloud is presenting Gemini Code Assist Enterprise, the next generation of application development capabilities.

Gemini Code Assist Enterprise goes beyond AI-powered coding assistance in the IDE. This is application development support at the enterprise level. Gemini’s large token context window supports deep local codebase awareness. With a large context window, you can take into account the details of your local codebase and ongoing development session, allowing you to generate or transform code that is better suited to your application.

With code customization, Code Assist Enterprise not only comprehends your local codebase but also provides code recommendations based on internal libraries and best practices within your company. As a result, Code Assist can produce personalized code recommendations that are more precise and pertinent to your company. Developers can remain in the flow state for longer and bring more insights directly into their IDEs, and Code Assist can complete difficult tasks such as updating the Java version across a whole repository. Because of this, developers can concentrate on coming up with original solutions to problems, which increases job satisfaction and gives them a competitive advantage. You can also come to market more quickly.

GitLab.com and GitHub.com repos can be indexed by Gemini Code Assist Enterprise code customisation; support for self-hosted, on-premise repos and other source control systems will be added in early 2025.

Yet IDEs are not the only tool used to build apps. Gemini Code Assist Enterprise integrates coding support across Google Cloud’s services to help specialist coders become more adaptable builders. A code assistant significantly decreases the time required to transition to new technologies, and it integrates the subtleties of an organization’s coding standards into its recommendations. The more services it reaches, the faster your builders can create and deliver applications. To meet developers where they are, Code Assist Enterprise provides coding assistance in Firebase, Databases, BigQuery, Colab Enterprise, Apigee, and Application Integration. Furthermore, each Gemini Code Assist Enterprise user can access these products’ features; they are not separate purchases.

Gemini Code Assist Enterprise users can benefit from SQL and Python code assistance in BigQuery. With the creation of pre-validated, ready-to-run queries (data insights) and a natural language-based interface for data exploration, curation, wrangling, analysis, and visualization (data canvas), they can enhance their data journeys beyond editor-based code assistance and speed up their analytics workflows.

Furthermore, Code Assist Enterprise does not use the proprietary data from your firm to train the Gemini model, since security and privacy are of utmost importance to any business. Source code stored for use in code customization is kept in a Google Cloud-managed project, isolated for each customer organization. Clients are in complete control of which source repositories to use for customization, and they can delete all data at any moment.

Your company and data are safeguarded by Google Cloud’s dedication to enterprise preparedness, data governance, and security. This is demonstrated by projects like software supply chain security, Mandiant research, and purpose-built infrastructure, as well as by generative AI indemnification.

Google Cloud provides you with the greatest tools for AI coding support so that your engineers may work happily and effectively. The market is also paying attention. Because of its ability to execute and completeness of vision, Google Cloud has been ranked as a Leader in the Gartner Magic Quadrant for AI Code Assistants for 2024.

Gemini Code Assist Enterprise Costs

In general, Gemini Code Assist Enterprise costs $45 per month per user; however, with a one-year commitment, a promotional price of $19 per month per user is available until March 31, 2025.
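As a rough illustration of what those two prices mean over a year, here is a small Python sketch. The team size of 10 users is a hypothetical figure, and the calculation assumes the lower price applies for all 12 months, which should be checked against Google Cloud's current pricing terms.

```python
LIST_PRICE = 45   # USD per user per month, as quoted in the post
PROMO_PRICE = 19  # USD per user per month, as quoted in the post
MONTHS = 12


def annual_cost(price_per_user_month, users):
    """Total yearly cost for a team at a flat per-user monthly price."""
    return price_per_user_month * MONTHS * users


team_size = 10  # hypothetical team size, for illustration only

list_total = annual_cost(LIST_PRICE, team_size)
promo_total = annual_cost(PROMO_PRICE, team_size)
savings = list_total - promo_total

print(f"List price:  ${list_total}")
print(f"Promo price: ${promo_total}")
print(f"Difference:  ${savings}")
```

For this hypothetical team, the list price comes to $5,400 a year and the promotional price to $2,280, a difference of $3,120.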

Read more on Govindhtech.com

#Gemini#GeminiCodeAssist#AIApp#AI#AICodeAssistants#CodeAssistEnterprise#BigQuery#Geminimodel#News#Technews#TechnologyNews#Technologytrends#Govindhtech#technology
