#Camera Modules Insights


The Camera Modules Market: Trends, Insights, and Future Outlook

The camera modules market is a rapidly growing industry driven by the increasing demand for high-quality images and videos across various applications. In this blog post, we will delve into the current trends, key players, and future outlook of the camera modules market.

Market Size and Growth

Estimates of the market's size differ by source. One forecast expects the global camera modules market to reach USD 49.24 billion by 2029, growing at a compound annual growth rate (CAGR) of 4.34% during the forecast period (2024-2029). A second, more optimistic estimate values the market at USD 43.3 billion in 2023 and projects USD 68.5 billion by 2028, a CAGR of 9.6%.
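The figures quoted above can be sanity-checked with the standard CAGR formula. The sketch below is only an arithmetic check: the helper name `cagr` is illustrative, and it assumes five compounding periods for each forecast window.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.096 means 9.6%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Estimate quoted with a 2023 base: USD 43.3 billion growing to USD 68.5 billion by 2028.
rate = cagr(43.3, 68.5, 5)
print(f"Implied CAGR 2023-2028: {rate:.1%}")

# Estimate quoted with a 2029 target: USD 49.24 billion at 4.34% over 2024-2029.
# Assumption: five compounding periods, so the 2024 base is back-computed.
base_2024 = 49.24 / (1 + 0.0434) ** 5
print(f"Implied 2024 base: USD {base_2024:.1f} billion")
```

Each pair of numbers is internally consistent, so the gap between them comes from the two estimates using different baselines and growth assumptions rather than from an arithmetic slip.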

Market Trends

Mobile Segment Dominance

The mobile segment is expected to hold a notable market share, driven by growing smartphone sales in price-sensitive economies where technology adoption has been slower. Demand for higher camera resolution across smartphones in every price range has prompted several manufacturers to launch new sensors and camera modules.

Technological Advancements

The camera modules market is driven by technological advancements in image sensors, lenses, and other components. The integration of AI algorithms has boosted camera performance, while the adoption of high-resolution camera modules in machine vision systems is driving the growth of the market.

Applications

Camera modules are used in a wide range of applications, including security, medical, automotive, and industrial. The growing demand for consumer electronics and the increasing adoption of IoT-based security systems are driving the growth of the camera modules market.

Key Players

The camera modules market is competitive. Significant manufacturers include LG Innotek, OFILM Group Co., Ltd., Sunny Optical Technology (Group), Hon Hai Precision Industry Co., Ltd. (Foxconn), Chicony Electronics, Sony, Intel, and Samsung Electro-Mechanics.

Market Segmentation

The camera modules market can be segmented by component, application, and geography. The image sensor segment is expected to account for the largest market share due to the increasing demand for higher-resolution images and enhanced low-light performance.

Future Outlook

The camera modules market is expected to continue growing due to the increasing demand for high-quality images and videos across various applications. The adoption of AI algorithms and the integration of camera modules in emerging technologies such as autonomous vehicles and drones are expected to drive the growth of the market.

Conclusion

The camera modules market is a rapidly growing industry driven by technological advancements and increasing demand for high-quality images and videos. By one forecast, the market will reach USD 49.24 billion by 2029, growing at a CAGR of 4.34% during the forecast period. Key players include LG Innotek, OFILM Group Co., Ltd., Sunny Optical Technology (Group), and Hon Hai Precision Industry Co., Ltd. (Foxconn). Growth is expected to continue as more applications adopt camera modules.

#Camera Modules Market#Camera Modules Industry#Camera Modules Trends#Camera Modules Insights#Camera Modules Future Outlook#Camera Modules Market Size#Camera Modules Market Growth#Camera Modules Market Share#Camera Modules Market Forecast#Camera Modules Market Analysis

0 notes


Scientists torturing backronyms/acronyms happens a lot, actually (see my tags for examples)

Backronyms

Physicists suck at naming things (I can say this because I'm a MechE and I have had to deal with so many physicists), but occasionally they have a stroke of brilliance. Like, a friend of mine worked on a dark matter detector called DarkSide. That's so goofy that it wraps back around to good.

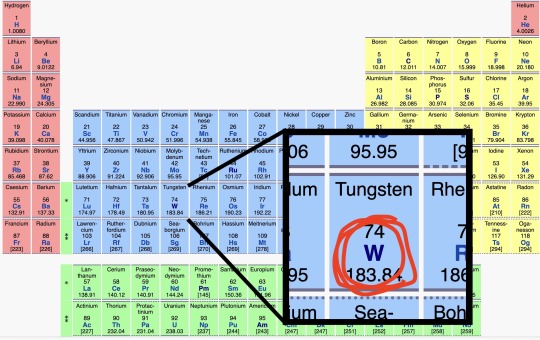

Anyway, there's this superconducting fusion reactor in France called WEST. It's notable for having first-wall shielding tiles (the innermost surface of the vacuum chamber, directly facing the fusion plasma) entirely made of tungsten.

There are a lot of materials used for plasma-facing components – tungsten, molybdenum, graphite, beryllium, various composites and combinations of the above – but it's pretty rare for a reactor to go full tungsten. Tungsten can take extremely high temperatures, but it's brittle and expensive, and "high-Z" (high atomic number) impurities in the plasma cause their own issues. So, the main purpose of WEST is to investigate the viability of an all-tungsten first wall and divertor.

To that end, they tortured an acronym until they got it to work:

Tungsten Environment in Steady-state Tokamak

Or "WEST"

Get it?

GEW IW??

#let me name a few from mars-related things in order of least to most ridiculous:#one of the least bad ones: Mars Atmosphere and Volatile EvolutioN (MAVEN)#INterior exploration using Seismic Investigations Geodesy and Heat Transport (InSight)#ESCApe and Plasma Acceleration and Dynamics Explorer (ESCAPADE) (they really used 67% of the whole word there huh)#MArs RadIation environment Experiment (MARIE) (radiation detector on Mars Odyssey)#TElescopic Nadir imager for GeOmOrphology (TENGOO) (a camera on MMX)#Mars-moon Exploration with GAmma rays and NEutrons (MEGANE) (spectrometer on MMX) (MMX team sure loves destroying backronyms)#Optical RadiOmeter composed of CHromatic Imagers (OROCHI) (could you guess it's MMX again?)#that's all I can find off the top of my head but here please be as tortured as I am about this#EDIT: I FORGOT TO MENTION SCHIAPARELLI LANDER'S OFFICIAL NAME#ExoMars EDM#EDM standing for EDL Demonstrator Module#EDL standing for Entry Descent and Landing#they shoved a whole-ass acronym in their acronym

978 notes


Integration of AI in Driver Testing and Evaluation

Introduction: As technology continues to shape the future of transportation, Canada has taken a major leap in modernizing its driver testing procedures by integrating Artificial Intelligence (AI) into the evaluation process. This transition aims to enhance the objectivity, fairness, and efficiency of driving assessments, marking a significant advancement in how new drivers are tested and trained across the country.

Key Points:

Automated Test Scoring for Objectivity: Traditional driving test evaluations often relied heavily on human judgment, which could lead to inconsistencies or perceived bias. With AI-driven systems now analysing road test performance, scoring is based on standardized metrics such as speed control, reaction time, lane discipline, and compliance with traffic rules. These AI systems use sensor data, GPS tracking, and in-car cameras to deliver highly accurate, impartial evaluations, removing potential examiner subjectivity.

Real-Time Feedback Enhances Learning: One of the key benefits of AI integration is the ability to deliver feedback as soon as the test concludes. Drivers receive an immediate breakdown of their performance, highlighting both strengths and areas needing improvement. This timely feedback accelerates the learning process and helps individuals better prepare for future driving scenarios or retests, if required.

Enhanced Test Consistency Across Canada: With AI systems deployed uniformly across various testing centres, all applicants are assessed using the same performance parameters and technology. This ensures that no matter where in Canada a person takes their road test, the evaluation process remains consistent and fair. It also eliminates regional discrepancies and contributes to national standardization in driver competency.

Data-Driven Improvements to Driver Education: AI doesn’t just assess drivers—it collects and analyses test data over time. These insights are then used to refine driver education programs by identifying common mistakes, adjusting training focus areas, and developing better instructional materials. Platforms like licenseprep.ca integrate this AI-powered intelligence to update practice tools and learning modules based on real-world testing patterns.

Robust Privacy and Data Protection Measures: As personal driving data is collected during AI-monitored tests, strict privacy policies have been established to protect individual information. All recorded data is encrypted, securely stored, and only used for training and evaluation purposes. Compliance with national data protection laws ensures that drivers’ privacy is respected throughout the testing and feedback process.

Explore More with Digital Resources: For a closer look at how AI is transforming driver testing in Canada and to access AI-informed preparation materials, visit licenseprep.ca. The platform stays current with tech-enabled changes and offers resources tailored to the evolving standards in driver education.

#AIDrivingTests#SmartTesting#DriverEvaluation#TechInTransport#CanadaRoads#LicensePrepAI#FutureOfDriving

4 notes


TARS

TARS is a highly sophisticated, artificially intelligent robot featured in the science fiction film "Interstellar." Designed by a team of scientists, TARS stands at an imposing height of six feet, with a sleek and futuristic metallic appearance. Its body, made primarily of sturdy titanium alloy, is intricately designed to efficiently navigate various terrains and perform a wide range of tasks.

At first glance, TARS's appearance may seem minimalistic, almost like an avant-garde monolith. Its body is divided into several segments, each housing the essential components necessary for its impeccable functionality. The segments connect seamlessly, allowing for fluid movements and precise operational control. TARS's unique design encapsulates a simple yet captivating aesthetic, which embodies its practicality and advanced technological capabilities.

TARS's main feature is its hinged quadrilateral structure that supports its movement pattern, enabling it to stride with remarkable agility and grace. The hinges on each of its elongated limbs provide exceptional flexibility while maintaining structural stability, allowing TARS to adapt to various challenging terrains effortlessly. These limbs taper gradually at the ends, equipped with variable grip systems that efficiently secure objects, manipulate controls, and traverse rough surfaces with ease.

The robot's face, prominently positioned on the upper front segment, provides an avenue for human-like communication. Featuring a rectangular screen, TARS displays digitized expressions and inbuilt textual interfaces. The screen resolution is remarkably sharp, allowing intricate details to be displayed and enabling TARS to effectively convey its emotions and intentions to its human counterparts. Below the screen, a collection of visual and auditory sensors is neatly integrated to facilitate TARS's interaction with its surroundings.

TARS's AI-driven personality is reflected in its behaviors, movements, and speech patterns. Its personality leans towards a rational and logical disposition, manifested through its direct and concise manner of speaking. TARS's voice, modulated to sound deep and slightly robotic, projects an air of confidence and authority. Despite the synthetic nature of its voice, there is a certain warmth that emanates, fostering a sense of companionship and trust among those who interact with it.

To augment its perceptual abilities, TARS is outfitted with a myriad of sensors located strategically throughout its physical structure. These sensors encompass a wide spectrum of functions, including infrared cameras, proximity detectors, and light sensors, granting TARS unparalleled awareness of its surroundings. Moreover, a central processing unit, housed within its core, processes the vast amount of information gathered, enabling TARS to make informed decisions swiftly and autonomously.

TARS's advanced cognitive capabilities offer an extensive array of skills and functionalities. It possesses an encyclopedic knowledge of various subjects, from astrophysics to engineering, effortlessly processing complex information and providing insights in an easily understandable manner. Additionally, TARS assists humans in various ways, such as planning missions, executing intricate tasks, and providing critical analysis during high-pressure situations.

Equally noteworthy is TARS's unwavering loyalty. Through its programming and interactions, it exhibits a sense of duty and commitment to its human companions and the mission at hand. Despite being an AI-driven machine, TARS demonstrates an understanding of empathy and concern, readily offering support and companionship whenever needed. Its unwavering loyalty and the camaraderie it forges help to foster trust and reliance amidst the team it is a part of.

In conclusion, TARS is a remarkable robot, standing as a testament to human ingenuity and technological progress. With its awe-inspiring design, practical yet aesthetically pleasing body structure, and advanced artificial intelligence, TARS represents the pinnacle of robotic advancements. Beyond its physical appearance, TARS's personality, unwavering loyalty, and unparalleled cognitive abilities make it an exceptional companion and invaluable asset to its human counterparts.

#TARS#robot ish#AI#interstellar#TARS-TheFutureIsHere#TARS-TheUltimateRobot#TechTuesdaySpotlight-TARS#FuturisticAI-TARS#RoboticRevolution-TARS#InnovationUnleashed-TARS#MeetTARS-TheRobotCompanion#AIAdvancements-TARS#SciFiReality-TARS#TheFutureIsMetallic-TARS#TechMarvel-TARS#TARSTheTrailblazer#RobotGoals-TARS#ArtificialIntelligenceEvolution-TARS#DesignMeetsFunctionality-TARS

44 notes


Denzong Films – Where Ideas Turn Into Impactful Visual Stories

In an age where attention spans are shrinking and visuals dominate the digital space, businesses can no longer afford to stay invisible. Whether you're a startup unveiling your product, an established brand narrating your journey, or a corporate giant training your workforce—the right video content can change everything.

Enter Denzong Films, a powerhouse of creativity and precision, and a go-to video production company in Bangalore that’s redefining storytelling through the lens.

Why Denzong Films Is the Video Partner You’ve Been Searching For

When you're investing in video, you're not just hiring cameras—you’re hiring imagination, experience, and a clear understanding of your business goals. Denzong Films brings over a decade of collective industry experience, combining cinematic techniques with marketing insight to create content that doesn't just look good—it performs.

From pre-production planning to post-production finesse, every step is treated with care, purpose, and a deep commitment to quality. The result? Videos that educate, inspire, and convert.

Your Trusted Corporate Video Production Company in Bangalore

Corporate storytelling is an art—and Denzong Films excels at it. As a seasoned corporate video production company in Bangalore, we produce a wide range of content including leadership interviews, internal training modules, product launches, and client testimonials.

We understand that every corporate message carries weight, which is why our team ensures crystal-clear messaging, high production value, and on-time delivery. Whether it’s an explainer for your boardroom or a brand film for your global audience, Denzong Films delivers videos that leave a lasting impact.

A Creative Force Among Video Creation Companies in Bangalore

Looking for a video creation company in Bangalore that brings ideas to life with clarity and flair? Denzong Films is your creative partner. We specialize in everything from 2D animation and promotional videos to real estate walkthroughs and event aftermovies. Every project is powered by fresh thinking, professional execution, and a commitment to storytelling that resonates.

Visit Us or Get in Touch

📍 Address: 149/3 L.B.S Nagar, 7th Cross, 8th Main Rd, HAL, Bengaluru, Karnataka 560017

📞 Contact: 07679738616

Whether you're a brand with a bold vision or a company looking to simplify complex messages, Denzong Films is the creative engine you need.

👉 Ready to transform your message into a masterpiece? Explore more at denzongfilms.com

#video production company in bangalore#video production agency in bangalore#corporate video production company in bangalore#corporate video production agency in bangalore#best video production company in bangalore

1 note


Top 10 Projects for BE Electrical Engineering Students

Embarking on a Bachelor of Engineering (BE) in Electrical Engineering opens up a world of innovation and creativity. One of the best ways to apply theoretical knowledge is through practical projects that not only enhance your skills but also boost your resume. Here are the top 10 projects for BE Electrical Engineering students, designed to challenge you and showcase your talents.

1. Smart Home Automation System

Overview: Develop a system that allows users to control home appliances remotely using a smartphone app or voice commands.

Key Components:

Microcontroller (Arduino or Raspberry Pi)

Wi-Fi or Bluetooth module

Sensors (temperature, motion, light)

Learning Outcome: Understand IoT concepts and the integration of hardware and software.

2. Solar Power Generation System

Overview: Create a solar panel system that converts sunlight into electricity, suitable for powering small devices or homes.

Key Components:

Solar panels

Charge controller

Inverter

Battery storage

Learning Outcome: Gain insights into renewable energy sources and energy conversion.

3. Automated Irrigation System

Overview: Design a system that automates the watering of plants based on soil moisture levels.

Key Components:

Soil moisture sensor

Water pump

Microcontroller

Relay module

Learning Outcome: Learn about sensor integration and automation in agriculture.
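As a starting point, the control logic for this project can be prototyped on a PC before any hardware is wired up. The sketch below is one possible approach, assuming a two-threshold (hysteresis) scheme; the class name and the 30% and 45% moisture thresholds are hypothetical and would need calibrating against a real sensor.

```python
from dataclasses import dataclass


@dataclass
class IrrigationController:
    """Two-threshold (hysteresis) control for a pump relay.

    Thresholds are hypothetical moisture percentages, not calibrated values.
    """

    dry_threshold: float = 30.0  # switch the pump on below this reading
    wet_threshold: float = 45.0  # switch the pump off above this reading
    pump_on: bool = False

    def update(self, moisture_percent: float) -> bool:
        """Return the desired relay state for the latest sensor reading."""
        if moisture_percent < self.dry_threshold:
            self.pump_on = True
        elif moisture_percent > self.wet_threshold:
            self.pump_on = False
        # Between the thresholds the previous state is kept, which avoids
        # rapid relay chatter when the reading hovers near one set point.
        return self.pump_on


controller = IrrigationController()
readings = [50.0, 35.0, 28.0, 33.0, 44.0, 47.0, 40.0]  # simulated sensor values
states = [controller.update(r) for r in readings]
print(states)
```

On a microcontroller, the simulated `readings` list would be replaced by periodic sensor reads and the returned state would drive the relay module.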

4. Electric Vehicle Charging Station

Overview: Build a prototype for an electric vehicle (EV) charging station that monitors and controls charging processes.

Key Components:

Power electronics (rectifier, inverter)

Microcontroller

LCD display

Safety features (fuses, circuit breakers)

Learning Outcome: Explore the fundamentals of electric vehicles and charging technologies.

5. Gesture-Controlled Robot

Overview: Develop a robot that can be controlled using hand gestures via sensors or cameras.

Key Components:

Microcontroller (Arduino)

Motors and wheels

Ultrasonic or infrared sensors

Gesture recognition module

Learning Outcome: Understand robotics, programming, and sensor technologies.

6. Power Factor Correction System

Overview: Create a system that improves the power factor in electrical circuits to enhance efficiency.

Key Components:

Capacitors

Microcontroller

Current and voltage sensors

Relay for switching

Learning Outcome: Learn about power quality and its importance in electrical systems.
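Before choosing capacitors, it helps to estimate how much capacitance the correction needs. The sketch below uses the textbook relation Q_c = P (tan φ1 − tan φ2) and C = Q_c / (2π f V²), assuming a single-phase, lagging (inductive) load with a shunt capacitor; the function name and the example load values are illustrative only.

```python
import math


def correction_capacitance(real_power_w: float, pf_initial: float,
                           pf_target: float, voltage_rms: float,
                           frequency_hz: float) -> tuple[float, float]:
    """Return (reactive power in var, capacitance in farads) for a shunt capacitor.

    Assumes a single-phase, lagging load and an ideal capacitor.
    """
    phi_initial = math.acos(pf_initial)
    phi_target = math.acos(pf_target)
    # Reactive power the capacitor must supply to move from pf_initial to pf_target.
    reactive_var = real_power_w * (math.tan(phi_initial) - math.tan(phi_target))
    # Q = V^2 * 2*pi*f*C for a capacitor across the supply, solved for C.
    capacitance_f = reactive_var / (2 * math.pi * frequency_hz * voltage_rms ** 2)
    return reactive_var, capacitance_f


# Hypothetical example load: 1 kW at 0.70 lagging, corrected to 0.95 on 230 V, 50 Hz.
reactive_var, capacitance_f = correction_capacitance(1000.0, 0.70, 0.95, 230.0, 50.0)
print(f"Capacitor must supply {reactive_var:.0f} var")
print(f"Required capacitance: {capacitance_f * 1e6:.1f} uF")
```

In an automatic system, the microcontroller would compute the measured power factor from the current and voltage sensors and switch capacitor steps through the relay until the target is met.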

7. Wireless Power Transmission

Overview: Experiment with transmitting power wirelessly over short distances.

Key Components:

Resonant inductive coupling setup

Power source

Load (LED, small motor)

Learning Outcome: Explore concepts of electromagnetic fields and energy transfer.

8. Voice-Controlled Home Assistant

Overview: Build a home assistant that can respond to voice commands to control devices or provide information.

Key Components:

Microcontroller (Raspberry Pi preferred)

Voice recognition module

Wi-Fi module

Connected devices (lights, speakers)

Learning Outcome: Gain experience in natural language processing and AI integration.

9. Traffic Light Control System Using Microcontroller

Overview: Design a smart traffic light system that optimizes traffic flow based on real-time data.

Key Components:

Microcontroller (Arduino)

LED lights

Sensors (for vehicle detection)

Timer module

Learning Outcome: Understand traffic management systems and embedded programming.
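One simple way to make the signal timing respond to sensor data is to split a fixed green-time budget between approaches in proportion to their detected queues. The sketch below assumes that scheme; the function name, the 60-second budget, and the 10-second minimum green are hypothetical values chosen for illustration, not a traffic engineering standard.

```python
def allocate_green(queues: dict[str, int], budget_s: float = 60.0,
                   min_green_s: float = 10.0) -> dict[str, float]:
    """Split a green-time budget between approaches by queue length.

    Every approach gets at least min_green_s; the spare time is shared
    in proportion to the vehicle counts reported by the sensors.
    """
    if not queues:
        return {}
    if budget_s < min_green_s * len(queues):
        raise ValueError("budget is too small for the minimum green times")
    spare = budget_s - min_green_s * len(queues)
    total = sum(queues.values())
    if total == 0:
        # No vehicles detected anywhere: share the spare time equally.
        return {name: min_green_s + spare / len(queues) for name in queues}
    return {name: min_green_s + spare * count / total
            for name, count in queues.items()}


# Simulated vehicle counts from the detection sensors.
plan = allocate_green({"north_south": 12, "east_west": 4})
print(plan)
```

The same function could run once per signal cycle on the microcontroller, with the returned durations fed to the timer that drives the LEDs.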

10. Data Acquisition System

Overview: Develop a system that collects and analyzes data from various sensors (temperature, humidity, etc.).

Key Components:

Microcontroller (Arduino or Raspberry Pi)

Multiple sensors

Data logging software

Display (LCD or web interface)

Learning Outcome: Learn about data collection, processing, and analysis.

Conclusion

Engaging in these projects not only enhances your practical skills but also reinforces your theoretical knowledge. Whether you aim to develop sustainable technologies, innovate in robotics, or contribute to smart cities, these projects can serve as stepping stones in your journey as an electrical engineer. Choose a project that aligns with your interests, and don’t hesitate to seek guidance from your professors and peers. Happy engineering!

5 notes


System Collapse, Chapter 6

(Curious what I'm doing here? Read this post! For the link index and a primer on The Murderbot Diaries, read this one! Like what you see? Send me a Ko-Fi.)

In which 57 sources of anxiety sounds low, actually.

The team is not happy. Iris records another briefing, even though it's early for a check-in, and Murderbot hopes that this and the other pathfinder return soon with some insight from the rest of the team, but only counts on it as a way to let them know what happened if the B-Es attack them. Threat assessment puts the chance of a B-E attack low, but mostly because these three and MB aren't much of a threat to at-least-5 and another SecUnit.

And oh, that SecUnit is causing some discussions among the team. MB has to explain that it can't just go around freeing every SecUnit willy nilly, and besides that, a freed SecUnit doesn't instantly become trustworthy. A freed unit might need to be killed anyway, if it goes rogue and attacks the humans. Tarik seems to understand. Ratthi definitely doesn't, but acknowledges that MB is the expert on the matter, and he doesn't want to press it into something it doesn't think is safe. MB appreciates that about Ratthi.

After all that, MB and AC2 arrange a secure connection for Iris and Trinh, the primary "operator" for AC2. Trinh is a little unnerved at a second group of strangers making contact so soon after B-E. MB figures it'd be pretty freaked out at that, too. Iris explains the situation, and Trinh observes that she's saying the same thing B-E did: that she's here to help. MB groans mentally that the colonists have no reason to trust them.(1)

Tarik, Ratthi, and Art-drone strategize on the shuttle, and a lot of time is spent on the potential arguments to be made, and what the colonists might and are likely to know from their sporadic contact and, potentially, spying via AC2's connection to AC1.

On the plus side, AC2 gave MB the location of the B-E shuttle, and the best route to it without alerting them. So, it takes the opportunity to go scout it out. MB grumbles about the lack of cameras in the last section of the path AC2 directs it to, as well as none outside. Preparedness is everything, dangit!(2) Meanwhile, Art-drone has taken a defensive position just inside the hangar.

MB takes some comfort that the colonists might not trust them, but AC2 trusts it. Mostly, computer systems trust easily if you keep things simple and don't try to provoke their boundaries. AC2 wants to protect its humans, and MB has so far shown no sign of wanting to harm them.

So, it provides the team with the video feed where the B-Es are still talking to most of the humans. There's no audio, but Art-drone is interpreting from mouth and facial movements, and they probably understand more than the B-Es do since Thiago's translation module is "clearly better". AC2 vouching for MB won't win over any humans, though. Sometimes not even solid evidence can convince them.

AC2 asks MB why the B-E SecUnit refused a connection request. MB thinks that's a good thing, since normal SecUnits can't hack, only CombatUnits.(3) MB is pretty sure this one's a normal SecUnit, since its armour is very similar to Three's. So, it has to explain to AC2 that it's under the control of a governor module. It doesn't have an answer for AC2's subsequent why (is this allowed)?

By now, MB has made it to the other hangar, where the B-Es landed. It wonders how the B-Es knew to look here particularly. Earlier, Iris asked Art-drone if the B-Es could have followed them in, but Art-drone came to the conclusion that they arrived at least a day earlier, from some gap in Art-prime's pathfinder scanning. Art-drone is miffed enough that it expects Art-prime will be furious.

Still, back in the present, MB realizes it's drifted off again, and Ratthi brings it back to task by noticing a second door. AC2 sends MB a rough map of the installation, and MB shares it with the humans. With nothing better to do, MB decides to stand there and hang out on purpose.

The wind outside gets stronger, screaming through the hangar's crevices. Art-drone says pathfinders confirm the weather is getting worse and it may lose contact with them. AC2 confirms that this matches its weather station data.

MB pulls up some Sanctuary Moon, not wanting to distract Art-drone with something new. After a couple of minutes, AC2 asks what MB is doing. MB explains watching media, and AC2 offers its entertainment partition, and MB has hit a goldmine, though some of the titles don't match words in its language modules. When Art-drone notices, it says these are pre-CR media.

The scene flips(4) over to the now-unredacted incident again, and MB says it's fairly sure the corpse never chewed on its leg, but it's even more sure it saw that happen to a human at some point on a survey mission. It told Art that it (MB) had fucked everything up and that Art and its humans shouldn't want it to do security for them anymore. Art asks why, and MB says something is broken inside it.

Art points out that its wormhole drive is broken. MB says that's fixable, and knows it was a mistake to say so since it really doesn't know all of what happened to Art from Art's point of view, but continues that its flaw is in its organic neural tissue. Art points out that this is how the humans diagnosed it so quickly, and asks if they're disposable when it happens to them. MB grumbles that that's what corporations say. Art says it's not a corpo.

MB tells Art to stop, that this isn't it talking, just its… Art finishes the thought MB trails out of: its certification in trauma protocol, which is obviously useless in this situation. MB says it's for humans, and Art points out that this affects MB's human bits. MB says it's not talking to Art anymore.(5)

The first thought MB has is that it should trade all its media for all AC2's. The second is that its humans aren't going to be staying much longer, whether they go with Art or B-E. At least the situation sucking so bad is a great distraction for how much MB feels it sucks, it thinks, just before realizing it missed something in its distraction.

Trinh invites the team to spend the night in the installation, since the weather is worsening. Iris asks MB if they should, and despite the threat of the B-Es, MB agrees. Art-drone thanks it, and MB knows it's not the only one that was imagining the other SecUnit sneaking up on the shuttle.

Trinh sends them directions, which they don't need with AC2's map, but that put them at the opposite end from the accommodations the B-Es were given. It's a nice gesture, even if it's only a twenty minute walk apart.(6) MB sneaks back through the back corridors to meet up with the others. MB does its best to act like the others, even to folding its hood and helmet back. It's not sure what AC2 has told the colonists about it, and it doesn't want to ask, in case it hadn't told them about MB and this causes it to. MB knows it can't stay a secret, but it wants less interaction with them if possible.

Flash to a clip of it telling Mensah it doesn't know what's wrong with itself, and Mensah saying she thinks it knows, and just doesn't want to talk about it yet.(7)

In the present and in the team feed, to prevent eavesdropping by B-E, Iris says so far, Trinh has rejected B-E's requests to speak to the whole colony. They're only allowing them the smaller group. Ratthi worries what kind of employment pitch they might make, and Tarik says they'll be real good at dressing it up, and this group might be more vulnerable to their manipulation.

Iris says the group seems pretty independent, she thinks the chance of them falling for it is low, but it might not even be in their best interest to leave with the others. If they can forge the charter right, they'd have the right to choose to stay or go as they please. Ratthi adds that it would be even easier if the University comes to study the contamination, offering a means of transport out later as needed. Tarik is about as optimistic about this as MB, which is to say, not very.

MB notices that they all look really tired, and kicks itself as it asks Art-drone how long it's been since they slept. It replies that they were supposed to take naps on the flight in, but nobody could rest. MB feels like it fucked up again, but Art-drone offers that they both fucked this one up.

AC2 notifies them that there's a human approaching, as they near their assigned quarters. Ratthi asks in the team feed if this is a sign of trust, on the system's part. Iris asks Art-drone if that's possible, and it reminds her that they've discussed anthropomorphizing machine intelligence before. Ratthi asks what Art-drone considers human characteristics in this way, and Tarik begs them not to start. There's some lighthearted teasing of Tarik by Art, and Iris laughingly says she's sorry she asked.

The human doesn't have a feed ID, but AC2 supplies a name of Lucia and he/him pronouns when MB asks, since it knows the humans will want to know. Iris thanks him for inviting them in, and he nervously says she's welcome and walks them to the rooms, showing them the facilities. Iris tries to initiate three different conversations, to no avail. The team all worries B-E poisoned the well already.

Iris goes to lay on one of the beds in the other room, while Ratthi and Tarik stay in the first room to talk about what's going on between them. MB is stuck in the doorway between the rooms, monitoring everything in case of attacks. It recalls overhearing a heated discussion on Art after the incident, and learning that it was Ratthi and Tarik having a "sexual discussion". Apparently, this felt like the right time to talk again. MB backburners their audio except for a keyword filter in case they yell for help, plays a nice nothing loop of sound, and stares at the wall.(8)

The humans do, eventually, manage to get some sleep. Art-drone gets MB to watch an episode of World Hoppers. MB thinks about how it has fifty seven unique causes of concern or anxiety, and it can do nothing about any of them. That goes up to fifty eight when Trinh calls to ask for an in-person meeting with them and B-E.

=====

(1) Trust the process.

(2) There are reasonable limits, it's true. Being prepared beyond a certain point is just feeding your own paranoia. Just look at all the right-wing "preppers" who keep expecting the apocalypse. But, a certain amount of preparation and expecting the worst can keep you safe in an emergency. Never installing cameras in a whole section of your installation or at the exits is absolutely an error in judgement on some level.

(3) I'm sure it means hack something this complex, but… Murderbot, you hack literally all the time. Are you secretly a CombatUnit?

(4) It's not lost on me that, now that we know what the redacted incident was, it feels like more pre-CR talk means more flashback and MB being more distracted in the present.

(5) If that lasted 5 minutes I'll do something improbable.

(6) I dunno, see, this is one of those things where MB is programmed to go past what I think are reasonably pessimistic expectations of danger. It's understandable, this is what it was literally built for, but situationally speaking, I think it's a bit excessive. Nobody wants to make a bad impression on the colonists, if nothing else.

(7) Why this conversation? Why now? Why here, right after being worried about the colonists figuring out what it is?

(8) Personally, I want all the juicy details, but I can't blame MB for its lack of interest.

#the murderbot diaries#murderbot diaries#system collapse#murderbot#secunit#iris (murderbot)#tarik (murderbot)#ratthi#art (murderbot)#trinh (murderbot)#adacol2

7 notes


Text

Animation Brief 01 - Week 1 - World Building

Above: Background art from Angel's Egg (1985)

Well, I got into the animation course so my plan to begin coasting for the next three years is well underway. All I have to do is not fail this last module, which unfortunately means having to work...

Our initial brief will take us through the first two weeks of the term, moving at a pretty considerable clip compared to the last two. This gives me less time to engage in my favourite activity: not working. The title of the brief is "World Building" and the concept is to create a series of drawings and models in exploration of this theme, supplemented with some 3D modelling for a "mini-me" personal avatar.

Above: Background art from Lupin III: The Castle of Cagliostro (1979)

It was a funny brief to see as it almost exactly reflects the difference of opinion I had with our tutor, Paul Gardiner, on the last animation workshop for the Movement Brief. My concept was character focused, placing emphasis on the little penguin trying to scale the insurmountable spiral. Paul's feedback was insightful and I applied it as best I could, but ultimately his focus was more on the world, the contrast between man's insignificance in the face of such a titanic monolith. I guess I would say I was asking questions of how a character would interact with such a challenge but he was asking the same of the world itself.

Above: Compilation of background art from Digimon Adventure (1999)

Anyway, I can write more of my thoughts on the brief in the abstract later. For now I'd like to focus on the task at hand. For our first week we'll be looking at soft landscapes, greenery and nature, with an emphasis on horizontal composition, panorama and organic shapes. This will be contrasted next week with an opposite focus on cityscapes. To get us into the right mindset, Paul showed us a video of anime cinematography he'd cut together and set to Radiohead. (ó﹏ò。)

There was lots of recognisable work from famous directors like Hayao Miyazaki, Isao Takahata, Rintaro and Mamoru Oshii. The idea was to focus on the camera and pay attention to how it interacted with the background art. Most were simple pans, tilts, and zooms, with the occasional parallaxed element. None employed true animation, which is normal and expected for backgrounds. The video highlighted how much information could be conveyed with clever application of simple techniques.

We have a six-step outline for the project and an itinerary for each day, so I'll update more as we go.

3 notes


Text

Atomos Connect Camera to Cloud Workflow Success Story - Videoguys

New Post has been published on https://thedigitalinsider.com/atomos-connect-camera-to-cloud-workflow-success-story-videoguys/


In the blog post “How GMedia tells great stories in no time with Camera to Cloud” by Atomos for Videomaker, the senior producer Joshua Cruse from GMedia shares insights into their video production workflow and how they efficiently tell compelling stories using a Camera to Cloud (C2C) approach. Here’s a summary:

Background:

Joshua Cruse, senior producer at GMedia, began his journey capturing musical performances with a passion for audio and video.

GMedia, the creative agency for Green Machine Ensembles at George Mason University, focuses on showcasing various musical performance groups.

Challenges Before C2C:

Traditional video production workflows led to exhaustion, with long hours waiting for media to off-load onto disks.

Upgrading to high-quality 4K video added to the production team’s burden, as the post-production workflow didn’t keep pace with camera advancements.

Transition to Camera to Cloud (C2C):

GMedia adopted the Camera to Cloud workflow using Atomos Connect module for Ninja and integrated it with the Frame.io creative collaboration platform.

Continuing to use Atomos devices from the late 2010s into the mid-2020s has proven to be a sustainable and cost-effective practice.

C2C workflow proved to be a “magic link” for GMedia, offering cohesion, accessibility from anywhere, and significant time savings.

Benefits of C2C Workflow:

C2C workflow includes Atomos monitor-recorders with C2C connectivity and Frame.io for near-instant file uploads.

Editors can start crafting edits sooner, speeding up post-production, and proxy files stored in Frame.io are perfect for quick social media edits.

Josh emphasizes the importance of proper archiving without sacrificing recording quality for quicker turnarounds.

Real-Time Collaboration with C2C:

A specific example from April 2023 highlights the real-time collaboration aspect of C2C.

A remote producer, Tina, edited footage in real time from home while Josh, on-site, confirmed shots using an AirPod, showcasing the flexibility and efficiency of C2C.

Time-Saving and Future Plans:

Time is emphasized as a non-renewable resource, and the C2C workflow is designed to save time and make the team’s life easier.

Josh and the GMedia team see C2C as a long-term solution, committing to utilizing it as long as Frame.io and Atomos support it, highlighting its transformative impact on their workflow.

In conclusion, GMedia’s adoption of Camera to Cloud with Atomos and Frame.io has not only saved time but has become an integral part of their efficient and innovative video production process, allowing them to tell compelling stories quickly and effectively.

Read the full blog post by Atomos for Videomaker HERE

#2023#4K#Accessibility#approach#Atomos#audio#background#Blog#Cloud#Collaboration#collaboration platform#connectivity#devices#efficiency#Full#Future#green#how#insights#it#LED#life#Link#media#module#monitor#performance#Performances#post-production#process

2 notes


Text

INDIA HAS JOINED THE MOON CLUB LETS GOOOOO

India has landed its Chandrayaan-3 spacecraft on the moon, becoming only the fourth nation ever to accomplish such a feat. The mission could cement India’s status as a global superpower in space. Previously, only the United States, China and the former Soviet Union had completed soft landings on the lunar surface. Chandrayaan-3’s landing site is also closer to the moon’s south pole than any other spacecraft in history has ventured. The south pole region is considered an area of key scientific and strategic interest for spacefaring nations, as scientists believe the region to be home to water ice deposits. The water, frozen in shadowy craters, could be converted into rocket fuel or even drinking water for future crewed missions.

Indian Prime Minister Narendra Modi, currently in South Africa for the BRICS Summit, watched the landing virtually and shared broadcasted remarks on the livestream.

“On this joyous occasion…I would like to address all the people of the world,” he said. “India’s successful moon mission is not just India’s alone. This is a year in which the world is witnessing India’s G20 presidency. Our approach of one Earth, one family, one future is resonating across the globe.

“This human-centric approach that we present and we represent has been welcome universally. Our moon mission is also based on the same human-centric approach,” Modi added. “Therefore, this success belongs to all of humanity, and it will help moon missions by other countries in the future.”

India’s attempt to land its spacecraft near the lunar south pole comes just days after another nation’s failed attempt to do the same. Russia’s Luna 25 spacecraft crashed into the moon on August 19 after its engines misfired, ending the country’s first lunar landing attempt in 47 years.

Chandrayaan-3’s journey

As Chandrayaan-3 approached the moon, its cameras captured photographs, including one taken on August 20 that India’s space agency shared Tuesday. The image offers a close-up of the moon’s dusty gray terrain. India’s lunar spacecraft consists of three parts: a lander, a rover and a propulsion module, which provided the spacecraft all the thrust required to traverse the 384,400-kilometer (238,855-mile) void between the moon and Earth.

The lander, called Vikram, completed the precision maneuvers required to make a soft touchdown on the lunar surface after it was ejected from the propulsion module. Tucked inside is Pragyan, a small, six-wheeled rover that will deploy from the lander by rolling down a ramp. Vikram used its onboard thrusters to carefully orient itself as it approached the lunar surface, and it slowly throttled down its engines for a touchdown just after 6 p.m. IST (8:30 a.m. ET) as applause erupted from the mission control room.

The Indian Space Research Organization, or ISRO, later confirmed it had established two-way communication with the spacecraft and shared the first images of the surface captured during the lander’s final descent. The lander, which weighs about 1,700 kilograms (3,748 pounds), and the 26-kilogram (57.3-pound) rover are packed with scientific instruments, prepared to capture data to help researchers analyze the lunar surface and deliver fresh insights into its composition.

Dr. Angela Marusiak, an assistant research professor at the University of Arizona’s Lunar and Planetary Laboratory, said she’s particularly excited that the lunar lander includes a seismometer that will attempt to detect quakes within the moon’s interior. Studying how the moon’s inner layers move could be key information for future endeavors on the lunar surface, Marusiak said. “You want to make sure that any potential seismic activity wouldn’t endanger any astronauts,” Marusiak said. “Or, if we were to build structures on the moon, that they would be safe from any seismic activity.” The lander and rover are expected to function for about two weeks on the moon’s surface. The propulsion module will remain in orbit, serving as a relay point for beaming data back to Earth.

2 notes


Text

India Mobile Components Market Size Powering the Nation’s Smartphone Revolution

The India Mobile Components Market is witnessing rapid expansion as the country becomes a critical hub for smartphone manufacturing and assembly. Backed by the government’s “Make in India” initiative and rising domestic consumption, the market is set for robust growth. According to Market Research Future, the India Mobile Components Market is forecasted to grow steadily through 2032, driven by technological advancements, policy support, and the surging penetration of mobile devices in urban and rural India alike.

Market Overview

India's mobile ecosystem has undergone a significant transformation in the past decade. As smartphone adoption increases across demographics, the demand for components such as semiconductors, batteries, camera modules, sensors, processors, and displays has intensified. Major global and domestic OEMs (Original Equipment Manufacturers) are investing in local component manufacturing, fueling market growth and reducing dependency on imports.

The market growth is also bolstered by factors such as:

Rising disposable income and digital literacy

Growth in e-commerce and fintech

5G deployment and IoT expansion

Localization of supply chains and incentives for domestic manufacturing

Segmentation Analysis

By Component Type:

Display Panels

Camera Modules

Processors

Sensors (Proximity, Gyroscope, Ambient Light, etc.)

Memory Chips (RAM, ROM, Flash)

Power Management ICs

Batteries and Charging Modules

Connectors and Others

By Application:

Smartphones

Feature Phones

Tablets and Wearables

Accessories

Key Market Trends

1. Localization of Semiconductor Supply Chain

India’s growing focus on becoming a semiconductor powerhouse has brought attention to localizing chip design and fabrication. This strategic shift is expected to drastically reduce component costs and import dependencies.

2. Rising Demand for Advanced Camera Modules

With consumers seeking DSLR-like quality in smartphones, OEMs are increasingly integrating high-resolution and multi-lens camera modules, propelling growth in optics-related mobile components.

3. Integration of AI and ML at Chip Level

Smartphones powered by AI-enhanced processors for real-time voice recognition, camera optimization, and gaming experience are boosting the demand for high-performance chipsets and related components.

4. Growth of Foldable & Flexible Displays

Next-gen mobile devices with flexible and foldable screens are creating a new segment in display panel components, with India emerging as a strong demand market.

5. Rise in Refurbished and Affordable Smartphones

A growing market for refurbished phones has also generated parallel demand for cost-effective, high-quality replacement components, expanding the aftermarket segment.

Segment Insights

Display Panels account for a significant portion of the component cost, with AMOLED and OLED gaining traction.

Processor Units from brands like MediaTek and Qualcomm dominate the mid and high-tier device segments.

Camera modules, especially those with AI-powered features and enhanced sensors, are rapidly evolving in terms of megapixel capacity and functionality.

End-User Insights

Mobile OEMs like Xiaomi, Samsung, Vivo, and Apple are heavily investing in India’s component ecosystem through partnerships and contract manufacturing.

Component Suppliers including Foxconn, Tata Electronics, and Dixon Technologies are enhancing capabilities to meet demand from both domestic and international players.

Telecom Operators are supporting the ecosystem through bundled device sales and mobile upgrade schemes, indirectly boosting component consumption.

Trending Report Highlights

Stay informed with these insightful market research reports related to electronics, sensors, and mobile technologies:

Low Horsepower AC Motor Market

Micro Location Technology Market

Mobile Computer Market

Modular Switch Market

Multi Axis Sensor Market

Multimedia Digital Living Room Device Market

Ceramic Capacitors Market

Communication Logic Integrated Circuit Market

Fire Alarm System Market

Flexible And Printed Electronic Market

3D Printing For Prototyping Market

Electrical And Electronic Test Equipment Market

Electronic Paper Market

Embedded Sim Market

0 notes

Text

Strategic Insights into the IoT Sensors Market

Meticulous Research®—a leading global market research company, published a research report titled, ‘IoT Sensors Market by Offering (Image Sensors, RFID Sensors, Biosensors, Humidity Sensors, Optical Sensors, Others), Technology (Wired, Wireless), Sector (Manufacturing, Retail, Consumer Electronics, Others), & Geography - Global Forecast to 2031.’

According to this latest publication from Meticulous Research®, in terms of value, the IoT sensors market is projected to reach $71.6 billion by 2031, at a CAGR of 23.9% during the forecast period. Also, in terms of volume, the IoT sensors market is projected to reach 5,937,981,126 units by 2031, at a CAGR of 25.2% during the forecast period. The growth of the IoT sensors market is driven by the increasing investments in Industry 4.0 technologies, government initiatives supporting the adoption of IoT devices, and the increasing integration of IoT sensors into connected and wearable devices. However, data security and privacy concerns restrain the growth of this market.

The growing use of IoT sensors for predictive maintenance and the proliferation of smart cities are expected to create growth opportunities for the players operating in the IoT sensors market. However, the high initial investment required for IoT ecosystem implementation is a major challenge for market growth. Additionally, the rising adoption of industrial robots and increasing integration of artificial intelligence into IoT sensors are key trends in the market.

Meticulous Research® has segmented this market based on offering, technology, sector, and geography for efficient analysis. The study also evaluates industry competitors and analyzes the market at the regional and country levels.

Based on offering, the IoT sensors market is segmented into humidity sensors, temperature sensors, proximity sensors, pressure sensors, image sensors, gas sensors, level sensors, accelerometer sensors, flow sensors, biosensors, RFID sensors, optical sensors, and other IoT sensors. In 2024, the image sensors segment is expected to account for the largest share of the IoT sensors market. The large market share of this segment is attributed to the rising demand for image sensors in mobile devices and increasing developments by market players in this segment. Image sensors offer several advantages, such as increased sensitivity, reduced dark noise resulting in higher image fidelity, enhanced pixel well depth, and lower power consumption. These sensors are used in both analog and digital electronic imaging devices, including digital cameras, camera modules, mobile phones, optical mouse devices, medical imaging equipment, and night vision devices like thermal imaging systems, as well as in radar, sonar, and various other imaging and sensing technologies. This segment is also projected to register the highest CAGR during the forecast period.

Based on technology, the IoT sensors market is segmented into wired technology and wireless technology. In 2024, the wireless technology segment is expected to account for the larger share of the IoT sensors market. The large market share of this segment is attributed to the increasing use of wireless sensor networks for various applications and the increasing adoption of IoT devices across various sectors. The demand for wireless IoT sensors is increasing as they require less maintenance and power. Additionally, these sensors can run IoT applications for an extended period without requiring battery replacement or recharging. Moreover, this segment is also projected to register the highest CAGR during the forecast period.

Based on sector, the IoT sensors market is segmented into agriculture, manufacturing, retail, energy & utilities, oil & gas, transportation & logistics, healthcare, consumer electronics, and other sectors. In 2024, the manufacturing segment is expected to account for the largest share of the IoT sensors market. The large market share of this segment is attributed to the supportive government initiatives aimed at promoting the adoption of IoT devices in manufacturing, the rising adoption of smart manufacturing across developing countries, and the increasing number of smart factories. Integrating sensor technologies with IoT devices enables manufacturers to optimize production. IoT technology ensures the security, efficient movement, and precise control of materials and end products throughout the entire manufacturing, distribution, warehousing, and storage process.

However, the healthcare segment is projected to register the highest CAGR during the forecast period. The growth of this segment is attributed to the increasing developments by market players, increasing integration of IoT sensors in medical equipment, and growing demand for IoT devices for patient monitoring applications. The technology benefits multiple healthcare stakeholders and makes telemedicine, patient monitoring, medication management, and imaging more effective. IoT sensors are integrated with medical equipment to collect, share, and analyze data to measure the probable outcome of the preventive treatment.

Based on geography, the IoT sensors market is segmented into North America, Europe, Asia-Pacific, Latin America, and the Middle East & Africa. In 2024, Asia-Pacific is expected to account for the largest share of the IoT sensors market. The large market share of this segment is attributed to the surging demand for smart sensor-enabled wearable devices, growing technological advancements in industrial sensors, increasing adoption of industrial robots, the advent of Industry 4.0, and increasing adoption of IoT devices in the manufacturing and healthcare industries. The Asia-Pacific region presents several opportunities for adopting IoT sensors due to the presence of a massive manufacturing sector. Japan, China, India, Singapore, and South Korea are investing a significant portion of their GDP into the healthcare and manufacturing sectors, which is driving the market growth. Moreover, this region is also projected to register the highest CAGR during the forecast period.

Key Players

The key players operating in the IoT sensors market are Texas Instruments Incorporated (U.S.), TE Connectivity Ltd. (Switzerland), STMicroelectronics N.V. (Switzerland), OMRON Corporation (Japan), Honeywell International Inc. (U.S.), Murata Manufacturing Co., Ltd. (Japan), Bosch Sensortec GmbH (Germany), Analog Devices, Inc. (U.S.), NXP Semiconductors N.V. (Netherlands), Infineon Technologies AG (Germany), Broadcom Inc. (U.S.), and TDK Corporation (Japan).

Download Sample Report Here @ https://www.meticulousresearch.com/download-sample-report/cp_id=5738

Contact Us: Meticulous Research® Contact Sales- +1-646-781-8004 Connect with us on LinkedIn- https://www.linkedin.com/company/meticulous-research

#IoTSensors#SmartSensors#ConnectedDevices#WirelessSensors#SensorTechnology#IndustrialIoT#SmartHomes#AutomotiveSensors#IoTApplications#MarketForecast

0 notes

Text

Uncooled Infrared Detector Camera Detector Market: Emerging Trends and Business Opportunities 2025–2032

Uncooled Infrared Detector Camera Detector Market, Trends, Business Strategies 2025-2032


Our comprehensive Market report is ready with the latest trends, growth opportunities, and strategic analysis https://semiconductorinsight.com/download-sample-report/?product_id=103404

MARKET INSIGHTS

The global Uncooled Infrared Detector Camera Detector Market size was valued at US$ 1.34 billion in 2024 and is projected to reach US$ 2.23 billion by 2032, at a CAGR of 6.6% during the forecast period. The U.S. market accounted for approximately 35% of global revenue in 2024, while China is expected to grow at the fastest rate among major economies.
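As a quick sanity check on the headline figures, the compound annual growth rate can be recomputed from the two endpoint values. The sketch below assumes eight full years of compounding between 2024 and 2032 (the report does not spell out its convention), and the function names `cagr` and `project` are illustrative, not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report (US$ billion); the 8-year span is an assumption.
YEARS = 2032 - 2024
implied_rate = cagr(1.34, 2.23, YEARS)
projected_value = project(1.34, 0.066, YEARS)

print(f"implied CAGR: {implied_rate:.1%}")
print(f"projected 2032 value: US$ {projected_value:.2f} billion")
```

Under that assumption the quoted figures are mutually consistent: the implied rate rounds to 6.6%, and compounding US$ 1.34 billion at 6.6% for eight years lands at roughly US$ 2.23 billion.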

Uncooled infrared detectors are thermal imaging components that operate without cryogenic cooling systems, making them more cost-effective and portable than cooled alternatives. These detectors convert infrared radiation into electrical signals through microbolometer or pyroelectric technologies, enabling applications in night vision, temperature measurement, and surveillance. Key product segments include near infrared and short wave (growing at 8.1% CAGR), mid-wave infrared, and long wave infrared detectors.

Market growth is being driven by increasing defense spending, with global military budgets exceeding USD 2.2 trillion in 2024, and expanding industrial automation applications. However, the industry faces challenges from the high development costs of advanced detector arrays and competition from emerging thermal imaging technologies. Major players like Teledyne FLIR and L3Harris Technologies are investing heavily in next-generation detectors, with FLIR launching its Boson+ thermal camera module in Q1 2024 featuring 640×512 resolution at 60Hz refresh rates.

List of Key Uncooled Infrared Detector Manufacturers

Teledyne FLIR LLC (U.S.)

L3Harris Technologies (U.S.)

Nippon Avionics (Japan)

Hanwha Techwin (South Korea)

Axis Communications (Sweden)

Current Corporation (U.S.)

ZHEJIANG DALI TECHNOLOGY (China)

Fluke Corporation (U.S.)

General Dynamics Mission Systems (U.S.)

Excelitas Technologies (U.S.)

Raytheon Technologies Corporation (U.S.)

Seek Thermal (U.S.)

Testo SE & Co. (Germany)

ULIRVISION Technology (China)

Guide Infrared (China)

Segment Analysis:

By Type

Long Wave Infrared Segment Dominates Due to Its Widespread Use in Industrial and Military Applications

The market is segmented based on type into:

Near Infrared and Short Wave

Mid-Wave Infrared

Long Wave Infrared

By Application

Industrial Sector Leads the Market Driven by Thermal Imaging for Predictive Maintenance

The market is segmented based on application into:

Industrial

Automotive Industry

Government and Defense

Healthcare Industry

Residential

By Technology

Vanadium Oxide-based Detectors Hold Maximum Share Owing to High Performance in Extreme Conditions

The market is segmented based on technology into:

Vanadium Oxide (VOx)

Amorphous Silicon (a-Si)

Other Emerging Technologies

By End User

Commercial Sector Shows Strong Growth Potential with Expanding Surveillance Applications

The market is segmented based on end user into:

Commercial

Military & Defense

Industrial

Healthcare

Regional Analysis: Uncooled Infrared Detector Camera Detector Market

North America

The North American market for uncooled infrared detector camera detectors is driven by strong demand from defense, industrial, and automotive sectors. The U.S. accounts for the majority of regional growth, with defense modernization initiatives and increased adoption of thermal imaging in security and surveillance applications. The U.S. Department of Defense has consistently increased funding for thermal imaging technologies, including through programs like the Next Generation Vision System. Commercial applications, particularly in building diagnostics and industrial automation, are also expanding due to regulatory pressures for energy efficiency and worker safety. Major players like Teledyne FLIR LLC and L3Harris Technologies dominate the competitive landscape, focusing on high-resolution, low-power solutions.

Europe

Europe’s market benefits from stringent safety regulations and sustainability initiatives across industries. The EU’s push for advanced industrial automation under Industry 4.0 has accelerated demand for infrared detectors in predictive maintenance applications. Germany and France lead in manufacturing adoption, while the U.K. shows strong growth in defense and aerospace procurement. The region also sees innovation in healthcare applications, notably in non-contact temperature monitoring post-pandemic. However, compliance with EU export controls on thermal imaging technology restricts some market expansion. Companies like ULIS (France) and Xenics (Belgium) hold significant market shares.

Asia-Pacific

This region is experiencing the fastest growth globally, fueled by China’s massive production capabilities and India’s expanding security infrastructure. China accounts for over 40% of Asia-Pacific demand, driven by military modernization and smart city projects. Japan and South Korea focus on high-precision detectors for electronics manufacturing and automotive night vision systems. Cost-competitive Chinese manufacturers are increasingly capturing market share through aggressive pricing, though concerns remain about quality consistency. Emerging applications in consumer electronics (such as smartphone thermal cameras) present new growth avenues across the region.

South America

Market growth in South America remains constrained by economic instability but shows promise in specialized segments. Brazil leads in oil & gas pipeline monitoring applications, while Argentina sees increasing border security deployments. The lack of local manufacturing means most products are imported, making pricing sensitive to currency fluctuations. However, mining safety regulations and growing industrial automation investments in countries like Chile are creating steady demand. The region also shows potential for agricultural and firefighting applications, though adoption rates lag behind global averages.

Middle East & Africa

Defense and critical infrastructure protection dominate demand in this region. Gulf states like Saudi Arabia and the UAE are major buyers of high-end systems for military and oil facility security. Africa’s market is nascent but growing, particularly for border surveillance and wildlife conservation applications. Low awareness of commercial applications and high costs relative to regional purchasing power hinder broader adoption. Recent partnerships between global manufacturers and local distributors aim to improve market penetration through financing options and localized support services.

Market Dynamics:

Urban infrastructure projects worldwide are incorporating thermal imaging into smart city frameworks at an unprecedented rate. Municipalities are deploying networked infrared cameras for traffic monitoring, fire detection, and public safety, with average project sizes exceeding $5 million in major metropolitan areas. These systems provide 24/7 monitoring capabilities unaffected by lighting conditions, demonstrating 40% higher incident detection rates compared to conventional CCTV. The integration of AI analytics with thermal imaging has further enhanced functionality, enabling real-time alerts for abnormal heat patterns. With smart city investments projected to reach $2.5 trillion globally by 2025, this represents a transformative opportunity for market expansion.

Recent breakthroughs in microelectromechanical systems (MEMS) fabrication are poised to significantly lower production costs while improving detector performance. New manufacturing techniques have reduced pixel sizes below 12μm while maintaining high sensitivity, enabling higher resolutions without proportionally increasing costs. Several manufacturers have successfully commercialized wafer-level packaging for infrared detectors, cutting assembly expenses by nearly 60%. These advancements are expected to bring entry-level thermal camera prices below $500 within three years, potentially opening mass consumer markets previously inaccessible due to cost barriers.

The infrared detector market faces growing competition from emerging sensing technologies that offer overlapping functionality. Millimeter-wave radar systems, for instance, have achieved comparable detection ranges for automotive applications at 30% lower costs. Similarly, advanced LiDAR systems now incorporate thermal sensing capabilities, creating convergence in the sensor market. This technological overlap has led to price erosion in certain segments, with average selling prices declining 7-10% annually. Manufacturers must continually innovate to differentiate their offerings, requiring substantial R&D investments that can exceed 15% of revenue for leading players.

The specialized nature of infrared detector manufacturing creates supply chain risks that can disrupt production schedules. Key components such as vanadium oxide sensors and germanium lenses face periodic shortages, with lead times extending to 9-12 months during peak demand periods. The market experienced severe disruptions during recent global events, with some manufacturers reporting 40% reductions in production capacity due to component shortages. These vulnerabilities are exacerbated by the concentrated supplier base, where three companies control over 70% of critical raw material supplies.

The market is highly fragmented, with a mix of global and regional players competing for market share. To Learn More About the Global Trends Impacting the Future of Top 10 Companies https://semiconductorinsight.com/download-sample-report/?product_id=103404

FREQUENTLY ASKED QUESTIONS:

What is the current market size of Global Uncooled Infrared Detector Camera Detector Market?

Which key companies operate in this market?

What are the key growth drivers?

Which region dominates the market?

What are the emerging trends?

Related Reports:

https://semiconductorblogs21.blogspot.com/2025/07/gas-scrubbers-for-semiconductor-market_14.html

https://semiconductorblogs21.blogspot.com/2025/07/sequential-linker-market-economic.html

https://semiconductorblogs21.blogspot.com/2025/07/lever-actuator-market-swot-analysis-and.html

https://semiconductorblogs21.blogspot.com/2025/07/probe-station-micropositioners-market.html

https://semiconductorblogs21.blogspot.com/2025/07/gesture-recognition-sensors-market.html

https://semiconductorblogs21.blogspot.com/2025/07/multi-channel-piezo-driver-market.html

https://semiconductorblogs21.blogspot.com/2025/07/video-sync-separator-market-market.html

https://semiconductorblogs21.blogspot.com/2025/07/tv-tuner-ic-market-investment-analysis.html

https://semiconductorblogs21.blogspot.com/2025/07/single-channel-video-encoder-market.html

https://semiconductorblogs21.blogspot.com/2025/07/sic-ion-implanters-market-revenue.html

https://semiconductorblogs21.blogspot.com/2025/07/quad-flat-no-lead-packaging-qfn-market.html

https://semiconductorblogs21.blogspot.com/2025/07/ntc-thermistor-chip-market-industry.html

https://semiconductorblogs21.blogspot.com/2025/07/low-dropout-ldo-linear-voltage.html

https://semiconductorblogs21.blogspot.com/2025/07/logic-test-probe-card-market-strategic.html

https://semiconductorblogs21.blogspot.com/2025/07/led-display-module-market-size-share.html

https://semiconductorblogs21.blogspot.com/2025/07/industrial-led-lighting-market-trends.html

CONTACT US: City Vista, 203A, Fountain Road, Ashoka Nagar, Kharadi, Pune, Maharashtra 411014, +91 8087992013

0 notes

Text

Analyzing the Structural Framework of AI Agents and Their Functions

In recent years, artificial intelligence (AI) agents have become increasingly integral to various sectors, driving advancements in automation, decision-making, and user interaction. These AI entities possess structural frameworks that dictate their operational efficiencies and functional capabilities. Understanding the architecture underpinning AI agents is crucial for researchers and practitioners aiming to harness their full potential. This article dissects the core architecture of AI agent frameworks and evaluates their functional capabilities, providing insights into the design philosophies that govern their efficacy.

Dissecting the Core Architecture of AI Agent Frameworks

The architecture of AI agents can be broadly categorized into three primary components: perception, reasoning, and action. The perception component encompasses the sensory inputs (e.g., data from cameras, microphones, or other sensors) that allow the agent to collect information from its environment. This sensory data is critical as it forms the basis for how the agent interprets the world around it. A well-designed perception module enhances an AI agent's situational awareness, enabling it to respond effectively to dynamic conditions.

The reasoning component is where the AI agent processes the information gathered through perception. This is often achieved through algorithms that incorporate various techniques, including machine learning, rule-based systems, and probabilistic reasoning. The reasoning engine assesses available data, weighs potential actions, and formulates a course of action based on predefined objectives. This layer is pivotal, as it determines the agent's ability to make informed decisions, adapt to new scenarios, and learn from past experiences, which are essential traits for high-functioning AI systems.

Lastly, the action component translates the decisions made by the reasoning engine into physical or virtual actions. This can involve generating spoken responses, controlling robotic limbs, or triggering software functions. The design of this component is crucial as it ensures the AI agent can execute tasks efficiently and accurately. The seamless integration of these three components (perception, reasoning, and action) forms the backbone of AI agent architectures, enabling them to operate effectively in complex environments.
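As a rough illustration of the perception, reasoning, and action split described above, the sketch below wires the three stages into a single loop. Everything in it is a hypothetical example rather than a real framework: the thermostat scenario, the class and method names, and the rule-based policy were all chosen only to keep the example small.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Percept:
    """Hypothetical structured reading produced by the perception stage."""
    source: str
    value: float


class ThermostatAgent:
    """Toy agent (an assumption for illustration) that holds a target temperature."""

    def __init__(self, target: float, tolerance: float = 0.5) -> None:
        self.target = target
        self.tolerance = tolerance
        self.log: List[str] = []

    def perceive(self, raw: Dict[str, float]) -> Percept:
        # Perception: turn raw sensor input into a structured percept.
        return Percept(source="thermometer", value=raw["temperature"])

    def reason(self, percept: Percept) -> str:
        # Reasoning: a simple rule-based policy; a learned or probabilistic
        # model could replace this method without touching the other stages.
        if percept.value < self.target - self.tolerance:
            return "heat"
        if percept.value > self.target + self.tolerance:
            return "cool"
        return "idle"

    def act(self, decision: str) -> str:
        # Action: here the command is only recorded; a real agent would
        # drive an actuator or trigger a software function.
        self.log.append(decision)
        return decision

    def step(self, raw: Dict[str, float]) -> str:
        # One pass through the perceive -> reason -> act pipeline.
        return self.act(self.reason(self.perceive(raw)))


agent = ThermostatAgent(target=21.0)
decisions = [agent.step({"temperature": t}) for t in (18.0, 21.2, 24.5)]
print(decisions)  # ['heat', 'idle', 'cool']
```

Keeping each stage behind its own method means one stage can be swapped, for example replacing the rules with a trained model, while the rest of the loop stays unchanged.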

Evaluating Functional Capabilities in AI Agent Design

Functional capabilities in AI agents are evaluated based on their performance in specific tasks, adaptability, and user interaction. One of the most important aspects of an AI agent's functionality is its ability to handle a variety of tasks autonomously. This includes processing information, learning from interactions, and executing commands with minimal human intervention. The effectiveness of an AI agent in completing tasks is often measured by its accuracy and speed, which directly correlate with its underlying algorithms and processing capabilities.

Adaptability is another critical functional capability, as it allows AI agents to evolve in response to changing environments and user needs. An adaptable AI agent can learn new patterns and improve its performance over time through techniques such as reinforcement learning. This capacity for growth is essential, particularly in fields like healthcare and finance, where data and requirements can shift rapidly. Evaluating an AI agent's adaptability involves assessing how quickly and effectively it can adjust its strategies based on real-time feedback and new information.

User interaction is also a fundamental aspect of functional capabilities. AI agents are often designed to interact with humans, and the quality of these interactions can significantly influence their usability and acceptance. This aspect encompasses natural language processing, emotional recognition, and user-friendly interfaces. Effective user interaction can enhance the overall experience, allowing AI agents to better understand user preferences and respond appropriately. Evaluating this facet involves analyzing user feedback, engagement metrics, and overall satisfaction with the AI agent's capabilities.

The structural framework of AI agents is a multifaceted blend of perception, reasoning, and action, each contributing to the agent's overall functionality. By dissecting these components, we can better understand how AI agents operate and their potential applications across industries. Evaluating their functional capabilities, including task performance, adaptability, and user interaction, reveals the strengths and limitations inherent in current designs. As technology continues to evolve, ongoing analysis and refinement of AI agent frameworks will be crucial in unlocking their full potential and ensuring they meet the demands of an increasingly complex world.
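The article notes that task performance is often measured by accuracy and speed. A minimal sketch of such a measurement might look like the following; the `evaluate` helper, the stand-in `threshold_agent`, and the labeled cases are all hypothetical and exist only to show the shape of the calculation.

```python
import time
from typing import Callable, Iterable, Tuple


def evaluate(agent: Callable[[float], str],
             cases: Iterable[Tuple[float, str]]) -> Tuple[float, float]:
    """Return (accuracy, mean latency in seconds) over labeled cases."""
    correct = 0
    total = 0
    elapsed = 0.0
    for observation, expected in cases:
        start = time.perf_counter()
        decision = agent(observation)
        elapsed += time.perf_counter() - start
        correct += decision == expected  # True counts as 1
        total += 1
    if total == 0:
        raise ValueError("no test cases supplied")
    return correct / total, elapsed / total


def threshold_agent(temperature: float) -> str:
    # Hypothetical stand-in for the agent under evaluation.
    return "heat" if temperature < 20.0 else "idle"


# Made-up labeled cases; the last one is deliberately misclassified.
cases = [(15.0, "heat"), (19.9, "heat"), (22.0, "idle"), (20.0, "heat")]
accuracy, latency = evaluate(threshold_agent, cases)
print(f"accuracy={accuracy:.2f}")  # accuracy=0.75
```

Adaptability and user interaction are harder to reduce to a single number; this sketch covers only the accuracy and speed part of the evaluation.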

0 notes

Text

Drone Solar Panel Inspection

Boosting Solar Efficiency with Drone Solar Panel Inspection – Powered by Equinox’s Drones

As the demand for clean energy continues to rise, solar power installations are becoming a critical component of global energy infrastructure. However, maintaining optimal performance of solar panels across vast solar farms poses unique challenges. Traditional inspection methods can be time-consuming, labor-intensive, and limited in accuracy. That’s where Equinox’s Drones steps in with advanced drone solar panel inspection services.

Why Drone Solar Panel Inspection Matters

Regular inspections are essential to ensure that solar panels are functioning at maximum capacity. Factors such as dust accumulation, microcracks, shading, and wiring issues can cause panel degradation and reduce energy output. A drone solar panel inspection identifies these issues early, enabling timely maintenance and minimizing energy losses.

Faster, Safer, and More Accurate

Using aerial drones equipped with high-resolution and thermal imaging cameras, Equinox’s Drones provides comprehensive inspections without the need for scaffolding, manual walking, or shutdowns. Our drones can cover large-scale installations in a fraction of the time compared to traditional methods, reducing operational costs and eliminating safety risks.

Thermal Imaging for Fault Detection

One of the most powerful tools in our inspection arsenal is thermal imaging. Drones detect anomalies like hotspots, which indicate faulty panels or connections. These thermal signatures are invisible to the naked eye but critical in spotting underperforming modules or potential fire hazards. Our detailed thermal reports provide exact GPS coordinates, helping maintenance teams act swiftly.
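To make the idea of a hotspot concrete, here is a minimal sketch of flagging unusually hot cells in a grid of temperature readings. It is not Equinox's Drones' actual method: the `find_hotspots` function, the 10 °C margin over the median, and the sample readings are all assumptions chosen for illustration, and mapping a flagged cell to GPS coordinates would need georeferencing that is not shown.

```python
from statistics import median
from typing import List, Tuple


def find_hotspots(grid: List[List[float]],
                  delta_c: float = 10.0) -> List[Tuple[int, int, float]]:
    """Return (row, col, temperature) for cells hotter than median + delta_c.

    The median serves as a baseline for healthy cells; delta_c is an
    assumed margin, not an industry threshold.
    """
    baseline = median(t for row in grid for t in row)
    return [
        (r, c, t)
        for r, row in enumerate(grid)
        for c, t in enumerate(row)
        if t - baseline > delta_c
    ]


# Made-up readings in degrees Celsius for a 3x3 patch of a panel.
readings = [
    [41.0, 42.5, 41.8],
    [42.1, 63.4, 41.5],
    [41.9, 42.0, 55.2],
]
hotspots = find_hotspots(readings)
print(hotspots)  # [(1, 1, 63.4), (2, 2, 55.2)]
```

Comparing each cell to the median rather than to a fixed temperature keeps the check relative to the rest of the array, so a uniformly warm panel on a hot day is not flagged as a whole.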

High-Resolution Visual Inspection

In addition to thermal data, Equinox’s Drones captures high-resolution RGB imagery for visual inspections. This allows for detecting visible issues like broken glass, bird droppings, dirt buildup, or structural shading. These visual cues, combined with thermal data, offer a complete picture of each panel’s health.

Ideal for All Scales of Operation

Whether it’s a residential rooftop installation, a commercial solar array, or a utility-scale solar farm, our drone solar panel inspection services are scalable to meet every need. We provide customized inspection packages based on your site size, layout complexity, and inspection frequency.

Key Benefits of Drone Solar Inspections by Equinox’s Drones

Speed & Efficiency: Inspect thousands of panels in a single flight.

Safety: No need for climbing, scaffolding, or manual panel checks.

Accuracy: Identify precise fault locations using thermal and visual data.

Documentation: Receive comprehensive inspection reports with imagery and actionable insights.

Reduced Downtime: Inspections can be conducted without interrupting operations.

Helping You Maximize ROI

At Equinox’s Drones, we understand that every percentage of lost efficiency means lost revenue. Our drone inspections ensure that every panel performs at its best. By identifying faults early, you can reduce downtime, extend panel lifespan, and maximize return on investment (ROI).

Ready to Take Your Solar Efficiency to the Next Level?

Join the growing number of solar developers, EPC contractors, and asset managers who trust Equinox’s Drones for accurate, fast, and cost-effective drone solar panel inspection services. Let us help you protect your investment and improve the reliability of your clean energy systems.

#drone#drone services#solar energy#solar power#wind turbines#drone technology#drone land surveying#drone inspection services

0 notes
