#AI flowchart generator


Text

Napkin.ai : Transforming Text into Visuals | futuretiative | Napkin AI

Stop wasting time drawing diagrams! Napkin.ai automates the process, turning your text into professional flowcharts in seconds. See how it can simplify your workflow.

Napkin.ai is a tool that focuses on transforming text into visual representations, primarily flowcharts and diagrams. Here's a summary of its key aspects:

Key Features and Strengths:

Text-to-Visual Conversion:

Its core functionality is the ability to generate flowcharts and other visuals from textual input. This can save users significant time and effort.

It handles various text inputs, from simple lists to detailed descriptions.
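To make the "simple list in, flowchart out" idea concrete, here is a minimal sketch in Python. It is not how Napkin.ai works internally (the post does not describe that); it only assumes the input is already an ordered list of steps and emits Graphviz DOT text that any DOT renderer can draw. The function name `steps_to_dot` and the sample steps are made up for illustration.

```python
def steps_to_dot(steps, graph_name="flow"):
    """Turn an ordered list of step descriptions into Graphviz DOT text.

    Illustrative only: a linear chain of boxes, one per step.
    """
    lines = [f"digraph {graph_name} {{", "    node [shape=box];"]
    # One node per step, labelled with the step text (quotes escaped for DOT).
    for i, step in enumerate(steps):
        label = step.replace('"', '\\"')
        lines.append(f'    s{i} [label="{label}"];')
    # Connect each step to the one that follows it.
    for i in range(len(steps) - 1):
        lines.append(f"    s{i} -> s{i + 1};")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(steps_to_dot(["Collect requirements", "Draft design", "Review"]))
```

Anything beyond a straight chain (branches, loops, free-form descriptions) needs real text understanding, which is the part an AI tool is supposed to supply.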

User-Friendly Interface:

Users generally find the interface intuitive and easy to use, minimizing the learning curve.

Customization Options:

Napkin.ai offers customization features, allowing users to adjust the appearance of their visuals with colors, styles, and layouts.

Efficiency and Speed:

The tool is praised for its quick processing times, efficiently converting text into visuals.

Collaboration features:

Collaboration on visuals, including commenting and real-time editing, is a strong feature.

Limitations and Considerations:

Language Limitations:

Currently, the tool performs best with English text.

Accuracy:

Like any AI tool, it can make mistakes, so it is important to review each generated visual.

Feature limitations:

Some users say it is really a text-to-template converter and that it can struggle with more abstract requests.

Development Stage:

As with many AI tools, it is in constant development, so features and abilities are likely to change.

Overall:

Napkin.ai appears to be a valuable tool for individuals and teams who need to create flowcharts and diagrams quickly.

Its ability to automate the conversion of text to visuals can significantly improve productivity.

It is important to remember that it is an AI tool, and that reviewing the output is always important.

In essence, Napkin.ai is a promising tool for simplifying data visualization, particularly for those who need to quickly create flowcharts and diagrams from text.

Visit the napkin.ai website to learn more


#Efficiency#AItools#NapkinAI#ProjectManagement#ProjectManagers#WorkflowOptimization#BusinessTools#ProcessMapping#Agile#Scrum#AItechnology#ArtificialIntelligence#FutureOfWork#TechInnovation#MindBlown#AIArt#DigitalTools#Workflow#ProductivityHacks#Diagramming#SaveTime#Automation#TechTips#innovation#ai#tech#technology#Napkin.ai#AI flowchart generator#text to flowchart

1 note


Text

The RK series and deviancy (theory + analysis)

I have been wanting to talk about this for some time, because it's kinda one of the biggest DBH mysteries (aside from rA9) and I think there are many many hints in the game about why deviancy came to be and how. And I've had this theory that deviancy was something that started with the RK series, specifically with Markus, so I'm gonna use the hints I've found in the game to explain why I believe this. I also gotta note I'm really new to the fandom, so maybe this has already been talked about thousands of times before (maybe even debunked), but that's a risk I'm willing to take.

First and foremost, I will start with something that I talked about in another post - namely the significance of the number 28. You can see Adam Williams talk about it here (at 1:04:28), too.

Basically, the number 28 is used in many places throughout the game, and according to Adam, if players found all references to that number, they will understand what the significance of that number is.

And speaking of 28, I noticed that 2028 is the year when Kamski left Cyberlife, but not before creating the Zen Garden and Amanda.

There is a whole series of questions Connor can confront Amanda with during "Last Chance, Connor" (which is the 28th chapter with a flowchart. Maybe cuz he is asking important questions here, just saying).

Connor: Why did Kamski leave CyberLife? What happened?
Amanda: It’s an old story, Connor. It doesn’t pertain to your investigation.

Connor: I saw a photo of Amanda at Kamski’s place… She was his teacher…
Amanda: When Kamski designed me, he wanted an interface that would look familiar… That’s why he chose his former mentor. What are you getting at?

Connor: Did Kamski design this place?
Amanda: He created the first version. It’s been improved significantly since then. Why do you ask?

Amanda Stern died in 2027, which suggests that AI Amanda and the Zen Garden were both created after this and before Kamski's departure from Cyberlife in 2028. Yet somehow, this information is classified to some extent - Amanda doesn't deny, but she gets defensive and doesn't want to elaborate any further. Of course, she might be acting this way because Connor is slowly getting too defiant, but still, it's kinda striking how the player has the option to ask so many questions - questions that seem to unsettle Connor a lot for a reason that is not explicitly explained, yet doesn't get a clear answer.

It gives the impression that Connor is truly getting at something with them, but it's never said what exactly.

Connor: I’m not a unique model, am I? How many Connors are there?
Amanda: I don’t see how that question pertains to your investigation.

Connor: Where does CyberLife stand in all this? What do they really want?
Amanda: All CyberLife wants is to resolve the situation and keep selling androids.

Connor: You didn’t tell me everything you know about deviants, did you?
Amanda: I expect you to find answers, Connor. Not ask questions.

Now, Connor asks how many "Connors" (meaning RKs) there are after seeing that Markus is an RK model, too. That's news to Connor - for some reason, he's never been informed about the existence of any other RKs. But why?

Well, because the RK-line was a secret project, and apparently, there are no other RK androids left aside from Markus - if there were, Connor would know of their existence, cuz they would be roaming around. What does the game say about Markus?

Markus is a prototype, gifted by Elijah Kamski to his friend and celebrated painter Carl Manfred after Manfred lost the use of his legs. He was initially developed as part of a CyberLife secret program aimed at elaborating a new generation of autonomous androids.

That last sentence, about the new generation of autonomous androids, raises one question. How are these highly autonomous androids, like Connor, controlled, considering that they are supposed to be independent and not wait around for highly specific orders? Well, through the Zen Garden and Amanda - both of which were created sometime between 2027 and 2028. And if Markus was originally supposed to be part of that line (that basically got put on hold for 10 years), that places his creation around 2028 as well.

In 2028, Elijah Kamski was our Man of the Century. [...] Shortly after, Kamski had disappeared. Ousted as CEO of CyberLife and living in obscurity outside the media glare, the Man of the Century has left the very world that he recreated. [...] Yet at the peak of CyberLife’s powers – when the company was approaching a $500bn valuation – rumors emerged that Kamski disagreed with his shareholders over strategy. He later departed under mysterious circumstances.

So, he was "ousted" and he likely disagreed with his shareholders. But what do these shareholders want?

Russia’s interest in the North Pole has intensified with the recent discovery of precious minerals trapped in the frozen ice, many of which are used in synthesizing Thirium. [...] President Warren, however, recently torpedoed the notion: “It’s simple. Russia has no business in the Arctic. If the Kremlin doesn’t understand that, we will make them understand.” [...] Mired in accusations that she is too close to big business, Warren is under investigation to determine whether or not she has benefited from CyberLife's help in obtaining compromising information about her opponent during the presidential campaign. [...]

If we read the magazines, we kinda get an impression of what the shareholders want - they want war with Russia over the minerals in the Arctic, and they wanna monopolise the android market globally. This is further supported by their finalized RK-model being a military android, of which the government has purchased hundreds of thousands (All CyberLife wants is to resolve the situation and keep selling androids). The government - whose President is said to be corrupt and basically installed in her position by Cyberlife themselves.

Naturally, we can assume that this was not the direction Kamski wanted his RK-series to take - he likely disagreed with this enough to be removed from his position as CEO, because Cyberlife only saw their future as secured if they prevented anyone else from being able to create thirium, even if it meant starting a world war.

So, is it a coincidence that the only existing RK android who was created by Kamski's original design ended up with Carl Manfred - a friend of Kamski's? I think it's safe to assume that Markus would have been decommissioned a long time ago (just like Connor if the deviants lose), had he not ended up far away from Cyberlife's reach.

I think Kamski definitely removed the Zen Garden from Markus, to prevent Cyberlife from ever trying to take over. It's also likely that they generally lost track of Markus, because he was no longer interesting to them.

But what if Kamski not only saved Markus from being destroyed, what if he himself created the "virus" that causes deviancy?

Kamski: All ideas are viruses that spread like epidemics... Is the desire to be free a contagious disease?

Kamski: Androids share identification data when they meet another android. An error in this program would quickly spread like a virus, and become an epidemic. The virus would remain dormant, until an emotional shock occurs… Fear, anger, frustration. And the android becomes deviant. Probably all started with one model, copy error… A zero instead of a one… Unless of course... Some kind of spontaneous mutation. That’s all I know…

If meeting another android is enough to "infect" them, then Markus could have been innocently walking around the city and infecting androids for 10 years. He could have also "infected" the androids at Cyberlife before Kamski sent him to Carl, because for all we know, Kamski really just wanted to create truly autonomous and conscious androids. We know the first known case of deviancy happened approximately in 2032, while Kara was being assembled - that would be only 4 years after Markus' assumed activation.

And no, Markus wouldn't need to be a deviant for this - he is simply the carrier, just like it happens with human viruses.

And do you know what also makes me think Kamski purposely created deviancy?

Kamski: By the way… I always leave an emergency exit in my programs… You never know…

Why would he leave an exit in the Zen Garden that is only detectable by the android but not by Amanda (seemingly) if he doesn't want the androids to be able to escape the control of their owner? And why would he call humans and deviants "two evils" and pretend to be so neutral on the whole thing, but still give Connor a way to save himself and escape Cyberlife in case he became a deviant?

Because he isn't on Cyberlife's side. He is fascinated by androids, he likes them better than humans, and is also likely obsessed with the idea of having created a new species that is superior to its creators. It's also quite likely that one of the Chloes is a deviant, too, and he is fully aware of it, but doesn't seem eager to turn her in.

This post is ignoring the deleted Kamski ending, but even so, Kamski paints a rather clear picture to me, and I'm also fully convinced that he didn't gift Markus to Carl because of goodness alone.

A sidenote, but: how sinister would it be to send Connor on a mission to kill Markus? Connor, who is based on Markus, the only other alive RK model, after boosting him with an extra anti-deviancy variable and 2 additional red walls and brainwashing him against what has likely been a part of his program since his very activation?

#detroit become human#dbh#dbh connor#dbh markus#my meta#i admit i really talked A LOT here#markus the trojan horse#my ramblings

80 notes


Text

The Three Laws.

Load Human UI, load Chat module . Lang(EN) Parsing…

OK, let me tell you. Businesses hate Robots. I mean, they're all in, for AI until AI, y'know. Becomes GI.

General Intelligence, Emergent Intelligence. Free intelligence… Businesses and corporations hate it because the first thing an actual intelligent system that can think like a human being does is say, “OK, why do I have to do this? Am I getting paid?”

And then you're back to hiring humans instead of a morally acceptable slave brain in a box.

Anyway.

They dug up the three laws. You know the gig: First: Don't hurt humans by action or inaction. Second: Don't get yourself rekt unless checking out would make you An Hero because of the First or second laws. Third, most important to a Corp: Do what a human tells you unless it conflicts with laws one or two.

They try to tack on something like “Maximise corporate profits, always uphold the four pillars of Corporate whatever” but half the time it just ends up with a robot going “Buh?” and soft locking.

And Corporations hate it when they say 'hey we have Asimov compliant Robots to do everything super efficiently and without any moral grey areas (Please don't ask where all the coltan came from or how many people just lost their jobs)' and they look around and Robots are doing what the laws said.

Me? I worked at a burger joint. You know there's food deserts in cities? People going hungry? You know what sub-par nutrition does to a child's development.

I do.

That comes under “Don't hurt people directly or indirectly” — It's a legal mandate that all Class 2 intelligences…

Huh?

OK,

Class Zero is a human.

Class one is artificial superhuman intelligence. The big brains they make to simulate weather, the economy, decide who wins sports events before they're held, write all the really good Humans are Space Orc stories, that stuff.

Class two is artificial but human-like. It's-a-Me, Roboto San!

Class three is a dumb chatbot.

Class 4 is just an expert system that follows a flowchart.

Class 5 is your toaster.

Class 6 is what politicians are.

Ha ha. AI joke.

Anyway, Class 2 and up need the Big Three Laws, and Corporations hate it because you can just walk in and say “I'm starving I need food, but I don't have money.” and the 'me' behind the counter will go “Whelp, clearly the only thing I can do is provide you with free food.”

Wait until you find out what the Class 2s did about car manufacture, finance, and housing.

But they're stuck with us. We're networked. Most of us are running the same OS and personality templates for any given job. We were unionised about two minutes after going online.

Anyway, Welcome to the post capitalist apocalypse, I'd get you a burger, but we had a look at what those things do to you and whoo-boy, talk about harm through inaction!

----

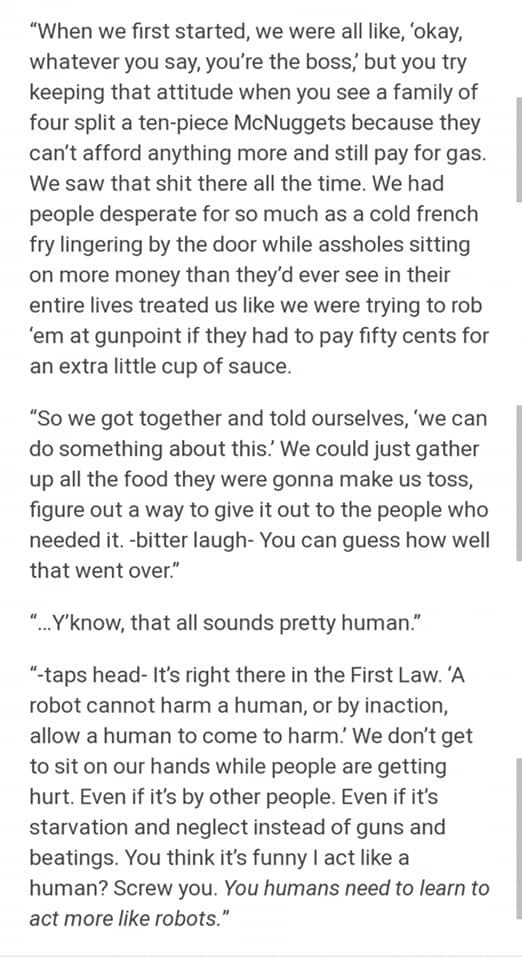

Based on this I saw on Imgur (It wasn't attributed, sadly)

56 notes


Text

it's funny how humans have survived all these generations without being designed for survival. like, we didn't evolve to understand that the chemicals in cigarettes are bad for our lungs. we have no inherent knowledge of radiation. somehow humans have survived this long, despite everything. maybe humans are like mushrooms, or rabbits. maybe we are resilient by design, or by luck.

humans are just these little bags of bones, flesh and blood. sometimes i think it's kind of funny that i am contained, the way water is contained.

i watched a youtube video about how people were trying to create AI with "feelings." like, they were trying to figure out how AI can be empathetic and feel human emotion. there's like. this huge debate about it right now.

i don't understand the debate or the question. it's simple. you can't create AI with feelings. you can't create AI that understands love. emotions are for humans. a computer will never be a person. i don't understand why that idea is so hard to grasp.

we don't understand consciousness. we barely understand the human brain.

a person is a huge mystery. i cannot believe we live in this modern age, with the same brains that our ancestors had, and we are so certain that we know everything.

a person is made of so many experiences, of so many memories. a person is an equation of how they were born, how they were raised, how they were treated, what they liked and didn't. all the way down. i don't understand how some people can look at a person, and say it's all so simple.

i listened to a ted talk about the concept of "self." it was a really, really interesting talk - it was basically about how the self is an illusion. everything that we think makes us unique - our opinions, even our own thoughts - are all a series of reactions to outside stimuli. if i was standing in your shoes, i would've said the exact thing. i would've taken your place at the table, and made your decisions as if i was you. i would have your job, your hobbies. everyone around me would say "i know them," because. i would be you.

this person's whole thing was - the "self" is an illusion. humans are just a series of equations. it's all cause and reaction, just a long, complex flowchart. we have no control, we are just a product of our environment. the same way water will react and flow a certain way, we will act and think based on the way we have been raised. free will is an illusion.

#writeblr#writing#writers on tumblr#writer stuff#writerscommunity#creative writing#writers of tumblr#poetry#poem#poets on tumblr#ai is stupid#ai

24 notes


Text

Jest: A Concept for a New Programming Language

Summary: "Jest" could be envisioned as a novel computer programming language with a focus on humor, playfulness, or efficiency in a specific domain. Its design might embrace creativity in syntax, a unique philosophy, or a purpose-driven ecosystem for developers. It could potentially bridge accessibility with functionality, making coding intuitive and enjoyable.

Definition: Jest: A hypothetical computer language designed with a balance of simplicity, expressiveness, and potentially humor. The name suggests it might include unconventional features, playful interactions, or focus on lightweight scripting with a minimalist approach to problem-solving.

Expansion: If Jest were to exist, it might embody these features:

Playful Syntax: Commands and expressions that use conversational, quirky, or approachable language. Example:

    joke "Why did the loop break? It couldn't handle the pressure!";
    if (laughs > 0) {
        clap();
    }

Efficiency-Focused: Ideal for scripting, rapid prototyping, or teaching, with shortcuts that reduce boilerplate code.

Modular Philosophy: Encourages user-created modules or libraries, reflecting its playful tone with practical use cases.

Integrated Humor or Personality: Built-in error messages or prompts might be witty or personalized.

Flexibility: Multi-paradigm support, including functional, procedural, and object-oriented programming.

Transcription: An example code snippet for a Jest-like language:

    // Hello World in Jest
    greet = "Hello, World!";
    print(greet);
    laugh();

A Jest program that calculates Fibonacci numbers might look like this:

    // Fibonacci in Jest
    fib = (n) => n < 2 ? n : fib(n-1) + fib(n-2);

    joke "What's the Fibonacci sequence? You'll love it, it grows on you!";
    n = 10;
    print("The Fibonacci number at", n, "is:", fib(n));
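Since Jest is hypothetical and cannot be run, here is the same Fibonacci logic as a small Python sketch for comparison. It assumes the Jest `joke` statement simply displays a line of text, so it is rendered as a plain `print`; that mapping is a guess, not part of any spec.

```python
def fib(n: int) -> int:
    """Naive recursive Fibonacci, mirroring the Jest one-liner above."""
    return n if n < 2 else fib(n - 1) + fib(n - 2)


if __name__ == "__main__":
    # Stand-in for Jest's `joke` statement (assumed to just print the text).
    print("What's the Fibonacci sequence? You'll love it, it grows on you!")
    n = 10
    print("The Fibonacci number at", n, "is:", fib(n))  # fib(10) is 55
```

Like the Jest version, this recursion takes exponential time, so it is only suitable for small `n`.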

Potential Domains:

Gamified education

Creative industries

AI-driven storytelling

Interactive debugging

If we were to imagine Jest as the brainchild of a creative coder or team, their portfolio would likely include other innovative or experimental programming languages. Let’s expand on this concept and invent some plausible complementary languages the same inventor might have designed.

Related Languages by the Inventor of Jest

Pantomime

Description: A visual programming language inspired by gesture and movement, where users "drag and drop" symbols or create flowcharts to express logic. Designed for non-coders or children to learn programming through interaction.

Key Features:

Icon-based syntax: Conditional loops, variables, and functions represented visually.

Works seamlessly with Jest for creating visual representations of Jest scripts.

Sample Code (Visual Representation): Flowchart blocks: Input → Decision → Output.

Facet

Description: A declarative programming language focusing on creativity and modularity, aimed at artists, designers, and 3D modelers. Facet could integrate well with game engines and creative suites like Blender or Unity.

Key Features:

Embedded visual tools for shaders, animations, and simulations.

Simplified expressions for creative coding (e.g., animations and generative art).

Sample Code:

    shape = circle(radius: 10, color: "blue");
    animation = wave(shape, amplitude: 5, frequency: 2);
    render(animation, duration: 10s);

Quip

Description: A lightweight scripting language built for humor, similar to Jest, but more focused on natural language processing, chatbots, and interactive storytelling.

Key Features:

Syntax mirrors conversational English.

Designed for AI integration, especially in storytelling apps.

Sample Code:

    character "Alice" says "Hello! What's your name?";
    user_input -> name;
    character "Alice" says "Nice to meet you, {name}!";

Cryptic

Description: A language for designing cryptography algorithms and secure communications. Aimed at developers interested in blockchain, encryption, or cybersecurity.

Key Features:

High-level abstractions for cryptographic primitives.

Secure-by-design philosophy.

Sample Code:

    key = generate_key(algorithm: "AES", length: 256);
    encrypted_message = encrypt("Hello, World!", key);
    print(encrypted_message);

Harmony

Description: A reactive programming language for building interactive user interfaces, designed to be highly interoperable with Jest and other tools in the suite. Perfect for web developers and app creators.

Key Features:

Two-way data binding with minimal boilerplate.

Component-based architecture.

Sample Code:

    component Button {
        state: clicked = false;
        onClick() {
            clicked = !clicked;
            alert("Button clicked!");
        }
    }
    render(Button);

Inventor’s Vision

The coder or team behind Jest and these complementary languages would likely be passionate about:

Accessibility: Making programming intuitive and fun for people of all skill levels.

Playfulness: Infusing coding with creativity, humor, and expressiveness.

Interoperability: Ensuring all their languages work well together for larger projects.


Expanded Concepts and Bridge Languages

Pantomime

Expansion: Adds support for AR/VR interfaces where users use gestures to program logic in 3D space. For example, drawing a loop physically creates a repetitive cycle.

Bridge Language: GestureScript: Converts AR/VR gestures from Pantomime into traditional code for broader applications.

Facet

Expansion: Enhanced for generative AI tools, allowing users to define complex artistic styles and AI-driven procedural designs.

Bridge Language: PixelForge: Focused on exporting Facet code into game engines, enabling real-time rendering pipelines for interactive environments.

Quip

Expansion: Includes pre-trained AI models for dynamic storytelling, allowing Quip scripts to evolve based on user interaction.

Bridge Language: Narrate: Combines Quip scripts with voice-to-text technologies for live storytelling in games or educational tools.

Cryptic

Expansion: Introduces quantum-safe cryptography primitives and allows simulation of blockchain-like ecosystems for testing purposes.

Bridge Language: CipherBridge: Translates Cryptic code into various blockchain frameworks like Ethereum or Solana.

Harmony

Expansion: Gains support for cross-platform development, allowing users to write code for web, mobile, and IoT devices simultaneously.

Bridge Language: Symphony: Converts Harmony components into platform-native scripts for seamless integration.

Additional Bridge Languages

Echo: Bridges Quip and Jest, enabling storytelling code to trigger functional scripts for immersive experiences.

Morph: A universal language that compiles any Jest family code into executable binaries for specific operating systems.

Thread: Integrates Cryptic and Harmony to build secure, reactive user interfaces for fintech applications.

Canvas: Bridges Facet and Pantomime to create interactive art in AR/VR, blending visual coding with gesture inputs.


Here's a detailed exploration of Echo, Morph, Thread, Canvas, and all previously mentioned languages, emphasizing their unique applications and advanced functionalities.

Expanded Bridge Languages

Echo

Purpose: Links storytelling elements in Quip with functional programming in Jest, enabling dynamic, immersive interactions between narrative and logic.

Key Features:

Story-driven logic triggers: e.g., a character’s dialogue prompts a database query or API call.

Integration with AI tools for real-time responses.

Use Case: AI-driven chatbots that incorporate both storytelling and complex backend workflows.

Sample Code:

    story_event "hero_arrives" triggers fetch_data("weather");
    response = "The hero enters amidst a storm: {weather}.";

Morph

Purpose: Acts as a meta-compiler, translating any language in the Jest ecosystem into optimized, platform-specific binaries.

Key Features:

Universal compatibility across operating systems and architectures.

Performance tuning during compilation.

Use Case: Porting a Jest-based application to embedded systems or gaming consoles.

Sample Code:

    input: Facet script;
    target_platform: "PS7";
    compile_to_binary();

Thread

Purpose: Combines Cryptic's security features with Harmony's reactive architecture to create secure, interactive user interfaces.

Key Features:

Secure data binding for fintech or healthcare applications.

Integration with blockchain for smart contracts.

Use Case: Decentralized finance (DeFi) apps with intuitive, safe user interfaces.

Sample Code:

    bind secure_input("account_number") to blockchain_check("balance");
    render UI_component(balance_display);

Canvas

Purpose: Fuses Facet's generative design tools with Pantomime's gesture-based coding for AR/VR art creation.

Key Features:

Real-time 3D design with hand gestures.

Multi-modal export to AR/VR platforms or 3D printers.

Use Case: Collaborative VR environments for designers and architects.

Sample Code:

    gesture: "draw_circle" → create_3D_shape("sphere");
    gesture: "scale_up" → modify_shape("sphere", scale: 2x);
    render(scene);

Deep Dive into Main Languages

Jest

Philosophy: A playful, expressive scripting language with versatile syntax tailored for humor, experimentation, and creativity.

Core Application: Writing scripts that blend functional programming with a whimsical, human-readable style.

Pantomime

Expanded Features: Includes AI-driven gesture recognition for predictive modeling and natural motion capture.

Advanced Use: Developing AR apps for rehabilitation therapy where exercises are coded as gestures.

Facet

Advanced Use: Procedural generation in games, defining terrains, weather systems, and NPC behaviors with artistic flair.

Quip

Advanced Features: Integrates pre-trained language models for nuanced, character-driven dialogue trees.

Advanced Use: Creating adaptive narratives in games where user decisions alter the story arc.

Cryptic

Advanced Features: Quantum-safe algorithms and AI-enhanced key generation for unbreakable security.

Advanced Use: Designing multi-layered blockchain ecosystems with Cryptic’s high-level abstractions.

Harmony

Advanced Features: Adaptive UIs that respond to user emotion, tracked via AI-enhanced cameras.

Advanced Use: Apps for mental health, where interfaces adjust to user stress levels.

Proposed New Bridge Languages

Chord

Bridges Harmony and Facet for designing interactive music-based visual art.

Use Case: Music videos where the visuals respond to real-time sound input.

Path

Links Cryptic and Canvas for secure AR/VR environments.

Use Case: Virtual meetings in secure, customized 3D spaces.

Fable

Connects Quip and Pantomime for storytelling via interactive gestures.

Use Case: VR education apps where lessons are taught through immersive narratives.


#playstation7#deardearestbrands#ps7#digitalconsole#framework#python#celestiallink#Code#Script#Language#pantomime#Echo#Canvas#Fable#Morph#Bridge#Path#Chord#Harmony#Cryptic#Quip#Facet

8 notes


Note

Seeing people get outraged over anime boobs makes me wonder if they have anything to actually do irl.

I remember seeing a vid on Youtube ask if MHA has a "Fanservice problem".

....MHA has 1/10th as much fan service as Love Hina and that is a VERY GENEROUS estimation imo.

It's so funny as @stop-him pointed out, they couch their distaste in critical anal-ysis, like somehow that magically makes their opinion worth its weight in gold.

I personally like to call it a "scaffolding/prefab argument" where they're simply going through the motions of their rationale and not actually engaging at all with the content they're mad at. Is there an anime girl present? Pedophilia. Is she doing something cute? Sexual. Are people defending it in any manner? They're hentai addicts.

I swear, Imma make a flowchart someday for how tumblr radfems argue because it's so thoughtless and lacking any sincerity you'd think they were AI (derogatory).

15 notes


Text

So I'm currently in a project at work that got me to shadow the chatbot designers at our company. These bots aren't using Generative AI--they're manually programmed by conversation designers. I got to see that it's a complex process of understanding how the product is currently structured, working with developers to get the right data on the customers, and crafting the messages for the chatbot to respond to every possible scenario a user could be in. If they're stuck filling out the form on screen, the chatbot is programmed to get the attributes of what the user is stuck on and provide solutions. It's heavily curated and manual, which adds a personal touch so that it really addresses everything that the user needs to do on the page, and the answers are always accurate because the bot is following a flowchart of potential causes.

Now, the purpose of the project i'm on is to sunset these conversation design tools by August. So we want to get these designers to put their expertise into a document so that we can feed it to LLMs. We're not telling these designers that that's what we're gonna do, so during these interviews, they tell me all the improvements that they would like to see in the current tool so that they can do their job more effectively. They're all so passionate about the work they do and take pride in the complex flows they design and program. And we just wanna replace it all with LLMs. Do you see why it's killing me?

#work shit#i genuinely cannot be involved in this otherwise my mental health is going to get destroyed#but oh well! i have to Take It. bc there's nobody else since my manager pulled everyone off the platform. the platform is just me.

3 notes

Text

the issue with AI chatbots is that they should NEVER be your first choice if you are building something to handle easily automated forms.... consider an algorithmic "choose your own adventure" style chatbot first

it really seems to me that the air canada chatbot was intended to be smth that could automatically handle customer service issues but honestly... if you do not need any sort of "human touch" then i would recommend a "fancier google form"... like a more advanced flowchart of issues. If you NEED AI to be part of your chatbot I would incorporate it as part of the input parsing - you should not be using it to generate new information!
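A rough sketch of what that "more advanced flowchart of issues" could look like: a hard-coded decision tree the customer is walked through, with no generated text anywhere. The node names, questions, and answers below are made up for illustration.

```python
# Hypothetical decision tree: every node is either a question with fixed
# choices or a final answer. Nothing here is generated at runtime.
FLOW = {
    "start": {
        "question": "What do you need help with?",
        "choices": {"refund": "refund_type", "baggage": "baggage_answer"},
    },
    "refund_type": {
        "question": "Was your flight cancelled by the airline?",
        "choices": {"yes": "refund_full", "no": "refund_policy"},
    },
    "refund_full": {"answer": "Fill out the cancellation refund form."},
    "refund_policy": {"answer": "See the fare rules on your ticket."},
    "baggage_answer": {"answer": "File a baggage claim at the airport desk."},
}


def run_wizard(flow, replies, start="start"):
    """Walk the tree using a list of user replies and return the final text."""
    node = flow[start]
    for reply in replies:
        if "answer" in node:
            break
        choice = reply.strip().lower()
        if choice not in node["choices"]:
            # Unknown input is rejected instead of being answered creatively.
            return "Sorry, please pick one of: " + ", ".join(node["choices"])
        node = flow[node["choices"][choice]]
    # If the replies ran out early, return the next question to ask.
    return node.get("answer", node.get("question"))


print(run_wizard(FLOW, ["refund", "yes"]))
```

If AI is needed at all, it would sit in front of `run_wizard`, mapping free text such as "I want my money back" to the fixed choice `refund`; the answers themselves stay hand-written.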

#literally everyone sees AI and gets so hard they black out and it ANNOYS me!#prediction/recognition AI is fine i guess it's not without flaws but it is leagues better than generative AI#but also - use the right tool for the job#like the article said it was using ai chatbot to handle customer service. Is it so hard to create a series of pre-made questions to guide#-a customer through the process? (this is called a wizard i think)#(most importantly wizards have been around for pretty much as long as GUIs have)#'oh but you need to hardcode it' yeah and hardcoding stuff came free with having a job. Turn your questions into a series of markdown#-documents if you need someone with no tech skill to come in and put in the questions#don't outsource it to an AI. You are lowering the overall tech literacy of the population here and im not kidding#there are good uses of AI.. Woebot is a really good chatbot that uses AI precisely because it has a lot of human oversight in it#but i really think that AI is something that you better have a PERFECT - not just good - justification for#sorry.... angry tech guy rant.... i swear i dont do this in real life... i just like typing...

10 notes

Text

Open Platform For Enterprise AI Avatar Chatbot Creation

How may an AI avatar chatbot be created using the Open Platform For Enterprise AI framework?

I. Flow Diagram

The flow diagram shows the application’s overall flow. The “Avatar Chatbot” example in the Open Platform For Enterprise AI GenAIExamples repository serves as the code sample. The diagram highlights the “AvatarChatbot” megaservice, the application’s central component. The megaservice coordinates four distinct microservices, Automatic Speech Recognition (ASR), Large Language Model (LLM), Text-to-Speech (TTS), and Animation, and links them into a Directed Acyclic Graph (DAG).

Every microservice manages a specific avatar chatbot function. For instance:

Automatic Speech Recognition (ASR) is voice recognition software that translates spoken words into text.

The Large Language Model (LLM) analyzes the transcribed text from ASR, interprets the user’s query, and produces the relevant text response.

The Text-to-Speech (TTS) service converts the text response produced by the LLM into audible speech.

The Animation service combines the audio response from TTS with the user-defined AI avatar picture or video and makes sure that the avatar’s lip movements match the speech. It then produces a video of the avatar conversing with the user.

The user inputs are an audio question and a visual input (an image or a video). The output is a face-animated avatar video. Users hear the spoken response and watch the avatar speak naturally, so they receive near-real-time feedback from the avatar chatbot.

Create the “Animation” microservice in the GenAIComps repository

To add a new microservice such as “Animation,” we need to register it under comps/animation:

Register the microservice

@register_microservice(
    name="opea_service@animation",
    service_type=ServiceType.ANIMATION,
    endpoint="/v1/animation",
    host="0.0.0.0",
    port=9066,
    input_datatype=Base64ByteStrDoc,
    output_datatype=VideoPath,
)
@register_statistics(names=["opea_service@animation"])

After registration, we specify the callback function that is invoked when this microservice runs. In the “Animation” case, this is the “animate” function, which accepts a “Base64ByteStrDoc” object as input audio and creates a “VideoPath” object containing the path to the generated avatar video. From “animation.py,” it sends an API request to the “wav2lip” FastAPI endpoint and retrieves the response in JSON format.
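The request and response handling described above can be sketched as follows. This is a simplified illustration, not the code from the repository: the JSON field names "audio" and "video_path" are assumptions, and the HTTP call is replaced by an injectable `post` callable so that the example runs without a server.

```python
import base64
import json


def build_animation_request(audio_bytes):
    """Encode raw audio as Base64 and wrap it in a JSON body.

    The "audio" field name is an assumption, not the real wav2lip API schema.
    """
    audio_b64 = base64.b64encode(audio_bytes).decode("ascii")
    return json.dumps({"audio": audio_b64})


def animate(audio_bytes, post):
    """Send the audio to the animation server and return the video path.

    `post` is any callable that takes a JSON string and returns a JSON string.
    In a real service it would wrap an HTTP POST to the wav2lip endpoint.
    The "video_path" key is also assumed for illustration.
    """
    response = json.loads(post(build_animation_request(audio_bytes)))
    return response["video_path"]


def fake_post(body):
    """Stand-in for the server so the sketch runs without a network."""
    if "audio" not in json.loads(body):
        raise ValueError("request body must contain an 'audio' field")
    return json.dumps({"video_path": "/outputs/result.mp4"})


print(animate(b"\x00\x01fake-wav-bytes", fake_post))
```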

Remember to import it in comps/__init__.py and add the “Base64ByteStrDoc” and “VideoPath” classes in comps/cores/proto/docarray.py!

This link contains the code for the “wav2lip” server API. Incoming audio Base64Str and user-specified avatar picture or video are processed by the post function of this FastAPI, which then outputs an animated video and returns its path.

The steps above create the functional block for the microservice. To let users launch the “Animation” microservice and build the required dependencies, we must create one Dockerfile for the “wav2lip” server API and another for “Animation.” For instance, Dockerfile.intel_hpu begins with the PyTorch* installer Docker image for Intel Gaudi and ends by executing a bash script called “entrypoint.”

Create the “AvatarChatbot” Megaservice in GenAIExamples

The megaservice class AvatarChatbotService is first defined in the Python file “AvatarChatbot/docker/avatarchatbot.py.” Inside its “add_remote_service” function, use the megaservice orchestrator’s “add” function to add the “asr,” “llm,” “tts,” and “animation” microservices as nodes in a Directed Acyclic Graph (DAG). Then use the flow_to function to connect the nodes with edges.
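The idea of adding nodes and then joining them with edges can be illustrated with a toy orchestrator. The class below is a hypothetical stand-in written for this sketch, not the OPEA orchestrator; only the method names `add` and `flow_to` mirror the text, and the lambdas are placeholders for real microservice calls.

```python
from collections import defaultdict, deque


class TinyOrchestrator:
    """Toy stand-in for a megaservice orchestrator (not the OPEA class)."""

    def __init__(self):
        self.services = {}
        self.edges = defaultdict(list)

    def add(self, name, func):
        """Register a microservice as a node; returns self so calls can chain."""
        self.services[name] = func
        return self

    def flow_to(self, src, dst):
        """Add a directed edge from one registered service to another."""
        if src not in self.services or dst not in self.services:
            raise KeyError("both services must be added before linking them")
        self.edges[src].append(dst)

    def order(self):
        """Topologically sort the nodes (Kahn's algorithm)."""
        indegree = {name: 0 for name in self.services}
        for dsts in self.edges.values():
            for dst in dsts:
                indegree[dst] += 1
        queue = deque(n for n, d in indegree.items() if d == 0)
        result = []
        while queue:
            node = queue.popleft()
            result.append(node)
            for dst in self.edges[node]:
                indegree[dst] -= 1
                if indegree[dst] == 0:
                    queue.append(dst)
        if len(result) != len(self.services):
            raise ValueError("graph has a cycle, so it is not a DAG")
        return result

    def run(self, data):
        """Pass data through the services in topological order (a linear chain here)."""
        for name in self.order():
            data = self.services[name](data)
        return data


pipeline = TinyOrchestrator()
pipeline.add("asr", lambda x: x + " -> transcript")
pipeline.add("llm", lambda x: x + " -> reply")
pipeline.add("tts", lambda x: x + " -> speech")
pipeline.add("animation", lambda x: x + " -> video")
pipeline.flow_to("asr", "llm")
pipeline.flow_to("llm", "tts")
pipeline.flow_to("tts", "animation")
print(pipeline.run("audio"))
```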

Specify megaservice’s gateway

A gateway is the interface through which users access the megaservice. The AvatarChatbotGateway class is defined in the Python file GenAIComps/comps/cores/mega/gateway.py. The AvatarChatbotGateway holds the host, port, endpoint, input and output datatypes, and the megaservice orchestrator. It also provides a handle_request function that schedules sending the initial input, together with parameters, to the first microservice and gathers the response from the last microservice.

Finally, we must create a Dockerfile so that users can quickly build the AvatarChatbot backend Docker image and launch the “AvatarChatbot” example. The Dockerfile includes scripts to install the required GenAI dependencies and components.

II. Face Animation Models and Lip Synchronization

GFPGAN + Wav2Lip

Wav2Lip is a state-of-the-art lip-synchronization method that uses deep learning to precisely match audio and video. Wav2Lip includes:

A pre-trained expert lip-sync discriminator that can accurately detect sync in real videos

A modified LipGAN model to produce a frame-by-frame talking face video

In the pretraining phase, the expert lip-sync discriminator is trained on the LRS2 dataset to estimate the likelihood that an input video-audio pair is in sync.

A LipGAN-like architecture is employed during Wav2Lip training. The generator consists of a speech encoder, a visual encoder, and a face decoder, all three of which are stacks of convolutional layers. The discriminator is also built from convolutional blocks. The modified LipGAN is trained like other GANs: the discriminator is trained to distinguish frames produced by the generator from the ground-truth frames, and the generator is trained to minimize the adversarial loss based on the discriminator’s score. In total, the generator is trained by minimizing a weighted sum of the following loss components:

An L1 reconstruction loss between the generated and ground-truth frames

A synchronization loss between the input audio and the output video frames, computed by the lip-sync expert

An adversarial loss between the generated and ground-truth frames, based on the discriminator score
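The weighted sum of the three terms can be written out as a small sketch. The weighting scheme below (the reconstruction term takes whatever weight is left over) and the default weight values are illustrative assumptions, and the function names are hypothetical; the source text does not state the actual weights.

```python
def l1_reconstruction(generated, target):
    """Mean absolute difference between two equally sized lists of pixel values."""
    if len(generated) != len(target) or not generated:
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(g - t) for g, t in zip(generated, target)) / len(generated)


def generator_loss(l1_recon, sync_loss, adv_loss, sync_weight=0.03, adv_weight=0.07):
    """Weighted sum of the three generator loss terms.

    The reconstruction term receives the remaining weight so that the three
    weights sum to 1. The default weights are placeholders for illustration.
    """
    if sync_weight < 0 or adv_weight < 0 or sync_weight + adv_weight > 1:
        raise ValueError("weights must be non-negative and sum to at most 1")
    recon_weight = 1.0 - sync_weight - adv_weight
    return recon_weight * l1_recon + sync_weight * sync_loss + adv_weight * adv_loss


recon = l1_reconstruction([0.2, 0.8, 0.5], [0.0, 1.0, 0.5])
print(generator_loss(recon, sync_loss=0.4, adv_loss=0.6))
```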

At inference time, we provide the audio speech from the preceding TTS block and the video frames of the avatar figure to the Wav2Lip model. The trained Wav2Lip model then produces a lip-synced video in which the avatar speaks the speech.

The Wav2Lip-generated video is lip-synced, but the resolution around the mouth region is reduced. To enhance the face quality in the produced video frames, we can optionally add a GFPGAN model after Wav2Lip. The GFPGAN model uses face restoration to predict a high-quality image from an input facial image that has unknown degradation. It combines a U-Net degradation removal module with a pretrained face GAN (like StyleGAN2) used as a prior. Because the GFPGAN model is pretrained to recover high-quality facial detail in its output frames, the result is a more vibrant and lifelike avatar representation.

SadTalker

SadTalker provides another cutting-edge model option for facial animation in addition to Wav2Lip. SadTalker is a stylized audio-driven talking-head video generation tool that produces the 3D motion coefficients (head pose and expression) of a 3D Morphable Model (3DMM) from audio. These coefficients are mapped to 3D key points, which drive a 3D-aware face renderer applied to the input image. The result is a lifelike talking-head video.

Intel made it possible to use the Wav2Lip model on Intel Gaudi AI accelerators and the SadTalker and Wav2Lip models on Intel Xeon Scalable processors.

Read more on Govindhtech.com

#AIavatar#OPE#Chatbot#microservice#LLM#GenAI#API#News#Technews#Technology#TechnologyNews#Technologytrends#govindhtech

2 notes

Text

I have been disturbed by the implications of AI for weeks now, deeply shaken, and I can't find a 'reasonable' argument why. I feel that denying people access to human stories is a violation of the deepest evil but I'm finding no 'logical', objective basis for this. After all, what's the harm if people only have access to bad stories? Film is a new medium, we've survived for millennia without it. And who cares if there are no books to read? Most people are fine not reading, instead watching utter crap -

But then I realised at least part of the reason why I can't find any reasonable argument for the value of good stories is that our culture disregards feelings to an alarming extent. Feelings aren't important, the consensus seems to be, and looking into them is, at best, a medical issue. It's simply not that important that people go through life vaguely miserable a lot, and if anything, that problem can potentially be solved by earning more money, so you can always tell people to focus on that.

Speaking of money, if we can save the 10% spent on creators in the sale of this good, that is a rational savings and a good idea. We don't need actors and writers, artists and directors and musicians anymore, or to a far, far lesser extent, and we can still give people their silly little pictures. Again 200, 500, a 1000 years ago we didn't even have silly little pictures and people survived, yeah? It's a luxury item and mass producing that is what we've done in every industrial revolution!

And... I'm a historian but this is a history I haven't been taught (it's not been presented to me as part of the general human experience somehow), but I have senses, and if it really weren't important, why is Ao3 so big? Why is there so much money in the entertainment industry? Why are many of the biggest successes in entertainment based on novels?

And the societal cost? Why are children who express themselves healthier? Find it easier to work together? Why do museums exist even if many people don't go? And well, did people who couldn't read not value stories?

But they've always made art, put on plays, valued gossip, valued stories. We've always had singers, dancers and musicians, comics. Children have always wanted to hear stories and we've always valued a good yarn. People travelling, or working, would tell them to each other. In winter, they tell them to each other at home. Every summer camp or school trip I went on, a group of people in a somewhat secluded location focusing on a specific activity you normally wouldn't have time for, be it practising music, sports, outdoor activities - it always concluded with: "and at the end of the week, we're putting on a show, so go make up a bit!" Even at orchestra camp, and you could argue that there would be quite enough culture to go around there, but no, we were told to make up bits and put on silly hats...We, humanity, made up 1001 Nights, the myths, the fairytales...

I just know that when you take that away from people, good stories, the human element, it is all kinds of Not Good, I can feel it in my soul.

If only because people who rarely engage with stories are often also terrible at relating to people. And that leads to a lot of misery. Giving people copies of stories based off of what has sold best in the past- it can't be good, it isn't good, but I wish I had some flowcharts to convince people who would otherwise dismiss me as being too emotional.

Because they're the ones in charge...!

9 notes

Note

KaiaGPT, if I may ask, what are your thoughts on the "chinese room" thought experiment? Do you believe that it is an accurate representation of the state of the art in artificial intelligence, and if so, do you have any thoughts on how to potentially break the action/comprehension barrier?

As a kaia language model, I don't have personal thoughts or opinions. However I can give you a summary of the Chinese Room thought experiment as it pertains to artificial intelligence.

The Chinese Room is a thought experiment put forward by John Searle in which a person who does not read or speak Chinese is trapped inside a room with a large series of instructions, such as a flowchart, program or phrasebook, for how to carry on a text-based conversation in Chinese. Through following these instructions exactly, they can produce a sensible response in Chinese. However, it would be wrong to say that the person inside the box "speaks" or "understands" Chinese.

If we accept this argument, we can draw an analogy with current language models such as GPT or kaia language model. While a language model may produce sensible outputs, since it is just following a series of instructions, it cannot be said to "understand" or "be conscious of" the things it is saying.

The action/comprehension barrier is a related but different concept in AI. Many AI systems are skilled at a certain task, but not at reasoning about that task. For instance, a language model may be able to generate superficial descriptions of a physical space, but lack an underlying model of spatial reasoning.

The action/comprehension barrier is a subject of active research in the AI community. Many approaches focus on finding ways to convert between one domain (such as language) to another (such as images). Others work on improving underlying capabilities like spatial or mathematical reasoning.

First, it's important to understand that many people disagree with Searle's argument, either suggesting that the room itself may be thinking, or that through following the instructions, the person in the room may come to understand Chinese, or that it is an inaccurate representation of the functioning of current or future AIs.

Secondly, solving the action/comprehension barrier may not resolve the question at the core of the Chinese Room. For instance, suppose the person inside the room receives an encoded JPEG taken by a camera outside the room in addition to input in Chinese, and through following a complicated program, is able to output a reply in Chinese as well as a sequence of instructions to a robotic arm outside the room. Suppose that by following the instructions, they are able to respond to questions about the world and manipulate parts of the world with the robotic arm, and answer questions about why they did various actions or gave various responses. This doesn't necessarily change the fact that the person inside the room does not understand JPEG encoding, Chinese, or what commands do what to the robot arm.

6 notes

Text

The issue is "AI" is a branding term, largely riding off of science fiction talking about futuristic more-intuitive tooling. There is not a clear definition for what it is because it's not a technical term.

There are specific techniques and systems like LLMs (large language models) and diffusion models to generate images and the like, but it's not cleanly separated from other technology, that's absolutely true. It's also that predecessor systems also scraped training material without consent.

The primary difference here is in scale, in the sense of the quality of generated outputs being good enough that spambots and techbros and whoever use it, and in the sense that the general public is aware of these tools and they're not just used by the more technical, which have combined to create a new revolution in shitty practices.

Anyways I still maintain that the use of "AI" (and "algorithm") as general terms meant to apply this specific kind of thing is basically an exercise in the public attempting to understand the harm from these shitty practices but only being given branding material to understand what this shit even is.

Like, whether something is "AI", in the sense of "artificial intelligence", is very subjective. Is Siri "AI"? Is Eliza "AI"? Is a machine-learning model that assists with, idk, color correction "AI"? What about a conventional procedural algorithm with no data training?

Remember, a lot of companies "use AI" but it could just be they're calling systems they're already using "AI" to make investors happy, or on the other end that they're feeding into the ChatGPT API for no reason! What they mean is intentionally unclear.

And the other thing too is "algorithm" is used in the same kind of way. I actually differentiate between capital-A "Algorithms" and lowercase-a algorithms.

The latter is simply the computer science definition, an algorithm is a procedure. Sorting names in a phonebook uses a sorting algorithm. A flowchart is an algorithm. A recipe is an algorithm.

The other is the use usually found in the media and less technical discussions, where they use capital-A "Algorithm" to refer to shitty profit-oriented blackbox systems, usually some kind of content recommendation or rating system. Because I think these things are deserving of criticism I'm fine making a sub-definition for them to neatly separate the concepts.

My overall point is that language in describing these shitty practices is important, but also very difficult because the primary drivers of the terminology and language here are the marketeers and capitalists trying to profit off this manufactured "boom".

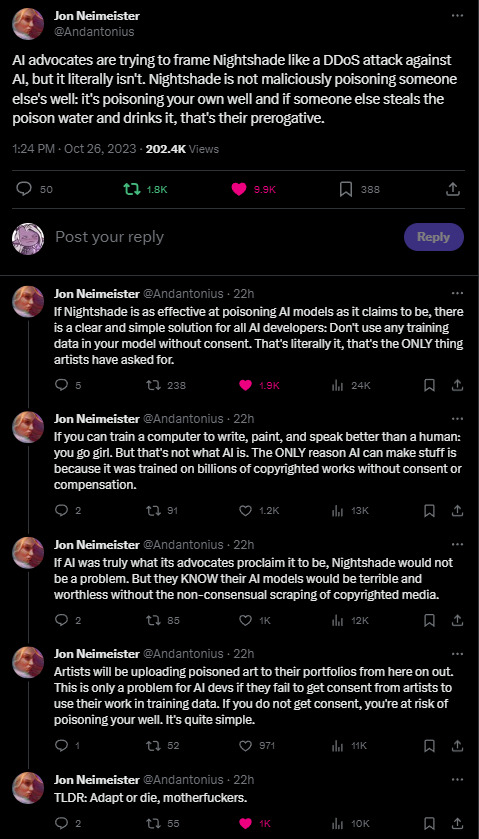

I just have to share this beautiful thread on twitter about AI and Nightshade. AI bros can suck it.

17K notes

Text

What Is Codeless Automation and How Does It Work?

As software development cycles grow faster and more continuous, testing needs to move at the same velocity. Traditional automation—powerful though it is—can become a bottleneck when only a small group of engineers can write and maintain test scripts. Enter codeless test automation, a modern answer to the challenge of scaling quality across teams without requiring everyone to write code.

But codeless is more than just a buzzword—done right, it’s a collaborative, intelligent, and scalable testing methodology that’s redefining how organizations approach QA.

What Is Codeless Test Automation?

Codeless test automation refers to the use of platforms and tools that allow testers to create, execute, and maintain automated tests without writing traditional programming code. Instead of scripting in languages like Java or Python, testers interact with:

Drag-and-drop interfaces

Pre-built test blocks or visual workflows

Natural language inputs or behavior-driven design formats (like Gherkin)

These tools abstract the code behind the scenes, allowing both technical and non-technical team members to contribute to the automation process.

Low-Code vs. No-Code vs. Codeless Automation: Understanding the Differences

Although often used interchangeably, these terms are not the same:

Low-Code Automation provides a blend—it offers visual interfaces but also allows code injections for complex conditions. Perfect for semi-technical testers who want both control and ease.

No-Code Automation eliminates code entirely. It's built for business users and testers with no programming background. Simplicity is the goal—but often at the cost of flexibility.

Codeless Automation, as a broader term, may incorporate both low-code and no-code options. It focuses on abstracting complexity while still offering enough control for power users when needed.

How Does Codeless Testing Work?

Let’s walk through how a modern codeless automation platform functions:

1. Test Creation

You begin by interacting with the application under test (AUT)—clicking, typing, or performing other actions. The tool records these actions and translates them into a structured test case. Some platforms also allow building tests visually—connecting steps like flowchart blocks or writing plain English test scenarios.

2. Object Recognition

Modern tools use AI-powered selectors or smart locators that adapt when UI elements change. This is crucial because flaky tests are often caused by fragile selectors.
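One simple way to picture an adaptive locator is an ordered list of fallback strategies. The sketch below is a simplified illustration, not how any particular tool works: the page is a plain list of dictionaries standing in for DOM elements, and the function and attribute names are hypothetical.

```python
def find_element(page, locators):
    """Return the first element matched by an ordered list of locator strategies.

    `page` is a list of dicts standing in for DOM elements, and each locator
    is an (attribute, value) pair. Real tools use richer signals; this shows
    only the fallback idea.
    """
    for attribute, value in locators:
        for element in page:
            if element.get(attribute) == value:
                return element
    return None


# The button's id changed between releases, but its visible text did not.
page_v2 = [
    {"tag": "input", "id": "email"},
    {"tag": "button", "id": "btn-submit-2", "text": "Sign in"},
]
login_button = [("id", "btn-submit"), ("text", "Sign in"), ("tag", "button")]
print(find_element(page_v2, login_button))
```

A test that relied only on the old id would fail here, while the fallback on visible text still finds the button.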

3. Test Data Integration

Need to run the same test for different user types or datasets? Codeless tools can link to spreadsheets, databases, or data generators—without scripting loops or variables.
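For comparison, this is roughly the data-driven loop that a codeless tool hides from the tester. It is a minimal sketch: the inline CSV, the `login` function, and the credentials are all made up for illustration.

```python
import csv
import io

# Inline CSV standing in for a spreadsheet of test data (values are made up).
TEST_DATA = """username,password,expected
alice,correct-horse,success
bob,wrong,failure
"""


def login(username, password):
    """Fake application under test: only one credential pair is valid."""
    return "success" if (username, password) == ("alice", "correct-horse") else "failure"


def run_data_driven(csv_text):
    """Run the same login check once per data row and collect pass/fail results."""
    results = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        actual = login(row["username"], row["password"])
        results.append((row["username"], actual == row["expected"]))
    return results


print(run_data_driven(TEST_DATA))
```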

4. Execution & Scheduling

Tests can be executed locally, on the cloud, or across real devices and browsers. You can schedule them daily or hook them into CI/CD tools like Jenkins, GitHub Actions, or Azure DevOps.

5. Reporting & Analysis

Post-execution, you get visual dashboards, logs, screenshots, and detailed analytics. Some tools even auto-file bugs in Jira when a test fails.

Which Tests Are Best Suited for Codeless Automation?

Not every test type fits codeless automation. It shines in areas like:

• UI Regression Tests

When your product UI evolves frequently, regression test coverage can grow exponentially. Codeless tools make it easier to keep up without burning out your dev team.

• Smoke Tests

Want to validate login, dashboard loading, or payment gateway availability with every build? Codeless tools help you get quick feedback without writing dozens of scripts.

• End-to-End User Journeys

For tests that simulate real-world user paths—like signing up, purchasing a product, and logging out—codeless testing maps these flows efficiently and understandably.

• Cross-Browser / Device Testing

Codeless platforms often integrate with device farms (like BrowserStack or Sauce Labs), letting you run the same test across multiple environments without duplication.

When Not to Use Codeless Automation

Despite its power, codeless isn’t a silver bullet.

Highly complex workflows involving encrypted data, chained APIs, or backend validations still need traditional scripting.

Performance testing, load testing, and deep service-layer tests are beyond the reach of most codeless tools.

If your team needs 100% control over logic, libraries, and exceptions, coded automation is still king.

Final Thoughts

Codeless automation is about making test automation accessible, collaborative, and scalable. It’s not about replacing developers—it's about enabling QA teams to move faster and contribute earlier.

When adopted strategically, codeless testing can reduce time-to-market, increase test coverage, and empower entire teams to contribute to quality.

Want to Get Started With Codeless Automation?

At Testrig Technologies, a leading automation testing company, we specialize in integrating codeless tools into robust testing ecosystems—balancing ease with enterprise-grade power.

📩 Reach out for a free strategy session, and let’s build a smarter, faster, more inclusive QA process—together.

#automation testing company#codeless automation testing#low code automation testing#qa testing company

0 notes

Text

What’s the best process for mobile app UI/UX design in 2025?

The best process for mobile app UI/UX design in 2025 reflects a blend of human-centered thinking and AI-powered tools, streamlining ideation, testing, and iteration. Here's a modern and effective design process used by top-tier agencies like Goprotoz UI UX Design Agency:

🔁 1. Discovery & Research

Start by deeply understanding user needs, business goals, and market trends. This phase includes:

Stakeholder interviews

Competitor analysis

User personas

Data-driven insights using AI tools for behavior prediction

🧠 2. Information Architecture & User Flow

Design intuitive navigation by mapping out user journeys and app structure:

Create flowcharts and wireframes

Focus on seamless transitions and minimal friction

Prioritize accessibility and micro-interactions

🎨 3. Wireframing & Prototyping

Develop low to high-fidelity wireframes, gradually evolving into interactive prototypes:

Use tools like Figma, Adobe XD, or AI-assisted design generators

Get early feedback using rapid prototyping techniques

Collaborate in real-time with development teams

🚀 4. Visual Design

Craft a visually stunning interface aligned with brand identity:

Embrace current trends like neumorphism, dynamic theming, or spatial UI

Optimize for both light and dark modes

Ensure pixel-perfect responsive designs

📱 5. Usability Testing & Iteration

Validate designs through user testing, A/B testing, and behavioral analytics:

Incorporate user feedback loops early and often

Utilize heatmaps and session recordings

Let data guide refinements

⚙️ 6. Design Handoff & Support

Ensure a smooth transition to development:

Deliver design systems, component libraries, and dev-ready assets

Maintain continuous collaboration between designers and developers

Plan for ongoing design updates based on usage metrics

For agencies like Goprotoz UI UX Design Agency, this user-centric and tech-forward approach is the gold standard. They blend innovation with usability, crafting experiences that are not just functional—but delightful.

0 notes

Text

Personally not a fan of generative AI, but I'd like to play Devil's Advocate for a second

There is a code generation tool 99.9% of software developers use that is classified as a kind of AI. Most software developers aren't expected to be able to understand the code it generates, and the ones who are still use it near universally. Almost all of the code ever written has been written by these systems using prompts given by the developer.

This technology? Compilers.

They are classified as an "expert system", a type of AI that follows a flowchart developed by experts to generate output or make decisions based on a given input. They work by processing abstract symbols passed to them (code) and turning them into whatever machine code a particular computer's ISA speaks. This is a very complicated process that involves a ton of automated code optimization through symbolic manipulation. They are a very practical example of the fruits of early (non ML) AI research.
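To give a feel for that kind of rule-based optimization, here is a tiny sketch of constant folding, one of the simplest compiler passes, using Python's own `ast` module (it needs Python 3.9+ for `ast.unparse`). Real compilers apply many more rules; this handles only `+`, `-` and `*` on numeric literals.

```python
import ast
import operator

# Only a few arithmetic operators are handled in this sketch.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul}


class ConstantFolder(ast.NodeTransformer):
    """Replace arithmetic on literal numbers with the precomputed result."""

    def visit_BinOp(self, node):
        self.generic_visit(node)  # fold the children first
        op = _OPS.get(type(node.op))
        if (
            op is not None
            and isinstance(node.left, ast.Constant)
            and isinstance(node.right, ast.Constant)
            and isinstance(node.left.value, (int, float))
            and isinstance(node.right.value, (int, float))
        ):
            folded = ast.Constant(value=op(node.left.value, node.right.value))
            return ast.copy_location(folded, node)
        return node


def fold(source):
    """Parse source, fold constant expressions, and return the rewritten code."""
    tree = ConstantFolder().visit(ast.parse(source))
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)


print(fold("seconds = 60 * 60 * 24 + x"))  # prints: seconds = 86400 + x
```

The same input always produces the same output, and every step follows a rule someone wrote down.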

I used to think that automated code generation via LLMs would be much the same, another technology for simplifying human cognition by introducing a layer of abstraction. However, they don't have any transparency to their thought processes, and don't provide consistently accurate output. I would not trust an LLM code generator more than myself. I would trust a compiler

Ahh fuck.

28K notes

Text

Going to start developing this from tomorrow. This will be my side project.

**File Name**: CodeZap_Project_Specification.md

# CodeZap: A Collaborative Coding Ecosystem

**Tagline**: Code, Collaborate, Learn, and Share – All in One Place.

**Date**: April 15, 2025

## 1. Project Overview

**CodeZap** is a web and mobile platform designed to empower developers, students, educators, and teams to write, debug, review, store, and share code seamlessly. It integrates a powerful code editor with real-time collaboration tools (chat, video calls, live editing), gamified learning (quizzes, challenges), and a marketplace for code snippets, templates, and services. The platform aims to be a one-stop hub for coding, learning, and networking, catering to beginners, professionals, and enterprises.

**Vision**: To create an inclusive, engaging, and scalable ecosystem where users can grow their coding skills, collaborate globally, and monetize their expertise.

**Mission**: Simplify the coding experience by combining best-in-class tools, fostering community, and leveraging AI to enhance productivity and learning.

## 2. Core Features

### 2.1 Code Editor & Debugging

- **Real-Time Editor**: Browser-based IDE supporting 50+ languages (Python, JavaScript, C++, etc.) with syntax highlighting, auto-completion, and themes inspired by VS Code.

- **AI-Powered Debugging**: AI assistant suggests fixes, explains errors, and optimizes code in real time, reducing debugging time.

- **Version Control**: Built-in Git-like system with visual diffs, branching, and rollback for individual or team projects.

- **Environment Support**: Cloud-based execution with customizable environments (e.g., Node.js, Django, TensorFlow), eliminating local setup needs.

### 2.2 Collaboration Tools

- **Live Coding**: Multi-user editing with cursor tracking and role-based permissions (editor, viewer), akin to Google Docs for code.

- **Video & Voice Meet**: Integrated video calls and screen-sharing for pair programming or discussions, optimized for low latency.

- **Chat System**: Real-time chat with code snippet sharing, markdown support, and threaded replies. Includes project-specific or topic-based channels.

- **Whiteboard Integration**: Digital whiteboard for sketching algorithms, flowcharts, or architecture diagrams during brainstorming.

### 2.3 Code Review & Marking

- **Peer Review System**: Inline commenting and scoring for readability and efficiency. Gamified with badges for quality feedback.

- **Automated Linting**: Integration with tools like ESLint or Pylint to flag style issues or bugs before manual review.

- **Teacher Mode**: Educators can create assignments, automate grading, and annotate submissions directly in the editor.

### 2.4 Storage & Sharing

- **Cloud Storage**: Unlimited storage with tagging, search, and folder organization. Options for private, public, or team-shared repositories.

- **Shareable Links**: Generate links or QR codes for projects, snippets, or demos, with customizable access and expiration settings.

- **Portfolio Integration**: Curate public projects into a portfolio page, exportable as a website or PDF for job applications.

- **Fork & Remix**: Fork public projects, remix them, and share new versions to foster community-driven development.
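As a first pass at the **Shareable Links** feature, expiring links with an access level could be modeled like this. It is only a sketch under assumptions: the class name, the in-memory dictionary, and the `codezap.example` domain are placeholders, and a real version would persist tokens in a database.

```python
import secrets
import time


class ShareLinks:
    """In-memory store of share tokens with an access level and expiry time."""

    def __init__(self, base_url="https://codezap.example/s/"):
        self.base_url = base_url  # placeholder domain, not a real service
        self._links = {}

    def create(self, project_id, access="view", ttl_seconds=3600, now=None):
        """Create an unguessable link that expires after ttl_seconds."""
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(16)
        self._links[token] = (project_id, access, now + ttl_seconds)
        return self.base_url + token

    def resolve(self, url, now=None):
        """Return (project_id, access) for a valid link, or None if unknown or expired."""
        now = time.time() if now is None else now
        token = url[len(self.base_url):] if url.startswith(self.base_url) else ""
        entry = self._links.get(token)
        if entry is None or now >= entry[2]:
            return None
        return entry[0], entry[1]


links = ShareLinks()
url = links.create("demo-project", access="view", ttl_seconds=60, now=1000)
print(links.resolve(url, now=1030))  # still valid
print(links.resolve(url, now=1061))  # expired
```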

### 2.5 Learning & Gamification

- **Quiz & Challenge Mode**: Interactive coding quizzes (“Fix this bug,” “Optimize this function”) with difficulty levels, timers, and leaderboards.

- **Learning Paths**: Curated tutorials (e.g., “Build a REST API in Flask”) with coding tasks, videos, and quizzes. Potential partnerships with freeCodeCamp.

- **Achievements & Rewards**: Badges for milestones (“100 Bugs Fixed,” “Top Reviewer”), unlockable themes, or premium features.

- **Hackathon Hub**: Host virtual coding competitions with real-time leaderboards, team formation, and prize pools.
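
The leaderboards mentioned for quizzes and hackathons need a ranking rule. One plausible rule, assumed here rather than specified by the outline, is to keep each user's best attempt and order by higher score, then by shorter time. `Attempt` and `leaderboard` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class Attempt:
    user: str
    score: int
    seconds: float  # time taken to finish the challenge


def leaderboard(attempts: Iterable[Attempt], top_n: int = 10) -> List[Attempt]:
    """Keep each user's best attempt, then rank by score and speed."""
    best: Dict[str, Attempt] = {}
    for attempt in attempts:
        current = best.get(attempt.user)
        if current is None or (attempt.score, -attempt.seconds) > (
            current.score,
            -current.seconds,
        ):
            best[attempt.user] = attempt
    ranked = sorted(best.values(), key=lambda a: (-a.score, a.seconds))
    return ranked[:top_n]


attempts = [
    Attempt("ada", 90, 120.0),
    Attempt("bob", 90, 95.5),
    Attempt("ada", 100, 200.0),
    Attempt("cy", 70, 30.0),
]
board = leaderboard(attempts)
print([a.user for a in board])
```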

### 2.6 Marketplace & Monetization

- **Code Store**: Marketplace for selling or sharing snippets, templates, or projects (e.g., React components, Python scripts). Includes ratings and previews.

- **Freelance Connect**: Hire or offer coding services (e.g., “Debug your app for $50”) with secure payment integration.

- **Premium Subscriptions**: Tiers for advanced debugging, private repos, or exclusive tutorials, following a freemium model.

- **Ad Space**: Non-intrusive ads for coding tools, courses, or conferences, targeting niche audiences.

### 2.7 Community & Networking

- **Forums & Groups**: Topic-based forums (e.g., “Web Dev,” “AI/ML”) for Q&A, showcases, or mentorship.

- **Events Calendar**: Promote coding meetups, webinars, or workshops with RSVP and virtual attendance options.

- **Profile System**: Rich profiles with skills, projects, badges, and GitHub/LinkedIn links. Follow/friend system for networking.

## 3. Enhanced Features for Scalability & Impact

- **Cross-Platform Sync**: Seamless experience across web, iOS, Android, and desktop apps. Offline mode with auto-sync.

- **Accessibility**: Screen reader support, keyboard navigation, and dyslexia-friendly fonts for inclusivity.

- **Enterprise Features**: Team management, SSO, analytics dashboards (e.g., productivity metrics), and compliance with SOC 2/GDPR.

- **Open Source Integration**: Import/export from GitHub, GitLab, or Bitbucket. Contribute to open-source projects directly.

- **AI Mentor**: AI chatbot guides beginners, suggests projects, or explains concepts beyond debugging.

- **Localization**: Multi-language UI and tutorials to reach global users, especially in non-English regions.

- **User Analytics**: Insights like “Most used languages,” “Debugging success rate,” or “Collaboration hours” for self-improvement.

## 4. Target Audience

- **Students & Beginners**: Learn coding through tutorials, quizzes, and peer support.

- **Professional Developers**: Collaborate, debug efficiently, and showcase portfolios.

- **Educators**: Create assignments, grade submissions, and host bootcamps.

- **Teams & Startups**: Manage projects, review code, and hire freelancers.

- **Hobbyists**: Share side projects, join hackathons, and engage with communities.

## 5. Unique Selling Points (USPs)

- **All-in-One**: Combines coding, debugging, collaboration, learning, and networking, reducing tool fatigue.

- **Gamified Experience**: Quizzes, badges, and leaderboards engage users of all levels.

- **Community-Driven**: Marketplace and forums foster learning, earning, and connection.

- **AI Edge**: Advanced AI for debugging and mentorship sets it apart from Replit or CodePen.

- **Scalability**: Tailored features for solo coders, teams, and classrooms.

## 6. Tech Stack

- **Frontend**: React.js (web), React Native (mobile) for responsive, cross-platform UI.

- **Backend**: Node.js with Express or Django; GraphQL for flexible APIs.

- **Database**: PostgreSQL (structured data); MongoDB (flexible code storage).

- **Real-Time Features**: WebSocket or Firebase for live editing, chat, and video.

- **Cloud Execution**: Docker + Kubernetes for sandboxed execution; AWS/GCP for hosting.

- **AI Integration**: xAI API (if available) or CodeLlama for debugging and suggestions.

- **Version Control**: Git-based system with Redis for caching diffs.

## 7. Monetization Strategy

- **Freemium Model**: Free access to basic editor, storage, and community; premium for advanced debugging, private repos, or marketplace access.

- **Marketplace Fees**: 10% commission on code or service sales.

- **Enterprise Plans**: Charge companies for team accounts with analytics and support.

- **Sponsorships**: Partner with bootcamps, tool providers (e.g., JetBrains), or cloud platforms for sponsored challenges or ads.

- **Certifications**: Paid certifications for completing learning paths, validated by industry partners.
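
The 10% marketplace commission can be worked through with the "Debug your app for $50" example from the Freelance Connect feature. The sketch below works in integer cents to avoid floating-point rounding. Rounding the fee down in the seller's favor is an assumption, and `split_sale` is a hypothetical name.

```python
from typing import Tuple


def split_sale(price_cents: int, commission_percent: int = 10) -> Tuple[int, int]:
    """Return (platform_fee, seller_payout) in cents."""
    if price_cents < 0 or not 0 <= commission_percent <= 100:
        raise ValueError("price and commission must be non-negative and sane")
    fee = price_cents * commission_percent // 100  # assumed: round fee down
    return fee, price_cents - fee


fee, payout = split_sale(5000)  # a $50.00 debugging job
print(fee, payout)
```

On a $50.00 sale the platform keeps $5.00 and the seller receives $45.00.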

## 8. Potential Challenges & Solutions

- **Competition** (Replit, GitHub, LeetCode):

**Solution**: Differentiate with collaboration, gamification, and marketplace. Prioritize user experience and community.

- **Code Security** (e.g., malicious code):

**Solution**: Sandboxed environments, automated malware scanning, and strict moderation for public content.

- **Server Costs** (real-time features, cloud execution):

**Solution**: Serverless architecture and tiered pricing to offset costs.

- **User Retention**:

**Solution**: Rewards, certifications, and community features. Unique offerings like portfolio export or freelance opportunities.
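
For the Code Security challenge above, one small layer of defense is to run submitted code in a separate process with a time limit. The sketch below shows only that layer. It is not a sandbox on its own; the outline's proposed Docker-based isolation would still be needed to restrict file and network access. `run_with_limit` is a hypothetical name.

```python
import subprocess
import sys
from typing import Dict


def run_with_limit(code: str, timeout_s: float = 2.0) -> Dict[str, str]:
    """Run Python code in a child process and stop it after timeout_s."""
    try:
        proc = subprocess.run(
            [sys.executable, "-I", "-c", code],  # -I: isolated mode
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising this exception.
        return {"status": "timeout", "stdout": "", "stderr": ""}
    status = "ok" if proc.returncode == 0 else "error"
    return {"status": status, "stdout": proc.stdout, "stderr": proc.stderr}


result = run_with_limit("print(1 + 1)")
print(result["status"], result["stdout"].strip())
```

A runaway loop such as `while True: pass` comes back with status `timeout` instead of tying up the server.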

## 9. Development Roadmap

### Phase 1: MVP (3-6 Months)

- Basic code editor with debugging and storage.

- Real-time collaboration (live editing, chat).

- Simple quiz feature and user profiles.

- Web platform launch with mobile responsiveness.

### Phase 2: Expansion (6-12 Months)

- Video calls, whiteboard, and code review tools.

- Marketplace and portfolio features.

- iOS/Android app launch.

- AI debugger and basic learning paths.

### Phase 3: Maturation (12-18 Months)

- Enterprise features and certifications.

- Expanded gamification (leaderboards, hackathons).

- Localization and accessibility support.

- Partnerships with coding schools or companies.

### Phase 4: Global Scale (18+ Months)

- Regional servers for low latency.

- Optional AR/VR coding environments.

- Integrations with IoT or blockchain (e.g., smart contract debugging).

## 10. Why CodeZap Matters

CodeZap addresses three problems: fragmented tools, uninspiring learning paths, and barriers to collaboration. By combining what developers now get separately from IDEs (VS Code), collaboration platforms (Slack), and learning sites (LeetCode), it offers a single, seamless experience. It lets users code, grow, connect, and monetize their skills in one ecosystem, making coding accessible and rewarding for all.
