# simulate the physical world in motion


Text

New Artificial Intelligence SORA and the End of the Film Industry 1.2

OpenAI's NEW VIDEO AI will end the Film Industry! New Artificial Intelligence SORA – Danilo Gato – Inteligência Artificial. Feb 15, 2024. SORA is a newly launched text-to-video AI model from OpenAI that promises to surpass heavyweight competitors such as Pika Labs and Runway GEN 2 for creating videos with intelligence…


#actors copywriters writers media producers# filmmakers and designers# collaborators of the future# hateful and prejudiced content# Creator of ChatGPT first model# DALL·E 3# Elon Musk on the future of jobs# experts in areas such as misinformation# fake news generative AI# feedback from artists# shot on 70 mm vivid colors# Giovanni Santa Rosa - tecnoblog.# history of the Universe Paris café (France)# 24-year-old woman# OpenAI's NEW VIDEO AI will end the Film Industry! New Artificial Intelligence SORA - Danilo Gato - Inteligência Artificial# the company's red teamers# simulate the physical world in motion# Software Tags Visual artists# Sora: OpenAI announces AI that turns text into video of up to 1 minute# subtle smile answer to the mystery of life# the goal of training models#ethics#bug#Cybernetics#education#JOBS#evolution#games#Giovanni Santa Rosa#golden hour in Marrakech Morocco

0 notes

Text

EA Blog Post on hair tech in DA:TV, under a cut due to length.

"Innovating Strand by Strand for Lifelike Hair in Dragon Age: The Veilguard Strand Hair technology adds visual fidelity and realism to characters, and it redefines what’s possible in Dragon Age: The Veilguard --- In the fantasy world of Thedas, where heroes rise and legends are forged, every detail breathes life into an epic saga. Dragon Age: The Veilguard introduces players to a crafted and beautiful world where even the finest elements like strands of hair tell a tale of their own. Each strand of hair weaves seamlessly into the fabric of the game, enriching your character’s journey through treacherous labyrinths and beyond. Together, the Frostbite and BioWare teams embarked on a quest to elevate Strand Hair technology, focusing on the following elements: - 50,000 individual strands per character for over 100 hairstyles – EA’s Strand Hair technology brings natural motion to your hero’s hairdo in Dragon Age: The Veilguard. - Adaptable to various character movements and environments – Frostbite and BioWare pushed the limits of hair rendering, achieving realistic material response and shadows. This collaboration introduced detailed, physics-driven hairstyles tailored to the unique world of Dragon Age. - Industry-leading realism – Dragon Age: The Veilguard sets a new standard for lifelike character hair at 60 FPS on PlayStation®5, Xbox Series X, and PC with compatible hardware, showcasing EA’s leadership in innovation and BioWare’s craftsmanship in enhancing immersive storytelling. This is how Frostbite and BioWare brought Strand Hair technology to the next level, letting you be the hero you want to be as Rook in Dragon Age: The Veilguard. Weaving Magic Into Reality Harnessing the power of the Frostbite engine, Strand Hair technology transforms your character's locks into a living tapestry of thousands of individual strands. Strand Hair technology combines physics with real-time rendering to simulate believable modeling of human hair."

"Incorporating realistic hair within games is quite challenging, which is why Frostbite has already spent years advancing hair rendering technology. Strand Hair was featured in previous EA SPORTS FC™, Madden NFL, and NHL titles, but the technology is always being upgraded for new releases. While Strand Hair is present in other EA games, the BioWare team had to push the limits even further for Dragon Age: The Veilguard. For example, implementing Strand Hair technology for characters who have waist-length hair with horns on their head presented some unique challenges. With hair attachments that move seamlessly, and the decoupling of simulation and render tessellation, this is the first EA game to offer such detailed physics-driven long hairstyles. The Frostbite team increased maximum hair length from 63 points to 255, and implemented a new system for complex hair structures like braids. Frostbite and BioWare also collaborated to achieve accurate hair material response and shadows across diverse lighting environments. Strand Hair technology in Dragon Age: The Veilguard features a new hair lighting model with improved light transmittance and visibility calculations. Dragon Age characters can have various builds and physical traits, each with unique hairstyles that adapt seamlessly to different garments and dynamic movements. Whether jumping at high speed in combat, slowing time, or going prone, the hair responds fluidly while maintaining realism across all scenarios. A Heroic Collaboration With Trials and Triumphs The evolution of Strand Hair technology has been a collaborative journey, beginning with Frostbite’s partnership with the EA SPORTS FC™ team that pushed the tech to a shippable state. Frostbite continues to refine and enhance this innovation, bringing its magic to titles like Battlefield 2042, UFC 5, College Football 25, and now, Dragon Age: The Veilguard. 
The Frostbite and BioWare teams worked closely together to get Strand Hair tech within Dragon Age: The Veilguard. The engineering team played a huge role in making sure hair looked good in new scenarios, like being surrounded by magical particles, underwater, or interacting with waterfalls. Their tireless work made these complex interactions both performant and robust. “The collaboration between Frostbite and the BioWare engineering team was key to supporting complex hairstyles. Advancing the technology for intricate styles and optimizing performance ensured that specific moments, like when hair covers a large percentage of the screen in certain cinematics, run smoothly.” – Maciej Kurowski, Studio Technical Director, BioWare Together, they tackled challenging lighting conditions and pushed the limits of strand length and tessellation, achieving hair designs far more complex than any previous EA title."

"Complex Hair Rendering and Enchanting Visual Magic A major difference between Dragon Age: The Veilguard and existing Frostbite titles that have shipped with Strand Hair is the sheer variety and quantity of visual effects and transparent objects. From magical spells to smoke, fire, and fog, the technology needed to blend seamlessly into the environment and magic of Thedas. Strand Hair is not rendered like traditional objects are within Frostbite. The technology utilizes a bespoke compute software rasterizer and is composited into the frame and blended with other opaque and transparent objects when resolved. Due to the complexity and uniqueness of the software rasterizer, the hair supported limited options for blending with the game world and characters. It was specifically designed to favor blending with depth of field, which is an important broadcast camera technique used in sports games. This did not blend well with transparent objects, which while few in sports titles, are extremely common in Dragon Age: The Veilguard. Thus, the BioWare team needed to develop a new technique for blending hair with transparent visual effects and environment effects like volumetric fog and other participating media. This technique involves splitting the hair into two distinct passes, first opaque, and then transparent. To split the hair up, we added an alpha cutoff to the render pass that composites the hair with the world and first renders the hair that is above the cutoff (>=1, opaque), and subsequently the hair that is lower than the cutoff (transparent). Before these split passes are rendered, we render the depth of the transparent part of the hair. Mostly this is just the ends of the hair strands. This texture will be used as a spatial barrier between transparent pixels that are “under” and “on top” of the strand hair."

"Transparent depth texture, note the edge of the hair."

"Once we have that texture, we first render the opaque part of strand hair, and then we render transparent objects. The shaders for the transparent objects use the transparent hair depth texture to determine whether the shading pixel is “under” or “on top” of the strand hair. If it’s below, it renders the hair and marks a stencil bit (think of it as a masking texture). If the pixel is “on top” or equal to the hair, it simply discards that pixel and renders nothing. After we’ve drawn the transparent objects once, we then draw the transparent hair since we are sure that there are no transparent objects ‘under’ the hair that have not been rendered yet. Finally we draw the transparent objects again, this time checking that stencil mask to see where we did not draw the transparent objects before, thus layering the pixels of transparent objects that are on top of the hair properly. This results in perfect pixel blending with transparent objects. – James Power, Senior Rendering Engineer, BioWare"

"Left, without “Layered” transparency. Right, with “Layered” transparency."

"Another challenge the BioWare team faced was handling the wide range of cinematic lighting rigs used for cutscenes, which must be rendered in real time in order to support customizable characters and followers. Because pre-rendering cutscenes was not possible, performance in cinematics was still paramount to the technical vision for the product. The team also wanted to maintain the same consistent frame rate across gameplay and cinematics to avoid jarring transitions if the cinematics were to be locked to 30 FPS. With that in mind when lighting scenes, there needed to be support for a wide range of lights that would be less computationally expensive to render, but would have extreme consequences on the quality of hair self-shadowing. This is a major contributor to the overall quality of the hair. Any given Strand Hair object, which has tens of thousands of individual thin hair strands, requires high quality shadow maps in order to have good coverage of the hair strands in the resulting shadowmap texture. Wide angle lights, distant lights, and non-shadowcasting lights do not provide adequate coverage (or no coverage at all, in the case of the non-shadowcasting lights). When the lighting routines are run, the hair would occupy a low amount of pixels in the shadowmap. When attempting to calculate light transmission inside the volume of hair, the fidelity would be poor, resulting in flat shading lacking detail near the edges of the hair where a fine gradient of light transmission is expected. To solve this, hero shadows are rendered for every Strand Hair object and every light that lighting artists designated as important to the shot. These hero shadows are generated at run time, using a tightly fitting light frustum that is adjusted to each hair’s bounding box, ensuring there are high fidelity shadowmaps. 
When applying shadows to the hair, we test to see if a shading point is in the hero shadow or the regular shadow (since the hair will not be in both) and composite the final results."
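To make the coverage argument concrete, here is a small sketch of fitting an orthographic light frustum to a hair bounding box and comparing how many shadowmap texels span the hair. The function names, the pre-supplied light axes, and all the numbers are assumptions for illustration only; the quoted post does not give these details.

```python
def dot(a, b):
    """Dot product of two 3-component vectors."""
    return sum(x * y for x, y in zip(a, b))


def aabb_corners(lo, hi):
    """All eight corners of an axis-aligned bounding box."""
    return [(x, y, z)
            for x in (lo[0], hi[0])
            for y in (lo[1], hi[1])
            for z in (lo[2], hi[2])]


def fit_ortho_frustum(lo, hi, right, up, forward):
    """Project the box onto the light's axes and return tight (min, max) bounds.

    The three axes are assumed to be an orthonormal basis for the light.
    """
    corners = aabb_corners(lo, hi)
    bounds = {}
    for axis_name, axis in (("right", right), ("up", up), ("forward", forward)):
        projected = [dot(corner, axis) for corner in corners]
        bounds[axis_name] = (min(projected), max(projected))
    return bounds


def texels_across(object_width, frustum_width, shadowmap_size):
    """How many shadowmap texels span the object along one axis."""
    return shadowmap_size * object_width / frustum_width


if __name__ == "__main__":
    # Hypothetical hair bounding box, lit from directly above.
    bounds = fit_ortho_frustum((-0.25, 1.5, -0.25), (0.25, 2.0, 0.25),
                               right=(1, 0, 0), up=(0, 0, 1), forward=(0, -1, 0))
    tight_width = bounds["right"][1] - bounds["right"][0]
    print(texels_across(0.5, 16.0, 1024))         # wide light covering 16 units
    print(texels_across(0.5, tight_width, 1024))  # frustum fitted to the hair
```

In this made-up case, a light covering 16 units puts only 32 texels across the hair, while the fitted frustum uses the full 1024. That difference is why a per-hair frustum can recover the fine transmission gradient near the edges.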

"Left, Bellara rendered without Hero Shadows. Right, with Hero Shadows. Note the differences in fidelity of transmission on the left side of the character head."

"Harnessing Efficiency With Performance and Memory Throughout development, Dragon Age: The Veilguard aimed for high performance and strict memory requirements across all platforms to ensure players have a smooth and scalable experience. Strand Hair is a memory and GPU dependent rendering system. Optimizations needed to be made in order to conform to the limited amount of system resources available for the following considerations: - Strand Hair assets, especially those with high strand counts and tessellation settings (which are necessary for the complex hair BioWare authored for both followers and Rook alike), have a high memory footprint. - The system is designed to allow for a large number of Strand Hair assets, but this comes at the cost of additional memory allocations to support the number of characters on field in other titles like EA SPORTS FC™. - For Dragon Age: The Veilguard, the team had a lot of control over which characters are on screen, and how many hair assets are supported. BioWare developed a system to control how large these allocations are to tightly fit the number of hair assets for the best possible memory utilization. On average, there is a flat GPU cost of around 128MB of GPU memory for the full field of followers (eight hair assets). Outside of this fixed memory cost, the system can dynamically adjust the size of system memory, GPU memory, and group shared memory in compute shaders using custom permutations with set thresholds. This provides the ability to scale additional memory costs from 300MB to 600MB depending on quality settings and resolution. Both Xbox Series X and PlayStation®5 sit at around 400MB depending on the number of characters and the assets loaded, as they each have their own memory costs. These costs are dynamically adjusted due to hair needing less memory to occupy less pixels. 
Lower resolutions (or lower dynamic hair resolution on lower quality settings) can get away with smaller buffer allocations for many of these per-frame costs without sacrificing any image quality. This work was especially important for PC due to the wide array of available graphics memory on GPUs available to consumers. This amount of memory being allocated per frame can push the GPU into demoting or paging memory, which can result in significant performance loss and hitches. For lower quality settings on PC, as well as Xbox Series S, swapping out Strand Hair assets for Card Hair assets is supported. These assets have significantly lower memory footprints and allowed the team to push for higher fidelity on systems that can handle the load without sacrificing performance on lower end systems. To achieve the performance requirements of Dragon Age: The Veilguard, BioWare implemented a number of scalable performance features that are applied across various quality settings on PC and performance modes on consoles. Strand Hair is normally rendered at render resolution and is unaffected by upsampling technology such as NVIDIA DLSS, AMD FSR, or Intel XeSS. Therefore it does not scale as well with other render features when those settings are applied. To ensure great performance across all configurations, BioWare implemented technology that scales the hair render resolution for a set of minimum and maximum targets based on said render resolution. Hair rasterization performance scales fairly aggressively with resolution and screen coverage. As hair covers more of the screen, a larger primitive count is required to render the strands at adequate detail. This requires both more memory and GPU resources."

"To ensure we meet our frame time requirements, we set a maximum frametime budget for strand hair rendering for consoles at 6.5ms for 30 FPS (33.3ms frame time) and 3ms for 60 FPS (16.6ms frame time) with eight strand hair assets on screen. Our hair resolution control will adjust the resolution within a minimum and maximum resolution based on our upsampler and DRS settings and keep the hair costs proportional to those targets. This is important since hair does not go through upsampling, as mentioned earlier, and will not have its load reduced by those technologies. Running hair simulation costs are also done on the GPU in compute, and change dramatically depending on the asset, but tend to hover around 2ms with some spikes to nearly 5ms depending on complexity of the hair and whether we are loading/teleporting new assets. This cost does not scale with resolution. We have a variety of systems for cinematics and gameplay that will disable simulation for hair assets off screen or far away and do not contribute to shadows that are on screen. Controlling simulation costs is largely done by cinematic designers ensuring their scenes do not go over budget. – James Power, Senior Rendering Engineer, BioWare

As mentioned earlier, BioWare’s Hero Shadows provide the hair with high fidelity shadow maps, but come at a heavy cost to GPU performance. Support for scalable hair decimation was added to combat this, allowing for the reduction of strand count when rendering shadows, thus reducing the cost of hero shadows. This enables lighters to use more of them, and support them for both 30 FPS and 60 FPS targets."
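The budget figures quoted above can be checked with a little arithmetic, and the resolution control can be pictured as a simple clamp. This is a hedged sketch: only the 6.5ms and 3ms budgets come from the post, while `hair_render_height`, its minimum and maximum targets, and the example resolutions are hypothetical.

```python
# Strand hair rendering budgets from the quoted post, keyed by target FPS.
HAIR_BUDGET_MS = {30: 6.5, 60: 3.0}


def budget_share(fps):
    """Fraction of the frame time that the hair rendering budget takes up."""
    frame_ms = 1000.0 / fps
    return HAIR_BUDGET_MS[fps] / frame_ms


def hair_render_height(render_height, min_height, max_height):
    """Clamp the hair render height between assumed min and max targets."""
    return max(min_height, min(render_height, max_height))


if __name__ == "__main__":
    for fps in (30, 60):
        print(fps, round(budget_share(fps), 3))
    # Hypothetical targets: hair never renders below 720 or above 1440 lines.
    for render_height in (540, 1080, 2160):
        print(render_height, hair_render_height(render_height, 720, 1440))
```

Under these figures the hair budget is roughly 19.5% of a 30 FPS frame and 18% of a 60 FPS frame, so the two targets reserve a similar share of the frame for hair.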

"Head and Shoulders Above the Rest Examples above describe only some of the improvements the BioWare and Frostbite teams worked on to redefine state of the art, real-time hair simulation and rendering technology for Dragon Age: The Veilguard. This groundbreaking accomplishment underscores EA's innovative spirit and highlights BioWare's exceptional craftsmanship. Whether you're uniting the Veilguard or facing the gods, the lifelike detail of your character's hair allows you to make this heroic story truly your own. As you journey through Thedas uniting companions and forging your legacy, remember that every detail down to the last strand of hair has been crafted to enhance your adventure. Join the ranks of innovators shaping the future of gaming realms. At EA, we forge alliances and craft powerful tools like Strand Hair. Explore open roles and embark on your adventure!"

[source]

#dragon age: the veilguard#dragon age: dreadwolf#dragon age 4#the dread wolf rises#da4#dragon age#bioware#video games#long post#longpost

41 notes


Text

youtube

This was a fascinating video on the evolution of American acting from older, theatrical 'representational' styles to the more modern, naturalistic 'presentational' style.

A large part of it is dedicated to breaking down the history of the extremely poorly defined term 'method acting', outlining the different theories of acting of Konstantin Stanislavski with his 'System' and the interpretations put on it by his American followers such as Lee Strasberg and Stella Adler, and how they differ from the modern pop-culture conception of 'method acting'. It's fascinating stuff - I did not realise just how much Stanislavski's ideas have affected the way we think about acting, literature etc. (dude invented the word 'subtext'!).

But in Thomas Flight's view, he's bringing these up in part to deflate them - he's ultimately arguing that, instead of a specific new method of acting being introduced, it's more that the goals of acting changed as the style of film in general changed. There are many routes to a naturalistic performance, but you have to want to do that in the first place, and older films wanted a more theatrical style.

Besides just being an interesting thing to learn about lol, the big question when I look at the theory of acting is how do you apply it to animation, since that's my field lol. 'Acting' is a big part of animation, but it differs in one big way, which is that it's much slower and more deliberate. I don't want to claim that there's no intuitive aspect to it, if anything you gotta learn all the technical stuff (timing, spacing, weight, overlapping action etc.) so well that you don't have to be constantly thinking consciously about technical stuff and can focus on letting the performance flow and feel natural... but still, a lot of the ideas expressed about imagining inhabiting the scene, getting into character etc. happen at a remove from putting lines down on the paper/screen. In animation just getting to the point of 'moving like a person', the absolute baseline of real life acting, is an effort in itself!

Still, knowing about the different styles and theories of acting is helpful. What is it about a character's expression that tells us that there is something that they're not saying, and tells us to infer some particular emotional context behind their words? A lot of it has to do with 'voice acting' stuff - cadence, intonation, hesitation. Then there's the way they move, stuff like how much space they take up, how energetic they are, how much their centre of gravity moves around. The gaze, where they're looking and how their eyes move around, is a huge part of it.

A film actor probably is doing a lot of this intuitively. I'm sure there is some conscious thought about it but the link between brain and muscles is so strong that you can be moving your eyes before you've had time to fully think through 'I should look over there to communicate what's on my mind'. With an animated character, every motion requires you to think about start and end poses, what the arcs are, follow-through, how many drawings to give it, etc. etc. You're simulating the 'physics' of the animated world and trying to convey the emotion of the character all at once. But you can still absolutely have an expressive, naturalistic performance in animation - and all these extra things you control (abstraction, simplification, etc.) give you more expressive tools as well which aren't available in live action.

I'm not nearly at the technical level of drawing and animation where I can really apply all these ideas yet, but it's good to know what's on the distant mountain...

46 notes


Text

Soft and Metal: Ramattra X Male Reader

Pronouns: None Mentioned, Reader referred to as ‘handsome’ Physical Sex: None Mentioned Rating: T/Fluff, mentions of violence Warnings: Ramattra doesn’t like humans but he loves you, omnic/human relationship, omnic kisses, injury, fluff, cuddling Summary: It’s a simple scratch to him, that doesn’t mean you’re going to ignore it.

It’s a deep scratch, one that he never expected to gain, but that large woman with the axe certainly surprised him. It… hurts. More than it should, as if the blade was made to truly injure omnics. And yet when your fingers run along the indention, he feels a kind of sparking in his gut. It comes with nearly every touch you give, so gentle and soft that he is glad he has the proper components to feel such sensation. You have been careful with him, as if he may break, and it makes him look on you fondly as you clean the so-called wound.

“There.” You mutter. “Dirt’s gone at least.”

He wishes he could smile, you would in this moment, but he settles for words. “Thank you, my dear.”

You sigh, placing a hand over the scratch on his chest, your face full of concern. “I don’t like you getting hurt.”

He puts his hand over yours, careful as always not to grip too tight. “It is a necessity.”

“I know.”

“You needn’t worry, I will always return to you.”

He relishes the smile on your face as you meet his eyes. “I know.”

Try as he may, he cannot find a flaw in you. Every inch he defines as perfection, for a human. So handsome, so strong, yet gentler than he ever expected. You are the mercy in the world he wishes to see in all of humanity, but you are only one. At the very least, he is glad you are present in his operations, he is glad you are his.

“It has been a long day.” He says, knowing you will take his meaning.

He will not tell you that he wishes to hold you, to cuddle, but you know his words too well to miss his hints. It is most nights that he wishes to do so, since he got over your possible discomfort at his less than comfortable shell. You’ve assured him he is plenty comfortable once you settle with some soft blankets, he has taken that in stride. So he will play on your need for sleep and offer rest in his security, rather than bring himself to some kind of oddly human request.

“I was hoping you’d say that.” You murmur, taking his hand with yours to pull him onto the bed. “I miss you when you’re not here.”

He settles behind you, arms enveloping you once you’ve arranged your blankets to provide a buffer for his stiff appendages. “And I you, my dear.”

You shuffle back into him, getting as close as you can and he returns to motion. Carefully, he presses his faceplate to your neck. He lets the vibrations come on strong, simulating a kiss as best he can.

#ramattra#ramattra x reader#ramattra x male reader#overwatch#overwatch 2#overwatch x reader#overwatch x male reader

294 notes


Text

Mankind is incapable of sorcery. This is not a value judgement or an act of prideful gatekeeping, but a statement of fact. On levels genetic, morphologic, and cognitive mankind is constrained in ways that prevent their inherent biological application of magic. They lack crucial genes shielding them from the potent radiation of extradimensional forces. Their joints are too restrictive, their number of limbs too few. The spectrum of their senses is far too narrow, and rare is the human mind that can comprehend the complex underlying theorems required to do more than poorly imitate the might of sorcerers of other species. Many a human fool has sought to grasp at magic and has been twisted by bone breaking, gut wrenching gravitic forces as their flesh burns and bleeds from within.

The motions of witches and spellcasters are not entirely an act of control, but also rather the expression of self distorting and intractable forces being exerted in exchange for miracles manifested by complexity of one’s mind. This lesson was slow in taking for humans, but it was one they overcame with typical determination.

First was the creation of the psycho-frame, a device that could divert the locus of magical catalysis to an artificial point, allowing humans to cast magic through more resilient and adaptable proxy constructs. Following several centuries later was mankind’s first artificial witch, an AI synthesis of machine learning and simulated replicas of human brains.

This latter creation revolutionized human control of magic, allowing its use on a significantly expanded and coordinated scale, as well as its study and analysis by their symbiotic machine organisms for the first time. It was not long after that humans began utilizing magic as their primary means of FTL, being less resource intensive than the temporally compressed accelerators they used prior. Now every interstellar ship had a meadow of proxy sorcerers on its bow, exerting the will of a God Machine at the vessel’s heart, tearing open reality to carry mankind to vistas far and grand.

There upon those distant horizons they found such beauty, but in far greater frequency horrors beyond count. Beings evolved from their first fusing of cells in parts of the galaxy saturated by dimensional bleed and convinced of their cultural and biological supremacy as masters of witch physics. Cities arranged upon worlds for the conduction of sacrificial spells by entire planetary populations. Planetary bodies were torn asunder from systems away, and the flow of time upon stars and their orbits was twisted to send human populated worlds towards disaster at the will of alien cult magi. Moon sized inter-system super predators blasted lightning and neuron burning insanity at any human ship that dared enter their conception of territory, hunting mankind’s vessels like fish in a vast black sea.

There was no counter to such things, not that could be mustered quickly or reliably. Ship designs were altered from the colossal Void Arks carrying entire cities of military, governmental, and civilian crew, to stripped down Witness Frigates run only by a Demigod class AI and a brave few humans to accompany them in mankind’s efforts to chart the stars. Only when a system was thoroughly explored and catalogued directly would any further Arks be sent. So into the black were sent thousands of unblinking mechanical eyes and beating hearts.

What do you think they found?

226 notes


Text

Submitted via Google Form:

At what point would a world with flying cars need simulation lessons like an airplane? Or does it depend on how sophisticated the software is? Or is the only reason airplanes need simulations that planes are so much more expensive and accidents more disastrous?

Tex: What do your world’s flying cars look like? Are they more Star Wars, where they look like a convertible sports car that conveniently doesn’t have wheels, or more like Stargate, where they look like a futuristic Winnebago and are capable of some slow interstellar travel?

Modern day vehicles already have a fair amount of electronics and also software applied to them, the most common and basic of which is the anti-lock braking system (ABS, Wikipedia), and depending on the country and driving school, they may in fact teach students about the particularities of various electronic systems found in the average vehicle.

Simulations for driving our current cadre of vehicles would be a good idea, though for a high degree of simulation on real-world mechanics and situations, a lot of money would need to be spent on equipment for each driving school. This would probably have unfortunate side effects, such as class-based segregation or drastically increasing the cost of educating oneself on how to drive a vehicle - much less how this would pan out to any vehicle capable of flying.

Wootzel: I’ll lead with a disclaimer that I’m not an aerospace engineer and I probably can’t represent all of the factors to you, but my impression of the difference is this: Our current flying vehicles (planes) need extensive training to operate because the physics involved in making them work are much more delicate.

Planes fly by hurtling a vehicle with a very specialized shape through the atmosphere fast enough that the specialized shape allows it to generate lift. Planes are heavy! Accidents are disastrous, as you mentioned, but the finesse required to keep that thing in the air is much more complicated than driving a car. When a car stops, it rests on its wheels, just as it does when in motion. If a plane stops in mid-air, it falls out of the sky. Planes rely on the rapid flow of air over their airfoils at all times to have lift, so if anything screws that up, the plane is most likely going down.
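The dependence on airflow can be made concrete with the standard lift equation, L = ½ · ρ · v² · S · C_L. The sketch below is only an illustration: the function name and the sample numbers are placeholders, not data for any real aircraft.

```python
def lift_newtons(air_density, airspeed, wing_area, lift_coefficient):
    """Standard lift equation: L = 0.5 * rho * v^2 * S * C_L."""
    return 0.5 * air_density * airspeed ** 2 * wing_area * lift_coefficient


if __name__ == "__main__":
    # Placeholder values: sea-level air density in kg/m^3, wing area in m^2.
    rho, area, cl = 1.225, 16.0, 1.0
    for speed in (0.0, 30.0, 60.0):  # airspeed in m/s
        print(speed, lift_newtons(rho, speed, area, cl))
```

Lift grows with the square of airspeed and drops to zero when the air stops moving over the wing, which is why a plane cannot simply stop in mid-air the way a car can stop on the road.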

Whether your flying cars are harder to drive than ground cars will most likely depend on what the technology is that makes them stay up. If they need velocity for lift like airplanes, they’re likely to need the same level of skill and finesse to fly (or some really, really sophisticated technology to auto-pilot safely). If they use some other form of lift, then maybe they’re no harder to drive than a wheeled car. If they are able and allowed to go a lot faster than cars can, there might be some extra safety risks to consider there, just because a fast-moving heavy object is going to have really dangerous inertia. What kind of force allows these cars to fly will depend on what sort of tech you decide to put in--since we don’t have anything even close to this in the real world, just pick your favorite between propulsion systems or anti-gravity tech or whatever else you feel like using. I’d imagine that a propulsion based lift would be a little more finicky than anti-grav (and might require drivers being careful not to pass too close above someone else) but you can honestly handwave whatever you want when it comes to that kind of thing. Most flying cars aren’t explained in any detail, so don’t feel the need to over-justify how they work!

Addy: They covered a lot, so I’m just going to add a recommendation to look at helicopter training requirements. They’re about as close to a flying car as we’ve gotten. Helicopters are generally more difficult to operate than planes, since they have more spinny bits, but they have more maneuverability. Planes can’t turn all that quickly, while helicopters can just stay in one place and turn there. Planes have to keep moving. If you’re looking for a flying car analogy, look at helicopters.

9 notes


Text

OFMD filmmaking analysis!

The camerawork, cinematography, and blocking during Blackbeard and Frenchie's "impossible bird" conversation at the end of 2x1.

There is so much to discover and deep dive with the scenes between Blackbeard and Frenchie.

Since the ship does not physically move this season, the production crew had to utilize other techniques to show the rocking of the ship on the ocean. From what I've observed, the primary one on the main deck is to move the rigging and sails like the wind is blowing through them. They also move the camera in an up-and-down or side-to-side motion to simulate the oceanic waves; that is the method they use in this scene, especially since they are on the front of the ship and no rigging can be seen.

This motion of the camera in this shot is not only used to simulate the waves, but also to show the mental state of the characters. This headspace (figuratively and literally) camera framing is used throughout the season and especially on Blackbeard in the first three episodes (as talked about in my other analysis of the "this ship is poison" scene in 2x1.)

The scene starts with an overhead establishing shot tilted to the side, making the audience already feel off-center. Frenchie crawls out of the hold - showing him lower physically than Blackbeard (I talk about this same framing and camerawork between these two in 2x2 here) - and stays in front of the door, leaving him room to escape. Blackbeard stands on the opposite side of the bow, with his hand on the railing.

They then cut to a two-person frame with Blackbeard large and in focus in the foreground and Frenchie small and out of focus in the background. This closeness to Blackbeard means the audience can see every micro expression, witness the dead eyes, and hear every shuddering intake of breath and shaky speech. It is intimate and the audience can sense all the pain and discomfort Blackbeard is emoting.

The camera moves up and down as Blackbeard makes the speech about a bird who never touches ground, very clearly showing that he is that bird who never wants to land. The floating camera cuts into his forehead while he speaks because just like how Blackbeard's head is breaking the frame barrier, his mental health is broken.

As Blackbeard speaks, a heavy fog starts to roll in from the sea, surrounding him. It alludes to the fogginess of his brain/emotions as well as the shrugged-on public persona of Blackbeard. He is wrapping himself in this bleak cover, but his Ed self is still hidden underneath; the fog isn't thick enough around the shroud of the Kraken persona. It is like the cover of the robe or blanket fort, but the fog/Kraken smokescreen doesn't offer comfort from the harshness of the world, instead offering only the pain and self-destruction of his inner self.

The other major shot in this scene is of Frenchie. He is center framed in a clean shot; Blackbeard doesn't dirty the shot because he isn't important here. The camera is focusing on Frenchie taking in this information, revealing his emotions and mental state, and ultimately serving as the conduit of the audience. The framing is farther away than with Blackbeard, still a medium shot, but it doesn't move to allow Frenchie's head to be cut off by the frame. The camera moves more side to side than up and down, still offering that discomfort of the situation, but it suggests a different mindset than Blackbeard's. Frenchie's mind isn't broken (his spirit, on the other hand...); he boxes up his emotions here, so he is just taking in Blackbeard's words, just like the audience is. Listening to the contradictions and trying to find out what is really being said.

The cinematography of this scene is also something worth looking at. We have the light of the ship behind Ed, something he never turns toward or seeks out. Instead, he is looking at the vast darkness in front of him; it enshrouds him and complements his mental state. The only time he looks toward the light is at the moon when he addresses Stede, symbolizing the light in the darkness.

During this entire conversation with Frenchie, Blackbeard does not turn around to talk to him. Instead, Blackbeard's broken words and grieving are spit out into the dark abyss of the ocean in front of them, the wind carrying them back to his new first mate. Ed chose to be vulnerable with Lucius (and some of the crew - though there is an argument that most of that was in a dissociative state). He cried and let all his heartbreak and despair out in front of Lucius, only to have to get "rid" of that vulnerability by pushing him off the ship. He doesn't turn around here because he won't let anyone else see that side of him again, won't let them leave/hurt him, won't let Frenchie see the state of his distress.

The end shot surrounds Ed in the dark blue of the sky, only the moon lighting the way as the camera pans to Stede looking up into the same night sky. The symbolism of the light in the darkness for both characters, even if their words "F you, Stede Bonnet" and "Good night, Edward Teach" evoke warring emotions.

Overall, all forms of production, from cinematography, camera framing, blocking, and especially the acting (give Taika awards for this alone - I have never seen him act like this in any other role) are used to show the mental state of the characters.

Note: I purposefully use the name Blackbeard here whenever Ed is in this mental state, though "Kraken" might be more accurate.

#ofmd#our flag means death#ofmd spoilers#ofmd s2#ofmd season 2#our flag means death s2 spoilers#ofmd edward teach#ofmd frenchie#kracken era blackbeard#blackbeard#ofmd season two meta analysis#ofmd meta#meta analysis#queer cinema at its peak angst#cinematography#this camerawork is so beautiful#camerawork#camerawork analysis#frenchie ofmd

37 notes

Text

I know that the average person’s opinion of AI is in a very tumultuous spot right now - partly due to misinformation and misrepresentation of how AI systems actually function, and partly because of the genuine risk of abuse that comes with powerful new technologies being thrust into the public sector before we’ve had a chance to understand the effects; and I’m not necessarily talking about generative AI and data-scraping, although I think that conversation is also important to have right now. Additionally, the blanket term of “AI” is really very insufficient and only vaguely serves to ballpark a topic which includes many diverse areas of research - many of these developments are quite beneficial for human life, such as potentially designing new antibodies or determining where cancer cells originated within a patient that presents complications. When you hear about artificial intelligence, don’t let your mind instantly gravitate towards a specific application or interpretation of the tech - you’ll miss the most important and impactful developments.

Notably, NVIDIA is holding a keynote presentation from March 18-21st to talk about their recent developments in the field of AI - a 16 minute video summarizing the “everything-so-far” detailed in that keynote can be found here - or in the full 2 hour format here. It’s very, very jargon-y, but includes information spanning a wide range of topics: healthcare, human-like robotics, “digital-twin” simulations that mirror real-world physics and allow robots to virtually train to interact and navigate particular environments — these simulated environments are built on a system called the Omniverse, and can also be displayed to Apple Vision Pro, allowing designers to interact and navigate the virtual environments as though standing within them. Notably, they’ve also created a digital sim of our entire planet for the purpose of advanced weather forecasting. It almost feels like the plot of a science-fiction novel, and seems like a great way to get more data pertinent to the effects of global warming.

It was only a few years ago that NVIDIA pivoted from being a “GPU company” to putting a focus on developing AI-forward features and technology. A few very short years; showing accelerating rates of progress. This is when we began seeing things like DLSS and ray-tracing/path-tracing make their way onto NVIDIA GPUs; which all use AI-driven features in some form or another. DLSS, or Deep Learning Super Sampling, uses AI to upscale frames rendered at a lower resolution and, in its newer versions, to generate extra frames between traditionally-rendered ones, boosting framerate, performance, visual detail, etc - basically, your system only has to actually render part of what you see and AI fills in the rest, freeing up resources in your system. Many game developers are making use of DLSS to essentially bypass optimization to an increasing degree; see Remnant II as a great example of this - runs beautifully on a range of machines with DLSS on, but it runs like shit on even the beefiest machines with DLSS off; though there are some wonky cloth physics, clipping issues, and objects or textures “ghosting” whenever you’re not in-motion; all seem to be a side effect of AI-generation as the effect is visible in other games which make use of DLSS or the AMD-equivalent, FSR.

Now, NVIDIA wants to redefine what the average data center consists of internally, showing how Blackwell GPUs can be combined into racks that process information at exascale speeds - which is very, very fucking fast - speeds like that have only ever actually been achieved on a handful of machines on the planet, and those are conventional supercomputers rather than quantum-based machines. The first exascale computer, called Frontier, came into existence in 2022; it was ranked the fastest supercomputer in existence in June 2023, operating at some 1.19 exaFLOPS. Notably, this computer is around 7,300 sq ft in size; reminding me of the space-race era supercomputers which were entire rooms. NVIDIA’s Blackwell DGX SuperPOD consists of around 576 GPUs and operates at 11.5 exaFLOPS (NVIDIA’s figure is for low-precision AI math, so it isn’t an apples-to-apples comparison with Frontier’s number), and is about the size of a standard row of server racks - much smaller than an entire room, but still quite large. NVIDIA is also working with AWS to produce Project Ceiba, another supercomputer consisting of some 20,000 GPUs, promising 400 exaFLOPS of AI-driven computation - it doesn’t exist yet.

To make my point, things are probably only going to get weirder from here. It may feel somewhat like living in the midst of the Industrial Revolution, only with fewer years in between each new step. Advances in generative-AI are only a very, very small part of that - and many people have already begun to bury their heads in the sand as a response to this emerging technology - citing the death of authenticity and skill among artists who choose to engage with new and emerging means of creation. Interestingly, the Industrial Revolution is what gave birth to modernism, and modern art, as well as photography, and many of the concerns around the quality of art in this coming age-of-AI and in the post-industrial 1800s largely consist of the same talking points - history is a fucking circle, etc - but historians largely agree that the outcome of the Industrial Revolution was remarkably positive for art and culture; even though it took 100 years and a world war for the changes to really become accepted among the artists of that era. The Industrial Revolution allowed art to become detached from the aristocratic class and indirectly made art accessible for people who weren’t filthy rich or affluent - new technologies and industrialization widened the horizons for new artistic movements and cultural exchanges to occur. It also allowed capitalist exploitation to ingratiate itself into the western model of society and paved the way for destructive levels of globalization, so: win some, lose some.

It isn’t a stretch to think that AI is going to touch upon nearly every existing industry and change it in some significant way, and the events that are happening right now are the basis of those sweeping changes, and it’s all clearly moving very fast - the next level of individual creative freedom is probably only a few years away. I tend to like the idea that it may soon be possible for an individual or small team to create compelling artistic works and experiences without being at the mercy of an idiot investor or a studio or a clump of illiterate shareholders who have no real interest in the development of compelling and engaging art outside of the perceived financial value that it has once it exists.

If you’re of voting age and not paying very much attention to the climate of technology, I really recommend you start keeping an eye on the news for how these advancements are altering existing industries and systems. It’s probably going to affect everyone, and we have the ability to remain uniquely informed about the world through our existing connection with technology; something the last Industrial Revolution did not have the benefit of. If anything, you should be worried about KOSA, a proposed bill you may have heard about which would limit what you can access on the internet under the guise of making the internet more “kid-friendly and safe”, but will more than likely be used to limit what information can be accessed to only pre-approved sources - limiting access to resources for LGBTQ+ and trans youth. It will be hard to stay reliably informed in a world where any system of authority or government gets to spoon-feed you their version of world events.

#I may have to rewrite/reword stuff later - rough line of thinking on display#or add more context idk#misc#long post#technology#AI

13 notes

Text

Legend of Zelda Theme Park - Gerudo Desert

At long last, I've found a way to add the desert to my theme park!

At the western end of the Dark World is the entrance to a sandstone canyon which meanders a bit and then debouches onto a lively bazaar. Buildings are constructed from off-white masonry contrasting with brilliantly colored fabrics and mosaics. There are numerous planters containing palm trees and cacti to help direct foot traffic as well as providing bench space and extra shade. Near the center of the plaza is a circular fountain featuring a statue of a woman wielding a sword, surrounded by more vegetation. The outside edge of the area is bounded with facades representing sand dunes, among which can be seen landmarks such as the OoT Desert Colossus and BoTW Gerudo Town. From time to time, an animated figure of a Molduga arches from one sand dune to another like a breaching whale.

Attractions

1. Nabooru’s Training Course: A combination maze and obstacle course. Periodically, the winding corridors offer an opportunity to hone your skills as an elite bandit…but if you don’t feel up to tackling the balance beam, overhead bars, crawl net, or other physical challenge, a normal path continues alongside the obstacle. This is just the beginners’ course: from time to time, you can peek through to a more advanced course where Gerudo make trapeze-caliber leaps over jets of fire, dodge flying darts, and disable booby traps (or fail to do so!). At the end of the maze is a playground featuring more things to climb and jump in, just in case you had any excess energy left.

2. Gerudo Warriors’ Procession: Taking place at scheduled intervals in the bazaar, a squad of the Gerudo tribe’s most impressive warriors parade through the street to a stage where they demonstrate some of their combat skills.

3. Skipper’s Time-Shifted Boat Tour: Inspired by the Lanayru Sand Sea from Skyward Sword, this dark ride uses projection mapping, augmented reality goggles, and a bit of motion simulation to create the illusion of riding in a boat through a desert that has been transformed into an ancient ocean, but only in a set radius around the craft thanks to the aura of the Timeshift Stone. Fantastic corals appear and disappear, long-decayed ships spring back into operation only to wither again as the boat leaves them behind, and the bleached bones of sea monsters turn into living threats until the robot guide manages to outrun them. The queue includes a few interactive “windows” where the push of a button activates a Timeshift Stone to alter a scene: a massive tree reverts to a tiny sapling in a meadow, a crumbled ruin becomes a bustling fortress in its heyday, etc.

4. Sand-Seal Rally Race: A high-speed ride along a figure-eight racing track, in a seal-drawn “sand sled.” Watch out when you get to the crossing point, and don’t crash with another sled!

Shops

5. Silken Palace: Scarves, shawls, capelets, veils, and other free-flowing clothing and accessories made from scrumptiously colored and patterned fabrics…even sarees! Knowledgeable staff can show how to properly put on your purchases if you need help.

6. Hotel Oasis Spa Shop: A health and beauty shop featuring LoZ-inspired makeup palettes, custom-blended perfumes and colognes, bath salts, soaps, scrubs, massage oils, and other products to pamper your skin after a long day in the desert.

7. Starlight Memories: A jewelry boutique specializing in big, showy styles of earrings, bracelets, necklaces, brooches, and even diadems. Many pieces are inspired by the traditional jewelry styles of the Middle East and South Asia.

8. Sand-Seal Adoption Center: The Sand-Seal Rally Race exits through this shop specializing in plush toys of sand seals and other desert creatures both real and fanciful, with an optional “adoption ceremony” for your special new friend.

9. Miscellaneous Merchandise Booths: Small spaces leased out to small business retailers whose wares are in line with the overall “vibe” of the Bazaar.

Eateries

10. Gerudo Bistro: A café with well-shaded patio seating, featuring a menu of Mediterranean and Middle Eastern entrees and appetizers.

11. Arbiter’s Grounds: An Arabian/Persian/Turkish style coffee and tea bar, harking back to the ancient relationship with coffee enjoyed by the cultures that inspired the Gerudo. Brews are available hot, iced, flavored, even blended with ice cream for a perky smoothie. Or head next door…

12. Kara Kara Creamery: Beat the heat with frozen treats! Ice cream, frozen yogurt, sherbet, milkshakes, and smoothies are all available here, made to order. If you’re with a group, try the Seven Heroines Sundae, with seven “orbs” of different flavors reflecting the virtues prized by the Gerudo: skill, spirit, endurance, knowledge, flight, motion, and gentleness.

12 notes

Text

Math in Motion: The Secret Math Behind Animation and Video Games

Mathematics is the unseen force driving the lifelike animation and immersive worlds in video games. While many may think of animation as the product of creative artistry, the underlying foundation is mathematics. Whether you're watching a character leap into action or witnessing an entire world come to life, mathematics is at the core of that motion.

1. Vectors: The Language of Movement

In animation and video games, vectors are the key to understanding how objects move. A vector is a mathematical object that has both magnitude (how far) and direction (where). For example, when an object moves in a game, its position at any given time is often represented as a vector.

The velocity of an object, whether it’s a bouncing ball or a running character, is a vector that describes both the speed and direction of movement. More complex animations, like a jump or a turn, involve multiple vectors that combine together using vector addition and subtraction.

Example: In a 2D game, if a character moves right by 5 units and then up by 3 units, the overall displacement is represented as the vector (5, 3). For more intricate movements, unit vectors come into play to specify direction while maintaining consistent motion.
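The displacement example above can be sketched in a few lines of Python. This is only an illustration: the helper names (`add`, `magnitude`, `normalize`) and the speed value are made up for this sketch and do not come from any particular game engine.

```python
import math

def add(a, b):
    """Component-wise sum of two 2D vectors."""
    return (a[0] + b[0], a[1] + b[1])

def magnitude(v):
    """Length of a 2D vector."""
    return math.hypot(v[0], v[1])

def normalize(v):
    """Unit vector pointing the same way as v."""
    m = magnitude(v)
    if m == 0:
        raise ValueError("cannot normalize the zero vector")
    return (v[0] / m, v[1] / m)

# Move right by 5 units, then up by 3 units.
right = (5.0, 0.0)
up = (0.0, 3.0)
displacement = add(right, up)  # (5.0, 3.0)

# A unit vector keeps the direction but drops the distance, so the
# character can move along it at any chosen speed (2.0 is arbitrary).
direction = normalize(displacement)
speed = 2.0
step = (direction[0] * speed, direction[1] * speed)
```

Separating direction (a unit vector) from speed (a plain number) is what keeps motion consistent no matter how far apart the start and end points are.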

2. Transformations: Rotating, Scaling, and Translating Objects

Once we understand movement with vectors, the next step is applying transformations. These mathematical operations are what make objects rotate, scale, and translate in the digital world.

Translation is the simple act of moving an object from one place to another (e.g., shifting a character from the left side of the screen to the right). This is done by adding the vector that represents the desired movement to the object's coordinates.

Rotation involves changing the orientation of an object around a specific point. Mathematically, this is accomplished by multiplying the object’s coordinates by a rotation matrix. For instance, rotating an object by 90 degrees in the counterclockwise direction is achieved through matrix multiplication.

Scaling changes the size of an object, and it is performed by multiplying the object’s coordinates by a scaling factor. This allows an object to grow or shrink based on the factor’s value.

In video games, these transformations happen continually as characters move, rotate, or resize on screen. For 3D games, transformations are performed in three-dimensional space, with objects having X, Y, and Z coordinates.
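As a rough 2D sketch of the three transformations, assuming points are plain `(x, y)` tuples (the function names here are illustrative only). Note that multiplying by a rotation matrix rotates around the origin, so rotating around another point means translating to the origin first and back afterwards.

```python
import math

def translate(p, offset):
    """Move point p by adding the offset vector."""
    return (p[0] + offset[0], p[1] + offset[1])

def rotate(p, angle_deg):
    """Rotate p counterclockwise about the origin using the 2x2 rotation matrix."""
    t = math.radians(angle_deg)
    c, s = math.cos(t), math.sin(t)
    x, y = p
    return (c * x - s * y, s * x + c * y)

def scale(p, factor):
    """Scale p uniformly about the origin."""
    return (p[0] * factor, p[1] * factor)

def rotate_about(p, pivot, angle_deg):
    """Rotate p about an arbitrary pivot: shift to origin, rotate, shift back."""
    shifted = translate(p, (-pivot[0], -pivot[1]))
    return translate(rotate(shifted, angle_deg), pivot)

moved = translate((1.0, 2.0), (5.0, 0.0))              # (6.0, 2.0)
turned = rotate((1.0, 0.0), 90)                        # approximately (0, 1)
doubled = scale((1.5, -2.0), 2.0)                      # (3.0, -4.0)
pivoted = rotate_about((2.0, 1.0), (1.0, 1.0), 90)     # approximately (1, 2)
```

Real engines usually fold all three operations into a single matrix so they can be combined with one multiplication, but the arithmetic is the same.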

3. Calculus: The Engine Behind Smooth Movement

The real magic behind fluid movement and realistic physics in video games comes from calculus. Specifically, the derivative and integral concepts are used to model continuous movement and smooth animation.

The derivative describes the rate of change of a quantity—this is particularly useful in video games for calculating velocity and acceleration. If you know an object’s position as a function of time (say, in a 2D plane), you can take its derivative to find its velocity at any given moment.

Example: If the position of a character is described by the equation x(t) = t^2, where t is time, taking the derivative gives you the velocity, v(t) = 2t, which tells you how fast the character is moving at any given time.
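A game rarely has a tidy formula to differentiate by hand, so velocity is often estimated numerically instead. The sketch below uses a central difference on the x(t) = t^2 example; the function names and the step size `h` are choices made for this illustration.

```python
def position(t):
    """Position from the example above: x(t) = t^2."""
    return t * t

def velocity(x, t, h=1e-5):
    """Estimate dx/dt at time t with a central difference."""
    return (x(t + h) - x(t - h)) / (2 * h)

v_at_3 = velocity(position, 3.0)  # close to the exact value 2 * 3 = 6
```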

Integrals come into play when determining the distance traveled or the total change in a system. For example, an object's net change in position (its displacement) over time can be found by integrating its velocity function; integrating its speed, the magnitude of the velocity, gives the total distance covered. This is crucial for games with physics engines that simulate real-world dynamics.
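To make the integration idea concrete, here is a small sketch that integrates the velocity v(t) = 2t from the earlier example using the trapezoidal rule. The function names and the number of slices are assumptions made for this illustration.

```python
def velocity(t):
    """Velocity from the earlier example: v(t) = 2t."""
    return 2.0 * t

def integrate(f, a, b, n=1000):
    """Approximate the integral of f from a to b with n trapezoids."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

# Change in position between t = 0 and t = 3; the exact answer is 3^2 = 9.
travelled = integrate(velocity, 0.0, 3.0)
```

Because the velocity never goes negative on this interval, the change in position and the distance covered are the same number here.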

4. Physics Engines: Simulating the Real World with Math

A game’s physics engine is responsible for simulating real-world physics, like gravity, collision detection, and object interaction. At its core, the physics engine is just applied differential equations and numerical methods.

Consider a simple physics model: an object in freefall under gravity. Its position at time t can be described by a second-order differential equation:

$$m \frac{d^2y}{dt^2} = -mg$$

where m is mass, g is gravitational acceleration, and y is the position of the object. This equation can be solved using numerical integration methods (like Euler’s method or Runge-Kutta), which break down the equation into discrete steps and simulate the object’s position over time.
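A minimal sketch of Euler's method for this freefall equation might look like the following. The mass cancels out of the equation, so only g appears; the function name, time step, and starting height are arbitrary choices for this illustration, not values from a real physics engine.

```python
def simulate_freefall(y0, v0, g=9.81, dt=0.001, duration=1.0):
    """Step y'' = -g forward in time with explicit Euler updates."""
    steps = round(duration / dt)
    y, v = y0, v0
    for _ in range(steps):
        y += v * dt      # advance position using the current velocity
        v += -g * dt     # advance velocity using the constant acceleration
    return y, v

# Drop an object from rest at a height of 100 units and simulate one second.
y_end, v_end = simulate_freefall(y0=100.0, v0=0.0)

# Closed-form answer for comparison: y = y0 + v0*t - 0.5*g*t^2
y_exact = 100.0 - 0.5 * 9.81 * 1.0 ** 2
```

Explicit Euler drifts a little from the exact answer, and the drift shrinks as `dt` gets smaller. That trade-off between step size and accuracy is the reason higher-order methods such as Runge-Kutta exist.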

Collision detection, another critical part of physics engines, relies heavily on geometry and linear algebra. For example, detecting if two circular objects collide in a 2D game involves checking if the distance between their centers is less than the sum of their radii.
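The distance check described above applies to circular shapes and can be sketched as follows. The function name is illustrative; comparing squared distances is a common way to avoid computing a square root.

```python
def circles_collide(center_a, radius_a, center_b, radius_b):
    """Return True if two circles overlap.

    Compares the squared distance between the centers with the squared
    sum of the radii, which gives the same answer without a square root.
    """
    dx = center_b[0] - center_a[0]
    dy = center_b[1] - center_a[1]
    reach = radius_a + radius_b
    return dx * dx + dy * dy < reach * reach

overlapping = circles_collide((0.0, 0.0), 1.0, (1.5, 0.0), 1.0)  # centers 1.5 apart, radii sum to 2
separate = circles_collide((0.0, 0.0), 1.0, (3.0, 0.0), 1.0)     # centers 3 apart, radii sum to 2
```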

5. Real-Time Rendering: How Math Shapes Visuals

The final piece of the puzzle is rendering. Rendering is the process of creating the image you see on your screen. In 3D graphics, this involves applying mathematical concepts like ray tracing, matrix transformations, and perspective projection.

Ray tracing simulates the way light interacts with surfaces in a virtual environment. It tracks rays of light as they travel through space, bounce off objects, and eventually reach the camera. By solving the equations of light reflection and refraction, games can create photorealistic graphics.
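One small piece of that light math is the mirror-reflection formula r = d - 2(d · n)n, where d is the incoming ray direction and n is the unit surface normal. The sketch below assumes 3D vectors stored as tuples and is only a fragment of what a real ray tracer does.

```python
def dot(a, b):
    """Dot product of two 3D vectors."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def reflect(d, n):
    """Reflect incoming direction d about unit normal n: r = d - 2(d.n)n."""
    k = 2.0 * dot(d, n)
    return (d[0] - k * n[0], d[1] - k * n[1], d[2] - k * n[2])

# A ray heading down and to the right hits a floor whose normal points up.
incoming = (1.0, -1.0, 0.0)
floor_normal = (0.0, 1.0, 0.0)
bounced = reflect(incoming, floor_normal)  # (1.0, 1.0, 0.0)
```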

Perspective projection helps create the illusion of depth on a flat screen. Using geometry, 3D coordinates are transformed into 2D coordinates that simulate how objects appear smaller the further away they are, mimicking the vanishing point effect seen in real life.
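A simple pinhole-camera version of perspective projection divides x and y by the depth z. The sketch below assumes the camera sits at the origin looking along the positive z axis; the function name and the `focal_length` parameter are illustrative choices.

```python
def project(point, focal_length=1.0):
    """Project a 3D point onto a 2D image plane at distance focal_length."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)

near = project((2.0, 1.0, 2.0))  # (1.0, 0.5)
far = project((2.0, 1.0, 4.0))   # (0.5, 0.25): same x and y, twice as far, half the size
```

The division by z is what makes distant objects shrink toward the vanishing point.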

#mathematics#math#mathematician#mathblr#mathposting#calculus#geometry#algebra#numbertheory#mathart#STEM#science#academia#Academic Life#math academia#math academics#math is beautiful#math graphs#math chaos#math elegance#education#technology#statistics#data analytics#math quotes#math is fun#math student#STEM student#math education#math community

5 notes

Text

Planning autonomous surface missions on ocean worlds

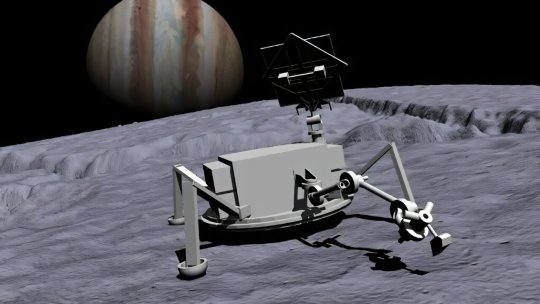

Through advanced autonomy testbed programs, NASA is setting the groundwork for one of its top priorities: the search for signs of life and potentially habitable bodies in our solar system and beyond. The prime destinations for such exploration are bodies containing liquid water, such as Jupiter's moon Europa and Saturn's moon Enceladus.

Initial missions to the surfaces of these "ocean worlds" will be robotic and require a high degree of onboard autonomy due to long Earth-communication lags and blackouts, harsh surface environments, and limited battery life.

Technologies that can enable spacecraft autonomy generally fall under the umbrella of Artificial Intelligence (AI) and have been evolving rapidly in recent years. Many such technologies, including machine learning, causal reasoning, and generative AI, are being advanced at non-NASA institutions.

NASA started a program in 2018 to take advantage of these advancements to enable future icy world missions. It sponsored the development of the physical Ocean Worlds Lander Autonomy Testbed (OWLAT) at NASA's Jet Propulsion Laboratory in Southern California and the virtual Ocean Worlds Autonomy Testbed for Exploration, Research, and Simulation (OceanWATERS) at NASA's Ames Research Center in Silicon Valley, California.

NASA solicited applications for its Autonomous Robotics Research for Ocean Worlds (ARROW) program in 2020, and for the Concepts for Ocean worlds Life Detection Technology (COLDTech) program in 2021.

Six research teams, based at universities and companies throughout the United States, were chosen to develop and demonstrate autonomy solutions on OWLAT and OceanWATERS. These two- to three-year projects are now complete and have addressed a wide variety of autonomy challenges faced by potential ocean world surface missions.

OWLAT

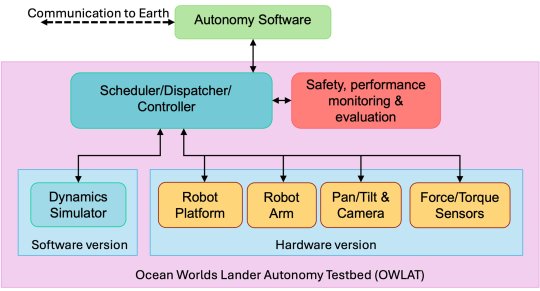

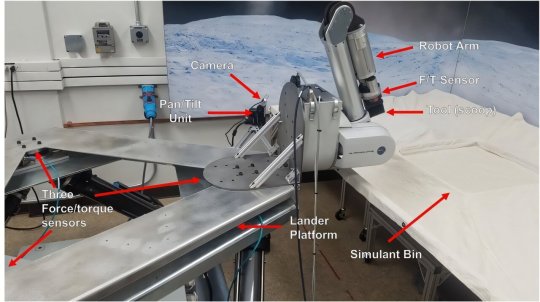

OWLAT is designed to simulate a spacecraft lander with a robotic arm for science operations on an ocean world body. Each of the OWLAT components is detailed below.

The hardware version of OWLAT is designed to physically simulate motions of a lander as operations are performed in a low-gravity environment using a six degrees-of-freedom (DOF) Stewart platform. A seven DOF robot arm is mounted on the lander to perform sampling and other science operations that interact with the environment. A camera mounted on a pan-and-tilt unit is used for perception.

The testbed also has a suite of onboard force/torque sensors to measure motion and reaction forces as the lander interacts with the environment. Control algorithms implemented on the testbed enable it to exhibit dynamics behavior as if it were a lightweight arm on a lander operating in different gravitational environments.

The team also developed a set of tools and instruments to enable the performance of science operations using the testbed. These various tools can be mounted to the end of the robot arm via a quick-connect-disconnect mechanism. The testbed workspace where sampling and other science operations are conducted incorporates an environment designed to represent the scene and surface simulant material potentially found on ocean worlds.

The software-only version of OWLAT models, visualizes, and provides telemetry from a high-fidelity dynamics simulator based on the Dynamics And Real-Time Simulation (DARTS) physics engine developed at JPL. It replicates the behavior of the physical testbed in response to commands and provides telemetry to the autonomy software.

The autonomy software module interacts with the testbed through a Robot Operating System (ROS)-based interface to issue commands and receive telemetry. This interface is defined to be identical to the OceanWATERS interface. Commands received from the autonomy module are processed through the dispatcher/scheduler/controller module and used to command either the physical hardware version of the testbed or the dynamics simulation (software version) of the testbed.

Sensor information from the operation of either the software-only or physical testbed is reported back to the autonomy module using a defined telemetry interface. A safety and performance monitoring and evaluation software module ensures that the testbed is kept within its operating bounds. Any commands causing out of bounds behavior and anomalies are reported as faults to the autonomy software module.

OceanWATERS

At the time of the OceanWATERS project's inception, Jupiter's moon Europa was planetary science's first choice in searching for life. Based on ROS, OceanWATERS is a software tool that provides a visual and physical simulation of a robotic lander on the surface of Europa.

OceanWATERS realistically simulates Europa's celestial sphere and sunlight, both direct and indirect. Because we don't yet have detailed information about the surface of Europa, users can select from terrain models with a variety of surface and material properties. One of these models is a digital replication of a portion of the Atacama Desert in Chile, an area considered a potential Earth-analog for some extraterrestrial surfaces.

JPL's Europa Lander Study of 2016, a guiding document for the development of OceanWATERS, describes a planetary lander whose purpose is collecting subsurface regolith/ice samples, analyzing them with onboard science instruments, and transmitting results of the analysis to Earth.

The simulated lander in OceanWATERS has an antenna mast that pans and tilts; attached to it are stereo cameras and spotlights. It has a 6 degree-of-freedom arm with two interchangeable end effectors—a grinder designed for digging trenches, and a scoop for collecting ground material. The lander is powered by a simulated non-rechargeable battery pack. Power consumption, the battery's state, and its remaining life are regularly predicted with the Generic Software Architecture for Prognostics (GSAP) tool.

To simulate degraded or broken subsystems, a variety of faults (e.g., a frozen arm joint or overheating battery) can be "injected" into the simulation by the user; some faults can also occur "naturally" as the simulation progresses, e.g., if components become over-stressed. All the operations and telemetry (data measurements) of the lander are accessible via an interface that external autonomy software modules can use to command the lander and understand its state. (OceanWATERS and OWLAT share a unified autonomy interface based on ROS.)

The OceanWATERS package includes one basic autonomy module, a facility for executing plans (autonomy specifications) written in the PLan EXecution Interchange Language, or PLEXIL. PLEXIL and GSAP are both open-source software packages developed at Ames and available on GitHub, as is OceanWATERS.

Mission operations that can be simulated by OceanWATERS include visually surveying the landing site, poking at the ground to determine its hardness, digging a trench, and scooping ground material that can be discarded or deposited in a sample collection bin. Communication with Earth, sample analysis, and other operations of a real lander mission, are not presently modeled in OceanWATERS except for their estimated power consumption.

Because of Earth's distance from the ocean worlds and the resulting communication lag, a planetary lander should be programmed with at least enough information to begin its mission. But there will be situation-specific challenges that will require onboard intelligence, such as deciding exactly where and how to collect samples, dealing with unexpected issues and hardware faults, and prioritizing operations based on remaining power.

Results

All six of the research teams used OceanWATERS to develop ocean world lander autonomy technology and three of those teams also used OWLAT. The products of these efforts were published in technical papers, and resulted in the development of software that may be used or adapted for actual ocean world lander missions in the future.

TOP IMAGE: Artist's concept image of a spacecraft lander with a robot arm on the surface of Europa. Credits: NASA/JPL – Caltech

CENTRE IMAGE: The software and hardware components of the Ocean Worlds Lander Autonomy Testbed and the relationships between them. Credit: NASA/JPL – Caltech

LOWER IMAGE: The Ocean Worlds Lander Autonomy Testbed. A scoop is mounted to the end of the testbed robot arm. Credit: NASA/JPL – Caltech

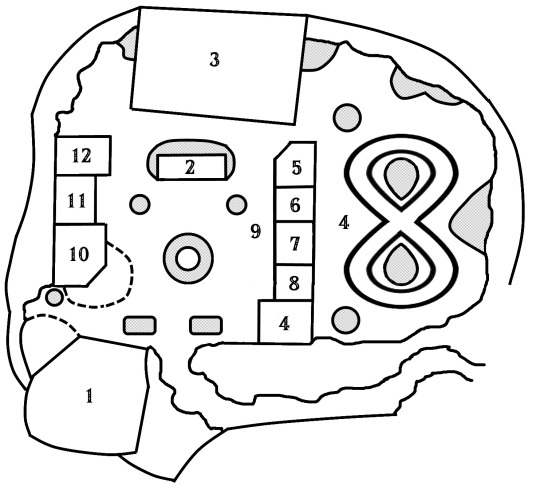

BOTTOM IMAGE: Screenshot of OceanWATERS. Credit: NASA/JPL – Caltech

2 notes


Text

🎉 Ready to unleash chaos and creativity? Meet the Ragdoll Playground in Physics! Fun! 💥

Dive into a world where YOU control the action! Launch, toss, or gently nudge your ragdoll creations. Whether you’re building epic crashes or watching them stand up and recover, the possibilities are endless.

💡 Key Features: 🔧 Fully customizable ragdolls 🧍 Animate them to stand up or fall in style 💥 Breakable or unbreakable—your choice! 🎮 Smooth physics for realistic motion

Get ready to experiment, laugh, and create your ultimate physics sandbox. What will your ragdolls do today? 😎

📲 Download Physics! Fun now and let the chaos begin! 🚀

#PhysicsFun #RagdollMadness #SandboxGaming #IndieGames #GamePhysics

2 notes


Note

The dawn on Reach was as artificial as the concept of a normal childhood for its Spartan-II candidates. Yet, under this simulated morning light, Fred-104 was proving himself to be as real and gritty as they come. At eight years old, he was a compact bundle of determination, tearing through the obstacle course with the focus of someone who had never known cartoons and cereal Saturdays.

His next challenge? The zipline. A wire stretched between two points, seemingly innocent, but today, it was the stage for an unexpected encounter. Fred grabbed the handle and pushed off, feeling the familiar thrill of the wind against his face, the controlled fear of hurtling through the air. It was going smoothly until it wasn't.

Enter Kelly-087. If speed had a form, it would look like her—another eight-year-old missile with a mane of blue-dyed hair that seemed to mock physics itself. She was fast, faster than anyone had a right to be, and her control of the course up to this point had been impeccable. That is, until she decided that Fred's zipline ride looked too lonely to pass up.

With a whoop that was all enthusiasm and zero caution, Kelly launched herself onto the zipline, colliding with Fred in a spectacular fashion that sent them both careening off course and into the welcoming arms of a mud pit below.

The world turned into a slow-motion ballet of flailing limbs and surprised shouts before they hit the mud with a splat that would've made any cartoon proud.

"I'm so sorry!" Fred exclaimed, momentarily forgetting his Spartan-II stoicism. He scrambled to his feet, mud sliding off him in gloopy rivulets, offering a hand to Kelly. Kelly, for her part, lay in the mud, laughter bubbling out of her like a natural spring. "What for!? That was awesome!" she managed to say between giggles, her grin wide and infectious.

Fred blinked, the situation's absurdity finally hitting him. "You're not mad? I thought... Well, I thought you'd be mad."

"Why would I be mad at a free mud bath? Best part of the day!" Kelly said, accepting his hand and pulling herself up with an ease that spoke of her agility. She was a mess, covered in mud from head to toe, but she seemed to wear it like a badge of honor.

Fred couldn't help the laugh that escaped him, a sound so rare it felt foreign. "We're definitely going to pay for this, you know. Mendez is going to have our heads."

Kelly shrugged, her spirits undampened. "So we'll run extra laps. Big deal. It'll be worth it to see the look on his face when we show up like two swamp monsters."

That image, Mendez's face trying to maintain its usual stern composure at the sight of them, had Fred chuckling. "Alright, you're on. But let's make it fun. First one back to the starting line gets the other's dessert for a week."

"You're on, slowpoke!" Kelly shot back, her competitive fire ignited. They shook hands, sealing the deal in mud and spirit.

Off they sprinted, leaving behind footprints that were more sludge than sole...

Yesssss

This was adorable. Just little dorks playing in mud, going full-gremlin-mode. I loved it!

9 notes


Text

incredibly stupid physics problems

(crossposting from cohost)

i posted this in the secret fox flux $4 discord channel last night and it reveals A Problem

i mean, a problem besides the barrel's layering being kind of dubious

the pistons stop trying to extend as soon as they're blocked. and here, you may notice, the one extending left pushes the slime a little bit before giving up. this turns out to be caused by a combination of:

the piston is "moving", but it's not using the normal motion machinery (obviously), so it doesn't actually have any velocity.

when object A tries to push object B, if it determines that object B is moving away from it faster than A is moving, it doesn't bother. i don't remember what specific problem this was meant to solve, but it does make intuitive sense — if i'm pushing a boulder down a hill then it's just going to roll away from me and i am going to accomplish nothing. if i let the push happen, i'd make B move even faster (however briefly) which is silly.

as long as the slime is walking towards the piston, everything is fine. but the slime immediately notices it's blocked on one side, turns around, and starts walking the other way. now the slime has a leftwards velocity, but the piston has zero velocity, so the piston thinks the slime is speeding away from it. but that isn't happening, and the slime is still there, so the piston thinks it's now blocked and stops extending.
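a minimal sketch of the check described above, to make the failure concrete. this is not the game's actual push code: `Body`, `vx`, and `pushee_is_escaping` are hypothetical names, and the speeds are made-up numbers. the only assumption is the rule as stated, i.e. the push is skipped when the pushee is moving along the push direction faster than the pusher.

```python
from dataclasses import dataclass


@dataclass
class Body:
    # hypothetical stand-in for a game actor; only horizontal velocity matters here
    name: str
    vx: float = 0.0  # positive = rightwards, negative = leftwards


def pushee_is_escaping(pusher: Body, pushee: Body, direction: int) -> bool:
    """Return True if the pushee moves along the push direction faster than the pusher.

    direction is -1 for a leftward push and +1 for a rightward push.
    """
    return pushee.vx * direction > pusher.vx * direction


piston = Body("piston", vx=0.0)        # extends left, but records no velocity
slime_toward = Body("slime", vx=1.0)   # walking right, into the piston
slime_away = Body("slime", vx=-1.0)    # turned around, now walking left

print(pushee_is_escaping(piston, slime_toward, -1))  # False: the push goes ahead
print(pushee_is_escaping(piston, slime_away, -1))    # True: the push is skipped
```

in the second case the piston's recorded velocity is zero, so any leftward speed on the slime counts as "faster", even though the piston is really the one closing the gap.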

love it. chef kiss. pushing objects is the hardest thing in the world. anyway i guess i can think of two potential solutions here

① delete the special case for a pushee moving away. checking velocity in the middle of the (hairy) push code is already kind of weird, and it's only done for this specific case. it also shouldn't matter; even if we push something slightly this frame, if it really is moving away from us, then it should be beyond our reach next frame anyway.

i suspect this is a leftover from an older and vastly more complicated iteration of the push code that tried to conserve momentum and whatnot — in which case, doing this even for a single frame would have made A and B look like a single unit, combined their momentum, and given both the same velocity, which means B slows down. so that's bad. but that was an endless nightmare of increasingly obscure interactions so i eventually gave up and scrapped it all. the current implementation won't let you simulate newton's cradle, i guess, but that's not the kind of game this is so whatever?

on the other hand, if i'm wrong and this is still important in some exceptionally obscure case, it would be bad to remove it! exciting.

(if you're curious: what i do now is give lexy — or other push-capable actors — a maximum push mass, and then when she tries to push something, i scale her movement down proportional to how much she's pushing. for example, rubber lexy's push capacity is 8, and a wooden crate weighs 4, so she moves at half speed when pushing one crate and at zero speed when pushing two. i think if a sliding crate hits another one now, they'll both just move at the first one's original speed, but friction skids everything to a halt fast enough that it mostly doesn't come up.)
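the push-capacity scaling in that aside can be sketched in a few lines. this assumes the scaling is linear, which is what the numbers given imply (capacity 8, one crate of mass 4 gives half speed, two crates give zero); `push_speed_scale` is a hypothetical name, not a function from the game.

```python
def push_speed_scale(push_capacity: float, pushed_mass: float) -> float:
    """Fraction of normal speed the pusher keeps while pushing pushed_mass.

    Assumes a linear falloff that reaches zero once the pushed mass
    equals the pusher's capacity.
    """
    if push_capacity <= 0:
        return 0.0  # an actor with no capacity cannot push at all
    return max(0.0, 1.0 - pushed_mass / push_capacity)


RUBBER_LEXY_CAPACITY = 8  # value from the post
WOODEN_CRATE_MASS = 4     # value from the post

print(push_speed_scale(RUBBER_LEXY_CAPACITY, 1 * WOODEN_CRATE_MASS))  # 0.5
print(push_speed_scale(RUBBER_LEXY_CAPACITY, 2 * WOODEN_CRATE_MASS))  # 0.0
```

clamping at zero means anything heavier than the capacity simply doesn't move, rather than pushing the actor backwards.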

② give the piston a fake velocity to fool the check. i might want to do this anyway for stuff like climbing, where the player is moving but is considered to have zero velocity because it's being done manually. but lying like this feels like it'll come back to bite me somewhere down the line.
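a rough sketch of what option ② might look like, under the same assumed rule (skip the push when the pushee moves along the push direction faster than the pusher). `push_allowed` and `EXTEND_SPEED` are hypothetical, and the speeds are invented for illustration.

```python
def push_allowed(pusher_vx: float, pushee_vx: float, direction: int) -> bool:
    """Allow the push unless the pushee is outrunning the pusher.

    direction is -1 for a leftward push and +1 for a rightward push.
    """
    return not (pushee_vx * direction > pusher_vx * direction)


EXTEND_SPEED = 2.0  # hypothetical: how fast the piston head visually extends
direction = -1      # the piston extends to the left
slime_vx = -1.0     # the slime has turned around and walks left

# with the real (zero) velocity, the check rejects the push
print(push_allowed(0.0, slime_vx, direction))                       # False

# with a fake velocity matching the extension, the push goes through
print(push_allowed(direction * EXTEND_SPEED, slime_vx, direction))  # True
```

note the fake velocity only helps while it is at least as large as the pushee's speed; a slime walking faster than the piston extends would still be treated as escaping, which in that case would actually be correct.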

anyway this is just the sort of thing i bumble into occasionally. hope it's interesting. i should probably go try to fix it now

23 notes


Text

The Matrix (1999)

If all movies belong somewhere on a spectrum between “Confrontational” and “Wish Fulfilment”, then The Matrix is so far on the side of power fantasies it circles back around to say something about the oppressive system that reality can be. Unmistakably 1999, extremely stylish and teeming with groundbreaking special effects, it's got dozens of quotable lines, moments, characters and action scenes. This is a movie everyone should see at least once.

Thomas Anderson (Keanu Reeves) is a computer programmer who moonlights as the hacker “Neo”. When he encounters the legendary Trinity (Carrie-Anne Moss) online, he becomes privy to a dark secret about our world. Joining a small band of rebels and told by their leader Morpheus (Laurence Fishburne) that he is the prophesied hero they’ve been waiting for, Neo might be the only one who can save humanity from the shackles of “The Matrix” and the hidden oppressors that have us all in an elaborate cage.

Today, The Matrix is the first part of a franchise that includes several sequels, an animated anthology film, video games, and more. You probably know the film’s big secret: although initially, the film looks like it’s taking place in an unnamed big city in 1999, that's a lie. This story is set at an unknown point in the future in which humanity has been enslaved. After losing the war between man and machine, everyone alive is imprisoned in a virtual world, unaware that everything around them is nothing more than signals fed into their brains. Meanwhile, their real bodies are fed intravenously and the electrical currents and heat our bodies generate power our mechanical oppressors. Woah. There’s no way you would’ve seen that coming in 1999. Even today, it’s a great premise that opens up a world of insane possibilities the film is eager to engage with when it isn't putting its focus on the action.

You see, Neo, Trinity, Morpheus, Cypher (Joe Pantoliano) and the others know the truth - they’ve chosen the red pill and have had their eyes opened. They know the real world isn’t real at all. This allows them to “cheat” at reality. The mechanical ports in their skulls (a great bit of skin-crawling cyberpunk horror) allow them to instantly upload knowledge into their minds. One moment you’re a regular pale-faced keyboard operator. The next, you’re a superhero with an unparalleled mastery of every martial art known to man. Is there a more fulfilling fantasy? Our heroes instantly stand out from a crowd thanks to their impossibly cool long leather coats and dark sunglasses. They effortlessly blow away their opponents with weapons they conjure out of thin air (one of the perks of living in a digital world is that you can hack it) and when the bullets run out, they pull off the kinds of punches and kicks only possible in a video game.

The Matrix excels at delivering entertaining sights and sounds, at showing the audience what it wants to see. Though unassuming, “Neo” is “the One”, a human prophesied to free humankind from the big machine that’s got us all living mundane lives. At one point or another (probably in our teenage years) we've all thought “I wish someone would tell me I'm special”. The Wachowskis have taken our deepest desires and made them physical. Neo and his brothers-in-arms look impossibly cool, they can do things no one else possibly could. They’ve woken up from the dream. Now, societal norms and rules they only followed out of obligation no longer apply. Even the laws of gravity start backing off. People dodge bullets. Their jumps, punches and kicks are shot in glorious slow motion, allowing us to see how impossibly well-choreographed and ferocious they are. If, by the end of the movie, the hero doesn’t get the girl, I’ll eat my hat.

Perfectly embodying the oppressive simulated reality prison these rebels are fighting against is Hugo Weaving as Agent Smith. He’s the kind of bad guy you can never forget. He's not a man. He's literally part of the system; a program within the Matrix that’s allowed to bend the rules of physics so he can squash any rebellion. It’s clear the actor is having the time of his life with this character. He’s cartoonish in a way but it works because the whole film is exaggerated. It wouldn't have been enough for him to have been nasty; he needed to be larger than life and smug too.

It doesn’t take long for The Matrix to get going and once the energy starts to crackle, you get non-stop bolts of lightning directly into your eyes. Is the movie deep, or is it just a steady flow of would-be religious and philosophical themes? It doesn’t matter. The expertly choreographed action scenes and dazzling special effects keep things moving. In other circumstances, the characters (who range from well-developed to mere archetypes) would feel like tools used to segue us from one scene to the next, but something about the entire package makes you believe this is everything the Wachowskis have always wanted to show, fully realized. It’s a dream come true, and you can’t wait to see what’s next. (On Blu-ray, January 1, 2022)

#The Matrix#movies#films#movie reviews#film reviews#The Wachowskis#Keanu Reeves#Laurence Fishburne#Carrie-Anne Moss#Hugo Weaving#Joe Pantoliano#1999 movies#1999 films

6 notes


Text

the underworld is great because while it superficially seems like a generic medieval stasis fantasy setting but with system commands for a magic system, it just... well it's not stasis. or well it *was* and then the one enforcing it got dethroned

and then there's a subjective 200 year timeskip and they're in the middle of a kind of industrial revolution but the basis of the industry is just, abusing the physics engine of the simulation in controlled ways to essentially have the equivalents of perpetual motion machines

like they're actually *fully* taking advantage of the way their world works in a way that feels really believable. because to them the "physics engine of the simulation" is just... physics

and they use it to their advantage to completely ignore thermodynamics and the rocket equation

it's great!

2 notes
