#serverless deployment

Serverless Architecture & Deployment Design Pattern for Microservices wi... Full video link: https://youtu.be/b9Gpt4OOlgo Hello friends, a new #video on the #serverless #deployment #designpattern for #microservices, a #tutorial for #developer #programmers with #examples, is published on the #codeonedigest #youtube channel. @java #java #aws #awscloud @awscloud @AWSCloudIndia #salesforce #Cloud #CloudComputing @YouTube #youtube #azure #msazure #codeonedigest @codeonedigest #microservices #microservices #cloud #serverless #whataremicroservices #microservicestutorial #microservicesarchitecture #serverlessmicroservices #serverlessframeworksetup #serverlessdeployment #serverlessdeploymentaws #serverlessdeploymentbucket #serverlessdeploymentazure #serverlessdeploymentplatform #serverlessdeployfunction #serverlessdesignpattern #serverlessarchitecture #serverlesscomputing #serverlessmicroservicesexplained #serverlessmicroservicesexample #design

#youtube#serverless#serverless deployment#aws lambda#aws fargate#azure function#google cloud function#serverless infrastructure

Building Your Serverless Sandbox: A Detailed Guide to Multi-Environment Deployments (or How I Learned to Stop Worrying and Love the Cloud)

Introduction: Welcome, intrepid serverless adventurers! In the wild world of cloud computing, creating a robust, multi-environment deployment pipeline is crucial for maintaining code quality and ensuring smooth transitions from development to production. Here are part 1 and part 2 of this series. Feel free to read them before continuing on. This guide will walk you through the process of setting…

#automation#aws#AWS S3#CI/CD#Cloud Architecture#cloud computing#cloud security#continuous deployment#DevOps#GitLab#GitLab CI#IAM#Infrastructure as Code#multi-environment deployment#OIDC#pipeline optimization#sandbox#serverless#software development#Terraform

Mastering Azure Container Apps: From Configuration to Deployment

Thank you for following our Azure Container Apps series! We hope you're gaining valuable insights to scale and secure your applications. Stay tuned for more tips, and feel free to share your thoughts or questions. Together, let's unlock Azure's power.

#API deployment#application scaling#Azure Container Apps#Azure Container Registry#Azure networking#Azure security#background processing#Cloud Computing#containerized applications#event-driven processing#ingress management#KEDA scalers#Managed Identities#microservices#serverless platform

#serverlesscomputing#devops#cloudcomputing#coding#developer#business#cloud#aws#it#programming#azure#technology#googlecloud#multicloud#serverless#softwaredevelopment#microservices#kubernetes#deployment#startup#techcommunity#progammer#development#itsolutions#techcompany#cloudsolutions#engineering#technews#cloudengineer#server

Building a Serverless React Application with AWS Amplify

#ahsan mahmood#Amplify#aoneahsan#AWS#AWS Cognito#AWS Lambda#Deployment#React#React application#Serverless#Serverless architecture#Tutorial#zaions

Note

hi hi! love your blog! I am also working on building sites for my portfolio but am a little stumped on how/where to deploy them. would you mind sharing what you are using for deployment? thanks!

Places to Deploy Your Website

Hiya! I know a few places I've tried in the past and some I am yet to try but I know other developers use them!

GitHub Pages

GitHub Pages is a free static site hosting service that allows you to publish your website directly from a GitHub repository. It supports HTML, CSS, and JavaScript, as well as Jekyll, a static site generator. I've used GitHub Pages a lot since I keep all my repositories on GitHub.

Replit

Replit is a cloud-based development environment that provides an integrated IDE, code editor, and hosting platform all in one place. With Replit, you can easily create and deploy web apps, games, and other projects in multiple programming languages such as Python, HTML, CSS, and JavaScript. I also use Replit a lot for my much smaller projects that I can’t host on GitHub, so I can still run them online!

Netlify

Netlify offers a free plan for static site hosting that includes features such as continuous deployment, custom domains, and SSL encryption. It supports HTML, CSS, and JavaScript, as well as serverless functions and other backend technologies.

Heroku

Heroku supports many languages and frameworks, including Ruby, Node.js, Python, Java, PHP, and Go. It used to offer a free plan for hobbyist developers, but that plan was discontinued in November 2022, so hosting even a small application on Heroku now requires a paid plan.

Firebase Hosting

Firebase Hosting is a free service that allows you to host and deploy your web app or static content to a global content delivery network (CDN) with SSL encryption. It supports HTML, CSS, JavaScript, and other static assets. It allows free hosting for small applications.

Surge

Surge is a free static site hosting service that allows you to publish your website with a custom domain or a Surge subdomain. It supports HTML, CSS, JavaScript, and other static assets, and it allows free hosting with unlimited bandwidth.

Each of these free deployment options has its own cons, such as:

Lack of server-side functionality

Limited database support

The cost of advanced features

Limited control over the infrastructure

May not be suitable for more complex websites or applications

However, for small projects, I think you’ll be fine with the free options!

Hope this helps, and good luck with your website deployments! 🥰🙌🏾💗

#my asks#codeblr#coding#progblr#programming#studying#studyblr#developer#developers#comp sci#computer science#cs student#cs studyblr#resources#coding resources#deployment#deploy websites#portfolio#deployment websites#tech#web dev

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.
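As a rough illustration of the event-driven pattern described above, here is a minimal sketch in plain Python. It deliberately does not use the Azure Functions or Event Hubs SDKs. The `process_batch` handler, the event fields (`device_id`, `temperature`), and the alert threshold are all hypothetical names chosen for illustration, not details from the project.

```python
import json
from collections import defaultdict
from statistics import mean

# Hypothetical threshold; a real system would load this from configuration.
ALERT_THRESHOLD_C = 80.0


def process_batch(raw_events):
    """Summarize one batch of JSON-encoded device readings.

    Stands in for the body of a function that an event trigger would
    invoke once per batch. Malformed events are counted and skipped.
    """
    readings = defaultdict(list)
    skipped = 0
    for raw in raw_events:
        try:
            event = json.loads(raw)
            device = event["device_id"]
            temperature = float(event["temperature"])
        except (ValueError, KeyError, TypeError):
            skipped += 1
            continue
        readings[device].append(temperature)

    # One summary record per device that sent at least one valid reading.
    summary = {
        device: {
            "count": len(temps),
            "avg_temperature": mean(temps),
            "alert": max(temps) > ALERT_THRESHOLD_C,
        }
        for device, temps in readings.items()
    }
    return summary, skipped
```

Because the handler keeps no state between batches, many copies of it can run in parallel, which is the property that lets a serverless platform scale it with the workload.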

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.
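To make the scaling point concrete, the sketch below shows the core replica calculation used by the Kubernetes Horizontal Pod Autoscaler, which AKS supports. The post does not say which scaling mechanism the project used, so treat this as an assumption. The function name and the default bounds are illustrative, and the sketch leaves out the autoscaler's tolerance and stabilization behavior.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Return the replica count suggested by the basic HPA rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to the [min_replicas, max_replicas] range.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas averaging 90% CPU against a 60% target would be scaled to six, while a service running well under its target would be scaled down toward the minimum.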

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensures data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

Aible And Google Cloud: Gen AI Models Sets Business Security

Enterprise controls and generative AI for business users in real time.

Aible

With solutions for customer acquisition, churn avoidance, demand prediction, preventive maintenance, and more, Aible is a pioneer in producing business impact from AI in less than 30 days. Teams can use AI to extract company value from raw enterprise data. Previously using BigQuery’s serverless architecture to save analytics costs, Aible is now working with Google Cloud to provide users the confidence and security to create, train, and implement generative AI models on their own data.

The following important factors have surfaced as market awareness of generative AI’s potential grows:

Enabling enterprise-grade control

Businesses want to utilize their corporate data to allow new AI experiences, but they also want to make sure they have control over their data to prevent unintentional usage of it to train AI models.

Reducing and preventing hallucinations

The possibility that models may produce illogical or non-factual information is another particular danger associated with generative AI.

Empowering business users

Although gen AI supports many enterprise use cases, one of the most valuable is enabling business users to work with gen AI models with as little friction as possible.

Scaling use cases for gen AI

Businesses need a method for gathering and implementing their most promising use cases at scale, as well as for establishing standardized best practices and controls.

Most enterprises have a low risk tolerance when it comes to data privacy, policy, and regulatory compliance. However, given gen AI's potential to drive change, they do not see postponing its deployment as a feasible response to market and competitive pressure. As a consequence, Aible sought an AI strategy that would protect client data while enabling a broad range of corporate users to adapt swiftly to a fast-changing environment.

In order to provide clients complete control over how their data is used and accessed while creating, training, or optimizing AI models, Aible chose to utilize Vertex AI, Google Cloud’s AI platform.

Enabling enterprise-grade controls

Because of Google Cloud’s design methodology, users don’t need to take any more steps to ensure that their data is safe from day one. Google Cloud tenant projects immediately benefit from security and privacy thanks to Google AI products and services. For example, protected customer data in Cloud Storage may be accessed and used by Vertex AI Agent Builder, Enterprise Search, and Conversation AI. Customer-managed encryption keys (CMEK) can be used to further safeguard this data.

With Aible's Infrastructure as Code methodology, you can quickly incorporate all of Google Cloud’s advantages into your own applications. Whether you choose open models like Llama or Gemma, third-party models from Anthropic and Cohere, or Google gen AI models like Gemini, the whole experience is fully protected in the Vertex AI Model Garden.

Aible also collaborated with its client advisory council, which consists of Fortune 100 organizations, to create a system that can call third-party gen AI models without disclosing private data outside of Google Cloud. Instead of raw data, Aible sends an external model only high-level statistics on clusters, which can be masked if necessary. For instance, rather than transmitting raw sales data, it may send counts and averages by product or region.

This makes use of k-anonymity, a privacy approach that protects data privacy by never disclosing information about groups of people smaller than k. You may alter the default value of k; the higher the k value, the more private the information that is transmitted. When masking is used, Aible makes the data transmission even more secure by changing the names of variables like “Country” to “Variable A” and values like “Italy” to “Value X”.
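The idea of sending only group-level statistics, suppressing small groups, and optionally masking names can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Aible's implementation: the function name, the numbered `Value N` labels, and the default `k=5` are hypothetical, and a full k-anonymity treatment would also consider combinations of identifying attributes.

```python
from collections import defaultdict


def aggregate_with_k_anonymity(rows, group_key, value_key, k=5, mask=False):
    """Return per-group counts and averages, dropping groups smaller than k.

    When mask is True, the grouping variable and its values are replaced
    with generic labels so the real names never leave the system.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_key]].append(row[value_key])

    # Suppress any group with fewer than k records.
    kept = {group: values for group, values in groups.items()
            if len(values) >= k}

    result = []
    for index, group in enumerate(sorted(kept)):
        values = kept[group]
        result.append({
            ("Variable A" if mask else group_key):
                (f"Value {index + 1}" if mask else group),
            "count": len(values),
            "average": sum(values) / len(values),
        })
    return result
```

Raising `k` suppresses more of the small groups, which is why a higher value gives stronger privacy at the cost of less detail in what is shared.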

Mitigating hallucination risk

When employing gen AI, it’s crucial to use grounding, retrieval augmented generation (RAG), and other strategies to reduce the likelihood of hallucinations. Aible, a Built with Google Cloud AI partner, offers automated analysis to support human-in-the-loop review procedures, giving human specialists tools that can outperform purely manual review.

One of the main ways Aible helps reduce hallucinations is its auto-generated Information Model (IM), an explainable AI that verifies facts against the context contained in your structured corporate data at scale and double-checks gen AI replies to avoid incorrect conclusions.

Hallucinations are addressed in two ways by Aible’s Information Model:

It has been shown that the IM helps lessen hallucinations by grounding gen AI models on a relevant subset of data.

To verify each fact, Aible parses through the outputs of Gen AI and compares them to millions of responses that the Information Model already knows.

This is comparable to Google Cloud’s Vertex AI grounding features, which let you link models to dependable information sources, such as your company’s documents or the Internet, so that replies are grounded in specific data sources. A fact that has been automatically verified is shown in blue with the words “If it’s blue, it’s true.” Additionally, you may examine a matching chart created only by the Information Model and verify a certain pattern or variable.

The graphic below illustrates how Aible and Google Cloud collaborate to provide an end-to-end serverless environment that prioritizes artificial intelligence. Aible can analyze datasets of any size since it leverages BigQuery to efficiently analyze and conduct serverless queries across millions of variable combinations. One Fortune 500 client of Aible and Google Cloud, for instance, was able to automatically analyze over 75 datasets, which included 150 million questions and answers with 100 million rows of data. That assessment only cost $80 in total.

By using Vertex AI, Aible can also access Model Garden, which contains Gemini and other top open-source and third-party models. This means that Aible can use AI models that are not built by Google while still enjoying the advantages of extra security measures like masking and k-anonymity.

All of your feedback, reinforcement learning, and Low-Rank Adaptation (LoRA) data are safely stored in your Google Cloud project and are never accessed by Aible.

Read more on Govindhtech.com

#Aible#GenAI#GenAIModels#BusinessSecurity#AI#BigQuery#AImodels#VertexAI#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

How can you optimize the performance of machine learning models in the cloud?

Optimizing machine learning models in the cloud involves several strategies to enhance performance and efficiency. Here’s a detailed approach:

Choose the Right Cloud Services:

Managed ML Services:

Use managed services like AWS SageMaker, Google AI Platform, or Azure Machine Learning, which offer built-in tools for training, tuning, and deploying models.

Auto-scaling:

Enable auto-scaling features to adjust resources based on demand, which helps manage costs and performance.

Optimize Data Handling:

Data Storage:

Use scalable cloud storage solutions like Amazon S3, Google Cloud Storage, or Azure Blob Storage for storing large datasets efficiently.

Data Pipeline:

Implement efficient data pipelines with tools like Apache Kafka or AWS Glue to manage and process large volumes of data.

Select Appropriate Computational Resources:

Instance Types:

Choose the right instance types based on your model’s requirements. For example, use GPU or TPU instances for deep learning tasks to accelerate training.

Spot Instances:

Utilize spot instances or preemptible VMs to reduce costs for non-time-sensitive tasks.

Optimize Model Training:

Hyperparameter Tuning:

Use cloud-based hyperparameter tuning services to automate the search for optimal model parameters. Services like Google Cloud AI Platform’s HyperTune or AWS SageMaker’s Automatic Model Tuning can help.
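To show what such a tuning service automates, here is a minimal local sketch of random search, one of the simplest tuning strategies. Managed services typically use more sophisticated search methods and run trials in parallel. The `random_search` helper, the `toy_validation_loss` objective, and the parameter names are hypothetical stand-ins for a real train-and-evaluate job.

```python
import random


def random_search(objective, search_space, n_trials=50, seed=0):
    """Sample parameters uniformly and keep the set with the lowest score.

    search_space maps each parameter name to a (low, high) range.
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high)
                  for name, (low, high) in search_space.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_loss(params):
    """Hypothetical stand-in for training a model and measuring its loss."""
    return ((params["learning_rate"] - 0.1) ** 2
            + (params["dropout"] - 0.3) ** 2)
```

Calling `random_search(toy_validation_loss, {"learning_rate": (0.0, 1.0), "dropout": (0.0, 0.9)})` returns the best sampled parameters and their loss. Each trial is independent, which is what makes this kind of search easy to spread across cloud instances.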

Distributed Training:

Distribute model training across multiple instances or nodes to speed up the process. Frameworks like TensorFlow and PyTorch support distributed training and can take advantage of cloud resources.

Monitoring and Logging:

Monitoring Tools:

Implement monitoring tools to track performance metrics and resource usage. AWS CloudWatch, Google Cloud Monitoring, and Azure Monitor offer real-time insights.

Logging:

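Maintain detailed logs for debugging and performance analysis, using tools like Amazon CloudWatch Logs or Google Cloud Logging. (AWS CloudTrail, by contrast, records API activity for auditing rather than application logs.)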

Model Deployment:

Serverless Deployment:

Use serverless options to simplify scaling and reduce infrastructure management. Services like AWS Lambda or Google Cloud Functions can handle inference tasks without managing servers.
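As a sketch of what a serverless inference function can look like, the example below follows the common Python handler convention for AWS Lambda behind an HTTP API, where the request arrives as a JSON string in `event["body"]`. The tiny linear model, its `WEIGHTS` and `BIAS` values, and the `features` field are hypothetical; a real deployment would load a trained model artifact instead.

```python
import json

# Hypothetical weights for a tiny linear model. Loading the model at module
# level, outside the handler, lets warm invocations reuse it.
WEIGHTS = [0.5, -0.25, 2.0]
BIAS = 1.0


def predict(features):
    """Return the linear model's output for one feature vector."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def lambda_handler(event, context):
    """Parse the request body, run inference, and return an HTTP-style response."""
    try:
        body = json.loads(event.get("body") or "{}")
        features = [float(x) for x in body["features"]]
        prediction = predict(features)
    except (ValueError, KeyError, TypeError) as exc:
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 200, "body": json.dumps({"prediction": prediction})}
```

This approach suits small models with short inference times. Larger models may run into the platform's memory, package size, or cold-start limits.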

Model Optimization:

Optimize models by compressing them or using model distillation techniques to reduce inference time and improve latency.
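One common form of model compression is weight quantization, which stores weights as small integers plus a scale factor. The sketch below shows symmetric 8-bit quantization in plain Python. It is a simplified illustration, not a production technique, and the function names are hypothetical. Real frameworks provide their own quantization tooling.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] plus a shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Avoid dividing by zero when every weight is zero.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the quantized values."""
    return [q * scale for q in quantized]
```

Each weight then needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight.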

Cost Management:

Cost Analysis:

Regularly analyze and optimize cloud costs to avoid overspending. Tools like AWS Cost Explorer, Google Cloud’s Cost Management, and Azure Cost Management can help monitor and manage expenses.

By carefully selecting cloud services, optimizing data handling and training processes, and monitoring performance, you can efficiently manage and improve machine learning models in the cloud.

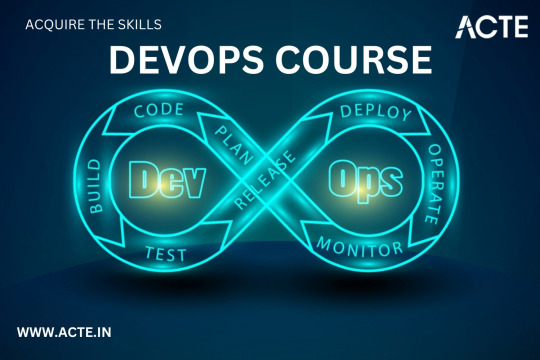

Level Up Your Software Development Skills: Join Our Unique DevOps Course

Would you like to increase your knowledge of software development? Look no further! Our unique DevOps course is the perfect opportunity to upgrade your skillset and pave the way for accelerated career growth in the tech industry. In this article, we will explore the key components of our course, reasons why you should choose it, the remarkable placement opportunities it offers, and the numerous benefits you can expect to gain from joining us.

Key Components of Our DevOps Course

Our DevOps course is meticulously designed to provide you with a comprehensive understanding of the DevOps methodology and equip you with the necessary tools and techniques to excel in the field. Here are the key components you can expect to delve into during the course:

1. Understanding DevOps Fundamentals

Learn the core principles and concepts of DevOps, including continuous integration, continuous delivery, infrastructure automation, and collaboration techniques. Gain insights into how DevOps practices can enhance software development efficiency and communication within cross-functional teams.

2. Mastering Cloud Computing Technologies

Immerse yourself in cloud computing platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform. Acquire hands-on experience in deploying applications, managing serverless architectures, and leveraging containerization technologies such as Docker and Kubernetes for scalable and efficient deployment.

3. Automating Infrastructure as Code

Discover the power of infrastructure automation through tools like Ansible, Terraform, and Puppet. Automate the provisioning, configuration, and management of infrastructure resources, enabling rapid scalability, agility, and error-free deployments.

4. Monitoring and Performance Optimization

Explore various monitoring and observability tools, including Elasticsearch, Grafana, and Prometheus, to ensure your applications are running smoothly and performing optimally. Learn how to diagnose and resolve performance bottlenecks, conduct efficient log analysis, and implement effective alerting mechanisms.

5. Embracing Continuous Integration and Delivery

Dive into the world of continuous integration and delivery (CI/CD) pipelines using popular tools like Jenkins, GitLab CI/CD, and CircleCI. Gain a deep understanding of how to automate build processes, run tests, and deploy applications seamlessly to achieve faster and more reliable software releases.

Reasons to Choose Our DevOps Course

There are numerous reasons why our DevOps course stands out from the rest. Here are some compelling factors that make it the ideal choice for aspiring software developers:

Expert Instructors: Learn from industry professionals who possess extensive experience in the field of DevOps and have a genuine passion for teaching. Benefit from their wealth of knowledge and practical insights gained from working on real-world projects.

Hands-On Approach: Our course emphasizes hands-on learning to ensure you develop the practical skills necessary to thrive in a DevOps environment. Through immersive lab sessions, you will have opportunities to apply the concepts learned and gain valuable experience working with industry-standard tools and technologies.

Tailored Curriculum: We understand that every learner is unique, so our curriculum is strategically designed to cater to individuals of varying proficiency levels. Whether you are a beginner or an experienced professional, our course will be tailored to suit your needs and help you achieve your desired goals.

Industry-Relevant Projects: Gain practical exposure to real-world scenarios by working on industry-relevant projects. Apply your newly acquired skills to solve complex problems and build innovative solutions that mirror the challenges faced by DevOps practitioners in the industry today.

Benefits of Joining Our DevOps Course

By joining our DevOps course, you open up a world of benefits that will enhance your software development career. Here are some notable advantages you can expect to gain:

Enhanced Employability: Acquire sought-after skills that are in high demand in the software development industry. Stand out from the crowd and increase your employability prospects by showcasing your proficiency in DevOps methodologies and tools.

Higher Earning Potential: With the rise of DevOps practices, organizations are willing to offer competitive remuneration packages to skilled professionals. By mastering DevOps through our course, you can significantly increase your earning potential in the tech industry.

Streamlined Software Development Processes: Gain the ability to streamline software development workflows by effectively integrating development and operations. With DevOps expertise, you will be capable of accelerating software deployment, reducing errors, and improving the overall efficiency of the development lifecycle.

Continuous Learning and Growth: DevOps is a rapidly evolving field, and by joining our course, you become a part of a community committed to continuous learning and growth. Stay updated with the latest industry trends, technologies, and best practices to ensure your skills remain relevant in an ever-changing tech landscape.

In conclusion, our unique DevOps course at ACTE institute offers unparalleled opportunities for software developers to level up their skills and propel their careers forward. With a comprehensive curriculum, remarkable placement opportunities, and a host of benefits, joining our course is undoubtedly a wise investment in your future success. Don't miss out on this incredible chance to become a proficient DevOps practitioner and unlock new horizons in the world of software development. Enroll today and embark on an exciting journey towards professional growth and achievement!

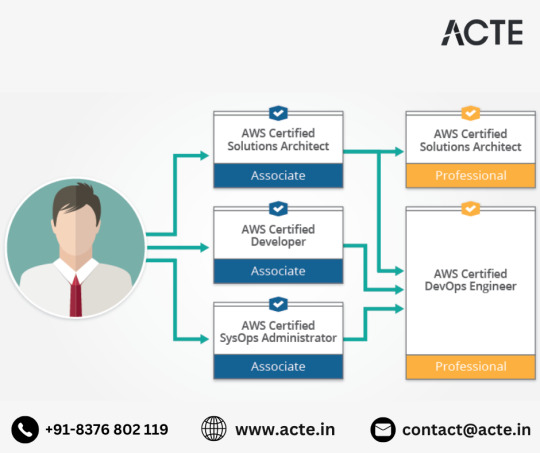

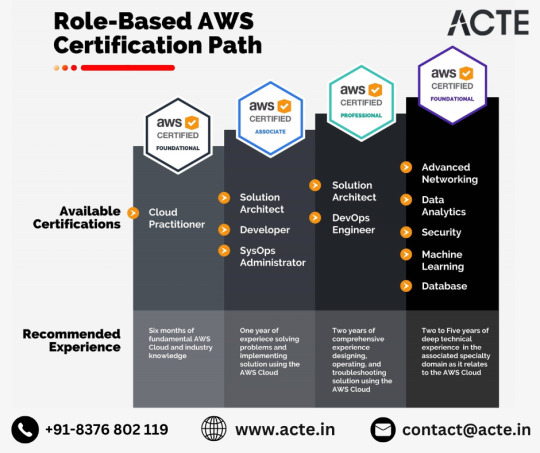

Crafting a Career Odyssey: AWS Certification Unveiled for Solution Architects

Embarking on the journey of AWS certification as a Solution Architect unveils a plethora of career avenues, transforming your professional trajectory in the dynamic landscape of cloud computing. Let's explore the myriad paths that unfold for certified AWS Solution Architects:

1. Architecting Excellence: Steering Digital Transformations

AWS certification catapults you into roles where you architect and implement cutting-edge solutions. As a linchpin in digital transformations, you play a pivotal role in creating scalable, secure, and cost-effective solutions aligned with organizational objectives.

2. Cloud Architect Mastery: Orchestrating Comprehensive Cloud Strategies

The journey doesn't stop at Solution Architect; it seamlessly transitions into broader Cloud Architect roles. Here, you orchestrate end-to-end cloud strategies, ensuring optimal performance, security, and efficiency in cloud-based environments.

3. Enterprise Architect Pinnacle: Shaping Holistic IT Strategies

With AWS certification, the pathway extends to Enterprise Architect roles. This involves shaping the overarching IT strategy, aligning technology solutions with business goals, and ensuring seamless integration across the enterprise.

4. Cloud Consulting Expertise: Guiding Clients on Cloud Journey

Organizations seek AWS-certified Solution Architects for Cloud Consultant positions, where you provide guidance on cloud strategies, migration plans, and optimize AWS infrastructure for enhanced performance.

5. Technical Leadership Zenith: Guiding Development Initiatives

Expertise gained through AWS certification positions you favorably for technical leadership roles. Leading teams, guiding development projects, and offering strategic input on technology initiatives become part of your purview.

6. DevOps Alchemy: Bridging Development and Operations

The fusion of AWS expertise and Solution Architect skills opens doors to DevOps Engineer opportunities. Your grasp of cloud infrastructure proves invaluable in optimizing continuous integration and deployment pipelines.

7. Pre-Sales Artistry: Crafting Compelling Solutions

Leverage AWS certification in Pre-Sales Solutions Architect positions. Engaging with clients during the pre-sales phase, you become instrumental in understanding their needs and crafting compelling solutions.

8. Specialized Architectural Prowess: Exploring Niche Opportunities

As technology evolves, specialized Solution Architect roles emerge. Depending on your interests and the evolving AWS service landscape, opportunities in areas like AI/ML architecture, IoT solutions, or serverless architectures beckon.

9. Entrepreneurial Odyssey: Beyond Conventional Paths

Armed with AWS certification, entrepreneurial pursuits become viable. Whether offering specialized AWS services or launching a tech startup, the certification serves as a foundation for innovative endeavors.

10. Lifelong Learning Odyssey: Staying Ahead in the Dynamic AWS Realm

The AWS ecosystem is dynamic, with constant updates and new services. Your certification journey becomes a springboard for continuous learning and professional development, ensuring you remain at the forefront of cloud technology.

In conclusion, AWS certification for Solution Architects is not just a validation; it's a compass guiding you through a rich tapestry of career possibilities. Whether crafting digital landscapes, steering enterprises through the cloud, or exploring niche opportunities, the certification becomes a catalyst for continuous growth, learning, and innovation in the ever-evolving cloud computing domain.


Azure’s Evolution: What Every IT Pro Should Know About Microsoft’s Cloud

IT professionals need to stay ahead of the curve in today’s ever-changing world of technology. The cloud has become an integral part of modern IT infrastructure, and one of the leading players in this domain is Microsoft Azure. Azure’s evolution over the years has been remarkable, making it essential for IT pros to understand its journey and keep pace with its innovations. In this blog, we’ll take you through Azure’s transformation, exploring its history, service portfolio, global reach, security measures, and much more. By the end of this article, you’ll have a comprehensive understanding of what every IT pro should know about Microsoft’s cloud platform.

Historical Overview

Azure’s Humble Beginnings

Microsoft Azure was officially launched in February 2010 as “Windows Azure.” It began as a platform-as-a-service (PaaS) offering primarily focused on providing Windows-based cloud services.

The Azure Branding Shift

In 2014, Microsoft rebranded Windows Azure to Microsoft Azure to reflect its broader support for various operating systems, programming languages, and frameworks. This rebranding marked a significant shift in Azure’s identity and capabilities.

Key Milestones

Over the years, Azure has achieved numerous milestones, including the introduction of Azure Virtual Machines, Azure App Service, and the Azure Marketplace. These milestones have expanded its capabilities and made it a go-to choice for businesses of all sizes.

Expanding Service Portfolio

Azure’s service portfolio has grown exponentially since its inception. Today, it offers a vast array of services catering to diverse needs:

Compute Services: Azure provides a range of options, from virtual machines (VMs) to serverless computing with Azure Functions.

Data Services: Azure offers data storage solutions like Azure SQL Database, Cosmos DB, and Azure Data Lake Storage.

AI and Machine Learning: With Azure Machine Learning and Cognitive Services, IT pros can harness the power of AI for their applications.

IoT Solutions: Azure IoT Hub and IoT Central simplify the development and management of IoT solutions.

Azure Regions and Global Reach

Azure boasts an extensive network of data centers spread across the globe. This global presence offers several advantages:

Scalability: IT pros can easily scale their applications by deploying resources in multiple regions.

Redundancy: Azure’s global datacenter presence ensures high availability and data redundancy.

Data Sovereignty: Choosing the right Azure region is crucial for data compliance and sovereignty.

Integration and Hybrid Solutions

Azure’s integration capabilities are a boon for businesses with hybrid cloud needs. Azure Arc, for instance, allows you to manage on-premises, multi-cloud, and edge environments through a unified interface. Azure’s compatibility with other cloud providers simplifies multi-cloud management.

Security and Compliance

Azure has made significant strides in security and compliance. It offers features like Azure Security Center (since renamed Microsoft Defender for Cloud), Azure Active Directory (since renamed Microsoft Entra ID), and extensive compliance certifications. IT pros can use these tools to meet stringent security and regulatory requirements.

Azure Marketplace and Third-Party Offerings

Azure Marketplace is a treasure trove of third-party solutions that complement Azure services. IT pros can explore a wide range of offerings, from monitoring tools to cybersecurity solutions, to enhance their Azure deployments.

Azure DevOps and Automation

Automation is key to efficiently managing Azure resources. Azure DevOps services and tools facilitate continuous integration and continuous delivery (CI/CD), ensuring faster and more reliable application deployments.

Monitoring and Management

Azure offers robust monitoring and management tools to help IT pros optimize resource usage, troubleshoot issues, and gain insights into their Azure deployments. Best practices for resource management can help reduce costs and improve performance.

Future Trends and Innovations

As the technology landscape continues to evolve, Azure remains at the forefront of innovation. Keep an eye on trends like edge computing and quantum computing, as Azure is likely to play a significant role in these domains.

Training and Certification

To excel in your IT career, consider pursuing Azure certifications. ACTE Institute offers a range of certifications, such as the Microsoft Azure course, to validate your expertise in Azure technologies.

In conclusion, Azure’s evolution is a testament to Microsoft’s commitment to cloud innovation. As an IT professional, understanding Azure’s history, service offerings, global reach, security measures, and future trends is paramount. Azure’s versatility and comprehensive toolset make it a top choice for organizations worldwide. By staying informed and adapting to Azure’s evolving landscape, IT pros can remain at the forefront of cloud technology, delivering value to their organizations and clients in an ever-changing digital world. Embrace Azure’s evolution, and empower yourself for a successful future in the cloud.

#microsoft azure#tech#education#cloud services#azure devops#information technology#automation#innovation


Skyrocket Your Efficiency: Dive into Azure Cloud-Native solutions

Join our blog series on Azure Container Apps and unlock unstoppable innovation! Discover foundational concepts, advanced deployment strategies, microservices, serverless computing, best practices, and real-world examples. Transform your operations!!

#Azure App Service#Azure cloud#Azure Container Apps#Azure Functions#CI/CD#cloud infrastructure#cloud-native applications#containerization#deployment strategies#DevOps#Kubernetes#microservices architecture#serverless computing


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a robust cloud hosting environment, allowing businesses to scale up or down as needed, pay for only the resources they consume, and reduce the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service that guarantees low-latency access and automatic scaling.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.
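The event-driven model described for Azure Functions above can be illustrated with a small, platform-neutral sketch. This is not the Azure Functions SDK: the `on` decorator, the `dispatch` function, and the `blob.created` event name are all hypothetical names chosen for illustration. The point is only that code is bound to an event type and runs when such an event arrives.

```python
from collections import defaultdict

# Maps an event type to the functions registered for it (hypothetical registry).
_handlers = defaultdict(list)


def on(event_type):
    """Register a function to run whenever an event of `event_type` arrives."""
    def register(func):
        _handlers[event_type].append(func)
        return func
    return register


def dispatch(event_type, payload):
    """Invoke every handler bound to the event and collect their results."""
    return [func(payload) for func in _handlers[event_type]]


@on("blob.created")  # assumed event name, for illustration only
def make_thumbnail(payload):
    return f"thumbnail queued for {payload['name']}"
```

On a real serverless platform the registry and dispatch loop are the provider's responsibility; you would only write the handler.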

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, providing redundancy and ensuring high availability for your applications.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are perfect for building serverless applications that respond to events in real-time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS


DevOps Training in Ameerpet

DevOps is a methodology that combines software development and IT operations to improve the speed and quality of software delivery. As the demand for skilled DevOps professionals continues to grow, APEC IT Training offers comprehensive DevOps training programs that are designed to teach participants the skills necessary to become proficient DevOps engineers.

The DevOps training program offered by APEC IT Training covers a wide range of topics, including continuous integration and deployment, infrastructure automation, containerization, monitoring and logging, and security. Participants are also introduced to more advanced topics such as site reliability engineering and serverless computing.

Visit: http://www.apectraining.com/devops/


How to Deploy Your Full Stack Application: A Beginner’s Guide

Deploying a full stack application involves setting up your frontend, backend, and database on a live server so users can access it over the internet. This guide covers deployment strategies, hosting services, and best practices.

1. Choosing a Deployment Platform

Popular options include:

Cloud Platforms: AWS, Google Cloud, Azure

PaaS Providers: Heroku, Vercel, Netlify

Containerized Deployment: Docker, Kubernetes

Traditional Hosting: VPS (DigitalOcean, Linode)

2. Deploying the Backend

Option 1: Deploy with a Cloud Server (e.g., AWS EC2, DigitalOcean)

Set Up a Virtual Machine (VM)

bash

ssh user@your-server-ip

Install Dependencies

Node.js (sudo apt install nodejs npm)

Python (sudo apt install python3-pip)

Database (MySQL, PostgreSQL, MongoDB)

Run the Server

bash

nohup node server.js &  # For Node.js apps
gunicorn app:app --daemon  # For Python Flask apps (for Django, point gunicorn at the project's WSGI module instead)
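For the Python case, `gunicorn app:app` means "import the module `app` and serve the object named `app` inside it". A minimal sketch of such a module, using only the standard library rather than Flask, might look like the following. The file name `app.py` and the `/health` route are assumptions made for illustration.

```python
# app.py (assumed file name): a minimal WSGI application, standard library only.
import json


def app(environ, start_response):
    """WSGI callable that a server such as gunicorn can import as `app:app`."""
    if environ.get("PATH_INFO") == "/health":  # hypothetical route
        status, payload = "200 OK", {"status": "ok"}
    else:
        status, payload = "404 Not Found", {"error": "not found"}

    body = json.dumps(payload).encode("utf-8")
    headers = [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ]
    start_response(status, headers)
    return [body]
```

A Flask application object is also a WSGI callable, which is why the same `gunicorn app:app` command works when `app` is created with Flask.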

Option 2: Serverless Deployment (AWS Lambda, Firebase Functions)

Pros: No server maintenance, auto-scaling

Cons: Limited control over infrastructure
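To make the serverless option concrete, here is a rough sketch of a Python function in the `(event, context)` style that AWS Lambda uses. The event shape assumed here is an API Gateway proxy request with optional query parameters; the `name` parameter and the greeting are made up for illustration.

```python
import json


def handler(event, context):
    """Entry point in the (event, context) style used by AWS Lambda for Python."""
    # Assumption: the event is an API Gateway proxy request; the key may be absent or None.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter

    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

There is no server loop here: the platform calls the function once per request and scales the number of concurrent copies for you, which is the trade-off listed above.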

3. Deploying the Frontend

Option 1: Static Site Hosting (Vercel, Netlify, GitHub Pages)

Push Code to GitHub

Connect GitHub Repo to Netlify/Vercel

Set Build Command (e.g., npm run build)

Deploy and Get Live URL

Option 2: Deploy with Nginx on a Cloud Server

Install Nginx

bash

sudo apt install nginx

Configure Nginx for React/Vue/Angular

nginx

server {
    listen 80;
    root /var/www/html;
    index index.html;

    location / {
        try_files $uri /index.html;
    }
}
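The `try_files $uri /index.html;` line is what makes client-side routing work: if the requested path matches a real file it is served, otherwise the request falls back to the app's entry page. The idea can be sketched in a few lines of Python. The `resolve` function and the file set are illustrative only and simplify what Nginx actually does.

```python
def resolve(uri, files):
    """Mimic `try_files $uri /index.html`: serve the file if it exists, else the SPA entry page."""
    return uri if uri in files else "/index.html"


# Hypothetical contents of the web root after a frontend build.
built_files = {"/index.html", "/static/app.js", "/favicon.ico"}
```

With this fallback, a deep link such as `/dashboard/settings` returns `index.html`, and the frontend router then renders the right view.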

Restart Nginx

bash

sudo systemctl restart nginx

4. Connecting Frontend and Backend

Use CORS middleware to allow cross-origin requests

Set up reverse proxy with Nginx

Secure API with authentication tokens (JWT, OAuth)
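As a rough sketch of the first point, CORS support can be added to a Python backend as a small WSGI middleware. This assumes a single allowed frontend origin; the origin `https://app.example.com` and the inner `api` app are placeholders, and a production setup would more likely use a maintained CORS library for the chosen framework.

```python
def cors_middleware(app, allowed_origin):
    """Wrap a WSGI app so its responses carry CORS headers for one allowed origin."""
    cors_headers = [
        ("Access-Control-Allow-Origin", allowed_origin),
        ("Access-Control-Allow-Methods", "GET, POST, OPTIONS"),
        ("Access-Control-Allow-Headers", "Authorization, Content-Type"),
    ]

    def wrapped(environ, start_response):
        # Answer preflight requests directly, without calling the wrapped app.
        if environ.get("REQUEST_METHOD") == "OPTIONS":
            start_response("204 No Content", cors_headers)
            return [b""]

        def start_with_cors(status, headers, exc_info=None):
            return start_response(status, list(headers) + cors_headers, exc_info)

        return app(environ, start_with_cors)

    return wrapped


def api(environ, start_response):
    """Placeholder backend used only to demonstrate the middleware."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hello"]


# Placeholder origin: replace with the URL your frontend is served from.
application = cors_middleware(api, "https://app.example.com")
```

Naming the exact frontend origin, rather than allowing `*`, matters once the API accepts authentication tokens.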

5. Database Setup

Cloud Databases: AWS RDS, Firebase, MongoDB Atlas

Self-Hosted Databases: PostgreSQL, MySQL on a VPS

bash

# Example: Run PostgreSQL on DigitalOcean
sudo apt install postgresql
sudo systemctl start postgresql
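Whichever database you pick, the backend needs its connection details without hard-coding them. One common approach, sketched here under the assumption that the URL is stored in an environment variable named `DATABASE_URL`, is to parse a single connection URL into the fields a driver expects. The fallback URL is a local development placeholder, not a real credential.

```python
import os
from urllib.parse import urlparse


def database_settings(url):
    """Split a postgresql:// URL into the pieces a database driver typically needs."""
    parts = urlparse(url)
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,  # 5432 is PostgreSQL's default port
        "user": parts.username,
        "password": parts.password,
        "dbname": parts.path.lstrip("/"),
    }


def load_settings():
    """Read the URL from the environment; DATABASE_URL is an assumed variable name."""
    default = "postgresql://app:secret@localhost:5432/appdb"  # placeholder for local use
    return database_settings(os.environ.get("DATABASE_URL", default))
```

The same code then works unchanged against a self-hosted PostgreSQL on a VPS or a managed cloud database; only the environment variable differs.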

6. Security & Optimization

✅ SSL Certificate: Secure site with HTTPS (Let’s Encrypt)

✅ Load Balancing: Use AWS ALB, Nginx reverse proxy

✅ Scaling: Auto-scale with Kubernetes or cloud functions

✅ Logging & Monitoring: Use Datadog, New Relic, AWS CloudWatch

7. CI/CD for Automated Deployment

GitHub Actions: Automate builds and deployment

Jenkins/GitLab CI/CD: Custom pipelines for complex deployments

Docker & Kubernetes: Containerized deployment for scalability
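Whatever tool runs the pipeline, a useful last step is a smoke check that confirms the freshly deployed app actually responds. A minimal sketch of such a check follows. The function name `is_healthy`, the retry count, and the idea of a dedicated health URL are assumptions for illustration; `fetch` is injectable so the check can be exercised without a network.

```python
from urllib.request import urlopen


def is_healthy(url, fetch=urlopen, attempts=3):
    """Return True once the URL answers with HTTP 200; give up after `attempts` tries."""
    for _ in range(attempts):
        try:
            with fetch(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except OSError:
            # Covers refused connections, DNS failures, timeouts, and HTTP error responses.
            continue
    return False
```

A pipeline step could call this against the live URL and fail the job, or trigger a rollback, when it returns False.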

Final Thoughts

Deploying a full stack app requires setting up hosting, configuring the backend, deploying the frontend, and securing the application.

Cloud platforms like AWS, Heroku, and Vercel simplify the process, while advanced setups use Kubernetes and Docker for scalability.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/
