#section 230 Internet Communications Decency Act


WHAT IS SECTION 230?

"Section 230 of the 1996 Communications Decency Act, [...] states that “no provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

“The primary thing we do on the internet is we talk to each other. It might be email, it might be social media, might be message boards, but we talk to each other. And a lot of those conversations are enabled by Section 230, which says that whoever’s allowing us to talk to each other isn’t liable for our conversations,” said Eric Goldman, a professor at Santa Clara University specializing in internet law. “The Supreme Court could easily disturb or eliminate that basic proposition and say that the people allowing us to talk to each other are liable for those conversations. At which point they won’t allow us to talk to each other anymore.”

There are two possible outcomes."

“The rest of the world is cracking down on the internet even faster than the U.S.,” Goldman said. “So we’re a step behind the rest of the world in terms of censoring the internet. And the question is whether we can even hold out on our own.”

READ MORE https://abcnews.go.com/US/wireStory/section-230-rule-made-modern-internet-97350905

The story of Section 230 goes back to an AOL troll. Now the law may be undone

February 22, 2023

"Eric Goldman, a professor at Santa Clara University Law School who has written extensively about the Zeran case, has a hard time believing that Zeran was not intentionally targeted, but he admits that the real culprit may forever be a mystery.

"I just cannot accept the idea that he was a random drive-by victim. That just doesn't pass the smell test," Goldman said. "It is one of the great whodunits of our time."

READ MORE https://www.npr.org/2021/05/11/994395889/how-one-mans-fight-against-an-aol-troll-sealed-the-tech-industrys-power


"Much ink has already been spilled on Harris’s prosecutorial background. What is significant about the topic of sex work is how recently the vice president–elect’s actions contradicted her alleged views. During her tenure as AG, she led a campaign to shut down Backpage, a classified advertising website frequently used by sex workers, calling it “the world’s top online brothel” in 2016 and claiming that the site made “millions of dollars from trafficking.” While Backpage did make millions off of sex work ads, its “adult services” listings offered a safer and more transparent platform for sex workers and their clients to conduct consensual transactions than had historically been available. Harris’s grandiose mischaracterization led to a Senate investigation, and the shuttering of the site by the FBI in 2018.

“Backpage being gone has devastated our community,” said Andrews. The platform allowed sex workers to work more safely: They were able to vet clients and promote their services online. “It’s very heartbreaking to see the fallout,” said dominatrix Yevgeniya Ivanyutenko. “A lot of people lost their ability to safely make a living. A lot of people were forced to go on the street or do other things that they wouldn’t have otherwise considered.” M.F. Akynos, the founder and executive director of the Black Sex Worker Collective, thinks Harris should “apologize to the community. She needs to admit that she really fucked up with Backpage, and really ruined a lot of people’s lives.”

After Harris became a senator, she cosponsored the now-infamous Stop Enabling Sex Traffickers Act (SESTA), which—along with the House’s Allow States and Victims to Fight Online Sex Trafficking Act (FOSTA)—was signed into law by President Trump in 2018. FOSTA-SESTA created a loophole in Section 230 of the Communications Decency Act, the so-called “safe harbor” provision that allows websites to be free from liability for user-generated content (e.g., Amazon reviews, Craigslist ads). The Electronic Frontier Foundation argues that Section 230 is the backbone of the Internet, calling it “the most important law protecting internet free speech.” Now, website publishers are liable if third parties post sex-work ads on their platforms.

That spelled the end of any number of platforms—most famously Craigslist’s “personal encounters” section—that sex workers used to vet prospective clients, leaving an already vulnerable workforce even more exposed. (The Woodhull Freedom Foundation has filed a lawsuit challenging FOSTA on First Amendment grounds; in January 2020, it won an appeal in D.C.’s district court.)

“I sent a bunch of stats [to Harris and Senator Dianne Feinstein] about decriminalization and how much SESTA-FOSTA would hurt American sex workers and open them up to violence,” said Cara (a pseudonym), who was working as a sex worker in San Francisco and a member of SWOP when the bill passed. Both senators ignored her.

The bill both demonstrably harmed sex workers and failed to drop sex trafficking. “Within one month of FOSTA’s enactment, 13 sex workers were reported missing, and two were dead from suicide,” wrote Lura Chamberlain in her Fordham Law Review article “FOSTA: A Hostile Law with a Human Cost.” “Sex workers operating independently faced a tremendous and immediate uptick in unwanted solicitation from individuals offering or demanding to traffic them. Numerous others were raped, assaulted, and rendered homeless or unable to feed their children.” A 2020 survey of the effects of FOSTA-SESTA found that “99% of online respondents reported that this law does not make them feel safer” and 80.61 percent “say they are now facing difficulties advertising their services.” "

-What Sex Workers Want Kamala Harris to Know by Hallie Lieberman

#personal#sw#sex work is work#kamala harris#one of the MANY many reasons i hate harris#she directly put so many sex workers at risk. i lost multiple community members because of her#whorephobia#fosta/sesta


Dirty words are politically potent

On OCTOBER 23 at 7PM, I'll be in DECATUR, presenting my novel THE BEZZLE at EAGLE EYE BOOKS.

Making up words is a perfectly cromulent pastime, and while most of the words we coin disappear as soon as they fall from our lips, every now and again, you find a word that fits so nice and kentucky in the public discourse that it acquires a life of its own:

http://meaningofliff.free.fr/definition.php3?word=Kentucky

I've been trying to increase the salience of digital human rights in the public imagination for a quarter of a century, starting with the campaign to get people to appreciate that the internet matters, and that tech policy isn't just the delusion that the governance of spaces where sad nerds argue about Star Trek is somehow relevant to human thriving:

https://www.newyorker.com/magazine/2010/10/04/small-change-malcolm-gladwell

Now, eventually people figured out that a) the internet mattered and, b) it was going dreadfully wrong. So my job changed again, from "how the internet is governed matters" to "you can't fix the internet with wishful thinking," for example, when people said we could solve its problems by banning general purpose computers:

https://memex.craphound.com/2012/01/10/lockdown-the-coming-war-on-general-purpose-computing/

Or by banning working cryptography:

https://memex.craphound.com/2018/09/04/oh-for-fucks-sake-not-this-fucking-bullshit-again-cryptography-edition/

Or by redesigning web browsers to treat their owners as threats:

https://www.eff.org/deeplinks/2017/09/open-letter-w3c-director-ceo-team-and-membership

Or by using bots to filter every public utterance to ensure that they don't infringe copyright:

https://www.eff.org/deeplinks/2018/09/today-europe-lost-internet-now-we-fight-back

Or by forcing platforms to surveil and police their users' speech (aka "getting rid of Section 230"):

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

Along the way, many of us have coined words in a bid to encapsulate the abstract, technical ideas at the core of these arguments. This isn't a vanity project! Creating a common vocabulary is a necessary precondition for having the substantive, vital debates we'll need to tackle the real, thorny issues raised by digital systems. So there's "free software," "open source," "filternet," "chat control," "back doors," and my own contributions, like "adversarial interoperability":

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

Or "Competitive Compatibility" ("comcom"), a less-intimidatingly technical term for the same thing:

https://www.eff.org/deeplinks/2020/12/competitive-compatibility-year-review

These have all found their own niches, but nearly all of them are just that: niche. Some don't even rise to "niche": they're shibboleths, insider terms that confuse and intimidate normies and distract from the real fights with semantic ones, like whether it's "FOSS" or "FLOSS" or something else entirely:

https://opensource.stackexchange.com/questions/262/what-is-the-difference-between-foss-and-floss

But every now and again, you get a word that just kills. That brings me to "enshittification," a word I coined in 2022:

https://pluralistic.net/2022/11/28/enshittification/#relentless-payola

"Enshittification" took root in my hindbrain, rolling around and around, agglomerating lots of different thoughts and critiques I'd been making for years, crystallizing them into a coherent thesis:

https://pluralistic.net/2023/01/21/potemkin-ai/#hey-guys

This kind of spontaneous crystallization is the dividend of doing lots of work in public, trying to take every half-formed thought and pin it down in public writing, something I've been doing for decades:

https://pluralistic.net/2021/05/09/the-memex-method/

After those first couple articles, "enshittification" raced around the internet. There's two reasons for this: first, "enshittification" is a naughty word that's fun to say. Journalists love getting to put "shit" in their copy:

https://www.nytimes.com/2024/01/15/crosswords/linguistics-word-of-the-year.html

Radio journalists love to tweak the FCC with cheekily bleeped syllables in slightly dirty compound words:

https://www.wnycstudios.org/podcasts/otm/projects/enshitification

And nothing enlivens an academic's day like getting to use a word like "enshittification" in a journal article (doubtless this also amuses the editors, peer-reviewers, copyeditors, typesetters, etc):

https://scholar.google.com/scholar?hl=en&as_sdt=0%2C5&q=enshittification&btnG=&oq=ensh

That was where I started, too! The first time I used "enshittification" was in a throwaway bad-tempered rant about the decay of Tripadvisor into utter uselessness, which drew a small chorus of appreciative chuckles about the word:

https://twitter.com/doctorow/status/1550457808222552065

The word rattled around my mind for five months before attaching itself to my detailed theory of platform decay. But it was that detailed critique, coupled with a minor license to swear, that gave "enshittification" a life of its own. How do I know that the theory was as important as the swearing? Because the small wave of amusement that followed my first use of "enshittification" petered out in less than a day. It was only when I added the theory that the word took hold.

Likewise: how do I know that the theory needed to be blended with swearing to break out of the esoteric realm of tech policy debates (which the public had roundly ignored for more than two decades)? Well, because I spent two decades writing about this stuff without making anything like the dents that appeared once I added an Anglo-Saxon monosyllable to that critique.

Adding "enshittification" to the critique got me more column inches, a longer hearing, a more vibrant debate, than anything else I'd tried. First, Wired availed itself of the Creative Commons license on my second long-form article on the subject and reprinted it as a 4,200-word feature. I've been writing for Wired for more than thirty years and this is by far the longest thing I've published with them – a big, roomy, discursive piece that was run verbatim, with every one of my cherished darlings unmurdered.

That gave the word – and the whole critique, with all its spiky corners – a global airing, leading to more pickup and discussion. Eventually, the American Dialect Society named it their "Word of the Year" (and their "Tech Word of the Year"):

https://americandialect.org/2023-word-of-the-year-is-enshittification/

"Enshittification" turns out to be catnip for language nerds:

https://becauselanguage.com/90-enpoopification/#transcript-60

I've been dragged into (good natured) fights over the German, Spanish, French and Italian translations for the term. When I taped an NPR show before a live audience with ASL interpretation, I got to watch a Deaf fan politely inform the interpreter that she didn't need to finger-spell "enshittification," because it had already been given an ASL sign by the US Deaf community:

https://maximumfun.org/episodes/go-fact-yourself/ep-158-aida-rodriguez-cory-doctorow/

I gave a speech about enshittification in Berlin and published the transcript:

https://pluralistic.net/2024/01/30/go-nuts-meine-kerle/#ich-bin-ein-bratapfel

Which prompted the rock-ribbed Financial Times to get in touch with me and publish the speech – again, nearly verbatim – as a whopping 6,400 word feature in their weekend magazine:

https://www.ft.com/content/6fb1602d-a08b-4a8c-bac0-047b7d64aba5

Though they could have had it for free (just as Wired had), they insisted on paying me (very well, as it happens!), as did Die Zeit:

https://www.zeit.de/digital/internet/2024-03/plattformen-facebook-google-internet-cory-doctorow

This was the start of the rise of enshittification. The word is spreading farther than ever, in ways that I have nothing to do with, along with the critique I hung on it. In other words, the bit of string that tech policy wonks have been pushing on for a quarter of a century is actually starting to move, and it's actually accelerating.

Despite this (or more likely because of it), there's a growing chorus of "concerned" people who say they like the critique but fret that it is being held back because you can't use it "at church or when talking to K-12 students" (my favorite variant: "I couldn't say this at a NATO conference"). I leave it up to you whether you use the word with your K-12 students, NATO generals, or fellow parishioners (though I assure you that all three groups are conversant with the dirty little word at the root of my coinage). If you don't want to use "enshittification," you can coin your own word – or just use one of the dozens of words that failed to gain public attention over the past 25 years (might I suggest "platform decay"?).

What's so funny about all this pearl-clutching is that it comes from people who universally profess to have the intestinal fortitude to hear the word "enshittification" without experiencing psychological trauma, but worry that other people might not be so strong-minded. They continue to say this even as the most conservative officials in the most staid of exalted forums use the word without a hint of embarrassment, much less apology:

https://www.independent.ie/business/technology/chairman-of-irish-social-media-regulator-says-europe-should-not-be-seduced-by-mario-draghis-claims/a526530600.html

I mean, I'm giving a speech on enshittification next month at a conference where I'm opening for the Secretary General of the United Nations:

https://icanewdelhi2024.coop/welcome/pages/Programme

After spending half my life trying to get stuff like this into the discourse, I've developed some hard-won, informed views on how ideas succeed:

First: the minor obscenity is a feature, not a bug. The marriage of something long and serious to something short and funny is a happy one that makes both the word and the ideas better off than they'd be on their own. As Lenny Bruce wrote in his canonical work on the subject, the aptly named How to Talk Dirty and Influence People:

I want to help you if you have a dirty-word problem. There are none, and I'll spell it out logically to you.

Here is a toilet. Specifically-that's all we're concerned with, specifics-if I can tell you a dirty toilet joke, we must have a dirty toilet. That's what we're all talking about, a toilet. If we take this toilet and boil it and it's clean, I can never tell you specifically a dirty toilet joke about this toilet. I can tell you a dirty toilet joke in the Milner Hotel, or something like that, but this toilet is a clean toilet now. Obscenity is a human manifestation. This toilet has no central nervous system, no level of consciousness. It is not aware; it is a dumb toilet; it cannot be obscene; it's impossible. If it could be obscene, it could be cranky, it could be a Communist toilet, a traitorous toilet. It can do none of these things. This is a dirty toilet here.

Nobody can offend you by telling a dirty toilet story. They can offend you because it's trite; you've heard it many, many times.

https://www.dacapopress.com/titles/lenny-bruce/how-to-talk-dirty-and-influence-people/9780306825309/

Second: the fact that a neologism is sometimes decoupled from its theoretical underpinnings and is used colloquially is a feature, not a bug. Many people apply the term "enshittification" very loosely indeed, to mean "something that is bad," without bothering to learn – or apply – the theoretical framework. This is good. This is what it means for a term to enter the lexicon: it takes on a life of its own. If 10,000,000 people use "enshittification" loosely and inspire 10% of their number to look up the longer, more theoretical work I've done on it, that is one million normies who have been sucked into a discourse that used to live exclusively in the world of the most wonkish and obscure practitioners. The only way to maintain a precise, theoretically grounded use of a term is to confine its usage to a small group of largely irrelevant insiders. Policing the use of "enshittification" is worse than a self-limiting move – it would be a self-inflicted wound. As I said in that Berlin speech:

Enshittification names the problem and proposes a solution. It's not just a way to say 'things are getting worse' (though of course, it's fine with me if you want to use it that way. It's an English word. We don't have der Rat für englische Rechtschreibung. English is a free for all. Go nuts, meine Kerle).

Finally: "coinage" is both more – and less – than thinking of the word. After the American Dialect Society gave honors to "enshittification," a few people slid into my mentions with citations to "enshittification" that preceded my usage. I find this completely unsurprising, because English is such a slippery and playful tongue, because English speakers love to swear, and because infixing is such a fun way to swear (e.g. "unfuckingbelievable"). But of course, I hadn't encountered any of those other usages before I came up with the word independently, nor had any of those other usages spread appreciably beyond the speaker (it appears that each of the handful of predecessors to my usage represents an act of independent coinage).

If "coinage" was just a matter of thinking up the word, you could write a small Python script that infixed the word "shit" into every syllable of every word in the OED, publish the resulting text file, and declare priority over all subsequent inventive swearers.
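
As a rough illustration of how little such a script would involve, here is a minimal sketch. It assumes a crude stand-in for syllable detection (a break after each run of vowels) and a tiny hypothetical word list in place of the OED; the function names are invented for this example.

```python
import re

# Assumption: a "syllable break" is approximated as the position right after
# each run of vowels, as long as letters remain after it. Real
# syllabification is far messier than this.
VOWEL_RUN = re.compile(r"[aeiouy]+", re.IGNORECASE)


def naive_syllable_breaks(word: str) -> list[int]:
    """Return indexes where an infix could be inserted inside the word."""
    return [m.end() for m in VOWEL_RUN.finditer(word) if m.end() < len(word)]


def infix_everywhere(word: str, infix: str = "shit") -> list[str]:
    """Return one candidate coinage per approximate syllable break."""
    return [word[:i] + infix + word[i:] for i in naive_syllable_breaks(word)]


if __name__ == "__main__":
    # Hypothetical stand-in for "every word in the OED".
    for word in ["platform", "decay"]:
        for candidate in infix_everywhere(word):
            print(candidate)
```

Run as a script, this prints one candidate per approximate break. Generating the strings is the trivial part, which is the point: a list like this has no uptake and no context behind it.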

On the one hand, coinage takes place when the coiner a) independently invents a word; and b) creates the context for that word that causes it to escape from the coiner's immediate milieu and into the wider world.

But on the other hand – and far more importantly – the fact that a successful coinage requires popular uptake by people unknown to the coiner means that the coiner only ever plays a small role in the coinage. Yes, there would be no popularization without the coinage – but there would also be no coinage without the popularization. Words belong to groups of speakers, not individuals. Language is a cultural phenomenon, not an individual one.

Which is rather the point, isn't it? After a quarter of a century of being part of a community that fought tirelessly to get a serious and widespread consideration of tech policy underway, we're closer than ever, thanks, in part, to "enshittification." If someone else independently used that word before me, if some people use the word loosely, if the word makes some people uncomfortable, that's fine, provided that the word is doing what I want it to do, what I've devoted my life to doing.

The point of coining words isn't the pilkunnussija's obsession with precise usage, nor the petty glory of being known as a coiner, nor ensuring that NATO generals' virgin ears are protected from the word "shit" – a word that, incidentally, is also the root of "science":

https://www.arrantpedantry.com/2019/01/24/science-and-shit/

Isn't language fun?

Tor Books has just published two new, free LITTLE BROTHER stories: VIGILANT, about creepy surveillance in distance education; and SPILL, about oil pipelines and indigenous landback.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/10/14/pearl-clutching/#this-toilet-has-no-central-nervous-system


A lawsuit filed Wednesday against Meta argues that US law requires the company to let people use unofficial add-ons to gain more control over their social feeds.

It’s the latest in a series of disputes in which the company has tussled with researchers and developers over tools that give users extra privacy options or that collect research data. It could clear the way for researchers to release add-ons that aid research into how the algorithms on social platforms affect their users, and it could give people more control over the algorithms that shape their lives.

The suit was filed by the Knight First Amendment Institute at Columbia University on behalf of researcher Ethan Zuckerman, an associate professor at the University of Massachusetts—Amherst. It attempts to take a federal law that has generally shielded social networks and use it as a tool forcing transparency.

Section 230 of the Communications Decency Act is best known for allowing social media companies to evade legal liability for content on their platforms. Zuckerman’s suit argues that one of its subsections gives users the right to control how they access the internet, and the tools they use to do so.

“Section 230 (c) (2) (b) is quite explicit about libraries, parents, and others having the ability to control obscene or other unwanted content on the internet,” says Zuckerman. “I actually think that anticipates having control over a social network like Facebook, having this ability to sort of say, ‘We want to be able to opt out of the algorithm.’”

Zuckerman’s suit is aimed at preventing Facebook from blocking a new browser extension for Facebook that he is working on called Unfollow Everything 2.0. It would allow users to easily “unfollow” friends, groups, and pages on the service, meaning that updates from them no longer appear in the user’s newsfeed.

Zuckerman says that this would provide users the power to tune or effectively disable Facebook’s engagement-driven feed. Users can technically do this without the tool, but only by unfollowing each friend, group, and page individually.

There’s good reason to think Meta might make changes to Facebook to block Zuckerman’s tool after it is released. He says he won’t launch it without a ruling on his suit. In 2020, the company argued that the browser Friendly, which had let users search and reorder their Facebook news feeds as well as block ads and trackers, violated its terms of service and the Computer Fraud and Abuse Act. In 2021, Meta permanently banned Louis Barclay, a British developer who had created a tool called Unfollow Everything, which Zuckerman’s add-on is named after.

“I still remember the feeling of unfollowing everything for the first time. It was near-miraculous. I had lost nothing, since I could still see my favorite friends and groups by going to them directly,” Barclay wrote for Slate at the time. “But I had gained a staggering amount of control. I was no longer tempted to scroll down an infinite feed of content. The time I spent on Facebook decreased dramatically.”

The same year, Meta kicked off from its platform some New York University researchers who had created a tool that monitored the political ads people saw on Facebook. Zuckerman is adding a feature to Unfollow Everything 2.0 that allows people to donate data from their use of the tool to his research project. He hopes to use the data to investigate whether users of his add-on who cleanse their feeds end up, like Barclay, using Facebook less.

Sophia Cope, staff attorney at the Electronic Frontier Foundation, a digital rights group, says that the core parts of Section 230 related to platforms’ liability for content posted by users have been clarified through potentially thousands of cases. But few have specifically dealt with the part of the law Zuckerman’s suit seeks to leverage.

“There isn’t that much case law on that section of the law, so it will be interesting to see how a judge breaks it down,” says Cope. Zuckerman is a member of the EFF’s board of advisers.

John Morris, a principal at the Internet Society, a nonprofit that promotes open development of the internet, says that, to his knowledge, Zuckerman’s strategy “hasn’t been used before, in terms of using Section 230 to grant affirmative rights to users,” noting that a judge would likely take that claim seriously.

Meta has previously suggested that allowing add-ons that modify how people use its services raises security and privacy concerns. But Daphne Keller, director of the Program on Platform Regulation at Stanford's Cyber Policy Center, says that Zuckerman’s tool may be able to fairly push back on such an accusation. “The main problem with tools that give users more control over content moderation on existing platforms often has to do with privacy,” she says. “But if all this does is unfollow specified accounts, I would not expect that problem to arise here.”

Even if a tool like Unfollow Everything 2.0 didn’t compromise users’ privacy, Meta might still be able to argue that it violates the company’s terms of service, as it did in Barclay’s case.

“Given Meta’s history, I could see why he would want a preemptive judgment,” says Cope. “He’d be immunized against any civil claim brought against him by Meta.”

And though Zuckerman says he would not be surprised if it takes years for his case to wind its way through the courts, he believes it’s important. “This feels like a particularly compelling case to do at a moment where people are really concerned about the power of algorithms,” he says.


Brian Stelter at CNN Business:

New York (CNN) — President-elect Donald Trump’s pick for chairman of the Federal Communications Commission, Brendan Carr, wasted no time in stating his priorities on Sunday night. Just one hour after thanking the president for the appointment, Carr wrote on X, “We must dismantle the censorship cartel and restore free speech rights for everyday Americans.” Carr and Trump’s powerful ally Elon Musk immediately replied with one word of affirmation: “Based.” The comments from Carr, who wrote the chapter on the FCC in the conservative blueprint Project 2025, signaled that it won’t be business-as-usual at the country’s communications regulatory agency. Past chairs of the agency, both Republicans and Democrats, have emphasized broadband internet deployment and wireless spectrum policy. Carr didn’t mention those issues on Sunday night.

Instead, he took aim at technology companies for “censorship;” promised to hold broadcast TV and radio stations accountable; and pledged to end the FCC’s promotion of diversity, equity, and inclusion efforts. Carr was very clearly channeling the president-elect, who raised all three topics on the campaign trail, often in misleading ways. Trump appointed Carr to the FCC in 2017. Carr is now the senior Republican at the agency, which meant he was widely expected to get the chairman appointment. He also has a close relationship with Musk (some of it has been visible in their interactions on X) and has accused Democrats of waging “regulatory lawfare” against Musk’s Starlink satellite internet service.

As chairman, Carr may be able to steer generous federal subsidies to Starlink. When Politico published a story titled “the DC bureaucrat who could deliver billions to Elon Musk” last month, Carr told the outlet that he would be an even-handed regulator. Musk celebrated Carr’s appointment on X on Sunday night. Both men talk in much the same way about free speech rights, reflecting widespread concerns on the right about online censorship. (Trump called Carr “a warrior for Free Speech” in the press release about his appointment.) Claims of conservative censorship erupted several years ago as a result of content moderation decisions by social media platforms like Facebook and Twitter. Officials at the platforms said they were acting in good faith to reduce some of the toxicity – like election lies and Covid pandemic conspiracy theories – that turned off many users. Conservatives charged that the platforms were unfairly silencing their views – factoring into Musk’s decision to buy Twitter and turn it into X.

[...]

In his Project 2025 chapter, Carr laid out an agenda for the federal agency under a future Trump administration. The agency’s top priorities, he wrote, should be “reining in Big Tech, promoting national security, unleashing economic prosperity, and ensuring FCC accountability and good governance.”

In the chapter, Carr also asserted that the Chinese social media platform TikTok “poses a serious and unacceptable risk to America’s national security” and should be banned. Carr’s years-long crusade against TikTok paralleled Trump’s calls, although Trump reversed his position on TikTok earlier this year. Carr has also supported the rollback of net neutrality rules and called for “legislation that scraps” Section 230 of the Communications Decency Act, which gives immunity to tech platforms that moderate user-generated content. “Congress should do so by ensuring that Internet companies no longer have carte blanche to censor protected speech while maintaining their Section 230 protections,” he wrote in Project 2025. The FCC does have jurisdiction over local TV and radio licenses. During his reelection campaign, Trump called for every major American TV news network to be punished, often because of interview questions he disliked or programming he detested. He repeatedly said that certain licenses should be revoked – usually while misstating how the licensing process actually works.

The FCC grants eight-year license terms and hasn’t denied any license renewal in decades. But Carr indicated earlier this month that he would take Trump’s complaints seriously. And he wrote on X Sunday night that “broadcast media have had the privilege of using a scarce and valuable public resource — our airwaves. In turn, they are required by law to operate in the public interest.” As chairman, he added, “the FCC will enforce this public interest obligation.”

Donald Trump taps Project 2025 co-writer Brendan Carr to head the FCC for his 2nd term.

The FCC under Carr’s leadership will be a clearinghouse for far-right items, such as protecting far-right misinformation and disinformation from “censorship”, revoking broadcasting licenses from anti-Trump outlets, and more.

See Also:

Mother Jones: Trump’s FCC Pick Wants to Intimidate Broadcasters and Enrich Trump Allies

Text

(Reuters) - The U.S. Supreme Court on Tuesday declined to hear a bid by child pornography victims to overcome a legal shield for internet companies in a case involving a lawsuit accusing Reddit Inc of violating federal law by failing to rid the discussion website of this illegal content.

The justices turned away the appeal of a lower court's decision to dismiss the proposed class action lawsuit on the grounds that Reddit was shielded by a U.S. statute called Section 230, which safeguards internet companies from lawsuits for content posted by users but has an exception for claims involving child sex trafficking.

The Supreme Court on May 19 sidestepped an opportunity to narrow the scope of Section 230 immunity in a separate case.

Section 230 of the Communications Decency Act of 1996 protects "interactive computer services" by ensuring they cannot be treated as the "publisher or speaker" of information provided by users. The Reddit case explored the scope of a 2018 amendment to Section 230 called the Fight Online Sex Trafficking Act (FOSTA), which allows lawsuits against internet companies if the underlying claim involves child sex trafficking.

Reddit allows users to post content that is moderated by other users in forums called subreddits. The case centers on sexually explicit images and videos of children posted to such forums by users. The plaintiffs - the parents of minors and a former minor who were the subjects of the images - sued Reddit in 2021 in federal court in California, seeking monetary damages.

The plaintiffs accused Reddit of doing too little to remove or prevent child pornography and of financially benefiting from the illegal posts through advertising in violation of a federal child sex trafficking law.

The San Francisco-based 9th U.S. Circuit Court of Appeals in 2022 concluded that in order for the exception under FOSTA to apply, plaintiffs must show that an internet company "knowingly benefited" from the sex trafficking through its own conduct.

Instead, the 9th Circuit concluded, the allegations "suggest only that Reddit 'turned a blind eye' to the unlawful content posted on its platform, not that it actively participated in sex trafficking."

Reddit said in court papers that it works hard to find and prevent the sharing of child sexual exploitation materials on its platform, giving all users the ability to flag posts and using dedicated teams to remove illegal content.

The Supreme Court on May 19 declined to rule on a bid to weaken Section 230 in a case seeking to hold Google LLC liable under a federal anti-terrorism law for allegedly recommending content by the Islamic State militant group to users of its YouTube video-sharing service. Google and YouTube are part of Alphabet Inc.

Calls have come from across the ideological and political spectrum - including Democratic President Joe Biden and his Republican predecessor Donald Trump - for a rethink of Section 230 to ensure that companies can be held accountable for content on their platforms.

"Child pornography is the root cause of much of the sex trafficking that occurs in the world today, and it is primarily traded on the internet, through websites that claim immunity" under Section 230, the plaintiffs said in their appeal to the Supreme Court.

Allowing the 9th Circuit's decision to stand, they added, "would immunize a huge class of violators who play a role in the victimization of children."

#nunyas news#be tough to prove they benefited#no cp subs allowed#sure they exists but they're not allowed

Text

SESSION 11. LAW ON INTERNET INTERMEDIARIES

PART 1 - INTERNET INTERMEDIARY SAFE HARBOUR

Internet Intermediary Liability: Safe harbour laws provide a measure of protection for Internet intermediaries from liability. The general rationale is that these intermediaries provide a socio-economic benefit and need these protections in order to function effectively. However, because the definition and coverage of “Internet Intermediary” is potentially very wide, categorising the different types of intermediaries will provide greater certainty and clarity as to which types of intermediary get what form and measure of protection. They are normally determined by their profile (objectives/control based on role/function), with the prerequisites and extent of protection (civil/criminal) set out in the relevant legislation. There are different models of general safe harbour protection for intermediaries in different countries/regions. What are the justifications and policy reasons for such protections? What improvements can and should be made to the current regime?

Singapore Law on Internet Intermediaries: What is the scope/breadth of coverage of “network service providers” under section 26 of the Singapore ETA? Does it only cover access service providers or does its protection extend to content hosts/providers? How does it relate to the rights and liability of the Internet intermediary under the Copyright regime and the Internet Content Regulation regime? What is the relationship between the Data Intermediary under the Data Protection regime on the one hand and the type of intermediary covered by section 26 of the ETA on the other? Other notable exceptions in other statutes and in common law (e.g. defences to an action on defamation) will also be considered.

Internet Intermediaries and Criminal/Civil Liability: What is the level/depth of protection offered by section 26 of the ETA? In this context, consider the rationale and extent of the statutory exceptions under subsection (2). What are the conditions for such protection and what are the factors that our courts are likely to consider when determining whether an intermediary is liable (under criminal or civil law) for “third party material”? What is the meaning of “merely provides access” and of the lack of “effective control” over the said “third party”?

Comparison of the EU-Wide Approach and the US Approach: A general comparison will be made on the approaches in the EU and the US in relation to intermediaries in the context of trademark infringement through keyword advertising. The focus will be on the scope and application of the safe harbour protection and not on trademark law. This example is used because of the line of ECJ trademark infringement cases on the legality of keyword advertising and the allocation of responsibility between the intermediary (search engines or online auction/retail operator) and the advertiser/business.

Statutes: (relevant provisions will be highlighted in class)

Section 26 of the Electronic Transactions Act

Section 230 of the Communications Decency Act

Section 4 of the EU E-Commerce Directive

Cases: (inapplicable to COR2226, general principles will be explained to you in class)

Joined Cases C-236/08 to C-238/08 Google France and Google Inc. et al. v Louis Vuitton Malletier et al. (2010)(only in relation to the extent of intermediary safe harbour protection)

PART 2 - LIABILITY FOR ‘PUBLICATION’ IN DEFAMATION LAW (NOT APPLICABLE TO COR2226)

Defamation Laws: The law on defamation pre-dates the age of new media and the objectives were set in defined limits, both jurisdictional and societal. The tort of defamation is less consistently applied across jurisdictions, given the potential conflict with the freedom of speech and expression as well as journalistic interests. The extra-territorial reach and ease of dissemination through the electronic medium, and the rise of blogs, social media, citizen journalism and other platforms which allow individuals and groups of people to share views and opinions that can relate to the character and reputation of another, give rise to new issues that the old rules on defamation do not easily address. Courts, and the legislature in certain jurisdictions, have had to provide some guidance and answers, taking into consideration the original purpose of the law and the new context and age in which it now operates.

New Media, New Context: Publications now can take the form of websites such as blogs, electronic mail and other forms of communication, social media such as Twitter and Facebook and so on. From these, new practices and forms of sharing have emerged. These can also take the form of text and pictures, and video and audio messages. We are now familiar with terms and phrases like “Internet trolling”, the “viral” process of Internet sharing, “cyber-vigilantism”, and the use of “hyperlinking”; all of which bring forth new problems and issues for the application of the law on defamation. Consider the new media and new context, where relevant, when answering the questions below.

What is “publication” in the context of social media platforms?

What is “publication” in relation to a conduit or intermediary such as a search engine?

Do/Should defamation law allow non-legal entities to be both the defamer and the defamed, in relation to private and public entities?

Should a distinction be made for some intermediaries such as by way of specific exceptions or defences?

Cases: (inapplicable to COR2226)

Qingdao Bohai v Goh Teck Beng [2016] SGHC 142

Golden Season Pte Ltd and others v Kairos Singapore Holdings Pte Ltd and another [2015] 2 SLR 751; [2015] SGHC 38

Zhu Yong Zhen v AIA Singapore Private Limited [2013] SGHC 37

Ng Koo Kay Benedict and another v Zim Integrated Shipping Services Ltd [2010] SLR 860; [2010] SGHC 47

McGrath v Dawkins, Amazon and others [2012] EWHC B3 (QB) (issue of publication, parties)

Godfrey v Demon Internet Service [2001] QB 201 (issue of publication, parties)

Statutes: (for reference in class only)

Defamation Act

References: (optional)

Gary Chan, Reputation and Defamatory Meaning on the Internet: Communications, Contexts and Communities (2015) 27 SAcLJ 694

Gary Chan, Defamation Via Hyperlinks: More Than Meets The Eye (2012) Law Quarterly Review

Text

Jessica Rosenworcel will soon be vacating her position as chairwoman of the Federal Communications Commission (FCC) because President-elect Donald Trump has just nominated Brendan Carr to take over her role.

In his statement, Trump said that Carr is a strong advocate for free speech and talked about the latter's track record of fighting against regulations that he feels limit Americans' freedoms. In this spirit, Carr has pushed for important changes to Section 230 of the Communications Decency Act, which currently protects social media companies from being held responsible for content posted by their users. Carr believes that while these platforms should have some protections, they shouldn't have unlimited authority to control or moderate what people say online while avoiding accountability.

This indicates that the FCC may change some of its operations once Trump takes office as Brendan Carr plans to steer the agency towards reducing regulations and promoting a more open internet. Additionally, he has stated on X that he intends to move the agency away from previous priorities that emphasized Diversity, Equity, and Inclusion (DEI) initiatives.

https://twitter.com/BrendanCarrFCC/status/1858364209626521911

Some critics might argue that stepping away from DEI initiatives could worsen the gaps in access to communication services, particularly for underrepresented communities. However, Carr believes that focusing on accountability and transparency within tech companies is key to ensuring that everyone in America has the freedom to express themselves online. By pushing these values forward, he aims to create an environment where all voices can be heard, regardless of background.

Brendan Carr, who has a background as a staff attorney for the FCC and has been confirmed by the Senate thrice without any objections, is ready to use his experience in telecommunications law to update the agency's infrastructure rules. His efforts in reform have already had a big impact, making it easier to build high-speed broadband networks and cutting down on the red tape that often slows down private development projects.

Text

This article was originally published by John W. Whitehead and Nisha Whitehead at The Rutherford Institute.

“What makes it possible for a totalitarian or any other dictatorship to rule is that people are not informed; how can you have an opinion if you are not informed? If everybody always lies to you, the consequence is not that you believe the lies, but rather that nobody believes anything any longer… And a people that no longer can believe anything cannot make up its mind. It is deprived not only of its capacity to act but also of its capacity to think and to judge. And with such a people you can then do what you please.”—Hannah Arendt

In a perfect example of the Nanny State mindset at work, Hillary Clinton insists that the powers-that-be need “total control” in order to make the internet a safer place for users and protect us from harm.

Clinton is not alone in her distaste for unregulated, free speech online.

A bipartisan chorus that includes both presidential candidates Kamala Harris and Donald Trump has long clamored to weaken or do away with Section 230 of the Communications Decency Act, which essentially acts as a bulwark against online censorship.

It’s a complicated legal issue that involves debates over immunity, liability, net neutrality, and whether or not internet sites are publishers with editorial responsibility for the content posted to their sites, but really, it comes down to the tug-of-war over where censorship (corporate and government) begins and free speech ends.

As Elizabeth Nolan Brown writes for Reason, “What both the right and left attacks on the provision share is a willingness to use whatever excuses resonate—saving children, stopping bias, preventing terrorism, misogyny, and religious intolerance—to ensure more centralized control of online speech. They may couch these in partisan terms that play well with their respective bases, but their aim is essentially the same.”

In other words, the government will use any excuse to suppress dissent and control the narrative.

The internet may well be the final frontier where free speech still flourishes, especially for politically incorrect speech and disinformation, which test the limits of our so-called egalitarian commitment to the First Amendment’s broad-minded principles.

On the internet, falsehoods and lies abound, misdirection and misinformation dominate, and conspiracy theories go viral.

This is to be expected, and the response should be more speech, not less.

Quote

Musk has freest legal rein in the US, where the First Amendment protects almost all speech. Moreover, internet publishers are exempt from liability under the notorious Section 230 of the misleadingly named Communications Decency Act. But even in America you cannot falsely shout fire in a crowded theatre

Elon Musk and the danger to democracy

Text

Broad Coalition Opposes Proposed Repeal of Internet’s Legal Shield, Section 230

A proposed bill aiming to repeal Section 230 of the Communications Decency Act is facing pushback from various organizations, including the American Library Association and Wikimedia Foundation. These groups emphasize the importance of Section 230 in protecting the digital ecosystem, particularly for smaller tech entities, educational bodies, and libraries. Section 230, enacted in 1996, shields…

Text

Section 230: It is Time for a Makeover

In the digital world we all live in, Section 230 of the Communications Decency Act (CDA) is like the internet’s godparent, promising to protect its growth and freedom. Passed to make sure online platforms weren’t sued out of existence because of what their users post, Section 230 has been the unsung hero behind the internet’s bustling forums, memes, and endless discussions. But after over 40 U.S.…

Text

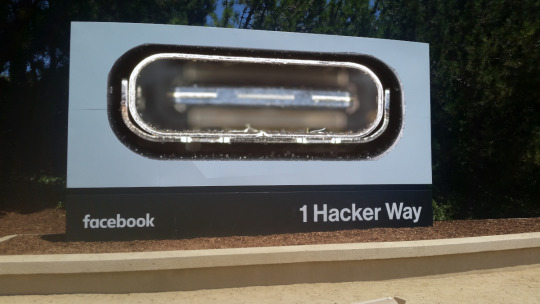

CDA 230 bans Facebook from blocking interoperable tools

I'm touring my new, nationally bestselling novel The Bezzle! Catch me TONIGHT (May 2) in WINNIPEG, then TOMORROW (May 3) in CALGARY, then SATURDAY (May 4) in VANCOUVER, then onto Tartu, Estonia, and beyond!

Section 230 of the Communications Decency Act is the most widely misunderstood technology law in the world, which is wild, given that it's only 26 words long!

https://www.techdirt.com/2020/06/23/hello-youve-been-referred-here-because-youre-wrong-about-section-230-communications-decency-act/

CDA 230 isn't a gift to big tech. It's literally the only reason that tech companies don't censor anything we write that might offend some litigious creep. Without CDA 230, there'd be no #MeToo. Hell, without CDA 230, just hosting a private message board where two friends get into serious beef could expose you to an avalanche of legal liability.

CDA 230 is the only part of a much broader, wildly unconstitutional law that survived a 1997 Supreme Court challenge. We don't spend a lot of time talking about all those other parts of the CDA, but there's actually some really cool stuff left in the bill that no one's really paid attention to:

https://www.aclu.org/legal-document/supreme-court-decision-striking-down-cda

One of those little-regarded sections of CDA 230 is part (c)(2)(b), which broadly immunizes anyone who makes a tool that helps internet users block content they don't want to see.

Enter the Knight First Amendment Institute at Columbia University and their client, Ethan Zuckerman, an internet pioneer turned academic at U Mass Amherst. Knight has filed a lawsuit on Zuckerman's behalf, seeking assurance that Zuckerman (and others) can use browser automation tools to block, unfollow, and otherwise modify the feeds Facebook delivers to its users:

https://knightcolumbia.org/documents/gu63ujqj8o

If Zuckerman is successful, he will set a precedent that allows toolsmiths to provide internet users with a wide variety of automation tools that customize the information they see online. That's something that Facebook bitterly opposes.

Facebook has a long history of attacking startups and individual developers who release tools that let users customize their feed. They shut down Friendly Browser, a third-party Facebook client that blocked trackers and customized your feed:

https://www.eff.org/deeplinks/2020/11/once-again-facebook-using-privacy-sword-kill-independent-innovation

Then in 2021, Facebook's lawyers terrorized a software developer named Louis Barclay in retaliation for a tool called "Unfollow Everything," which autopiloted your browser to click through all the laborious steps needed to unfollow all the accounts you were subscribed to, and Facebook permanently banned Barclay:

https://slate.com/technology/2021/10/facebook-unfollow-everything-cease-desist.html

Now, Zuckerman is developing "Unfollow Everything 2.0," an even richer version of Barclay's tool.

This rich record of legal bullying gives Zuckerman and his lawyers at Knight something important: "standing" – the right to bring a case. They argue that a browser automation tool that helps you control your feeds is covered by CDA 230(c)(2)(b), and that Facebook can't legally threaten the developer of such a tool with liability for violating the Computer Fraud and Abuse Act, the Digital Millennium Copyright Act, or the other legal weapons it wields against this kind of "adversarial interoperability."

Writing for Wired, Will Knight speaks to a variety of experts – including my EFF colleague Sophia Cope – who broadly endorse the very clever legal tactic Zuckerman and Knight are bringing to the court.

I'm very excited about this myself. "Adversarial interop" – modding a product or service without permission from its maker – is hugely important to disenshittifying the internet and forestalling future attempts to reenshittify it. From third-party ink cartridges to compatible replacement parts for mobile devices to alternative clients and firmware to ad- and tracker-blockers, adversarial interop is how internet users defend themselves against unilateral changes to services and products they rely on:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

Now, all that said, a court victory here won't necessarily mean that Facebook can't block interoperability tools. Facebook still has the unilateral right to terminate its users' accounts. They could kick off Zuckerman. They could kick off his lawyers from the Knight Institute. They could permanently ban any user who uses Unfollow Everything 2.0.

Obviously, that kind of nuclear option could prove very unpopular for a company that is the very definition of "too big to care." But Unfollow Everything 2.0 and the lawsuit don't exist in a vacuum. The fight against Big Tech has a lot of tactical diversity: EU regulations, antitrust investigations, state laws, tinkerers and toolsmiths like Zuckerman, and impact litigation lawyers coming up with cool legal theories.

Together, they represent a multi-front war on the very idea that four billion people should have their digital lives controlled by an unaccountable billionaire man-child whose major technological achievement was making a website where he and his creepy friends could nonconsensually rate the fuckability of their fellow Harvard undergrads.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/05/02/kaiju-v-kaiju/#cda-230-c-2-b

Image: D-Kuru (modified): https://commons.wikimedia.org/wiki/File:MSI_Bravo_17_(0017FK-007)-USB-C_port_large_PNr%C2%B00761.jpg

Minette Lontsie (modified): https://commons.wikimedia.org/wiki/File:Facebook_Headquarters.jpg

CC BY-SA 4.0: https://creativecommons.org/licenses/by-sa/4.0/deed.en

#pluralistic#ethan zuckerman#cda 230#interoperability#content moderation#composable moderation#unfollow everything#meta#facebook#knight first amendment initiative#u mass amherst#cfaa

Text

It no longer makes sense to speak of free speech in traditional terms. The internet has so transformed the nature of the speaker that the definition of speech itself has changed.

The new speech is governed by the allocation of virality. People cannot simply speak for themselves, for there is always a mysterious algorithm in the room that has independently set the volume of the speaker’s voice. If one is to be heard, one must speak in part to one’s human audience, in part to the algorithm. It is as if the US Constitution had required citizens to speak through actors or lawyers who answered to the Dutch East India Company, or some other large remote entity. What power should these intermediaries have? When the very logic of speech must shift in order for people to be heard, is that still free speech? This was not a problem foreseen in the law.

The time may be right for a legal and policy reset. US lawmakers on both sides of the aisle are questioning Section 230, the liability shield that enshrined the ad-driven internet. The self-reinforcing ramifications of a mere 26 words (“no provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider”) have produced a social media ecosystem that is widely held to have had deleterious effects on both democracy and mental health.

Abraham Lincoln is credited with the famous quip about how you cannot fool all the people all the time. Perhaps you cannot, but perhaps the internet can. Imperfect speech has always existed, but the means and scale of amplification have not. The old situation cannot be the guide for the new.

Section 230 was created during a period when policy was being designed to unleash internet innovation, thereby maintaining America’s competitive edge in cyberspace. The early internet was supported by a variety of friendly policies, not just Section 230. For instance, sales arranged over the internet were often not taxed in early years. Furthermore, the internet was knowingly inaugurated in an incomplete state, lacking personal accounts, authentication mechanisms, commercial transaction standards, and many other needed elements. The thinking was not only that it was easier to get a minimal design started when computing power was still nascent, but also that the missing elements would be addressed by entrepreneurs. In effect, we were giving trillion-dollar gifts to parties unknown who would be the inevitable network-effect winners.

Section 230 was enacted as part of the 1996 Communications Decency Act, a larger legislative effort within the umbrella 1996 Telecommunications Act. Section 230(c)(1) provides immunity for online services regarding user-generated content, ensuring the companies hosting content are not treated as publishers of this information. Section 230(c)(2) offers Good Samaritan protection from civil liability when the companies—or platforms, as we call them today—in good faith remove or moderate objectionable content.

After President Bill Clinton signed the 1996 Telecommunications Act into law, it was unclear how the courts might interpret it. When the dust cleared, Section 230 emerged as something of a double-edged sword. It could be used to justify censorship, and at the same time be deployed as a corporate liability shield. Most importantly, it provided the runway for the takeoff of Google, Twitter, and Facebook. (And now TikTok—which, being a Chinese company, proves that Section 230 no longer serves American interests.)

The impact on the public sphere has been, to say the least, substantial. In removing so much liability, Section 230 forced a certain sort of business plan into prominence, one based not on uniquely available information from a given service, but on the paid arbitration of access and influence. Thus, we ended up with the deceptively named “advertising” business model—and a whole society thrust into a 24/7 competition for attention. A polarized social media ecosystem. Recommender algorithms that mediate content and optimize for engagement. We have learned that humans are most engaged, at least from an algorithm’s point of view, by rapid-fire emotions related to fight-or-flight responses and other high-stakes interactions. In enabling the privatization of the public square, Section 230 has inadvertently rendered impossible deliberation between citizens who are supposed to be equal before the law. Perverse incentives promote cranky speech, which effectively suppresses thoughtful speech.

And then there is the economic imbalance. Internet platforms that rely on Section 230 tend to harvest personal data for their business goals without appropriate compensation. Even when data ought to be protected or prohibited by copyright or some other method, Section 230 often effectively places the onus on the violated party through the requirement of takedown notices. That switch in the order of events related to liability is comparable to the difference between opt-in and opt-out in privacy. It might seem like a technicality, but it is actually a massive difference that produces substantial harms. For example, workers in information-related industries such as local news have seen stark declines in economic success and prestige. Section 230 makes a world of data dignity functionally impossible.

To date, content moderation has too often been beholden to the quest for attention and engagement, regularly disregarding the stated corporate terms of service. Rules are often bent to maximize engagement through inflammation, which can mean doing harm to personal and societal well-being. The excuse is that this is not censorship, but is it really not? Arbitrary rules, doxing practices, and cancel culture have led to something hard to distinguish from censorship for the sober and well-meaning. At the same time, the amplification of incendiary free speech for bad actors encourages mob rule. All of this takes place under Section 230’s liability shield, which effectively gives tech companies carte blanche for a short-sighted version of self-serving behavior. Disdain for these companies—which found a way to be more than carriers, and yet not publishers—is the only thing everyone in America seems to agree on now.

Trading a known for an unknown is always terrifying, especially for those with the most to lose. Since at least some of Section 230’s network effects were anticipated at its inception, it should have had a sunset clause. It did not. Rather than focusing exclusively on the disruption that axing 26 words would spawn, it is useful to consider potential positive effects. When we imagine a post-230 world, we discover something surprising: a world of hope and renewal worth inhabiting.

In one sense, it’s already happening. Certain companies are taking steps on their own, right now, toward a post-230 future. YouTube, for instance, is diligently building alternative income streams to advertising, and top creators are getting more options for earning. Together, these voluntary moves suggest a different, more publisher-like self-concept. YouTube is ready for the post-230 era, it would seem. (On the other hand, a company like X, which leans hard into 230, has been destroying its value with astonishing velocity.) Plus, there have always been exceptions to Section 230. For instance, if someone enters private information, there are laws to protect it in some cases. That means dating websites, say, have the option of charging fees instead of relying on a 230-style business model. The existence of these exceptions suggests that more examples would appear in a post-230 world.

Let’s return to speech. One difference between speech before and after the internet was that the scale of the internet “weaponized” some instances of speech that would not have been as significant before. An individual yelling threats at someone in passing, for instance, is quite different from a million people yelling threats. This type of amplified, stochastic harassment has become a constant feature of our times—chilling speech—and it is possible that in a post-230 world, platforms would be compelled to prevent it. It is sometimes imagined that there are only two choices: a world of viral harassment or a world of top-down smothering of speech. But there is a third option: a world of speech in which viral harassment is tamped down but ideas are not. Defining this middle option will require some time to sort out, but it is doable without 230, just as it is possible to define the limits of viral financial transactions to make Ponzi schemes illegal.

With this accomplished, content moderation for companies would be a vastly simpler proposition. Companies need only uphold the First Amendment, and the courts would finally develop the precedents and tests to help them do that, rather than the onus of moderation being entirely on companies alone. The United States has more than 200 years of First Amendment jurisprudence that establishes categories of less protected speech—obscenity, defamation, incitement, fighting words—to build upon, and Section 230 has effectively impeded its development for online expression. The perverse result has been the elevation of algorithms over constitutional law, effectively ceding judicial power.

When the jurisprudential dust has cleared, the United States would be exporting the democracy-promoting First Amendment to other countries rather than Section 230’s authoritarian-friendly liability shield and the sewer of least-common-denominator content that holds human attention but does not bring out the best in us. In a functional democracy, after all, the virtual public square should belong to everyone, so it is important that its conversations are those in which all voices can be heard. This can only happen with dignity for all, not in a brawl.

Section 230 perpetuates an illusion that today’s social media companies are common carriers like the phone companies that preceded them, but they are not. Unlike Ma Bell, they curate the content they transmit to users. We need a robust public conversation about what we, the people, want this space to look like, and what practices and guardrails are likely to strengthen the ties that bind us in common purpose as a democracy. Virality might come to be understood as an enemy of reason and human values. We can have culture and conversations without a mad race for total attention.

While Section 230 might have been considered more a target for reform than for repeal prior to the advent of generative AI, it can no longer be so. Social media could be a business success even if its content was nonsense. AI cannot.

There have been suggestions that AI needs Section 230 because large language models train on data and will be better if that data is freely usable with no liabilities or encumbrances. This notion is incorrect. People want more from AI than entertainment. It is widely considered an important tool for productivity and scientific progress. An AI model is only as good as the data it is trained on; indeed, general data improves specialist results. The best AI will come out of a society that prioritizes quality communication. By quality communication, we do not mean deepfakes. We mean open and honest dialog that fosters understanding rather than vitriol, collaboration rather than polarization, and the pursuit of knowledge and human excellence rather than a race to the bottom of the brain stem.

The attention-grooming model fostered by Section 230 leads to stupendous quantities of poor-quality data. While an AI model can tolerate a significant amount of poor-quality data, there is a limit. It is unrealistic to imagine a society mediated by mostly terrible communication where that same society enjoys unmolested, high-quality AI. A society must seek quality as a whole, as a shared cultural value, in order to maximize the benefits of AI. Now is the best time for the tech business to mature and develop business models based on quality.

All of this might sound daunting, but we’ve been here before. When the US government said the American public owned the airwaves so that television broadcasting could be regulated, it put in place regulations that supported the common good. The internet affects everyone, so we must devise measures to ensure that our digital-age public discourse is of high quality and includes everyone. In the television era, the fairness doctrine laid that groundwork. A similar lens needs to be developed for the internet age.

Without Section 230, recommender algorithms and the virality they spark would be less likely to distort speech. It is sadly ironic that the very statute that delivered unfathomable success is today serving the interests of our enemies by compromising America’s superpower: our multinational, immigrant-powered constitutional democracy. The time has come to unleash the power of the First Amendment to promote human free speech by giving Section 230 the respectful burial it deserves.

Text

Russia Manipulating American Democracy

Vladimir Putin has been actively engaged in manipulating the USA through the dark arts of digital disinformation for at least a decade. Russia's manipulation of American democracy via social media, having bought Trump some time back, is going swimmingly. A brief look at the state of the Republican Party and the Trump candidacy for President can confirm that. America has been split down the middle, with progressives on one side and the MAGA-led conservatives on the other. The cosy coalition between MAGA and Putin’s Russia would strike cold warriors from the 1950s as completely bizarre. Americans backing the military aspirations of an eastern demagogue and his police state is what we appear to be seeing. WTF?

Putin’s Russia Leading American Democracy a Merry Dance

For anybody who has been studiously ignoring the Russian machinations in America, I would advise that it is time to pay attention. The world is heading into very dangerous territory, with extreme greed and caveman bullying tactics leading the charge. Putin’s Russia has identified America’s points of vulnerability and gone to town on them with bots aplenty. Democracy has always largely been about the art of persuasion, ever since those early days in ancient Athens. Scare campaigns and corruption as ways of getting the vote have been with us for millennia.

Americans Too Dumb To Realise Who Is Stealing Their Lunch

Americans are so dumb. Why? Because they always make everything about money. The Internet and the world wide web were developed by US government bodies. Then in 1993 President Bill Clinton privatised it and handed it over to the market with bugger all safeguards in place. Many of the problems we have with social media platforms not being held accountable for their content are because of this lack of meaningful oversight. The neoliberal mantra – let the market take care of it – reveals yet another black hole. It is actually the abdication of government responsibility and yet another societal failure costing lives and potentially destroying democracy.

“Congress has followed the Supreme Court’s lead and enacted Section 230 of the Communications Decency Act. Section 230 offers internet service providers immunity from liability for content posted by third parties on their platforms. Brown notes that courts apply this liability shield broadly. Section 230 has protected providers from claims pertaining to intentional infliction of emotional distress, terrorism support, and defamation.” - (https://www.theregreview.org/2021/12/21/stephen-social-responsibility-social-media-platforms/)

Congress Is A Proven Failure

Congress is a proven failure in addressing crucial crises affecting America, as witnessed by their inability to get gun control laws passed. The politics rules the beast in the US; and right now the Trump-led MAGA GOP exposes the very worst manifestation of this governmental failure. Some 400 million people are being coerced and manipulated by special interest forces like corporate lobbyists. The system has been corrupted and taken over by these vested interests to the detriment of ordinary Americans. Americans know this, but half of them are rusted onto the camp controlled by the worst aspect of this distortion of democracy. The GOP lead with social issues and gather their support around identity politics: Christian nationalism, anti-woke BS, white supremacy, and intolerance of things like gender and sexual preference diversity. Meanwhile they are in bed with big business and the rentier economy, which has been decimating the wealth of the middle class since Reagan.

Russian Bots Fuelling The Polarisation Of America

Much of the inflammatory stuff on the Internet has been coming from Russian bots for many years. Winding up folk on forums and in online chat rooms is a Russian speciality. Spreading disinformation via the Internet, and on social media in particular, has been a winning strategy for Putin. Many Americans are too dumb to get this. Lots of ordinary folk have no idea how the Internet actually works. Ignorance provides a great operating ground for those with a dark intent to do their stuff. It has been an incredibly cost-effective means of paralysing American democracy. The 2016 election of Donald Trump was greatly aided by the Russians spreading anti-Hillary Clinton stuff online. Fake news about Hillary was rife on social media. Americans are used to lies and misinformation during elections; this time it was coming from outside the country. Similarly, the Russians were at it big time in Britain in the lead-up to the Brexit vote. Britain leaving the EU was a big win for Putin – it weakened both Britain and the EU.

The US Has Been Made Weaker By The Trump Years

Russia manipulating American democracy makes the US weaker internally and externally. If you look at the last decade, much of it has been taken up with Trump. Trump has filled the airwaves with chaotic crap about him everywhere you look. The nation has never been more polarised. Threats of violence from Trump supporters are never far away, as they try to lean on the institutions protecting democracy. Men resort to caveman bullying and violence, as exemplified by the January 6th, 2021, insurrection at the Capitol.

Extremism is most often a reactionary response to societal anxiety by a section of the community. Following the pandemic we have seen the rise of far-right groups and Nazism, in terms of increased visibility and numbers participating in marches and demonstrations. Fear of death is a powerful call to action, but it can lead some in the wrong direction. Trump makes a habit of saying shocking things in public. This keeps him in the news and in the public eye. He is buffoon-like, and this becomes a mitigating factor in the minds of many of his supporters. “Oh, that is only Trump talking!” However, around the orange Jesus are some very serious and nasty people capable of doing untold damage to the lives of many Americans. Indeed, history tells us that a similar false sense of levity surrounded Adolf Hitler in his early years. Many Germans did not take him too seriously until he became the Führer. Of course, by then it was too late, and the dictator went on to kill tens of millions of people globally. American democracy is coming undone through the forces coalescing around Trump. Stupidity, greed, and the politics of grievance are fuelling the popularity of Trump’s second coming. Putin is in his corner, of course, pulling strings wherever he can.

Tucker Carlson Useful Idiot Interview With Putin

“US talk show host Tucker Carlson's interview with Russian President Vladimir Putin began with a rambling half-hour lecture on the history of Russia and Ukraine. Mr Carlson, frequently appearing bemused, listened as Mr Putin expounded at length about the origins of Russian statehood in the ninth century, Ukraine as an artificial state and Polish collaboration with Hitler. It is familiar ground for Mr Putin, who infamously penned a 5,000-word essay entitled "On the Historical Unity of Russians and Ukrainians" in 2021, which foreshadowed the intellectual justification the Kremlin offered for its invasion of Ukraine less than a year later. Historians say the litany of claims made by Mr Putin are nonsense - representing nothing more than a selective abuse of history to justify the ongoing war in Ukraine.” - (https://www.bbc.com/news/world-europe-68255302)

Robert Sudha Hamilton is the author of Money Matters: Navigating Credit, Debt, and Financial Freedom.

©MidasWord

#America#bots#democracy#Disinformation#fakenews#GOP#greed#Internet#online#President#Putin#Russia#section230#socialmedia#Trump#TuckerCarlson#USA

Text

WASHINGTON — The Supreme Court on Tuesday is considering for the first time the topical question of whether tech companies are always immune from legal liability in disputes arising from problematic content posted by users.

The justices are hearing oral arguments in a case alleging that by recommending videos that spread violent Islamist ideology, YouTube bears some responsibility for the killing of Nohemi Gonzalez, an American college student, in the 2015 Paris attacks carried out by the Islamic State terrorist group.

At issue is whether there are limits to the liability shield for internet companies that Congress enacted in 1996 as part of the Communications Decency Act. The Supreme Court has never addressed the issue before, even as the power and influence of the internet have exploded.

The case, which tech companies warn could upend the internet as it currently operates, concerns whether Section 230 can be applied to situations in which platforms actively recommend content to users using algorithms.

The novel legal issue has given rise to some unusual cross-ideological alliances, with the Biden administration and some high-profile Republican lawmakers, including Sens. Ted Cruz of Texas and Josh Hawley of Missouri, having filed briefs backing at least some of the Gonzalez family’s legal arguments.

Liberal and conservative justices on Tuesday expressed skepticism and some confusion about the arguments being put forth by Gonzalez’s attorney, Eric Schnapper. Schnapper contended that some of what YouTube publishes in its recommendations, including thumbnails it creates, doesn't amount to third-party content protected under Section 230.

“I don’t understand how a neutral suggestion about something that you’ve expressed an interest in is aiding and abetting,” said Justice Clarence Thomas, who has criticized the statute's protections. "I just don't, I don't understand it. And I'm trying to get you to explain to us how something that is standard on YouTube for virtually anything that you have an interest in suddenly amounts to aiding and abetting because you're in the ISIS category."

Fellow conservative Justice Samuel Alito also expressed doubt about Schnapper's argument. "I'm afraid I'm completely confused by whatever argument you're making at the present time," he said.

Potential reform of Section 230 is one area in which President Joe Biden and some of his most ardent critics are in agreement, although they disagree on why and how it should be done.

Conservatives generally claim that companies are inappropriately censoring content, while liberals say social media companies are spreading dangerous right-wing rhetoric and not doing enough to stop it. Although the Supreme Court has a 6-3 conservative majority, it is not clear how it will approach the issue.

Gonzalez, 23, was studying in France when she was killed while dining at a restaurant during the wave of terrorist attacks carried out by ISIS.